Super-Resolution Reconstruction of Formation MicroScanner Images Based on the SRGAN Algorithm

Abstract

1. Introduction

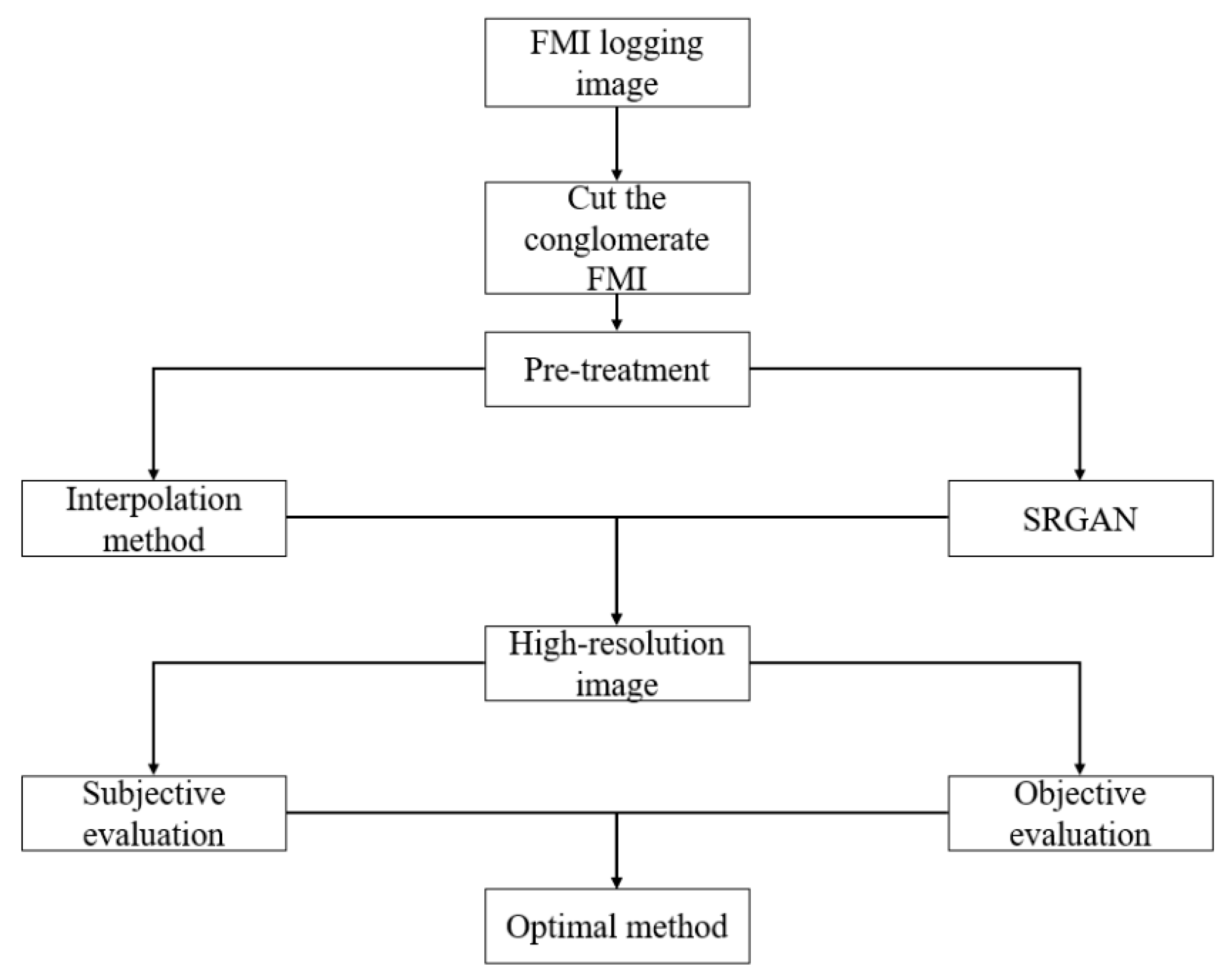

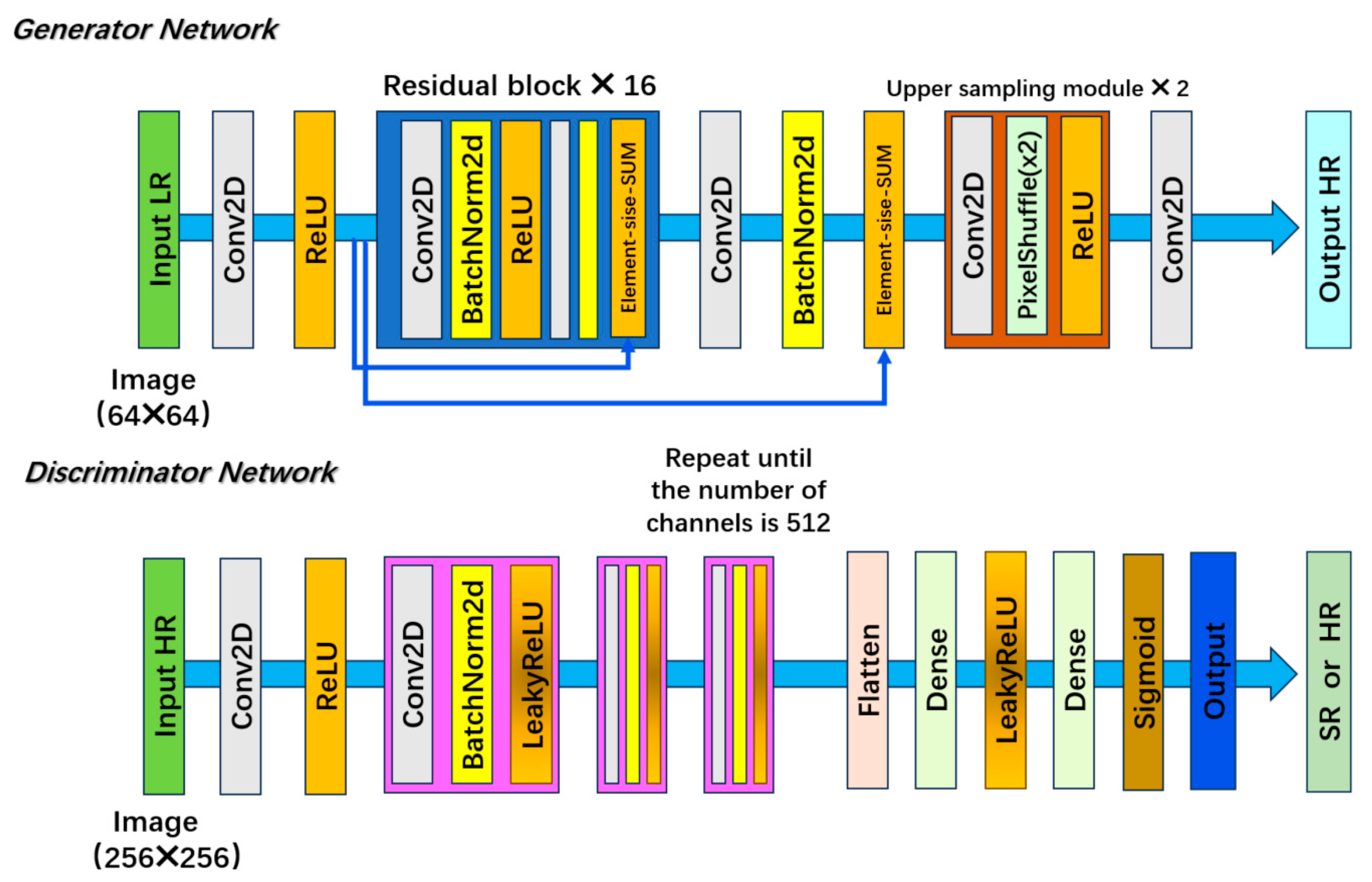

2. Research Methods

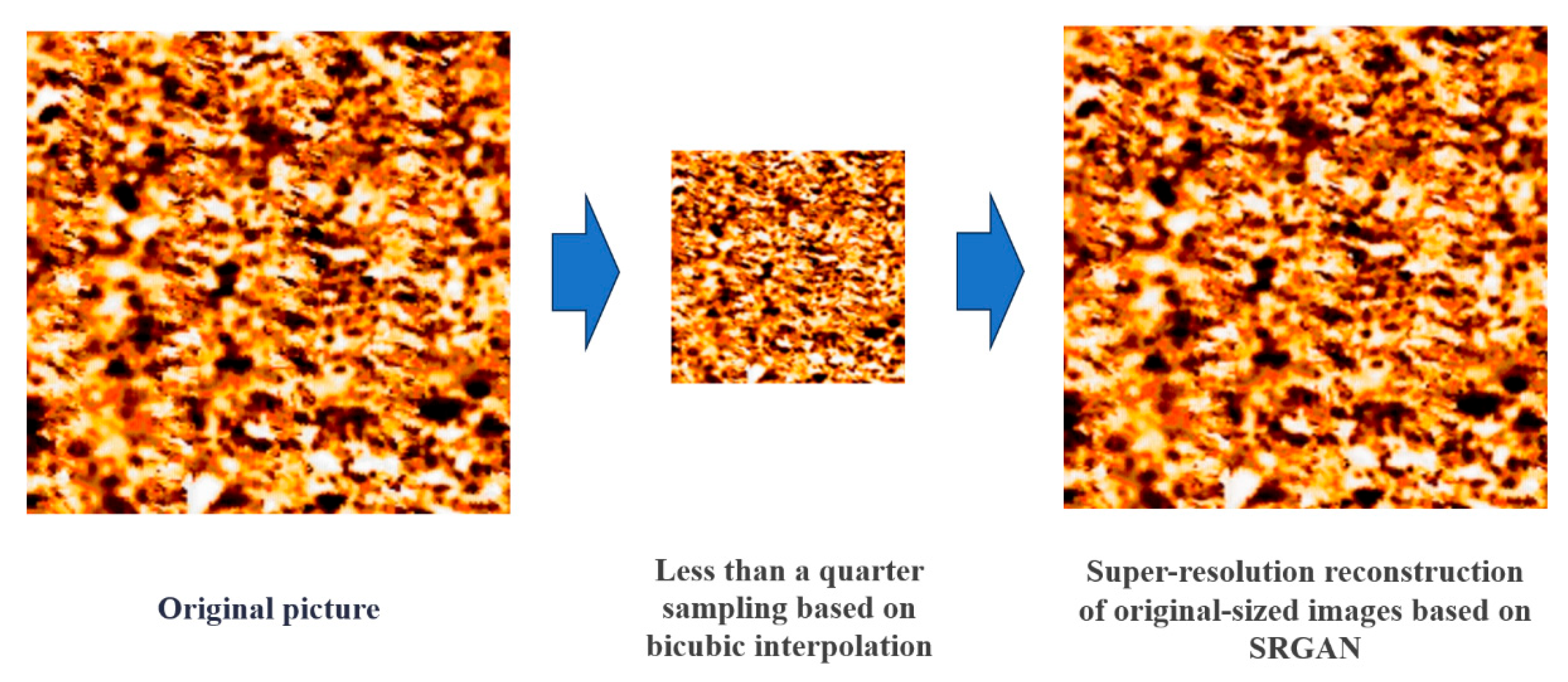

2.1. Research Ideas

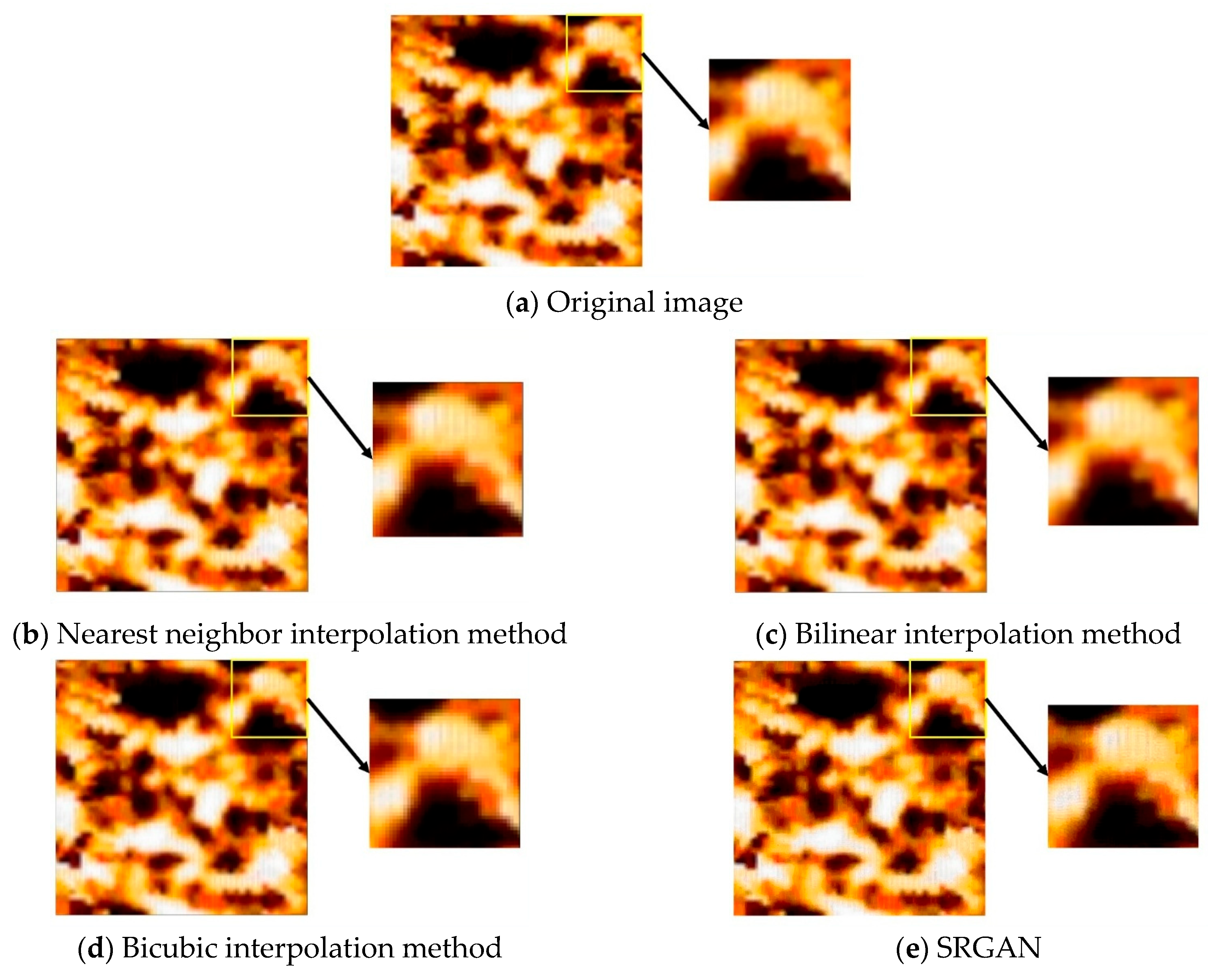

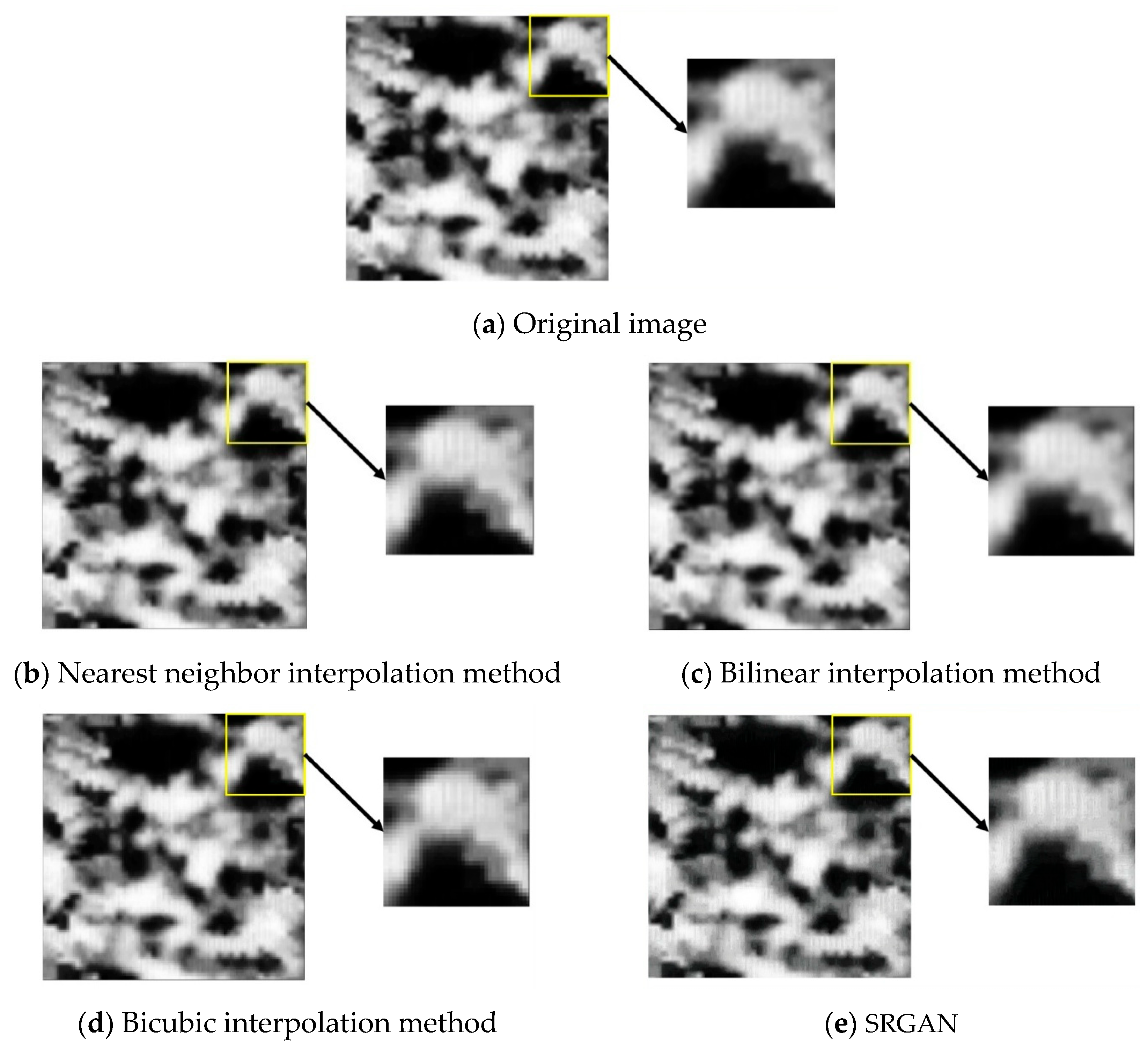

2.2. Basic Principles of Interpolation Methods

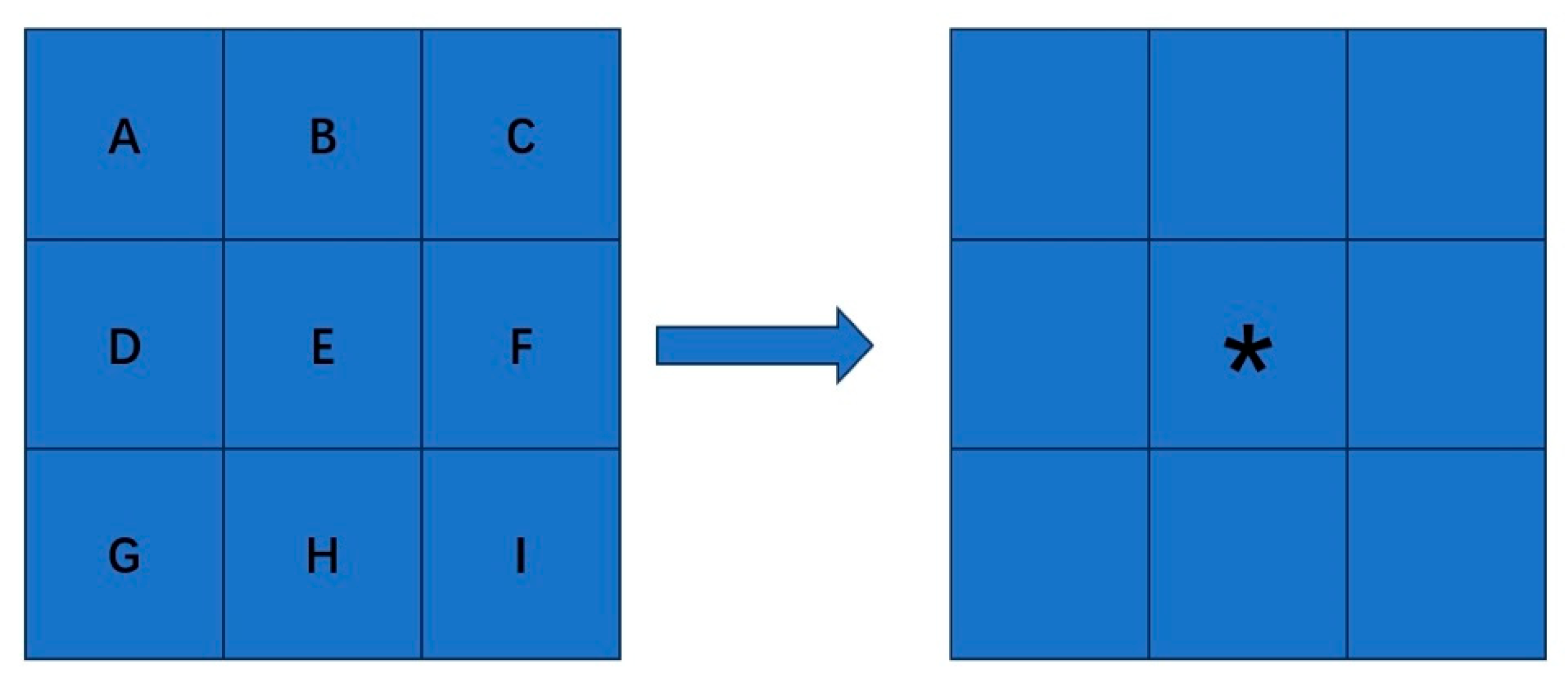

2.2.1. Nearest Neighbor Interpolation Method

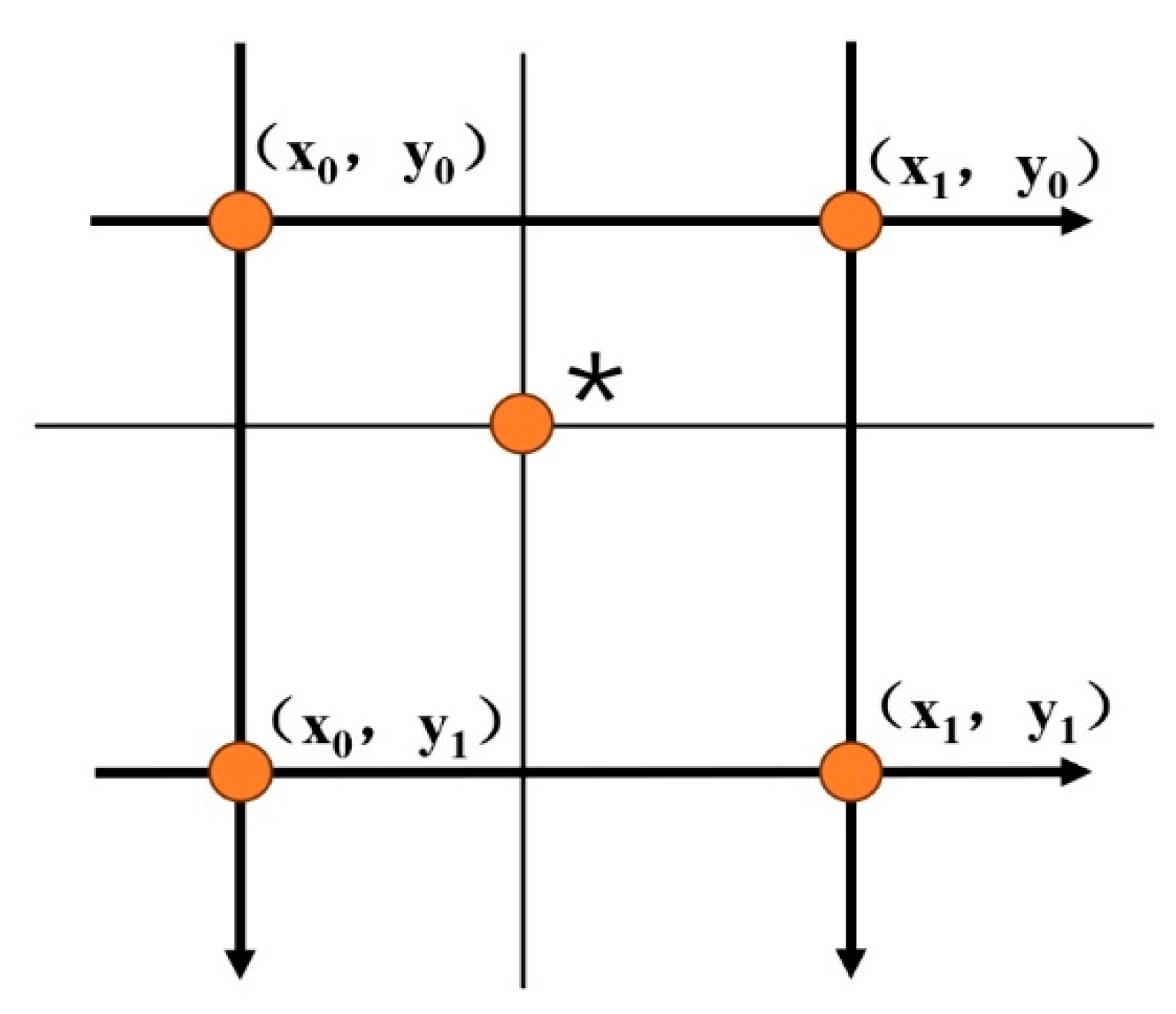
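
Nearest-neighbor interpolation assigns each output pixel the value of the closest source pixel. The following is a minimal NumPy sketch for an integer scale factor. It assumes the simple floor mapping from output indices to source indices; libraries such as OpenCV or Pillow may use slightly different pixel-centre conventions. The helper name `nearest_upscale` is illustrative and is not taken from the paper.

```python
import numpy as np

def nearest_upscale(img: np.ndarray, scale: int) -> np.ndarray:
    """Upscale an (H, W) or (H, W, C) image by an integer factor.

    Every source pixel is copied into a scale x scale block of the output.
    """
    # Map each output row/column index back to the source index it falls in.
    rows = np.arange(img.shape[0] * scale) // scale
    cols = np.arange(img.shape[1] * scale) // scale
    # Broadcasting the two index vectors selects an (H*scale, W*scale) grid;
    # any trailing channel axis is carried along unchanged.
    return img[rows[:, None], cols[None, :]]

lr = np.array([[10, 20],
               [30, 40]], dtype=np.uint8)
print(nearest_upscale(lr, 2))
```

The replicated blocks produce the jagged, staircase edges that are characteristic of nearest-neighbor results.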

2.2.2. Bilinear Interpolation Method

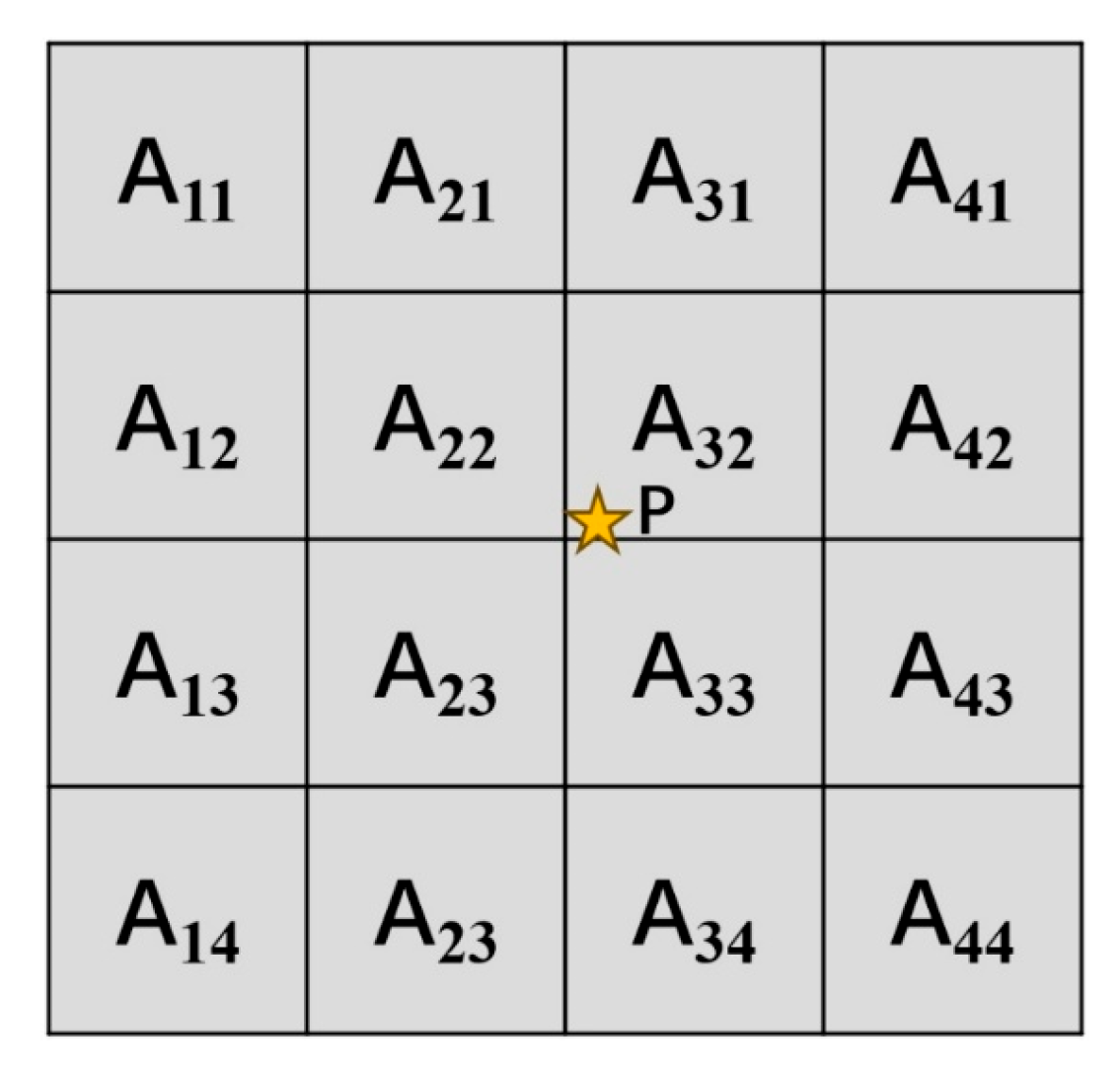
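
Bilinear interpolation instead weights the four nearest source pixels by their distance to the output position, interpolating first along one axis and then along the other. The sketch below makes three assumptions: the input is a 2-D grayscale array, the scale factor is an integer, and coordinates follow the half-pixel-centre convention with border samples clamped. `bilinear_upscale` is a hypothetical helper, not the paper's code.

```python
import numpy as np

def bilinear_upscale(img: np.ndarray, scale: int) -> np.ndarray:
    """Bilinear upscaling of a 2-D grayscale image (H, W) by an integer factor."""
    img = img.astype(np.float64)
    h, w = img.shape
    # Output pixel centres mapped back into source coordinates (half-pixel
    # convention), clamped so border pixels reuse the edge values.
    y = np.clip((np.arange(h * scale) + 0.5) / scale - 0.5, 0, h - 1)
    x = np.clip((np.arange(w * scale) + 0.5) / scale - 0.5, 0, w - 1)
    y0 = np.floor(y).astype(int)
    x0 = np.floor(x).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (y - y0)[:, None]   # fractional vertical distance
    wx = (x - x0)[None, :]   # fractional horizontal distance
    # Interpolate horizontally on the two neighbouring rows, then vertically.
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy

print(bilinear_upscale(np.array([[0, 10], [20, 30]]), 2))
```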

2.2.3. Bicubic Interpolation Method

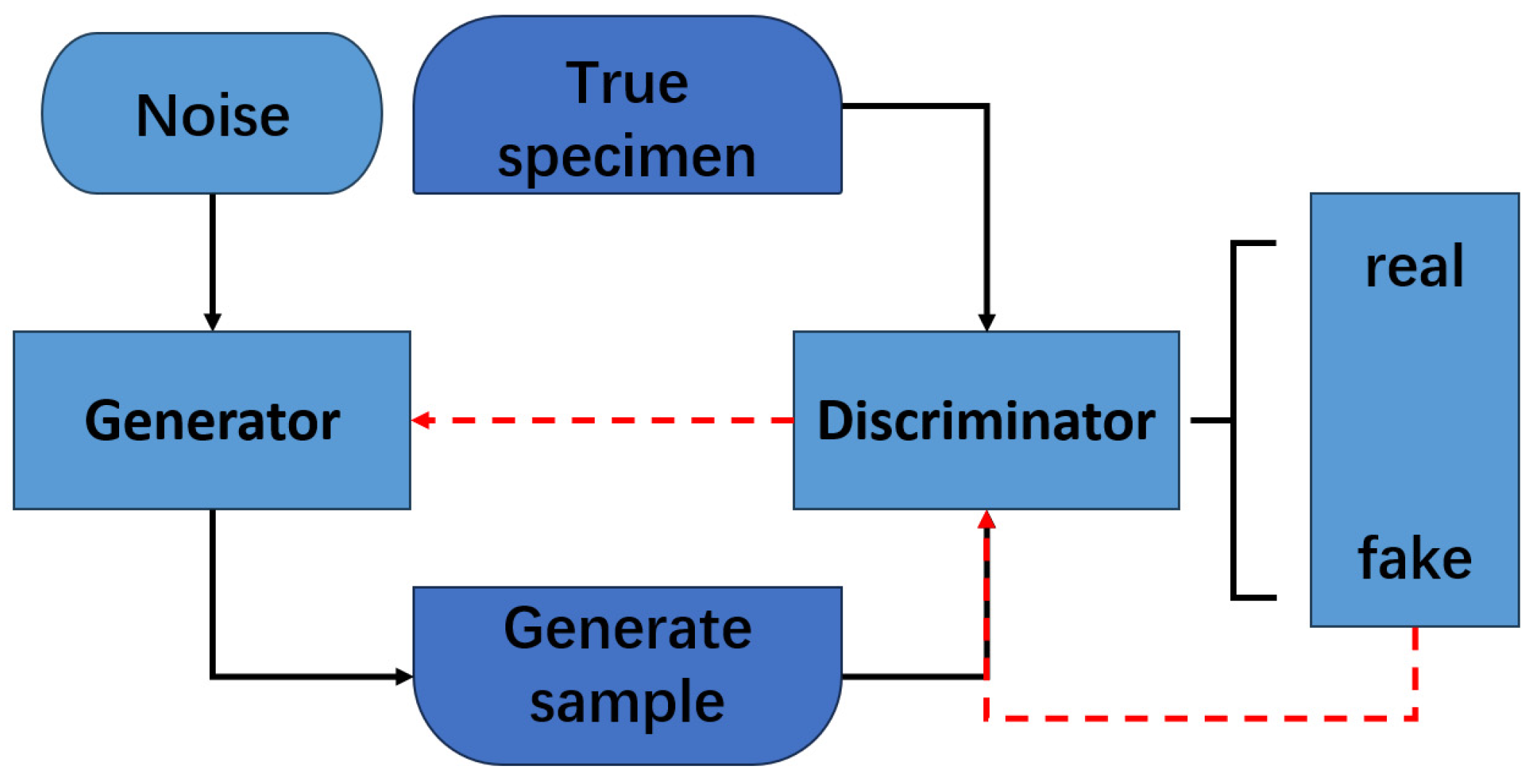
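
Bicubic interpolation extends this approach to a 4 × 4 neighbourhood weighted by a cubic kernel. The sketch below uses Keys' cubic convolution kernel with a = -0.5, which is Keys' recommended value; OpenCV, for example, uses -0.75. The kernel is applied separably, first along rows and then along columns. The kernel parameter, the edge handling, and the helper names are all assumptions, because this section does not give the paper's implementation.

```python
import numpy as np

def cubic_kernel(t: np.ndarray, a: float = -0.5) -> np.ndarray:
    """Keys' cubic convolution kernel (a = -0.5 assumed here)."""
    t = np.abs(t)
    return np.where(
        t <= 1, (a + 2) * t**3 - (a + 3) * t**2 + 1,
        np.where(t < 2, a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a, 0.0))

def resize_axis_cubic(img: np.ndarray, scale: int, axis: int) -> np.ndarray:
    """Cubic interpolation along one axis of a 2-D array."""
    img = np.moveaxis(img.astype(np.float64), axis, 0)
    n = img.shape[0]
    src = (np.arange(n * scale) + 0.5) / scale - 0.5   # half-pixel centre mapping
    base = np.floor(src).astype(int)
    out = np.zeros((n * scale,) + img.shape[1:])
    for k in range(-1, 3):                     # the 4 nearest source samples
        idx = np.clip(base + k, 0, n - 1)      # replicate edge pixels at borders
        weight = cubic_kernel(src - (base + k))
        out += weight[:, None] * img[idx]
    return np.moveaxis(out, 0, axis)

def bicubic_upscale(img: np.ndarray, scale: int) -> np.ndarray:
    """Bicubic upscaling of a 2-D grayscale image: cubic along rows, then columns."""
    return resize_axis_cubic(resize_axis_cubic(img, scale, 0), scale, 1)

print(bicubic_upscale(np.tile(np.arange(4.0), (4, 1)), 2).round(3))
```

The kernel has negative lobes, so bicubic output can overshoot near sharp edges (ringing). In practice, results are usually clipped back to the valid intensity range.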

2.3. The Basic Principles of Generative Adversarial Networks
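
For reference, the standard GAN minimax objective of Goodfellow et al. [31] can be written as shown below, together with the form SRGAN adapts it to by conditioning the generator on the low-resolution image [16]. The perceptual-loss weights shown (1 for content, 10^-3 for adversarial) are the ones Ledig et al. used, and they match the weights listed in the loss table. This section does not reproduce the paper's exact formulation, so treat these equations as a reference sketch.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

$$
\min_{\theta_G} \max_{\theta_D} \; \mathbb{E}_{I^{HR}}\left[\log D_{\theta_D}(I^{HR})\right] + \mathbb{E}_{I^{LR}}\left[\log\left(1 - D_{\theta_D}\left(G_{\theta_G}(I^{LR})\right)\right)\right]
$$

$$
l^{SR} = \underbrace{l^{SR}_{X}}_{\text{content loss}} + 10^{-3}\,\underbrace{l^{SR}_{Gen}}_{\text{adversarial loss}}, \qquad l^{SR}_{Gen} = \sum_{n=1}^{N} -\log D_{\theta_D}\left(G_{\theta_G}(I^{LR}_n)\right)
$$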

2.4. Evaluation Metrics

2.4.1. Mean Square Error (MSE)
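
MSE averages the squared pixel differences between the reference (high-resolution) image I and the reconstructed image K, both of size m × n:

$$
\mathrm{MSE} = \frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n}\left[I(i,j) - K(i,j)\right]^2
$$

The following is a minimal NumPy version, assuming both images have the same shape. Casting to float avoids the wrap-around that unsigned 8-bit subtraction would otherwise cause.

```python
import numpy as np

def mse(reference: np.ndarray, test: np.ndarray) -> float:
    """Mean squared error over all pixels (and channels) of two equal-size images."""
    if reference.shape != test.shape:
        raise ValueError("images must have the same shape")
    diff = reference.astype(np.float64) - test.astype(np.float64)  # no uint8 wrap-around
    return float(np.mean(diff ** 2))

a = np.array([[0, 0], [0, 4]], dtype=np.uint8)
print(mse(a, np.zeros_like(a)))  # 4.0
```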

2.4.2. Peak Signal-to-Noise Ratio (PSNR)
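
PSNR expresses the same error on a logarithmic decibel scale, relative to the maximum possible pixel value MAX_I:

$$
\mathrm{PSNR} = 10\log_{10}\left(\frac{\mathrm{MAX}_I^2}{\mathrm{MSE}}\right)
$$

The sketch below assumes 8-bit images (MAX_I = 255). Identical images have zero MSE and therefore infinite PSNR.

```python
import numpy as np

def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """PSNR in dB; max_value = 255 assumes 8-bit images."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    err = np.mean(diff ** 2)
    if err == 0:
        return float("inf")   # identical images
    return float(10.0 * np.log10(max_value ** 2 / err))

ref = np.zeros((4, 4), dtype=np.uint8)
print(psnr(ref, np.ones((4, 4), dtype=np.uint8)))  # 20*log10(255), about 48.13 dB
```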

2.4.3. Structural Similarity Index (SSIM)
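
SSIM, as defined by Wang et al. [35], compares local means, variances, and covariance. Here L is the dynamic range of the pixel values:

$$
\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}, \quad C_1 = (0.01L)^2,\; C_2 = (0.03L)^2
$$

Wang et al. evaluate this over local windows (an 11 × 11 Gaussian window in the original paper) and average the resulting map. The sketch below simplifies this to a uniform 7 × 7 window with population statistics. Its values will therefore differ slightly from library implementations such as `skimage.metrics.structural_similarity`. It requires NumPy 1.20 or later for `sliding_window_view`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def ssim(x: np.ndarray, y: np.ndarray, data_range: float = 255.0, win: int = 7) -> float:
    """Mean SSIM of two 2-D grayscale images using a uniform win x win sliding window."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    # Local patches with shape (H - win + 1, W - win + 1, win, win)
    px = sliding_window_view(x.astype(np.float64), (win, win))
    py = sliding_window_view(y.astype(np.float64), (win, win))
    mu_x = px.mean(axis=(-2, -1))
    mu_y = py.mean(axis=(-2, -1))
    var_x = px.var(axis=(-2, -1))
    var_y = py.var(axis=(-2, -1))
    cov_xy = ((px - mu_x[..., None, None]) * (py - mu_y[..., None, None])).mean(axis=(-2, -1))
    ssim_map = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))
    return float(ssim_map.mean())

img = np.random.default_rng(0).integers(0, 256, size=(32, 32))
print(ssim(img, img))  # identical images give 1.0
```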

3. Image Preprocessing

3.1. Dataset Preprocessing

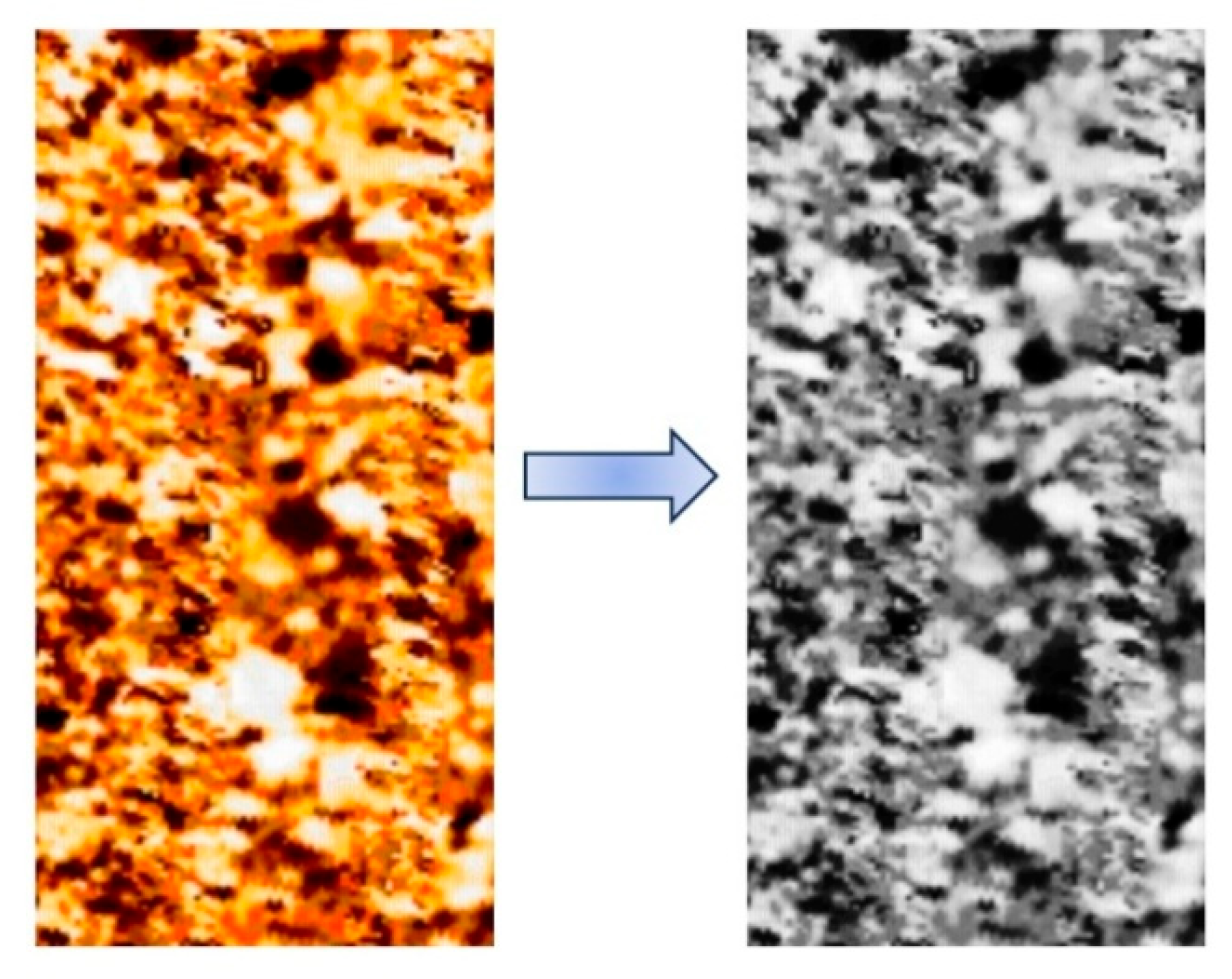

3.1.1. Image Grayscale Processing

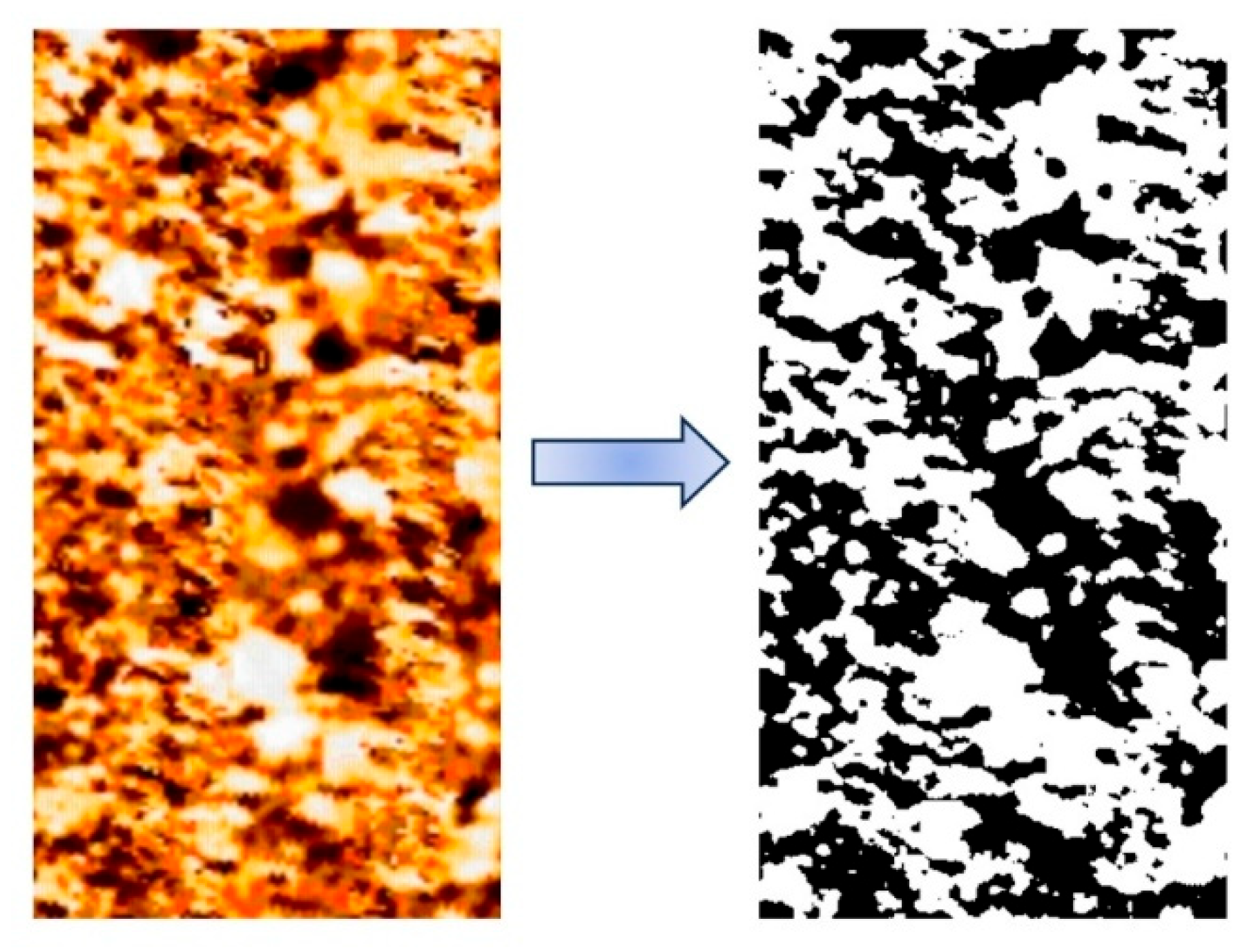
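
This section does not state which grayscale conversion was used. The sketch below assumes the common ITU-R BT.601 luma weights, Y = 0.299R + 0.587G + 0.114B. Pillow documents the same weights for its "L" conversion mode. `to_grayscale` is a hypothetical helper.

```python
import numpy as np

# ITU-R BT.601 luma weights (assumed; not confirmed by the paper).
BT601 = np.array([0.299, 0.587, 0.114])

def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) uint8 RGB image to an (H, W) uint8 grayscale image."""
    gray = rgb.astype(np.float64) @ BT601          # weighted sum over the channel axis
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)

pixels = np.array([[[255, 255, 255], [255, 0, 0]]], dtype=np.uint8)
print(to_grayscale(pixels))  # white -> 255, pure red -> 76
```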

3.1.2. Image Binarization Processing
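
The binarization threshold is not given in this section. The sketch below assumes Otsu's method, which picks the gray level that maximizes the between-class variance of the histogram. Libraries such as scikit-image (`skimage.filters.threshold_otsu`) provide an equivalent routine. Both helper names are hypothetical.

```python
import numpy as np

def otsu_threshold(gray: np.ndarray) -> int:
    """Threshold t in 0..255 maximizing between-class variance (class 0 = pixels <= t)."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                   # class-0 probability for each t
    mu = np.cumsum(prob * np.arange(256))     # class-0 cumulative mean
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b2 = np.nan_to_num(sigma_b2, nan=0.0, posinf=0.0)  # empty classes score 0
    return int(np.argmax(sigma_b2))

def binarize(gray: np.ndarray, threshold: int) -> np.ndarray:
    """Pixels above the threshold become 255; all others become 0."""
    return np.where(gray > threshold, 255, 0).astype(np.uint8)

gray = np.array([50] * 50 + [200] * 50, dtype=np.uint8).reshape(10, 10)
t = otsu_threshold(gray)
print(t, np.unique(binarize(gray, t)))
```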

3.1.3. Training Dataset
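
SRGAN is trained on paired high- and low-resolution patches. The sketch below shows one way to build such pairs. The ×4 scale factor and 96 × 96 HR crop size follow Ledig et al.'s original SRGAN setup and are assumptions about this paper. The LR patch is produced here by simple block averaging; SRGAN itself used bicubic downsampling. `make_pair` is a hypothetical helper.

```python
import numpy as np

def make_pair(hr: np.ndarray, scale: int = 4, patch: int = 96, rng=None):
    """Cut a random patch x patch HR crop and derive its LR counterpart.

    The LR crop is the HR crop averaged over non-overlapping scale x scale
    blocks, so its size is (patch // scale) x (patch // scale).
    """
    if patch % scale != 0:
        raise ValueError("patch size must be a multiple of scale")
    if rng is None:
        rng = np.random.default_rng()
    h, w = hr.shape[:2]
    top = rng.integers(0, h - patch + 1)    # random crop position
    left = rng.integers(0, w - patch + 1)
    hr_patch = hr[top:top + patch, left:left + patch].astype(np.float64)
    p = patch // scale
    # (patch, patch, ...) -> (p, scale, p, scale, ...), then average each block
    lr_patch = hr_patch.reshape(p, scale, p, scale, *hr_patch.shape[2:]).mean(axis=(1, 3))
    return hr_patch, lr_patch

hr_image = np.random.default_rng(0).integers(0, 256, size=(256, 256, 3)).astype(np.uint8)
hr_crop, lr_crop = make_pair(hr_image, rng=np.random.default_rng(1))
print(hr_crop.shape, lr_crop.shape)  # (96, 96, 3) (24, 24, 3)
```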

3.2. Training Process

3.2.1. RGB Training Model

3.2.2. Grayscale Training Model

3.2.3. Binary Training Model

3.3. Binary Training Model

4. Training Results and Analysis

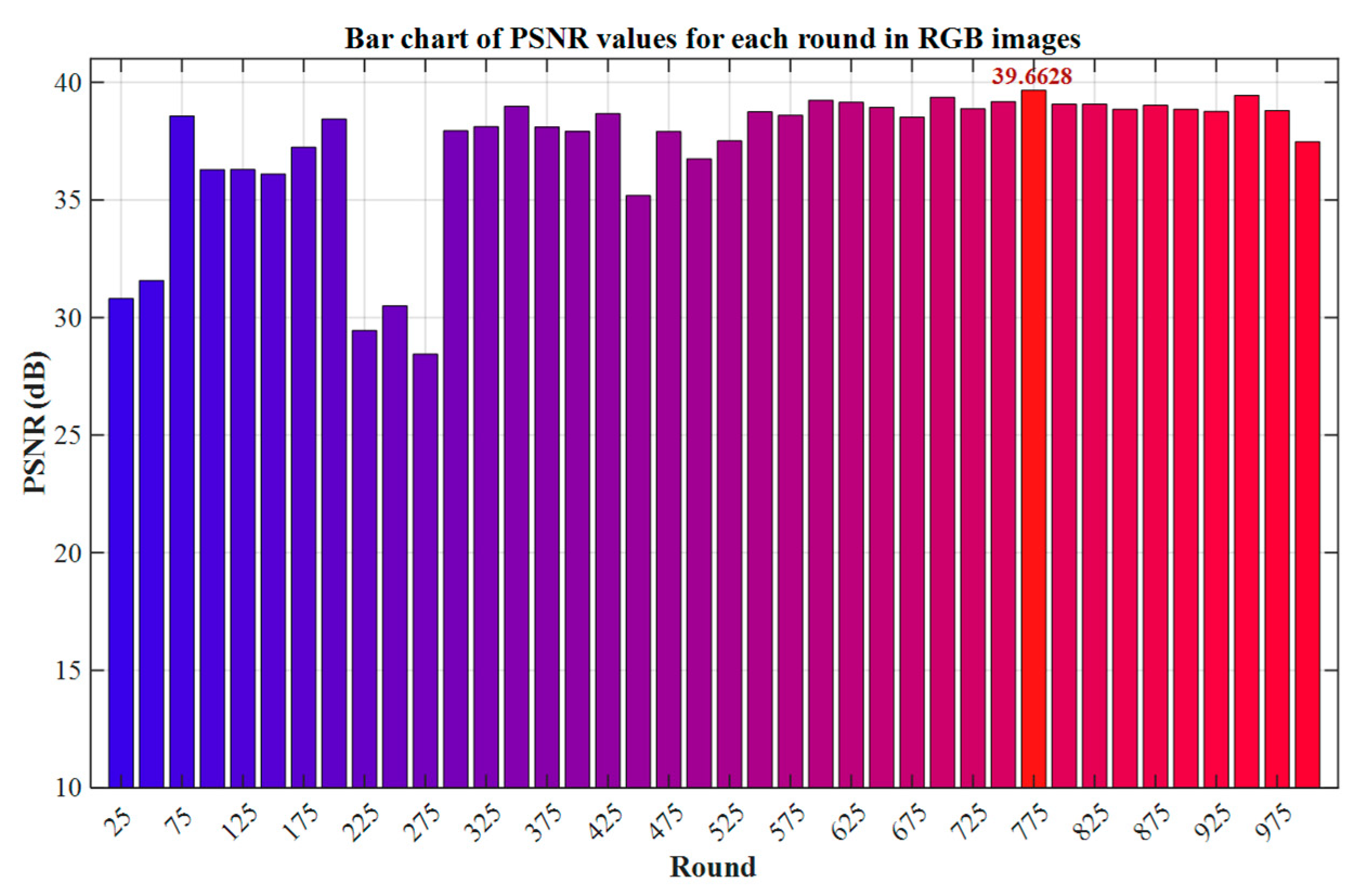

4.1. Analysis of RGB Image Results

4.2. Analysis of Grayscale Image Results

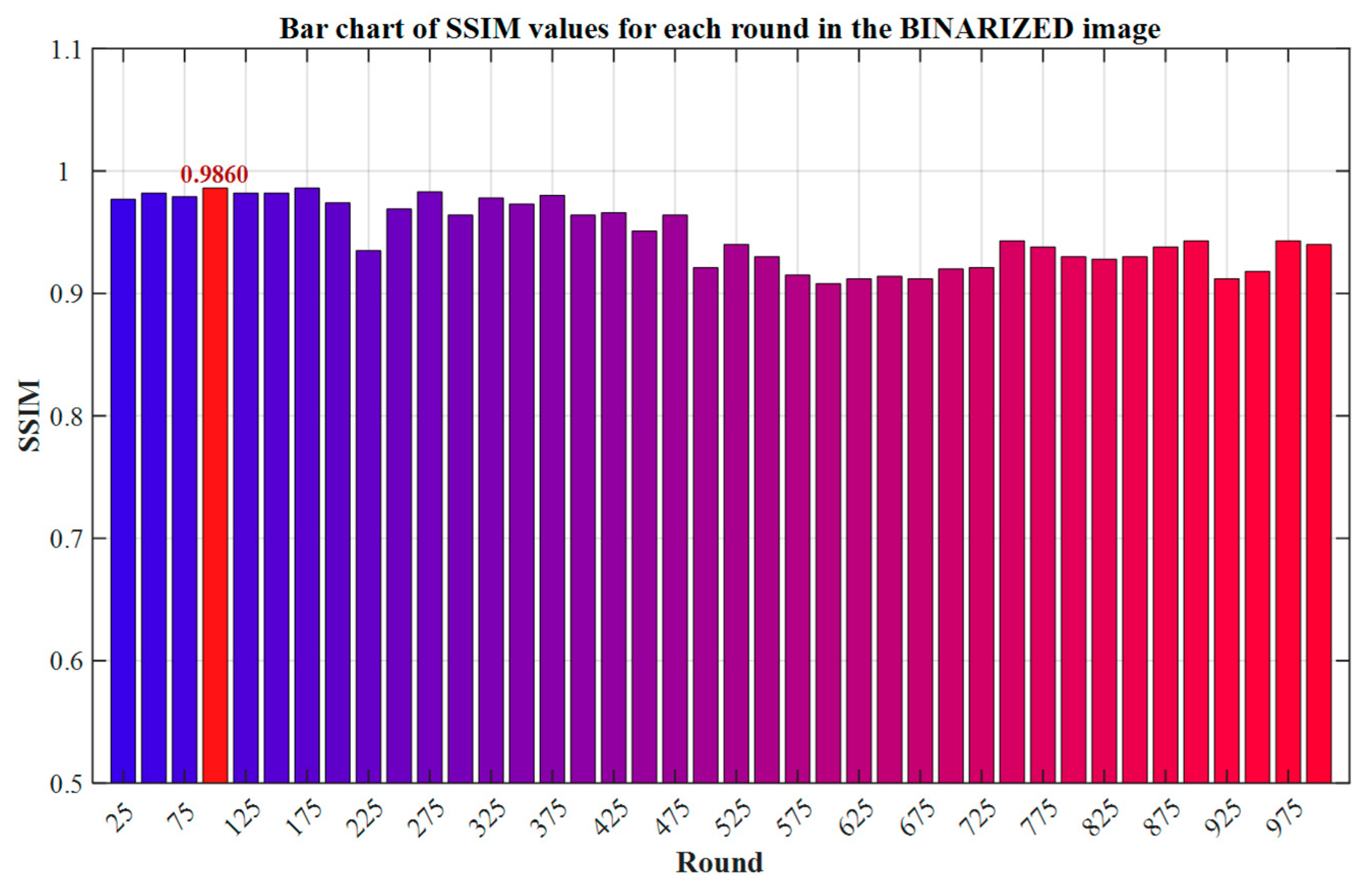

4.3. Analysis of Binary Image Results

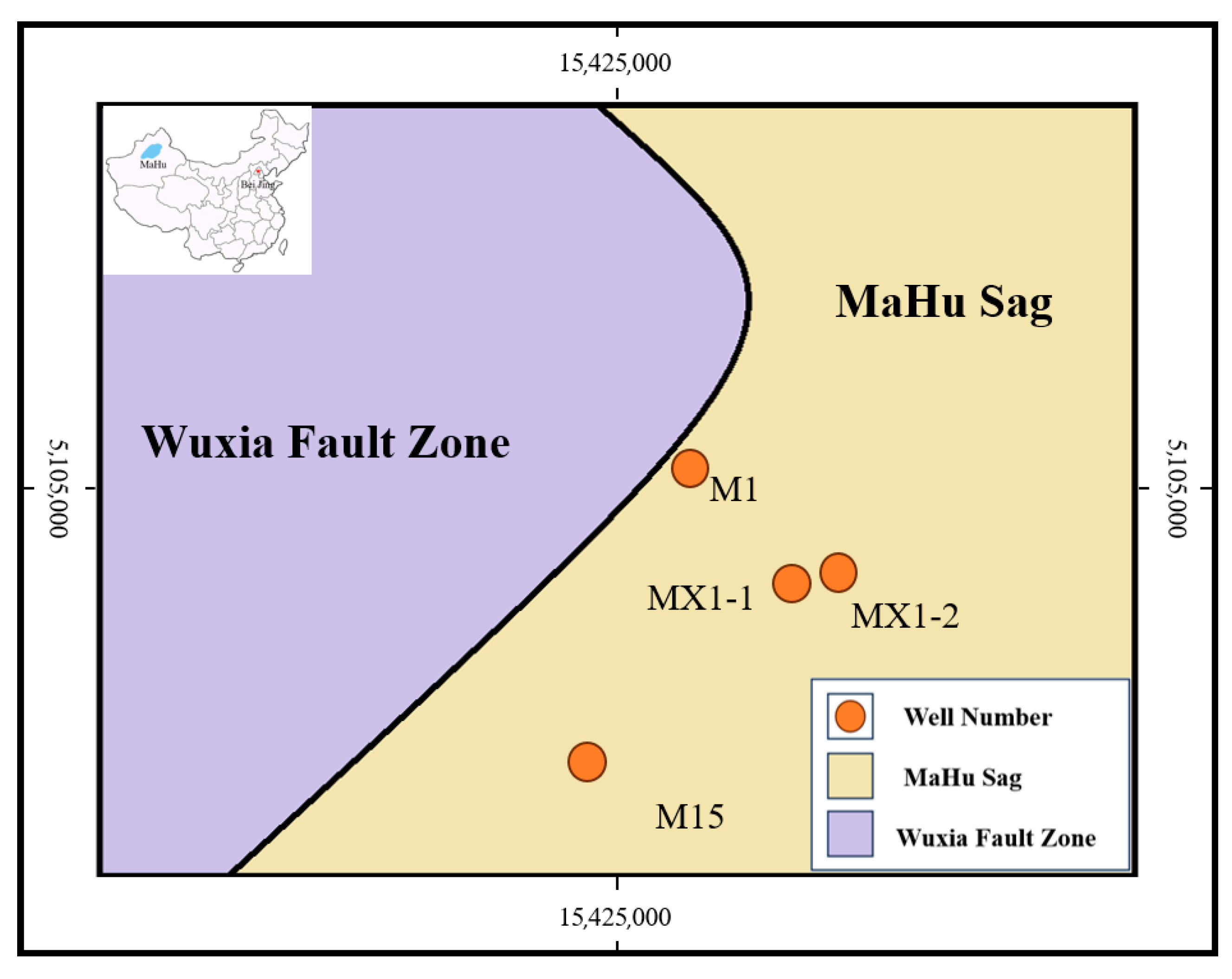

4.4. Image Tests in Other Wells

5. Discussion

- Core objective compatibility: As the first study to introduce GANs into FMI super-resolution, this work must first verify basic feasibility. SRGAN has a simple structure and trains stably, which makes it better suited to a preliminary verification of cross-domain technology transfer.

- Computational efficiency and data size: ESRGAN's more complex architecture (e.g., its RRDB modules) requires more computational resources and more training data. This study is constrained by the limited size of the specialized FMI dataset, and SRGAN's lighter design reduces the risk of overfitting.

- Geological feature fidelity requirements: The natural-image textures that ESRGAN enhances may distort the geometry of fractures in rock layers (e.g., by introducing artifacts). SRGAN, by contrast, preserves the underlying structure through its VGG content loss, which better meets the requirements of geological interpretation.

6. Conclusions

- (1) The data show that image representation and algorithm characteristics jointly determine super-resolution performance. Among the traditional interpolation methods, bilinear interpolation of grayscale images achieves the best balance (PSNR 29.39/SSIM 0.901), and its SSIM is 21.8% higher than that of the same method in the RGB domain. SRGAN, however, shows significant advantages in the RGB domain. With a PSNR of 41.39 and an SSIM of 0.992, it far exceeds the interpolation methods: its PSNR is 61.6% higher than that of bicubic interpolation, and its SSIM is 34.1% higher than that of bilinear interpolation, the best interpolation method in the RGB domain.

- (2) SRGAN delivers the best boundary reconstruction across RGB, grayscale, and binary images. In the RGB domain, its PSNR of 41.39 is 61.6% higher than that of bicubic interpolation, and its SSIM of 0.992 is 49.2% higher. In the grayscale domain, its PSNR of 40.15 and SSIM of 0.990 remain superior, exceeding bilinear interpolation by 36.6% and 9.9%, respectively. This stable performance across modalities confirms SRGAN's advantage in restoring boundaries in FMI images.

- (3) On binary images, SRGAN achieved the lowest MSE (1353.66) and the highest SSIM (0.981) of all methods tested. Even so, it could not effectively reconstruct the structural details of the gravel, because binary images lack color and grayscale-gradient information. This reveals a decoupling between objective evaluation metrics and the ability to characterize geological features.

- (4) Generative adversarial networks outperform traditional interpolation methods in improving image resolution. This indicates that deep learning is a highly feasible way to improve the resolution of conglomerate FMI logging images. Traditional interpolation methods offer limited resolution gains on complex conglomerate FMI logging images. GANs, by contrast, learn image features through adversarial training and generate high-quality, high-resolution images, which gives them broad prospects. High-precision FMI logging images are crucial in oil and gas exploration, and deep learning methods can meet this need, supporting scientific decision-making and efficient exploration.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Jiu, K.; Ding, W.; Huang, W.; Zhang, Y.; Zhao, S.; Hu, L. Fractures of lacustrine shale reservoirs, the Zhanhua Depression in the Bohai Bay Basin, eastern China. Mar. Pet. Geol. 2013, 48, 113–123.

2. Wang, Z.; Xiang, H.; Wang, L.; Xie, L.; Zhang, Z.; Gao, L.; Yan, Z.; Li, F. Fracture Characteristics and its Role in Bedrock Reservoirs in the Kunbei Fault Terrace Belt of Qaidam Basin, China. Front. Earth Sci. 2022, 10, 865534.

3. Sun, S.; Huang, S.; Gomez-Rivas, E.; Griera, A.; Liu, B.; Xu, L.; Wen, Y.; Dong, D.; Shi, Z.; Chang, Y.; et al. Characterization of natural fractures in deep-marine shales: A case study of the Wufeng and Longmaxi shale in the Luzhou Block Sichuan Basin, China. Front. Earth Sci. 2022, 17, 337–350.

4. Yin, S.; Tian, T.; Wu, Z. Developmental characteristics and distribution law of fractures in a tight sandstone reservoir in a low-amplitude tectonic zone, eastern Ordos Basin, China. Geol. J. 2020, 55, 17.

5. He, B.; Liu, Y.; Qiu, C.; Liu, Y.; Su, C.; Tang, Q.; Tian, W.; Wu, G. The Strike-Slip Fault Effects on the Ediacaran Carbonate Tight Reservoirs in the Central Sichuan Basin, China. Energies 2023, 16, 4041.

6. Wang, B.; Liu, X.; Sima, L. Grading evaluation and prediction of fracture-cavity reservoirs in Cambrian Longwangmiao Formation of Moxi area, Sichuan Basin, SW China. Pet. Explor. Dev. 2019, 46, 301–313.

7. Ren, Y.; Wei, W.; Zhu, P.; Zhang, X.; Chen, K.; Liu, Y. Characteristics, classification and KNN-based evaluation of paleokarst carbonate reservoirs: A case study of Feixianguan Formation in northeastern Sichuan Basin, China. Energy Geosci. 2023, 4, 100156.

8. Gao, L.; Shi, X.; Liu, J.; Chen, X. Simulation-based three-dimensional model of wellbore stability in fractured formation using discrete element method based on formation microscanner image: A case study of Tarim Basin, China. J. Nat. Gas Sci. Eng. 2022, 97, 104341.

9. Pang, H.; Chen, M.; Wang, H.; Jin, Y.; Lu, Y.; Li, J. Lost circulation pattern in the vug-fractured limestone formation. Energy Rep. 2023, 9, 941–954.

10. Chen, S. The Wavelet Transformation Sensitiveness to Direction of Image Characteristics and its Application in Formation MicroScanner Image Fracture Identification. Sens. Transducers J. 2014, 173, 16.

11. Yu, Z.; Wang, Z.; Wang, J. Continuous Wavelet Transform and Dynamic Time Warping-Based Fine Division and Correlation of Glutenite Sedimentary Cycles. Math. Geosci. 2022, 55, 521–539.

12. Xiang, R.; Yang, H.; Yan, Z.; Mohamed Taha, A.M.; Xu, X.; Wu, T. Super-resolution reconstruction of GOSAT CO2 products using bicubic interpolation. Geocarto Int. 2022, 37, 15187–15211.

13. Wang, Y.; Rahman, S.S.; Arns, C.H. Super resolution reconstruction of μ-CT image of rock sample using neighbour embedding algorithm. Phys. A 2018, 493, 177–188.

14. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

15. Da Wang, Y.; Armstrong, R.T.; Mostaghimi, P. Enhancing Resolution of Digital Rock Images with Super Resolution Convolutional Neural Networks. J. Pet. Sci. Eng. 2019, 182, 106261.

16. Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

17. Deng, X.; Hua, W.; Liu, X.; Chen, S.; Zhang, W.; Duan, J. D-SRCAGAN: DEM Super-resolution Generative Adversarial Network. IEEE Geosci. Remote Sens. Lett. 2022.

18. Shan, L.; Liu, C.; Liu, Y.; Kong, W.; Hei, X. Rock CT Image Super-Resolution Using Residual Dual-Channel Attention Generative Adversarial Network. Energies 2022, 15, 5115.

19. Soltanmohammadi, R.; Faroughi, S.A. A comparative analysis of super-resolution techniques for enhancing micro-CT images of carbonate rocks. Appl. Comput. Geosci. 2023, 20, 100143.

20. Li, R.; Liu, W.; Gong, W.; Zhu, X.; Wang, X. Super resolution for single satellite image using a generative adversarial network. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 5, 591–596.

21. Zhang, T.; Hu, G.; Yang, Y.; Du, Y. A Super-Resolution Reconstruction Method for Shale Based on Generative Adversarial Network. Transp. Porous Media 2023, 150, 383–426.

22. Li, J.; Teng, Q.; Zhang, N.; Chen, H.; He, X. Deep learning method of stochastic reconstruction of three-dimensional digital cores from a two-dimensional image. Phys. Rev. E 2023, 107, 055309.

23. Zhou, R.; Wu, C. 3D reconstruction of digital rock guided by petrophysical parameters with deep learning. Geoenergy Sci. Eng. 2023, 231, 212320.

24. Liu, B.; Chen, J. A super resolution algorithm based on attention mechanism and SRGAN network. IEEE Access 2021, 9, 139138–139145.

25. Wang, C.H.; Yan, Y.M.; Han, X.W.; Liang, T.; Wan, Z.; Wang, Q. Dehazing Algorithm for UAV Aerial Images Based on Improved SRGAN. Laser Infrared 2024, 54, 991–997.

26. Jadhav, P.; Sairam, V.A.; Bhojane, N.; Singh, A.; Gite, S.; Pradhan, B.; Bachute, M.; Alamri, A. Multimodal Gas Detection Using E-Nose and Thermal Images: An Approach Utilizing SRGAN and Sparse Autoencoder. Comput. Mater. Contin. 2025, 83, 3493–3517.

27. Sun, C.; Wang, C.; He, C. Image Super-Resolution Reconstruction Algorithm Based on SRGAN and Swin Transformer. Symmetry 2025, 17, 337.

28. Güngür, Z.; Ayaz, I.; Tümen, V. Biomedical Image Super-Resolution Using SRGAN: Enhancing Diagnostic Accuracy. BEU Fen Bilim. Derg. 2025, 14, 198–212.

29. Wang, F.; Zhao, J. Digital Core Image Reconstruction Based on Deep Learning and Evaluation of Reconstruction Effect. J. Cent. South Univ. (Nat. Sci. Ed.) 2022, 53, 4412–4424.

30. Xie, H.; Xie, K.; Yang, H. Research Progress of Image Super-resolution Methods. Comput. Eng. Appl. 2020, 56, 34–41.

31. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

32. Jia, J. Face Super-Resolution Reconstruction Based on Generative Adversarial Nets and Face Recognition. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2018; p. 14.

33. Zhu, X.; Yao, S.; Sun, B.; Qian, Y. Image quality assessment: Combining the characteristics of HVS and structural similarity index. J. Harbin Inst. Technol. 2018, 50, 121–128.

34. Li, W.; Zhang, X. Depth Image Super-resolution Reconstruction Method Based on Convolutional Neural Network. J. Electron. Meas. Instrum. 2017, 31, 1918–1928.

35. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

36. Horé, A.; Ziou, D. Image Quality Metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

37. Huynh-Thu, Q.; Ghanbari, M. Scope of validity of PSNR in image/video quality assessment. Electron. Lett. 2008, 44, 800–801.

38. Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

| Research Objectives/Application Areas | Researchers (Year) | Methods/Techniques | Problems Solved | Limitations |
|---|---|---|---|---|
| Research on fractures in shale reservoirs | Kai Jiu (2013), Zhao Sheng Wang (2022), Sha Sha Sun (2022) [1,2,3] | FMI + core samples, thin sections, scanning electron microscopy (SEM) | Systematically identifies the types and development characteristics of fractures in shale reservoirs. | FMI resolution is lower than that of core scanning images, which limits the accuracy of micro-crack identification. |
| Characterization of fractures in tight sandstone reservoirs | Shuai Yin (2020) [4] | FMI + lithology and logging data; FMI + rock mechanics and hydraulic fracturing data | Identifies reservoir distribution; characterizes the coupling between fractures and stress. | FMI lacks fine detail, which hinders precise depiction of small-scale cracks and structural features. |
| Research on fractured reservoirs in fault zones | Bing He (2023) [5] | FMI + seismic and production data | In-depth study of fractured reservoirs within the fault zone. | Limited resolution leads to insufficient characterization of fine structures within the fault zone. |
| Reservoir identification | Wang Bei (2019), Yang Ren (2023) [6,7] | FMI + core samples; logging + seismic waveform classification; K-nearest neighbors | Uses multiple data types to identify reservoirs. | FMI data quality affects the accuracy of the identification model's input features. |
| Numerical modeling | Lei Yu Gao (2022), Hui Wen Pang (2023) [8,9] | FMI applied to finite element (FEM) and discrete element (DEM) modeling | Provides geometric information for numerical models. | Geometric information from low-resolution FMI logging images has limited accuracy. |
| Crack identification and reservoir assessment | Sai Jun Chen (2014), Zhi Chao Yu (2022) [10,11] | FMI + wavelet transform | Uses signal processing techniques to assist crack identification. | Resolution is the main drawback; signal processing cannot fundamentally restore lost high-frequency details. |
| Image super-resolution reconstruction (general) | Interpolation: Ru Xiang (2022) [12]; neighbor embedding: Yuzhu Wang (2018) [13]; SRCNN: Chao Dong (2016) [14] | Non-learning methods: interpolation (e.g., bicubic) and reconstruction methods (MAP). Learning methods: neighbor embedding, SRCNN. | Generate high-resolution images from low-resolution images. | Interpolation gives limited improvement; traditional learning methods have limited capability; SRCNN has weak perceptual quality and lacks high-frequency details. |
| Rock image super-resolution | Ying Da Wang (2019) [15] | SRCNN applied to CT images of sandstone/carbonate rocks | Significantly improves CT image reconstruction quality compared with bicubic interpolation. | SRCNN results still lack realism and do not adequately restore high-frequency details; not applied to FMI logging images. |
| Improvement of super-resolution technology | Christian Ledig et al. (2017) [16] | SRGAN (introduces a perceptual loss and a discriminator) | Generates more realistic images, significantly improving perceptual quality. | Its potential for FMI super-resolution reconstruction has not been explored or verified. |
| Expansion of SRGAN applications | Xiaotong Deng (2022) [17], Liqun Shan (2022) [18], Ramin Soltanmohammadi (2023) [19], Ran Li (2022) [20], Ting Zhang (2023) [21], Jian Li (2023) [22], Rong Zhou (2023) [23] | Applied to digital elevation models, rock CT images, satellite remote sensing images, and digital cores | Verifies the effectiveness of SRGAN on various image types. | SRGAN has not yet been applied to FMI logging images. |
| Improving general image super-resolution quality and high-frequency detail restoration | Baozhong Liu (2021) [24] | Channel attention (CA module) added to the generator; BN layers removed; MSE loss replaced with L1 loss | 1. SRGAN does not adequately represent high-frequency features. 2. BN layers cause unstable training and artifacts. 3. MSE loss is sensitive to noise. | The larger network increases computational load. |
| Haze removal from drone aerial images | Chaohui Wang (2024) [25] | DH-SRGAN (improved discriminator with SResblock + CBAM attention, no upsampling module) | Haze blurs images and causes loss of detail; traditional algorithms generalize poorly. | 1. Relies on a synthetic fog dataset; generalization to real scenes needs verification. 2. High-noise environments are not addressed. |
| Multimodal gas detection | Pratik Jadhav (2025) [26] | SRGAN (thermal image super-resolution) + sparse autoencoder (colorization) + multimodal CNN (fusion of electronic nose and thermal images) | Low-cost thermal cameras have low resolution and grayscale output, limiting detection accuracy. | 1. SRGAN is computationally heavy, with significant latency for real-time deployment. 2. Only two gas types (smoke and perfume) are supported. 3. Training fluctuates significantly. |
| Image super-resolution reconstruction | Chuilian Sun (2025) [27] | HO-SRGAN-ST (SRGAN + Swin Transformer + adaptive denoising strategy) | Loss of complex image details and blurred edges; conventional SRGAN models long-range dependencies poorly. | 1. Details blur under high noise or complex textures. 2. Swin Transformer has high computational complexity. |
| Verifying SRGAN's effectiveness for biomedical image diagnosis | Zübeyr Güngür (2025) [28] | WGAN-GP framework for training stability, with perceptual loss retained for visual quality | 1. Conventional SRGAN trains unstably on medical data. 2. Pathological details are blurred. | Limited domain generalization. |
| Super-resolution of FMI logging images | This research | SRGAN applied to FMI logging image super-resolution (FMI dataset construction, preprocessing, and SRGAN model training and application) | Core objective: to address the key bottleneck of inherently insufficient FMI resolution. | Not benchmarked against the latest super-resolution models. |

| Parameter Name | Value |
|---|---|
| Size of the kernel in the first and last convolutional layers | 9 |
| Size of the convolutional kernel in the middle layers | 3 |
| Number of channels in the middle layers | 64 |
| Number of residual blocks | 16 |
| Activation function used in the residual blocks | PReLU |
| Upsampling method | Sub-pixel convolution |
| Number of layers in the generator network | 36 |
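
The generator table lists sub-pixel convolution as the upsampling method. In this scheme, a convolution first produces C·r² channels, which are then rearranged into an image r times larger in each dimension. The NumPy sketch below shows only the rearrangement step (often called pixel shuffle), with a channel ordering intended to match PyTorch's `torch.nn.PixelShuffle`. The 256 → 64 channel example mirrors the ×2 upsampling blocks of the original SRGAN and is an assumption about this paper's configuration.

```python
import numpy as np

def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange a (C*r*r, H, W) feature map into a (C, H*r, W*r) map."""
    c_r2, h, w = x.shape
    if c_r2 % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = c_r2 // (r * r)
    # Split channels into (C, r, r); the two r axes become sub-pixel row/column offsets.
    x = x.reshape(c, r, r, h, w)
    # Interleave the offsets with the spatial axes: (C, H, r, W, r) -> (C, H*r, W*r).
    return x.transpose(0, 3, 1, 4, 2).reshape(c, h * r, w * r)

# A 256-channel 24 x 24 map becomes a 64-channel 48 x 48 map (x2 upsampling).
features = np.zeros((256, 24, 24))
print(pixel_shuffle(features, 2).shape)  # (64, 48, 48)
```

Two such ×2 stages yield the ×4 magnification that SRGAN typically uses. All learnable parameters sit in the preceding convolution; the shuffle itself is a pure reindexing.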

| Parameter Name | Value |
|---|---|
| The size of the kernels for all convolutional layers | 3 |
| The initial number of channels (doubled for each layer) | 64 |
| The number of convolutional blocks | 8 |
| The number of neurons in the fully connected layer | 1024 |
| The activation function used after the convolutional layers | LeakyReLU |
| The number of layers in the discriminator network | 19 |

| Parameter Name | Value |
|---|---|
| Generator optimizer | Adam |
| Discriminator optimizer | Adam |
| Initial learning rate | 0.0002 |
| Learning rate decay | Multiplied by 0.1 after 50% of the epochs |
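
The decay rule in the training table is a single step schedule. The plain-Python sketch below expresses it for a hypothetical total epoch count, which the table does not state. In PyTorch, the same behavior is usually obtained with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=total_epochs // 2, gamma=0.1)`, assuming the scheduler is stepped once per epoch.

```python
def learning_rate(epoch: int, total_epochs: int,
                  base_lr: float = 2e-4, decay: float = 0.1) -> float:
    """Step schedule: base_lr for the first half of training, base_lr * decay afterwards.

    epoch is 0-based; total_epochs is hypothetical (not stated in the table).
    """
    return base_lr if epoch < total_epochs // 2 else base_lr * decay

for epoch in (0, 49, 50, 99):
    print(epoch, learning_rate(epoch, total_epochs=100))
```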

| Loss Type | Loss Function | Weight | Functionality |
|---|---|---|---|
| Content loss | MSE | 1.0 | Measures the difference between SR and HR images in feature space |
| Adversarial loss | BCEWithLogitsLoss | 0.001 | Enhances visual realism |
| Discriminator loss | BCEWithLogitsLoss | 1.0 | Trains the discriminator |
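
The loss table combines an MSE content loss with a 0.001-weighted adversarial loss, and both adversarial terms use binary cross-entropy on logits (PyTorch's `torch.nn.BCEWithLogitsLoss`). The NumPy sketch below reproduces that arithmetic. It takes precomputed feature maps as input, so the VGG feature extractor is not shown. Summing the real and fake terms in the discriminator loss is an assumption.

```python
import numpy as np

def bce_with_logits(logits, targets) -> float:
    """Mean binary cross-entropy computed directly from raw logits.

    Uses max(z, 0) - z*y + log(1 + exp(-|z|)), which equals
    -[y*log(sigmoid(z)) + (1-y)*log(1-sigmoid(z))] but does not overflow.
    """
    z = np.asarray(logits, dtype=np.float64)
    y = np.asarray(targets, dtype=np.float64)
    return float(np.mean(np.maximum(z, 0.0) - z * y + np.log1p(np.exp(-np.abs(z)))))

def generator_loss(sr_features, hr_features, d_logits_on_sr,
                   content_weight: float = 1.0, adversarial_weight: float = 1e-3) -> float:
    """Content loss (MSE between feature maps) + weighted adversarial loss."""
    sr = np.asarray(sr_features, dtype=np.float64)
    hr = np.asarray(hr_features, dtype=np.float64)
    content = np.mean((sr - hr) ** 2)
    # The generator wants the discriminator to label its outputs as real (target 1).
    adversarial = bce_with_logits(d_logits_on_sr, np.ones_like(d_logits_on_sr, dtype=np.float64))
    return float(content_weight * content + adversarial_weight * adversarial)

def discriminator_loss(d_logits_on_hr, d_logits_on_sr) -> float:
    """Real HR images should score 1 and generated SR images 0 (terms summed: an assumption)."""
    real = bce_with_logits(d_logits_on_hr, np.ones_like(d_logits_on_hr, dtype=np.float64))
    fake = bce_with_logits(d_logits_on_sr, np.zeros_like(d_logits_on_sr, dtype=np.float64))
    return real + fake

feats = np.ones((2, 8))
print(generator_loss(feats, feats, [0.0]), discriminator_loss([0.0], [0.0]))
```

The 0.001 weight keeps the adversarial term from overwhelming the content term early in training, when the discriminator's logits can be large.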

| Metric | Original Image | Nearest Neighbor Interpolation | Bilinear Interpolation | Bicubic Interpolation | SRGAN |
|---|---|---|---|---|---|
| PSNR (dB) | 0 | 28.423 | 30.387 | 25.6192 | 41.390 |
| SSIM | 0 | 0.688 | 0.740 | 0.665 | 0.992 |

| Metric | Original Image | Nearest Neighbor Interpolation | Bilinear Interpolation | Bicubic Interpolation | SRGAN |
|---|---|---|---|---|---|
| PSNR (dB) | 0 | 28.9890 | 29.3879 | 26.8758 | 40.1510 |
| SSIM | 0 | 0.883 | 0.901 | 0.846 | 0.990 |

| Metric | Original Image | Nearest Neighbor Interpolation | Bilinear Interpolation | Bicubic Interpolation | SRGAN |
|---|---|---|---|---|---|
| MSE | 0 | 1851.35 | 1970.27 | 1435.6845 | 1353.66 |
| SSIM | 0 | 0.803 | 0.795 | 0.837 | 0.981 |

| Well (RGB images) | PSNR (dB) | SSIM |
|---|---|---|
| M1 | 40.7137 | 0.996 |
| MX1-1 | 39.5877 | 0.993 |
| MX1-2 | 39.5745 | 0.995 |
| X93 | 39.1256 | 0.996 |

| Well (grayscale images) | PSNR (dB) | SSIM |
|---|---|---|
| M1 | 39.1156 | 0.986 |
| MX1-1 | 38.9902 | 0.991 |
| MX1-2 | 39.1024 | 0.992 |
| X93 | 38.9958 | 0.992 |

| Well (binary images) | SSIM | MSE |
|---|---|---|
| M1 | 0.979 | 1343.78 |
| MX1-1 | 0.978 | 1320.45 |
| MX1-2 | 0.983 | 1377.52 |
| X93 | 0.980 | 1389.43 |

| Image Type | Nearest Neighbor Interpolation (SSIM) | Bilinear Interpolation (SSIM) | Bicubic Interpolation (SSIM) | SRGAN Improvement over Best Interpolation (SSIM) |
|---|---|---|---|---|
| RGB | 0.688 | 0.740 | 0.665 | +34.1% (relative to bilinear) |
| Grayscale | 0.883 | 0.901 | 0.846 | +9.9% (relative to bilinear) |
| Binary | 0.803 | 0.795 | 0.837 | +17.2% (relative to bicubic) |
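
The improvement column gives SRGAN's SSIM gain relative to the best interpolation method for each image type. The short script below recomputes it from the SSIM values in the RGB, grayscale, and binary results tables. For RGB images, the exact gain is about 34.05%, which rounds to the 34.1% quoted in the Conclusions. The dictionary layout and function name are illustrative only.

```python
# SSIM values copied from the RGB, grayscale, and binary results tables
ssim = {
    "RGB":       {"nearest": 0.688, "bilinear": 0.740, "bicubic": 0.665, "srgan": 0.992},
    "Grayscale": {"nearest": 0.883, "bilinear": 0.901, "bicubic": 0.846, "srgan": 0.990},
    "Binary":    {"nearest": 0.803, "bilinear": 0.795, "bicubic": 0.837, "srgan": 0.981},
}

def improvement_over_best_interpolation(scores: dict) -> tuple:
    """Return (best interpolation method, SRGAN's relative SSIM gain in %, 1 d.p.)."""
    best = max((name for name in scores if name != "srgan"), key=scores.get)
    gain = (scores["srgan"] - scores[best]) / scores[best] * 100.0
    return best, round(gain, 1)

for image_type, scores in ssim.items():
    best, gain = improvement_over_best_interpolation(scores)
    print(f"{image_type}: +{gain}% relative to {best}")
# RGB: +34.1% relative to bilinear
# Grayscale: +9.9% relative to bilinear
# Binary: +17.2% relative to bicubic
```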

| Researcher (Year) | Application Field | Dataset | Model Architecture | Key Improvements | Performance Metrics | Key Findings |
|---|---|---|---|---|---|---|
| Xiaotong Deng (2022) [17] | Digital elevation model (DEM) | DEMs of plateau basins in the Sichuan and Guizhou regions | D-SRCAGAN | 1. Dual-layer residual channel attention block (ResCAB). 2. BN layers removed. 3. L1 loss function. | RMSE reduced by 17.4% (60 m → 30 m task) | 1. Compared with bicubic interpolation and SRGAN, D-SRCAGAN reconstructions retain more topographic features. 2. Removing BN layers improves training stability and reduces computational complexity. |
| Liqun Shan (2022) [18] | Rock CT imaging | Sandstone/coal/limestone CT images | RDCA-SRGAN | 1. Residual dual-channel attention block (RDCAB). 2. Global pooling in the discriminator. 3. Fusion of adversarial and perceptual losses. | SSIM: 0.906 | 1. Crack characteristics are clearly resolved. 2. Dual-channel attention lets the network focus on more informative channels, improving discriminative learning. |
| Ran Li (2022) [20] | Satellite image super-resolution (general) | GF-2 satellite images | SRGAN | 1. Generator: pre-trained deep residual network (ResNet). 2. Discriminator: distinguishes generated SR images from real HR images. 3. Loss function: weighted content loss (MSE) + adversarial loss. | Residential area: PSNR 29.93 dB, SSIM 0.8733; River: PSNR 29.17 dB, SSIM 0.8957; Farmland: PSNR 24.65 dB, SSIM 0.7356 | 1. Visual advantage: building rooftops and street edges appear sharper, and farmland textures are richer (Figure 2 in [20]). 2. High-frequency information: reconstructed images retain more high-frequency detail. 3. Generalization: existing model parameters are reused to optimize performance. |
| Baozhong Liu (2021) [24] | General images | Everyday scenes containing 20 object categories | SRGAN + channel attention | 1. Channel attention introduced into SRGAN for the first time: the ResCA module increases the weight of high-frequency features. 2. BN layers removed from the generator to stabilize deep-network training and reduce artifacts. 3. Optimized loss function. | PSNR: 30.20, SSIM: 0.873 | 1. Attention mechanism: a 10-layer ResCA module improves PSNR by 0.58 dB over a 5-layer module (Set5) and better restores high-frequency details. 2. Removing BN layers eliminates artifacts caused by differences between the training and test sets and improves model stability. 3. L1 vs. MSE loss: L1 loss outperforms MSE loss in PSNR/SSIM and reduces the influence of large error terms on reconstruction. |
| Chaohui Wang (2024) [25] | UAV aerial image processing | VisDrone2019 outdoor aerial photographs | DH-SRGAN | 1. SRGAN applied to haze removal for the first time. 2. Lightweight design: SResblock reduces the number of layers to speed up inference. 3. CBAM optimization: adaptively enhances channel features related to haze concentration and suppresses irrelevant interference. | PSNR: 24.71, SSIM: 0.9529 | 1. Advantages: dehazing of highly reflective urban objects outperforms Retinex/DCP, and CBAM markedly improves detail restoration. 2. Limitations: training data are simulated fog images, so generalization to real dense fog needs verification; SSIM is slightly lower than that of the original SRGAN on some test images. |
| Pratik Jadhav (2025) [26] | Industrial gas safety inspection | MultiModalGasData | Three-stage architecture: 1. SRGAN; 2. SCAE; 3. multimodal CNN | 1. Low-cost thermal imaging adapter designed for the Seek Compact camera. 2. End-to-end preprocessing: SRGAN enhances resolution. 3. Late fusion strategy: fusion of high-level features. | PSNR = 68.74, SSIM = 90.28 | 1. Late fusion: 0.66% higher accuracy than early fusion (due to reduced inter-modal interference). 2. Image enhancement is necessary: SRGAN's PSNR is 9.85 higher than that of bicubic interpolation (Table 4 in [26]). 3. Computational bottleneck: SRGAN training requires 25 rounds (A400 GPU), and real-time deployment needs optimization. |
| Chuilian Sun (2025) [27] | General images | Natural landscapes, urban buildings, complex textures, and portraits | HO-SRGAN-ST | 1. First integration of SRGAN and Swin Transformer: window attention and the shifted-window mechanism optimize global feature extraction, combined with MSE, perceptual, and adversarial losses. 2. Adaptive denoising: the noise level is estimated from local variance, and convolution kernel weights are adjusted dynamically. | PSNR: 43.81, SSIM: 0.94 | 1. On images with complex textures (Urban100) and rich edge details, PSNR increased by 3.15 dB and SSIM by 0.08 compared with HO-SRGAN alone. 2. Reconstruction time fell to 0.02 s, 8 times the efficiency of SRGAN. 3. Adaptive denoising markedly reduced noise interference, with an MAE (mean absolute error) as low as 0.0978. |
| Zübeyr Güngür (2025) [28] | Biomedical image super-resolution | 1. Skin cancer dataset. 2. Blood cell cancer dataset. 3. Retinal fundus dataset. | SRGAN | 1. Medical image adaptation: training optimized for three types of medical images. 2. Perceptual loss instead of MSE to enhance visual quality (rather than pixel-level error). 3. Cross-dataset validation: a unified framework for different medical imaging modalities. | Skin cancer: PSNR = 31.06, SSIM = 0.858; Retinal fundus: PSNR = 30.71, SSIM = 0.9430; Leukemia: PSNR = 30.97, SSIM = 0.8818 | 1. Diagnostic quality: all PSNR values exceed 30. 2. Structural fidelity: retinal SSIM reaches 0.943. 3. Generalization: a single model adapts to three types of medical images. 4. Clinical value: improves the clarity of skin lesions, blood cells, and retinal details. |
| This research | Super-resolution of FMI logging images | FMI dataset | FMI + SRGAN | 1. SRGAN applied to FMI logging images. 2. Input features optimized through grayscale/binarization preprocessing. | PSNR: 41.39, SSIM: 0.992 | 1. SRGAN performs best on RGB images, effectively restoring edge details. 2. Binary images lose grayscale gradient information and therefore cannot reconstruct the details of geological structures. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, C.; Qi, X.; Chen, L.; Li, Y.; Fu, J.; Liu, Z. Super-Resolution Reconstruction of Formation MicroScanner Images Based on the SRGAN Algorithm. Processes 2025, 13, 2284. https://doi.org/10.3390/pr13072284

Ma C, Qi X, Chen L, Li Y, Fu J, Liu Z. Super-Resolution Reconstruction of Formation MicroScanner Images Based on the SRGAN Algorithm. Processes. 2025; 13(7):2284. https://doi.org/10.3390/pr13072284

Chicago/Turabian Style: Ma, Changqiang, Xinghua Qi, Liangyu Chen, Yonggui Li, Jianwei Fu, and Zejun Liu. 2025. "Super-Resolution Reconstruction of Formation MicroScanner Images Based on the SRGAN Algorithm" Processes 13, no. 7: 2284. https://doi.org/10.3390/pr13072284

APA Style: Ma, C., Qi, X., Chen, L., Li, Y., Fu, J., & Liu, Z. (2025). Super-Resolution Reconstruction of Formation MicroScanner Images Based on the SRGAN Algorithm. Processes, 13(7), 2284. https://doi.org/10.3390/pr13072284