1. Introduction

Transformer equipment plays a critical role in ensuring the stability and reliability of power systems. During their long-term operation and maintenance, transformers generate large volumes of unstructured textual data—such as defect records, maintenance reports, and fault logs—which encapsulate essential knowledge about fault causes, equipment health, and prior repair strategies. Effectively extracting and utilizing these data is vital for enhancing transformer reliability, reducing risk, and supporting intelligent maintenance decisions.

Before the advent of data-driven models, transformer fault diagnosis was dominated by rule-based and signal-based techniques. Dissolved gas analysis (DGA) remains a widely adopted method for identifying incipient insulation faults based on gas ratios [1], while fuzzy logic systems and expert systems were developed to model expert reasoning under uncertainty [2]. These methods, although interpretable and grounded in engineering heuristics, often lacked adaptability to dynamic or composite fault patterns.

To overcome the limitations of rule-based systems, researchers introduced various machine learning and deep learning methods for transformer diagnosis [3]. References [4,5] introduced the application of CNNs (convolutional neural networks) and RNNs (recurrent neural networks) in natural language processing tasks, specifically syntactic parsing and sentiment classification, where CNNs captured local syntactic features and RNNs modeled sequential dependencies. Reference [6] further proposed a named entity recognition method based on CRFs (conditional random fields), effectively integrating contextual text features for structured information extraction. This method has been widely applied in the power sector. References [7,8,9,10,11] proposed and improved a Bi-LSTM-CRF (bidirectional long short-term memory with conditional random field) method, which was applied to unstructured text data recognition in power systems. This approach enhanced the robustness of named entity and relation extraction. They further proposed a Bi-LSTM-CNN-CRF hybrid model optimized for dispatching work orders in smart grid environments, improving the recognition of technical terms and equipment entities in semi-structured maintenance texts. Despite these advances, most of the aforementioned approaches focus on structured signals and require intensive feature engineering. They often lack the semantic understanding required for integrating unstructured maintenance records, limiting their adaptability to context-rich diagnostic reasoning.

In recent years, numerous LLMs (large language models) have emerged, including GPT-4 [12], PaLM [13], ChatGLM4 [14], Alpaca [15], BERT [16], and Qwen [17]. Extensive experiments have demonstrated that, with parameter counts reaching hundreds of billions, such models possess advanced capabilities in natural language understanding and logical reasoning [12,13,14,15,16,17]. In particular, Qwen has demonstrated strong generalization ability and domain adaptability. It is reported to outperform comparable SOTA (state-of-the-art) models across its evaluations and shows that a wide range of tasks can be solved in few-shot or even zero-shot scenarios [17]. However, the application of LLMs to power equipment fault analysis remains underexplored, especially for tasks requiring expert-level reasoning and knowledge generation. Most existing work performs simple information extraction using word vectors obtained through pre-training. Reference [18] builds on the BERT model and uses knowledge of event triples to enhance the representation ability of the language model. Reference [19] improved the masking mechanism on this basis and trained and optimized the model for the text analysis scenario of power equipment. Reference [20] enhanced the ERNIE (enhanced representation through knowledge integration) model by integrating a multi-segment masking mechanism, which allowed the model to better capture long-range semantic dependencies and improved both its semantic representation capability and generalization performance in downstream tasks.

The pre-training of large language models typically demands extensive computational resources and prolonged training time, as well as access to large-scale, high-quality datasets. However, in transformer fault diagnosis, the rarity of certain fault types leads to data insufficiency, posing challenges for model training. This data sparsity presents a significant challenge for effectively applying LLMs in such specialized domains and underscores the need for small-sample learning strategies. Furthermore, the unstructured textual data related to transformer equipment are rich in domain-specific terminology and expert-level knowledge unique to the power industry. Conventional external knowledge augmentation methods used in LLMs often fail to capture this domain specificity, thereby falling short in satisfying the demands of practical knowledge generation tasks. To address this, it is essential to apply domain-adaptive fine-tuning techniques that enable LLMs to internalize specialized task patterns and industry-specific knowledge structures.

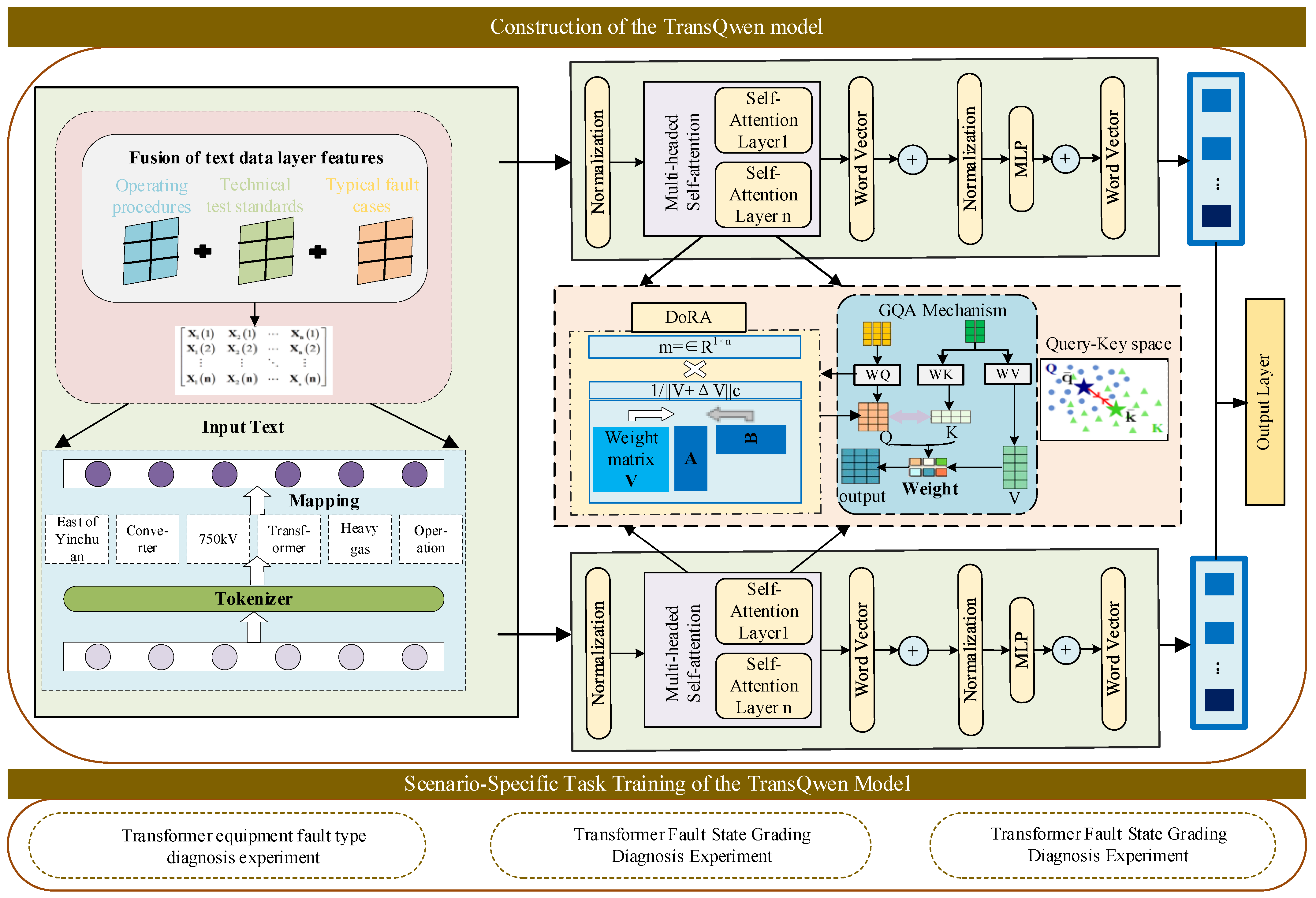

To address the challenges associated with transformer fault diagnosis and maintenance decision-making, this study investigates the characteristics of unstructured textual data in transformer operation records and proposes a domain-adapted large language model, TransQwen. Built upon the Qwen-7B-Chat architecture, TransQwen is fine-tuned using a curated corpus of transformer fault cases and technical documentation, incorporating DoRA (weight-decomposed low-rank adaptation) to align with fault classification standards, operational guidelines, and maintenance protocols. This enables the model to acquire domain-specific knowledge and generate accurate question-answer pairs related to transformer diagnostics. The architectural framework and training scenarios of TransQwen are illustrated in Figure 1. Each module is elaborated in Section 2.1, Section 2.2, Section 2.3 and Section 2.4 with mathematical formulations and functional explanations. The model integrates the GQA (grouped-query attention) mechanism within its attention layers and employs RoPE (rotary position embedding) to enhance its capacity for long-sequence semantic modeling. By leveraging historical fault data, maintenance records, and operational norms, TransQwen demonstrates superior capability in identifying latent equipment anomalies. It significantly improves diagnostic accuracy for transformer faults and defects under low-sample conditions and supports the generation of precise, scenario-specific maintenance strategies. This enhances both the reliability and efficiency of transformer operation and maintenance workflows.

Comparative experiments with traditional NLP models and training approaches demonstrate that TransQwen achieves superior performance in transformer fault diagnosis tasks. Notably, it successfully accomplishes complex knowledge-generation tasks—such as generating context-specific troubleshooting strategies—which conventional models and pre-training methods fail to address. By effectively integrating LLM capabilities with domain-specific knowledge, TransQwen significantly reduces the probability of undetected faults, mitigates potential impacts on power system stability, and enhances the comprehensiveness and reliability of diagnostic decision-making. This enables the transformation of unstructured textual data into actionable expert knowledge. Moreover, the application of TransQwen provides a scalable framework for intelligent maintenance support, offering valuable insights for the future deployment of LLM-based solutions in the power industry. By aligning model outputs with technical standards, operational procedures, and expert knowledge in the power domain, TransQwen enables accurate and practical decision-making, breaking through the limitations of traditional diagnostic models that lack interpretability or actionability. It opens new avenues for extending LLM technologies to broader domains, including integrated grid services, intelligent dispatching, and decision-making support for equipment lifecycle management.

2. Construction of the Transformer Fault Diagnosis and Strategy Generation Model

The basic architecture of the TransQwen model is based on the Qwen-7B-Chat model, which is a typical decoder-only transformer large-model structure. Its main structure includes the text input layer, embedding layer, decoder layer and output layer.

2.1. Text Input Layer

The input text $X_{text}$ is first tokenized: the tokenizer splits $X_{text}$ into words or subwords and maps each of them to a unique integer identifier. These identifiers (IDs) form the index array $I_{text}$, which is the digitized form of the text.

Together with the padding and truncation described below, this mechanism ensures that all inputs have a consistent length, enabling the model to batch-process texts of different lengths efficiently and improving processing speed and computational efficiency.

To prepare unstructured text data for input into the LLM, we conducted a multi-stage pre-processing pipeline. This includes:

Removal of irrelevant or redundant symbols and formatting inconsistencies;

Standardization of terminology and technical expressions according to transformer maintenance standards;

Normalization of text structure by segmenting records into coherent sentences.

After pre-processing, the cleaned text is tokenized using the BPE (byte pair encoding) tokenizer employed by the Qwen-7B-Chat model. This tokenizer segments the input into subword units and maps them to integer indices.

Additionally, special tokens such as ‘[CLS]’ (classification token) and ‘[SEP]’ (separator token) are inserted to guide the model’s attention and demarcate question-answer pairs in fine-tuning tasks. All input sequences are padded or truncated to a uniform length (typically 1024 tokens) to ensure compatibility with the transformer architecture. This pre-processing and tokenization strategy improves consistency and processing efficiency, enhances vocabulary handling of technical terms, and preserves semantic coherence, which is essential for accurate question-answer generation and fault classification in domain-specific tasks.
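The padding and truncation step can be illustrated with a minimal sketch. The function names and the padding ID `PAD_ID = 0` are hypothetical choices for illustration; the actual padding token depends on the tokenizer in use.

```python
from typing import List

PAD_ID = 0  # hypothetical padding ID; the real value depends on the tokenizer


def pad_or_truncate(token_ids: List[int], max_len: int = 1024,
                    pad_id: int = PAD_ID) -> List[int]:
    """Return a copy of token_ids with exactly max_len entries."""
    if len(token_ids) >= max_len:
        return token_ids[:max_len]  # truncate sequences that are too long
    # right-pad sequences that are too short
    return token_ids + [pad_id] * (max_len - len(token_ids))


def attention_mask(token_ids: List[int], max_len: int = 1024) -> List[int]:
    """1 marks a real token, 0 marks padding that the model should ignore."""
    real = min(len(token_ids), max_len)
    return [1] * real + [0] * (max_len - real)
```

With a uniform length, sequences can be stacked into one batch, while the mask keeps padded positions from influencing attention.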

2.2. Embedding Layer

The embedding layer consists of three parts: the word-embedding layer, the position-encoding layer, and the paragraph-encoding layer. Each ID is mapped to a vector of a fixed dimension to generate a vector sequence as the initial input representation of the model, providing effective input for the subsequent decoder layer.

Among them, the word-embedding layer converts the integer identifier of each word or subword into the corresponding vector $Y_t$ by looking up the embedding matrix $E_t$, that is:

Since the transformer architecture has no built-in mechanism for capturing sequence order, order information must be introduced through position encoding. The position-encoding layer can be either fixed or learnable. Learnable position encodings are parameters learned through backpropagation during training, while fixed position encodings can be calculated using the following formula:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{TransQwen}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{TransQwen}}}\right)$$

where

pos-the position;

i-the dimension index;

dTransQwen-the embedded dimension.
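A fixed position encoding of this kind can be sketched as follows. The sketch assumes the sinusoidal scheme of the original Transformer, with base 10000, is the one referred to; the function name is illustrative.

```python
import math
from typing import List


def sinusoidal_position_encoding(pos: int, d_model: int) -> List[float]:
    """Fixed encoding for one position: sine on even dims, cosine on odd dims."""
    pe = [0.0] * d_model
    for k in range(0, d_model, 2):                # k = 2i in the formula
        angle = pos / (10000 ** (k / d_model))    # assumed base of 10000
        pe[k] = math.sin(angle)
        if k + 1 < d_model:
            pe[k + 1] = math.cos(angle)
    return pe
```

Because the encoding depends only on `pos` and the dimension index, it needs no training and can be computed for any position on demand.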

Similar to the word-embedding layer, the paragraph-encoding layer is also obtained by looking up an embedding matrix, $E_s$, which converts the index of each paragraph into the corresponding vector $Y_s$, that is:

The final output vector $Y$ is the sum of the word embedding, position encoding, and paragraph encoding:

Compared with traditional recurrent neural network models, the embedding layer architecture of TransQwen ensures that the model can batch process text data of different lengths, containing rich semantic and positional information, thereby enhancing the natural language understanding and generation capabilities of the TransQwen model.

2.3. Decoder Layer

Each decoder layer begins with a multi-head self-attention layer, in which multiple self-attention heads capture different context information. The self-attention mechanism allows the word at each position to attend to the words at all positions in the input sequence, thereby capturing long-range dependencies. By weighting the information most relevant to the current word, it produces the final attention output and ensures that the model can effectively capture and utilize the key context information in the input sequence. The calculation is performed as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$

where $Q$ is the query matrix; $K$ is the key matrix; $V$ is the value matrix; $d_k$ is the dimension of the key vectors; and Softmax is the normalization function applied to each row of scores.
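As an illustration, scaled dot-product attention for a single head can be sketched in plain Python. This is a minimal sketch: it omits the causal mask that a decoder-only model applies, as well as the learned projections that produce Q, K, and V.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Softmax(Q K^T / sqrt(d_k)) V for one head, without masking."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # similarity of this query with every key, scaled by sqrt(d_k)
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # weighted average of the value vectors
        out.append([sum(w * v[j] for w, v in zip(weights, V))
                    for j in range(len(V[0]))])
    return out
```

Each output row is a weighted average of the value vectors, with weights determined by how well the query matches each key.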

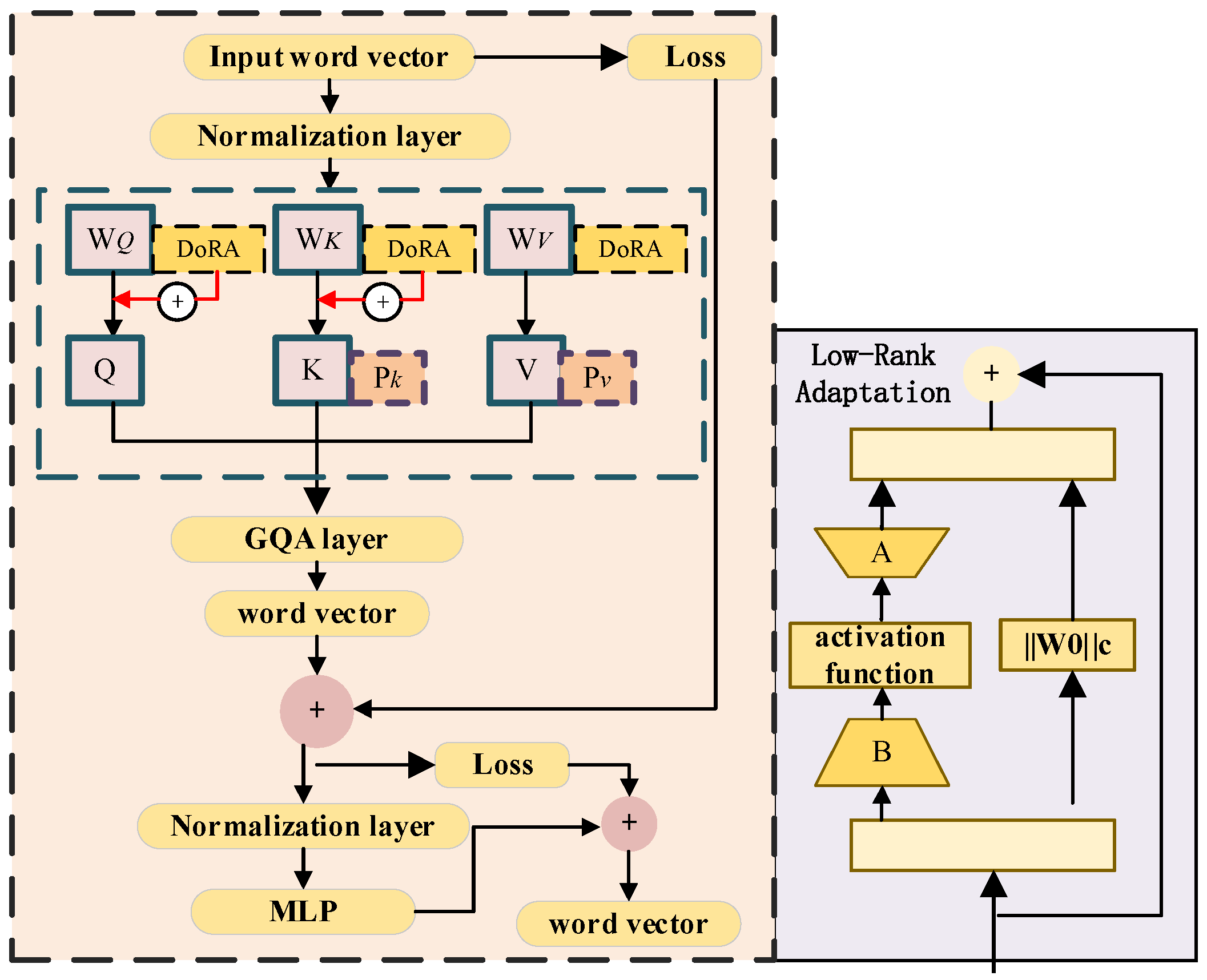

Since the text data of transformer equipment are mostly long texts with professional terms, the multi-head self-attention layer of the TransQwen model adopts the GQA mechanism to strengthen the model’s parsing of long texts and to mitigate LLM hallucination. The GQA mechanism interpolates between MQA (multi-query attention) and MHA (multi-head attention): the query heads are divided into G groups, and each group shares a single key head and value head. Figure 2 shows the differences between the GQA mechanism and the original self-attention mechanism. This architecture greatly enhances the computational efficiency of the model, enables fuller utilization of computing resources, and improves the parallel computing capability of the model. Through this improvement, the TransQwen model handles large-scale data and complex tasks more efficiently and effectively, which helps mitigate the hallucination problem that arises during inference and training.
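The grouping used by GQA can be sketched as a mapping from query heads to shared key/value groups. The function names are illustrative, and the sketch assumes the number of query heads is divisible by G.

```python
def kv_group_for_head(head: int, num_q_heads: int, num_kv_groups: int) -> int:
    """Index of the shared key/value group used by a given query head."""
    if num_q_heads % num_kv_groups != 0:
        raise ValueError("num_q_heads must be divisible by num_kv_groups")
    heads_per_group = num_q_heads // num_kv_groups
    return head // heads_per_group


def kv_cache_ratio(num_q_heads: int, num_kv_groups: int) -> float:
    """Key/value storage relative to standard multi-head attention."""
    return num_kv_groups / num_q_heads
```

Setting G equal to the number of query heads recovers MHA, and G = 1 recovers MQA; intermediate values trade key/value memory against modeling capacity.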

Matrix computations usually require normalization to keep the values of different features from growing too large. In the TransQwen model, the normalization layer uses RMSNorm instead of the traditional LayerNorm. The formula of RMSNorm is:

where $\omega(i)$ is the value of the last dimension of the input; $n$ is the number of dimensions of the input; $x_N$ is the input of the normalization layer; $HS(Y_{RMSNorm})$ is the output of the normalization layer; and $HS(Y_{Attention})$ is the output of the multi-head self-attention layer.

RMSNorm normalizes the input vector by its root mean square, that is, the square root of the mean of the squares of its elements. Unlike LayerNorm, this approach does not subtract the mean of the input; it rescales based only on the norm of the input vector, which reduces computation, can increase the training speed, and can improve the generalization ability of the model.
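A minimal sketch of RMSNorm is given below. The learnable per-dimension `weight` and the small constant `eps` follow common practice and are assumptions here, not details taken from the text.

```python
import math
from typing import List


def rms_norm(x: List[float], weight: List[float], eps: float = 1e-6) -> List[float]:
    """Scale x by the reciprocal of its root mean square; no mean subtraction."""
    mean_sq = sum(v * v for v in x) / len(x)
    scale = 1.0 / math.sqrt(mean_sq + eps)   # eps guards against division by zero
    return [w * v * scale for v, w in zip(x, weight)]
```

With unit weights, the output has a root mean square of approximately 1, while the sign and relative proportions of the elements are preserved.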

The fully connected layer of the TransQwen model introduces a gating mechanism through the SwiGLU activation function, enabling the model to select different activation modes in different input regions and improving its ability to extract features from nonlinear data. The calculation is performed as follows:

where Linear($x$) is the linear transformation; $\sigma$ is the nonlinear activation function applied to the gate (Swish, also known as SiLU, in the case of SwiGLU); and $HS_{MLP}$ is the output of the fully connected layer.

The structure of SwiGLU enables it to adaptively adjust according to different tasks and data, thus performing well in various application scenarios.
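The gating idea can be sketched as follows. The weight names `W_gate` and `W_up` are hypothetical, bias terms are left out, and the final down-projection used in a full feed-forward block is omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def swish(z: float) -> float:
    """Swish (SiLU) activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def linear(x: List[float], W: Matrix) -> List[float]:
    """Bias-free linear map; each row of W produces one output feature."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def swiglu(x: List[float], W_gate: Matrix, W_up: Matrix) -> List[float]:
    """Element-wise product of a Swish-activated gate and a linear branch."""
    gate = [swish(g) for g in linear(x, W_gate)]
    up = linear(x, W_up)
    return [g * u for g, u in zip(gate, up)]
```

When the gate pre-activation is near zero, the corresponding feature is suppressed; when it is large, the linear branch passes through almost unchanged in proportion to the gate value.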

2.4. Output Layer

After the word vectors processed by stacking multiple decoder layers are input into the output layer, they are first fitted through linear transformation to map the high-dimensional word vectors to the vector space of vocabulary size. Then, the output of the linear transformation layer is converted into a probability distribution through the Softmax function. Finally, the greedy algorithm is used to select the word with the highest probability from the probability distribution as the prediction result.
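The three steps of the output layer (linear projection, Softmax, greedy selection) can be sketched as below. The names `W_out` and `bias` are illustrative, and the toy vocabulary size is chosen only for readability.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_next_token(hidden: List[float], W_out: List[List[float]],
                      bias: List[float]) -> Tuple[int, List[float]]:
    """Project the hidden state to vocabulary logits and pick the most likely token."""
    logits = [sum(w * h for w, h in zip(row, hidden)) + b
              for row, b in zip(W_out, bias)]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs
```

Greedy selection is deterministic, which suits classification-style answers; sampling-based decoding is an alternative when more varied text is desired.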

With this architecture, the TransQwen model can generate accurate outputs and be effectively fine-tuned.

3. Fine-Tuning Training Mechanism for Transformer Fault Diagnosis and Strategy Generation Model

This paper adopts DoRA for the fine-tuning of the TransQwen model. First, a low-rank adapter layer is added between the fully connected layer and the attention layer of the Qwen-7B-Chat model, all parameters of the pre-trained model are frozen, and only the added adapter layer is trained. Training these parameters on the pre-constructed QA pair dataset makes the TransQwen model knowledge-driven and adapts it to QA tasks on transformer fault diagnosis and maintenance strategy generation in the vertical field of the power industry. Meanwhile, the RoPE (rotary position embedding) mechanism is introduced into the mask mechanism, enabling the model to handle long text sequences of varying length. This preserves the coherence of text sequences when training on the QA pair dataset, which is composed of texts such as transformer maintenance and operation norms and standards, status evaluation guidelines, and fault case analyses. It enables the TransQwen model to better understand the context information in this scenario and mitigates the hallucination that arises when the model can no longer associate context once the QA pairs in the training dataset become too long.

3.1. DoRA Fine-Tuning Sample Database

The Qwen-7B-Chat model has undergone extensive training on up to 30 million pieces of data and trillions of tokens of text and code, and has consistently demonstrated outstanding performance in numerous downstream industries. However, the unstructured text data of transformer equipment contain a large number of professional terms and a large amount of power industry knowledge, for which the Qwen-7B-Chat model has no corresponding knowledge base. As a result, it performs poorly on transformer fault diagnosis and on generating maintenance and operation strategies in the vertical field of the power industry, and it cannot be applied directly to engineering practice.

Therefore, this paper collects the operation regulations and guidelines of transformer equipment, relevant technical standards and test specifications of transformer equipment, as well as typical defect and fault cases of transformer equipment in many power supply bureaus in recent years as the initial training corpus. The dataset is composed of three sub-corpora (standards and regulations, technical specifications, and real-world fault cases), each contributing to a corresponding QA pair set for fine-tuning. Table 1 and Table 2 present the text volume and example QA structures used for training.

The fault cases and maintenance records used in this study were collected from multiple regional power supply bureaus under the State Grid Corporation of China, including Zhejiang, Hubei, and Jiangsu provinces. These records encompass real-world transformer defect reports, routine maintenance logs, and annotated fault classification documents accumulated between 2018 and 2023. All data were anonymized and pre-screened to ensure the exclusion of sensitive operational information and to comply with data confidentiality requirements. The resulting corpus provides a representative and high-quality dataset of domain-specific language, which forms the basis for constructing QA pairs for fine-tuning the TransQwen model.

During the DoRA fine-tuning process, a QA pair dataset needs to be constructed. The dataset is composed of pairs of input questions and output answers, and its form is as follows:

where $Q_i$ is the $i$-th question; $A_i$ is the answer corresponding to the $i$-th question; and $N$ is the number of question-answer pairs.

Based on the initial training corpus, a sample database of QA pairs incorporating extensive domain-specific knowledge related to transformer fault diagnosis was constructed, as shown in Table 2. During the construction of the QA pair dataset, each entry undergoes standardized formatting, including domain-specific term alignment, removal of non-informative text, and segmentation into concise question-answer units. The pre-processed data are then tokenized using the same BPE tokenizer as the base model to ensure consistency in subword representation. This process enhances the model’s capacity to understand domain knowledge embedded in long, complex sequences and reduces noise in downstream learning.

The text data of the QA pairs are converted into sequences of words or subwords. For each question-answer pair, the tokenizer is applied to convert it into a token sequence, as shown in the following formula:

The special tokens required by the model are then added to the input token sequence. For the QA pair dataset constructed in this paper, each training input to TransQwen takes the following form:

where [CLS] is the starting token of the sentence and [SEP] is the separating token of the sentence.
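Assembling one training input from a QA pair can be sketched as below. The exact token layout is an assumption (a question followed by an answer, each closed by a separator), and the whitespace tokenizer is a stand-in for the BPE tokenizer, used only to keep the sketch self-contained.

```python
from typing import List

CLS, SEP = "[CLS]", "[SEP]"


def whitespace_tokenize(text: str) -> List[str]:
    """Stand-in tokenizer; a real pipeline would apply BPE here."""
    return text.split()


def build_training_input(question: str, answer: str) -> List[str]:
    """Assumed layout: [CLS] question tokens [SEP] answer tokens [SEP]."""
    return ([CLS] + whitespace_tokenize(question) + [SEP]
            + whitespace_tokenize(answer) + [SEP])
```

The separator lets the model tell where the question ends and the answer begins, which matters when the loss is computed only on answer tokens.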

3.2. DoRA Fine-Tuning Mechanism

During full-parameter fine-tuning, the LLM is initialized with the pre-trained parameters $\Phi_0$ and updates them to $\Phi_0 + \Delta\Phi$ by maximizing the probability of the conditional language model. The parameter increment $\Delta\Phi$ therefore has the same dimension as the pre-trained parameters $\Phi_0$. As large language models develop, the number of model parameters keeps growing [21]. The Qwen-7B-Chat model has roughly 7 billion parameters, and fine-tuning all of them has become impractical.

The research of Gao et al. indicates that pre-trained models have an extremely small intrinsic dimension; that is, there exists a very low-dimensional reparameterization whose fine-tuning can achieve the same effect as fine-tuning in the full parameter space [22]. The LoRA fine-tuning method freezes the pre-trained model weights and injects trainable rank-decomposition matrices into each layer of the transformer architecture. These matrices learn task-specific adjustments to the weights of each layer, improving the performance of the model on the downstream task. Because only the newly added parameters are fine-tuned, the number of trainable parameters for downstream tasks is significantly reduced and can be as small as about one ten-thousandth of the total parameters.

For the pre-trained weight matrix $W \in \mathbb{R}^{m \times n}$, low-rank decomposition is adopted to represent the parameter update $\Delta W$, that is:

$$\Delta W = AB$$

where $A \in \mathbb{R}^{m \times r}$; $B \in \mathbb{R}^{r \times n}$; $r \ll \min(m, n)$; and $r$ is the rank of the parameter update $\Delta W$, which is formed as the product of the two low-rank matrices.
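The saving from the low-rank form can be made concrete with a small sketch. The function names and the example sizes (a 4096 × 4096 weight with rank 8) are illustrative assumptions, not settings reported in the text.

```python
from typing import List

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Plain matrix product of an (m x r) and an (r x n) matrix."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def lora_update(A: Matrix, B: Matrix) -> Matrix:
    """Low-rank weight update: delta_W = A B."""
    return matmul(A, B)


def trainable_fraction(m: int, n: int, r: int) -> float:
    """Adapter parameters r*(m+n) relative to the m*n parameters of W."""
    return r * (m + n) / (m * n)
```

For the assumed 4096 × 4096 weight with rank 8, the adapter holds 1/256 of the parameters of that matrix; the overall fraction is lower still when only some matrices receive adapters.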

However, during the LoRA training process, the matrix is projected onto a smaller dimension, resulting in the loss of some information. According to the research of Zhang et al., the relationship between direction and amplitude in LoRA is different from that of complete fine-tuning, and there is a certain performance gap [

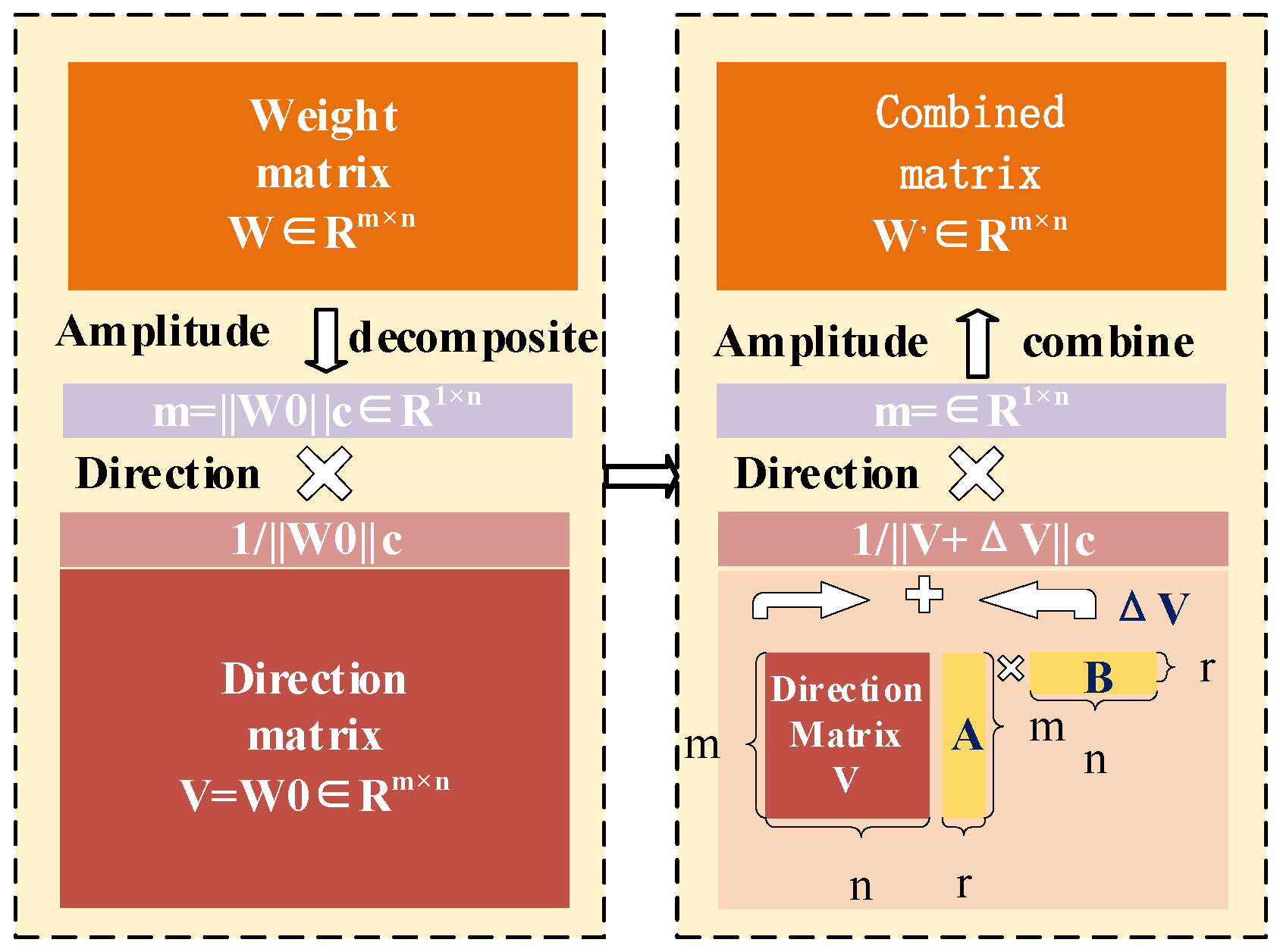

23]. Therefore, DoRA can be adopted for improvement.

DoRA builds on LoRA by decomposing the pre-trained weights into two parts, “amplitude” and “direction”, and fine-tuning them, as shown in Figure 3. DoRA decomposes the pre-trained weight $W$ to obtain the amplitude of the weight $\|W\|_c \in \mathbb{R}^{1 \times n}$ and the direction of the weight $V \in \mathbb{R}^{m \times n}$. The decomposition process is as follows:

where $\|\cdot\|_c$ is the vector-wise norm of a matrix taken over each column.

The direction matrix $V$ is adjusted by using low-rank matrices $A$ and $B$ to generate $\Delta V = A \times B$. The adapted direction is $V + \Delta V$. The adapted direction is combined with the amplitude of the original weights to obtain the new combined weight $W'$:

The DoRA fine-tuning training process is shown in Figure 4. When training the A and B matrices, the DoRA fine-tuning training mechanism maintains the efficiency of LoRA and avoids adding additional inference burdens. This weight decomposition strategy enhances the learning ability of the model during the fine-tuning process. DoRA enables the TransQwen model to effectively incorporate domain-specific knowledge from a curated corpus of transformer fault cases, operation standards, and maintenance regulations. It enhances the model’s generalization ability under limited data conditions, an essential requirement in the power industry where labeled fault data are often scarce. Moreover, DoRA improves the semantic representation of long-form professional texts, thereby strengthening the model’s capacity to generate accurate, context-aware responses in complex question-answering scenarios.
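The amplitude and direction recombination can be sketched as follows. This is a minimal sketch that assumes the commonly published DoRA form, in which the adapted direction is renormalized column by column and rescaled by the amplitude; the function names are illustrative, and every column is assumed to have a non-zero norm.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]


def column_norms(W: Matrix) -> List[float]:
    """Euclidean norm of each column of W (the per-column amplitude)."""
    rows, cols = len(W), len(W[0])
    return [math.sqrt(sum(W[i][j] ** 2 for i in range(rows))) for j in range(cols)]


def dora_weight(W0: Matrix, delta_V: Matrix,
                magnitude: Optional[List[float]] = None) -> Matrix:
    """Recombine amplitude with the renormalized adapted direction V + delta_V."""
    V = [[w + d for w, d in zip(rw, rd)] for rw, rd in zip(W0, delta_V)]
    norms = column_norms(V)  # assumed non-zero for every column
    # amplitude starts at the column norms of W0 and may later be trained
    mag = column_norms(W0) if magnitude is None else magnitude
    return [[mag[j] * V[i][j] / norms[j] for j in range(len(V[0]))]
            for i in range(len(V))]
```

With a zero update the original weight is recovered, and after a direction update each column keeps the norm set by the amplitude, so direction and amplitude can change independently.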

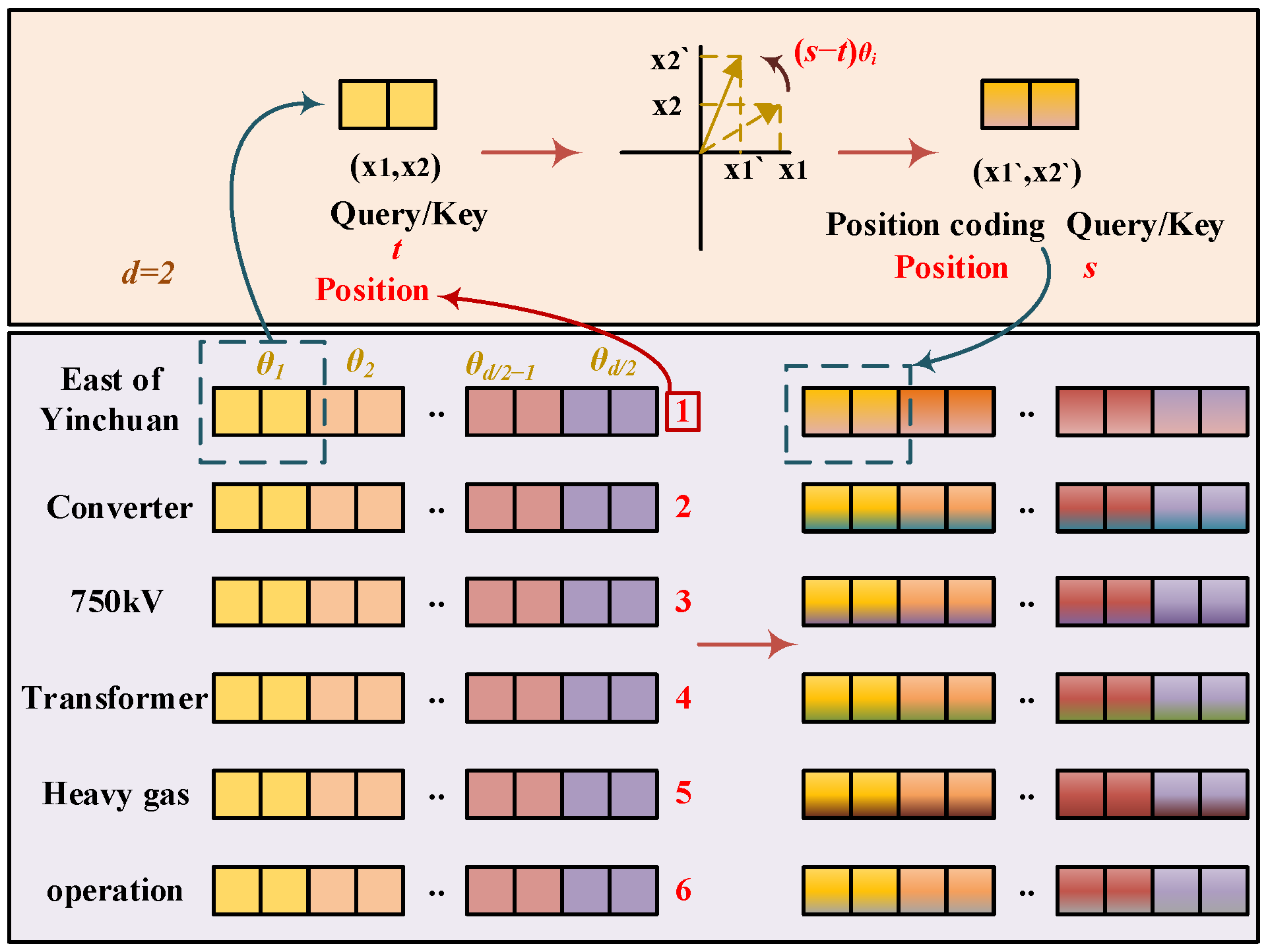

3.3. Rotational Coding RoPE Mechanism

RoPE implements relative position-encoding by means of absolute position-encoding, integrating the advantages of both. It allows the model to encode position information from both the absolute position of each token and the relative distance between tokens.

For a given dimension $d$ and position $i$, the query vector $q$ and the key vector $k$ can be expressed as:

The rotation position-encoding can be defined as:

Among them, θi = i∙θ, where θ is a constant and can be set as 2π/L, and L is the maximum length of the sequence.

In actual calculation, the above rotational transformation can be transformed into:

Therefore, the formulas of the rotary coding RoPE mechanism are as shown in Formulas (17) and (18):

where $n$ is the amount of data; $R$ is the position-encoding matrix; $x$ is the data corresponding to the weight matrix $W$; $Q$ and $K$ are the query matrix and key matrix of the attention mechanism; and $s$ and $t$ are the positions of two tokens in the sentence. Therefore, $s - t$ represents the relative position-encoding. The first half of Equation (18) shows the effect of relative position-encoding, and the second half of the equation represents the absolute position-encoding part.

The training process of RoPE is shown in Figure 5. By applying a rotation transformation to the query and key vectors, position information is included in the attention calculation. This method enables the model to capture the relative positional relationship between elements in the sequence more effectively, without losing positional information as the sequence length increases, which supports the ability of the TransQwen model to handle long-sequence data. It also improves training on the QA dataset composed of texts such as transformer maintenance and operation norms and standards, status evaluation guidelines, and fault case analyses.
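The relative-position property can be checked numerically with a short sketch. The per-pair frequencies use the common choice of base 10000, which is an assumption and differs from the constant θ = 2π/L mentioned above; the property itself holds for any choice of frequencies.

```python
import math
from typing import List


def rope_rotate(x: List[float], pos: int, base: float = 10000.0) -> List[float]:
    """Rotate each consecutive pair of dimensions by a position-dependent angle."""
    d = len(x)
    if d % 2 != 0:
        raise ValueError("dimension must be even")
    out = []
    for k in range(0, d, 2):
        angle = pos * base ** (-k / d)      # assumed frequency schedule
        c, s = math.cos(angle), math.sin(angle)
        out += [x[k] * c - x[k + 1] * s, x[k] * s + x[k + 1] * c]
    return out


def dot(a: List[float], b: List[float]) -> float:
    """Inner product used for the attention score."""
    return sum(u * v for u, v in zip(a, b))
```

Rotating a query at position s and a key at position t yields an inner product that depends only on s − t, so shifting both positions by the same offset leaves the attention score unchanged.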

3.4. Fine-Tuning Training Process

Based on the DoRA fine-tuning sample database constructed in Section 3.1, this paper conducts DoRA fine-tuning training on the base Qwen-7B-Chat model. The input sequence undergoes data cleaning and pre-processing through the text input layer. The embedding layer then outputs the text vector $Y_{embedding}$. The decoder layer adopts the DoRA fine-tuning mechanism to decompose the weight matrix $W$ into two parts, $\|W\|_c$ and $V$, for fine-tuning training, giving the TransQwen model the ability of knowledge generation. The query matrix $Q$ and key matrix $K$ in the attention mechanism adopt the RoPE mechanism to capture the relative positional relationship between elements, enhancing the ability of the TransQwen model to process long-sequence data. Finally, as shown in Equation (19), the probability distribution $P$ over the vocabulary for word $i$ is calculated.

where $w$ is the vocabulary vector; $b$ is the bias vector; the output of the linear transformation is a vector of the same size as the vocabulary, each element of which is the unnormalized logit of the word at that position; $Z_i$ is the output of the linear transformation for word $i$; and $P(w_i)$ is the probability of word $i$.

The loss between the predicted result and the actual result $y_i$ is calculated, and the cross-entropy loss is adopted as the loss function. Its formula is:

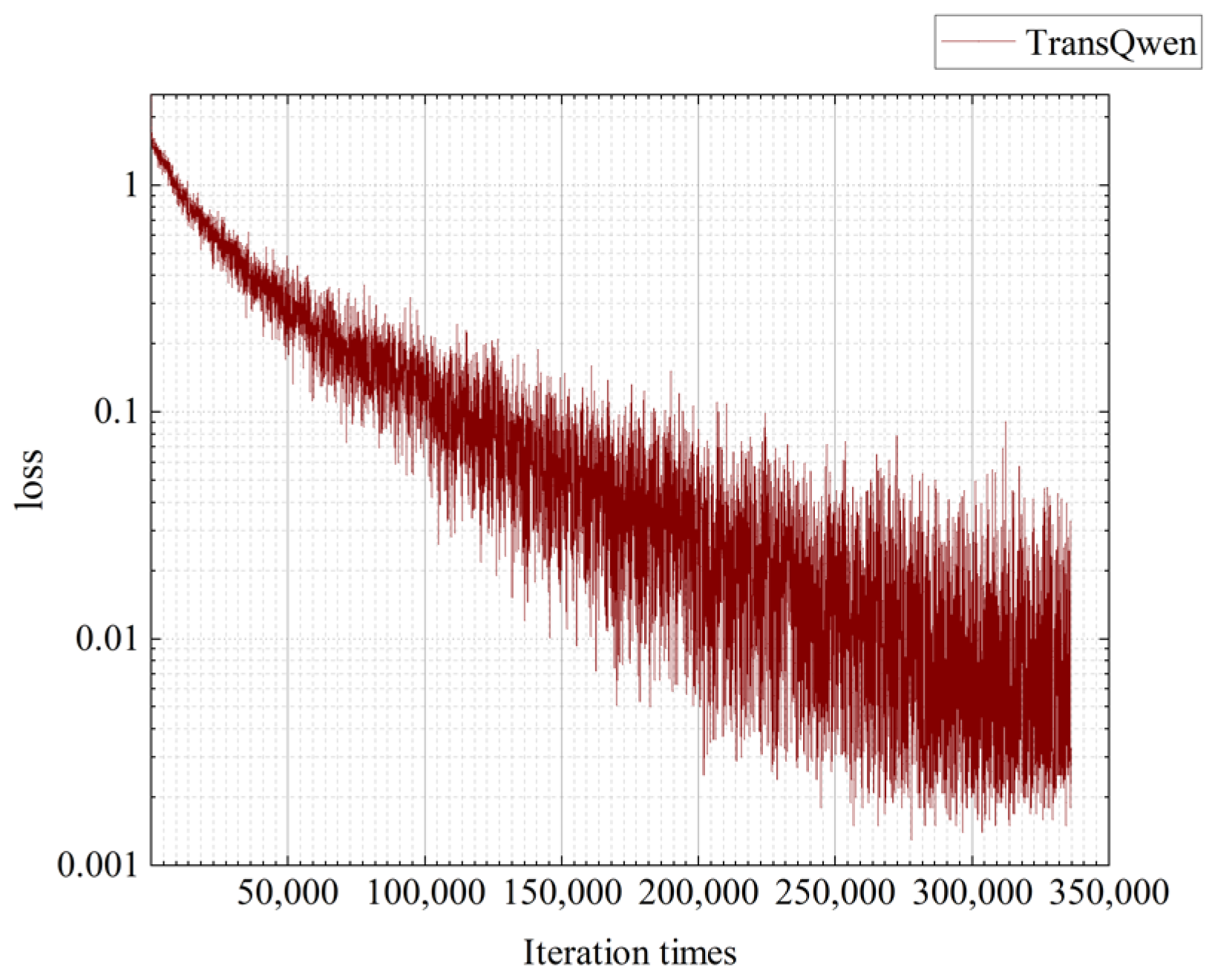

The training of the TransQwen model was conducted on an NVIDIA A100 GPU with 80 GB memory. The complete DoRA fine-tuning run required approximately 16 h on a corpus of transformer fault diagnosis records and maintenance QA pairs. The model was implemented using the PyTorch 2.1.2 deep learning framework with support from Hugging Face’s Transformers library.

At present, due to proprietary restrictions associated with the annotated transformer fault data, the dataset and fine-tuned model weights are not publicly released.

From a scalability perspective, TransQwen supports low-rank adaptation during fine-tuning, enabling parameter-efficient training with reduced memory overhead. The model can be deployed on standard high-performance server GPUs and shows potential for lightweight optimization via quantization or pruning in future work. The model fine-tuning parameters are shown in Table 3, and the errors during the training process are shown in Figure 6.

4. Results and Discussion

This section studies the performance of the TransQwen model through three scenario tasks: diagnosing the types of transformer equipment faults, diagnosing the operating status levels of transformer equipment faults, and generating maintenance strategies for transformer equipment faults. The aim is to verify that the DoRA fine-tuning strategy adopted by the TransQwen model enables the model to learn the professional knowledge related to transformer defects in the power industry and to generate questions and answers related to transformer fault diagnosis.

The TransQwen model is compared with the traditional neural network model TextRCNN, ChatGLM4, the Qwen-7B-Chat model, and the pre-trained Qwen model, which refers to the base Qwen model fine-tuned without task-specific supervision. In this comparison, the TransQwen model achieves the best results in the fault type diagnosis scenario tasks. Additionally, the TransQwen model is capable of completing knowledge generation tasks that traditional neural network models, NLP models, and pre-training methods cannot achieve, such as generating transformer fault repair and operation and maintenance strategies.

In this chapter, we use precision, recall, and F1 score to evaluate classification tasks (fault type and fault state grading diagnosis), and BLEU-4 and ROUGE-1/2/L scores to assess the quality of generated maintenance strategies. These metrics reflect both accuracy and semantic completeness of model predictions.
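For the classification metrics, a minimal per-class sketch is given below. The fault labels in the usage example are hypothetical and serve only to show the calculation.

```python
from typing import List, Tuple


def precision_recall_f1(y_true: List[str], y_pred: List[str],
                        positive: str) -> Tuple[float, float, float]:
    """Precision, recall, and F1 score for one class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Hypothetical labels, for illustration only.
truth = ["winding", "winding", "bushing", "bushing"]
preds = ["winding", "bushing", "winding", "bushing"]
scores = precision_recall_f1(truth, preds, positive="winding")
```

For multi-class fault diagnosis, these per-class values are typically averaged across classes; the averaging scheme is not specified here.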

4.1. Transformer Equipment Fault Type Diagnosis Experiment

4.1.1. Experimental Design

The main task of the transformer equipment fault type diagnosis experiment is to verify that the TransQwen model can extract fault information from unstructured text data based on the defect description of transformer faults, combined with the professional knowledge in the power field learned during the fine-tuning process, and determine the types of implicit fault defects in the defect text. The diagnosis of transformer fault defect types is carried out in combination with the classification of transformer fault defect types in standard and specification documents such as “GB/T 1094 Power Transformer” [24]. The classification criteria of the samples are shown in Table 4.

4.1.2. Dataset Preparation

From the constructed sample database of QA pairs, the question-and-answer pairs related to the diagnosis of transformer equipment fault types are selected and randomly split into a training set (70%), a validation set (15%), and a test set (15%). The training set is then input into the TransQwen model for DoRA fine-tuning training. The model is iterated repeatedly based on the training error, continually updating its trainable weights and biases. After each round of training, the performance of the model is evaluated on the validation set to prevent overfitting and to decide whether training should stop. After training is completed, the model is evaluated on the test set to assess its final generalization ability.
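The random 70/15/15 split can be sketched as follows. The fixed seed and the function name are assumptions added for reproducibility of the illustration.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence, train_pct: int = 70, val_pct: int = 15,
                  seed: int = 42) -> Tuple[List, List, List]:
    """Shuffle once, then cut into training, validation, and test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)       # seeded for a repeatable split
    n_train = len(shuffled) * train_pct // 100  # integer arithmetic avoids rounding drift
    n_val = len(shuffled) * val_pct // 100
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])
```

Holding the validation and test subsets fixed while varying only the training subset keeps comparisons across experiments fair.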

To evaluate the robustness of the TransQwen model under low-resource scenarios, we conducted additional experiments using progressively reduced training sample sizes. Specifically, we randomly sampled 1000, 900, …, 200, and 100 QA pairs from the training corpus to simulate small-sample conditions and assessed the model performance under each setting. The validation and test sets remained unchanged to ensure fair comparison across different sampling conditions.
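A minimal sketch of the progressive subsampling is given below. It assumes that each subset is drawn independently from the full training corpus; the text does not say whether the smaller subsets are nested inside the larger ones. The function name `low_resource_subsets` and the integer stand-ins for QA pairs are hypothetical.

```python
import random


def low_resource_subsets(train_pairs, sizes=range(1000, 0, -100), seed=0):
    """Draw one random subset of the training corpus per sample size."""
    rng = random.Random(seed)
    # Assumption: subsets are sampled independently, without replacement.
    return {size: rng.sample(train_pairs, size) for size in sizes}


# Hypothetical stand-in for a training corpus of QA pairs.
train_pairs = list(range(1500))
subsets = low_resource_subsets(train_pairs)
print(sorted(subsets))  # sample sizes 100, 200, ..., 1000
```

The validation and test sets are deliberately not touched here, matching the requirement that they remain unchanged across sampling conditions.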

4.1.3. Experimental Result

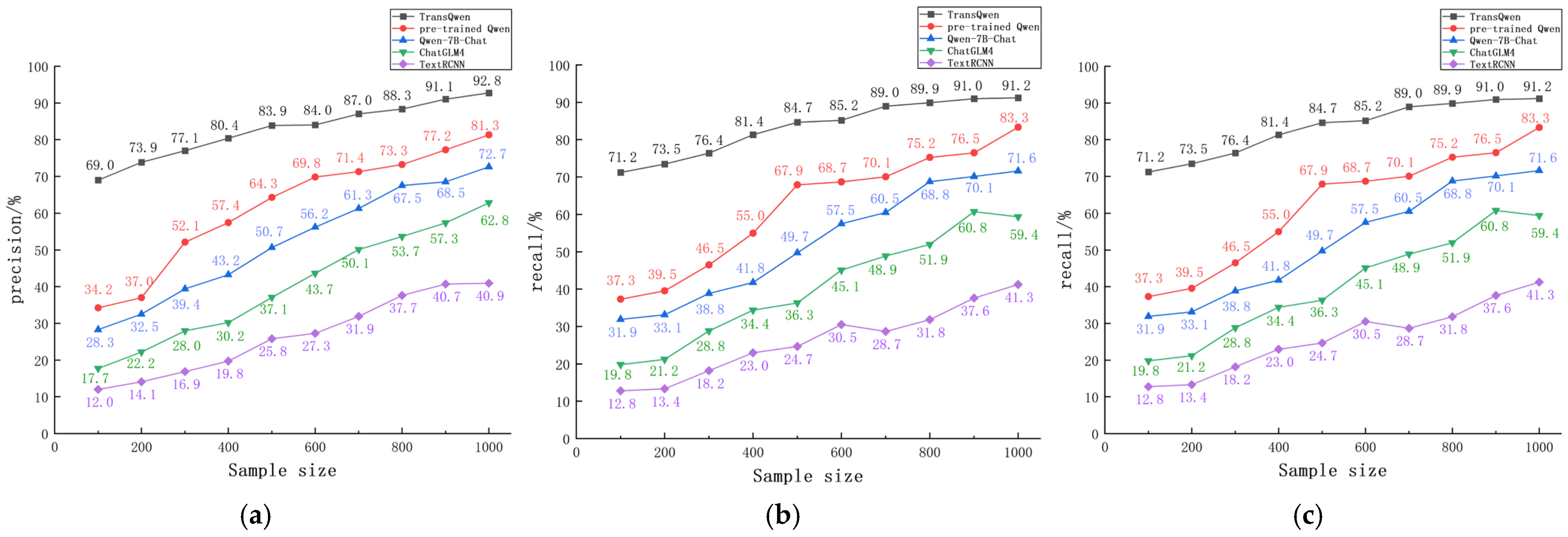

The TransQwen model, the pre-trained Qwen model, the Qwen-7B-Chat model, the ChatGLM4 model, and the TextRCNN model were compared on transformer fault type classification using the same training set, with precision, recall, and F1 score as the evaluation metrics. The experimental results are shown in Figure 7.

The data presented in Figure 7 show that all LLMs improved on every evaluation metric by more than 20% compared with the traditional TextRCNN model. Among them, the TransQwen model achieves the best result on every indicator, with improvements of 9–22% over the other LLMs. These results indicate that the integration of RoPE enhances TransQwen’s capability to retrieve long-sequence knowledge and that the DoRA fine-tuning mechanism significantly improves the semantic parsing of transformer fault text descriptions. The model captures the semantic features of fault types in the text and gives the best diagnostic performance on transformer fault types in this vertical domain. Pre-training also improves the ability to extract information from text data: the pre-trained Qwen model improves to some extent on every indicator compared with the base Qwen-7B-Chat model and the ChatGLM4 model.

To verify the ability of the TransQwen model to diagnose transformer fault types with small samples, the performance of each model was also evaluated under varying dataset sizes. The results are shown in Figure 8.

Figure 8 demonstrates that LLMs outperform traditional neural network models across multiple evaluation metrics under varying sample sizes. Notably, the pre-trained Qwen model exhibits moderate improvements over the base Qwen-7B-Chat model. However, its performance remains suboptimal under small-sample conditions. These results indicate that relying solely on pre-trained semantic feature representations is insufficient for accurate transformer fault type diagnosis when data are limited. The TransQwen model achieves the best performance on all indicators under every sample condition. Under small-sample conditions in particular, each indicator improves by more than 30% (e.g., 82.6% vs. 51.3%) compared with the other models. This indicates that the TransQwen model trained by DoRA fine-tuning can effectively extract fault type information from transformer fault text even when few fault samples are available, and can accurately diagnose the fault type of transformer equipment.

Compared with traditional expert systems and rule-based diagnostic tools, which typically rely on manually encoded if–then rules derived from domain heuristics, TransQwen demonstrates significant advantages in terms of flexibility, scalability, and generalization. Rule-based systems often struggle with the complexity and ambiguity of unstructured maintenance texts and require extensive expert effort for rule curation and updates. In contrast, TransQwen automatically learns latent patterns and domain knowledge from large-scale historical data, enabling it to interpret free-form fault descriptions, infer hidden fault types, and generate adaptive maintenance strategies without explicit human-crafted rules. Moreover, the LLM-based approach is inherently more robust to linguistic variation and novel fault scenarios, which traditional rule engines typically fail to address. Experimental results further confirm that TransQwen achieves significantly higher diagnostic accuracy and strategic completeness across all evaluation metrics, especially under low-sample conditions where expert systems often suffer from brittle logic and poor generalization.

4.2. Transformer Fault State Grading Diagnosis Experiment

4.2.1. Experimental Design

The transformer fault state grading diagnosis experiment follows technical specifications and standards such as the national standard “GB/T 19517 National Safety Technical Code for Electrical Equipment” [25] and classifies the fault state of transformers into three levels: general, severe, and critical. The specific classification criteria are shown in Table 5, and diagnostic examples of transformer fault state levels are presented in Table 6. After DoRA fine-tuning, the TransQwen model diagnoses the fault state level of the defects described in transformer defect records, and comparative experiments are conducted with other models.

4.2.2. Dataset Preparation

The QA pairs relevant to fault state grading were selected from the constructed sample database. The construction of the dataset was informed by a range of industry standards and technical guidelines, particularly those addressing the classification and diagnosis of transformer fault severity. Representative case studies and comprehensive defect descriptions were included to enhance the domain specificity and contextual richness of the corpus. The data were randomly split into a training set (70%), a validation set (15%), and a test set (15%).

4.2.3. Experimental Result

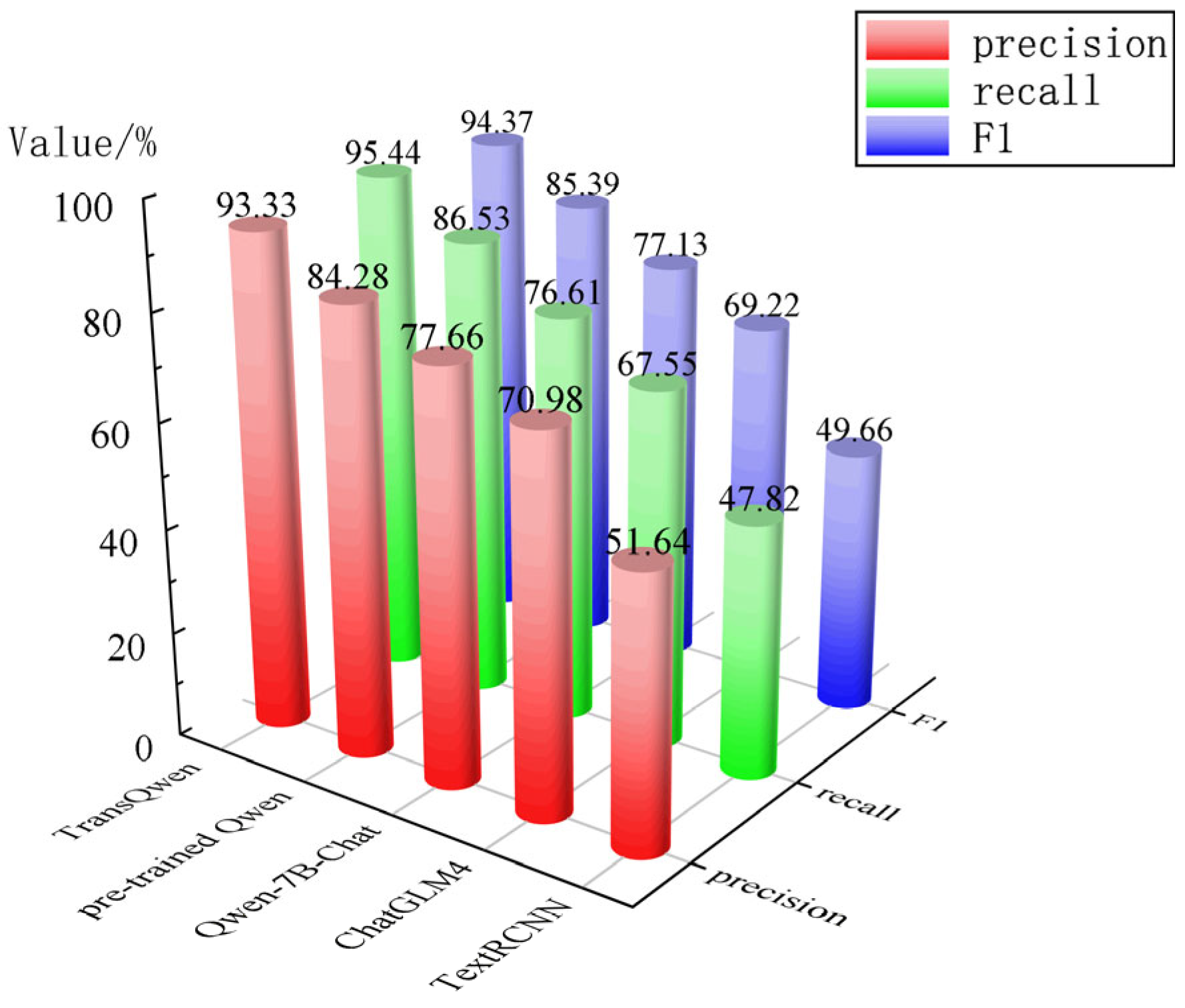

The TransQwen model, the pre-trained Qwen model, the Qwen-7B-Chat model, the ChatGLM4 model, and the TextRCNN model were compared on the diagnosis of transformer fault state levels using the same training set. The experimental results are shown in Figure 9.

The results in Figure 9 show that the traditional neural network model TextRCNN performs poorly in terms of precision, recall, and F1 score and cannot effectively extract fault information from transformer fault text. All LLMs improve by 20% or more on every indicator compared with TextRCNN. Among them, the TransQwen model performs best, with an improvement of 8–25% over the other LLMs. The experimental results confirm that the TransQwen model fine-tuned by DoRA can effectively extract information from transformer fault and defect records and parse the extracted fault information semantically. In combination with the relevant technical specifications and standards, it accurately diagnoses the fault state level of transformer equipment and achieves the highest values on all indicators.

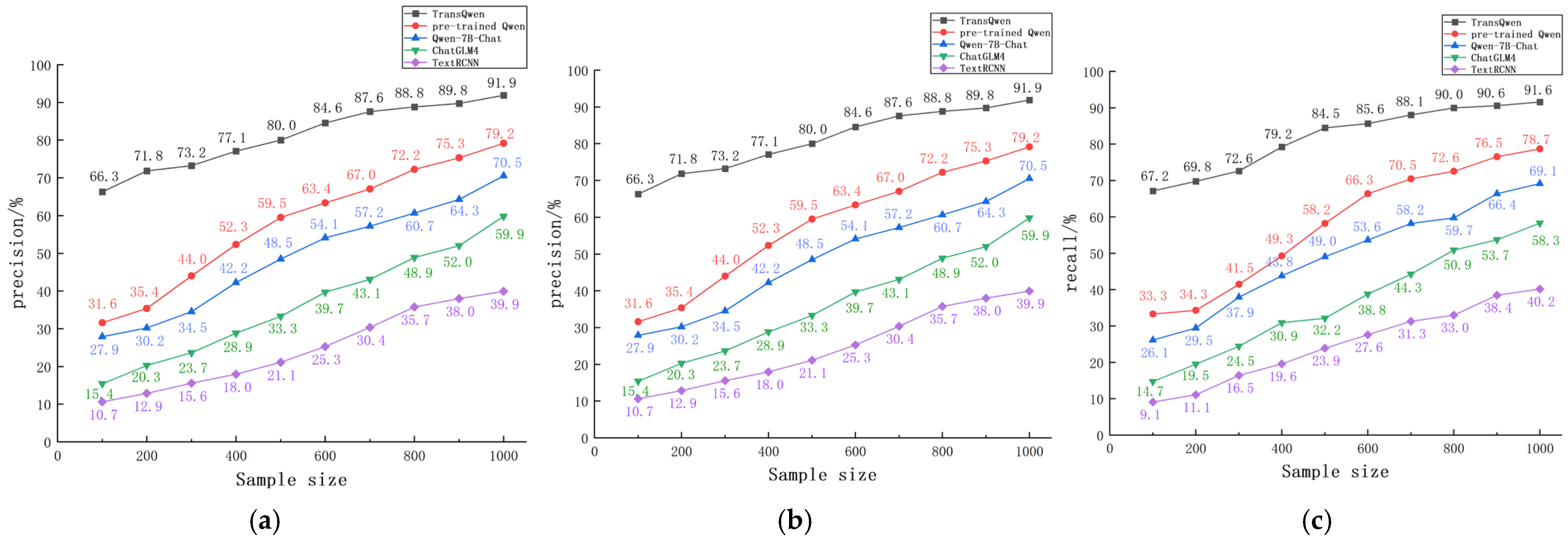

To evaluate the diagnostic performance of the TransQwen model in identifying transformer fault states under limited data conditions, we conducted comparative experiments across multiple dataset scales. The evaluation involved the TransQwen model, the pre-trained Qwen model, Qwen-7B-Chat, ChatGLM4, and TextRCNN. The results are shown in Figure 10.

As shown in Figure 10, the traditional neural network model TextRCNN yields the lowest performance across all evaluation metrics. In contrast, the LLMs demonstrate improvements ranging from 5% to 50%, depending on the specific metric. The pre-trained Qwen model shows more substantial gains under large-sample conditions; however, its advantage over the base model diminishes significantly in small-sample scenarios. In comparison, the TransQwen model, fine-tuned via the DoRA framework, achieves the best performance across all metrics. Notably, under limited data conditions, it surpasses the pre-trained Qwen model by more than 30%, effectively addressing the performance degradation typically associated with pre-training-only approaches in low-resource settings.

The TransQwen model can accurately extract transformer fault information from small-sample text data, and combine transformer technical specifications and standards to accurately diagnose the transformer equipment fault state level. It can achieve transformer fault diagnosis when the sample data are insufficient in practical engineering applications.

It is also worth noting that traditional expert systems and rule-based diagnostic tools often struggle with the diagnosis of transformer fault severity levels when input data is expressed in free-form language or lacks explicit rule matches. These systems typically require domain experts to manually define classification rules based on thresholds or static logic trees, which are difficult to scale and adapt to novel or ambiguous scenarios. In contrast, the TransQwen model leverages its language understanding and knowledge-driven inference capabilities to dynamically interpret fault descriptions and map them to appropriate severity categories, even when explicit cues are lacking. This enables higher diagnostic flexibility and robustness in real-world maintenance records. The superior performance of TransQwen under low-sample and weakly structured conditions underscores its practical advantage over traditional systems.

4.3. Transformer Maintenance Strategy Generation Experiment

4.3.1. Experimental Design

The task of generating maintenance strategies for transformer equipment faults involves processing fault description texts to identify the fault and propose maintenance strategies. Different models automatically identify the types of faults based on the input semantic data. Based on the type and historical data of the equipment, the most suitable maintenance strategy is automatically generated. Comparative experiments are conducted by evaluating the maintenance strategies generated by different models.

In the maintenance strategy generation experiment, the maintenance strategies generated by different models were evaluated with the BLEU (Bilingual Evaluation Understudy) and ROUGE (Recall-Oriented Understudy for Gisting Evaluation) metrics. BLEU is a commonly used indicator of the quality of text generated by LLMs. In this paper, the 4-gram BLEU score, BLEU-4, is selected to measure the n-gram overlap (up to 4-grams) between the text generated by the model and the reference text, thereby evaluating the precision of the generated text. The calculation is performed as follows:

$$\mathrm{BLEU\text{-}4} = BP \cdot \exp\left(\frac{1}{4}\sum_{n=1}^{4} \ln p_n\right), \qquad BP = \begin{cases} 1, & c > r \\ e^{1 - r/c}, & c \le r \end{cases}$$

where $BP$ is the brevity penalty factor; $c$ is the total length of the generated text; $r$ is the total length of the reference text; and $p_n$ is the precision of the $n$-grams.
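To make the BLEU-4 definition concrete, the sketch below computes it for a single candidate against a single reference, using clipped n-gram counts, uniform weights over 1- to 4-grams, and no smoothing. These choices, the whitespace tokenization, and the example sentences are assumptions for illustration; the tokenizer and BLEU implementation actually used in the experiments are not specified.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate, reference):
    """Unsmoothed single-reference BLEU-4 with uniform weights."""
    log_precisions = []
    for n in range(1, 5):
        cand = ngram_counts(candidate, n)
        ref = ngram_counts(reference, n)
        total = sum(cand.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
        if total == 0 or clipped == 0:
            return 0.0  # without smoothing, any zero p_n gives BLEU = 0
        log_precisions.append(math.log(clipped / total))
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)  # brevity penalty
    return bp * math.exp(sum(log_precisions) / 4)


# Hypothetical example sentences, tokenized on whitespace.
reference = "replace the damaged bushing and test the insulating oil".split()
short = "replace the damaged bushing".split()
print(bleu4(reference, reference))  # identical texts
print(bleu4(short, reference))      # all n-grams match, but text is short
```

The second call shows the role of the brevity penalty: every n-gram of the short candidate appears in the reference, so all $p_n$ equal 1 and the score is determined by $BP$ alone.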

ROUGE-N measures the n-gram overlap between the generated transformer fault maintenance strategy and the reference maintenance strategy, in particular for key power-domain terms, and thus evaluates the recall of the model. The calculation is performed as follows:

$$\mathrm{ROUGE\text{-}N} = \frac{\sum_{gram_n \in \mathrm{Ref}} Count_{match}(gram_n)}{\sum_{gram_n \in \mathrm{Ref}} Count(gram_n)}$$

where $Count_{match}(gram_n)$ is the number of $n$-grams that occur in both the generated text and the reference text, and $Count(gram_n)$ is the number of $n$-grams in the reference text.
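The recall-oriented nature of ROUGE-N can be illustrated with a short sketch. It assumes a single reference, whitespace tokenization, and clipped matching counts; the function name `rouge_n` and the example sentences are hypothetical.

```python
from collections import Counter


def rouge_n(candidate, reference, n):
    """ROUGE-N recall: matched reference n-grams / total reference n-grams."""
    cand = Counter(tuple(candidate[i:i + n])
                   for i in range(len(candidate) - n + 1))
    ref = Counter(tuple(reference[i:i + n])
                  for i in range(len(reference) - n + 1))
    total = sum(ref.values())
    if total == 0:
        return 0.0
    # A reference n-gram is matched at most as often as the candidate has it.
    matched = sum(min(count, cand[gram]) for gram, count in ref.items())
    return matched / total


# Hypothetical example sentences.
reference = "drain the oil then replace the bushing".split()
candidate = "replace the bushing".split()
print(rouge_n(candidate, reference, 1))  # 3 of 7 reference unigrams
print(rouge_n(candidate, reference, 2))  # 2 of 6 reference bigrams
```

Because the denominator counts reference n-grams, a short candidate that omits steps of the reference strategy is penalized even if everything it does say is correct.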

ROUGE-L considers not only the overlap of power-domain vocabulary but also its order. By comparing the longest common subsequence of the generated maintenance strategy and the reference maintenance strategy, it evaluates how well the generated strategy preserves the structure and important information of the reference. The calculation is performed as follows:

$$F_{lcs} = \frac{(1 + \beta^2)\, R_{lcs}\, P_{lcs}}{R_{lcs} + \beta^2 P_{lcs}}$$

where $R_{lcs}$ is the recall of the longest common subsequence; $P_{lcs}$ is the precision of the longest common subsequence; and $\beta$ is the weighting ratio of recall to precision. In this paper, $\beta = 1$.
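A minimal sketch of ROUGE-L follows, assuming a single reference and whitespace tokens. Here $R_{lcs}$ and $P_{lcs}$ are taken to be the longest-common-subsequence length divided by the reference length and the candidate length, respectively, which is the usual definition but is not spelled out in the text; the function names are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence, by dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference, beta=1.0):
    """ROUGE-L F-score; beta weights recall relative to precision."""
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0
    r_lcs = lcs / len(reference)  # assumed definition of R_lcs
    p_lcs = lcs / len(candidate)  # assumed definition of P_lcs
    return (1 + beta**2) * r_lcs * p_lcs / (r_lcs + beta**2 * p_lcs)


# Hypothetical example sentences.
reference = "drain the oil then replace the bushing".split()
candidate = "replace the bushing".split()
print(rouge_l(candidate, reference))  # LCS = 3, R = 3/7, P = 1
```

With $\beta = 1$ the score reduces to the harmonic mean of $R_{lcs}$ and $P_{lcs}$, so the short candidate is rewarded for being fully in order but penalized for covering less than half of the reference.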

To evaluate whether DoRA fine-tuning enhances TransQwen’s capacity to establish deeper knowledge associations and improves its performance on domain-specific question answering, we further investigate how its behavior changes. The model shifts from a traditional LLM’s data-driven, retrieval-based paradigm to a knowledge-driven generative framework tailored for professional diagnostics in the power domain. This paper selects 70 different transformer equipment failure scenarios and uses a scoring system to evaluate the ability of the TransQwen model to generate transformer fault maintenance strategies. The scoring system is based on the following quantities: $S_{sum}$, the total score of the model on a percentage (100-point) scale; $S_{avg}$, the average score rate of the model; $S_i$, the score obtained on a single fault scenario; and $S_i'$, the full score of that fault scenario.
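The scoring formula itself is not reproduced in the text, so the sketch below is only one plausible reading of the four quantities, stated as an assumption: $S_{sum}$ is taken as the total earned score rescaled to a 100-point scale, and $S_{avg}$ as the mean of the per-scenario score rates $S_i / S_i'$, expressed as a percentage. The function name and the example scores are hypothetical.

```python
def scenario_scores(scores, full_scores):
    """Aggregate per-scenario scores under the assumed definitions.

    scores[i] is S_i and full_scores[i] is S_i' for fault scenario i.
    """
    if not scores or len(scores) != len(full_scores):
        raise ValueError("need one full score per scenario score")
    # Assumed: total earned points rescaled to a 100-point scale.
    s_sum = 100.0 * sum(scores) / sum(full_scores)
    # Assumed: mean of per-scenario score rates, as a percentage.
    rates = [s / f for s, f in zip(scores, full_scores)]
    s_avg = 100.0 * sum(rates) / len(rates)
    return s_sum, s_avg


# Hypothetical scores for three scenarios, not experimental data.
s_sum, s_avg = scenario_scores([8, 15, 5], [10, 20, 10])
print(f"S_sum={s_sum:.2f} S_avg={s_avg:.2f}")
```

Under this reading the two aggregates differ whenever scenarios have different full scores, since $S_{sum}$ weights scenarios by their full score while $S_{avg}$ weights them equally.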

To validate the quality and credibility of the generated maintenance strategies, we benchmarked the outputs of different models against authoritative industry standards, including the “Transformer Operation and Maintenance Manual” and the “DL/T 572-2010 Transformer Operating Regulations” [26]. Additionally, we invited three senior engineers with over 10 years of transformer maintenance experience from State Grid Corporation to perform blind evaluations of the generated strategies based on completeness, technical correctness, operational safety, and compliance with real-world procedures. A standardized scoring rubric was used to ensure consistency across reviewers.

4.3.2. Dataset Preparation

From the constructed transformer fault QA-pair sample database, the historical fault records, equipment detection data, fault type labels, and fault maintenance records of transformer equipment were selected to construct the dataset. The data were randomly split into a training set (70%), a validation set (15%), and a test set (15%).

4.3.3. Experimental Result

Because traditional deep learning models have poor text generation ability, only the Qwen-7B-Chat model, the pre-trained Qwen model, and the TransQwen model were compared on the same test set in the maintenance strategy generation experiment. Taking the fracture fault of the A-phase bushing on the high-voltage side of the No. 1 main transformer at Wuzhong 110 kV Substation as a representative case, the maintenance strategies generated by the different models are shown in Table 7.

For this case, the Qwen-7B-Chat model generates only a coarse-grained description of the incident, failing to capture critical operational details necessary for actionable maintenance guidance. The pre-trained Qwen model adds the steps of insulating oil treatment and equipment testing, but answers only with general knowledge and troubleshooting methods. The TransQwen model is knowledge-driven: it introduces professional knowledge from the vertical field of transformer fault diagnosis and analyzes the fault case in detail. It generates more detailed and specific operation steps based on the specific fault conditions and potential fault causes, reducing ambiguity in the operation and ensuring that each step can be carried out in accordance with the standards. The maintenance strategy generated by the TransQwen model is comprehensive and systematic, effectively resolving the fault and improving operational reliability.

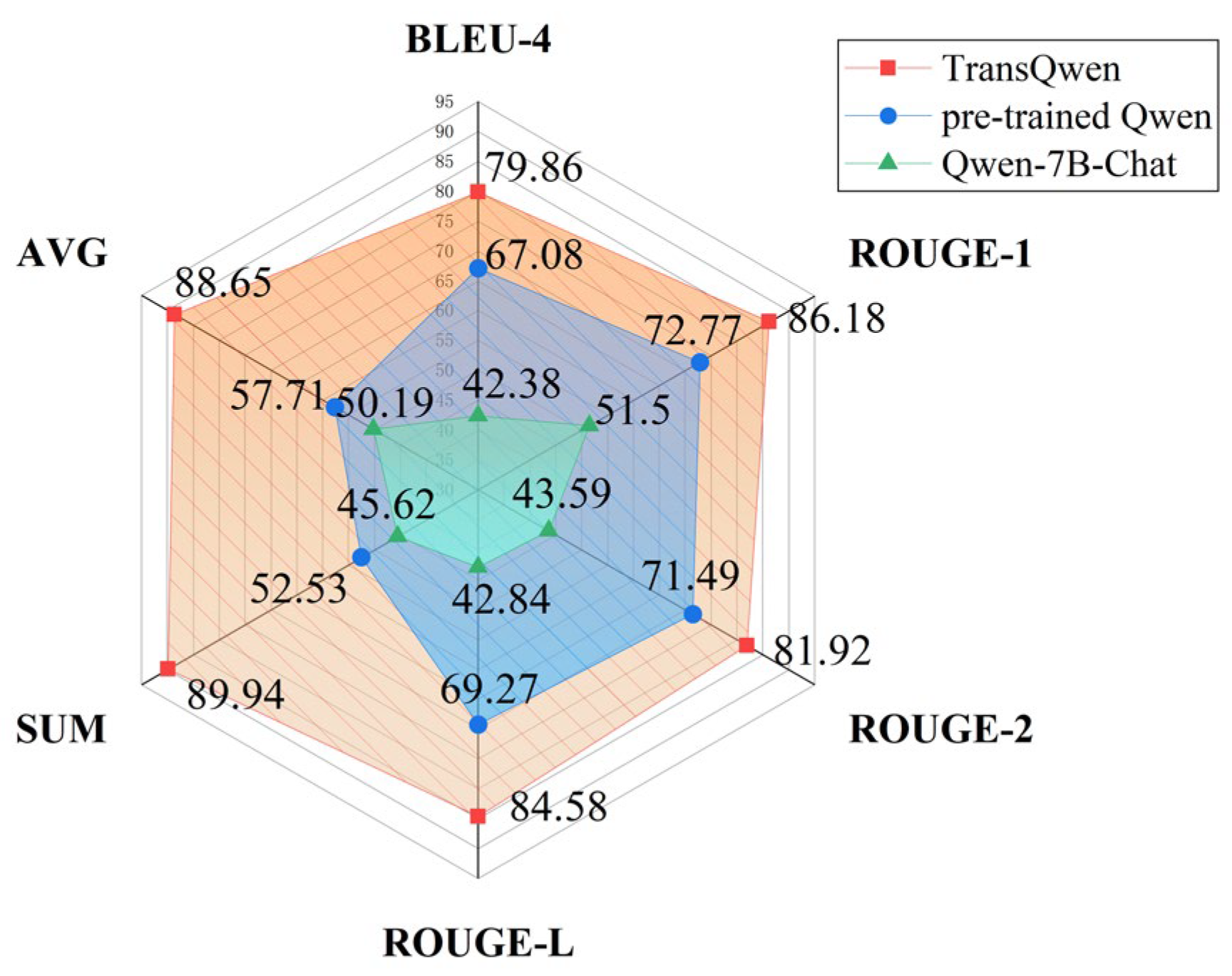

The evaluation results of the maintenance strategies generated by different models are shown in Figure 11.

The evaluation results of maintenance strategy generation indicate that the TransQwen model achieves optimal performance across all metrics. Specifically, for the BLEU-4 score, the TransQwen model attained 79.86, while the pre-trained Qwen model and the Qwen-7B-Chat model scored 67.08 and 42.38, respectively. The BLEU-4 score of TransQwen thus exceeds that of the pre-trained Qwen model and Qwen-7B-Chat by 12.78 and 37.48 points, respectively. These results demonstrate that TransQwen significantly enhances the accuracy of text generation compared to the baseline models, confirming its capability to generate precise and contextually appropriate maintenance strategies for specific fault scenarios.

For the ROUGE metrics, the TransQwen model outperformed the other models with ROUGE-1, ROUGE-2, and ROUGE-L scores that were 13.41, 10.43, and 15.31 points higher than those of the pre-trained Qwen model, and 34.68, 38.33, and 41.74 points higher than those of the Qwen-7B-Chat model, respectively. These results indicate that the recall performance of the maintenance strategies generated by the TransQwen model has been significantly enhanced. The generated strategies demonstrate higher similarity to the standard reference procedures and maintain superior text generation quality, even in scenarios involving long-form content.

From the scoring results across various transformer fault scenarios, the TransQwen model achieves the highest total score and average score rate among all models. Specifically, it outperforms the pre-trained Qwen model by 37.41 and 30.94 points, and the Qwen-7B-Chat model by 44.32 and 38.46 points, respectively. These results indicate that the TransQwen model is capable of generating maintenance strategies that best align with standard operational procedures across different fault conditions. The question-answering scores of the pre-trained Qwen model and the Qwen-7B-Chat model are relatively similar, suggesting that the retrieval-based pre-training method offers limited improvement in fault-specific question answering for transformer equipment. By contrast, the knowledge-driven TransQwen model demonstrates the ability to perform expert-level question answering in the power domain and to generate customized, accurate maintenance strategies tailored to specific fault scenarios, capabilities that are not achievable through pre-training alone.

Moreover, the expert assessments revealed that strategies generated by the TransQwen model aligned closely with those prescribed in actual industry manuals. Senior engineers from State Grid Corporation particularly noted the TransQwen model’s ability to capture nuanced procedural details, safety requirements, and diagnostic reasoning, which are essential in high-risk industrial applications. These findings confirm that the generated strategies are not only linguistically fluent but also technically valid and executable in practical power grid maintenance workflows.

5. Conclusions

This study introduces TransQwen, a transformer-specific large language model (LLM) designed for fault diagnosis and maintenance strategy generation. Leveraging DoRA fine-tuning, RoPE positional encoding, and domain-adaptive training on transformer-related corpora, TransQwen effectively learns industry-specific knowledge from unstructured textual data. Through comprehensive experimental validation, the following conclusions are drawn:

(1) In transformer fault type diagnosis tasks, TransQwen outperforms baseline LLMs (the pre-trained Qwen model, Qwen-7B-Chat, and ChatGLM4) by 9% to 22% across all evaluated metrics. Similarly, in fault state grading tasks, the model demonstrates 8% to 25% improvements over the same baselines.

(2) Under low-resource conditions, TransQwen maintains strong performance and generalization. When trained with as few as 100 samples, it exceeds the best-performing baseline by over 30% across all indicators, demonstrating high adaptability to real-world data scarcity.

(3) In the maintenance strategy generation task, TransQwen achieves the highest BLEU and ROUGE scores among all models. Compared to the pre-trained Qwen and Qwen-7B-Chat models, its scenario-based total and average scores improve by up to 44.32 and 38.46 points, respectively.

TransQwen not only enables accurate fault identification and semantic interpretation of transformer defect records but also facilitates the generation of precise, context-aware maintenance recommendations. This significantly enhances the efficiency, standardization, and reliability of power system maintenance decision-making. By enabling early detection, targeted intervention, and informed risk management, the model serves as a powerful tool for supporting condition-based and intelligent maintenance strategies in modern power grids.

6. Limitations

Despite the strong performance of the TransQwen model in transformer fault diagnosis and maintenance strategy generation, several limitations remain. First, the current study is restricted to unstructured textual data and does not integrate multi-modal sources such as infrared images, acoustic signals, or sensor measurements, which are essential for comprehensive diagnostics in real-world scenarios. Second, although TransQwen achieves accurate outputs, it lacks interpretability in its reasoning process, posing potential safety concerns in critical applications where decision traceability is required. Third, the model’s effectiveness is currently validated only on transformer-related cases, and its generalizability to other types of power equipment remains untested. Additionally, temporal dynamics—critical to understanding fault evolution—are not explicitly modeled within the current architecture.

Furthermore, as with most LLM-based methods, TransQwen may be susceptible to hallucinated outputs, especially in safety-critical environments where factual accuracy is paramount. The model’s performance also heavily depends on the quality and representativeness of constructed QA pairs, which may limit robustness if domain coverage is incomplete. These challenges highlight promising directions for future research, including (1) the incorporation of multi-modal data for enriched situational understanding, (2) improved interpretability mechanisms to support verifiable reasoning, (3) lightweight real-time deployment architectures for field-level applications, and (4) the exploration of zero-shot and few-shot adaptability across diverse power system contexts.

Author Contributions

Conceptualization, Z.X., B.W., H.M., J.Z. (Jiaxin Zhang), H.Z. and J.Z. (Jinhui Zhou); Methodology, Z.X., B.W., H.M., J.Z. (Jiaxin Zhang), H.Z. and J.Z. (Jinhui Zhou); Software, Z.X., B.W., H.M., J.Z. (Jiaxin Zhang), H.Z. and J.Z. (Jinhui Zhou); Validation, Z.X., B.W., H.M., J.Z. (Jiaxin Zhang), H.Z. and J.Z. (Jinhui Zhou); Writing—original draft, Z.X., B.W., H.M., J.Z. (Jiaxin Zhang), H.Z. and J.Z. (Jinhui Zhou). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Project of State Grid Corporation of China, grant number 5400-20241918A-1-1-ZN.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Jinhui Zhou was employed by the State Grid Zhejiang Electric Power Corporation and State Grid Wenzhou Electric Power Supply Company. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Cabral, T.W.; Gomes, F.V.; de Lima, E.R.; Filho, J.C.S.S.; Meloni, L.G.P. Kolmogorov–Arnold Network in the Fault Diagnosis of Oil-Immersed Power Transformers. Sensors 2024, 24, 7585.

2. Villa-Ávila, E.; Ochoa-Correa, D.; Arévalo, P. Advancements in Power Converter Technologies for Integrated Energy Storage Systems: Optimizing Renewable Energy Storage and Grid Integration. Processes 2025, 13, 1819.

3. Jiang, Y.W.; Li, L.; Li, Z.W. An Information Mining Method of Power Transformer Operation and Maintenance Texts Based on Deep Semantic Learning. Proc. CSEE 2019, 39, 4162–4172.

4. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

5. Mandic, D.P.; Chambers, J.A. On the choice of parameters of the cost function in nested modular RNN’s. IEEE Trans. Neural Netw. 2000, 11, 315–322.

6. Wei, Z.; Qu, S.; Zhao, L.; Shi, Q.; Zhang, C. A Position- and Similarity-Aware Named Entity Recognition Model for Power Equipment Maintenance Work Orders. Sensors 2025, 25, 2062.

7. Shu, J.W.; Yang, T.; Geng, Y.N. Joint Extraction Method for Overlapping Entity Relationships in the Construction of Electric Power Knowledge Graph. High Volt. Eng. 2024, 50, 4912–4922.

8. Wei, S.R.; Zhang, X.; Fu, Y. Early Fault Warning and Diagnosis of Offshore Wind DFIG Based on GRA-LSTM-Stacking Model. Proc. CSEE 2021, 41, 2373–2383.

9. Yang, Y.J.; Dong, Z.; Yang, F. Fault Diagnosis of Double Bridge Parallel Excitation Power Unit Based on 1D-CNN-LSTM Hybrid Neural Network Model. Power Syst. Technol. 2021, 45, 2025–2032.

10. Xu, Y.; Wang, H.; Xu, F.; Bi, S.; Ye, J. A Sensor Data-Driven Fault Diagnosis Method for Automotive Transmission Gearboxes Based on Improved EEMD and CNN-BiLSTM. Processes 2025, 13, 1200.

11. Yin, Z.; Shi, L.; Yuan, Y.; Tan, X.; Xu, S. A Study on a Knowledge Graph Construction Method of Safety Reports for Process Industries. Processes 2023, 11, 146.

12. OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

13. Hyung, W.; Le, H.; Shayne, L. Scaling Instruction-Finetuned Language Models. J. Mach. Learn. Res. 2024, 25, 1–53.

14. He, J.; Liu, X.; Lei, Y.; Cao, A.; Xiong, J. An End-to-End General Language Model (GLM)-4-Based Milling Cutter Fault Diagnosis Framework for Intelligent Manufacturing. Sensors 2025, 25, 2295.

15. Kiwan, M.; Alexei, C.; Brandon, L. Alpaca: Intermittent Execution without Checkpoints. Proc. ACM Program 2017, 1, 1–30.

16. Saha, T.; Jayashree, S.R.; Saha, S.; Bhattacharyya, P. BERT-Caps: A Transformer-Based Capsule Network for Tweet Act Classification. IEEE Trans. Comput. Soc. Syst. 2020, 7, 1168–1179.

17. Bai, J.Z.; Bai, S.; Chu, Y.F.; Cui, Z.Y.; Dang, K. Qwen Technical Report. arXiv 2023, arXiv:2309.16609.

18. Wang, J.G.; Ji, X.; Wu, T.X. Device defect detection in power field based on pre-trained language model. Electr. Meas. Instrum. 2022, 59, 180–186.

19. Jia, J.; Yang, Q.; Fu, H. Research on Pre-training Language Model Construction and Text Semantic Analysis Based on Power Equipment Big Data. Proc. CSEE 2023, 43, 1027–1037.

20. Che Mid, E.; Dua, V. Fault Detection in Wastewater Treatment Systems Using Multiparametric Programming. Processes 2018, 6, 231.

21. Li, G.; Fang, H.; Liu, Y.P. Large-model Drive Technology in New Power System: Status, Challenges and Prospects. High Volt. Eng. 2024, 50, 2864–2878.

22. Gao, S.; Li, Z.Y.; Yang, M.H.; Cheng, M.M.; Han, J.; Torr, P. Large-Scale Unsupervised Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 7457–7476.

23. Zhang, G.; Shao, L.; Wu, H.; Gu, X.; Zhang, X. Dora: A Low-Latency Partial Reconfiguration Controller for Reconfigurable System. IEEE Trans. Circuits Syst. II Express Briefs 2025, 72, 268–272.

24. GB/T 1094; Power Transformer. Standardization Administration of China: Beijing, China, 2013.

25. GB/T 19517; National Safety Technical Code for Electrical Equipment. Standardization Administration of China: Beijing, China, 2023.

26. DL/T 572-2010; Transformer Operating Regulations. National Energy Administration: Beijing, China, 2023.

Figure 1.

The architectural framework and training scenarios of TransQwen.

Figure 2.

Architectural comparison of original self-attention and GQA mechanisms.

Figure 3.

Schematic diagram of DoRA fine-tuning mechanism.

Figure 4.

Schematic diagram of DoRA fine-tuning process.

Figure 5.

Schematic diagram of RoPE’s rotational encoding training process.

Figure 6.

Loss analysis during DoRA fine-tuning process.

Figure 7.

Diagnostic performance benchmarking of transformer fault detection algorithms.

Figure 8.

Comparative experimental results of transformer fault diagnosis under low-sample-scale conditions using multiple models: (a) the precision rate of the model under different sample conditions; (b) the recall rate of the model under different sample conditions; (c) the F1 rate of the model under different sample conditions.

Figure 9.

Experimental results of transformer fault state grading diagnosis.

Figure 10.

Comparative experimental results of transformer fault state grading diagnosis under low-sample-scale conditions: (a) the precision rate of the model under different sample conditions; (b) the recall rate of the model under different sample conditions; (c) the F1 rate of the model under different sample conditions.

Figure 11.

Assessment results of maintenance strategy generation capability.

Table 1.

The initial training corpus of TransQwen.

| Initial Training Corpus | Specific Content and Examples | Number of Characters |
|---|---|---|
| Operation regulations and guidelines | A total of 74 regulations, standards, and books on daily operation, monitoring, maintenance, and repair during the operation of transformers were selected, e.g., “Transformer Operation and Maintenance Manual”, “Transformer Fault Diagnosis and Repair”, etc. | 1.4 × 10^7 |
| Relevant technical standards and test specifications | A total of 762 items of text data related to transformer equipment, including International Electrotechnical Commission (IEC) standards, IEEE standards, industry standards, and enterprise standards, were selected, e.g., “IEC 60076 Series Standards”, “GB 1094 Series Power Transformer Standards”, “DL/T 572-2010 Transformer Operating Regulations”, etc. | 9.9 × 10^6 |
| Typical defect and fault cases | A total of 2318 typical transformer fault cases and defect records from multiple power supply bureaus were selected. Among them, there were 521 faults related to the casing, 732 faults related to the body, 496 faults related to the protection device, 276 faults related to the pressure-regulating device, 251 faults related to the cooling device, and 42 other faults. | 1.3 × 10^7 |

Table 2.

Question-and-answer pair dataset for TransQwen.

| Sample Database | Example | Number of Question-and-Answer Pairs |
|---|---|---|
| Operation regulations and guidelines | Q: What standards should be followed for the maintenance of on-load tap changers in transformers? A: The maintenance work of the on-load tap changer of the transformer should be carried out in accordance with the regulations of the manufacturer or by referring to the relevant standard DL/T 574. Maintenance work includes removing oxide films and oil stains, measuring the DC resistance of windings, and recording tap changer conditions, etc. Standard-compliant maintenance ensures reliable operation of the on-load tap changer. | 17,526 |
| Relevant technical standards and test specifications | Q: What contents are included in the handover test report during the installation of a transformer? A: Technical contracts for transformer orders and technical documents for design changes; the installation and operation manual, certificate of conformity, drawings and factory test report provided by the manufacturer; records of the entire installation process in accordance with the requirements of GB 50148 and relevant regulations of the manufacturer, etc. | 9.9 × 10⁶ |
| Typical defect and fault cases | Q: Under what circumstances is it necessary to re-spray composite insulators or porcelain insulators that have been sprayed with RTV? A: When the hydrophobicity of composite insulators or porcelain insulators sprayed with RTV drops to grade 3 or below, re-spraying should be considered. In addition, if the coating is damaged or fails, it also needs to be re-sprayed. By re-spraying composite insulators or porcelain insulators coated with RTV, the good insulation performance of insulators can be maintained, ensuring the safe operation of equipment. | 1.3 × 10⁷ |
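As an illustration of how a question-and-answer pair like those in Table 2 might be stored for supervised fine-tuning, the sketch below wraps one pair as a chat-style JSON record. The schema (`source`, `messages`, `role`, `content`) and the helper `to_chat_record` are assumptions for illustration only; the paper does not specify its storage format.

```python
import json


def to_chat_record(question: str, answer: str, source: str) -> dict:
    """Wrap one Q&A pair as a chat-style record (hypothetical schema)."""
    return {
        "source": source,  # which sample database the pair came from
        "messages": [
            {"role": "user", "content": question.strip()},
            {"role": "assistant", "content": answer.strip()},
        ],
    }


# Example pair taken from Table 2 (answer shortened to its first sentence).
record = to_chat_record(
    question=(
        "What standards should be followed for the maintenance of "
        "on-load tap changers in transformers?"
    ),
    answer=(
        "The maintenance work of the on-load tap changer of the transformer "
        "should be carried out in accordance with the regulations of the "
        "manufacturer or by referring to the relevant standard DL/T 574."
    ),
    source="operation regulations and guidelines",
)

# One JSON object per line (JSON Lines) is a common layout for such datasets.
line = json.dumps(record, ensure_ascii=False)
print(line)
```

Keeping the source category on each record makes it straightforward to count or rebalance pairs per sample database.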

Table 3.

Fine-tuning parameters of TransQwen.

| Training Parameter | Set Value | Training Parameter | Set Value |
|---|---|---|---|
| Learning rate | 5 × 10⁻⁵ | Max samples | 100,000 |
| Fine-tuning type | DoRA | Maximum gradient norm | 1 |
| Max length | 1024 | Gradient accumulation steps | 16 |
| Batch size | 2 | DoRA rank | 8 |
| torch_dtype | fp16 | Epochs | 10 |
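The settings in Table 3 can be collected in a small configuration object, which also makes the effective batch size explicit. This is a minimal sketch: the class `FineTuneConfig` and its field names are hypothetical and do not correspond to any particular training framework, and the number of devices is not reported in the table, so it is left as a parameter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuneConfig:
    """Fine-tuning settings from Table 3 (field names are illustrative)."""

    learning_rate: float = 5e-5
    finetuning_type: str = "DoRA"
    max_length: int = 1024
    per_device_batch_size: int = 2
    torch_dtype: str = "fp16"
    max_samples: int = 100_000
    max_grad_norm: float = 1.0
    gradient_accumulation_steps: int = 16
    dora_rank: int = 8
    num_epochs: int = 10

    def effective_batch_size(self, num_devices: int = 1) -> int:
        """Samples contributing to each optimizer step.

        num_devices is an assumption; the table does not state it.
        """
        return (
            self.per_device_batch_size
            * self.gradient_accumulation_steps
            * num_devices
        )


cfg = FineTuneConfig()
print(cfg.effective_batch_size())
```

With a batch size of 2 and 16 accumulation steps, each optimizer step on a single device aggregates gradients from 32 samples.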

Table 4.

Taxonomy of transformer equipment failure types and classification criteria.

| Fault Type | Specific Content and Examples |
|---|---|
| Casing faults | Seepage and contamination caused by moisture or other contaminants entering the interior or surface of the casing; insulation breakdown caused by failed insulating materials forming conductive paths, etc. |
| Body faults | Core faults caused by abnormal magnetic circuits or core cracks; shell damage caused by mechanical damage or corrosion of the oil tank; structural deformation or component displacement caused by vibration, shock, or unstable foundations during long-term operation, etc. |
| Protection device faults | Relays failing to operate correctly; misoperation caused by faults in the circuit breaker control system; communication interruption or data transmission errors between protection devices or with the monitoring system, etc. |
| Pressure-regulating device faults | Malfunction of the mechanical components of the tap changer, such as the switch rod and the driving mechanism; erosion, poor contact, or insulation damage at the electrical contacts of the tap changer, etc. |
| Cooling device faults | Poor sealing of the cooling device, pipe rupture, and leakage at connection points; damaged or abnormally operating cooling fans in air-cooled transformers; oil pump failure in the oil cooling system, etc. |
| Other faults | Lightning strike faults during thunderstorms; faults caused by adverse environmental factors such as pollution, humidity, and excessive temperature around the transformer, etc. |

Table 5.

Taxonomy of transformer fault states and grading standards.

| Fault Status Level | Specific Content and Examples |
|---|---|
| Normal defect | During operation, the transformer equipment deviates from the operation standard, but the deviation does not exceed the allowable range and has little impact on the safe operation of the equipment within a certain period of time. |
| Serious defect | During operation, the transformer equipment deviates from the operation standard beyond the allowable range, posing a significant threat to personnel and equipment. Operation can be maintained for the time being, but an accident will result if the defect is not dealt with in time. |
| Emergency defect | During operation, the transformer equipment deviates from the operation standard beyond the allowable range and directly threatens safe operation. The defect needs to be dealt with promptly; otherwise, accidents may occur at any time. |

Table 6.

Sample taxonomy of transformer fault state grading standards.

| Sample Fault Description | Fault Status Level |
|---|---|
| The transformer’s bushing has been leaking oil continuously for more than 10 min. | Normal |
| The secondary cables on the transformer body are not placed on the cable rack and are not bound | Normal |
| More than half of the cooling fans are not operating normally | Serious |
| Under normal load, the temperature rise of the transformer is abnormal and keeps increasing | Serious |
| The main transformer body is severely leaking oil (one drop or more every 10 s) | Serious |
| The bushings of the transformer equipment have severe damage and discharge phenomena | Emergency |
| Severe oil leakage or spraying causes the oil level to drop below the indicated limit of the oil level gauge | Emergency |
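Graded samples such as those in Table 6 lend themselves to a few-shot prompt for fault state grading. The sketch below is one possible arrangement, not the prompt used in the paper: `FaultLevel`, `FEW_SHOT`, `build_prompt`, and `parse_level` are hypothetical names, and the prompt wording is an assumption.

```python
from enum import Enum


class FaultLevel(Enum):
    NORMAL = "Normal"
    SERIOUS = "Serious"
    EMERGENCY = "Emergency"


# One labeled example per level, taken from Table 6.
FEW_SHOT = [
    ("The secondary cables on the transformer body are not placed on the "
     "cable rack and are not bound", FaultLevel.NORMAL),
    ("More than half of the cooling fans are not operating normally",
     FaultLevel.SERIOUS),
    ("The bushings of the transformer equipment have severe damage and "
     "discharge phenomena", FaultLevel.EMERGENCY),
]


def build_prompt(description: str, examples=FEW_SHOT) -> str:
    """Assemble a few-shot grading prompt ending with the unlabeled defect."""
    levels = ", ".join(level.value for level in FaultLevel)
    blocks = [f"Grade the transformer defect as one of: {levels}."]
    for text, level in examples:
        blocks.append(f"Defect: {text}\nLevel: {level.value}")
    blocks.append(f"Defect: {description}\nLevel:")
    return "\n\n".join(blocks)


def parse_level(reply: str) -> FaultLevel:
    """Read the level from the first word of a model reply.

    Raises ValueError if the reply is empty or names no known level.
    """
    tokens = reply.strip().split()
    if not tokens:
        raise ValueError("empty reply")
    return FaultLevel(tokens[0].strip(".,:;").capitalize())


prompt = build_prompt(
    "Under normal load, the temperature rise of the transformer is abnormal "
    "and keeps increasing"
)
print(prompt)
```

Parsing only the first word of the reply avoids false matches on words such as "normally" that may appear later in a model's explanation.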

Table 7.

Comparative generation results of maintenance strategies across different models.

| Model | Answer |
|---|---|
| Fault Description | At the high-voltage side bushing of Phase A of the No. 1 main transformer of Wuzhong 110 kV Substation, insulating oil flows downward along the upper edge of the porcelain bushing and surrounds it. The No. 1 main transformer is an oil-immersed self-cooling transformer, and the 110 kV side casing is a high-voltage oil–paper capacitor casing, with a capacitance of 308 pF, a creepage distance of 3906 mm, and a weight of about 80 kg. |
| Qwen-7B-Chat | 1. Power outage: Circuit breaker opens; 2. Check whether the gasket is damaged; 3. Repair: Repair cracks or ruptures in the casing; 4. Inspection: Inspect the faulty transformer again; 5. Trial operation: If the trial operation is normal, then the No. 1 main transformer can be restored to operation. If the trial operation is not normal, then it is necessary to conduct another inspection and repair. |
| Pre-trained Qwen | 1. On-site inspection: Determine the range and severity of the leakage; 2. Disassemble the casing: Disassemble the faulty casing; 3. Insulating oil treatment: Collect the leaked insulating oil and handle it properly; 4. Equipment testing: Conduct insulation resistance tests; 5. Recording and reporting; 6. Report submission: Submit the maintenance process and result report to the relevant department. |
| TransQwen | 1. Power off immediately; 2. On-site isolation: Determine the leakage location; 3. Drain the leaked oil; 4. Remove the casing: Confirm whether there is any fracture at the connection between the cable threading aluminum tube and the conical porcelain sleeve at the lower part of the casing; 5. Replace the high-voltage bushing: Ensure that all components are tightly connected; 6. Handling leaked insulating oil: Test the oil quality; 7. Repeat the infrared temperature measurement of the equipment; 8. Power-on test: Electrified inspection; 9. Regular inspection: Infrared detection is carried out in accordance with the “Test Regulations for Condition-based Maintenance of Power Transmission and Transformation Equipment”; 10. Personnel training and improvement: Update the maintenance manual and operation specifications. |
| Standard operation | 1. Power off immediately; 2. On-site isolation; 3. Safety protection; 4. Confirmation of leakage location; 5. Disassembly and replacement; 6. Insulating oil treatment; 7. Insulation test; 8. Oil quality testing; 9. Power-on test; 10. Monitor the operating status of the equipment; 11. Regular inspection and maintenance; 12. Strengthen professional training. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).