Load Frequency Control of Power Systems with an Energy Storage System Based on Safety Reinforcement Learning

Abstract

1. Introduction

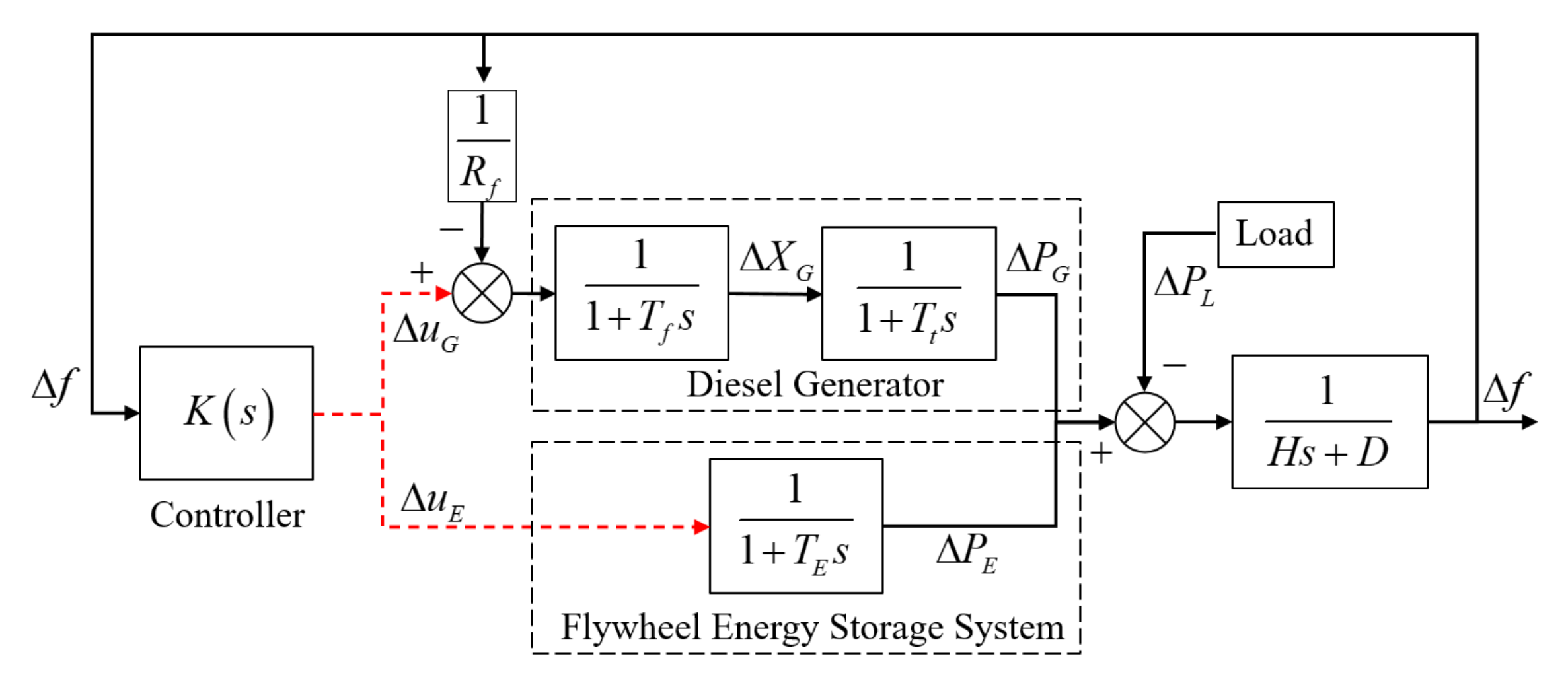

2. Power System Frequency Response Model

2.1. Linearized LFC Model

2.2. Nonlinear Behaviors

3. Main Results

3.1. Constrained Markov Decision Process

3.2. LSTM-Based Cost Critic Network

3.3. The Proposed Primal-Dual DDPG

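1. Target reward critic value calculation: A mini-batch of $K$ interaction experiences $(s_k, a_k, r_k, s_{k+1})$ is sampled from the experience pool, and the target reward critic value is computed as $$y_k = r_k + \gamma\, Q'\big(s_{k+1}, \mu'(s_{k+1} \mid \theta^{\mu'}) \mid \theta^{Q'}\big),$$ where $\theta^{\mu'}$ and $\theta^{Q'}$ denote the parameters of the target actor network $\mu'$ and the target reward critic network $Q'$, respectively, $\gamma$ is the discount factor, $r_k$ is the immediate reward at step $k$, and $s_{k+1}$ is the next state in the sampled experience tuple.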

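2. Updating the reward critic network: The parameters $\theta^{Q}$ of the reward critic network $Q$ are updated by minimizing the mean squared error between the target reward critic values $y_k$ computed in Step 1 and the current critic estimates: $$L(\theta^{Q}) = \frac{1}{K}\sum_{k=1}^{K}\big(y_k - Q(s_k, a_k \mid \theta^{Q})\big)^2.$$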

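3. Action gradient calculation: Based on Equation (18), the action gradient used to train the actor combines a reward term and a cost term, whose derivatives with respect to the actor parameters are expanded by the chain rule, as shown in Equations (22) and (23). The factors in these expansions, namely the gradient of the reward critic with respect to the action, the gradient of the cost prediction network with respect to the action, and the gradient of the actor output with respect to its parameters, are obtained from the reward critic network, the cost prediction network, and the actor network, respectively, using the backpropagation algorithm. The parameters of the actor network are then updated by gradient descent with the learning rate of the actor network.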

4. Dual variable gradient calculation: Based on Equation (18), the gradient with respect to the dual variable is calculated as in Equation (25). The dual variable is then updated via gradient descent, as shown in Equation (26), using a separate learning rate for the dual variable.

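5. Soft update of target networks: The target actor network and the target reward critic network are updated using the soft update rule $$\theta^{\mu'} \leftarrow \tau\,\theta^{\mu} + (1-\tau)\,\theta^{\mu'}, \qquad \theta^{Q'} \leftarrow \tau\,\theta^{Q} + (1-\tau)\,\theta^{Q'},$$ where $\theta^{\mu}$ and $\theta^{Q}$ are the parameters of the actor and reward critic networks, $\theta^{\mu'}$ and $\theta^{Q'}$ are those of their target counterparts, and $\tau$ is a preset soft update parameter.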
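As an illustration of Steps 1 and 2, the following Python sketch computes the target reward critic values and the critic loss for a mini-batch. It is not the authors' implementation: states and actions are assumed to be scalars, and the target actor, target critic, and critic are replaced by hypothetical closed-form functions (`mu_target`, `q_target`, `q`) so that the arithmetic can be checked by hand. In the method described above these would be neural networks, and the LSTM-based cost critic is not modeled here.

```python
from typing import Callable, List, Tuple

# One experience tuple (s_k, a_k, r_k, s_{k+1}); scalars keep the sketch readable.
Transition = Tuple[float, float, float, float]


def target_values(batch: List[Transition],
                  target_actor: Callable[[float], float],
                  target_critic: Callable[[float, float], float],
                  gamma: float) -> List[float]:
    """Step 1: y_k = r_k + gamma * Q'(s_{k+1}, mu'(s_{k+1}))."""
    return [r + gamma * target_critic(s_next, target_actor(s_next))
            for (_s, _a, r, s_next) in batch]


def critic_loss(batch: List[Transition],
                critic: Callable[[float, float], float],
                targets: List[float]) -> float:
    """Step 2: mean squared error between y_k and Q(s_k, a_k)."""
    errors = [(y - critic(s, a)) ** 2
              for (s, a, _r, _s_next), y in zip(batch, targets)]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    # Hypothetical stand-ins for the target actor, target critic and critic.
    mu_target = lambda s: -0.5 * s
    q_target = lambda s, a: -(s ** 2) - a ** 2
    q = lambda s, a: -(s ** 2)
    batch = [(0.0, 0.1, 1.0, 0.5), (0.5, -0.2, 0.0, 1.0)]
    ys = target_values(batch, mu_target, q_target, gamma=0.9)
    print(ys, critic_loss(batch, q, ys))
```

Setting `gamma=0.0` reduces each target to the immediate reward, which is a quick sanity check on the Step 1 formula.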
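The chain-rule structure of Step 3 can be sketched in the same scalar setting. Purely for illustration, we assume a hypothetical linear actor $a = w s + b$ and an actor loss of the form $\mathrm{mean}(-Q + \lambda C)$ minimized by gradient descent, where $\lambda$ is the dual variable and $C$ is the output of the cost prediction network; the exact sign convention of Equation (18) may differ. The callables `dq_da` and `dc_da` are hypothetical stand-ins for the action gradients that backpropagation through the reward critic and the cost prediction network would provide.

```python
from typing import Callable, List, Tuple

Grad = Callable[[float, float], float]  # (s, a) -> partial derivative w.r.t. a


def actor_gradient(states: List[float], w: float, b: float, lam: float,
                   dq_da: Grad, dc_da: Grad) -> Tuple[float, float]:
    """Gradient of the assumed actor loss mean(-Q(s, a) + lam * C(s, a)).

    The hypothetical actor is linear, a = w * s + b, so da/dw = s and
    da/db = 1. The chain rule gives dJ/dw = mean((-dQ/da + lam * dC/da) * s).
    """
    grad_w = grad_b = 0.0
    for s in states:
        a = w * s + b
        # Action gradient combining the reward critic and cost predictor terms.
        g_a = -dq_da(s, a) + lam * dc_da(s, a)
        grad_w += g_a * s    # multiply by da/dw
        grad_b += g_a        # multiply by da/db = 1
    n = len(states)
    return grad_w / n, grad_b / n


def actor_step(states: List[float], w: float, b: float, lam: float,
               dq_da: Grad, dc_da: Grad, lr: float) -> Tuple[float, float]:
    """One gradient-descent step on the actor parameters (w, b)."""
    grad_w, grad_b = actor_gradient(states, w, b, lam, dq_da, dc_da)
    return w - lr * grad_w, b - lr * grad_b
```

For example, with the hypothetical choices $Q(s,a) = -(a-s)^2$ and $C(s,a) = a^2$, one would pass `dq_da = lambda s, a: -2.0 * (a - s)` and `dc_da = lambda s, a: 2.0 * a`; a finite-difference check of the loss then confirms the chain-rule gradient.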
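For Step 4, a common way to write the dual update is sketched below. This is an assumption, since Equations (25) and (26) are not reproduced here. The sketch takes the Lagrangian term $\lambda(\bar{C} - d)$, where $\bar{C}$ is the mini-batch mean of the predicted cost and $d$ is a hypothetical cost limit (`cost_limit`). Its derivative with respect to $\lambda$ is $\bar{C} - d$, so $\lambda$ grows while the constraint is violated and is then projected back onto $\lambda \ge 0$. Depending on how the Lagrangian is signed, this step appears as ascent or as descent on the corresponding objective.

```python
from typing import List


def dual_update(lam: float, cost_estimates: List[float],
                cost_limit: float, lr: float) -> float:
    """Projected dual step: lam <- max(0, lam + lr * (mean cost - cost_limit)).

    (mean cost - cost_limit) is the derivative of the assumed Lagrangian term
    lam * (C - d) with respect to lam; the projection keeps lam >= 0.
    """
    violation = sum(cost_estimates) / len(cost_estimates) - cost_limit
    return max(0.0, lam + lr * violation)
```

Repeating this step while the predicted cost stays above the limit increases $\lambda$, which in turn weights the cost term more heavily in the actor update of Step 3.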
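Step 5 is the standard Polyak-style soft update. The sketch below applies it element-wise to parameters stored as flat Python lists, a simplification assumed for illustration. A small $\tau$ makes the target networks track the online networks slowly, which keeps the target values used in Step 1 from changing abruptly.

```python
from typing import List


def soft_update(target: List[float], online: List[float], tau: float) -> List[float]:
    """Element-wise soft update: theta' <- tau * theta + (1 - tau) * theta'.

    Parameters are flat lists here, a simplification assumed for this sketch.
    """
    if not 0.0 < tau <= 1.0:
        raise ValueError("tau must lie in (0, 1]")
    if len(target) != len(online):
        raise ValueError("parameter vectors must have the same length")
    return [tau * p + (1.0 - tau) * tp for tp, p in zip(target, online)]
```

With $\tau = 1$ the target is simply overwritten by the online parameters; repeated application with a small $\tau$ converges geometrically toward them.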

4. Case Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Zhao, C.; Topcu, U.; Li, N.; Low, S. Design and stability of load-side primary frequency control in power systems. IEEE Trans. Autom. Control 2014, 59, 1177–1189.

2. Shi, T.; Wang, C.; Chen, Z. The multi-point cooperative control strategy for electrode boilers supporting grid frequency regulation. Processes 2025, 13, 785.

3. Singh, V.P.; Kishor, N.; Samuel, P. Load frequency control with communication topology changes in smart grid. IEEE Trans. Ind. Inform. 2016, 12, 1943–1952.

4. El-Rifaie, A.M. A novel lyrebird optimization algorithm for enhanced generation rate-constrained load frequency control in multi-area power systems with proportional integral derivative controllers. Processes 2025, 13, 949.

5. Huynh, V.V.; Minh, B.L.N.; Amaefule, E.N.; Tran, A.-T.; Tran, P.T. Highly robust observer sliding mode based frequency control for multi area power systems with renewable power plants. Electronics 2021, 10, 274.

6. Zhang, C.-K.; Jiang, L.; Wu, Q.; He, Y.; Wu, M. Delay-dependent robust load frequency control for time delay power systems. IEEE Trans. Power Syst. 2013, 28, 2192–2201.

7. Miao, Z.; Meng, X.; Li, X.; Liang, B.; Watanabe, H. Enhancement of net output power of thermoelectric modules with a novel air-water combination. Appl. Therm. Eng. 2025, 258, 124745.

8. Ding, S.; Liu, C.; Fan, Z.; Hang, J. Lumped parameter adaptation-based automatic MTPA control for IPMSM drives by using stator current impulse response. IEEE Trans. Energy Convers. 2025.

9. Lin, L.; Liu, J.; Huang, N.; Li, S.; Zhang, Y. Multiscale spatio-temporal feature fusion based non-intrusive appliance load monitoring for multiple industrial industries. Appl. Soft Comput. 2024, 167, 112445.

10. Lin, L.; Ma, X.; Chen, C.; Xu, J.; Huang, N. Imbalanced industrial load identification based on optimized CatBoost with entropy features. J. Electr. Eng. Technol. 2024, 19, 4817–4832.

11. Rong, Q.; Hu, P.; Yu, Y.; Wang, D.; Cao, Y.; Xin, H. Virtual external perturbance-based impedance measurement of grid-connected converter. IEEE Trans. Ind. Electron. 2024, 72, 2644–2654.

12. Kusekar, S.K.; Pirani, M.; Birajdar, V.D.; Borkar, T.; Farahani, S. Toward the progression of sustainable structural batteries: State-of-the-art review. SAE Int. J. Sustain. Transp. Energy Environ. Policy 2025, 5, 283–308.

13. Akbari, E.; Naghibi, A.F.; Veisi, M.; Shahparnia, A.; Pirouzi, S. Multi-objective economic operation of smart distribution network with renewable-flexible virtual power plants considering voltage security index. Sci. Rep. 2024, 14, 19136.

14. Navesi, R.B.; Jadidoleslam, M.; Moradi-Shahrbabak, Z.; Naghibi, A.F. Capability of battery-based integrated renewable energy systems in the energy management and flexibility regulation of smart distribution networks considering energy and flexibility markets. J. Energy Storage 2024, 98, 113007.

15. Tan, W. Unified tuning of PID load frequency controller for power systems via IMC. IEEE Trans. Power Syst. 2009, 25, 341–350.

16. Yousef, H.A.; Khalfan, A.-K.; Albadi, M.H.; Hosseinzadeh, N. Load frequency control of a multi-area power system: An adaptive fuzzy logic approach. IEEE Trans. Power Syst. 2014, 29, 1822–1830.

17. Liu, F.; Li, Y.; Cao, Y.; She, J.; Wu, M. A two-layer active disturbance rejection controller design for load frequency control of interconnected power system. IEEE Trans. Power Syst. 2015, 31, 3320–3321.

18. Liao, K.; Xu, Y. A robust load frequency control scheme for power systems based on second-order sliding mode and extended disturbance observer. IEEE Trans. Ind. Inform. 2017, 14, 3076–3086.

19. Ersdal, A.M.; Imsland, L.; Uhlen, K. Model predictive load-frequency control. IEEE Trans. Power Syst. 2015, 31, 777–785.

20. Ogar, V.N.; Hussain, S.; Gamage, K.A. Load frequency control using the particle swarm optimisation algorithm and PID controller for effective monitoring of transmission line. Energies 2023, 16, 5748.

21. Liu, X.; Wang, C.; Kong, X.; Zhang, Y.; Wang, W.; Lee, K.Y. Tube-based distributed MPC for load frequency control of power system with high wind power penetration. IEEE Trans. Power Syst. 2023, 39, 3118–3129.

22. Yu, T.; Wang, H.; Zhou, B.; Chan, K.W.; Tang, J. Multi-agent correlated equilibrium Q(λ) learning for coordinated smart generation control of interconnected power grids. IEEE Trans. Power Syst. 2014, 30, 1669–1679.

23. Singh, V.P.; Kishor, N.; Samuel, P. Distributed multi-agent system-based load frequency control for multi-area power system in smart grid. IEEE Trans. Ind. Electron. 2017, 64, 5151–5160.

24. Daneshfar, F. Intelligent load-frequency control in a deregulated environment: Continuous-valued input, extended classifier system approach. IET Gener. Transm. Distrib. 2013, 7, 551–559.

25. Yin, L.; Yu, T.; Zhou, L.; Huang, L.; Zhang, X.; Zheng, B. Artificial emotional reinforcement learning for automatic generation control of large-scale interconnected power grids. IET Gener. Transm. Distrib. 2017, 11, 2305–2313.

26. Yan, Z.; Xu, Y. Data-driven load frequency control for stochastic power systems: A deep reinforcement learning method with continuous action search. IEEE Trans. Power Syst. 2018, 34, 1653–1656.

27. Yang, F.; Huang, D.; Li, D.; Lin, S.; Muyeen, S.; Zhai, H. Data-driven load frequency control based on multi-agent reinforcement learning with attention mechanism. IEEE Trans. Power Syst. 2022, 38, 5560–5569.

28. Zhang, M.; Dong, S.; Wu, Z.-G.; Chen, G.; Guan, X. Reliable event-triggered load frequency control of uncertain multiarea power systems with actuator failures. IEEE Trans. Autom. Sci. Eng. 2022, 20, 2516–2526.

29. Zhang, M.; Dong, S.; Shi, P.; Chen, G.; Guan, X. Distributed observer-based event-triggered load frequency control of multiarea power systems under cyber attacks. IEEE Trans. Autom. Sci. Eng. 2022, 20, 2435–2444.

30. Chen, X.; Zhang, M.; Wu, Z.; Wu, L.; Guan, X. Model-free load frequency control of nonlinear power systems based on deep reinforcement learning. IEEE Trans. Ind. Inform. 2024, 20, 6825–6833.

31. Bevrani, H. Robust Power System Frequency Control; Springer: Berlin/Heidelberg, Germany, 2014; Volume 4.

32. Wachi, A.; Sui, Y. Safe reinforcement learning in constrained Markov decision processes. In Proceedings of the International Conference on Machine Learning PMLR, Virtual, 13–18 July 2020; pp. 9797–9806.

33. Altman, E. Constrained Markov Decision Processes; Routledge: London, UK, 2021.

34. Liang, Q.; Que, F.; Modiano, E. Accelerated primal-dual policy optimization for safe reinforcement learning. arXiv 2018, arXiv:1802.06480.

35. Liu, G.-X.; Liu, Z.-W.; Wei, G.-X. Model-free load frequency control based on multi-agent deep reinforcement learning. In Proceedings of the 2021 IEEE International Conference on Unmanned Systems, Beijing, China, 15–17 October 2021; pp. 815–819.

36. Morsali, J.; Zare, K.; Hagh, M.T. Appropriate generation rate constraint (GRC) modeling method for reheat thermal units to obtain optimal load frequency controller (LFC). In Proceedings of the 5th Conference on Thermal Power Plants (CTPP), Tehran, Iran, 10–11 June 2014; pp. 29–34.

| Parameter | Value | Parameter | Value | Parameter | Value |
|---|---|---|---|---|---|
| (p.u.) | | (s) | 10 | H (p.u./Hz) | 14.22 |
| (p.u.) | | (s) | 0.10 | D (p.u./Hz) | 0 |
| (p.u.) | | (p.u./s) | 0.0017 [36] | R (Hz/p.u.) | 0.05 |
| (p.u.) | | (s) | 1 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, S.; Li, Y.; Chen, X.; Liang, Z.; Liu, E.; Liu, K.; Zhang, M. Load Frequency Control of Power Systems with an Energy Storage System Based on Safety Reinforcement Learning. Processes 2025, 13, 1897. https://doi.org/10.3390/pr13061897

Gao S, Li Y, Chen X, Liang Z, Liu E, Liu K, Zhang M. Load Frequency Control of Power Systems with an Energy Storage System Based on Safety Reinforcement Learning. Processes. 2025; 13(6):1897. https://doi.org/10.3390/pr13061897

Chicago/Turabian Style: Gao, Song, Yudun Li, Xiaodi Chen, Zhengtang Liang, Enren Liu, Kang Liu, and Meng Zhang. 2025. "Load Frequency Control of Power Systems with an Energy Storage System Based on Safety Reinforcement Learning" Processes 13, no. 6: 1897. https://doi.org/10.3390/pr13061897

APA Style: Gao, S., Li, Y., Chen, X., Liang, Z., Liu, E., Liu, K., & Zhang, M. (2025). Load Frequency Control of Power Systems with an Energy Storage System Based on Safety Reinforcement Learning. Processes, 13(6), 1897. https://doi.org/10.3390/pr13061897