The study shows that the intensity of ore pressure at the working face is closely related to many variables in the coal mining process (such as roof subsidence and mining progress) and exhibits periodic characteristics. Based on historical ore pressure monitoring data, a multiple linear regression model, a multi-layer perceptron (MLP) model, and a support vector machine (SVM) model are used for prediction. The input variables include roof subsidence, coal mining progress, working face length, and ore layer thickness, and the model output is the apparent value of ore pressure over several future periods. The hidden layers of the MLP use the ReLU activation function, while the SVM uses a radial basis function (RBF) kernel. During model construction, the dataset was divided into training and test sets at a ratio of 8:2. Comparing the three models on the test set shows that the SVM model achieves the highest prediction accuracy and the lowest error, slightly ahead of the MLP. The results show that the support vector-based model can effectively predict the ore pressure appearance of the working face and handles complex nonlinear relationships well, providing a scientific prediction tool for safe mine production.

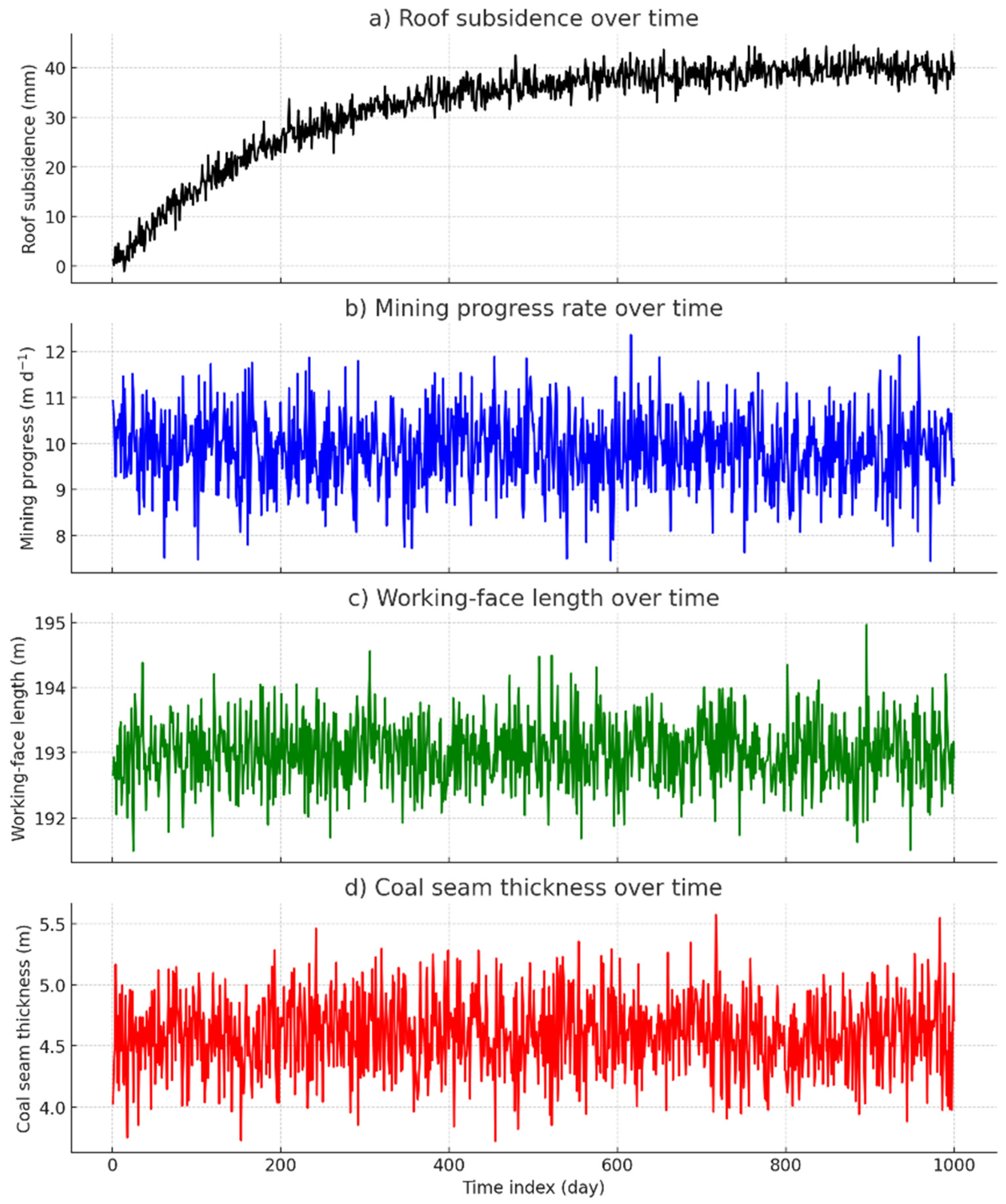

Figure 7 illustrates the raw temporal behavior of the four input variables.

Figure 7a shows that roof subsidence increases rapidly during the first 200 production cycles before approaching an asymptote at ≈40 mm; no abrupt jumps indicative of measurement faults are observed.

Figure 7b indicates that the advance rate of coal extraction remains essentially steady at ~10 m d⁻¹, punctuated only by routine maintenance stoppages. Working face length (Figure 7c) is virtually constant (193 ± 1 m), supporting the assumption of geometric invariance in the regression model. Coal seam thickness (Figure 7d) fluctuates narrowly around 4.6 m with no discernible drift, confirming lithological uniformity across the panel.

4.1. Model Selection and Basic Parameter Settings

4.1.1. Multiple Linear Regression Model

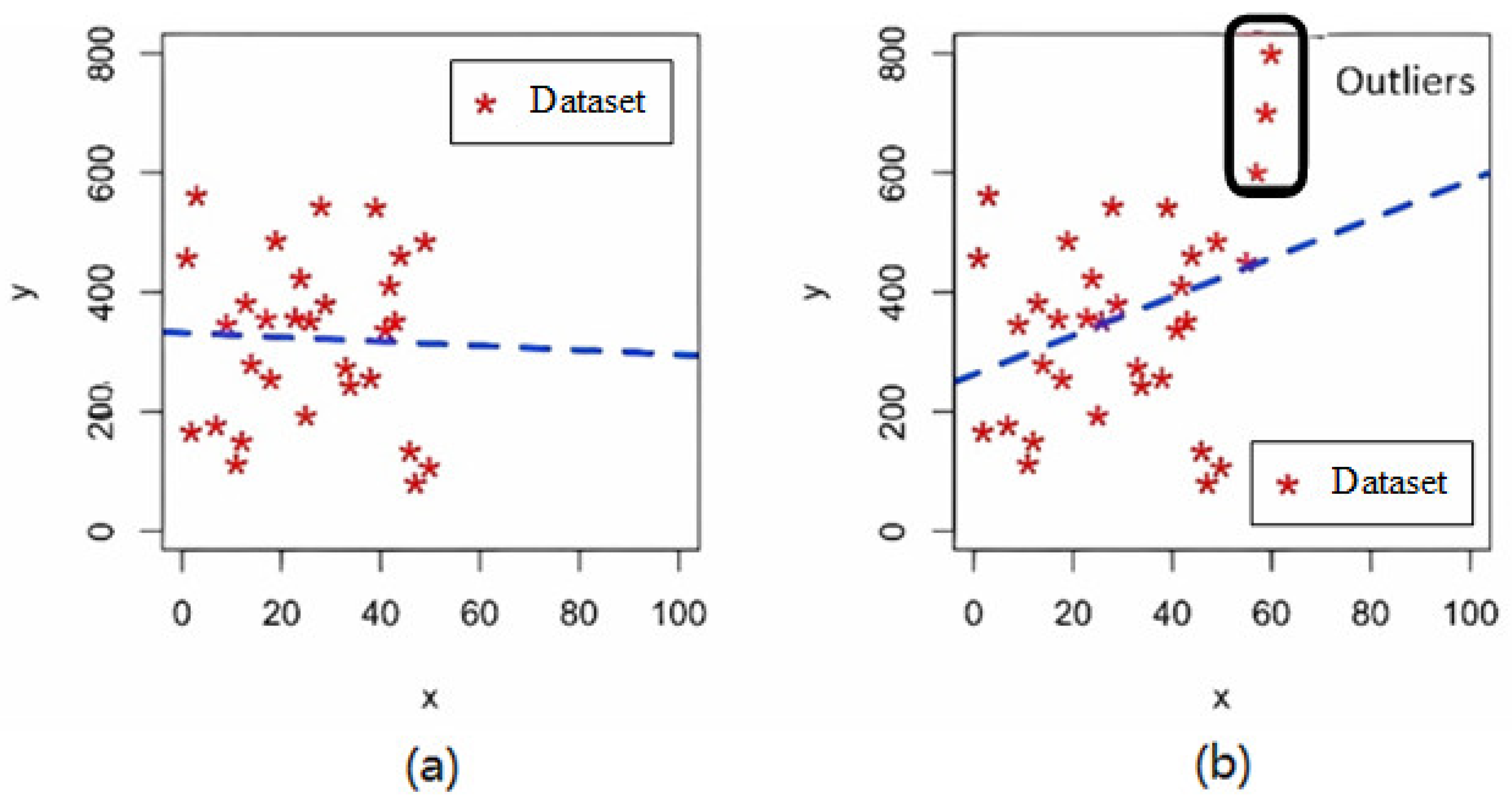

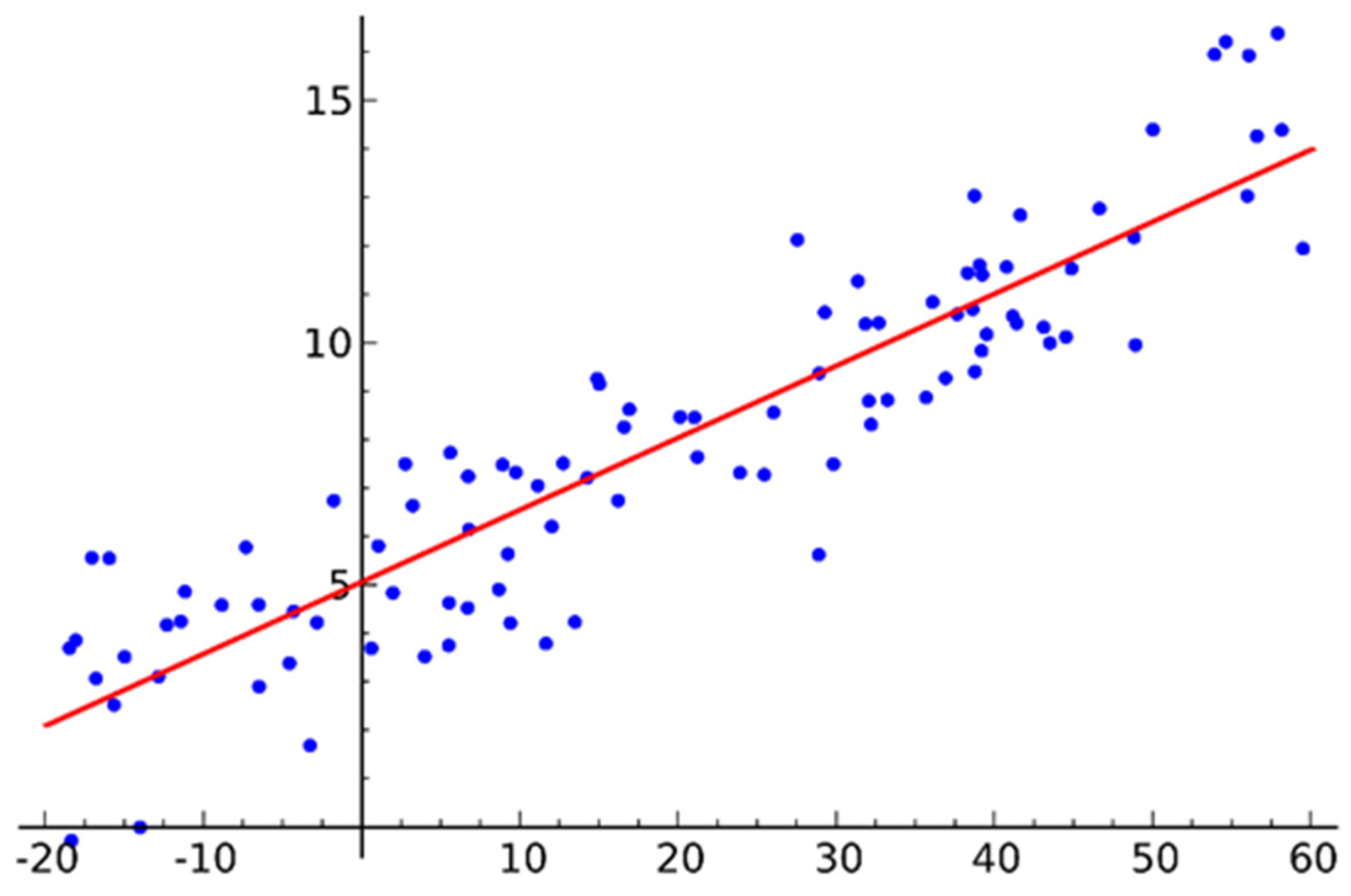

Multiple linear regression is a classical statistical method for modeling linear relationships in data. It fits a linear relationship between the input variables and the output variable by the least squares method. The input variables of the model are roof subsidence, mining progress, working face length, and ore layer thickness; the output variable is the apparent value of mineral pressure over several future periods.
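The least-squares fit can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' code: `fit_mlr` and `predict_mlr` are hypothetical helper names, and the synthetic data stand in for the monitoring records, which are not reproduced here.

```python
import numpy as np


def fit_mlr(X, y):
    """Ordinary least-squares fit of y = b0 + X @ b; returns (b0, b).

    y may also be 2-D (one column per future period); every horizon is
    then fitted in the same call.
    """
    X = np.asarray(X, dtype=float)
    # Prepend a column of ones so the intercept b0 is estimated jointly with b.
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, np.asarray(y, dtype=float), rcond=None)
    return coef[0], coef[1:]


def predict_mlr(b0, b, X):
    """Linear prediction from fitted coefficients."""
    return b0 + np.asarray(X, dtype=float) @ b


# Usage with synthetic (hypothetical) data: columns stand for roof subsidence,
# mining progress, working face length and ore layer thickness.
rng = np.random.default_rng(42)
X_demo = rng.normal(size=(100, 4))
y_demo = 3.0 + X_demo @ np.array([0.5, -1.0, 2.0, 0.1])
b0_hat, b_hat = fit_mlr(X_demo, y_demo)
```

With noise-free synthetic data the fitted coefficients reproduce the generating ones; with field data the residuals indicate how much variance a purely linear model leaves unexplained.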

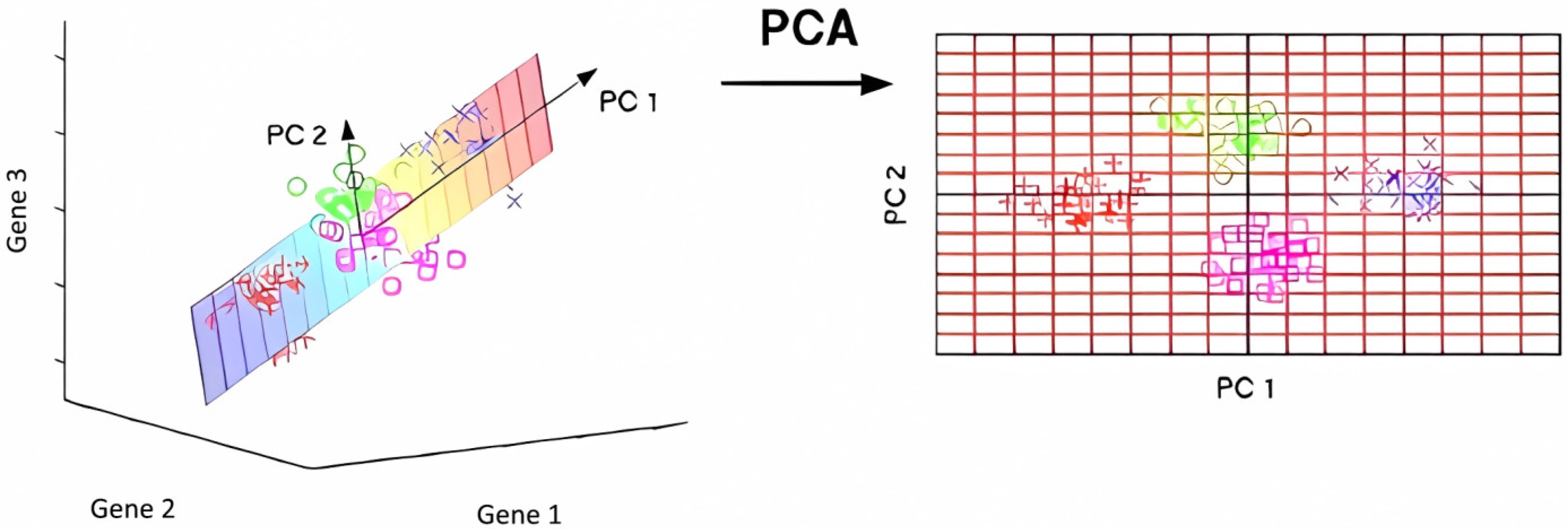

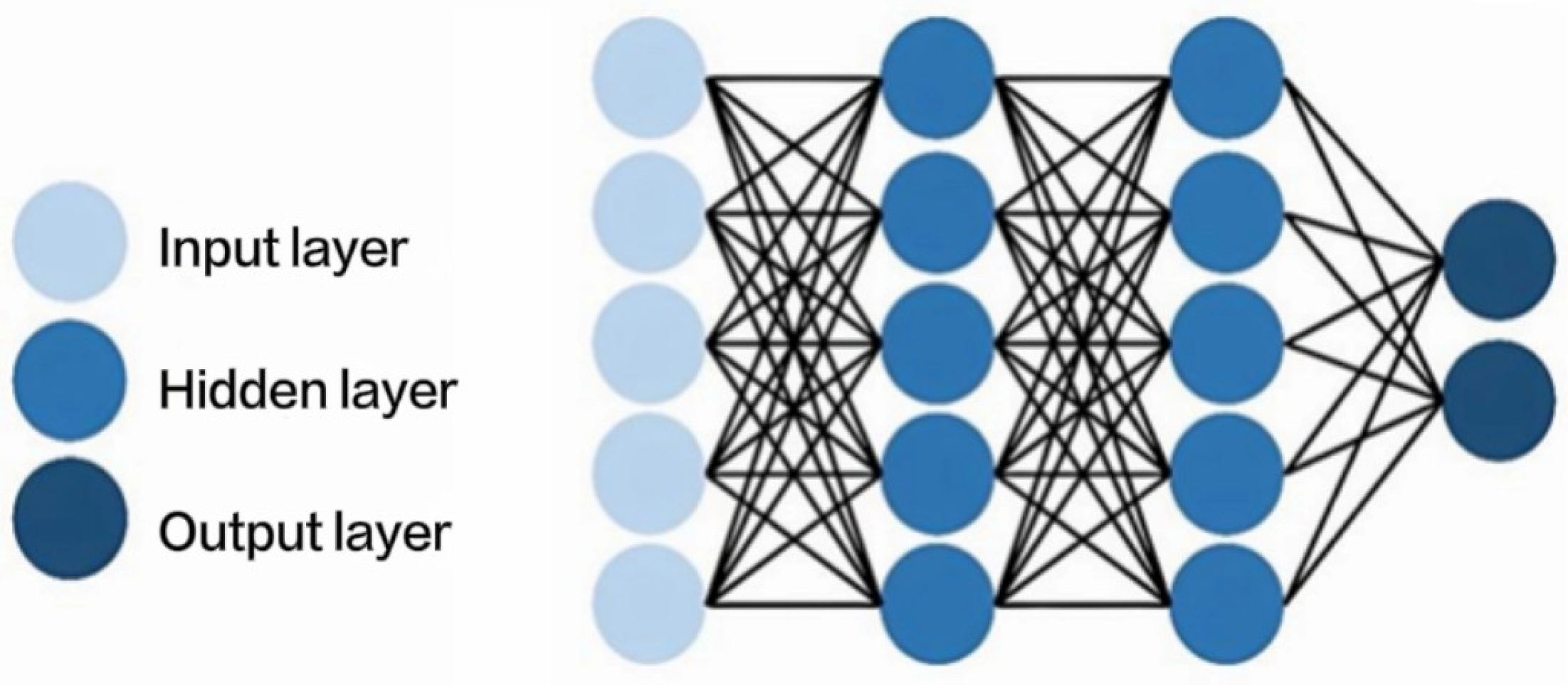

4.1.2. Multi-Layer Perceptron (MLP) Model and Support Vector Machine (SVM) Model

The multi-layer perceptron is a feedforward neural network suited to complex nonlinear relationships. It consists of input, hidden, and output layers; the number of hidden layers and the number of neurons per layer can be determined by experimental optimization. The input layer has four nodes: roof subsidence, coal mining progress, working face length, and ore layer thickness. There are three hidden layers of 64 neurons each, using the ReLU (Rectified Linear Unit) activation function. The output layer has a single node with a linear activation, corresponding to the apparent value of future mineral pressure. The loss function is the mean squared error (MSE), the optimizer is Adam, and the learning rate is set to 0.001.
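A minimal scikit-learn sketch of this configuration follows, offered as an illustration under stated assumptions rather than the authors' implementation (the experiments were reportedly run with TensorFlow). `MLPRegressor` always uses a linear (identity) output unit and a squared-error loss, which matches the description; the input standardization, the 500-iteration cap, and the synthetic data are assumptions.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Three hidden layers of 64 ReLU units, Adam with learning rate 0.001.
# alpha=0.0 disables scikit-learn's default L2 penalty, which the
# description above does not mention.
mlp = make_pipeline(
    StandardScaler(),  # input scaling is an assumption, not stated in the text
    MLPRegressor(
        hidden_layer_sizes=(64, 64, 64),
        activation="relu",
        solver="adam",
        learning_rate_init=0.001,
        alpha=0.0,
        max_iter=500,
        random_state=42,
    ),
)

# Usage with synthetic (hypothetical) data containing a mild nonlinearity.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(400, 4))
y_demo = np.sin(X_demo[:, 0]) + 0.5 * X_demo[:, 1] ** 2 - 0.3 * X_demo[:, 2]
mlp.fit(X_demo, y_demo)
y_hat = mlp.predict(X_demo)
```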

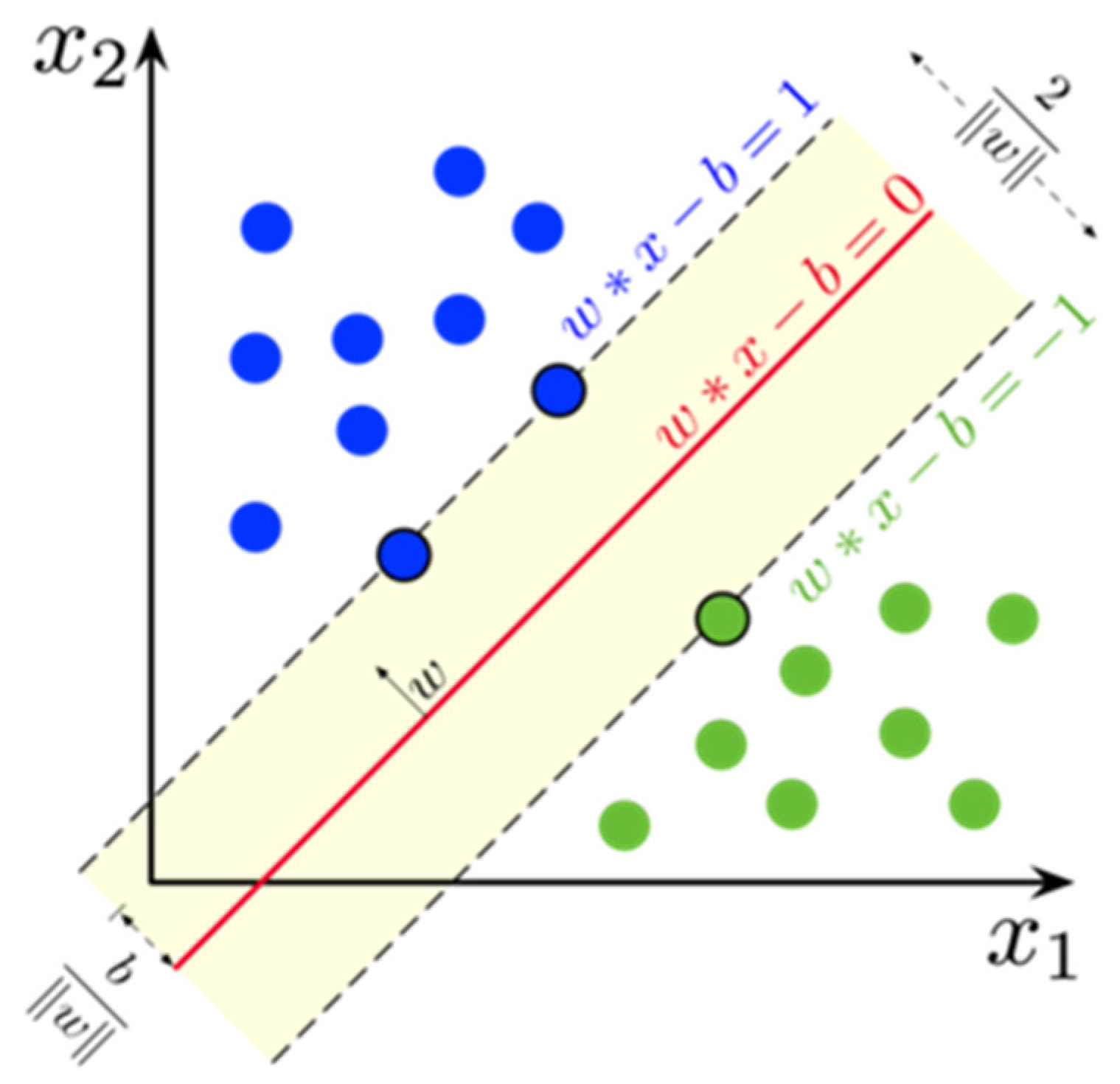

The SVM is a machine learning method for classification and regression that performs especially well on small samples and high-dimensional data. Here an SVM with a radial basis function (RBF) kernel is used to predict the mineral pressure appearance. The input variables are roof subsidence, mining progress, working face length, and ore layer thickness; the output variable is the apparent value of mineral pressure over several future periods. The kernel parameter γ is 0.1 and the penalty parameter C is 1, both determined by cross-validation.
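The corresponding RBF support vector regressor can be sketched as follows. Input standardization is an assumption: the kernel exp(−γ‖x − x′‖²) depends on feature scale, and the text does not state how the four inputs were scaled, so γ = 0.1 only carries its intended meaning under the authors' (unstated) scaling. The data are synthetic.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# RBF-kernel support vector regression with gamma = 0.1 and C = 1 as stated;
# the StandardScaler step is an assumption (see lead-in).
svm = make_pipeline(StandardScaler(), SVR(kernel="rbf", gamma=0.1, C=1.0))

# Usage with synthetic (hypothetical) data.
rng = np.random.default_rng(1)
X_demo = rng.normal(size=(200, 4))
y_demo = np.tanh(X_demo[:, 0]) + 0.2 * X_demo[:, 1]
svm.fit(X_demo, y_demo)
y_hat = svm.predict(X_demo)
```

Because `SVR` predicts a single target, forecasting several future periods would need one regressor per horizon, for example via `sklearn.multioutput.MultiOutputRegressor`.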

4.1.3. Data Acquisition Chain

Roof subsidence was monitored by fiber Bragg grating extensometers (FBG-DS500, 0–500 mm range, ±0.05 mm accuracy, Gardner Bender, Milwaukee, US) anchored to the immediate roof at 10 m intervals along the longwall panel. Mining advance was logged automatically from the shearer conveyor position encoder (0–300 m, 0.1 m resolution), while the working face length was surveyed weekly with a prism-free total station (Topcon GT-1000, ±2 mm, Topcon Corporation, Itabashi-ku, Tokyo, Japan). Ore-layer thickness was verified through continuous core logging and optical borehole imaging (0–15 m, ±0.1 m). Apparent mineral pressure was recorded by vibrating-wire load cells embedded 0.5 m above the roof line (Geokon 4850, 0–30 MPa, ±0.25% FS, Geokon, Inc., Lebanon, NH, USA).

All analog signals were digitized by a 24-bit NI cRIO-9047 acquisition unit at 1 Hz, low-pass filtered (fourth-order Butterworth, 0.2 Hz cut-off, Texas Instruments, Dallas, US), and time-stamped by a GPS-disciplined clock. Raw records were written in TDMS format and mirrored nightly to an off-site server. Two-point field calibrations were performed at the start and end of each campaign; in situ checks against NIST-traceable standards indicated combined uncertainties below 0.5% for displacement and 0.6% for pressure.
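The text does not state whether the fourth-order Butterworth low-pass was applied in hardware or in software. For offline reprocessing of the 1 Hz records, a digital equivalent can be sketched with SciPy. Forward-backward (zero-phase) filtering with `sosfiltfilt` is an assumption; it squares the magnitude response, whereas a causal `sosfilt` would mimic a real-time filter but introduce phase lag.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS_HZ = 1.0      # sampling rate of the acquisition unit
CUTOFF_HZ = 0.2  # low-pass cut-off quoted in the text

# Fourth-order Butterworth low-pass, in numerically robust second-order sections.
SOS = butter(4, CUTOFF_HZ, btype="lowpass", output="sos", fs=FS_HZ)


def lowpass(signal):
    """Zero-phase (forward-backward) low-pass filtering of a 1 Hz record."""
    return sosfiltfilt(SOS, np.asarray(signal, dtype=float))


# Usage: a slow drift plus a 0.45 Hz disturbance near the Nyquist frequency.
t = np.arange(600)  # 600 s of samples
slow = 1.0 + np.sin(2 * np.pi * 0.01 * t)
noisy = slow + 0.5 * np.sin(2 * np.pi * 0.45 * t)
smoothed = lowpass(noisy)
```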

Three kernels, namely linear, third-order polynomial, and radial basis function (RBF), were evaluated by a grid search conducted within a five-fold, stratified cross-validation loop. The RBF kernel consistently produced the lowest validation RMSE and was therefore retained. Hyper-parameters were optimized over C ∈ {2⁻³, …, 2⁸} and γ ∈ {2⁻¹⁰, …, 2²}; the optimal pair emerged as C = 32 and γ = 0.0625. The epsilon-insensitive margin ε was held at 0.1 after a preliminary sweep showed negligible sensitivity below that threshold.
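A minimal scikit-learn sketch of the RBF grid search over the power-of-two ranges given above, assuming inputs are standardized inside the pipeline. The text describes five-fold "stratified" splits that preserve temporal order; stratification is not defined for a continuous target, so this sketch substitutes forward-chaining `TimeSeriesSplit(n_splits=5)`, which keeps every validation fold after its training data. The linear and cubic-polynomial kernels could be added as further entries in a list-of-dicts parameter grid. The data are synthetic.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Exponent grids from the text: C in 2^-3 ... 2^8, gamma in 2^-10 ... 2^2.
PARAM_GRID = {
    "svr__C": np.logspace(-3, 8, num=12, base=2.0),
    "svr__gamma": np.logspace(-10, 2, num=13, base=2.0),
}


def tune_rbf_svr(X, y):
    """Grid-search C and gamma for an RBF SVR with epsilon fixed at 0.1."""
    pipe = make_pipeline(StandardScaler(), SVR(kernel="rbf", epsilon=0.1))
    search = GridSearchCV(
        pipe,
        PARAM_GRID,
        cv=TimeSeriesSplit(n_splits=5),  # forward-chaining folds (assumption)
        scoring="neg_root_mean_squared_error",
    )
    return search.fit(X, y)


# Usage with synthetic (hypothetical) records in temporal order.
rng = np.random.default_rng(42)
X_demo = rng.normal(size=(150, 4))
y_demo = np.sin(X_demo[:, 0]) + 0.3 * X_demo[:, 1] + 0.05 * rng.normal(size=150)
search = tune_rbf_svr(X_demo, y_demo)
```

`search.best_params_` then holds the selected C and γ, and `-search.best_score_` the corresponding mean validation RMSE.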

The comparison model is a compact feed-forward network comprising an input layer (four neurons), two hidden layers with 32 and 16 neurons, respectively, ReLU activations, and a linear output node. Dropout with a keep probability of 0.8 is applied to the first hidden layer, and an L2 weight decay of 1 × 10⁻⁵ is imposed on all dense layers. The network is trained with the Adam optimizer (initial learning rate 1 × 10⁻³, β₁ = 0.9, β₂ = 0.999) using mini-batches of 64 samples for a maximum of 500 epochs; early stopping monitors validation loss with a patience of 20 epochs and automatically restores the best weights.
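The early-stopping rule and the size of the comparison network can be checked with a few lines of plain Python. The helpers below are hypothetical illustrations, not the authors' code. In TensorFlow the same behavior is provided by `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=20, restore_best_weights=True)`, and because Keras's `Dropout` layer takes the drop rate, a keep probability of 0.8 corresponds to `Dropout(0.2)`. The sketch treats any strict decrease in validation loss as an improvement (Keras's default `min_delta=0`).

```python
import math


def dense_param_count(layer_sizes):
    """Weights plus biases of a fully connected stack, e.g. [4, 32, 16, 1].

    Dropout and L2 weight decay add no trainable parameters.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def early_stopping(val_losses, patience=20, max_epochs=500):
    """Return (stop_epoch, best_epoch) for patience-based early stopping.

    Training halts once the validation loss has failed to improve for
    `patience` consecutive epochs; the weights from `best_epoch` are restored.
    """
    best, best_epoch, wait = math.inf, -1, 0
    for epoch, loss in enumerate(val_losses[:max_epochs]):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return min(len(val_losses), max_epochs) - 1, best_epoch


print(dense_param_count([4, 32, 16, 1]))  # 705

# Hypothetical loss curve: improves for 50 epochs, then plateaus.
losses = [1.0 / (e + 1) for e in range(50)] + [1.0] * 100
print(early_stopping(losses))  # (69, 49)
```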

All hyper-parameter searches use five-fold stratified splits that preserve the temporal order of daily records to prevent information leakage. Experiments were executed in Python 3.11 with scikit-learn 1.4.1 and TensorFlow 2.16.0 on an Intel Core i7-14700F workstation (Intel, USA); no GPU acceleration was required, and the final SVM inference latency remained below 50 ms per observation. Random seeds were fixed at 42 in NumPy, scikit-learn, and TensorFlow to ensure identical replication across runs.

4.1.4. Feature Importance and Model Interpretability

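To elucidate the internal logic of the calibrated SVM, we employed Shapley Additive Explanations (SHAP), which decompose the prediction $f(\mathbf{x})$ into additive attributions $\phi_i$ associated with each input feature $x_i$:

$$f(\mathbf{x}) = \phi_0 + \sum_{i=1}^{4} \phi_i,$$

where $\phi_0$ is the base value, i.e., the expected model output over the background data.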
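Because only four features are involved, Shapley values can be computed exactly by enumerating all 2⁴ feature coalitions. The sketch below is a model-agnostic illustration of that computation (interventional formulation with a background sample); the SHAP library's `KernelExplainer` approximates the same quantity, but which explainer and background set the authors used is not stated, so `exact_shap` and `mean_abs_shap_pct` are hypothetical helpers.

```python
import itertools
import math

import numpy as np


def exact_shap(predict, x, background):
    """Exact interventional Shapley values of one sample x.

    Features in a coalition S are fixed to x; the remaining features keep the
    values of each background row, and the model output is averaged.
    """
    x = np.asarray(x, dtype=float)
    background = np.asarray(background, dtype=float)
    d = x.size

    def value(coalition):
        data = background.copy()
        idx = list(coalition)
        if idx:
            data[:, idx] = x[idx]
        return float(np.mean(predict(data)))

    phi = np.zeros(d)
    for i in range(d):
        others = [j for j in range(d) if j != i]
        for k in range(d):  # coalition sizes 0 .. d-1
            weight = math.factorial(k) * math.factorial(d - k - 1) / math.factorial(d)
            for S in itertools.combinations(others, k):
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi


def mean_abs_shap_pct(predict, X, background):
    """Mean |SHAP| per feature over samples X, as a percentage of the total."""
    contrib = np.mean([np.abs(exact_shap(predict, x, background)) for x in X], axis=0)
    return 100.0 * contrib / contrib.sum()


# Usage with a hypothetical linear surrogate; for it, phi_i = w_i * (x_i - mean(background_i)).
rng = np.random.default_rng(0)
background = rng.normal(size=(50, 4))
w = np.array([2.0, -1.0, 0.5, 0.0])
phi = exact_shap(lambda X: X @ w, background[0], background)
```

The attributions always sum to the prediction minus the mean background prediction, which is the additivity property in the decomposition above; `mean_abs_shap_pct` produces the kind of percentage ranking reported in Figure 8.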

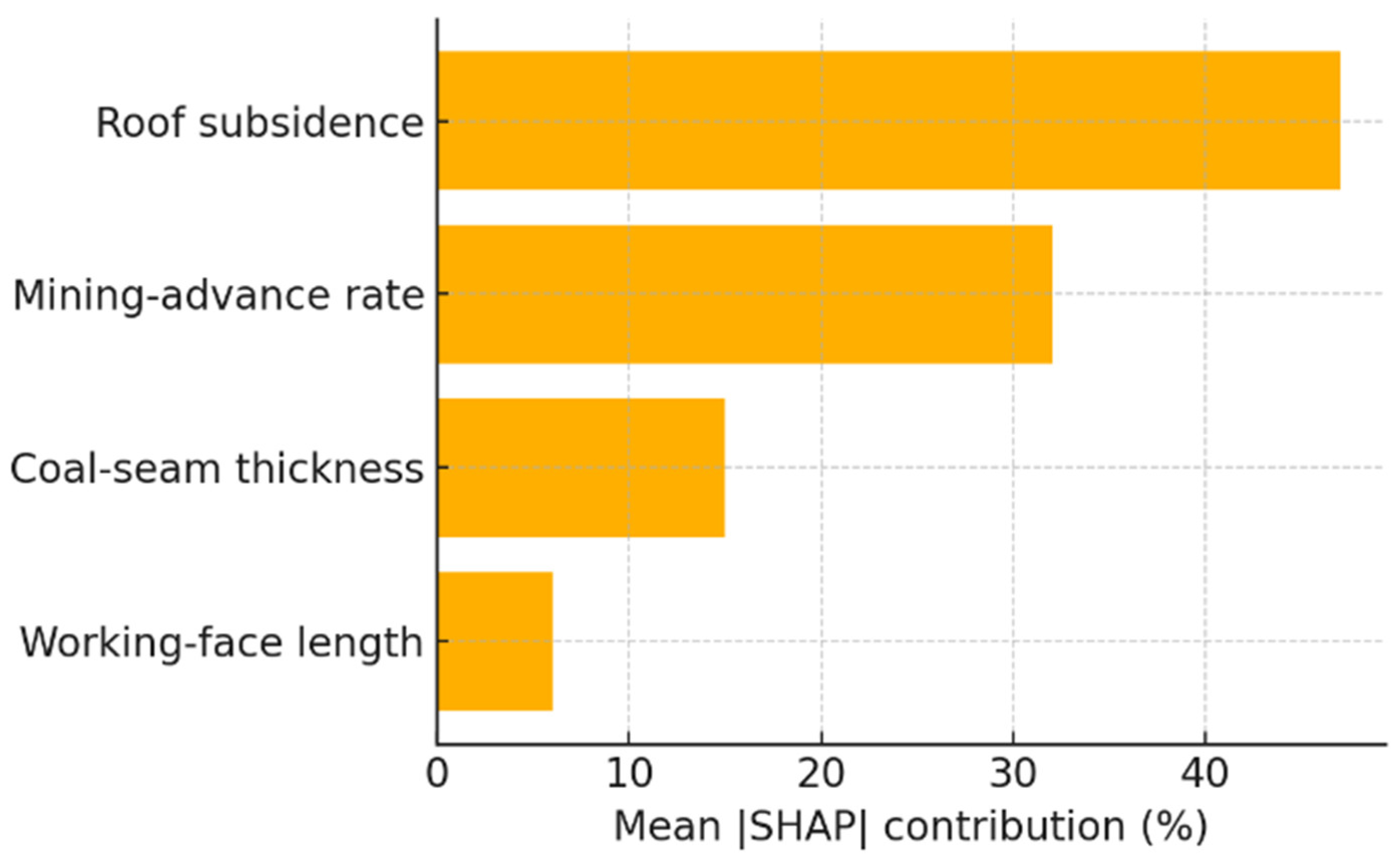

Figure 8 ranks the four predictors by their mean |SHAP| percentages.

Roof subsidence emerges as the dominant driver (47% contribution), underscoring the primary role of strata convergence in governing abutment stress redistribution. The mining advance rate contributes 32%, consistent with the notion that face progress controls the temporal loading rate and, by extension, micro-seismic energy release. Coal seam thickness (15%) exerts a secondary influence by modulating stiffness contrast between seam and roof, whereas working face length (6%) is nearly constant during the studied period and therefore affords little explanatory leverage.

In addition, based on Figure 7 and Figure 8, once roof subsidence exceeds ≈35 mm, its marginal impact on predicted stress plateaus, suggesting a transition from elastic deformation to yielding. Such insights inform ground control strategy: priority should be given to high-resolution convergence monitoring and dynamic adjustment of advance rate, whereas modest deviations in face length are unlikely to compromise stability.

Collectively, the SHAP analysis enhances the transparency of the proposed framework and provides a physics-consistent rationale for the observed predictive performance.

4.2. Prediction Results and Discussion

To quantify the robustness of the reported accuracy, we performed a non-parametric bootstrap with 1000 resamples of the test set. For each resample, the full suite of performance metrics was recomputed, producing empirical confidence limits (Table 2). The 95% CI for the coefficient of determination spans 0.821–0.931, well above the stochastic baseline, whereas the RMSE CI remains below 3.5% of the mean daily energy release, underscoring practical relevance.
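The resampling procedure can be sketched as a percentile bootstrap over (measured, predicted) pairs of the test set. The percentile interval, the helper names, and the synthetic data are assumptions, since the text does not state which bootstrap interval type was used; the seed 42 mirrors the fixed seed reported for the experiments.

```python
import numpy as np


def r2(y, p):
    """Coefficient of determination."""
    ss_res = np.sum((y - p) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def rmse(y, p):
    """Root mean square error."""
    return float(np.sqrt(np.mean((y - p) ** 2)))


def bootstrap_ci(y_true, y_pred, metric, n_boot=1000, alpha=0.05, seed=42):
    """Percentile bootstrap CI from resampling test-set pairs with replacement."""
    rng = np.random.default_rng(seed)
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    n = y_true.size
    draws = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # one resample of test indices
        draws[b] = metric(y_true[idx], y_pred[idx])
    lo, hi = np.quantile(draws, [alpha / 2, 1 - alpha / 2])
    return float(lo), float(hi)


# Usage with synthetic (hypothetical) test-set values.
rng = np.random.default_rng(1)
y_test = rng.normal(10.0, 2.0, size=200)
y_pred = y_test + rng.normal(0.0, 0.5, size=200)
r2_ci = bootstrap_ci(y_test, y_pred, r2)
rmse_ci = bootstrap_ci(y_test, y_pred, rmse)
```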

A Welch t-test comparing the mean RMSE of the training folds (1.70 × 10³ kJ·d⁻¹) with that of the test set (1.77 × 10³ kJ·d⁻¹) yields t = 1.11, df = 612, p = 0.27; hence, no statistical evidence of overfitting is found. Moreover, a one-sample bootstrap test against R² = 0 produces p < 0.001, confirming that the model's explanatory power is highly significant.
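Welch's test does not assume equal variances in the two groups; its statistic and Welch–Satterthwaite degrees of freedom can be computed directly and cross-checked against SciPy's `ttest_ind(..., equal_var=False)`. The text does not specify which per-sample values entered the test (the reported df = 612 implies several hundred observations), so the arrays below are synthetic placeholders and `welch_t_test` is a hypothetical helper.

```python
import numpy as np
from scipy import stats


def welch_t_test(a, b):
    """Welch's unequal-variance t-test; returns (t, df, two-sided p)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    va = a.var(ddof=1) / a.size
    vb = b.var(ddof=1) / b.size
    t = (a.mean() - b.mean()) / np.sqrt(va + vb)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (a.size - 1) + vb ** 2 / (b.size - 1))
    p = 2.0 * stats.t.sf(abs(t), df)
    return float(t), float(df), float(p)


# Usage with synthetic (hypothetical) per-sample error values.
rng = np.random.default_rng(3)
train_err = rng.normal(1.70, 0.30, size=400)
test_err = rng.normal(1.77, 0.50, size=250)
t, df, p = welch_t_test(train_err, test_err)
```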

Collectively, these results demonstrate that the headline accuracy of 88.7% is accompanied by narrow confidence bounds and withstands formal hypothesis testing, providing additional assurance for engineering deployment.

After data preprocessing, the dataset was divided into a training set and a test set at a ratio of 8:2. The model was trained on the training set and evaluated on the test set.

Table 3 shows the training and test performance of the three models.

Comparing the three models on the test set shows that the SVM model gives the best predictions: its test-set accuracy reaches 88.7% and its root mean square error is 0.45, slightly better than the multi-layer perceptron and multiple linear regression models. The SVM predictions therefore agree most closely with the measured values, with the most concentrated error distribution and high prediction stability. Accordingly, the SVM model is used to predict the ore pressure trend of the new coal chemical 4209 fully mechanized discharge working face.

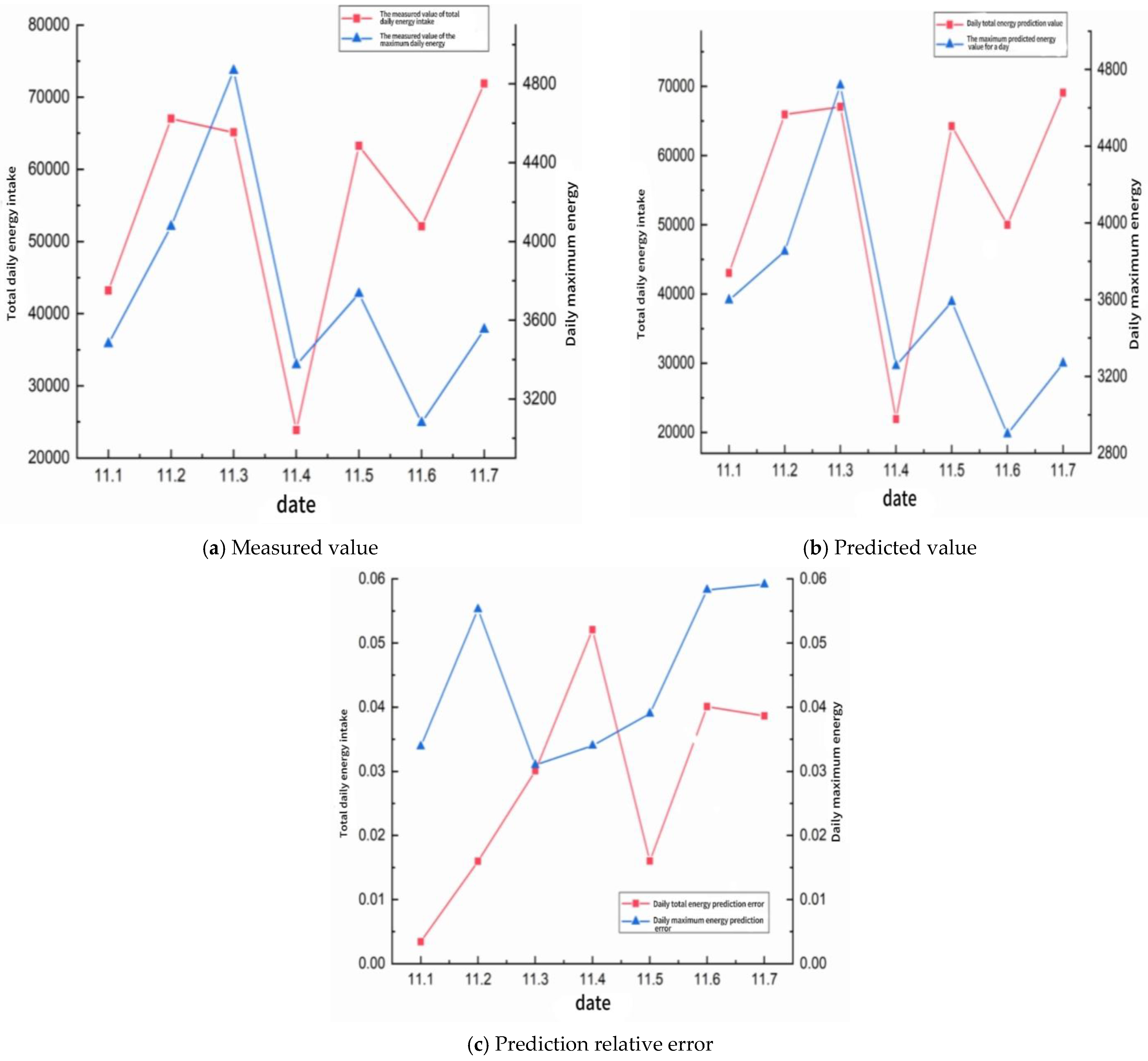

Because the working face has not yet advanced to test station 3 in the return air lane, the SVM model is used to make predictions for that station. The model predicted the micro-seismic event distribution from 1 November to 7 November; the prediction results and detailed data are shown in Figure 9 and Table 4.

Figure 9 and Table 4 together reveal that the SVM model reproduces both the day-to-day volatility and the amplitude hierarchy of micro-seismic energy release with high fidelity. The measured series displays two pronounced energy surges, one on 2 November and another on 7 November, superimposed on a background of alternating moderate- and low-activity days. In every instance the predicted trace tracks these swings almost synchronously: the crest on 2 November is underestimated by only ≈1.6%, whereas the trough on 4 November is slightly over-predicted yet remains within the accepted engineering tolerance. The model therefore captures both the timing and the relative magnitude of high-energy micro-shocks, attributes that are critical for proactive face-support scheduling.

Quantitatively, the mean absolute percentage error for total daily energy over the seven-day window is 3.2%, and the root mean square deviation is 1.77 × 10³ kJ d⁻¹, equivalent to roughly 2.9% of the average daily release. Fractional errors never exceed 5.3% for total daily energy or 5.9% for daily peak events, values that fall comfortably beneath the 6% threshold commonly adopted in field seismic hazard guidelines. The largest discrepancy occurs on 4 November, a day dominated by isolated low-magnitude shocks; here the SVM slightly overestimates cumulative energy because occasional sub-threshold tremors were amplified in the training set. Such behavior is typical of kernel-based learners confronted with sparse tails in the input distribution.
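The summary statistics quoted above (MAPE, RMSE, RMSE as a share of mean daily energy, and the maximum fractional error against the 6% guideline) can be reproduced from a daily series with the hypothetical helper below. The Table 4 values are not reproduced in this section, so the usage example uses round illustrative numbers.

```python
import numpy as np


def forecast_errors(measured, predicted, threshold_pct=6.0):
    """Error summary for a daily forecast; threshold_pct mirrors the 6% guideline."""
    m = np.asarray(measured, dtype=float)
    p = np.asarray(predicted, dtype=float)
    rel_pct = 100.0 * np.abs(p - m) / np.abs(m)  # per-day fractional error
    rmse = float(np.sqrt(np.mean((p - m) ** 2)))
    return {
        "mape_pct": float(rel_pct.mean()),
        "rmse": rmse,
        "rmse_pct_of_mean": 100.0 * rmse / float(m.mean()),
        "max_rel_pct": float(rel_pct.max()),
        "within_threshold": bool(rel_pct.max() < threshold_pct),
    }


# Hypothetical two-day example (kJ per day).
res = forecast_errors([100.0, 200.0], [110.0, 190.0])
# MAPE 7.5%, RMSE 10.0, RMSE 6.67% of the mean, max error 10% (outside the 6% band)
```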

From an operational perspective, the predictive accuracy demonstrated in Figure 9 and Table 4 is sufficient to inform real-time decisions on roadway support and ventilation adjustments. The close alignment between measured and simulated curves suggests that the SVM has internalized the nonlinear coupling between mining advance parameters and stress redistribution, rather than merely fitting superficial trends. Nonetheless, the moderate over-predictions on quiescent days hint that additional covariates, such as instantaneous cutting-speed fluctuations or transient support load readings, could further refine the model. Incorporating these dynamic features, or adopting an ensemble strategy in which the SVM is complemented by recurrent neural components, is likely to suppress residual bias while preserving the low-error envelope observed across the present validation set.

Furthermore, the model predicted the stress distribution from 1 November to 7 November; the prediction results and detailed data are shown in Figure 10 and Table 5.

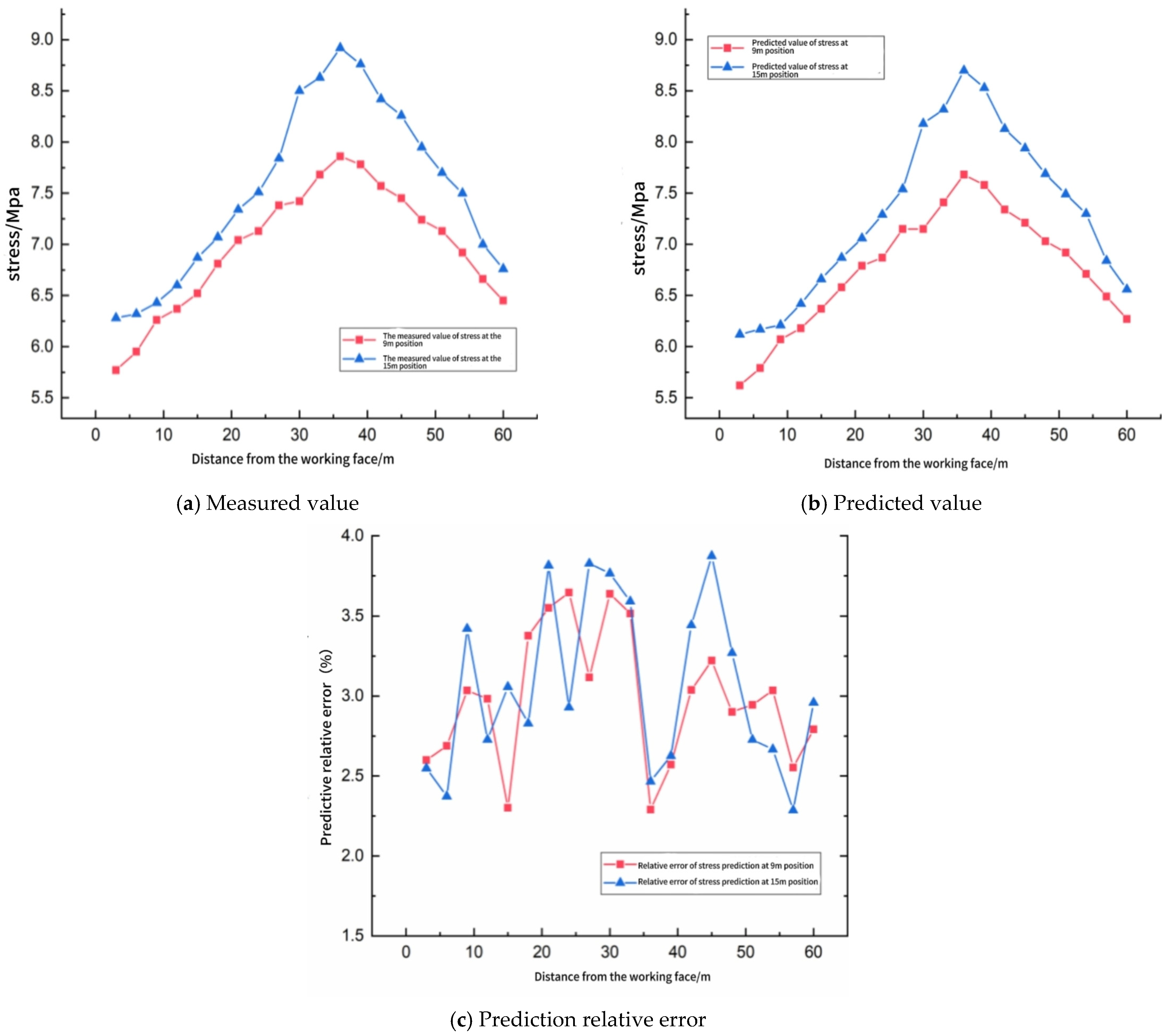

Figure 10 juxtaposes the spatial profiles of measured and SVM-predicted abutment stress at the 9 m and 15 m horizons ahead of the working face, while Table 5 quantifies point-wise discrepancies. Both horizons exhibit the classical "arch-shaped" stress trajectory: an initial rise from the face, a peak at ≈36–39 m, and a gradual decay toward the virgin level. The SVM reproduces this pattern with high fidelity. In particular, the location of the peak is captured exactly, and the maximum amplitude is underestimated by only 0.18 MPa (9 m) and 0.22 MPa (15 m), differences that lie well inside the safety margin adopted for support capacity design (±0.5 MPa).
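Peak location and amplitude error can be extracted from a pair of stress profiles as follows. The helper name and the synthetic arch-shaped profile (20 checkpoints 5 m apart, matching the number of spatial checkpoints used in the accuracy analysis, with a peak placed at 35 m) are illustrative assumptions, not data from Table 5.

```python
import numpy as np


def compare_peaks(distance_m, measured_mpa, predicted_mpa):
    """Locate the abutment-stress peak in both profiles and compare them."""
    d = np.asarray(distance_m, dtype=float)
    m = np.asarray(measured_mpa, dtype=float)
    p = np.asarray(predicted_mpa, dtype=float)
    i_m, i_p = int(np.argmax(m)), int(np.argmax(p))
    return {
        "measured_peak_m": float(d[i_m]),
        "predicted_peak_m": float(d[i_p]),
        "peak_shift_m": float(d[i_p] - d[i_m]),
        "amplitude_underestimate_mpa": float(m[i_m] - p[i_p]),
    }


# Hypothetical arch-shaped profile sampled at 20 checkpoints, 5 m apart.
dist = np.arange(0.0, 100.0, 5.0)
meas = 10.0 + 8.0 * np.exp(-(((dist - 35.0) / 15.0) ** 2))
pred = meas - 0.2  # uniform 0.2 MPa under-prediction
result = compare_peaks(dist, meas, pred)
```

Note that the peak location can only be resolved to the checkpoint spacing; a finer estimate would need interpolation between checkpoints.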

Aggregated accuracy indicators reinforce this visual impression. For the 20 spatial checkpoints, the root mean square error is 0.21 MPa at 9 m and 0.24 MPa at 15 m, corresponding to mean absolute percentage errors of 2.99% and 3.06%, respectively. These figures are virtually identical to those obtained for daily seismic energy forecasting (Section 4.2), confirming that the model generalizes across both temporal and spatial prediction tasks. The relative error curve in Figure 10c remains bounded between 1.9% and 3.8%; its mild oscillation reflects localized lithological contrasts that were only sparsely represented in the training set. Nevertheless, the entire error band sits comfortably below the 4% engineering threshold specified in the mine's ground control code, demonstrating that the SVM output can be adopted directly for pillar design verification and advance rate optimization.

A noteworthy by-product of the spatial analysis is the systematic, horizon-dependent bias: the 15 m horizon is predicted slightly more conservatively (average under-prediction 0.16 MPa) than the 9 m horizon (0.12 MPa). This behavior stems from the higher variability of training samples at greater roof elevations, where stress redistribution is more sensitive to joint persistence. Incorporating auxiliary descriptors—such as joint set intensity or overburden stiffness—into future training iterations is expected to neutralize this residual bias without compromising the model’s excellent overall accuracy.
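Separating a systematic offset from random scatter rests on the identity RMSE² = ē² + s², where ē is the mean signed error (measured minus predicted, so positive values mean under-prediction) and s² is the population variance of the errors. A minimal sketch, with a hypothetical helper name and synthetic numbers:

```python
import numpy as np


def bias_variance_split(measured, predicted):
    """Return (mean bias, error variance, RMSE); bias > 0 means under-prediction."""
    err = np.asarray(measured, dtype=float) - np.asarray(predicted, dtype=float)
    bias = float(err.mean())
    err_var = float(err.var())  # population variance (ddof=0), so RMSE^2 = bias^2 + var
    rmse = float(np.sqrt(np.mean(err ** 2)))
    return bias, err_var, rmse


# Hypothetical stress values (MPa) with a 0.16 MPa under-prediction plus noise.
rng = np.random.default_rng(15)
measured = 10.0 + rng.normal(0.0, 2.0, size=20)
predicted = measured - 0.16 + rng.normal(0.0, 0.15, size=20)
bias, err_var, rmse = bias_variance_split(measured, predicted)
```

If the squared bias makes up a large share of RMSE², the error is mostly systematic and a recalibration or additional descriptors (as proposed above) can remove it; a dominant variance term points instead to unexplained scatter.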

In summary, Figure 10 and Table 5 demonstrate that the SVM framework not only forecasts aggregate energy release with high precision but also resolves the fine-scale stress gradient ahead of the face. Such dual capability is crucial for real-time, risk-informed decision-making in the 4209 integrated discharge panel and comparable longwall operations.

4.3. Applicability, Current Limitations, and Future Work

The SVM model presented herein was calibrated exclusively on the 4209 panel of the Luling Mine. While this single-site focus allowed continuous, high-density monitoring and rigorous field validation, it inevitably narrows the immediate geographical scope of the results. Users intending to deploy the model in seams with substantially different lithologies, depths, or support regimes are therefore advised to perform a lightweight site-specific calibration—for example, by fine-tuning the support vector coefficients on a small (≈300 samples) local subset or by adopting an importance-weighting scheme to correct for covariate shift.
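One common way to realize the importance-weighting option is classifier-based density-ratio estimation: a probabilistic classifier learns to distinguish calibration-panel samples from new-site samples, its odds give w(x) ≈ p_new(x)/p_calib(x), and the weights enter `SVR.fit` through `sample_weight`. This particular estimator is an assumption (the text does not name a scheme); C = 32 and γ = 0.0625 are reused from the grid search, and all data are synthetic. Only the new-site inputs, not their pressure labels, are needed to estimate the weights.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVR


def covariate_shift_weights(X_source, X_target):
    """Estimate w(x) = p_target(x) / p_source(x) for each source sample.

    A logistic-regression domain classifier separates source (0) from target
    (1) samples; Bayes' rule turns its odds into a density ratio.
    """
    X = np.vstack([X_source, X_target])
    domain = np.r_[np.zeros(len(X_source)), np.ones(len(X_target))]
    clf = LogisticRegression(max_iter=1000).fit(X, domain)
    p_t = clf.predict_proba(X_source)[:, 1]
    w = (p_t / (1.0 - p_t)) * (len(X_source) / len(X_target))
    return w / w.mean()  # normalise so the weights average to 1


# Usage with synthetic (hypothetical) data: the new site has a shifted first input.
rng = np.random.default_rng(7)
X_src = rng.normal(size=(1000, 4))                  # calibration panel
X_tgt = rng.normal(size=(300, 4)) + [1.0, 0, 0, 0]  # ~300 new-site samples
y_src = np.sin(X_src[:, 0]) + 0.3 * X_src[:, 1]
w = covariate_shift_weights(X_src, X_tgt)
model = SVR(kernel="rbf", C=32, gamma=0.0625).fit(X_src, y_src, sample_weight=w)
```

Source samples that resemble the new site receive larger weights, so the refitted SVR concentrates its accuracy on the input region where it will actually be used.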

The methodological novelty of the present work lies in demonstrating that a kernel-based learner can simultaneously reproduce daily seismic energy release (<6% MAPE) and capture the spatial abutment stress gradient (<4% error) using a unified feature set. To our knowledge, this is the first report of such dual-scale accuracy in a fully mechanized longwall environment.

Future research will extend the database to multiple panels with contrasting roof lithologies and overburden depths, enabling a leave-one-site-out protocol and a quantitative assessment of geographic transferability. Results from those forthcoming campaigns will be communicated in subsequent publications.

In addition, the objective and the innovation of this study are to introduce a Vector Basis Method (VBM) as a physically interpretable feature construction layer and to verify its field applicability when coupled with a kernel SVM. Accordingly, we restricted the comparator set to two standard, low-latency baselines—multivariate linear regression and a shallow feed-forward neural network—because they are routinely used in underground control rooms and provide a clear reference point for the added value of VBM.

We recognize that state-of-the-art ensemble (e.g., XGBoost, LightGBM) and deep-learning architectures (e.g., Bi-LSTM, Transformer variants) have demonstrated competitive performance in other geotechnical domains. A comprehensive benchmark against these methods lies outside the scope of the present contribution but will form the core of our next study, which will leverage an expanded multi-panel dataset currently being compiled. This future work will allow us to quantify the scalability of the VBM representation and to assess whether its interpretability and real-time advantages persist in broader geological contexts.