1. Introduction

With rapid global economic growth and accelerated industrialization, air pollution has become a widespread environmental challenge. It poses a severe threat to human health and the sustainable development of ecosystems. The glass manufacturing industry is a high-energy, high-pollution sector. It emits harmful gases such as nitrogen oxides (NOx) and sulfur dioxide during the furnace firing process, while consuming large amounts of fossil fuels [1]. NOx is a key pollutant in atmospheric contamination. Its emissions worsen acid rain, photochemical smog, and haze, and increase the risk of respiratory and cardiovascular diseases in humans [2,3]. Effective control and reduction of NOx emissions are vital for better air quality, ecological sustainability, and public health protection [4].

Currently, industrial denitrification systems predominantly employ Selective Catalytic Reduction (SCR) as the core technology for flue gas purification [5]. SCR converts NOx into harmless nitrogen and water in the presence of a catalyst, enabling highly efficient NOx abatement [6,7]. Since its emergence in the 1980s, SCR has become the mainstream solution for industrial denitrification, relying on ammonia as a reducing agent to achieve efficient NOx reduction on catalysts such as V2O5-WO3/TiO2 [8,9,10]. With denitrification efficiencies exceeding 90% and broad operational adaptability, SCR has substantially reduced industrial NOx emissions worldwide, establishing itself as a pivotal technology for achieving clean emissions in modern denitrification systems [11,12]. Moreover, SCR exhibits favorable low-temperature activity and compatibility with existing industrial infrastructure, offering significant cost-effectiveness and ease of integration in retrofit applications [13].

Despite the significant success of SCR technology in industrial applications, numerous issues and challenges persist in its practical operation. As the operation time extends and operating conditions become more complex, the performance of SCR systems is constrained by various factors, including catalyst aging, reduced activity, and the accumulation of impurities such as SO2 and ash in the flue gas [14,15]. These changes make precise control of ammonia water flow extremely difficult [16,17]. Improper ammonia flow control may lead to ammonia slip, reduce denitrification efficiency, and cause secondary pollution, while excessive ammonia usage increases operational costs [18]. Furthermore, with increasingly stringent environmental regulations, both domestic and international standards for NOx emissions in the glass industry have become progressively more demanding. In key regions of China, such as the Beijing–Tianjin–Hebei area, NOx concentration limits for flat glass plants have been reduced to below 100 mg/m3, with some areas requiring levels under 50 mg/m3. European Union standards are even more rigorous, mandating emissions not exceeding 20 mg/Nm3. Against this backdrop, achieving efficient and precise ammonia flow control under dynamically changing operating conditions has emerged as a critical challenge for industrial denitrification systems.

To address these issues, researchers have proposed various methods. Bonfils et al. [19] proposed a closed-loop control strategy based on NOx sensors, optimizing the ammonia injection of the SCR system by leveraging the sensors’ cross-sensitivity to NH3. Chen et al. [20] developed a mathematical model and optimization design method, which reduced NOx emissions by simulating the performance of the SCR reactor. Li et al. [21] introduced a two-stage SCR control strategy that, combined with dynamic condition adjustments, met the ultra-low NOx emission requirements for heavy-duty diesel engines. However, these approaches predominantly rely on conventional sensors or mechanistic models, making it difficult to accurately capture and represent the intrinsic nonlinearities and temporal dependencies within SCR system data. This limitation undermines the model’s ability to maintain predictive accuracy and adaptability when confronted with the system’s complex dynamic behaviors, ultimately impeding robust and consistent performance optimization.

In recent years, data-driven approaches have demonstrated remarkable potential for optimizing industrial denitrification systems. Machine learning techniques—represented by artificial neural networks (ANNs), support vector machines (SVMs), long short-term memory (LSTM) networks, and their variants such as Seq2Seq LSTM—have achieved steady advances in predicting NOx emission concentrations. Meanwhile, evolutionary algorithms, particle swarm optimization, and surrogate-based optimization strategies have offered diverse pathways for parameter tuning in the denitrification process. Despite these advancements, current methodologies still face fundamental limitations in achieving the coordinated optimization of ultra-low NOx emissions and economic operation. The overall evolution of this research field thus reveals a distinct gap, transitioning from isolated perception to shallow optimization without a truly integrated framework.

Specifically, early studies predominantly focused on improving the predictive accuracy of NOx concentration models, yet a fundamental paradigm gap persisted, namely the disconnection between prediction and optimization. For instance, the evolutionary neural network proposed by Azzam et al. [22], the Auto-encoder–ELM model developed by Tang et al. [23], and the Seq2Seq-LSTM network employed by Xie et al. [24] all aimed to enhance prediction accuracy through architectural optimization or advanced feature extraction. More recently, the CS-CNN model introduced by Wang et al. [25] further strengthened dynamic modeling capability. Nevertheless, these efforts have largely treated high-precision prediction as the ultimate goal, without integrating it into a closed-loop control framework. As a result, the prediction models function merely as isolated perceptual units, unable to directly guide real-time ammonia injection strategies, thereby forming an inherently open-loop system architecture.

As research has progressed, several studies have incorporated optimization into the denitrification process. However, significant limitations remain in both how objectives are formulated and how optimization mechanisms are designed. These often result in narrow objectives or low computational efficiency. For example, Wang et al. [26] used a Gaussian process with a genetic algorithm to optimize the combustion process for NOx reduction. Yet, their framework did not consider key economic indicators such as ammonia consumption, resulting in an incomplete assessment of system benefits. Similarly, this research, together with Liu et al.’s [27] feedforward control strategy, typically uses static or highly simplified surrogate models for optimization. The optimization algorithms themselves also have issues, including random initialization and poor search guidance. These make them poorly suited for the strongly nonlinear, multivariable-coupled dynamics of SCR systems. Such deficiencies constrain both convergence speed and adaptability under different operating conditions.

Notably, recent advances in collaborative optimization have highlighted the frontier challenges of this field. These advances have also provided a more precise benchmark for positioning the present work. Li et al. [28] proposed a synergistic framework based on an SAE–Bi-LSTM model integrated with an improved particle swarm optimization (PSO) algorithm. Xu et al. [29] developed a DNN–MPC structure that achieved notable progress in coupling prediction and control. However, these approaches still have limited capacity to capture the system’s intricate spatiotemporal dynamics. Their optimization modules also generally lack the capability to intelligently extract informative priors from historical data. As a result, they struggle to generate high-quality initial solutions, which reduces overall optimization efficiency and control stability under fluctuating operating conditions. These analyses collectively reveal that the central bottleneck of current research lies in constructing an integrated optimization framework. All components should be deeply coupled to achieve both high-precision dynamic perception and efficient directional search.

In summary, existing data-driven approaches for denitrification systems face three fundamental challenges: (1) insufficient capability of model architectures to capture multivariate spatiotemporal coupling dynamics; (2) a disconnection between the prediction and optimization stages, leading to open-loop decision-making; and (3) a lack of intelligent guidance in the optimization process, resulting in inefficient search strategies.

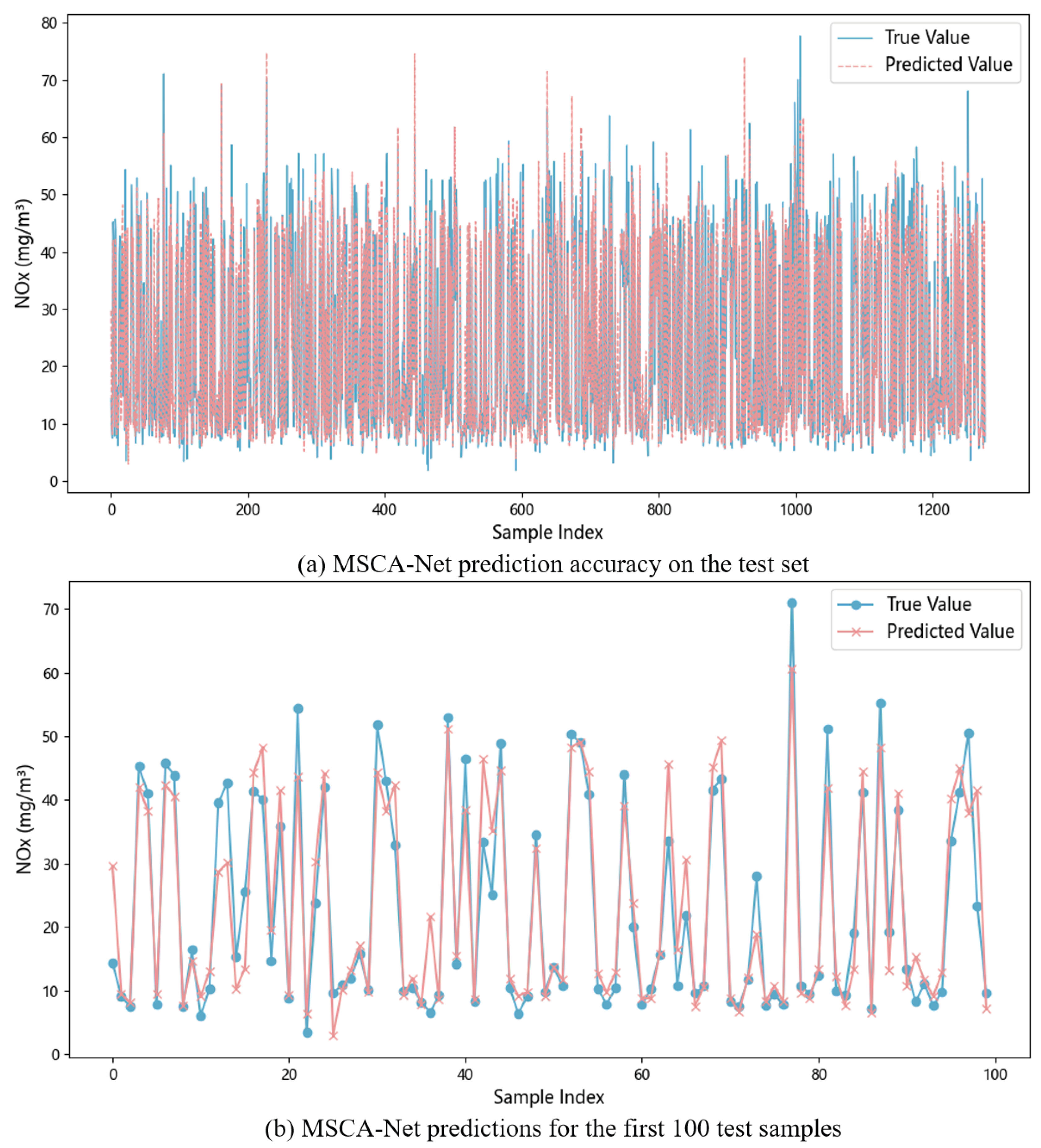

To address these challenges, this study suggests a new way to control and optimize SCR systems by combining two modeling methods with an improved genetic algorithm. A new network, called a multidimensional spatiotemporal convolutional attention network (MSCA-Net), is created to help the model better understand the system’s complex behaviors. Building on this, a Constraint-aware Genetic Algorithm with Smoothing (CSGA) is introduced to optimize both NOx emissions and ammonia use, creating a decision-making loop. In addition, an Extreme Gradient Boosting (XGBoost) initializer is used to quickly find good starting solutions, making the process faster and more stable.

The core innovations of this work are summarized as follows:

To achieve a unified optimization of control precision and economic efficiency in denitrification systems, a hybrid modeling and CSGA-based co-optimization strategy was proposed, ensuring stable compliance with NOx emission standards while reducing ammonia consumption.

To address the challenges of multivariable coupling, strong nonlinearity, and complex operating conditions in industrial flue gas treatment, the MSCA-Net was developed, significantly enhancing the accuracy and robustness of NOx concentration predictions at the system outlet.

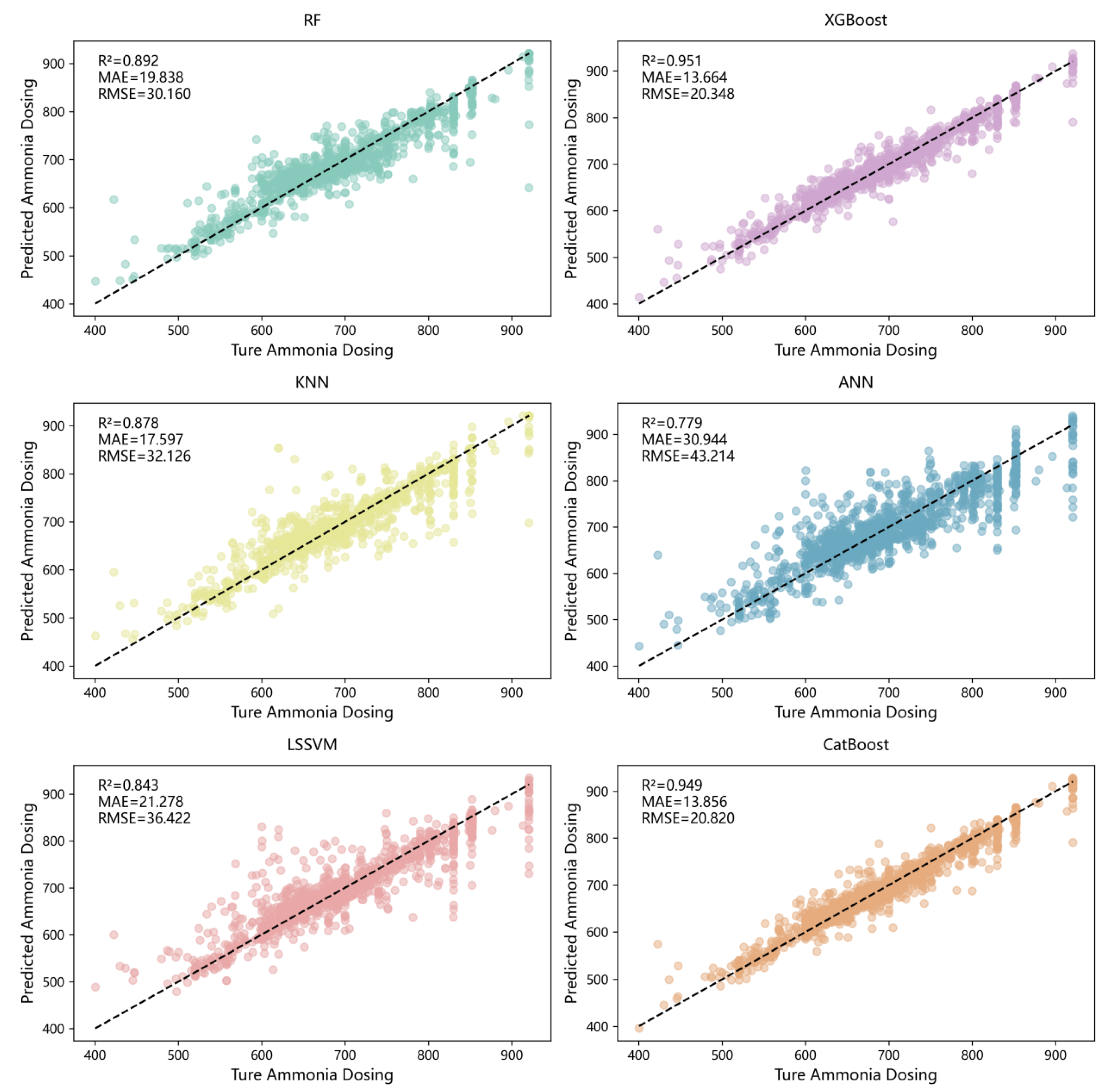

An XGBoost model was introduced to provide high-quality initial solutions for the optimization process, narrowing the search space and mitigating the randomness of algorithm initialization. Furthermore, a comparative analysis with five baseline models confirmed the superior performance of XGBoost in predicting ammonia flow, achieving an R2 of 0.951.

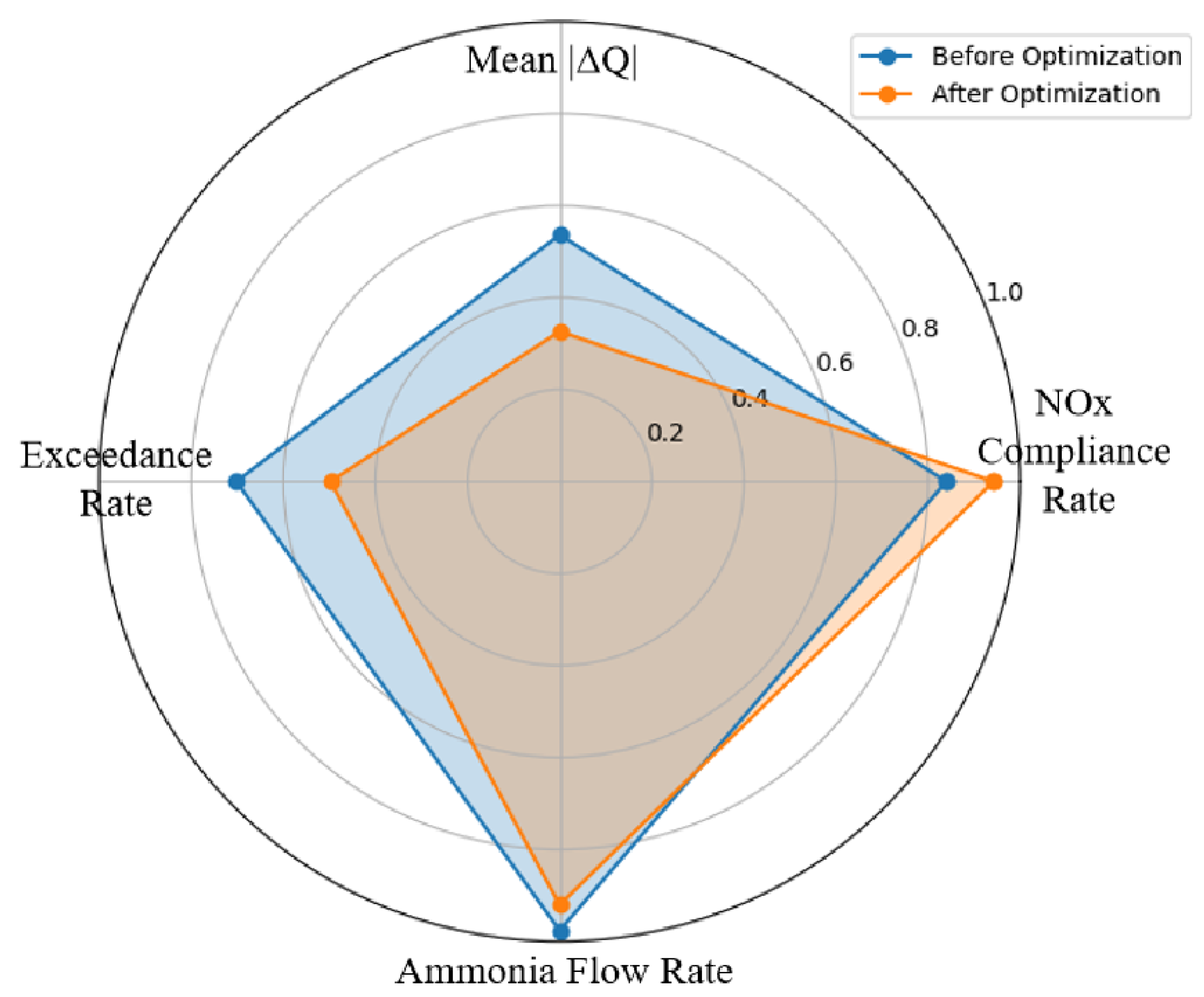

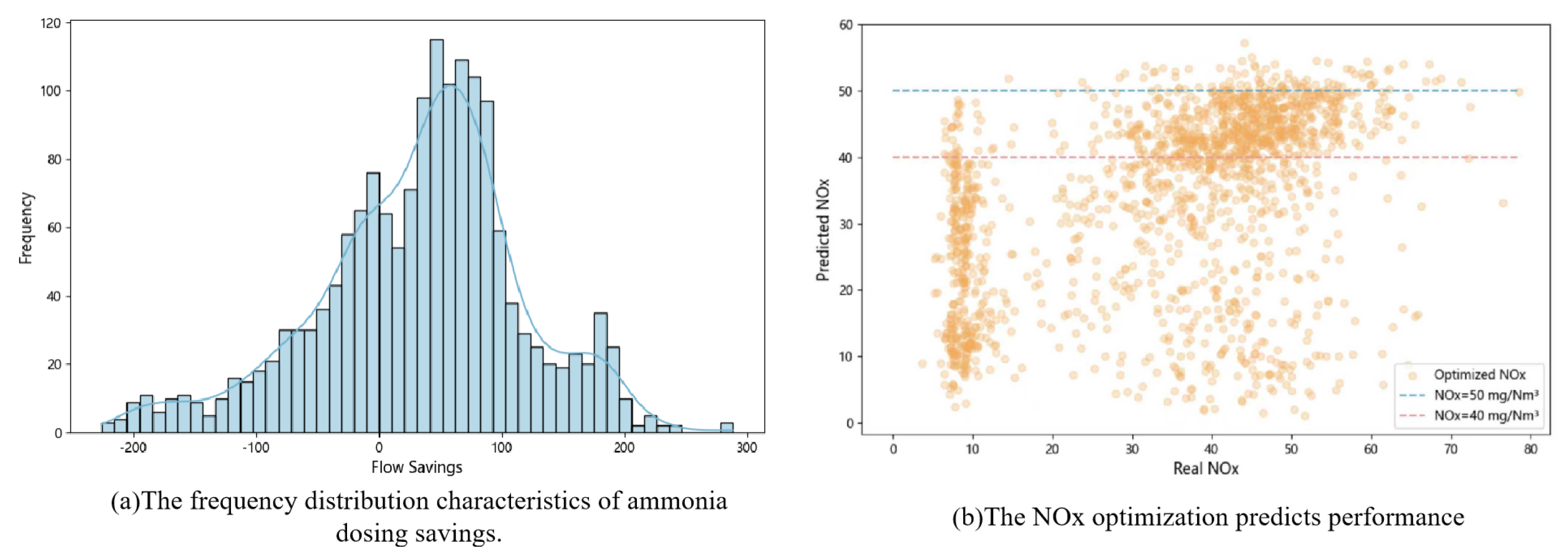

Global optimization was performed using the CSGA, enabling multi-objective co-optimization of NOx emissions and ammonia flow, effectively balancing environmental performance with operational cost. Experimental results demonstrate that the proposed strategy increased the NOx compliance rate by 10.26%, reduced average ammonia consumption by 33.56 L/h, and decreased the rate of threshold exceedance by 8.39%. These improvements not only significantly lower operational costs but also further enhance the stability of the denitrification system.

The remainder of this paper is organized as follows. Section 2 presents the materials and methods. Section 3 reports the results and discussion. Section 4 concludes the study.

2. Materials and Methods

In this section, we propose an optimization strategy for denitrification systems that integrates MSCA-Net, XGBoost, and CSGA. Section 2.1 describes the procedures for data collection and preprocessing. Section 2.2 details the overall framework architecture, including NOx concentration prediction using MSCA-Net, initial ammonia flow estimation with XGBoost, and the collaborative optimization process enabled by CSGA. Section 2.3 specifies the evaluation metrics employed for model assessment.

2.1. Data Collection and Preprocessing

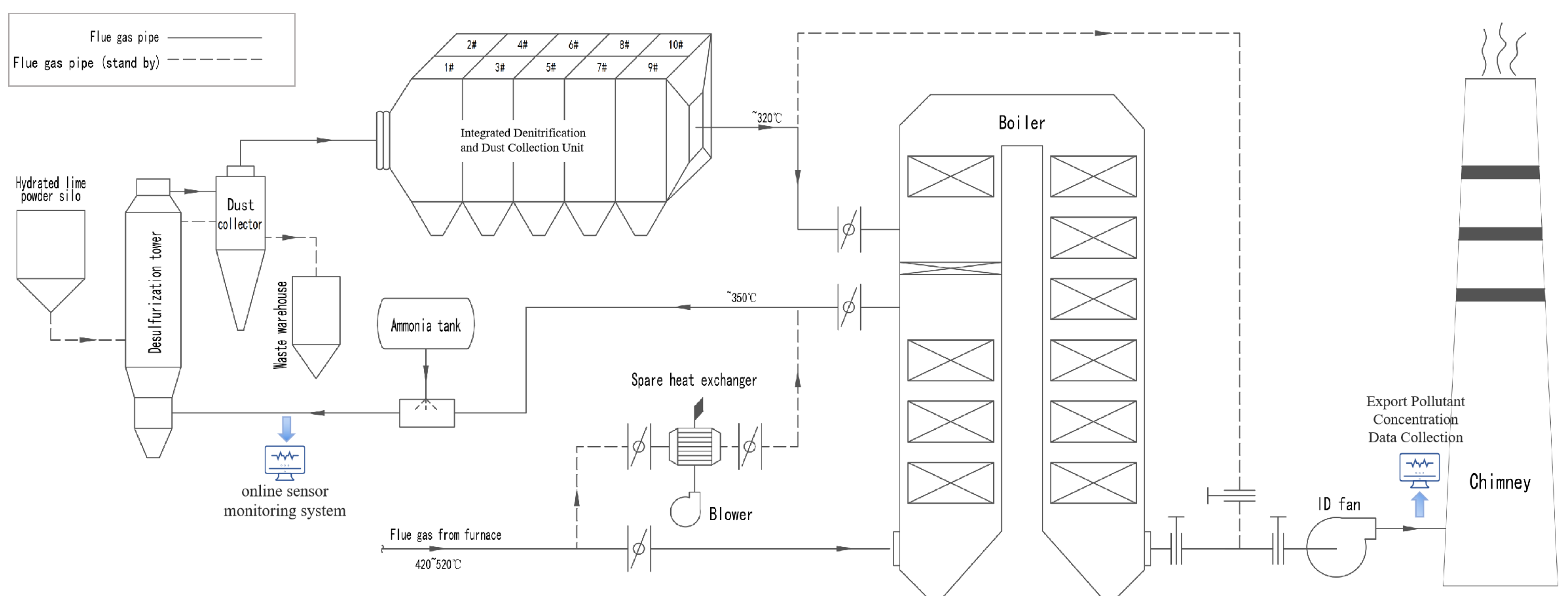

In this study, the experimental data were obtained from a flue gas treatment plant serving a glass-melting furnace located in Guangdong Province, China. The plant employs a comprehensive high-temperature flue gas purification process, as illustrated in Figure 1, which primarily consists of waste heat recovery, desulfurization, denitrification, and dust removal stages. Specifically, the high-temperature flue gas emitted from the glass-melting furnace first enters a boiler unit for waste heat recovery, where its temperature is reduced to approximately 350 °C before proceeding to the conditioning and desulfurization stage. In this stage, the flue gas is thoroughly mixed with ammonia solution injected from an ammonia tank and then directed into the desulfurization tower. Simultaneously, hydrated lime powder from the lime silo is fed into the tower to participate in the desulfurization reaction. Subsequently, the flue gas passes through a dust collector, where separated particulate matter is conveyed to a waste silo. The preliminarily purified flue gas is then introduced into an integrated denitrification–dust-removal unit. Within this unit, NOx and ammonia undergo selective catalytic reduction on the catalyst surface of the unit’s modular cells, producing nitrogen and water. Meanwhile, alkaline particulates in the gas stream form a filter cake layer that enhances desulfurization, and captured particles are collected through the ash-cleaning system. After completing this high-temperature desulfurization–denitrification–dust-removal process, the purified flue gas is drawn by an induced draft fan and finally discharged through the chimney.

The plant is equipped with an online sensor monitoring system that continuously records key operational parameters. The collected data consist of two frequency categories:

1. Daily data: furnace temperature (°C). This parameter is measured at four monitoring points positioned inside the glass-melting furnace, and the average value is recorded. As a core process control variable, the furnace temperature exhibits minimal intra-day fluctuations and typically changes only during process adjustments. Therefore, it can be regarded as constant within a single operational day.

2. Data collected at 1 min intervals: kiln opening signal (%), flue gas temperature (°C), pressure (Pa), flue gas flow rate (Nm3/h), SO2 concentration (mg/m3), O2 concentration (%), humidity (%), and ammonia water flow rate (L/h).

In addition to the online sensor monitoring data, the dataset also includes pollutant concentration analyses. Specifically, the NOx concentration (mg/m3) was measured at the chimney outlet with a one-minute sampling frequency, providing high-resolution monitoring of exhaust gas emissions. The data collection period spanned from 9 April 2024 to 30 March 2025 (356 days in total), yielding approximately 512,640 data samples (356 days × 1440 one-minute records per day). The data encompass various operating conditions of the glass-melting furnace, providing a reliable data foundation for the subsequent optimization of ammonia water flow to achieve low NOx emissions (<50 mg/Nm3).

To ensure data quality and model reliability, a rigorous data preprocessing procedure was implemented. First, all data were resampled to a uniform temporal resolution to generate daily and hourly datasets [30]. The daily dataset was constructed by averaging the one-minute data collected within the same day. The hourly dataset was generated based on the characteristics of each parameter: for the furnace temperature, which remains stable throughout the day, a constant value was used, whereas for dynamic variables, the hourly data were obtained by averaging all minute-level samples within each hour [31]. Second, missing values caused by sensor malfunctions were filled using linear interpolation to preserve temporal continuity [32]. Finally, a multi-level outlier detection and correction strategy was applied to remove anomalous observations.

A multi-level data quality control protocol was implemented using specific parameter thresholds:

1. Static threshold filtering: measurement values violating operational limits (O2 > 16%, pressure < −1750 Pa, or humidity > 20%) were marked as invalid and estimated using piecewise linear interpolation [33].

2. Dynamic outlier detection: a 168 h (7 day) sliding window was used to identify dynamic anomalies. Values deviating from the mean within the window by more than the detection threshold were replaced using linear interpolation [34].

After completing the data preprocessing, a preliminary comparison analysis was conducted between the daily and hourly datasets to evaluate the accuracy of NOx emission prediction. The hourly dataset, which showed better performance, was ultimately selected, with a total of 8522 data points.
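The preprocessing chain described above can be illustrated with a minimal NumPy sketch for a single variable (O2 is used here). It is an illustration under stated assumptions rather than the plant's actual pipeline: the function names are hypothetical, the sliding window is taken as trailing, at least 24 samples are required before a point is tested, and the deviation threshold is assumed to be k = 3 standard deviations because the original threshold is not stated.

```python
import numpy as np

def interpolate_invalid(values, invalid):
    """Replace flagged or NaN entries by linear interpolation between valid neighbours."""
    values = np.asarray(values, dtype=float).copy()
    idx = np.arange(len(values))
    good = ~np.asarray(invalid, dtype=bool) & ~np.isnan(values)
    values[~good] = np.interp(idx[~good], idx[good], values[good])
    return values

def hourly_mean(minute_values):
    """Average complete 60-sample blocks, ignoring isolated NaN gaps."""
    v = np.asarray(minute_values, dtype=float)
    n_hours = len(v) // 60
    return np.nanmean(v[: n_hours * 60].reshape(n_hours, 60), axis=1)

def rolling_outliers(values, window=168, k=3.0, min_samples=24):
    """Flag points farther than k standard deviations from the trailing-window mean.

    k and min_samples are assumptions made for this sketch.
    """
    values = np.asarray(values, dtype=float)
    flags = np.zeros(len(values), dtype=bool)
    for t in range(len(values)):
        win = values[max(0, t - window + 1): t + 1]
        if len(win) >= min_samples:
            mu, sd = win.mean(), win.std()
            flags[t] = sd > 0 and abs(values[t] - mu) > k * sd
    return flags

# Synthetic one-minute O2 readings (%) for 72 hours, with a short sensor gap
# and one hour of physically implausible values.
rng = np.random.default_rng(0)
o2_minute = rng.normal(8.0, 0.3, size=72 * 60)
o2_minute[605:610] = np.nan            # brief sensor dropout
o2_minute[30 * 60: 31 * 60] = 18.0     # violates the O2 > 16% static limit

o2_hourly = hourly_mean(o2_minute)                            # step 1: resample
o2_hourly = interpolate_invalid(o2_hourly, o2_hourly > 16.0)  # step 2: static threshold
clean = interpolate_invalid(o2_hourly, rolling_outliers(o2_hourly))  # step 3: dynamic check
```

The same three steps would be repeated per variable with its own static limit (for example, pressure < −1750 Pa or humidity > 20%).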

2.2. Overall Framework

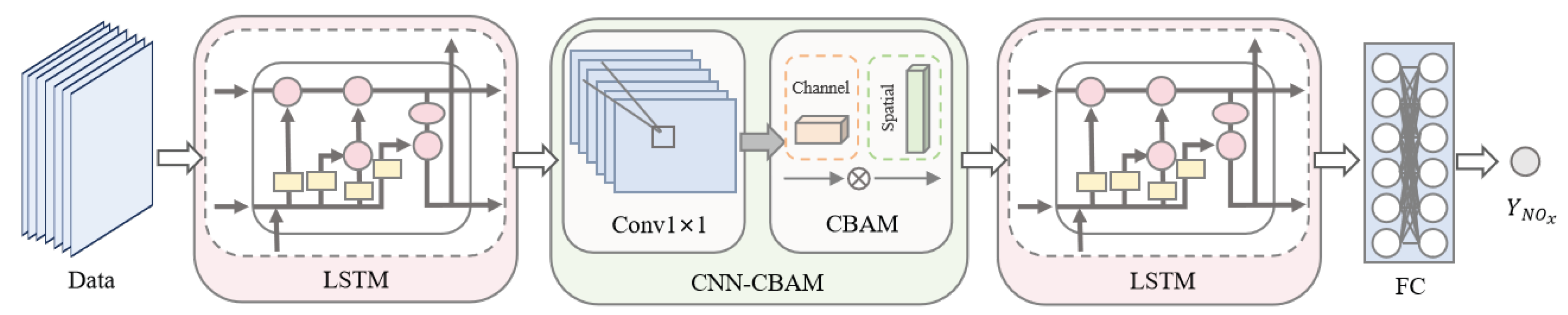

The workflow of the proposed framework is illustrated in Figure 2. We introduce a three-tier synergistic architecture that integrates MSCA-Net, XGBoost, and CSGA to optimize ammonia flow and regulate NOx emissions in denitrification systems. MSCA-Net, composed of convolutional neural networks (CNN), convolutional block attention modules (CBAM), and long short-term memory networks (LSTM), enables accurate prediction of NOx concentrations under highly dynamic and nonlinear operating conditions. XGBoost, leveraging efficient feature selection and regression analysis, generates high-quality initial ammonia flow estimates for CSGA, thereby substantially reducing the optimization search space and enhancing computational efficiency. Finally, CSGA functions as the global optimization engine, employing iterative search strategies to minimize ammonia consumption while ensuring full compliance with emission standards.

To validate the effectiveness of the proposed framework, we evaluated multiple key performance indicators, including NOx compliance rate, average ammonia consumption, and exceedance ratio. Experimental results demonstrate that the integrated optimization framework significantly outperforms existing benchmark methods in both NOx concentration prediction accuracy and multi-objective dynamic optimization. These findings confirm the superiority, adaptability, and practical utility of the proposed strategy for denitrification in complex industrial flue gas environments.

2.2.1. MSCA-Net

The MSCA-Net is a hybrid deep learning architecture that integrates LSTM, CNN, and CBAM. Its design concept emulates the cognitive reasoning process of engineers in analyzing complex industrial systems: first capturing the overall dynamic evolution of the system, then extracting critical local patterns, and finally focusing on salient information to enhance discriminative capability. The model is built upon a hierarchical feature-processing paradigm, forming a multilayer feature learning framework that jointly leverages temporal modeling, spatial pattern recognition, and attention-based feature refinement. By sequentially incorporating temporal dependency capture, local pattern extraction, and adaptive feature optimization, MSCA-Net enables a progressive information-processing flow—from macro-level dynamic modeling to micro-level feature enhancement. Unlike conventional single-structure networks, MSCA-Net emphasizes the collaborative modeling of global and local representations and the hierarchical optimization of information flow. This design endows the model with memory capability, discriminative power, and robustness when handling the nonlinear and multivariable coupling characteristics of combustion processes, providing an interpretable and generalizable solution for NOx emission prediction.

The model architecture is shown in Figure 3. The MSCA-Net adopts a strictly sequential, end-to-end architecture, with the data flow designed to capture both global and local temporal–spatial dependencies. Specifically, the input multivariate time-series data are first processed by an initial LSTM layer, which captures long-term temporal dependencies and provides rich global contextual information for subsequent modules. Next, a one-dimensional convolutional layer extracts local spatial patterns from the LSTM outputs, strengthening the model’s ability to perceive correlations among neighboring feature points. The CBAM attention module is then applied to the convolutional feature maps to adaptively reweight channels and spatial locations, highlighting features that are highly correlated with NOx emission dynamics while suppressing irrelevant noise. After that, a second LSTM layer functions as a contextual aggregator, deeply integrating the refined feature sequences to achieve fine-grained modeling of global temporal patterns, which significantly enhances the model’s representational capacity for complex dynamic processes. Finally, the extracted features are regularized via a Dropout layer and passed through a fully connected layer to perform regression-based NOx concentration prediction.

LSTM, an enhanced variant of the Recurrent Neural Network (RNN), addresses the vanishing gradient problem commonly encountered in traditional RNNs when processing long sequential data through the introduction of a gating mechanism [35]. The core structure of an LSTM unit consists of three essential gates (the forget gate, input gate, and output gate), which control the retention, updating, and output of information, respectively [36]:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$

where $W_f$ is the weight matrix of the forget gate, $[h_{t-1}, x_t]$ denotes the concatenation of the previous hidden state $h_{t-1}$ and the current input $x_t$ into a single vector, $b_f$ is the bias term, $W_i$ and $W_o$ are the weight matrices of the input gate and output gate, respectively, with corresponding bias terms $b_i$ and $b_o$, and $\sigma$ represents the sigmoid activation function. Specifically, the forget gate governs the retention of information from the previous cell state, determining which components should be discarded. The input gate regulates the extent to which the current candidate state influences the update process, while the output gate controls the degree to which the current cell state contributes to the final hidden state. The ultimate output of the LSTM unit is jointly determined by the output gate and the cell state:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

$$h_t = o_t \odot \tanh(C_t)$$

where $C_{t-1}$ is the input cell state, $C_t$ is the cell state at the current moment, $\tilde{C}_t$ is the candidate state, and the symbol ⊙ denotes element-wise multiplication. This design allows the LSTM to effectively capture long-term dependencies in complex time series.
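To make the gate interactions concrete, the following NumPy sketch performs one LSTM step in the standard formulation. It is illustrative only: the dictionary layout of the weights, the candidate-state parameters stored under the key "c", and the sizes (8 input features, 4 hidden units, 24 hourly steps) are assumptions chosen for the example, not the configuration used in MSCA-Net.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step; W[g] has shape (hidden, hidden + input), b[g] has shape (hidden,)."""
    z = np.concatenate([h_prev, x_t])        # concatenation [h_{t-1}, x_t]
    f_t = sigmoid(W["f"] @ z + b["f"])       # forget gate
    i_t = sigmoid(W["i"] @ z + b["i"])       # input gate
    o_t = sigmoid(W["o"] @ z + b["o"])       # output gate
    c_hat = np.tanh(W["c"] @ z + b["c"])     # candidate cell state
    c_t = f_t * c_prev + i_t * c_hat         # element-wise cell-state update
    h_t = o_t * np.tanh(c_t)                 # hidden state (unit output)
    return h_t, c_t

rng = np.random.default_rng(0)
n_in, n_hid = 8, 4                           # illustrative sizes
W = {g: rng.normal(scale=0.1, size=(n_hid, n_hid + n_in)) for g in "fioc"}
b = {g: np.zeros(n_hid) for g in "fioc"}

h, c = np.zeros(n_hid), np.zeros(n_hid)
for x_t in rng.normal(size=(24, n_in)):      # 24 consecutive hourly feature vectors
    h, c = lstm_step(x_t, h, c, W, b)
```

Because the output gate lies in (0, 1) and tanh is bounded, every component of the hidden state stays strictly inside (−1, 1), regardless of the sequence length.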

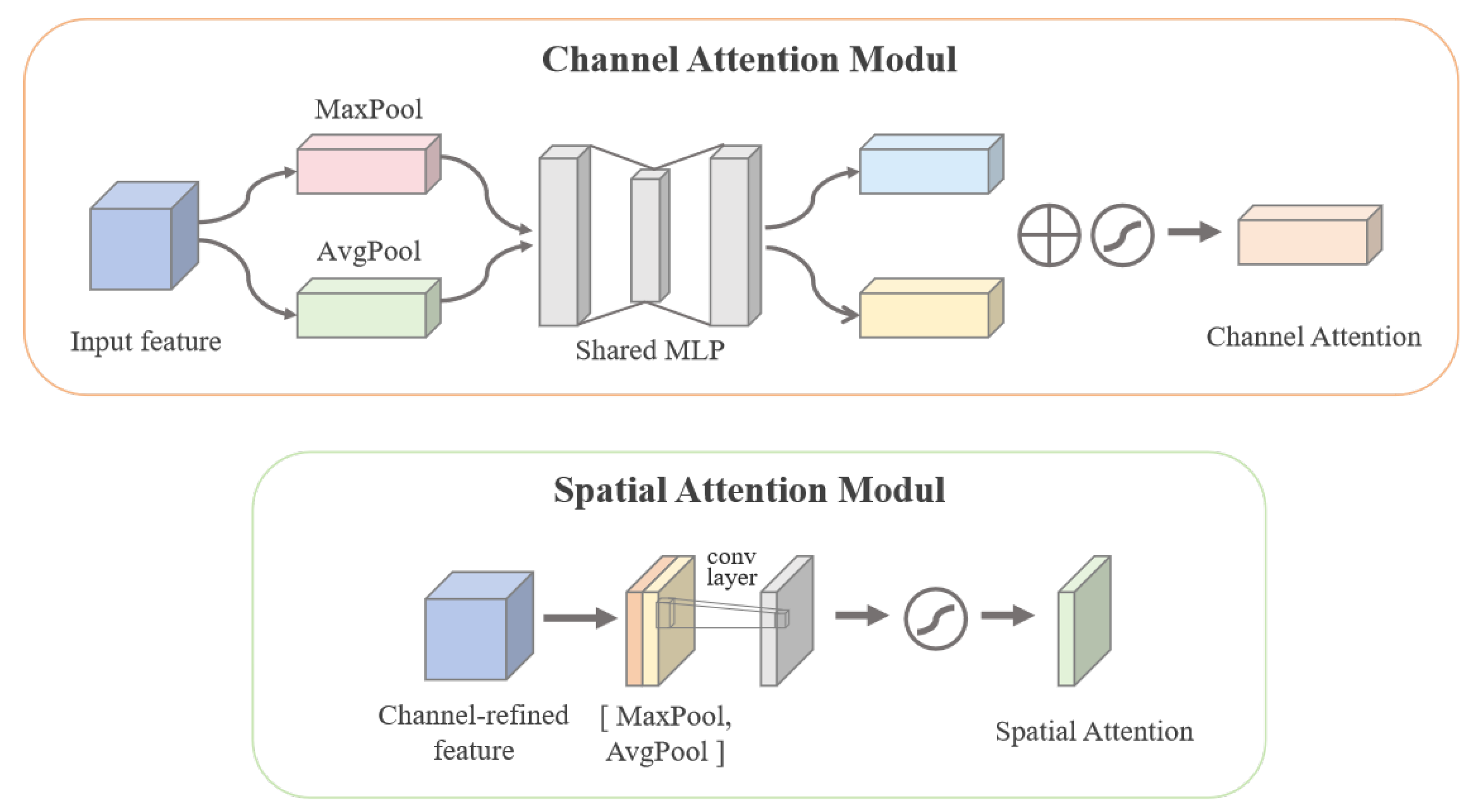

However, standard LSTM networks often struggle to adaptively focus on salient features when processing high-dimensional input data. To overcome this limitation, a CNN module is introduced to extract local high-dimensional representations. Through one-dimensional convolution operations, the CNN captures local dependencies along the temporal axis, effectively identifying high-level patterns between adjacent time steps and compensating for the LSTM’s limitations in local feature modeling. Additionally, the CBAM adaptively recalibrates features along both channel and spatial dimensions, strengthening the representation of important channels and key spatial locations. This mechanism effectively selects and amplifies relevant input features while suppressing noise interference, substantially improving the model’s attention allocation to critical information. CBAM is a lightweight and general-purpose attention module designed to enhance convolutional neural networks’ sensitivity to salient features [37]. The module consists of two submodules: a channel attention module and a spatial attention module. These submodules refine the feature maps by adaptively learning importance weights along the channel and spatial dimensions. The channel attention module first aggregates global spatial information through global average pooling (GAP) and global maximum pooling (GMP). Then it computes channel-wise importance weights using a multilayer perceptron (MLP):

$$M_c(F) = \sigma\left(\mathrm{MLP}(\mathrm{GAP}(F)) + \mathrm{MLP}(\mathrm{GMP}(F))\right)$$

where $M_c$ is the channel attention feature, $F$ is the input feature, $\sigma$ is the sigmoid function, and MLP is the fully connected network whose weights are shared between the two pooled descriptors. The spatial attention module, on the other hand, generates an attention map over spatial locations by compressing the channel dimensions to emphasize key regions and suppress noise [38]:

$$M_s(F') = \sigma\left(f^{7\times 7}\left([\mathrm{AvgPool}(F');\, \mathrm{MaxPool}(F')]\right)\right)$$

where $M_s$ is the spatial attention feature, $F'$ is the channel-refined feature that enters the spatial attention module, $\sigma$ is the sigmoid function, and 7 × 7 denotes the size of the convolution kernel. By integrating CBAM into the CNN layer, the model is able to further enhance the feature responses that have a significant impact on the NOx concentration prediction on the basis of local high-dimensional features, thus improving the overall prediction accuracy and robustness. The structure of the CBAM is shown in Figure 4.
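As an illustration of the two attention stages, the sketch below applies a CBAM-style block to a one-dimensional feature map of shape (channels, time steps), as produced by a 1D convolution. Several details are assumptions made for the example: the 7 × 7 kernel is replaced by a length-7 filter along the time axis, the shared MLP uses a ReLU hidden layer with reduction ratio 4, and all weights are random rather than trained.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(F, W1, W2):
    """F: (channels, steps). Returns one weight in (0, 1) per channel."""
    def mlp(v):
        return W2 @ np.maximum(W1 @ v, 0.0)   # shared two-layer MLP with ReLU
    return sigmoid(mlp(F.mean(axis=1)) + mlp(F.max(axis=1)))

def spatial_attention(F, kernel):
    """kernel: (2, k) filter applied to the stacked [avg; max] maps along time."""
    pooled = np.stack([F.mean(axis=0), F.max(axis=0)])   # shape (2, steps)
    response = sum(np.correlate(pooled[ch], kernel[ch], mode="same") for ch in range(2))
    return sigmoid(response)                              # one weight per time step

def cbam_1d(F, W1, W2, kernel):
    F1 = F * channel_attention(F, W1, W2)[:, None]        # channel-refined features
    return F1 * spatial_attention(F1, kernel)[None, :]    # then re-weighted along time

rng = np.random.default_rng(0)
n_ch, n_steps, ratio = 16, 24, 4                          # illustrative sizes
F = rng.normal(size=(n_ch, n_steps))
W1 = rng.normal(scale=0.1, size=(n_ch // ratio, n_ch))
W2 = rng.normal(scale=0.1, size=(n_ch, n_ch // ratio))
kernel = rng.normal(scale=0.1, size=(2, 7))
refined = cbam_1d(F, W1, W2, kernel)
```

Both attention maps lie in (0, 1), so the block can only attenuate features; with trained weights, uninformative channels and time steps are pushed toward zero while informative ones are left nearly unchanged.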

In this study, CBAM is effectively integrated into the deep neural network architecture to enhance the model’s selective attention to critical features. Based on this design principle, the workflow of MSCA-Net is as follows: first, LSTM functions as a macroscopic temporal feature extractor, capturing long-term dependencies in the input data and grasping overall system dynamics. Subsequently, CNN is introduced to focus on local detailed feature extraction; through multiple convolutional and pooling layers, the model’s ability to perceive complex spatiotemporal patterns is strengthened. Building on this, CBAM adaptively recalibrates feature weights through both channel and spatial attention mechanisms, highlighting the contributions of important channels and key time steps. This enables selective feature enhancement and noise suppression, substantially improving discriminative capability. Finally, the optimized and weighted features are fed into an LSTM unit for fine-grained temporal modeling, generating robust time-series representations that accurately capture the complex dynamic variations of NOx concentrations in denitrification systems, thereby significantly improving prediction accuracy.

2.2.2. XGBoost

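XGBoost is an efficient gradient boosting decision tree algorithm widely used for its high accuracy and computational efficiency in regression tasks [39]. It integrates multiple decision trees and minimizes a regularized loss function (L1 and L2 penalties on the leaf weights are supported) to improve model generalization [40]. The objective function is defined as:

$$\mathrm{Obj} = \sum_{i=1}^{n} l\left(y_i, \hat{y}_i\right) + \sum_{k=1}^{K} \Omega(f_k), \qquad \Omega(f) = \gamma T + \frac{1}{2}\lambda \lVert w \rVert^2$$

where $y_i$ is the actual value, $\hat{y}_i$ is the predicted value, $l$ is the loss function, $\Omega(f)$ is the regularization term of the tree, $T$ is the number of leaf nodes, $w$ is the vector of leaf node weights, $\gamma$ and $\lambda$ are the regularization parameters, $n$ is the number of samples, and $K$ is the number of trees. XGBoost approximates the loss using second-order gradient information:

$$\mathrm{Obj}^{(t)} \approx \sum_{i=1}^{n}\left[g_i f_t(x_i) + \frac{1}{2} h_i f_t^2(x_i)\right] + \Omega(f_t)$$

where $g_i$ and $h_i$ are the first and second order derivatives of the loss with respect to the prediction of the previous round, respectively. Tree construction maximizes the gain by selecting the optimal features through greedy splitting:

$$\mathrm{Gain} = \frac{1}{2}\left[\frac{\left(\sum_{i \in I_L} g_i\right)^2}{\sum_{i \in I_L} h_i + \lambda} + \frac{\left(\sum_{i \in I_R} g_i\right)^2}{\sum_{i \in I_R} h_i + \lambda} - \frac{\left(\sum_{i \in I} g_i\right)^2}{\sum_{i \in I} h_i + \lambda}\right] - \gamma$$

where $I_L$ and $I_R$ are the left and right child node sample sets, and $I$ is the parent node sample set. XGBoost utilizes second-order gradient information and parallel computation to efficiently process high-dimensional features and large-scale datasets.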
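A small worked example may help in reading the split gain. The sketch below evaluates the standard XGBoost gain for two candidate splits under a squared-error loss l = ½(y − ŷ)², for which g_i = ŷ_i − y_i and h_i = 1. The function name, the four-sample data set, and the initial prediction of 3.0 are hypothetical values chosen for the example.

```python
def split_gain(g, h, left_idx, lam=1.0, gamma=0.0):
    """Gain of splitting a parent node into `left_idx` and the remaining samples."""
    def score(G, H):
        return G * G / (H + lam)

    left = set(left_idx)
    G_L = sum(g[i] for i in left)
    H_L = sum(h[i] for i in left)
    G, H = sum(g), sum(h)
    G_R, H_R = G - G_L, H - H_L
    return 0.5 * (score(G_L, H_L) + score(G_R, H_R) - score(G, H)) - gamma

# Four samples whose targets fall into two clear groups; initial prediction 3.0.
y = [1.0, 1.0, 5.0, 5.0]
y_hat = [3.0] * 4
g = [p - t for p, t in zip(y_hat, y)]   # first-order derivatives: [2, 2, -2, -2]
h = [1.0] * 4                           # second-order derivatives for squared error

good_split = split_gain(g, h, left_idx=[0, 1])   # separates the two groups
bad_split = split_gain(g, h, left_idx=[0, 2])    # mixes the two groups
```

With λ = 1 and γ = 0, the split that separates the groups yields a gain of 16/3, whereas the mixed split yields zero gain, so greedy construction selects the former.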

In the proposed framework, the XGBoost model estimates the initial ammonia water flow based on preprocessed input features, including flue gas flow, temperature, O2 concentration, pressure, and humidity. During the training process, XGBoost employs an additive model structure and optimizes the loss function using gradient descent. In each iteration, the model generates a new decision tree by fitting the current residuals and incorporates second-order derivative information to accelerate the convergence process. Additionally, the use of column subsampling and row subsampling strategies further improves the model’s computational efficiency and generalization ability. The high-precision initial prediction output by the model effectively narrows the search space for CSGA, reducing the overall computational cost of the optimization process. This enables efficient optimization and control of ammonia water flow, ensuring that NOx emission concentrations remain below 50 mg/Nm3.

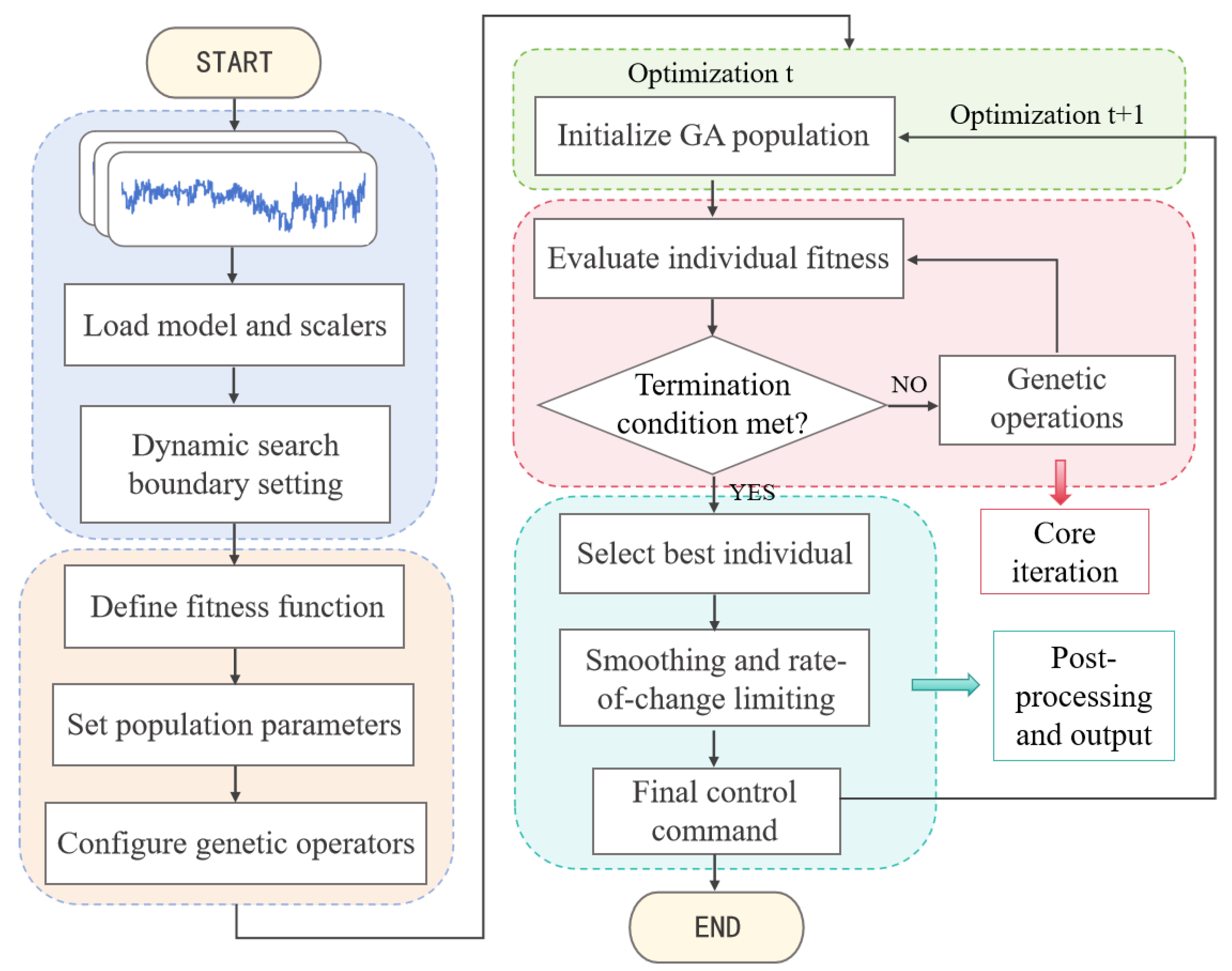

2.2.3. CSGA

Genetic algorithms (GA) are optimization techniques inspired by natural selection and genetic mechanisms, widely applied to solve complex nonlinear optimization problems [41]. The algorithm evolves a population of candidate solutions, with each individual representing a set of ammonia flow parameters, through iterative selection, crossover, and mutation operations to approach the global optimum. In this study, we propose a CSGA, which incorporates several deep modifications tailored for industrial denitrification systems. By introducing a weighted aggregation fitness function and a dynamic search space optimization mechanism, CSGA significantly improves convergence speed and solution quality in the co-optimization of ammonia flow and NOx emissions, effectively overcoming the premature convergence and low search efficiency limitations often encountered by conventional GA in high-dimensional, strongly constrained problems. The optimization workflow of CSGA is illustrated in Figure 5.

The fitness function is designed to evaluate the performance of each individual solution. In the CSGA algorithm, a weighted aggregate fitness function is formulated to transform the multi-objective optimization problem into a single-objective one through a combination of weighting coefficients and a penalty mechanism. Specifically, the weight coefficient $w_1$ regulates the contribution of ammonia consumption $A(x)$ to the overall objective, reflecting the consideration of economic operating costs. The weight coefficient $w_2$, combined with a nonlinear penalty term $P(x)$, constitutes the environmental constraint mechanism. When the predicted NOx emission exceeds the threshold of 50 mg/Nm3, the penalty term becomes positive and increases proportionally with the degree of violation, thereby guiding the algorithm to search for solutions that comply with environmental regulations. This design ensures that the algorithm achieves multi-objective collaborative optimization of the denitrification system by maintaining an appropriate balance among environmental compliance, economic efficiency, and operational stability. The weighted aggregate fitness function is expressed as follows:

$$F(x) = w_1 A(x) + w_2 P(x), \qquad P(x) = \max\left(0,\; C_{\mathrm{NOx}}(x) - 50\right)$$

where $x$ denotes the ammonia dosing parameter, $A(x)$ is the ammonia consumption (L/h), $C_{\mathrm{NOx}}(x)$ is the NOx concentration (mg/Nm3) predicted by MSCA-Net, and $w_1$, $w_2$ are the weights balancing cost and emission constraints.

The assignment of weight coefficients is crucial for guiding the search direction of the algorithm. In this study, the final values of the weighting parameters were determined based on an analysis of the typical fluctuation range of ammonia consumption in historical operation data (its standard deviation, in L/h), combined with a series of preliminary trade-off experiments. The core principle was to strictly ensure environmental compliance while considering economic operating costs. To guarantee that even a slight NOx exceedance (e.g., 1 mg/Nm3) would produce a penalty large enough to outweigh any potential fitness gain from ammonia savings, the following condition must be satisfied: $w_2 \cdot 1 > w_1 \cdot \Delta A_{\max}$, where $\Delta A_{\max}$ is the largest attainable ammonia saving (L/h). Experimental results demonstrated that when the weight ratio satisfies approximately $w_2 / w_1 \approx 500$, the optimization achieves an optimal balance between maintaining a high NOx compliance rate (>94%) and effectively reducing ammonia consumption. Based on this analysis, the final coefficients were set as $w_1$ = 1, $w_2$ = 500.
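The effect of the weighting can be checked with a short sketch. It assumes that the aggregate value is treated as a cost (lower is better), that the penalty is a hinge on the 50 mg/Nm3 limit, and that the weights are 1 for ammonia consumption and 500 for the penalty; the function and parameter names are hypothetical, and the NOx values stand in for MSCA-Net predictions.

```python
NOX_LIMIT = 50.0   # mg/Nm3, emission threshold used in the penalty term

def penalty(nox_pred, limit=NOX_LIMIT):
    """Zero when compliant; grows linearly with the size of the exceedance."""
    return max(0.0, nox_pred - limit)

def aggregate_fitness(ammonia_flow, nox_pred, w_cost=1.0, w_penalty=500.0):
    """Weighted aggregate of ammonia use (L/h) and the emission penalty.

    Treated here as a cost to be minimised, which is an assumption of this sketch.
    """
    return w_cost * ammonia_flow + w_penalty * penalty(nox_pred)

# Candidate A: compliant, higher ammonia use. Candidate B: saves 30 L/h of
# ammonia but exceeds the limit by 1 mg/Nm3.
cost_a = aggregate_fitness(ammonia_flow=150.0, nox_pred=49.0)
cost_b = aggregate_fitness(ammonia_flow=120.0, nox_pred=51.0)
```

Candidate A evaluates to 150 and candidate B to 620: the 500-point penalty for a 1 mg/Nm3 exceedance outweighs the 30 L/h saving, so the compliant candidate is preferred.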

Individuals with higher fitness are favored by the selection mechanism, with selection probability:

$$P_i = \frac{f_i}{\sum_{j=1}^{N} f_j}$$

where $f_i$ is the fitness of the $i$-th individual and $N$ is the population size. Crossover (probability $P_c$, usually taken as 0.6–0.9) and mutation (probability $P_m$, usually taken as 0.01–0.1) operations generate new individuals to ensure population diversity.
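One generation of fitness-proportionate selection, crossover, and mutation might be sketched as follows, using only the Python standard library. The choices here are assumptions for illustration: each individual is a single real-valued ammonia flow (L/h), crossover is arithmetic blending, mutation adds Gaussian noise, and the score 1 / (1 + flow) is a placeholder that simply rewards lower flow. None of these is claimed to be the operator set used in CSGA.

```python
import random

def selection_probabilities(scores):
    """P_i = f_i / sum_j f_j for non-negative scores where higher is better."""
    total = sum(scores)
    return [s / total for s in scores]

def roulette_select(population, scores, rng):
    """Draw a new parent pool with probability proportional to score."""
    return rng.choices(population, weights=scores, k=len(population))

def crossover(a, b, rng, p_c=0.8):
    """Arithmetic crossover applied with probability p_c."""
    if rng.random() < p_c:
        alpha = rng.random()
        return alpha * a + (1 - alpha) * b, alpha * b + (1 - alpha) * a
    return a, b

def mutate(x, rng, lo, hi, p_m=0.05, sigma=5.0):
    """Gaussian mutation with probability p_m, clipped to the search bounds."""
    if rng.random() < p_m:
        x += rng.gauss(0.0, sigma)
    return min(max(x, lo), hi)

rng = random.Random(42)
lo, hi = 100.0, 200.0                                   # illustrative bounds (L/h)
population = [rng.uniform(lo, hi) for _ in range(6)]
scores = [1.0 / (1.0 + flow) for flow in population]    # placeholder score
parents = roulette_select(population, scores, rng)

children = []
for a, b in zip(parents[0::2], parents[1::2]):
    children.extend(crossover(a, b, rng))
next_gen = [mutate(c, rng, lo, hi) for c in children]
```

Arithmetic crossover keeps children inside the interval spanned by their parents, and clipping after mutation guarantees that every individual remains within the search bounds.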

A data-driven search space optimization mechanism is employed to enhance both the efficiency and practicality of the algorithm. This mechanism dynamically narrows the search boundaries based on historical operational data and incorporates a post-processing control layer. By applying smoothing filters, the theoretically optimal solutions are converted into stable commands that can be safely executed by physical equipment, thereby enabling engineering-ready optimization. Specifically:

1. Dynamic Search Boundaries: rather than using traditional fixed boundaries, the upper and lower limits are determined dynamically based on the 10th and 90th percentiles of historical data. This guides the algorithm to focus on regions most likely to contain high-quality solutions, improving search efficiency while ensuring the spatial plausibility of the results.

2. Smoothing and Rate-of-Change Constraints: a moving average filter is applied to the optimized instruction sequence, and the maximum allowable change in ammonia flow between consecutive time steps is enforced. Smooth flow variations are critical for maintaining stable temperature, pressure, and concentration within denitrification systems. This control effectively prevents system disturbances caused by abrupt fluctuations in optimization commands, ensuring physical feasibility and overall system stability while achieving the optimization objectives.
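Both mechanisms can be sketched in a few lines of NumPy. The example is illustrative and rests on assumptions: the moving average is a trailing window of 3 steps, the rate limit is 10 L/h per step, and the function names and the synthetic history are hypothetical, since the source does not state the window length or the maximum allowable change.

```python
import numpy as np

def dynamic_bounds(history, lo_pct=10, hi_pct=90):
    """Search limits taken from percentiles of historical ammonia flow."""
    return float(np.percentile(history, lo_pct)), float(np.percentile(history, hi_pct))

def smooth_and_limit(commands, window=3, max_step=10.0):
    """Trailing moving average followed by a rate-of-change limit."""
    cmds = np.asarray(commands, dtype=float)
    smoothed = np.array([cmds[max(0, i - window + 1): i + 1].mean()
                         for i in range(len(cmds))])
    out = smoothed.copy()
    for i in range(1, len(out)):
        step = np.clip(out[i] - out[i - 1], -max_step, max_step)
        out[i] = out[i - 1] + step           # never move more than max_step per step
    return out

history = np.arange(1, 101)                  # stand-in for historical flow (L/h)
lower, upper = dynamic_bounds(history)

raw_commands = [100.0, 100.0, 160.0, 100.0, 100.0]   # one abrupt optimizer spike
executed = smooth_and_limit(raw_commands)
```

For the synthetic history the bounds are 10.9 and 90.1 L/h. The 60 L/h spike in the raw commands is first averaged down to a plateau of 120 L/h and then approached in steps of at most 10 L/h, giving the sequence 100, 100, 110, 120, 120.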

Compared with optimization methods such as gradient descent and particle swarm optimization, GA offers strong global search capabilities, relaxed assumptions on problem structure, and robust adaptability to complex nonlinear constraints, demonstrating clear advantages in high-dimensional and multimodal industrial optimization problems. Accordingly, this study adopts GA as the foundational framework to develop CSGA, further integrating problem-specific knowledge and data-driven strategies. Building on GA’s global exploration capability, CSGA incorporates dynamic boundary adjustment, a weighted aggregation fitness function, and smoothing post-processing to effectively enhance convergence speed, solution quality, and engineering feasibility in denitrification system control. This enables the synergistic optimization of NOx emission control and economic operation.

2.3. Model Evaluation

To comprehensively validate the overall effectiveness of the proposed framework and the predictive performance of its key components, an evaluation system was established at two levels: system control performance and model prediction accuracy. At the system control level, three key performance indicators were employed: NOx compliance rate, average ammonia consumption, and exceedance ratio, providing a holistic assessment of the denitrification system in terms of environmental compliance, economic efficiency, and operational reliability. At the predictive model level, MSCA-Net and XGBoost were quantitatively evaluated using three commonly adopted metrics: coefficient of determination ($R^2$), mean absolute error (MAE), and root mean square error (RMSE). $R^2$ measures the proportion of variance in the data explained by the model, with values closer to 1 indicating a better fit. MAE quantifies the average absolute deviation between predicted and observed values; it is less sensitive to outliers than RMSE and provides a reliable measure of typical error. RMSE emphasizes larger deviations by squaring the residuals, making it sensitive to outliers. Together, MAE and RMSE provide a comprehensive evaluation of model performance across different error characteristics, with smaller values indicating higher predictive accuracy. The specific formulas for each evaluation metric are presented below.

$$R^2 = 1 - \frac{\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{N}\left(y_i - \bar{y}\right)^2}$$

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - \hat{y}_i\right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2}$$

where $N$ is the total number of samples, $y_i$ is the actual value of the $i$th sample, $\hat{y}_i$ is the predicted value of the $i$th sample, and $\bar{y}$ is the mean of the actual values.
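The three prediction metrics can be computed directly from their standard definitions, as in the NumPy sketch below. The function name and the four sample values are hypothetical and serve only to show the arithmetic.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return R2, MAE and RMSE for paired observations and predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    resid = y_true - y_pred
    ss_res = np.sum(resid ** 2)                       # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)    # total sum of squares
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "mae": float(np.mean(np.abs(resid))),
        "rmse": float(np.sqrt(np.mean(resid ** 2))),
    }

# Illustrative NOx concentrations (mg/Nm3): observed versus predicted.
metrics = regression_metrics([40.0, 45.0, 50.0, 55.0], [42.0, 44.0, 49.0, 57.0])
```

For these values the residuals are −2, 1, 1, −2, giving MAE = 1.5, RMSE = √2.5 ≈ 1.58, and R² = 1 − 10/125 = 0.92; RMSE exceeds MAE because squaring weights the two larger residuals more heavily.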