Abstract

Designing reliable fault detection and diagnosis (FDD) systems remains difficult when only limited fault-free data are available. Kolmogorov–Arnold Networks (KANs) have recently been proposed as parameter-efficient alternatives to multilayer perceptrons, yet their effectiveness for unsupervised FDD has not been systematically established. This study presents a statistically grounded comparison of Kolmogorov–Arnold Autoencoders (KAN-AEs) against an orthogonal autoencoder and a PCA baseline using the Tennessee Eastman Process benchmark. Four KAN-AE variants (EfficientKAN-AE, FastKAN-AE, FourierKAN-AE, and WavKAN-AE) were trained on fault-free data subsets ranging from 625 to 250,000 samples and evaluated over 30 independent runs. Detection performance was assessed using Bayesian signed-rank tests to estimate posterior probabilities of model superiority across fault scenarios. The results show that WavKAN-AE and EfficientKAN-AE achieve approximately 90–92% fault detection rate with only 2500 samples. In contrast, the orthogonal autoencoder requires over 30,000 samples to reach comparable performance, while PCA remains markedly below this level regardless of data size. Under data-rich conditions, Bayesian tests show that the orthogonal autoencoder matches or slightly outperforms the KAN-AEs on the more challenging fault scenarios, while remaining computationally more efficient. These findings position KAN-AEs as compact, data-efficient tools for industrial fault detection when historical fault-free data are scarce.

1. Introduction

Fault detection and diagnosis (FDD) is essential for maintaining safe, efficient, and sustainable industrial operations. Equipment malfunctions, sensor degradation, and control system anomalies can jeopardize safety, reduce productivity, and cause environmental harm. Although faults cannot always be prevented, process monitoring systems can detect deviations from normal operation and enable timely intervention [1,2]. A central challenge in industrial process monitoring lies in designing FDD methods that remain reliable under multivariate, dynamic, and drifting conditions [3]. The advent of Industry 4.0 has expanded sensor deployment across process units, producing large volumes of high-resolution multivariate data [4]. This abundance of data has shifted research toward data-driven frameworks that infer system behavior directly from measurements [5]. Data-driven frameworks encompass a wide range of approaches, from classical methods such as principal component analysis (PCA) [6,7] to machine learning models based on artificial neural networks (ANNs), including multilayer perceptrons (MLPs) [8,9], convolutional neural networks (CNNs) [10,11], and recurrent neural networks (RNNs) [5,12].

Despite their success, ANN-based FDD methods face persistent drawbacks. They typically require large datasets to achieve reliable performance, and their black-box nature hinders interpretability [13,14]. Motivated by these concerns, Liu et al. [15] introduced Kolmogorov–Arnold Networks (KANs) as parameter-efficient and potentially more transparent alternatives to traditional MLPs. In a KAN, each connection is parameterized by a learnable univariate function, and each node sums its transformed inputs [16]. This architecture appears interpretable because every transformation is explicit and can be examined individually. However, interpretability declines with depth: multilayer compositions of univariate functions generate complex, non-unique mappings that are difficult to analyze [15,17]. Although KANs only partially fulfill their interpretability promise, empirical evidence indicates that they remain effective as parameter-efficient models [18,19,20], motivating their application to supervised and unsupervised FDD tasks.

Recent research has applied KANs to unsupervised anomaly detection by integrating them with convolutional and recurrent architectures. Tang et al. [21] introduced a KAN-enhanced U-Net with mask-constrained reconstruction for surface defect localization, while Li et al. [22] proposed a temporal KAN autoencoder that incorporates domain knowledge into reconstruction error analysis for pipeline leak detection. For time-series anomaly detection, Wu et al. [23] combined CNN, LSTM, and KAN modules with residual filtering to monitor UAV sensors, achieving higher true positive and lower false positive rates than competing models. These efforts highlight the potential of KANs to enhance unsupervised anomaly detection through hybrid designs, though the capabilities and limitations of standalone KAN models in this setting remain largely underexplored.

KANs have also been applied to supervised FDD, often serving as lightweight classifiers. Rigas et al. [24] employed shallow KANs for rotating machinery fault diagnosis, achieving high F1-scores with far fewer parameters than deep network baselines. Cabral et al. [25] developed KANDiag, a KAN-based model for oil-immersed transformer fault diagnosis under imbalanced data conditions, achieving improved accuracy over standard methods. Hybrid architectures have further combined KANs with signal processing and deep feature extraction. For instance, He and Mo [26] paired wavelet time-frequency analysis with KAN classification, while Siddique et al. [27] fused wavelet coherent analysis and S-transform scalograms with a CNN-KAN model for centrifugal pump fault diagnosis, reporting near-perfect accuracy across multiple datasets. For structural and HVAC applications, Yan et al. [28] proposed KoCG-Net, a CNN–GRU–KAN model for impact-induced damage detection, outperforming baseline models in accuracy and noise robustness under data-limited conditions. Zhang et al. [29] introduced Conv-KAN for refrigerant fault diagnosis in variable refrigerant flow (VRF) systems. Collectively, these studies show that KANs act as parameter-efficient classifiers whose integration with domain-specific preprocessing or deep feature extraction yields competitive FDD performance.

Despite growing interest, current KAN-based FDD research still faces several open challenges: (1) most studies use the original B-spline formulation, while alternative basis families (e.g., wavelets, Fourier modes, Chebyshev polynomials) remain underexplored; (2) research has focused mainly on supervised tasks, with limited attention to unsupervised fault detection; (3) the impact of training set size on fault detection performance has not been systematically investigated; and (4) multi-seed statistical testing to distinguish genuine improvements from random fluctuations is rarely performed. To address these issues, we conduct a systematic evaluation of Kolmogorov–Arnold Autoencoders (KAN-AEs) for unsupervised fault detection in chemical process systems, using the Tennessee Eastman Process benchmark as a representative industrial case. We compare four KAN-AE variants, EfficientKAN-AE, FastKAN-AE, FourierKAN-AE, and WavKAN-AE, against an orthogonal autoencoder and a PCA baseline, with each model trained and evaluated independently over 30 runs.

This study contributes to advancing KAN-based fault detection in three ways: (1) the influence of functional parameterization in KAN edge functions on fault sensitivity is investigated by comparing the fault detection performance of different KAN-AE variants; (2) the data efficiency of KAN-AEs is evaluated by analyzing how fault detection performance scales with training set size, offering practical insight into the amount of data required for optimal detection; (3) model comparisons are statistically grounded through Bayesian signed-rank testing, which quantifies the probability of model superiority or practical equivalence.

The remainder of this paper is organized as follows: Section 2 introduces the theoretical background on PCA, autoencoders, and Kolmogorov–Arnold Networks. Section 3 details the experimental setup, including the Tennessee Eastman Process benchmark, model training procedures, fault detection strategy, and performance evaluation. Section 4 presents and analyzes the experimental results. Finally, Section 5 summarizes the key findings and outlines implications for industrial fault detection.

2. Preliminaries

This section outlines the modeling approaches used in this study: PCA, the conventional autoencoder, and its KAN extension.

2.1. Principal Component Analysis

Principal component analysis (PCA) is a linear dimensionality-reduction method introduced by Pearson [30] and later formalized by Hotelling [31]. It remains widely used in process monitoring due to its simplicity, interpretability, and computational efficiency [32]. PCA is commonly performed on the standardized data matrix $\mathbf{X} \in \mathbb{R}^{n \times m}$ via singular value decomposition (SVD). The loading matrix $\mathbf{P} \in \mathbb{R}^{m \times k}$ is obtained by retaining the $k$ leading right singular vectors, which define the latent subspace. The score matrix is then computed by projecting the original data onto this subspace, $\mathbf{T} = \mathbf{X}\mathbf{P}$. Thus, the data matrix can be decomposed as:

$$\mathbf{X} = \mathbf{T}\mathbf{P}^{\top} + \mathbf{E} \tag{1}$$

where $\mathbf{E}$ is the residual matrix capturing the variance unexplained by the model.
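The SVD-based PCA decomposition described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the implementation used in the study; the function names `fit_pca` and `pca_decompose` and the synthetic data are assumptions introduced for the example.

```python
import numpy as np

def fit_pca(X, k):
    """Return the loading matrix built from the k leading right singular vectors."""
    # Thin SVD of the standardized data; the rows of Vt are right singular vectors.
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    return Vt[:k].T                      # shape: (n_features, k)

def pca_decompose(X, P):
    """Split X into scores, reconstruction, and residual."""
    T = X @ P                            # scores: projection onto the latent subspace
    X_hat = T @ P.T                      # part of X explained by the model
    E = X - X_hat                        # residual (unexplained part)
    return T, X_hat, E

# Synthetic, correlated data standing in for process measurements (hypothetical).
rng = np.random.default_rng(0)
X_raw = rng.normal(size=(200, 6)) @ rng.normal(size=(6, 6))
X = (X_raw - X_raw.mean(axis=0)) / X_raw.std(axis=0)   # z-score standardization

P = fit_pca(X, k=3)
T, X_hat, E = pca_decompose(X, P)
```

Because the loadings are orthonormal, the residual is orthogonal to the retained subspace, which is what makes the residual norm usable as a monitoring statistic.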

2.2. Autoencoders

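Autoencoders (AEs) are neural networks that learn low-dimensional representations of high-dimensional data by reconstructing inputs from compressed latent variables. Compared with linear methods such as PCA or PLS, autoencoders capture the nonlinear dependencies common in chemical process data [33]. An AE consists of an encoder–decoder pair trained to minimize the mean squared reconstruction error:

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{N}\sum_{i=1}^{N} \left\lVert \mathbf{x}_i - g\left(f(\mathbf{x}_i)\right) \right\rVert_2^2 \tag{2}$$

where $f$ and $g$ denote the encoder and decoder, respectively. The orthogonal autoencoder (OAE) [8] extends this formulation by adding a penalty that enforces decorrelation among latent dimensions:

$$\mathcal{L}_{\mathrm{OAE}} = \mathcal{L}_{\mathrm{MSE}} + \lambda \left\lVert \mathbf{Z}^{\top}\mathbf{Z} - \mathbf{I} \right\rVert_F^2 \tag{3}$$

where $\mathbf{Z} \in \mathbb{R}^{N \times k}$ is the matrix of latent representations for a mini-batch of $N$ samples and $k$ latent features, $\mathbf{I} \in \mathbb{R}^{k \times k}$ is the identity matrix, and $\lambda$ controls the regularization strength.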
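As a rough illustration of how an orthogonality penalty combines with the reconstruction error, the PyTorch sketch below adds a squared Frobenius-norm penalty on the latent Gram matrix to the mean squared error. The function name `oae_loss`, the default value of `lam`, and the absence of any batch-size normalization of the Gram matrix are assumptions made for the example, not details taken from the source.

```python
import torch

def oae_loss(x, x_hat, z, lam=1e-3):
    """Reconstruction MSE plus an orthogonality penalty on the latent batch z."""
    mse = torch.mean((x - x_hat) ** 2)
    eye = torch.eye(z.shape[1], dtype=z.dtype, device=z.device)
    # Squared Frobenius norm of (Z^T Z - I); the scaling is an assumption.
    ortho = torch.sum((z.T @ z - eye) ** 2)
    return mse + lam * ortho, mse, ortho

torch.manual_seed(0)
x = torch.randn(64, 33)                        # mini-batch of 33 standardized features
q, _ = torch.linalg.qr(torch.randn(64, 25))    # latent batch with orthonormal columns

# Perfect reconstruction is used so that only the penalty term varies.
_, _, pen_orth = oae_loss(x, x, q)             # orthonormal latents: penalty near zero
_, _, pen_scaled = oae_loss(x, x, 2.0 * q)     # rescaled latents: penalty is large
```

With orthonormal latent columns the penalty vanishes, while rescaling the same latents by two gives a Gram matrix of 4I and a penalty of 9 per latent dimension, which shows that the term constrains both correlation and scale.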

2.3. Kolmogorov–Arnold Networks

The Kolmogorov–Arnold Network (KAN) is a neural architecture inspired by the Kolmogorov–Arnold representation theorem [15]. Unlike conventional neural networks, which use fixed activation functions at nodes, KANs employ learnable activation functions on edges. A KAN layer transforms its input by applying learnable univariate functions to each feature and summing the results. Each connection from neuron $j$ in layer $\ell - 1$ to neuron $i$ in layer $\ell$ is associated with a function $\phi_{i,j}^{(\ell)}$. The output of neuron $i$ is then given by:

$$x_i^{(\ell)} = \sum_{j=1}^{n_{\ell-1}} \phi_{i,j}^{(\ell)}\left(x_j^{(\ell-1)}\right) \tag{4}$$

where $n_{\ell-1}$ is the number of neurons in layer $\ell - 1$ and each $\phi_{i,j}^{(\ell)}$ is parameterized using a smooth basis expansion (e.g., B-splines, Gaussian RBFs). In this work, we focus on four representative KAN variants: Efficient-KAN, Fast-KAN, Fourier-KAN, and Wav-KAN, which are detailed in Appendix A.
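To make the edge-wise structure concrete, the following PyTorch sketch implements a single KAN-style layer whose edge functions are linear combinations of fixed Gaussian radial basis functions. It is an illustrative simplification and not one of the four variants evaluated in this study; the class name `RBFKANLayer`, the grid range, the number of basis functions, and the initialization scale are all assumptions.

```python
import torch
from torch import nn

class RBFKANLayer(nn.Module):
    """KAN-style layer: one learnable univariate function per (input, output) edge."""

    def __init__(self, in_features, out_features, num_basis=8,
                 grid_min=-2.0, grid_max=2.0):
        super().__init__()
        # Fixed, evenly spaced RBF centers shared by all edges (assumed design).
        self.register_buffer("centers", torch.linspace(grid_min, grid_max, num_basis))
        self.width = (grid_max - grid_min) / (num_basis - 1)
        # coeffs[i, j, b] is the weight of basis function b on the edge j -> i.
        self.coeffs = nn.Parameter(
            0.1 * torch.randn(out_features, in_features, num_basis))

    def forward(self, x):
        # x: (batch, in_features) -> basis: (batch, in_features, num_basis)
        basis = torch.exp(-((x.unsqueeze(-1) - self.centers) / self.width) ** 2)
        # Evaluate every edge function and sum over the inputs j of each node i.
        return torch.einsum("njb,ijb->ni", basis, self.coeffs)

torch.manual_seed(0)
layer = RBFKANLayer(in_features=33, out_features=25)
x = torch.randn(16, 33)
y = layer(x)                                   # shape: (16, 25)
```

Stacking two such layers, one mapping inputs to the latent space and one mapping back, yields an autoencoder built only from KAN-style layers.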

2.4. Kolmogorov–Arnold Autoencoders

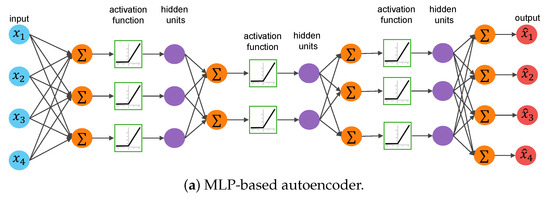

Kolmogorov–Arnold autoencoders (KAN-AEs) extend conventional autoencoders by replacing the dense layers in the encoder-decoder pair with KAN layers, as illustrated in Figure 1. Each connection in a KAN-AE learns its own transformation, allowing complex nonlinearities to be captured without deeper or wider architectures. KAN-AEs can be fully KAN-based [34] or hybrid, combining KAN and MLP layers [35]. In this study, all KAN-AEs employ only KAN layers, ensuring that performance differences can be attributed to the KAN architecture.

Figure 1.

Comparison of (a) MLP-based and (b) KAN-based autoencoders. KAN-based models replace fixed activation functions with learnable univariate transformations along each edge.

3. Methodology

This section presents the methodological framework used to configure, train, and evaluate the autoencoder models developed in this study. The analysis compares conventional and KAN-based autoencoders for unsupervised fault detection under matched conditions. All models were evaluated on the Tennessee Eastman Process (TEP), a widely adopted benchmark that provides a diverse and representative suite of industrial fault scenarios.

3.1. Case Study: The Tennessee Eastman Process

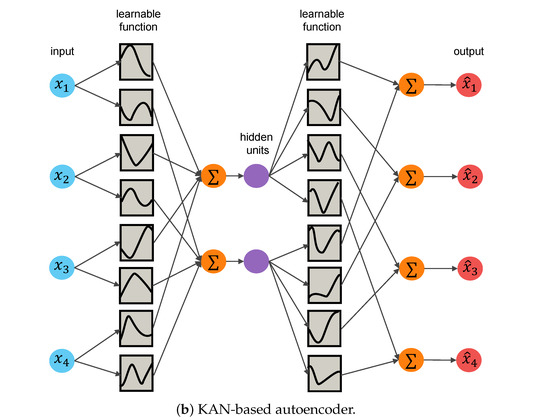

The Tennessee Eastman Process (TEP) serves as a widely used benchmark for process monitoring, providing a realistic yet controlled representation of a chemical plant. Originally introduced by Downs and Vogel [36], it models a production system comprising eight chemical components and five interconnected process units (Figure 2). The process involves two primary exothermic reactions ($\mathrm{A} + \mathrm{C} + \mathrm{D} \rightarrow \mathrm{G}$ and $\mathrm{A} + \mathrm{C} + \mathrm{E} \rightarrow \mathrm{H}$) and two secondary byproduct reactions ($\mathrm{A} + \mathrm{E} \rightarrow \mathrm{F}$ and $3\mathrm{D} \rightarrow 2\mathrm{F}$), all occurring in the presence of an inert component ($\mathrm{B}$).

Figure 2.

Tennessee Eastman Process flowsheet.

Researchers have developed multiple TEP implementations with different control strategies [37,38,39], leading to several publicly available datasets. In this study, we use the dataset of Rieth et al. [40] to train the models under varying data availability conditions, and the fault dataset of Russell et al. [41] to evaluate fault detection performance. The training set comprises 500 simulation runs under normal operating conditions, each containing 500 samples. The testing set includes one simulation run for each fault scenario, with measurements recorded every three minutes over a 48-h period (960 samples per run). In each fault simulation, the first 160 samples correspond to normal operation, and the remaining 800 samples represent the abnormal state. The dataset contains 11 manipulated variables, XMV(1)–XMV(11), and 41 process measurements, XMEAS(1)–XMEAS(41).

The benchmark includes 21 faults spanning diverse disturbance types. Table 1 summarizes each fault along with its identifier, disturbance type, and affected variable or mechanism. Faults 16–20 were labeled as unknown in the original benchmark; Bathelt et al. [42] later clarified the mechanisms for Faults 16–19, while Fault 20 was analyzed in our previous work [43], where its disturbance mechanism and process effects were described.

Table 1.

Summary of fault scenarios in the TEP benchmark, as reported in [36,41,42].

3.2. Data Preprocessing

We selected 33 process variables from the benchmark data. Variables XMEAS(23) to XMEAS(41), corresponding to analyzer measurements in the reactor feed, purge stream, and final product stream, were excluded because analyzer data are rarely available in real time [8]. Each observation corresponds to a single time step, and no temporal windowing was applied. To assess the data efficiency of the autoencoder models, we generated thirteen fault-free subsets ranging from 625 to 250,000 samples (see Table 2). Each subset was divided using an 80/20 training-validation split. To preserve temporal structure, the data were split at the simulation level: entire simulation runs were randomly assigned to training and validation, and the last included run (in either partition) was truncated when necessary to match the target number of samples.
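The simulation-level split can be sketched as follows. This is one possible reading of the procedure, assuming that whole runs are drawn in random order, that the last run of each partition is truncated to hit the target size, and that a truncated run's leftover samples are discarded; the function name `split_by_run` and the toy run layout are hypothetical.

```python
import numpy as np

def split_by_run(run_ids, n_total, train_frac=0.8, seed=0):
    """Return (train_idx, val_idx), assigning whole simulation runs to each part."""
    rng = np.random.default_rng(seed)
    runs = rng.permutation(np.unique(run_ids))      # random run order
    n_train = int(round(train_frac * n_total))
    targets = (n_train, n_total - n_train)
    parts, cursor = [], 0
    for target in targets:
        chunks, remaining = [], target
        while remaining > 0:
            if cursor >= len(runs):
                raise ValueError("not enough simulation runs for the requested size")
            # Rows of the next run, kept in time order and truncated if needed.
            rows = np.flatnonzero(run_ids == runs[cursor])[:remaining]
            chunks.append(rows)
            remaining -= rows.size
            cursor += 1
        parts.append(np.concatenate(chunks))
    return parts[0], parts[1]

# Toy layout: 20 fault-free runs of 500 samples each (the study uses 500 runs).
run_ids = np.repeat(np.arange(20), 500)
train_idx, val_idx = split_by_run(run_ids, n_total=625, seed=0)
```

For the smallest subset of 625 samples, this gives one complete run of 500 samples for training and the first 125 samples of a different run for validation, so no run contributes to both partitions.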

Table 2.

Subset sizes (prior to train/validation split) used for training autoencoder variants.

A z-score normalization scaler was fitted to the training set and applied consistently to both data partitions. The fitted scaler was saved and reused during inference to ensure consistent preprocessing. To support reproducibility, fixed random seeds were used for data partitioning and shared across model variants to ensure matched training conditions. Additional implementation details are provided in Section 3.4.
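A minimal sketch of this preprocessing step is given below: the scaler statistics are estimated on the training partition only, stored, and reloaded before being applied to other data. The helper names and the in-memory buffer that stands in for a file on disk are assumptions for illustration; the storage format actually used is not stated in the text.

```python
import io
import numpy as np

def fit_scaler(train):
    """Estimate per-feature mean and standard deviation on the training set only."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std[std == 0] = 1.0              # guard against constant features
    return mean, std

def apply_scaler(x, mean, std):
    """Apply z-score normalization with previously fitted statistics."""
    return (x - mean) / std

rng = np.random.default_rng(0)
train = rng.normal(loc=50.0, scale=5.0, size=(500, 33))   # synthetic stand-in data
val = rng.normal(loc=50.0, scale=5.0, size=(125, 33))

mean, std = fit_scaler(train)
train_scaled = apply_scaler(train, mean, std)

# Persist the fitted statistics and reload them, as would be done at inference.
buffer = io.BytesIO()
np.savez(buffer, mean=mean, std=std)
buffer.seek(0)
saved = np.load(buffer)
val_scaled = apply_scaler(val, saved["mean"], saved["std"])
```

Fitting on the training partition alone keeps validation and test statistics out of the model, so the validation loss remains an honest estimate.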

3.3. Process Monitoring Models

All models process input samples with 33 standardized features (see Section 3.2), following the benchmarking setup of Cacciarelli and Kulahci [8]. Consistent with that study, the PCA baseline retains 14 principal components, while the autoencoder models compress the inputs into a 25-dimensional latent space. The autoencoders differ only in the parameterization of their encoder–decoder mappings and are trained to minimize the mean squared reconstruction error (Equation (2)), with additional regularization terms applied according to their architecture. Specifically, the OAE employs orthogonality regularization (Equation (3)), while EfficientKAN-AE introduces both L1 and entropy-based penalties (Equations (A3) and (A4)). Additionally, decoupled weight decay [44] is applied across all models as a shared regularization strategy. Table 3 summarizes the architectural and regularization settings.

Table 3.

Summary of process monitoring model configurations.

3.4. Autoencoders Training Procedure

Each AE variant was trained independently on each subset of fault-free data (see Section 3.2), using 30 random seeds per configuration to capture stochastic effects from weight initialization and mini-batch sampling. This setup produced 390 independent training runs per model type.

Training employed the AdamW optimizer with a mini-batch size and a maximum of epochs. Mixed-precision computation, enabled through PyTorch (v2.5.1+cu121) and its AMP module, accelerated training and reduced memory usage. A ReduceLROnPlateau scheduler decreased the learning rate after five validation epochs without improvement, and early stopping was triggered after 15 epochs without improvement. These settings were fixed across all AE variants. The initial learning rate , weight decay, scheduler reduction factor, and regularization coefficients were tuned for each AE variant using the Tree-Structured Parzen Estimator (TPE) algorithm [45], implemented through the Optuna framework [46]. Hyperparameter optimization used a fixed subset of 625 fault-free samples with an 80/20 training-validation split. Because fault labels were not used during training, the validation reconstruction loss served as an unsupervised proxy for detection performance. The optimized hyperparameters for each model are summarized in Table 4.
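The training procedure can be outlined as the loop below, which combines AdamW, a ReduceLROnPlateau scheduler, and early stopping on the validation reconstruction loss. It is a sketch under stated assumptions: the learning rate, weight decay, reduction factor, batch size, and epoch limit are placeholder values rather than the tuned settings; mixed precision is omitted; restoring the best weights is an assumption; and the small MLP stands in for any of the autoencoder variants.

```python
import copy
import torch
from torch import nn

def train_autoencoder(model, train_x, val_x, lr=1e-3, weight_decay=1e-2,
                      factor=0.5, batch_size=64, max_epochs=20, seed=0):
    """Train on reconstruction MSE; return the best validation loss."""
    torch.manual_seed(seed)
    opt = torch.optim.AdamW(model.parameters(), lr=lr, weight_decay=weight_decay)
    # PyTorch counts `patience` as the number of stale epochs it tolerates
    # before reducing the learning rate.
    sched = torch.optim.lr_scheduler.ReduceLROnPlateau(
        opt, mode="min", factor=factor, patience=5)
    loss_fn = nn.MSELoss()
    best_val, best_state, stale = float("inf"), None, 0
    for _ in range(max_epochs):
        model.train()
        perm = torch.randperm(train_x.shape[0])
        for start in range(0, len(perm), batch_size):
            batch = train_x[perm[start:start + batch_size]]
            opt.zero_grad()
            loss = loss_fn(model(batch), batch)
            loss.backward()
            opt.step()
        model.eval()
        with torch.no_grad():
            val_loss = loss_fn(model(val_x), val_x).item()
        sched.step(val_loss)
        if val_loss < best_val:
            best_val, stale = val_loss, 0
            best_state = copy.deepcopy(model.state_dict())
        else:
            stale += 1
            if stale >= 15:          # early stopping after 15 stale epochs
                break
    if best_state is not None:
        model.load_state_dict(best_state)
    return best_val

# Synthetic low-rank data and a small MLP autoencoder (both hypothetical).
torch.manual_seed(0)
data = torch.randn(500, 5) @ torch.randn(5, 33) + 0.1 * torch.randn(500, 33)
train_x, val_x = data[:400], data[400:]
model = nn.Sequential(nn.Linear(33, 25), nn.Tanh(), nn.Linear(25, 33))
with torch.no_grad():
    initial_val = nn.functional.mse_loss(model(val_x), val_x).item()
best_val = train_autoencoder(model, train_x, val_x)
```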

Table 4.

Optimized training hyperparameters for each autoencoder variant (via TPE).

Optimizing the hyperparameters for each architecture ensured that all models were evaluated under comparable and near-optimal conditions, enabling a fair and meaningful performance comparison.

3.5. Fault Detection

PCA-based models and autoencoders are widely used for fault detection in industrial systems, particularly when labeled fault data are scarce. Trained solely on normal process data, these models learn compact latent representations that enable accurate reconstruction of fault-free observations. Faults disrupt these learned patterns, leading to elevated reconstruction errors that signal abnormal behavior.

Two statistics are commonly employed in fault detection: the squared prediction error (SPE), or Q-statistic, and Hotelling’s $T^2$ statistic. The SPE measures the difference between an input and its reconstruction, whereas Hotelling’s $T^2$ quantifies deviations of latent features from the center of the fault-free distribution. Although both can be combined to improve sensitivity and robustness, this work focuses on the SPE.

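For an input sample $\mathbf{x}$, the SPE is defined as:

$$\mathrm{SPE}(\mathbf{x}) = \left\lVert \mathbf{x} - \hat{\mathbf{x}} \right\rVert_2^2 \tag{5}$$

where $\hat{\mathbf{x}}$ is the reconstruction of $\mathbf{x}$. High SPE values indicate poor reconstruction, suggesting the presence of a fault.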

A detection threshold is obtained using kernel density estimation (KDE), a nonparametric approach that avoids assumptions about the distribution of SPE values and flexibly models nonlinear reconstruction behavior. The estimated probability density function is given by:

$$\hat{f}(s) = \frac{1}{n h}\sum_{i=1}^{n} K\left(\frac{s - s_i}{h}\right) \tag{6}$$

where $s_i$ are the observed SPE values under normal conditions, $K(\cdot)$ is a Gaussian kernel function, and $h$ is the bandwidth determined via Scott’s Rule [47]:

where $\sigma$ is the standard deviation of the SPE values and $n$ is the number of training samples.

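The detection threshold $\mathrm{SPE}_{\alpha}$ corresponds to the $(1-\alpha)$-quantile of the estimated density $\hat{f}$:

$$\int_{-\infty}^{\mathrm{SPE}_{\alpha}} \hat{f}(s)\,\mathrm{d}s = 1 - \alpha \tag{8}$$

where $\alpha$ is the significance level. In this study, we set $\alpha = 0.05$, corresponding to an expected false alarm rate of approximately 5% under normal operating conditions.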
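The thresholding procedure can be sketched in NumPy as follows: compute the SPE on fault-free data, build a Gaussian KDE, integrate it numerically, and read off the quantile. The bandwidth here is taken as the sample standard deviation times n^(-1/5), which is one common convention for Scott's rule; the exact constant used in the study is not recoverable from the text and should be treated as an assumption, as should the function names and the grid-based integration.

```python
import numpy as np

def spe(x, x_hat):
    """Squared prediction error for each row of x."""
    return np.sum((x - x_hat) ** 2, axis=1)

def kde_threshold(spe_normal, alpha=0.05, grid_size=2048):
    """(1 - alpha)-quantile of a Gaussian KDE fitted to fault-free SPE values."""
    n = spe_normal.size
    h = spe_normal.std(ddof=1) * n ** (-1 / 5)      # assumed Scott-type bandwidth
    grid = np.linspace(spe_normal.min() - 4 * h, spe_normal.max() + 4 * h, grid_size)
    # Evaluate the KDE on the grid: average of Gaussian bumps centered on the data.
    u = (grid[:, None] - spe_normal[None, :]) / h
    pdf = np.exp(-0.5 * u ** 2).sum(axis=1) / (n * h * np.sqrt(2 * np.pi))
    # Numerical CDF, renormalized so that it ends at exactly 1.
    cdf = np.cumsum(pdf) * (grid[1] - grid[0])
    cdf /= cdf[-1]
    return grid[np.searchsorted(cdf, 1 - alpha)]

# Synthetic fault-free data: a zero reconstruction gives chi-square-like SPE values.
rng = np.random.default_rng(0)
x_train = rng.normal(size=(2000, 33))
spe_train = spe(x_train, np.zeros_like(x_train))
threshold = kde_threshold(spe_train, alpha=0.05)
exceed_rate = np.mean(spe_train > threshold)        # should be close to alpha
```

Since the threshold is a quantile of the fault-free SPE distribution, the share of fault-free samples above it approximates the nominal false alarm rate.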

3.6. Monitoring Performance Evaluation

Each trained model was evaluated on a fixed test set containing both fault-free and faulty samples. A sample was classified as normal or faulty according to the threshold $\mathrm{SPE}_{\alpha}$; samples with $\mathrm{SPE} > \mathrm{SPE}_{\alpha}$ are flagged as faulty.

Fault detection performance was quantified using two standard metrics: the fault detection rate (FDR) and the false alarm rate (FAR), defined as:

$$\mathrm{FDR} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}} \tag{9}$$

$$\mathrm{FAR} = \frac{\mathrm{FP}}{\mathrm{FP} + \mathrm{TN}} \tag{10}$$

where TP, FP, FN, and TN denote the number of true positives, false positives, false negatives, and true negatives, respectively. FDR represents the proportion of actual faults correctly detected, while FAR measures the frequency of false alarms. Together, these metrics describe the trade-off between sensitivity and specificity. Robust detection requires models that maintain high FDR with consistently low FAR.
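As a worked example of the two metrics, the sketch below evaluates a single test run with the layout described in Section 3.1 (160 fault-free samples followed by 800 faulty ones). The SPE values and the threshold are made up so that the result can be checked by hand, and the function name `detection_metrics` is hypothetical.

```python
import numpy as np

def detection_metrics(spe_values, is_fault, threshold):
    """Return (FDR, FAR) for one test run given per-sample SPE values."""
    alarm = spe_values > threshold
    tp = np.sum(alarm & is_fault)        # faulty samples that raised an alarm
    fn = np.sum(~alarm & is_fault)       # faulty samples that were missed
    fp = np.sum(alarm & ~is_fault)       # fault-free samples that raised an alarm
    tn = np.sum(~alarm & ~is_fault)      # fault-free samples correctly accepted
    return tp / (tp + fn), fp / (fp + tn)

# One test run: 160 normal samples followed by 800 faulty samples.
is_fault = np.arange(960) >= 160
spe_values = np.where(is_fault, 10.0, 1.0)
spe_values[:8] = 10.0                    # 8 false alarms in the normal segment
spe_values[160:240] = 1.0                # 80 missed detections in the fault segment

fdr, far = detection_metrics(spe_values, is_fault, threshold=5.0)
```

Here 720 of the 800 faulty samples exceed the threshold, giving an FDR of 0.90, and 8 of the 160 fault-free samples do, giving a FAR of 0.05.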

3.7. Computational Efficiency Benchmark

The computational efficiency of the nonlinear monitoring models in Table 3 was evaluated on the TEP dataset using the training configuration described in Section 3.4, including each model’s respective loss function and regularization terms. A dedicated PyTorch benchmarking routine was executed to measure the average forward and backward pass durations per batch on both CPU and GPU devices. Benchmarks were conducted on a workstation equipped with a 12th Gen Intel® Core™ i9-12900H processor (20 logical cores, 2.5 GHz, 16 GB RAM) and an NVIDIA® GeForce RTX 3070 Ti Laptop GPU (8 GB memory).

Each benchmark included 50 warm-up batches to stabilize hardware utilization, followed by 500 timed iterations. Forward and backward durations were recorded using CUDA events on the GPU and wall-clock timing on the CPU, and mean values with 95% confidence intervals were computed across timed batches to quantify runtime variability. Because KAN-based architectures are known to be computationally heavier than conventional neural networks [48,49,50] due to the evaluation of multiple basis functions along each network edge, performance is reported in terms of slowdown rather than speed-up. The OAE served as the baseline, and relative slowdown factors were computed by dividing each model’s mean forward or backward pass duration by that of the OAE on the same device. The resulting slowdown ratios and timing statistics are presented and discussed in Section 4.3.
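The benchmarking logic (warm-up, timed iterations, mean with a 95% confidence interval, and a slowdown ratio against a baseline) can be sketched on the CPU as follows. The two matrix products are stand-in workloads rather than the models of this study, and the normal-approximation interval (1.96 standard errors) is an assumption, since the text does not state how the interval was computed. GPU timing with CUDA events is not shown.

```python
import time
import numpy as np

def benchmark(fn, warmup=50, iters=500):
    """Return the mean duration of fn() and the half-width of its 95% CI."""
    for _ in range(warmup):              # warm-up iterations are not timed
        fn()
    times = np.empty(iters)
    for i in range(iters):
        start = time.perf_counter()      # wall-clock timing, as used on the CPU
        fn()
        times[i] = time.perf_counter() - start
    mean = times.mean()
    half_width = 1.96 * times.std(ddof=1) / np.sqrt(iters)
    return mean, half_width

# Stand-in workloads: a light baseline and a heavier candidate (hypothetical).
rng = np.random.default_rng(0)
a_small = rng.normal(size=(64, 64))
a_large = rng.normal(size=(256, 256))

baseline_mean, baseline_ci = benchmark(lambda: a_small @ a_small)
heavy_mean, heavy_ci = benchmark(lambda: a_large @ a_large)

# Slowdown ratio: candidate duration divided by the baseline on the same device.
slowdown = heavy_mean / baseline_mean
```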

3.8. Bayesian Signed-Rank Test for Model Comparison

While the FDR summarizes average performance, it does not reveal whether performance differences between models are statistically meaningful. To address this, we applied the Bayesian signed-rank test proposed by Benavoli et al. [51,52]. This method estimates the posterior probability that one model is practically superior to another across multiple fault scenarios, accounting for uncertainty in the results and defining a region of practical equivalence (ROPE) that treats small performance differences as negligible. In this study, the ROPE radius was set to , corresponding to a absolute change in FDR.

For each model pair (A, B), the test yields three posterior probabilities: $P(\mathrm{A} > \mathrm{B})$ (model A better), $P(\mathrm{A} \approx \mathrm{B})$ (practically equivalent), and $P(\mathrm{B} > \mathrm{A})$ (model B better). These probabilities provide a probabilistic assessment of relative model performance rather than a binary significance outcome.
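A compact Monte Carlo sketch of a Bayesian signed-rank comparison is shown below. It follows the general Dirichlet-process construction described by Benavoli et al.: a pseudo-observation at zero, Dirichlet-sampled weights, and pairwise sums compared against the ROPE. The ROPE value of 0.01, the prior strength of 0.5, the number of posterior samples, and the omission of special handling for ties on the ROPE boundary are assumptions for the example, not the settings used in this study.

```python
import numpy as np

def bayesian_signed_rank(fdr_a, fdr_b, rope=0.01, prior_strength=0.5,
                         n_samples=20000, seed=0):
    """Posterior probabilities that A is better, equivalent to, or worse than B."""
    rng = np.random.default_rng(seed)
    # Paired differences across fault scenarios, plus a pseudo-observation at 0.
    diff = np.concatenate(([0.0], np.asarray(fdr_a) - np.asarray(fdr_b)))
    conc = np.ones(diff.size)
    conc[0] = prior_strength
    w = rng.dirichlet(conc, size=n_samples)              # (n_samples, n + 1)
    sums = diff[:, None] + diff[None, :]                 # pairwise sums z_i + z_j
    a_mask = (sums > 2 * rope).astype(float)
    b_mask = (sums < -2 * rope).astype(float)
    # Weighted mass of pairs falling to the right or left of the ROPE.
    theta_a = np.einsum("si,ij,sj->s", w, a_mask, w)
    theta_b = np.einsum("si,ij,sj->s", w, b_mask, w)
    theta_rope = 1.0 - theta_a - theta_b
    # Each posterior sample votes for the region with the largest mass.
    winner = np.argmax(np.stack([theta_a, theta_rope, theta_b], axis=1), axis=1)
    p = np.bincount(winner, minlength=3) / n_samples
    return {"a_better": p[0], "equivalent": p[1], "b_better": p[2]}

# Hypothetical per-fault FDRs (as fractions) for two models over 18 scenarios.
rng = np.random.default_rng(1)
fdr_b = rng.uniform(0.6, 0.95, size=18)
fdr_a = fdr_b + 0.05                     # model A better by 5 points everywhere

same = bayesian_signed_rank(fdr_b, fdr_b)
better = bayesian_signed_rank(fdr_a, fdr_b)
```

When the two inputs are identical, all posterior mass falls inside the ROPE. A uniform five-point advantage that exceeds the ROPE puts nearly all mass on model A being better.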

4. Results and Discussion

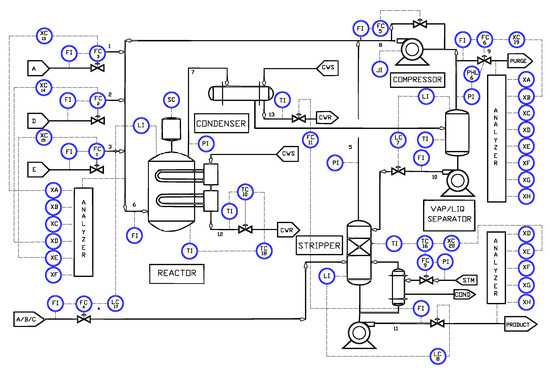

4.1. Performance Scaling with Training Set Size

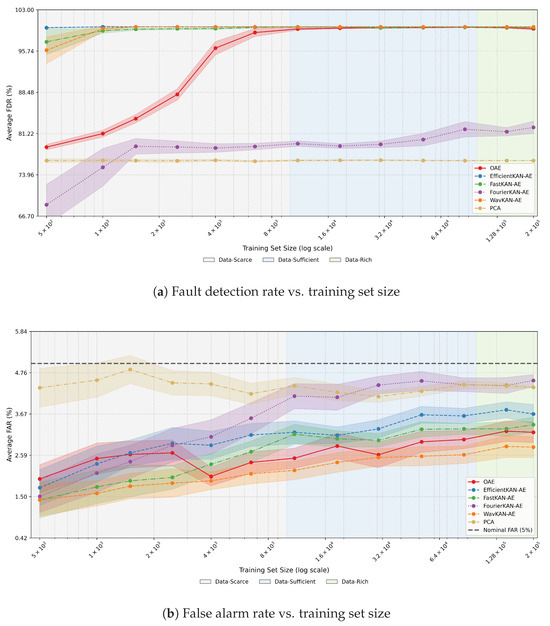

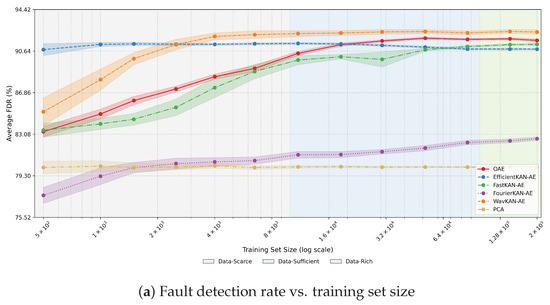

To understand how model performance varies across both fault type and data availability, we follow the classification proposed by Sun et al. [5], which distinguishes three fault categories: controllable, back-to-control, and uncontrollable. High FDR values are desirable for back-to-control and uncontrollable faults, as they indicate timely detection of abnormal behavior. In contrast, elevated FDRs for controllable faults may signal excessive sensitivity to normal fluctuations and result in unnecessary process interventions. An effective monitoring system should maintain the FDR near the nominal false alarm level for controllable faults while achieving high detection rates for the other two categories. Building on this distinction, training set sizes () were grouped into three regimes: data-scarce (), data-sufficient (), and data-rich (). For each model, FDRs were first averaged across faults within each category and then across training runs. Figure 3, Figure 4 and Figure 5 summarize these results, with shaded 95% confidence intervals representing variability across training runs.

Figure 3.

Influence of training sample size on monitoring performance for controllable faults.

Figure 4.

Influence of training sample size on monitoring performance for back-to-control faults.

Figure 5.

Influence of training sample size on monitoring performance for uncontrollable faults.

4.1.1. Controllable Faults

For controllable faults, an ideal monitoring system should maintain a low and stable fault detection rate, as excessive alarms can lead to unnecessary interventions. Figure 3a summarizes FDR trends, indicating that the average rate for nonlinear models remained within a narrow 4–5.5% range across all training regimes. This consistency shows that increasing the amount of fault-free data had little influence on sensitivity to minor, self-correcting disturbances. Among nonlinear models, OAE displayed nearly constant performance, whereas EfficientKAN-AE showed a slight decline in FDR with larger datasets. FastKAN-AE achieved the strongest improvement, reaching an average FDR of 4.6% as the training set size increased. In contrast, WavKAN-AE and FourierKAN-AE exhibited small FDR increases, implying mild over-sensitivity to nominal process variability.

The false alarm rates (see Figure 3b) complement these observations. EfficientKAN-AE and FourierKAN-AE consistently achieved the lowest FAR values (4–4.5%), while OAE and WavKAN-AE maintained slightly higher yet acceptable rates around 5%. Notably, FastKAN-AE exhibited a moderate rise in FAR at larger training sizes, suggesting a conservative detection bias. The PCA baseline performed worst on both metrics, yielding the highest FDR (≈7.6%) and FAR (≈5.7%) at higher data volumes. This underscores the advantage of nonlinear models in distinguishing normal fluctuations from genuine fault conditions.

4.1.2. Back-to-Control Faults

For back-to-control faults, high fault detection rates are desirable, as they reflect the model’s ability to detect persistent process deviations. Detection performance improved steadily with increasing training set size (Figure 4a). Among KAN-AE variants, EfficientKAN-AE and WavKAN-AE reached near-perfect FDRs with as few as 1000 samples and maintained this level thereafter, highlighting their strong data efficiency in capturing deviations in manipulated variables. FastKAN-AE followed a similar trajectory but required larger data volumes to reach peak performance, indicating reduced data efficiency in the data-scarce regime. The OAE model improved more gradually, attaining high FDRs only in the data-sufficient regime, suggesting that conventional orthogonal regularization benefits from larger sample diversity. In contrast, FourierKAN-AE consistently underperformed, with FDR values plateauing below those of the other models; this may result from the limited flexibility of its low-order global basis functions. Finally, the PCA baseline achieved a consistent, albeit limited, performance of approximately 76% regardless of data volume. Taken together, the results show that, with the exception of FourierKAN-AE, the KAN-AEs detect back-to-control faults reliably with substantially fewer training samples than the OAE, whereas PCA never reaches comparable detection rates. This supports their superior data efficiency for this fault category.

False alarm rates for all nonlinear models exhibited a modest upward trend with increasing training set size but remained below the nominal 5% FAR, staying within 2–4% (Figure 4b). This indicates that the FDR gains did not come at the cost of an undue increase in false positives. The PCA baseline maintained a FAR of approximately 4.5%, which is acceptable but consistently higher than that of the nonlinear models.

4.1.3. Uncontrollable Faults

In the case of uncontrollable faults, high detection rates are essential, as these events represent severe process deviations that cannot self-correct. Detection performance improved consistently with increasing training set size across all nonlinear models, as illustrated in Figure 5a, although the rate and extent of improvement varied. EfficientKAN-AE achieved the highest FDR at the smallest training set size and maintained stable performance throughout, with only a minor dip in the data-sufficient regime, possibly due to over-regularization. WavKAN-AE started lower but improved rapidly, reaching over 92% FDR with just 2500 training samples, which demonstrates strong data efficiency and effective wavelet-based feature extraction. FastKAN-AE showed a slower response, with higher variability across runs and strong performance only in the data-rich regime. OAE improved gradually, achieving competitive FDRs in the data-sufficient regime but never surpassing WavKAN-AE even at larger data volumes. FourierKAN-AE consistently lagged behind, reflecting the limited expressivity of its global basis representation. The PCA baseline showed stable but much lower FDR (≈80%) across all training sizes. Overall, KAN-AEs demonstrated superior data efficiency in detecting uncontrollable faults.

These high FDR values did not lead to a substantial increase in false positives (see Figure 5b). All models maintained acceptable FAR values, generally below the nominal 5% threshold. The nonlinear architectures showed stable performance within the 2–3% range, except for FastKAN-AE, which exhibited a mild increase approaching 5% in the largest data regime. PCA yielded slightly higher values of around 4–5.5%. These results confirm that all models, particularly the KAN-AE variants, effectively distinguished severe faults from nominal operation.

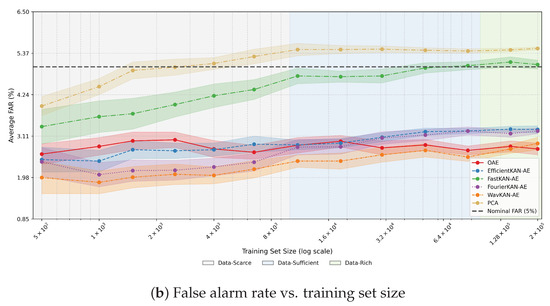

4.2. Model Behavior Under Data Scarcity (1000 Samples)

The previous analysis of monitoring performance trends averaged fault detection rate (FDR) values across the fault clusters defined by Sun et al. [5]. While this approach is useful for identifying overall model behavior as the amount of training data increases, it conceals model-specific differences among individual fault scenarios. In practice, assessing the robustness and generalization of monitoring models requires understanding which specific faults each model can detect reliably and which remain challenging. Accordingly, this subsection examines model behavior under a data-scarce regime using 1000 fault-free training samples. The corresponding FDR results for each model and fault scenario are summarized in Table 5. This configuration closely matches the dataset sizes employed in prior TEP monitoring studies and provides a representative benchmark for evaluating model performance when only limited fault-free data are available. As controllable faults were previously shown to maintain fault detection rates close to the nominal false-alarm level across all models, they are not further analyzed here, and the discussion focuses instead on the back-to-control and uncontrollable fault categories, where performance differences are more pronounced.

Table 5.

Fault Detection Rate (FDR) per fault for each model (1000 training samples). Values are reported as mean ± 95% confidence interval, expressed as percentages.

Within the step fault category, all models achieved detection rates above 98%, demonstrating consistent detection of large, steady-state deviations. Fault 5, however, proved considerably more challenging (see Figure 6a). Although the control system restored the measured process variables to their nominal values, the cooling water valve position remained outside the normal operating region. Models that do not capture the coupling between manipulated and controlled variables failed to recognize this deviation, as seen in PCA, OAE, and FourierKAN-AE. In contrast, EfficientKAN-AE, FastKAN-AE, and WavKAN-AE achieved near-perfect detection (98–100%), indicating that their nonlinear parameterization captured subtle correlations between valve position and the system’s thermal balance.

Figure 6.

Temporal evolution of SPE values across models under Faults 5, 16, and 19. All models were trained on 1000 fault-free samples.

Faults arising from random deviations in process parameters or from sticking valves constitute one of the most challenging categories for process monitoring. Their detectability depends strongly on how the disturbance propagates through the process. When the induced variability drives key measured variables far outside the normal operating region, the fault becomes easy to detect despite the fluctuations in the monitoring index, as observed for Faults 12, 14, 17, and 18. In other cases, where the disturbance is partially compensated by control action or results from valve stiction, the monitoring index often fluctuates around or even drops below the detection threshold during the fault window, leading to missed detections as depicted in Figure 6b,c. This behavior reflects the inability of static reconstruction models to capture temporal dependencies and to respond consistently to delayed or fluctuating fault signatures. The limitation is not unique to KAN-AEs but applies broadly to all models that lack explicit temporal dynamics. Incorporating recurrent or sequence-aware architectures, such as RNNs or LSTMs, could mitigate this issue by embedding temporal context into the latent representation. Within the static model class, EfficientKAN-AE consistently outperformed the other architectures, demonstrating greater sensitivity to time-varying disturbances.

4.3. Computational Efficiency

Table 6 summarizes the average forward and backward pass durations per training batch and slowdown ratios for all autoencoder variants on CPU and GPU. Slowdown ratios indicate the factor by which computation times increase relative to the OAE baseline on the same device. As expected, using a basis expansion to define KAN edge functions (see Appendix A) increases computational cost in KAN-AEs compared with the MLP-based OAE. On the CPU, slowdown ratios range from 2 to 7×, while on the GPU they range from 1.4 to 5.6× depending on the variant. FastKAN-AE and FourierKAN-AE exhibit the lowest overheads (GPU slowdowns < 2.5×), whereas EfficientKAN-AE and WavKAN-AE are notably slower.
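As a minimal sketch of how the quantities in Table 6 relate to each other, the snippet below computes a mean with a 95% confidence interval and a slowdown ratio relative to the OAE baseline. The timing values are hypothetical and are not taken from Table 6, and the normal-approximation interval is an assumption, since the exact interval construction is not specified here.

```python
import numpy as np

def mean_ci95(samples):
    """Mean and half-width of a normal-approximation 95% confidence interval."""
    samples = np.asarray(samples, dtype=float)
    half_width = 1.96 * samples.std(ddof=1) / np.sqrt(samples.size)
    return samples.mean(), half_width

def slowdown_ratios(times_ms, baseline="OAE"):
    """Ratio of each model's mean per-batch time to the baseline mean."""
    base_mean, _ = mean_ci95(times_ms[baseline])
    return {name: mean_ci95(t)[0] / base_mean for name, t in times_ms.items()}

# Hypothetical per-batch timings in milliseconds (illustrative only).
times_ms = {
    "OAE": [1.0, 1.1, 0.9, 1.0],
    "FastKAN-AE": [2.0, 2.2, 1.8, 2.0],
}

ratios = slowdown_ratios(times_ms)
for name, samples in times_ms.items():
    mean, half_width = mean_ci95(samples)
    print(f"{name}: {mean:.2f} ± {half_width:.2f} ms, slowdown {ratios[name]:.2f}x")
```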

Table 6.

Average forward and backward pass durations (mean ± 95% CI) and slowdown ratios for all autoencoder variants on CPU and GPU.

Nevertheless, the absolute per-batch times remain modest. Even the slowest variant, EfficientKAN-AE, requires only ≈ for a forward pass on GPU, while the fastest models (FastKAN-AE and FourierKAN-AE) complete the same operation in ≈. These latencies correspond to processing rates above , several orders of magnitude faster than the sampling interval of the TEP. Inference speed is therefore not a limiting factor for real-time deployment.

Beyond inference, full retraining is also computationally feasible. With a batch size of and fault-free samples, the slowest model (EfficientKAN-AE) completes an epoch in ≈ on GPU and ≈ on CPU, leading to total retraining times of ≈ and ≈, respectively, for 600 epochs. For comparison, the OAE baseline requires only 3–4 min under identical conditions. Thus, even the most computationally demanding KAN-AE variant is less than a factor of three slower than the OAE, a difference that is negligible in practical retraining scenarios.

The analysis above assumes that hyperparameter tuning has already been completed and that models are retrained using fixed, optimized settings. If extensive hyperparameter searches are included (e.g., 500 configurations via grid or TPE search), the total wall-clock time increases proportionally to the per-epoch cost. Under the same settings (, ), EfficientKAN-AE (GPU) requires approximately per epoch, corresponding to ≈ per trial (100 epochs) or ≈ for 500 trials on a single GPU. In contrast, the OAE completes a trial in ≈, or ≈ for 500 trials. While parallelization can reduce overall tuning time, KAN-based architectures remain inherently more computationally demanding during large-scale hyperparameter optimization.
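The scaling argument above reduces to simple arithmetic, sketched below. The 500 trials and 100 epochs per trial follow the text; the sample count, batch size, and per-step time are hypothetical placeholders and are not the values measured in this study.

```python
import math

def epoch_seconds(n_samples, batch_size, step_seconds):
    """Time for one epoch: number of batches times the forward+backward time per batch."""
    return math.ceil(n_samples / batch_size) * step_seconds

def tuning_hours(n_trials, epochs_per_trial, n_samples, batch_size, step_seconds):
    """Total sequential wall-clock time of a hyperparameter search, in hours."""
    per_epoch = epoch_seconds(n_samples, batch_size, step_seconds)
    return n_trials * epochs_per_trial * per_epoch / 3600.0

# Hypothetical settings: 10,000 samples, batches of 100, 5 ms per training step.
hours = tuning_hours(n_trials=500, epochs_per_trial=100,
                     n_samples=10_000, batch_size=100, step_seconds=0.005)
print(f"Sequential tuning time: {hours:.2f} h")
```

Since the total is linear in the per-step time, a model with a slowdown ratio of k relative to the baseline needs roughly k times the tuning budget when trials run sequentially.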

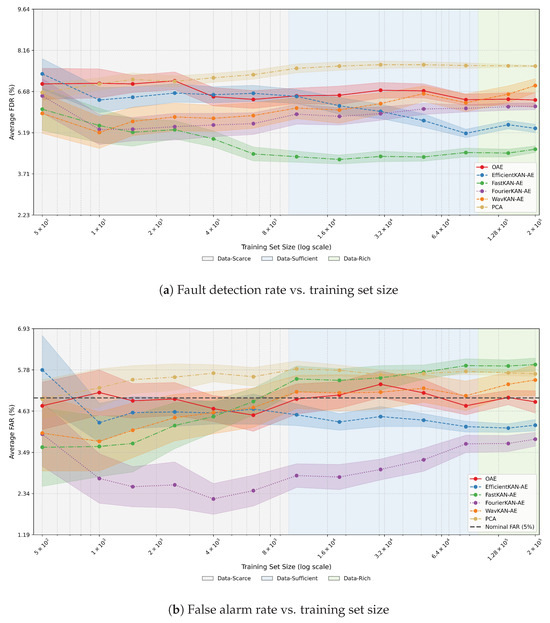

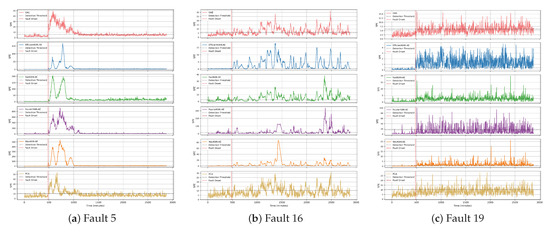

4.4. Bayesian Comparison Across Training Set Sizes

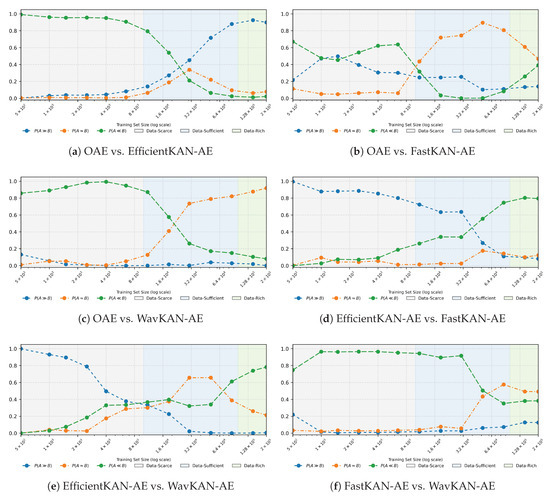

To determine whether observed differences in detection performance among nonlinear methods were statistically meaningful, we applied the Bayesian signed-rank test (Section 3.8). The analysis focused on a subset of challenging faults, where performance differences were most likely to emerge. Faults in which PCA achieved near-perfect detection (FDR , as reported in [5]) and controllable faults, where high FDRs may reflect over-sensitivity rather than genuine detection capability, were excluded. The resulting evaluation set comprised nine faults (Faults 5, 10, 11, and 16–21), each treated as an independent comparison unit. For each model pair, we computed three posterior probabilities: that model A is practically superior to model B, that their performance is equivalent within the region of practical equivalence (ROPE), and that model B is practically superior. These probabilities were tracked across training set sizes to reveal how relative performance evolved as more data became available. The resulting trends complement the mean FDR profiles discussed in Section 4.1, providing a probabilistic basis for model selection as a function of training set size.

FourierKAN-AE was omitted from this analysis because of its consistently poor performance across fault types and training set sizes. Including it in pairwise tests would not yield informative distinctions, since posterior comparisons involving clearly dominated models tend to collapse onto the extremes (posterior probabilities near 0 or 1), offering little inferential value.
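For readers unfamiliar with the test, the following is a minimal Monte Carlo sketch of a Bayesian signed-rank comparison in the spirit of Benavoli et al. [51,52]. It is not the implementation used in this study: the ROPE half-width, the prior strength, the placement of the prior pseudo-observation at zero, and the FDR values are all assumptions made for illustration.

```python
import numpy as np

def bayesian_signed_rank(score_a, score_b, rope=0.01, prior_strength=0.5,
                         n_samples=20000, seed=0):
    """Posterior probabilities that A is better, that A and B are practically
    equivalent, and that B is better, from paired scores (one pair per fault)."""
    rng = np.random.default_rng(seed)
    diff = np.asarray(score_a, dtype=float) - np.asarray(score_b, dtype=float)
    # Dirichlet-process prior: one pseudo-observation at zero (inside the ROPE).
    z = np.concatenate(([0.0], diff))
    alpha = np.concatenate(([prior_strength], np.ones(diff.size)))
    # Pairwise sums z_i + z_j are twice the Walsh averages of the differences.
    pair_sums = z[:, None] + z[None, :]
    a_wins = (pair_sums > 2.0 * rope).astype(float)
    b_wins = (pair_sums < -2.0 * rope).astype(float)
    # Each Dirichlet draw reweights the observations and yields one posterior sample.
    weights = rng.dirichlet(alpha, size=n_samples)
    theta_a = np.einsum("si,ij,sj->s", weights, a_wins, weights)
    theta_b = np.einsum("si,ij,sj->s", weights, b_wins, weights)
    theta_rope = 1.0 - theta_a - theta_b
    # Count how often each region carries the largest mass.
    winners = np.argmax(np.stack([theta_a, theta_rope, theta_b], axis=1), axis=1)
    p_a, p_rope, p_b = np.bincount(winners, minlength=3) / n_samples
    return {"p_a": float(p_a), "p_rope": float(p_rope), "p_b": float(p_b)}

# Hypothetical FDRs of two models on nine faults (illustrative values only).
fdr_a = np.array([0.95, 0.80, 0.75, 0.85, 0.90, 0.88, 0.70, 0.82, 0.78])
fdr_b = fdr_a - 0.10
result = bayesian_signed_rank(fdr_a, fdr_b)
print(result)
```

Repeating this computation at each training set size gives one triplet of probabilities per size, which is the kind of trajectory plotted in Figure 7.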

4.4.1. Orthogonal Autoencoder vs. KAN-Based Autoencoders

As shown in Figure 7a, EfficientKAN-AE demonstrated a clear advantage in the data-scarce regime. For , the posterior mass lay overwhelmingly in favor of EfficientKAN-AE (>0.90), peaking at 0.992 for . This provides strong evidence that EfficientKAN-AE outperforms OAE when data are limited. However, this advantage diminished steadily as the training set size increased. Between 11,000 and 30,000 training samples, the posterior mass shifted toward the ROPE and eventually toward OAE, indicating a reversal in relative performance. By 51,500 training samples, OAE was favored with , and this belief strengthened to by 144,000 training samples. These results highlight EfficientKAN-AE’s strong data efficiency but also its declining competitiveness as more training data became available.

Figure 7.

Evolution of Bayesian signed-rank probabilities across training regimes. Each panel compares two autoencoder variants: (a) OAE vs. EfficientKAN-AE, (b) OAE vs. FastKAN-AE, (c) OAE vs. WavKAN-AE, (d) EfficientKAN-AE vs. FastKAN-AE, (e) EfficientKAN-AE vs. WavKAN-AE, and (f) FastKAN-AE vs. WavKAN-AE. Shaded zones indicate data-scarce, data-sufficient, and data-rich regimes.

FastKAN-AE showed a more ambiguous performance profile. At smaller training set sizes (4000–6500 samples), it held a modest advantage, with the posterior probability favoring FastKAN-AE peaking at 0.64. Yet this advantage was never decisive, and the posterior mass shifted rapidly toward the ROPE as the training set grew. Beyond 18,500 samples, practical equivalence dominated the posterior (), reaching 0.90 at 51,500 training samples. Notably, FastKAN-AE was never overtaken by OAE, as the posterior probability favoring OAE remained consistently low, but it also failed to establish a clear lead, with the posterior probability favoring FastKAN-AE never exceeding 0.70 (see Figure 7b). This pattern indicates that FastKAN-AE converged toward performance parity with OAE across most data regimes, offering only marginal gains in detection capability.

WavKAN-AE delivered the most robust early-stage performance. For , the posterior probability favoring WavKAN-AE exceeded 0.94, peaking at 0.993 for . As the training set size increased, the posterior mass migrated toward the ROPE, reflecting a transition toward practical equivalence rather than a reversal in relative performance (Figure 7c). By 30,500 training samples, the ROPE accounted for 74% of the posterior mass, rising to 92% at 200,000 samples. Importantly, the posterior probability favoring OAE remained near zero throughout, showing that OAE never overtook WavKAN-AE. This underscores WavKAN-AE’s robustness in data-scarce regimes and its capacity to maintain competitive performance as data availability increased, without the degradation observed for EfficientKAN-AE.

Collectively, these results reveal distinct generalization behaviors across KAN-based architectures. EfficientKAN-AE excelled when data were limited but was ultimately surpassed as the training set grew. FastKAN-AE provided modest gains in low-data regimes and converged toward equivalence with OAE. WavKAN-AE achieved the strongest early-stage performance and maintained competitiveness across all data regimes. These findings emphasize the importance of aligning model choice with data availability: EfficientKAN-AE is preferable under data scarcity, WavKAN-AE offers stable performance across conditions, and OAE remains a competitive option in data-rich scenarios.

4.4.2. Pairwise Comparisons Among KAN-Based Autoencoders

In the data-scarce regime, EfficientKAN-AE consistently outperformed the other KAN-AEs by a substantial margin. The posterior probability mass favoring EfficientKAN-AE exceeded 0.85 in all pairwise comparisons, reaching against FastKAN-AE (Figure 7d) and against WavKAN-AE (Figure 7e). Within the same regime, WavKAN-AE maintained a moderate yet consistent advantage over FastKAN-AE, with posterior mass above 0.74 (Figure 7f), although this margin was considerably smaller than that of EfficientKAN-AE.

As the training set entered the data-sufficient regime, WavKAN-AE emerged as the most robust model. It outperformed FastKAN-AE throughout this range, with in every relevant comparison. Against EfficientKAN-AE, the posterior distribution shifted more gradually: a broad region of practical equivalence initially dominated, but belief in WavKAN-AE’s superiority strengthened with additional data, and WavKAN-AE eventually overtook EfficientKAN-AE midway through the regime.

In the data-rich regime, both WavKAN-AE and FastKAN-AE surpassed EfficientKAN-AE. Posterior mass favoring FastKAN-AE increased steadily, reaching 0.80 at the upper end of the regime, while WavKAN-AE continued to outperform EfficientKAN-AE with . However, FastKAN-AE never overtook WavKAN-AE since its posterior advantage grew only slightly with data and remained below 0.40 throughout. In large-data comparisons between WavKAN-AE and FastKAN-AE, the posterior mass concentrated within the ROPE, indicating practical equivalence.

4.5. Limitations and Future Work

This study has some limitations worth noting. The evaluation was conducted exclusively on the Tennessee Eastman Process, which, although widely used as a benchmark, does not fully capture the variability and operational complexity of real industrial systems. Extending the analysis to other dynamic benchmarks or real plant data would provide stronger empirical support for the observed trends.

A second limitation is that the current framework does not explicitly account for temporal dependencies among process variables. The autoencoders were trained on static inputs, which capture local correlations but not the time-dependent patterns characteristic of normal operation. Incorporating explicit sequence modeling through recurrent, convolutional, or attention-based architectures could influence the scaling behavior of fault detection performance and potentially modify the relative trends observed between KAN- and MLP-based models. Evaluating such temporal extensions within the same comparative framework would clarify whether the data-efficiency advantages of KANs persist when temporal structure is modeled explicitly.

Finally, hybrid architectures combining KAN and MLP layers were not explored. The present analysis focused exclusively on fully KAN-based or fully MLP-based autoencoders, leaving open the question of whether hybrid designs can better balance data efficiency and computational cost. Architectures in which KAN layers serve as nonlinear feature expansions, or where the encoder employs KAN layers while the decoder remains an MLP, could retain the representational richness of KANs while mitigating their computational overhead. Future comparative studies should examine whether such hybrid configurations provide a more practical trade-off between accuracy, data efficiency and computational cost for industrial fault detection.

5. Conclusions

This study evaluated Kolmogorov–Arnold Autoencoders (KAN-AEs) for unsupervised fault detection in chemical process systems. The results demonstrate that KAN-AEs outperform conventional MLP-based autoencoders under limited data availability but provide no significant advantage in data-rich regimes. Model performance also depended on the choice of basis family in the univariate edge functions: local bases (B-splines, wavelets) were more effective than global ones (Fourier modes) in capturing process variability. Overall, KAN-AEs offer a compact and data-efficient alternative for industrial monitoring applications where historical fault-free data are limited or costly to obtain.

Author Contributions

E.L.-V.: Conceptualization, Methodology, Software, Writing—original draft. V.M.: Supervision, Conceptualization, Methodology, Writing—review & editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Sciences and Engineering Research Council of Canada (NSERC), grant number ALLRP 590260-23.

Data Availability Statement

The original data presented in the study are openly available in the KANAE-ProcessMonitoring GitHub repository at https://github.com/lunavile/KANAE-ProcessMonitoring (accessed on 1 November 2025).

Acknowledgments

This work was supported by the McMaster Advanced Control Consortium (MACC).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Selected KAN Implementations

In Kolmogorov–Arnold Networks (KANs), the learnable univariate edge functions can be parameterized with different basis families, including B-splines, Fourier modes, and orthogonal polynomials. This appendix details the specific KAN implementations considered in this study.

Appendix A.1. Selection Rationale

The original Kolmogorov–Arnold Network (KAN) formulation is computationally intensive and becomes prohibitive in large-scale, multi-seed experiments. Therefore, this study focused on representative variants that preserve the core functional mapping principle while improving computational efficiency and scalability.

Efficient-KAN was selected to represent the adaptive, localized basis family. As the variant most faithful to the original KAN, it retains the B-spline parameterization, which is well suited for capturing smooth nonlinear dependencies, while substantially reducing memory usage and computational overhead through shared basis evaluations. Fast-KAN was included as a fixed, localized alternative employing radial basis functions (RBFs), allowing faster training and improved numerical stability in larger datasets without significantly compromising expressiveness. Finally, Fourier-KAN and Wav-KAN were incorporated to explore complementary basis families whose behavior in process data remains less established: the Fourier basis may favor periodic components often observed in oscillatory variables, whereas the wavelet basis could potentially capture transient or localized fault patterns. Their inclusion aims to examine whether frequency- or scale-aware representations provide additional benefits over traditional localized mappings.

Appendix A.2. Efficient-KAN

Efficient-KAN is a memory-efficient KAN implementation designed to address the scalability bottlenecks of the original formulation [53]. In the original implementation [15], each edge function $\phi(x)$ is parameterized as a weighted combination of a fixed base activation and a B-spline expansion:

$$\phi(x) = w_b\, b(x) + w_s \sum_{i} c_i B_i(x)$$

where the base activation $b(x)$ is included to stabilize training and improve convergence, $B_i(x)$ are B-spline basis functions of order r, and $w_b$, $w_s$, and $c_i$ are learnable parameters. Although this per-edge B-spline formulation enables the network to flexibly capture localized nonlinearities, it requires evaluating a distinct transformation for every input–output edge. This results in large intermediate tensors and high memory usage, particularly in deep networks or large mini-batches. Efficient-KAN mitigates this issue by applying the B-spline basis once per input feature and reusing the resulting activations across all outputs via matrix multiplication, significantly improving memory and computational efficiency.
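The shared-basis idea can be sketched in NumPy as follows. This is an illustrative forward pass rather than the Efficient-KAN code itself: the SiLU base activation, the uniform knot grid, and the folding of the spline scale into a single coefficient tensor `w_spline` are assumptions, and all function names are hypothetical.

```python
import numpy as np

def silu(x):
    """SiLU activation, assumed here as the fixed base activation."""
    return x / (1.0 + np.exp(-x))

def bspline_basis(x, knots, order):
    """Cox-de Boor recursion.
    x: (batch, n_in) -> basis values of shape (batch, n_in, len(knots) - order - 1)."""
    x = x[..., None]
    basis = ((x >= knots[:-1]) & (x < knots[1:])).astype(float)
    for p in range(1, order + 1):
        left = (x - knots[:-(p + 1)]) / (knots[p:-1] - knots[:-(p + 1)])
        right = (knots[p + 1:] - x) / (knots[p + 1:] - knots[1:-p])
        basis = left * basis[..., :-1] + right * basis[..., 1:]
    return basis

def efficient_kan_layer(x, knots, order, w_base, w_spline):
    """w_base: (n_out, n_in); w_spline: (n_out, n_in, n_basis)."""
    batch = x.shape[0]
    # The basis is evaluated once per input feature, not once per edge.
    basis = bspline_basis(x, knots, order)
    base_out = silu(x) @ w_base.T
    # A single matrix multiplication reuses the basis values for every output.
    spline_out = basis.reshape(batch, -1) @ w_spline.reshape(w_spline.shape[0], -1).T
    return base_out + spline_out

rng = np.random.default_rng(0)
order, grid_size = 3, 5
step = 2.0 / grid_size
# Uniform grid on [-1, 1], extended by `order` knots on each side.
knots = np.arange(-order, grid_size + order + 1) * step - 1.0
x = rng.uniform(-0.99, 0.99, size=(4, 3))
w_base = rng.normal(size=(2, 3))
w_spline = rng.normal(size=(2, 3, grid_size + order))
y = efficient_kan_layer(x, knots, order, w_base, w_spline)
print(y.shape)
```

The intermediate tensor has shape (batch, n_in, n_basis) regardless of the number of outputs, which is where the memory saving over a per-edge evaluation comes from.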

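In Efficient-KAN, the L1 and entropy regularization terms proposed by Liu et al. [15] are approximated using the average absolute value of the spline coefficients, which serves as a proxy for the activity of each edge. Specifically, for edge $(i, j)$ in layer ℓ with spline coefficients $c_{i,j,m}^{(\ell)}$, $m = 1, \dots, M$, activity is estimated as:

$$a_{i,j}^{(\ell)} = \frac{1}{M} \sum_{m=1}^{M} \left| c_{i,j,m}^{(\ell)} \right|$$

and the total activity in the layer is given by:

$$A^{(\ell)} = \sum_{i,j} a_{i,j}^{(\ell)}$$

The entropy penalty is then computed as:

$$H^{(\ell)} = -\sum_{i,j} \frac{a_{i,j}^{(\ell)}}{A^{(\ell)}} \log \frac{a_{i,j}^{(\ell)}}{A^{(\ell)}}$$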
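The three quantities described in this subsection (per-edge activity, total layer activity, and the entropy penalty) can be sketched as below. The function name and the small constant `eps`, added for numerical safety, are assumptions and not part of the description above.

```python
import numpy as np

def efficient_kan_regularization(spline_coeffs, eps=1e-12):
    """spline_coeffs: (n_out, n_in, n_basis) coefficients of one layer.
    Returns (total_activity, entropy)."""
    # Per-edge activity: mean absolute spline coefficient.
    activity = np.abs(spline_coeffs).mean(axis=-1)
    # Total activity of the layer, used as the L1-style penalty.
    total_activity = activity.sum()
    # Normalized activities form a distribution over edges.
    p = activity / (total_activity + eps)
    entropy = -np.sum(p * np.log(p + eps))
    return float(total_activity), float(entropy)

# With identical coefficients on all 6 edges, the entropy is maximal: log(6).
coeffs = np.ones((2, 3, 4))
total_activity, entropy = efficient_kan_regularization(coeffs)
print(total_activity, entropy)
```

A low entropy indicates that activity is concentrated on a few edges, so penalizing entropy together with total activity encourages sparse layers.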

Appendix A.3. Fast-KAN

Fast-KAN is a computationally streamlined KAN implementation that improves forward-pass efficiency by replacing the adaptive B-spline basis with a fixed set of Gaussian radial basis functions (RBFs) [54]. Each edge function $\phi(x)$ is expressed as a combination of a base activation $b(x)$ and a linear combination of g Gaussian RBFs centered at fixed locations $\mu_i$, with common width $h$:

$$\phi(x) = w_b\, b(x) + \sum_{i=1}^{g} c_i \exp\!\left(-\left(\frac{x - \mu_i}{h}\right)^{2}\right)$$

where $w_b$ and $c_i$ are trainable parameters. Unlike B-spline-based KANs, the basis is fixed and not adapted during training. This reduces computational complexity and supports scalability, while retaining per-edge functional flexibility. To keep inputs within the RBF support region, a layer normalization step is often applied before the RBF transformation.
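A minimal NumPy sketch of such a layer is given below. The specific Gaussian form exp(-((x - mu)/h)^2), the SiLU base activation, the parameter-free layer normalization, and all names are assumptions made for illustration rather than details confirmed by the description above.

```python
import numpy as np

def silu(x):
    """SiLU activation, assumed here as the base activation."""
    return x / (1.0 + np.exp(-x))

def layer_norm(x, eps=1e-5):
    """Normalize each sample so inputs stay near the fixed RBF centers."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def rbf_basis(x, centers, width):
    """Gaussian RBFs with fixed centers and a common width.
    x: (batch, n_in) -> (batch, n_in, g)."""
    return np.exp(-(((x[..., None] - centers) / width) ** 2))

def fast_kan_layer(x, centers, width, w_base, w_rbf):
    """w_base: (n_out, n_in); w_rbf: (n_out, n_in, g)."""
    basis = rbf_basis(layer_norm(x), centers, width)
    # Only the weights are trained; centers and width stay fixed.
    return silu(x) @ w_base.T + np.einsum("big,oig->bo", basis, w_rbf)

rng = np.random.default_rng(0)
g = 8
centers = np.linspace(-2.0, 2.0, g)
width = centers[1] - centers[0]
x = rng.normal(size=(4, 3))
y = fast_kan_layer(x, centers, width,
                   w_base=rng.normal(size=(2, 3)),
                   w_rbf=rng.normal(size=(2, 3, g)))
print(y.shape)
```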

Appendix A.4. Fourier-KAN

Fourier-KAN replaces localized basis expansions with globally supported, periodic functions by parameterizing each learnable function as a truncated Fourier series with g modes [55]. The coefficients of the series are learnable parameters. The globally supported Fourier basis simplifies implementation by eliminating knot management and promotes stable training through smoother gradients.
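A truncated Fourier parameterization of this kind can be sketched as follows. The split of the learnable parameters into cosine coefficients `a`, sine coefficients `b`, and an additive `bias` is an assumption about the form of the series, and the function name is hypothetical.

```python
import numpy as np

def fourier_kan_layer(x, a, b, bias):
    """x: (batch, n_in); a, b: (n_out, n_in, g); bias: (n_out,)."""
    g = a.shape[-1]
    k = np.arange(1, g + 1)            # mode indices 1..g
    angles = x[..., None] * k          # (batch, n_in, g)
    cos_term = np.einsum("big,oig->bo", np.cos(angles), a)
    sin_term = np.einsum("big,oig->bo", np.sin(angles), b)
    return cos_term + sin_term + bias

rng = np.random.default_rng(0)
g = 4
a = rng.normal(size=(2, 3, g))
b = rng.normal(size=(2, 3, g))
bias = np.zeros(2)
x = rng.normal(size=(5, 3))
y = fourier_kan_layer(x, a, b, bias)
# Every edge function is 2*pi-periodic because the basis is global.
y_shifted = fourier_kan_layer(x + 2.0 * np.pi, a, b, bias)
print(np.allclose(y, y_shifted))
```

The periodicity check makes the global support explicit: a change in any coefficient alters the edge function over its entire input range, in contrast to the localized B-spline, RBF, and wavelet bases.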

Appendix A.5. Wav-KAN

Wav-KAN is a KAN variant proposed by Bozorgasl and Chen [56], in which each learnable function $\phi(x)$ is parameterized as a scaled and shifted instance of a single wavelet $\psi$:

$$\phi(x) = w\, \psi\!\left(\frac{x - \tau}{s}\right)$$

where $w$, $\tau$, and $s$ are trainable parameters controlling amplitude, translation, and scale, respectively. This direct parameterization eliminates the need for explicit basis expansions or adaptive knot placement, as required in B-spline-based KANs. By assigning one wavelet per edge, Wav-KAN supports localized adaptation to both low- and high-frequency patterns. This design enables multiresolution representations, allowing the model to capture sharp variations and broader trends simultaneously, which is advantageous for modeling structured signals in industrial process data.
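The one-wavelet-per-edge parameterization can be sketched as follows. The Mexican hat mother wavelet is an assumption chosen for illustration, since the wavelet family is not specified in this appendix, and the function names are hypothetical.

```python
import numpy as np

def mexican_hat(t):
    """Mexican hat (Ricker) mother wavelet, assumed here for illustration."""
    c = 2.0 / (np.sqrt(3.0) * np.pi ** 0.25)
    return c * (1.0 - t ** 2) * np.exp(-0.5 * t ** 2)

def wav_kan_layer(x, weight, translation, scale):
    """x: (batch, n_in); weight, translation, scale: (n_out, n_in).
    Each edge applies one shifted, scaled, and weighted wavelet."""
    t = (x[:, None, :] - translation) / scale     # (batch, n_out, n_in)
    return np.sum(weight * mexican_hat(t), axis=-1)

rng = np.random.default_rng(0)
n_in, n_out = 3, 2
weight = rng.normal(size=(n_out, n_in))
translation = np.zeros((n_out, n_in))
scale = np.ones((n_out, n_in))
x = rng.normal(size=(5, n_in))
y = wav_kan_layer(x, weight, translation, scale)
print(y.shape)
```

Each edge carries only three scalars, so the parameter count per edge does not grow with a grid size or number of modes as it does for the other variants.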

References

- Wang, H.; Chai, T.Y.; Ding, J.L.; Brown, M. Data Driven Fault Diagnosis and Fault Tolerant Control: Some Advances and Possible New Directions. Acta Autom. Sin. 2009, 35, 739–747.

- Kopbayev, A.; Khan, F.; Yang, M.; Halim, S.Z. Fault detection and diagnosis to enhance safety in digitalized process system. Comput. Chem. Eng. 2022, 158, 107609.

- Park, Y.J.; Fan, S.K.S.; Hsu, C.Y. A Review on Fault Detection and Process Diagnostics in Industrial Processes. Processes 2020, 8, 1123.

- Kaupp, L.; Webert, H.; Nazemi, K.; Humm, B.; Simons, S. CONTEXT: An Industry 4.0 Dataset of Contextual Faults in a Smart Factory. Procedia Comput. Sci. 2021, 180, 492–501.

- Sun, W.; Paiva, A.R.; Xu, P.; Sundaram, A.; Braatz, R.D. Fault detection and identification using Bayesian recurrent neural networks. Comput. Chem. Eng. 2020, 141, 106991.

- Tamura, M.; Tsujita, S. A study on the number of principal components and sensitivity of fault detection using PCA. Comput. Chem. Eng. 2007, 31, 1035–1046.

- Ge, Z.; Song, Z. Distributed PCA model for plant-wide process monitoring. Ind. Eng. Chem. Res. 2013, 52, 1947–1957.

- Cacciarelli, D.; Kulahci, M. A novel fault detection and diagnosis approach based on orthogonal autoencoders. Comput. Chem. Eng. 2022, 163, 107853.

- Zhu, J.; Jiang, M.; Liu, Z. Fault detection and diagnosis in industrial processes with variational autoencoder: A comprehensive study. Sensors 2021, 22, 227.

- Ren, X.; Zou, Y.; Zhang, Z. Fault detection and classification with feature representation based on deep residual convolutional neural network. J. Chemom. 2019, 33, e3170.

- Lomov, I.; Lyubimov, M.; Makarov, I.; Zhukov, L.E. Fault detection in Tennessee Eastman process with temporal deep learning models. J. Ind. Inf. Integr. 2021, 23, 100216.

- Kang, J.L. Visualization analysis for fault diagnosis in chemical processes using recurrent neural networks. J. Taiwan Inst. Chem. Eng. 2020, 112, 137–151.

- Cheded, L.; Doraiswami, R. A novel integrated framework for fault diagnosis with application to process safety. Process Saf. Environ. Prot. 2021, 154, 168–188.

- Danesh, T.; Ouaret, R.; Floquet, P.; Negny, S. Hybridization of model-specific and model-agnostic methods for interpretability of Neural network predictions: Application to a power plant. Comput. Chem. Eng. 2023, 176, 108306.

- Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y.; Tegmark, M. KAN: Kolmogorov-Arnold Networks. arXiv 2025, arXiv:2404.19756.

- Ji, T.; Hou, Y.; Zhang, D. A Comprehensive Survey on Kolmogorov Arnold Networks (KAN). arXiv 2025, arXiv:2407.11075v7.

- Hou, Y.; Ji, T.; Zhang, D.; Stefanidis, A. Kolmogorov-Arnold Networks: A Critical Assessment of Claims, Performance, and Practical Viability. arXiv 2025, arXiv:2407.11075.

- Zhu, J.; Yang, J.; Cui, X.; Peng, M.; Liang, X. A novel adaptive adjustment Kolmogorov-Arnold network for heat load prediction in district heating systems. Appl. Therm. Eng. 2025, 274, 126552.

- Ellinas, P.; Karampinis, I.; Ventura Nadal, I.; Nellikkath, R.; Vorwerk, J.; Chatzivasileiadis, S. Physics-informed machine learning for power system dynamics: A framework incorporating trustworthiness. Sustain. Energy Grids Netw. 2025, 43, 101818.

- Seydi, S.T.; Sadegh, M. Novel Kolmogorov-Arnold network architectures for accurate flood susceptibility mapping: A comparative study. J. Hydrol. 2025, 661, 133553.

- Tang, S.; Xu, X.; Li, H.; Zhou, T. Unsupervised Detection of Surface Defects in Varistors with Reconstructed Normal Distribution Under Mask Constraints. Appl. Sci. 2025, 15, 10479.

- Li, C.; Xie, W.; Zheng, B.; Yi, Q.; Yang, L.; Hu, B.; Deng, C. An enhanced CLKAN-RF framework for robust anomaly detection in unmanned aerial vehicle sensor data. Knowl.-Based Syst. 2025, 319, 113690.

- Wu, H.; Jiang, Z.; Zhang, X.; Cheng, J. Research on a Novel Unsupervised-Learning-Based Pipeline Leak Detection Method Based on Temporal Kolmogorov–Arnold Network with Autoencoder Integration. Sensors 2025, 25, 384.

- Rigas, S.; Papachristou, M.; Sotiropoulos, I.; Alexandridis, G. Explainable Fault Classification and Severity Diagnosis in Rotating Machinery Using Kolmogorov–Arnold Networks. Entropy 2025, 27, 403.

- Cabral, T.W.; Gomes, F.V.; de Lima, E.R.; Filho, J.C.S.S.; Meloni, L.G.P. Kolmogorov–Arnold Network in the Fault Diagnosis of Oil-Immersed Power Transformers. Sensors 2024, 24, 7585.

- He, J.; Mo, L. WCNN-KAN: A Novel Feature Enhancement Framework for Rotating Machinery Fault Diagnosis. In Proceedings of the 2024 International Conference on Sensing, Measurement & Data Analytics in the era of Artificial Intelligence (ICSMD), Huangshan, China, 31 October–3 November 2024; pp. 1–6.

- Siddique, M.F.; Ullah, S.; Kim, J.M. A Deep Learning Approach for Fault Diagnosis in Centrifugal Pumps through Wavelet Coherent Analysis and S-Transform Scalograms with CNN-KAN. Comput. Mater. Contin. 2025, 84, 3577–3603.

- Yan, Q.; Yang, Y.; Zhang, C.; Xiong, Z.; Zhong, H.; Xu, Y.; Yang, W. Intelligent monitoring of impact damage within concrete through deep learning-empowered electromechanical impedance technique. Measurement 2025, 253, 117642.

- Zhang, X.; Cheng, Y.; Chen, H.; Cheng, H.; Gao, Y. Refrigerant charge fault diagnosis in VRF systems using Kolmogorov-Arnold networks and their convolutional variants: A comparative analysis with traditional models. Energy Build. 2025, 336, 115608.

- Pearson, K. LIII. On Lines and Planes of Closest Fit to Systems of Points in Space. Lond. Edinb. Dublin Philos. Mag. J. Sci. 1901, 2, 559–572.

- Hotelling, H. Analysis of a Complex of Statistical Variables into Principal Components. J. Educ. Psychol. 1933, 24, 417–441.

- MacGregor, J.F.; Kourti, T. Statistical Process Control of Multivariate Processes. Control Eng. Pract. 1995, 3, 403–414.

- Kong, X.; Jiang, X.; Zhang, B.; Yuan, J.; Ge, Z. Latent variable models in the era of industrial big data: Extension and beyond. Annu. Rev. Control 2022, 54, 167–199.

- Yu, F.; Hu, R.; Lin, Y.; Ma, Y.; Huang, Z.; Li, W. KAE: Kolmogorov-Arnold Auto-Encoder for Representation Learning. arXiv 2024, arXiv:2501.00420.

- Moradi, M.; Panahi, S.; Bollt, E.; Lai, Y.C. Kolmogorov-Arnold Network Autoencoders. arXiv 2024, arXiv:2410.02077.

- Downs, J.J.; Vogel, E.F. A Plant-Wide Industrial Process Control Problem. Comput. Chem. Eng. 1993, 17, 245–255.

- McAvoy, T.J.; Ye, N. Base control for the Tennessee Eastman problem. Comput. Chem. Eng. 1994, 18, 383–413.

- Lyman, P.R.; Georgakis, C. Plant-wide control of the Tennessee Eastman problem. Comput. Chem. Eng. 1995, 19, 321–331.

- Lawrence Ricker, N. Decentralized control of the Tennessee Eastman Challenge Process. J. Process Control 1996, 6, 205–221.

- Rieth, C.A.; Amsel, B.D.; Tran, R.; Cook, M.B. Additional Tennessee Eastman Process Simulation Data for Anomaly Detection Evaluation; Harvard Dataverse: Cambridge, MA, USA, 2017.

- Russell, E.L.; Chiang, L.H.; Braatz, R.D. Fault detection in industrial processes using canonical variate analysis and dynamic principal component analysis. Chemom. Intell. Lab. Syst. 2000, 51, 81–93.

- Bathelt, A.; Ricker, N.L.; Jelali, M. Revision of the Tennessee Eastman process model. IFAC-PapersOnLine 2015, 48, 309–314.

- Villagómez, E.L.; Mahalec, V. Fault detection and identification using a novel process decomposition algorithm for distributed process monitoring. J. Process Control 2025, 149, 103423.

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101.

- Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for hyper-parameter optimization. Adv. Neural Inf. Process. Syst. 2011, 24, 2546–2554.

- Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A next-generation hyperparameter optimization framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2623–2631.

- Scott, D.W. Multivariate Density Estimation: Theory, Practice, and Visualization; John Wiley & Sons: New York, NY, USA; Chichester, UK, 1992.

- Zhang, T.; Shao, S.; Zhang, H.; Zhao, Z.; Zhao, X.; Zhang, X.; Wang, Z.; Wang, G. KansformerEPI: A Deep Learning Framework Integrating KAN and Transformer for Predicting Enhancer–Promoter Interactions. Briefings Bioinform. 2025, 26, bbaf272.

- Allogmani, E. Exploring KAN as a Next-Generation Replacement for MLPs in EEG-Based Seizure Detection. Neurosci. Informatics 2025, 5, 100226.

- Liu, H.; Jia, H.; Li, W.; Li, W.; Yuan, Y. KAN-MoDTI: Drug Target Interaction Prediction Based on Kolmogorov–Arnold Network and Multimodal Feature Fusion. Expert Syst. Appl. 2026, 298, 129828.

- Benavoli, A.; Mangili, F.; Corani, G.; Zaffalon, M.; Ruggeri, F. A Bayesian Wilcoxon signed-rank test based on the Dirichlet process. In Proceedings of the 31st International Conference on Machine Learning (ICML’14), Beijing, China, 22–24 June 2014; Volume 32, pp. 1026–1034.

- Benavoli, A.; Corani, G.; Demšar, J.; Zaffalon, M. Time for a Change: A Tutorial for Comparing Multiple Classifiers Through Bayesian Analysis. J. Mach. Learn. Res. 2017, 18, 1–36.

- Blealtan. Efficient KAN (Kolmogorov-Arnold Network). 2024. Available online: https://github.com/Blealtan/efficient-kan (accessed on 10 May 2025).

- Li, Z. Kolmogorov-Arnold Networks are Radial Basis Function Networks. arXiv 2024, arXiv:2405.06721.

- Xu, J.; Chen, Z.; Li, J.; Yang, S.; Wang, W.; Hu, X.; Ngai, E.C.H. FourierKAN-GCF: Fourier Kolmogorov-Arnold Network -- An Effective and Efficient Feature Transformation for Graph Collaborative Filtering. arXiv 2024, arXiv:2406.01034v1.

- Bozorgasl, Z.; Chen, H. Wav-KAN: Wavelet Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2405.12832.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).