Classification Prediction of Natural Gas Pipeline Leakage Faults Based on Deep Learning: Employing a Lightweight CNN with Attention Mechanisms

Abstract

1. Introduction

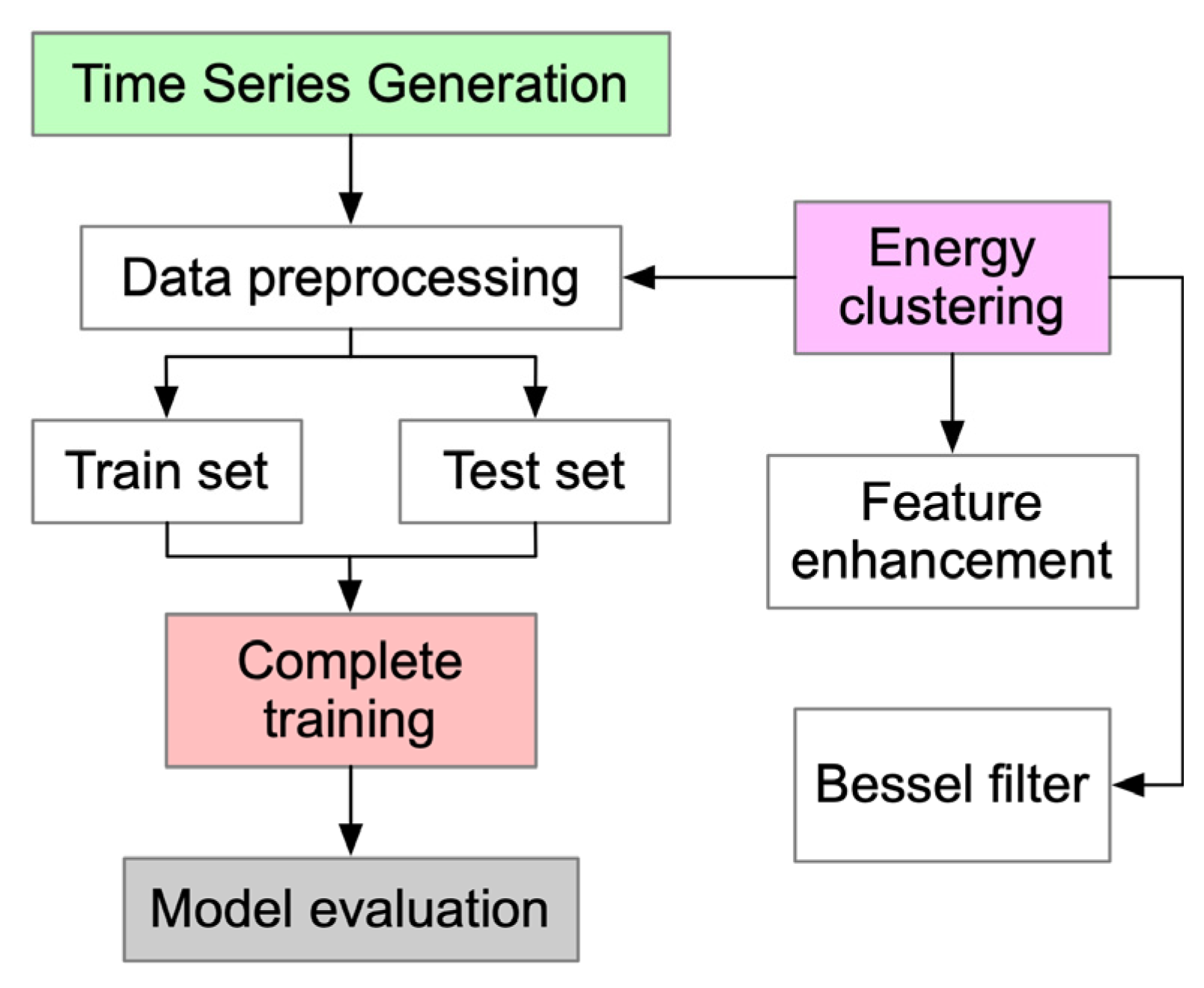

2. Sources of Datasets

3. Methodology

3.1. Clustering Methods for Leakage Acoustic Signals

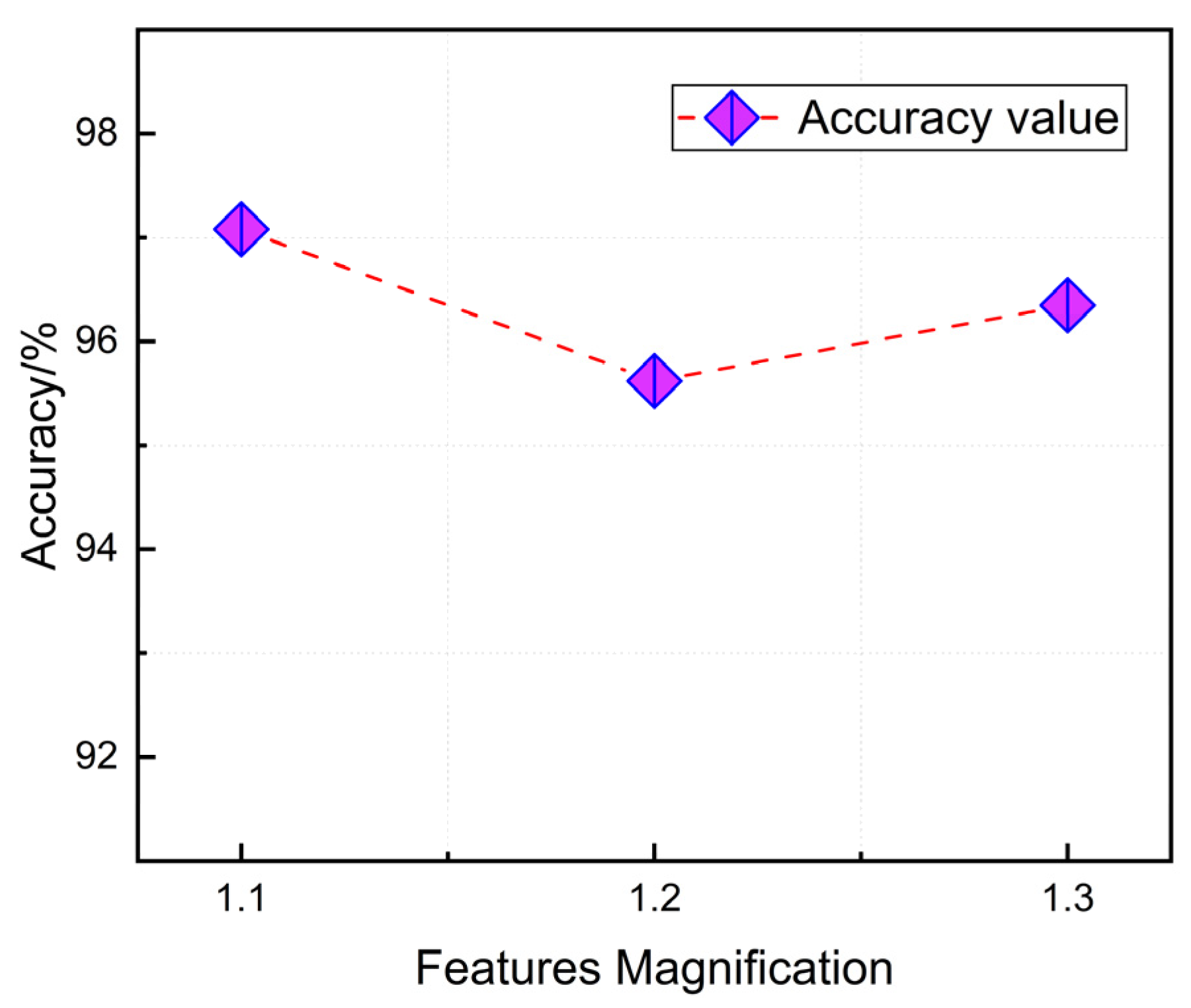

3.1.1. Feature Augmentation

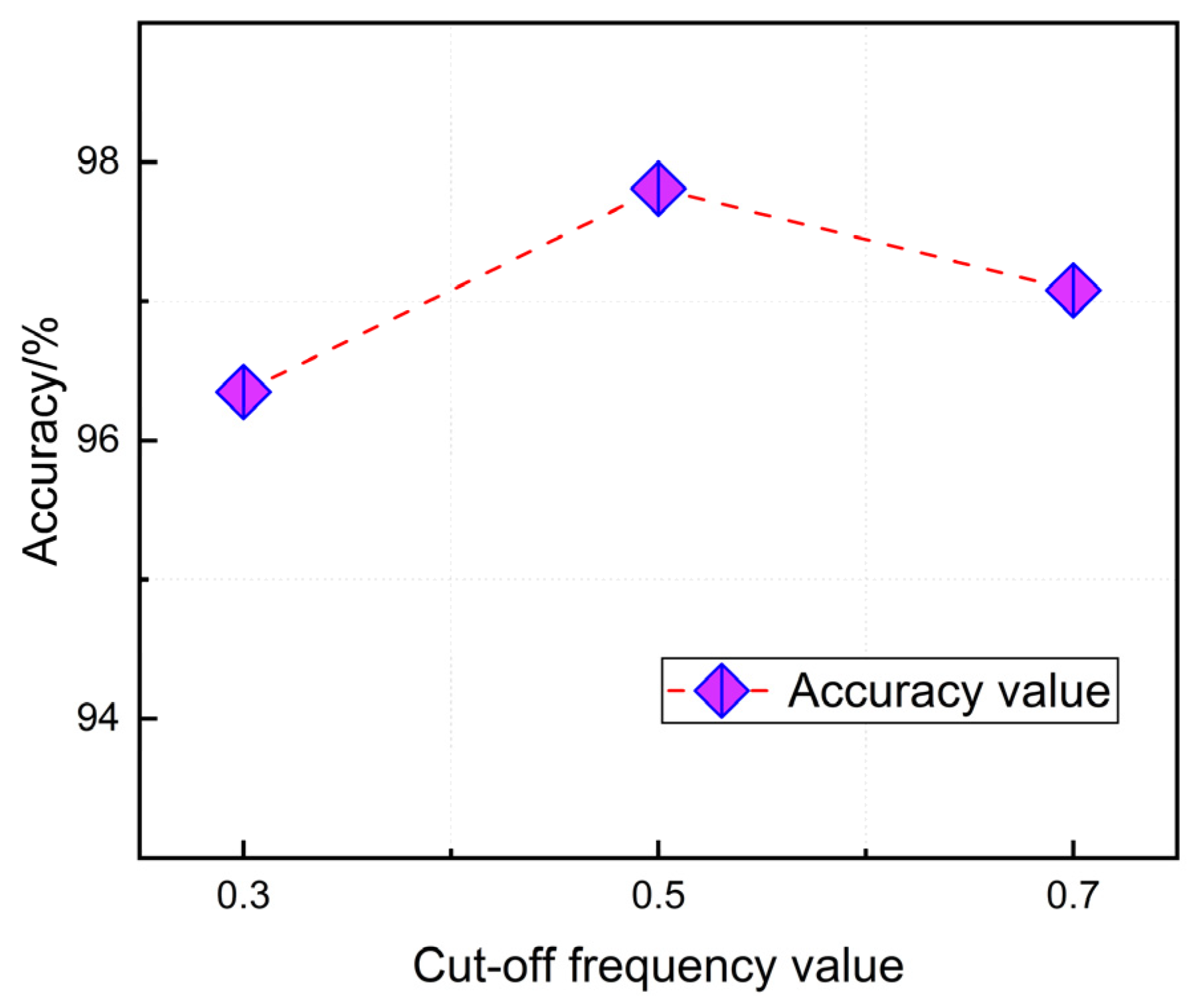

3.1.2. Bessel Filtering

3.2. Convolutional Neural Network

3.3. Optimized Design of the Model

3.3.1. Batch Normalization (BN)

3.3.2. Dropout Process

3.3.3. Channel Attention (CA)

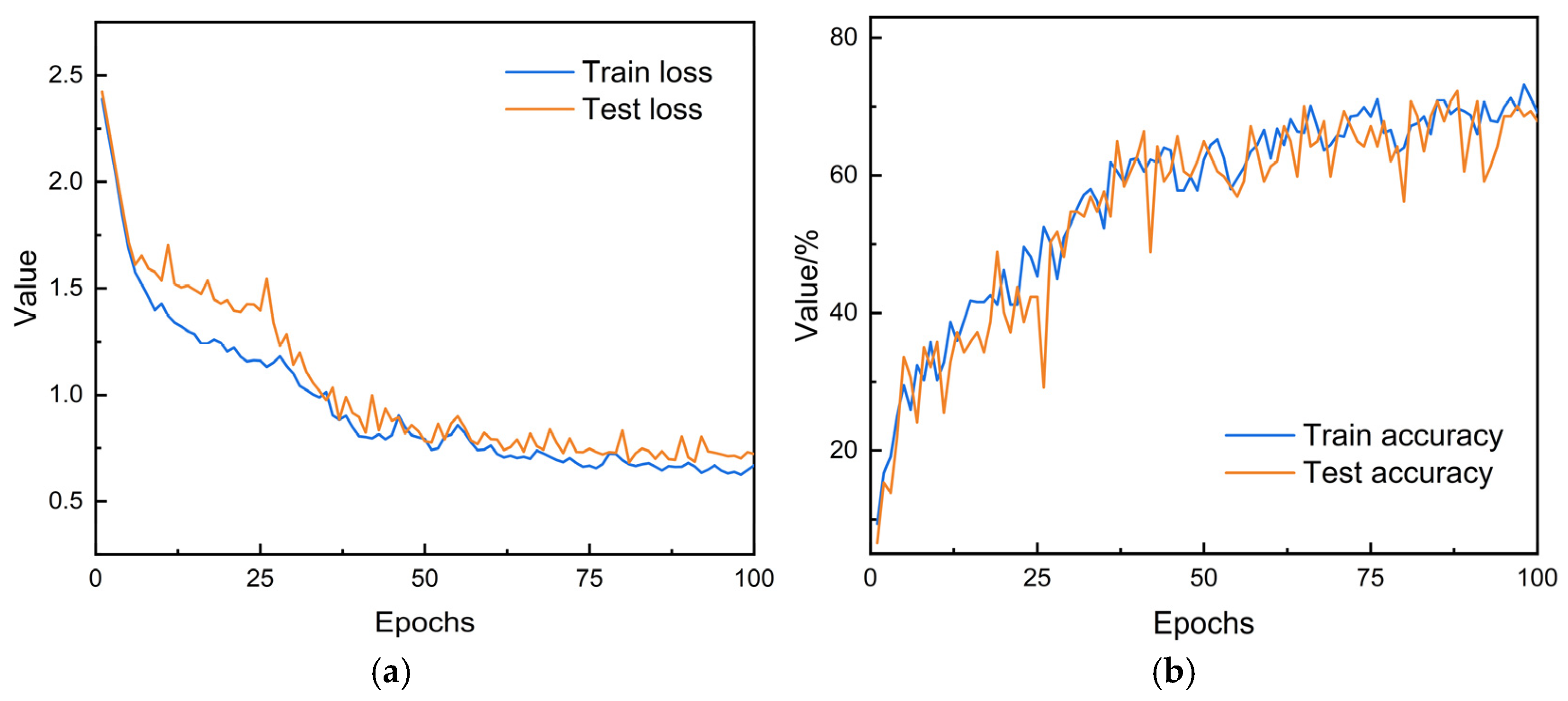

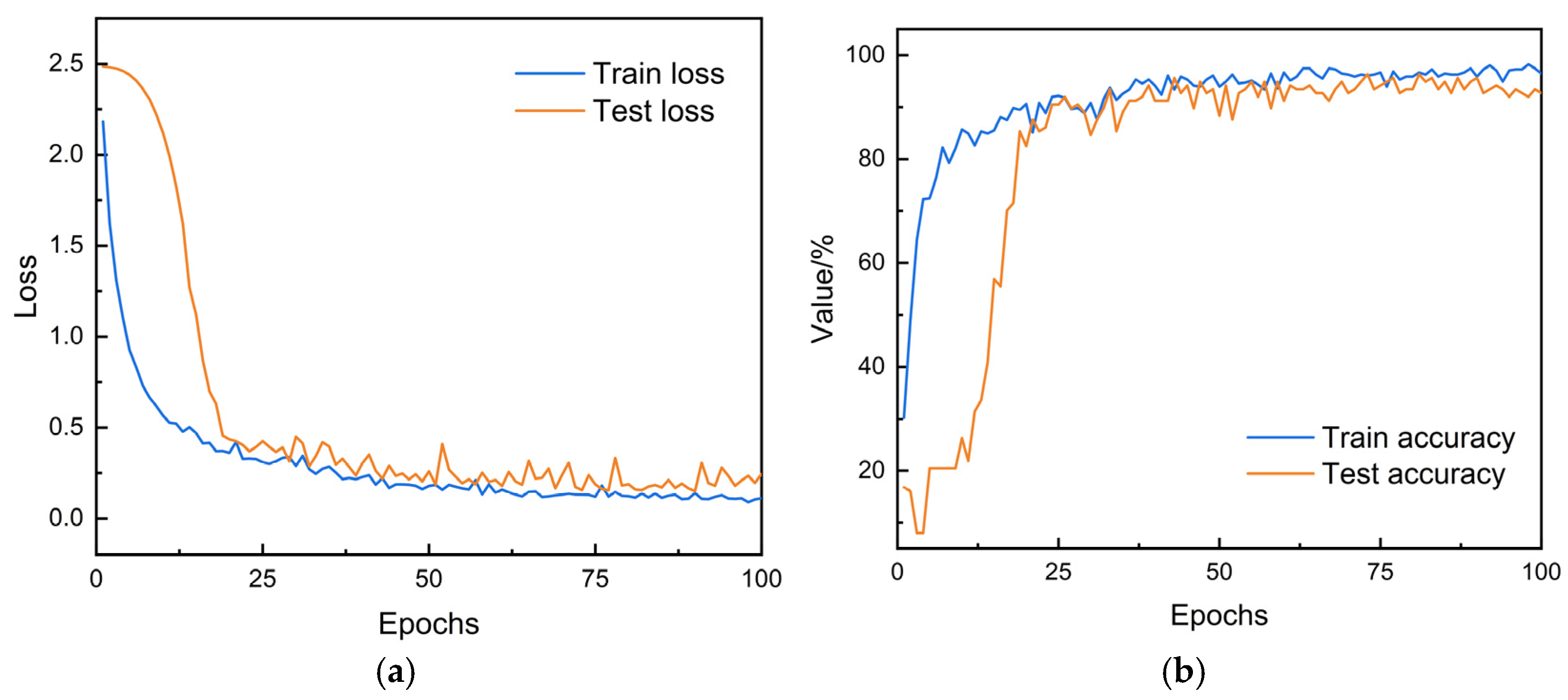

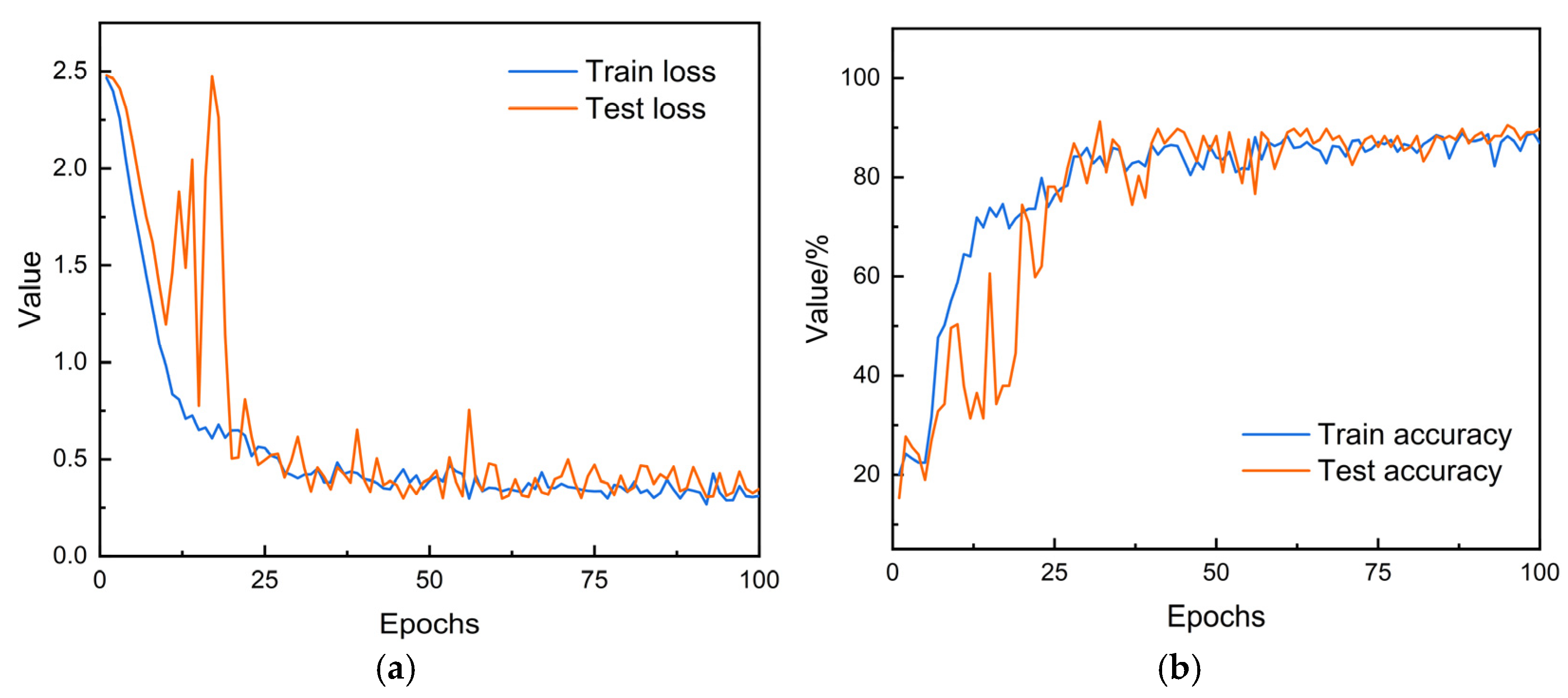

4. Model Training

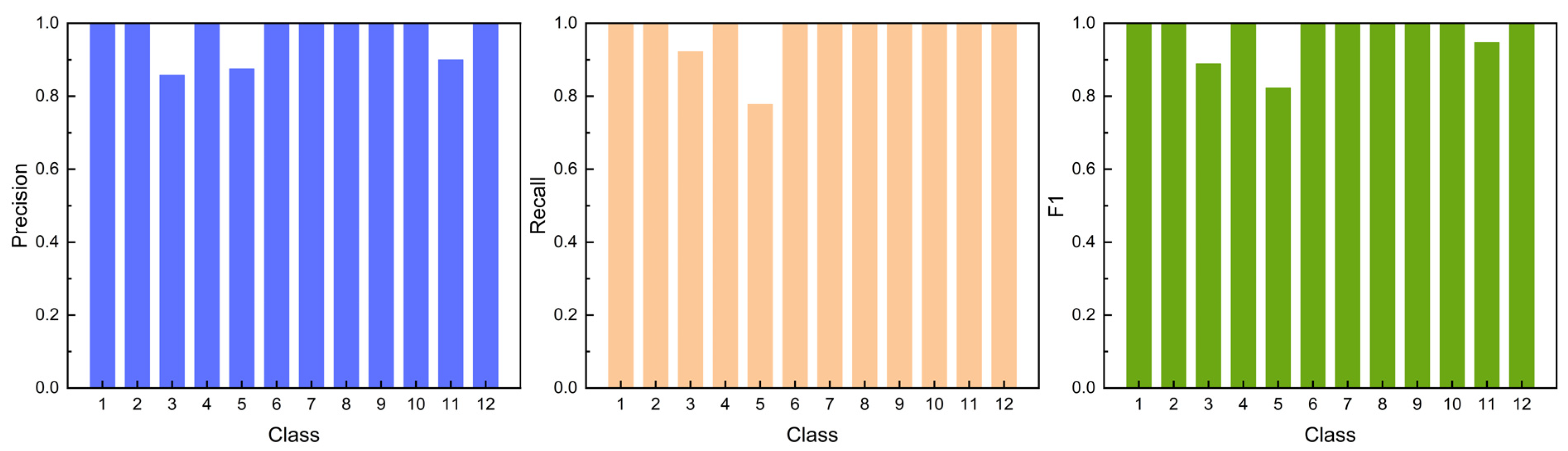

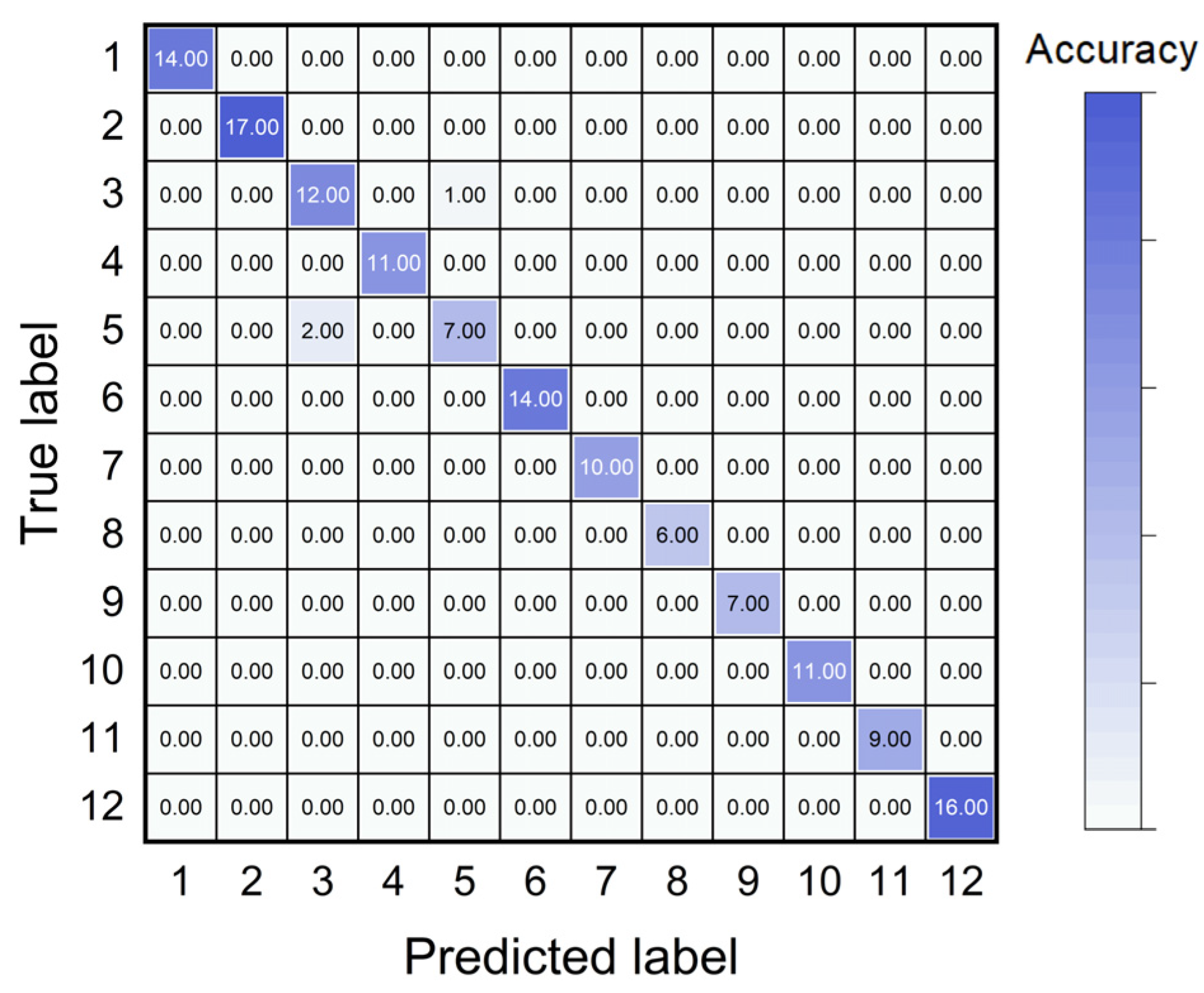

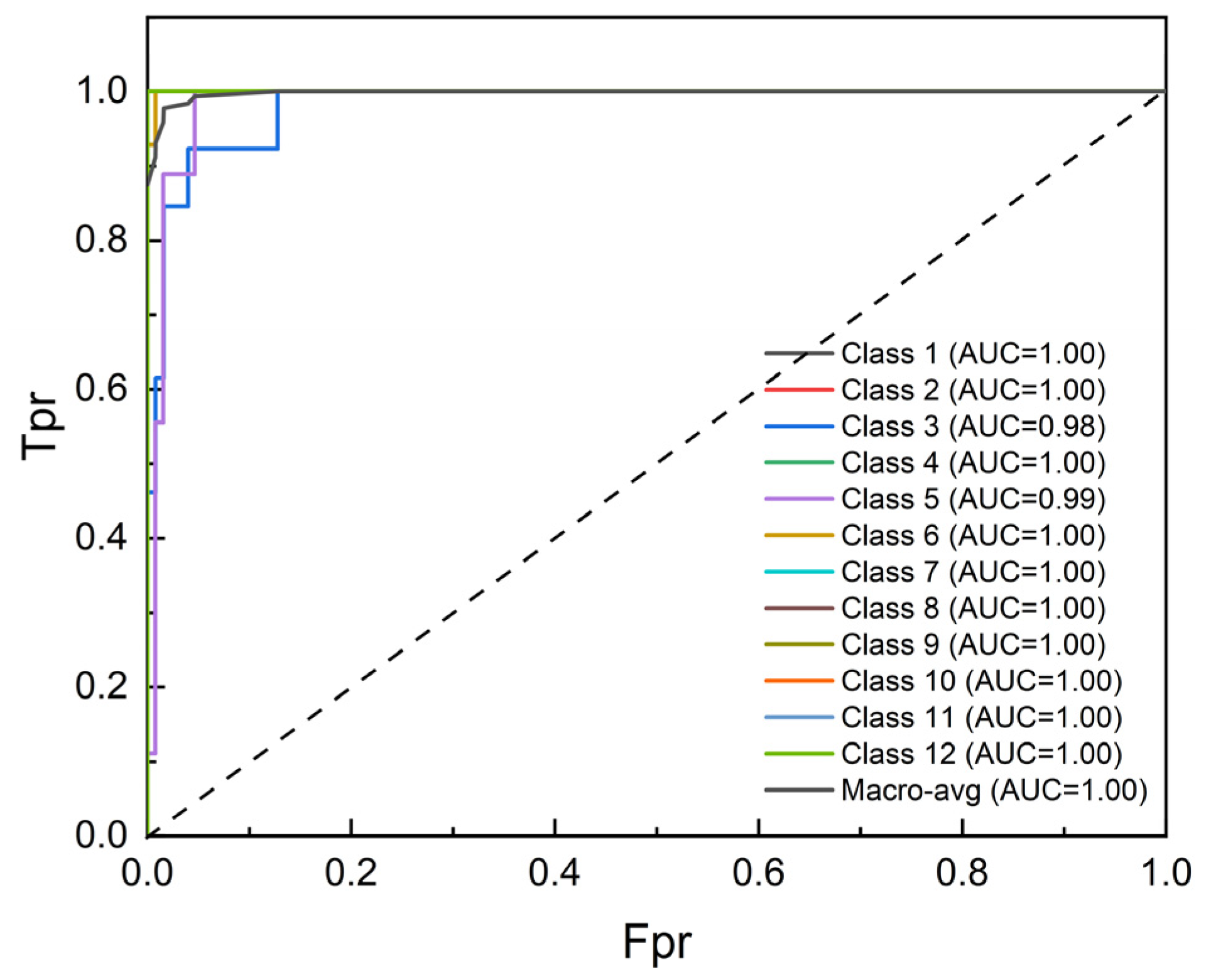

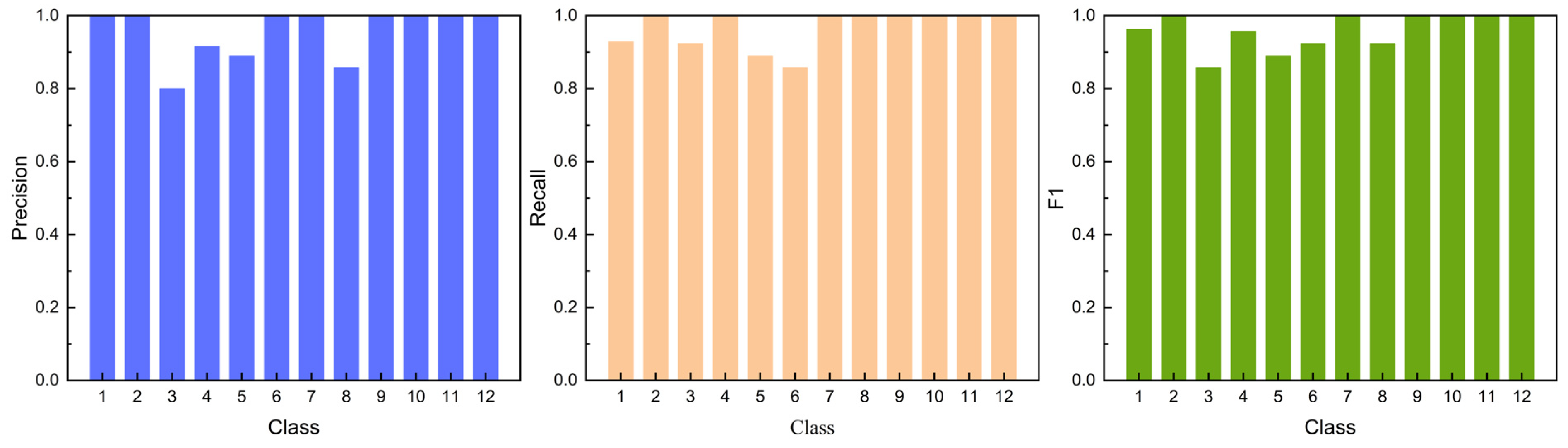

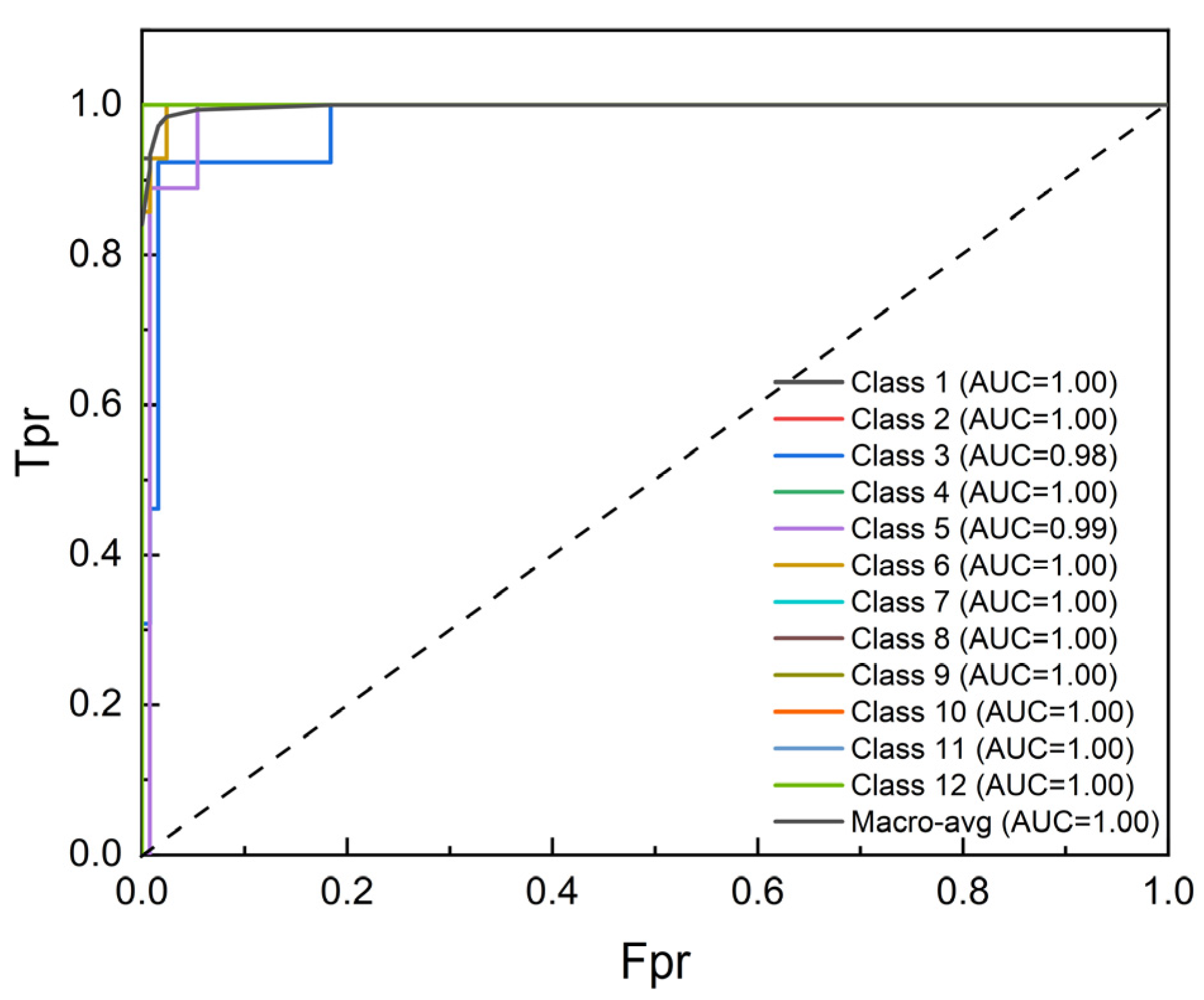

5. Model Performance and Evaluation

6. Discussions

7. Limitations

8. Conclusions and Outlook

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Layer (Type) | Kernel | Channel | Stride | Padding |
|---|---|---|---|---|
| Input | - | - | - | - |
| Conv1d × 2 | 3 | 64 | 1 | 1 |
| MaxPool | 2 | - | - | - |
| Conv1d × 2 | 3 | 128 | 1 | 1 |
| MaxPool | 2 | - | - | - |
| Conv1d × 2 | 3 | 256 | 1 | 1 |
| MaxPool | 2 | - | - | - |
| Features | | | | |
| Average pooling × 3 | 7 | | | |
| Fully connected layer | 2000 | 1000 | 600 | |
| C1 C2 C3 C4 C5 C6 C7 C8 C9 C10 C11 C12 | | | | |
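
The kernel, stride, and padding values in the layer table above determine how the signal length changes through the convolutional stack. The following is a minimal sketch of that arithmetic, not the authors' code. The input length of 1024 samples, the single input channel, and a max-pooling stride equal to the pooling kernel are all assumptions made for illustration; the table does not state them.

```python
def conv1d_out_len(length: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a 1D convolution (no dilation)."""
    return (length + 2 * padding - kernel) // stride + 1


def maxpool_out_len(length: int, kernel: int, stride=None) -> int:
    """Output length of 1D max pooling; stride defaults to the kernel (assumption)."""
    stride = kernel if stride is None else stride
    return (length - kernel) // stride + 1


def trace_lengths(input_len: int):
    """Follow the signal length through the three Conv1d x 2 + MaxPool blocks."""
    stages = [("input", 1, input_len)]  # 1 input channel is an assumption
    length = input_len
    for channels in (64, 128, 256):  # channel widths from the table
        for _ in range(2):  # each block stacks two Conv1d layers
            length = conv1d_out_len(length, kernel=3, stride=1, padding=1)
        stages.append(("conv1d x2", channels, length))
        length = maxpool_out_len(length, kernel=2)
        stages.append(("maxpool", channels, length))
    return stages


if __name__ == "__main__":
    # 1024 is a hypothetical input length, not a value from the paper.
    for name, channels, length in trace_lengths(1024):
        print(f"{name:<10} channels={channels:<4} length={length}")
```

With kernel 3, stride 1, and padding 1, each convolution preserves the signal length, so only the three pooling layers shorten it, by a factor of two each under the stated assumptions.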

| Network Structure | CNN | CNN + BN + Dropout | CNN + CA | CNN + BN + Dropout + CA |
|---|---|---|---|---|
| Accuracy/% | 72.26 | 90.51 | 96.35 | 91.24 |
| Parameter size/MB | 25.09 | 25.09 | 8.37 | 25.11 |
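
The parameter size reported for the plain CNN can be cross-checked against the layer table with simple arithmetic. The sketch below is an illustrative estimate rather than the authors' procedure. It assumes a single input channel, bias terms in every layer, average pooling to a length of 7 so that 256 × 7 features enter the first fully connected layer, a fully connected chain ending in 12 outputs (C1 to C12), and 32-bit floating-point storage. None of these assumptions is stated explicitly in the tables.

```python
def conv1d_params(c_in: int, c_out: int, kernel: int) -> int:
    """Weights plus biases of one Conv1d layer."""
    return c_in * c_out * kernel + c_out


def linear_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


# Channel progression of the six Conv1d layers; the leading 1 is an assumption.
conv_channels = [1, 64, 64, 128, 128, 256, 256]

# Fully connected chain: 256 channels x pooled length 7, then the listed widths,
# then 12 output classes (assumed from the C1..C12 row).
fc_sizes = [256 * 7, 2000, 1000, 600, 12]

conv_total = sum(
    conv1d_params(c_in, c_out, kernel=3)
    for c_in, c_out in zip(conv_channels, conv_channels[1:])
)
fc_total = sum(
    linear_params(n_in, n_out) for n_in, n_out in zip(fc_sizes, fc_sizes[1:])
)
total_params = conv_total + fc_total

# 4 bytes per parameter (float32, assumed), expressed in units of 2**20 bytes.
size_mib = total_params * 4 / 2**20

if __name__ == "__main__":
    print(f"convolutional layers: {conv_total:,} parameters")
    print(f"fully connected layers: {fc_total:,} parameters")
    print(f"total: {total_params:,} parameters, about {size_mib:.2f} MB")
```

Under these assumptions the estimate comes to 6,576,828 parameters, or about 25.09 MB, which is consistent with the value listed for the plain CNN. The fully connected layers account for roughly 94% of that total. The agreement supports the assumed layer sizes but does not confirm them.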

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Chen, Z.; Gu, Z.; Qin, L.; Mi, H.; Zhou, C.; Zhang, H.; Feng, X.; Song, T.; Wu, K.; Wang, X.; Wang, S. Classification Prediction of Natural Gas Pipeline Leakage Faults Based on Deep Learning: Employing a Lightweight CNN with Attention Mechanisms. Processes 2025, 13, 3454. https://doi.org/10.3390/pr13113454