Mechanism-Guided and Attention-Enhanced Time-Series Model for Rate of Penetration Prediction in Deep and Ultra-Deep Wells

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Preparation

2.1.1. Data Overview

2.1.2. Data Processing Methods

1. Outlier Removal Based on the 3σ Criterion

2. Data Imputation Using the K-Nearest Neighbors (KNN) Algorithm

3. Data Smoothing with the Savitzky–Golay (SG) Filter

4. Feature Selection via Pearson Correlation Analysis
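The four preprocessing steps above can be illustrated with a short NumPy sketch. It is not the authors' implementation, and several choices are assumptions: values flagged by the 3σ rule are set to missing and then imputed (rather than dropped), KNN distances use only the observed columns without feature scaling, the SG filter pads the edges by repeating the end samples, and the 0.3 correlation threshold is hypothetical. Library versions of steps 2 and 3 exist (for example scikit-learn's `KNNImputer` and SciPy's `savgol_filter`).

```python
import numpy as np

def remove_outliers_3sigma(X):
    """Step 1: flag values outside mean ± 3σ (per column) and mark them missing (NaN)."""
    X = np.asarray(X, dtype=float).copy()
    mu = np.nanmean(X, axis=0)
    sd = np.nanstd(X, axis=0)
    X[np.abs(X - mu) > 3.0 * sd] = np.nan   # existing NaNs compare False and stay NaN
    return X

def knn_impute(X, k=3):
    """Step 2: fill each NaN with the column mean of the k nearest complete rows.

    Distances use only the columns observed in the row being filled. Features
    are assumed to be on comparable scales (standardize first in practice).
    """
    X = X.copy()
    complete = X[~np.isnan(X).any(axis=1)]            # donor rows without gaps
    for i in np.where(np.isnan(X).any(axis=1))[0]:
        obs = ~np.isnan(X[i])
        dist = np.sqrt(((complete[:, obs] - X[i, obs]) ** 2).sum(axis=1))
        donors = complete[np.argsort(dist)[:k]]
        X[i, ~obs] = donors[:, ~obs].mean(axis=0)
    return X

def savgol_smooth(x, window=11, polyorder=3):
    """Step 3: Savitzky–Golay smoothing via a local least-squares polynomial fit.

    Edges are padded by repeating the end samples (one of several possible modes).
    """
    if window % 2 == 0 or window <= polyorder:
        raise ValueError("window must be odd and greater than polyorder")
    m = window // 2
    A = np.vander(np.arange(-m, m + 1), polyorder + 1, increasing=True)
    coeffs = np.linalg.pinv(A)[0]                     # weights giving the fitted value at offset 0
    padded = np.pad(np.asarray(x, dtype=float), m, mode="edge")
    return np.convolve(padded, coeffs[::-1], mode="valid")

def select_by_pearson(X, y, names, threshold=0.3):
    """Step 4: keep features whose |Pearson r| with the target meets a threshold."""
    r = np.array([np.corrcoef(X[:, j], y)[0, 1] for j in range(X.shape[1])])
    return [n for n, rj in zip(names, r) if abs(rj) >= threshold], r
```

Applied in this order, step 1 hands step 2 a matrix with gaps, step 3 smooths each imputed channel, and step 4 ranks the cleaned channels against ROP.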

2.1.3. Dataset Splitting Strategy

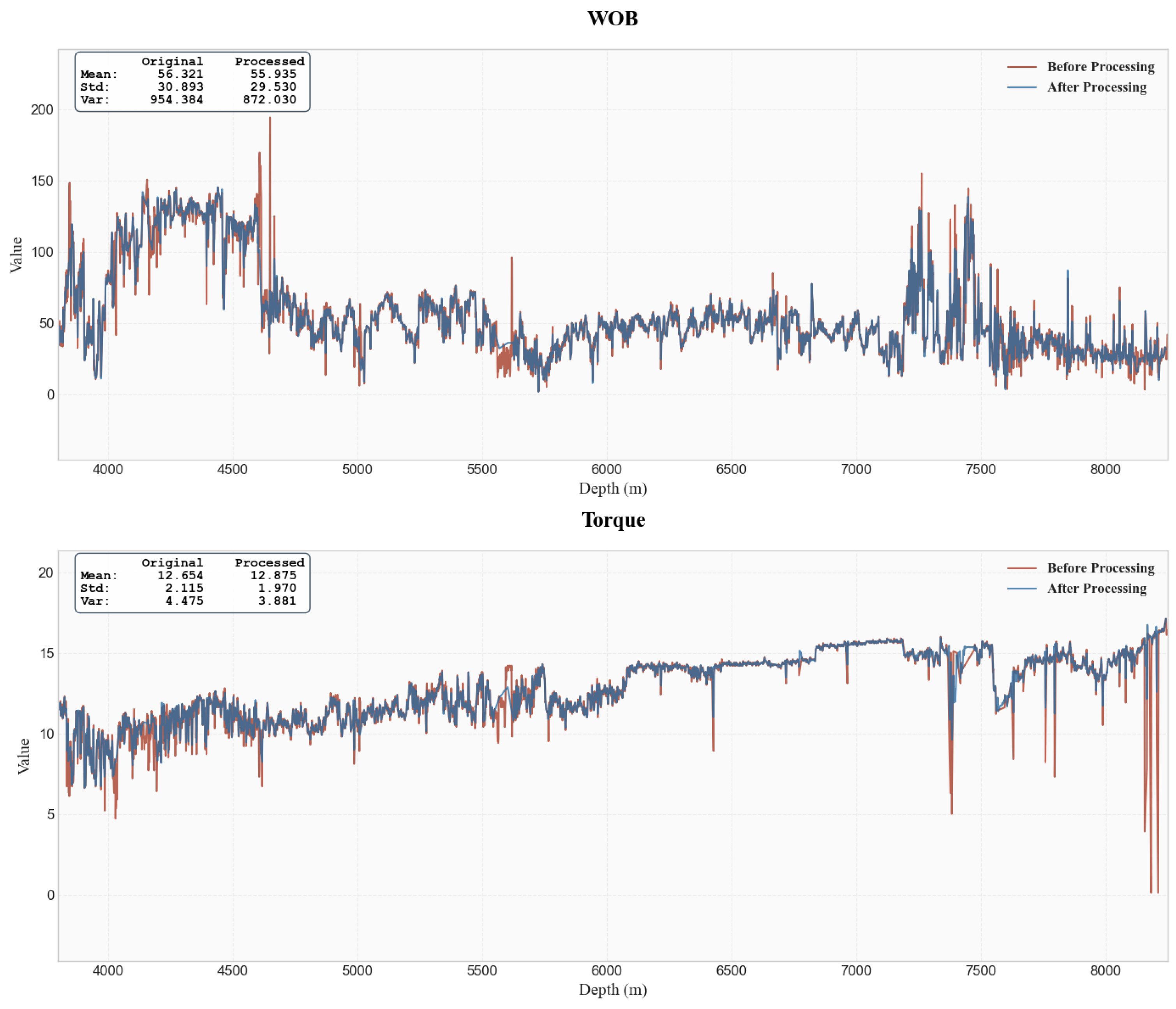

- Training Set (9 wells): Wells X1–X9 were used for model training. Together they cover a wide depth range (1226–8639 m), spanning diverse drilling conditions and geological features.

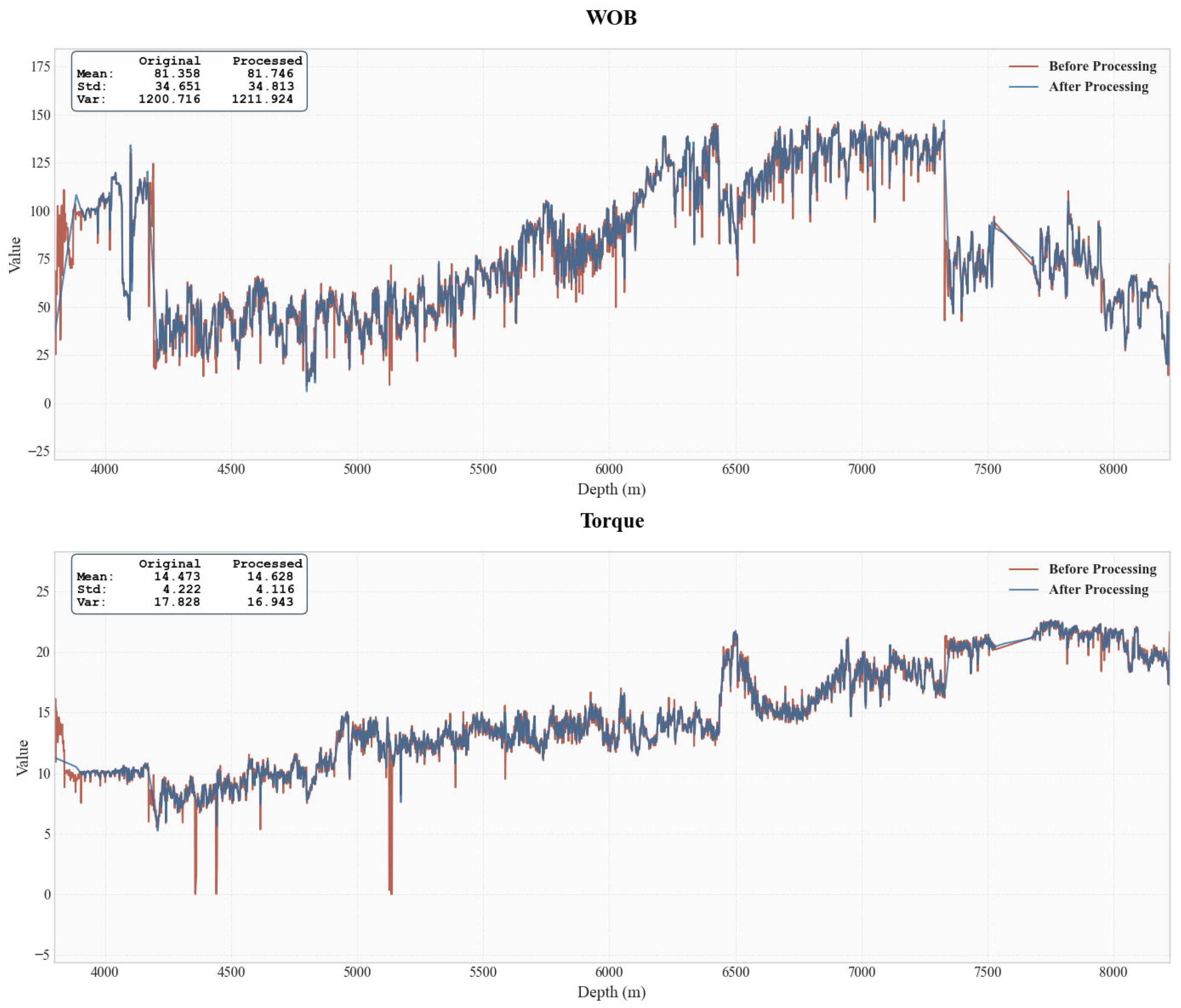

- Validation Set (1 well): Well Y1 (depth range: 3800–8242 m) was designated as the validation well. It provided an intermediate check of the model’s predictive capability on data not used for training, before the final evaluation.

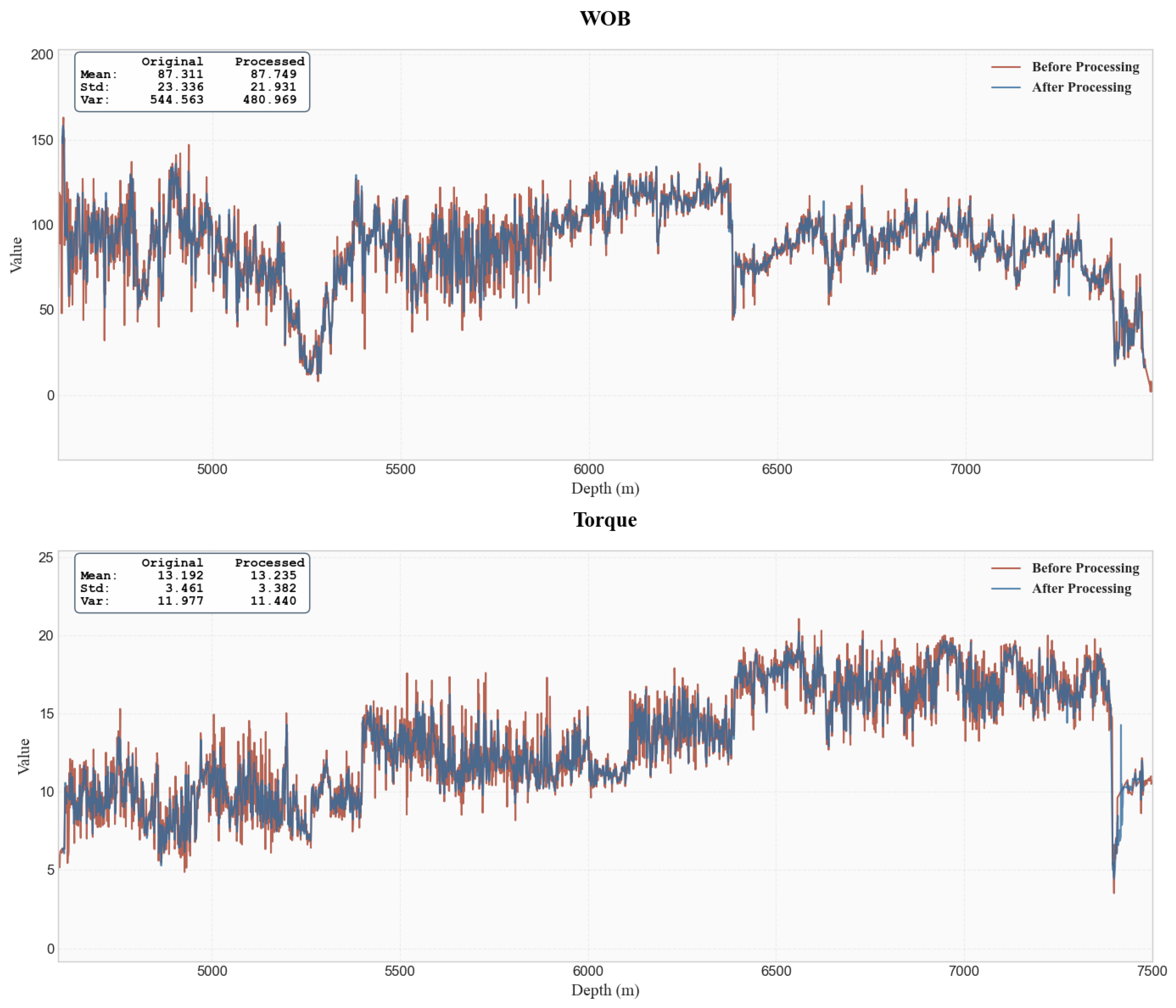

- Test Set (1 well): Well Z1 (depth range: 4602–7542 m) was designated as the fully blind test well. It was excluded from both training and validation and reserved exclusively for the final evaluation, so its results measure the model’s predictive ability on completely unseen data.
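A well-level split like the one described above can be expressed as follows. The well IDs come from the text; the record layout (a dict with a `well` key) and the function name `split_by_well` are hypothetical.

```python
from collections import defaultdict

# Well assignments as stated in the text; the record format is hypothetical.
TRAIN_WELLS = {f"X{i}" for i in range(1, 10)}   # X1..X9
VAL_WELLS = {"Y1"}
TEST_WELLS = {"Z1"}

def split_by_well(records):
    """Assign each record (a dict with a 'well' key) to train/val/test by its well ID."""
    splits = defaultdict(list)
    for rec in records:
        well = rec["well"]
        if well in TRAIN_WELLS:
            splits["train"].append(rec)
        elif well in VAL_WELLS:
            splits["val"].append(rec)
        elif well in TEST_WELLS:
            splits["test"].append(rec)
        else:
            raise KeyError(f"well {well!r} is not assigned to any split")
    return dict(splits)
```

Splitting by whole wells, rather than by randomly sampled rows, keeps neighboring depth samples of Y1 and Z1 out of training. If sliding windows are built for the time-series models, building them within each well after the split avoids windows that straddle two wells.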

2.2. Applications of the Attention Mechanism

2.2.1. Implicit Attention

2.2.2. Explicit Attention
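The headings distinguish implicit and explicit attention, but no formulas survive in this section. As a generic illustration only (an assumption, not necessarily the authors' formulation), explicit temporal attention over recurrent hidden states is often computed as scaled dot-product scores against a query vector, followed by a softmax and a weighted sum. The query `q` below stands in for a learned parameter, and the function name is hypothetical.

```python
import numpy as np

def softmax(z):
    z = z - z.max()              # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def temporal_attention(H, q):
    """Scaled dot-product attention pooling over time.

    H: (T, d) hidden states from an LSTM/GRU, one row per time step.
    q: (d,) query vector (learned in practice; fixed here for illustration).
    Returns the context vector (d,) and the attention weights (T,).
    """
    d = H.shape[1]
    scores = H @ q / np.sqrt(d)  # relevance score of each time step
    alpha = softmax(scores)      # non-negative weights that sum to 1
    context = alpha @ H          # attention-weighted sum of hidden states
    return context, alpha
```

The weights `alpha` are what makes this form "explicit": they can be inspected per time step to see which parts of the drilling history the model relied on.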

2.3. Hybrid Loss Function Formulation

2.3.1. Data Loss Term

2.3.2. Physics-Informed Constraints

1. Mechanical Energy Constraint

2. Hydraulic Energy Constraint

3. Formation Strength Constraint

4. Composite Loss Integration
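The exact constraint formulas are not given in this section. The sketch below shows one plausible way to combine a data term with a physics penalty, under stated assumptions: the mechanical-energy term uses a Teale-type mechanical specific energy (here "MSE" means mechanical specific energy, not mean squared error) in SI units, and the formation-strength coupling is modeled as the inequality MSE ≥ UCS (in Teale's idealization, drilling efficiency cannot exceed 100%), enforced with a squared hinge penalty. The UCS input, the weight `lam`, and both function names are hypothetical; a hydraulic-energy term would be added as another weighted penalty of the same form.

```python
import numpy as np

def mechanical_specific_energy(wob, torque, rpm, rop_m_per_h, bit_diameter):
    """Teale-type mechanical specific energy in SI units (Pa = J/m^3).

    wob [N], torque [N*m], rpm [rev/min], rop [m/h], bit_diameter [m].
    MSE = WOB/A + 2*pi*N*T / (A*ROP), with N in rev/s and ROP in m/s.
    """
    area = np.pi * bit_diameter ** 2 / 4.0
    n_rev_s = rpm / 60.0
    rop_m_s = np.maximum(rop_m_per_h, 1e-6) / 3600.0   # guard against zero/negative ROP
    return wob / area + 2.0 * np.pi * n_rev_s * torque / (area * rop_m_s)

def hybrid_loss(rop_true, rop_pred, wob, torque, rpm, bit_diameter, ucs, lam=0.1):
    """Data term (mean squared error on ROP) plus a hinge penalty on the assumed
    constraint MSE >= UCS. The penalty is zero when the energy implied by the
    predicted ROP is at least the formation strength, and grows quadratically
    with the relative shortfall otherwise.
    """
    data_term = np.mean((rop_true - rop_pred) ** 2)
    mse_pred = mechanical_specific_energy(wob, torque, rpm, rop_pred, bit_diameter)
    violation = np.maximum(ucs - mse_pred, 0.0) / ucs    # relative, dimensionless
    physics_term = np.mean(violation ** 2)
    return data_term + lam * physics_term, data_term, physics_term
```

Because MSE falls as predicted ROP rises, this penalty acts as a soft upper bound on ROP for a given WOB, torque, rotary speed, and formation strength, which is the kind of physically implausible prediction a mechanism constraint is meant to discourage.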

2.3.3. Loss Function and Optimization Objective

2.4. Model Selection and Development

2.4.1. Tree-Based Models

1. Random Forest

2. Extreme Gradient Boosting (XGBoost)

3. Light Gradient Boosting Machine (LightGBM)

4. Categorical Boosting (CatBoost)
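The three boosting libraries listed above share the gradient-boosting principle: trees are added sequentially, each fitted to the negative gradient of the loss, which for squared error is simply the current residual. The NumPy sketch below illustrates that principle with depth-1 trees (stumps) and shrinkage. It omits what distinguishes the libraries, such as XGBoost's regularized second-order objective, LightGBM's histogram-based split finding, and CatBoost's ordered boosting, and it does not cover Random Forest, which instead averages deep trees grown independently on bootstrap samples. Function names are hypothetical.

```python
import numpy as np

def fit_stump(X, r):
    """Find the single-feature threshold split that best fits residuals r (least squares)."""
    best = (np.inf, 0, 0.0, r.mean(), r.mean())   # (sse, feature, threshold, left, right)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j])[:-1]:          # skip the max so the right side is non-empty
            left = X[:, j] <= t
            lv, rv = r[left].mean(), r[~left].mean()
            sse = ((r[left] - lv) ** 2).sum() + ((r[~left] - rv) ** 2).sum()
            if sse < best[0]:
                best = (sse, j, t, lv, rv)
    return best[1:]

def predict_stump(stump, X):
    j, t, lv, rv = stump
    return np.where(X[:, j] <= t, lv, rv)

def gradient_boost(X, y, n_rounds=100, lr=0.1):
    """Squared-error gradient boosting: each stump is fitted to the current residuals."""
    f0 = float(y.mean())
    pred = np.full(len(y), f0)
    stumps = []
    for _ in range(n_rounds):
        residual = y - pred                          # negative gradient of 0.5 * (y - f)^2
        stump = fit_stump(X, residual)
        pred = pred + lr * predict_stump(stump, X)   # shrinkage via the learning rate
        stumps.append(stump)
    return f0, lr, stumps

def boost_predict(model, X):
    f0, lr, stumps = model
    out = np.full(X.shape[0], f0)
    for stump in stumps:
        out = out + lr * predict_stump(stump, X)
    return out
```

A small learning rate makes each tree correct only part of the remaining error, which is why boosted models typically need many rounds but generalize better than a few aggressive steps.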

2.4.2. Time-Series Models

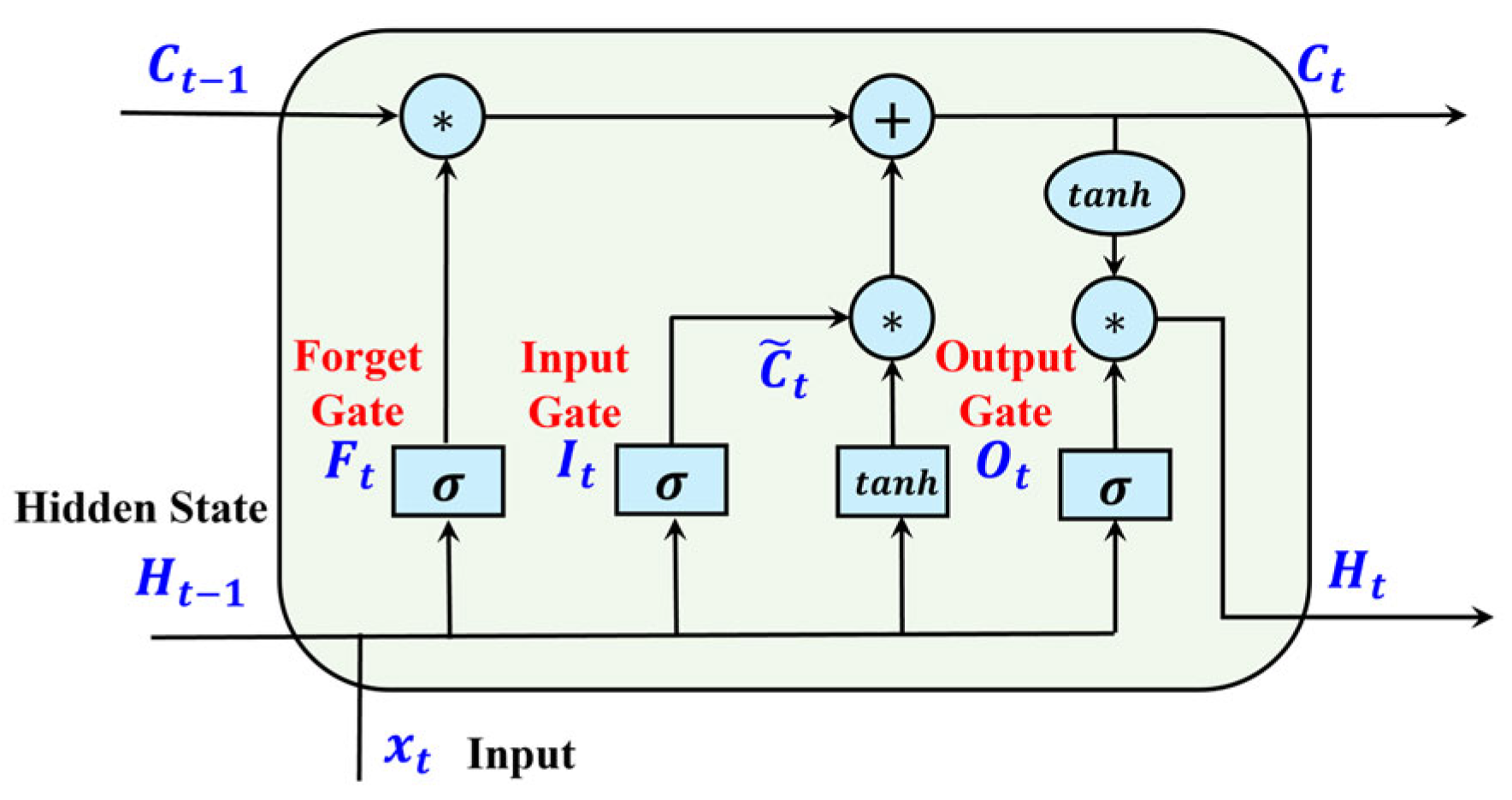

1. Long Short-Term Memory (LSTM)

2. Gated Recurrent Unit (GRU)
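The two recurrent cells compared here can be summarized by their single-step updates. The sketch below follows the standard LSTM equations and the original GRU formulation, in which the reset gate scales the previous state before the recurrent product and the update gate z keeps a fraction z of the old state; some papers and libraries swap the roles of z and 1 - z. The stacked weight layouts and the function names are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h, c, W, U, b):
    """One LSTM step. W: (4H, D), U: (4H, H), b: (4H,); gate rows stacked as [i, f, g, o]."""
    i, f, g, o = np.split(W @ x + U @ h + b, 4)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)   # input, forget, output gates in (0, 1)
    g = np.tanh(g)                                 # candidate cell content
    c_new = f * c + i * g                          # keep part of the old memory, write new content
    h_new = o * np.tanh(c_new)                     # gated view of the cell state
    return h_new, c_new

def gru_step(x, h, W, U, b):
    """One GRU step. W: (3H, D), U: (3H, H), b: (3H,); rows stacked as [z, r, n]."""
    H = h.shape[0]
    wx = W @ x + b
    z = sigmoid(wx[:H] + U[:H] @ h)                # update gate
    r = sigmoid(wx[H:2 * H] + U[H:2 * H] @ h)      # reset gate
    n = np.tanh(wx[2 * H:] + U[2 * H:] @ (r * h))  # candidate state from the reset-scaled past
    return z * h + (1.0 - z) * n                   # z keeps the old state, 1 - z admits the candidate
```

The GRU merges the LSTM's separate cell and hidden states into one vector and uses three weight blocks instead of four, which is why it usually has fewer parameters for the same hidden size.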

2.5. Evaluation Indicators

2.5.1. Mean Absolute Error (MAE)

2.5.2. Coefficient of Determination (R²)

2.5.3. Mean Absolute Percentage Error (MAPE)
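The three evaluation indicators follow their standard definitions: MAE = mean(|y - ŷ|), R² = 1 - SS_res/SS_tot, and MAPE = 100 × mean(|(y - ŷ)/y|). A minimal NumPy version is below; the function names are illustrative, and raising an error on zero targets is a design choice, since MAPE is undefined whenever an observed ROP is zero.

```python
import numpy as np

def mae(y, p):
    """Mean absolute error, in the units of the target (e.g., m/h for ROP)."""
    return float(np.mean(np.abs(y - p)))

def r2(y, p):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    ss_res = np.sum((y - p) ** 2)
    ss_tot = np.sum((y - np.mean(y)) ** 2)
    return float(1.0 - ss_res / ss_tot)

def mape(y, p):
    """Mean absolute percentage error, in percent."""
    if np.any(y == 0):
        raise ValueError("MAPE is undefined when any target value is zero")
    return float(np.mean(np.abs((y - p) / y)) * 100.0)
```

MAE and MAPE report absolute and relative error respectively, while R² measures how much of the variance in ROP the model explains; reporting all three guards against a model that looks good on one scale only.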

3. Results

3.1. Data Processing

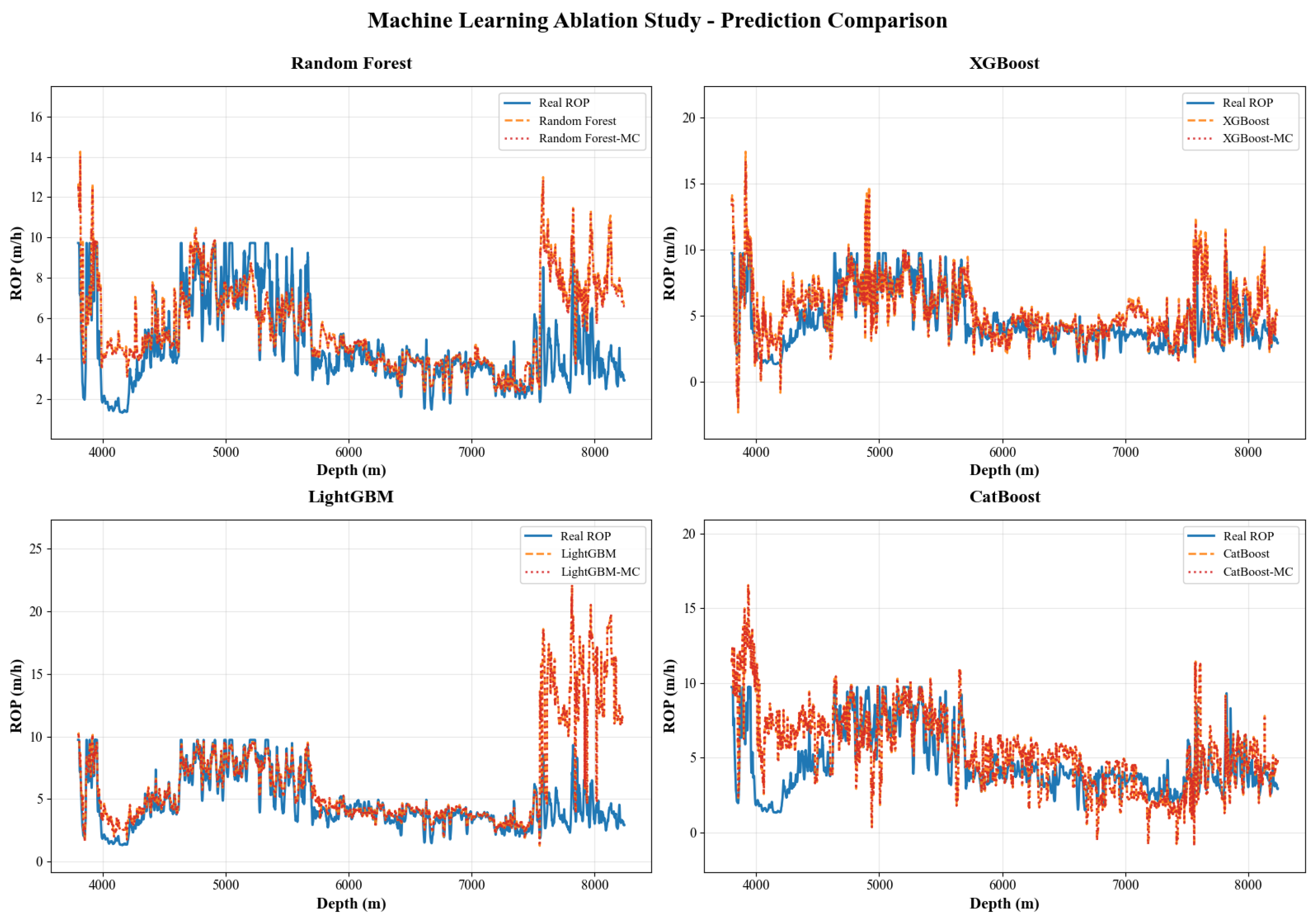

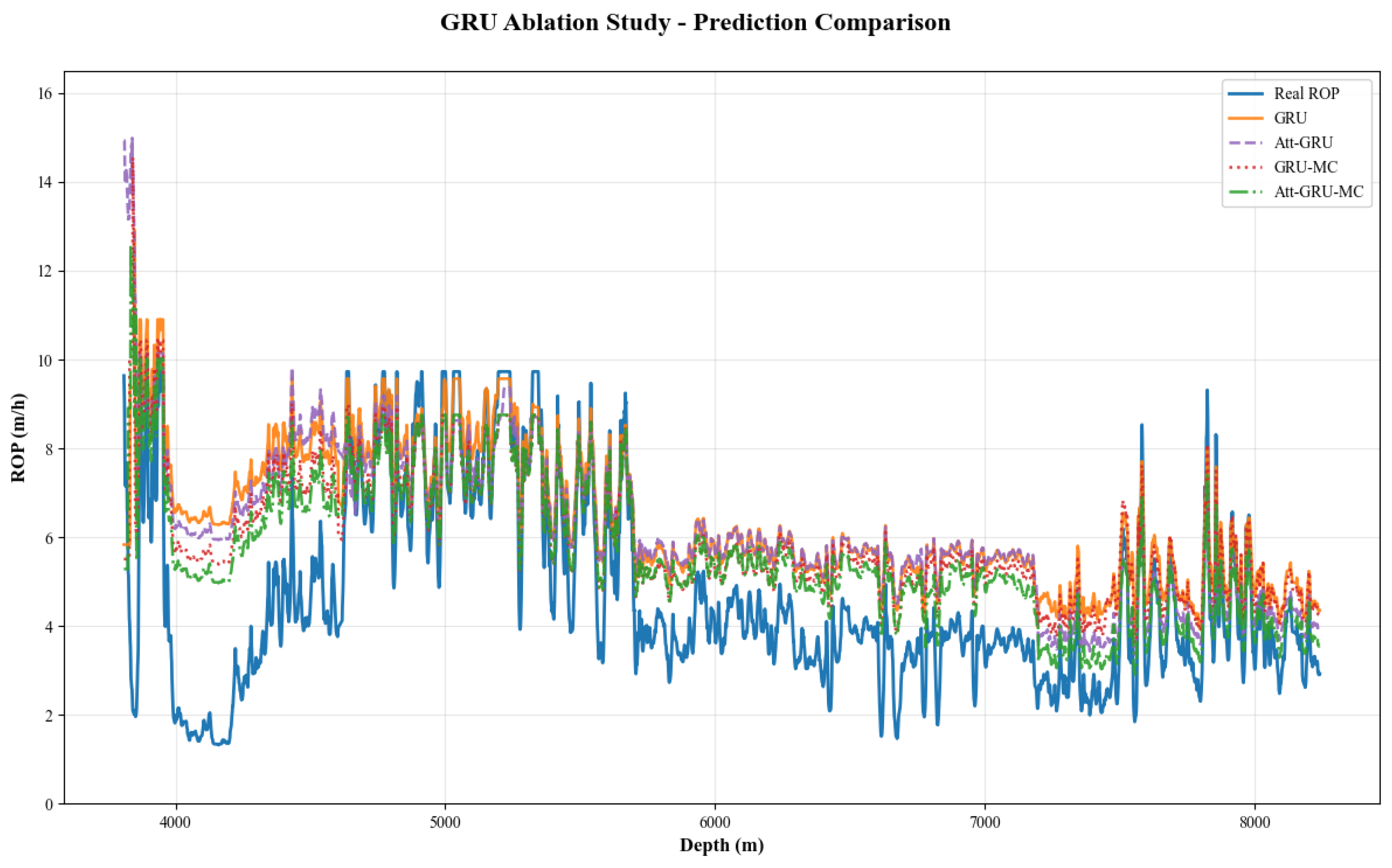

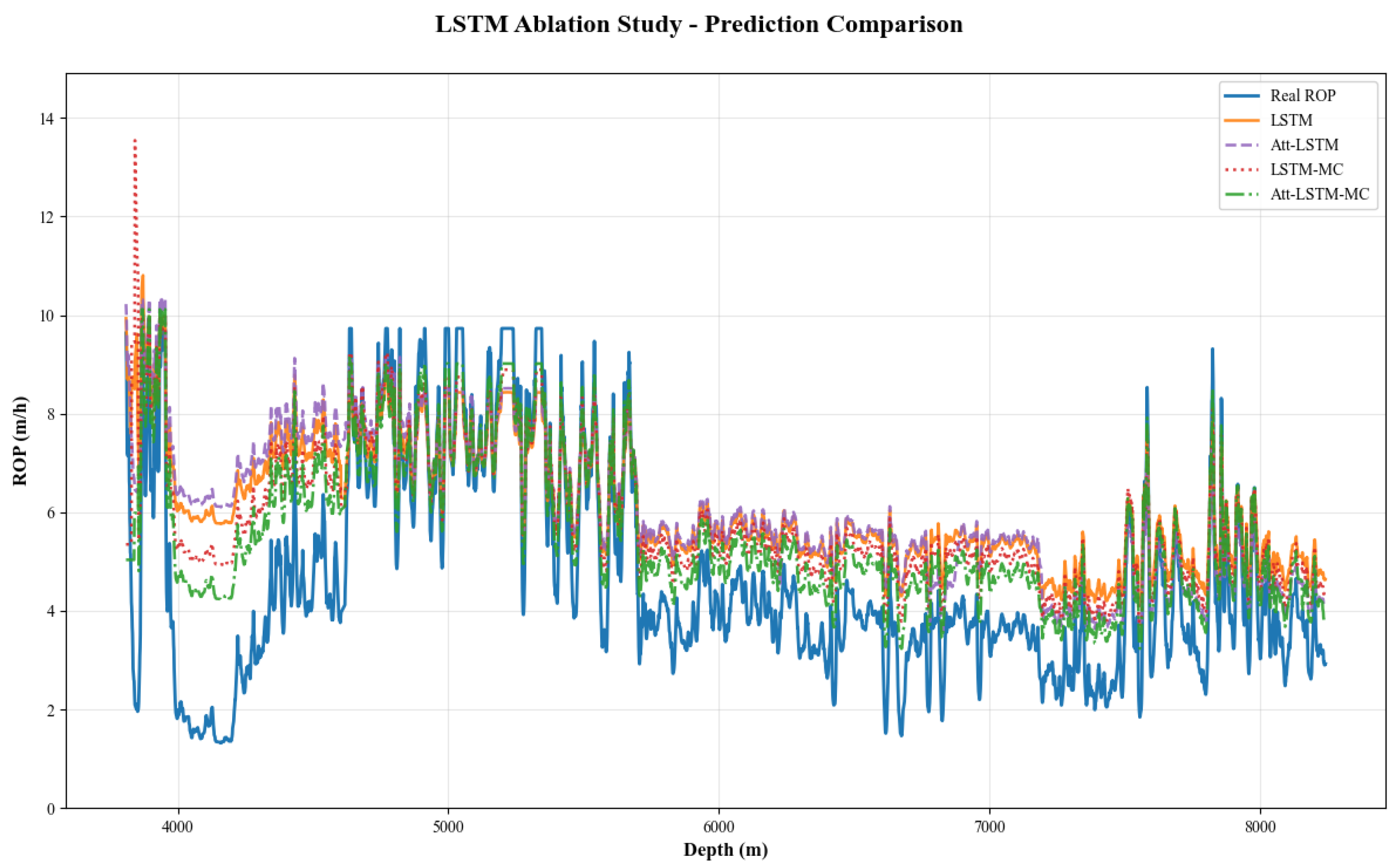

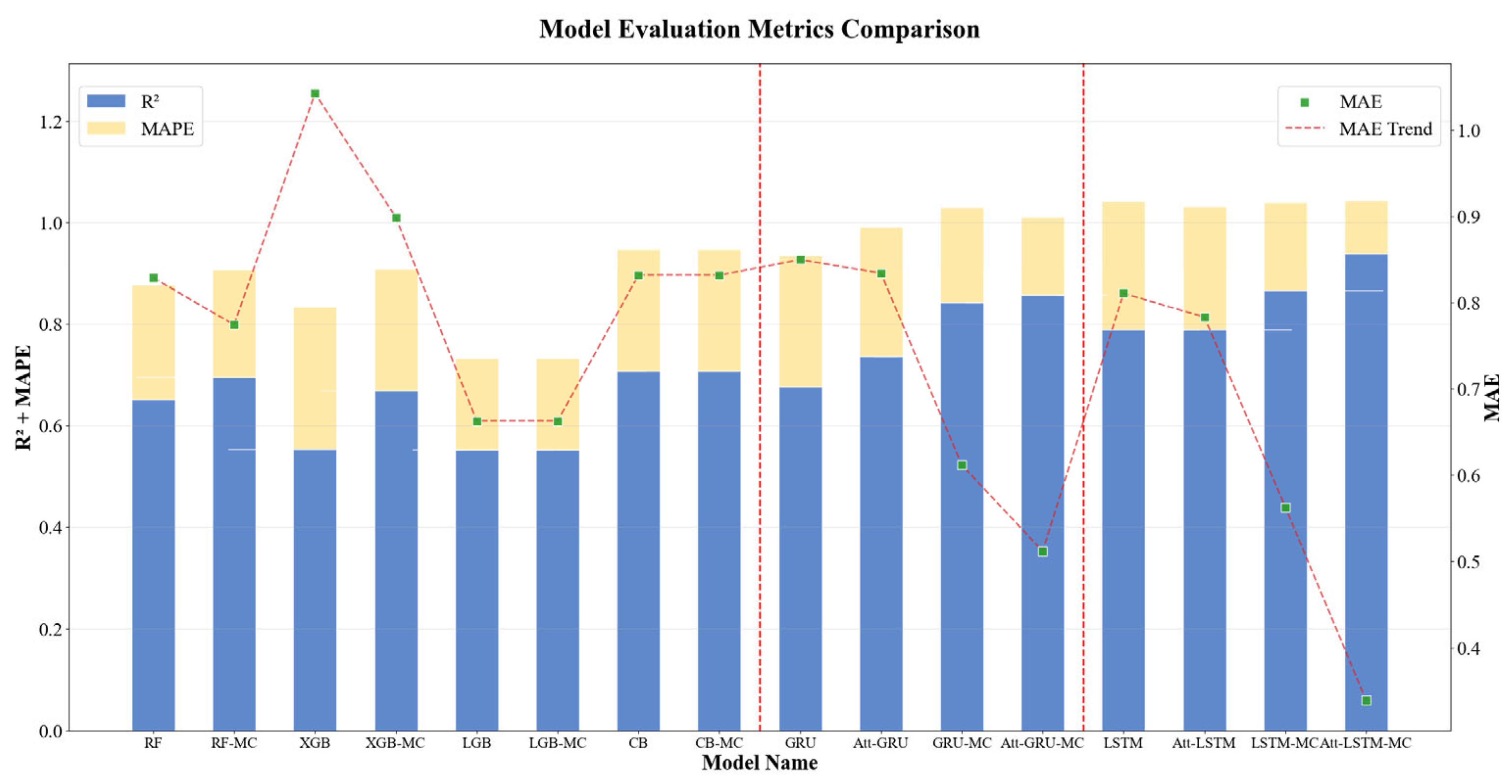

3.2. Ablation Study

4. Discussion

4.1. Analysis of Attention Mechanism

4.2. Analysis of Physics-Based Constraints

4.3. Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ROP | Rate of Penetration |
| WOB | Weight on Bit |
| RPM | Rotational Speed |
| SPP | Standpipe Pressure |
| ECD | Equivalent Circulating Density |
| FlowIn | Inlet Flow Rate |
| LSTM | Long Short-Term Memory |
| Att-LSTM | Attention-based Long Short-Term Memory |
| Att-LSTM-MC | Attention-based Long Short-Term Memory with Mechanism Constraints |
| GRU | Gated Recurrent Unit |
| Att-GRU | Attention-based Gated Recurrent Unit |
| Att-GRU-MC | Attention-based Gated Recurrent Unit with Mechanism Constraints |


| Category | Parameters |
|---|---|
| Engineering | Depth, Torque, WOB, RPM, SPP, ROP, ECD, Inlet Density, Inlet Temperature, Outlet Temperature |
| Formation | Formation |
| Drilling Tools | Bit Size, Bit Type, Drill Footage, Total Nozzle Flow Area |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, C.; Zhang, C.; Li, N.; Wang, C.; Chen, L.; Zhang, R.; Zhu, L.; Ye, S.; Li, Q.; Liu, H. Mechanism-Guided and Attention-Enhanced Time-Series Model for Rate of Penetration Prediction in Deep and Ultra-Deep Wells. Processes 2025, 13, 3433. https://doi.org/10.3390/pr13113433


Chicago/Turabian Style: Zhang, Chongyuan, Chengkai Zhang, Ning Li, Chaochen Wang, Long Chen, Rui Zhang, Lin Zhu, Shanlin Ye, Qihao Li, and Haotian Liu. 2025. "Mechanism-Guided and Attention-Enhanced Time-Series Model for Rate of Penetration Prediction in Deep and Ultra-Deep Wells" Processes 13, no. 11: 3433. https://doi.org/10.3390/pr13113433
