1. Introduction

Monoclonal antibodies (mAbs) are among the most successful biopharmaceutical products, with approved uses in oncology, autoimmune disorders, and infectious diseases [1], and global annual sales exceeding $120 billion as of 2017 [2]. As demand continues to rise, manufacturers face growing pressure to increase production efficiency and maintain quality under stringent regulatory standards. Upstream bioprocessing plays a critical role in determining final yield and product quality, as even minor deviations in cell culture conditions can significantly affect process outcomes [3].

Traditional process-optimization methods such as design of experiments (DoE) and mechanistic modeling have long supported process understanding and control [4]. However, these approaches struggle to capture the nonlinear and multivariable dynamics of large-scale mammalian cell cultures, where numerous interacting parameters create complex dependencies that are difficult to interpret [5].

Recent advances in data availability and computational power have driven the adoption of machine learning (ML) and related data-driven methods in bioprocessing [6]. ML models can reveal hidden patterns in process data that conventional statistical methods may overlook [7], complementing traditional tools for yield prediction, process control, and real-time decision-making using process analytical technologies (PAT) and manufacturing execution systems [8]. In parallel, hybrid modeling approaches that integrate ML with mechanistic insights have shown promise for improving interpretability and regulatory acceptance in continuous and automated manufacturing settings [9,10,11].

Despite this progress, the application of ML in Good Manufacturing Practice (GMP) environments remains limited by data heterogeneity, small batch numbers, and the black-box nature of many algorithms [12,13,14,15]. Mechanistic models, while interpretable, are constrained by the lack of quantitative descriptors for mammalian cell behavior and by difficulties in scaling to industrial systems [16,17,18]. Moreover, even well-designed mechanistic frameworks often fail to accurately capture real-world process variability, due to biological complexity, nonlinear responses, and incomplete experimental observability [19,20,21]. These factors make it challenging to achieve predictive precision and adaptability using mechanistic approaches alone.

As a result, purely mechanistic models are increasingly being complemented by ML-based and hybrid strategies that can learn directly from empirical data without requiring fully specified biochemical equations [22,23,24]. By identifying hidden correlations and nonlinear dependencies among process parameters, such data-driven frameworks can support more responsive and predictive control strategies in complex bioprocess environments. Furthermore, advances in deep learning have shown that models can automatically extract abstract data representations without prior mechanistic assumptions, providing powerful tools for uncovering latent structure in high-dimensional process datasets [25].

In this study, we apply a data-driven modeling framework to the upstream phase of mAb production using industrial-scale batch data provided by a global contract development and manufacturing organization (CDMO). The methodology combines exploratory data analysis (EDA) and machine learning regression to identify the process parameters that most strongly influence harvested yield. By focusing on empirical patterns rather than predefined kinetic models, this work demonstrates how ML-based analysis of historical data can provide actionable insights for upstream optimization. The study highlights both the potential and limitations of ML for practical bioprocess improvement, emphasizing interpretability, data quality, and scalability as key considerations for future industrial implementation.

2. Materials and Methods

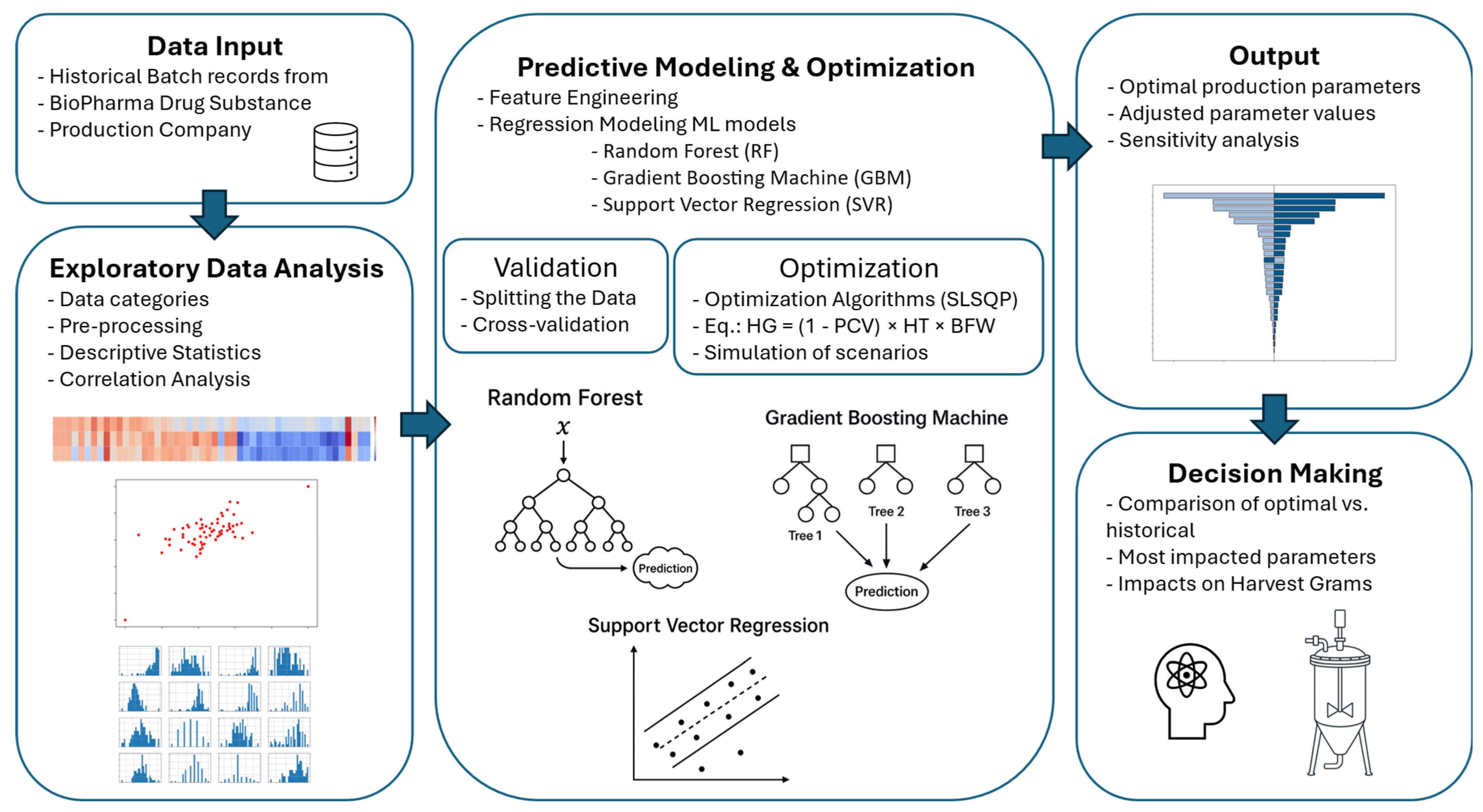

The dataset for this study was provided by a global CDMO specializing in large-scale mAb production. The objective was to improve upstream bioprocessing yield by analyzing historical batch records, identifying key process parameters, and applying predictive modeling and exploratory analysis strategies. The study adopted a purely statistical approach: ML models were trained on historical batch data without relying on mechanistic or first-principles modeling. The methodology comprised data preprocessing, exploratory data analysis (EDA), ML model development, and process analysis. An overview of the methodology is provided in Figure 1.

2.1. Process Overview

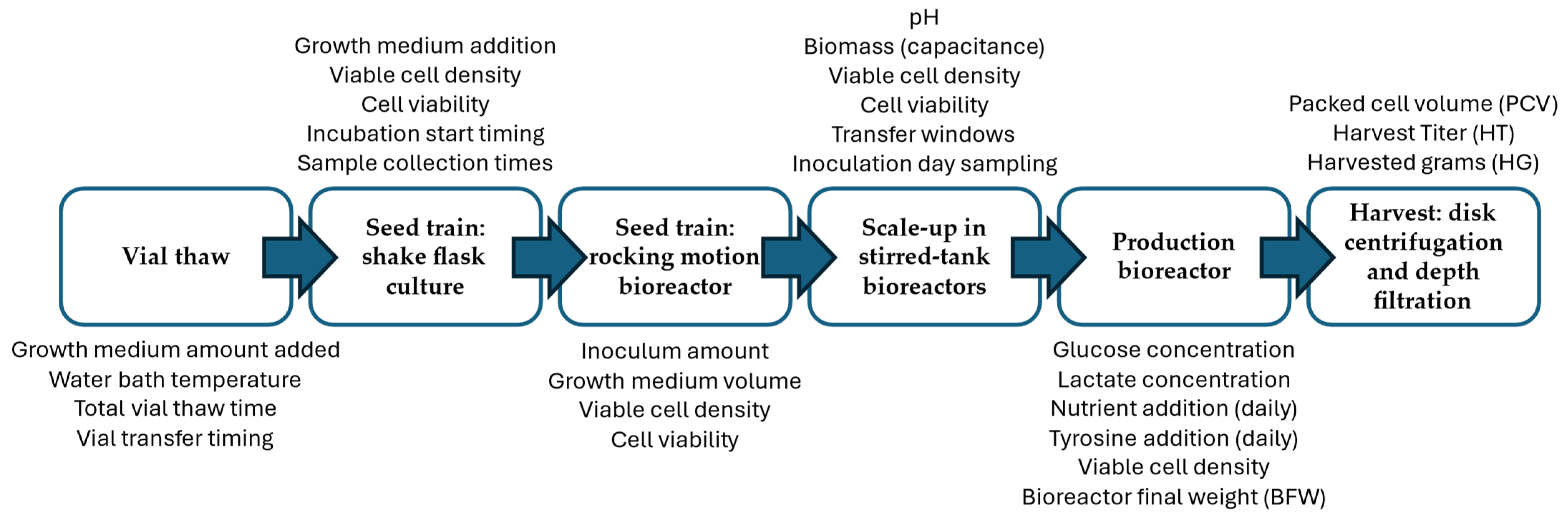

The production of mAbs involves several upstream processing steps that influence the final yield. The upstream phase primarily includes cell culture and expansion before the harvest step, when the drug substance is extracted for further downstream processing. In this study, the upstream process under analysis consists of the following key steps. The process begins with the thawing of a cryopreserved working cell bank vial of a recombinant Chinese hamster ovary (CHO) cell line expressing a monoclonal antibody, maintained under controlled, GMP-like conditions. The thawing rate and initial viability of cells can impact the subsequent expansion steps. After thawing, the cells are first expanded into small-scale shake flasks, providing an initial controlled environment for growth. Key parameters such as temperature, pH, and nutrient availability ensure that the cells reach target density before further scale-up. The culture is then transferred to a wave bag bioreactor, allowing for a greater volume of culture while maintaining proper oxygenation and mixing. Challenges at this stage include transfer timing and adaptation lag, which can affect the overall growth trajectory.

Following this, the upstream process includes several scale-up stages in bioreactors of increasing capacity, leading to the final production scale. Each transfer presents the risk of post-transfer growth lag as cells adjust to the new environment. Proper transfer timing, cell viability, and inoculation density are critical to maintaining exponential growth during these stages. The final stage of upstream processing is carried out in a high-capacity production bioreactor, where the bulk of mAb production is achieved. Here, nutrient additions, pH regulation, and aeration control ensure that cells remain in a favorable production state. A key operational factor at this stage is the transfer window from the preceding bioreactor to the production bioreactor, which was investigated in this study for potential yield improvement. At the end of the cell culture phase, the drug substance is harvested through centrifugation and depth filtration. The final harvested yield is influenced by cell viability, viable cell density, and packed cell volume, which determine the efficiency of separation and product recovery. The upstream process flow is illustrated in Figure 2, which summarizes the sequence of unit operations from vial thaw to harvest and includes representative examples of process variables at each stage. These stages represent only the upstream phase of monoclonal antibody production analyzed in this study and do not include downstream purification steps.

Several process parameters influencing the final harvested yield were analyzed in this study. These included transfer timing, which impacts cell adaptation and growth consistency during the transition between bioreactors; cell growth lag, quantified as the time interval between inoculation and the onset of exponential growth based on viable cell density data; the adjustment of nutrient additions, such as tyrosine and glucose, which plays a critical role in maintaining cell viability and productivity; and process control constraints, including time windows for inoculation, incubation duration, and monitored variables, which influence process robustness.

2.2. Data Collection and Preprocessing

Historical batch records were used for the analysis. The dataset comprised three years of batch data from an industrial-scale upstream bioprocess covering various operational parameters, process variables, and batch outcomes.

Prior to the analysis, data preprocessing was performed to ensure consistency and reliability. Batches with missing critical values or incomplete production records were excluded. Additionally, nonrelevant variables, such as row counters and metadata unrelated to process outcomes, were removed. Outlier detection was conducted through exploratory data visualization, including the inspection of histograms, scatter plots, and box plots to identify extreme values. These potential outliers were subsequently analyzed to determine whether they resulted from genuine process deviations or data entry errors [23,26]. To maintain uniformity, numerical features were normalized to ensure comparable scales across different parameters.
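The preprocessing steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: the column names, the tiny synthetic table, and the use of z-score standardization are all hypothetical, since the text states only that features were "normalized" and the real variable names are confidential.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical toy batch table; the real column names are not disclosed.
raw = pd.DataFrame({
    "row_id": [1, 2, 3, 4, 5],                        # row counter, not a process variable
    "inoculum_weight": [10.2, 9.8, np.nan, 10.5, 10.0],
    "transfer_window_h": [71.0, 73.5, 72.0, 70.5, 74.0],
    "harvested_g": [980.0, 1010.0, 995.0, np.nan, 1025.0],
})

def preprocess(df, critical_cols, drop_cols):
    """Drop incomplete batches and non-relevant columns, then standardize."""
    out = df.dropna(subset=critical_cols).drop(columns=drop_cols)
    # Assumption: z-score scaling (zero mean, unit variance per column).
    scaled = StandardScaler().fit_transform(out)
    return pd.DataFrame(scaled, columns=out.columns, index=out.index)

clean = preprocess(
    raw,
    critical_cols=["inoculum_weight", "harvested_g"],
    drop_cols=["row_id"],
)
```

In a modeling pipeline, the scaler would normally be fitted on the training split only, so that information from held-out batches does not leak into training.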

The dataset was categorized into distinct groups based on their role in the bioprocess. For clarity, the terminology used in this study follows standard process-control conventions: “process inputs” denote adjustable operational settings; “monitored variables” represent measured process-state conditions; and “dependent variables” are the modeled yield outcomes.

Dependent Variables (Yield Indicators): The primary output metric was harvested grams (HG), with packed cell volume (PCV), bioreactor final weight (BFW), and harvest titer (HT) serving as critical yield-determining factors.

Process Inputs (Adjustable Process Variables): This group includes all controllable operational factors, such as growth medium addition, nutrient and tyrosine supplementation, inoculum weight, and time-based process parameters (e.g., incubation durations, sampling times, and transfer windows).

Monitored Variables (Process-State Conditions): These variables were measured throughout the process but not directly adjusted. Key variables included pH, glucose, lactate, viable cell density (VCD), biomass capacitance, and aeration conditions.

After preprocessing, 65 batches were retained for analysis, containing 79 process inputs and 104 monitored variables. These cleaned and structured data served as the foundation for EDA and ML modeling.

2.3. Exploratory Data Analysis

Before applying ML models, EDA was conducted to assess the dataset’s structure, identify key trends, and detect potential data inconsistencies. The goal of this analysis was to understand the relationships between process parameters and yield outcomes and to establish a baseline for model development.

EDA was performed using descriptive statistics, correlation analysis, and visualization techniques [5,27]. Descriptive statistics were used to summarize the key variables, providing insights into the distribution of critical process parameters (CPPs). Correlation matrices were generated to evaluate the relationships between process inputs, monitored variables, and dependent yield indicators. Heatmaps were employed to visualize the correlations between process parameters and harvested yield. Parameters showing negligible or inconsistent correlations with yield outcomes were excluded, while those exhibiting meaningful relationships were retained as candidate features. In addition, scatter plots and histograms were used to analyze parameter distributions across batches, helping identify potential trends or process inconsistencies.

EDA insights were used to guide feature selection and model development, ensuring that only the most relevant parameters were considered in the ML models. By examining correlations and variable distributions, EDA helped refine the input space for predictive modeling and improve model interpretability and performance.

2.4. Machine Learning Model Development

To build predictive models for bioprocess yield improvement, three ML regression techniques were employed within a purely statistical, data-driven framework: no mechanistic representations of the bioprocess were incorporated, and the models captured empirical relationships solely from historical process data. The goal of model development was to identify the process parameters that most strongly influenced production yield and to use these insights to improve future batch performance. The three models were random forest (RF) regression, gradient boosting machine (GBM), and support vector regression (SVR), each used to predict the key yield indicators HT, PCV, and BFW. Each fitted model predicted a single dependent variable (univariate output) from a multivariate set of input features comprising process inputs and monitored variables. Training one model per output is common in ML practice for multi-output problems, particularly when the outputs differ significantly in behavior or predictive difficulty [28]. The models are therefore univariate in output, while the overall framework remains multivariate in its inputs.

RF was used to capture nonlinear relationships and feature interactions [29,30]; its tuned hyperparameters included tree depth [31], the number of estimators, and the minimum sample split. GBM was selected for its ability to improve prediction accuracy by iteratively minimizing errors, using additive training of weak learners to focus on the residuals of prior models [32]; its tuned hyperparameters included the learning rate, the number of estimators, and tree depth. SVR was implemented to model complex relationships with a kernel-based approach that fits a function within an ε-insensitive margin while penalizing larger prediction errors, a widely adopted method in nonlinear regression tasks [33].

Hyperparameter tuning was performed using GridSearchCV, which systematically evaluated multiple combinations of model parameters to identify the configuration yielding the highest cross-validated R² score; 5-fold cross-validation was used to evaluate model generalizability. For example, the grid included ranges such as the following. RF: number of trees (100–500), maximum depth (3–10), and feature sampling (‘sqrt’, ‘log2’). GBM: estimators (25–500), learning rate (0.005–0.05), and maximum depth (3–5). SVR: kernel (‘linear’, ‘rbf’), C (0.01–50), gamma (‘scale’, ‘auto’), and epsilon (0.01–0.15). The final configuration for each model corresponded to the best-performing combination under 5-fold cross-validation.
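The grid search described above can be sketched with scikit-learn as follows. This is only an illustration: the data are synthetic, the specific grid points are assumptions chosen from within the quoted SVR ranges, and wrapping the regressor in a scaling pipeline is a design choice of this sketch rather than something stated in the text.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Synthetic stand-in for a training set of 52 batches and 6 features.
rng = np.random.default_rng(42)
X = rng.normal(size=(52, 6))
y = 2.0 * X[:, 0] - 1.0 * X[:, 3] + rng.normal(scale=0.1, size=52)

# Coarse grid inside the SVR ranges quoted in the text (grid points assumed).
param_grid = {
    "svr__kernel": ["linear", "rbf"],
    "svr__C": [0.01, 1.0, 50.0],
    "svr__gamma": ["scale", "auto"],
    "svr__epsilon": [0.01, 0.15],
}

search = GridSearchCV(
    make_pipeline(StandardScaler(), SVR()),  # scaling is refit inside each fold
    param_grid,
    scoring="r2",   # select the configuration with the highest mean CV R^2
    cv=5,           # 5-fold cross-validation
)
search.fit(X, y)
best_params, best_r2 = search.best_params_, search.best_score_
```

Placing the scaler inside the pipeline means it is refitted on each training fold, which keeps the cross-validated score free of leakage from the validation fold.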

The models were evaluated using the coefficient of determination (R²), which measures the proportion of variance explained; the mean absolute error (MAE), which provides an average magnitude of prediction errors; and the mean squared error (MSE), which gives higher weight to large deviations. These metrics are standard in model evaluation for continuous output predictions [34].
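As a small worked example of the three metrics, the sketch below evaluates two sets of predictions on made-up values (the numbers are illustrative only and are not study data). It also shows why R² can be negative: a model that predicts worse than simply using the mean of the observations yields a residual sum of squares larger than the total sum of squares.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Illustrative values only (e.g., a yield indicator scaled to its average).
y_true = np.array([1.00, 1.02, 0.97, 1.05, 0.99])
y_good = np.array([1.01, 1.00, 0.98, 1.03, 1.00])   # close to the observations
y_poor = np.full_like(y_true, 1.20)                 # constant, far from the mean

r2_good = r2_score(y_true, y_good)
mae_good = mean_absolute_error(y_true, y_good)
mse_good = mean_squared_error(y_true, y_good)

# Worse than predicting the mean of y_true, so R^2 drops below zero.
r2_poor = r2_score(y_true, y_poor)
```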

The training pipeline was designed to optimize the following objective function:

where HG (harvested grams) represents the total product mass obtained after harvesting (g), PCV (packed cell volume) is expressed as a fraction of total bioreactor volume (dimensionless), HT (harvest titer) is measured in grams of antibody per liter of harvest fluid (g/L), and BFW (bioreactor final weight) corresponds to the total liquid weight in the production bioreactor (kg or L, assuming 1 kg ≈ 1 L). The objective function is a process-informed composite metric representing the total harvested product mass: it increases with harvest volume (BFW) and antibody concentration (HT) and decreases with the non-productive cell fraction (PCV).
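A form consistent with the definitions and units above, offered here as an assumption rather than as the authors' exact expression, is the product of titer, harvest volume, and the cell-free fraction of that volume:

```latex
\mathrm{HG} \;=\; \mathrm{HT} \times \mathrm{BFW} \times \left(1 - \mathrm{PCV}\right),
\qquad
[\mathrm{g}] \;=\; [\mathrm{g/L}] \times [\mathrm{L}] \times [\text{dimensionless}]
```

Under this reading, HG rises with HT and BFW and falls as PCV grows, which matches the qualitative behavior described in the text.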

After training and validating the models, an exploratory parameter-search framework was applied. Sequential least squares programming (SLSQP) [35] was used to maximize HG, subject to process constraints. Sensitivity analysis was performed to identify the most influential features of yield, ensuring that the optimization strategy focused on the most impactful process variables.
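A bounded SLSQP search of this kind can be sketched with SciPy as shown below. Everything specific here is hypothetical: `predict_bfw` is a smooth toy surrogate standing in for the trained BFW model, the averages and bounds are invented, and HG is assumed to be the product HT × BFW × (1 − PCV), which is consistent with the stated units but not confirmed by the text.

```python
import numpy as np
from scipy.optimize import minimize

HT_MEAN, PCV_MEAN = 3.0, 0.05   # hypothetical historical averages (g/L, fraction)

def predict_bfw(x):
    """Hypothetical smooth surrogate standing in for the trained BFW model (L)."""
    return 1000.0 + 40.0 * x[0] - 25.0 * (x[0] - 0.5) ** 2 - 30.0 * (x[1] - 0.2) ** 2

def negative_hg(x):
    """SLSQP minimizes, so return the negated (assumed) harvested grams."""
    return -(HT_MEAN * predict_bfw(x) * (1.0 - PCV_MEAN))

x0 = np.array([0.0, 0.0])                # scaled historical-average settings
bounds = [(-1.0, 1.0), (-1.0, 1.0)]      # allowed operating window per input

result = minimize(negative_hg, x0, method="SLSQP", bounds=bounds)
best_inputs, best_hg = result.x, -result.fun
```

In this toy surface the unconstrained optimum of the first input lies outside its window, so the search stops on the upper bound. This is one way recommended settings can end up at the edge of the allowed range, and it illustrates why bounds should reflect the region actually covered by historical data.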

2.5. Output

The output of the ML models consisted of model-recommended production parameters and adjusted process variable values aimed at improving the final harvested yield. The predictive models generated estimates for BFW, HT, and PCV based on historical batch data and identified key process parameters that influenced these outcomes.

To determine the most impactful parameters, a feature importance analysis was conducted, ranking the input variables based on their contribution to yield improvement [29,36]. The optimization step utilized the best-performing model to recommend adjusted process conditions that would lead to an increase in HG.
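One common way to obtain such a ranking is the impurity-based importance exposed by a fitted random forest, sketched below on synthetic data. The text does not specify which importance measure was used, so this particular choice, the generic parameter names, and the data are all assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Synthetic data: 65 batches, 5 generic parameters; param_1 dominates by design.
rng = np.random.default_rng(1)
X = rng.normal(size=(65, 5))
y = 4.0 * X[:, 1] + 0.5 * X[:, 3] + rng.normal(scale=0.2, size=65)
names = [f"param_{i}" for i in range(5)]

rf = RandomForestRegressor(n_estimators=200, random_state=0).fit(X, y)

# Impurity-based importances sum to 1; sort from most to least important.
ranking = sorted(zip(names, rf.feature_importances_), key=lambda t: t[1], reverse=True)
```

Impurity-based importances describe how the fitted model uses each feature, not causal influence on the process, and they can be unstable with few batches or correlated inputs.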

Additionally, a sensitivity analysis was performed to evaluate how variations in the process inputs affected the yield [37]. This allowed for the identification of CPPs that had the greatest influence on production outcomes. The results of this sensitivity analysis provided insight into how specific parameter adjustments could improve process robustness and batch-to-batch consistency.

2.6. Decision-Making

After developing and validating the ML models, the next step involved leveraging the model predictions to support data-driven decision-making for upstream bioprocess improvement. The primary objective of this stage was to translate model insights into actionable process modifications that could enhance the production yield.

The decision-making framework was designed to compare historical process performance against the model-recommended parameter settings. This analysis focused on identifying the key process variables with the highest impact on the final HG. By assessing the influence of monitored variables and process inputs on yield outcomes, process engineers can make informed adjustments to enhance batch consistency and maximize production efficiency [23,38,39].

A comparative analysis was performed between the predicted recommended conditions and actual historical batch performance, including the following key steps:

Parameter Adjustment Recommendations: The ML models identified critical factors that significantly impacted yield. These insights were used to propose recommended parameter settings.

Impact Assessment: The sensitivity analysis results provided a quantitative measure of how changes in specific process variables would affect overall HG.

3. Results

3.1. Exploratory Data Analysis

EDA was conducted to understand the dataset structure, identify correlations between variables, and determine the key features for ML modeling. The primary objective of EDA was to filter out noninformative parameters, visualize trends, and assess feature distributions.

3.1.1. Feature Distribution and Variability Analysis

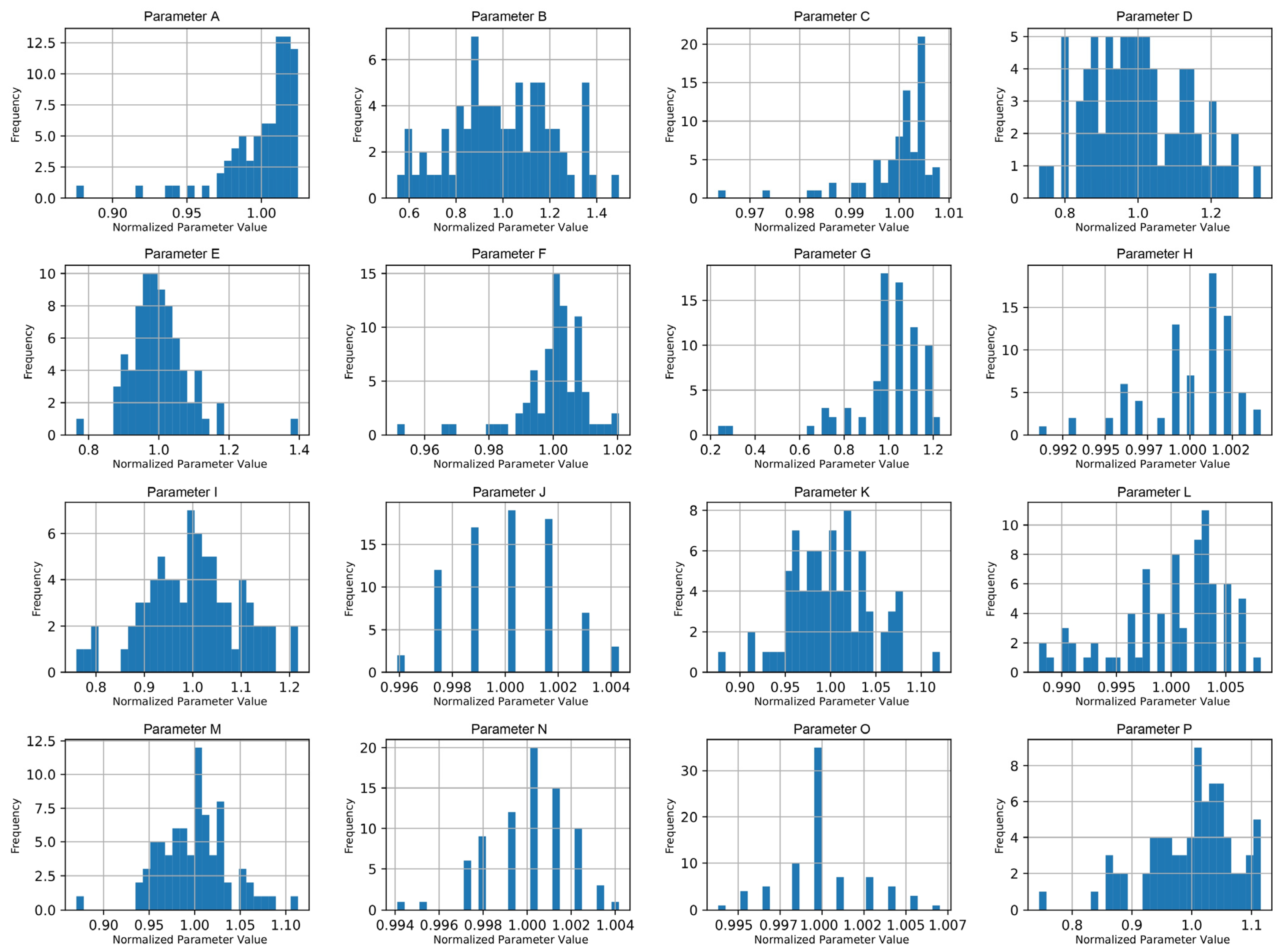

To analyze variability, histograms (Figure 3) were generated for all parameters, allowing for the identification of those with minimal variance across batches. Parameters showing little to no variation were excluded from further analysis, as they would not contribute meaningful insights to the predictive model.

Figure 3 presents representative examples of these histograms for a subset of sixteen parameters, illustrating the general shapes of the normalized distributions and enabling visual comparison of variability and skewness across parameters.

Variability was assessed visually from the parameter histograms and descriptive statistics. Parameters displaying nearly uniform or constant values across all batches were considered non-informative and excluded from further modeling. Additionally, normalization ensured comparability across parameters, enabling effective ML modeling.
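The study assessed variability visually, but the same screening can be approximated programmatically. The sketch below drops near-constant columns using a standard-deviation threshold; the threshold value, the column names, and the toy data are assumptions made for illustration.

```python
import pandas as pd

def drop_near_constant(df, min_std=1e-3):
    """Return df without columns whose standard deviation is at or below min_std."""
    stds = df.std(ddof=0)
    return df.loc[:, stds > min_std]

# Hypothetical batch table with two setpoint-like columns that never change.
batches = pd.DataFrame({
    "setpoint_temp": [37.0, 37.0, 37.0, 37.0],
    "tyrosine_add_kg": [1.10, 1.25, 0.95, 1.30],
    "agitation_rpm": [80.0, 80.0, 80.0, 80.0],
})
kept = drop_near_constant(batches)
```

Such a filter should be applied before normalization, because standardizing a constant column is undefined and scaling can mask how little a parameter actually varied.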

3.1.2. Correlation Analysis

Following the initial screening, a comprehensive correlation analysis was performed to quantify the relationships among process inputs, monitored variables, and the dependent variables (PCV, BFW, and HT). Pearson correlation coefficients were calculated separately for these categories of independent variables, and correlation matrices were generated to identify the parameters most strongly associated with each dependent variable.

The top five parameters with the strongest correlations to each dependent variable are summarized in Table 1. The parameter names were generalized to preserve confidentiality while maintaining interpretability.
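Ranking parameters by Pearson correlation with a dependent variable can be sketched as follows. The data are synthetic, the generic `param_i` names are placeholders, and ranking by absolute correlation is an assumption about how "strongest" was defined.

```python
import numpy as np
import pandas as pd

def top_correlated(features, target, k=5):
    """Rank feature columns by absolute Pearson correlation with the target."""
    r = features.corrwith(target)                       # Pearson by default
    order = r.abs().sort_values(ascending=False).index[:k]
    return r.loc[order]                                 # keep the sign of each r

# Synthetic example: 65 batches, 8 generic parameters.
rng = np.random.default_rng(0)
n = 65
X = pd.DataFrame(rng.normal(size=(n, 8)), columns=[f"param_{i}" for i in range(8)])
bfw = 3.0 * X["param_2"] - 2.0 * X["param_5"] + rng.normal(scale=0.5, size=n)

top5 = top_correlated(X, bfw, k=5)
```

With only 65 batches, weak correlations can arise by chance, so the lower entries of such a ranking deserve more caution than the top ones.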

The BFW showed notably strong correlations with key process inputs, such as tyrosine and nutrient additions, indicating potential for predictive modeling. In contrast, the correlation strength for HT and PCV was substantially lower, suggesting inherent complexity or nonlinear relationships requiring more advanced modeling methods. These insights guided subsequent feature engineering and the selection of parameters for ML model development.

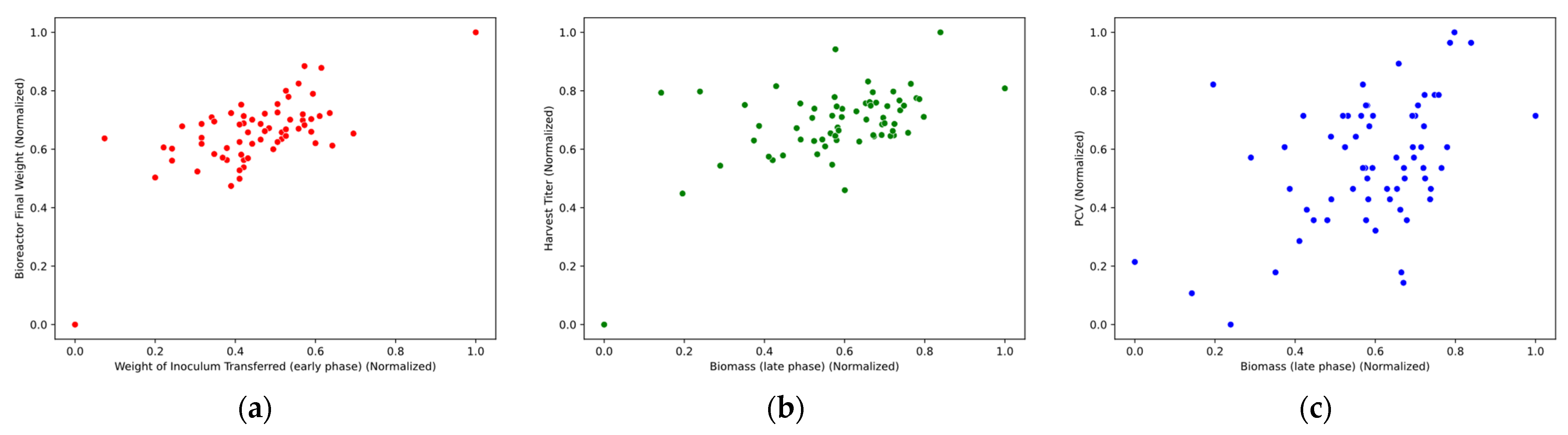

3.1.3. Scatter Plot Analysis

Scatter plots were constructed to visually assess the relationships among important parameters and dependent variables. While correlation coefficients quantify linear relationships, scatter plots reveal potential nonlinear patterns, clustering, or outliers that may not be evident numerically. However, none of the parameters demonstrated a distinct, strong linear relationship with the dependent variables, as illustrated in Figure 4. This underscores the necessity for ML methods capable of capturing complex, nonlinear patterns.

The scatter plots confirmed that no strong visual correlations existed between the independent and dependent variables. This finding supports the decision to apply nonlinear regression methods, such as RF, GBM, and SVR, to model the underlying relationships more effectively.

In addition, batch-to-batch variability was analyzed through visual distribution studies to assess the consistency and reliability of historical data as a foundation for modeling. The distribution analysis demonstrated that the process parameters remained consistent across historical batches, indicating stable operational conditions. This consistency validates the suitability of historical batch data for predictive modeling, as it suggests a reliable and well-controlled manufacturing process.

3.2. Machine Learning Model Performance

Predictive models were developed for the three key dependent variables (BFW, HT, and PCV). To model these variables, three regression models were implemented: RF, GBM, and SVR. Each model was evaluated using the R² score, MAE, and MSE performance metrics. Feature engineering was applied to improve the models’ performance, with different combinations of parameters tested to assess their impact. As part of this process, feature importance rankings were extracted from each algorithm to quantify the contribution of individual process parameters to model predictions. These rankings guided the elimination of low-impact features and the construction of refined feature sets tailored to each output. By focusing on variables with the highest predictive relevance, the modeling pipeline was streamlined and better aligned with the underlying process dynamics.

After feature screening, approximately 40–50 parameters were retained for model training. The dataset of 65 historical batches was randomly divided into 80% for model training and 20% for testing. Within the training set, a 5-fold cross-validation approach was used for model validation and hyperparameter optimization, ensuring generalizable model performance. Following extensive model evaluation, the predictive performance of each model across all dependent variables is summarized in Table 2, which also highlights the best-performing configuration identified for each dependent variable.
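The split described above implies quite small evaluation sets, which the following sketch makes concrete. The arrays are placeholders and the random seeds are arbitrary; only the 65-batch count, the 80/20 split, and the 5 folds come from the text.

```python
import numpy as np
from sklearn.model_selection import KFold, train_test_split

# Placeholder arrays with the study's batch count (65); values are meaningless.
X = np.arange(65 * 3, dtype=float).reshape(65, 3)
y = np.arange(65, dtype=float)

# 80% training / 20% held-out test.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# 5-fold cross-validation inside the training set only.
folds = list(KFold(n_splits=5, shuffle=True, random_state=0).split(X_train))
fold_sizes = [len(val_idx) for _, val_idx in folds]
```

With 52 training and 13 test batches, each validation fold holds only 10 or 11 batches, so the reported scores can vary noticeably with the particular random split.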

The SVR model that achieved the best predictive performance for BFW relied on a combination of timing and biological parameters. The most influential features included the inoculum transfer weight, biomass levels during early production, process duration metrics across sequential expansion phases, interbioreactor transfer timing, and cumulative sample times collected during the initial production window. These variables contributed to improving model accuracy by capturing critical dynamics in scale-up and cell growth trajectories.

Two key takeaways emerged from this analysis. First, SVR significantly outperformed RF and GBM for BFW prediction, achieving an R² of 0.978, making it the most reliable model for yield improvement analysis. Second, the HT and PCV models performed poorly across all algorithms, with very low or even negative R² values, indicating that these variables could not be reliably predicted with the available dataset.

Given these results, BFW was selected as the primary dependent variable for prediction in the exploratory parameter analysis, while HT and PCV were assigned their historical average values to ensure practical model implementation.

3.3. Optimization

Since the HT and PCV models did not achieve strong predictive accuracy, the BFW-SVR model was selected for optimization. The optimization aimed to maximize HG by leveraging the best-performing predictive model for BFW while holding HT and PCV at their historical average values. This simplification means that the optimization results primarily reflect the model’s ability to improve total harvest weight, rather than product concentration or cell density. While BFW is a robust proxy for overall yield performance, excluding HT and PCV from direct modeling may limit the precision of the predicted trade-offs between productivity and cell viability. Accordingly, the proposed parameter adjustments should be interpreted as indicative trends for yield enhancement rather than as prescriptive control settings.

The optimization methodology employed SLSQP to determine a suitable balance of process parameters under these constraints. To evaluate the outcome, Table 3 presents a comparison between the predicted optimized yield and the historical batch performance.

To preserve confidentiality while retaining the relative trends in model performance, all numeric values were scaled relative to the historical average, which was set to 1.000. Each metric’s minimum, maximum, and optimized values are presented as ratios relative to this baseline. This relative scaling approach enables the transparent assessment of model improvements while protecting proprietary process data.
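The relative scaling can be illustrated with a few lines of code. The numbers below are invented purely to show the arithmetic and do not correspond to the study's confidential values.

```python
import numpy as np

def relative_to_average(historical, optimized):
    """Express min, max, and optimized values as ratios to the historical mean."""
    avg = float(np.mean(historical))
    return {
        "min": float(np.min(historical)) / avg,
        "average": 1.0,
        "max": float(np.max(historical)) / avg,
        "optimized": optimized / avg,
    }

# Invented yields for three historical batches and one model-predicted optimum.
summary = relative_to_average([900.0, 1000.0, 1100.0], optimized=1150.0)

# Relative gain of the optimized value over the historical maximum.
gain_over_max = summary["optimized"] / summary["max"] - 1.0
```

Because every entry is divided by the same constant, ratios between entries (such as the gain over the historical maximum) are unchanged by the scaling.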

The optimized yield exceeded the historical maximum by approximately 4.6%, demonstrating the effectiveness of parameter tuning in improving overall performance. To identify the most impacted process variables, we evaluated the relative change between each parameter’s historical average and its optimized value. These deviations indicate how the optimization algorithm adjusted parameter levels relative to the baseline, rather than their statistical importance in the model. The ‘Target’ column, when present, denotes predefined process setpoints specified in the production recipe. The top five parameters showing the largest adjustments within the category of process inputs are presented in Table 4. To preserve confidentiality, parameter names were generalized, and all values were scaled relative to the historical average, which was set to 1.000.

Similarly, the top five variables within the category of monitored variables were selected based on their relative changes from historical averages to optimized values. As shown in Table 5, all values were scaled relative to the historical average, which was set to 1.000, and parameter names were generalized for confidentiality.

3.4. Sensitivity Analysis

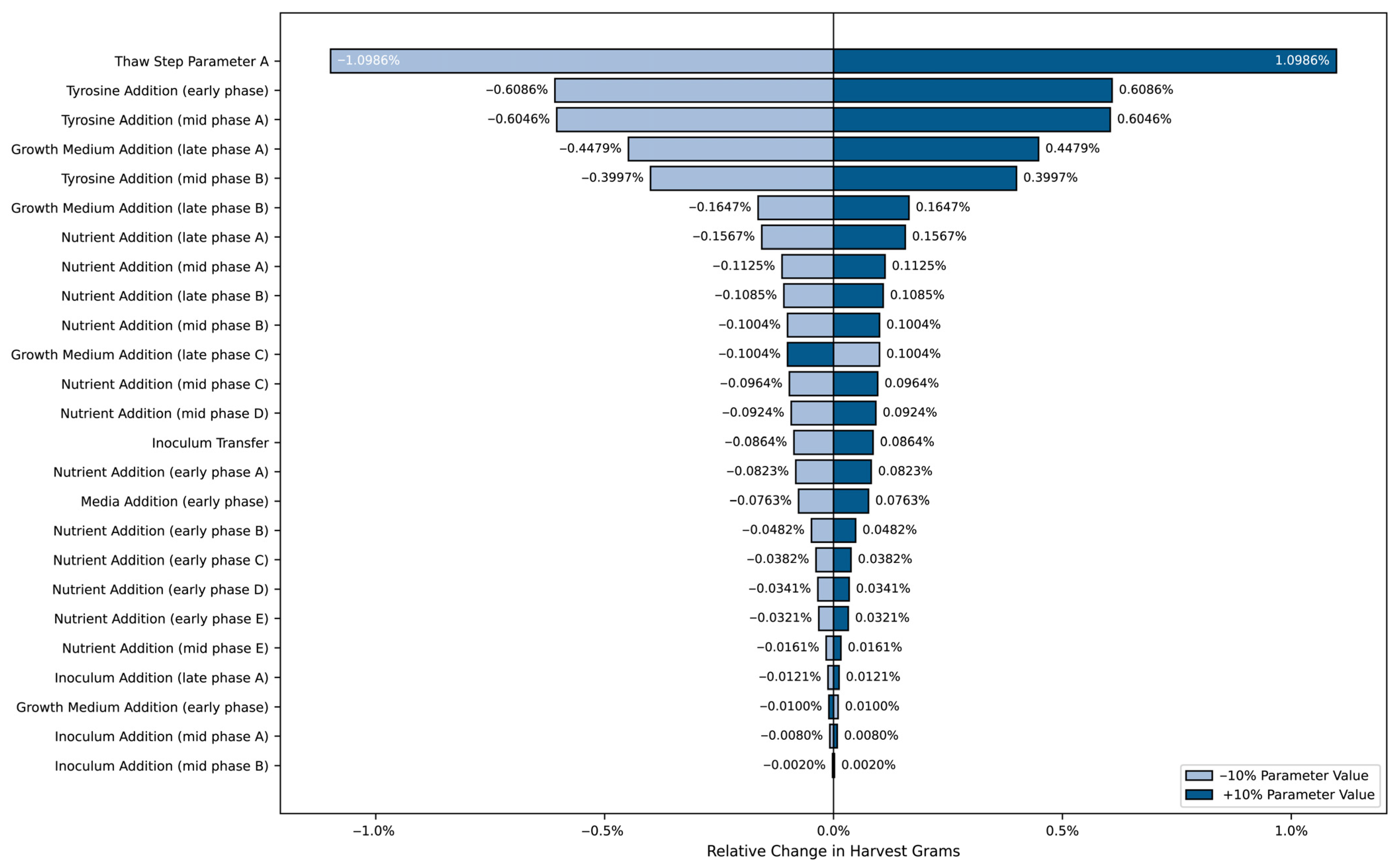

Unlike the optimization results, which identified the parameter values that maximized predicted HG, the sensitivity analysis investigated the relative impact of parameter variations. Each parameter was adjusted by ±10% from its baseline value, and the resulting tornado chart highlights the parameters that contributed most to changes in HG. Figure 5 illustrates how these variations affected yield optimization, showing which parameters exerted the greatest influence on HG and providing insights into the factors most critical for the final yield.

As shown in Figure 5, certain parameters strongly affected yield fluctuations. The most impactful parameters were thaw media warming time, tyrosine addition weight at various stages, and nutrient addition volumes. Longer bars indicate a greater effect on HG when the corresponding parameter is varied. Notably, changes in thaw media warming time and tyrosine addition weight produced the highest sensitivity, highlighting the need for precise control of these parameters to improve production yield.
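A one-at-a-time ±10% analysis of the kind summarized in a tornado chart can be sketched as below. The response function `toy_hg`, the parameter names, and the baseline values are hypothetical stand-ins; in the study the response would come from the fitted model.

```python
def one_at_a_time_sensitivity(model, baseline, rel_step=0.10):
    """Return {name: (low, high)} outputs when each input moves by -/+ rel_step."""
    swings = {}
    for name, value in baseline.items():
        low = dict(baseline, **{name: value * (1.0 - rel_step)})
        high = dict(baseline, **{name: value * (1.0 + rel_step)})
        swings[name] = (model(low), model(high))
    return swings

def toy_hg(p):
    """Hypothetical linear response surface; not the fitted model."""
    return 100.0 + 8.0 * p["tyrosine_add"] + 1.5 * p["nutrient_vol"] - 0.2 * p["hold_time"]

baseline = {"tyrosine_add": 5.0, "nutrient_vol": 10.0, "hold_time": 20.0}
swings = one_at_a_time_sensitivity(toy_hg, baseline)

# Tornado ordering: the parameter with the widest output swing comes first.
ranked = sorted(swings, key=lambda k: abs(swings[k][1] - swings[k][0]), reverse=True)
```

Because each parameter is varied while the others stay at baseline, this approach does not capture interactions between parameters; it ranks only their individual local effects.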

4. Discussion

This study demonstrates the practical potential of data-driven methodologies for yield improvement in the upstream bioprocessing of mAbs. Using ML models trained on industrial batch data, we identified a subset of CPPs that consistently impacted HG, including nutrient addition timing, transfer windows, and biochemical markers such as glucose and lactate concentrations.

The ML models predicted values beyond historical averages, reinforcing the viability of data-driven decision-making in bioprocess environments. Importantly, these findings support earlier observations by Cheon et al. [27], who highlighted the ability of ML models to uncover nonlinear parameter dependencies and guide operational stability in complex bioprocesses. In our study, because the exploratory framework relied primarily on BFW predictions, the resulting parameter recommendations represent aggregate yield behavior and may not fully capture HT- or PCV-specific effects.

While ML models delivered actionable insights, their black-box nature limited interpretability and restricted the scope of model-based exploration. When used for parameter search, the RF, GBM, and SVR models frequently selected parameter values near predefined bounds, highlighting their tendency to overfit without embedded process constraints. This echoes the challenges identified by Helleckes et al. [14] and Cheng et al. [15], who cited ML model opacity as a barrier to implementation in GMP environments. The need to integrate expert knowledge and operational constraints into model-based analyses is critical to ensuring real-world applicability, particularly in highly regulated settings. A lack of specialized development environments and limited regulatory guidance further complicate efforts to scale ML implementations within manufacturing-grade bioprocess operations [8]. Furthermore, the models exhibited reduced performance for certain targets (e.g., HT and PCV), which aligns with the findings of Jones and Gerogiorgis [30], who emphasized the selective importance of variables across predictive tasks.

The sensitivity analysis helped identify high-impact variables, despite model opacity. By evaluating the effect of ±10% changes in key parameters, the analysis guided the prioritization of process variables. This not only supports more focused control strategies but also reduces operational complexity by identifying parameters that can tolerate variation without compromising yield. These results reinforce the insights of Kaysfeld et al. [38], who showed that integrating dynamic control and scheduling analyses into mAb fed-batch operations could significantly enhance performance by manipulating nutrient profiles and timing. In both studies, model-guided analysis revealed that time-based process-input parameters were particularly influential, highlighting the importance of dynamic scheduling and phase-specific control in upstream processes.

Our findings add to the evidence supporting ML-driven yield improvement approaches in biologics production. The process insights derived from yield predictions align with prior work by Kornecki and Strube [5], who demonstrated that model-based upstream decision-making can reduce experimental burden and accelerate bioprocess development. Moreover, the hyperbox mixture regression approach proposed by Nik-Khorasani et al. [39] emphasizes the potential of interpretable, data-driven tools for evaluating parameter sensitivity and recommending practical adjustments. Their use of regression-based clustering complements the insights derived from our univariate models by offering an additional layer of interpretability and parameter segmentation. Together, these results strengthen the case for ML-assisted process control strategies that are not only predictive but also actionable in real-world production environments.

The following remarks summarize the key methodological uncertainties and limitations of the study. First, the model’s reliance on historical batch data, while practical, limits its capacity to capture process variability arising from changing raw materials, bioreactor scale-up issues, and cell line evolution. Second, the dataset contained only 65 batches, and this small sample size limited generalizability and predictive accuracy for certain targets, particularly HT and PCV. This reflects broader challenges in biopharmaceutical ML adoption discussed by Singh and Singhal [23], including data fragmentation, low-frequency measurements, and inconsistent parameter recording.

To address these challenges, future work should incorporate hybrid models that blend mechanistic knowledge with data-driven learning, an approach that Rathore et al. [8] also highlight as a promising pathway for improving both interpretability and regulatory acceptance in bioprocess applications. Integrating real-time monitoring systems and PAT tools could enhance input resolution and support more reliable decision-making under uncertainty. In addition, strategies such as simulation-based data augmentation or transfer learning may help overcome data scarcity in highly regulated environments. Typical industry challenges, such as data silos, sparse sampling, and inconsistent record-keeping, continue to hinder widespread adoption of ML in biomanufacturing environments and must be addressed to fully leverage model-driven strategies. Exploring model generalizability across different mAb platforms or production scales would also help extend the impact of this approach.

Finally, although this study focused on offline, data-driven yield prediction, the proposed approach could support future integration into automated or supervisory control frameworks. In such settings, predictive models may serve as advisory or surrogate components that generate yield forecasts or recommend process adjustments based on real-time monitoring data. This conceptual linkage emphasizes how exploratory modeling outcomes, once validated, could inform control-oriented strategies for process adaptation and decision support.

5. Conclusions

This study highlights the practical potential of ML for yield improvement in the upstream bioprocessing of mAbs. By applying data-driven modeling and parameter-exploration techniques to historical production data, we achieved a predicted increase in harvested yield beyond previously recorded batch performance.

Despite this success, the analysis revealed key limitations of purely statistical approaches. The predictive models for HT and PCV showed limited reliability, emphasizing the need for further refinement or integration with mechanistic understanding. Moreover, the exploratory analysis often resulted in parameter values at the extreme ends of their allowable ranges, a behavior rooted in the black-box nature of the models and the absence of physical constraints or target ranges.

Future work should consider incorporating soft constraints or penalty functions to guide the exploratory data analysis toward realistic and controllable parameter values. Additionally, hybrid approaches that combine empirical modeling with mechanistic insights may offer more robust and interpretable solutions. Enhancing the prediction accuracy of HT and PCV, either through feature enrichment or model selection, remains an important area for continued investigation. In a broader context, the outcomes presented here may also inform future efforts to connect predictive modeling with process-control strategies, contributing to the gradual advancement toward data-driven and adaptive biomanufacturing.
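One way such a soft constraint could look is sketched below, under stated assumptions: a quadratic penalty that is zero in the interior of a parameter's allowable range and grows as a candidate value approaches or crosses a bound. The function names, the 10% margin, the penalty weight, and the parameter `feed_rate` with its bounds are all hypothetical, and the monotone toy model stands in for a trained predictor.

```python
def soft_bound_penalty(value, low, high, margin=0.1, weight=1.0):
    """Quadratic penalty: zero inside the inner part of [low, high],
    growing once the value enters the outer `margin` fraction of the
    range or leaves the range entirely."""
    span = high - low
    inner_low = low + margin * span
    inner_high = high - margin * span
    if value < inner_low:
        excess = (inner_low - value) / span
    elif value > inner_high:
        excess = (value - inner_high) / span
    else:
        excess = 0.0
    return weight * excess ** 2


def penalized_score(predict, params, bounds, weight=1.0):
    """Predicted target minus the summed soft-bound penalties."""
    penalty = sum(
        soft_bound_penalty(params[name], low, high, weight=weight)
        for name, (low, high) in bounds.items()
    )
    return predict(params) - penalty


# Hypothetical monotone model: it always prefers a larger feed_rate,
# so an unconstrained search ends at the upper bound.
def toy_predict(p):
    return p["feed_rate"]


bounds = {"feed_rate": (0.0, 10.0)}           # hypothetical allowable range
candidates = [i * 0.5 for i in range(21)]      # grid 0.0, 0.5, ..., 10.0

best_raw = max(candidates, key=lambda x: toy_predict({"feed_rate": x}))
best_penalized = max(
    candidates,
    key=lambda x: penalized_score(toy_predict, {"feed_rate": x}, bounds, weight=100.0),
)
print(best_raw, best_penalized)
```

In this toy case the unpenalized search selects the upper bound, while the penalized score moves the selected value back inside the range. The weight controls how strongly boundary values are discouraged, and in practice it would need to be tuned against process knowledge of which operating regions are realistic and controllable.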

Ultimately, this study provides a foundation for integrating ML into upstream process development, offering a pathway toward data-driven decision-making in biomanufacturing settings. However, success in scaling such approaches will depend not only on algorithmic performance but also on access to high-quality, structured process data. As highlighted in prior studies, the lack of standardized, readily accessible production data still limits broader ML adoption in bioprocessing. Addressing this through improved data infrastructure, contextual data integration, and possibly simulation-enhanced datasets will be essential to enabling broader industrial use of ML in biomanufacturing.