Drone-Based Marigold Flower Detection Using Convolutional Neural Networks

Abstract

1. Introduction

1.1. State-of-the-Art Review

1.2. Main Contributions

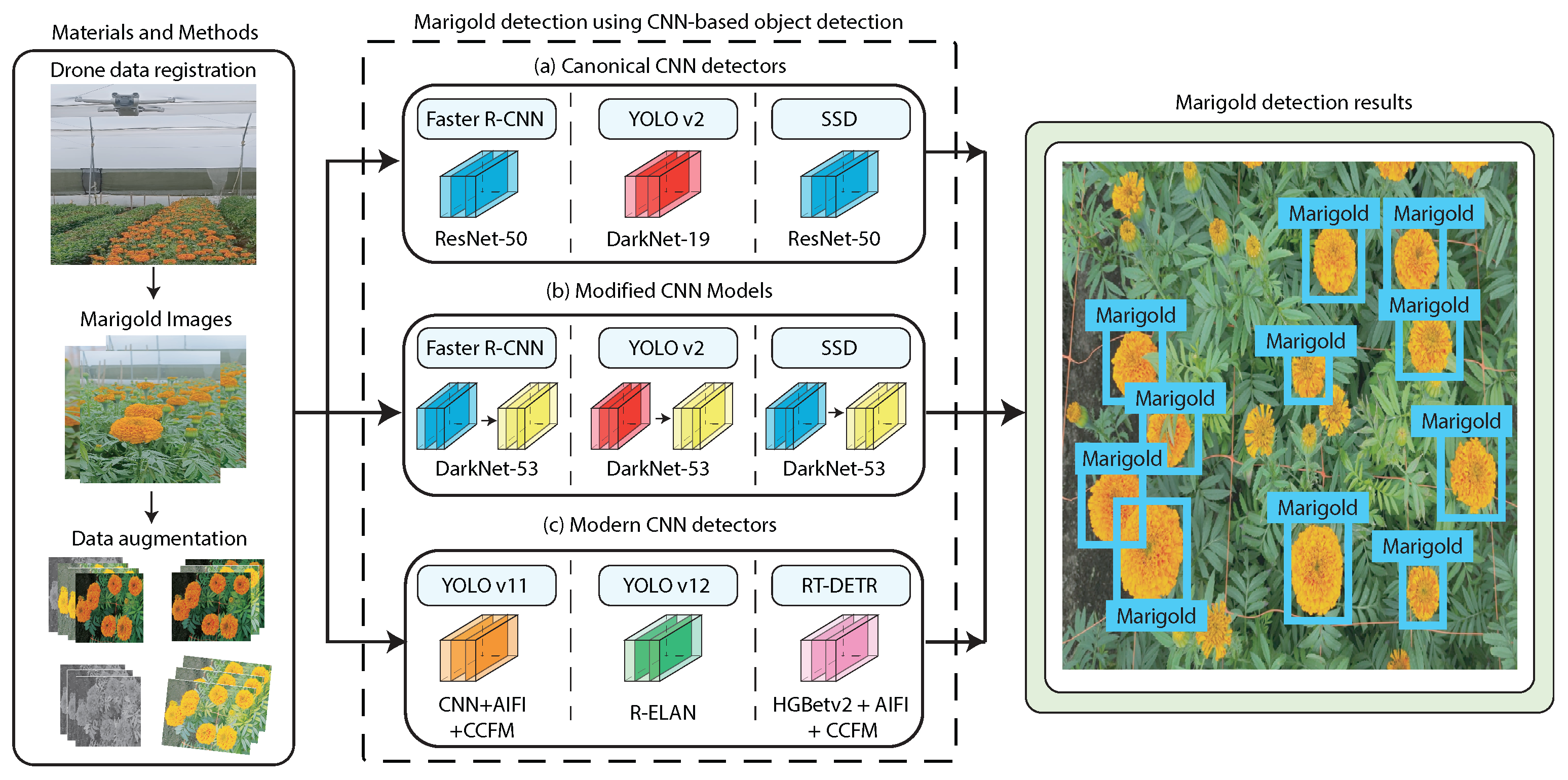

- A unique marigold flower dataset was built from 392 drone images captured with a DJI Mini 3 Pro, manually annotated, and augmented to represent the final blooming stage under real field conditions.

- Three groups of detectors were evaluated: canonical models (YOLOv2, Faster R-CNN, SSD with their standard backbones), modified versions using DarkNet-53 as a common backbone, and modern detectors (YOLOv11, YOLOv12, RT-DETR).

- The modified detectors were trained under 18 configurations that varied the optimizer (Adam, SGDM, RMSProp), the learning rate, and the number of training epochs, in order to analyze how these choices influence model stability, underfitting, and overfitting.

- Model performance was assessed through mAP@0.5, precision–recall curves, and F1-scores, and complemented with an analysis of model complexity and inference speed to evaluate efficiency in drone-based applications.
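
The mAP@0.5 metric counts a detection as correct when its intersection over union (IoU) with a ground-truth box is at least 0.5. The following is a minimal sketch of that overlap test, assuming boxes in (x_min, y_min, x_max, y_max) pixel format. The function names and the sample boxes are illustrative and are not taken from the study.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle (if any).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp to zero when the boxes do not overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, truth_box, threshold=0.5):
    """A prediction matches a ground-truth box when IoU >= threshold."""
    return iou(pred_box, truth_box) >= threshold


# Hypothetical boxes: the prediction is shifted by half a box width.
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
print(is_true_positive((0, 0, 10, 10), (5, 0, 15, 10)))
```

In the example the overlap is one third of the union, so the shifted prediction would not count as a true positive at the 0.5 threshold.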

2. Materials and Methods

2.1. Data Acquisition

2.2. RGB Versus Hyperspectral Imagery

2.3. Dataset Split Strategy

2.4. Marigold Detection Using CNN-Based Object Detection

2.4.1. Canonical CNN Detectors

YOLOv2

Faster R-CNN

SSD

2.4.2. Modern CNN Detectors

YOLOv11 and YOLOv12

RT-DETR

2.4.3. Backbone Networks

ResNet-50 as Backbone

DarkNet-53 as Backbone

2.4.4. Canonical and Modern CNN Configurations

2.4.5. Hyperparameter Selection

3. Results

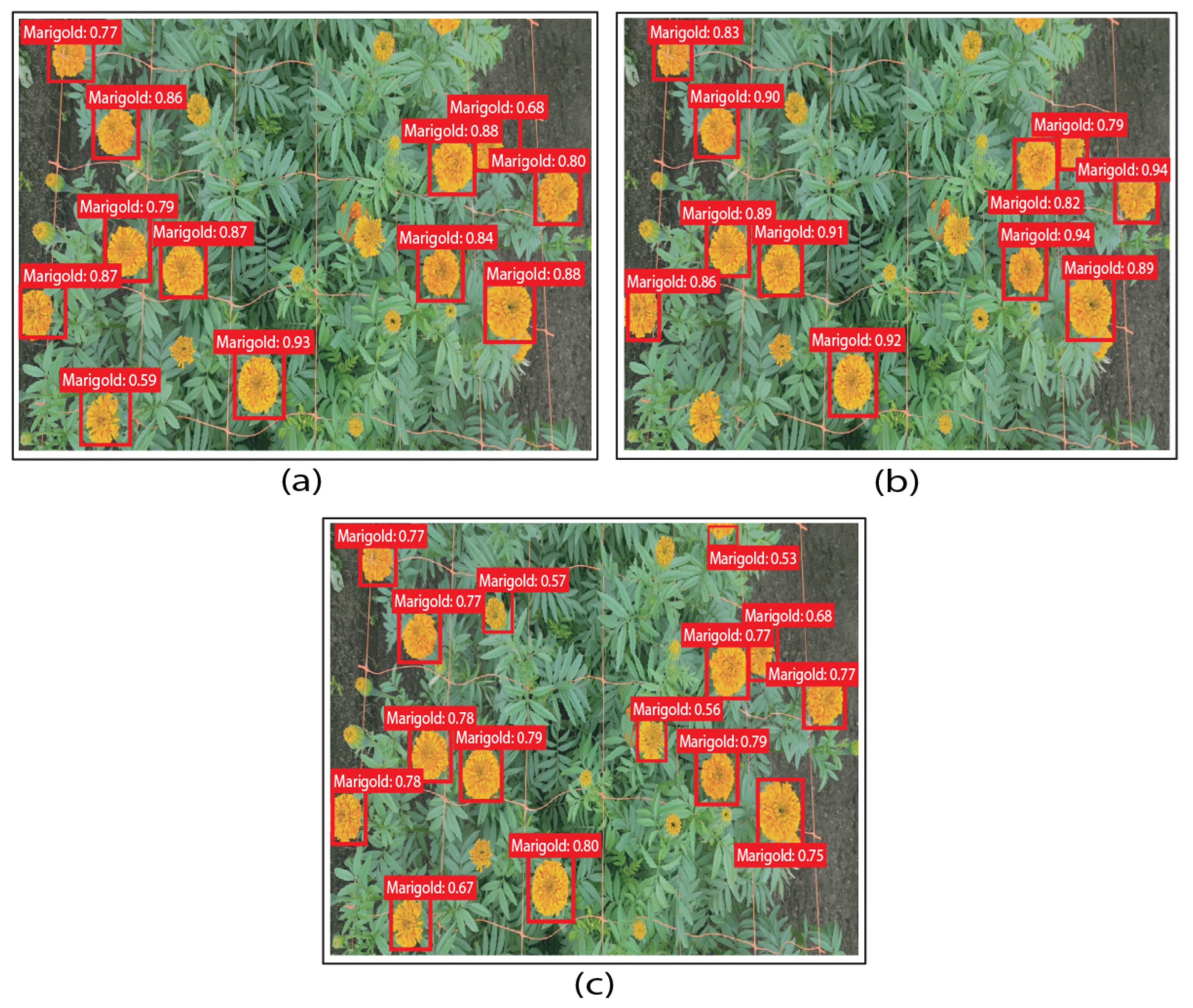

3.1. Canonical and Modern CNN Detector Results

3.2. Modified CNN Detector Results with DarkNet-53

3.3. Model Complexity and Inference Speed

3.4. Comparative Analysis of Modified and Modern Detectors

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bayraktar, E.; Basarkan, M.E.; Celebi, N. A low-cost UAV framework towards ornamental plant detection and counting in the wild. ISPRS J. Photogramm. Remote Sens. 2020, 167, 1–11. [Google Scholar] [CrossRef]

- Schnalke, M.; Funk, J.; Wagner, A. Bridging technology and ecology: Enhancing applicability of deep learning and UAV-based flower recognition. Front. Plant Sci. 2025, 16, 1498913. [Google Scholar] [CrossRef] [PubMed]

- Gallmann, J.; Schüpbach, B.; Jacot, K.; Albrecht, M.; Winizki, J.; Kirchgessner, N.; Aasen, H. Flower mapping in grasslands with drones and deep learning. Front. Plant Sci. 2022, 12, 774965. [Google Scholar] [CrossRef] [PubMed]

- Sângeorzan, D.D.; Păcurar, F.; Reif, A.; Weinacker, H.; Rușdea, E.; Vaida, I.; Rotar, I. Detection and quantification of Arnica montana L. inflorescences in grassland ecosystems using convolutional neural networks and drone-based remote sensing. Remote Sens. 2024, 16, 2012. [Google Scholar] [CrossRef]

- Vasconez, J.P.; Kantor, G.A.; Cheein, F.A.A. Human–robot interaction in agriculture: A survey and current challenges. Biosyst. Eng. 2019, 179, 35–48. [Google Scholar] [CrossRef]

- Usha, V.; Sathya, V.; Kujani, T.; Anitha, T.; Priya, S.S.; Abhinash, N.C. Diagnosing Floral Diseases Automatically using Deep Convolutional Neural Nets. In Proceedings of the 2024 2nd International Conference on Advances in Computation, Communication and Information Technology (ICAICCIT), Faridabad, India, 28–29 November 2024; Volume 1, pp. 491–495. [Google Scholar]

- Zhang, C.; Sun, X.; Xuan, S.; Zhang, J.; Zhang, D.; Yuan, X.; Fan, X.; Suo, X. Monitoring of Broccoli Flower Head Development in Fields Using Drone Imagery and Deep Learning Methods. Agronomy 2024, 14, 2496. [Google Scholar] [CrossRef]

- Moya, V.; Quito, A.; Pilco, A.; Vásconez, J.P.; Vargas, C. Crop Detection and Maturity Classification Using a YOLOv5-Based Image Analysis. Emerg. Sci. J. 2024, 8, 496–512. [Google Scholar] [CrossRef]

- Vasconez, J.P.; Salvo, J.; Auat, F. Toward Semantic Action Recognition for Avocado Harvesting Process based on Single Shot MultiBox Detector. In Proceedings of the 2018 IEEE International Conference on Automation/XXIII Congress of the Chilean Association of Automatic Control (ICA-ACCA), Concepcion, Chile, 17–19 October 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Wakchaure, M.; Patle, B.; Mahindrakar, A. Application of AI techniques and robotics in agriculture: A review. Artif. Intell. Life Sci. 2023, 3, 100057. [Google Scholar] [CrossRef]

- Pilco, A.; Moya, V.; Quito, A.; Vásconez, J.P.; Limaico, M. Image Processing-Based System for Apple Sorting. J. Image Graph. 2024, 12, 362–371. [Google Scholar] [CrossRef]

- Wu, B.; Zhang, M.; Zeng, H.; Tian, F.; Potgieter, A.B.; Qin, X.; Yan, N.; Chang, S.; Zhao, Y.; Dong, Q.; et al. Challenges and opportunities in remote sensing-based crop monitoring: A review. Natl. Sci. Rev. 2023, 10, nwac290. [Google Scholar] [CrossRef]

- Moya, V.; Espinosa, V.; Chávez, D.; Leica, P.; Camacho, O. Trajectory tracking for quadcopter’s formation with two control strategies. In Proceedings of the 2016 IEEE Ecuador Technical Chapters Meeting (ETCM), Guayaquil, Ecuador, 12–14 October 2016; pp. 1–6. [Google Scholar] [CrossRef]

- Tian, H.; Wang, T.; Liu, Y.; Qiao, X.; Li, Y. Computer vision technology in agricultural automation—A review. Inf. Process. Agric. 2020, 7, 1–19. [Google Scholar] [CrossRef]

- Peña, S.; Pilco, A.; Moya, V.; Chamorro, W.; Vásconez, J.P.; Zuniga, J.A. Color Sorting System Using YOLOv5 for Robotic Mobile Applications. In Proceedings of the 2024 6th International Conference on Robotics and Computer Vision (ICRCV), Wuxi, China, 20–22 September 2024; pp. 1–5. [Google Scholar] [CrossRef]

- Vasconez, J.; Delpiano, J.; Vougioukas, S.; Auat Cheein, F. Comparison of convolutional neural networks in fruit detection and counting: A comprehensive evaluation. Comput. Electron. Agric. 2020, 173, 105348. [Google Scholar] [CrossRef]

- Duan, Z.; Liu, W.; Zeng, S.; Zhu, C.; Chen, L.; Cui, W. Research on a real-time, high-precision end-to-end sorting system for fresh-cut flowers. Agriculture 2024, 14, 1532. [Google Scholar] [CrossRef]

- Estrada, J.S.; Vasconez, J.P.; Fu, L.; Cheein, F.A. Deep Learning based flower detection and counting in highly populated images: A peach grove case study. J. Agric. Food Res. 2024, 15, 100930. [Google Scholar] [CrossRef]

- Ma, B.; Wu, Z.; Ge, Y.; Chen, B.; Zhang, H.; Xia, H.; Wang, D. A Recognition Method for Marigold Picking Points Based on the Lightweight SCS-YOLO-Seg Model. Sensors 2025, 25, 4820. [Google Scholar] [CrossRef]

- Patel, S. Marigold flower blooming stage detection in complex scene environment using faster RCNN with data augmentation. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 676–684. [Google Scholar] [CrossRef]

- Abbas, T.; Razzaq, A.; Zia, M.A.; Mumtaz, I.; Saleem, M.A.; Akbar, W.; Khan, M.A.; Akhtar, G.; Shivachi, C.S. Deep neural networks for automatic flower species localization and recognition. Comput. Intell. Neurosci. 2022, 2022, 9359353. [Google Scholar] [CrossRef] [PubMed]

- Sert, E. A deep learning based approach for the detection of diseases in pepper and potato leaves. Anadolu Tarım Bilim. Derg. 2021, 36, 167–178. [Google Scholar] [CrossRef]

- Horng, G.J.; Liu, M.X.; Chen, C.C. The smart image recognition mechanism for crop harvesting system in intelligent agriculture. IEEE Sens. J. 2019, 20, 2766–2781. [Google Scholar] [CrossRef]

- Dias, P.A.; Tabb, A.; Medeiros, H. Apple flower detection using deep convolutional networks. Comput. Ind. 2018, 99, 17–28. [Google Scholar] [CrossRef]

- Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742. [Google Scholar] [CrossRef]

- Chen, J.; Chen, W.; Zeb, A.; Yang, S.; Zhang, D. Lightweight inception networks for the recognition and detection of rice plant diseases. IEEE Sens. J. 2022, 22, 14628–14638. [Google Scholar] [CrossRef]

- Cheng, Z.; Zhang, F. Flower End-to-End Detection Based on YOLOv4 Using a Mobile Device. Wirel. Commun. Mob. Comput. 2020, 2020, 8870649. [Google Scholar] [CrossRef]

- Banerjee, D.; Kukreja, V.; Sharma, V.; Jain, V.; Hariharan, S. Automated Diagnosis of Marigold Leaf Diseases using a Hybrid CNN-SVM Model. In Proceedings of the 2023 8th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 1–3 June 2023; pp. 901–906. [Google Scholar]

- Fan, Y.; Tohti, G.; Geni, M.; Zhang, G.; Yang, J. A marigold corolla detection model based on the improved YOLOv7 lightweight. Signal Image Video Process. 2024, 18, 4703–4712. [Google Scholar] [CrossRef]

- Li, S.; Song, W.; Fang, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Deep learning for hyperspectral image classification: An overview. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6690–6709. [Google Scholar] [CrossRef]

- Thenkabail, P.S.; Lyon, J.G.; Huete, A. Advances in hyperspectral remote sensing of vegetation and agricultural crops. In Fundamentals, Sensor Systems, Spectral Libraries, and Data Mining for Vegetation; CRC Press: Boca Raton, FL, USA, 2018; pp. 3–37. [Google Scholar]

- Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A comprehensive review of yolo architectures in computer vision: From yolov1 to yolov8 and yolo-nas. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716. [Google Scholar] [CrossRef]

- Du, J. Understanding of object detection based on CNN family and YOLO. In Proceedings of the 2nd International Conference on Machine Vision and Information Technology (CMVIT 2018), Hong Kong, China, 23–25 February 2018; Journal of Physics: Conference Series. IOP Publishing: Bristol, UK, 2018; Volume 1004, p. 012029. [Google Scholar]

- Vilcapoma, P.; Parra Meléndez, D.; Fernández, A.; Vásconez, I.N.; Hillmann, N.C.; Gatica, G.; Vásconez, J.P. Comparison of faster R-CNN, YOLO, and SSD for third molar angle detection in dental panoramic X-rays. Sensors 2024, 24, 6053. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Hamidisepehr, A.; Mirnezami, S.V.; Ward, J.K. Comparison of object detection methods for corn damage assessment using deep learning. Trans. ASABE 2020, 63, 1969–1980. [Google Scholar] [CrossRef]

- Rai, N.; Zhang, Y.; Ram, B.G.; Schumacher, L.; Yellavajjala, R.K.; Bajwa, S.; Sun, X. Applications of deep learning in precision weed management: A review. Comput. Electron. Agric. 2023, 206, 107698. [Google Scholar] [CrossRef]

- Maity, M.; Banerjee, S.; Chaudhuri, S.S. Faster r-cnn and yolo based vehicle detection: A survey. In Proceedings of the 2021 5th international conference on computing methodologies and communication (ICCMC), Erode, India, 8–10 April 2021; pp. 1442–1447. [Google Scholar]

- Yuan, T.; Lv, L.; Zhang, F.; Fu, J.; Gao, J.; Zhang, J.; Li, W.; Zhang, C.; Zhang, W. Robust cherry tomatoes detection algorithm in greenhouse scene based on SSD. Agriculture 2020, 10, 160. [Google Scholar] [CrossRef]

- Li, M.; Zhang, Z.; Lei, L.; Wang, X.; Guo, X. Agricultural greenhouses detection in high-resolution satellite images based on convolutional neural networks: Comparison of faster R-CNN, YOLO v3 and SSD. Sensors 2020, 20, 4938. [Google Scholar] [CrossRef]

- Jocher, G.; Qiu, J.; Ultralytics. Ultralytics YOLO11, version 11.0.0; License: AGPL-3.0; Ultralytics: Frederick, MD, USA, 2024. Available online: https://github.com/ultralytics/ultralytics (accessed on 1 August 2025).

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524. [Google Scholar]

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs beat yolos on real-time object detection. In Proceedings of the Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- Shaheed, K.; Qureshi, I.; Abbas, F.; Jabbar, S.; Abbas, Q.; Ahmad, H.; Sajid, M.Z. EfficientRMT-Net—An efficient ResNet-50 and vision transformers approach for classifying potato plant leaf diseases. Sensors 2023, 23, 9516. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Li, J.; Zhao, X.; Su, X.; Wu, W. Lightweight detection networks for tea bud on complex agricultural environment via improved YOLO v4. Comput. Electron. Agric. 2023, 211, 107955. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar] [CrossRef]

- Miranda, J.C.; Gené-Mola, J.; Zude-Sasse, M.; Tsoulias, N.; Escolà, A.; Arnó, J.; Rosell-Polo, J.R.; Sanz-Cortiella, R.; Martínez-Casasnovas, J.A.; Gregorio, E. Fruit sizing using AI: A review of methods and challenges. Postharvest Biol. Technol. 2023, 206, 112587. [Google Scholar] [CrossRef]

- Mimma, N.E.A.; Ahmed, S.; Rahman, T.; Khan, R. Fruits classification and detection application using deep learning. Sci. Program. 2022, 2022, 4194874. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9905. [Google Scholar] [CrossRef]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

- Tieleman, T.; Hinton, G. Rmsprop: Divide the gradient by a running average of its recent magnitude. COURSERA Neural Netw. Mach. Learn. 2012, 17, 6. [Google Scholar]

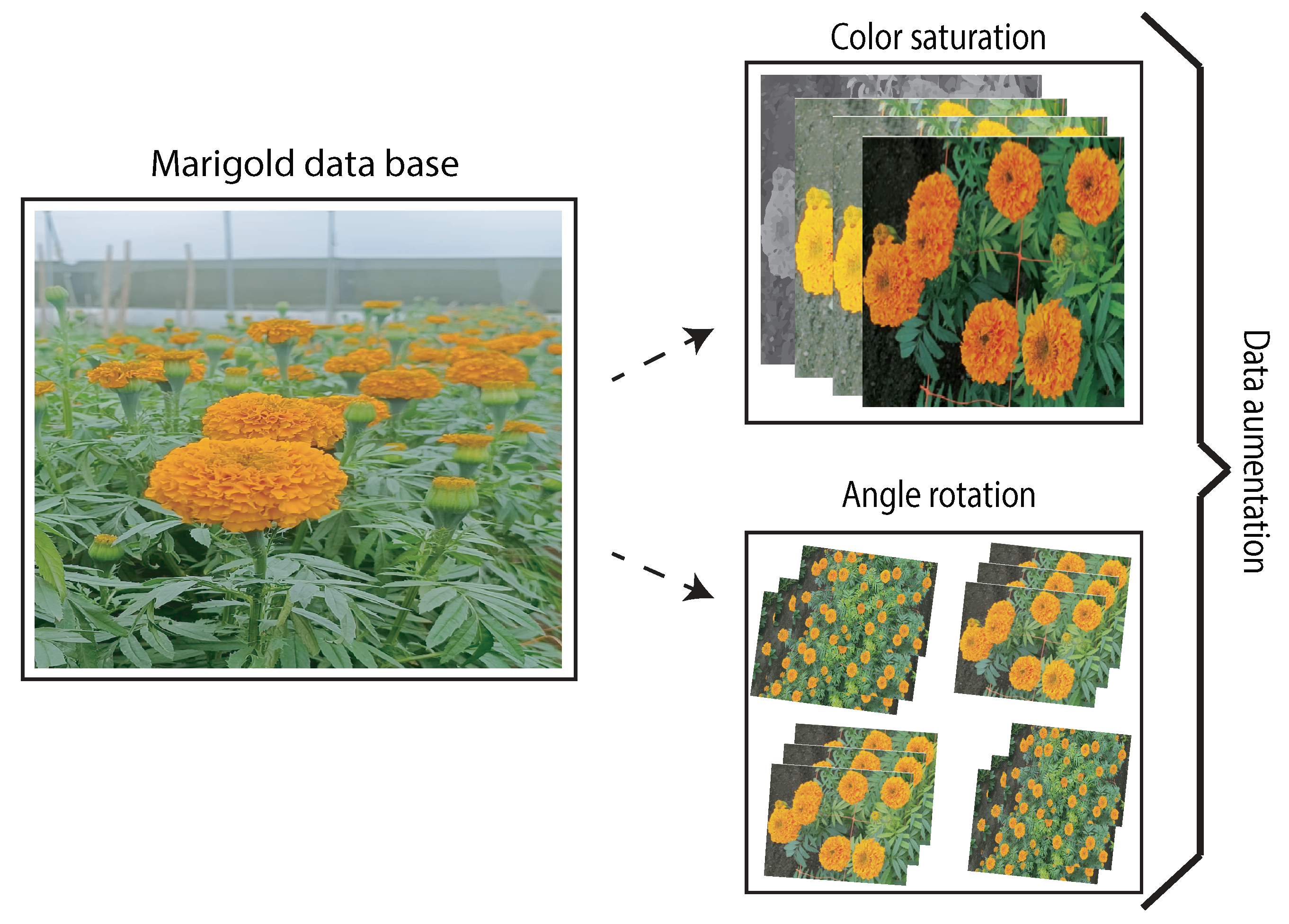

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 1–48. [Google Scholar] [CrossRef]

- Sethy, P.; Barpanda, N.; Rath, A.; Behera, S. Counting of marigold flowers using image processing techniques. Int. J. Recent Technol. Eng. 2019, 8, 385–389. [Google Scholar]

| Data Category | Training | Validation | Testing |
|---|---|---|---|
| Normal (original) | 313 | 39 | 40 |
| Data Augmentation | 627 | 77 | 78 |
| Total | 940 | 116 | 118 |
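
The original-image counts in the table above (313/39/40 out of 392) are consistent with an 80/10/10 split. A minimal sketch of such a split follows. The ratio, the random seed, and the placeholder image identifiers are assumptions made for illustration, since the exact splitting procedure is not given here.

```python
import random


def split_dataset(items, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle items and cut them into train/validation/test lists.

    The fractions are assumed values; the test set receives the remainder.
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # deterministic shuffle for repeatability
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


# Placeholder identifiers standing in for the 392 drone images.
image_ids = [f"img_{i:03d}" for i in range(392)]
train, val, test = split_dataset(image_ids)
print(len(train), len(val), len(test))  # 313 39 40
```

Rounding down the training and validation sizes and assigning the remainder to the test set reproduces the three counts exactly.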

| Augmentation Method | Training | Validation | Testing |
|---|---|---|---|
| Rotation (±9°) | 150 | 20 | 20 |
| Horizontal Flip | 150 | 20 | 20 |
| Brightness Adjustment | 160 | 20 | 20 |
| Zoom/Cropping | 167 | 17 | 18 |
| Total Augmented | 627 | 77 | 78 |
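
Geometric augmentations such as the horizontal flip must transform the bounding-box annotations together with the pixels. The sketch below illustrates this for the flip only, assuming boxes stored as (x_min, y_min, width, height) in pixels and images stored as nested lists of pixel values. Both conventions and the function names are assumptions for illustration and do not describe the tooling used in the study.

```python
def hflip_image(pixels):
    """Mirror an image (list of rows) across its vertical axis."""
    return [row[::-1] for row in pixels]


def hflip_boxes(boxes, image_width):
    """Mirror (x_min, y_min, width, height) boxes to match a flipped image.

    Only the left edge changes: the new x_min is the distance of the old
    right edge from the right border of the image.
    """
    return [(image_width - x - w, y, w, h) for (x, y, w, h) in boxes]


# Hypothetical annotation on a 416-pixel-wide image.
boxes = [(10, 20, 30, 40)]
print(hflip_boxes(boxes, image_width=416))  # [(376, 20, 30, 40)]
```

Applying the flip twice returns the original boxes, which is a convenient sanity check when validating an augmentation pipeline.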

| Characteristics | YOLOv2 | Faster R-CNN | SSD |
|---|---|---|---|
| Detection type | One stage | Two stage | One stage |
| Methodology | Fixed grids with anchors | Region generation (RPN) + classification | Multiple scales with default boxes |
| Default backbone | Darknet-19 | VGG/ResNet | ResNet |

| Model | Backbone (Used) | Optimizer (Default) | Reference |
|---|---|---|---|
| Faster R-CNN (MATLAB) | ResNet-50 (orig.: ZFNet/VGG-16) | SGD with momentum (0.9) | Ren et al. [49] |
| SSD (MATLAB) | ResNet-50 (orig.: VGG-16, SSD300) | SGD with momentum (0.9) | Liu et al. [50] |
| YOLOv2 (MATLAB) | DarkNet-19 | SGD with momentum (0.9) | Redmon et al. [35] |
| YOLOv11/12 (Ultralytics) | Default backbone | Auto (SGD/Adam) | Ultralytics [41,42] |
| RT-DETR (Ultralytics) | ResNet-50 | SGD (default Ultralytics) | Zhao et al. [43] |

| Model | Anchor Boxes | Reference |
|---|---|---|
| Faster R-CNN (ResNet-50) | 32, 64, 128, 256, 512 × (1:1, 1:2, 2:1) | Ren et al. [49] |
| SSD (ResNet-50) | , , , , , | Liu et al. [50] |
| YOLOv2 (DarkNet-19) | , , , , | Redmon et al. [35] |
| YOLOv11/12 (Ultralytics) | auto-computed by k-means clustering (dataset-specific) | Ultralytics [41] |
| RT-DETR (Ultralytics) | dynamic anchors via transformer attention | Zhao et al. [43] |
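
The Faster R-CNN entry above combines five scales with three aspect ratios, which yields 15 anchor shapes. A minimal sketch of that enumeration follows. It assumes that each ratio is read as width:height and that every anchor keeps an area of scale squared, which is a common convention but is not confirmed by the table. The function name is illustrative.

```python
import math


def make_anchors(scales=(32, 64, 128, 256, 512), ratios=(1.0, 0.5, 2.0)):
    """Return (width, height) anchor shapes for every scale/ratio pair.

    Assumed convention: ratio = width / height and width * height = scale**2.
    """
    anchors = []
    for scale in scales:
        for ratio in ratios:
            width = scale * math.sqrt(ratio)
            height = scale / math.sqrt(ratio)
            anchors.append((width, height))
    return anchors


anchors = make_anchors()
print(len(anchors))  # 15
print(anchors[0])    # (32.0, 32.0)
```

Under a different convention (for example, ratio defined as height:width) the 1:2 and 2:1 shapes simply swap places, so the set of anchors is unchanged.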

| Parameter | MATLAB Detectors (Faster R-CNN, SSD, YOLOv2) | Ultralytics Detectors (YOLOv11/12, RT-DETR) |
|---|---|---|
| Max epochs | 15 and 30 | 15 and 30 |
| Batch size | 16 | 16 |
| Input resolution | 416 × 416 px | 416 × 416 px |
| Initial learning rate | 0.001 | 0.001 |
| Optimizer | SGDM | SGD |

| Test | Optimizer | Max Epochs | Learning Rate |
|---|---|---|---|
| Test 1 | Adam | 15 | 0.001 |
| Test 2 | SGDM | 15 | 0.001 |
| Test 3 | RMSProp | 15 | 0.001 |
| Test 4 | Adam | 15 | 0.0001 |
| Test 5 | SGDM | 15 | 0.0001 |
| Test 6 | RMSProp | 15 | 0.0001 |
| Test 7 | Adam | 15 | 0.0005 |
| Test 8 | SGDM | 15 | 0.0005 |
| Test 9 | RMSProp | 15 | 0.0005 |
| Test 10 | Adam | 30 | 0.001 |
| Test 11 | SGDM | 30 | 0.001 |
| Test 12 | RMSProp | 30 | 0.001 |
| Test 13 | Adam | 30 | 0.0001 |
| Test 14 | SGDM | 30 | 0.0001 |
| Test 15 | RMSProp | 30 | 0.0001 |
| Test 16 | Adam | 30 | 0.0005 |
| Test 17 | SGDM | 30 | 0.0005 |
| Test 18 | RMSProp | 30 | 0.0005 |
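
The 18 tests form a full grid over two epoch budgets, three learning rates, and three optimizers. The sketch below regenerates the grid in the same order as the table, with the optimizer varying fastest. The dictionary keys and the function name are illustrative choices, not identifiers from the study.

```python
from itertools import product

EPOCHS = (15, 30)
LEARNING_RATES = (0.001, 0.0001, 0.0005)
OPTIMIZERS = ("Adam", "SGDM", "RMSProp")


def build_test_grid():
    """Enumerate every (epochs, learning rate, optimizer) combination.

    product() varies its last argument fastest, which matches the table:
    the optimizer cycles first, then the learning rate, then the epochs.
    """
    grid = {}
    combos = product(EPOCHS, LEARNING_RATES, OPTIMIZERS)
    for test_id, (epochs, lr, optimizer) in enumerate(combos, start=1):
        grid[f"Test {test_id}"] = {
            "optimizer": optimizer,
            "max_epochs": epochs,
            "learning_rate": lr,
        }
    return grid


grid = build_test_grid()
print(len(grid))        # 18
print(grid["Test 11"])  # {'optimizer': 'SGDM', 'max_epochs': 30, 'learning_rate': 0.001}
```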

| Model | Backbone | Optimizer | Train (15 epochs) | Val (15 epochs) | Test (15 epochs) | Train (30 epochs) | Val (30 epochs) | Test (30 epochs) |
|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | ResNet-50 | SGDM | 44.1% | 45.2% | 43.1% | 53.1% | 51.1% | 52.1% |
| SSD | ResNet-50 | SGDM | 41.2% | 41.0% | 40.3% | 38.3% | 39.4% | 38.2% |
| YOLOv2 | DarkNet-19 | SGDM | 39.6% | 38.7% | 39.1% | 66.8% | 63.0% | 62.9% |
| YOLOv11 | Default | SGD | 95.9% | 96.2% | 96.7% | 95.9% | 95.7% | 96.5% |
| YOLOv12 | Default | SGD | 96.4% | 95.9% | 96.5% | 96.5% | 95.9% | 96.3% |
| RT-DETR | ResNet-50 | SGD | 81.0% | 79.3% | 83.1% | 91.3% | 92.6% | 93.5% |

| Test | Faster R-CNN (Train) | YOLOv2 (Train) | SSD (Train) | Faster R-CNN (Val) | YOLOv2 (Val) | SSD (Val) | Faster R-CNN (Test) | YOLOv2 (Test) | SSD (Test) |
|---|---|---|---|---|---|---|---|---|---|
| Test 1 | 46.2% | 98.1% | 57.9% | 46.1% | 97.2% | 55.6% | 43.8% | 96.3% | 56.8% |
| Test 2 | 49.3% | 74.0% | 63.2% | 47.7% | 73.0% | 61.3% | 47.1% | 73.0% | 63.1% |
| Test 3 | 25.2% | 93.4% | 60.1% | 23.1% | 91.2% | 57.5% | 23.2% | 91.5% | 57.8% |
| Test 4 | 42.0% | 98.7% | 58.0% | 40.0% | 98.6% | 58.0% | 40.0% | 96.5% | 58.0% |
| Test 5 | 35.6% | 19.0% | 51.0% | 36.0% | 19.0% | 51.0% | 33.1% | 19.0% | 51.0% |
| Test 6 | 8.0% | 98.0% | 62.0% | 6.0% | 97.0% | 61.0% | 6.0% | 97.0% | 62.0% |
| Test 7 | 29.0% | 99.0% | 58.0% | 28.1% | 98.0% | 58.0% | 28.2% | 97.0% | 57.0% |
| Test 8 | 44.9% | 58.0% | 57.0% | 44.7% | 57.0% | 56.0% | 42.0% | 60.0% | 56.0% |
| Test 9 | 13.0% | 94.0% | 62.0% | 13.0% | 91.0% | 61.0% | 12.0% | 91.0% | 60.0% |
| Test 10 | 55.0% | 99.0% | 59.2% | 53.0% | 98.0% | 58.8% | 51.0% | 97.0% | 57.1% |
| Test 11 | 56.9% | 85.0% | 53.0% | 55.8% | 84.0% | 55.0% | 52.8% | 83.0% | 54.0% |
| Test 12 | 39.0% | 98.0% | 56.0% | 38.0% | 96.0% | 54.0% | 38.0% | 97.0% | 54.0% |
| Test 13 | 51.0% | 99.2% | 59.3% | 50.0% | 98.9% | 58.2% | 51.0% | 98.8% | 58.1% |
| Test 14 | 53.0% | 30.3% | 56.9% | 53.0% | 31.2% | 56.9% | 52.0% | 33.3% | 56.7% |
| Test 15 | 17.0% | 99.0% | 57.2% | 17.0% | 98.0% | 57.7% | 16.0% | 97.0% | 57.2% |
| Test 16 | 37.0% | 99.0% | 54.0% | 35.0% | 98.0% | 53.0% | 35.0% | 98.0% | 52.0% |
| Test 17 | 49.0% | 75.0% | 55.1% | 49.0% | 75.0% | 54.5% | 49.0% | 74.0% | 53.8% |
| Test 18 | 21.2% | 98.0% | 56.0% | 20.3% | 98.0% | 55.0% | 21.1% | 97.0% | 54.0% |

| Model | Test ID | Backbone | Epochs | Optimizer | Train | Validation | Test |
|---|---|---|---|---|---|---|---|
| Faster R-CNN | Test 11 | DarkNet-53 | 30 | SGDM | 56.9% | 55.8% | 52.8% |
| SSD | Test 2 | DarkNet-53 | 15 | SGDM | 63.2% | 61.3% | 63.1% |
| YOLOv2 | Test 13 | DarkNet-53 | 30 | Adam | 99.2% | 98.9% | 98.8% |
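
The best configuration for each modified detector corresponds to the highest test-set score among the 18 tests. The sketch below recovers that selection from the Testing values of the 18-test results table. The dictionary layout and function name are illustrative and are not part of the original workflow.

```python
# Test-set scores (%) for Tests 1..18, copied from the results table.
TEST_SCORES = {
    "Faster R-CNN": [43.8, 47.1, 23.2, 40.0, 33.1, 6.0, 28.2, 42.0, 12.0,
                     51.0, 52.8, 38.0, 51.0, 52.0, 16.0, 35.0, 49.0, 21.1],
    "YOLOv2": [96.3, 73.0, 91.5, 96.5, 19.0, 97.0, 97.0, 60.0, 91.0,
               97.0, 83.0, 97.0, 98.8, 33.3, 97.0, 98.0, 74.0, 97.0],
    "SSD": [56.8, 63.1, 57.8, 58.0, 51.0, 62.0, 57.0, 56.0, 60.0,
            57.1, 54.0, 54.0, 58.1, 56.7, 57.2, 52.0, 53.8, 54.0],
}


def best_test(scores):
    """Return the test label and score with the highest test-set value."""
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return f"Test {best_index + 1}", scores[best_index]


for model, scores in TEST_SCORES.items():
    print(model, *best_test(scores))
```

Selecting on the test set mirrors how the table is presented. In a stricter protocol the choice would be made on the validation column and the test score reported afterwards.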

| Model | Parameters (M) | Model Size (MB) | Inference Speed (ms/Img) |
|---|---|---|---|
| Faster R-CNN (ResNet-50, canonical) | 33.0 | 117.3 | 102.6 |
| SSD (ResNet-50, canonical) | 11.4 | 40.7 | 21.2 |
| YOLOv2 (DarkNet-19, canonical) | 9.4 | 33.3 | 8.6 |
| Faster R-CNN (DarkNet-53, modified) | 42.98 | 153.1 | 99.85 |
| SSD (DarkNet-53, modified) | 6.87 | 24.5 | 35.07 |
| YOLOv2 (DarkNet-53, modified) | 9.13 | 32.6 | 12.13 |
| YOLOv11 (Ultralytics) | 2.6 | 5.2 | 14.7 |
| YOLOv12 (Ultralytics) | 2.6 | 5.2 | 19.8 |
| RT-DETR (ResNet-50) | 32.8 | 63.1 | 28.0 |
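
Per-image latency can be converted to throughput in frames per second (FPS), which is often easier to compare against a drone camera's frame rate. The sketch below applies FPS = 1000 / latency (ms) to the values in the table above. It assumes single-image inference with no batching, and the names are illustrative.

```python
# Inference latency in milliseconds per image, copied from the table.
LATENCY_MS = {
    "Faster R-CNN (ResNet-50, canonical)": 102.6,
    "SSD (ResNet-50, canonical)": 21.2,
    "YOLOv2 (DarkNet-19, canonical)": 8.6,
    "Faster R-CNN (DarkNet-53, modified)": 99.85,
    "SSD (DarkNet-53, modified)": 35.07,
    "YOLOv2 (DarkNet-53, modified)": 12.13,
    "YOLOv11 (Ultralytics)": 14.7,
    "YOLOv12 (Ultralytics)": 19.8,
    "RT-DETR (ResNet-50)": 28.0,
}


def frames_per_second(latency_ms):
    """Convert a per-image latency in milliseconds to frames per second."""
    return 1000.0 / latency_ms


# List models from fastest to slowest.
for model, ms in sorted(LATENCY_MS.items(), key=lambda kv: kv[1]):
    print(f"{model}: {frames_per_second(ms):.1f} FPS")
```

For example, the 12.13 ms latency of the modified YOLOv2 corresponds to roughly 82 FPS under this assumption.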

| Model | Backbone | Epochs | Optimizer | Best mAP (Test) |
|---|---|---|---|---|
| Faster R-CNN | ResNet-50 (canonical) | 30 | SGDM | 52.1% |
| Faster R-CNN | DarkNet-53 (modified) | 30 | SGDM | 52.8% |
| SSD | ResNet-50 (canonical) | 15 | SGDM | 40.3% |
| SSD | DarkNet-53 (modified) | 15 | SGDM | 63.1% |
| YOLOv2 | DarkNet-19 (canonical) | 30 | SGDM | 62.9% |
| YOLOv2 | DarkNet-53 (modified) | 30 | Adam | 98.8% |
| YOLOv11 | Default (Ultralytics) | 15 | SGD | 96.7% |
| YOLOv12 | Default (Ultralytics) | 15 | SGD | 96.5% |
| RT-DETR | ResNet-50 (Ultralytics) | 30 | SGD | 93.5% |

| Category | Model | Backbone | Test F1 (%) |
|---|---|---|---|
| Modified | Faster R-CNN | DarkNet-53 | 64.7 |
| Modified | SSD | DarkNet-53 | 66.1 |
| Modified | YOLOv2 | DarkNet-53 | 97.9 |
| Modern | YOLOv11 | Default | 93.0 |
| Modern | YOLOv12 | Default | 93.1 |
| Modern | RT-DETR | ResNet-50 | 89.5 |
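
The F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below computes it from detection counts. The counts in the example are hypothetical and are not results from the study, and the function names are illustrative.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: 90 correct detections, 10 false alarms, 5 missed flowers.
p, r = precision_recall(tp=90, fp=10, fn=5)
print(f"precision={p:.3f} recall={r:.3f} F1={f1_score(p, r):.3f}")
```

Because the harmonic mean penalizes imbalance, a detector with many false alarms or many missed flowers scores lower on F1 than its better metric alone would suggest.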

| Reference | Model | Backbone | Best mAP (Test) | Speed (ms/Img) |
|---|---|---|---|---|
| This work | YOLOv2 (canonical) | DarkNet-19 | 62.9% | 8.6 |
| This work | YOLOv2 (modified) | DarkNet-53 | 98.8% | 12.13 |
| This work | YOLOv11 (modern) | Default | 96.7% | 14.7 |
| Sethy et al. [54] | Classical (HSV+CHT) | Backbone A | 95.6% | – |
| Patel et al. [20] | Faster R-CNN | ResNet-50 (TL COCO) | 88.71% | 4.31 |
| Patel et al. [20] | SSD | MobileNet | 74.30% | 0.64 |
| Ma et al. [19] | SCS-YOLO-Seg | StarNet + C2f-Star + Seg-head | 93.3% | 100 |
| Fan et al. [29] | YOLOv7 (lite, pruned) | DSConv + SPPF + pruning | 93.9% | 166.7 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vilcapoma, P.; Vásconez, I.N.; Prado, A.J.; Moya, V.; Vásconez, J.P. Drone-Based Marigold Flower Detection Using Convolutional Neural Networks. Processes 2025, 13, 3169. https://doi.org/10.3390/pr13103169
