1. Introduction

With the improvement of living standards, the demand for personalized clothing and apparel products has increased significantly. The deep integration of information technology and industrialization, together with stronger digital capabilities, has greatly improved the industry’s resilience and competitiveness and has made it feasible to meet personalized demands [1]. Improving labor productivity has therefore become a key focus of digital transformation in the cotton spinning industry [2]. The textile industry is likewise seeking development through digital transformation, stronger independent innovation capabilities, and an optimized industrial structure. However, personalization has also brought challenges such as increased production demands and labor shortages [3], and research on upgrading the textile industry with artificial intelligence remains relatively limited. Some textile products, such as 3D cotton cups, whose indentations require a complex curved machining process, still rely on manual segmentation.

Some researchers have attempted to reduce cutting errors by optimizing cutting paths [4] and cutting tool models [5,6,7], but these approaches offer only limited gains in cutting precision. Precise cutting of cotton cup indentations fundamentally relies on fast and accurate identification of the indentation trajectories. However, textile materials such as cotton are highly flexible, and the production of 3D cotton cups involves the coupling of rigid needle-type, knife-type, curved-type, and other mechanical parts, so 3D segmentation and processing must be achieved without destroying the material’s softness. In addition, personalized requirements have increased the variety of 3D cotton cup shapes, which makes cutting with fixed molds inefficient and leads to a large number of molds and high costs. In this study, we therefore develop a machine that segments 3D cotton cups accurately and automatically based on a deep learning model.

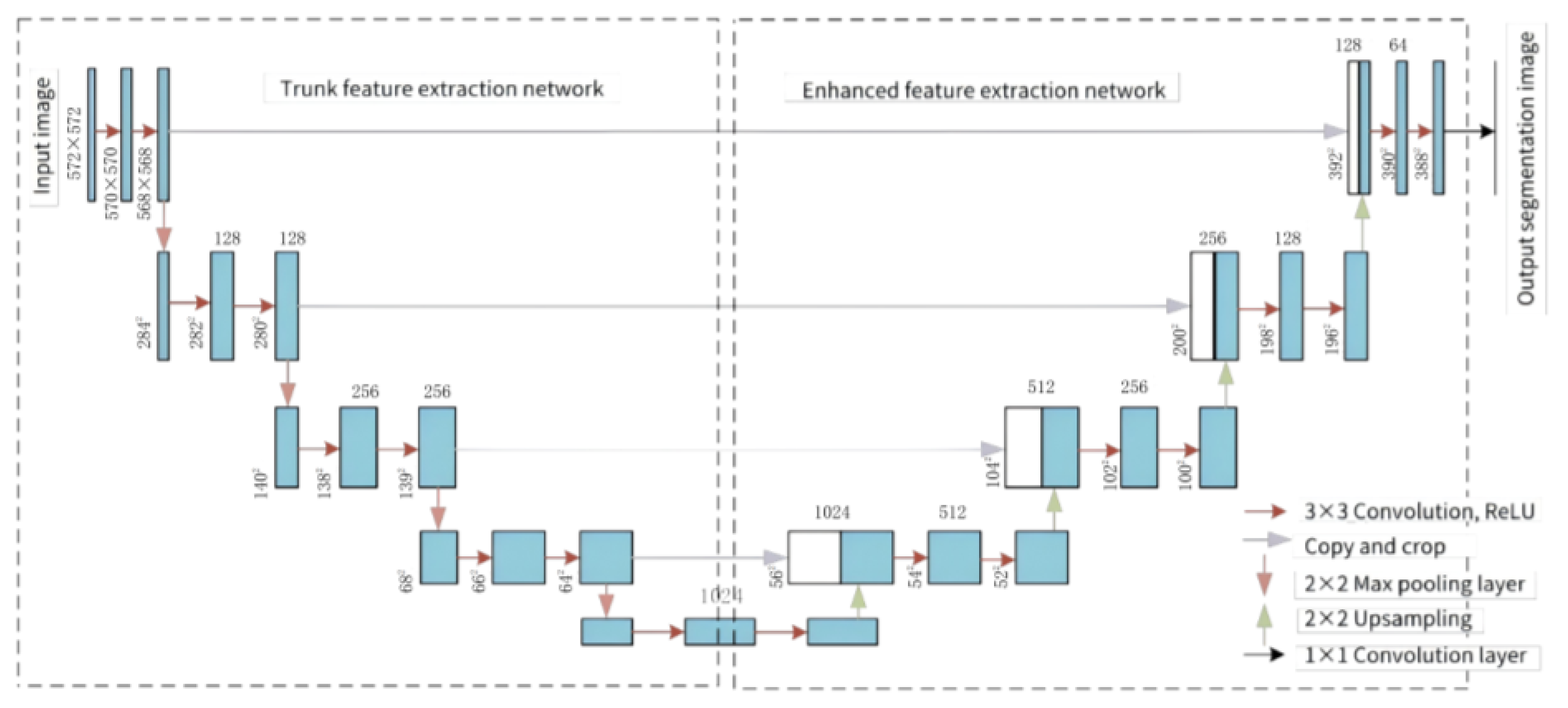

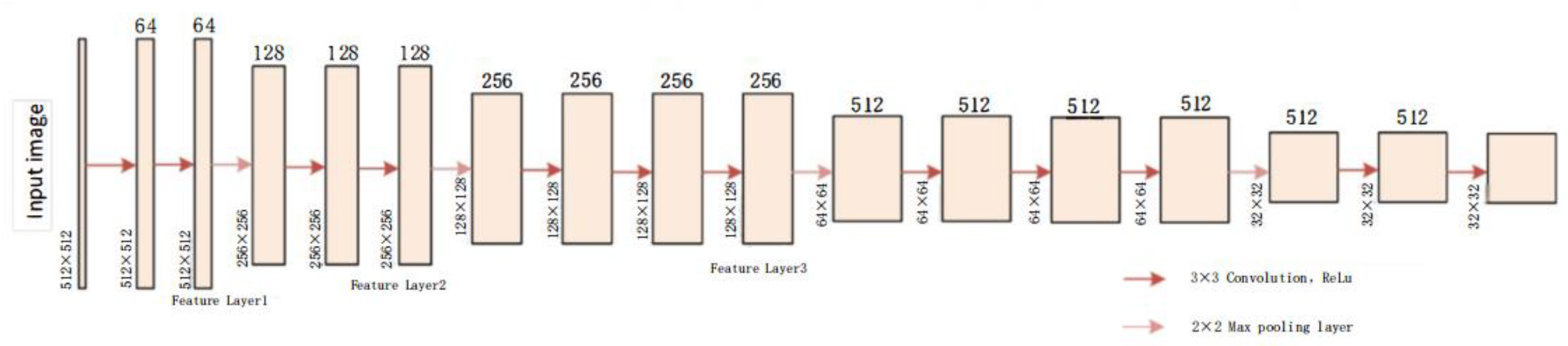

The most commonly used deep learning image segmentation algorithms include UNet [8], PSPNet [9], and DeepLabv3+ [10], all of which have shown excellent performance in image segmentation tasks. UNet, a variant of the fully convolutional network, is one of the simplest and most efficient end-to-end segmentation algorithms. It consists mainly of a primary feature extraction part (the encoder) and an enhanced feature extraction part (the decoder). Zhao et al. [11] proposed a region-based comprehensive matching method that extracts color information from different regions of clothing materials and retrieves matching areas through weighted region matching. Jiang et al. [12] proposed a deep learning-based method that combines dilated convolution features with traditional convolution features to achieve pixel-level material segmentation across the entire image. Zhong et al. [13] developed the FMNet algorithm, which uses a multi-directional attention mechanism for clothing image segmentation; it learns semantic information from three perspectives (space, channel, and category), enabling accurate and fast segmentation of clothing images. Deng et al. [14] improved the detection of unclear cracks by adding attention modules and modified residual connections.

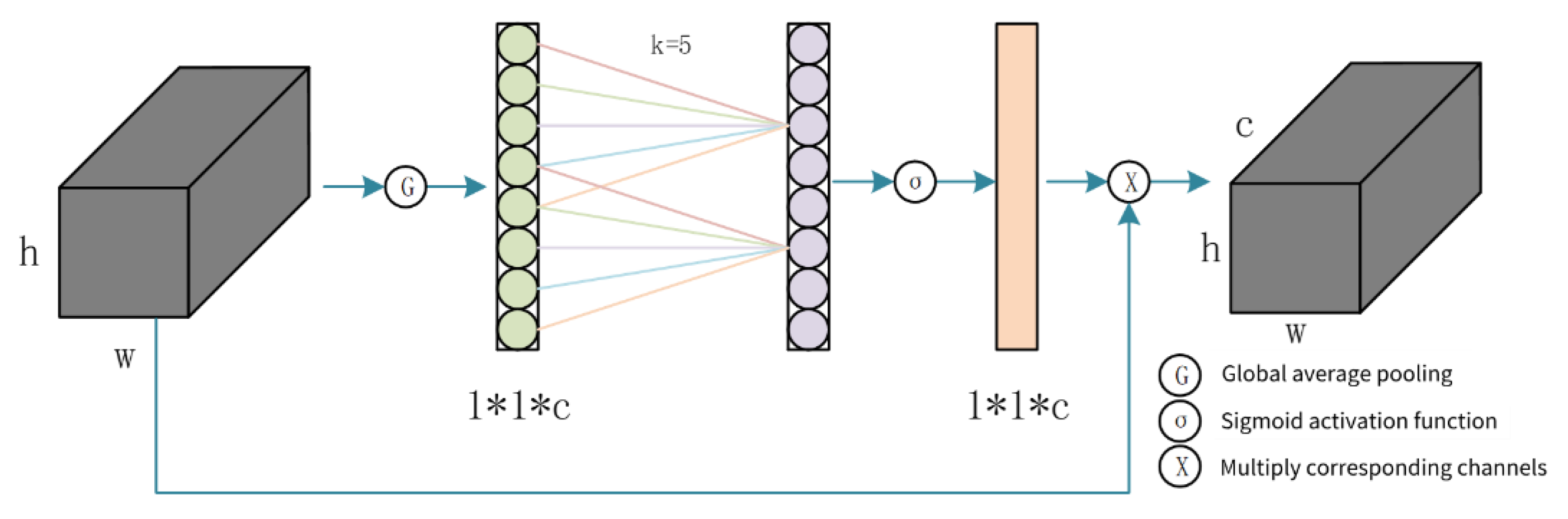

There are also many recent studies on improving deep learning models with the PSP structure, ECA, ASPP, Leaky-ReLU, and similar techniques. For example, Ge et al. [15] improved graph neural network (GNN) classification with the PSP technique, demonstrated the performance gains of the PSP framework in few-shot scenarios, and pointed out that PSP is an effective way to construct prototype vectors from few samples. Shu et al. [16] improved U-Net with ECA for fetal ultrasound cerebellar segmentation, maintaining accurate segmentation while significantly reducing the number of model parameters, and pointed out that ECA effectively addresses the problem of “balancing high accuracy with low parameter redundancy” in segmentation tasks. Ding et al. [17] used ASPP to improve the structure of deep learning models and achieve efficient real-time semantic segmentation in complex scenes, and pointed out that ASPP can significantly improve the segmentation performance of DeepLabv3+, making it a good choice for segmentation tasks. Ma et al. [18] improved the performance of neural networks in image classification, object detection, and semantic segmentation through customized activation functions such as Leaky-ReLU and meta-ACON, and pointed out that the activation mechanism can significantly improve the performance of models of different scales across computer vision tasks, making it an effective direction for improving traditional activation functions. These studies demonstrate the usefulness of deep learning for tasks such as 3D cotton cup image segmentation [19]. However, existing research has mainly addressed accurate target segmentation under regularized conditions; accurate cutting of soft materials such as cotton cups under rigid cutting control has rarely been reported [20], and, in particular, deep learning techniques for automated CNC (computer numerical control) cutting of flexible garments have not yet been discussed.
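Because ECA recurs throughout this paper, a minimal pure-Python sketch of the mechanism as described in the ECA-Net paper may help: global average pooling per channel, a shared 1D convolution across neighbouring channels with an adaptively chosen odd kernel size (γ = 2 and b = 1 are the ECA-Net defaults), a sigmoid, and channel-wise rescaling. The nested-list tensor layout and the fixed convolution taps are assumptions for illustration only; in a real network the taps are learned, and this is not the authors' PyTorch module.

```python
import math


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive kernel size from ECA-Net: |(log2(C) + b) / gamma|, forced to be odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def eca(feature_map, weights):
    """Efficient Channel Attention on a feature map laid out as [C][H][W] nested lists.

    `weights` are the k taps (k odd) of the shared 1D convolution across channels.
    They are learned in a real network; here they are hypothetical fixed values.
    Returns (rescaled feature map, per-channel attention weights).
    """
    c, k = len(feature_map), len(weights)
    pad = k // 2
    # 1) Global average pooling: one scalar descriptor per channel.
    desc = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_map]
    # 2) 1D convolution over neighbouring channels (zero padding, no bias), so each
    #    channel's attention depends only on its k nearest channels.
    padded = [0.0] * pad + desc + [0.0] * pad
    logits = [sum(weights[j] * padded[i + j] for j in range(k)) for i in range(c)]
    # 3) Sigmoid turns the logits into attention weights in (0, 1).
    attn = [1.0 / (1.0 + math.exp(-z)) for z in logits]
    # 4) Rescale every value in channel i by attn[i].
    out = [[[v * attn[i] for v in row] for row in ch] for i, ch in enumerate(feature_map)]
    return out, attn


fmap = [[[0, 0], [0, 0]], [[1, 1], [1, 1]], [[2, 2], [2, 2]]]  # toy 3-channel 2x2 map
out, attn = eca(fmap, [0.25, 0.5, 0.25])
```

The key design point is that ECA avoids the fully connected bottleneck of SE-style attention: each channel's weight comes from a k-tap convolution over its neighbours, which adds only k parameters per ECA block.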

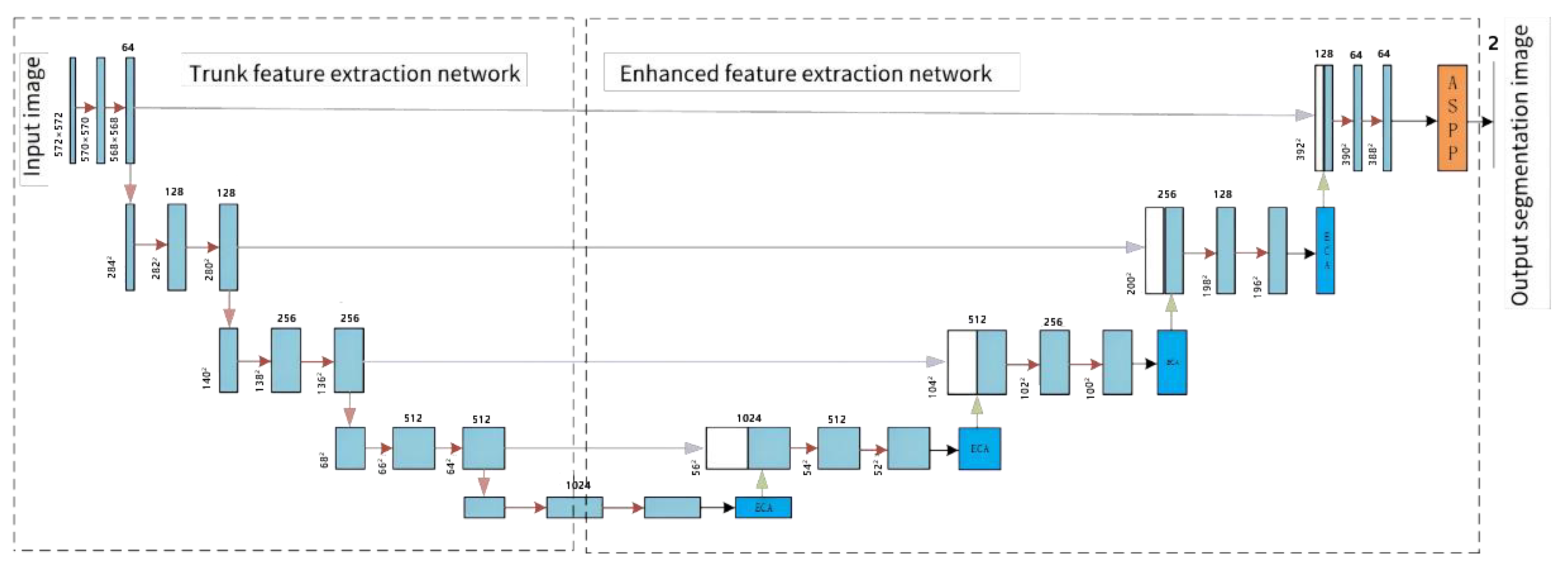

To address the aforementioned issues, the main contribution of this paper is an improved UNet algorithm (UNet-IV) optimized specifically for segmenting 3D cotton cup indentations. The innovations of this paper are as follows: (1) the combination of VGG16, the ECA (Efficient Channel Attention) mechanism, the ASPP (Atrous Spatial Pyramid Pooling) module, and the Leaky-ReLU activation function significantly enhances the model’s segmentation accuracy and robustness for cotton cup contours in complex backgrounds; (2) the algorithm has been successfully applied to an industrial CNC cutting machine, achieving a fully automated process from image recognition to physical cutting; (3) error analysis of the cut products with a coordinate measuring machine (CMM) verifies the high precision and feasibility of the method in industrial applications. The work in this paper focuses on algorithmic innovation and its validation in a specific industrial scenario.
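To make the ASPP idea concrete, the following is a 1D pure-Python sketch of parallel atrous (dilated) branches, together with the Leaky-ReLU activation. Several details are assumptions not stated in the paper: the dilation rates (6, 12, 18) are the values commonly used with DeepLabv3, the Leaky-ReLU negative slope of 0.01 is PyTorch's default, and applying Leaky-ReLU on each branch output is only one plausible placement. The 1×1 branch, image-pooling branch, and final projection of a full ASPP module are omitted.

```python
def effective_kernel(k: int, rate: int) -> int:
    """Span covered by a k-tap kernel with dilation `rate`: k + (k - 1) * (rate - 1)."""
    return k + (k - 1) * (rate - 1)


def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    """Leaky-ReLU: keeps a small slope for negative inputs instead of zeroing them."""
    return x if x > 0 else negative_slope * x


def atrous_conv1d(signal, weights, rate):
    """'Same'-size 1D atrous (dilated) convolution with zero padding (odd len(weights))."""
    half = (len(weights) - 1) * rate // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, w in enumerate(weights):
            pos = i - half + j * rate  # taps are `rate` samples apart
            if 0 <= pos < len(signal):
                acc += w * signal[pos]
        out.append(acc)
    return out


def aspp_1d(signal, weights, rates=(6, 12, 18)):
    """Parallel atrous branches at several rates (hypothetical rates and placement).

    Every branch keeps the input length, so the outputs can be stacked channel-wise,
    as ASPP does before its 1x1 projection.
    """
    return [[leaky_relu(v) for v in atrous_conv1d(signal, weights, r)] for r in rates]


branches = aspp_1d([float(v) for v in range(-20, 20)], [1.0, 1.0, 1.0])
```

With a 3-tap kernel, the rates 6, 12, and 18 give spans of 13, 25, and 37 samples while each branch still has only three weights, which is how ASPP gathers multi-scale context without extra parameters or downsampling.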

1.1. Image Collection

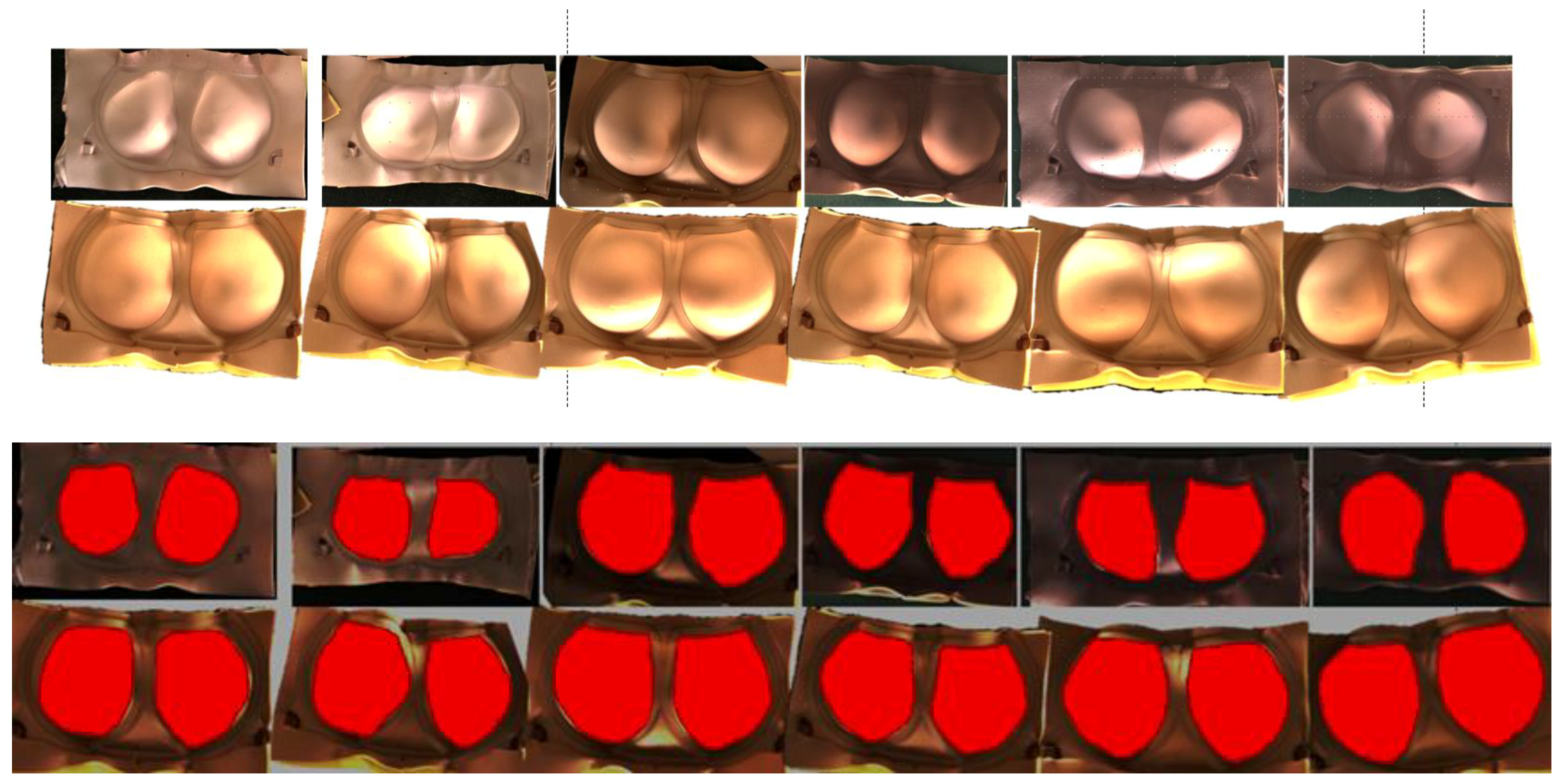

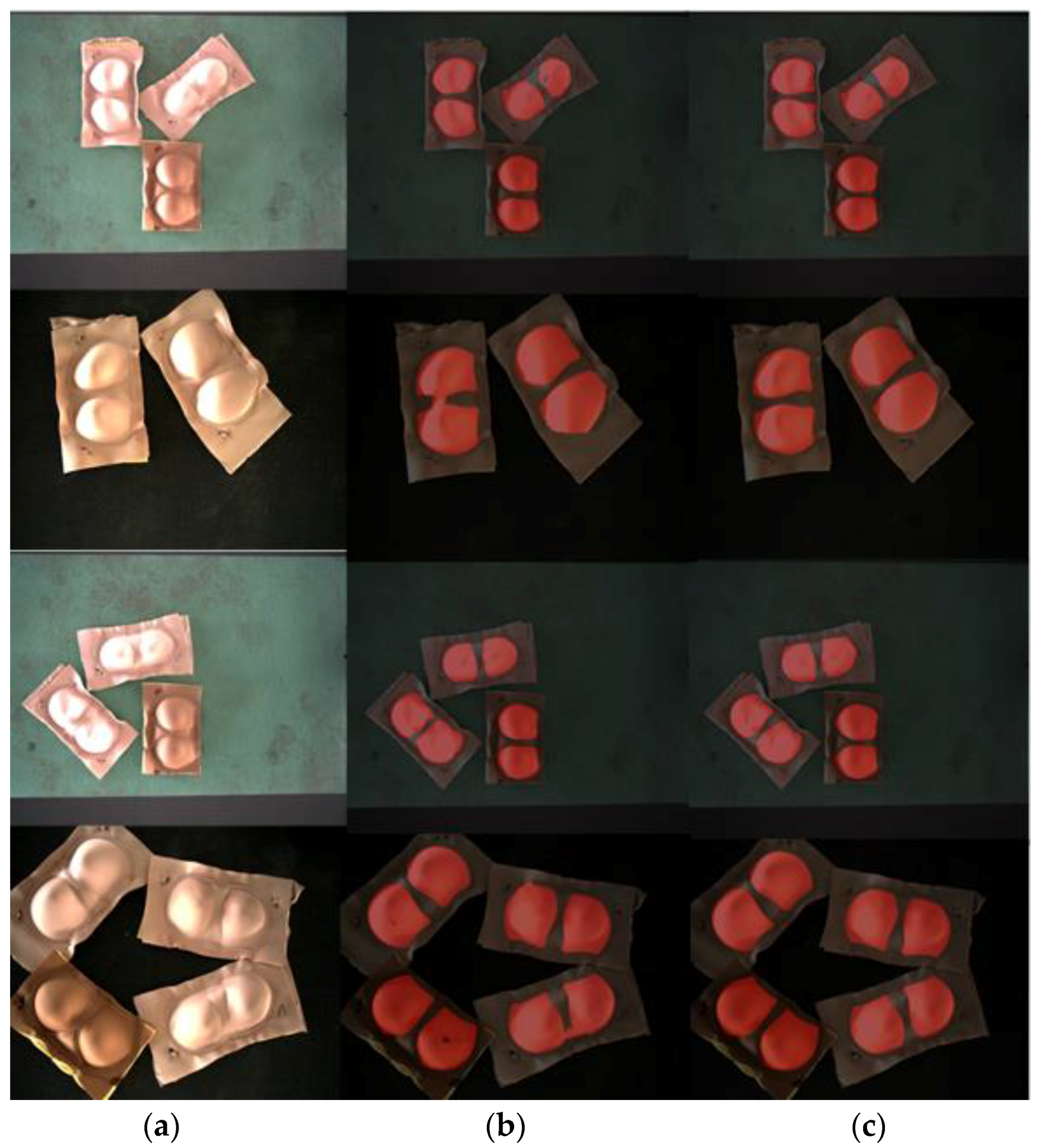

A Hikvision industrial camera (MV-CA060-11GM) (Hikvision Digital Technology Co., Ltd., Hangzhou, China) with an effective resolution of 6 megapixels was used for image acquisition. The camera was positioned 1.2 m from the experimental shooting platform, and 632 images were collected, each with a resolution of 2048 × 2048 pixels. To enable the network to learn target features under various background noise conditions, the collected cotton cup images cover different lighting conditions, different numbers of 3D cotton cups, and different levels of image clarity. Some of the collected images are shown in Figure 1.

1.2. Image Labeling

Image labeling: From the 632 cotton cup images, 500 good-quality images of 3D cotton cups were selected, and the semantic segmentation dataset was labeled with the LabelMe software; 450 of these images were labeled, and the remaining 50 served as the test set, as shown in Figure 2. This annotated dataset of cotton cup indentations provides the ground truth needed to train and evaluate the subsequent machine learning algorithms. Unlike labeling for object detection, where the target region only needs to be enclosed in a rectangular box, labeling for semantic segmentation requires zooming into the image and marking the target contour point by point to annotate the cotton cup indentation at sub-pixel level. The annotation is completed by connecting all labeled points into a closed ring, and any area outside the closed region is treated as background. Throughout the labeling process, each image must be inspected carefully to ensure that every cotton cup region is labeled accurately and that no surrounding background is mistakenly included in the cotton cup region. The JSON files recording the labeling results (ground-truth mask and image name) were divided into training, validation, and test sets at a ratio of 7:2:1.
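The labeling and splitting steps above can be sketched in pure Python as follows. The sketch assumes the usual LabelMe JSON layout (`imageHeight`, `imageWidth`, and a `shapes` list whose entries carry `points` as [x, y] pairs and a `shape_type`), rasterizes each closed polygon with the even-odd ray-casting rule (everything outside the ring stays background), and assumes the 7:2:1 ratio is applied to all 500 selected images, which gives 350/100/50 images and matches the 50 test images mentioned above. The file names and random seed are hypothetical; the authors' actual conversion tool is not described.

```python
import json
import random


def polygon_to_mask(points, height, width):
    """Rasterize one closed polygon into a binary mask (1 = cotton cup, 0 = background).

    Uses the even-odd ray-casting rule evaluated at pixel centres.
    """
    mask = [[0] * width for _ in range(height)]
    n = len(points)
    for y in range(height):
        cy = y + 0.5
        for x in range(width):
            cx = x + 0.5
            inside = False
            for i in range(n):
                x1, y1 = points[i]
                x2, y2 = points[(i + 1) % n]  # wrap around: the ring is closed
                # Does a horizontal ray from (cx, cy) to the right cross this edge?
                if (y1 > cy) != (y2 > cy):
                    x_cross = x1 + (cy - y1) * (x2 - x1) / (y2 - y1)
                    if cx < x_cross:
                        inside = not inside
            mask[y][x] = 1 if inside else 0
    return mask


def mask_from_labelme(json_text):
    """Build one binary mask from a LabelMe-style JSON string (polygon shapes only)."""
    data = json.loads(json_text)
    h, w = data["imageHeight"], data["imageWidth"]
    mask = [[0] * w for _ in range(h)]
    for shape in data["shapes"]:
        if shape.get("shape_type", "polygon") != "polygon":
            continue
        poly = polygon_to_mask(shape["points"], h, w)
        mask = [[a | b for a, b in zip(r1, r2)] for r1, r2 in zip(mask, poly)]
    return mask


def split_7_2_1(names, seed=0):
    """Shuffle once with a fixed seed and split 70/20/10 into train/val/test."""
    names = sorted(names)
    random.Random(seed).shuffle(names)
    n_train = len(names) * 7 // 10  # integer arithmetic avoids float rounding
    n_val = len(names) * 2 // 10
    return names[:n_train], names[n_train:n_train + n_val], names[n_train + n_val:]
```

Fixing the seed makes the split reproducible, so every ablation group can be trained and evaluated on exactly the same images.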

3. Experiment and Result Analysis

3.1. Experimental Environment and Evaluation Metrics

The hardware configuration used in the experiment is as follows: GPU, NVIDIA GeForce RTX 3060 with 6 GB of video memory; processor, Intel Core i7-11800H. The software environment is 64-bit Windows 10, Python 3.9.12, and PyTorch 1.11.0.

The experimental results are evaluated with commonly used image segmentation metrics, including precision, recall, mean intersection over union (mIoU), and mean pixel accuracy (mPA) [22,23,24]. The formulas for these metrics are as follows:
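The metric formulas did not survive extraction. For reference, the standard definitions consistent with the TP/FP/FN description that follows are given below; we assume, but cannot confirm from this section, that the mIoU and mPA expressions correspond to the Equations (7) and (8) cited in the text. Here $p_{ij}$ denotes the number of pixels whose true class is $i$ and predicted class is $j$, and $k + 1$ is the number of classes including background.

```latex
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}

\mathrm{mIoU} = \frac{1}{k+1} \sum_{i=0}^{k}
  \frac{p_{ii}}{\sum_{j=0}^{k} p_{ij} + \sum_{j=0}^{k} p_{ji} - p_{ii}}

\mathrm{mPA} = \frac{1}{k+1} \sum_{i=0}^{k} \frac{p_{ii}}{\sum_{j=0}^{k} p_{ij}}
```

For each class $i$, $p_{ii}$ plays the role of TP, $\sum_j p_{ij} - p_{ii}$ of FN, and $\sum_j p_{ji} - p_{ii}$ of FP, so the mIoU term is $TP/(TP + FP + FN)$ per class.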

Here, TP is the number of pixels correctly predicted as the 3D cotton cup surface; FP is the number of background pixels incorrectly predicted as the 3D cotton cup surface; and FN is the number of 3D cotton cup surface pixels incorrectly predicted as background. In Equation (7), k + 1 denotes k target classes plus one background class. Equation (8) gives the mean pixel accuracy, i.e., the proportion of correctly classified pixels in each class averaged over all classes, which is used to measure the overall performance of the network.
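As a worked illustration of these definitions, the sketch below computes all four metrics from a pixel confusion matrix. The layout (rows = ground truth, columns = prediction, index 0 = background, index 1 = cotton cup surface) and the toy counts are assumptions for illustration; we also assume precision and recall are reported for the cotton cup class, whereas the paper may average them over classes.

```python
def segmentation_metrics(conf):
    """Precision/recall (for class 1, the cotton cup surface), mIoU and mPA.

    conf[i][j] = number of pixels whose ground-truth class is i and predicted class is j,
    for k + 1 classes (index 0 = background). Every class must occur at least once.
    """
    n = len(conf)
    ious, pas = [], []
    for i in range(n):
        tp = conf[i][i]
        fn = sum(conf[i]) - tp                       # class-i pixels predicted as another class
        fp = sum(conf[j][i] for j in range(n)) - tp  # other-class pixels predicted as class i
        ious.append(tp / (tp + fp + fn))             # per-class IoU
        pas.append(tp / (tp + fn))                   # per-class pixel accuracy
    tp1 = conf[1][1]
    precision = tp1 / sum(conf[j][1] for j in range(n))
    recall = tp1 / sum(conf[1])
    return {"precision": precision, "recall": recall,
            "mIoU": sum(ious) / n, "mPA": sum(pas) / n}


# Toy counts (hypothetical): 100 background and 100 cotton cup pixels.
metrics = segmentation_metrics([[90, 10], [5, 95]])
```

For these toy counts, recall is 0.95 and mPA is (0.90 + 0.95) / 2 = 0.925, which shows why mPA for the foreground class coincides with its recall.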

3.2. Ablation Experiment

The improved UNet-IV uses the VGG16 network as the backbone for feature extraction via transfer learning, incorporates the ECA attention mechanism and the ASPP module, and uses the Leaky-ReLU activation function. To compare the impact of the improved modules on the overall performance of the network and to analyze the effect of each module on image segmentation [25,26], an ablation experiment was conducted. To ensure a fair comparison, the same dataset and training parameters were used for every group. The model was trained for 100 epochs in total. The first 50 epochs used frozen training: the backbone was frozen so that computing resources were devoted to training the parameters of the subsequent layers, which reduces training complexity and aims to improve generalization. The remaining 50 epochs used unfrozen training. The batch size was set to 2 throughout. The results of the experiment are shown in Table 1.
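The freeze/unfreeze schedule described above can be sketched as follows. In PyTorch, freezing is usually done by setting `requires_grad = False` on the backbone parameters; this pure-Python sketch only models which parameters are trainable at each epoch. The `backbone.` name prefix and the parameter names are hypothetical, while the epoch counts and batch size come from the text.

```python
FREEZE_EPOCHS = 50  # epochs with the VGG16 backbone frozen (from the text)
TOTAL_EPOCHS = 100  # total training epochs (from the text)
BATCH_SIZE = 2      # batch size used throughout (from the text)


def trainable_params(param_names, epoch):
    """Return the parameter names that receive gradient updates at a given epoch.

    Names starting with the hypothetical prefix 'backbone.' belong to the pretrained
    VGG16 encoder: they are frozen for the first FREEZE_EPOCHS epochs and unfrozen
    afterwards (the equivalent of toggling `requires_grad` in PyTorch).
    """
    if not 0 <= epoch < TOTAL_EPOCHS:
        raise ValueError("epoch out of range")
    frozen = epoch < FREEZE_EPOCHS
    return [n for n in param_names if not (frozen and n.startswith("backbone."))]


names = ["backbone.conv1.weight", "decoder.up1.weight", "head.weight"]
```

Keeping the pretrained encoder fixed at first lets the randomly initialized decoder settle before its gradients can disturb the transferred VGG16 features.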

In

Table 1, checkmarks (√) and crosses (×) indicate whether such models are adopted or not. Although the improvements in the accuracy indicators of each model in

Table 1 seem minor (for example, UNet-IV has improved mIoU by 0.26% compared to the baseline UNet), such differences are of great significance in large-scale and high-demand industrial production. As shown in

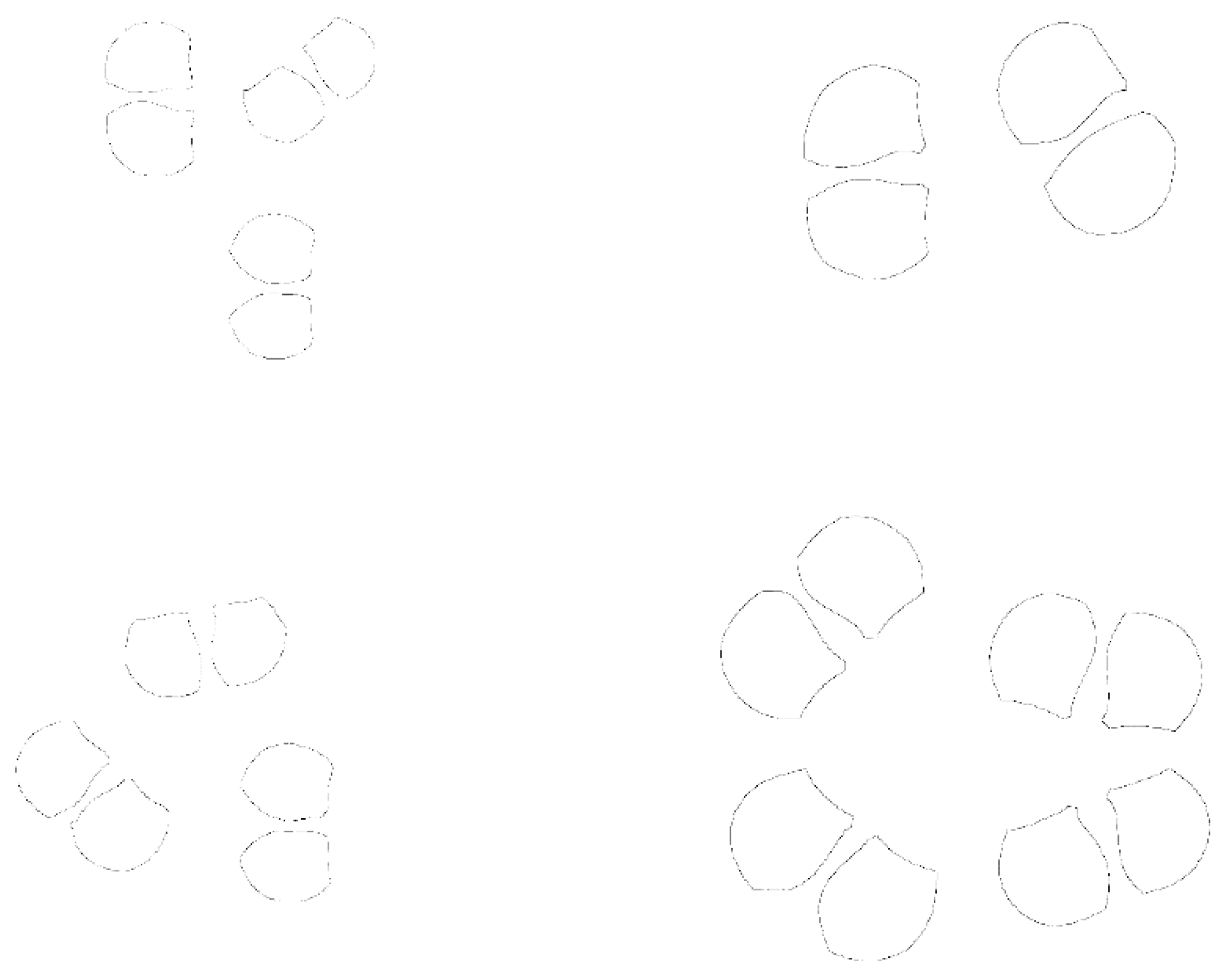

Figure 9, these numerical improvements are directly reflected in the quality of the segmentation results: the UNet-IV model (

Figure 9c) can generate smoother and more complete edge contours, effectively avoiding the edge sawtooth, breakage, and false detection problems that occur in the baseline UNet model (

Figure 9b). In the automated cutting process, these defects can lead to an increase in the scrap rate and material waste. Therefore, even seemingly minor improvements in precision are crucial for ensuring the quality of the final product and controlling production costs.

In Table 1, all network models use VGG16 as the backbone for feature extraction, and all changes below are relative to the original network. UNet-I adds the ECA attention mechanism, improving precision and recall by 0.07 and 0.2 percentage points, respectively, mIoU by 0.1 points, and mPA by 0.06 points. UNet-II strengthens feature extraction by adding the ASPP module, increasing precision and recall by 0.03 and 0.06 points, respectively, and mPA by 0.16 points, while mIoU decreases by 0.07 points. UNet-III introduces the Leaky-ReLU activation function, which decreases precision by 0.02 points, increases recall by 0.01 points, and increases mIoU and mPA by 0.03 and 0.01 points, respectively. UNet-IV, the final improved model, increases precision and recall by 0.1 and 0.27 points, respectively, mIoU by 0.26 points, and mPA by 0.24 points. The final model reaches a precision of 99.53%, a recall of 99.69%, an mIoU of 99.18%, and an mPA of 99.73%, which meets the performance requirements for detecting indentations in 3D cotton cups. Although individual modules have mixed effects on some metrics, combining them yields the best overall performance, and UNet-IV performs best among the tested variants.

3.3. Comparison of Different Network Models

To further evaluate the improved algorithm, its mIoU and mPA on the dataset were compared with those of PSPNet, UNet++, and DeepLabV3+. The results are shown in Table 2. UNet-IV improves mIoU and mPA by 0.35 and 0.22 percentage points, respectively, over PSPNet; by 0.21 and 0.17 points over UNet++; and by 2.35 and 2.41 points over DeepLabV3+. These results show that UNet-IV outperforms PSPNet, UNet++, and DeepLabV3+ in overall segmentation accuracy, confirming the value of the proposed improvements and providing a solid foundation for the practical detection of 3D cotton cup indentations.

In terms of the four metrics mIoU, mPA, recall, and F1, the accuracy ranking of the models is UNet-IV (F1 score: 99.11%) > UNet++ (98.85%) > PSPNet (98.50%) > DeepLabV3+ (96.25%), and the other three metrics show a similar ranking. UNet-IV therefore achieves the best accuracy and is better suited to segmenting flexible, complex objects such as cotton cups; this also shows that it obtains the best results under the dataset and training conditions of this paper [27]. In addition, judging by parameter count, FLOPs, training time, and inference time, the efficiency ranking is DeepLabV3+ (the shortest inference time, 180 ms per image, i.e., about 1/0.18 s ≈ 5.6 Hz) > UNet++ (240 ms) > UNet-IV (280 ms) > PSPNet (320 ms). DeepLabV3+ is the fastest but the least accurate, UNet++ offers balanced efficiency, UNet-IV is slower but the most accurate, and PSPNet is the least efficient with average accuracy; the parameter counts, FLOPs, and training times lead to a similar conclusion. UNet-IV thus offers the best accuracy and is faster than PSPNet, although it is still about 100 ms slower per image than DeepLabV3+. In practice, a 100 ms delay has little impact on the subsequent CNC start-up and cutting process, whereas higher segmentation accuracy yields higher cutting precision and a lower defect rate, which is very important for cost control in industrial production.
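The accuracy and speed figures above can be checked with a few lines of arithmetic. The sketch computes F1 as the harmonic mean of precision and recall and converts per-image latency into throughput. Applying the formula to the UNet-IV precision (99.53%) and recall (99.69%) reported in Table 1 gives about 99.61%, not the 99.11% quoted here, so the quoted F1 may have been computed differently (for example, averaged per image or per class), which the text does not specify.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units in and out, e.g. %)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def throughput_hz(latency_ms: float) -> float:
    """Images per second for a given per-image inference time in milliseconds."""
    return 1000.0 / latency_ms


# Per-image inference times (ms) as reported in the text.
LATENCY_MS = {"DeepLabV3+": 180, "UNet++": 240, "UNet-IV": 280, "PSPNet": 320}

f1_unet_iv = f1_score(99.53, 99.69)                  # Table 1 precision/recall of UNet-IV
speed_rank = sorted(LATENCY_MS, key=LATENCY_MS.get)  # fastest first
gap_ms = LATENCY_MS["UNet-IV"] - LATENCY_MS["DeepLabV3+"]
print(f"F1 = {f1_unet_iv:.2f}%, gap = {gap_ms} ms, "
      f"UNet-IV at {throughput_hz(LATENCY_MS['UNet-IV']):.2f} images/s")
```

Running it prints `F1 = 99.61%, gap = 100 ms, UNet-IV at 3.57 images/s`. Whether the 100 ms gap matters depends on the CNC cutting cycle time per cup, which this section does not report.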

3.4. Analysis of Test Results

To further demonstrate the effectiveness of the UNet-IV model, a more in-depth analysis was conducted. Comparing the UNet and UNet-IV models across different metrics shows that UNet-IV outperforms the original UNet in detection accuracy and robustness. In addition, application experiments were conducted in which UNet-IV was used to detect indentations in 3D cotton cups across different scenarios, and the detection results were evaluated and analyzed. The detection results are shown in Figure 10. Figure 9a shows the original image, Figure 9b shows the detection results of the UNet model, and Figure 9c shows the detection results of the UNet-IV model. As observed in Figure 10, the UNet model’s results show incomplete detection of the 3D cotton cup surface, rough edges, and false detections on the surface, which lead to significant errors in the extracted 3D cotton cup indentations. In contrast, the UNet-IV results are complete and accurate, with smooth edges and no isolated points, so complete 3D cotton cup indentations are extracted for the subsequent cutting process. This demonstrates the strong practical value of the UNet-IV model.

Future research will enrich the 3D cotton cup dataset with samples captured under extreme conditions (e.g., strong reflection or uneven texture) and explore dynamic adaptive segmentation techniques to improve the model’s stability in variable industrial environments. The UNet-IV model will also be made lighter (e.g., via depthwise separable convolution or knowledge distillation) to meet the real-time requirements of high-speed production lines and to enable deployment on edge computing devices. Multi-sensor data fusion (integrating 3D point cloud, force, and temperature data) and extension to other categories of flexible materials (e.g., sponge pads and non-woven fabrics) will be explored to broaden the method’s application scope and improve comprehensive quality control.
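To indicate how much depthwise separable convolution could shrink the model, the sketch below compares the weight counts of a standard k × k convolution with its depthwise (one k × k filter per input channel) plus pointwise (1 × 1) replacement. The 3 × 3, 512-to-512 layer is an illustrative choice matching the width of VGG16's deepest blocks; the actual savings for UNet-IV would depend on which layers are replaced.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k conv (one filter per input channel) + pointwise 1 x 1 conv."""
    return k * k * c_in + c_in * c_out


# Illustrative layer: 3 x 3 convolution, 512 input and 512 output channels.
std = standard_conv_params(3, 512, 512)
dws = depthwise_separable_params(3, 512, 512)
print(std, dws, round(std / dws, 1))
```

This prints `2359296 266752 8.8`: the separable version needs roughly 8.8 times fewer weights for this layer, at the cost of some representational capacity that distillation or fine-tuning would have to recover.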

In conclusion, the improved UNet-IV image segmentation model has a significant advantage in detection accuracy over the original UNet model. It clearly extracts the trajectory of the 3D cotton cup indentation, providing a reliable basis for the subsequent segmentation work. As shown in Figure 10, the extracted 3D cotton cup indentation displays a clear trajectory, indicating that UNet-IV effectively addresses the shortcomings of the original model. This improves the accuracy and efficiency of image segmentation, which is highly significant in practical applications.
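The paper does not specify how the indentation trajectory is read off the predicted mask, so the following is only an assumed minimal approach: a foreground pixel belongs to the trajectory if any of its 4-neighbours is background (or lies outside the image). In practice a library routine such as OpenCV's `findContours` would typically be used, and the unordered pixels would still have to be ordered into a continuous tool path before cutting.

```python
def boundary_pixels(mask):
    """Return (row, col) of foreground pixels that touch the background (4-neighbourhood).

    Pixels on the image border count as boundary. The result is an unordered list of
    contour pixels; ordering them into a continuous path is a separate step.
    """
    h, w = len(mask), len(mask[0])
    edge = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c]:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if not (0 <= rr < h and 0 <= cc < w) or not mask[rr][cc]:
                    edge.append((r, c))
                    break
    return edge


# Toy 5 x 5 mask with a 3 x 3 "cup" region in the middle.
square = [[1 if 1 <= r <= 3 and 1 <= c <= 3 else 0 for c in range(5)] for r in range(5)]
edge = boundary_pixels(square)
```

For the toy mask, the eight outer pixels of the 3 × 3 region are returned and the centre pixel is not; isolated false-positive pixels, such as those seen with the baseline UNet, would each show up as spurious boundary points, which is why a clean mask matters for the cutting path.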

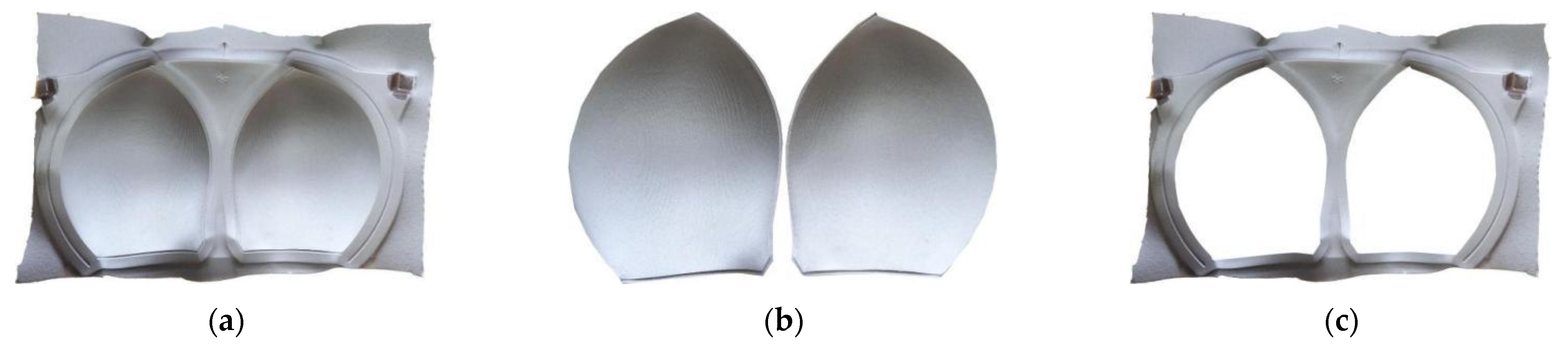

4. Segmentation and Error Analysis of the 3D Cotton Cup

After the indentation trajectory of the 3D cotton cup was extracted by the improved UNet-IV image segmentation model, it was sent in real time to a high-frequency vibration CNC cutting machine (RZCRT5-2516EF, Guangdong Ruizhou Technology Co., Ltd., Foshan, Guangdong, China) for cutting, as shown in Figure 11. A 3D cotton cup cut by the CNC cutting machine is shown in Figure 12. As shown in Figure 12b, a non-contact coordinate measuring machine (model: DuraMax HTG, Zhejiang Langtong Precision Instrument Co., Ltd., Hangzhou, China) was used to analyze the error of the 3D cotton cups after cutting.

This device collects 400 uniformly distributed data points on the cutting edge and compares them with the original theoretical design model (such as a CAD digital model). Error is defined as the deviation value of each measurement point from the theoretical model in the direction of its contour normal.
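The error definition above (deviation along the contour normal) can be illustrated with a nominal contour whose normal is easy to compute. In this sketch the theoretical contour is assumed to be a circle, for which the normal is radial, so the signed deviation is the distance to the centre minus the nominal radius (positive means outside the contour). A real CAD contour would require projecting each point onto the nearest contour segment instead. The circle parameters and points are hypothetical, and the sample standard deviation is an assumption because the paper does not state which estimator was used.

```python
import math
import statistics


def circle_normal_deviation(points, cx, cy, r):
    """Signed deviation (mm) of measured points from a nominal circular contour.

    For a circle the contour normal is radial, so the deviation along the normal is
    the distance to the centre minus the nominal radius (positive = outside).
    """
    return [math.hypot(x - cx, y - cy) - r for x, y in points]


def error_summary(deviations):
    """Mean and sample standard deviation of the per-point errors (mm)."""
    return statistics.mean(deviations), statistics.stdev(deviations)


# Two hypothetical measured points around a nominal circle of radius 50 mm.
dev = circle_normal_deviation([(50.1, 0.0), (0.0, 50.3)], 0.0, 0.0, 50.0)
mean_err, sd_err = error_summary(dev)
```

Here the two points deviate by 0.1 mm and 0.3 mm, giving a mean of 0.2 mm and a sample standard deviation of about 0.14 mm; with the 400 measured points, the same two statistics summarize the cut quality.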

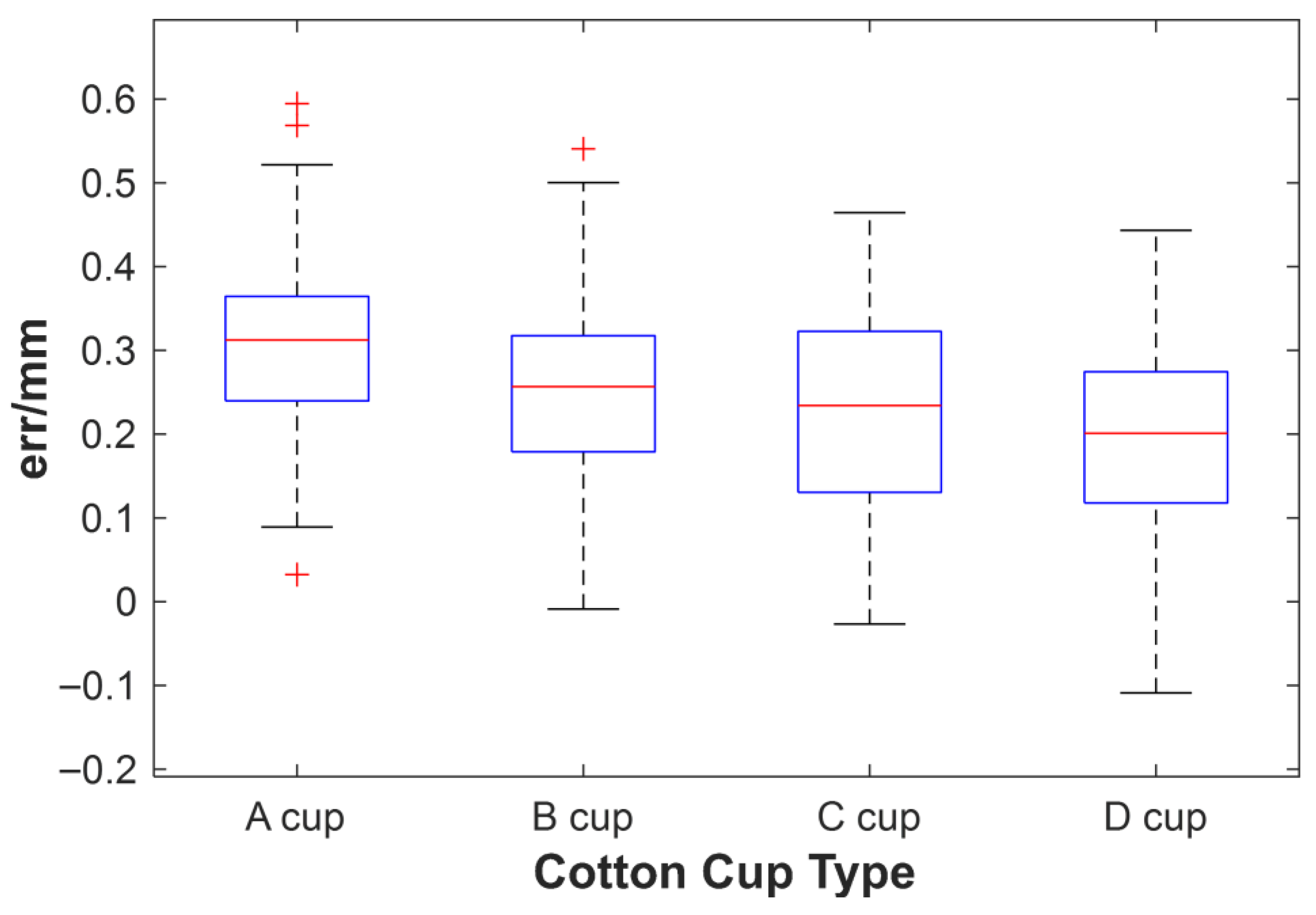

Figure 13 shows the error distribution of these 400 points. The mean error is 0.20 mm, and the standard deviation σ is 0.14 mm. According to the 3σ principle, the cutting error lies within 0.20 mm ± 0.42 mm, which meets the cutting accuracy requirements for 3D cotton cups made of flexible clothing materials.

Figure 13 shows the boxplot of the cutting results, in which the X-axis represents the cup types and the Y-axis the CNC cutting errors. The error differs slightly across cup types: smaller cups show slightly larger errors, whereas larger cups show smaller errors (e.g., A cup versus D cup). The interquartile ranges of the four cup types show that the spread of the error is essentially the same (about 0.14 mm) regardless of cup type. Although the errors of the A cup are biased upward overall, the fluctuation of its individual measurements is similar to that of the D cup. This suggests that cup type mainly affects the overall offset (mean) of the error rather than its stability (dispersion). One possible reason is that the small curved edges of smaller cups (A cup) are difficult to capture accurately and are prone to measurement bias, whereas the larger curved edges of larger cups (D cup) are easier for measurement tools (e.g., laser distance measurement or visual inspection) to measure, so the deviation is smaller. The difference may also be related to the softness of the fabric under stress: with identical fabric, smaller cups may exhibit different degrees of elongation after cutting. This paper focuses on reducing manual labor through camera detection and automated cutting, and the average error of 0.2 mm largely meets the requirements stated herein. We further expect that subsequent work could improve accuracy by exploiting the microstructural characteristics of the material and refining CNC control methods.
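The statistical reasoning in this section can be sketched in a few lines. The 3σ band uses the reported mean (0.20 mm) and σ (0.14 mm); its stated coverage of about 99.73% holds only if the errors are approximately normal, which is an assumption. The offset-versus-spread comparison uses hypothetical toy values, not the measured data: the "D" list is the "A" list shifted down by 0.10 mm, so the means differ while the interquartile ranges are identical, which is the pattern the boxplot analysis describes.

```python
import math
import statistics

MEAN_MM, SIGMA_MM = 0.20, 0.14  # values reported in the text


def three_sigma_band(mean, sigma):
    """Interval mean +/- 3 * sigma."""
    return mean - 3 * sigma, mean + 3 * sigma


def normal_coverage(k):
    """Share of a normal distribution lying within +/- k sigma of its mean."""
    return math.erf(k / math.sqrt(2))


def offset_and_spread(errors):
    """Mean (overall offset) and interquartile range (dispersion) of a group's errors."""
    q1, _, q3 = statistics.quantiles(errors, n=4)
    return statistics.mean(errors), q3 - q1


# Hypothetical toy values (mm), NOT the measured data.
TOY = {
    "A": [0.18, 0.22, 0.26, 0.30, 0.34, 0.38],
    "D": [0.08, 0.12, 0.16, 0.20, 0.24, 0.28],
}

band = three_sigma_band(MEAN_MM, SIGMA_MM)
summary = {cup: offset_and_spread(errs) for cup, errs in TOY.items()}
```

The band evaluates to about (-0.22 mm, 0.62 mm); the negative lower bound simply means some cuts may fall slightly inside the nominal contour if the errors are signed along the normal. For the toy groups, the means differ by 0.10 mm while the interquartile ranges are equal, separating a mean offset from a change in stability.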