Fire Resistance Prediction in FRP-Strengthened Structural Elements: Application of Advanced Modeling and Data Augmentation Techniques

Abstract

1. Introduction

2. Materials and Methods

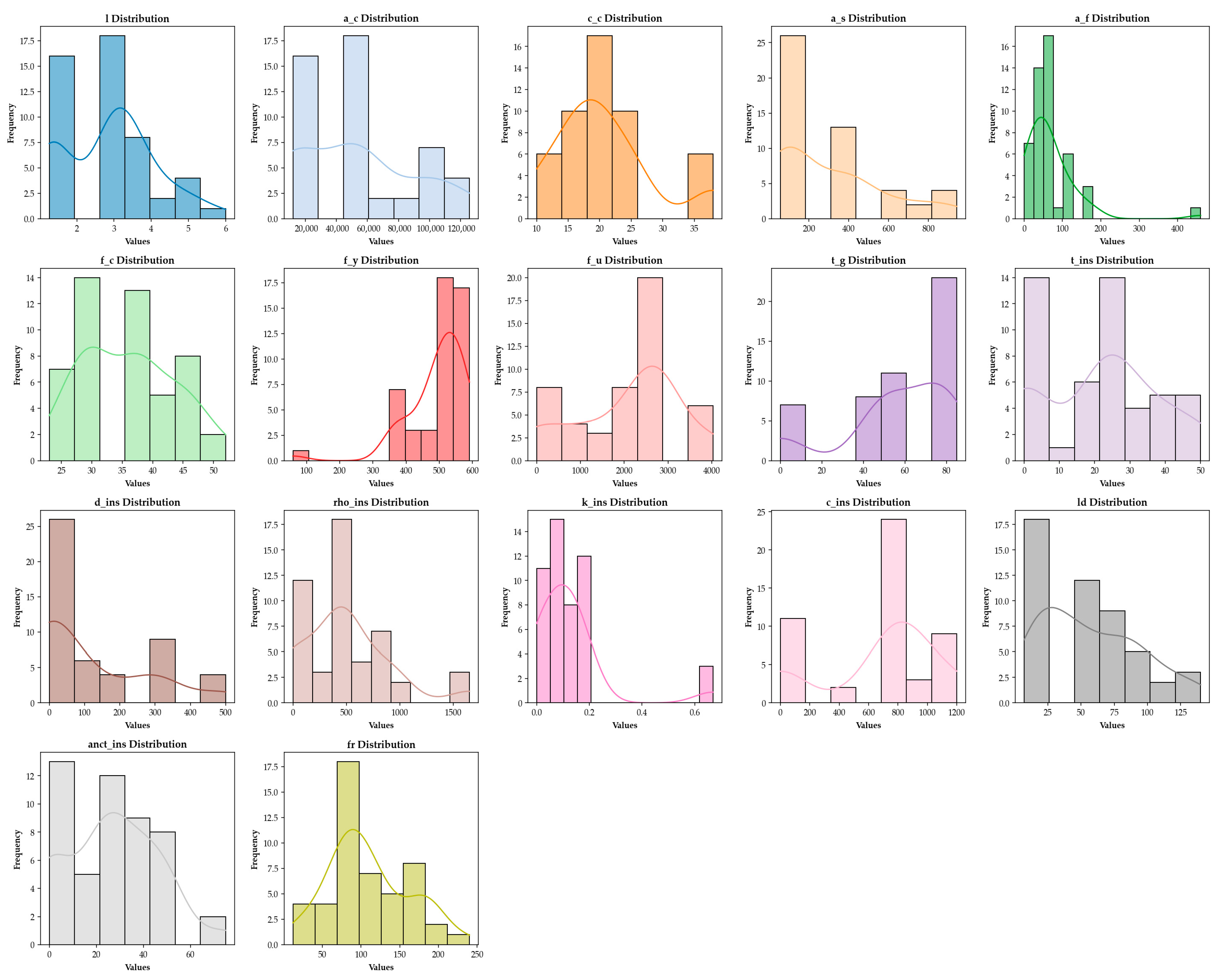

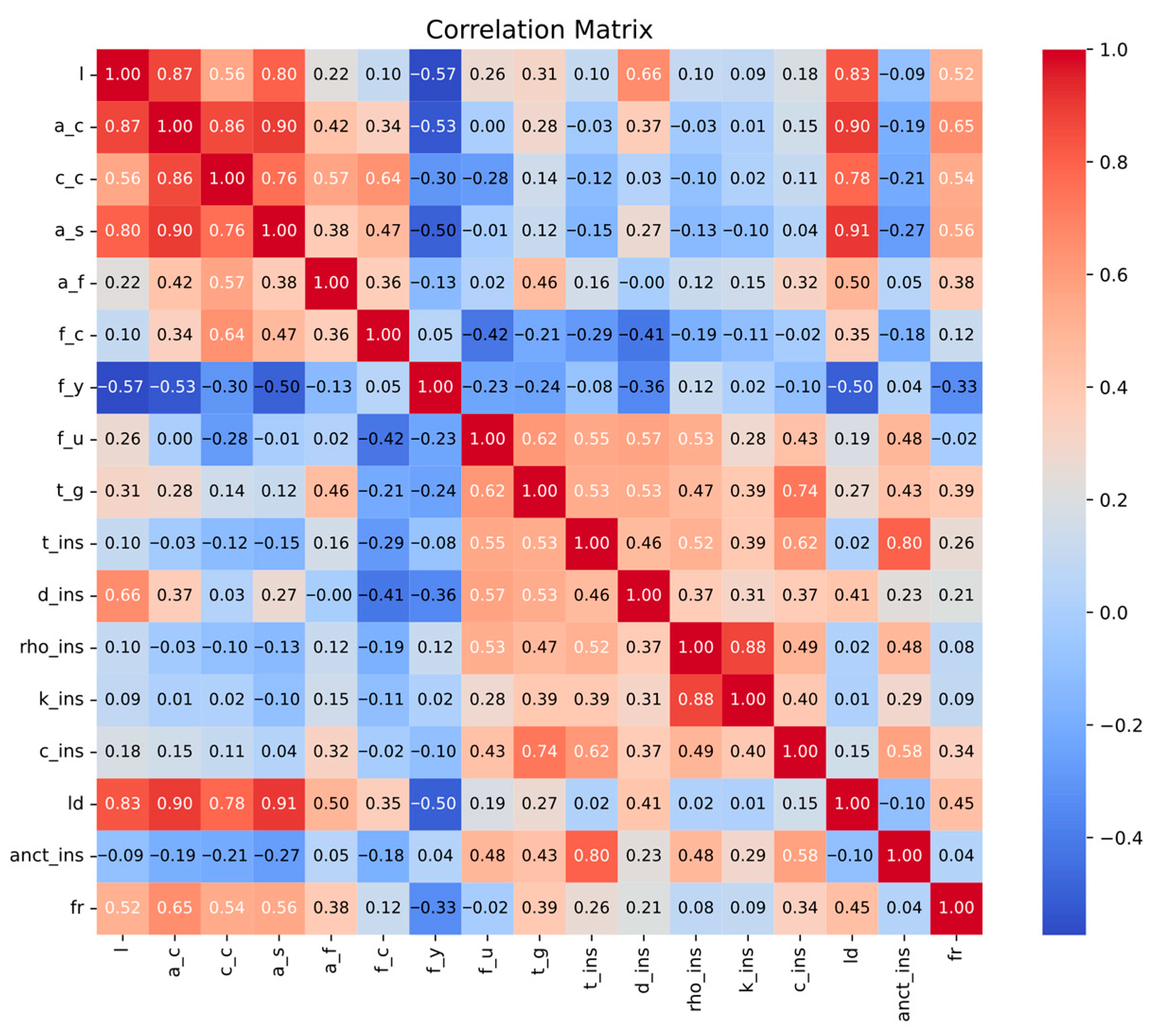

2.1. Dataset Description

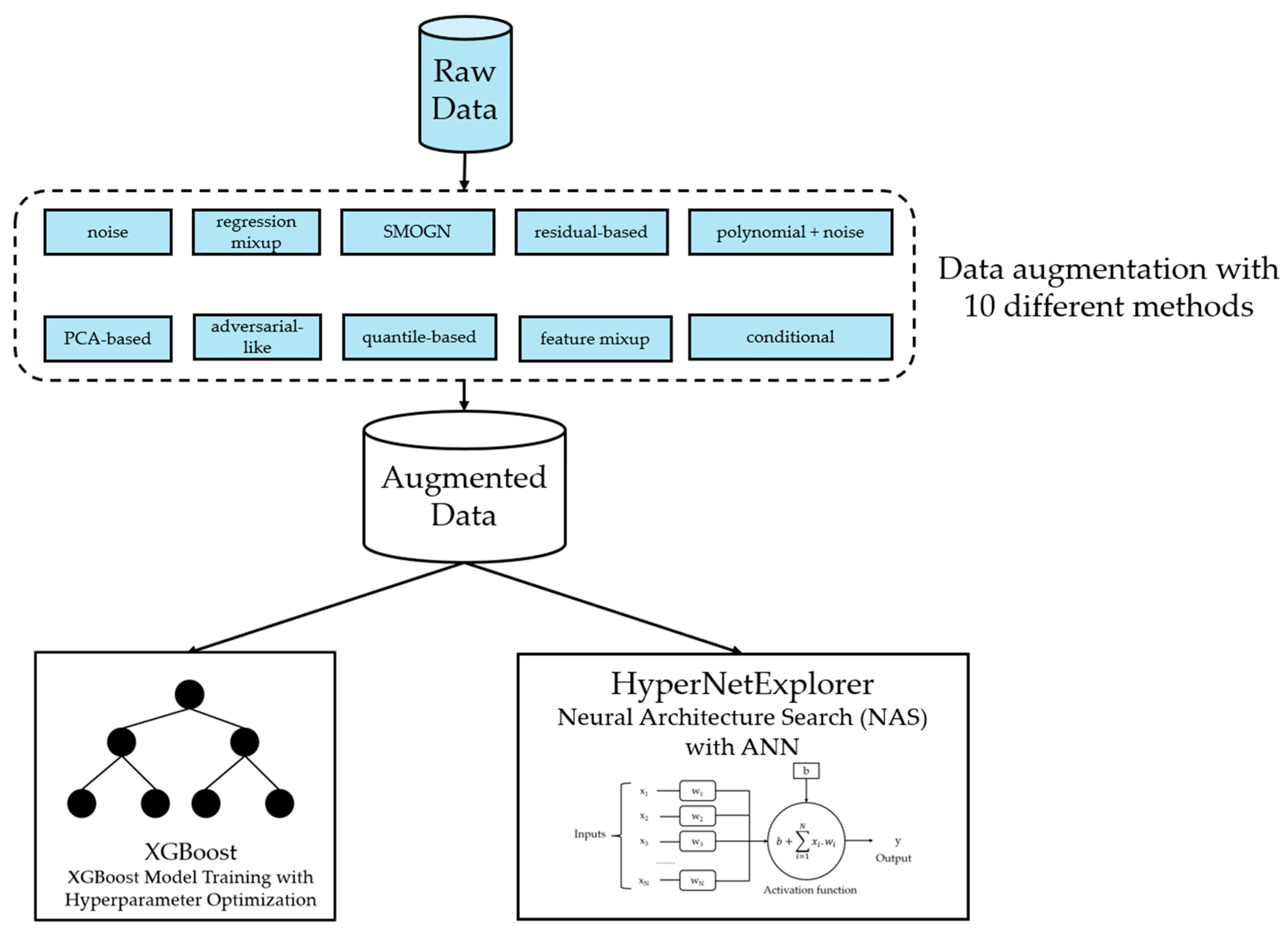

2.2. Data Augmentation Methods Implemented

2.2.1. Gaussian Noise

2.2.2. Regression Mixup

2.2.3. Synthetic Minority Over-Sampling Technique for Regression with Gaussian Noise (SMOGN)

2.2.4. Residual-Based

2.2.5. Polynomial + Noise

2.2.6. Principal Component Analysis (PCA)-Based Augmentation

2.2.7. Adversarial-like Augmentation

2.2.8. Quantile-Based Sampling

2.2.9. Feature Mixup

2.2.10. Conditional Sampling

2.3. Machine Learning

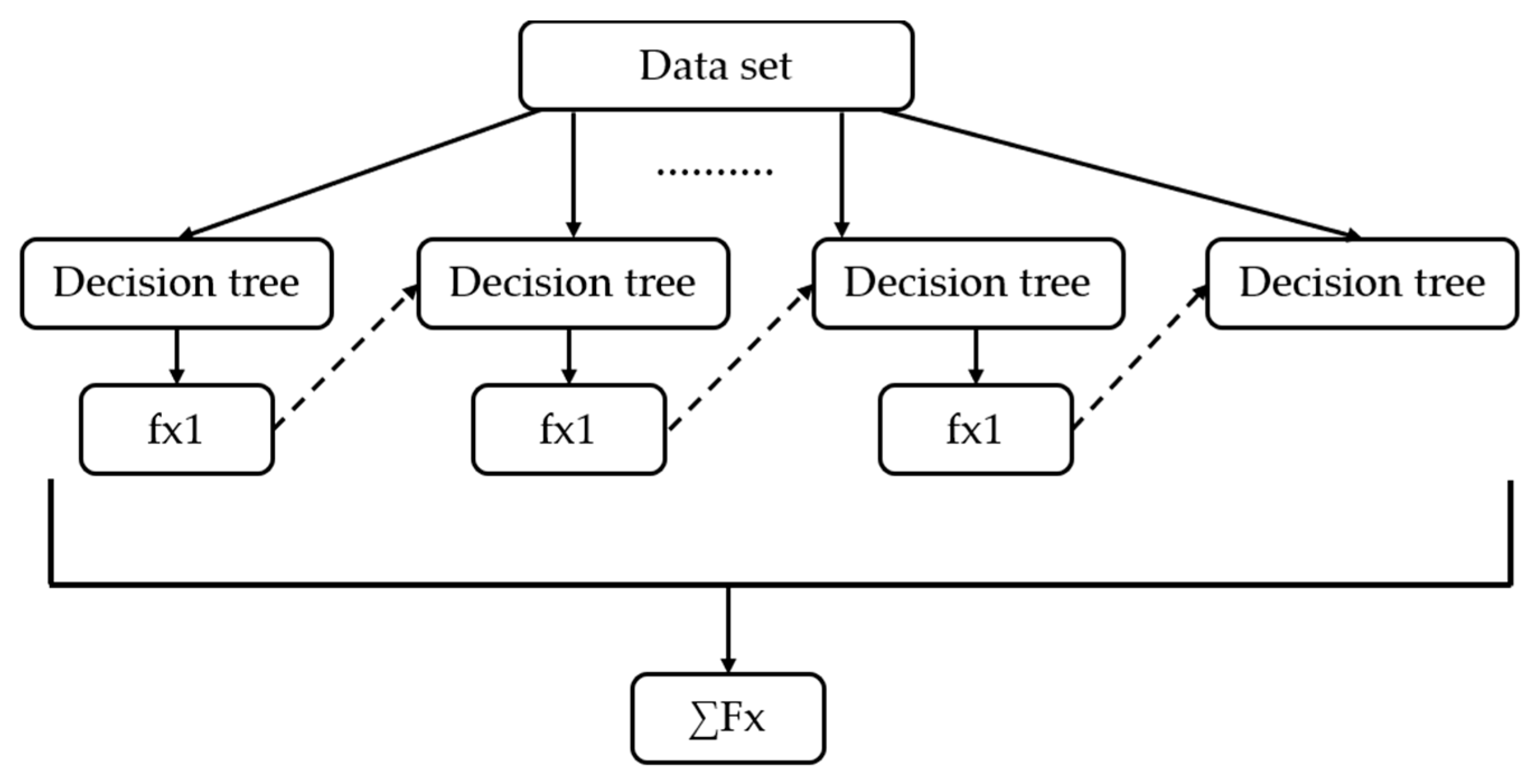

2.3.1. Extreme Gradient Boosting (XGBoost)

2.3.2. K-Fold Cross Validation
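
K-fold cross validation splits the samples into k folds and uses each fold once as the held-out test set, which matches the mean ± standard deviation format of the reported scores. The following is a minimal sketch of the index bookkeeping only. The function name, `k = 5`, and the seed are illustrative assumptions, since the fold count used in the study is not stated here.

```python
import random


def k_fold_indices(n_samples, k=5, seed=42):
    """Yield (train_indices, test_indices) pairs for k-fold cross validation.

    k and seed are illustrative defaults, not values taken from the study.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


# The raw dataset has 49 rows, so five folds hold 10, 10, 10, 10 and 9 samples.
for train_idx, test_idx in k_fold_indices(49, k=5):
    print(len(train_idx), len(test_idx))
```

Each sample appears in exactly one test fold, so every row contributes once to the test scores.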

2.4. Neural Architecture Search (NAS)

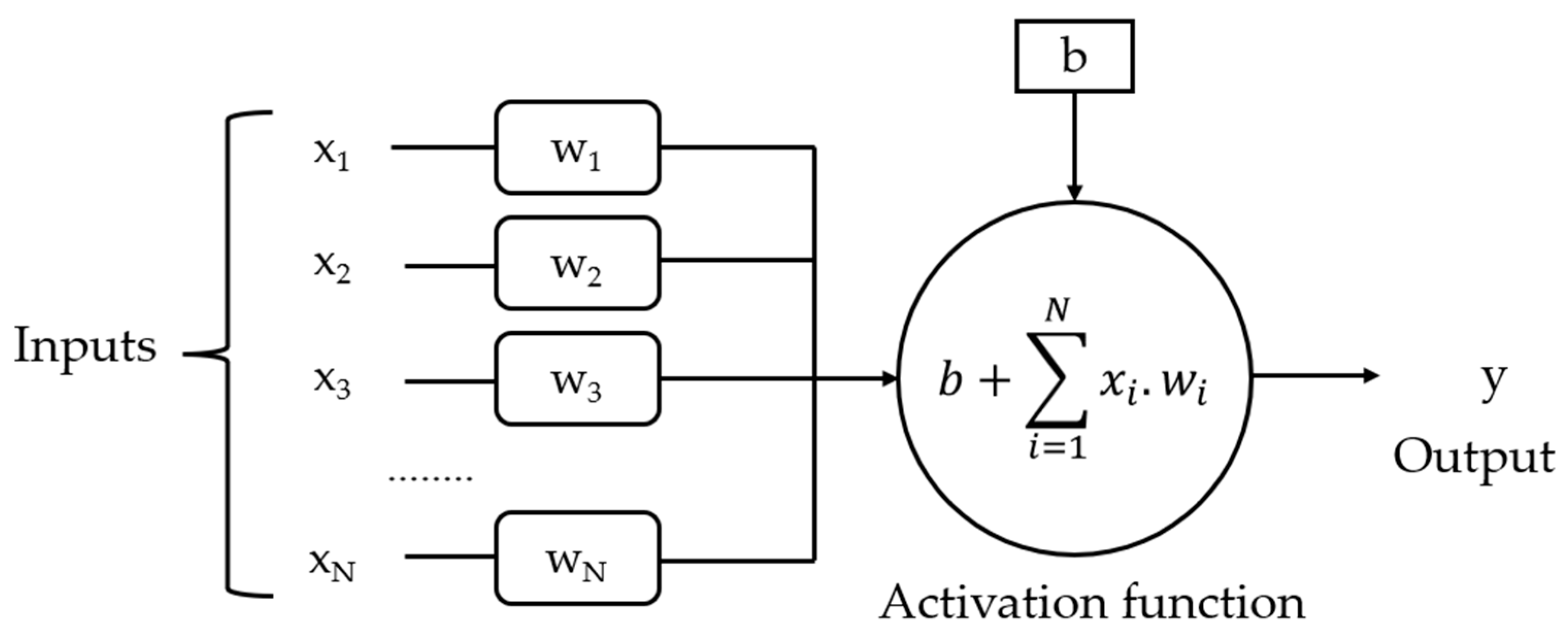

2.4.1. Artificial Neural Networks (ANNs)

2.4.2. Harmony Search (HS)

2.5. Performance Metrics

2.5.1. Root Mean Square Error (RMSE)

2.5.2. Mean Absolute Error (MAE)

2.5.3. Coefficient of Determination (R2)
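
The three metrics of Section 2.5 can be sketched from their standard definitions as below. This is a plain-Python illustration with assumed function names, not the authors' implementation. Note that R2 is not bounded below by zero: a model that predicts worse than the mean of the observations produces a negative value.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error: square root of the mean squared residual."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error: mean of the absolute residuals."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Illustrative values only: predicting the mean of the targets gives R2 = 0.
y_true = [1.0, 2.0, 3.0]
y_mean = [2.0, 2.0, 2.0]
print(rmse(y_true, y_mean), mae(y_true, y_mean), r2(y_true, y_mean))
```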

2.6. SHapley Additive exPlanations (SHAP)

3. Results

SHapley Additive exPlanations (SHAP) Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Taerwe, L. Non-Metallic (FRP) Reinforcement for Concrete Structures; CRC Press: Boca Raton, FL, USA, 2004.

- Fib. Fib Model Code for Concrete Structures 2010; Wiley: Hoboken, NJ, USA, 2013.

- Zaman, A.; Gutub, S.A.; Wafa, M.A. A review on FRP composites applications and durability concerns in the construction sector. J. Reinf. Plast. Compos. 2013, 32, 1966–1988.

- Frigione, M.; Lettieri, M. Durability Issues and Challenges for Material Advancements in FRP Employed in the Construction Industry. Polymers 2018, 10, 247.

- Alberto, M. Introduction of Fibre-Reinforced Polymers—Polymers and Composites: Concepts, Properties and Processes. In Fiber Reinforced Polymers—The Technology Applied for Concrete Repair; InTech: Toyama, Japan, 2013.

- Täljsten, B. FRP strengthening of concrete structures: New inventions and applications. Prog. Struct. Eng. Mater. 2004, 6, 162–172.

- Bhatt, P.P.; Kodur, V.K.R.; Naser, M.Z. Dataset on fire resistance analysis of FRP-strengthened concrete beams. Data Brief 2024, 52, 110031.

- Vu, D.-T.; Hoang, N.-D. Punching shear capacity estimation of FRP-reinforced concrete slabs using a hybrid machine learning approach. Struct. Infrastruct. Eng. 2016, 12, 1153–1161.

- Abuodeh, O.R.; Abdalla, J.A.; Hawileh, R.A. Prediction of shear strength and behavior of RC beams strengthened with externally bonded FRP sheets using machine learning techniques. Compos. Struct. 2020, 234, 111698.

- Basaran, B.; Kalkan, I.; Bergil, E.; Erdal, E. Estimation of the FRP-concrete bond strength with code formulations and machine learning algorithms. Compos. Struct. 2021, 268, 113972.

- Wakjira, T.G.; Al-Hamrani, A.; Ebead, U.; Alnahhal, W. Shear capacity prediction of FRP-RC beams using single and ensenble ExPlainable Machine learning models. Compos. Struct. 2022, 287, 115381.

- Shen, Y.; Sun, J.; Liang, S. Interpretable Machine Learning Models for Punching Shear Strength Estimation of FRP Reinforced Concrete Slabs. Crystals 2022, 12, 259.

- Kim, B.; Lee, D.-E.; Hu, G.; Natarajan, Y.; Preethaa, S.; Rathinakumar, A.P. Ensemble Machine Learning-Based Approach for Predicting of FRP—Concrete Interfacial Bonding. Mathematics 2022, 10, 231.

- Wang, C.; Zou, X.; Sneed, L.H.; Zhang, F.; Zheng, K.; Xu, H.; Li, G. Shear strength prediction of FRP-strengthened concrete beams using interpretable machine learning. Constr. Build. Mater. 2023, 407, 133553.

- Zhang, F.; Wang, C.; Liu, J.; Zou, X.; Sneed, L.H.; Bao, Y.; Wang, L. Prediction of FRP-concrete interfacial bond strength based on machine learning. Eng. Struct. 2023, 274, 115156.

- Khan, M.; Khan, A.; Khan, A.U.; Shakeel, M.; Khan, K.; Alabduljabbar, H.; Najeh, T.; Gamil, Y. Intelligent prediction modeling for flexural capacity of FRP-strengthened reinforced concrete beams using machine learning algorithms. Heliyon 2024, 10, e23375.

- Alizamir, M.; Gholampour, A.; Kim, S.; Keshtegar, B.; Jung, W. Designing a reliable machine learning system for accurately estimating the ultimate condition of FRP-confined concrete. Sci. Rep. 2024, 14, 20466.

- Ali, L.; Isleem, H.F.; Bahrami, A.; Jha, I.; Zou, G.; Kumar, R.; Sadeq, A.M.; Jahami, A. Integrated behavioural analysis of FRP-confined circular columns using FEM and machine learning. Compos. Part C Open Access 2024, 13, 100444.

- Bhatt, P.P.; Sharma, N.; Kodur, V.K.R.; Naser, M.Z. Machine learning approach for predicting fire resistance of FRP-strengthened concrete beams. Struct. Concr. 2024, 26, 4143–4165.

- Kumarawadu, H.; Weerasinghe, P.; Perera, J.S. Evaluating the Performance of Ensemble Machine Learning Algorithms Over Traditional Machine Learning Algorithms for Predicting Fire Resistance in FRP Strengthened Concrete Beams. Electron. J. Struct. Eng. 2024, 24, 47–53.

- Habib, A.; Barakat, S.; Al-Toubat, S.; Junaid, M.T.; Maalej, M. Developing Machine Learning Models for Identifying the Failure Potential of Fire-Exposed FRP-Strengthened Concrete Beams. Arab. J. Sci. Eng. 2025, 50, 8475–8490.

- Wang, S.; Fu, Y.; Ban, S.; Duan, Z.; Su, J. Genetic evolutionary deep learning for fire resistance analysis in fibre-reinforced polymers strengthened reinforced concrete beams. Eng. Fail. Anal. 2025, 169, 109149.

- Moreno-Barea, F.J.; Jerez, J.M.; Franco, L. Improving classification accuracy using data augmentation on small data sets. Expert Syst. Appl. 2020, 161, 113696.

- Frid-Adar, M.; Klang, E.; Amitai, M.; Goldberger, J.; Greenspan, H. Synthetic data augmentation using GAN for improved liver lesion classification. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 289–293.

- Zhou, Y.; Guo, C.; Wang, X.; Chang, Y.; Wu, Y. A Survey on Data Augmentation in Large Model Era. arXiv 2024, arXiv:2401.15422.

- Ye, Y.; Li, Y.; Ouyang, R.; Zhang, Z.; Tang, Y.; Bai, S. Improving machine learning based phase and hardness prediction of high-entropy alloys by using Gaussian noise augmented data. Comput. Mater. Sci. 2023, 223, 112140.

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

- Zhang, Z.; Wang, H.; Geng, J.; Deng, X.; Jiang, W. A New Data Augmentation Method Based on Mixup and Dempster-Shafer Theory. IEEE Trans. Multimed. 2024, 26, 4998–5013.

- Cesmeli, M.S. Increasing the prediction efficacy of the thermodynamic properties of R515B refrigerant with machine learning algorithms using SMOGN data augmentation method. Int. J. Refrig. 2025, 179, 44–59.

- Branco, P.; Torgo, L.; Ribeiro, R.P. SMOGN: A Pre-Processing Approach for Imbalanced Regression. Available online: https://www.researchgate.net/publication/319906917 (accessed on 25 August 2025).

- Innocenti, S.; Matte, P.; Fortin, V.; Bernier, N. Analytical and Residual Bootstrap Methods for Parameter Uncertainty Assessment in Tidal Analysis with Temporally Correlated Noise. J. Atmos. Ocean Technol. 2022, 39, 1457–1481.

- Friedrich, M.; Lin, Y. Sieve bootstrap inference for linear time-varying coefficient models. J. Econom. 2024, 239, 105345.

- Huang, S.-G.; Chung, M.K.; Qiu, A. Fast mesh data augmentation via Chebyshev polynomial of spectral filtering. Neural Netw. 2021, 143, 198–208.

- Sirakov, N.M.; Shahnewaz, T.; Nakhmani, A. Training Data Augmentation with Data Distilled by Principal Component Analysis. Electronics 2024, 13, 282.

- Cho, S.; Hong, S.; Jeon, J.-J. Adaptive adversarial augmentation for molecular property prediction. Expert Syst. Appl. 2025, 270, 126512.

- Wang, H.; Ma, Y. Optimal subsampling for quantile regression in big data. Biometrika 2021, 108, 99–112.

- Cao, C.; Zhou, F.; Dai, Y.; Wang, J.; Zhang, K. A Survey of Mix-based Data Augmentation: Taxonomy, Methods, Applications, and Explainability. ACM Comput. Surv. 2024, 57, 1–38.

- Majeed, A.; Hwang, S.O. CTGAN-MOS: Conditional Generative Adversarial Network Based Minority-Class-Augmented Oversampling Scheme for Imbalanced Problems. IEEE Access 2023, 11, 85878–85899.

- Hu, T.; Tang, T.; Chen, M. Data Simulation by Resampling—A Practical Data Augmentation Algorithm for Periodical Signal Analysis-Based Fault Diagnosis. IEEE Access 2019, 7, 125133–125145.

- Binson, V.A.; Thomas, S.; Subramoniam, M.; Arun, J.; Naveen, S.; Madhu, S. A Review of Machine Learning Algorithms for Biomedical Applications. Ann. Biomed. Eng. 2024, 52, 1159–1183.

- Jahanshahi, H.; Zhu, Z.H. Review of machine learning in robotic grasping control in space application. Acta Astronaut. 2024, 220, 37–61.

- Aydin, Y.; Bekdaş, G.; Işıkdağ, Ü.; Nigdeli, S.M. The State of Art in Machine Learning Applications in Civil Engineering. In Hybrid Metaheuristics in Structural Engineering; Springer: Berlin/Heidelberg, Germany, 2023; pp. 147–177.

- Vashishtha, G.; Chauhan, S.; Sehri, M.; Zimroz, R.; Dumond, P.; Kumar, R.; Gupta, M.K. A roadmap to fault diagnosis of industrial machines via machine learning: A brief review. Measurement 2025, 242, 116216.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Müller, A.; Nothman, J.; Louppe, G.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Chollet, F. Keras. Available online: https://github.com/fchollet/keras (accessed on 25 January 2025).

- McKinney, W. Data Structures for Statistical Computing in Python. Scipy 2010, 445, 56–61.

- Cherif, I.L.; Kortebi, A. On using eXtreme Gradient Boosting (XGBoost) Machine Learning algorithm for Home Network Traffic Classification. In Proceedings of the 2019 Wireless Days (WD), Manchester, UK, 24–26 April 2019; pp. 1–6.

- Pande, C.B.; Egbueri, J.C.; Costache, R.; Sidek, L.M.; Wang, Q.; Alshehri, F.; Din, N.M.; Gautam, V.K.; Pal, S.C. Predictive modeling of land surface temperature (LST) based on Landsat-8 satellite data and machine learning models for sustainable development. J. Clean. Prod. 2024, 444, 141035.

- Mitchell, R.; Adinets, A.; Rao, T.; Frank, E. Xgboost: Scalable gpu accelerated learning. arXiv 2018, arXiv:1806.11248.

- Peng, B.; Qiu, J.; Chen, L.; Li, J.; Jiang, M.; Akkas, S.; Smirnov, E.; Israfilov, R.; Khekhnev, S.; Nikolaev, A. HarpGBDT: Optimizing Gradient Boosting Decision Tree for Parallel Efficiency. In Proceedings of the 2019 IEEE International Conference on Cluster Computing (CLUSTER), Albuquerque, NM, USA, 23–26 September 2019; pp. 1–11.

- Chen, T.; Guestrin, C. XGBoost. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 13–17 August 2016; pp. 785–794.

- Mo, H.; Sun, H.; Liu, J.; Wei, S. Developing window behavior models for residential buildings using XGBoost algorithm. Energy Build. 2019, 205, 109564.

- el Mahdi Safhi, A.; Dabiri, H.; Soliman, A.; Khayat, K.H. Prediction of self-consolidating concrete properties using XGBoost machine learning algorithm: Rheological properties. Powder Technol. 2024, 438, 119623.

- Fatahi, R.; Abdollahi, H.; Noaparast, M.; Hadizadeh, M. Modeling the working pressure of a cement vertical roller mill using SHAP-XGBoost: A ‘conscious lab of grinding principle’ approach. Powder Technol. 2025, 457, 120923.

- Xun, Z.; Altalbawy, F.M.A.; Kanjariya, P.; Manjunatha, R.; Shit, D.; Nirmala, M.; Sharma, A.; Hota, S.; Shomurotova, S.; Sead, F.F.; et al. Developing a cost-effective tool for choke flow rate prediction in sub-critical oil wells using wellhead data. Sci. Rep. 2025, 15, 25825.

- Mealpy. Available online: https://github.com/thieu1995/mealpy (accessed on 2 December 2024).

- McCulloch, W.S.; Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 1943, 5, 115–133.

- Uzair, M.; Jamil, N. Effects of Hidden Layers on the Efficiency of Neural networks. In Proceedings of the 2020 IEEE 23rd International Multitopic Conference (INMIC), Bahawalpur, Pakistan, 5–7 November 2020; pp. 1–6.

- Cardenas, L.L.; Mezher, A.M.; Bautista, P.A.B.; Leon, J.P.A.; Igartua, M.A. A Multimetric Predictive ANN-Based Routing Protocol for Vehicular Ad Hoc Networks. IEEE Access 2021, 9, 86037–86053.

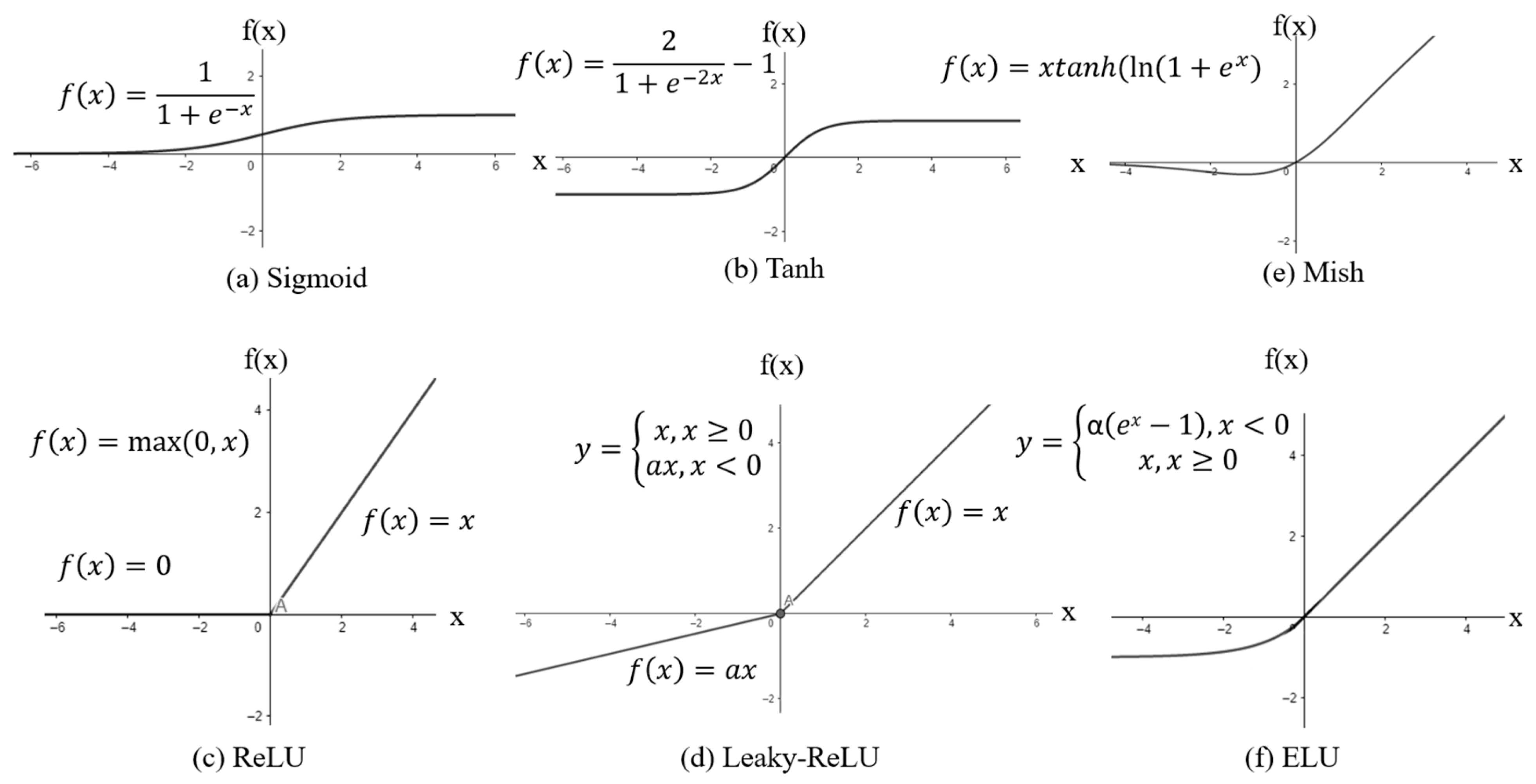

- Wang, Y.; Li, Y.; Song, Y.; Rong, X. The Influence of the Activation Function in a Convolution Neural Network Model of Facial Expression Recognition. Appl. Sci. 2020, 10, 1897.

- Madhu, G.; Kautish, S.; Alnowibet, K.A.; Zawbaa, H.M.; Mohamed, A.W. NIPUNA: A Novel Optimizer Activation Function for Deep Neural Networks. Axioms 2023, 12, 246.

- Banerjee, C.; Mukherjee, T.; Pasiliao, E. Feature representations using the reflected rectified linear unit (RReLU) activation. Big Data Min. Anal. 2020, 3, 102–120.

- Zhang, M.; Vassiliadis, S.; Delgado-Frias, J.G. Sigmoid generators for neural computing using piecewise approximations. IEEE Trans. Comput. 1996, 45, 1045–1049.

- Shen, S.-L.; Zhang, N.; Zhou, A.; Yin, Z.-Y. Enhancement of neural networks with an alternative activation function tanhLU. Expert Syst. Appl. 2022, 199, 117181.

- Kim, D.; Kim, J.; Kim, J. Elastic exponential linear units for convolutional neural networks. Neurocomputing 2020, 406, 253–266.

- Wang, X.; Ren, H.; Wang, A. Smish: A Novel Activation Function for Deep Learning Methods. Electronics 2022, 11, 540.

- Geem, Z.W.; Kim, J.H.; Loganathan, G.V. A New Heuristic Optimization Algorithm: Harmony Search. Simulation 2001, 76, 60–68.

- Chai, T.; Draxler, R.R. Root mean square error (RMSE) or mean absolute error (MAE)?–Arguments against avoiding RMSE in the literature. Geosci. Model. Dev. 2014, 7, 1247–1250.

- Zhou, W.; Yan, Z.; Zhang, L. A comparative study of 11 non-linear regression models highlighting autoencoder, DBN, and SVR, enhanced by SHAP importance analysis in soybean branching prediction. Sci. Rep. 2024, 14, 5905.

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Advances in Neural Information Processing Systems. arXiv 2017, arXiv:1705.07874.

- Ahmed, U.; Jiangbin, Z.; Almogren, A.; Sadiq, M.; Rehman, A.U.; Sadiq, M.T.; Choi, J. Hybrid bagging and boosting with SHAP based feature selection for enhanced predictive modeling in intrusion detection systems. Sci. Rep. 2024, 14, 30532.

- Rafi, M.M.; Nadjai, A.; Ali, F. Fire resistance of carbon FRP reinforced-concrete beams. Mag. Concr. Res. 2007, 59, 245–255.

- Ahmed, A.; Kodur, V. The experimental behavior of FRP-strengthened RC beams subjected to design fire exposure. Eng. Struct. 2011, 33, 2201–2211.

| Material Type | Tensile Strength (MPa) | Young’s Modulus (GPa) | Elongation (%) |
|---|---|---|---|
| CFRP | 600–3920 | 37–784 | 0.5–1.8 |
| GFRP | 483–4580 | 35–86 | 1.2–5.0 |
| AFRP | 1720–3620 | 41–175 | 1.4–4.4 |
| BFRP | 600–1500 | 50–65 | 1.2–2.6 |
| Steel | 483–960 | 200 | 6.0–12.0 |

| L | Ac | Cc | As | Af | fc | fy | fu | Tg | tins | hi | ρins | kins | cins | Ld | anctins | FR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | 60,000 | 25 | 402.1 | 0 | 47.6 | 591 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 61.2 | 0 | 90 |
| 3 | 60,000 | 25 | 402.1 | 0 | 45.5 | 591 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 61.2 | 0 | 90 |
| 3 | 60,000 | 25 | 402.1 | 120 | 44.4 | 591 | 2800 | 52 | 25 | 0 | 870 | 0.175 | 840 | 81.2 | 25 | 76 |
| 3 | 60,000 | 25 | 402.1 | 120 | 47.4 | 591 | 2800 | 52 | 40 | 80 | 870 | 0.175 | 840 | 81.2 | 40 | 90 |
| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |
| 3.66 | 125,730 | 38 | 603.2 | 102 | 43 | 460 | 1172 | 82 | 32 | 152 | 425 | 0.156 | 1200 | 97 | 32 | 240 |

| Variables | Data Type | Minimum | Maximum | Mean | Std. Deviation |
|---|---|---|---|---|---|
| L | float64 | 1.26 | 6 | 2.8481 | 1.2468 |
| Ac | int64 | 12,000 | 125,730 | 53,729.9591 | 37,635.8686 |
| Cc | int64 | 10 | 38 | 20.7346 | 7.9445 |
| As | float64 | 56.55 | 942.5 | 308.1 | 269.6602 |
| Af | float64 | 0 | 460 | 67.1295 | 73.2606 |
| fc | float64 | 23 | 52 | 36.0183 | 7.6697 |
| fy | int64 | 59 | 591 | 493.4489 | 93.2784 |
| fu | int64 | 0 | 4030 | 2106.3673 | 1215.0146 |
| Tg | int64 | 0 | 85 | 55.9795 | 26.4610 |
| tins | float64 | 0 | 50 | 21.7653 | 16.5583 |
| hi | int64 | 0 | 500 | 119.0408 | 159.7404 |
| ρins | int64 | 0 | 1650 | 497.4081 | 422.3450 |
| kins | float64 | 0 | 0.67 | 0.1243 | 0.1532 |
| cins | int64 | 0 | 1200 | 672.4285 | 398.1558 |
| Ld | float64 | 7.2 | 140 | 57.5122 | 36.9931 |
| anctins | float64 | 0 | 75 | 26.7653 | 20.0756 |
| FR | int64 | 12 | 240 | 109.2448 | 52.5886 |

| Data Augmentation Techniques | Parameters |
|---|---|
| Gaussian Noise | noise_factor = 0.03, augment_ratio = 1.0 |
| Regression Mixup | alpha = 0.4, augment_ratio = 1.0 |
| SMOGN | augment_ratio = 1.0 |
| Residual-based | augment_ratio = 1.0 |
| Polynomial + Noise | degree = 2, noise_factor = 0.02, augment_ratio = 1.0 |
| PCA-based | n_components_ratio = 0.8, noise_factor = 0.05, augment_ratio = 1.0 |
| Adversarial-like | epsilon = 0.01, augment_ratio = 1.0 |
| Quantile-based Sampling | n_quantiles = 5, augment_ratio = 1.0 |
| Feature Mixup | mix_probability = 0.5, augment_ratio = 1.0 |
| Conditional Sampling | augment_ratio = 1.0 |
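
To illustrate how the listed parameters might act, the sketch below implements two of the techniques, Gaussian noise and regression mixup, in plain Python. It is a hedged illustration rather than the authors' code. Scaling the noise by each feature's standard deviation, leaving the target unperturbed under Gaussian noise, and drawing the mixing weight from a Beta(alpha, alpha) distribution are assumptions. The function names and the seed are hypothetical.

```python
import random
import statistics


def gaussian_noise_augment(X, y, noise_factor=0.03, augment_ratio=1.0, seed=42):
    """Append noisy copies of randomly chosen rows (assumed noise model)."""
    rng = random.Random(seed)
    n_new = int(len(X) * augment_ratio)
    # Assumption: noise scale = noise_factor * per-feature standard deviation.
    col_std = [statistics.pstdev([row[j] for row in X]) for j in range(len(X[0]))]
    X_new, y_new = [], []
    for _ in range(n_new):
        i = rng.randrange(len(X))
        X_new.append([v + rng.gauss(0.0, noise_factor * s) for v, s in zip(X[i], col_std)])
        y_new.append(y[i])  # assumption: the target is copied unchanged
    return X + X_new, y + y_new


def regression_mixup(X, y, alpha=0.4, augment_ratio=1.0, seed=42):
    """Append convex combinations of random sample pairs (features and target)."""
    rng = random.Random(seed)
    n_new = int(len(X) * augment_ratio)
    X_new, y_new = [], []
    for _ in range(n_new):
        i, j = rng.randrange(len(X)), rng.randrange(len(X))
        lam = rng.betavariate(alpha, alpha)  # mixing weight in [0, 1]
        X_new.append([lam * a + (1.0 - lam) * b for a, b in zip(X[i], X[j])])
        y_new.append(lam * y[i] + (1.0 - lam) * y[j])
    return X + X_new, y + y_new


# Toy input: two features (L, fc) and the FR target from four dataset rows.
X = [[3.0, 47.6], [3.0, 45.5], [3.0, 44.4], [3.66, 43.0]]
y = [90.0, 90.0, 76.0, 240.0]
X_aug, y_aug = gaussian_noise_augment(X, y)
print(len(X), "->", len(X_aug))
```

With `augment_ratio = 1.0` the number of rows doubles, which agrees with the growth from 49 to 98 rows reported for most techniques.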

| Parameters | Possible Values |
|---|---|
| `n_estimators` | [100, 800] |
| `max_depth` | [3, 10] |
| `learning_rate` | [0.01, 0.3] |
| `subsample` | [0.7, 1.0] |
| `colsample_bytree` | [0.7, 1.0] |
| `reg_alpha` | [1 × 10⁻⁸, 10.0] |
| `reg_lambda` | [1 × 10⁻⁸, 10.0] |
| `min_child_weight` | [1, 7] |
| `random_state` | [42] |
| `n_jobs` | [−1] |
| `verbosity` | [0] |
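
The search space above can be written down as a small configuration with a sampler, as sketched below. The tuning procedure is not stated here, so several choices are assumptions: treating each two-element entry as a [lower, upper] range, drawing the two regularization terms on a log scale, and sampling at random. `sample_params` is a hypothetical helper. The keys are the usual XGBoost scikit-learn parameter names, so a sampled dictionary could be passed on as `xgboost.XGBRegressor(**params)`.

```python
import math
import random

# (kind, lower, upper) for each tuned hyperparameter, taken from the table.
SEARCH_SPACE = {
    "n_estimators": ("int", 100, 800),
    "max_depth": ("int", 3, 10),
    "learning_rate": ("float", 0.01, 0.3),
    "subsample": ("float", 0.7, 1.0),
    "colsample_bytree": ("float", 0.7, 1.0),
    "reg_alpha": ("log", 1e-8, 10.0),   # assumption: log-uniform sampling
    "reg_lambda": ("log", 1e-8, 10.0),  # assumption: log-uniform sampling
    "min_child_weight": ("int", 1, 7),
}

# Single-valued entries of the table are held fixed.
FIXED = {"random_state": 42, "n_jobs": -1, "verbosity": 0}


def sample_params(rng):
    """Draw one hyperparameter configuration from SEARCH_SPACE."""
    params = dict(FIXED)
    for name, (kind, low, high) in SEARCH_SPACE.items():
        if kind == "int":
            params[name] = rng.randint(low, high)  # inclusive bounds
        elif kind == "log":
            params[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
        else:
            params[name] = rng.uniform(low, high)
    return params


print(sample_params(random.Random(0)))
```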

| Parameters | Range | Descriptions |
|---|---|---|
| Number of Hidden Layers (hl) | 0–2 | One hidden layer (0), Two hidden layers (1), Three hidden layers (2) |
| Number of Neurons in hl = 1 | 0–6 | 8 (0), 16 (1), 32 (2), 64 (3), 128 (4), 256 (5), 512 (6) |
| Number of Neurons in hl = 2 | 0–6 | 8 (0), 16 (1), 32 (2), 64 (3), 128 (4), 256 (5), 512 (6) |
| Number of Neurons in hl = 3 | 0–6 | 8 (0), 16 (1), 32 (2), 64 (3), 128 (4), 256 (5), 512 (6) |
| Activation Function of hl = 1 | 0–6 | LeakyReLU (0), Sigmoid (1), Tanh (2), ReLU (3), LogSigmoid (4), ELU (5), Mish (6) |
| Activation Function of hl = 2 | 0–6 | LeakyReLU (0), Sigmoid (1), Tanh (2), ReLU (3), LogSigmoid (4), ELU (5), Mish (6) |
| Activation Function of hl = 3 | 0–6 | LeakyReLU (0), Sigmoid (1), Tanh (2), ReLU (3), LogSigmoid (4), ELU (5), Mish (6) |
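
A search algorithm such as Harmony Search works on a vector of integer codes, so each candidate has to be decoded into a concrete network before it can be trained. The sketch below shows one possible decoding of the encoding in the table. The vector layout (layer-count code, then three neuron codes, then three activation codes), the mapping of layer-count codes 0–2 to one to three layers, and the function name are assumptions made for illustration.

```python
NEURON_CHOICES = [8, 16, 32, 64, 128, 256, 512]
ACTIVATION_CHOICES = ["LeakyReLU", "Sigmoid", "Tanh", "ReLU", "LogSigmoid", "ELU", "Mish"]


def decode_architecture(vector):
    """Decode [layers, n1, n2, n3, a1, a2, a3] into (neurons, activation) pairs.

    The layout of the vector is an assumption, not the authors' encoding.
    """
    if len(vector) != 7:
        raise ValueError("expected 7 integer codes")
    n_layers = int(vector[0]) + 1  # assumed: codes 0..2 mean 1..3 hidden layers
    if not 1 <= n_layers <= 3:
        raise ValueError("layer-count code must be in 0..2")
    layers = []
    for k in range(n_layers):
        neurons = NEURON_CHOICES[int(vector[1 + k])]
        activation = ACTIVATION_CHOICES[int(vector[4 + k])]
        layers.append((neurons, activation))
    return layers


# Codes for unused layers (here the third) are simply ignored.
print(decode_architecture([1, 6, 3, 0, 6, 2, 4]))
# [(512, 'Mish'), (64, 'Tanh')]
```

Decoding every candidate this way keeps the search space purely discrete, at the cost of carrying unused codes whenever fewer than three layers are selected.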

| Augmented with… | Data Size (Rows) | Set | RMSE | MAE | R2 |

|---|---|---|---|---|---|

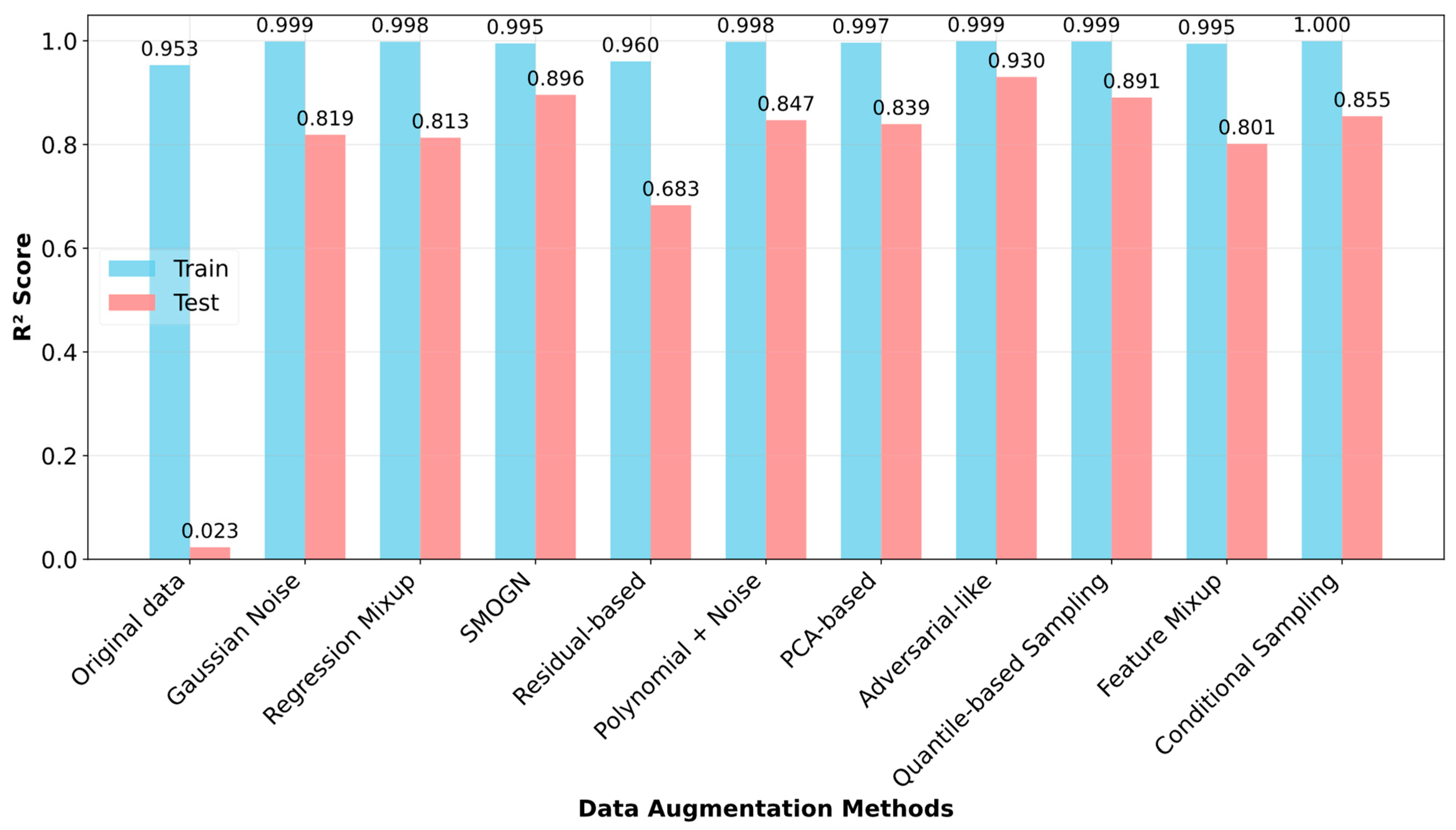

| No method (Raw dataset) | 49 | Train | 11.2113 ± 0.6845 | 8.0856 ± 0.4525 | 0.9532 ± 0.0054 |

| Test | 24.3087 ± 10.8885 | 19.1765 ± 7.9829 | 0.0232 ± 1.8417 | ||

| Gaussian Noise | 98 | Train | 1.7137 ± 0.4720 | 0.4663 ± 0.0918 | 0.9988 ± 0.0004 |

| Test | 16.2242 ± 4.0253 | 11.8361 ± 3.0808 | 0.8188 ± 0.2375 | ||

| Regression Mixup | 98 | Train | 1.9247 ± 0.4376 | 0.7500 ± 0.1315 | 0.9984 ± 0.0006 |

| Test | 17.8341 ± 6.4137 | 13.9886 ± 5.2002 | 0.8132 ± 0.1305 | ||

| SMOGN | 98 | Train | 4.0179 ± 0.3732 | 2.4713 ± 0.1769 | 0.9951 ± 0.0009 |

| Test | 14.9099 ± 6.7043 | 11.1190 ± 4.5070 | 0.8958 ± 0.1134 | ||

| Residual-based | 98 | Train | 9.5594 ± 0.3064 | 6.8184 ± 0.3100 | 0.9604 ± 0.0030 |

| Test | 23.1780 ± 6.068140 | 18.3552 ± 4.6631 | 0.6829 ± 0.1931 | ||

| Polynomial + Noise | 98 | Train | 1.9338 ± 0.3744 | 0.9237 ± 0.1294 | 0.9982 ± 0.0007 |

| Test | 15.5514 ± 7.5624 | 11.9105 ± 4.7363 | 0.8470 ± 0.0859 | ||

| PCA-based | 98 | Train | 3.0361 ± 0.3436 | 1.7367 ± 0.2097 | 0.9965 ± 0.0007 |

| Test | 16.0546± 6.8635 | 12.7819 ± 5.3441 | 0.8394 ± 0.1961 | ||

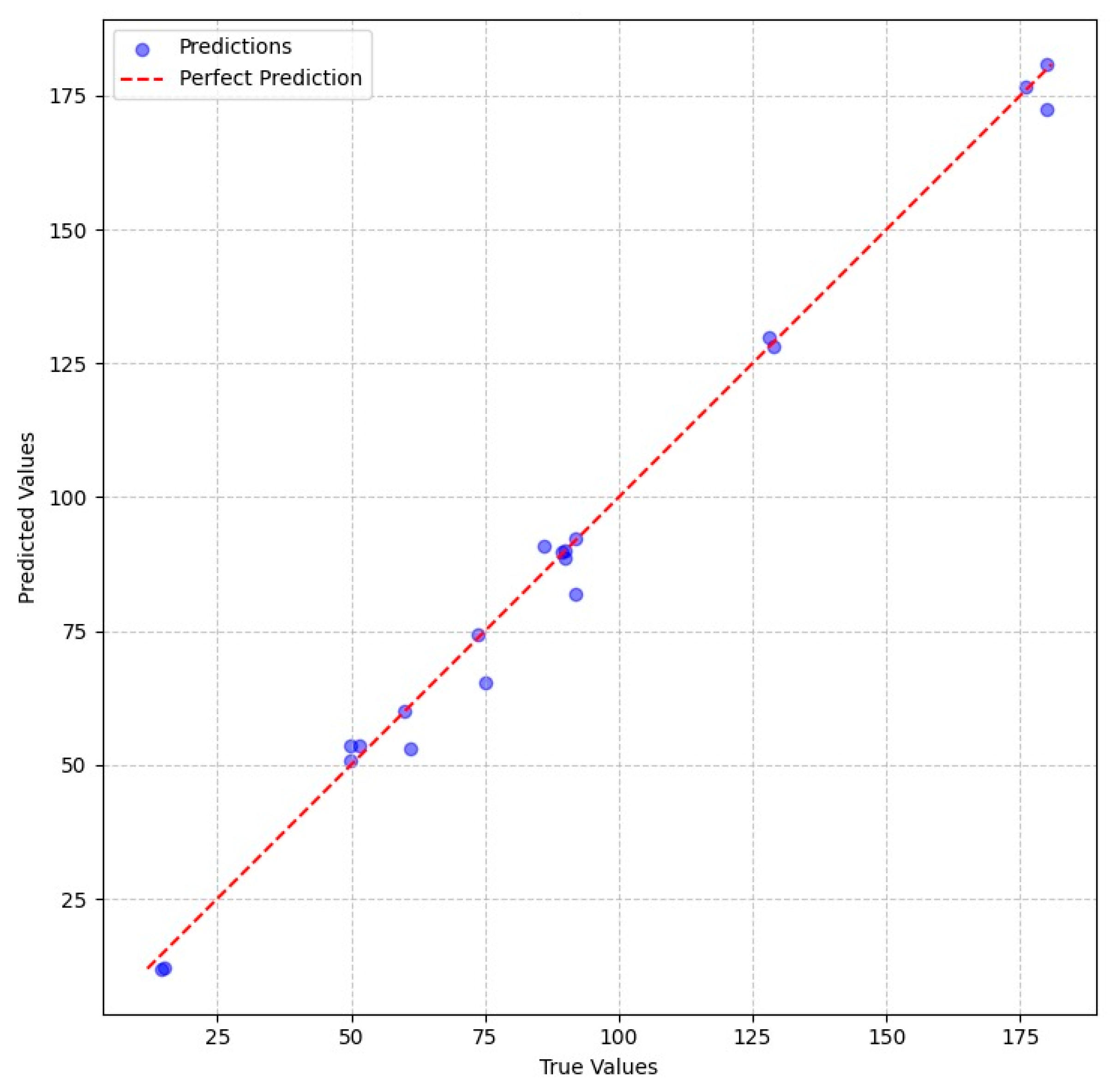

| Adversarial-like | 98 | Train | 1.2194 ± 0.1951 | 0.7302 ± 0.1097 | 0.9994 ± 0.0001 |

| Test | 11.5936 ± 5.5236 | 7.7017 ± 3.0513 | 0.9303 ± 0.0731 | ||

| Quantile-based Sampling | 94 | Train | 1.6940 ± 0.5319 | 0.3643 ± 0.0991 | 0.9987 ± 0.0005 |

| Test | 14.7802 ± 7.0718 | 10.1891 ± 5.1670 | 0.8906 ± 0.0761 | ||

| Feature Mixup | 98 | Train | 3.4956± 0.2855 | 2.3428 ± 0.2037 | 0.9945 ± 0.0010 |

| Test | 17.7424 ± 4.9500 | 12.9522 ± 3.4385 | 0.8015 ± 0.1070 | ||

| Conditional Sampling | 98 | Train | 1.0732 ± 0.3572 | 0.4413 ± 0.0785 | 0.9995 ± 0.0003 |

| Test | 17.6708 ± 7.3522 | 13.9188 ± 6.5395 | 0.8546 ± 0.1070 |

| Parameters | Value |
|---|---|
| Number of Hidden Layers (hl) | 3 |
| Number of Neurons in hl = 0 | 512 |
| Number of Neurons in hl = 1 | 64 |
| Number of Neurons in hl = 2 | 64 |
| Number of Neurons in hl = 3 | 1024 |
| Activation Function of hl = 0 | Mish() |
| Activation Function of hl = 1 | Tanh() |
| Activation Function of hl = 2 | LogSigmoid() |
| Activation Function of hl = 3 | LeakyReLU() |
| Train MSE | 19.18 |
| Train R2 | 0.99 |
| Val MSE | 50.23 |
| Val R2 | 0.7 |
| Test MSE | 22.68 |
| Test R2 | 0.99 |

| Study | Year | Aim | Method | Result |
|---|---|---|---|---|
| Vu and Hoang [8] | 2016 | Predict the punching shear capacity of FRP-reinforced concrete slabs | Least squares support vector machine (LS-SVM) and firefly algorithm | RMSE = 53.19, MAPE = 10.48, R2 = 0.97 |
| Abuodeh et al. [9] | 2020 | Investigate the shear behavior of reinforced concrete beams strengthened with side-bonded and U-wrapped FRP laminates | Resilient Back Propagation Neural Network (RBPNN), Recursive Feature Elimination (RFE), and Neural Interpretation Diagram (NID) | R2 = 0.85, RMSE = 8.1 |
| Basaran et al. [10] | 2021 | Investigate the bond strength and development length of FRP bars embedded in concrete | Gaussian Process Regression (GPR), Artificial Neural Networks (ANN), Support Vector Machines (SVM), Regression Tree, and Multiple Linear Regression | r = 0.91, RMSE = 3.03, MAPE = 0.14 |
| Wakjira et al. [11] | 2022 | Predict the shear capacity of FRP-reinforced concrete (FRP-RC) beams | Ridge regression, elastic net, least absolute shrinkage and selection operator (lasso) regression, decision trees (DT), K-nearest neighbors (KNN), random forest (RF), extreme random trees (ERT), gradient-boosted decision trees (GBDT), AdaBoost, and extreme gradient boosting (XGBoost) | MAE = 8, MAPE = 12.9%, RMSE = 12.6, R2 = 95.3% |
| Kim et al. [13] | 2022 | Predict FRP-concrete bond strength | Categorical boosting (CatBoost), XGBoost, RF, and Histogram Gradient Boosting | R2 = 96.1%, RMSE = 2.31% |
| Wang et al. [14] | 2023 | Predict the shear contribution of FRP (Vf) | ANN, XGBoost, RF, GBDT, CatBoost, light gradient boosting machine (LightGBM), and adaptive boosting (AdaBoost) | RMSE = 8.98, CoV = 0.58, Avg = 1.08, integral absolute error (IAE) = 0.06 |
| Zhang et al. [15] | 2023 | Predict the FRP-concrete interfacial bond strength | ANN, SVM, decision tree, gradient boosting, RF, and XGBoost | RMSE = 2.528, CoV = 0.157, Avg = 1.030, IAE = 0.112 |
| Khan et al. [16] | 2024 | Predict the flexural capacity of FRP-strengthened reinforced concrete beams | Genetic expression programming (GEP) and multiple expression programming (MEP) | R = 0.98 |
| Alizamir et al. [17] | 2024 | Estimate the ultimate condition of FRP-confined concrete | Gradient-boosted regression tree (GBRT), RF, multilayer perceptron neural network (ANNMLP), and radial basis function neural network (ANNRBF) | RMSE = 9.67% |
| Ali et al. [18] | 2024 | Investigate the structural behavior of circular columns confined with glass fiber-reinforced polymer (GFRP) and aramid fiber-reinforced polymer (AFRP) | LS-SVM and long short-term memory (LSTM) | R2 = 0.992, Adj. R2 = 0.992, RMSE = 0.017, MAE = 0.013 |
| Bhatt et al. [19] | 2024 | Predict the fire resistance of FRP-strengthened concrete beams | Support vector regression (SVR), RF regressor, and deep neural network (DNN) | R = 0.96, R2 = 0.91 |
| Kumarawadu et al. [20] | 2024 | Predict the fire resistance of FRP-strengthened concrete beams | XGBoost, CatBoost, LightGBM, and GRB | Accuracy > 92% |
| Habib et al. [21] | 2025 | Identify the failure potential of fire-exposed FRP-strengthened concrete beams | AdaBoost, DT, Extra trees, Gradient boosting, Logistic regression, and RF | Recall = 1 |
| Wang et al. [22] | 2025 | Evaluate the fire resistance of FRP-strengthened reinforced concrete beams | LightGBM and GEP | R2 = 0.923 |
| This study | 2025 | Predict the fire resistance time of FRP-strengthened structural beams | XGBoost + HyperNetExplorer | R2 = 0.99, MSE = 22.6 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Işıkdağ, Ü.; Aydın, Y.; Bekdaş, G.; Cakiroglu, C.; Geem, Z.W. Fire Resistance Prediction in FRP-Strengthened Structural Elements: Application of Advanced Modeling and Data Augmentation Techniques. Processes 2025, 13, 3053. https://doi.org/10.3390/pr13103053
