4.1. The DBN Model

Deep-belief networks are multilayered probabilistic generative models extensively utilized in feature learning and classification of intricate datasets. DBNs effectively amalgamate the characteristics of neural networks with probabilistic graphical models, enabling them to proficiently process nonlinear and high-dimensional data. As generative models, DBNs distinguish themselves by learning the joint distribution of the provided data—a capability that extends beyond mere classification to the generation of new data samples. The architecture of a DBN comprises multiple hidden layers, with each layer responsible for learning distinct features and representations of the data [

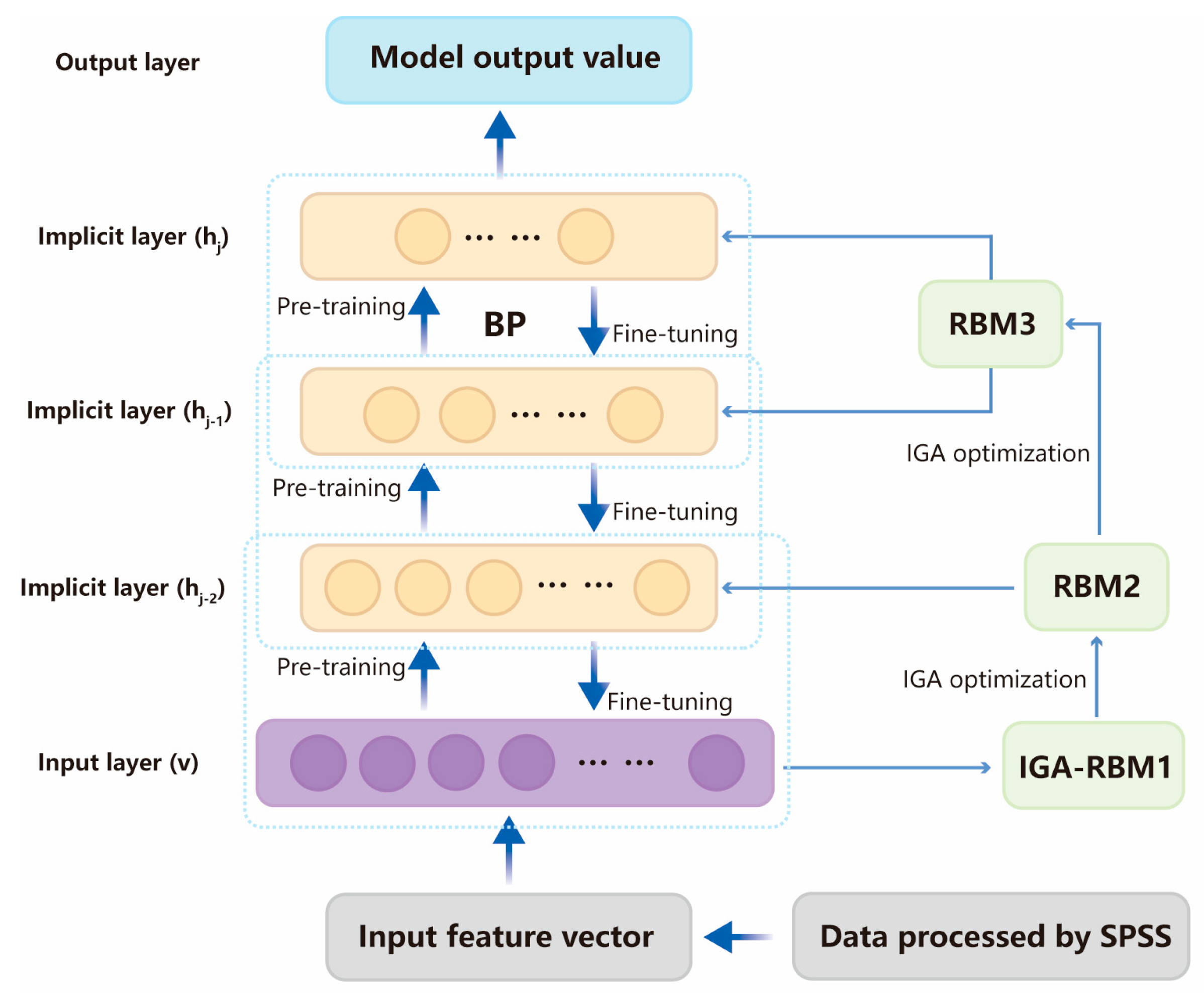

18]. This layered, hierarchical approach renders DBNs particularly adept at extracting features from complex data structures. The structural configuration of DBNs is depicted in

Figure 3.

DBN training is a multi-step process designed to capture and characterize the input data as fully as possible. It has two main parts: layer-by-layer pre-training and global fine-tuning.

For layer-by-layer pre-training, the DBN is treated as a stack of Restricted Boltzmann Machines (RBMs), each capturing features at a different level of the input data. During this phase, each RBM is trained independently, without influence from the other layers, so that it concentrates solely on learning a representation of its own input. Training begins at the bottom layer and proceeds upward through the DBN hierarchy [19]. Each successive RBM takes the output of the preceding layer as its input. Consequently, the lower RBMs learn basic features of the data, while the higher RBMs progressively extract more abstract and complex representations. The energy function of an RBM is given in Equation (1):

$$E(v, h \mid \theta) = -\sum_{i=1}^{n} a_i v_i - \sum_{j=1}^{m} b_j h_j - \sum_{i=1}^{n} \sum_{j=1}^{m} v_i w_{ij} h_j \tag{1}$$

where $v_i$, $a_i$ are the i-th neuron input and bias of the visible layer; $h_j$, $b_j$ are the j-th neuron output and bias of the hidden layer; and $w_{ij}$ is the connection weight between the neurons of the visible and hidden layers.
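As a minimal numerical sketch, assuming the standard binary-RBM energy (one bias term per layer plus a pairwise weight term), the energy of a single joint configuration can be evaluated as follows. The function name `rbm_energy` and the toy values are illustrative assumptions, not taken from the study.

```python
def rbm_energy(v, h, a, b, w):
    """Energy of a joint state (v, h) under the assumed standard RBM form.

    v, a: visible states and visible biases (length n)
    h, b: hidden states and hidden biases (length m)
    w:    n x m weight matrix, w[i][j] links visible i to hidden j
    """
    visible_term = sum(a_i * v_i for a_i, v_i in zip(a, v))
    hidden_term = sum(b_j * h_j for b_j, h_j in zip(b, h))
    pair_term = sum(v[i] * w[i][j] * h[j]
                    for i in range(len(v))
                    for j in range(len(h)))
    return -visible_term - hidden_term - pair_term


# Toy configuration (illustrative values): 2 visible units, 1 hidden unit.
energy = rbm_energy(v=[1, 0], h=[1], a=[0.5, -0.5], b=[0.25], w=[[1.0], [2.0]])
```

Lower energy corresponds to a more probable configuration, which is why the probabilities of the visible and hidden layers are expressed through this quantity.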

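The joint probability distribution $P(v, h \mid \theta)$ of the visible and hidden layers for each RBM is defined as shown in Equation (2):

$$P(v, h \mid \theta) = \frac{e^{-E(v, h \mid \theta)}}{Z(\theta)}, \qquad Z(\theta) = \sum_{v} \sum_{h} e^{-E(v, h \mid \theta)} \tag{2}$$

where $E(v, h \mid \theta)$ is the energy of Equation (1), $Z(\theta)$ is the normalizing partition function, the sums run over all states of the visible and hidden layers, and $n$, $m$ are the number of neurons in the visible and hidden layers, respectively.

The probability distribution function $P(v \mid \theta)$ of the visible layer of each RBM is obtained by summing over the hidden states, as shown in Equation (3):

$$P(v \mid \theta) = \frac{1}{Z(\theta)} \sum_{h} e^{-E(v, h \mid \theta)} \tag{3}$$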

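Given the independence of neurons within the same layer, the activation probability of the j-th hidden neuron given the visible layer can be calculated as follows:

$$P(h_j = 1 \mid v, \theta) = \sigma\Big(b_j + \sum_{i=1}^{n} v_i w_{ij}\Big) \tag{4}$$

Similarly, the activation probability of the i-th visible neuron given the hidden layer can be calculated:

$$P(v_i = 1 \mid h, \theta) = \sigma\Big(a_i + \sum_{j=1}^{m} w_{ij} h_j\Big) \tag{5}$$

The function $\sigma(\cdot)$ is the sigmoid activation function used in neural networks:

$$\sigma(x) = \frac{1}{1 + e^{-x}} \tag{6}$$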
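The layer-wise conditional probabilities can be illustrated with a short sketch. It assumes binary units and a sigmoid activation, with the weight matrix indexed as `w[i][j]` (visible i, hidden j); all function names and numeric values are illustrative assumptions.

```python
import math
import random


def sigmoid(x):
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def hidden_probs(v, b, w):
    """P(h_j = 1 | v) for every hidden unit j."""
    return [sigmoid(b[j] + sum(v[i] * w[i][j] for i in range(len(v))))
            for j in range(len(b))]


def visible_probs(h, a, w):
    """P(v_i = 1 | h) for every visible unit i."""
    return [sigmoid(a[i] + sum(w[i][j] * h[j] for j in range(len(h))))
            for i in range(len(a))]


def sample_binary(probs, rng):
    """Draw independent 0/1 states, one per unit, from the given probabilities."""
    return [1 if rng.random() < p else 0 for p in probs]


# Toy RBM (illustrative values): 2 visible units, 1 hidden unit.
a, b, w = [0.0, 0.0], [0.0], [[0.0], [3.0]]
rng = random.Random(0)
p_h = hidden_probs([1, 0], b, w)   # only visible unit 0 is on, and w[0][0] = 0
h = sample_binary(p_h, rng)        # stochastic hidden state
p_v = visible_probs(h, a, w)       # reconstruction probabilities
```

Because units within a layer are conditionally independent, each probability depends only on the states of the opposite layer, so a whole layer can be sampled in one pass.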

The primary objective of RBM pre-training is to obtain a good initial value of the parameter set $\theta = \{w_{ij}, a_i, b_j\}$, so that the DBN fits the data satisfactorily. At this stage, $\theta$ is chosen to maximize the likelihood of the training samples, which brings the model-generated probability as close as possible to the sample statistical probability. The optimal $\theta^{*}$ is calculated as follows:

$$\theta^{*} = \arg\max_{\theta} \sum_{t=1}^{T} \ln P\big(v^{(t)} \mid \theta\big) \tag{7}$$

where $T$ denotes the total number of training samples and $v^{(t)}$ is the t-th training sample.
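The following sketch illustrates greedy layer-by-layer pre-training. The text does not name the learning rule, so one-step contrastive divergence (CD-1), a common approximation to the likelihood gradient, is swapped in here as an assumption. The class `RBM`, the function `pretrain_stack`, and all hyperparameter values are hypothetical.

```python
import math
import random


def sigmoid(x):
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


class RBM:
    """Binary RBM trained with CD-1 (assumed learning rule, not from the text)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.a = [0.0] * n_visible                      # visible biases
        self.b = [0.0] * n_hidden                       # hidden biases
        self.w = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]            # small random weights

    def hidden_probs(self, v):
        return [sigmoid(self.b[j] + sum(v[i] * self.w[i][j] for i in range(len(v))))
                for j in range(len(self.b))]

    def visible_probs(self, h):
        return [sigmoid(self.a[i] + sum(self.w[i][j] * h[j] for j in range(len(h))))
                for i in range(len(self.a))]

    def cd1_update(self, v0, lr):
        """One CD-1 step on a single sample; returns the squared reconstruction error."""
        ph0 = self.hidden_probs(v0)
        h0 = [1 if self.rng.random() < p else 0 for p in ph0]
        v1 = self.visible_probs(h0)                     # mean-field reconstruction
        ph1 = self.hidden_probs(v1)
        for i in range(len(self.a)):
            for j in range(len(self.b)):
                self.w[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            self.a[i] += lr * (v0[i] - v1[i])
        for j in range(len(self.b)):
            self.b[j] += lr * (ph0[j] - ph1[j])
        return sum((x - y) ** 2 for x, y in zip(v0, v1))


def pretrain_stack(data, hidden_sizes, epochs, lr, seed=0):
    """Train one RBM per entry of hidden_sizes, bottom layer first."""
    rng = random.Random(seed)
    layers, layer_input = [], data
    for n_hidden in hidden_sizes:
        rbm = RBM(len(layer_input[0]), n_hidden, rng)
        for _ in range(epochs):
            for v in layer_input:
                rbm.cd1_update(v, lr)
        layers.append(rbm)
        # The hidden activations of this RBM become the input of the next one.
        layer_input = [rbm.hidden_probs(v) for v in layer_input]
    return layers


data = [[1, 0, 1, 0], [0, 1, 0, 1]]                     # toy binary samples
layers = pretrain_stack(data, hidden_sizes=[3, 2], epochs=5, lr=0.1, seed=0)
```

Each RBM is trained only on the output of the layer below it, which mirrors the independence between layers described for the pre-training phase.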

Global fine-tuning refines the entire DBN with the back-propagation (BP) algorithm after all layers have been pre-trained. This phase adjusts the weights and biases across the network to improve data representation and prediction. Using the error gradient, the BP algorithm updates the parameters from the top layer downwards, thereby improving the network’s predictive capability [20]. Fine-tuning matters because it integrates what the individual layers have learned, so that the DBN represents the data effectively as a whole. Pre-training supplies the initial parameters; fine-tuning then improves the model’s fit. Starting from the topmost layer, fine-tuning adjusts the parameters obtained during pre-training layer by layer downwards, using a small amount of labeled data. Typically, the SoftMax function is employed as the final classifier, with the BP algorithm used to execute the fine-tuning process. If the DBN comprises l RBMs, the output of the pre-training is given by Equation (8).

The SoftMax function determines the output of the DBN by identifying the category with the highest probability as the predicted class. This process effectively translates the network’s output into a probability distribution over predicted classes, facilitating classification tasks by emphasizing the most likely category.
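The SoftMax step can be sketched in a few lines. The input `logits` stands for the top-layer outputs of the network; this name, the helper names, and the example values are illustrative assumptions.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution over classes."""
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(logits):
    """Index of the class with the highest SoftMax probability."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)


probs = softmax([1.0, 3.0, 2.0])             # toy scores for three classes
label = predict_class([1.0, 3.0, 2.0])
```

The probabilities sum to one, and the predicted class is simply the index of the largest entry.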

In the initialization phase, a DBN model is established. Traditionally, the initial weights and thresholds of a DBN are selected at random, which makes the iteration time strongly dependent on the initial network parameters and weakens the global search capability. To address this issue, this study uses an improved genetic algorithm (IGA) to optimize the DBN, thereby removing the randomness in the selection of the initial DBN weights and thresholds. This approach continuously guides the optimization of DBN parameters during model training, enhancing the model’s learning efficiency and predictive accuracy [21].

4.2. IGA–DBN Neural-Network Prediction Model

For ice-storage air conditioning systems, load forecasting and operational optimization are central to improving energy efficiency and reducing operating costs. The DBN is selected as the forecasting tool for its strength in feature extraction and data modeling. This study combines the DBN’s predictions with the results of an optimization algorithm to refine the operational parameters of ice-storage air conditioners. Within the predictive model architecture, the learning efficacy of the RBM is examined using the reconstruction error (RE). Widely used in machine learning and signal processing, the RE measures the disparity between the original and reconstructed data and plays a key role in dimensionality reduction and feature extraction tasks. RBM layers are added one at a time so that the variation in RE can be observed. A smaller RE indicates more comprehensive feature extraction and greater model stability; the RE is defined in Equation (9). The experiment sets the maximum number of iterations of the network model to 100, the learning rate is , and the learning increase coefficient and learning decrease coefficient are and .

The terms in Equation (9) are the reconstruction error value of the t-th iteration, the number of samples, the k-th column of the input sample matrix, and the current input layer neuron state.
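A reconstruction error of this kind can be sketched as follows. The exact form of Equation (9) is not reproduced here; the sketch assumes one common definition, the squared distance between each input sample and its reconstruction, averaged over the samples. The function name and the toy data are hypothetical.

```python
def reconstruction_error(samples, reconstructions):
    """Mean squared distance between inputs and reconstructions (assumed definition).

    samples:         list of input vectors (the columns of the sample matrix)
    reconstructions: list of visible-layer states produced by the RBM
    """
    if len(samples) != len(reconstructions):
        raise ValueError("samples and reconstructions must have the same length")
    total = 0.0
    for x, v in zip(samples, reconstructions):
        total += sum((x_i - v_i) ** 2 for x_i, v_i in zip(x, v))
    return total / len(samples)


# Toy data: the second sample is reconstructed with one wrong component.
re_value = reconstruction_error([[1.0, 0.0], [0.0, 1.0]],
                                [[1.0, 0.0], [0.0, 0.0]])
```

Tracking this value per iteration, once for each added RBM layer, gives the kind of RE curve discussed in the text.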

The iterative analysis shows a consistent downward trend in the RE values across the RBM layers, with the RE declining gradually as the depth of the model increases. Each subsequent RBM layer starts with an RE larger than the final value of its preceding layer, which is attributed to the random assignment of initial weights. Table 1 illustrates the impact of the DBN model depth on the RE.

Table 1 indicates that the network reconstruction error decreases progressively with increasing depth, at the cost of longer computation time and lower efficiency. Specifically, a single-layer RBM network exhibits substantial fluctuations in RE and slower convergence, whereas a two-layer RBM network shows a decreasing RE trend with quicker convergence. The three-layer and four-layer RBM networks require more computation time, yet the four-layer network does not show significant improvements in steady-state error value or convergence speed over the three-layer network. Consequently, this study opts for a three-layer RBM architecture for the cold-load DBN prediction model.

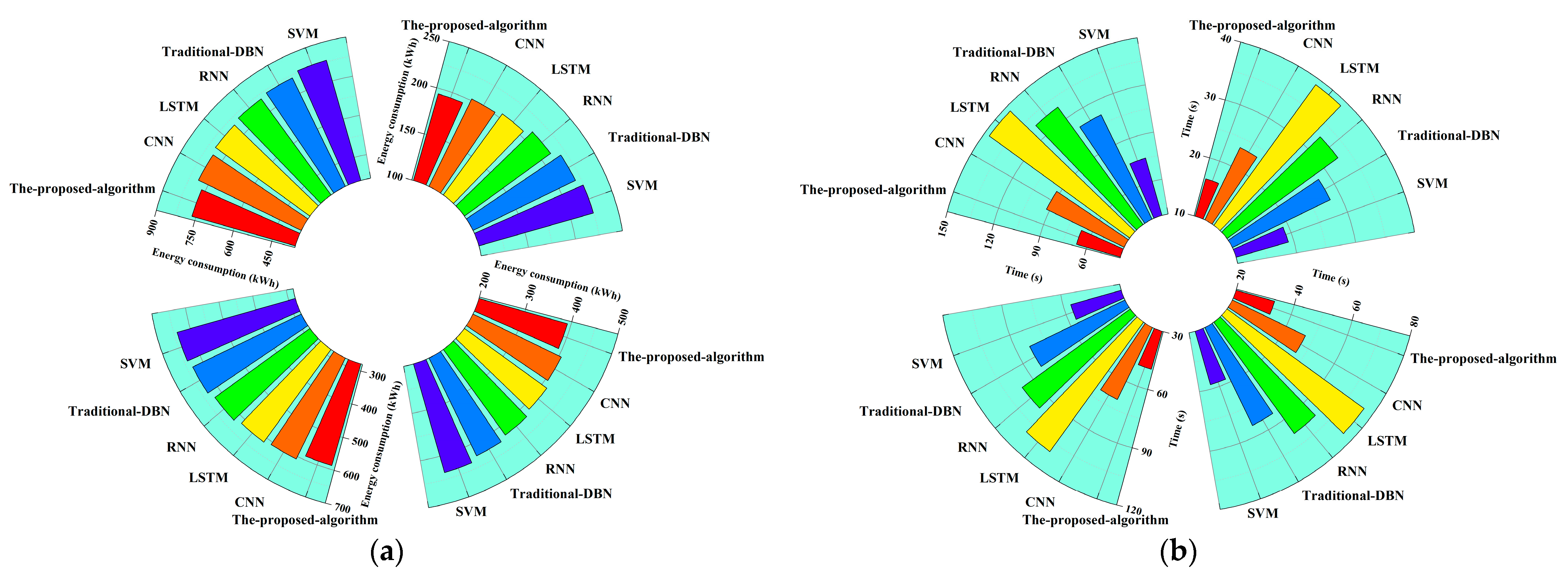

To compare the DBN with other traditional models in tasks such as dimensionality reduction and feature extraction, this study takes as the benchmark the computational time of the three-layer RBM reconstruction-error experiment in the traditional DBN. Conventional models, including CNN, RNN, SVM, and LSTM, are trained under the same conditions and their computational times are recorded. The specific outcomes are presented in Table 2.

With the training conditions unchanged, CNN, RNN, SVM, and LSTM models of different depths were trained until their steady-state error and convergence speed fell within the RE range of 0.0397 to 0.7686. Compared with the DBN model, the computational time of all four models increased, with a maximum increase of 17.35%. This indicates that the DBN model converges faster than the other traditional models under these conditions, which is an advantage in tasks such as high-dimensional data computation and feature extraction.

Having established the optimal depth for the RBM in the DBN prediction model, this study next addresses a fundamental challenge in traditional DBN models: the arbitrary selection of initial weights and thresholds. To rectify this, the DBN model is optimized using the improved GA (IGA), which is designed to reduce the randomness in selecting the initial DBN parameters [22]. The traditional GA employs binary coding, representing individuals within the population as fixed-length binary strings whose alleles are drawn from the set {0, 1}. Selection uses the roulette wheel method; crossover is single-point with a fixed crossover probability; and mutation is bit mutation, also with a fixed mutation probability. In this study, four operational parameters of the basic GA are predetermined: the population size M, i.e., the number of individuals within the population, is set to 100; the termination criterion G is set to 500 generations; the crossover probability Pc is set within the range (0.4, 0.9); and the mutation probability Pm is set within the range (0, 0.1). However, traditional GAs suffer from slow search speed and a tendency towards premature convergence. Moreover, fixed values of Pc and Pm chosen from experience may not suit different optimization problems. In response, this paper modifies the GA, in particular the adaptation of Pc and Pm during the evolutionary process [23].

The traditional adaptive genetic algorithm (AGA) adjusts Pc and Pm dynamically based on fitness values, so that these probabilities change automatically with fitness. However, when the fitness values of individuals are close to or equal to the maximum fitness value, both Pc and Pm approach zero. This is detrimental in the early stages of evolution, because the superior individuals remain nearly unchanged, which increases the likelihood of converging to a local optimum.
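The vanishing of Pc and Pm at the maximum fitness can be seen in a small sketch of a traditional adaptive rule. It assumes the widely used linear form in which both probabilities scale with the gap between the maximum fitness and the individual's fitness; the function name, the constants `k1` to `k4`, and their default values are illustrative assumptions rather than the settings of this study.

```python
def classic_adaptive_rates(f, f_avg, f_max, k1=1.0, k2=0.5, k3=1.0, k4=0.5):
    """Return (pc, pm) for an individual with fitness f under a linear adaptive rule.

    Simplification (assumption): the same fitness f is used for both rates,
    whereas crossover rules are often driven by the fitter of the two parents.
    """
    if f < f_avg or f_max == f_avg:
        # Below-average individuals (or a fully converged population)
        # receive the constant upper rates.
        return k3, k4
    scale = (f_max - f) / (f_max - f_avg)   # 1 at the average, 0 at the maximum
    return k1 * scale, k2 * scale


best = classic_adaptive_rates(f=10.0, f_avg=5.0, f_max=10.0)    # fittest individual
middle = classic_adaptive_rates(f=7.5, f_avg=5.0, f_max=10.0)   # above average
weak = classic_adaptive_rates(f=3.0, f_avg=5.0, f_max=10.0)     # below average
```

For the fittest individual both rates are exactly zero, so it is never recombined or mutated, which is the behavior that motivates modifying the adaptive rule.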

Traditional AGAs therefore have several limitations. Because the crossover and mutation probabilities become zero when the fitness value equals the maximum fitness value, the search tends to converge to local optima. Individuals with lower fitness also exhibit reduced mutation capabilities, leading to stagnation. While the elitist strategy protects the optimal individuals, it may stall the population’s evolution when the number of individuals is large, again leading to local convergence. Furthermore, traditional AGAs consider only the comparison between the average and optimal values within the population, neglecting how crossover and mutation probabilities are set across the entire population.

The modified approach lets Pc and Pm regulate the algorithm’s search dynamically. To escape local optima, Pc and Pm are increased when the population’s fitness converges towards a local optimum. Conversely, if the population’s fitness is more varied, Pc and Pm are reduced to preserve superior individuals. In addition, individuals with fitness above the population average are assigned lower Pc and Pm so that they pass to subsequent generations, while those below the average are given higher Pc and Pm so that they are eliminated. Building on the adaptive genetic algorithm, the crossover and mutation probabilities vary according to the following rule: individuals of lower fitness are assigned higher crossover probabilities and lower mutation probabilities, whereas individuals of higher quality are allocated crossover and mutation probabilities based on their fitness level and the iteration state. As the number of iterations approaches the maximum, the crossover probability decreases, while the mutation probability increases.

The improved adaptive genetic algorithm automatically adjusts the crossover and mutation probabilities, as shown in Equations (10) and (11).

To further improve the accuracy of the prediction model, the IGA is used in this paper to find the best initial weights and thresholds of the DBN model as follows:

Step 1: Initialize the parameters in the DBN network, select the number of neuron layers and the number of neurons in the DBN, and then select the dimensions of the individuals to establish the DBN network structure.

Step 2: Initialize the GA population to produce N individuals constituting the initial solution set. Encode the initial values of the DBN model as individuals of the improved GA.

Step 3: Determine the fitness function, and calculate the fitness of each individual.

Step 4: Individuals are selected according to the roulette rule, and the average fitness value and the maximum fitness value of the current population are calculated.

Step 5: Individuals in the population are randomly paired, and the crossover probability Pc of each pair is calculated by the adaptive crossover formula. A random number is then generated, and the crossover operation is performed on the chromosomes if this number is less than Pc. The adaptive crossover probability formula is shown in Equation (10); its terms are the average fitness value of the current population, the maximum fitness value of the current population, the fitness value of the i-th chromosome, and two set parameters.

Step 6: For all individuals in the population, the adaptive mutation probability Pm is calculated by the adaptive mutation formula. A random number is then generated, and the mutation operation is performed on the chromosome if this number is less than Pm. The adaptive mutation probability adjustment formula is shown in Equation (11); its terms are the average fitness value of the current population, the maximum fitness value of the current population, the fitness value of the i-th chromosome, and two set parameters.

Step 7: The fitness of the new individuals generated by crossover and mutation is calculated. New individuals are generated from the low-fitness individuals by the IGA operators, and they are merged with the high-fitness individuals to form a new population.

Step 8: At the end of evolution, determine whether the accuracy meets the requirements. If it does, select the individual with the best fitness value, i.e., the optimal weights and thresholds, and assign them to the DBN; otherwise, return to Step 4 to continue training.
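The steps above can be sketched as a compact loop. This is an illustration under stated assumptions, not the study's implementation: chromosomes are real-valued vectors standing in for DBN weights and thresholds, `toy_fitness` is a hypothetical stand-in for the DBN accuracy, and `adaptive_rates` is a placeholder rule that keeps Pc in [0.4, 0.9] and Pm in [0.01, 0.1] rather than the formulas of Equations (10) and (11).

```python
import random


def roulette_select(population, fitnesses, rng):
    """Pick one individual with probability proportional to its positive fitness."""
    pick = rng.uniform(0.0, sum(fitnesses))
    running = 0.0
    for individual, fit in zip(population, fitnesses):
        running += fit
        if running >= pick:
            return individual
    return population[-1]


def adaptive_rates(f, f_avg, f_max):
    """Placeholder adaptive rule (assumption): rates stay non-zero at f_max."""
    if f_max == f_avg or f < f_avg:
        return 0.9, 0.1
    scale = max(0.0, min(1.0, (f_max - f) / (f_max - f_avg)))
    return 0.4 + 0.5 * scale, 0.01 + 0.09 * scale


def evolve(fitness_fn, dim, pop_size=20, generations=30, seed=0):
    """Evolve real-valued chromosomes; returns the best individual found."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1.0, 1.0) for _ in range(dim)]
                  for _ in range(pop_size)]                 # Step 2
    best = max(population, key=fitness_fn)
    for _ in range(generations):
        fitnesses = [fitness_fn(ind) for ind in population]  # Step 3
        f_avg = sum(fitnesses) / pop_size                    # Step 4
        f_max = max(fitnesses)
        children = [best[:]]                                 # keep the elite
        while len(children) < pop_size:
            p1 = roulette_select(population, fitnesses, rng)
            p2 = roulette_select(population, fitnesses, rng)
            pc, _ = adaptive_rates(max(fitness_fn(p1), fitness_fn(p2)), f_avg, f_max)
            child = p1[:]
            if dim > 1 and rng.random() < pc:                # Step 5: crossover
                cut = rng.randrange(1, dim)
                child = p1[:cut] + p2[cut:]
            _, pm = adaptive_rates(fitness_fn(child), f_avg, f_max)
            child = [g + rng.gauss(0.0, 0.1) if rng.random() < pm else g
                     for g in child]                         # Step 6: mutation
            children.append(child)
        population = children                                # Step 7
        best = max(population + [best], key=fitness_fn)
    return best                                              # Step 8


TARGET = [0.2, -0.4, 0.6, 0.0]   # hypothetical "ideal" parameter vector


def toy_fitness(ind):
    """Positive fitness that grows as the chromosome approaches TARGET."""
    return 1.0 / (1.0 + sum((g - t) ** 2 for g, t in zip(ind, TARGET)))


best_params = evolve(toy_fitness, dim=4, seed=1)
```

In the setting described here, the fitness function would instead decode a chromosome into initial DBN weights and thresholds and score the resulting model, and the best chromosome would be assigned to the DBN at the end of evolution.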

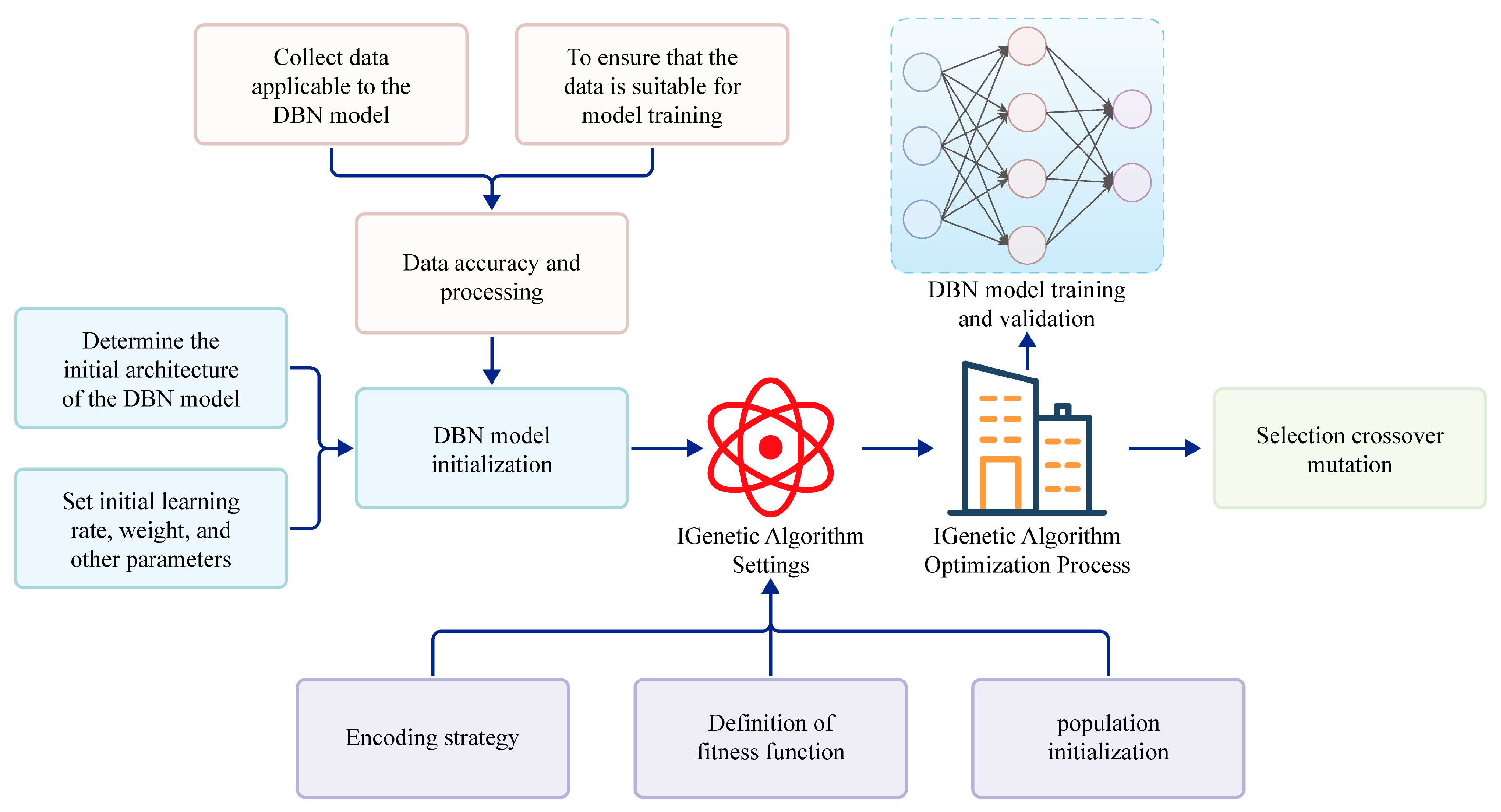

The architecture of the DBN prediction model enhanced by the IGA is depicted in Figure 4. It is an iterative process in which the IGA–DBN model adjusts itself adaptively: Pc and Pm are refined within the GA, and the GA is integrated with the DBN, which is used for its feature extraction capability. The IGA’s primary role in this framework is to address the random initialization of the DBN weight parameters. It continually optimizes the parameters of the DBN, and the performance feedback from the DBN model informs subsequent rounds of IGA-guided parameter optimization. Combining the IGA with the DBN in this way improves the DBN’s effectiveness in complex data processing tasks.