Abstract

Particle swarm optimization (PSO) has been used extensively to solve practical engineering problems because of its efficiency. Although PSO is simple and efficient, it still suffers from premature convergence. To address this shortcoming, an adaptive particle swarm optimization with state-based learning strategy (APSO-SL) is put forward. In APSO-SL, the population distribution evaluation mechanism (PDEM) is used to evaluate the state of the whole population. Compared with using the iteration count to judge the population state, using the population spatial distribution is more intuitive and accurate. In PDEM, only the population center position and the best position are used for the calculation, which greatly reduces the algorithm’s computational complexity. In addition, an adaptive learning strategy (ALS) is proposed to avoid premature convergence of the whole population. In ALS, different learning strategies are adopted according to the population state to maintain population diversity. The performance of APSO-SL is evaluated on the CEC2013 and CEC2017 test suites and on one engineering problem. Experimental results show that APSO-SL performs best among the compared PSO variants.

1. Introduction

Optimization problems occur frequently in practical engineering [1], so solving them efficiently is crucial [2]. They have gradually become an important issue in the industrial field [3], and with the progress of science and technology they are becoming more and more complex [4]. They are often discontinuous, non-differentiable and computationally expensive, with a large number of local optima [5,6]. Finding solutions to optimization problems in such complex situations is crucial [7]. Traditional optimization algorithms, such as quasi-Newton methods, the steepest descent method and the gradient descent method, require the objective function to meet strict conditions [8]. Therefore, these methods are difficult to apply in practice [9], and new methods are needed to solve complex optimization problems [10].

At present, evolutionary algorithms have been proposed to address complex optimization problems and have achieved satisfactory results [11,12]. Evolutionary algorithms simulate the evolution of natural species with self-organizing and adaptive characteristics, and do not require the objective function to meet strict conditions. Therefore, evolutionary algorithms are adopted to address complex optimization problems. As a type of evolutionary algorithm, swarm intelligence algorithms have received widespread attention. Examples include PSO [13], differential evolution (DE) [10], artificial bee colony optimization (ABC) [14], ant colony optimization (ACO) [15], etc. In swarm intelligence algorithms, a population can be seen as being composed of independent individuals, each of whom interacts to jointly search for the global optimum. Therefore, use of swarm intelligence methods to solve complex optimization problems is feasible [16] and they have received widespread attention in recent times [17].

As a popular evolutionary algorithm, PSO has been extensively adopted in different engineering optimization problems since it was proposed in 1995. PSO simulates the foraging behavior of natural animals, and has the advantages of robustness, efficiency and precision. In PSO, a population is composed of some particles, and each particle has two learning samples, namely its own historical best solution (pbest) and a global best solution (gbest). In the process of evolution, particles in the whole population work together to seek gbest. Although PSO has advantages, it still has the problem of poor balance between global and local search. Recently, a large number of PSO variants have been proposed to address the above issue. These can be divided into three classes: adaptive learning parameter, novel updating strategy and new topology mechanism.

Adaptive learning parameter: Parameter setting is an important part of PSO. A fitness-based PSO algorithm (FMPSO) is developed in reference [18], in which different categories of particles have different parameters. In reference [19], the authors use sine and cosine parameters to increase algorithm diversity. In reference [20], the authors use a sigmoid function to adapt the parameters dynamically during evolution so that the algorithm searches efficiently; extensive experiments demonstrate the robustness of the algorithm. In reference [21], a nonlinear attenuation acceleration coefficient is put forward, and the robustness of the algorithm is enhanced by using different acceleration coefficients at different evolutionary stages of the population. In reference [22], an inertia weight with a chaotic mechanism is put forward to increase the diversity of the population. Similarly, in reference [23], a dynamic nonlinear inertia weight is adopted to enhance the anti-jamming capacity of the algorithm. Rosso et al. [24] propose an enhanced multi-strategy particle swarm optimization for constrained problems with an evolutionary-strategies-based unfeasible local search operator. This method determines the values of the parameters that govern the evolutionary strategy during the optimization process itself. Rosso et al. [25] propose a new constraint-handling approach for PSO that adopts a supervised classification machine learning method, the support vector machine (SVM).

Novel updating strategy: The updating strategy is the most important part of PSO, as it determines the evolution direction of the whole population. E et al. [26] introduce human social learning intelligence into the PSO algorithm to enhance its performance. In reference [27], five different algorithms are integrated into a new algorithm in which the best-performing algorithm is selected through a rating function. To increase the diversity of learning samples, a comprehensive learning particle swarm optimization algorithm (CLPSO) is put forward [28], in which the entire population is updated based on excellent information. In reference [29], a novel dual-population algorithm is proposed in which two sub-populations are devoted to global and local search, respectively; extensive experiments demonstrate the effectiveness of the algorithm. Xia et al. [30] propose a multi-learning-sample algorithm in which different learning samples and update mechanisms work together. Li et al. [31] propose an adaptive cooperative particle swarm optimization with difference learning, in which accuracy is enhanced by using different learning strategies. In reference [32], the population state is obtained from the spatial distribution, and different learning mechanisms are used in the various evolutionary states. Recently, researchers have incorporated other technologies into the PSO algorithm to enhance its performance [33,34].

New topology mechanism: Multi-swarm collaboration methods have been widely adopted recently. In reference [35], a new multi-swarm interaction mechanism (ADPSO) is put forward, whose strategies enhance the performance of traditional PSO. Yang and Li [36] combine evolutionary states with the collaborative mechanisms of the whole population for the first time, and their experimental results have been well received by peers. In reference [37], three learning features are used to enhance the performance of the traditional method. Dynamic multi-swarm PSO variants, a well-known family of PSO variants, achieve excellent results [38] and have gained widespread attention. In reference [39], a fully informed PSO (FIPS) is proposed. In FIPS, each individual is influenced not only by the global best solution but also by the particles in its neighborhood. Lu et al. [40] let four sub-swarms work together to find the global optimum. Xia et al. [9] put forward an adaptive multi-swarm particle swarm optimization algorithm (MSPSO), in which the number of sub-populations changes according to the population stage.

Although existing research has made significant improvements to the PSO algorithm, some problems have still not been effectively addressed. The evolutionary state of the whole population is an important indicator. Specifically, in the early stage the whole population should learn more diversity information to strengthen global search, whereas in the later stage it should learn more accuracy information to strengthen local search. Although a large number of methods evaluate the evolutionary state from the population spatial distribution, calculating that distribution consumes considerable computing resources. The additional calculations far exceed the complexity of PSO itself, which makes it difficult to work efficiently. Therefore, designing a lightweight method that evaluates the population evolutionary state while still obtaining satisfactory results is of great significance. In addition, it is worthwhile to use different evolutionary strategies in different evolutionary states, so it is crucial to adjust the learning strategy adaptively.

Inspired by the above discussion, an adaptive PSO with state-based learning strategy (APSO-SL) is put forward. APSO-SL adds two new features to PSO. The first is the population distribution evaluation mechanism (PDEM), in which the population center position and the best position are used to calculate the state of the whole population. In this way, the population state can be evaluated more intuitively and accurately without excessive computation. The second is the adaptive learning strategy (ALS), in which different learning strategies are adopted based on the population state to maintain the diversity of the whole population. Specifically, if the population diversity is high, the method conducts a global search; if the population diversity is low, the method carries out a local search to strengthen the population’s local search ability. Extensive experiments have been conducted to confirm the effectiveness of APSO-SL, and the main contributions are as follows:

- (1) The population distribution evaluation mechanism (PDEM) is put forward. In PDEM, instead of using the iteration count to judge the population state, APSO-SL uses only the population center position and the best position for the calculation. Thus, the population state can be evaluated accurately without significantly increasing the computational complexity.

- (2) The adaptive learning strategy (ALS) is put forward. In ALS, different learning strategies are used according to the population state to maintain the diversity of the whole population. In this way, the whole population can achieve a balance between exploration and exploitation.

- (3) An efficient PSO variant, APSO-SL, is proposed, which combines PDEM and ALS and outperforms six competitive optimization approaches.

2. Particle Swarm Optimization (PSO)

PSO is a stochastic optimization algorithm in which each particle represents a candidate solution in the D-dimensional feasible space. N particles form a population, and each particle i has a position $X_i = [x_i^1, x_i^2, \ldots, x_i^D]$ and a velocity $V_i = [v_i^1, v_i^2, \ldots, v_i^D]$. In each iteration, each particle learns from two samples: pbest and gbest. The update rules of PSO are shown in Equations (1) and (2):

$$v_i^d(t+1) = w\,v_i^d(t) + c_1 r_1 \left(pbest_i^d(t) - x_i^d(t)\right) + c_2 r_2 \left(gbest^d(t) - x_i^d(t)\right) \tag{1}$$

$$x_i^d(t+1) = x_i^d(t) + v_i^d(t+1) \tag{2}$$

where w is the inertia weight, d is the current dimension, r1 and r2 are two random numbers in the interval [0, 1], and c1 and c2 are the two acceleration constants.

Generally, PSO can be divided into two types: the global version and the local version. The aforementioned formulas describe global PSO. In order to increase the diversity of particles, the local version uses a local optimum instead of the global optimum: lbest stands for the best position in the particle’s neighborhood. The velocity update strategy is described as follows:

$$v_i^d(t+1) = w\,v_i^d(t) + c_1 r_1 \left(pbest_i^d(t) - x_i^d(t)\right) + c_2 r_2 \left(lbest_i^d(t) - x_i^d(t)\right) \tag{3}$$
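The update rules above can be illustrated with a short sketch. It applies the canonical PSO velocity and position update to a single particle; passing gbest as `guide` gives the global version and passing lbest gives the local version. The function name `pso_step` and the default values of `w`, `c1` and `c2` are illustrative assumptions, not the settings used in the paper.

```python
import random


def pso_step(x, v, pbest, guide, w=0.7, c1=1.5, c2=1.5, rng=random):
    """Apply one PSO update to a single particle.

    x, v, pbest and guide are lists of length D. `guide` is gbest for the
    global version and lbest for the local version. The defaults for
    w, c1 and c2 are illustrative assumptions only.
    """
    new_x, new_v = [], []
    for d in range(len(x)):
        r1, r2 = rng.random(), rng.random()  # fresh random numbers per dimension
        vd = (w * v[d]
              + c1 * r1 * (pbest[d] - x[d])   # pull toward the particle's own best
              + c2 * r2 * (guide[d] - x[d]))  # pull toward gbest or lbest
        new_v.append(vd)
        new_x.append(x[d] + vd)               # position update
    return new_x, new_v
```

When the particle already sits on both of its learning samples, only the inertia term remains, which is a quick way to check the sketch by hand.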

3. APSO-SL

In this part, the proposed APSO-SL is described in detail. Firstly, the population distribution evaluation mechanism (PDEM) is adopted to evaluate the population state. Secondly, the adaptive learning strategy (ALS) is used to achieve a balance between exploration and exploitation. Finally, APSO-SL is described in detail in Algorithm 1. The APSO-SL proposed in this paper includes these two improvements, and we also conduct a detailed analysis of APSO-SL in Section 4.

| Algorithm 1. APSO-SL |
| --- |
| 01. Objective function: f(x) |
| 02. Input: , ,,, pbest, gbest, D, d, t, Tmax, r1, r2, U, L, w, c1, c2; |
| 03. Output: Optimal solution; |
| 04. Initialization: = + rand×(), = + rand×(); |
| 05. while (t <= Tmax) |
| 06. for i = 1:N do |
| 07. Calculate the center position of the population based on Equation (4); |
| 08. Calculate the Euclidean distance between the center position and the best position using Equation (5); |
| 09. Determine the evolutionary state of the population based on Equation (6); |
| 10. Case 1: |
| 11. Update particles in the population using Equations (1) and (2); |
| 12. Case 2: |
| 13. Update particles in the population using Equations (7) and (2); |
| 14. Case 3: |
| 15. Keep the population updating method unchanged. |
| 16. t = t + 1; |
| 17. end for |
| 18. end while |
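The control flow of Algorithm 1 might be sketched as follows. This is a minimal illustration under several assumptions that the text does not confirm: the state is evaluated once per iteration rather than once per particle, the distance is normalized by the diagonal of the search box, velocities start at zero, positions are clamped to [L, U], and "unchanged" in Case 3 is read as keeping the strategy of the previous iteration. The names `apso_sl_sketch` and `sphere`, and all default parameter values, are hypothetical.

```python
import math
import random


def sphere(x):
    """Toy objective used only for illustration."""
    return sum(xi * xi for xi in x)


def apso_sl_sketch(f, dim=5, n=20, t_max=200, lower=-5.0, upper=5.0,
                   w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    span = upper - lower
    xs = [[lower + rng.random() * span for _ in range(dim)] for _ in range(n)]
    vs = [[0.0] * dim for _ in range(n)]        # assumption: zero initial velocity
    pbest = [x[:] for x in xs]
    pbest_f = [f(x) for x in xs]
    g = min(range(n), key=pbest_f.__getitem__)
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    diagonal = math.sqrt(dim) * span            # assumed normalizer for the distance
    use_exemplar = False                        # strategy kept while in Case 3
    for _ in range(t_max):
        # Population center and its distance to the best position
        center = [sum(x[d] for x in xs) / n for d in range(dim)]
        dist = math.sqrt(sum((c - b) ** 2 for c, b in zip(center, gbest)))
        f_b = dist / diagonal
        if f_b > 0.4:        # Case 1: exploration, basic PSO update
            use_exemplar = False
        elif f_b < 0.3:      # Case 2: exploitation, random pbest exemplar
            use_exemplar = True
        # Case 3: balance, keep the previous strategy unchanged
        for i in range(n):
            guide = rng.choice(pbest) if use_exemplar else gbest
            for d in range(dim):
                vs[i][d] = (w * vs[i][d]
                            + c1 * rng.random() * (pbest[i][d] - xs[i][d])
                            + c2 * rng.random() * (guide[d] - xs[i][d]))
                # assumption: clamp positions to the search box
                xs[i][d] = min(upper, max(lower, xs[i][d] + vs[i][d]))
            fi = f(xs[i])
            if fi < pbest_f[i]:
                pbest[i], pbest_f[i] = xs[i][:], fi
                if fi < gbest_f:
                    gbest, gbest_f = xs[i][:], fi
    return gbest, gbest_f
```

Calling `apso_sl_sketch(sphere)` returns the best position found and its objective value. With a different normalizer the state thresholds would trigger at different times, so the behaviour of this sketch should not be read as the behaviour reported in the experiments.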

3.1. Population Distribution Evaluation Mechanism (PDEM)

The evolutionary state of a population is an important indicator. Just as individuals in natural species possess different characteristics, a population behaves differently in different evolutionary states, so it is worthwhile to use different evolutionary strategies in different states. In the initial stage, the whole population is suited to global search. In contrast, at the end stage the whole population is suited to small-scale local search. Therefore, it is crucial to judge the population state.

In general, researchers use the iteration count to judge the evolutionary state. However, this approach has problems: in the initial iterations the diversity may already be low, so that the population is suited to exploitation, and in the final iterations the diversity may still be high, so that the population is suited to global search. Recently, researchers have used the spatial distribution of a population to judge its evolutionary state. The spatial distribution is the most intuitive indicator of the population state, but calculating it requires a lot of computation. The additional calculations far exceed the complexity of PSO itself, which makes it difficult to work efficiently. Therefore, designing a lightweight method that evaluates the population state while still obtaining satisfactory results is of great significance.

In this section, the population distribution evaluation mechanism (PDEM) is proposed to evaluate the state of the whole population. Its calculation process is shown in Equations (4)–(6). Firstly, the center position of the whole population is calculated according to Equation (4). Secondly, the Euclidean distance between the center position and the best position is calculated according to Equation (5). Finally, the population state is determined from this distance according to Equation (6). Because only the center position and the best position are involved, the evolutionary state of the whole population can be evaluated without significantly increasing the computational complexity.
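The three PDEM steps can be sketched as below. The center and the Euclidean distance follow directly from the description; the normalization that maps the distance to a value comparable with thresholds such as 0.3 and 0.4 is not recoverable from the text, so dividing by the diagonal of the search box [lower, upper]^D is purely an assumption. All function names are hypothetical.

```python
import math


def population_center(positions):
    """Mean position of the population, dimension by dimension."""
    n, dim = len(positions), len(positions[0])
    return [sum(p[d] for p in positions) / n for d in range(dim)]


def center_best_distance(positions, gbest):
    """Euclidean distance between the population center and the best position."""
    center = population_center(positions)
    return math.sqrt(sum((c - g) ** 2 for c, g in zip(center, gbest)))


def normalized_state(positions, gbest, lower, upper):
    """Scale the distance into [0, 1].

    Assumption: the normalizer is the diagonal of the box [lower, upper]^D.
    The paper's own Equation (6) may use a different normalization.
    """
    diagonal = math.sqrt(len(gbest)) * (upper - lower)
    return center_best_distance(positions, gbest) / diagonal
```

The cost is one pass over the N positions, that is O(N·D) per evaluation, whereas a diversity measure built from all pairwise distances would need O(N²·D).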

3.2. Adaptive Learning Strategy (ALS)

Based on the above discussion, APSO-SL can evaluate the evolutionary state of the population. We classify fB into three states: case 1, case 2 and case 3. These represent the states of exploration, exploitation and balance, respectively, and we define the following three cases.

Case (1)—Exploration: In this condition, fB is a relatively large value (e.g., larger than the threshold 0.4). Specifically, the best individual of the population is far from the center of the population, indicating that the population is currently relatively dispersed and suitable for global search. The proposed method defines this state as exploration state. In this case, exploring as many regions as possible and increasing the population diversity are beneficial to the population evolution. We use traditional PSO algorithms for a large-scale search, based on Equations (1) and (2).

Case (2)—Exploitation: In contrast, a small value of fB (e.g., smaller than the threshold 0.3) signifies that the current population is concentrated in one region: most of the individuals are around the best individual, and only a few individuals are distributed in other areas. The population distribution is relatively dense and suitable for small-scale local search, so this case is likely to represent the exploitation state. The velocity update in this case is as follows:

$$v_i^d(t+1) = w\,v_i^d(t) + c_1 r_1 \left(pbest_i^d(t) - x_i^d(t)\right) + c_2 r_2 \left(e^d(t) - x_i^d(t)\right) \tag{7}$$

Equation (7) consists of three parts. The first two are the same as in the basic PSO algorithm and represent the inertia term and the term learned from the particle’s own historical best position. The third is the term learned from the sample e, a new learning sample selected at random from the historical best positions of all particles in the whole population. In this way, the population can learn high-quality diversity information.

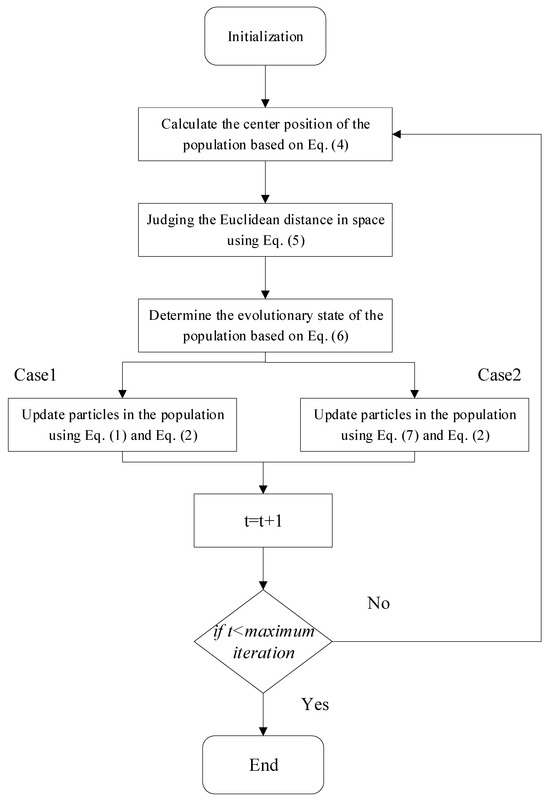

Case (3)—Balance: When fB takes an intermediate value (e.g., between the thresholds 0.3 and 0.4), we keep the learning strategy of the whole population unchanged to achieve a balance between exploration and exploitation. Once the evolutionary state has been estimated, the learning strategy can be controlled adaptively: different learning strategies are used according to the population state to maintain the diversity of the whole population, so that the population achieves a balance between exploration and exploitation. The flowchart of the algorithm is shown in Figure 1. All input and output parameters have been explained in the corresponding sections of the text, and detailed explanations are not repeated here.
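The three cases can be expressed as a small state classifier plus the exploitation-state velocity update. The thresholds 0.3 and 0.4 are the example values quoted in the text; treating a value exactly equal to a threshold as the balance state is an assumption, as are the function names and the default coefficients.

```python
import random

EXPLORATION = "exploration"
EXPLOITATION = "exploitation"
BALANCE = "balance"


def classify_state(f_b, low=0.3, high=0.4):
    """Map the state value fB to one of the three cases.

    Assumption: values exactly equal to a threshold count as balance.
    """
    if f_b > high:
        return EXPLORATION   # Case 1: best individual far from the center
    if f_b < low:
        return EXPLOITATION  # Case 2: population dense around the best
    return BALANCE           # Case 3: keep the current strategy


def exploitation_velocity(x, v, pbest_i, all_pbest,
                          w=0.7, c1=1.5, c2=1.5, rng=random):
    """Velocity update for Case 2.

    The social exemplar e is drawn at random from the historical best
    positions of all particles, replacing gbest in the basic update.
    """
    e = rng.choice(all_pbest)
    return [w * v[d]
            + c1 * rng.random() * (pbest_i[d] - x[d])
            + c2 * rng.random() * (e[d] - x[d])
            for d in range(len(x))]
```

Drawing e from all personal bests rather than always using gbest means different particles are pulled toward different good regions, which is one plausible reading of how the strategy preserves diversity.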

Figure 1. The overall flowchart of the proposed algorithm.

4. Experiments

4.1. Experimental Fundamentals

APSO-SL is tested on the CEC2013 [41,42] test suite and on one practical engineering problem. CEC2013 is a well-known and widely applied test suite, the details of which are listed in the Supplementary Materials. It is an authoritative benchmark in the field of single-objective evolutionary computing and includes 28 benchmark functions. Therefore, it is widely used as a platform for evaluating algorithm performance.

The proposed APSO-SL is compared with six competitive PSO variants. The first is traditional PSO [13], which provides a direct baseline for APSO-SL. The second is HCLPSO [29], in which two populations collaborate to find the optimum solution. The third is TAPSO [30], which uses three different strategies to update the population. The fourth is CLPSO [28], which uses a comprehensive learning strategy. The fifth is DMS-PSO [38], in which multiple populations work together. The sixth is EPSO [27], in which five classic algorithms are integrated and the best one is selected based on scores during each iteration. The parameter settings of all compared algorithms are shown in Table 1.

Table 1. Parameters for six peer methods and APSO-SL.

4.2. Experimental Analysis on CEC2013

To test the effectiveness of APSO-SL, extensive experiments are conducted on the CEC2013 test suite with D = 30 and D = 50. The results are shown in Table 2 and Table 3, where the best results are shown in bold.

Table 2. The result of all methods on CEC2013 (D = 30).

Table 3. The result of all methods on CEC2013 (D = 50).

4.2.1. Accuracy Analysis

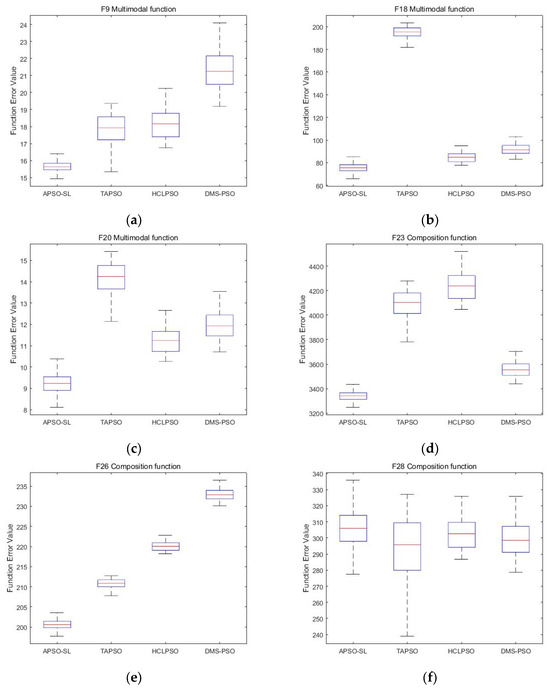

For the five unimodal functions, Table 2 and Table 3 show that APSO-SL obtains excellent results in both the 30-D and 50-D cases, followed by TAPSO and HCLPSO. Although HCLPSO does not achieve the best result on certain functions, its overall performance is excellent. For the 15 multimodal functions, APSO-SL performs best in the 30-D case, obtaining the best solution on 11 of the 15 functions. In the 50-D case, APSO-SL also performs well, obtaining the best solution on 8 of the 15 functions. In addition, TAPSO and HCLPSO achieve good results on the multimodal functions. For the eight composition functions, Table 2 and Table 3 show that APSO-SL achieves remarkable results in both the 30-D and 50-D cases. These results indicate that the population distribution evaluation mechanism (PDEM) and the adaptive learning strategy (ALS) are effective. In addition, we use box plots to evaluate the stability of the compared methods. Due to the page limit, only some typical test functions are selected for comparison. The performance of APSO-SL and of some excellent peer algorithms is shown in Figure 2. The box plots show that APSO-SL exhibits a more stable search performance on all of the selected functions.

Figure 2. Box-plot figures of peer methods on CEC2013. (a) f09; (b) f18; (c) f20; (d) f23; (e) f26; (f) f28.

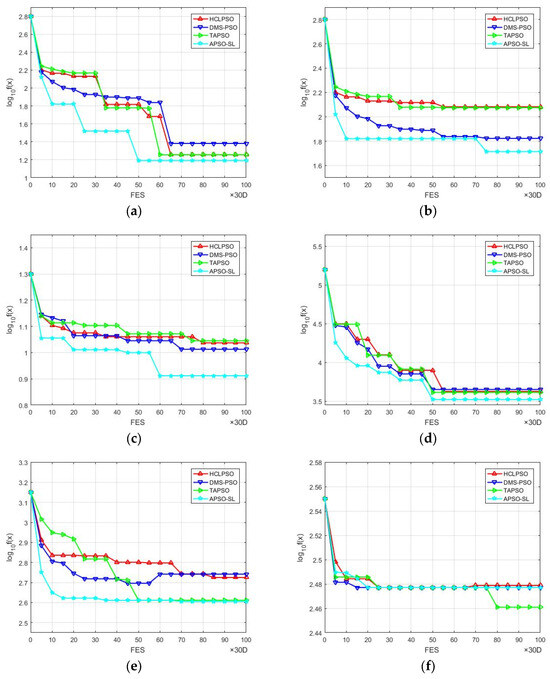

4.2.2. Convergence Analysis

In this part, we compare APSO-SL with three competitive peer methods (TAPSO, HCLPSO and DMS-PSO), and the experimental results are displayed in Figure 3. The abscissa is the number of fitness evaluations (100×D), and the ordinate is the difference between the solution obtained by the method and the true optimum. To be fair, each method is run 30 times independently. Due to space limitations, only three multimodal functions (f9, f18, f20) and three composition functions (f23, f26, f28) are selected for analysis and discussion in this section. The results in Figure 3 show that APSO-SL has excellent convergence performance on most of these functions (f9, f18, f20, f23, f26). On f28, the convergence behavior of APSO-SL is the same as that of DMS-PSO and HCLPSO, but worse than that of TAPSO.

Figure 3. Convergence characteristic of APSO-SL and other methods. (a) f09; (b) f18; (c) f20; (d) f23; (e) f26; (f) f28.

4.2.3. Statistical Analysis

Statistical analysis is an important part of data processing. We use the Friedman test to rank all compared algorithms according to the accuracy results in Table 2 and Table 3, and the test results are displayed in Table 4. Table 4 shows that APSO-SL achieves the best ranking in both dimensions. Hence, APSO-SL has obvious advantages.
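For readers unfamiliar with the Friedman test, the ranking it relies on can be sketched as follows: on each benchmark function the algorithms are ranked by error (rank 1 is best, ties share the mean rank), the ranks are averaged over functions, and the statistic measures how far the average ranks deviate from their expected value (k + 1)/2. The function names and the input layout are hypothetical, and the statistic shown is the basic form without tie correction.

```python
def average_ranks(errors):
    """Average rank of each algorithm over all functions.

    errors[f][a] is the error of algorithm a on function f (lower is better).
    Tied algorithms share the mean of the ranks they occupy.
    """
    k = len(errors[0])
    totals = [0.0] * k
    for row in errors:
        order = sorted(range(k), key=lambda a: row[a])
        pos = 0
        while pos < k:
            end = pos
            while end + 1 < k and row[order[end + 1]] == row[order[pos]]:
                end += 1
            shared = (pos + end) / 2 + 1  # mean of 1-based ranks pos+1 .. end+1
            for a in order[pos:end + 1]:
                totals[a] += shared
            pos = end + 1
    return [t / len(errors) for t in totals]


def friedman_statistic(errors):
    """Friedman chi-square statistic (no tie correction)."""
    n, k = len(errors), len(errors[0])
    ranks = average_ranks(errors)
    return 12 * n / (k * (k + 1)) * sum((r - (k + 1) / 2) ** 2 for r in ranks)
```

A lower average rank indicates a better overall algorithm, which is how a summary such as Table 4 is normally read.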

Table 4. Friedman test of all compared algorithms on CEC2013 test suite.

4.3. Results on CEC2017

To test the proposed APSO-SL more comprehensively, the CEC2017 test suite is also used to evaluate the performance of all compared methods. To save space, only the 30-D case is considered in this part. Table 5 shows that APSO-SL achieves the best results on the unimodal functions. For the seven multimodal functions, APSO-SL obtains the best solutions on four of them (f5, f6, f8 and f9), followed by EPSO and DMS-PSO. On the hybrid and composition functions, APSO-SL obtains favorable results. These experimental results show that APSO-SL performs competitively on the CEC2017 test suite.

Table 5. The result of all methods on CEC2017 (D = 30).

4.4. APSO-SL for Engineering Application Problem

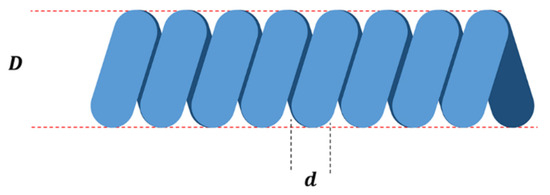

The tension spring design problem is a classic problem in the field of engineering. The purpose is to find the minimum weight [36] as a function of the wire diameter d (x1), the mean coil diameter D (x2) and the number of active coils N (x3).

Its schematic diagram is shown in Figure 4, and its mathematical formulation is given in Equation (8). The experimental results of APSO-SL and the six peer algorithms are shown in Table 6; the results reveal that APSO-SL is competitive.

where .
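For reference, the tension/compression spring design problem is usually stated in the literature as minimizing (x3 + 2)·x2·x1² subject to four inequality constraints, with 0.05 ≤ x1 ≤ 2, 0.25 ≤ x2 ≤ 1.3 and 2 ≤ x3 ≤ 15. The sketch below encodes that commonly used formulation; whether it matches Equation (8) term for term cannot be confirmed from the text. The static penalty in `penalized_weight` is an assumed constraint-handling choice, and all function names are hypothetical.

```python
def spring_weight(x1, x2, x3):
    """Objective: weight of the spring (to be minimized)."""
    return (x3 + 2.0) * x2 * x1 ** 2


def spring_constraints(x1, x2, x3):
    """Constraint values g1..g4; a design is feasible when every g <= 0."""
    g1 = 1.0 - (x2 ** 3 * x3) / (71785.0 * x1 ** 4)
    g2 = ((4.0 * x2 ** 2 - x1 * x2) / (12566.0 * (x2 * x1 ** 3 - x1 ** 4))
          + 1.0 / (5108.0 * x1 ** 2) - 1.0)
    g3 = 1.0 - 140.45 * x1 / (x2 ** 2 * x3)
    g4 = (x1 + x2) / 1.5 - 1.0
    return [g1, g2, g3, g4]


def penalized_weight(x, penalty=1e6):
    """Objective plus a static penalty on constraint violation.

    Assumption: the paper does not state its constraint handling, so a
    simple static penalty is used here for illustration only.
    """
    violation = sum(max(0.0, g) for g in spring_constraints(*x))
    return spring_weight(*x) + penalty * violation
```

A function such as `penalized_weight` can be passed to any unconstrained PSO variant as the objective, with the variable bounds above used as the search box.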

Figure 4. Tension spring optimization problem.

Table 6. Experimental results on engineering issues.

5. Conclusions

We propose an adaptive PSO with state-based learning strategy (APSO-SL). In PDEM, the population center position and best position are used for calculating the whole population state. In this way, the whole population state can be evaluated more intuitively and accurately without excessive computation. In the second strategy, ALS, different learning strategies are adopted based on the population state to ensure the whole population diversity. Specifically, if the population diversity is high, we conduct a global search. If the population diversity is low, we carry out a local search.

We can draw some conclusions from the experimental analysis. First, PDEM can be used to evaluate the population state more intuitively and accurately. Second, ALS can be used to achieve a balance between global and local search. Therefore, the PDEM and ALS strategies proposed in this article effectively improve the diversity and purposefulness of the population, have good universality, and can be widely applied.

However, PDEM and ALS still have limitations: they lack theoretical proof and sufficient experimentation. In future work, in terms of methods, we should design more efficient mechanisms. In terms of applications, we should apply our method to more engineering problems. In addition, we should explore the performance of the proposed method in solving large-scale optimization, expensive optimization, robust optimization, multitasking optimization and dynamic optimization problems.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/pr12020400/s1.

Author Contributions

Conceptualization, X.Y.; Software, X.Y.; Investigation, X.Y.; Resources, M.G.; Writing—original draft, M.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article and supplementary materials.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Yuan, Q.; Sun, R.; Du, X. Path Planning of Mobile Robots Based on an Improved Particle Swarm Optimization Algorithm. Processes 2022, 11, 26. [Google Scholar] [CrossRef]

- Yang, X.; Li, H. Multi-sample learning particle swarm optimization with adaptive crossover operation. Math. Comput. Simul. 2023, 208, 246–282. [Google Scholar] [CrossRef]

- Ali, Y.A.; Awwad, E.M.; Al-Razgan, M.; Maarouf, A. Hyperparameter Search for Machine Learning Algorithms for Optimizing the Computational Complexity. Processes 2023, 11, 349. [Google Scholar] [CrossRef]

- Azrag, M.A.K.; Zain, J.M.; Kadir, T.A.A.; Yusoff, M.; Jaber, A.S.; Abdlrhman, H.S.M.; Ahmed, Y.H.Z.; Husain, M.S.B. Estimation of Small-Scale Kinetic Parameters of Escherichia coli (E. coli) Model by Enhanced Segment Particle Swarm Optimization Algorithm ESe-PSO. Processes 2023, 11, 126. [Google Scholar] [CrossRef]

- Chen, H.; Cheng, R.; Pedrycz, W.; Jin, Y. Solving Many-Objective Optimization Problems via Multistage Evolutionary Search. IEEE Trans. Syst. Man Cybern. Syst. 2019, 51, 3552–3564. [Google Scholar] [CrossRef]

- Castillo, O.; Melin, P.; Ontiveros, E.; Peraza, C.; Ochoa, P.; Valdez, F.; Soria, J. A high-speed interval type 2 fuzzy system approach for dynamic parameter adaptation in metaheuristics. Eng. Appl. Artif. Intell. 2021, 85, 666–680. [Google Scholar] [CrossRef]

- Li, X.; Mao, K.; Lin, F.; Zhang, X. Particle swarm optimization with state-based adaptive velocity limit strategy. Neurocomputing 2021, 447, 64–79. [Google Scholar] [CrossRef]

- Yang, X.; Li, H.; Yu, X. Adaptive heterogeneous comprehensive learning particle swarm optimization with history information and dimensional mutation. Multimed. Tools Appl. 2022, 82, 9785–9817. [Google Scholar] [CrossRef]

- Xia, X.; Gui, L.; Zhan, Z.-H. A multi-swarm particle swarm optimization algorithm based on dynamical topology and purposeful detecting. Appl. Soft Comput. 2018, 67, 126–140. [Google Scholar] [CrossRef]

- Sun, G.; Yang, B.; Yang, Z.; Xu, G. An adaptive differential evolution with combined strategy for global numerical optimization. Soft Comput. 2019, 24, 6277–6296. [Google Scholar] [CrossRef]

- Jiang, L.; Wang, X. Research on the Participation of Household Battery Energy Storage in the Electricity Peak Regulation Ancillary Service Market. Processes 2023, 11, 794. [Google Scholar] [CrossRef]

- Li, L.; Li, Y.; Lin, Q.; Ming, Z.; Coello, C.A.C. A convergence and diversity guided leader selection strategy for many-objective particle swarm optimization. Eng. Appl. Artif. Intell. 2022, 115, 105249. [Google Scholar] [CrossRef]

- Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995. [Google Scholar]

- Hancer, E.; Xue, B.; Zhang, M.; Karaboga, D.; Akay, B. Pareto front feature selection based on artificial bee colony optimization. Inf. Sci. 2018, 422, 462–479. [Google Scholar] [CrossRef]

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471. [Google Scholar] [CrossRef]

- Rosso, M.M.; Aloisio, A.; Cucuzza, R.; Asso, R.; Marano, G.C. Structural Optimization with the Multistrategy PSO-ES Unfeasible Local Search Operator. In Proceedings of the International Conference on Data Science and Applications: ICDSA 2022, Kolkata, India, 26–27 March 2022; pp. 215–229. [Google Scholar]

- Marano, G.C.; Cucuzza, R. Structural Optimization Through Cutting Stock Problem. In Italian Workshop on Shell and Spatial Structures; Springer: Cham, Switzerland, 2023; pp. 210–220. [Google Scholar]

- Xia, X.; Xing, Y.; Wei, B.; Zhang, Y.; Li, X.; Deng, X.; Gui, L. A fitness-based multi-role particle swarm optimization. Swarm Evol. Comput. 2019, 44, 349–364. [Google Scholar] [CrossRef]

- Chen, K.; Zhou, F.; Yin, L.; Wang, S.; Wang, Y.; Wan, F. A hybrid particle swarm optimizer with sine cosine acceleration coefficients. Inf. Sci. 2018, 422, 218–241. [Google Scholar] [CrossRef]

- Lin, J.C.-W.; Yang, L.; Fournier-Viger, P.; Hong, T.-P.; Voznak, M. A binary PSO approach to mine high-utility itemsets. Soft Comput. 2016, 21, 5103–5121. [Google Scholar] [CrossRef]

- Tian, D.; Zhao, X.; Shi, Z. Chaotic particle swarm optimization with sigmoid-based acceleration coefficients for numerical function optimization. Swarm Evol. Comput. 2019, 51, 100573. [Google Scholar] [CrossRef]

- Chen, K.; Zhou, F.; Wang, Y.; Yin, L. An ameliorated particle swarm optimizer for solving numerical optimization problems. Appl. Soft Comput. 2018, 73, 482–496. [Google Scholar] [CrossRef]

- Liang, H.; Kang, F. Adaptive mutation particle swarm algorithm with dynamic nonlinear changed inertia weight. Optik 2016, 127, 8036–8042. [Google Scholar] [CrossRef]

- Rosso, M.M.; Cucuzza, R.; Aloisio, A.; Marano, G.C. Enhanced Multi-Strategy Particle Swarm Optimization for Constrained Problems with an Evolutionary-Strategies-Based Unfeasible Local Search Operator. Appl. Sci. 2022, 12, 2285. [Google Scholar] [CrossRef]

- Rosso, M.M.; Cucuzza, R.; Di Trapani, F.; Marano, G.C. Nonpenalty Machine Learning Constraint Handling Using PSO-SVM for Structural Optimization. Adv. Civ. Eng. 2021, 2021, 6617750.

- Jiyue, E.; Liu, J.; Wan, Z. A novel adaptive algorithm of particle swarm optimization based on the human social learning intelligence. Swarm Evol. Comput. 2023, 80, 101336.

- Lynn, N.; Suganthan, P.N. Ensemble particle swarm optimizer. Appl. Soft Comput. 2017, 55, 533–548.

- Liang, J.J.; Qin, A.K.; Suganthan, P.N.; Baskar, S. Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans. Evol. Comput. 2006, 10, 281–295.

- Lynn, N.; Suganthan, P.N. Heterogeneous comprehensive learning particle swarm optimization with enhanced exploration and exploitation. Swarm Evol. Comput. 2015, 24, 11–24.

- Xia, X.; Gui, L.; Yu, F.; Wu, H.; Wei, B.; Zhang, Y.-L.; Zhan, Z.-H. Triple Archives Particle Swarm Optimization. IEEE Trans. Cybern. 2020, 50, 4862–4875.

- Li, W.; Jing, J.; Chen, Y.; Chen, Y. A cooperative particle swarm optimization with difference learning. Inf. Sci. 2023, 643, 119238.

- Xia, X.; Gui, L.; He, G.; Wei, B.; Zhang, Y.; Yu, F.; Wu, H.; Zhan, Z.-H. An expanded particle swarm optimization based on multi-exemplar and forgetting ability. Inf. Sci. 2020, 508, 105–120.

- Shankar, R.; Ganesh, N.; Čep, R.; Narayanan, R.C.; Pal, S.; Kalita, K. Hybridized Particle Swarm—Gravitational Search Algorithm for Process Optimization. Processes 2022, 10, 616.

- Ghorbanpour, S.; Jin, Y.; Han, S. Differential Evolution with Adaptive Grid-Based Mutation Strategy for Multi-Objective Optimization. Processes 2022, 10, 2316.

- Yang, X.; Li, H.; Huang, Y. An adaptive dynamic multi-swarm particle swarm optimization with stagnation detection and spatial exclusion for solving continuous optimization problems. Eng. Appl. Artif. Intell. 2023, 123, 106215.

- Yang, X.; Li, H. Evolutionary-state-driven multi-swarm cooperation particle swarm optimization for complex optimization problem. Inf. Sci. 2023, 646, 119302.

- Yang, X.; Li, H.; Yu, X. A dynamic multi-swarm cooperation particle swarm optimization with dimension mutation for complex optimization problem. Int. J. Mach. Learn. Cybern. 2022, 13, 2581–2608.

- Liang, J.; Suganthan, P. Dynamic Multi-Swarm Particle Swarm Optimizer with Local Search. In Proceedings of the 2005 IEEE Congress on Evolutionary Computation, Scotland, UK, 2–5 September 2005.

- Peram, T.; Veeramachaneni, K.; Mohan, C.K. Fitness-Distance-Ratio Based Particle Swarm Optimization. In Proceedings of the 2003 IEEE Swarm Intelligence Symposium, Indianapolis, IN, USA, 24–26 April 2003.

- Lu, J.; Zhang, J.; Sheng, J. Enhanced multi-swarm cooperative particle swarm optimizer. Swarm Evol. Comput. 2021, 69, 100989.

- Jing, L.; Bo-Yang, Q.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2013 Special Session on Real-Parameter Optimization. Appl. Math. Sci. 2013, 7, 281–295.

- Awad, N.H.; Ali, M.Z.; Suganthan, P.N.; Liang, J. Problem Definitions and Evaluation Criteria for the CEC 2017 Special Session and Competition on Single Objective Real-Parameter Numerical Optimization; Technical Report; Nanyang Technological University: Singapore, 2016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).