Detection of Cotton Seed Damage Based on Improved YOLOv5

Abstract

1. Introduction

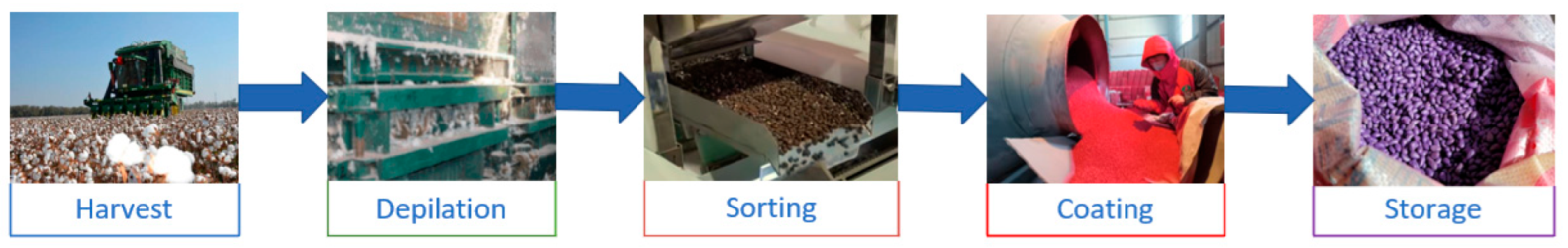

2. Materials and Methods

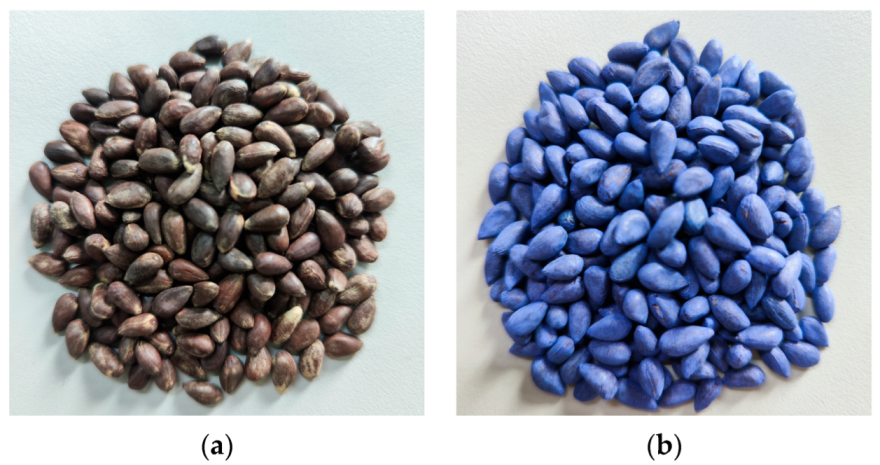

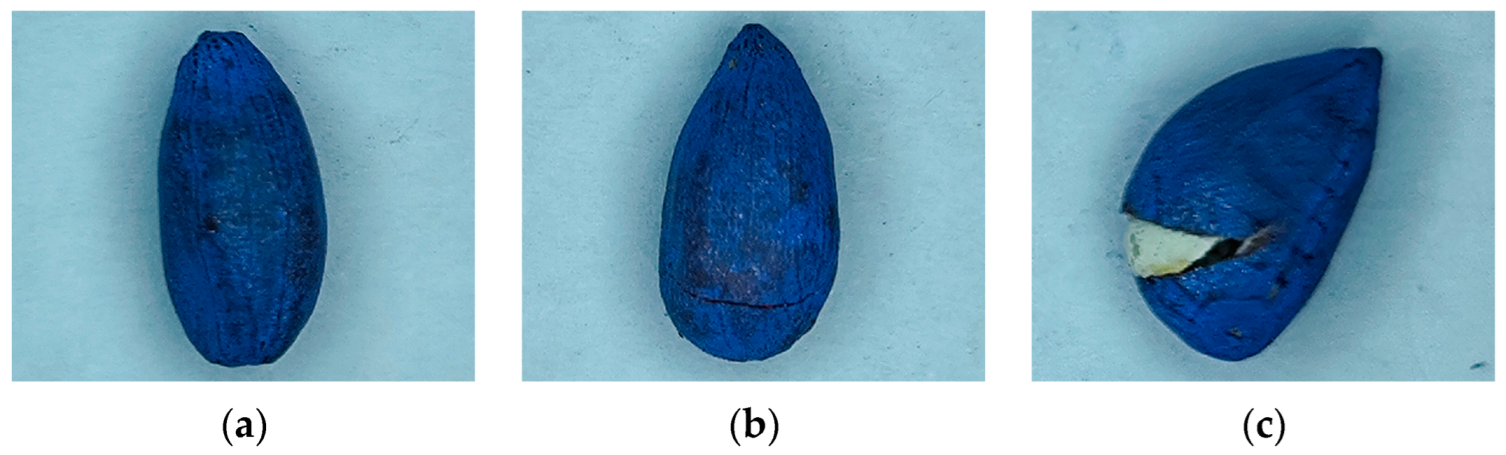

2.1. Material Selection

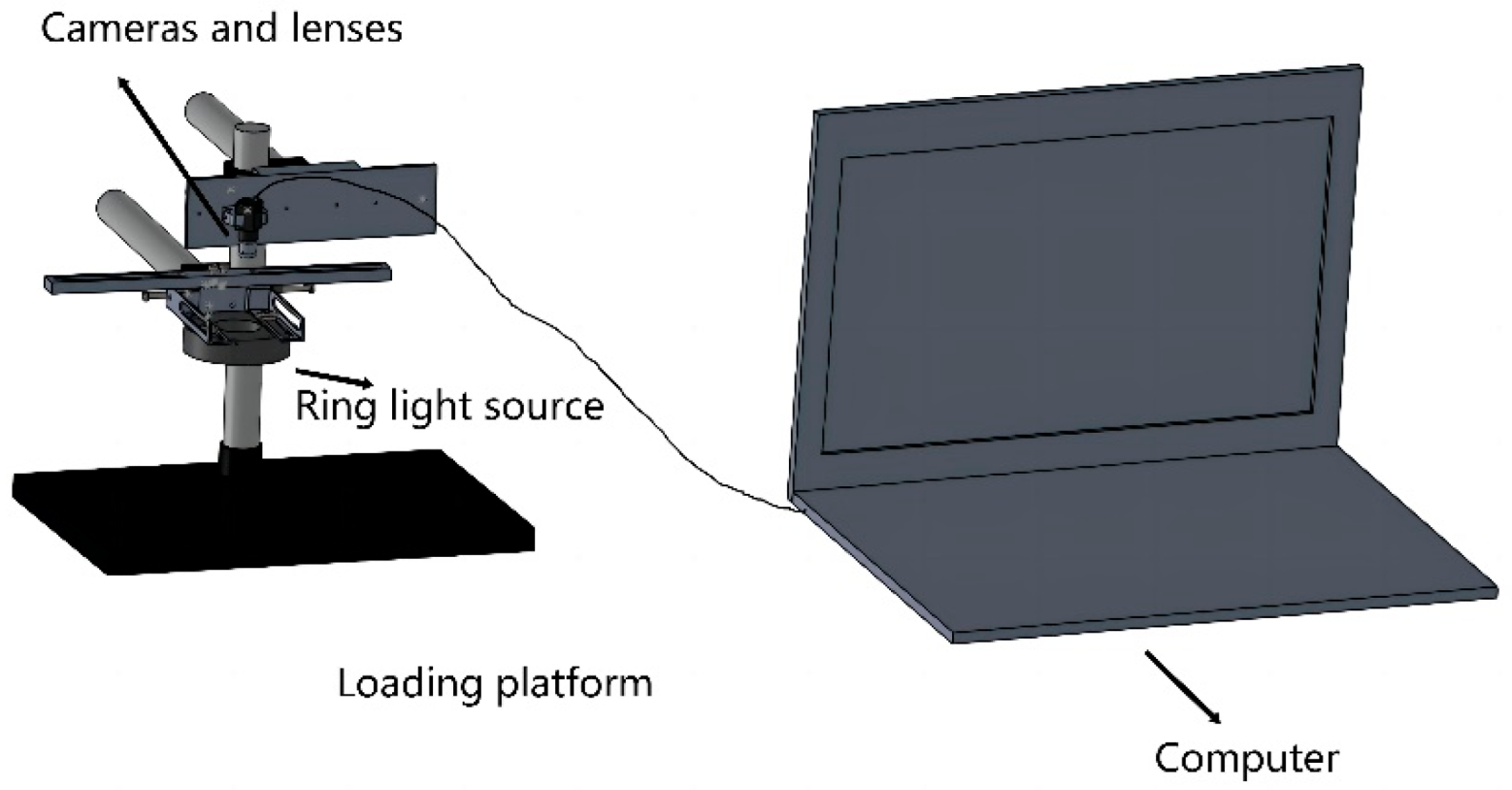

2.2. Data Acquisition and Processing

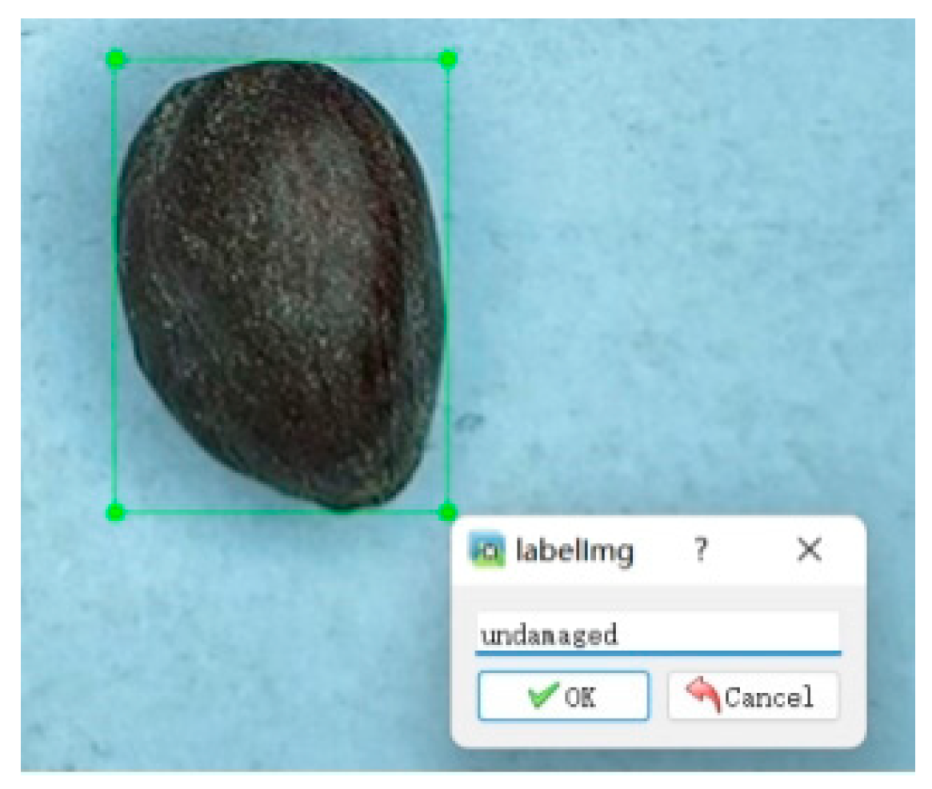

2.2.1. Data Collection and Annotation

2.2.2. Data Enhancement

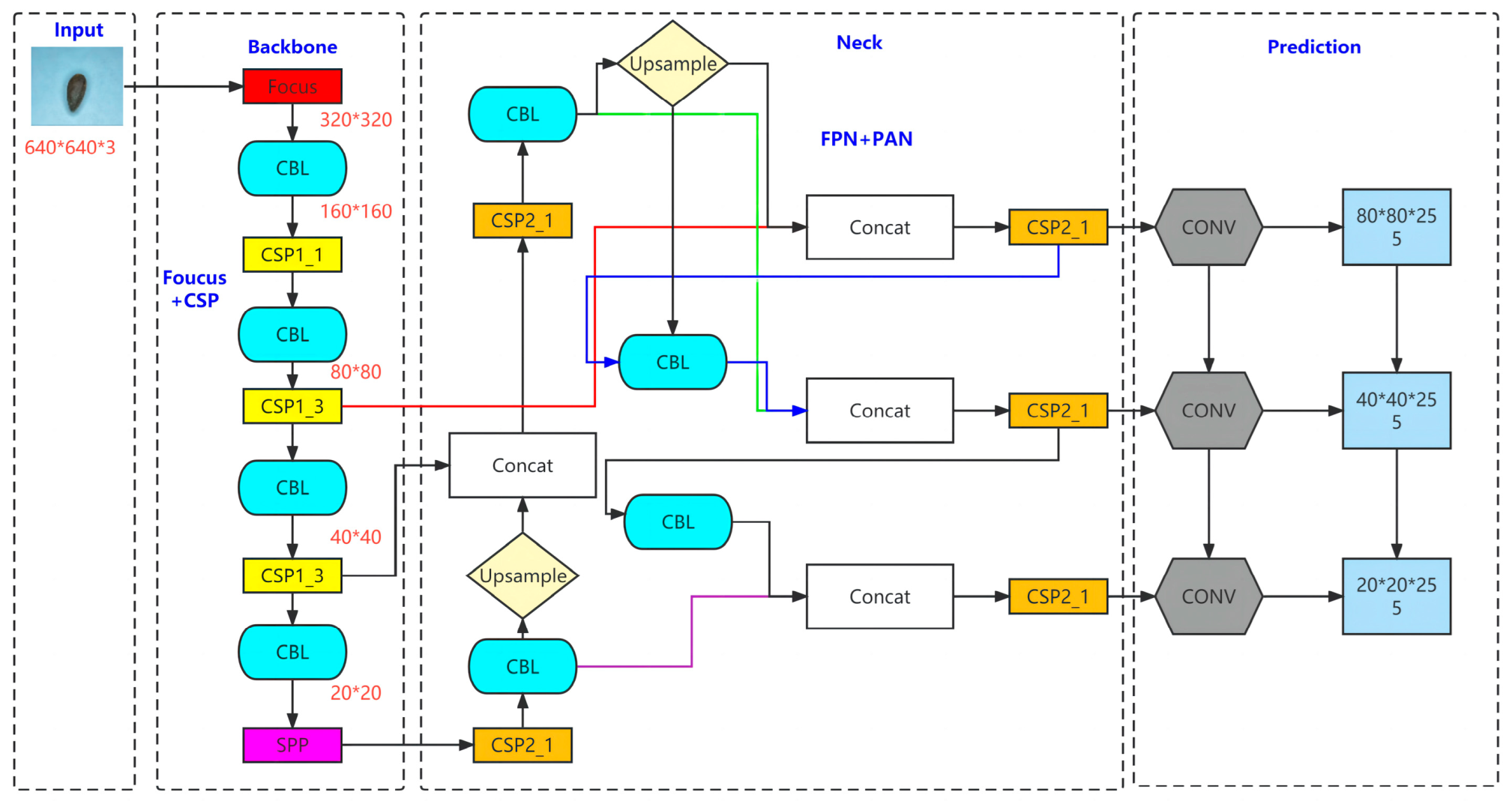

2.3. Overview of YOLOv5 Algorithm

3. Improvements in the YOLOv5 Algorithm

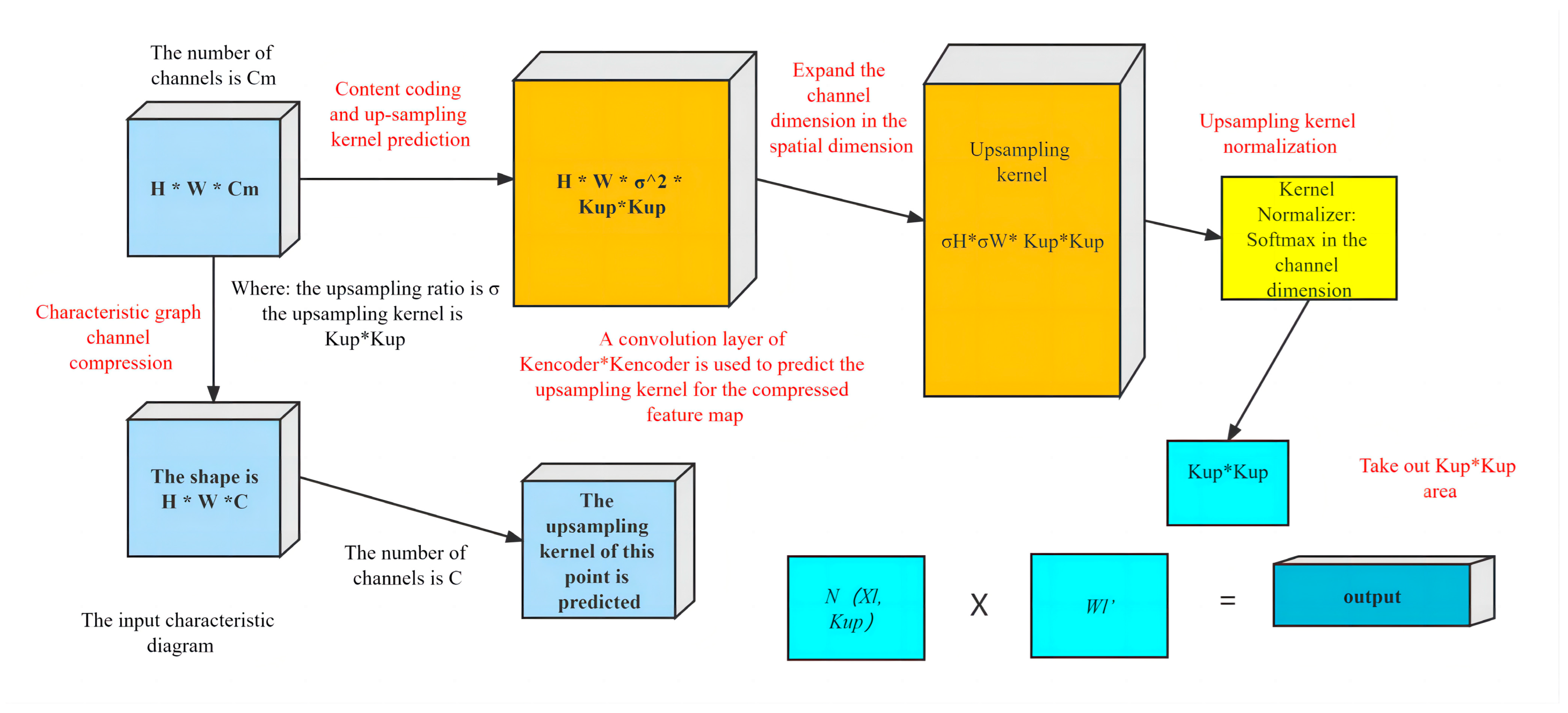

3.1. Addition of Lightweight Upsampling Operator CARAFE
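CARAFE (Content-Aware ReAssembly of FEatures) upsamples a feature map by predicting a normalised k_up x k_up kernel for each output location from the feature content. That kernel then forms a weighted sum over the neighbourhood of the corresponding source location. The NumPy code below is a minimal sketch of the reassembly step only. It assumes the kernel logits are already available. In CARAFE they come from a kernel prediction module (channel compressor, content encoder, softmax normaliser), which is omitted here. The defaults scale=2 and k_up=5, the zero padding at the borders, and the names `carafe_reassemble` and `softmax` are illustrative assumptions, not the authors' configuration or code.

```python
import numpy as np


def softmax(x, axis=0):
    # Numerically stable softmax along one axis
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def carafe_reassemble(features, kernel_logits, scale=2, k_up=5):
    """Content-aware reassembly step of CARAFE (illustrative sketch).

    features:      (C, H, W) input feature map
    kernel_logits: (k_up * k_up, scale * H, scale * W), one kernel per output
                   location; assumed to be given (normally predicted from content)
    Returns a (C, scale * H, scale * W) upsampled feature map.
    """
    C, H, W = features.shape
    r = k_up // 2
    kernels = softmax(kernel_logits, axis=0)              # each kernel sums to 1
    padded = np.pad(features, ((0, 0), (r, r), (r, r)))   # zero-pad spatial borders
    out = np.zeros((C, H * scale, W * scale))
    for oi in range(H * scale):
        for oj in range(W * scale):
            i, j = oi // scale, oj // scale                # source location in the input
            patch = padded[:, i:i + k_up, j:j + k_up]      # k_up x k_up window centred on (i, j)
            w = kernels[:, oi, oj].reshape(k_up, k_up)
            out[:, oi, oj] = (patch * w).sum(axis=(1, 2))  # weighted sum per channel
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((3, 4, 4))
    # A kernel concentrated on its centre tap reduces CARAFE to nearest-neighbour upsampling
    logits = np.full((25, 8, 8), -1e9)
    logits[12] = 0.0
    y = carafe_reassemble(x, logits)
    print(np.allclose(y, x.repeat(2, axis=1).repeat(2, axis=2)))  # True
```

The usage lines illustrate the design idea. Fixed, content-independent kernels reproduce ordinary interpolation. CARAFE instead lets each output location choose its own weights from the features, at the cost of one small kernel per output pixel.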

3.2. Improvement of the Loss Function
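The ablation table lists EIOU as the loss-function change. EIOU (Efficient IoU) loss extends 1 - IoU with three penalties: the squared distance between box centres, normalised by the squared diagonal of the smallest enclosing box, plus separate width and height penalties normalised by that box's squared width and height. The sketch below assumes this commonly used formulation. It is not the authors' implementation. The corner-coordinate (x1, y1, x2, y2) box format and the function name `eiou_loss` are hypothetical choices for illustration.

```python
def eiou_loss(pred, target, eps=1e-9):
    """EIOU loss for two axis-aligned boxes given as (x1, y1, x2, y2).

    L = 1 - IoU + rho^2(centres) / c^2 + (w - w_gt)^2 / Cw^2 + (h - h_gt)^2 / Ch^2,
    where Cw, Ch are the width and height of the smallest enclosing box and
    c^2 = Cw^2 + Ch^2 is its squared diagonal.
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection-over-union
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both boxes
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)

    # Squared distance between the two box centres
    rho2 = ((px1 + px2 - tx1 - tx2) / 2) ** 2 + ((py1 + py2 - ty1 - ty2) / 2) ** 2

    return (1.0 - iou
            + rho2 / (cw ** 2 + ch ** 2 + eps)   # centre-distance penalty
            + (pw - tw) ** 2 / (cw ** 2 + eps)   # width penalty
            + (ph - th) ** 2 / (ch ** 2 + eps))  # height penalty


if __name__ == "__main__":
    print(eiou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes: approx. 0
    print(eiou_loss((0, 0, 2, 2), (0, 0, 4, 2)))  # approx. 0.8 (0.5 + 0.05 + 0.25)
```

Penalising width and height differences directly, rather than through an aspect-ratio term, gives the regression a separate gradient signal for each side length.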

4. Experimental Results and Analysis

4.1. Experimental Environment and Experimental Methods

4.2. Model Evaluation Indicators

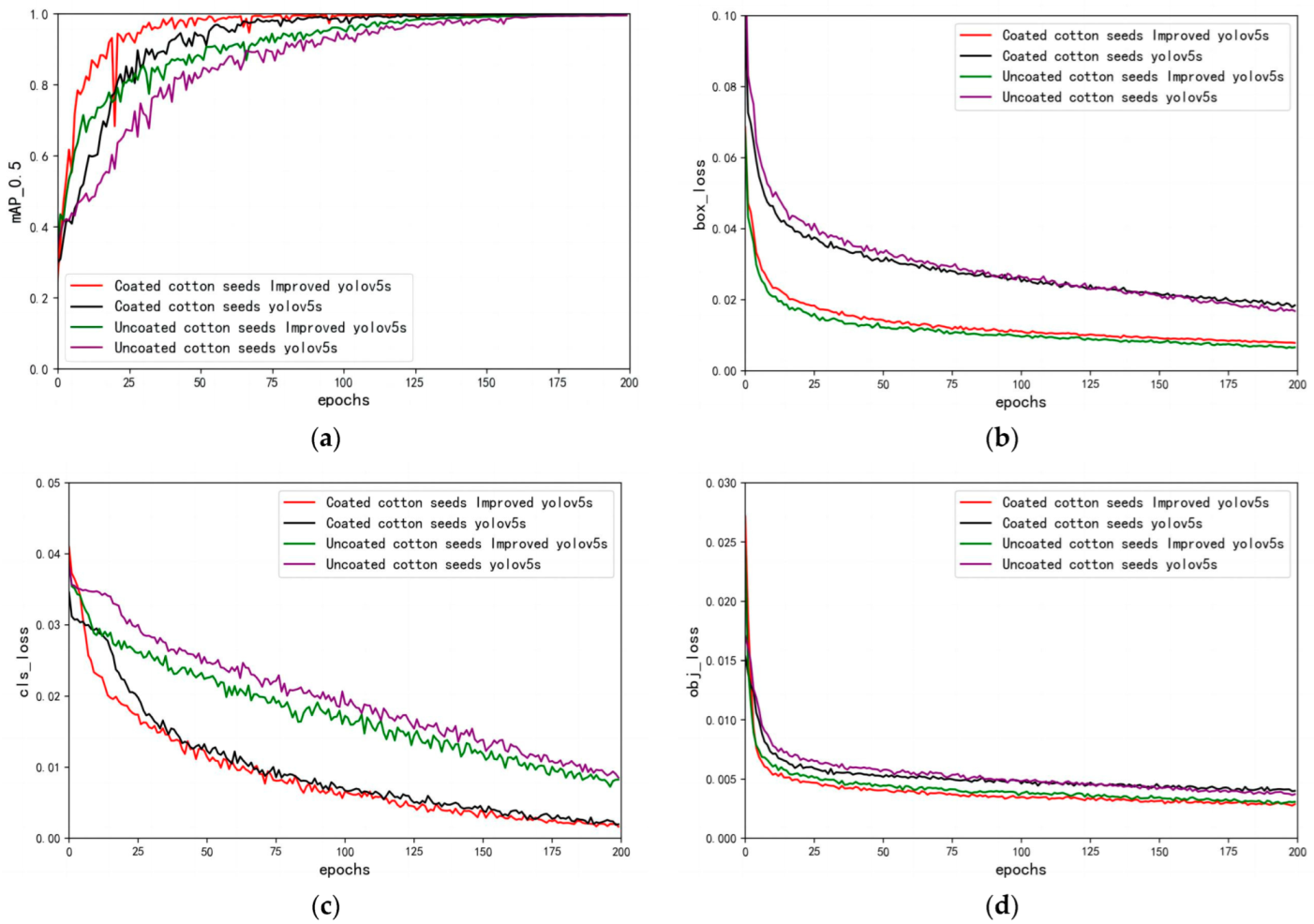
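The results tables report recall, per-class AP and mAP_0.5. The sketch below assumes the standard object-detection definitions. Precision is TP / (TP + FP), recall is TP / (TP + FN), and AP is the area under the precision-recall curve, computed here with all-point interpolation as in Pascal VOC. mAP_0.5 is then the mean of the per-class APs over the three damage classes. A detection counts as a true positive when its IoU with an unmatched ground-truth box is at least 0.5. The function names are hypothetical. YOLOv5's bundled evaluation code interpolates the curve somewhat differently, so values from this sketch may differ marginally from the paper's.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for a single class.

    scores: confidence of each detection
    is_tp:  whether each detection matched a ground-truth box (IoU >= 0.5 for mAP_0.5)
    num_gt: number of ground-truth boxes of this class
    """
    order = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for k in order:  # walk detections from most to least confident
        if is_tp[k]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))

    # Add sentinels, then make precision non-increasing from right to left
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 1, 0, -1):
        mpre[k - 1] = max(mpre[k - 1], mpre[k])

    # Sum rectangle areas wherever recall increases
    return sum((mrec[k] - mrec[k - 1]) * mpre[k]
               for k in range(1, len(mrec)) if mrec[k] != mrec[k - 1])


if __name__ == "__main__":
    print(precision_recall(8, 2, 2))
    print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))  # about 0.833
```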

4.3. Comparison of YOLOv5 Algorithm before and after Improvement

4.3.1. Ablation Experiment Test

4.3.2. Comparison of Results before and after Improvement

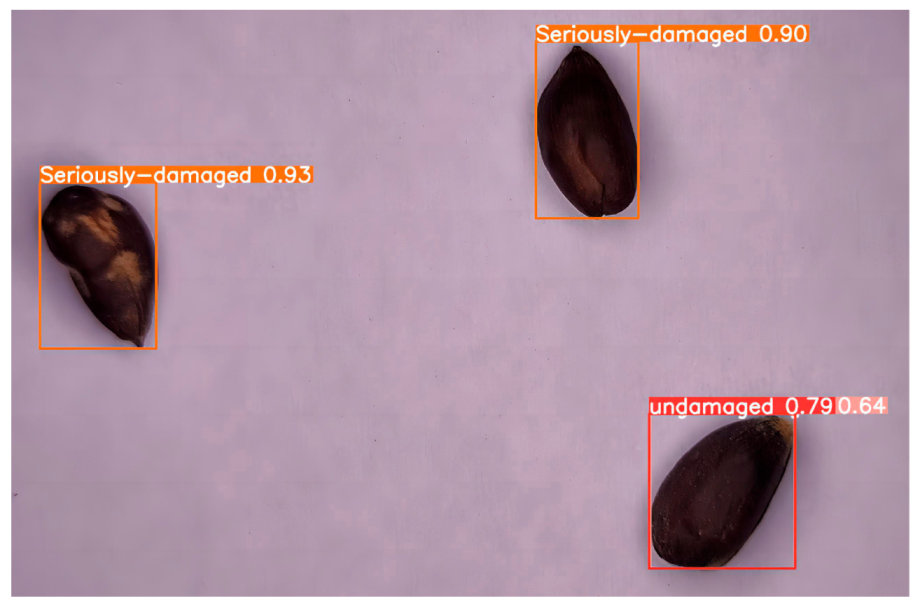

4.4. Visual Analysis

5. Discussion and Conclusions

5.1. Discussion

5.2. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | CARAFE | EIOU | mAP_0.5/% | Average Test Time/ms | Model Size/MB |
|---|---|---|---|---|---|
| YOLOv5s | × | × | 98.3 | 42 | 7.62 |
| YOLOv5s + EIOU | × | √ | 98.9 | 41 | 5.23 |
| YOLOv5s + CARAFE | √ | × | 99.2 | 38 | 3.78 |
| Proposed algorithm | √ | √ | 99.5 | 36 | 3.36 |
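As a worked check on the ablation table, the changes of the proposed algorithm relative to the YOLOv5s baseline can be computed directly from the reported values. The script only restates the table's arithmetic and adds no new measurements. The helper name `relative_reduction` is illustrative.

```python
# Values copied from the ablation table (YOLOv5s baseline vs. proposed algorithm)
baseline = {"map50": 98.3, "test_time_ms": 42, "size_mb": 7.62}
proposed = {"map50": 99.5, "test_time_ms": 36, "size_mb": 3.36}


def relative_reduction(before, after):
    """Reduction from `before` to `after`, as a percentage of `before`."""
    return (before - after) / before * 100


map_gain = proposed["map50"] - baseline["map50"]
time_cut = relative_reduction(baseline["test_time_ms"], proposed["test_time_ms"])
size_cut = relative_reduction(baseline["size_mb"], proposed["size_mb"])

print(f"mAP_0.5 gain: {map_gain:.1f} percentage points")  # 1.2
print(f"Average test time reduced by {time_cut:.1f}%")     # 14.3
print(f"Model size reduced by {size_cut:.1f}%")            # 55.9
```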

| Algorithm | Recall/% | AP/% (Undamaged) | AP/% (Slightly Damaged) | AP/% (Seriously Damaged) | mAP_0.5/% |
|---|---|---|---|---|---|
| YOLOv5s | 98.4 | 98.6 | 97.7 | 98.6 | 98.3 |
| Improved YOLOv5s | 99.3 | 99.8 | 99.6 | 99.1 | 99.5 |

| Algorithm | Recall/% | AP/% (Undamaged) | AP/% (Slightly Damaged) | AP/% (Seriously Damaged) | mAP_0.5/% |
|---|---|---|---|---|---|
| YOLOv5s | 98.2 | 98.4 | 98.8 | 98.9 | 98.7 |
| Improved YOLOv5s | 98.9 | 99.4 | 98.8 | 99.4 | 99.2 |
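In both comparison tables, the mAP_0.5 column matches the unweighted mean of the three per-class AP values. For example, (98.6 + 97.7 + 98.6) / 3 = 98.3 for the baseline in the first table. The short script below checks this for every row. It only re-derives numbers already present in the tables. The labels "first table" and "second table" are placeholders.

```python
# (AP undamaged, AP slightly damaged, AP seriously damaged) in %, and the reported
# mAP_0.5 in %, copied from the two comparison tables
TABLES = {
    "first table": {
        "YOLOv5s": ((98.6, 97.7, 98.6), 98.3),
        "Improved YOLOv5s": ((99.8, 99.6, 99.1), 99.5),
    },
    "second table": {
        "YOLOv5s": ((98.4, 98.8, 98.9), 98.7),
        "Improved YOLOv5s": ((99.4, 98.8, 99.4), 99.2),
    },
}


def mean_ap(ap_values):
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(ap_values) / len(ap_values)


for table, rows in TABLES.items():
    for model, (aps, reported) in rows.items():
        print(f"{table}, {model}: mean of class APs = {mean_ap(aps):.1f}, "
              f"reported mAP_0.5 = {reported}")
```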

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Z.; Wang, L.; Liu, Z.; Wang, X.; Hu, C.; Xing, J. Detection of Cotton Seed Damage Based on Improved YOLOv5. Processes 2023, 11, 2682. https://doi.org/10.3390/pr11092682
