Prediction of Leakage Pressure in Fractured Carbonate Reservoirs Based on PSO-LSTM Neural Network

Abstract

1. Introduction

2. Methods and Principles

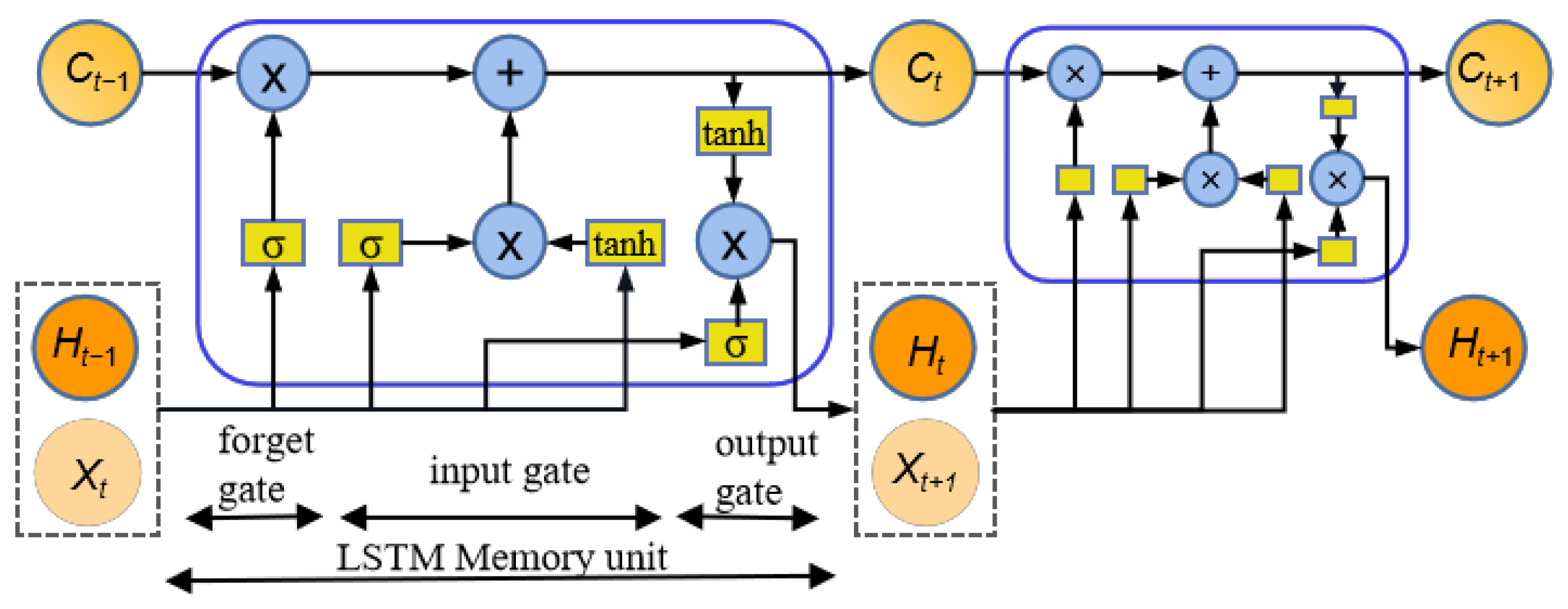

2.1. Principles of LSTM Recurrent Neural Networks
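
A standard LSTM cell combines a forget gate, an input gate, a candidate cell state and an output gate. The plain-Python sketch below shows one forward step using the textbook gate equations. The helper names (`lstm_step`, `init_params`, `run_lstm`), the small uniform weight initialisation and the zero initial states are illustrative assumptions. This is not the authors' implementation. The sizes of 7 inputs and 20 hidden units match the input size and the optimized hidden-layer value listed in the hyperparameter table.

```python
import math
import random

# Illustrative sketch of a standard LSTM cell; hypothetical helpers, not the authors' code.

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    # Dense matrix-vector product with plain lists.
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def lstm_step(x_t, h_prev, c_prev, params):
    """One forward step. params[g] = (W, b) for gate g in 'f', 'i', 'c', 'o';
    each W has n_hidden rows acting on the concatenation [h_prev, x_t]."""
    z = list(h_prev) + list(x_t)  # [h_{t-1}, x_t]

    def gate(name, activation):
        W, b = params[name]
        return [activation(s + bias) for s, bias in zip(matvec(W, z), b)]

    f = gate("f", sigmoid)        # forget gate
    i = gate("i", sigmoid)        # input gate
    c_hat = gate("c", math.tanh)  # candidate cell state
    o = gate("o", sigmoid)        # output gate
    c = [ft * cp + it * ch for ft, cp, it, ch in zip(f, c_prev, i, c_hat)]  # c_t
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]                        # h_t
    return h, c

def init_params(n_in, n_hidden, seed=0):
    # Assumed initialisation: small uniform weights, zero biases.
    rng = random.Random(seed)
    return {
        g: ([[rng.uniform(-0.1, 0.1) for _ in range(n_hidden + n_in)]
             for _ in range(n_hidden)], [0.0] * n_hidden)
        for g in "fico"
    }

def run_lstm(sequence, n_hidden, params):
    # Zero initial states; returns the hidden state after the last time step.
    h, c = [0.0] * n_hidden, [0.0] * n_hidden
    for x_t in sequence:
        h, c = lstm_step(x_t, h, c, params)
    return h

# Example: 5 time steps of 7 input features, 20 hidden units.
params = init_params(n_in=7, n_hidden=20)
h_last = run_lstm([[0.5] * 7 for _ in range(5)], n_hidden=20, params=params)
print(len(h_last))  # 20
```

Because h_t = o_t ⊙ tanh(c_t) with o_t in (0, 1), every component of the hidden state lies strictly between -1 and 1. A fully connected layer with output size 1 can then map this state to a single predicted value.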

2.2. PSO Algorithm
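
In particle swarm optimization, each particle keeps a position, a velocity and its personal best. At every iteration it is pulled toward both its own best position and the swarm's global best. The sketch below uses the widely used inertia-weight form of the velocity update. The illustrative coefficients w = 0.7 and c1 = c2 = 1.5, the clamping of positions to the bounds and the function name `pso_minimize` are all assumptions. The default swarm size of 20 matches the Pop value in the hyperparameter table.

```python
import random

def pso_minimize(objective, bounds, n_particles=20, n_iters=50,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimise objective over a box [(lo, hi), ...] with inertia-weight PSO (sketch)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]              # personal best positions
    pbest_val = [objective(p) for p in pos]  # personal best fitness values
    k_best = min(range(n_particles), key=lambda k: pbest_val[k])
    gbest, gbest_val = pbest[k_best][:], pbest_val[k_best]  # global best
    for _ in range(n_iters):
        for k in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # v <- w*v + c1*r1*(pbest - x) + c2*r2*(gbest - x)
                vel[k][d] = (w * vel[k][d]
                             + c1 * r1 * (pbest[k][d] - pos[k][d])
                             + c2 * r2 * (gbest[d] - pos[k][d]))
                # x <- x + v, clamped to the search bounds (assumed handling)
                pos[k][d] = min(max(pos[k][d] + vel[k][d], lo), hi)
            val = objective(pos[k])
            if val < pbest_val[k]:
                pbest[k], pbest_val[k] = pos[k][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[k][:], val
    return gbest, gbest_val

# Example: minimise the 2-D sphere function x^2 + y^2 on [-5, 5]^2.
best, best_val = pso_minimize(lambda p: sum(v * v for v in p), [(-5.0, 5.0)] * 2)
print(best, best_val)
```

With w below 1 and c1 + c2 below 2(1 + w), this update is in the commonly cited convergent regime. On the toy sphere function, the swarm should settle close to the origin.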

2.3. Model Building Based on PSO-Optimized LSTM
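
One way to couple PSO with the LSTM is to let each particle encode the tuned hyperparameters and to use a validation error as its fitness. The sketch below decodes a particle into the four tuned settings using the ranges in the hyperparameter table. Several choices here are assumptions for illustration and may not match how the authors encoded their particles:

- searching the learning rate in log10 space;
- reading "[64, 640, 32]" as a range with step 32;
- the hypothetical callback `train_and_validate`.

```python
import math

# Search ranges from the hyperparameter table; the encoding choices are assumptions.
SEARCH_SPACE = {
    "max_epochs": (40, 200),
    "learn_rate": (1e-4, 1e-1),
    "mini_batch_size": (64, 640),  # assumed step of 32, from "[64, 640, 32]"
    "hidden_units": (10, 100),
}
BATCH_STEP = 32

def particle_bounds():
    """Box bounds for a 4-D particle; the learning rate is searched in log10 space."""
    lr_lo, lr_hi = SEARCH_SPACE["learn_rate"]
    return [
        SEARCH_SPACE["max_epochs"],
        (math.log10(lr_lo), math.log10(lr_hi)),
        SEARCH_SPACE["mini_batch_size"],
        SEARCH_SPACE["hidden_units"],
    ]

def decode(particle):
    """Map a continuous particle position to concrete LSTM hyperparameters."""
    epochs, log_lr, batch, hidden = particle
    b_lo, b_hi = SEARCH_SPACE["mini_batch_size"]
    # Snap the batch size to the nearest step above the lower bound, then clamp.
    batch = b_lo + BATCH_STEP * round((batch - b_lo) / BATCH_STEP)
    return {
        "max_epochs": int(round(epochs)),
        "learn_rate": 10.0 ** log_lr,
        "mini_batch_size": min(max(batch, b_lo), b_hi),
        "hidden_units": int(round(hidden)),
    }

def make_fitness(train_and_validate):
    """Turn a hypothetical training routine into a PSO objective.

    train_and_validate(hparams) is assumed to train an LSTM with the given
    hyperparameters and return its validation RMSE (lower is better).
    """
    return lambda particle: train_and_validate(decode(particle))

# Example: a particle near the table's optimized values decodes to them
# (max_epochs 100, learn_rate about 1e-3, mini_batch_size 64, hidden_units 20).
print(decode([100.2, -3.0, 70.0, 19.6]))
```

The objective returned by `make_fitness` can be passed to any box-constrained PSO routine together with `particle_bounds()`.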

3. Experiment and Result Analysis

3.1. Correlation Analysis
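
Correlation analysis is typically used to rank candidate logging parameters by how strongly each one tracks the target before model inputs are chosen. The sketch below assumes the Pearson coefficient as the measure. The function names and the toy data are hypothetical and are not the study's well-log data.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def rank_features(features, target):
    """Return (name, r) pairs sorted by |r| with the target, strongest first."""
    scores = {name: pearson(col, target) for name, col in features.items()}
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Toy data (hypothetical).
features = {"x1": [1.0, 2.0, 3.0, 4.0], "x2": [4.0, 1.0, 3.0, 2.0]}
target = [2.0, 4.0, 6.0, 8.0]
print(rank_features(features, target))  # x1 (r ≈ 1.0) ranks ahead of x2 (r ≈ -0.4)
```

Ranking by |r| treats strong negative and strong positive relationships alike. A selection threshold on |r| would be a further, study-specific choice.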

3.2. Data Normalization
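
The logging parameters in the statistics table span very different scales. For example, GR ranges from 50.61 to 258.12, while RILD stays below 2.20. Inputs are therefore usually rescaled before training. The sketch below assumes min-max scaling, fitted on training data only and inverted to return predictions to physical units. The function names and the toy column are hypothetical.

```python
def fit_minmax(column):
    """Record the minimum and maximum of a training column."""
    return min(column), max(column)

def transform(column, lo, hi):
    """Scale linearly so the training min/max map to 0/1; constant columns map to 0."""
    span = hi - lo
    if span == 0:
        return [0.0 for _ in column]
    return [(v - lo) / span for v in column]

def inverse_transform(scaled, lo, hi):
    """Map scaled values (e.g. model predictions) back to original units."""
    return [lo + s * (hi - lo) for s in scaled]

# Toy column built from the GR min, mean and max in the statistics table.
lo, hi = fit_minmax([50.61, 79.90, 258.12])
scaled = transform([50.61, 154.365, 258.12], lo, hi)
print(scaled)  # approximately [0.0, 0.5, 1.0]
```

Test-set values outside the training range will fall outside [0, 1]. This is expected when the scaler is fitted on training data only.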

4. Case Analysis

Model Evaluation
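
The comparison table reports R², RMSE and MAPE for the training and test sets. The sketch below computes these three metrics under two assumptions: R² is the coefficient of determination, 1 - SS_res/SS_tot, and MAPE is expressed in percent. The example values are toy numbers, not results from the study.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def mape(y_true, y_pred):
    """Mean absolute percentage error in percent; y_true must not contain zeros."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)

def r2(y_true, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Toy example (hypothetical values).
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]
print(r2(y_true, y_pred), rmse(y_true, y_pred), mape(y_true, y_pred))
```

Higher R² and lower RMSE and MAPE indicate a better fit. By all three measures, the reported results favour PSO-LSTM over the plain LSTM on the test set: R² 0.795 vs. 0.765, RMSE 0.051 vs. 0.060, and MAPE 7.5 vs. 8.9.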

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| S/N | Production Parameters | Min | Max | Average | STD |
|---|---|---|---|---|---|
| 1 | SP | 76.239 | 76.239 | 62.99 | 4.14 |
| 2 | GR | 50.61 | 258.12 | 79.90 | 14.52 |
| 3 | RILD | 0.179 | 2.20 | 0.62 | 0.33 |
| 4 | RILM | 0.21 | 1.53 | 0.62 | 0.27 |
| 5 | RFOC | 0.27 | 2.10 | 0.71 | 0.26 |
| 6 | AC | 63.31 | 107.54 | 82.77 | 7.16 |
| 7 | CAL | 0.02 | 0.83 | 0.36 | 0.17 |
| 8 | DEVI | 1 | 44 | 1.45 | 2.26 |
| 9 | DRTM | 1.02 | 1.99 | 1.36 | 0.17 |

| Function | Parameter | Initial Value | Optimization Range | Optimized Value |
|---|---|---|---|---|
| Training Options | Max Epochs | 40 | [40, 200] | 100 |
| | Learning Rate | 0.0001 | [0.0001, 0.1] | 0.001 |
| | Mini-Batch Size | 64 | [64, 640, 32] | 64 |
| LSTM Layer | Input Size | 7 | - | 7 |
| | Hidden Layer | 10 | [10, 100] | 20 |
| | Bias | 32 | - | 32 |
| Fully Connected Layer | Output Size | 1 | - | 1 |
| PSO | Pop | 20 | - | |
| | Dim | 7 | - | |

| Method | Train R² | Train RMSE | Train MAPE (%) | Test R² | Test RMSE | Test MAPE (%) |
|---|---|---|---|---|---|---|
| LSTM | 0.828 | 0.049 | 5.7 | 0.765 | 0.060 | 8.9 |
| PSO-LSTM | 0.857 | 0.041 | 3.2 | 0.795 | 0.051 | 7.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, X.; Zhai, X.; Ke, A.; Lin, Y.; Zhang, X.; Xie, Z.; Lou, Y. Prediction of Leakage Pressure in Fractured Carbonate Reservoirs Based on PSO-LSTM Neural Network. Processes 2023, 11, 2222. https://doi.org/10.3390/pr11072222
