Signal-to-Image: Rolling Bearing Fault Diagnosis Using ResNet Family Deep-Learning Models

Abstract

1. Introduction

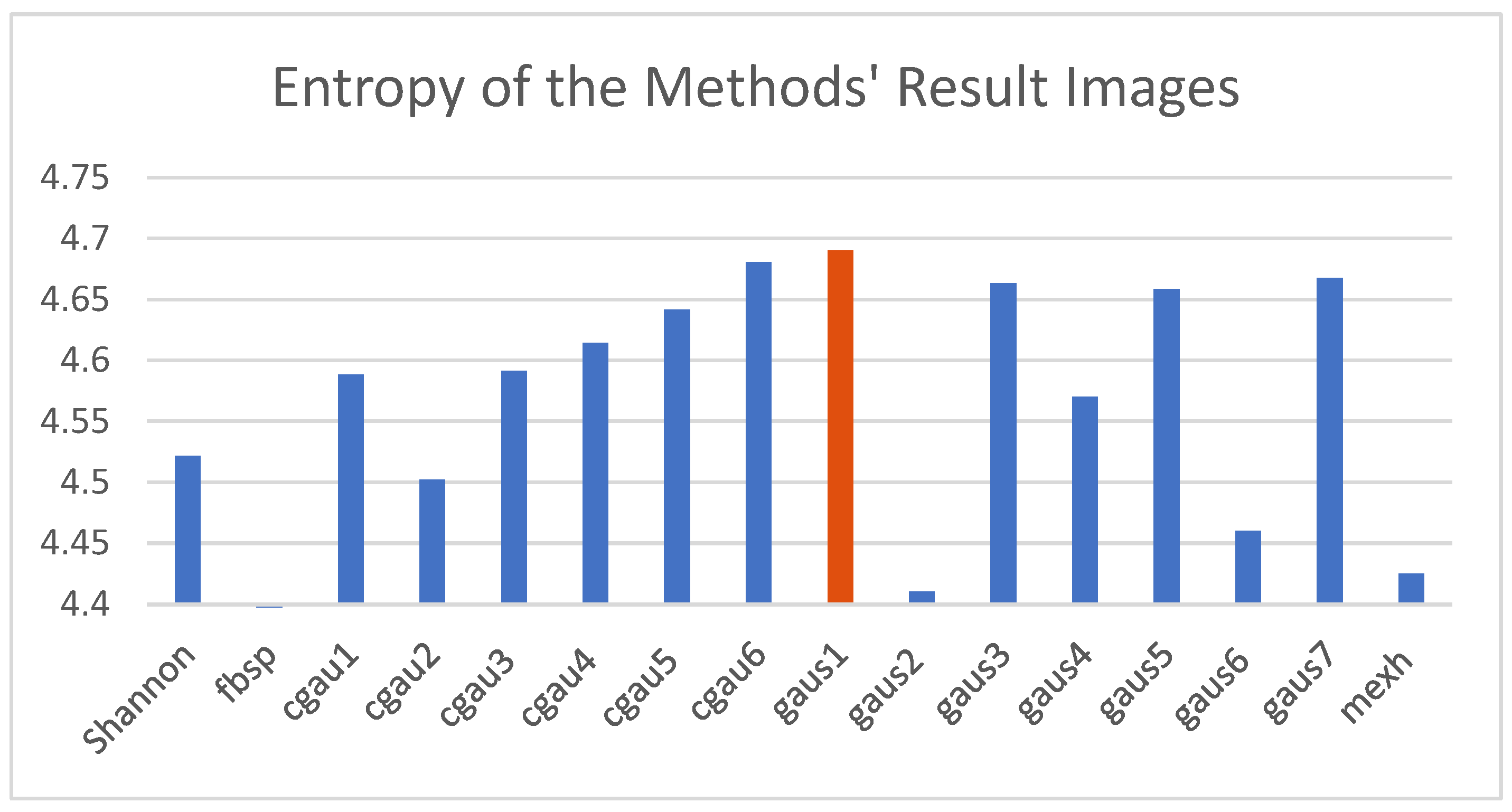

- It proposes a novel signal-to-image method based on the continuous wavelet transform (CWT); and

- It tests the classic ResNet family and the follow-up SE-ResNet family to find the best-performing model for rolling element bearing (REB) fault diagnosis.
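To make the first contribution concrete, the sketch below shows one way a one-dimensional vibration signal can be mapped to a two-dimensional time-scale image with the CWT. It is an illustration under stated assumptions, not the configuration used in this work: the complex Morlet wavelet with `w0 = 6`, the list of scales, the 50 Hz synthetic test tone, and the function names `morlet`, `cwt_scalogram`, and `to_grayscale` are all hypothetical choices made for the example.

```python
import cmath
import math


def morlet(t, w0=6.0):
    """Complex Morlet mother wavelet (small admissibility correction omitted)."""
    return math.pi ** -0.25 * cmath.exp(1j * w0 * t) * math.exp(-0.5 * t * t)


def cwt_scalogram(signal, scales, dt=1.0, w0=6.0):
    """Return |CWT| as a list of rows: one row per scale, one column per sample."""
    n = len(signal)
    image = []
    for s in scales:
        half = int(math.ceil(4.0 * s / dt))  # truncate the wavelet at +/- 4 scale widths
        # Sampled, scaled, conjugated wavelet with the 1/sqrt(s) normalisation.
        kernel = [morlet(k * dt / s, w0).conjugate() * dt / math.sqrt(s)
                  for k in range(-half, half + 1)]
        row = []
        for b in range(n):
            acc = 0j
            for k in range(-half, half + 1):
                idx = b + k
                if 0 <= idx < n:  # samples outside the record are treated as zero
                    acc += signal[idx] * kernel[k + half]
            row.append(abs(acc))
        image.append(row)
    return image


def to_grayscale(image):
    """Linearly rescale coefficient magnitudes to integer pixel values in 0..255."""
    lo = min(min(r) for r in image)
    hi = max(max(r) for r in image)
    span = (hi - lo) or 1.0
    return [[int(round(255 * (v - lo) / span)) for v in r] for r in image]


# Assumed demo input: a 50 Hz tone sampled at 1 kHz (not data from this study).
fs = 1000.0
signal = [math.sin(2 * math.pi * 50.0 * i / fs) for i in range(256)]
scales = [0.005, 0.01, 0.02, 0.04, 0.08]  # scales in seconds, chosen for the demo
scalogram = cwt_scalogram(signal, scales, dt=1.0 / fs)
gray = to_grayscale(scalogram)
```

Each row of `gray` corresponds to one scale and each column to one time sample, so the array can be resized and stored as an image before it is passed to an image classifier. In practice a library routine such as `pywt.cwt` from PyWavelets would normally replace the explicit loops.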

2. Materials and Methods

2.1. Rolling Bearing Fault Signal Transformation

2.2. Introduction to ResNet

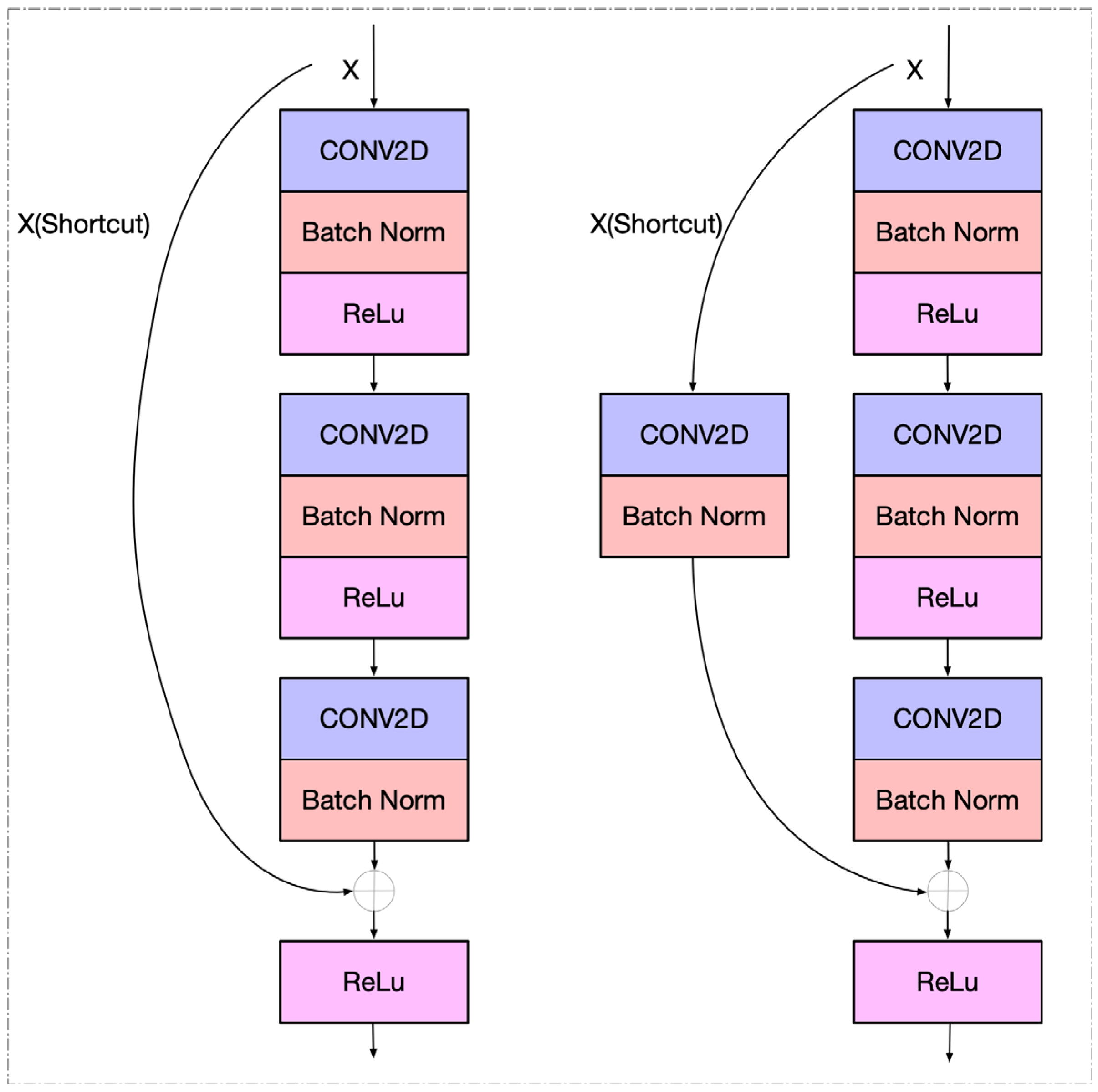

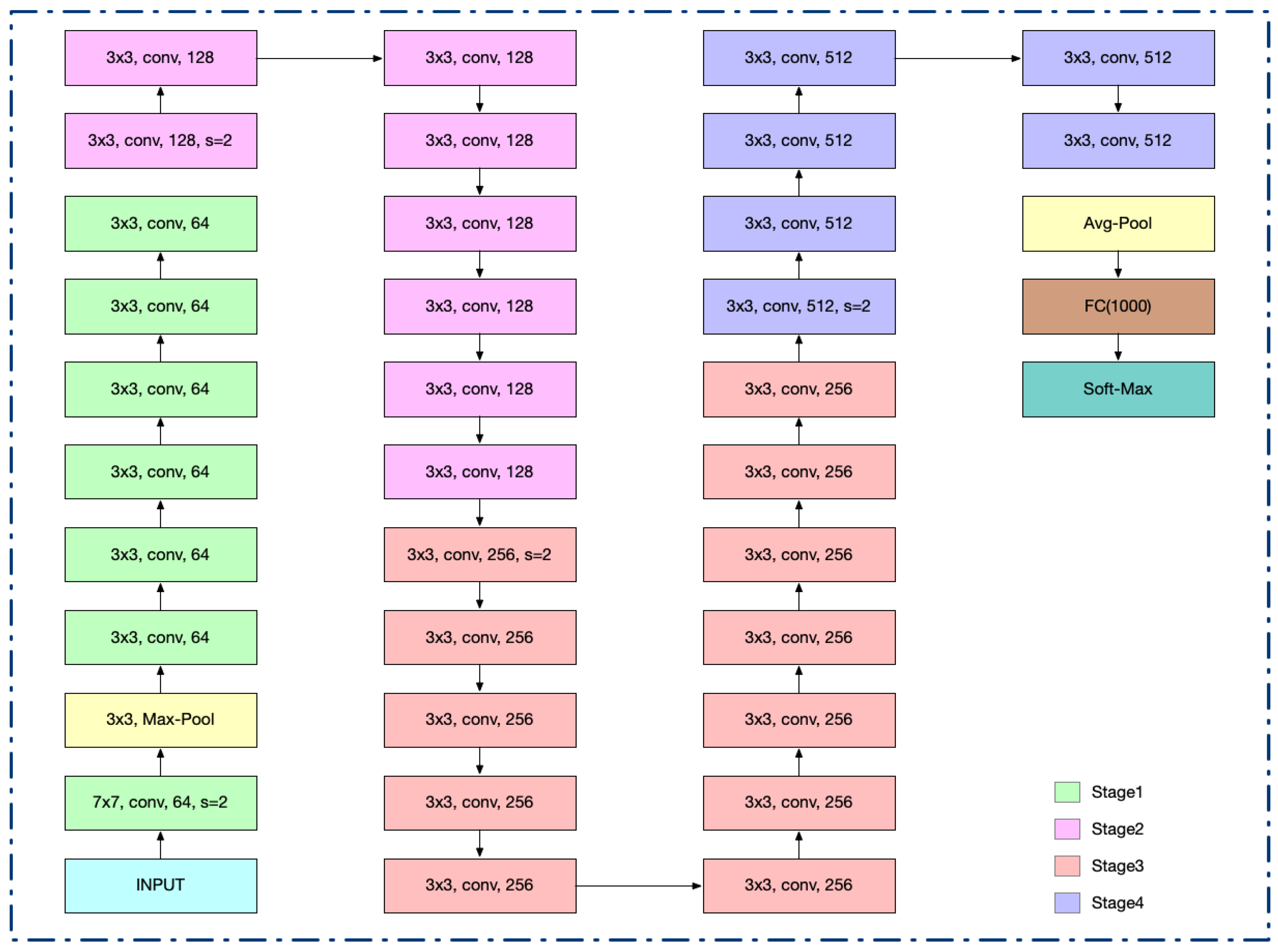

2.2.1. ResNet Basics

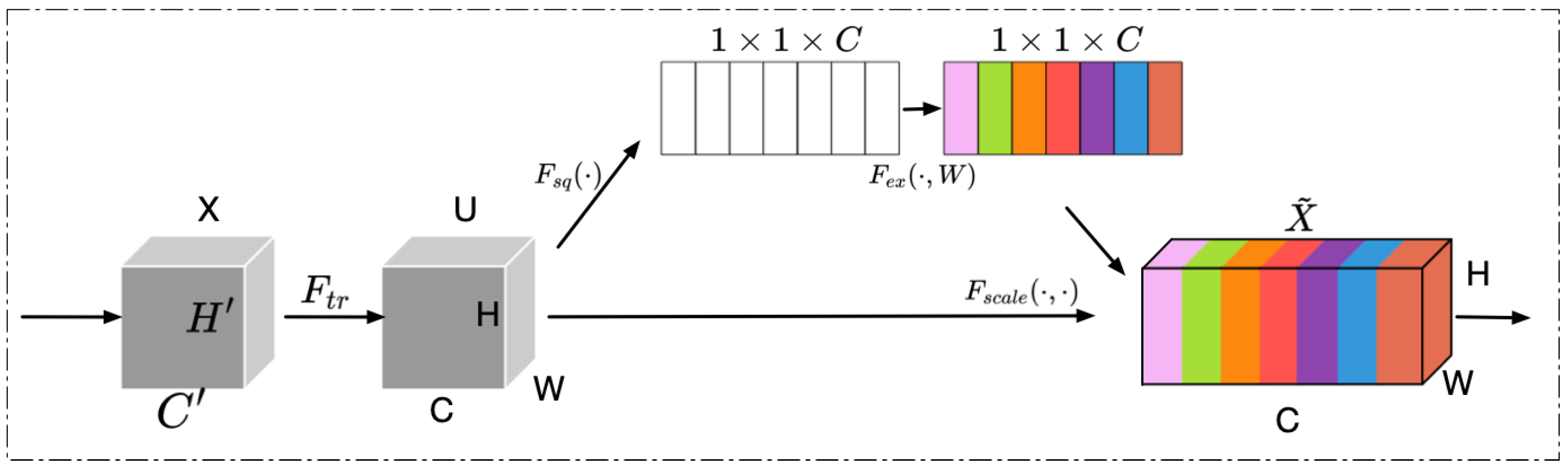

2.2.2. Squeeze-and-Excitation Networks Basics

2.2.3. SENet in ResNet
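As a rough illustration of the mechanism named in this heading, the following sketch applies a squeeze-and-excitation gate to the residual branch of a block before the identity shortcut is added, which is how the original squeeze-and-excitation design is combined with residual modules. It is a minimal sketch and not this paper's implementation. The assumptions are: nested Python lists stand in for a channels × height × width feature map, the two fully connected layers have no bias terms, and the names `squeeze`, `excite`, `se_residual`, `w1`, and `w2` are hypothetical.

```python
import math


def squeeze(feature_map):
    """Global average pooling: one scalar descriptor per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
            for ch in feature_map]


def excite(z, w1, w2):
    """Bottleneck gate: sigmoid(w2 @ relu(w1 @ z)), returns one weight per channel."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
    return [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
            for row in w2]


def se_residual(identity, residual, w1, w2):
    """Rescale each residual channel by its gate, add the shortcut, apply ReLU."""
    gates = excite(squeeze(residual), w1, w2)
    return [[[max(0.0, x + g * r) for x, r in zip(x_row, r_row)]
             for x_row, r_row in zip(x_ch, r_ch)]
            for x_ch, r_ch, g in zip(identity, residual, gates)]


# Toy input: 2 channels of 2 x 2 values, with a reduction to 1 hidden unit.
identity = [[[1.0, 1.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]]]
residual = [[[2.0, 2.0], [2.0, 2.0]], [[4.0, 4.0], [4.0, 4.0]]]
w1 = [[0.0, 0.0]]    # hidden layer weights: 1 x 2
w2 = [[1.0], [1.0]]  # output layer weights: 2 x 1
output = se_residual(identity, residual, w1, w2)
```

With the zero weights in `w1` every gate equals 0.5, so each residual channel is halved before the addition. With trained weights the gates differ per channel, which is what lets the block emphasise informative channels.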

2.3. Experimental Procedure

2.4. Model Performance Metrics

3. Results of the Experiment

3.1. Signal-to-Image Results

3.2. The Results of the Fault Diagnosis

3.2.1. Results of ResNet Family Fault Diagnosis

3.2.2. Results of the SE-ResNet Family Fault Diagnosis

4. Discussion

4.1. ResNet Models

4.2. SE-ResNet Models

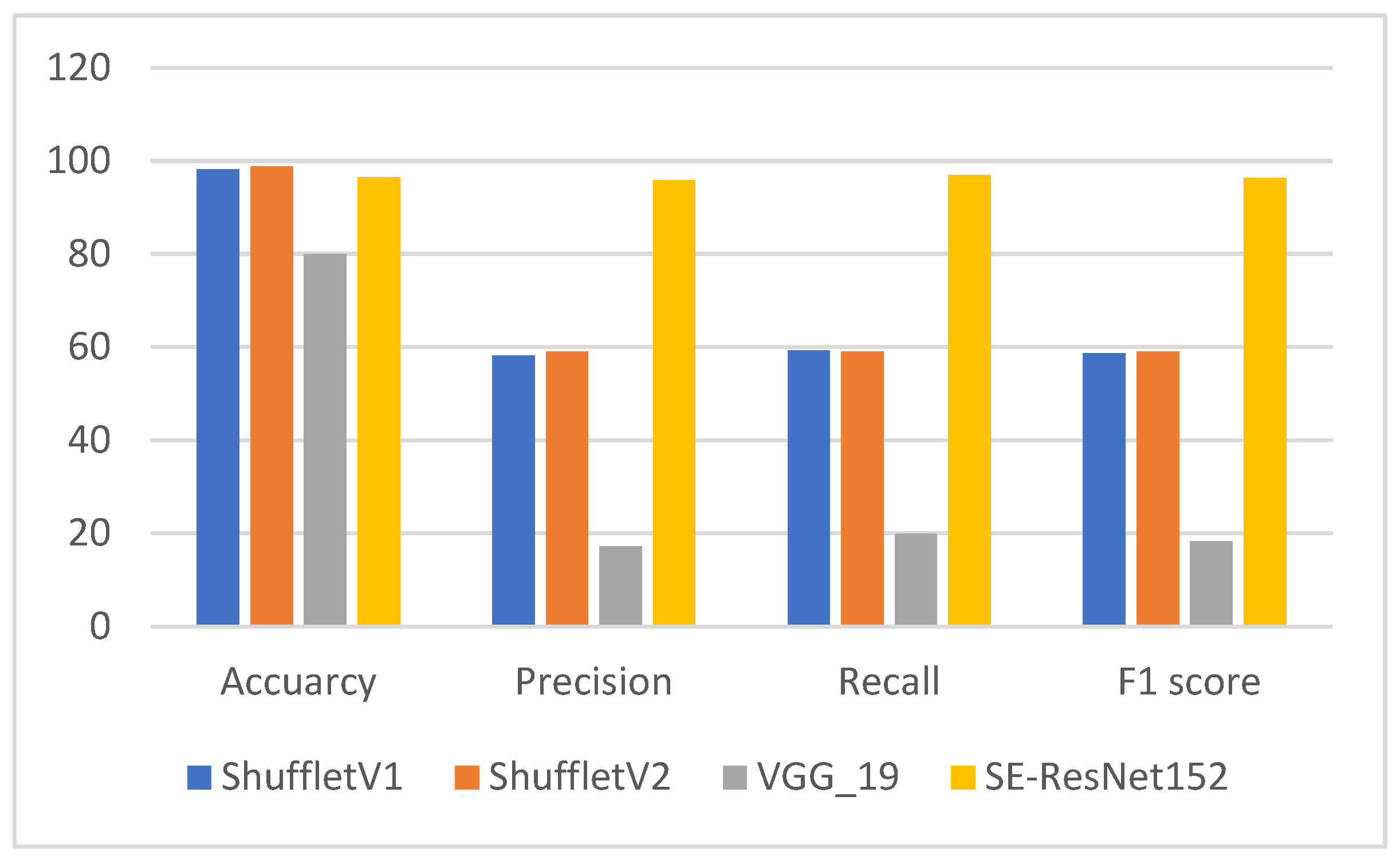

4.3. Performance Comparison of SE-ResNet152 with Three Other DL Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layer Name | Output Size | 18-Layer | 34-Layer | 50-Layer | 101-Layer | 152-Layer |
|---|---|---|---|---|---|---|
| conv1 | 112 × 112 | 7 × 7, 64, stride 2 | | | | |
| conv2x | 56 × 56 | 3 × 3 max pool, stride 2 | | | | |
| conv3x | 28 × 28 | | | | | |
| conv4x | 14 × 14 | | | | | |
| conv5x | 7 × 7 | | | | | |
| | 1 × 1 | Average pool, 1000-d fc, softmax | | | | |
| FLOPs | | 1.8 × 10^9 | 3.6 × 10^9 | 3.8 × 10^9 | 7.6 × 10^9 | 11.3 × 10^9 |

| Condition | Load 0 | Load 1 | Load 2 | Load 3 | Mix |
|---|---|---|---|---|---|
| IR | 354 | 259 | 355 | 356 | 412 |
| OR | 263 | 264 | 267 | 268 | 243 |
| Ball | 355 | 267 | 267 | 267 | 327 |
| Normal | 179 | 179 | 179 | 179 | 236 |
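Assuming the entries in the table above are the number of samples per condition (IR, OR, Ball, Normal) under each load setting, a short calculation gives the total per load and the share of each class, which serves as a quick check of class balance. The interpretation of the entries and the helper names `totals_per_load` and `class_shares` are assumptions made for this sketch.

```python
# Counts copied from the table above; rows are conditions, columns are load settings.
counts = {
    "IR":     {"Load 0": 354, "Load 1": 259, "Load 2": 355, "Load 3": 356, "Mix": 412},
    "OR":     {"Load 0": 263, "Load 1": 264, "Load 2": 267, "Load 3": 268, "Mix": 243},
    "Ball":   {"Load 0": 355, "Load 1": 267, "Load 2": 267, "Load 3": 267, "Mix": 327},
    "Normal": {"Load 0": 179, "Load 1": 179, "Load 2": 179, "Load 3": 179, "Mix": 236},
}


def totals_per_load(table):
    """Sum the counts of all conditions for each load setting."""
    totals = {}
    for per_load in table.values():
        for load, n in per_load.items():
            totals[load] = totals.get(load, 0) + n
    return totals


def class_shares(table, load):
    """Fraction of the samples under one load setting that belongs to each condition."""
    total = sum(per_load[load] for per_load in table.values())
    return {cls: per_load[load] / total for cls, per_load in table.items()}


totals = totals_per_load(counts)
shares_load0 = class_shares(counts, "Load 0")
for cls, share in shares_load0.items():
    print(f"{cls}: {share:.1%} of Load 0")
```

Under this reading the Normal condition is the smallest class at every load setting, which is worth keeping in mind when interpreting per-class metrics.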

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, G.; Ji, X.; Yang, G.; Jia, Y.; Cao, C. Signal-to-Image: Rolling Bearing Fault Diagnosis Using ResNet Family Deep-Learning Models. Processes 2023, 11, 1527. https://doi.org/10.3390/pr11051527
