1. Introduction

Group decision making (GDM) in a multicriteria scenario is extremely sensitive to internal and external influences. Many factors affect how each individual assigns criteria scores, including personal experience with and preference for alternatives, varying expertise in criteria, baseline requirements, and, as demonstrated in previous experiments, information presentation modes. Mode, or judgement format, is also considered one of the four essential issues in GDM [1]. If these factors are not carefully considered, they can significantly affect judgement and result in biased responses and violations of a critical assumption of multicriteria decision making (MCDM): criteria independence. Mode affects the dimensions in which decision makers anchor and adjust judgement in pairwise comparisons. It also affects the type and amount of response bias. Furthermore, mode has been shown to influence the response scale and the decision process in comparing alternatives. Frederick and Mochon [2] refer to this personal change in response scale as scale distortion.

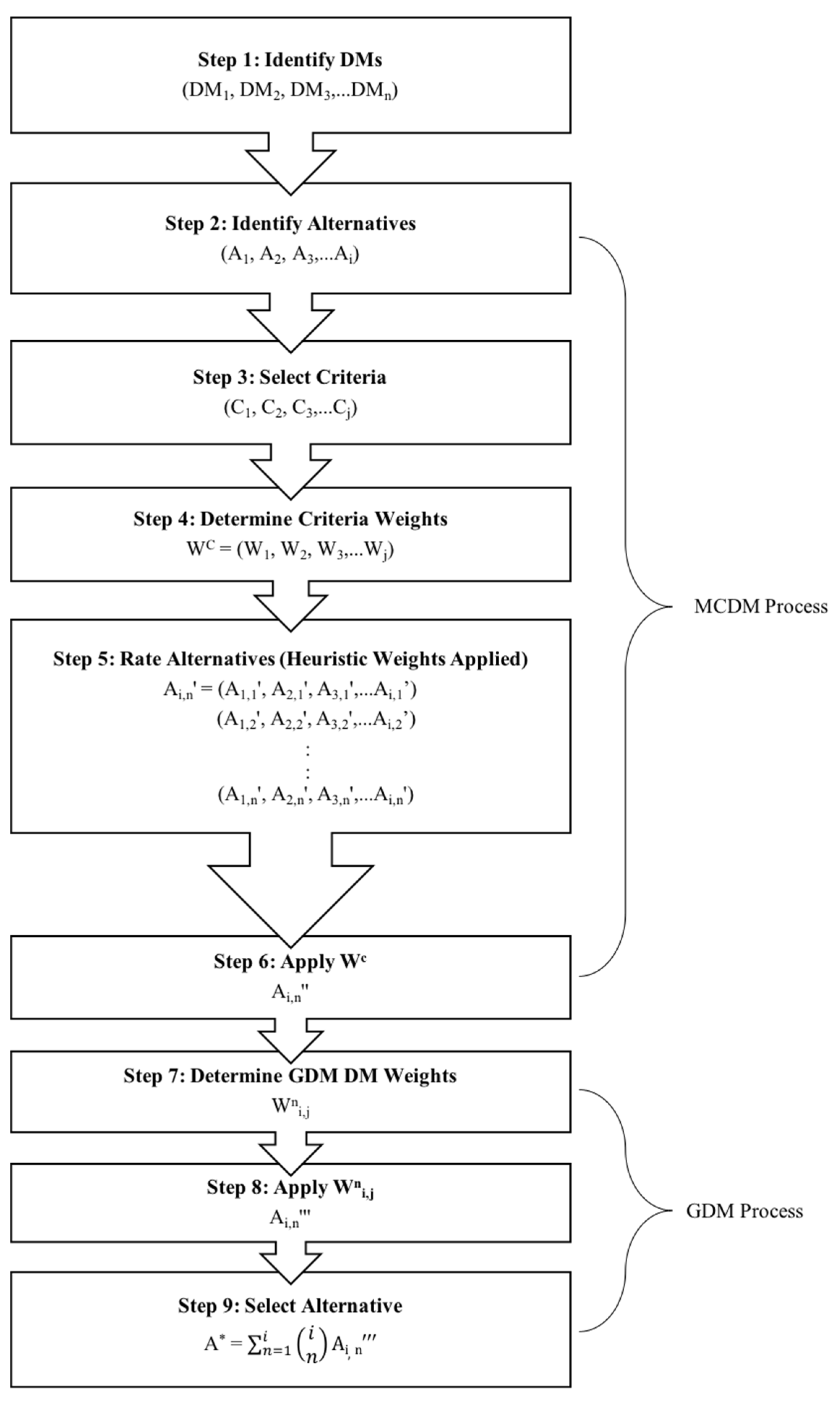

Another essential component of GDM is weighting. Many decision flow charts have been proposed for MCDM in a GDM setting. We propose the flow chart shown in Figure 1 as a general process that includes multiple layers of criteria and DM weighting. Weighting occurs in several distinct phases during the decision process. However, a key phase not generally accounted for in the formal process is internal to the decision maker and occurs during the rating of alternatives in step 5. We refer to this account of weighting as heuristic weighting.

Any resulting bias from step 5 can create an inappropriate imbalance in criteria scores within and/or across alternatives. It is these types of functional dependencies [3] that are difficult to characterize. Dependence between alternatives, within a criterion and its corresponding requirements, is natural; independence across criteria and their corresponding requirements is ideal. Traditional MCDM, however, generally provides no methods to identify and correct such dependencies in decision contexts.

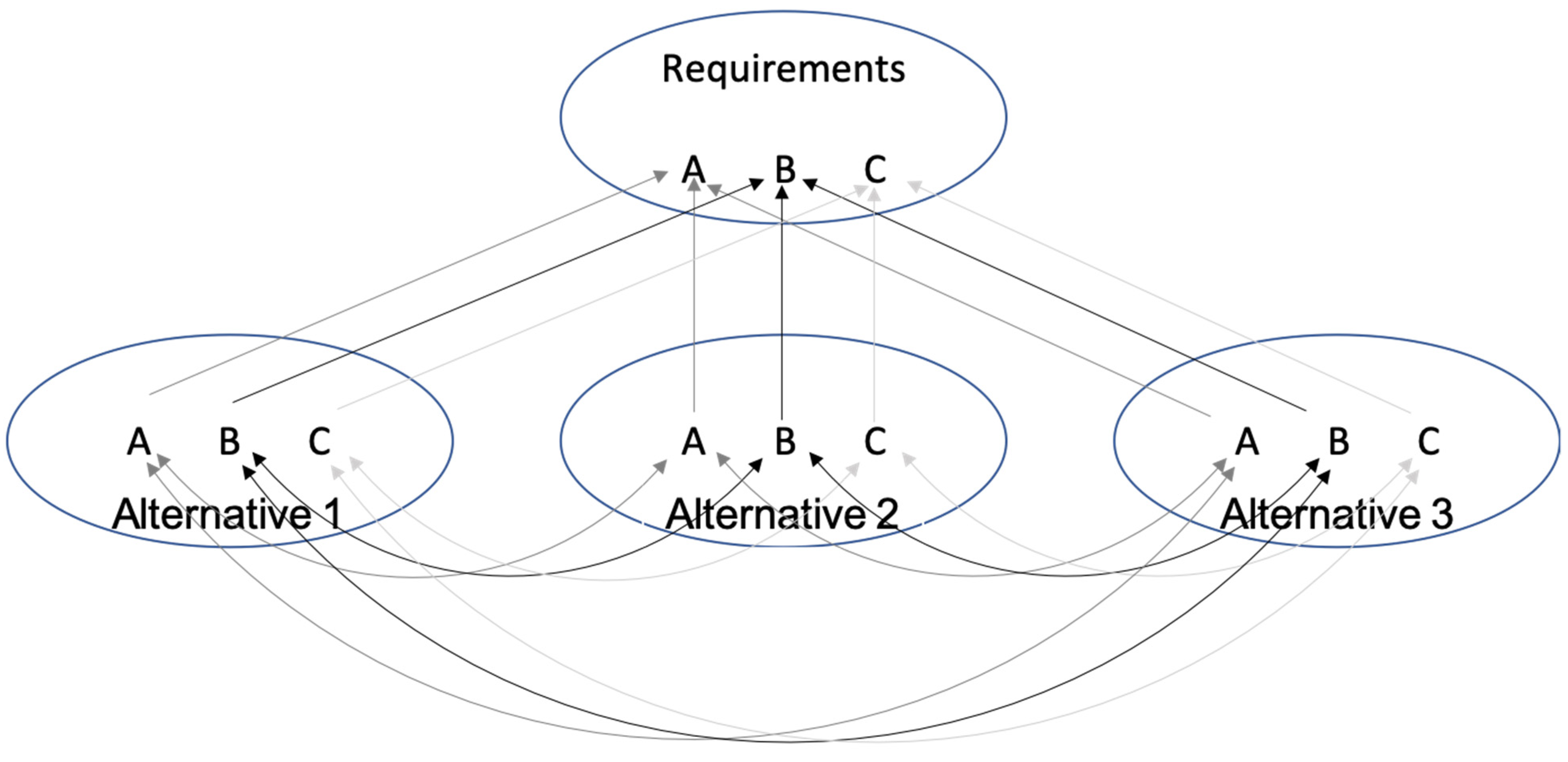

Figure 2 depicts this type of scenario. As shown, there are three alternatives judged against requirements for each criterion A, B, and C. Alternatives 1, 2, and 3 are interdependent within criteria and within criteria requirements. Criteria A, B, and C are independent of each other.

If dependencies cross criteria boundaries and criteria are weighted through steps 6 and 8, bias effects are compounded and cascaded through the process. It is difficult to trust judgements and weighted scores if criteria are not judged independently of each other. Requirements, whether informal or formal, biased or unbiased, are often used as supporting benchmarks to guide the decision maker toward stronger criteria independence. However, it is a misconception that detailed decision, alternative, and criteria requirements alone are sufficient to reduce bias and strengthen criteria independence. This research supports the idea that strategic facilitation through mode affects not only heuristics but also the method of alternative comparison. The results indicate that when a mixed mode of information presentation and evaluation is used, as described in Table 1, subjects not only compare alternatives using ratio scaling, but compatible decision makers (those with identical hierarchies of alternatives) also use significantly similar ratios regardless of scale range.

If mode is to complement the criteria weighting method, the facilitation and formulations established in step 5 of the process are critical. DMs can be influenced by a multitude of factors when determining criteria values. When criteria are compared against specific requirements, criteria considerations should ideally be independent of each other and match the requirement criteria. For example, the value of an alternative's price should be determined only by the limits established by the price requirements, not by how well other criteria, such as performance, do or do not justify the price. If the latter occurs, it is extremely difficult to determine, within each decision maker, how accurately criteria are weighted as they satisfy the requirements. A lack of criteria independence will cloud and skew the weighting of individual criteria and cascade effects through the GDM weighting. This makes pairing strategic decision facilitation with weighting methods imperative to reduce the negative effects of conflicting criteria.

2. Scale Distortion

Anchoring is a scaling effect in that the anchor changes how the response scale is used [2]. The experiments in [2] provided evidence that anchoring effects are significant when sequential judgements compare different items on the same scale, especially items with high conceptual similarity. In contrast, there is also evidence that anchoring effects are minimized when sequential judgements about different items use different scales [2].

In most situations, use of the same scale is unavoidable. When a decision maker must compare alternatives with high conceptual similarity side by side, such as choosing the better consumer product in a single-objective or multiobjective scenario, identical scales are natural across alternatives and their corresponding criteria. Additionally, in most circumstances, including the research presented in this article, alternatives are competing and are therefore considered to have high conceptual similarity. Regardless of a predefined scale, every decision maker defines the range within that scale differently: a 7 out of 10 for one decision maker may be a 5 out of 10 for another, based on personal values and experiences. Furthermore, how a decision maker assigns value in pairwise comparisons determines the portion of the full scale that is actually used. Therefore, value cannot be determined in relation to the full scale. Instead, value should be determined by the ratios between alternative scores, regardless of where in the range those scores fall. This is especially true when aggregating and weighting scores from multiple decision makers in a GDM context. It has been shown that a DM calculates a ratio when making pairwise comparisons of alternatives [4,5]. Furthermore, current MCDM methods such as the analytic hierarchy process (AHP) make relative comparisons of alternatives based on ratio scaling [6].
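The ratio argument above can be illustrated with a short Python sketch. The scores are hypothetical: two DMs use different parts of a 0 to 10 scale, yet their ratios to the first (baseline) alternative coincide. The function name `baseline_ratios` is illustrative and does not come from the source.

```python
def baseline_ratios(scores):
    """Return the ratio of each alternative's score to the first alternative's score."""
    baseline = scores[0]
    if baseline == 0:
        raise ValueError("the baseline (first) score must be nonzero")
    return [s / baseline for s in scores[1:]]


# Hypothetical ratings of three competing alternatives on a 0 to 10 scale.
dm_generous = [8.0, 6.0, 4.0]  # uses the upper part of the scale
dm_strict = [6.0, 4.5, 3.0]    # uses a lower, narrower part of the scale

print(baseline_ratios(dm_generous))  # [0.75, 0.5]
print(baseline_ratios(dm_strict))    # [0.75, 0.5]
```

Comparing raw scores (8 versus 6 for the first alternative) would suggest disagreement, whereas the ratios show that both DMs relate the alternatives to each other in the same way.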

Results from previous sections indicate that, when alternatives were presented in mixed mode, compatible subjects assigned value using significantly similar ratios regardless of scale range. Additionally, in mixed mode, subjects who rated alternatives in the ideal rank order relative to the requirements far outnumbered subjects in the subgroups deemed to have an extreme amount of bias (i.e., the last alternative was ranked first and/or the first alternative was ranked last). This is evidence that, among compatible decision makers in mixed mode, scales are distorted from the judgement of the first alternative and then adjusted using similar ratios. In addition, extreme bias is significantly less prevalent in mixed mode.

Even when mode reduces preference bias and consensus is reached on the hierarchy of alternatives, unbalanced scale distortions between decision makers can remain. This is due to both criteria dependence and the limitations of the scoring scales, and it causes misrepresentation of true criteria values as they satisfy criteria requirements. This is not to say that scores should be as close as possible across decision makers; however, it can be argued that the relationships between alternative scores within each decision maker should reflect ratios similar to those of other decision makers.

Consider a group decision scenario with a set $A = \{A_1, \dots, A_n\}$ of alternatives, a set $D = \{DM_1, \dots, DM_M\}$ of decision makers (DMs), and a set $C = \{C_1, \dots, C_N\}$ of criteria. Now consider the following case: $n = 3$ alternatives ($i = 1, \dots, 3$), $M$ decision makers ($m = 1, \dots, M$), and $N$ criteria ($j = 1, \dots, N$). Since ratios are being considered, define $\rho_x^m$ (with $x = i - 1$) as the ratio of scores $a_i^m / a_1^m$ ($i = 2, \dots, n$; $m = 1, \dots, M$) between alternatives $A_i$ and $A_1$, where $a_i^m$ is the score that DM $m$ assigns to $A_i$ on a given criterion. Ratios are always baselined from the score of the same alternative, in this case the score $a_1^m$ of $A_1$. For three alternatives, there are $n - 1 = 2$ ratios ($x = 1, 2$) for each criterion and decision maker. Thus, there are $2MN$ unique ratios.
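As a sketch of the ratio definition (each alternative's score divided by the first alternative's score, per DM and per criterion), the following Python computes every ratio for a small hypothetical data set and checks the count $(n - 1)MN$. It assumes scores are stored as nested lists indexed `[m][j][i]` and that every baseline score is nonzero; `ratio_table` is a hypothetical helper, not the authors' implementation. Python indices start at 0, so code index `x` corresponds to $\rho_{x+1}$ in the text.

```python
def ratio_table(scores):
    """scores[m][j][i] is DM m's rating of alternative i on criterion j.
    Returns rho[m][j][x] = scores[m][j][x + 1] / scores[m][j][0]."""
    return [
        [[row[i] / row[0] for i in range(1, len(row))] for row in dm_scores]
        for dm_scores in scores
    ]


# Hypothetical ratings: M = 2 DMs, N = 2 criteria, n = 3 alternatives.
scores = [
    [[8, 6, 4], [5, 10, 2.5]],  # DM 1: criterion 1, criterion 2
    [[4, 2, 3], [6, 3, 9]],     # DM 2: criterion 1, criterion 2
]
rho = ratio_table(scores)
n, M, N = 3, len(scores), len(scores[0])
count = sum(len(ratios) for dm_ratios in rho for ratios in dm_ratios)

print(rho)                       # [[[0.75, 0.5], [2.0, 0.5]], [[0.5, 0.75], [0.5, 1.5]]]
print(count == (n - 1) * M * N)  # True
```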

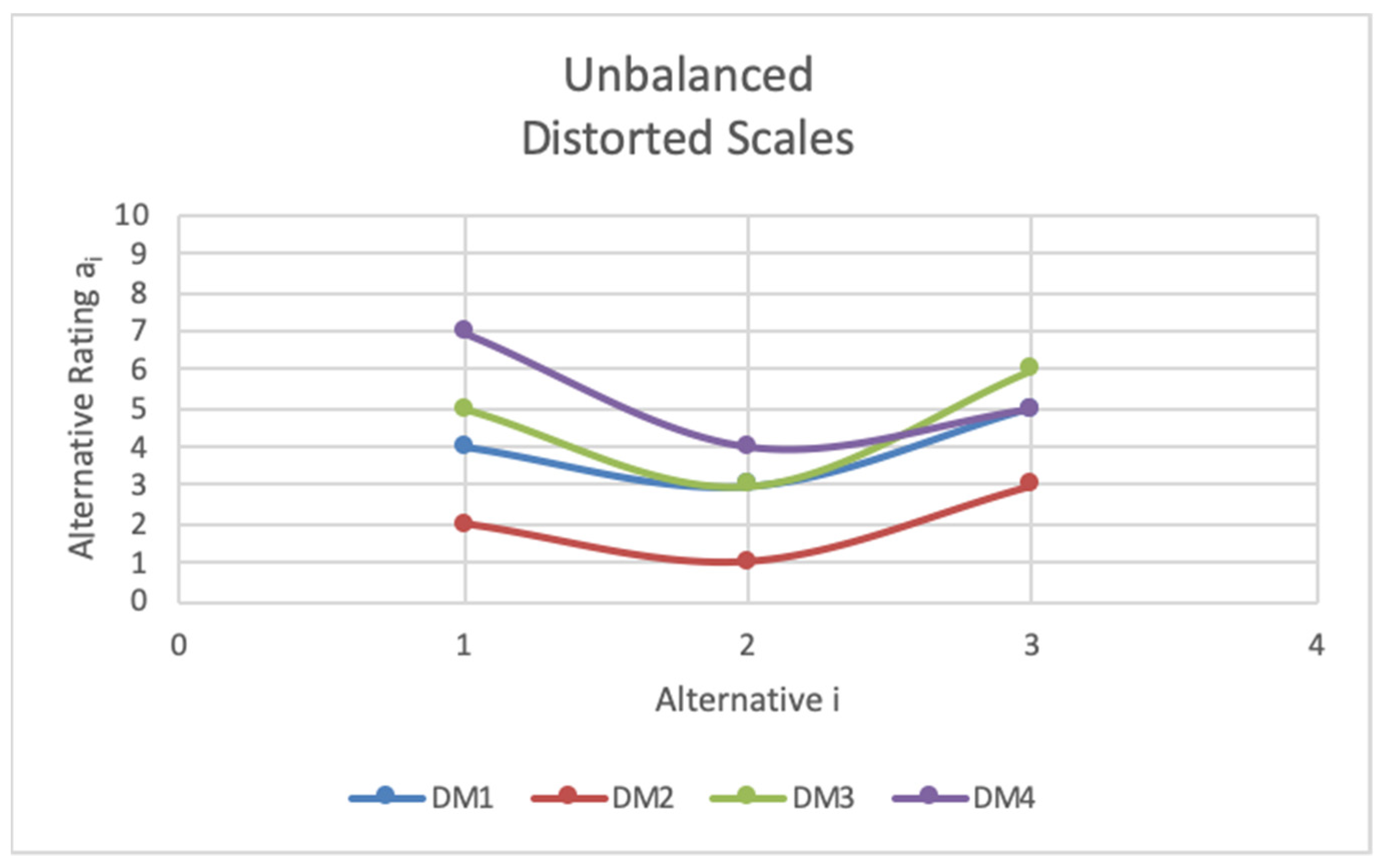

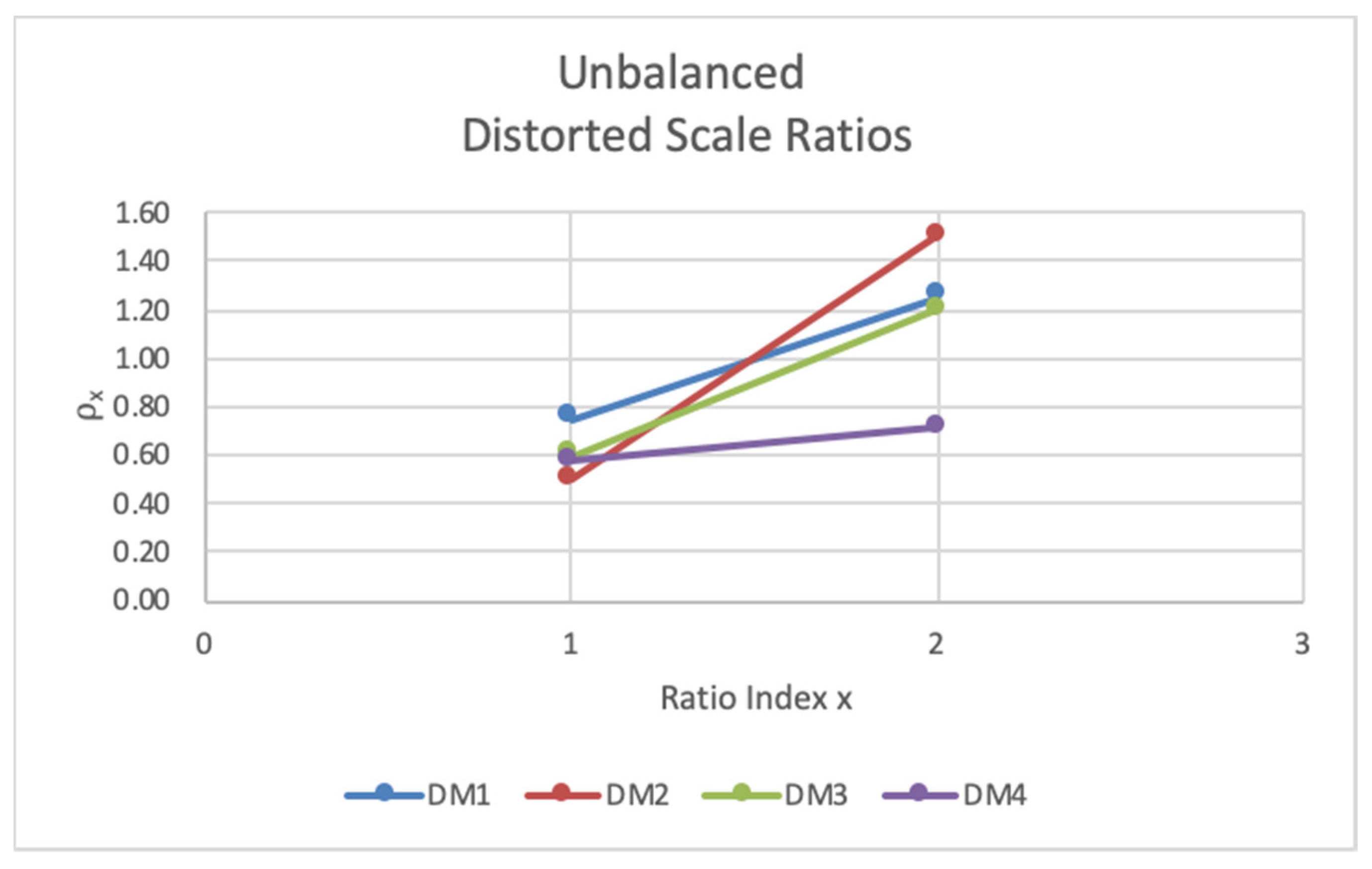

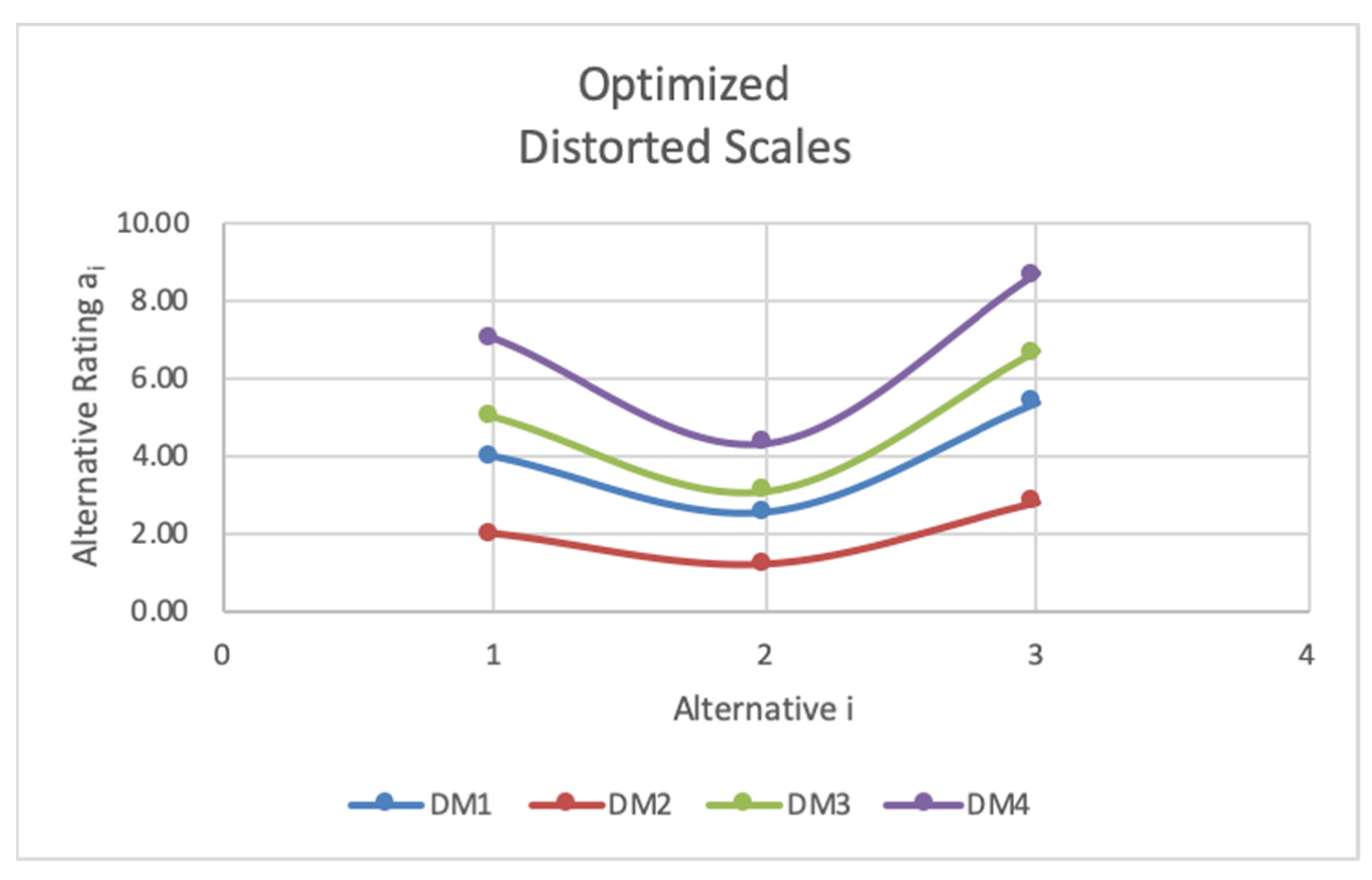

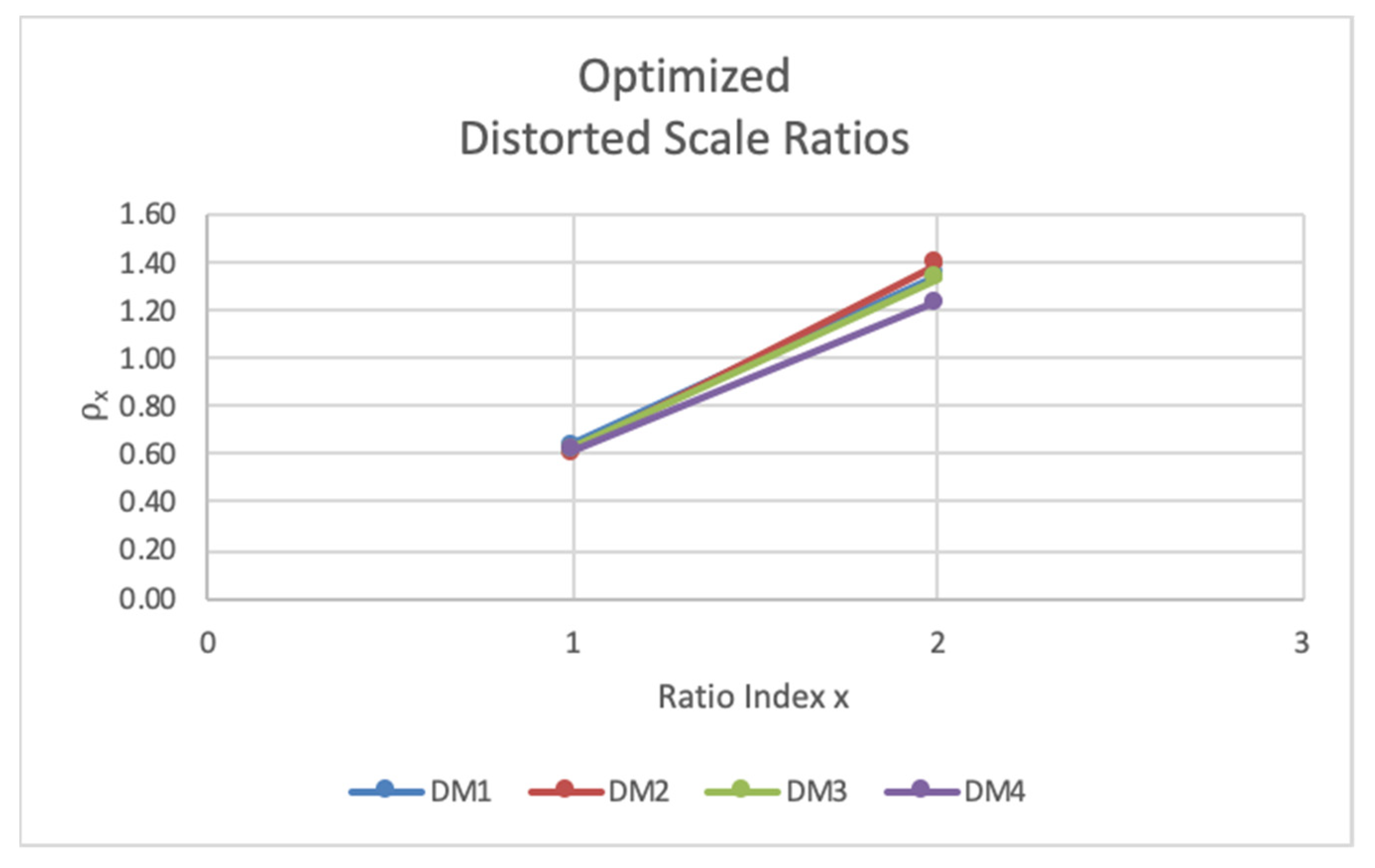

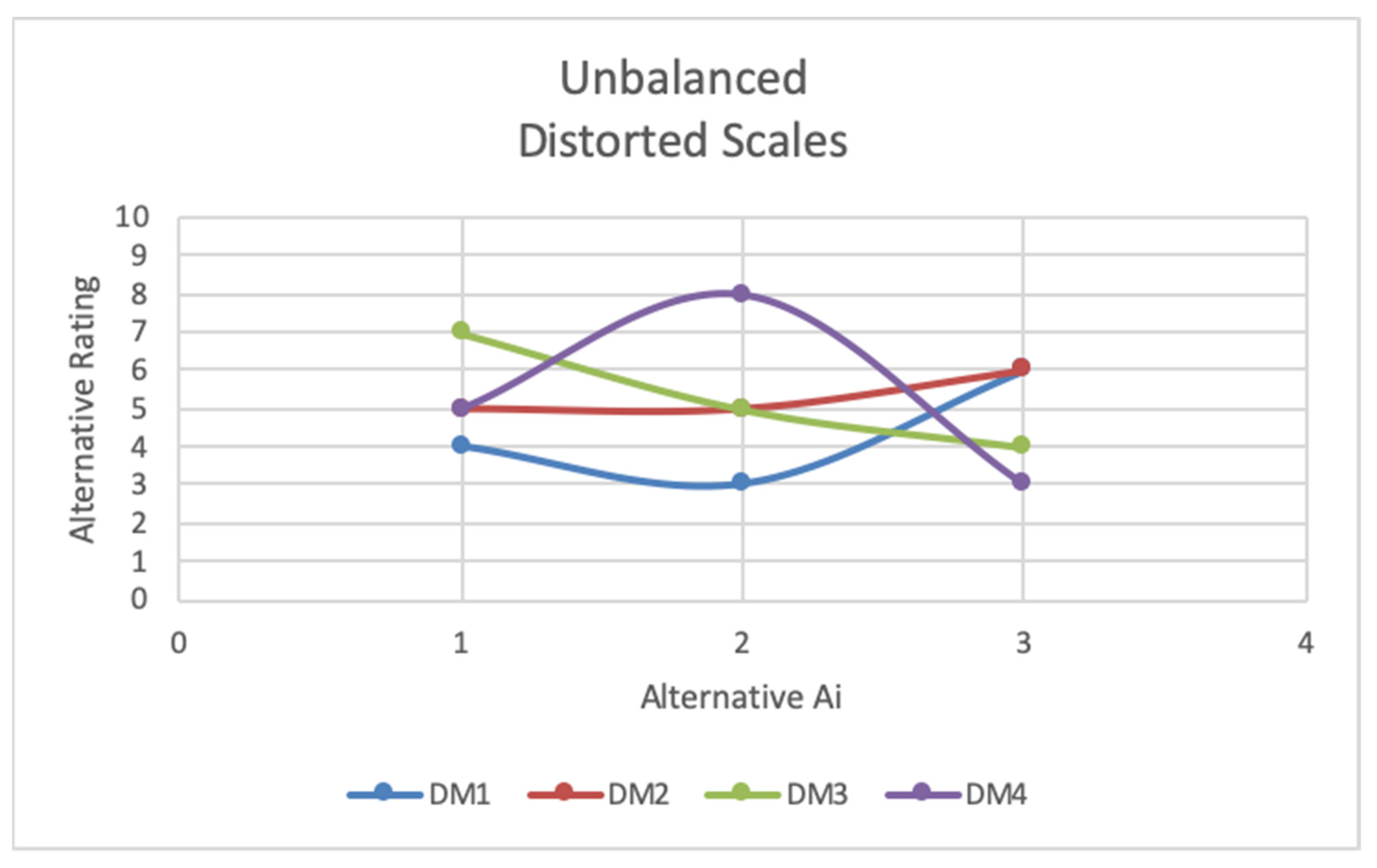

Figure 3 and Figure 4 depict scale trend distortions between four ($M = 4$) DMs across hypothetical judgements of three ($n = 3$) alternatives for a single criterion.

Although the trends in the alternative scores seem relatively similar in Figure 3, closer inspection of the ratios $\rho_x^m$ for DM $m$ in Figure 4 reveals an imbalance between DMs. Ratios $\rho_1^m$ ($A_2/A_1$) are relatively close between DMs; however, ratios $\rho_2^m$ ($A_3/A_1$) are much further apart, which indicates a disagreement in hierarchy and a potential issue with criteria independence.
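Because $A_1$ is the baseline with an implicit ratio of 1, a DM's hierarchy can be read directly from its ratios: for a positive baseline score, sorting $(1, \rho_1, \rho_2)$ orders the alternatives exactly as sorting the raw scores does. The minimal Python sketch below uses hypothetical ratio values and a hypothetical helper name; the tie-breaking rule is an arbitrary choice.

```python
def hierarchy_from_ratios(ratios):
    """Return the rank order (highest first) implied by baseline ratios.

    Alternative 1 is the baseline, so its ratio is 1. Assuming a positive
    baseline score, ordering (1, rho_1, rho_2, ...) orders the raw scores.
    Ties keep the lower alternative number first (an arbitrary choice).
    """
    values = [1.0] + list(ratios)  # position k holds alternative k + 1
    order = sorted(range(len(values)), key=lambda k: -values[k])
    return "-".join(str(k + 1) for k in order)


# Hypothetical (rho_1, rho_2) for two DMs on the same criterion.
print(hierarchy_from_ratios([0.8, 1.2]))  # 3-1-2
print(hierarchy_from_ratios([0.8, 0.6]))  # 1-2-3
```

Two DMs whose $\rho_2$ values fall on opposite sides of 1 therefore disagree about whether $A_3$ outranks $A_1$, even if their $\rho_1$ values are close.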

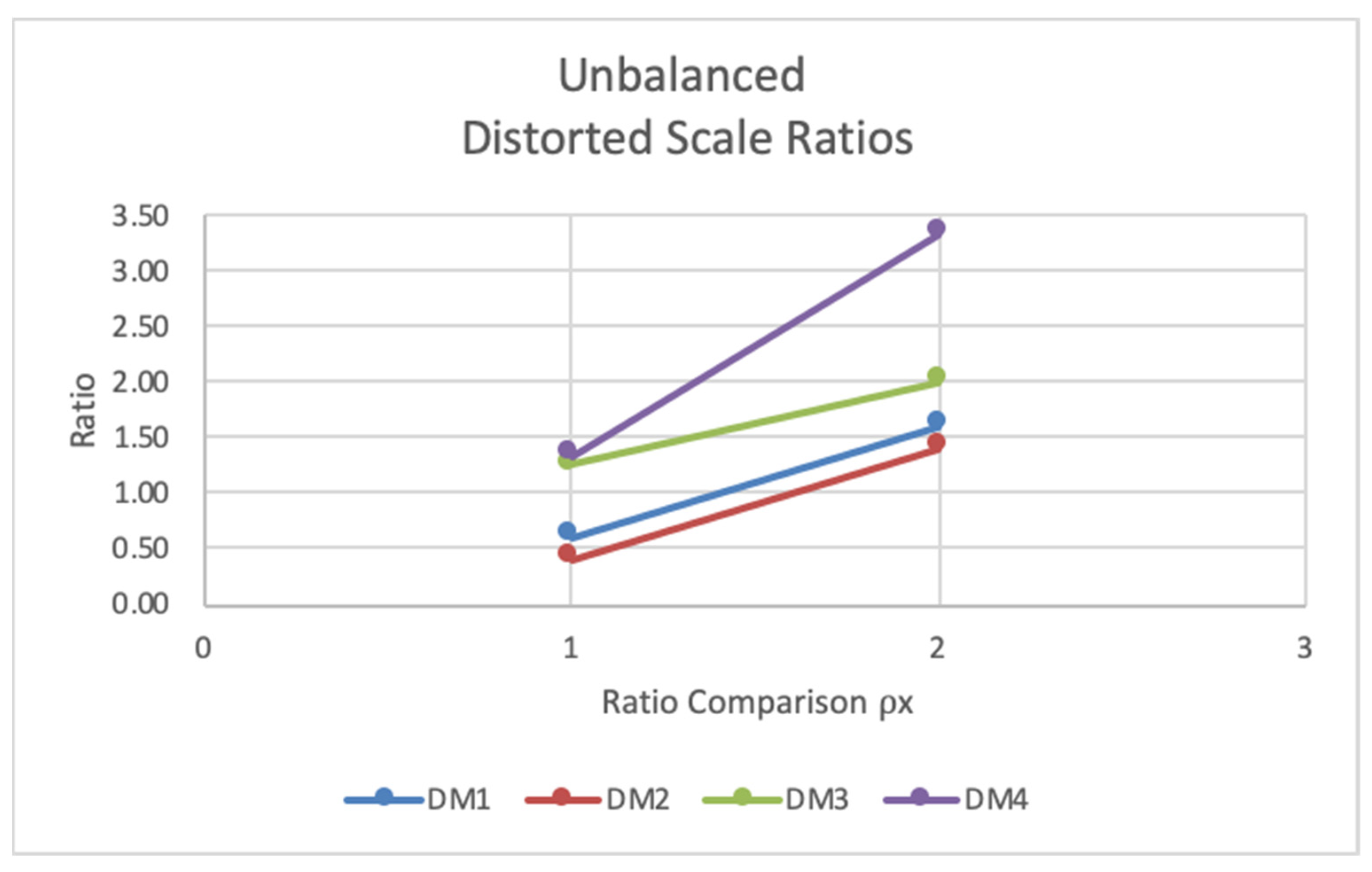

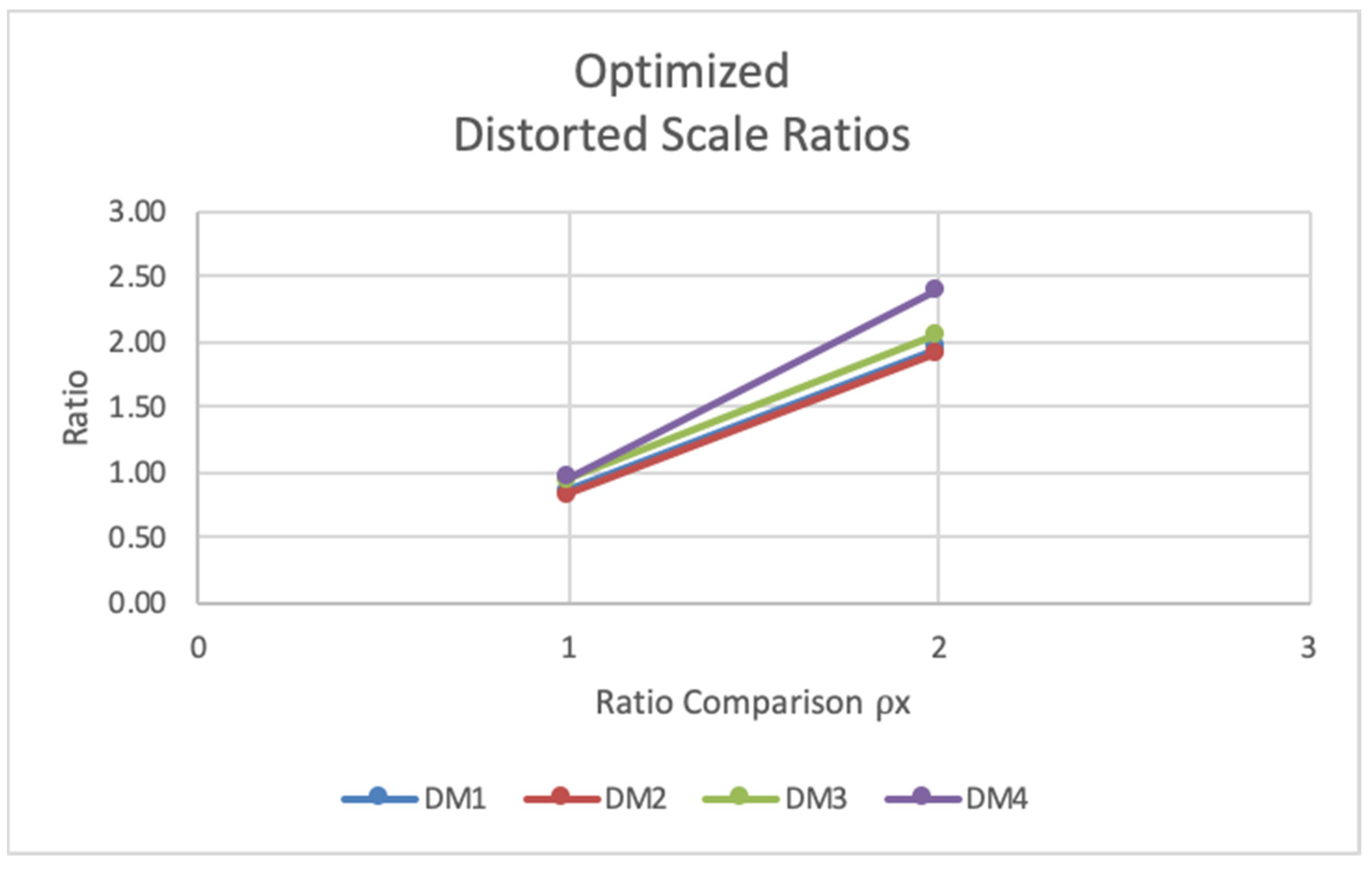

Figure 5 and Figure 6 depict a scenario in which scale trends display balanced distortions. There is clear agreement between DMs on hierarchy, supported by all $\rho_1^m$ and all $\rho_2^m$ values in Figure 6.

3. Optimization Method

There are two classic modes of decision making when considering a context with multiple alternatives, criteria, constraints, and objectives. If the decision-making group agrees on a set of quantifiable task variables, constraints, and objectives, then an optimum resource distribution may be obtained algorithmically. The alternative mode is to seek a satisfactory consensus distribution from group discussion [7].

Previous experiments using optimization in group decision-making processes mainly focused either on measuring the consistency between subjects (consensus) so that a decision context could be optimized under given constraints, or on the ability of a group to agree on an appropriate optimization model for its decision context [7,8,9]. Other research focuses on weighted models of interpersonal conflict in group decisions, such as the Friedkin–Johnsen model [10]. This research differs in that the optimization model aims to minimize the difference between the independent value judgements of different DMs by building the constraints from the independent input of each decision maker. The constraints are built from the ratio scales that each decision maker uses in the pairwise comparisons of alternatives. Using ratio scaling in GDM to create consistency across DMs, stronger criteria independence, and positive cross-alternative anchoring forces a restructuring of the MCDM process. This restructuring is designed to identify and adjust inconsistencies and bias disparities between DMs before relative ratings are entered into the traditional MCDM matrix shown in Table 2.

The optimization formulation presented here focuses on identifying where the ratio scale disparity can be minimized. This minimization must respect the boundaries of the ratio scales imposed by the DMs within the group. To accomplish this, we analyze the ratio scales across alternatives and within criteria for the group of DMs. The matrix of relative scores is shown in Table 3.

It is important to define the critical assumptions in the decision-making process in order to rationalize the optimization formulation used in this experiment:

Alternatives have been vetted and reduced to only the viable, best competing solutions (in this case we use three alternatives).

Alternative criteria are rated independently against criteria requirements.

At least 50% of DMs in the group are compatible (defined below). If not, there are very likely greater issues that need to be addressed in the process that are affecting relative ratings.

Because previous research supports the process of consistent ratio scaling, optimization can be used to reduce the ratio disparity between DMs in GDM situations, both with compatible DMs and in situations that lack compatibility. To quantify this disparity, two quantities are defined for each ratio in Equations (1) and (2); the difference between them for a given ratio is the scale distortion disparity of that ratio. To reduce the total disparity and optimize the solution, we minimize the weighted sum of the ratio disparities, as shown in Equation (3).
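The disparity definitions and Equation (3) are not reproduced in this text, so the Python sketch below only illustrates the general idea under stated assumptions: the disparity of a ratio is taken to be its range across DMs (largest minus smallest), and the weighted sum is evaluated for given weights rather than optimized. The helper names and the ratio values are hypothetical.

```python
def ratio_disparities(rho):
    """rho[m][x] is ratio x of DM m on one criterion.

    ASSUMPTION: the disparity of ratio x is its range across DMs
    (largest value minus smallest value).
    """
    return [max(dm[x] for dm in rho) - min(dm[x] for dm in rho)
            for x in range(len(rho[0]))]


def weighted_disparity(weights, disparities):
    """Weighted sum of disparities (sum over x of weight_x * disparity_x)."""
    return sum(w * d for w, d in zip(weights, disparities))


# Hypothetical ratios (rho_1, rho_2) for M = 4 DMs.
rho = [[0.75, 0.5], [0.5, 1.5], [0.75, 1.0], [0.5, 0.25]]
disparities = ratio_disparities(rho)
print(disparities)                                  # [0.25, 1.25]
print(weighted_disparity([1.0, 0.5], disparities))  # 0.875
```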

The variables in the optimization are the disparity weights $X_x$, one for each ratio $\rho_x$.

Consistent hierarchies among DMs imply consensus, but bias and dependency can still exist in these situations. Additionally, insufficient incremental scale detail can lead to inaccurate ratio comparisons. Because consensus has been achieved, tighter optimization constraints are appropriate here, as shown in constraint Equation (4); these narrow constraints are obtained by taking the difference between two ratio quantities rather than between a ratio and 0. On the other hand, when DMs do not agree on hierarchies, more extreme bias is present, and the constraints must therefore be widened, as shown in constraint Equation (5). To achieve the widest constraint possible in this situation, the difference of each ratio is taken from 0 (i.e., 0 minus the ratio for the lower end and the ratio minus 0 for the upper end). The formulation therefore has two sets of constraints, one for each of these situations, as described below.

Optimization for consistent hierarchies (all DMs have the same hierarchy) is subject to the constraints in Equation (4).

Optimization for incompatible DMs (there are at least two different hierarchies among the DMs in this criterion) is subject to the constraints in Equation (5).

After the optimization is solved, the variables are used to adjust the ratios. In order to make those adjustments, we need the following definitions:

First, define a set of compatible decision makers in a criterion as those DMs that share the same hierarchy of alternative scores in that criterion. Let $H$ be the set of unique hierarchies represented among the ratings of all DMs for that criterion. For all of the DMs, let $h \in H$ be one of these hierarchies, $D_h$ be the set of DMs that indicated hierarchy $h$, and $|D_h|$ be the number of compatible DMs that chose hierarchy $h$.

Notice the following:

$h^* = \arg\max_{h \in H} |D_h|$ is the most common hierarchy indicated by DMs for this criterion;

$|D_{h^*}|$ is the number of DMs that indicated $h^*$ in their ratings;

$D_{h^*}$ is the set of DMs that indicated $h^*$.
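The grouping of DMs by hierarchy can be sketched in Python as follows. Hierarchies are represented as strings such as `"3-1-2"` (highest to lowest), matching the notation used for the test cases; `group_by_hierarchy` is a hypothetical helper, and when two hierarchies tie for most common it simply returns the first one encountered.

```python
from collections import defaultdict


def group_by_hierarchy(hierarchies):
    """hierarchies[m] is the hierarchy chosen by DM m, e.g. "3-1-2".

    Returns (groups, h_star): groups maps each hierarchy h to the list of DM
    indices that chose it (D_h), and h_star is a most common hierarchy.
    On a tie, max() returns the first hierarchy encountered.
    """
    groups = defaultdict(list)
    for m, h in enumerate(hierarchies):
        groups[h].append(m)
    h_star = max(groups, key=lambda h: len(groups[h]))
    return dict(groups), h_star


groups, h_star = group_by_hierarchy(["3-1-2", "3-1-2", "1-3-2", "3-1-2"])
print(groups)                       # {'3-1-2': [0, 1, 3], '1-3-2': [2]}
print(h_star, len(groups[h_star]))  # 3-1-2 3
```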

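Next, define $\bar{\rho}_x(h)$ as the average of ratio $\rho_x^m$ across a set of compatible DMs, $D_h$, that chose hierarchy $h$:

$$\bar{\rho}_x(h) = \frac{1}{|D_h|} \sum_{m \in D_h} \rho_x^m$$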

In order to proceed with the optimization of ratios, the following procedure is followed to leverage the information from compatible decision makers:

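Case 1: If $|D_{h^*}| > M/2$ (a majority of the DMs share the most common hierarchy), then use only ratios from DMs in $D_{h^*}$ to compute $\bar{\rho}_x$:

$$\bar{\rho}_x = \bar{\rho}_x(h^*) = \frac{1}{|D_{h^*}|} \sum_{m \in D_{h^*}} \rho_x^m$$

Case 2a: If $|D_{h^*}| = M/2$ and there are more than two hierarchies among the DMs (i.e., there is a unique hierarchy chosen by half the DMs), then use only ratios from DMs in $D_{h^*}$ to compute $\bar{\rho}_x$ (same computation as Case 1):

$$\bar{\rho}_x = \bar{\rho}_x(h^*)$$

Case 2b: If $|D_{h^*}| = M/2$ and there are only two hierarchies, each chosen by an equal number ($M/2$) of DMs, then use all ratios $\rho_x^m$ ($m = 1, \dots, M$) to compute $\bar{\rho}_x$:

$$\bar{\rho}_x = \frac{1}{M} \sum_{m=1}^{M} \rho_x^m$$

For example, for Case 2b, if two DMs out of four agree on a hierarchy and the other two agree on a different one, then there are only two hierarchies, and the dominant hierarchy is unclear. In this case, the ratings of all four DMs are used to compute $\bar{\rho}_x$.

Case 3: If $|D_{h^*}| < M/2$, do not proceed; a majority of the DMs are not compatible.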
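The averaging and case logic above can be condensed into a single function. This Python sketch is illustrative only: `reference_ratios` is a hypothetical name, the ratios and hierarchies for one criterion are passed in as plain lists, and `None` signals Case 3.

```python
from collections import Counter


def reference_ratios(rho, hierarchies):
    """Return the reference ratios for one criterion, or None for Case 3.

    rho[m][x] is ratio x of DM m; hierarchies[m] is the hierarchy DM m chose.
    """
    M = len(rho)
    counts = Counter(hierarchies)
    h_star, top = counts.most_common(1)[0]  # most common hierarchy and its size
    if 2 * top > M or (2 * top == M and len(counts) > 2):
        # Case 1 (majority) or Case 2a (unique hierarchy chosen by half).
        members = [m for m in range(M) if hierarchies[m] == h_star]
    elif 2 * top == M:
        # Case 2b: exactly two hierarchies, each chosen by half of the DMs.
        members = list(range(M))
    else:
        # Case 3: a majority of the DMs are not compatible; do not proceed.
        return None
    return [sum(rho[m][x] for m in members) / len(members)
            for x in range(len(rho[0]))]


rho = [[0.75, 1.25], [0.5, 1.5], [0.25, 1.75], [0.5, 0.75]]  # hypothetical
print(reference_ratios(rho, ["3-1-2", "3-1-2", "3-1-2", "1-3-2"]))  # [0.5, 1.5]
print(reference_ratios(rho, ["3-1-2", "1-3-2", "1-2-3", "2-1-3"]))  # None
```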

Next, define $d_x^m$ as the original distance between $\rho_x^m$ and $\bar{\rho}_x$ for DM $m$.

Next, the adjusted distance $\hat{d}_x^m$ uses the corresponding optimization solution $X_x$, as shown in Equation (13).

The adjusted ratios ($\hat{\rho}_x^m$) and, ultimately, the adjusted alternative ratings ($\hat{a}_i^m$) are then calculated using the adjusted distance ($\hat{d}_x^m$) from $\bar{\rho}_x$, as shown in Equations (14) and (15).
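The distance and adjustment equations, including Equations (13) to (15), are not reproduced in this text, so the following Python sketch rests on stated assumptions: the original distance is the signed difference between a DM's ratio and the reference ratio; the adjusted distance is supplied by the caller (its dependence on $X_x$ is not modeled, and the halving below is a hypothetical placeholder); adjusted ratios are the reference ratio plus the adjusted distance; and adjusted ratings are the adjusted ratios times the unchanged baseline score of $A_1$. All names and values are hypothetical.

```python
def original_distances(rho_m, rho_bar):
    """ASSUMPTION: signed distance of each DM ratio from the reference ratio."""
    return [r - rb for r, rb in zip(rho_m, rho_bar)]


def adjusted_ratings(a1, rho_bar, adjusted_dist):
    """Adjusted ratio = reference ratio + adjusted distance (assumed form).
    Adjusted rating = baseline score a1 * adjusted ratio; A_1 keeps a1."""
    adj_rho = [rb + d for rb, d in zip(rho_bar, adjusted_dist)]
    return adj_rho, [a1] + [a1 * r for r in adj_rho]


scores = [8.0, 6.0, 4.0]                     # one DM, one criterion
rho_m = [s / scores[0] for s in scores[1:]]  # [0.75, 0.5]
rho_bar = [0.625, 0.5]                       # hypothetical reference ratios
dist = original_distances(rho_m, rho_bar)    # [0.125, 0.0]
dist_adj = [0.5 * d for d in dist]           # HYPOTHETICAL stand-in for Eq. (13)
adj_rho, adj_scores = adjusted_ratings(scores[0], rho_bar, dist_adj)
print(adj_rho)     # [0.6875, 0.5]
print(adj_scores)  # [8.0, 5.5, 4.0]
```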

The resulting MCDM matrix for a single DM after optimizing the ratio scales for all criteria is shown in Table 4.

4. Analysis and Discussion

Consistent with the assumptions stated above, the test cases analyzed scenarios in which at least half of the decision makers agreed on a hierarchy. Twenty-one test cases were analyzed; together they cover every possible hierarchy combination that conflicts with hierarchy 3-1-2 (i.e., $A_3$ rated highest and $A_2$ rated lowest). The optimization toolkit of Excel Solver was used to solve for $X_1$ and $X_2$. Results are shown in Table 5.
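The count of 21 is consistent with one reading of this design, sketched below in Python: four DMs (as in the example cases), DM order ignored, at least two DMs choosing 3-1-2, and the remaining DMs choosing any of the other five orderings of three alternatives. This is an illustrative reconstruction, not the authors' case list, and it does not reproduce the case numbering of Table 5.

```python
from itertools import combinations_with_replacement, permutations

TARGET = "3-1-2"  # A3 highest, A1 middle, A2 lowest
ALL_HIERARCHIES = ["-".join(p) for p in permutations("123")]  # 6 orders
OTHERS = [h for h in ALL_HIERARCHIES if h != TARGET]


def enumerate_cases(n_dms=4):
    """List hierarchy combinations where at least half of n_dms DMs choose
    TARGET and the rest choose any other hierarchy. DM order is ignored."""
    cases = []
    for k in range((n_dms + 1) // 2, n_dms + 1):  # number of DMs choosing TARGET
        for rest in combinations_with_replacement(OTHERS, n_dms - k):
            cases.append((TARGET,) * k + rest)
    return cases


cases = enumerate_cases()
print(len(cases))  # 21 (15 with two on TARGET, 5 with three, 1 with four)
```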

The optimization formulation performed well when bias was not extreme: consensus across all DMs was achieved, as in Case #11, shown in Figure 7, Figure 8, Figure 9 and Figure 10. Bias was considered extreme when at least one DM valued an alternative lowest and the other DM(s) valued the same alternative highest. In Case #11, DM1 and DM2 agree on the rank order of alternatives, as do DM3 and DM4. The disagreement is between alternative 1 and alternative 2. Recall that alternative criteria should be valued against criteria requirements, so significant bias is clearly present in the evaluation of alternative 1 versus alternative 2. After optimization, it is evident that the bias was present in the evaluations of DM3 and DM4, and all adjusted alternative values reflect rank-order consistency (consensus) among all four DMs. It is important to note that scale distortions can exist even when there is complete consensus prior to optimization. Weighted criteria values can therefore skew overall alternative evaluations, which necessitates adjusted scaling, as with Case #1.

When bias was extreme, consensus was generally not reached after optimization. However, ratio disparities were minimized, and alternative ratings and ratio scale trends were smoothed, as in Case #18, shown in Figure 11, Figure 12, Figure 13 and Figure 14. Value disparities between DMs were significantly reduced for alternative 2 and alternative 3. So, even though consensus was not achieved in situations with extreme bias, the optimization reduced scale distortions, relating scales between DMs more accurately and providing a fairer evaluation of weighted criteria. Such situations correspond to decisions in which group discussion may not resolve consensus.

Table 6 shows the total reduction in scale distortion disparity for each case. Even in cases with extreme bias, the average reduction was 54%, with the largest reductions reaching 75%. In all cases, reductions are greater than zero for each $\rho_x$. The average overall reduction for cases without extreme bias was higher, at 66%. This is possibly due to the shape of the constraints when consensus is stronger, which allows for more confidence in bias reduction. Reductions were calculated using Equations (16) and (17).
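Equations (16) and (17) are not reproduced here, so this Python sketch assumes a conventional relative reduction, (before minus after) divided by before, expressed as a percentage, and averages the per-ratio reductions into an overall figure. Both choices are assumptions, and the disparity values are hypothetical rather than taken from Table 6.

```python
def percent_reduction(before, after):
    """ASSUMPTION: relative reduction in disparity, as a percentage."""
    if before == 0:
        raise ValueError("no disparity to reduce")
    return 100.0 * (before - after) / before


# Hypothetical disparities for rho_1 and rho_2 before and after optimization.
before = [0.25, 1.25]
after = [0.125, 0.3125]
per_ratio = [percent_reduction(b, a) for b, a in zip(before, after)]
overall = sum(per_ratio) / len(per_ratio)  # ASSUMPTION: simple average
print(per_ratio)  # [50.0, 75.0]
print(overall)    # 62.5
```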