1. Introduction

Nowadays, non-traditional machining processes are mostly used in the electronics and aerospace industries, where high machining accuracy is required. Non-traditional machining processes remove material using mechanical, thermal, electrical or chemical energy, or combinations of these energies. Unlike traditional machining processes, they do not require sharp cutting tools. Generally, materials that are difficult to machine using conventional processes are machined using non-traditional processes. Electrical discharge machining (EDM) is one of the most widely used non-traditional machining processes. In EDM, material is removed by a thermoelectric process: a series of discrete electrical sparks generated between the workpiece and the electrode erodes the undesired material from the workpiece. Wire electrical discharge machining (WEDM) is widely used to pattern tool steel for die making, and is mostly used to make dies and punches in the aerospace and automotive industries [1]. In this process, a slowly moving wire travels along a pre-defined path and removes material from the workpiece. Wires in WEDM are mostly made of brass, copper, tungsten or molybdenum; zinc- or brass-coated wires are sometimes also used. The wire should have high tensile strength and good electrical conductivity. The surface roughness (SR) and material removal rate (MRR) obtained with WEDM depend on different machining parameters, such as peak current (A), duty factor, wire tension (N) and water pressure (MPa). Thus, optimizing these process parameters is very important to maximize or minimize the response parameters and to reduce machining time.

In the last few decades, researchers have proposed many optimization techniques, such as the genetic algorithm (GA) [2], particle swarm optimization (PSO) [3], ant colony optimization (ACO), artificial bee colony (ABC) [4], cuckoo search optimization (CO), grey wolf optimizer (GWO) [5], arithmetic optimization algorithm (AOA) [6], salp swarm algorithm (SSA) [7], ant lion optimization (ALO) [8], whale optimization algorithm (WOA) [9], multi-verse optimization (MVO), bat algorithm [10] and dragonfly algorithm (DA) [11]. These are called non-traditional optimization techniques or metaheuristic techniques. They have been used in many fields, such as production, project scheduling, management, manufacturing and design.

Hewidy et al. [12] used an RSM-based metamodel of the WEDM process to optimize the process parameters. The objective of their work was to maximize MRR and minimize SR and wear ratio (WR). The experiments were conducted on Inconel 601. Zhang et al. [13] optimized the process parameters for machining SKD11. They coupled a back-propagation neural network with a genetic algorithm (BPNN-GA) to maximize MRR and minimize SR. Shihab [14] examined the optimal machining parameters using a Box-Behnken design (BBD). Chaudhary et al. [15] optimized the WEDM process parameters for machining ASSAB’88 tool steel with RSM. Their main objective was to find the process parameter settings that maximize MRR and minimize SR. Mahapatra and Patnaik [16] examined the optimum process parameters of WEDM using the Taguchi method. They considered discharge current, pulse duration, pulse frequency, wire speed, wire tension and dielectric flow rate as process parameters, and MRR, SR and cutting width (kerf) as response parameters. Nayak and Mahapatra [17] optimized the WEDM process parameters for a deep cryo-treated Inconel 718 material. Mukherjee et al. [18] performed a comparative study of six non-conventional optimization techniques, i.e., genetic algorithm (GA), particle swarm optimization (PSO), sheep flock algorithm (SF), ant colony optimization (ACO), artificial bee colony (ABC) and biogeography-based optimization (BBO). The objective of their study was to maximize the MRR and minimize the SR and WR values for the WEDM process.

A literature survey revealed that many researchers have used varied techniques to optimize the process parameters of machining processes. However, most researchers have been limited to desirability analyses through RSM and the use of traditional metaheuristics like GA and PSO. Furthermore, no comparative study of recently proposed metaheuristic techniques has been carried out, and very little literature is available in which recent nature-inspired optimization techniques are used to optimize the process parameters of machining processes. Thus, in this paper, six newly proposed metaheuristic techniques, namely, ant lion optimization (ALO), arithmetic optimization algorithm (AOA), dragonfly algorithm (DA), grey wolf optimizer (GWO), salp swarm algorithm (SSA) and whale optimization algorithm (WOA), are compared with each other and with previously published results. The rest of the article is organized as follows: the second section presents the theoretical background of the six metaheuristic algorithms together with their pseudocode; the third section describes the problem; the fourth section presents the results and discussion; and the final section draws the conclusions.

3. Problem Description

The WEDM process is used to make micro holes and complex geometries in very hard materials. Since all non-traditional machining processes are very costly and time-consuming, finding the optimum process parameters is important for reducing machining cost and machining time. In this study, second-order regression models are formulated to maximize the MRR and minimize the WR and SR. Peak current (A), duty factor, wire tension (N) and water pressure (MPa) are considered as process parameters. The experiments were conducted by Hewidy et al. [12] on an ELEKTTA MAXI-CUT434 CNC WEDM machine using brass CuZn377 wire of 0.25 mm diameter and 6 mm thick Inconel 601 as the workpiece material. Each process parameter was discretized into five equispaced levels. In total, 31 experiments were performed, and the MRR, WR and SR were recorded. Based on the experimental data [12], second-order regression models were formulated considering the four process parameters. The polynomial regression models of MRR, WR and SR are given below in Equations (41)–(43), respectively.

From the regression model of WR, it is found that the WR is only dependent on two parameters, i.e., peak current and water pressure. The duty factor and wire tension do not significantly affect WR and thus, were dropped from the regression model.

The optimization problem may be defined as,

maximize MRR, or minimize WR, or minimize SR

The six metaheuristic algorithms considered in the study were compared against each other in terms of obtaining the best optimal solution, i.e., for MRR optimization, the algorithm with the largest function value was considered superior, and for WR and SR, the algorithms with the smallest function values were considered superior. To make an unbiased comparison, the total number of function evaluations for each algorithm was restricted to 3000. Since metaheuristics are stochastic in nature, 10 independent trials were carried out for each algorithm. Emphasis was placed on the best value, mean value and standard deviation of the 10 trials. In general, a low standard deviation and a mean value as close as possible to the best value were desired. A lower standard deviation indicates better reliability of the algorithm. The algorithms were also evaluated in terms of computational time requirements.
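The comparison protocol described above (a fixed budget of 3000 function evaluations, 10 independent trials, and the best value, mean and standard deviation as summary statistics) can be sketched as follows. This is a minimal illustration only: `toy_response` is a hypothetical stand-in for the regression models, the unit bounds are assumed, and plain random search is swapped in for the six metaheuristics.

```python
import random
import statistics


def toy_response(x):
    """Hypothetical smooth objective to maximize (NOT the paper's regression model)."""
    return -sum((xi - 0.5) ** 2 for xi in x)


def random_search(objective, bounds, budget, rng):
    """Placeholder optimizer: best value among `budget` uniformly sampled points."""
    best = float("-inf")
    for _ in range(budget):
        x = [rng.uniform(lo, hi) for lo, hi in bounds]
        best = max(best, objective(x))
    return best


def run_trials(objective, bounds, budget=3000, trials=10, seed=0):
    """Run independent trials with the same evaluation budget and summarize them."""
    rng = random.Random(seed)
    bests = [random_search(objective, bounds, budget, rng) for _ in range(trials)]
    return {
        "best": max(bests),                 # best value over all trials (maximization)
        "mean": statistics.mean(bests),     # mean of the per-trial best values
        "std": statistics.stdev(bests),     # spread across trials (reliability)
    }


# Four assumed variables on assumed unit bounds, mirroring the four process parameters.
summary = run_trials(toy_response, bounds=[(0.0, 1.0)] * 4)
print(summary)
```

Any real optimizer with the same call shape as `random_search` could be dropped in, so every algorithm is charged the same evaluation budget per trial.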

4. Results and Discussion

To make a fair comparison among all the selected algorithms, the number of search agents and iterations were limited to 30 and 100, respectively. Further, to eliminate any bias, each algorithm was independently run for 10 trials for each response.

Figure 1 shows the convergence plot for the six metaheuristics for a typical trial while optimizing MRR. It is observed from the plot that, except for AOA, all algorithms showed an appreciable improvement in the best solution after the first few iterations. The convergence trends of GWO and WOA were similar for MRR optimization. ALO, DA and SSA showed monotonic improvement in the best solution for the first few iterations, after which the improvement was negligible.

Table 1 shows the performance of the algorithms in terms of statistical parameters of the 10 independent trials. It is observed that, except for AOA, all five algorithms reported the best value of 8.765. DA and SSA have a mean value of 8.765 for MRR optimization, indicating that these two algorithms were able to find the best-known value in all 10 trials. Thus, the success rate (i.e., the ratio of the number of times the best-known value was achieved by the algorithm to the number of trials) for DA and SSA was 100%. The success rates for GWO, WOA, ALO and AOA were 90%, 83%, 77% and 0%, respectively.
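The success rate defined above can be computed as in the following minimal sketch. The trial values and the tolerance `tol` are hypothetical and chosen only for illustration; they are not the values recorded in Table 1.

```python
def success_rate(trial_bests, best_known, tol=1e-3):
    """Percentage of trials whose best value matches the best-known value within tol."""
    hits = sum(1 for value in trial_bests if abs(value - best_known) <= tol)
    return 100.0 * hits / len(trial_bests)


# Hypothetical outcome of 10 trials: nine reach the best-known value, one does not.
trial_bests = [8.765] * 9 + [8.700]
rate = success_rate(trial_bests, best_known=8.765)
print(rate)  # 90.0
```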

To delve deeper into the performance of the metaheuristics, the total function evaluations of each algorithm for a typical trial were plotted in the form of box plots in Figure 2.

For the five algorithms other than AOA, the mean value of the total function evaluations was found to be significantly below the median line. This indicates that the lower half of the function evaluations had much smaller values than the upper half. This could also indicate that the algorithms started at a low function value and took a significant number of iterations to reach the high function value (optimal) zone. However, to draw a clear conclusion and avoid misinterpretation, it is important to read this plot in conjunction with Table 1. For example, AOA’s mean and median values are very close to each other, with a very low spread of function evaluations. However, as seen from Table 1, it could not locate the best-known value. This would mean that, despite randomly starting from a better initial position than the other algorithms, AOA was not able to improve its best-known solution iteratively. This is most likely due to AOA being trapped in a local optimum and being unable to navigate out of it. Among the other algorithms, GWO and ALO are seen to have fewer function evaluations with low values, indicating that they converged more rapidly towards the optimal zone.
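The reading of the box plots above rests on comparing the mean and the median of the evaluated function values. A minimal sketch with hypothetical values (not data from Figure 2) shows how a few low early evaluations pull the mean below the median in a maximization run:

```python
import statistics

# Hypothetical function values evaluated over one maximization run:
# a couple of poor early values followed by many values near the optimum.
values = [1.0, 2.0, 7.5, 8.0, 8.5, 8.6, 8.7, 8.7, 8.7]

mean_value = statistics.mean(values)
median_value = statistics.median(values)

# A mean well below the median signals a tail of low values (left skew).
print(mean_value < median_value)
```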

The optimal process parameters and the MRR, as reported by the various metaheuristics, are presented in Table 2. With respect to the Hewidy et al. [12] solutions, AOA showed a 24.63% improvement. All the other algorithms reported a 33.41% improvement over the existing solutions in the literature.

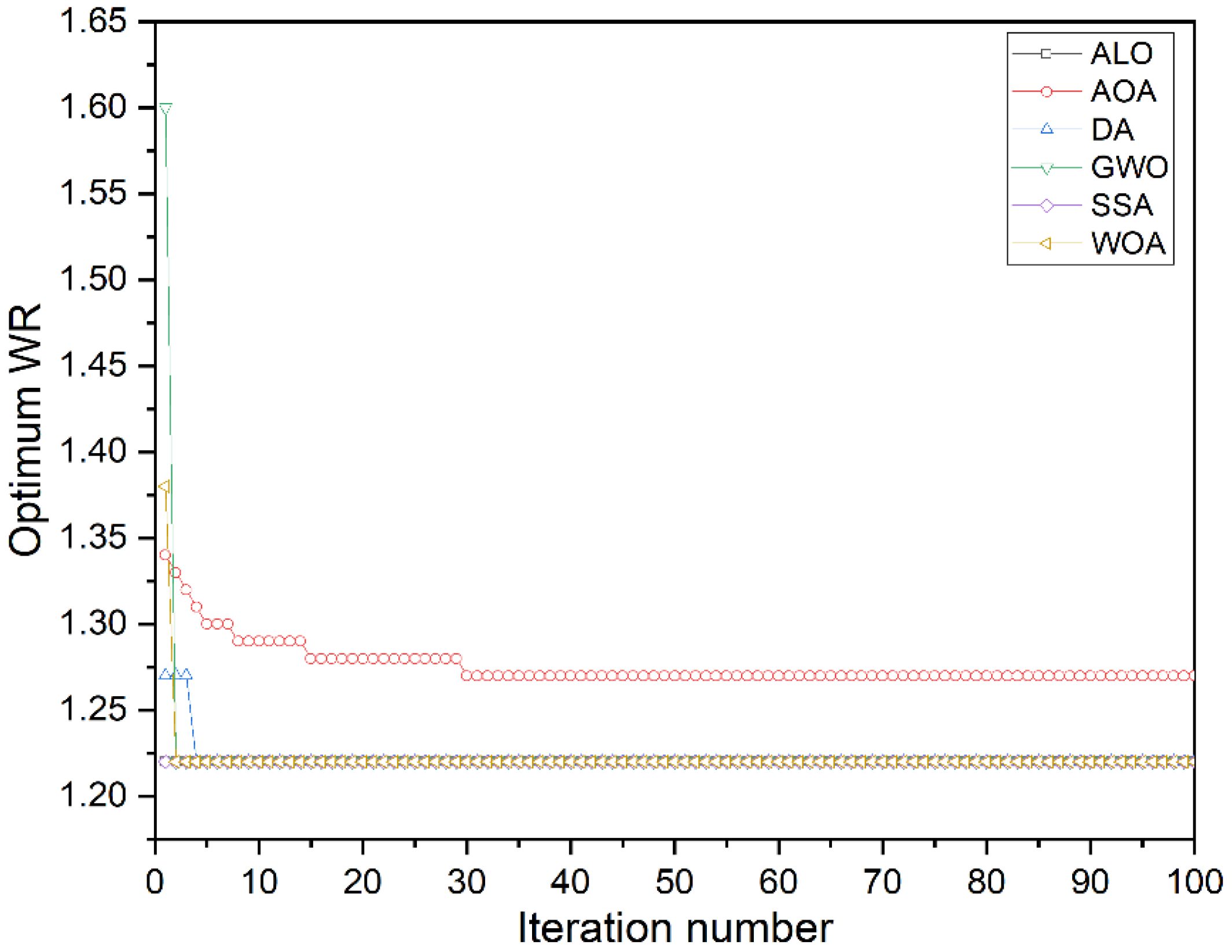

Figure 3 shows the convergence of the algorithms while optimizing the WR. It was observed that, except for AOA, all algorithms have a similar convergence trend.

Table 3 contains the statistical summary of the 10 independent trials of WR optimization. As in MRR optimization, AOA was unable to locate the best-known optimum for WR. All the other five algorithms reported the best-known optimum to be 1.216, an improvement of approximately 25% over the AOA results. As seen from Equation (42), the WR model was linear with only two process parameters. This may be the reason behind the 100% success rate reported by all five algorithms other than AOA.

Figure 4 shows the spread of the total function evaluations during a typical trial while optimizing WR. The overall pattern of the spread and distribution of the function evaluations in Figure 4 is very similar to that in Figure 2. This indicates that the algorithms are unaffected by whether the optimization problem is a minimization or maximization type. GWO was seen to have the highest median function evaluation value among the algorithms. Moreover, the mean function evaluation value for GWO was very close to its median. This indicates that a very high percentage of GWO’s evaluated functions were in the optimal zone. This is generally preferred as it may be indicative of a high convergence rate.

The optimum process parameters for minimizing WR are presented in Table 4. With respect to Hewidy et al. [12], the current results are 70% better for AOA and 71.32% better for ALO, DA, GWO, SSA and WOA. Similarly, the optimum process parameters for minimizing SR are presented in Table 5. In this case, the current ALO and AOA results are observed to be 47.68% better than Hewidy et al. [12]. On the other hand, the DA and GWO results were 47.82% better, while SSA and WOA were 48.05% better than Hewidy et al. [12]. However, it is important to point out that the six algorithms reported different optimized values of the process parameters. This indicates that the objective function search space was, perhaps, multimodal. It is also worth mentioning that Hewidy et al. [12] used an RSM-based model to calculate the optimum values. The optimum values of the responses obtained by Hewidy et al. [12] were MRR = 6.57 mm³/min, WR = 4.24 and SR = 2.20 µm. The best-known optimum values obtained in this study were MRR = 8.765 mm³/min, WR = 1.216 and SR = 1.143 µm.
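The percentage improvements quoted for the best-known optima follow from the reported response values. The short sketch below recomputes them; the helper name `improvement_pct` is introduced here for illustration and does not come from the original study.

```python
def improvement_pct(reference, new, maximize):
    """Relative improvement of `new` over `reference`, in percent."""
    gain = (new - reference) if maximize else (reference - new)
    return 100.0 * gain / reference


# (Hewidy et al. value, best-known value in this study, is it a maximization response?)
reported = {
    "MRR": (6.57, 8.765, True),
    "WR": (4.24, 1.216, False),
    "SR": (2.20, 1.143, False),
}

improvements = {
    name: round(improvement_pct(ref, new, maximize), 2)
    for name, (ref, new, maximize) in reported.items()
}
print(improvements)
```

The resulting values agree with the 33.41%, 71.32% and 48.05% improvements stated in the text.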

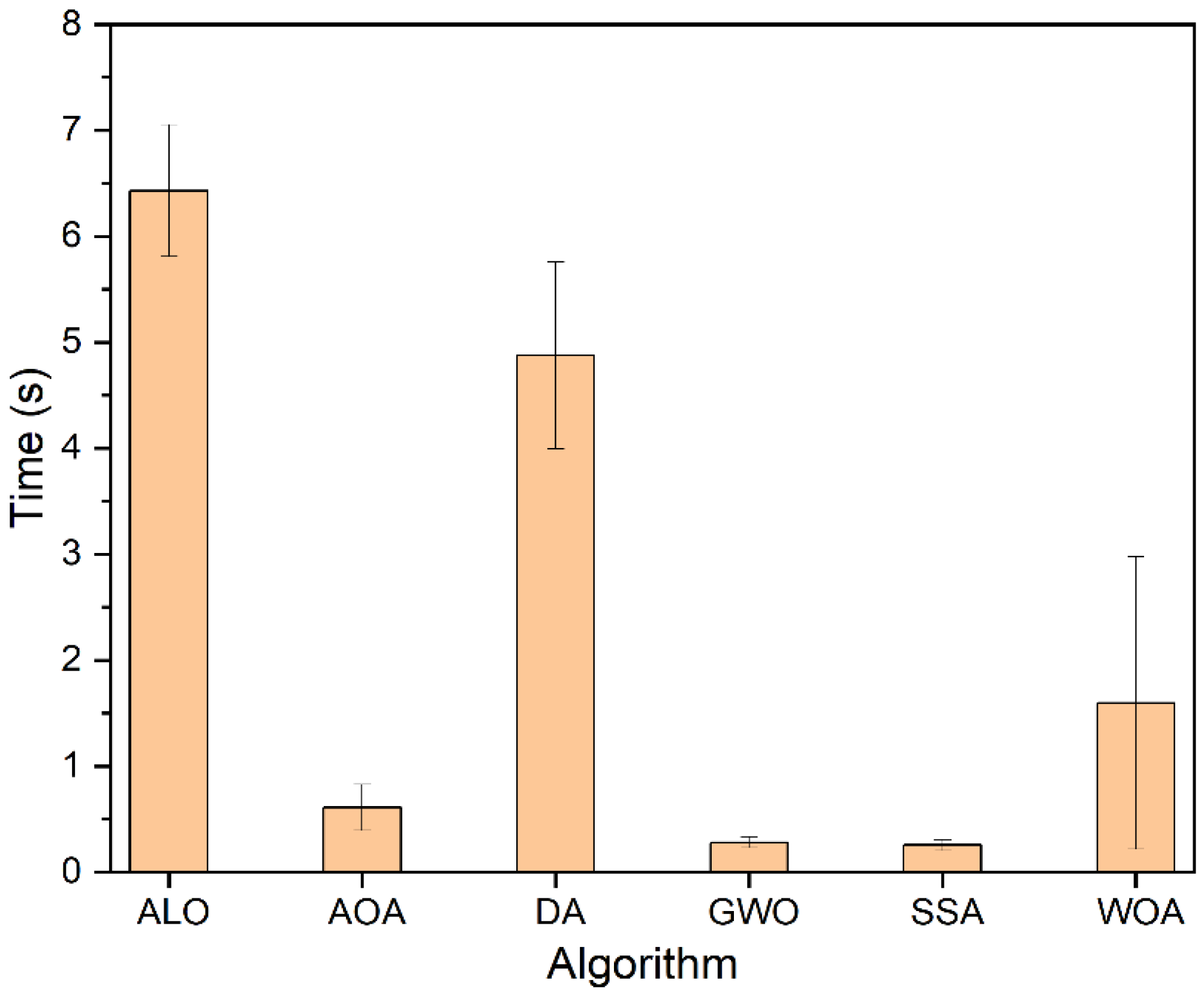

Though the same number of function evaluations (i.e., 30 × 100 = 3000) was carried out by each algorithm, some algorithms were expected to be faster than others. Thus, the CPU time of each algorithm was noted and averaged over 10 independent trials for each response. From Figure 5, it is observed that ALO, DA and WOA were the three most expensive algorithms, whereas SSA, GWO and AOA were the three least expensive. Nevertheless, the standard deviation of the computational time for WOA was very high, indicating that in some instances, it may take a very short computation time. Perhaps this is because WR optimization, a linear problem with only two variables, was solved very quickly.

All six recent algorithms performed better than the existing solutions by Hewidy et al. [12]. Hewidy et al. [12] used a desirability function-based approach, which is, in general, incapable of locating the global optimum. The recent metaheuristics tested in this paper recorded approximately 25%, 70% and 47% (or more) better solutions than Hewidy et al. [12] for MRR, WR and SR, respectively. This improvement is perhaps due to the fact that the metaheuristics initiate a random population and algorithmically improve it over generations by continuously evolving the solutions. Based on the comprehensive evaluations of the algorithms on the three machining-related test functions, it can be summarized that despite AOA being the most recent algorithm among the six tested metaheuristics, it is not necessarily the best at locating the best-known optima. It is evident that AOA is trapped in a local optimum region, and for both minimization-type test functions, the solution of AOA was 1–2% poorer than the best-known optimum. For the maximization-type test function, the best-known optimum was observed to be roughly 9% better than AOA’s solution. However, the computational time requirement of AOA was quite low, nearly on par with SSA and GWO. In terms of convergence, SSA was observed to be the fastest. The median evaluated function value of SSA was seen to be considerably lower (for minimization problems) than its mean evaluated function value, indicating faster navigation to the optimal solution zone.

5. Conclusions

Machining process optimization is a necessary task for manufacturing industries. It can lead to significant savings in material wastage, power consumption and tool wear, and can improve the productivity and efficiency of the process. Since a plethora of novel algorithms have been proposed in the recent past, each with demonstrated capabilities in the literature, it is important to comprehensively compare them for their potential use in machining process optimization. In this article, six recently proposed nature-inspired algorithms, namely, ALO, AOA, DA, GWO, SSA and WOA, were comprehensively assessed, and the following conclusions are drawn.

Based on the ability to navigate and find the optimal solution, the tested algorithms may be ranked as SSA > WOA > GWO > DA > ALO > AOA. Both SSA and WOA were able to locate the best solution for all three responses. However, SSA’s success rate was 100%, as opposed to 83% for WOA.

Based on the computational time, the tested algorithms may be ranked as SSA > GWO > AOA > WOA > DA > ALO. Both SSA and GWO had minimal and similar computational requirements. However, SSA had a marginally lower standard deviation than GWO. Compared with ALO, SSA was observed to be about 25 times faster.

The convergence of SSA was observed to be slightly better than its counterparts. GWO also showed fast convergence. AOA was prone to be trapped in local optima.

As compared to the previous known best solutions, an average (on three responses) improvement of 50.8% and 47.47% was observed for ALO and AOA, respectively. DA and GWO showed a 50.85% improvement, whereas SSA and WOA recorded a 50.93% improvement.
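The average improvements listed above can be recomputed from the per-response percentages reported in Section 4, as in the following minimal sketch. The AOA entry for WR uses the rounded 70% quoted in the text, so its average comes out near 47.44% rather than the reported 47.47%; the small gap is presumably a rounding effect.

```python
# Per-response improvements over Hewidy et al. (MRR, WR, SR), in percent,
# as quoted in the results section (AOA's WR value is the rounded 70%).
reported_pct = {
    "ALO": (33.41, 71.32, 47.68),
    "AOA": (24.63, 70.00, 47.68),
    "DA": (33.41, 71.32, 47.82),
    "GWO": (33.41, 71.32, 47.82),
    "SSA": (33.41, 71.32, 48.05),
    "WOA": (33.41, 71.32, 48.05),
}

# Unweighted mean over the three responses for each algorithm.
averages = {name: sum(pcts) / len(pcts) for name, pcts in reported_pct.items()}

for name, avg in averages.items():
    print(f"{name}: {avg:.2f}%")
```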

The limitations of this study are that there are several advanced and hybrid variants of the algorithms, which were not considered in this paper. The study is also limited to one class of optimization problems. Nevertheless, the current optimization problems are of immense importance to industries. In the future, this study can be extended to incorporate an in-depth analysis of hybrid metaheuristics and the use of advanced quantum and chaotic enhancements to these algorithms. Optimization under uncertainty could also be an interesting area for their application.