Object Detection: Custom Trained Models for Quality Monitoring of Fused Filament Fabrication Process

Abstract

1. Introduction

1.1. Relevant Work

1.2. Artificial Intelligence and Computer Vision

1.3. Object Detection

1.4. Computational Power–GPU

1.5. Problem Description

2. Materials and Methods

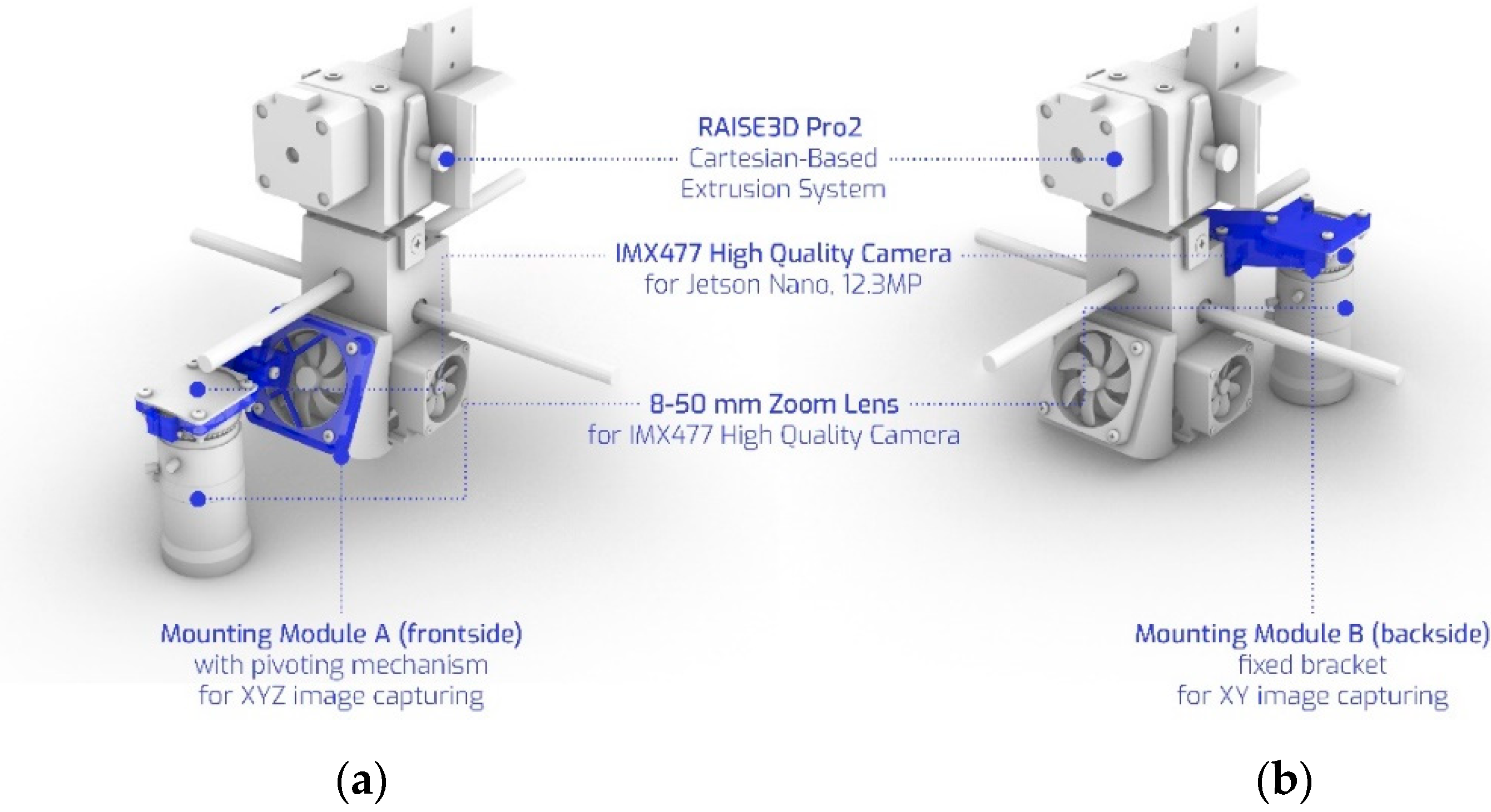

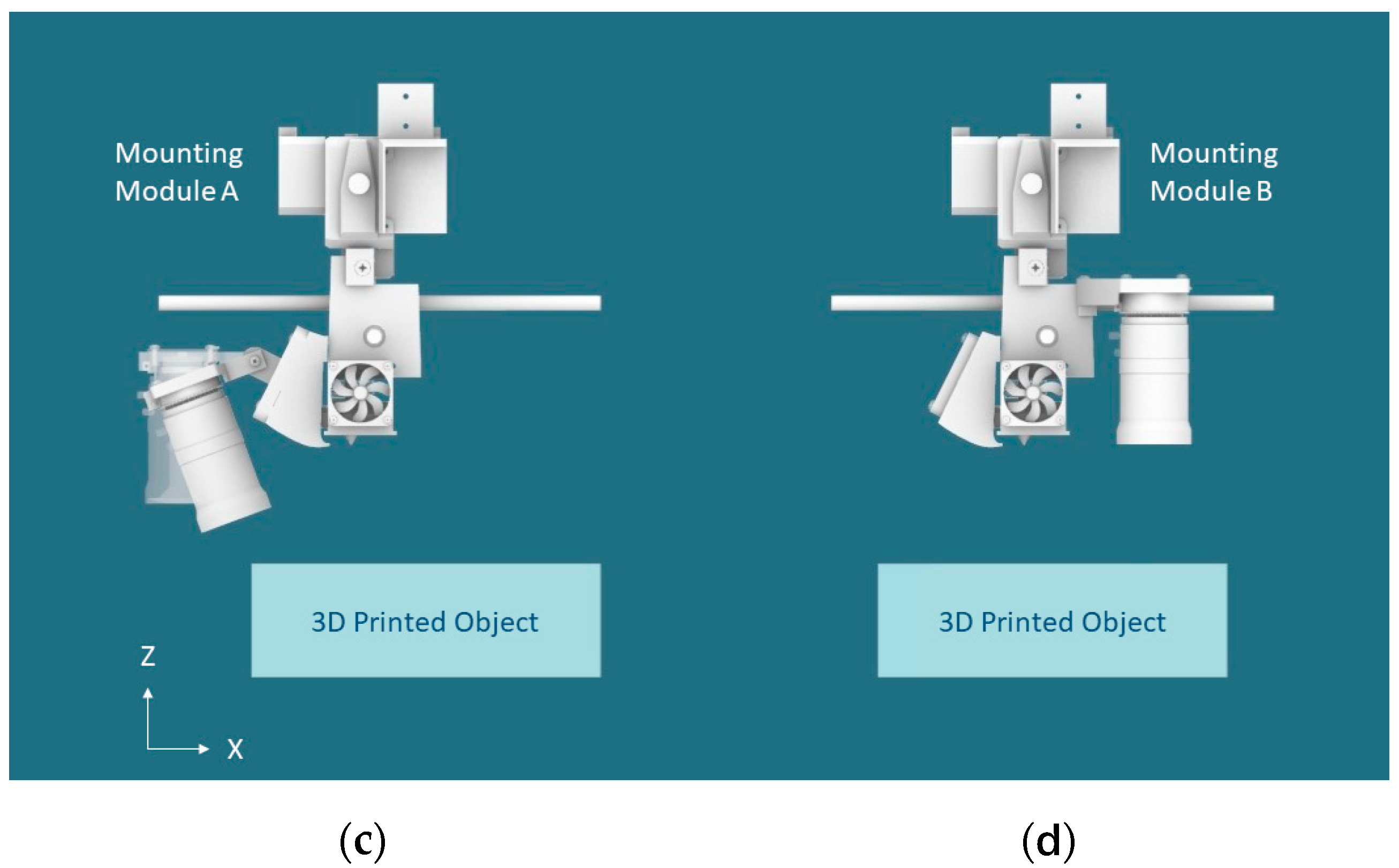

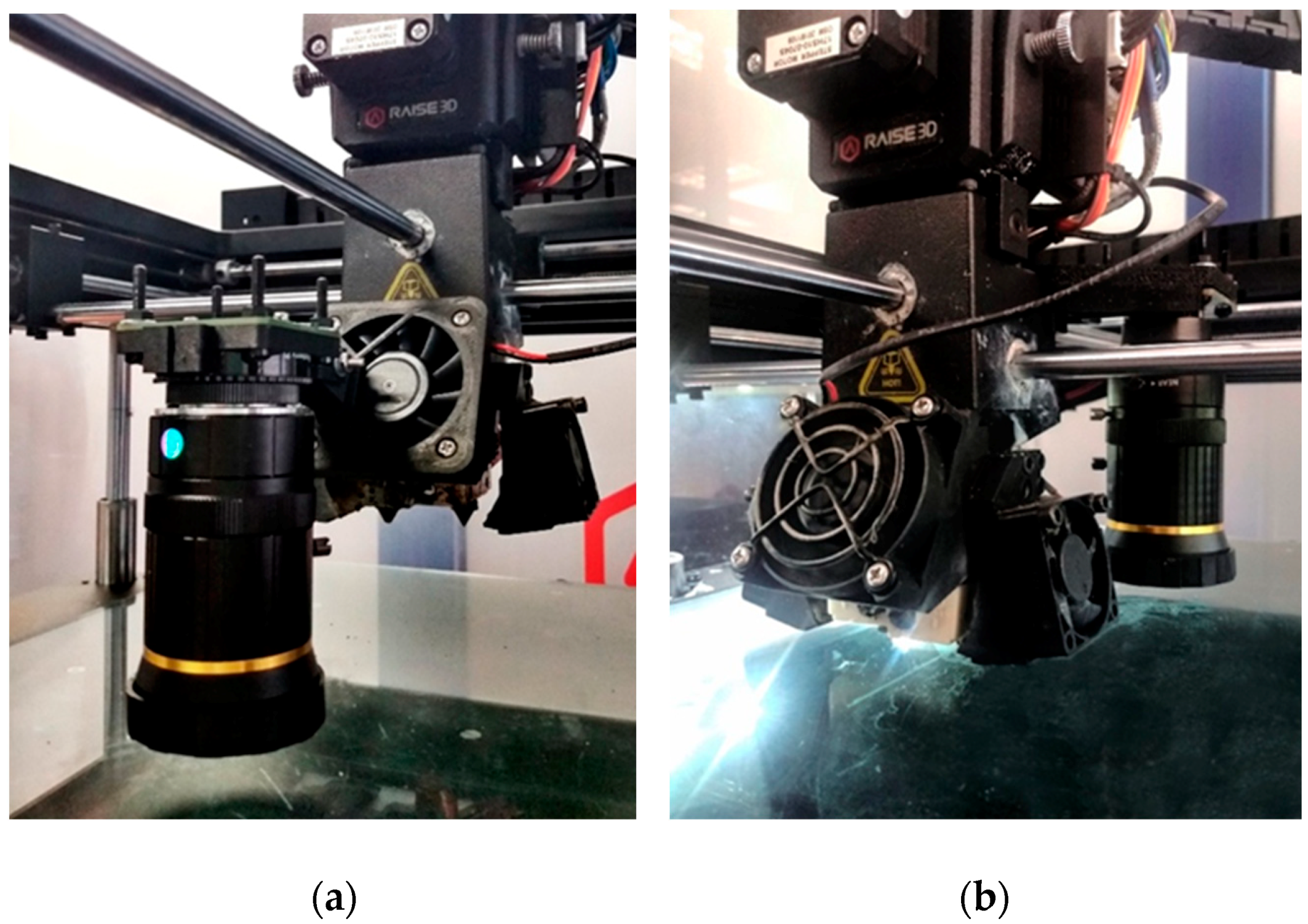

2.1. AM Hardware and 3D-Printed Customizable Tools for Detection Devices Integration

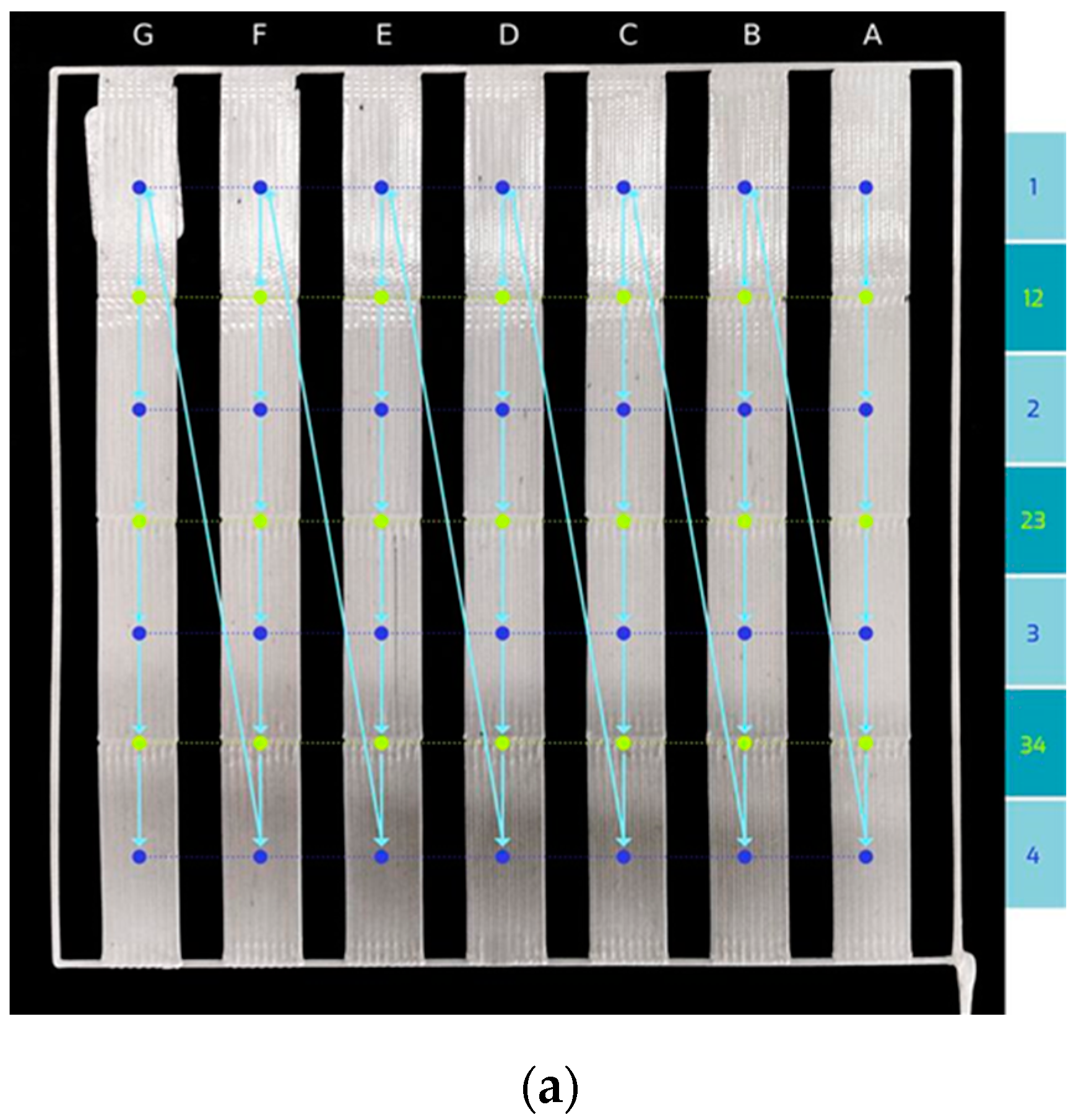

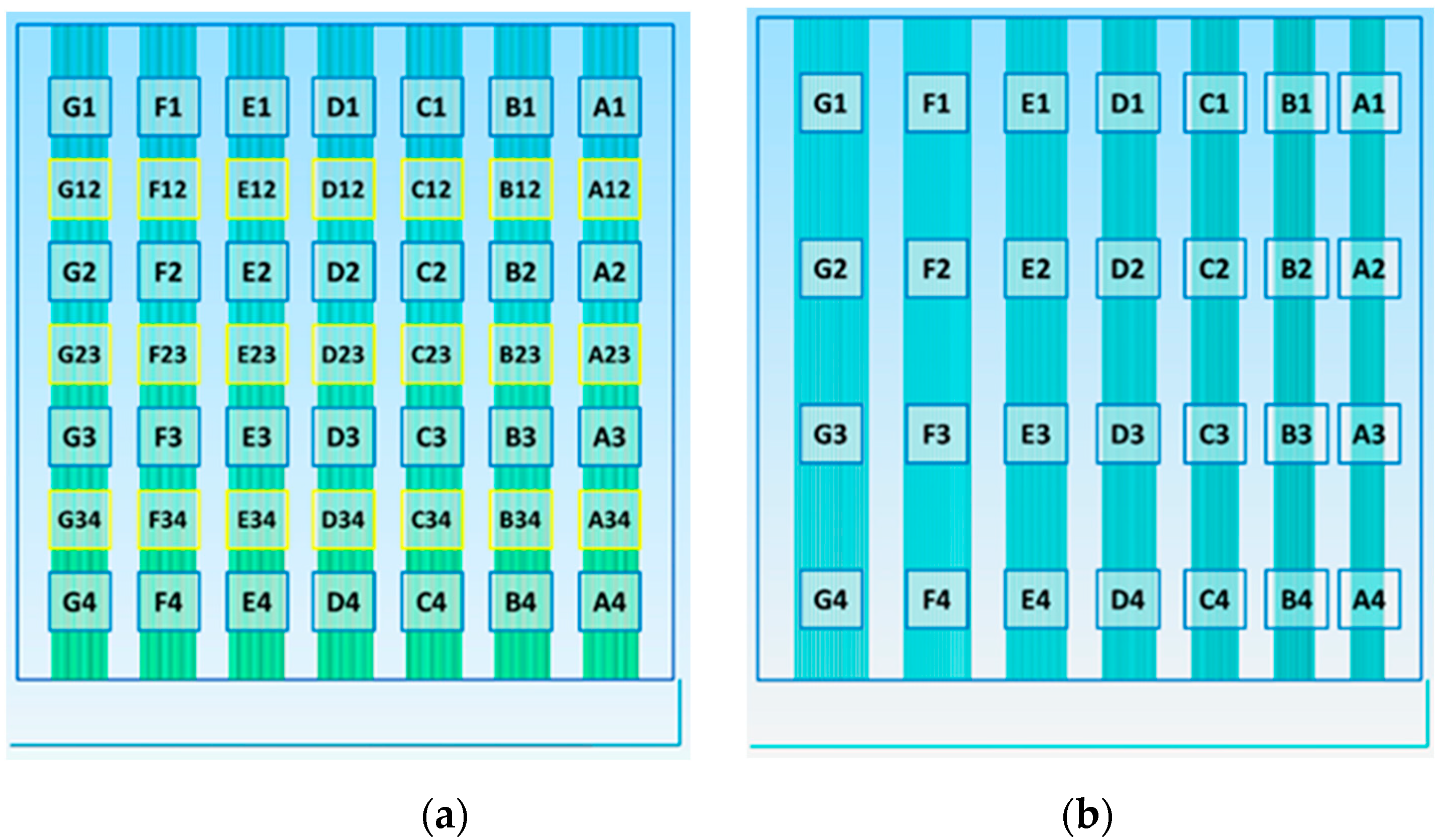

2.2. FFF Testing Specimens

2.3. Hardware Setup

- Focal Length: 16 mm;

- Aperture: F1.4-16;

- Dimensions: 39 mm × 50 mm.

2.4. Training and Methodology

2.4.1. Annotation Labels

2.4.2. Training

2.5. Custom G-Code and Image Capturing

2.6. Defined Workflow

- (1)

- results.csv

| Filename | Label | x | y | Width | Height |
|---|---|---|---|---|---|
| … | … | … | … | … | … |

- (2)

- processed_results.csv

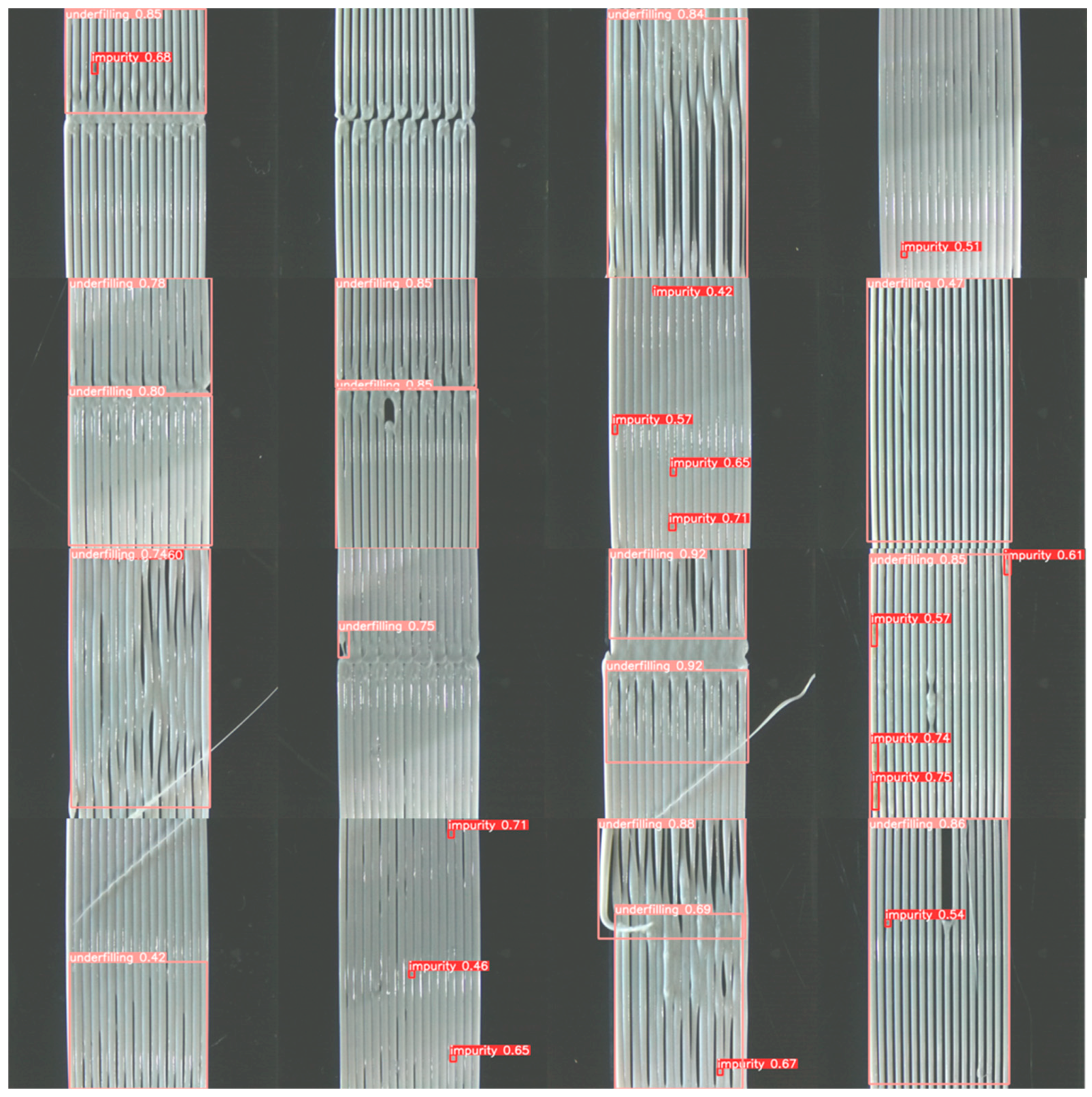

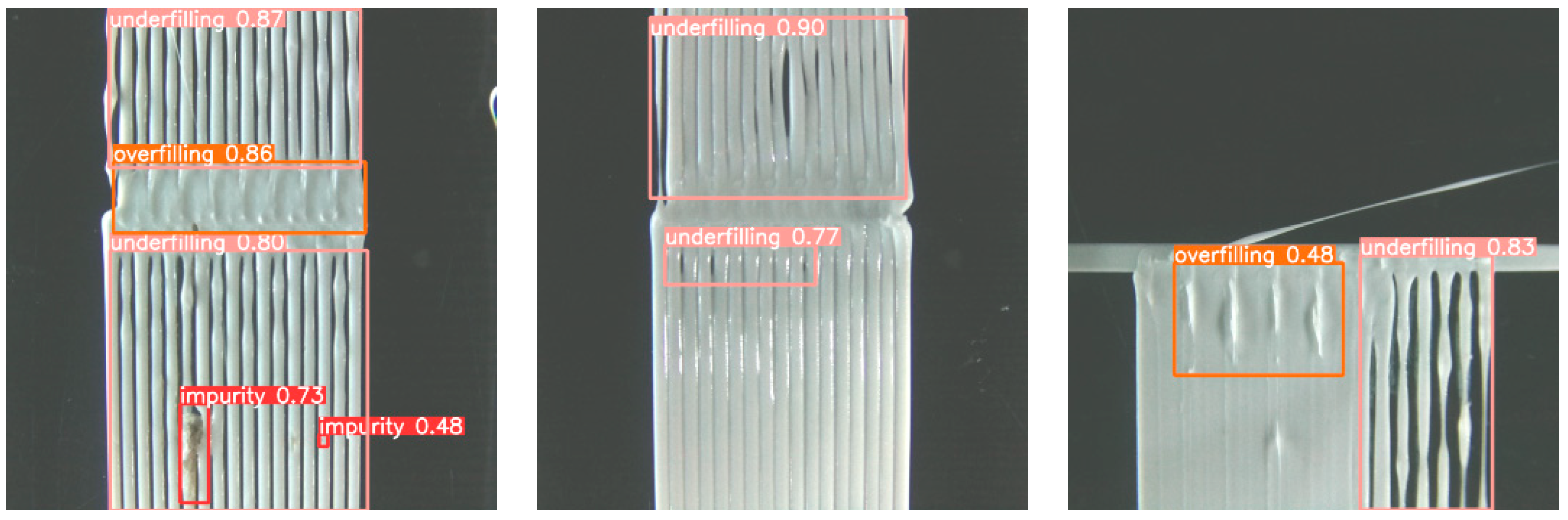

3. Results and Discussion

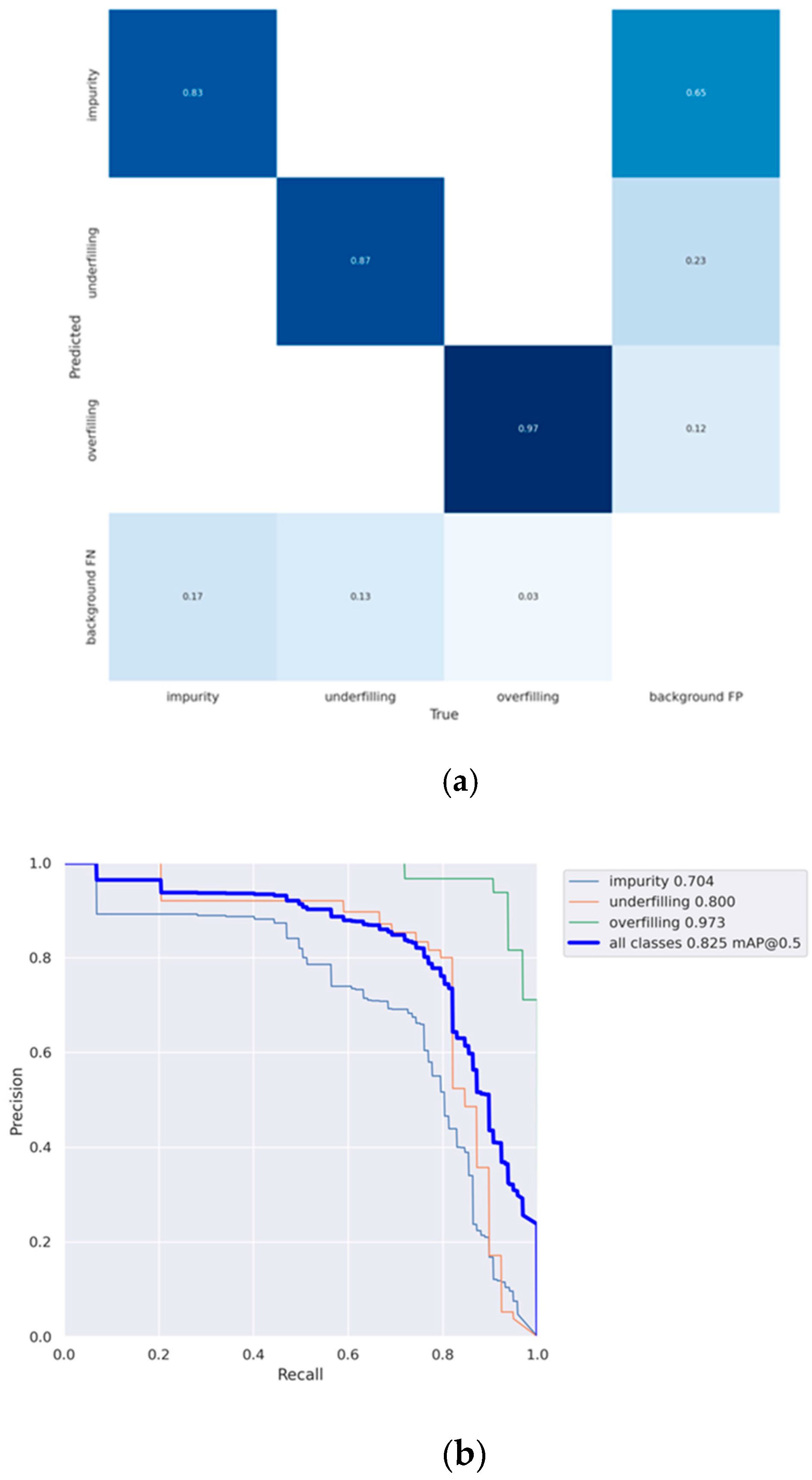

Images, Defects, Ground Truth over Prediction

- True positive (TP) indicates a prediction-target mask (and label) pair whose IoU score exceeds a predefined threshold;

- False positive (FP) indicates a predicted object mask that has no associated ground truth object mask;

- False negative (FN) indicates a ground truth object mask that has no associated predicted object mask;

- True negative (TN) is the background region that the model correctly does not detect; because these regions are not explicitly annotated in an instance segmentation problem, we chose not to calculate it;

- $\text{Accuracy} = \dfrac{TP}{TP + FP + FN}$;

- $\text{Precision} = \dfrac{TP}{TP + FP}$.
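
The definitions above can be illustrated with a minimal Python sketch. It computes the IoU of two boxes and then accuracy as TP / (TP + FP + FN) and precision as TP / (TP + FP). Axis-aligned boxes are used instead of masks for brevity; the function names, the `(x_min, y_min, x_max, y_max)` box layout and any counts passed in are illustrative assumptions, not values or code from this study.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def accuracy(tp, fp, fn):
    """Accuracy without true negatives: TP / (TP + FP + FN)."""
    total = tp + fp + fn
    return tp / total if total else 0.0


def precision(tp, fp):
    """Precision: TP / (TP + FP)."""
    total = tp + fp
    return tp / total if total else 0.0
```

A prediction would count as a true positive when `iou(predicted_box, ground_truth_box)` exceeds the chosen threshold and the labels match.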

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Defect Type | Annotated Bounding Boxes |
|---|---|
| Underfill | 475 |
| Overfill | 178 |
| Impurity | 154 |

| Hyperparameter | Value | Description |
|---|---|---|
| epochs | 100 | total epochs of training |
| batch_size | 64 | total batch size for all GPUs |
| imgsz | 640 | train, val image size (pixels) |
| optimizer | SGD | optimizer function |
| lr0 | 0.03826 | initial learning rate |
| momentum | 0.65250 | SGD momentum |
| weight_decay | 0.00009 | optimizer weight decay |
| warmup_epochs | 3.0 | warmup epochs (fractions ok) |
| warmup_momentum | 0.8 | warmup initial momentum |
| warmup_bias_lr | 0.8 | warmup initial bias lr |
| box | 0.05 | box loss gain |
| cls | 0.5 | cls loss gain |
| cls_pw | 1.0 | cls BCELoss positive_weight |
| obj | 1.0 | obj loss gain (scale with pixels) |
| obj_pw | 1.0 | obj BCELoss positive_weight |
| iou_t | 0.4436 | IoU training threshold |
| anchor_t | 7.538 | anchor-multiple threshold |
| fl_gamma | 0.0 | focal loss gamma (EfficientDet default gamma = 1.5) |
| hsv_h | 0.015 | image HSV-Hue augmentation (fraction) |
| hsv_s | 0.7 | image HSV-Saturation augmentation (fraction) |
| hsv_v | 0.4 | image HSV-Value augmentation (fraction) |
| fliplr | 0.5 | image flip left-right (probability) |
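
Values such as those in the table are often kept in a small configuration file so that a training run can be reproduced. The sketch below stores a subset of the listed values in a Python dict and serializes them as flat `key: value` lines. The dict name, the helper functions and the choice of a YAML-style text format are assumptions for illustration, not a description of the authors' tooling.

```python
# Subset of the values from the table; grouping them in one dict is an
# illustrative choice, not the authors' file layout.
HYPERPARAMETERS = {
    "epochs": 100,
    "batch_size": 64,
    "imgsz": 640,
    "optimizer": "SGD",
    "lr0": 0.03826,
    "momentum": 0.65250,
    "weight_decay": 0.00009,
    "warmup_epochs": 3.0,
    "warmup_momentum": 0.8,
    "warmup_bias_lr": 0.8,
}


def format_value(value):
    """Render floats in fixed-point form to avoid output such as 9e-05."""
    if isinstance(value, float):
        return f"{value:.5f}"
    return str(value)


def to_config_text(params):
    """Serialize a flat dict of scalars as 'key: value' lines."""
    lines = [f"{key}: {format_value(value)}" for key, value in params.items()]
    return "\n".join(lines) + "\n"
```

Fixed-point formatting is used because some YAML parsers read a value written as `9e-05` as a string rather than a number.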

| Display Defect Number | Defect Type |
|---|---|
| 0 | Impurity |
| 1 | Underfill |
| 2 | Overfill |
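
The mapping in this table can be applied to detection rows to obtain a per-image tally of defect types. The following is a minimal sketch that assumes each detection is available as a `(filename, label)` pair; the function name and the input layout are hypothetical.

```python
from collections import Counter

# Numeric label -> defect type, as listed in the table above.
DEFECT_NAMES = {0: "Impurity", 1: "Underfill", 2: "Overfill"}


def count_defects(rows):
    """Tally defect types per image from (filename, label) pairs."""
    counts = {}
    for filename, label in rows:
        name = DEFECT_NAMES[int(label)]
        counts.setdefault(filename, Counter())[name] += 1
    return counts


# Example rows in the (filename, label) layout assumed above.
rows = [
    ("1040_(1)_PLA_WHITE_a2.png", 0),
    ("1040_(1)_PLA_WHITE_a2.png", 1),
    ("1040_(1)_PLA_WHITE_f1.png", 0),
    ("1040_(1)_PLA_WHITE_f1.png", 0),
    ("1040_(1)_PLA_WHITE_f1.png", 0),
]
summary = count_defects(rows)
```

An unknown label raises a `KeyError`, which is a deliberate choice here so that unexpected class indices are not silently ignored.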

| Loss Type | Train Value | Validation Value |
|---|---|---|
| Box Loss | 0.037 | 0.046 |
| Object Loss | 0.018 | 0.012 |
| Class Loss | 0.003 | 0.002 |

| Dataset | mAP (%) |
|---|---|
| Overall | 82 |
| Impurity | 70 |
| Overfill | 97 |
| Underfill | 80 |
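
As a quick consistency check, the overall figure can be compared with the unweighted mean of the three per-class values. This assumes that the overall mAP is the plain average over classes, which is the usual definition but is not confirmed here; the function name is illustrative.

```python
# Per-class values (%) copied from the table above.
PER_CLASS_AP = {"Impurity": 70, "Overfill": 97, "Underfill": 80}


def mean_average_precision(per_class_ap):
    """Unweighted mean of the per-class average precision values."""
    return sum(per_class_ap.values()) / len(per_class_ap)


overall = mean_average_precision(PER_CLASS_AP)  # 247 / 3
```

The result, 247 / 3 ≈ 82.3, agrees with the reported overall value of 82 after rounding.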

| Filename | Label | x | y | w | h |
|---|---|---|---|---|---|
| 1040_(1)_PLA_WHITE_a2.png | 0 | 0.270833 | 0.384722 | 0.0194444 | 0.0277778 |
| 1040_(1)_PLA_WHITE_a2.png | 1 | 0.460417 | 0.490972 | 0.518056 | 0.981944 |
| 1040_(1)_PLA_WHITE_c23.png | 1 | 0.477083 | 0.705556 | 0.523611 | 0.588889 |
| 1040_(1)_PLA_WHITE_c23.png | 1 | 0.473611 | 0.202083 | 0.519444 | 0.404167 |
| 1040_(1)_PLA_WHITE_f1.png | 0 | 0.495139 | 0.574306 | 0.0208333 | 0.0263889 |
| 1040_(1)_PLA_WHITE_f1.png | 0 | 0.648611 | 0.886111 | 0.0222222 | 0.025 |
| 1040_(1)_PLA_WHITE_f1.png | 0 | 0.640972 | 0.0555556 | 0.0208333 | 0.0305556 |
| 1040_(1)_PLA_WHITE_f34.png | 1 | 0.248611 | 0.352778 | 0.0388889 | 0.1 |
| 1040_(1)_PLA_WHITE_g4.png | 0 | 0.327083 | 0.911806 | 0.0208333 | 0.0236111 |
| 1040_(2)_PLA_WHITE_a4.png | 1 | 0.461111 | 0.486806 | 0.533333 | 0.973611 |
| 1040_(2)_PLA_WHITE_f34.png | 1 | 0.490972 | 0.213889 | 0.526389 | 0.427778 |
| 1040_(2)_PLA_WHITE_f34.png | 1 | 0.490972 | 0.711111 | 0.529167 | 0.552778 |
| 1041_(1)_PLA_WHITE_a2.png | 0 | 0.216667 | 0.318056 | 0.0222222 | 0.0861111 |
| 1041_(1)_PLA_WHITE_a2.png | 0 | 0.710417 | 0.05 | 0.0236111 | 0.0916667 |
| 1041_(1)_PLA_WHITE_a2.png | 0 | 0.218056 | 0.773611 | 0.0277778 | 0.111111 |
| 1041_(1)_PLA_WHITE_a2.png | 0 | 0.222222 | 0.914583 | 0.025 | 0.104167 |
| 1041_(1)_PLA_WHITE_a2.png | 1 | 0.459722 | 0.510417 | 0.516667 | 0.979167 |
| 1041_(1)_PLA_WHITE_b12.png | 0 | 0.322222 | 0.220833 | 0.0222222 | 0.0444444 |
| 1041_(1)_PLA_WHITE_b12.png | 1 | 0.473611 | 0.195833 | 0.519444 | 0.386111 |
| 1041_(1)_PLA_WHITE_c4.png | 1 | 0.477083 | 0.519444 | 0.515278 | 0.961111 |
| 1041_(1)_PLA_WHITE_d2.png | 1 | 0.483333 | 0.764583 | 0.508333 | 0.470833 |
| 1041_(1)_PLA_WHITE_d23.png | 1 | 0.489583 | 0.620833 | 0.523611 | 0.341667 |
| 1041_(1)_PLA_WHITE_d23.png | 1 | 0.491667 | 0.165972 | 0.502778 | 0.331944 |
| 1041_(1)_PLA_WHITE_d4.png | 0 | 0.638889 | 0.936111 | 0.0194444 | 0.025 |
| 1041_(1)_PLA_WHITE_d4.png | 1 | 0.486111 | 0.675694 | 0.472222 | 0.648611 |
| 1041_(1)_PLA_WHITE_d4.png | 1 | 0.460417 | 0.221528 | 0.543056 | 0.443056 |
| 1041_(1)_PLA_WHITE_e1.png | 0 | 0.395833 | 0.0416667 | 0.0166667 | 0.0222222 |
| 1041_(1)_PLA_WHITE_e1.png | 0 | 0.246528 | 0.557639 | 0.0180556 | 0.0375 |
| 1041_(1)_PLA_WHITE_e1.png | 0 | 0.461806 | 0.714583 | 0.0208333 | 0.0319444 |
| 1041_(1)_PLA_WHITE_e1.png | 0 | 0.458333 | 0.918056 | 0.025 | 0.0277778 |
| 1041_(1)_PLA_WHITE_e3.png | 0 | 0.365278 | 0.0180556 | 0.0222222 | 0.0277778 |
| 1041_(1)_PLA_WHITE_e3.png | 1 | 0.491667 | 0.479167 | 0.513889 | 0.958333 |
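
The values in this table all lie between 0 and 1, which suggests normalized coordinates. Assuming that `x` and `y` are the box center and `w` and `h` the box size, each expressed as a fraction of the image width and height (the common YOLO label layout, not confirmed here), a row can be converted to pixel corners as sketched below. The function name and the image size passed in are hypothetical.

```python
def normalized_to_pixel_box(x, y, w, h, image_width, image_height):
    """Convert a normalized center-format box to pixel corner coordinates.

    Assumes x, y are the box center and w, h the box size, all as
    fractions of the image dimensions.
    Returns (x_min, y_min, x_max, y_max) in pixels.
    """
    x_min = (x - w / 2) * image_width
    y_min = (y - h / 2) * image_height
    x_max = (x + w / 2) * image_width
    y_max = (y + h / 2) * image_height
    return (x_min, y_min, x_max, y_max)


# First row of the table, with a hypothetical 720 x 720 pixel image.
box = normalized_to_pixel_box(0.270833, 0.384722, 0.0194444, 0.0277778, 720, 720)
```

Under this assumption the small boxes with label 0 cover only a few tens of pixels, whereas several label 1 boxes span roughly half the image width.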


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bakas, G.; Bei, K.; Skaltsas, I.; Gkartzou, E.; Tsiokou, V.; Papatheodorou, A.; Karatza, A.; Koumoulos, E.P. Object Detection: Custom Trained Models for Quality Monitoring of Fused Filament Fabrication Process. Processes 2022, 10, 2147. https://doi.org/10.3390/pr10102147
