Abstract

This study employs a novel 3D engineered robotic eye system with dielectric elastomer actuator (DEA) pupils and a 3D sculpted and colourised gelatin iris membrane to replicate the appearance and materiality of the human eye. A camera system for facial expression analysis (FEA) was installed in the left eye, and a photo-resistor for measuring light intensity in the right. Unlike previous prototypes, this configuration permits the robotic eyes to respond to both light and emotion in a manner proximal to the human eye. A series of experiments was undertaken using a pupil tracking headset to monitor test subjects while they observed positive and negative video stimuli. A second test measured pupil dilation ranges under high and low light intensities using a high-powered artificial light. These data were converted into a series of algorithms for servomotor triangulation to control the photosensitive and emotive pupil dilation sequences. The robotic eyes were evaluated against the pupillometric data and video feeds of the human eyes to determine operational accuracy. Finally, the dilating robotic eye system was installed in a realistic humanoid robot (RHR) and comparatively evaluated in a human-robot interaction (HRI) experiment. The results of this study show that the robotic eyes can emulate the average pupil reflex of the human eye under typical light conditions and in response to positive and negative emotive stimuli. However, the results of the HRI experiment indicate that replicating natural eye contact behaviour was more significant than emulating pupil dilation.

1. Introduction

This practical study follows on from two previously published position papers on the application of dilating robotic pupils in HRI [1,2]. Human eyes are commonly referred to as ‘windows to the soul’ as they reflect and encapsulate love, life and sentience [3]. Pupil size and dynamics (pulsation rate and frequency) are important components of eye contact interfacing during human communication, as they act as subconscious visual cues of emotional state and attention [4,5]. However, pupil dilation alone is not an accurate representation of an emotional state, as the effect is synergistic, incorporating other facial features such as the eyebrows, mouth and cheeks to display recognisable human emotions [6]. This is significant in HRI because engineers routinely neglect dynamic pupils in robotic eyes, which makes the eyes of RHRs look cold and lifeless [7,8]. Therefore, pupil dilation is a crucial consideration in the development of RHRs with embodied artificial intelligence (EAI) for HRI, as pupils emit visual signals during face-to-face communication. Direct eye contact is the primary mode of interpersonal interfacing in human interaction, as it establishes attention, emotional state, trust and security [9].

The irregularities commonly observed in traditional glass and acrylic prosthetic eyes have the potential to reduce visual authenticity and instigate the uncanny valley effect (UVE). Moreover, previous robotic eye prototypes with dilating pupils are incapable of responding to both light and emotion, which does not reflect the sensory capabilities of the human eye [1]. This consideration is significant under the Uncanny Valley (UV) hypothesis, which suggests that RHRs fall into the UV because they lack organic nuances such as pupil dilation and accurate lip-sync. The code, video footage and CAD materials for this project are available in the GitHub repository listed in the supplementary materials section.

2. The Importance of Eye Contact Interfacing in HRI

Prolonged direct eye contact is considered an aggressive or domineering behaviour in humans and primates, and in some cultures gaze avoidance is associated with dishonesty and mistrust [10]. A recent study of gaze interfacing in HRI [11] concluded that replicating normal eye contact behaviour is vital for natural communication with humans. This is essential for developing RHRs capable of naturalistic HRI, as a lack of eye contact can produce adverse feedback [12]. Conversely, if an RHR maintains constant eye contact, it creates an unnerving experience per the UVE, as this is not normal gaze behaviour. Furthermore, eye contact in HRI plays a crucial role in instigating the fight-or-flight response, as RHRs appear inhuman and the innate human drive is to approach the robotic agent with caution [13,14]. Irregular gaze has the potential to heighten the UVE if robotic eyes do not move within the natural parameters of human eyes [15].

Nevertheless, establishing positive eye contact in HRI depends on circumstantial factors [16]. For instance, many Eastern cultures perceive direct eye contact as domineering behaviour, and women, children and lower-class citizens actively avoid making eye contact with their superiors as an act of submission and respect. Conversely, eye contact avoidance in Western cultures is typically associated with lying and underhanded behaviour. This cultural divergence in eye contact interaction is also observable in numerous Eastern and Western RHRs. For example, Eastern-produced RHRs such as Vyommitra, 2020: IND; Jiang Lilai, 2019: CHN; Telenoid, 2006: JPN; JIA JIA, 2016: CHN; Junko Chihira, 2016: JPN; Geminoid HI-1, 2006: JPN; Otonaroid, 2014: JPN; YANG YANG, 2015: CHN; Geminoid DK, 2011: JPN; Kodomoroid, 2014: JPN; Actroid DER-2, 2006: JPN; ChihiraAico, 2015: JPN; Erica, 2018: JPN; SAYA, 2009: CHN; ALEX, 2019: RUS; and Android Robo-C, 2019: RUS all lack gaze-tracking systems for forming natural eye contact with humans.

An RHR named Nadine, 2015: SG, is an exception to this list, as the robot has an eye contact interaction system; however, it is important to note that Nadine’s creator, Professor Nadia Magnenat Thalmann, is of Western origin. In comparison, Sophia, 2016: USA; ALICE, 2008: USA; Han, 2015: USA; AI-DA, 2019: UK; BINA 48, 2016: USA; Fred, 2018: UK; Diego San, 2010: USA; Jules, 2008: USA; and Furhat, 2018: SWE implement eye sensors to simulate human eye contact behaviour. Significantly, none of the above RHRs has dilating pupils; therefore, the influence of pupil dynamics on natural gaze interaction is unknown. This study aims to explore this gap in state-of-the-art robotic eye technology and HRI.

3. Human Eye Dilation to Light and Emotion

The human iris contracts and expands the pupil to regulate the light reaching the retina for precision imaging. This reflex is the primary function of the pupil and a key consideration when developing a robotic eye. While the eyelids are open, the pupil continually adjusts in diameter between 2 and 8 mm in response to changing light levels [17]. Therefore, the actuation system employed in a synthetic iris has to be highly durable to withstand prolonged use. The brighter the light, the more the pupil aperture constricts, limiting the light reaching the retina and preventing damage to its sensitive tissue. In daylight conditions, the pupil typically contracts to between 2 and 4 mm in diameter, and in low light it expands to between 4 and 8 mm to increase the sensitivity of the retina [18]. Therefore, 3–5 mm is the average pupil dilation range in which the synthetic iris membrane operates under general light conditions.

The spectral sensitivity of a healthy human eye ranges between 380 nm and 800 nm, with peak photopic sensitivity (the optimal retinal response to light) at 555 nm [19]. However, the standard light-dependent resistor (LDR) operates between 400 nm and 600 nm [20]. Although the standard LDR is capable of incremental light processing, a minor adjustment to the photosensitivity of the sensor is needed to account for its narrower spectral sensitivity. This adjustment is made in the control script by increasing the output signal from the LDR sensor to the computer. Muscular fibres within the iris membrane control the transition between pupil states [21]. The capillaceous tissue strands of the eye are transparent, permitting light to pass from the iris membrane through to the lens and retina. This is an important design factor in the development of a synthetic iris membrane, as light transference into the LDR is essential for an accurate photo-response and the control of pupil actuation.
To maintain a natural appearance, the LDR must be embedded within the internal eye framework, as exterior sensor visibility may lessen the visual authenticity of the robotic eyes. In addition to light regulation, the human iris responds to emotive stimuli: during elevated states of arousal, the pupil involuntarily expands up to 8 mm in diameter [19].
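The relationship described above, where brighter light produces a smaller pupil within roughly 2–8 mm, can be expressed as a simple inverse linear model. The sketch below is illustrative only. It assumes a 10-bit light reading (0–1023) that rises with brightness, and it treats the LDR sensitivity adjustment as a multiplicative gain with a hypothetical value (`SENSOR_GAIN`). The real pupillary response is not linear, and the text does not specify the form of the adjustment.

```python
# Illustrative sketch: inverse linear light-to-pupil model.
# Assumptions (not from the text): a 10-bit reading (0-1023) that increases
# with brightness, and a multiplicative gain standing in for the LDR
# sensitivity adjustment. SENSOR_GAIN is a hypothetical value.

RAW_MAX = 1023
SENSOR_GAIN = 1.25  # hypothetical boost for the LDR's narrower spectral range

PUPIL_MAX_MM = 8.0  # upper bound of the 2-8 mm range quoted in the text
PUPIL_MIN_MM = 2.0  # lower bound of the 2-8 mm range quoted in the text


def boost_reading(raw: int, gain: float = SENSOR_GAIN) -> int:
    """Scale a raw light reading by the gain and clamp it to 0..RAW_MAX."""
    boosted = int(raw * gain)
    return max(0, min(RAW_MAX, boosted))


def target_pupil_mm(raw: int) -> float:
    """Return a target pupil diameter: darker -> larger, brighter -> smaller."""
    level = boost_reading(raw) / RAW_MAX  # 0.0 = dark, 1.0 = saturated
    return PUPIL_MAX_MM - level * (PUPIL_MAX_MM - PUPIL_MIN_MM)


if __name__ == "__main__":
    for raw in (0, 200, 400, 800):
        print(raw, round(target_pupil_mm(raw), 2))
```

Clamping keeps the boosted value inside the converter's range. With a gain above 1, the brightest readings saturate at the 2 mm minimum, so any real gain would need to be chosen against measured data.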

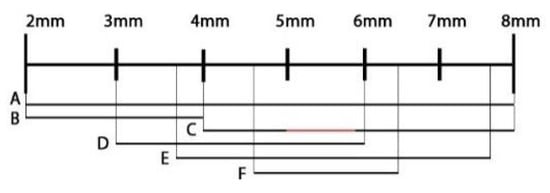

However, a more recent study [22] measuring pupil dilation responses to emotion reports that pupil diameter in response to emotional stimuli ranges from 3.7 mm to 7.6 mm (4.6 mm–6.4 mm on average). The iris expands and contracts less frequently and for shorter durations when reacting to emotion than during light processing. Thus, the influence of highly emotional stimuli on pupil diameter is comparable to that of low-light processing [20]. The cognitive load of processing external optical stimuli has the most significant impact on pupil dilation [23]. However, audio and touch stimulation also incite a pupil response if the stimulus registers as psychologically arousing. In support of this, a recent study [4] measured the effects of emotive sound on the pupil dilation response of 33 participants. The results suggest that, on average, pupil diameter increased by 3–5% relative to regulation under natural ambient sound (4 mm: +0.2 mm/−0.2 mm). A similar study [24] examining pupil dilation in response to touch (skin interfacing) concluded that skin conduction stimuli evoked a pupil expansion of 0.5 mm–1 mm (3–4%). Thus, audio and touch stimulation elicit a weaker pupil response than visual stimuli. Nevertheless, replicating audio and touch sensitivity is a significant design consideration in emulating higher modes of human emotion. Figure 1 shows the effects of external stimuli on pupil dilation size. These natural pupil ranges and averages define the variability of the synthetic iris, enabling a more organic pupil emulation in the robotic eye system developed in this study. In addition, on average, humans blink four times every minute, and the duration of each blink ranges between 1–4 ms [25].
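The percentages above can be checked with a short worked calculation. The helpers below are illustrative: `scaled_diameter` applies a percentage change to a baseline diameter, and `clamp_emotive` bounds a target to the 3.7–7.6 mm emotive range from [22]. Treating the quoted percentages as relative changes from a 4 mm baseline is an assumption made for the example.

```python
# Worked arithmetic for stimulus-driven pupil changes (illustrative only).
# Ranges come from the text; treating percentages as relative changes from
# a 4 mm baseline is an assumption made for this example.

BASELINE_MM = 4.0
EMOTIVE_RANGE_MM = (3.7, 7.6)  # minimum and maximum emotive diameters [22]


def scaled_diameter(baseline_mm: float, percent_change: float) -> float:
    """Apply a relative percentage change to a baseline pupil diameter."""
    return baseline_mm * (1.0 + percent_change / 100.0)


def clamp_emotive(diameter_mm: float) -> float:
    """Keep a target diameter inside the emotive range."""
    low, high = EMOTIVE_RANGE_MM
    return max(low, min(high, diameter_mm))


if __name__ == "__main__":
    # Audio stimulus: a 3-5 % increase on a 4 mm baseline is 0.12-0.20 mm.
    for pct in (3, 5):
        d = scaled_diameter(BASELINE_MM, pct)
        print(f"{pct}% -> {d:.2f} mm (+{d - BASELINE_MM:.2f} mm)")
    # An out-of-range request is pulled back to the emotive limits.
    print(clamp_emotive(9.0), clamp_emotive(3.0))
```

On a 4 mm baseline, the 5% upper bound corresponds to the +0.2 mm quoted for audio stimuli.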

Figure 1.

Human pupil diameter ranges to light and emotion: (A) total pupil range of the human eye; (B) average dilation range in daylight conditions; (C) average pupil dilation in low-level light; (D) average light dilation range; (E) emotive pupil dilation range; (F) the average pupil dilation frequency to emotion.

4. Previous Prototype Robotic Eyes with Dilating Pupils

The following section analyses a selection of robotic eye prototypes with pupil dilation to examine the advantages and suitability of each system for adaptation into the robotic eye developed in this research. A robotic eye for RHRs named Pupiloid [26] has a 3D printed shutter-style mechanism for emulating pupil dynamics. The design features a speech processing application that responds autonomously to emotive utterances. However, there are numerous design issues with the Pupiloid prototype. Firstly, the shutter mechanism consists of multiple plastic blades that expand and contract using servo-driven actuation. This overly complicated design prevents the fluid movement of the human pupil due to the mechanised nature of the shutter. It also reduces the stability of the system, as pupil dilation in humans is continually adapting to various external stimuli.

Secondly, the eyes pivot on a series of large mechanisms that require significant operational space, which may cause compatibility issues during installation with existing components. Thirdly, the Pupiloid prosthetic cannot respond to light or visual-emotional stimuli, as its pupil dynamics are restricted to the emotive voice recognition function, resulting in unnatural pupil dynamics during non-verbal communication. Finally, intricate 3D printed components such as the pupil shutter arms are susceptible to fracturing under continual stress, which may be a considerable durability issue when attached to a robotic skin. A similar dilating robotic eye prototype [27], named ‘Animatronic Pupil’, uses a motor-driven camera shutter to simulate human pupil dynamics. However, as in previous models [24], the eyes require substantial space for the eye components to function, thus restricting natural eye movement. The humanoid paediatric robot Heuristically programmed ALgorithmic computer (HAL) uses a comparable shutter lens system to simulate the natural pupil reflex to light [28]. HAL’s pupil mechanism is designed to replicate human eye dilation to light for medical training procedures. Therefore, mechanical stress on the camera shutter mechanism is negligible, as the RHR runs for short periods with the eyes moving left and right. A prototype artificial eye [29] developed for use in RHRs uses a triangular foam point compressed back and forth against a transparent plastic eye shell to create the effect of pupil dilation.

Although the pupil transition is more fluid than that of mechanical shutter mechanisms, the system suffers a significant design flaw, as it offsets the central pivot of the eye. This causes the eyes to move unnaturally backwards and forwards during operation. Furthermore, the foam membrane prohibits the installation of internal light sensors and camera devices, which limits the artificial eye's ability to respond autonomously to light and emotion. A dilating medical prosthetic eye [30] made with light-reactive liquid crystal polymer materials can expand and contract autonomously under variable light conditions. The photosensitive membrane permits light to pass through a series of crystalline layers, which appear semi-translucent under intense light, revealing a pupil-like aperture.

Although the system is capable of running for long periods, the synthetic iris membrane is still in the early stages of development. Furthermore, the crystal polymer structure prohibits internal sensors and camera systems, making the eye incompatible with RHRs. Other robotic eyes [31,32,33] for use in medical eye prostheses use a type of artificial muscle membrane known as a dielectric elastomer actuator (DEA). These robotic eyes regulate photosensitivity using a photo-resistor embedded inside the artificial eye module. This method allows light to pass through the synthetic iris, much like the translucent membrane fibres of the human eye. The DEA is activated by positive and negative electrodes that create an electrostatic actuation, compressing the membrane surfaces together to form a dynamic ellipse. However, the prototypes are aesthetically unrealistic in comparison to previous examples. Moreover, the photo-resistor set-up in these examples prohibits pupil responses to emotional stimuli, and the DEA scatter pattern prohibits sensor insertion. Therefore, these configurations are unsuitable for RHRs, but their actuation method and light-sensing capability are consistent with human pupil dynamics. Similar DEA systems developed for precision camera lens imaging [34,35,36] invert the standard DEA to create a transparent central ellipse. This approach permits fluid transitions between camera focal lengths, in comparison to the standard shutter focusing mechanism.

Furthermore, a selection of artificial eyes with liquid crystal display (LCD) screens [37,38,39] is incompatible with this study. LCDs prohibit the implementation of sensors to regulate pupil dynamics, and the light of the LCD screen would make the eyes appear to glow in low-light environments. Comparable robotic eyes with organic light-emitting diode (OLED) screens [40,41,42,43] are susceptible to the same compatibility issues. Although OLED screens can be transparent, they still emit light. As light emissions from an OLED display may disrupt light input into the photo-resistor and the FEA camera in low-light conditions, OLED screens are not suitable for this study. This critical analysis of artificial eye prototypes with pupil dynamics highlights a gap in prosthetic eye design, as no system can simultaneously replicate natural pupil responses to alternating light and emotive stimuli.

5. Building on the State-of-the-Art in Robotic Eyes Design

Unlike the previous prototypes, the robotic eye system developed in this study incorporates a camera and a photo-resistor to control the pupil reflex to light and emotion. To accommodate these, the DEA scatter configuration is inverted to leave a central non-conductive and transparent ellipse into which the camera and photo-resistor can be inserted, as demonstrated in Figure 2. A DEA is an artificial muscle [44] comprising a transparent silicone foam membrane stretched over a rigid polymer frame and coated on each side with conductive graphite or carbon powder. Positive and negative electrodes supply a high voltage to the conductive layers on either side of the silicone foam membrane. The resulting electrostatic attraction between the oppositely charged layers forces them together, compressing the membrane [45]. As the coated regions are squeezed thinner, they expand in area; in the inverted configuration, this expansion narrows the uncoated central ellipse, which is why the activated DEA in Figure 2 has the smaller aperture.
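The DEA literature commonly estimates this electrostatic squeezing with a first-order effective pressure, p = εr·ε0·(V/z)². Here V is the applied voltage, z is the membrane thickness and εr is the relative permittivity of the elastomer. The sketch below evaluates this expression. The permittivity (3.0) and thickness (50 µm) in the usage lines are hypothetical values, not properties of the membrane used in this study.

```python
# First-order electrostatic (Maxwell) pressure on a DEA membrane:
#     p = eps_r * eps_0 * (V / z) ** 2
# Illustrative only; the eps_r and thickness values used below are
# hypothetical and not measurements of this study's membrane.

EPSILON_0 = 8.854e-12  # vacuum permittivity, F/m


def maxwell_pressure(voltage_v: float, thickness_m: float, eps_r: float) -> float:
    """Return the effective compressive pressure in pascals."""
    if thickness_m <= 0:
        raise ValueError("thickness must be positive")
    field = voltage_v / thickness_m  # electric field, V/m
    return eps_r * EPSILON_0 * field ** 2


if __name__ == "__main__":
    eps_r = 3.0        # hypothetical relative permittivity of silicone
    thickness = 50e-6  # hypothetical membrane thickness (50 micrometres)
    for volts in (1000, 2000):
        kpa = maxwell_pressure(volts, thickness, eps_r) / 1000
        print(f"{volts} V -> {kpa:.1f} kPa")
```

Because the pressure scales with V², doubling the drive voltage quadruples the estimated pressure. The actual contraction also depends on the elastomer's stiffness and pre-stretch.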

Figure 2.

Inverted graphite dielectric elastomer actuator (DEA) silicone membrane tested in this study: (A) deactivated DEA (12 mm); (B) activated DEA (5 mm).

Electroactive polymers (EAPs), the class of materials to which DEAs belong, are flexible and precise, and in some instances can generate higher torque for a longer duration than a standard electromechanical servo [46]. Moreover, EAPs have no integral moving parts and are less susceptible to mechanical failure or stalling than standard robotic servomotors [47]. However, correctly configuring and applying the DEA membrane to the acrylic frame is essential, as DEAs are prone to tearing under high electrostatic stress if incorrectly installed [48]. EAPs require high voltages to operate, so protective and preventive design is essential to avoid skin contact with the EAP, which may cause electric shock and damage the EAP membrane [49]. Accordingly, DEA systems similar to [31,32,33] are suitable for application in this study. However, one issue with the carbon grease compound (hydrogel) is the high voltage required to actuate the central ellipse with natural pupil dynamics. The robotic eye developed in this study therefore considers graphene, an allotrope of carbon that is up to three times more conductive than graphite or carbon powder owing to its single-layer, hexagonal-lattice atomic structure.

According to recent research [50,51,52], graphene EAPs require less electrical input for sizable actuation than carbon or graphite paste at a similar scatter rate. The single-layer atomic composition of graphene makes the substance almost transparent: it absorbs merely 2–3% of the total light intensity, compared with 20–35% for graphite and 10–20% for a standard glass windowpane [53]. This is significant because the average human iris absorbs approximately 2–2.5% of the natural light spectrum [54]. Therefore, unlike the graphite and carbon DEAs in the previous section, a graphene DEA membrane allows light to pass through it with minimal loss of intensity, comparable to the light transference of the human iris membrane.
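To make the comparison concrete, the absorption ranges quoted above can be converted into transmitted intensity. This is a minimal sketch. It treats each percentage as the fraction of incident light absorbed and ignores reflection and scattering, which is a simplifying assumption. The incident intensity in the usage lines is arbitrary.

```python
# Illustrative comparison of transmitted light through candidate membranes.
# Absorption ranges are the percentages quoted in the text; reflection and
# scattering are ignored, which is a simplifying assumption.

ABSORPTION_PCT = {
    "graphene": (2.0, 3.0),
    "human iris": (2.0, 2.5),
    "glass windowpane": (10.0, 20.0),
    "graphite": (20.0, 35.0),
}


def transmitted_range(incident: float, absorption_pct: tuple) -> tuple:
    """Return (min, max) transmitted intensity for an absorption range."""
    low_pct, high_pct = absorption_pct
    return incident * (1 - high_pct / 100), incident * (1 - low_pct / 100)


if __name__ == "__main__":
    incident = 1000.0  # arbitrary incident intensity, same units as output
    for name, pct in ABSORPTION_PCT.items():
        lo, hi = transmitted_range(incident, pct)
        print(f"{name:17s} {lo:6.1f}-{hi:6.1f}")
```

Under this simplification, graphene passes 970–980 units of every 1000, close to the iris figures, while graphite passes only 650–800.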

Furthermore, the application of graphene tactile sensors in future robotic skins may be capable of replicating the natural sensory and regenerative capabilities of human skin and muscles [55]. A further consideration is the aesthetic quality of the synthetic iris and the materials used for replicating the sclera tissue. The robotic eyes examined in the previous section fail to reproduce the detailed pigmentation and translucency of the human iris using hand-painted techniques. Therefore, 3D printing and CAD sculpting techniques are used to produce a casting mould to create a colourised gel iris print from an image of a human iris. It is vital in this research to evaluate the use of soft 3D printing materials in replicating the human sclera, as previous prototypes employ hardened materials unlike the soft tissue of the human eye.

6. Robotic Eye Design

This section details the tools, materials and software used to create a colour-printed, semi-transparent and flexible gelatin iris membrane to cover the DEA actuator. The gelatin iris starts with a blank eye model created in Autodesk Maya using the average dimensions of the living human eye: 24.2 mm (transverse) × 23.7 mm (sagittal) × 22.0–24.8 mm (axial). A high-resolution digital image of a human iris, compressed at 24 megapixels (4800 × 2400 dpi), is transformed into an imprint using the stencil tool in Autodesk Mudbox. The iris stencil is inverted and overlaid onto the digital image to precisely map the image and stencil, creating a highly detailed 3D duplicate of the human iris. Autodesk Maya exports stereolithography (.STL) files, which are printed using a Formlabs Form 2 stereolithography (SLA) 3D printer (10 microns = 0.01 mm) and Ultimaker Cura. SLA printers use ultraviolet (UV) light to induce photo-polymerisation of the monomers in a liquid resin, as opposed to the thermal extrusion of polymer layers in standard fused-filament 3D printing. This approach allows smooth and detailed casting from the 3D printed model without the significant disfiguration caused by extruder layering.

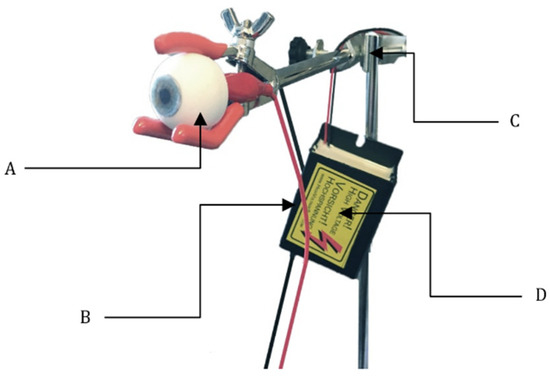

A transparent gelatin cast of the 3D printed iris, extracted from the surface of the 3D model with a depth of approximately 0.2 mm, provides a flexible and detailed replication of the iris surface. The gelatin brand used in this study is ‘Swallow Sun, Tepung Agar-Ager Crystal Clear Gelatin’, which is a composition of clear powder and de-ionised water (1:4 ratio at 90 °C). This lowers the electrical conductivity of the material, allowing it to act as an insulating barrier between the highly charged EAP membrane and the outer shell of the eye module. The advantage of gelatin over silicone-based materials is that it places less strain on the EAP foam membrane, as gelatin is more elastic than synthetic materials such as silicone and thermoplastic polyurethane (TPU). The gelatin membrane is coloured using the same high-resolution digital image used in the 3D modelling process (4800 × 2400 dpi), screen-printed on gelatin film. This approach ensures the correct scaling and orientation of the gelatin print to match the contours of the gelatin iris membrane. The positional data of the iris layer is extracted from Mudbox and imported as an image map into Adobe Photoshop for printing. The printed gelatin film amalgamates with the thin gelatin membrane when applied to the base of the gelatin compound during curing. It is essential to verify that the DEA membrane functions properly before and after adding the gelatin overlay by running voltage through the DEA, as shown in Figure 3.

Figure 3.

Graphene DEA test without iris overlay to measure the maximum and minimum pupil ranges: (A) DEA deactivated; (B) DEA activated at 1000 V, 2 A, approx. 21% reduction in DEA; (C) DEA activated at 2000 V, 2 A, approx. 58% reduction. DEA test with colourised iris and gelatin overlay: (D) DEA deactivated; (E) DEA activated at 2000 V, 2 A, approx. 39% reduction in DEA.
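The percentages in Figure 3 can be converted into approximate diameters if we assume they are reductions of the central ellipse relative to the 12 mm deactivated state shown in Figure 2. This is an assumption, because the caption does not state the reference. Under it, the 58% figure at 2000 V is consistent with the 5 mm activated ellipse of Figure 2, since (12 − 5)/12 ≈ 58.3%.

```python
# Converting percentage reductions of the DEA ellipse into diameters.
# Assumes the reductions are relative to the 12 mm deactivated ellipse.

DEACTIVATED_MM = 12.0


def reduction_pct(initial_mm: float, final_mm: float) -> float:
    """Percentage reduction from initial to final diameter."""
    return 100.0 * (initial_mm - final_mm) / initial_mm


def diameter_after(initial_mm: float, pct: float) -> float:
    """Diameter remaining after a percentage reduction."""
    return initial_mm * (1.0 - pct / 100.0)


if __name__ == "__main__":
    print(f"Figure 2: {reduction_pct(DEACTIVATED_MM, 5.0):.1f}% reduction")
    for label, pct in (("1000 V, no overlay", 21), ("2000 V, no overlay", 58),
                       ("2000 V, gelatin overlay", 39)):
        print(f"{label}: ~{diameter_after(DEACTIVATED_MM, pct):.2f} mm")
```

Under this assumption, the conditions correspond to roughly 9.5 mm at 1000 V and 5.0 mm at 2000 V without the overlay. With the gelatin overlay at 2000 V, the minimum aperture would be roughly 7.3 mm.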

6.1. Integrating Gelatin Iris with Graphene Dielectric Elastomer Actuator

The thickness of the gelatin iris membrane after application is 0.1–0.2 mm. However, the graphene-infused DEA diaphragm does not permit direct adhesion with the gelatin membrane due to its powdered surface. Therefore, adhesion is limited to the rim of the polymer frame and the outer perimeter of the transparent ellipse, where the exposed adhesive foam membrane bonds with the surface of the gelatin, as shown in Figure 4.

Figure 4.

Robotic eye test set-up for measuring the maximum and minimum pupil dilation range: (A) robotic eye prototype; (B) power supply to the EAP membrane; (C) support stand for the experiment with non-conductive claw; (D) negative ion generator.

6.2. CAD-Designed 3D Printed Thermoplastic Polyurethane Sclera

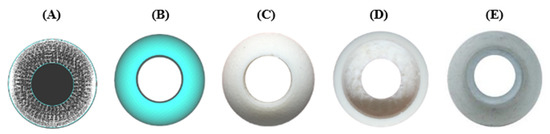

The synthetic sclera is made of flexible TPU filament and is printed on a Creality CR-10S 3D printer. Figure 5 shows the design pipeline of the synthetic sclera, starting with a 3D model constructed in Autodesk 3DS Max from the eye blank. The model is adapted to include internal hexagonal tubes in the central body and a 1.5 mm rigid surface layer, allowing the sclera shell to flex under pressure while maintaining a durable outer shell that prevents permanent deformation of the eye. The .STL file is exported from Autodesk 3DS Max into Ultimaker Cura and printed with a 270 °C nozzle and a 100 °C bed at a 0.1 mm layer height using Ridgid Ink 0.75 TPU filament. Unlike hardened acrylic or glass prosthetics and rigid synthetic materials such as polylactic acid (PLA) or acrylonitrile butadiene styrene (ABS), this approach mimics the pliability of human scleral tissue. The flexibility of the TPU polymer ensures the seamless integration of the two eye elements. The sclera shell is detailed with fine strands of red silk adhered to the TPU using spray adhesive and is colourised using silicone-based paint. Silicone paint is flexible and deforms without cracking, unlike other paints such as acrylic, oil and enamel. The sclera shell is then coated with a flex-gloss polymer spray that acts as a protective barrier over the underlying silicone paint detailing. Finally, the wires for the DEA are sealed inside the TPU shell and held in place with epoxy putty. The putty acts as an insulating, non-conductive barrier between the camera and light sensor and the highly charged cables of the step-up/down voltage converter, minimising electrical interference.

Figure 5.

3D CAD to 3D printed flexible TPU sclera development pipeline: (A) 3D CAD model created in 3DS Max; (B) model compiled in Cura 3D; (C) 3D printed sclera model in TPU front; (D) TPU Sclera rear; (E) finished model with vein details.

6.3. Camera and Photo-Resistor Integration into Robotic Eye

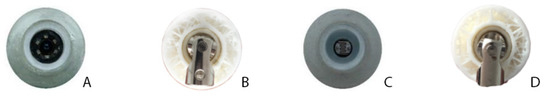

The robotic eyes respond to light using a GL5528 photo-resistor and detect facial expressions (FE) using a full HD GT2005 universal serial bus (USB) camera. Numerous other USB cameras, including the 600TVL, 550TVL, SE0004 and U11 1080Pv3, produced poor FEA mapping because interference from the DEA degraded image quality. The GT2005 did not suffer from this, as its camera and control board are protected against static interference and encased in separate housing units. The camera and light sensor are positioned underneath the DEA membrane, using the central ellipse as a portal for taking light measurements and FEA data. They are encased in a 3D printed ABS ring with rubber mounts to ensure a precise fit within the TPU eye casing, protecting the delicate internal components and keeping the sensors aligned with the iris portal, as indicated in Figure 6.

Figure 6.

Light sensor and FEA camera implementation into the robotic eye: (A) RGB camera for the FEA application; (B) ball and socket joint; (C) photo-resistor sensor to measure light; (D) flexible mount for the ball and socket joint.

6.4. Control System Hardware and Software Design

The robotic eye DEA membrane is driven by a custom-built, mains-powered (HVGEN_NEG_30KV) DC step-up/down negative ion generator with an operational voltage range of 12 V–30,000 V, supplying both the left and right EAP actuators. An Arduino-compatible (XL6019) power shield with a manual control knob sets the voltage into the step-up/down converter by regulating the input voltage from the power supply unit (PSU) between 0.3 V and 12 V. A Spektrum (A3030) brushless sub-micro digital servo attached to the manual control knob of the XL6019 shield provides autonomous control of the DC voltage into the EAP membrane. An Arduino Uno microcontroller controls the triangulation of the servo using data from the photo-resistor.
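The control chain above, in which the servo turns the XL6019 knob to set a 0.3–12 V input to the high-voltage generator, can be approximated as a linear mapping from servo angle to shield voltage. This is a minimal sketch that assumes the knob behaves as a linear potentiometer whose full travel coincides with the servo's 0–180° range. Neither assumption is stated in the text, and a real converter would need calibrating.

```python
# Hypothetical model of the servo-driven voltage knob on the XL6019 shield.
# Assumes a linear knob whose full travel matches the servo's 0-180 degrees.

V_MIN, V_MAX = 0.3, 12.0  # shield output range quoted in the text (volts)
ANGLE_MAX = 180           # servo travel in degrees


def shield_voltage(angle: float) -> float:
    """Estimate the shield's output voltage for a servo angle."""
    angle = max(0.0, min(ANGLE_MAX, angle))
    return V_MIN + (V_MAX - V_MIN) * angle / ANGLE_MAX


def angle_for_voltage(volts: float) -> int:
    """Inverse mapping: servo angle (whole degrees) for a target voltage."""
    volts = max(V_MIN, min(V_MAX, volts))
    return round((volts - V_MIN) / (V_MAX - V_MIN) * ANGLE_MAX)


if __name__ == "__main__":
    for a in (0, 45, 90, 180):
        print(a, round(shield_voltage(a), 2))
```

Both functions clamp their inputs, so out-of-range requests saturate at the ends of the knob's travel rather than producing impossible settings.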

To transpose light readings from the photo-resistor into servo positions automatically and accurately, the Arduino map() function was applied with the constraints (0, 1023, 0, 180), using integer arithmetic. These arguments define the source range (the raw incoming light data from the photo-resistor) and the target range (the servo positions). The source range (0–1023) is the output range of the Arduino Uno's 10-bit analogue-to-digital converter, which reads the photo-resistor voltage, and the target range (0–180) is the servo's range in degrees. The system has a 30 ms delay to reduce feedback caused by rapid light changes. This approach permits fluid control of the EAP membrane and calibration of the photo-resistor to emulate the natural human pupil responses to alternating light levels, as represented in Figure 7.
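Arduino's `map()` performs integer arithmetic, so fractional results are truncated rather than rounded. The following Python re-implementation mirrors the formula given for `map()` in the Arduino reference, including C-style truncation toward zero, and applies the (0, 1023, 0, 180) constraints from the text. It is a sketch for reasoning about the mapping off-board, not the study's Arduino script.

```python
# Python mirror of Arduino's integer map() function:
#   (x - in_min) * (out_max - out_min) / (in_max - in_min) + out_min
# Division truncates toward zero as in C, which differs from Python's //
# when the intermediate result is negative (e.g. an inverted output range).


def c_div(num: int, den: int) -> int:
    """Integer division that truncates toward zero, like C."""
    q = abs(num) // abs(den)
    return q if (num >= 0) == (den > 0) else -q


def arduino_map(x: int, in_min: int, in_max: int, out_min: int, out_max: int) -> int:
    """Re-map x from [in_min, in_max] to [out_min, out_max] with integer maths."""
    return c_div((x - in_min) * (out_max - out_min), in_max - in_min) + out_min


def light_to_servo(raw: int) -> int:
    """Map a 10-bit light reading (0-1023) to a servo angle (0-180)."""
    return arduino_map(raw, 0, 1023, 0, 180)


if __name__ == "__main__":
    for raw in (0, 511, 512, 1023):
        print(raw, light_to_servo(raw))
```

Swapping the output bounds, for example `arduino_map(raw, 0, 1023, 180, 0)`, inverts the direction so that brighter readings give smaller angles. The text does not state which direction the study's script used. The 30 ms delay would sit between successive readings in the control loop.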

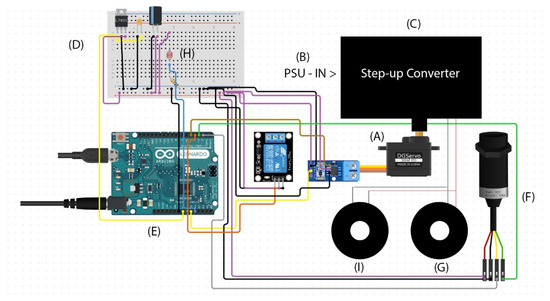

Figure 7.

Robotic eye system Arduino, DEA, FEA camera, photo-resistor and PSU schematic: (A) micro servo; (B) mains PSU; (C) 30 kV generator; (D) breadboard with a manual control knob; (E) Arduino Uno microprocessor; (F) GT2005 USB camera; (G) right EAP actuator; (H) photo-resistor with 200 Ω resistor; (I) left EAP actuator.
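The schematic pairs the photo-resistor with a 200 Ω resistor, which suggests a voltage divider feeding the Arduino's analogue input. The sketch below estimates the resulting 10-bit reading. It assumes, though the text does not say so, that the 200 Ω resistor sits between the analogue pin and ground and the photo-resistor between the pin and the reference voltage. It uses the ADC relation reading = V_in × 1024 / V_ref, capped at 1023. The LDR resistances in the usage lines are hypothetical.

```python
# Estimated 10-bit ADC reading for an LDR voltage divider (illustrative).
# Assumed topology: Vref -- LDR -- analog pin -- R_fixed -- GND.
# With this arrangement the reading rises as the LDR resistance falls
# (i.e. as the light gets brighter).


def adc_reading(r_fixed_ohm: int, r_ldr_ohm: int) -> int:
    """Return the ADC count (0-1023) at the analog pin."""
    if r_fixed_ohm + r_ldr_ohm <= 0:
        raise ValueError("total resistance must be positive")
    # V_in / V_ref = R_fixed / (R_fixed + R_ldr); count = ratio * 1024, max 1023
    count = (1024 * r_fixed_ohm) // (r_fixed_ohm + r_ldr_ohm)
    return min(count, 1023)


if __name__ == "__main__":
    for r_ldr in (1_000_000, 10_000, 1_000, 200):  # hypothetical LDR values
        print(r_ldr, adc_reading(200, r_ldr))
```

Under these assumptions, a small fixed resistor keeps readings low unless the LDR resistance falls to a few hundred ohms. That is one situation in which a software gain adjustment like the one described in Section 3 might help. The actual resistor placement should be checked against Figure 7.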

Light intensity is measured continuously using the photo-resistor sensor in the Arduino C++ framework. However, an IF statement in the Arduino code hands control to the incoming serial data from the FEA system.

This study adapts and incorporates open-source FEA-to-Arduino code [56]. That code uses the Affectiva FEA software development kit (SDK), installed on a Mac (OS X) and run through the Xcode application, to control a servo motor. The Affectiva FEA application detects whether an individual is smiling, angry or surprised, and also measures eye contact engagement level. The Arduino microcontroller reads the data stream from the Affectiva processing output of the camera system over a USB serial connection. It then controls the triangulation of the servo motors so that the pupils operate within parameters similar to those of the human eye, depending on the user’s FE. The emulative dilation process in response to positive and negative emotion operates between two values corresponding to the maximum and minimum of the pupil diameter range. A sequence generator populates values between these two ellipse parameters when simulating responses to positive and negative FE, producing a more naturalistic dilation response. This process gives the synthetic pupil a naturalistic variance in response to emotion by generating fluctuations of the iris membrane similar to those of the human eye. When the user moves out of the range of the emotion detection camera, a further IF statement in the Arduino code reverts the system to light-responsive mode.
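The mode switching and sequence generator described above could be structured along the following lines. This is a hypothetical sketch, not the adapted code from [56]. It assumes the FEA side reports only whether a face is in view, and it uses a bounded random walk as one possible way of populating values between the two ellipse parameters. The emotive bounds are passed in as inputs; the 4.6–6.4 mm average emotive range from Section 3 appears in the usage lines purely as an example.

```python
import random
from typing import List, Tuple

# Hypothetical controller logic: emotion mode when a face is in view,
# light mode otherwise. The bounded random walk is an illustrative choice.


def choose_mode(face_in_view: bool) -> str:
    """Mirror the IF statements: FEA data takes priority when present."""
    return "emotion" if face_in_view else "light"


def dilation_sequence(low_mm: float, high_mm: float, steps: int,
                      max_step_mm: float = 0.2, seed: int = 0) -> List[float]:
    """Bounded random walk between two diameters, starting at the midpoint."""
    rng = random.Random(seed)
    value = (low_mm + high_mm) / 2.0
    sequence = []
    for _ in range(steps):
        value += rng.uniform(-max_step_mm, max_step_mm)
        value = max(low_mm, min(high_mm, value))  # stay inside the range
        sequence.append(value)
    return sequence


def next_targets(face_in_view: bool, light_target_mm: float,
                 emotive_range_mm: Tuple[float, float],
                 steps: int = 10) -> Tuple[str, List[float]]:
    """Return the active mode and the pupil targets for the next few updates."""
    mode = choose_mode(face_in_view)
    if mode == "light":
        return mode, [light_target_mm] * steps
    low, high = emotive_range_mm
    return mode, dilation_sequence(low, high, steps)


if __name__ == "__main__":
    print(next_targets(False, 3.5, (4.6, 6.4), steps=3))
    mode, seq = next_targets(True, 3.5, (4.6, 6.4), steps=5)
    print(mode, [round(v, 2) for v in seq])
```

A random walk produces gradual fluctuations rather than independent jumps between the bounds. The step size (`max_step_mm`) is a tuning parameter chosen here for illustration.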

7. Robotic Eye Testing and Calibration

The pupil reflex of the human eye to light stimuli is measured using a custom-built biometric headset, depicted in Figure 8, based on a previous study in pupil detection technology and light inference [57]. The head-mounted device incorporates three components. The first is a real-time pupil detection camera. The second is an open-source application, PupilLabs (pupil-labs.com, accessed on 6 April 2021), which calculates the circumference, diameter and acceleration of the pupil during contraction and dilation. The third is a LUX meter to measure environmental light levels.

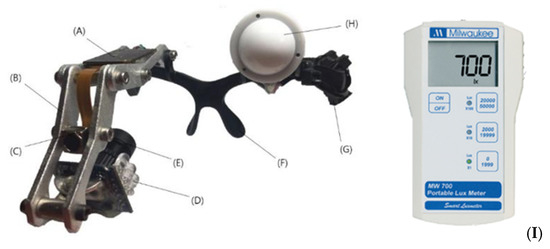

Figure 8.

Pupil and light sensor headset for measuring pupil fluctuations: (A) tracking sensor; (B) aluminium arm; (C) target camera; (D) infrared LEDs; (E) pupil-tracking camera; (F) 3D printed ABS nose-pad; (G) LUX sensor UI output; (H) removable light sensor; (I) multilevel LUX meter.

The LUX meter and the infrared LEDs on the pupil tracking camera are adjustable to calibrate the equipment. The pupil tracking headset monitors the DEA pupils by distinguishing the dark and light pixels of the eye. The same evaluation methodology is used to measure the robotic eyes, and the results are cross-analysed by comparatively evaluating the data output from the PupilLabs application. The test for examining pupil responses to light intensity is modelled on a previous study [58]. It uses a 300 W, 0–4000 lumen LED studio light with a dimmer switch for manually increasing brightness to induce a measurable natural photopic pupil reflex. The participant sits exactly 1 m from the light source to reduce spatial inconsistency between the user and the stimulus. The light intensity from the LED source is registered by the head-mounted LUX meter, which has a range of 0–20,000 lux. The test starts at zero light, and intensity is increased in increments of 500 lumens up to 5000 lumens using the manual dimmer switch. At each step, the output of the LUX meter is paired with the pupil size data from the PupilLabs application.
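The stepped light protocol above runs from 0 to 5000 in increments of 500, giving eleven levels. The pairing of light readings with pupil data at each level can be organised as follows. The function names and the per-level averaging are hypothetical choices for illustration, because the text does not describe how repeated samples at each level were aggregated. The sample values in the usage lines are placeholders, not measured data.

```python
from typing import Dict, List

# Hypothetical bookkeeping for the stepped light test.


def light_levels(start: int = 0, stop: int = 5000, step: int = 500) -> List[int]:
    """Dimmer set points from start to stop (inclusive) in fixed steps."""
    return list(range(start, stop + 1, step))


def mean_pupil_per_level(samples: Dict[int, List[float]]) -> Dict[int, float]:
    """Average the pupil diameters recorded at each light level, in order."""
    return {level: sum(values) / len(values)
            for level, values in sorted(samples.items()) if values}


if __name__ == "__main__":
    print(light_levels())
    placeholder = {0: [6.1, 6.3], 500: [5.2, 5.0]}  # not measured data
    print(mean_pupil_per_level(placeholder))
```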

The robotic eye experiment compares the actuation range of the DEA with the natural pupil dilation to light. The LED light source is reset to zero and then increased in 500-lumen increments up to 5000 lumens. The robotic eyes were implanted into an RHR named Baudi, shown in Figure 9, which was placed within range of the pupil-tracking camera (manual configuration), so that the diameter of the synthetic pupils could be read, and 1 m away from the light source. The Arduino code was adjusted to calibrate the servo-to-voltage input so that the DEA pupil diameter of the robotic eye matched that of the human eye at the same luminosity. The objective of this approach is to determine whether the robotic eyes can function within the same pupil range as the human eyes during light processing. The evaluation method for examining emotional pupil responses is modelled on a previous study [59] that used negative and positive video stimuli to evoke a pupil response. In the test procedure, the subject is seated 1 m from a screen and observes each video stimulus for 60 s while the pupil-tracking headset monitors pupil rate and frequency in real time.
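Matching the DEA to the human pupil "at the same luminosity" requires a target diameter for any light level, including levels between the 500-lumen steps. One simple way to obtain it, assumed here rather than taken from the study's Arduino code, is linear interpolation over recorded (lumens, diameter) pairs. The example table is illustrative only: its 1000 and 3000 lumen entries echo the average values reported in Section 8 (5–3 mm) and its 0 lumen entry echoes the 7 mm maximum, but it is not the study's data set.

```python
from bisect import bisect_left
from typing import List, Tuple


def target_diameter(table: List[Tuple[float, float]], lumens: float) -> float:
    """Linearly interpolate the human pupil diameter (mm) at a given light level.

    `table` holds (lumens, diameter_mm) pairs sorted by lumens. Light levels
    outside the table are clamped to its first or last diameter.
    """
    xs = [x for x, _ in table]
    if lumens <= xs[0]:
        return table[0][1]
    if lumens >= xs[-1]:
        return table[-1][1]
    i = bisect_left(xs, lumens)  # first entry with x >= lumens
    (x0, y0), (x1, y1) = table[i - 1], table[i]
    return y0 + (y1 - y0) * (lumens - x0) / (x1 - x0)


# Illustrative calibration table, not measured data.
HUMAN_TABLE = [(0.0, 7.0), (1000.0, 5.0), (3000.0, 3.0)]

if __name__ == "__main__":
    for level in (0, 500, 2000, 4000):
        print(level, target_diameter(HUMAN_TABLE, level))
```

Clamping at the ends reflects that the calibration cannot say anything about light levels it never measured.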

Figure 9.

Robotic eye installation in RHR ‘Baudi’ for testing. Left: Exoskeleton. Right: Finished robot with skin and gelatin eye membranes.

Light measurements are taken regularly to ensure that pupil responses are emotional and not a result of light interference: the emotive pupil test uses the pupil-tracking camera and light sensor headset from the previous experiment to confirm that ambient light and screen brightness do not significantly affect pupil movement. The data are exported from PupilLabs as .txt files for analysis. The experiment employs six videos divided into two categories: positive (Videos A–C) and negative (Videos D–F). The frequency, rate and range data extracted from the living pupil are scripted into the Arduino control system, and the robotic eyes pulsate accordingly. The PupilLabs biometric analysis software then monitors the dilation of the robotic eyes, and these data are compared against the human eye data to determine accuracy. The objective of this method is to determine which algorithm produces the most accurate pupil pulsation sequence for positive and negative emotion; the selected algorithms are then used to configure the robotic eyes' pupil dilation responses to positive and negative FE.

8. Robotic Eye Test Results

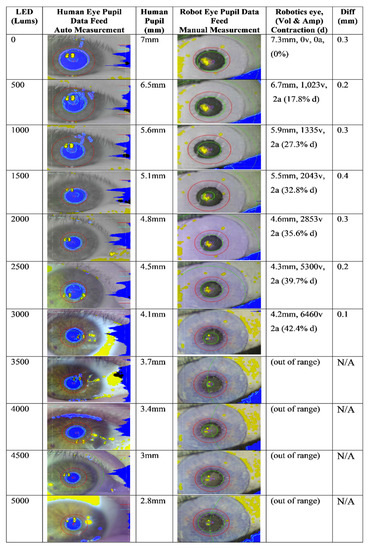

The results of the robotic eye light test indicate that the DEAs functioned between 7.3 mm and 4.1 mm, a 42.4% overall decrease, with an average (optimal) range of 7.3 mm to 4.6 mm (0–2853 V @ 2 A). The DEAs were unable to contract past 4.1 mm due to the limitations of the gelatin iris overlay. These results fall short of previous DEA test results [60], which suggest that the maximum strain for silicone-based EAPs is 63%; the additional stress of the gelatin iris overlays and of running two EAPs on one circuit accounts for the 20.6 percentage-point reduction in strain. The robotic eyes were also unable to map the size of the human pupils precisely because of the incremental steps of the servos, which resulted in a fractional difference in size between the human pupil and the synthetic DEA pupil.
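The fractional mismatch caused by the servo's incremental steps can be illustrated with a simple model. The sketch assumes, purely for illustration, that pupil diameter varies linearly with servo position between the measured DEA limits (7.3 mm open, 4.1 mm closed) and that those limits correspond to hypothetical servo positions 0 and 90. The real servo-to-voltage-to-diameter relationship in the study is not given here and is unlikely to be exactly linear. Under this model, rounding to the nearest whole position leaves a residual error of at most half a step.

```python
from typing import Tuple

D_OPEN, D_CLOSED = 7.3, 4.1     # measured DEA limits (mm)
POS_OPEN, POS_CLOSED = 0, 90    # hypothetical servo positions for those limits

# Diameter change per whole servo step under the assumed linear model.
STEP_MM = (D_OPEN - D_CLOSED) / (POS_CLOSED - POS_OPEN)


def diameter_to_servo(target_mm: float) -> Tuple[int, float]:
    """Return the nearest whole servo position for a target pupil diameter,
    and the diameter that position actually produces under the linear model."""
    d = min(max(target_mm, D_CLOSED), D_OPEN)       # DEA cannot go beyond its limits
    frac = (D_OPEN - d) / (D_OPEN - D_CLOSED)       # 0 = fully open, 1 = fully closed
    pos = round(POS_OPEN + frac * (POS_CLOSED - POS_OPEN))
    achieved = D_OPEN - (pos - POS_OPEN) / (POS_CLOSED - POS_OPEN) * (D_OPEN - D_CLOSED)
    return pos, achieved


if __name__ == "__main__":
    for target in (7.3, 5.0, 4.6, 2.8):
        pos, got = diameter_to_servo(target)
        print(f"target {target} mm -> pos {pos}, achieved {got:.3f} mm")
```

A target of 2.8 mm (the human minimum under bright light) is clamped to the 4.1 mm DEA limit, which mirrors the range shortfall described above; targets inside the range are reproduced only to within half a step.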

Although the robotic eyes were unable to function within the maximum and minimum range of the human eye under alternating light stimuli (7–2.8 mm), they operated within the scope of previous studies [18,61] for average pupil dilation to light (1000–3000 lumens): 5–3 mm for the human eye versus 5.1–4.2 mm for the robotic eye, a 1.1 mm difference and a 75.8% accuracy rating. Per the results of a recent study, the maximum and minimum human pupil range to emotion is 3.7–7.6 mm. This range exceeds the EAP range of 4.2–7.3 mm (0.8 mm difference, 79.4% accuracy), as shown in Figure 10. However, the robotic eyes, operating between 7.3 mm and 4.2 mm (0–6460 V), cover the average pupil range to emotion (4.6–6.4 mm) [22]. This voltage range is lower than those of previous studies [31,32] (0–7000/8000 V), an improvement achieved by replacing graphite with graphene. Therefore, although the robotic eyes fall slightly short of the maximum and minimum range of the human pupil reflex to emotional stimuli, they operate within the average pupil response range to emotion.
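The range comparisons above can be checked with a few lines of arithmetic. One reading consistent with the reported figures, which is our interpretation rather than a definition stated in the study, is that each "difference" is the gap between the widths of the human and robotic ranges, and that operating "within the average range" means the robotic range covers the human one. The accuracy percentages are not recomputed because their formula is not given.

```python
from typing import Tuple

Range = Tuple[float, float]  # (a, b) in mm, in either order


def width(r: Range) -> float:
    """Span of a diameter range in mm."""
    return abs(r[0] - r[1])


def covers(outer: Range, inner: Range) -> bool:
    """True if `outer` spans every diameter in `inner`."""
    lo_o, hi_o = sorted(outer)
    lo_i, hi_i = sorted(inner)
    return lo_o <= lo_i and hi_i <= hi_o


# Values quoted in the text (mm).
light_human, light_robot = (5.0, 3.0), (5.1, 4.2)
emotion_human_max, emotion_robot = (3.7, 7.6), (4.2, 7.3)
emotion_human_avg = (4.6, 6.4)

print(round(width(light_human) - width(light_robot), 1))          # 1.1
print(round(width(emotion_human_max) - width(emotion_robot), 1))  # 0.8
print(covers(emotion_robot, emotion_human_avg))                   # True
print(covers(emotion_robot, emotion_human_max))                   # False
```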

Figure 10.

Comparative light test of the human and robotic eyes to alternating light.

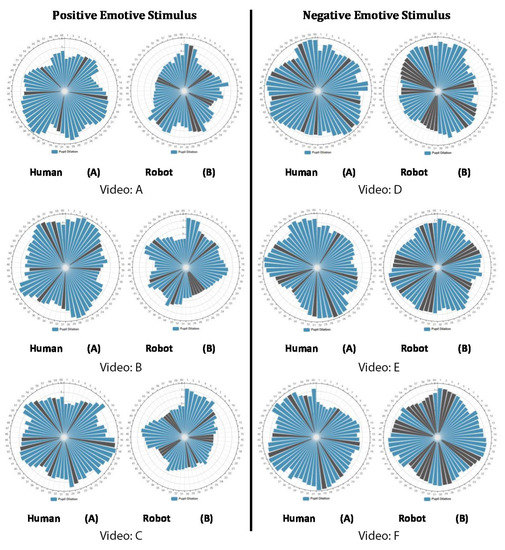

The results of the robot pupil calibration to emotional stimuli suggest that the human pupil responded to positive stimuli with fluctuations of higher frequency, rate and range than to negative stimuli. The following results show the accuracy of the robotic eyes' DEA pulsation algorithm when analysed against the natural pupil reflex of the human eye during the observation of negative and positive video stimuli.

8.1. Results of Positive Video Stimulus Experiment

Video A: human eye range 3.3–5.9 mm, Freq 17, Avg Accel 12.4 mm/s. Robotic eye algorithm configuration: Range (pos = 35; pos <= 45; pos), Freq: *10, delay(10). Robot eye range 7.1–4.3 mm, Freq 24, Avg Accel 16.65 mm/s. The findings indicate Acc: 26.3% (Err: 73.7%) and low consistency in readings (0.0274). These results suggest the algorithm functioned with a low level of precision over 60 s.

Video B: human eye range 4.3–7.1 mm, Freq 19, Avg Accel 13.5 mm/s. Robotic eye algorithm configuration: Range (pos = 56; pos <= 72; pos), Freq: *19, delay(11). Robot eye range 7.4–4.3 mm, Freq 31, Avg Accel 5.69 mm/s. The findings indicate Acc: 65.9% (Err: 34.1%) and variance between the data fields: 0.5158. The results indicate the algorithm functioned with moderate accuracy over 60 s.

Video C: human eye range 3.7–5.9 mm, Freq 15, Avg Accel 12.9 mm/s. Robotic eye algorithm configuration: Range (pos = 37; pos <= 45; pos), Freq: *11. Robot eye range 7.2–4.3 mm, Freq 26, Avg Accel 6.32 mm/s. The comparative analysis indicates Acc: 28.1% (Err: 71.9%) and variance between the data sets: 0.3301. The results indicate the pupil dilation and contraction algorithm functioned with a low level of accuracy over 60 s.

Therefore, the Video B algorithm achieved the highest accuracy and consistency for controlling the DEA pupil pulsation in the Arduino script when responding to positive FEA during HRI. However, Figure 11 shows that in Videos A, B and C the robotic eyes spent significant periods in the 4.1–4.4 mm state because of the limited range of the DEA, which prevented pupil sequencing below 4.1 mm when mapping the human pupil responses. This irregularity is most likely a result of surface oscillation interference from the high electrical current applied to the DEA at the upper limits of radial actuation.
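The "Range (pos = 56; pos <= 72; pos)" notation appears to abbreviate an Arduino-style `for` loop that steps a servo through consecutive positions and pauses `delay(...)` milliseconds at each, as in the common Servo library sweep pattern. The sketch below models that reading in Python. The `write` callback is a hypothetical stand-in for `Servo.write()`, the return sweep (contraction after dilation) is assumed, and the "Freq" multiplier is left out because its exact role is not specified in the text.

```python
import time
from typing import Callable, List


def sweep_positions(start: int, end: int) -> List[int]:
    """Positions visited by `for (pos = start; pos <= end; pos++)`."""
    return list(range(start, end + 1))


def pulse_cycle(start: int, end: int) -> List[int]:
    """One assumed dilate-contract cycle: sweep up to `end`, then back down to `start`,
    mirroring the two loops of the usual Arduino sweep pattern."""
    up = sweep_positions(start, end)
    return up + up[::-1]


def run_cycle(start: int, end: int, delay_ms: int,
              write: Callable[[int], None],
              sleep: Callable[[float], None] = time.sleep) -> int:
    """Drive one cycle and return its nominal duration in milliseconds
    (servo travel time is ignored)."""
    positions = pulse_cycle(start, end)
    for pos in positions:
        write(pos)              # hypothetical stand-in for Servo.write(pos)
        sleep(delay_ms / 1000)  # Arduino delay() takes milliseconds
    return len(positions) * delay_ms


if __name__ == "__main__":
    written: List[int] = []
    total = run_cycle(56, 72, 11, written.append, sleep=lambda s: None)
    print(total, written[:3], written[-3:])
```

With the Video B values (56–72, delay 11) one nominal cycle under these assumptions visits 34 positions and takes 34 × 11 = 374 ms.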

8.2. Results of Negative Video Stimulus Experiment

Video D: human eye range 5.1–6.9 mm, Freq 9, Avg Accel 4.1 mm/s. Robotic eye algorithm configuration: Range (pos = 45; pos <= 57; pos), Freq: *9, delay(10). Robot eye range 7.3–5.1 mm, Freq 11, Avg Accel 7.65 mm/s. The comparative analysis indicates Acc: 20.9% (Err: 79.1%) and variance between the data fields: 0.1145. These results indicate the pupil algorithm functioned with low accuracy over 60 s.

Video E: human eye range 3.6–5.9 mm, Freq 7, Avg Accel 3.5 mm/s. Robotic eye algorithm configuration: Range (pos = 35; pos <= 45; pos), Freq: *7, delay(12). Robot eye range 7.3–5.4 mm, Freq 12, Avg Accel 8.52 mm/s. The comparative analysis indicates Acc: 32.6% (Err: 67.4%) and variance between the data sets: 0.2317. These results suggest the algorithm functioned with low precision over 60 s.

Video F: human eye range 3.9–6.9 mm, Freq 6, Avg Accel 2.9 mm/s. Robotic eye algorithm configuration: Range (pos = 37; pos <= 57; pos), Freq: *6, delay(14). Robot eye range 7.3–5.7 mm, Freq 5, Avg Accel 3.21 mm/s. The comparative analysis indicates Acc: 60.4% (Err: 39.6%) and variance between the data sets: 0.122. The results suggest that the pupil algorithm functioned with moderate-low accuracy over 60 s.

Thus, the pupil dilation algorithm from Video F achieved the highest level of accuracy and consistency in the negative data set, and these data configure the robotic eyes' responses to negative FEA to enhance pupil interfacing during HRI. The pupil frequency, range and acceleration rates were marginally randomised to give the robotic eyes more organic movement across repeated 60 s loops. For example, the randomised positive emotive algorithm (Video B) is Range (pos = random(54, 58); pos2 <= random(70, 74)), Freq: *10 + random(1, 4), delay((3, 7); ±4 ms), and the randomised negative emotive algorithm (Video F) is Range (pos = random(35, 39); pos2 <= random(55, 59)), Freq: *3 + random(1, 3), delay((12, 16); ±4 ms).
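The randomised sweep bounds can be sketched as below. Arduino's `random(min, max)` returns an integer from `min` to `max - 1`, which Python's `random.Random.randrange(min, max)` mirrors, so `random(54, 58)` yields 54–57. Drawing fresh bounds for every pulse cycle is our assumption about how the randomisation was applied, `plan_cycles` is a hypothetical helper, and the delay jitter notation is not modelled.

```python
import random
from typing import List, Tuple


def randomised_bounds(rng: random.Random,
                      lower: Tuple[int, int],
                      upper: Tuple[int, int]) -> Tuple[int, int]:
    """Draw sweep bounds as Arduino random(min, max) would (max is exclusive)."""
    return rng.randrange(*lower), rng.randrange(*upper)


def plan_cycles(n: int, lower: Tuple[int, int], upper: Tuple[int, int],
                seed: int = 0) -> List[Tuple[int, int]]:
    """Hypothetical helper: one (pos, pos2) pair per pulse cycle, reproducible via seed."""
    rng = random.Random(seed)
    return [randomised_bounds(rng, lower, upper) for _ in range(n)]


# Video B (positive) and Video F (negative) bounds quoted in the text.
positive = plan_cycles(5, (54, 58), (70, 74), seed=1)
negative = plan_cycles(5, (35, 39), (55, 59), seed=1)
print(positive)
print(negative)
```

Seeding the generator keeps a run reproducible for analysis while still breaking up the audible rhythm of a fixed loop.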

Pupil analysis of the DEA pulsations was more fluid, with less interference, during the negative stimuli than during the positive stimuli, as the required range of actuation lay within the range of the DEA. The robotic eyes were also tested with blink functions enabled. Blinking registered as an anomaly in the Arduino serial monitor; however, the blink duration (0.1–0.4 s) and frequency (10–15 per min) did not affect the light or emotional responses of the robotic eyes, owing to the 0.3 s system delay.

9. Analysis of the Light and Emotion Test Results

Unlike the pupil and DEA range examinations, the analysis, calibration and testing of the robotic eyes to pulsate within the frequencies of the human pupil reflex during the observation of positive and negative stimuli raised several issues.

1. Blinking disrupted the mapping of the human pupil in the PupilLabs application, resulting in missing data entries. To make the data coherent for review, missing data fields were reconstructed by averaging the preceding and following registered pupil measurements. Similar data loss occurred in the robotic eye test, as the application frequently stopped and restarted when tracking the DEA pupil, as shown in Figure 11. Potential causes include the irregular elliptical movement of the DEA, poor edge detection by the machine learning (ML) model and external light interference arising from DEA dynamics.

Figure 11.

Human and Robot Pupil Dilation to Positive and Negative Emotional Video Stimulus for 60 s. The black lines indicate reconstructed data fields. Hyperlinks to the videos are available in the GitHub repository github.com/carlstrath/Robotic_Eye_System (accessed on 22 August 2021).

2. Although the head-mounted LUX meter indicated that ambient lighting was stable and similar during the positive and negative video stimuli (304–342 lux), the on-screen images were brighter and changed more rapidly during the positive stimuli than during the negative stimuli. Image brightness and rapid imagery may therefore have influenced pupil dynamics; however, this is difficult to verify scientifically due to the subjective nature of the emotive pupil dilation response.

3. Before the pupil pulsation was randomised, the rhythmic patterns of servomotor noise replayed over 60 s loops became distracting over time. Although this issue did not affect the functionality of the robotic eyes, randomising the variables minimised noticeable patterns in the servo noise. However, random pulsation reduced robotic eye accuracy by approx. 10–15% when re-analysed against the human pupil data.
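The gap reconstruction described in item 1 can be expressed as a short function. It is a sketch under stated assumptions: missing samples are represented as `None`, a run of consecutive missing samples is filled with the mean of the nearest valid samples on either side, and gaps at the start or end of a recording are left empty because only one neighbour exists. How the study handled those two cases is not stated.

```python
from typing import List, Optional


def fill_gaps(samples: List[Optional[float]]) -> List[Optional[float]]:
    """Replace missing samples (None) with the mean of the nearest valid
    samples before and after the gap. Leading or trailing gaps stay None
    (an assumption: only one neighbour exists there)."""
    filled = list(samples)
    n = len(samples)
    i = 0
    while i < n:
        if samples[i] is not None:
            i += 1
            continue
        j = i
        while j < n and samples[j] is None:
            j += 1
        # samples[i:j] is a run of missing values; samples[i - 1] is valid if i > 0
        if i > 0 and j < n:
            value = (samples[i - 1] + samples[j]) / 2
            for k in range(i, j):
                filled[k] = value
        i = j
    return filled


if __name__ == "__main__":
    print(fill_gaps([4.0, None, 5.0, None, None, 6.0]))
```

Averaging the two neighbours keeps the reconstructed values inside the observed range, but it also flattens any genuine pupil movement that happened during a blink, so reconstructed points are best marked separately, as the black lines in Figure 11 do.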

The test results provided data for calibrating the robotic eyes to respond to emotional FE with a moderate-low level of accuracy. These outcomes reflect reliability and consistency issues in the data arising from mapping and alignment problems with the pupil analysis software. Furthermore, the relationship between the human mind (interpretation of data), the visual cortex and the stimulus is too complex and variable to emulate with high precision.

10. Human-Robot Interaction Experiment

The robotic eye system installed in the RHR 'Baudi', pictured in Figure 9, was compared with a similar robot named 'Euclid' with non-dilating acrylic eyes, shown in Figure 12. This experiment is part of a broader study into modelling user preference for embodied artificial intelligence and appearance in realistic humanoid robots [62]. To minimise aesthetic differences, both the robotic and acrylic eyes used the same iris image, and test subjects were not told about the dilating robotic eye system before the experiment to avoid biasing their responses.

Figure 12.

Realistic Humanoid Robot Euclid with non-dilating acrylic eyes. Left: exoskeleton. Right: RHR with skin.

The HRI experiment involved 20 participants and was based on similar HRI studies [63,64]. Test subjects were recruited from university students taking core modules in fields relating to computing and AI, including computer programming, application design and AI games programming. The gaze attention of test subjects was measured throughout the experiment with a pupil-tracking camera. The HRI test was divided into two 10 min evaluations, one for each robot, followed by a questionnaire on the appearance and functionality of the robotic eyes.

HRI Test Results and Analysis

Functionality: Twelve out of twenty (60%) cited Euclid's eyes as moving the most human-like. Of that subset, 5/12 explained that the movement, direction and eye contact interaction made the RHR appear lifelike, and 2/12 suggested that Euclid made eye contact with them more frequently, which made for a more authentic HRI [65]. Four out of twelve argued that Euclid's eyes moved left/right and up/down with greater synchronicity, and 1/12 stated that Euclid's eyes appeared to blink less randomly than Baudi's. Eight out of twenty (40%) of the test subjects stated that Baudi's eyes moved more realistically than Euclid's. Of this subset, 4/8 explained that Baudi's eyes moved more realistically and made eye contact more frequently, 2/8 argued that Baudi's eyes blinked more humanistically than Euclid's, and 2/8 mentioned Baudi's dilating pupils as a significant factor in their decision. However, across the total data set, 18/20 test subjects did not notice Baudi's pupil dilation reflex during HRI. These results suggest that Euclid's eyes moved more realistically than Baudi's. As the test subjects were not informed of Baudi's pupil dilation reflex until the end of the experiment, this component appears to have gone mostly unnoticed. Potential reasons for this outcome include the limited range of the pupil dilation reflex due to the gelatin overlay and distraction from more prominent facial features [66,67,68]. Furthermore, 18/20 (90%) of the test subjects stated that eye movement and contact were more significant than pupil dilation in determining the authenticity of the robotic eyes, consistent with the UV [69].

Appearance: Fifteen out of twenty (75%) participants stated that Euclid's eyes appeared more realistic than Baudi's. Of those, 9/15 suggested that the colour and shiny surface of Euclid's eyes made them look more genuine, 5/15 described the pupils as darker, which made the eyes look human, and 1/15 gave no clear explanation for their decision.

Four out of twenty (20%) stated that Baudi's eyes appeared more realistic because they looked alive; 2/4 of this subset explained that, although Euclid's eyes looked human-like, they appeared cold and dead, whereas Baudi's eyes appeared alive. This result coincides with previous studies of the UV [70]. One out of twenty (5%) could not decide, as both eyes looked equally realistic. With 75% citing the acrylic eyes as more realistic than the gelatin DEA eyes, the results suggest that the robotic eyes developed in this study lack the visual quality of the standard acrylic eyes used in RHR ocular design. Interestingly, gaze attention was marginally higher for Baudi (78%) than for Euclid (75%); however, as the difference is marginal, it is difficult to draw a definitive conclusion from the eye-tracking data, as in similar group research examining gaze interaction in the UV [71]. A lack of authentic human presence may also be a factor, as in previous research on humanoid avatars [72].

11. Conclusions

Unlike previous prototypes, the robotic eyes developed and tested in this study can respond to both light and emotion. However, much like previous dilating artificial eyes, they lack the aesthetic quality of the human eye. The novel gelatin print and 3D iris moulding method developed in this study effectively captured the intricate details of the human iris, which is difficult to achieve with the traditional hand-painted methods used in previous robotic eye systems. The robotic eyes are formed from many different components that fit together to form the internal and external structure of the system. Thus, the robotic eyes are not as seamless as glass or acrylic artificial eyes, which affects their appearance.

As the pupils of the robotic eyes are see-through, they do not reflect light in the same way as human eyes, or the black painted pupils of the acrylic artificial eyes.

However, this difference in light reflection is only noticeable in direct sunlight, not under ambient room lighting. The robotic eyes achieved a high level of functional accuracy by implementing an FEA camera system in the left eye and a photo-resistor in the right, allowing them to actuate proximal to the human eye dilation reflex under average light conditions and emotional stimuli. The novel robotic eye configuration accurately emulates the average human pupil dilation to light (0.1–0.4 mm diff.), and the graphene EAP permitted light permeation up to 3500 lumens, which covers the average light permeation of the human iris (1000–3000 lumens). Although the robotic eyes can operate within the average pupil range of the human eye in response to light and emotion, applying the gelatin iris membrane on top of the graphene DEA increased the voltage input and reduced the operational range. This configuration made pupil sequences difficult to observe with the robotic eyes and head in motion.

On reflection, the pupil analysis software was insufficient for obtaining accurate positional data, which required significant reconstruction of the data fields to maintain consistency and permit an equitable comparative analysis. Non-computational methods of pupil tracking may therefore prove more accurate than ML approaches, given the mapping issues with the DEA and interference from blinking during real-time analysis. Although the robotic eyes were unable to match the pupil diameter of the human eye exactly (a 0.0 mm difference) because of the incremental steps of the servos, the synthetic pupils came within 0.4 mm, indicating a high level of functional accuracy. The synthetic pupils operate within the average range of human pupil dilation during emotional stimuli, as configured using the results of the literature review and the maximum and minimum EAP ranges from the pupil reflex to light input test. The results of the eye calibration test provide further grounding for the theory that irregular aesthetics supersede quality functionality, and vice versa [73,74]. Furthermore, the consistency of eye contact behaviour in the HRI experiment had a more significant impact on RHR authenticity than actuated or non-actuated robotic pupils and aesthetic accuracy.

These findings suggest that effective eye contact interaction in HRI contributes more to the perceived authenticity of robotic eyes than pupil dilation does. The literature review uncovered a flaw in the aesthetic detailing of the artificial irises of previous robotic eyes, as these components were hand-painted onto the DEA, which resulted in an inaccurate visual representation of the iris. Finally, unlike previous research, this study measured the range, frequency and acceleration of the pupil as well as the effect of pupil dilation and aesthetics during HRI.

12. Future Work

The robotic eyes demonstrated in this research indicate a high potential for accurately replicating the functionality of the human eye. However, future research should consider methods of improving the aesthetics of the artificial eyes to promote more naturalistic HRI. Although eye contact behaviour proved more significant than pupil dynamics in the HRI experiment, this may change as RHRs become more realistic. Per the UV, minor irregularities (such as static pupils) become more noticeable with greater levels of human likeness. Thus, future research using more authentic RHRs may establish the significance of emulating natural pupil interfacing in HRI.

Supplementary Materials

The following are available online at https://github.com/carlstrath/Robotic_Eye_System.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Institutional Review Board of Staffordshire University and was approved on 9 July 2019.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Strathearn, C.; Ma, M. Biomimetic pupils for augmenting eye emulation in humanoid robots. Artif. Life Robot. 2018, 23, 540–546. [Google Scholar] [CrossRef]

- Strathearn, C.; Ma, M. Development of 3D sculpted, hyper-realistic biomimetic eyes for humanoid robots and medical ocular prostheses. In Proceedings of the 2nd International Symposium on Swarm Behavior and Bio-Inspired Robotics (SWARM 2017), Kyoto, Japan, 29 October–1 November 2017. [Google Scholar]

- Ludden, D. Your Eyes Really Are the Window to Your Soul. 2015. Available online: www.psychologytoday.com/gb/blog/talking-apes/201512/your-eyes-really-are-the-window-your-soul (accessed on 17 February 2020).

- Kret, M. The role of pupil size in communication. Is there room for learning? Cogn. Emot. 2018, 32, 1139–1145. [Google Scholar] [CrossRef] [Green Version]

- Oliva, M.; Anikin, A. Pupil dilation, reflects the time course of emotion recognition in human vocalisations. Sci. Rep. 2018, 8, 1–10. [Google Scholar] [CrossRef] [Green Version]

- Wang, C.; Baird, T.D.; Huang, J.; Coutinho, J.D.; Brien, D.C.; Munoz, D.P. Arousal effects on pupil size, heart rate, and skin conductance in an emotional face task. Front. Neurol. 2018, 9, 1029. [Google Scholar] [CrossRef]

- Reuten, A.; Dam, M.S.; Naber, M. Pupillary responses to robotic and human emotions: The uncanny valley and media equation confirmed. Front. Psychol. 2018, 9, 774. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wuss, R. Teaching a Robot to See: A Conversation in Eye Tracking in the Media Arts and Human-Robot Interaction. 2019. Available online: http://www.interactivearchitecture.org/trashed-10.html (accessed on 17 February 2020).

- Koike, T.; Sumiya, M.; Nakagawa, E.; Okazaki, S.; Sadato, N. What makes eye contact special? Neural substrates of on-line mutual eye-gaze: A hyperscanning fMRI study. Eneuro 2019, 6, 326. [Google Scholar] [CrossRef]

- Jarick, M.; Bencic, R. Eye contact is a two-way street: Arousal is elicited by the sending and receiving of eye gaze information. Front. Psychol. 2019, 10, 1262. [Google Scholar] [CrossRef]

- Kompatsiari, K.; Ciardo, F.; Tikhanoff, V. On the role of eye contact in gaze cueing. Sci. Rep. 2018, 8, 1–10. [Google Scholar] [CrossRef] [Green Version]

- Xu, T.L.; Zhang, H.; Yu, C. See you see me: The role of eye contact in multimodal human-robot interaction. ACM Trans. Interact. Intell. Syst. 2016, 6, 2. [Google Scholar] [CrossRef] [Green Version]

- Admoni, H.; Scassellati, B. Social eye gaze in human-robot interaction: A review. J. Hum. Robot Interact. 2017, 6, 25. [Google Scholar] [CrossRef] [Green Version]

- Broz, H.; Lehmann, Y.; Nakano, T.; Mutlu, B. HRI Face-to-Face: Gaze and Speech Communication (Fifth Workshop on Eye-Gaze in Intelligent Human-Machine Interaction). In Proceedings of the 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Tokyo, Japan, 3–6 March 2013; pp. 431–432. [Google Scholar] [CrossRef]

- Ruhland, K.; Peters, C.E.; Andrist, S.; Badler, J.B.; Badler, N.I.; Gleicher, M.; Mutlu, B.; McDonnell, R. A review of eye gaze in virtual agents, social robotics and HCI. Comput. Graph. Forum 2015, 34, 299–326. [Google Scholar] [CrossRef]

- Hoque, M.; Kobayashi, Y.; Kuno, Y. A proactive approach of robotic framework for making eye contact with humans. Adv. Hum. Comput. Interact. 2014, 5, 1–12. [Google Scholar] [CrossRef] [Green Version]

- Riley-Wong, M. Energy metabolism of the visual system. Eye Brain 2010, 2, 99–116. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Spector, R.H. The pupils. In Clinical Methods: The History, Physical and Laboratory Examinations, 3rd ed.; Butterworths: Boston, MA, USA, 1990; pp. 256–272. [Google Scholar]

- Gigahertz, O. Measurements of Light. 2018. Available online: www.light-measurement.com/spectral-sensitivity-of-eye/ (accessed on 9 October 2019).

- Zandman, F. Resistor Theory and Technology; SciTech Publishing Inc.: Raleigh, NC, USA, 2002; pp. 56–58. [Google Scholar]

- Edwards, M.; Cha, D.; Krithika, S.; Johnson, M.; Parra, J. Analysis of iris surface features in populations of diverse ancestry. R. Soc. Open Sci. 2016, 3, 150424. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kinner, V.L.; Kuchinke, L.; Dierolf, A.M.; Merz, C.J.; Otto, T.; Wolf, O.T. What our eyes tell us about feelings: Tracking pupillary responses during emotion regulation processes. J. Psychophysiol. 2017, 54, 508–518. [Google Scholar] [CrossRef]

- Hoppe, S.; Loetscher, T.; Morey, S.; Bulling, A. Eye movements during everyday behaviour predict personality traits. Front. Hum. Neurosci. 2018, 12, 105. [Google Scholar] [CrossRef] [PubMed]

- Bradley, M.; Miccoli, L.; Escrig, M.; Lang, P. The pupil as a measure of emotional arousal and autonomic activation. J. Psychophysiol. 2013, 4, 602–607. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Munoz, G. How Fast is a Blink of Eyes. 2018. Available online: https://sciencing.com/fast-blink-eye-5199669.html (accessed on 14 March 2020).

- Sejima, Y.; Egawa, S.; Maeda, R.; Sato, Y.; Watanabe, T. A speech-driven pupil response robot synchronised with burst-pause of utterance. In Proceedings of the 26th IEEE International Workshop on Robot and Human Communication (ROMAN), Lisbon, Portugal, 28 August–1 September 2017; pp. 228–233. [Google Scholar] [CrossRef]

- Prendergast, K.W.; Reed, T.J. Simulator Eye Dilation Device. U.S. Patent 5900923, 4 May 1999. Available online: www.google.co.uk/patents/US5900923 (accessed on 9 April 2020).

- Simon, M. This Hyper-Real Robot Will Cry and Bleed on Med Students. 2018. Available online: www.wired.com/story/hal-robot/ (accessed on 12 January 2019).

- Schnuckle, G. Expressive Eye with Dilating and Constricting Pupils. U.S. Patent 7485025B2, 3 February 2006. Available online: https://www.google.com/patents/US7485025 (accessed on 9 April 2018).

- Zeng, H.; Wani, M.; Wasylczyk, P.; Kaczmarek, R.; Priimagi, A. Self-regulating iris based on light-actuated liquid crystal elastomer. Adv. Mat. 2017, 29, 90–97. [Google Scholar] [CrossRef] [PubMed]

- Breedon, J.; Lowrie, P. Lifelike prosthetic eye: The call for smart materials. Expert Rev. Ophthalmol. 2013, 8, 135–137. [Google Scholar] [CrossRef]

- Liu, Y.; Shi, L.; Liu, L.; Zhang, Z.; Jinsong, L. Inflated dielectric elastomer actuator for eyeball’s movements: Fabrication, analysis and experiments. In Proceedings of the SPIE Smart Structures and Materials + Nondestructive Evaluation and Health Monitoring, San Diego, CA, USA, 9–13 March 2008; Volume 6927. [Google Scholar] [CrossRef]

- Chen, B.; Bai, Y.; Xiang, F.; Sun, J.; Mei Chen, Y.; Wang, H.; Zhou, J.; Suo, Z. Stretchable and transparent hydrogels as soft conductors for dielectric elastomer actuators. J. Polym. Sci. Polym. Phys. 2014, 52, 1055–1060. [Google Scholar] [CrossRef]

- Vunder, V.; Punning, A.; Aabloo, A. Variable-focal lens using an electroactive polymer actuator. In Proceedings of the SPIE Smart Structures and Materials + Nondestructive Evaluation and Health Monitoring, San Diego, CA, USA, 6–10 March 2011; Volume 7977. [Google Scholar] [CrossRef]

- Son, S.; Pugal, D.; Hwang, T.; Choi, H.; Choon, K.; Lee, Y.; Kim, K.; Nam, J.-D. Electromechanically driven variable-focus lens based on the transparent dielectric elastomer. Int. J. Appl. Optics 2012, 51, 2987–2996. [Google Scholar] [CrossRef]

- Shian, S.; Diebold, R.; Clarke, D. Tunable lenses using transparent dielectric elastomer actuators. Opt. Express 2013, 21, 8669–8676. [Google Scholar] [CrossRef] [PubMed]

- Lapointe, J.; Boisvert, J.; Kashyap, R. Next-generation artificial eyes with dynamic iris. Int. J. Clin. Res. 2016, 3, 1–10. [Google Scholar] [CrossRef]

- Lapointe, J.; Durette, J.F.; Shaat, A.; Boulos, P.R.; Kashyap, R. A ‘living’ prosthetic iris. Eye 2010, 24, 1716–1723. [Google Scholar] [CrossRef] [PubMed]

- Abramson, D.; Bohle, G.; Marr, B.; Booth, P.; Black, P.; Katze, A.; Moore, J. Ocular Prosthesis with a Display Device. 2013. Available online: https://patents.google.com/patent/WO2014110190A2/en (accessed on 24 April 2020).

- Mertens, R. MIT Robotic Labs Makes a New Cute Little Robot with OLED Eyes. 2009. Available online: https://www.oled-info.com/mit-robotic-labs-make-new-cute-little-robot-oled-eyes (accessed on 12 June 2019).

- Amadeo, R. Sony’s Aibo Robot Dog Is Back, Gives Us OLED Puppy Dog Eyes. 2017. Available online: https://arstechnica.com/gadgets/2017/11/sonys-aibo-robot-dog-is-back-gives-us-oled-puppy-dog-eyes/ (accessed on 12 February 2020).

- Blaine, E. Eye of Newt: Keep Watch with a Creepy, Compact, Animated Eyeball. 2018. Available online: www.makezine.com/projects/eye-of-newt-keep-watch-with-a-creepy-compact-animated-eyeball/ (accessed on 15 February 2020).

- Castro-González, Á.; Castillo, J.; Alonso, M.; Olortegui-Ortega, O.; Gonzalez-Pacheco, V.; Malfaz, M.; Salichs, M. The effects of an impolite vs a polite robot playing rock-paper-scissors. In Social Robotics, Proceedings of the 8th International Conference, ICSR 2016, Kansas City, MO, USA, 1–3 November 2016; Agah, A., Cabibihan, J.-J., Howard, A., Salichs, M.A., He, H., Eds.; Springer: Cham, Switzerland, 2016; Volume 9979. [Google Scholar] [CrossRef]

- Gu, G.; Zhu, J.; Zhu, X. A survey on dielectric elastomer actuators for soft robots. J. Bioinspiration Biomim. 2017, 12, 011003. [Google Scholar] [CrossRef]

- Hajiesmaili, E.; Clarke, D.R. Dielectric elastomer actuators. J. Appl. Phys. 2021, 129, 151102. [Google Scholar] [CrossRef]

- Minaminosono, A.; Shigemune, H.; Okuno, Y.; Katsumata, T.; Hosoya, N.; Maeda, S. A deformable motor driven by dielectric elastomer actuators and flexible mechanisms. Front. Robot. AI 2019, 6, 1. [Google Scholar] [CrossRef] [Green Version]

- Shintake, J.; Cacucciolo, V.; Shea, H.R.; Floreano, D. Soft biomimetic fish robot made of DEAs. Soft Robot. 2018, 5, 466–474. [Google Scholar] [CrossRef] [Green Version]

- Ji, X.; Liu, X.; Cacucciolo, V.; Imboden, M.; Civet, Y.; El Haitami, A.; Cantin, S.; Perriard, Y.; Shea, H. An autonomous untethered fast, soft robotic insect driven by low-voltage dielectric elastomer actuators. Sci. Robot. 2019, 4, eaaz6451. [Google Scholar] [CrossRef]

- Gupta, U.; Lei, Q.; Wang, G.; Jian, Z. Soft robots based on dielectric elastomer actuators: A review. Smart Mater. Struct. 2018, 28, 103002. [Google Scholar] [CrossRef]

- Panahi-Sarmad, M.; Zahiri, B.; Noroozi, M. Graphene-based composite for dielectric elastomer actuator. Sens. Actuators A Phys. 2019, 293, 222–241. [Google Scholar] [CrossRef]

- Duduta, M.; Hajiesmaili, E.; Zhao, H.; Wood, R.; Clarke, D.R. Realizing the potential of dielectric elastomer artificial muscles. Proc. Natl. Acad. Sci. USA 2019, 116, 2476–2481.

- Zurutuza, A. Graphene and Graphite, How do They Compare? 2018. Available online: www.graphenea.com/pages/graphenegraphite.WQEXOj7Q2y (accessed on 20 November 2018).

- Woodford, C. What is Graphene. 2018. Available online: www.explainthatstuff.com/graphene.html (accessed on 29 April 2020).

- Roberts, J.; Dennison, J. The photobiology of lutein and zeaxanthin in the eye. J. Ophthalmol. 2015, 20, 332–342.

- Miao, P.; Wang, J.; Zhang, C. Graphene nanostructure-based tactile sensors for electronic skin applications. Nano-Micro Lett. 2019, 11, 1–37.

- Anding, B. EmotionPinTumbler. 2016. Available online: https://github.com/BenjaminAnding/EmotionPinTumbler (accessed on 15 December 2020).

- Kassner, M.; Patera, W.; Bulling, A. Pupil: An open-source platform for pervasive eye tracking and mobile gaze-based interaction. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication, Seattle, WA, USA, 13–17 September 2014.

- Rossi, L.; Zegna, L.; Rossi, G. Pupil size under different lighting sources. Lighting Eng. 2012, 21, 40–50.

- Soleymani, M.; Pantic, M.; Pun, T. Multimodal emotion recognition in response to videos. IEEE Trans. Affect. Comput. 2012, 3, 211–223.

- Brochu, P.; Pei, Q. Advances in dielectric elastomers for actuators and artificial muscles. Macromol. Rapid Commun. 2010, 31, 10–36.

- Mardaljevic, J.; Andersen, M.; Nicolas, R.; Christoffersen, J. Daylighting metrics: Is there a relation between useful daylight illuminance and daylight glare probability? In Proceedings of the Building Simulation and Optimization Conference BSO12, Loughborough, UK, 10–11 September 2012.

- Strathearn, C.; Ma, M. Modelling user preference for embodied artificial intelligence and appearance in realistic humanoid robots. Informatics 2020, 7, 28.

- Karniel, A.; Avraham, G.; Peles, B.; Levy-Tzedek, S.; Nisky, I. One dimensional Turing-like handshake test for motor intelligence. J. Vis. Exp. JoVE 2010, 46, 2492.

- Stock-Homburg, R.; Peters, J.; Schneider, K.; Prasad, V.; Nukovic, L. Evaluation of the handshake Turing test for anthropomorphic robots. In Proceedings of the 15th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Cambridge, UK, 23–26 March 2020.

- Cheetham, M. The uncanny valley hypothesis: Behavioural, eye-movement, and functional MRI findings. Int. J. HRI 2014, 1, 145.

- Tinwell, A.; Abdel, D. The effect of onset asynchrony in audio visual speech and the uncanny valley in virtual characters. Int. J. Mech. Robot. Syst. 2015, 2, 97–110.

- Trambusti, S. Automated Lip-Sync for Animatronics—Uncanny Valley. 2019. Available online: https://mcqdev.de/automated-lip-sync-for-animatronics/ (accessed on 11 February 2020).

- White, G.; McKay, L.; Pollick, F. Motion and the uncanny valley. J. Vis. 2007, 7, 477.

- Novella, S. The Uncanny Valley. 2010. Available online: https://theness.com/neurologicablog/index.php/the-uncanny-valley/ (accessed on 14 April 2020).

- Lonkar, A. The Uncanny Valley: The Effect of Removing Blend Shapes from Facial Animation. 2016. Available online: https://sites.google.com/site/lameya17/ms-thesis (accessed on 12 April 2020).

- Garau, M.; Weirich, D. The Impact of Avatar Fidelity on Social Interaction in Virtual Environments. Ph.D. Thesis, University College London, London, UK, 2003.

- Tromp, J.; Bullock, A.; Steed, A.; Sadagic, A.; Slater, M.; Frécon, E. Small-group behaviour experiments in the COVEN project. IEEE Comput. Graph. Appl. 1998, 18, 53–63.

- Ishiguro, H. Android science: Toward a new cross-interdisciplinary framework. J. Comput. Sci. 2005, 28, 118–127.

- Schweizer, P. The truly total Turing test. Minds Mach. 1998, 8, 263–272.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).