Abstract

Virtual Reality (VR) technology is frequently applied in simulation, particularly in medical training. VR medical training often requires user input from either controllers or free-hand gestures. Nowadays, hand gestures are commonly tracked by the built-in cameras of a VR headset. Like controllers, hand tracking can be used in VR applications to control virtual objects. This research developed a VR intubation training application as a case study and applied controllers and hand tracking to four interactions, namely collision, grabbing, pressing, and release. A quasi-experimental design with 30 medical students in clinical training was used to investigate the differences between VR controllers and hand tracking in medical interactions. The subjects were divided into two groups, one using VR controllers and the other using VR hand tracking, to study interaction time and user satisfaction in seven procedures. The System Usability Scale (SUS) and the User Satisfaction Evaluation Questionnaire (USEQ) were used to measure usability and user satisfaction, respectively. The results showed no significant difference in the interaction time of any procedure. Similarly, according to the SUS and USEQ scores, satisfaction and usability did not differ significantly. Therefore, in VR intubation training, hand tracking produced results that did not differ significantly from those obtained with controllers. As medical training with free-hand gestures is more natural for real-world situations, hand tracking is expected to play an important role as user input for VR medical training. This allows trainees to recognize and correct their postures intuitively, which is more beneficial for self-learning and practicing.

1. Introduction

The continuous development and improvement of Virtual Reality (VR) devices have resulted in new applications and new head-mounted display (HMD) features. Ideas for interaction in the virtual world have accompanied the advancement of this technology, enabling users to interact as realistically as possible with the virtual environment. The purpose is to bring users into the virtual world with the same interactions as in the real world. One popular idea is to use hand tracking in place of controllers for interactions in the virtual world. This feature can be applied to learning that requires hand interaction, especially in training simulations such as those in medicine or manufacturing [1,2].

VR headsets are trending toward standalone designs that use inside-out tracking technology [3]. This technology enables the headset to perform six-degrees-of-freedom (6DoF) tracking by itself, using lights or cameras mounted on the headset to map the user’s space and create digital information about the surrounding 3D space. The cameras can also be used to detect controller positions through image processing and to perform hand-gesture recognition. Controllers and hand tracking have different characteristics, particularly in precision, that should be considered when designing VR interactions that need accurate control. VR simulations must ensure quality and a good user experience. Nowadays, many VR controllers are wireless and positionally tracked. The type of algorithm used for VR headset tracking is called Simultaneous Localization and Mapping (SLAM) [4]. This technique, along with the matching of controller LEDs across multiple headset cameras, provides controller tracking with high accuracy in 6DoF [5]. When a user moves a hand while holding a controller, the VR system displays the controller according to the position and rotation of the moving hand. The controls are based on the buttons available on the controller, which can be mapped to various user-system interactions.

Another technique in VR interaction is to use image processing for hand tracking, such as RGB-based approaches [6,7] or depth-based approaches [8,9], to detect a user’s hand. In the past, these techniques were employed in additional VR accessories that detect hand positions and gestures, such as Leap Motion (Ultraleap, Bristol, UK), Microsoft Kinect (Microsoft Corp., Redmond, WA, USA), or data gloves [10]. However, the inside-out technology [11,12] of VR headsets, such as the Oculus Quest 1 and 2 (Facebook, Inc., Menlo Park, CA, USA) and HTC Vive Cosmos (HTC Corp., New Taipei, Taiwan), makes hand tracking possible without any accessories. Hand tracking works by using the inside-out cameras on a VR headset. The headset detects the position and orientation of the user’s hands and the configuration of the fingers. Once the hands are detected, computer vision algorithms track their movement and orientation [13]. Hand tracking differs from using a controller’s buttons: the hand-tracking system interprets hand gestures, rather than button presses, as control commands, which can be used in various applications according to the developer’s design. Inside-out hand tracking is expected to become widely used because it is easy to use and needs no accessories [3]. However, hand tracking with inside-out technology may not yet provide accurate hand detection in real time. Before this technology is put into practice, further studies should investigate its effectiveness.

This research focuses on VR applications for training, where interactions are expected to produce realistic results. The interaction between the user and the application is directly related to the choice of the control scheme. This research aims to determine the difference between using controllers and hand tracking when applied to VR training applications.

2. VR Training Using Hand Tracking and Related Works

The use of hand tracking for training takes many forms in VR, with hand gestures designed for specifying commands [14]. However, hand tracking has often been used in combination with other devices or techniques to detect hand gestures [15], including Leap Motion, data gloves, marker-based tracking, and inside-out tracking. Related work on these approaches is reviewed in the following subsections.

2.1. Leap Motion

The Leap Motion Controller is a low-cost hand-gesture-sensing device. It can be attached to a VR headset to interact with the virtual environment, enabling accurate and smooth tracking of hands, fingers, and many small objects in open spaces with millimetre precision [16]. Many VR applications have benefited substantially from interaction with natural movements and from showing the user’s hands in VR. The device has been employed in the development of such applications [17], as in the following research works.

An Oculus Rift (Facebook, Inc., Menlo Park, CA, USA), a Leap Motion tracker, and 360-degree video were used in an interactive VR application for practicing oral and maxillofacial surgery [18,19,20]. This application enables trainees to participate and engage in surgical procedures with the patient’s anatomy visualization. The outcome demonstrated its utility for trainees as visual assistance in a virtual operating room simulation.

VR training for subacute stroke patients [21] explored the impact of using Leap Motion for rehabilitation. The experimental group received virtual training in combination with regular rehabilitation and was compared with a control group. All patients in the experimental group found rehabilitation with Leap Motion more enjoyable than, and preferable to, traditional treatment. These results demonstrated that virtual training with Leap Motion was a promising and feasible addition to recovery.

Many research works focused on teaching with VR systems and Leap Motion [22,23], leading to the development of skills and experiences for users. The results showed that VR assisted students in learning from interactions and 3D rendering, followed by more complex creative studies in higher education.

2.2. VR Glove

The VR glove is an additional accessory and usually provides a haptic system to enhance object interaction. The VR glove accurately recognizes hand gestures due to the direct connection of sensors on the glove. Several studies applied force feedback for VR interaction.

One study compared a VR glove with the Leap Motion sensor [24]. The hardware design used an easily simulated glove that detects hand gestures over a network, determines hand position using a Vive Tracker, and provides haptic feedback through a vibration motor. The design captures detailed hand poses based on the collision geometry of the virtual hand and the virtual object. The experiments showed that the glove captured and moved various objects in VR with significantly higher success rates than the Leap Motion sensor. Hand movements and handles were recorded to understand object manipulation, which could facilitate research in Artificial Intelligence (AI), for example by training virtual AI agents for robot gripping tasks.

A sensory glove can be used in medical training to improve the user’s skills. One of its most significant features is VR haptic feedback that stimulates muscles and nerves, allowing users to train through muscle memory. Medical students can learn and comprehend essential techniques faster than with traditional learning methods [25].

Other studies compared the Vive Controller, Leap Motion, and a data glove. Controllers were rated higher on average for ease of use and satisfaction than the other devices [26]. However, in terms of usefulness, the devices were about the same. The research also suggested that haptic feedback increases user satisfaction. In terms of the average percentage error, Leap Motion performed better than the data glove in measuring bending angle and showed high repeatability and high potential for soft finger interaction [27]. It therefore remains worthwhile to study hand tracking for interaction, including comparisons in real use cases such as medical training.

From the various works on hand tracking for medical training, we found that many studies report favourable results and suggest that hand tracking improves training or rehabilitation outcomes. However, the accuracy of the Leap Motion was limited by its sensor. The VR glove is accurate, but it is bulky and inconvenient. The current development trend for VR headsets is to embed cameras in the headset that can use RGB and depth sensing to directly detect the controller’s position or the user’s hand gestures. Therefore, it is worthwhile to investigate the effects of using controllers and hand tracking on the usability of VR training applications.

3. Interaction Design and Implementation

We designed an experiment by developing a VR application that takes users through different procedures so that the interaction time in each procedure can be compared. The case study was intubation training in a medical VR application, and the participants were medical students to ensure that the results reflect an actual use case. The interactions used in the application focused on selection and manipulation, which are basic 3D interaction tasks in VR training [28].

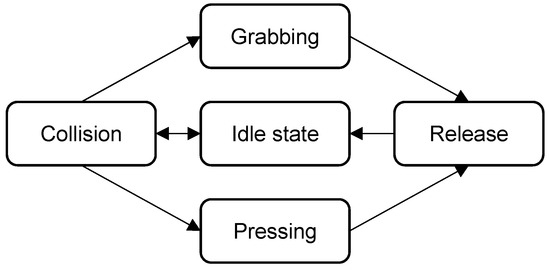

There were four main interactions in our VR application: collision, grabbing, pressing, and release. Collision was used to check the contact between the virtual hands and objects in each scene. Some objects have kinematics and can move when a virtual hand collides with them. Grabbing was used for object selection. Once an object was selected, it moved according to the virtual hand movement. Pressing was used for interactions such as pressing or squeezing an object. During the interaction, the object displayed an animation to make the user aware of it. Release was used to cancel the grabbing or pressing interaction and wait for a new command. The state diagram of the interactions is shown in Figure 1. The interaction design was the same for both the controller and hand tracking, but the commands were different, as shown in Table 1.

Figure 1.

The interaction state workflow of the VR intubation training.

Table 1.

Differences of interaction commands between controller and hand tracking.

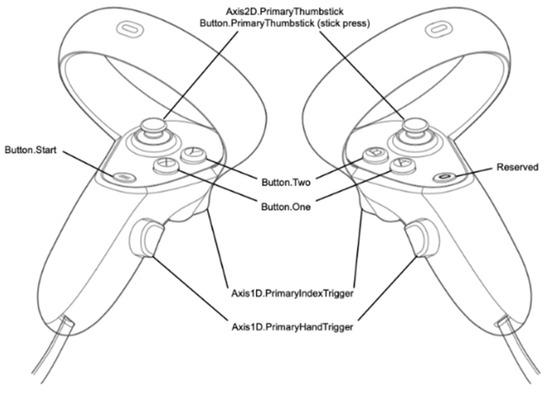
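As a rough illustration of the four interactions described above, the following Python sketch models them as a small state machine. The state names, the `step` function, and its inputs are hypothetical and are not taken from the application's source; the transitions are inferred from the prose only, and Figure 1 remains the authoritative description.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()       # hand is not touching any interactive object
    COLLIDING = auto()  # hand touches an object, no command issued yet
    GRABBING = auto()   # object is selected and follows the hand
    PRESSING = auto()   # object plays its pressing animation


def step(state, touching, command):
    """Return the next interaction state.

    touching: True while the virtual hand collides with an object.
    command:  "grab", "press", "release", or None (hypothetical labels).
    """
    if state in (State.GRABBING, State.PRESSING):
        # Only a release cancels an ongoing grab or press.
        if command == "release":
            return State.COLLIDING if touching else State.IDLE
        return state
    if not touching:
        # Commands are ignored unless the hand is in contact with an object.
        return State.IDLE
    if command == "grab":
        return State.GRABBING
    if command == "press":
        return State.PRESSING
    return State.COLLIDING
```

In this sketch the same function serves both input methods: only the source of `command` changes (a button for the controller, a recognized gesture for hand tracking), which mirrors the statement that the interaction design was shared while the commands differed.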

This study requires the use of a VR headset with controllers and hand-tracking technology. Both technologies are supported by the Oculus Quest with Touch controllers and Hand-Tracking feature, making it an appropriate device for the experiment. Therefore, the design of the interaction technique was based on the Oculus Quest with Touch controllers. When using a controller to implement interaction design, commands can be sent directly from the controller’s buttons. Our VR application for intubation training used two buttons: PrimaryHandTrigger and PrimaryIndexTrigger (as shown in Figure 2) for grabbing and pressing, respectively. Instead of seeing controllers in the display, the user sees virtual hands, which detect collisions with certain items in the scene and allow interaction.

Figure 2.

OVRInput API in Unity3D for Touch controller. (source: Oculus Quest controller mapping).

For hand tracking, we had to define our own conditions by recognizing two predefined hand gestures, grabbing and pressing (Algorithm 1). When a specified hand gesture is performed, the corresponding interaction command is triggered (Algorithm 2). The virtual hands driven by hand tracking use the same collision detection for interaction but follow the user’s hand and finger movements in real time. All implementations were developed using the Unity3D Game Engine with the OVRInput and OVRHand classes provided by the Oculus software development kit (SDK) [29,30].

| Algorithm 1. Gesture detection based on captured hand tracking | |
| --- | --- |
| 1: | gestures ← grab, pressing |
| 2: | currentGesture ← new gesture |
| 3: | procedure GestureDetector (captured hand tracking) |
| 4: |   for gesture in gestures do |
| 5: |     discard ← false |
| 6: |     for i ∈ fingerBones do |
| 7: |       distance ← ∑\|currentGesture.fingerBones[i] − gesture.fingerBones[i]\| |
| 8: |       if distance > threshold then |
| 9: |         discard ← true |
| 10: |         break |
| 11: |       end if |
| 12: |     end for |
| 13: |     if discard = false then |
| 14: |       return gesture |
| 15: |     end if |
| 16: |   end for |
| 17: | end procedure |
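The matching logic of Algorithm 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: bone positions are taken to be `(x, y, z)` tuples, the per-bone distance is assumed to be Euclidean, and the names `detect_gesture`, `saved_gestures`, and `threshold` are hypothetical rather than part of the Oculus SDK or the authors' Unity3D code.

```python
import math


def detect_gesture(current_bones, saved_gestures, threshold):
    """Return the name of the first saved gesture that matches, else None.

    current_bones:  list of (x, y, z) finger-bone positions of the tracked hand.
    saved_gestures: dict mapping a gesture name to its reference bone positions.
    threshold:      largest per-bone distance still counted as a match.
    """
    for name, reference_bones in saved_gestures.items():
        # A gesture is discarded as soon as one bone is too far away;
        # all() stops at the first failing bone, like the break in Algorithm 1.
        if all(math.dist(current, reference) <= threshold
               for current, reference in zip(current_bones, reference_bones)):
            return name
    return None
```

Because the match is evaluated afresh for every candidate gesture, a mismatch on the first gesture does not prevent a later one from being recognized.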

| Algorithm 2. Object interaction based on hand gesture | |
| --- | --- |
| 1: | procedure ObjectInteraction (hand) |
| 2: |   if object.collision = hand and gesture = grab then |
| 3: |     object.parent ← hand |
| 4: |     while gesture ≠ release do |
| 5: |       object.transform ← hand.transform |
| 6: |     end while |
| 7: |     drop object |
| 8: |   else if object.collision = hand and gesture = pressing then |
| 9: |     object.animation ← pressing animation |
| 10: |     while gesture ≠ release do |
| 11: |       continue object.animation |
| 12: |     end while |
| 13: |     object.animation ← original |
| 14: |   end if |
| 15: | end procedure |
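In a game engine, the `while` loops of Algorithm 2 would normally be spread over successive frames rather than run as blocking loops. The following Python sketch shows one possible per-frame formulation; the `InteractiveObject` class and the `update_object` function are hypothetical stand-ins and do not reproduce the authors' Unity3D implementation.

```python
class InteractiveObject:
    """Hypothetical stand-in for an interactive scene object."""

    def __init__(self, position):
        self.position = position
        self.held = False
        self.animation = "original"


def update_object(obj, hand_position, touching, gesture):
    """Advance the interaction of one object by a single frame.

    touching: True while the virtual hand collides with the object.
    gesture:  "grab", "pressing", "release", or None if nothing is recognized.
    """
    if gesture == "release":
        # Release cancels both grabbing and pressing.
        obj.held = False
        obj.animation = "original"
    elif gesture == "grab" and (touching or obj.held):
        obj.held = True
    elif gesture == "pressing" and touching:
        obj.animation = "pressing"
    if obj.held:
        # A held object follows the hand until it is released.
        obj.position = hand_position
```

Calling `update_object` once per frame keeps a grabbed object attached to the hand even when the gesture is briefly not recognized, since only an explicit release clears the `held` flag.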

4. Development of VR Intubation Training

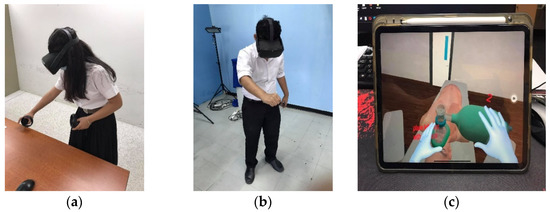

The VR intubation training consisted of seven procedures, each of which is detailed in Table 2. Table 3 shows the selection and manipulation interactions of each procedure. The endotracheal intubation sequence in VR was built to engage the trainee, and each procedure had objectives to accomplish by following the instructional text shown in VR. Figures 3 to 6 depict the virtual environments and assistance text during the training, whereas Figure 7 shows the experiment setup.

Table 2.

The instructions of each procedure in VR intubation training.

Table 3.

Interactions of each procedure in VR intubation training.

Figure 3.

Introduction scene, pick up and drop the equipment according to its name: (a) virtual hand of the controller; (b) selection with controller; (c) selection with hand (grabbing); (d) release (unhand).

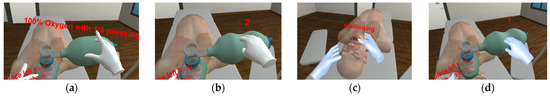

Figure 4.

The scene of sniff position and ambulatory bag pressing: (a) text assistance; (b) pressing with controller; (c) sniff position with hand; (d) pressing with hand.

Figure 5.

The scene of laryngoscope insertion and inflate cuff: (a) laryngoscope insertion with controller; (b) remove laryngoscope; (c) laryngoscope insertion with hand; (d) inflate cuff with hand.

Figure 6.

The scene of ventilation machine connection and verification: (a) connection with controller; (b) verification with controller; (c) connection with hand; (d) verification with hand.

Figure 7.

The experiment: (a) with VR controller; (b) with VR hand tracking; (c) Oculus Quest display cast to the iPad screen in real-time to see user interactions.

5. Research Methodology

We state the research question: are there any differences in interaction time and usability between controllers and hand tracking in VR medical training? The experiment was designed around this research question, and the VR application implemented both interaction methods so that differences in interaction time and usability could be observed.

In our experiment, 30 medical students volunteered and participated in the study after providing informed consent. Twenty-eight participants had never used a VR headset before, and two had used one a few times. They were third-year undergraduate medical students taking part in clinical training at Walailak University, Nakhon Si Thammarat, Thailand. We divided the participants into two groups, VR controller and VR hand tracking, each containing 15 medical students. The protocols were the same in both experiments, but the interactions differed by group: the VR controller group used the controller for interactions, while the VR hand-tracking group used hand gestures.

For the selection process, we scheduled the hand-tracking group on one Wednesday afternoon and the controller group on another Wednesday afternoon, with 15 time slots each. Next, we demonstrated how to perform the VR training using both methods to all 48 third-year medical students. According to their curriculum, they had enough knowledge and skills to undertake intubation training but had not yet been trained on this subject. Then, these 48 students could each select one of the 30 time slots according to their preference and free time. Selection for each group closed when all 15 of its slots had been chosen on a first-come, first-served basis.

The interaction time measurements of each procedure were taken using a timekeeper in the VR application and displayed to the user when the procedure was completed, allowing us to assess the differences of using different VR interactions. Usability and satisfaction were assessed using the System Usability Scale (SUS) [31,32] and USE Questionnaire (USEQ) [33,34] with 5-point Likert-scale questionnaires. The SUS was used to evaluate the usability of VR applications, while the USEQ was used to assess the usefulness, ease of use, ease of learning, and satisfaction.
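For readers unfamiliar with SUS scoring, the standard procedure converts ten 5-point responses into a score from 0 to 100: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5. The Python sketch below illustrates this standard formula; the function name `sus_score` is hypothetical, and no participant data from the study is reproduced.

```python
def sus_score(responses):
    """Convert ten 1-5 Likert responses into a 0-100 SUS score."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs ten responses, each an integer from 1 to 5")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            total += response - 1  # items 1, 3, 5, 7, 9 (positively worded)
        else:
            total += 5 - response  # items 2, 4, 6, 8, 10 (negatively worded)
    return total * 2.5
```

A group-level SUS score is then typically reported as the mean of the individual scores.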

Before training in VR, the basic commands of the VR application were introduced to all participants. The VR controller group learned how to use a VR headset with controllers, while the other group learned how to use hand gestures for interactions. At the beginning of the experiment, all participants in both groups watched a video on endotracheal intubation for approximately 10 min to understand the basic training procedures. Then, each group was tested with the same procedures in VR intubation training but with different interactions, using either controllers or hand tracking. After all procedures were finished, the interaction time measurements were automatically recorded to a database. All participants then completed the evaluations by answering the SUS and USEQ questionnaires.

Finally, each student was interviewed individually by our experiment crew for about 10 min. The interview consisted of 19 questions concerning emotional, instrumental, and motivational experiences. The questions asked about feelings and opinions on the experiment as well as suggestions for the development of VR medical training in general. The interviews provided information for further development and explored factors that affect VR usability and satisfaction beyond those covered by the questionnaires.

6. Results and Discussion

Table 4 shows the results of the normality tests. The SUS scores of both groups were normally distributed, so the independent t-test was used to analyze the differences in SUS scores. However, the USEQ scores (usefulness, ease of use, ease of learning, and satisfaction) and the interaction times were not normally distributed. Therefore, the Mann–Whitney U test was used to compare each USEQ score and the interaction time of each procedure between the VR controller group and the VR hand-tracking group.
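To make the non-parametric comparison concrete, the following Python sketch computes the Mann–Whitney U statistic with midranks for ties and an approximate two-sided p-value from the normal approximation. It is a simplified illustration: no tie or continuity correction is applied, the function name `mann_whitney_u` is hypothetical, and a statistics package would normally be used in practice. It is not the software used in the study.

```python
import math


def mann_whitney_u(a, b):
    """Return (U, approximate two-sided p) for two independent samples."""
    values = sorted(list(a) + list(b))
    # Midrank of each distinct value: mean of the 1-based positions it occupies.
    rank = {}
    i = 0
    while i < len(values):
        j = i
        while j < len(values) and values[j] == values[i]:
            j += 1
        rank[values[i]] = (i + 1 + j) / 2
        i = j
    n1, n2 = len(a), len(b)
    rank_sum_a = sum(rank[v] for v in a)
    u_a = rank_sum_a - n1 * (n1 + 1) / 2
    u = min(u_a, n1 * n2 - u_a)
    # Normal approximation of the null distribution of U.
    mean = n1 * n2 / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))
    return u, p
```

A large p-value from such a test means only that the data give insufficient evidence of a difference between the two groups, not that the groups are equivalent.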

Table 4.

Test of normality of SUS scores and USEQ scores of each criterion (* normal distribution p > 0.05).

6.1. SUS Scores

The mean SUS score was 67.17 for the VR controller group and 60.17 for the VR hand-tracking group. According to the interpretation in [35], the VR controller was almost satisfactory, while VR hand tracking was poor. Even though the VR controller group had the higher mean score, Table 5 shows that the SUS scores for the two interactions were not significantly different.

Table 5.

Independent sample t-test results of SUS scores.

6.2. USEQ Scores

As with the SUS scores, usefulness, ease of use, ease of learning, and satisfaction did not differ significantly between the two interactions, as shown in Table 6. The results implied no difference in usability and satisfaction between controllers and hand tracking in the VR intubation experiment.

Table 6.

Mann–Whitney U test results of learning, training, ease of use, and satisfaction score for VR training.

6.3. Interaction Time

From Table 4, we can see that the SD of each procedure was relatively high because interaction times varied widely between users. However, the Mann–Whitney U test results in Table 7 showed no significant difference in interaction time for any procedure. Even in the laryngoscope procedure, which had long interaction durations, the p-value was high, indicating that we have insufficient evidence to conclude that one interaction method is better than the other.

Table 7.

Mann–Whitney U test results of selection and manipulation time for each procedure in VR training.

6.4. Interview Results

Most participants would recommend this VR training application to others because it was easy to understand, fun to learn, something new to try, and an authentic learning experience. Participants reported that 3D rendering made the content easier to understand (controllers 14, hand tracking 15) and that the VR application’s interactions enabled a deeper understanding throughout training (controllers 12, hand tracking 14). However, three participants felt that watching videos was easier to understand than using VR and that interacting with VR did not improve their understanding. The feedback from both groups pointed in the same direction; the difference concerned application stability, as the VR hand-tracking group reported sometimes losing tracking, which led to repeated interactions.

Participants gave positive comments, such as: “Intubation training with a VR application is easy to learn”, “It was entertaining like playing a game”, “It seemed like doing in a real situation”, and “Touching, grabbing, and moving hands make comprehension easier than studying from the video”.

Some participants gave feedback to improve our VR application: “There should be a guide telling me whether the steps are right or wrong to make it easier to understand”, “Do not let objects pass through the virtual manikin”, “I want a voice to tell me what part has done wrong”, “I want a system with force feedback”, “I want a response at the end of each procedure such as good, excellent to stimulate like playing games”, “Need more detailed description”, “I do not want the auto-snap function, I want the system that notices me if the procedure is right or wrong”, and “I want a time-keeper that looks like a real situation”.

6.5. Discussion

Even though the SUS scores for controllers and hand tracking were not significantly different, the average SUS score of the VR controller group was slightly higher than that of the VR hand-tracking group. This result was consistent with the interviews: issuing commands with push buttons was easier than with hand gestures, and users preferred controllers to hand tracking for interactions. However, hand tracking was better in terms of realistic rendering and interactions. Pressing and picking up the equipment with the user’s own hands promoted a better understanding of the training because of the actual handling. Most of these comments came from interviewees in the VR hand-tracking group.

As medical training with free-hand gestures is more natural for real-world situations, we believe that hand tracking will become an essential user input for VR medical training, especially in operations that require advanced motor skills. Hand tracking allows learners to practice their skills in an intuitive manner without having to worry about manipulating the controller.

6.6. Limitations and Recommendations

Some of the training equipment in the VR application may differ in appearance from the real equipment, which confused some students during the practice test. Sometimes the VR system lost tracking, leaving users unsure what to do, especially with VR hand tracking. In this case, a firmware upgrade from the VR headset manufacturer that improves tracking accuracy may help improve interaction with the system.

According to one interviewee, the use of a controller with haptic feedback contributes to user satisfaction. This is a benefit that hand tracking cannot provide without additional accessories, and it is one of the advantages of using a VR controller.

7. Conclusions

In the VR intubation training case study, the interaction times measured for selection and manipulation showed no significant difference between training with controllers and training with hand tracking. We also found no significant difference in usability scores between controllers and hand tracking. In answer to the research question, we conclude that interaction time and usability in VR intubation training do not differ significantly between controllers and hand tracking. Future work will enhance the VR application to support user-suggested functionality, investigate the factors that influence interaction usability, and simulate realistic interactions for training.

Author Contributions

Conceptualization, C.K. and F.N.; methodology, C.K. and V.V.; software, C.K.; validation, C.K. and V.V.; formal analysis, C.K.; investigation, P.P. and W.H.; resources, C.K., P.P. and W.H.; data curation, C.K., P.P. and W.H.; writing—original draft preparation, C.K. and V.V.; writing—review and editing, C.K., V.V. and F.N.; visualization, C.K., P.P. and W.H.; supervision, F.N.; project administration, C.K.; funding acquisition, C.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Walailak University Research Fund, contract number WU62245.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Human Research Ethics Committee of Walailak University (approval number WUEC-20-031-01 on 28 January 2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kamińska, D.; Sapiński, T.; Wiak, S.; Tikk, T.; Haamer, R.E.; Avots, E.; Helmi, A.; Ozcinar, C.; Anbarjafari, G. Virtual reality and its applications in education: Survey. Information 2019, 10, 318. [Google Scholar] [CrossRef] [Green Version]

- Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778. [Google Scholar] [CrossRef]

- Khundam, C.; Nöel, F. A Study of Physical Fitness and Enjoyment on Virtual Running for Exergames. Int. J. Comput. Games Technol. 2021, 2021, 1–16. [Google Scholar] [CrossRef]

- Taketomi, T.; Uchiyama, H.; Ikeda, S. Visual SLAM algorithms: A survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 1–11. [Google Scholar] [CrossRef]

- Tracking Technology Explained: LED Matching [Internet]. Oculus.com. Available online: https://developer.oculus.com/blog/tracking-technology-explained-led-matching/ (accessed on 1 September 2021).

- Tran, D.S.; Ho, N.H.; Yang, H.J.; Baek, E.T.; Kim, S.H.; Lee, G. Real-time hand gesture spotting and recognition using RGB-D camera and 3D convolutional neural network. Appl. Sci. 2020, 10, 722. [Google Scholar] [CrossRef] [Green Version]

- Wang, J.; Mueller, F.; Bernard, F.; Sorli, S.; Sotnychenko, O.; Qian, N.; Otaduy, M.A.; Casas, D.; Theobalt, C. Rgb2hands: Real-time tracking of 3d hand interactions from monocular rgb video. ACM Trans. Graph. (TOG) 2020, 39, 1–16. [Google Scholar]

- Yuan, S.; Garcia-Hernando, G.; Stenger, B.; Moon, G.; Chang, J.Y.; Lee, K.M.; Molchanov, P.; Kautz, J.; Honari, S.; Kim, T.K.; et al. Depth-based 3d hand pose estimation: From current achievements to future goals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2636–2645. [Google Scholar]

- Tagliasacchi, A.; Schröder, M.; Tkach, A.; Bouaziz, S.; Botsch, M.; Pauly, M. Robust articulated-icp for real-time hand tracking. In Computer Graphics Forum; Wiley: Hoboken, NJ, USA, 2015; Volume 34, pp. 101–114. [Google Scholar]

- Aditya, K.; Chacko, P.; Kumari, D.; Kumari, D.; Bilgaiyan, S. Recent trends in HCI: A survey on data glove, LEAP motion and microsoft kinect. In Proceedings of the 2018 IEEE International Conference on System, Computation, Automation and Networking (ICSCA), Pondicherry, India, 6–7 July 2018; pp. 1–5. [Google Scholar]

- Buckingham, G. Hand tracking for immersive virtual reality: Opportunities and challenges. arXiv 2021, arXiv:2103.14853. [Google Scholar]

- Angelov, V.; Petkov, E.; Shipkovenski, G.; Kalushkov, T. Modern virtual reality headsets. In Proceedings of the 2020 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 26–27 June 2020; pp. 1–5. [Google Scholar]

- Oudah, M.; Al-Naji, A.; Chahl, J. Hand gesture recognition based on computer vision: A review of techniques. J. Imaging 2020, 6, 73. [Google Scholar] [CrossRef] [PubMed]

- Lin, W.; Du, L.; Harris-Adamson, C.; Barr, A.; Rempel, D. Design of hand gestures for manipulating objects in virtual reality. In Proceedings of the International Conference on Human-Computer Interaction, Vancouver, BC, Canada, 9–14 July 2017; pp. 584–592. [Google Scholar]

- Anthes, C.; García-Hernández, R.J.; Wiedemann, M.; Kranzlmüller, D. State of the art of virtual reality technology. In Proceedings of the 2016 IEEE Aerospace Conference, Big Sky, MT, USA, 5–12 March 2016; pp. 1–19. [Google Scholar]

- Guna, J.; Jakus, G.; Pogačnik, M.; Tomažič, S.; Sodnik, J. An analysis of the precision and reliability of the leap motion sensor and its suitability for static and dynamic tracking. Sensors 2014, 14, 3702–3720. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wozniak, P.; Vauderwange, O.; Mandal, A.; Javahiraly, N.; Curticapean, D. Possible applications of the LEAP motion controller for more interactive simulated experiments in augmented or virtual reality. In Optics Education and Outreach IV; International Society for Optics and Photonics: Washington, DC, USA, 2016; Volume 9946, p. 99460P. [Google Scholar]

- Pulijala, Y.; Ma, M.; Ayoub, A. VR surgery: Interactive virtual reality application for training oral and maxillofacial surgeons using oculus rift and leap motion. In Serious Games and Edutainment Applications; Springer: London, UK, 2017; pp. 187–202. [Google Scholar]

- Pulijala, Y.; Ma, M.; Pears, M.; Peebles, D.; Ayoub, A. An innovative virtual reality training tool for orthognathic surgery. Int. J. Oral Maxillofac. Surg. 2018, 47, 1199–1205.

- Pulijala, Y.; Ma, M.; Pears, M.; Peebles, D.; Ayoub, A. Effectiveness of immersive virtual reality in surgical training—A randomized control trial. J. Oral Maxillofac. Surg. 2018, 76, 1065–1072.

- Wang, Z.R.; Wang, P.; Xing, L.; Mei, L.P.; Zhao, J.; Zhang, T. Leap Motion-based virtual reality training for improving motor functional recovery of upper limbs and neural reorganization in subacute stroke patients. Neural Regeneration Res. 2017, 12, 1823.

- Vasylevska, K.; Podkosova, I.; Kaufmann, H. Teaching virtual reality with HTC Vive and Leap Motion. In Proceedings of the SIGGRAPH Asia 2017 Symposium on Education, Bangkok, Thailand, 27–30 November 2017; pp. 1–8.

- Obrero-Gaitán, E.; Nieto-Escamez, F.; Zagalaz-Anula, N.; Cortés-Pérez, I. An Innovative Approach for Online Neuroanatomy and Neuropathology Teaching Based on 3D Virtual Anatomical Models Using Leap Motion Controller during COVID-19 Pandemic. Front. Psychol. 2021, 12, 1853.

- Liu, H.; Zhang, Z.; Xie, X.; Zhu, Y.; Liu, Y.; Wang, Y.; Zhu, S.C. High-fidelity grasping in virtual reality using a glove-based system. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5180–5186.

- Besnea, F.; Cismaru, S.I.; Trasculescu, A.C.; Resceanu, I.C.; Ionescu, M.; Hamdan, H.; Bizdoaca, N.G. Integration of a Haptic Glove in a Virtual Reality-Based Environment for Medical Training and Procedures. Acta Tech. Napoc.–Ser. Appl. Math. Mech. Eng. 2021, 64, 281–290.

- Fahmi, F.; Tanjung, K.; Nainggolan, F.; Siregar, B.; Mubarakah, N.; Zarlis, M. Comparison study of user experience between virtual reality controllers, leap motion controllers, and senso glove for anatomy learning systems in a virtual reality environment. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Chennai, India, 16–17 September 2020; Volume 851, p. 012024.

- Gunawardane, H.; Medagedara, N.T. Comparison of hand gesture inputs of leap motion controller & data glove in to a soft finger. In Proceedings of the 2017 IEEE International Symposium on Robotics and Intelligent Sensors (IRIS), Ottawa, ON, Canada, 5–7 October 2017; pp. 62–68.

- LaViola, J.J., Jr.; Kruijff, E.; McMahan, R.P.; Bowman, D.; Poupyrev, I.P. 3D User Interfaces: Theory and Practice; Addison-Wesley Professional: Boston, MA, USA, 2017.

- Map Controllers [Internet]. Oculus.com. Available online: https://developer.oculus.com/documentation/unity/unity-ovrinput (accessed on 1 September 2021).

- Hand Tracking in Unity [Internet]. Oculus.com. Available online: https://developer.oculus.com/documentation/unity/unity-handtracking/ (accessed on 1 September 2021).

- Bangor, A.; Kortum, P.T.; Miller, J.T. An empirical evaluation of the system usability scale. Int. J. Hum.-Comput. Interact. 2008, 24, 574–594.

- Brooke, J. SUS: A retrospective. J. Usability Stud. 2013, 8, 29–40.

- Lund, A.M. Measuring usability with the USE questionnaire. Usability Interface 2001, 8, 3–6.

- Gil-Gómez, J.A.; Manzano-Hernández, P.; Albiol-Pérez, S.; Aula-Valero, C.; Gil-Gómez, H.; Lozano-Quilis, J.A. USEQ: A short questionnaire for satisfaction evaluation of virtual rehabilitation systems. Sensors 2017, 17, 1589.

- Webster, R.; Dues, J.F. System Usability Scale (SUS): Oculus Rift® DK2 and Samsung Gear VR®. In Proceedings of the 2017 ASEE Annual Conference & Exposition, Columbus, OH, USA, 25–28 June 2017.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).