Self-Reinforcement Mechanisms of Sustainability and Continuous System Use: A Self-Service Analytics Environment Perspective

Abstract

1. Introduction

2. Literature Review

2.1. Sustainability of a System

2.2. Self-Service Analytics Environment

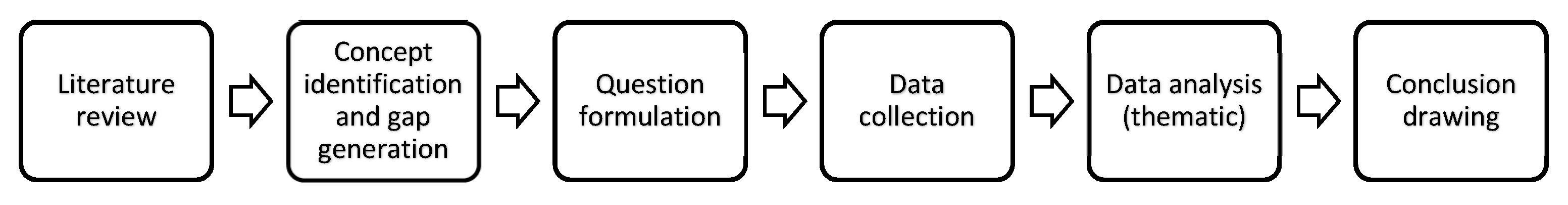

3. Method

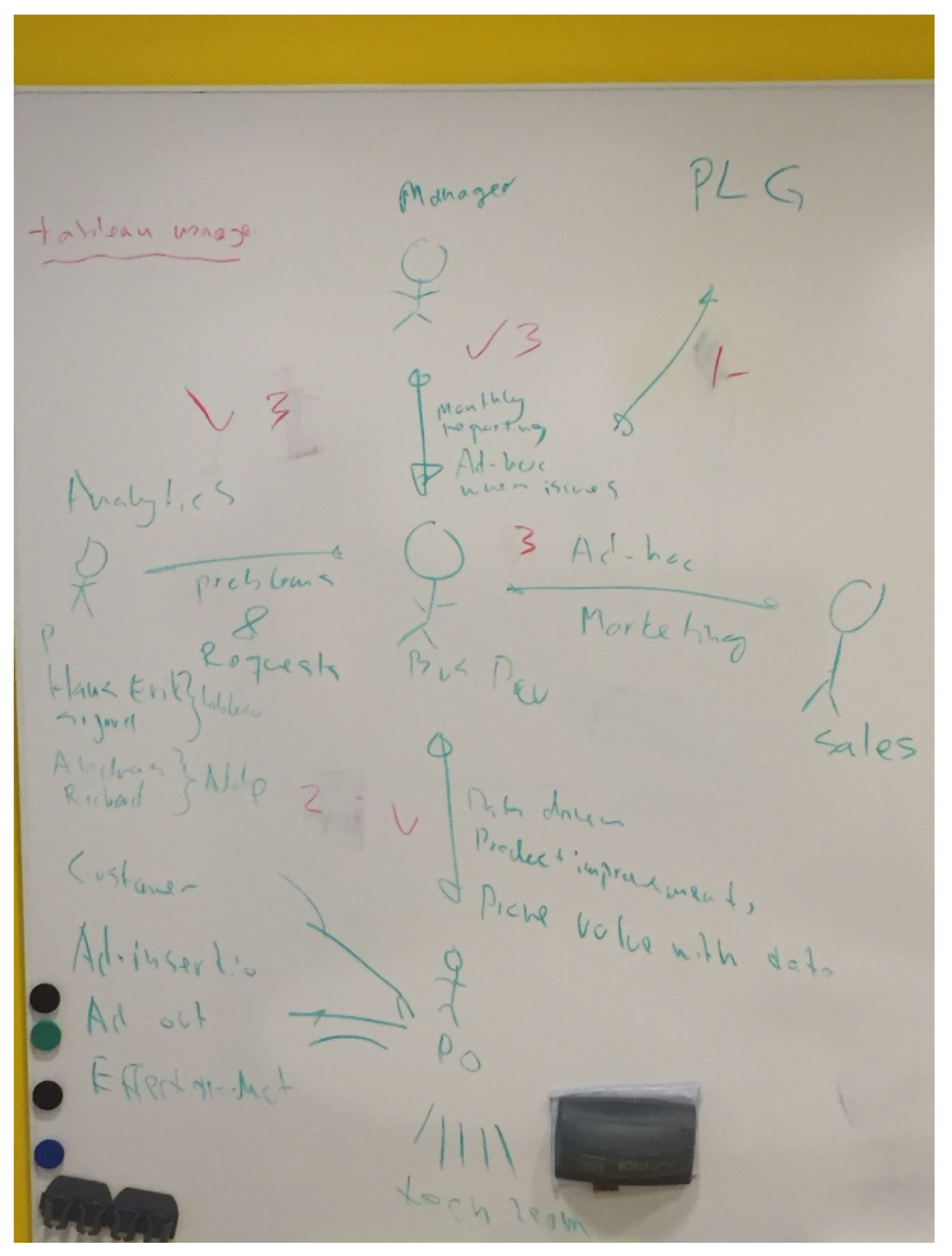

3.1. Case Company

3.2. Data Collection and Analysis

4. Findings

4.1. Environment Value

4.1.1. Fit for Purpose and Performance on Value

4.1.2. Information and System Quality on Utilization

4.1.3. Value-Added Services on Continuous Use

4.2. Personal Capabilities

4.2.1. Trust and Confirmation on Satisfaction

4.2.2. Expectation on Usefulness

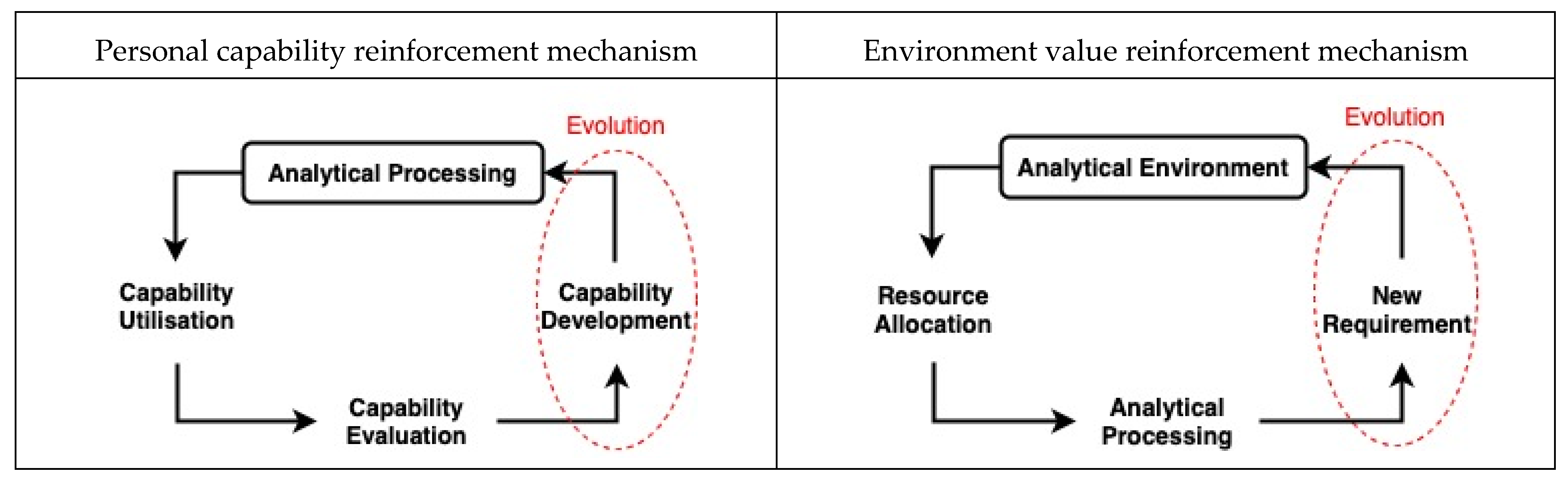

4.3. Self-Reinforcement Property

4.3.1. Collaboration on the Environment Services

4.3.2. Collaboration on the Self-Assessment

4.3.3. Collaboration on Self-Capabilities

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BA | Business Analytics |
| BI | Business Intelligence |
| BI&A | Business Intelligence and Analytics |
| SSA | Self-Service Analytics |
| IS | Information System |
| PEVA | Perceived Value |
| ECM | Expectation–Confirmation Model |
| ECT | Expectation–Confirmation Theory |
| TAM | Technology Acceptance Model |
| IT | Information Technology |
| SS | Self-Service |


| Interview Quote | Theoretical Concept (Etic) | Second Level Code (Emic): Author 1 | Second Level Code (Emic): Author 2 | Second Level Code (Emic): Final |
|---|---|---|---|---|
| “…just getting help extracting or manipulating the data or just getting the tie to do it. Let’s say I have this problem; I think the solution is like this and they kind of develop or prove the content and we can work together on that.” | Collaboration on the environment services | Collaboration in relation to support from technical people | Collaboration in relation to environment optimization | Environment value and reinforcement |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bani-Hani, I.; Shepherd, E. Self-Reinforcement Mechanisms of Sustainability and Continuous System Use: A Self-Service Analytics Environment Perspective. Informatics 2021, 8, 45. https://doi.org/10.3390/informatics8030045
