Should We Be Concerned about How Information Privacy Concerns Are Measured in Online Contexts? A Systematic Review of Survey Scale Development Studies

Abstract

1. Introduction

2. Background

3. Method

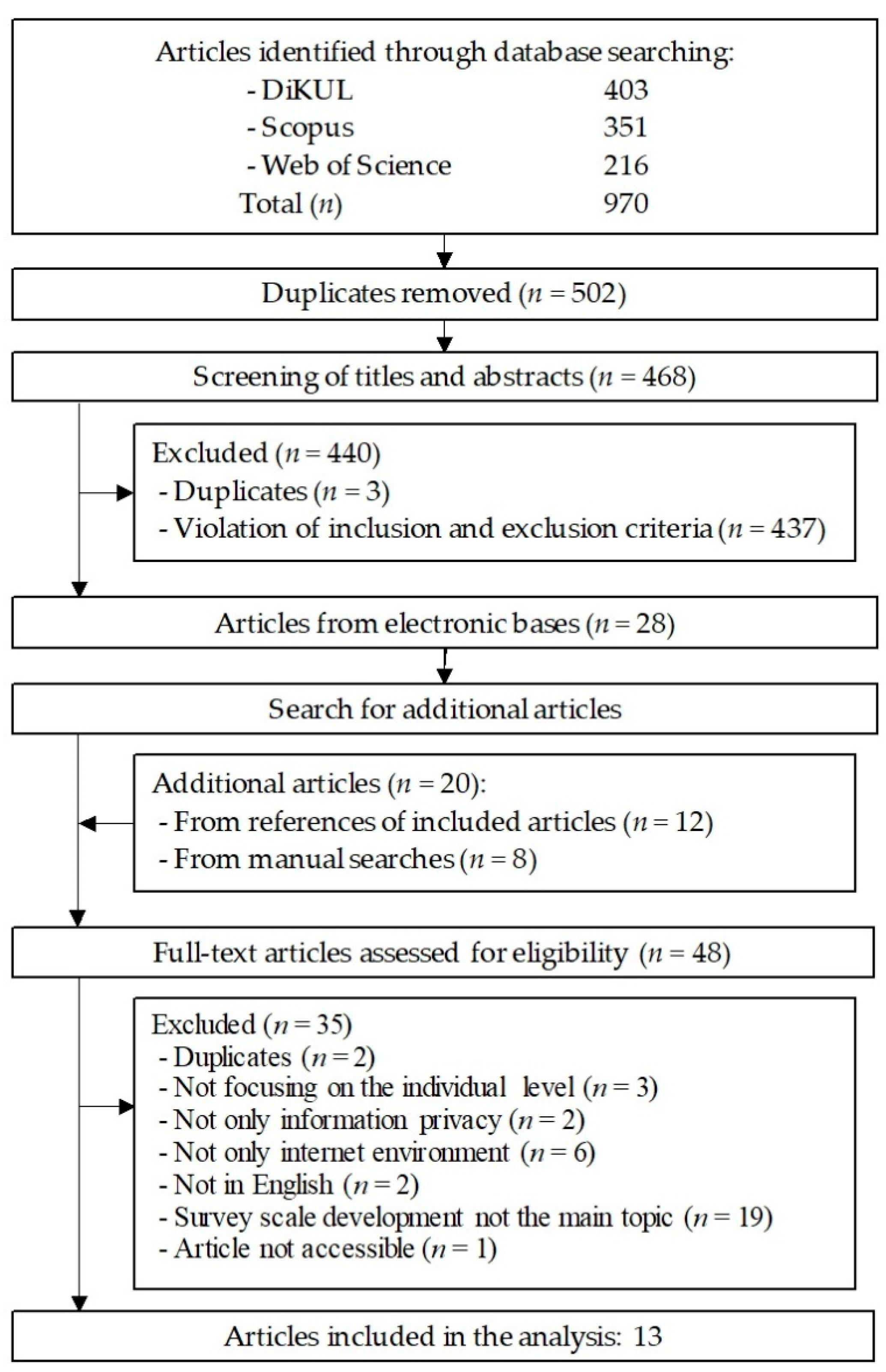

3.1. Procedure

3.1.1. Systematic Literature Search

3.1.2. Eligibility Criteria

3.1.3. Information Sources and Search Strategies

3.1.4. Study Selection

3.1.5. Data Extraction

3.1.6. Coding Instrument

3.1.7. The Coding Process and Analysis

4. Results

4.1. Study Selection

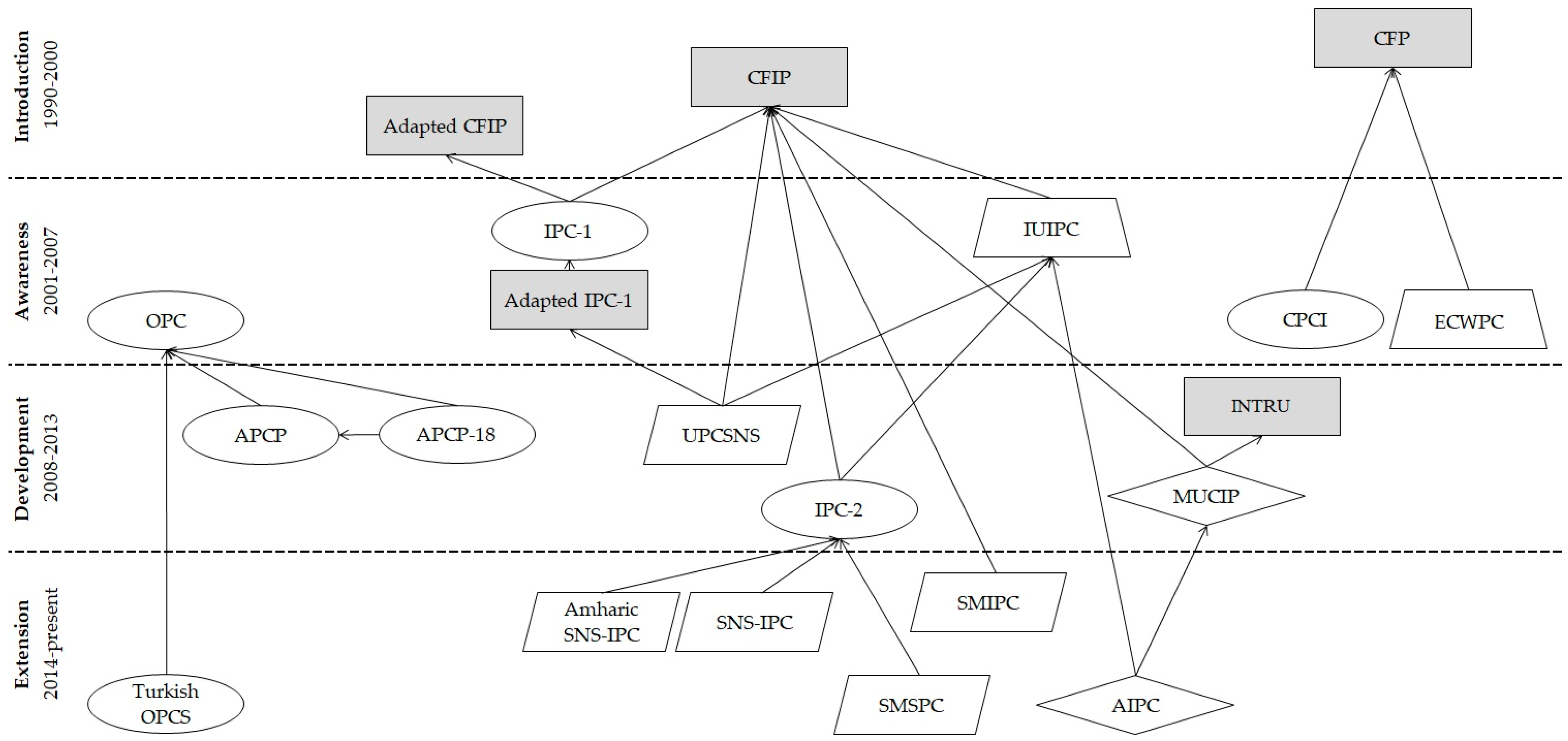

4.2. Descriptive Results

4.3. Research Questions

4.3.1. Conceptual Definitions in IPC Scales

4.3.2. Online Contexts and IPC Scales

4.3.3. Dimensionality of IPC Scales

4.3.4. The Methodological Quality of IPC Scale Development

Content Validity

Structural Validity, Internal Consistency, and Cross-Cultural Validity

Reliability and Criterion Validity

5. Discussion

5.1. Substantial Findings and Implications

5.2. Limitations and Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Data Source | Search Terms and Phrases |
|---|---|
| Web of Science (www.webofknowledge.com) (accessed on 22 September 2019) | TITLE: (privacy) AND TITLE: (concern*) AND TOPIC: (survey* OR measure* OR factor* OR development* OR instrument* OR question* OR questionnaire* OR dimension* OR scale*) AND TOPIC: (information* OR internet*) NOT TITLE: (secur*) AND LANGUAGE: (English) AND DOCUMENT TYPES: (Article OR Book OR Book Chapter OR Proceedings Paper) Timespan: 1996–2019. Indexes: SCI-EXPANDED, SSCI, A&HCI, CPCI-S, CPCI-SSH, BKCI-S, BKCI-SSH, ESCI, CCR-EXPANDED, and IC. |
| SCOPUS (www.scopus.com) (accessed on 22 September 2019) | (TITLE (privacy) AND TITLE (concern*) AND TITLE-ABS-KEY (survey* OR measure* OR factor* OR development* OR instrument* OR question* OR questionnaire* OR dimension* OR scale*) AND TITLE-ABS-KEY (information* OR internet*) AND NOT TITLE (secur*) AND LANGUAGE (english*) AND PUBYEAR > 1995 AND PUBYEAR < 2020 AND (LIMIT-TO (DOCTYPE, "ar") OR LIMIT-TO (DOCTYPE, "cp") OR LIMIT-TO (DOCTYPE, "ch")) |
| DiKUL (https://plus-ul.si.cobiss.net/opac7/bib/search) (accessed on 22 September 2019) | TI privacy AND TI concern* AND (survey* OR measure* OR factor* OR development* OR instrument* OR question* OR questionnaire* OR dimension* OR scale*) AND (information* OR internet*) NOT TI secur* Limitations: Peer Reviewed, Date Published: 19960101–20191231, Language: English |
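
The Web of Science string above combines an exact title term, truncated title and topic terms (the `*` wildcard), and an exclusion on `secur*`. For readers re-screening an exported set of records offline, its Boolean core can be approximated as below. This is a sketch under stated assumptions, not the databases' own query parser: the record fields `title` and `topic` (standing in for the TOPIC field, i.e. title, abstract, and keywords), the helper names, and the treatment of `*` as a word-prefix match are all hypothetical choices, and the language, document-type, and timespan limits are left out.

```python
import re

# Hypothetical record format: {"title": ..., "topic": ...}, where "topic"
# stands in for the Web of Science TOPIC field (title, abstract, keywords).
TOPIC_TERMS_A = ["survey", "measure", "factor", "development", "instrument",
                 "question", "questionnaire", "dimension", "scale"]
TOPIC_TERMS_B = ["information", "internet"]


def words(text):
    """Lower-case alphabetic tokens; a simplification of real tokenizers."""
    return re.findall(r"[a-z]+", text.lower())


def has_term(text, term, truncated=True):
    """True if some token starts with `term` (as in `term*`) or, when
    truncated=False, if some token equals `term` exactly."""
    tokens = words(text)
    if truncated:
        return any(t.startswith(term) for t in tokens)
    return term in tokens


def matches_wos_query(record):
    """Approximate Boolean core of the Web of Science search string."""
    title, topic = record["title"], record["topic"]
    return (
        has_term(title, "privacy", truncated=False)   # TITLE: (privacy)
        and has_term(title, "concern")                # TITLE: (concern*)
        and any(has_term(topic, t) for t in TOPIC_TERMS_A)
        and any(has_term(topic, t) for t in TOPIC_TERMS_B)
        and not has_term(title, "secur")              # NOT TITLE: (secur*)
    )


if __name__ == "__main__":
    records = [
        {"title": "Internet Privacy Concerns: A Scale",
         "topic": "survey of internet users"},
        {"title": "Privacy Concerns and Security Behaviour",
         "topic": "questionnaire on information sharing"},
    ]
    print([matches_wos_query(r) for r in records])  # [True, False]
```

The second example record is excluded only by the `secur*` clause, which mirrors how the NOT operator removes security-oriented titles even when every other condition holds.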

| Label | Context | | | | Contextual Group |
|---|---|---|---|---|---|
| | Type of Individuals | Type of Personal Information ¹ | Type of Entity Handling Personal Information ² | Type of Technology/Service Contexts | |
| IPC-1 | Online consumers, Internet users | General/unspecified | General/unspecified | General Internet usage, ecommerce | General Internet usage |
| IUIPC | Online consumers, Internet users | General/unspecified | Second and third parties; company/website | General Internet usage, ecommerce | Ecommerce |
| OPC | Online consumers, Internet users | General/unspecified | Second, third, and fourth parties; company/website; other users | General Internet usage, ecommerce | General Internet usage |
| CPCI | Online consumers, Internet users | General/unspecified | Second, third, and fourth parties; company/website | General Internet usage, ecommerce | General Internet usage |
| ECWPC | Online consumers, Internet users | General/unspecified | Second, third, and fourth parties; company/website | General Internet usage, ecommerce | Ecommerce |
| APCP | Online consumers, Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | General Internet usage, ecommerce, online/mobile services | General Internet usage |
| APCP-18 | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | General Internet usage, online/mobile services | General Internet usage |
| UPCSNS | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | Online/mobile services | SNSs |
| MUCIP | Internet users, mobile services users | Location information, general/unspecified | Second, third, and fourth parties; company/website | General Internet usage, ecommerce, online/mobile services | Mobile Internet |
| IPC-2 | Internet users | General/unspecified | Second, third, and fourth parties; company/website; government | General Internet usage | General Internet usage |
| SMIPC | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | Online/mobile services | SNSs |
| SNS-IPC | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | Online/mobile services | SNSs |
| Amharic SNS-IPC | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | Online/mobile services | SNSs |
| Turkish OPCS | Online consumers, Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website | General Internet usage, ecommerce, online/mobile services | General Internet usage |
| AIPC | Internet users, mobile services users | General/unspecified | Second and third parties; company/website | General Internet usage, online/mobile services | Mobile Internet |
| SMSPC | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | Online/mobile services | SNSs |
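
The contextual-group column can be tallied directly to see how the reviewed scales are distributed across online contexts. A minimal sketch, assuming a hand transcription of that column into a Python dictionary (the names `CONTEXTUAL_GROUP` and `scales_per_group` are illustrative, not part of the review's materials):

```python
from collections import Counter

# Hypothetical hand transcription of the "Contextual Group" column above.
CONTEXTUAL_GROUP = {
    "IPC-1": "General Internet usage",
    "IUIPC": "Ecommerce",
    "OPC": "General Internet usage",
    "CPCI": "General Internet usage",
    "ECWPC": "Ecommerce",
    "APCP": "General Internet usage",
    "APCP-18": "General Internet usage",
    "UPCSNS": "SNSs",
    "MUCIP": "Mobile Internet",
    "IPC-2": "General Internet usage",
    "SMIPC": "SNSs",
    "SNS-IPC": "SNSs",
    "Amharic SNS-IPC": "SNSs",
    "Turkish OPCS": "General Internet usage",
    "AIPC": "Mobile Internet",
    "SMSPC": "SNSs",
}


def scales_per_group(mapping):
    """Count how many scales fall into each contextual group."""
    return Counter(mapping.values())


if __name__ == "__main__":
    for group, n in scales_per_group(CONTEXTUAL_GROUP).most_common():
        print(f"{group}: {n}")
```

Run as a script, this should report 7 scales for general Internet usage, 5 for SNSs, and 2 each for ecommerce and the mobile Internet, i.e. all 16 scales in the table.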

| Label | Number of Dimensions | (Ab)use | Collection | Access | Privacy Concerns | Control | Errors | Awareness | Personal Attitude | Requirements | Structure of the Measurement Model |

|---|---|---|---|---|---|---|---|---|---|---|---|

| IPC-1 | 2 | 1 | 1 | First-order | |||||||

| IUIPC | 3 | 1 | 1 | 1 | Second-order | ||||||

| CPCI | 2 | 1 | 1 | Second-order | |||||||

| ECWPC | 2 | 1 | 1 | Second-order | |||||||

| APCP | 4 | 4 | First-order | ||||||||

| UPCSNS | 6 | 1 | 1 | 1 | 1 | 1 | 1 | First-order | |||

| MUCIP | 3 | 1 | 1 | 1 | Second-order | ||||||

| IPC-2 | 6 | 1 | 1 | 1 | 1 | 1 | 1 | Third-order | |||

| SMIPC 1 | 3 | 1 | 1 | 1 | 1 | Second-order | |||||

| SNS-IPC | 5 | 1 | 1 | 1 | 1 | 1 | Third-order | ||||

| Amharic SNS-IPC | 5 | 1 | 1 | 1 | 1 | 1 | Third-order | ||||

| Turkish OPCS | 3 | 3 | First-order | ||||||||

| AIPC | 3 | 1 | 1 | 1 | First-order | ||||||

| SMSPC | 6 | 1 | 1 | 1 | 1 | 1 | 1 | First-order | |||

| Total | 53 | 11 | 10 | 8 | 8 | 6 | 5 | 4 | 1 | 1 |
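
The Total row's figure of 53 dimensions, and the distribution of measurement-model structures, can both be recomputed from just two columns: "Number of Dimensions" and "Structure of the Measurement Model". A sketch, assuming those two columns are transcribed by hand into a dictionary (the name `DIMENSIONS` is hypothetical); the per-dimension columns are not needed for these two totals and are not transcribed.

```python
from collections import Counter

# Hypothetical transcription of two columns of the table above:
# scale -> (number of dimensions, structure of the measurement model).
DIMENSIONS = {
    "IPC-1": (2, "First-order"),
    "IUIPC": (3, "Second-order"),
    "CPCI": (2, "Second-order"),
    "ECWPC": (2, "Second-order"),
    "APCP": (4, "First-order"),
    "UPCSNS": (6, "First-order"),
    "MUCIP": (3, "Second-order"),
    "IPC-2": (6, "Third-order"),
    "SMIPC": (3, "Second-order"),
    "SNS-IPC": (5, "Third-order"),
    "Amharic SNS-IPC": (5, "Third-order"),
    "Turkish OPCS": (3, "First-order"),
    "AIPC": (3, "First-order"),
    "SMSPC": (6, "First-order"),
}

# Sum of per-scale dimension counts (should match the Total row).
total_dimensions = sum(n for n, _ in DIMENSIONS.values())

# Number of scales per measurement-model structure.
structure_counts = Counter(s for _, s in DIMENSIONS.values())

if __name__ == "__main__":
    print(total_dimensions)        # 53
    print(dict(structure_counts))  # {'First-order': 6, 'Second-order': 5, 'Third-order': 3}
```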

References

- Westin, A.F. Social and Political Dimensions of Privacy. J. Soc. Issues 2003, 59, 431–453.

- Smith, H.J.; Dinev, T.; Xu, H. Information Privacy Research: An Interdisciplinary Review. MIS Q. 2011, 35, 989–1015.

- Van Dijck, J. The Culture of Connectivity: A Critical History of Social Media; Oxford University Press: New York, NY, USA, 2013; ISBN 9780199970773.

- Regulation (EU) 2016/679. The Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC (General Data Protection Regulation). European Parliament, Council of the European Union. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32016R0679 (accessed on 6 November 2020).

- Bélanger, F.; Crossler, R.E. Privacy in the Digital Age: A Review of Information Privacy Research in Information Systems. MIS Q. 2011, 35, 1017–1041.

- Rohunen, A. Advancing Information Privacy Concerns Evaluation in Personal Data Intensive Services. Ph.D. Thesis, University of Oulu, Oulu, Finland, 14 December 2019.

- Castaldo, S.; Grosso, M. An Empirical Investigation to Improve Information Sharing in Online Settings: A Multi-Target Comparison. In Handbook of Research on Retailing Techniques for Optimal Consumer Engagement and Experiences; Musso, F., Druica, E., Eds.; IGI Global: Hershey, PA, USA, 2020; pp. 355–379. ISBN 9781799814139.

- Buchanan, T.; Paine, C.; Joinson, A.N.; Reips, U.-D. Development of Measures of Online Privacy Concern and Protection for Use on the Internet. J. Am. Soc. Inf. Sci. Technol. 2007, 58, 157–165.

- Hong, W.; Thong, J.Y.L. Internet Privacy Concerns: An Integrated Conceptualization and Four Empirical Studies. MIS Q. 2013, 37, 275–298.

- Koohang, A. Social Media Sites Privacy Concerns: Empirical Validation of an Instrument. Online J. Appl. Knowl. Manag. 2017, 5, 14–26.

- Li, Y. Empirical Studies on Online Information Privacy Concerns: Literature Review and an Integrative Framework. Commun. Assoc. Inf. Syst. 2011, 28, 453–496.

- Yun, H.; Lee, G.; Kim, D.J. A Chronological Review of Empirical Research on Personal Information Privacy Concerns: An Analysis of Contexts and Research Constructs. Inf. Manag. 2019, 56, 570–601.

- Malhotra, N.K.; Kim, S.S.; Agarwal, J. Internet Users’ Information Privacy Concerns (IUIPC): The Construct, the Scale, and a Causal Model. Inf. Syst. Res. 2004, 15, 336–355.

- Smith, H.J.; Milberg, S.J.; Burke, S.J. Information Privacy: Measuring Individuals’ Concerns about Organizational Practices. MIS Q. 1996, 20, 167–196.

- Dienlin, T.; Trepte, S. Is the Privacy Paradox a Relic of the Past? An in-Depth Analysis of Privacy Attitudes and Privacy Behaviors. Eur. J. Soc. Psychol. 2015, 45, 285–297.

- Gerber, N.; Gerber, P.; Volkamer, M. Explaining the Privacy Paradox: A Systematic Review of Literature Investigating Privacy Attitude and Behavior. Comput. Secur. 2018, 77, 226–261.

- Preibusch, S. Guide to Measuring Privacy Concern: Review of Survey and Observational Instruments. Int. J. Hum. Comput. Stud. 2013, 71, 1133–1143.

- Dourish, P.; Anderson, K. Collective Information Practice: Exploring Privacy and Security as Social and Cultural Phenomena. Hum. Comput. Interact. 2006, 21, 319–342.

- Li, Y. Theories in Online Information Privacy Research: A Critical Review and an Integrated Framework. Decis. Support Syst. 2012, 54, 471–481.

- Osatuyi, B. An Instrument for Measuring Social Media Users’ Information Privacy Concerns. J. Curr. Issues Media Telecommun. 2014, 6, 359–375.

- Prinsen, C.A.C.; Mokkink, L.B.; Bouter, L.M.; Alonso, J.; Patrick, D.L.; de Vet, H.C.W.; Terwee, C.B. COSMIN Guideline for Systematic Reviews of Patient-Reported Outcome Measures. Qual. Life Res. 2018, 27, 1147–1157.

- Mokkink, L.B.; de Vet, H.C.W.; Prinsen, C.A.C.; Patrick, D.L.; Alonso, J.; Bouter, L.M.; Terwee, C.B. COSMIN Risk of Bias Checklist for Systematic Reviews of Patient-Reported Outcome Measures. Qual. Life Res. 2018, 27, 1171–1179.

- Rohunen, A.; Markkula, J.; Heikkilä, M.; Oivo, M. Explaining Diversity and Conflicts in Privacy Behavior Models. J. Comput. Inf. Syst. 2020, 60, 378–393.

- Acquisti, A. Privacy in electronic commerce and the economics of immediate gratification. In Proceedings of the 5th ACM conference on Electronic commerce (EC 2004), New York, NY, USA, 17–20 May 2004; pp. 21–29.

- Nissenbaum, H.F. Privacy in Context: Technology, Policy, and the Integrity of Social Life; Stanford University Press: Stanford, CA, USA, 2010; ISBN 9780804772891.

- Castañeda, A.J.; Montoso, F.J.; Luque, T. The Dimensionality of Customer Privacy Concern on the Internet. Online Inf. Rev. 2007, 31, 420–439.

- DeVellis, R.F. Scale Development: Theory and Applications, 3rd ed.; Sage: Thousand Oaks, CA, USA, 2012; ISBN 9781412980449.

- Mokkink, L.B.; Prinsen, C.A.C.; Patrick, D.L.; Alonso, J.; Bouter, L.M.; de Vet, H.C.W.; Terwee, C.B. COSMIN Methodology for Systematic Reviews of Patient-reported Outcome Measures (PROMs): User Manual. Available online: https://www.cosmin.nl/wp-content/uploads/COSMIN-syst-review-for-PROMs-manual_version-1_feb-2018-1.pdf (accessed on 6 November 2020).

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. BMJ 2009, 339, 332–336.

- Terwee, C.B.; Prinsen, C.A.C.; Chiarotto, A.; Westerman, M.J.; Patrick, D.L.; Alonso, J.; Bouter, L.M.; de Vet, H.C.W.; Mokkink, L.B. COSMIN Methodology for Evaluating the Content Validity of Patient-Reported Outcome Measures: A Delphi Study. Qual. Life Res. 2018, 27, 1159–1170.

- PRISMA. Available online: http://prisma-statement.org/ (accessed on 5 November 2020).

- Dinev, T.; Hart, P. Internet Privacy Concerns and Their Antecedents—Measurement Validity and a Regression Model. Behav. Inf. Technol. 2004, 23, 413–422.

- Taddicken, M.M.M. Measuring online privacy concern and protection in the (social) web: Development of the APCP and APCP-18 scale. In Proceedings of the 60th Annual Conference of the International Communication Association (ICA 2010), Singapore, 22–26 June 2010.

- Zheng, S.; Shi, K.; Zeng, Z.; Lu, Q. The exploration of instrument of users’ privacy concerns of social network service. In Proceedings of the 2010 IEEE International Conference on Industrial Engineering and Engineering Management (IEEE 2010), Macao, China, 7–10 December 2010; pp. 1538–1542.

- Xu, H.; Gupta, S.; Rosson, M.B.; Carroll, J.M. Measuring mobile users’ concerns for information privacy. In Proceedings of the International Conference on Information Systems (ICIS 2012), Orlando, FL, USA, 16–19 December 2012.

- Borena, B.; Belanger, F.; Ejigu, D.; Anteneh, S. Conceptualizing information privacy concern in low-income countries: An Ethiopian language instrument for social networks sites. In Proceedings of the Twenty-First Americas Conference on Information Systems (AMCIS 2015), Fajardo, Puerto Rico, 13–15 August 2015.

- Alakurt, T. Adaptation of Online Privacy Concern Scale into Turkish Culture. Pegem Eğitim ve Öğretim Derg. 2017, 7, 611–636.

- Buck, C.; Burster, S. App information privacy concerns. In Proceedings of the Twenty-Third Americas Conference on Information Systems (AMCIS 2017), Boston, MA, USA, 10–12 August 2017.

- Culnan, M.J. ‘How Did They Get My Name’: An Exploratory Investigation of Consumer Attitudes toward Secondary Information Use. MIS Q. 1993, 17, 341–363.

- Culnan, M.J.; Armstrong, P.K. Information Privacy Concerns, Procedural Fairness, and Impersonal Trust: An Empirical Investigation. Organ. Sci. 1999, 10, 104–115.

- Dinev, T.; Hart, P. An Extended Privacy Calculus Model for E-Commerce Transactions. Inf. Syst. Res. 2006, 17, 61–80.

- Xu, H.; Dinev, T.; Smith, H.J.; Hart, P. Examining the formation of individual’s privacy concerns: Toward an integrative view. In Proceedings of the Twenty-Ninth International Conference on Information Systems (ICIS 2008), Paris, France, 14–17 December 2008.

- Petronio, S. Boundaries to Privacy: Dialectics of Disclosure; State University of New York Press: Albany, NY, USA, 2002; ISBN 9780791455166.

- Donaldson, T. The Ethics of International Business; Oxford University Press: New York, NY, USA, 1989; ISBN 9780195058741.

- Friend, C. Social Contract Theory. Available online: https://www.iep.utm.edu/soc-cont/ (accessed on 6 November 2020).

- Laufer, R.S.; Wolfe, M. Privacy as a Concept and a Social Issue: A Multidimensional Developmental Theory. J. Soc. Issues 1977, 33, 22–42.

- Campbell, A.J. Relationship Marketing in Consumer Markets: A Comparison of Managerial and Consumer Attitudes about Information Privacy. J. Direct Mark. 1997, 11, 44–57.

- Westin, A. Privacy and Freedom; Atheneum: New York, NY, USA, 1967; ISBN 9780689102899.

- Bergman, M.M. A Theoretical Note on the Differences between Attitudes, Opinions, and Values. Swiss Polit. Sci. Rev. 1998, 4, 81–93.

- Dinev, T.; Hart, P. Privacy Concerns and Levels of Information Exchange: An Empirical Investigation of Intended e-Services Use. e-Serv. J. 2006, 4, 25–59.

- Dinev, T.; Hart, P. Internet Privacy Concerns and Social Awareness as Determinants of Intention to Transact. Int. J. Electron. Commer. 2005, 10, 7–29.

- Youn, S. Teenagers’ Perceptions of Online Privacy and Coping Behaviors: A Risk–Benefit Appraisal Approach. J. Broadcast. Electron. Media 2005, 49, 86–110.

- Lwin, M.O.; Williams, J.D. A Model Integrating the Multidimensional Developmental Theory of Privacy and Theory of Planned Behavior to Examine Fabrication of Information Online. Mark. Lett. 2003, 14, 257–272.

- Bollen, K.A. Structural Equations with Latent Variables; John Wiley & Sons: New York, NY, USA, 1989; ISBN 9780471011712.

- MacKenzie, S.B.; Podsakoff, P.M.; Podsakoff, N.P. Construct Measurement and Validation Procedures in MIS and Behavioral Research: Integrating New and Existing Techniques. MIS Q. 2011, 35, 293–334.

- Vogt, D.S.; King, D.W.; King, L.A. Focus Groups in Psychological Assessment: Enhancing Content Validity by Consulting Members of the Target Population. Psychol. Assess. 2004, 16, 231–243.

| Survey Scale | Abbreviation | Article | No. of Items | Response Options | Response Scale | Number of Dimensions | Country | Language | Translation |
|---|---|---|---|---|---|---|---|---|---|
| Internet Privacy Concerns | IPC-1 | [32] | 14 | 1–5 | – | 2 | US | English | No |
| Internet Users’ Information Privacy Concerns | IUIPC | [13] | 10 | 1–7 | Strongly disagree to strongly agree | 3 | US | English | No |
| Online Privacy Concern | OPC | [8] | 16 | 1–5 | Not at all to very much | 1 | UK | English | No |
| Customer Privacy Concern on the Internet | CPCI | [26] | 8 | 1–7 | – | 2 | Spain ¹ | – | No |
| E-commerce Website Privacy Concern | ECWPC | [26] | 4 | 1–5 | – | 2 | Spain ¹ | – | No |
| Adapted online Privacy Concern and Protection for use on the Internet | APCP | [33] | 17 | 1–5 | Not at all to very much | 4 | Germany | German | Yes |
| Adapted online Privacy Concern and Protection for use on the Internet-18 | APCP-18 | [33] | 8 | 1–5 | Not at all to very much | 1 | Germany | German | Yes |
| Users’ Privacy Concerns on Social Network Service | UPCSNS | [34] | 28 | 1–7 | Strongly disagree to strongly agree | 6 | China | – | No |
| Mobile Users’ Concerns for Information Privacy | MUCIP | [35] | 9 | 1–7 | – | 3 | US | English | No |
| Internet Privacy Concerns | IPC-2 | [9] | 18 | 1–7 | Strongly disagree to strongly agree | 6 | Hong Kong | – | No |
| Social Media Users’ Information Privacy Concern | SMIPC | [20] | 14 | 1–5 | Strongly disagree to strongly agree | 3 | US | English | No |
| Social Network Sites Internet Privacy Concerns | SNS-IPC | [36] | 15 | – | – | 5 | Ethiopia ¹ | English | No |
| Amharic Social Network Sites Internet Privacy Concerns | Amharic SNS-IPC | [36] | 15 | – | – | 5 | Ethiopia | Amharic | Yes |
| Turkish Online Privacy Concern Scale | Turkish OPCS | [37] | 14 | 1–5 | Not at all to very much | 3 | Turkey | English, Turkish | Yes |
| App Information Privacy Concerns | AIPC | [38] | 17 | 1–7, no opinion | Totally disagree to agree completely, no opinion | 3 | Germany | – | No |
| Social Media Site Privacy Concerns | SMSPC | [10] | 18 | 1–7 | Completely disagree to completely agree | 6 | US | English | No |
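
Scale length and response format can be summarized from the items and response-options columns of this table. A minimal sketch, assuming a hand transcription into a dictionary (the name `SCALE_FORMATS` is hypothetical; "–" is kept as a literal category for scales whose response options the table does not report):

```python
from collections import Counter
from statistics import median

# Hypothetical hand transcription: scale -> (number of items, response options).
SCALE_FORMATS = {
    "IPC-1": (14, "1–5"),
    "IUIPC": (10, "1–7"),
    "OPC": (16, "1–5"),
    "CPCI": (8, "1–7"),
    "ECWPC": (4, "1–5"),
    "APCP": (17, "1–5"),
    "APCP-18": (8, "1–5"),
    "UPCSNS": (28, "1–7"),
    "MUCIP": (9, "1–7"),
    "IPC-2": (18, "1–7"),
    "SMIPC": (14, "1–5"),
    "SNS-IPC": (15, "–"),
    "Amharic SNS-IPC": (15, "–"),
    "Turkish OPCS": (14, "1–5"),
    "AIPC": (17, "1–7, no opinion"),
    "SMSPC": (18, "1–7"),
}

items = [n for n, _ in SCALE_FORMATS.values()]
item_summary = {"min": min(items), "median": median(items), "max": max(items)}

# How many scales use each response-option format.
response_formats = Counter(opts for _, opts in SCALE_FORMATS.values())

if __name__ == "__main__":
    print(item_summary)  # {'min': 4, 'median': 14.5, 'max': 28}
    for opts, n in response_formats.most_common():
        print(f"{opts}: {n} scales")
```

With these values, scale length ranges from 4 items (ECWPC) to 28 items (UPCSNS), and the five-point and seven-point formats account for 7 and 6 of the 16 scales, respectively.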

| Label | Definition ¹ | Theory |
|---|---|---|
| IPC-1 | – | – |
| IUIPC | The individual’s subjective views of fairness within the context of information privacy (adapted from Campbell [47]) | Social contract theory [44] |
| OPC | The desire to keep personal information out of the hands of others (adapted from Westin [48]) (IPCs are subjective measures that vary among individuals.) | – |
| CPCI | The Internet customers’ concern for controlling the acquisition and subsequent use of the information that is generated or acquired on the Internet about them (adapted from Westin [48]) | – |
| ECWPC | – | |
| APCP | – | – |
| APCP-18 | – | – |
| UPCSNS | Users’ ability or the right to control whether to disclose their personal information and the manner of disclosure | – |
| MUCIP | The concerns of mobile users about possible loss of privacy because of information disclosure to a specific external agent | Communication privacy management theory [43] |
| IPC-2 | The perception in a dyadic relationship between an individual and an online entity, which can either be a particular website or a category of websites, such as commercial websites | Multidimensional developmental theory [46] |
| SMIPC | Concerns about loss of privacy because of the disclosure of personal information to known or unknown external agents, including other social media users, social media platforms, and third parties | Communication privacy management theory [43] |
| SNS-IPC | Feelings or perceptions that SNS users have regarding privacy-related activities of SNSs and their members, who could infringe upon rights or ability to control these activities | – |
| Amharic SNS-IPC | – | |
| Turkish OPCS | – | – |
| AIPC | The degree of an individual’s IPCs while using mobile services. IPCs refer to the anxiety, personal attitude, and requirements of individuals regarding the collection, usage, and processing of data gained by mobile apps. | Social contract theory [45] and communication privacy management theory [43] |
| SMSPC | The degree to which an Internet user is concerned about website practices related to the collection and use of his/her personal information | – |

| Label | Content Validity | | | | | Internal Validity | | | Remaining Measurement Properties | |
|---|---|---|---|---|---|---|---|---|---|---|
| | General Design Requirements [2] | Concept-Elicitation Study [3] | Cognitive Interview [4] | Comprehensibility [5] | Comprehensiveness [6] | Structural Validity [7] | Internal Consistency [8] | Cross-Cultural Validity [9] | Reliability [10] | Criterion Validity [11] |
| IPC-1 | IN | – | AD | IN | DF | AD | VG | – | – | – |
| IUIPC | AD | DF | IN | – | – | VG | VG | – | – | VG |
| OPC | IN | DF | IN | – | – | AD | VG | – | – | VG |
| CPCI | IN | – | AD | DF | DF | VG | VG | – | – | VG |
| ECWPC | IN | – | AD | DF | DF | AD | DF | – | – | – |
| APCP | IN | DF | IN | – | – | VG | VG | – | – | – |
| APCP-18 | IN | DF | IN | – | – | VG | VG | – | – | VG |
| UPCSNS | IN | AD | IN | – | – | IN | VG | – | – | – |
| MUCIP | VG | DF | AD | DF | DF | VG | VG | – | – | – |
| IPC-2 | IN | – | AD | IN | DF | VG | VG | – | – | – |
| SMIPC | IN | – | AD | DF | DF | VG | VG | – | – | – |
| SNS-IPC | IN | – | IN | DF | DF | DF | VG | – | – | – |
| Amharic SNS-IPC | IN | – | IN | DF | DF | DF | VG | – | – | – |
| Turkish OPCS | IN | – | AD | DF | DF | VG | VG | – | – | VG |
| AIPC | IN | – | IN | – | – | DF | VG | – | – | – |
| SMSPC | IN | – | IN | – | – | AD | VG | – | – | – |

VG = very good; AD = adequate; DF = doubtful; IN = inadequate (COSMIN rating scale).
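
The ratings in this table use the COSMIN four-point scale (very good, adequate, doubtful, inadequate). Two operations are handy when working with such a table: tallying the ratings within one measurement-property column, and, within a single COSMIN box, applying the "worst score counts" principle, under which a box's overall rating is the lowest rating given to any of its standards. The sketch below transcribes the table by hand; the names `RATINGS`, `column`, `tally`, and `worst_score_counts` are hypothetical, and the standard-level ratings passed to `worst_score_counts` in the usage example are invented for illustration rather than taken from any reviewed study.

```python
from collections import Counter

# COSMIN rating labels used in the table, ordered from worst to best.
RATING_ORDER = ["IN", "DF", "AD", "VG"]  # inadequate, doubtful, adequate, very good

COLUMNS = [
    "General Design Requirements", "Concept-Elicitation Study",
    "Cognitive Interview", "Comprehensibility", "Comprehensiveness",
    "Structural Validity", "Internal Consistency", "Cross-Cultural Validity",
    "Reliability", "Criterion Validity",
]

# Ratings transcribed row by row from the table ("–" = no rating).
RATINGS = {
    "IPC-1": "IN – AD IN DF AD VG – – –",
    "IUIPC": "AD DF IN – – VG VG – – VG",
    "OPC": "IN DF IN – – AD VG – – VG",
    "CPCI": "IN – AD DF DF VG VG – – VG",
    "ECWPC": "IN – AD DF DF AD DF – – –",
    "APCP": "IN DF IN – – VG VG – – –",
    "APCP-18": "IN DF IN – – VG VG – – VG",
    "UPCSNS": "IN AD IN – – IN VG – – –",
    "MUCIP": "VG DF AD DF DF VG VG – – –",
    "IPC-2": "IN – AD IN DF VG VG – – –",
    "SMIPC": "IN – AD DF DF VG VG – – –",
    "SNS-IPC": "IN – IN DF DF DF VG – – –",
    "Amharic SNS-IPC": "IN – IN DF DF DF VG – – –",
    "Turkish OPCS": "IN – AD DF DF VG VG – – VG",
    "AIPC": "IN – IN – – DF VG – – –",
    "SMSPC": "IN – IN – – AD VG – – –",
}


def column(name):
    """Return {scale: rating} for one measurement-property column."""
    i = COLUMNS.index(name)
    return {scale: row.split()[i] for scale, row in RATINGS.items()}


def tally(name):
    """Count how often each rating (including '–') occurs in a column."""
    return Counter(column(name).values())


def worst_score_counts(standard_ratings):
    """Overall rating of one box = lowest rating among its standards.
    Entries that are not ratings (e.g. '–') are skipped; None if none remain."""
    rated = [r for r in standard_ratings if r in RATING_ORDER]
    return min(rated, key=RATING_ORDER.index) if rated else None


if __name__ == "__main__":
    print(tally("Structural Validity"))  # counts: VG 8, AD 4, DF 3, IN 1
    # Invented standard-level ratings for a single box (not from the table):
    print(worst_score_counts(["VG", "AD", "VG"]))  # AD
```

Storing each row as one space-separated string keeps the transcription visually close to the table, so a misaligned cell is easier to spot than in a nested list.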

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


MDPI and ACS Style: Bartol, J.; Vehovar, V.; Petrovčič, A. Should We Be Concerned about How Information Privacy Concerns Are Measured in Online Contexts? A Systematic Review of Survey Scale Development Studies. Informatics 2021, 8, 31. https://doi.org/10.3390/informatics8020031

AMA Style: Bartol J, Vehovar V, Petrovčič A. Should We Be Concerned about How Information Privacy Concerns Are Measured in Online Contexts? A Systematic Review of Survey Scale Development Studies. Informatics. 2021; 8(2):31. https://doi.org/10.3390/informatics8020031

Chicago/Turabian Style: Bartol, Jošt, Vasja Vehovar, and Andraž Petrovčič. 2021. "Should We Be Concerned about How Information Privacy Concerns Are Measured in Online Contexts? A Systematic Review of Survey Scale Development Studies" Informatics 8, no. 2: 31. https://doi.org/10.3390/informatics8020031

APA Style: Bartol, J., Vehovar, V., & Petrovčič, A. (2021). Should We Be Concerned about How Information Privacy Concerns Are Measured in Online Contexts? A Systematic Review of Survey Scale Development Studies. Informatics, 8(2), 31. https://doi.org/10.3390/informatics8020031