Abstract

This systematic review addresses problems identified in existing research on survey measurements of individuals’ information privacy concerns in online contexts. The search in this study focused on articles published between 1996 and 2019 and yielded 970 articles. After excluding duplicates and screening for eligibility, we were left with 13 articles in which the investigators developed a total of 16 survey scales. In addition to reviewing the conceptualizations, contexts, and dimensionalities of the scales, we evaluated the quality of the methodological procedures used in the scale development process, drawing upon the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) Risk of Bias checklist. The results confirmed that conceptualizations and dimensions of information privacy concerns are broad and place little emphasis on contextuality. Assessment of the quality of methodological procedures suggested a need for a more thorough evaluation of content validity. We provide several recommendations for tackling these issues and propose new research directions.

1. Introduction

Privacy is important because it is “an arena of democratic politics” [1] (p. 433). In other words, determinations of what is private and what is not affect individuals’ freedoms. Although concerns regarding privacy, particularly information privacy (see [2] (p. 990) for a definition of information privacy), became a social issue in the 1960s, information privacy came to the forefront of public debate with the advent of the Internet [1]. The Internet plays such an important role in the debate about information privacy because it offers companies and governments unprecedented possibilities for large-scale collection of personal information about individuals and their online activities [3], which can infringe on individuals’ freedoms. In recent years, many laws have been passed to protect individuals’ rights and freedoms related to information privacy. One such example is the General Data Protection Regulation (GDPR) adopted by the European Union (EU) in 2016 [4]. In line with these social and technological developments, information privacy has become an important research topic in many domains, including the technical, ethical, legal, and psychological domains [2]. Many of these studies have focused on information privacy concerns (IPCs) because they are an important factor in individuals’ behavior [2,5].

Although competing definitions of IPCs exist [6], in the most general sense, they encompass individuals’ views about the possible loss of privacy when submitting information to a known or unknown entity. On the Internet, these views stem from an individual’s apprehension of losing control over their personal data, namely “the possible intentional or unintentional mismanagement of personal data submitted online” [7] (p. 357). Some investigators have also explored online privacy concerns, Internet privacy concerns, or privacy concerns related to social media sites [8,9,10], which, in this study, are regarded as special instances of IPCs in online contexts. A number of studies in the information management field have investigated factors of IPCs or have focused on the potential implications of IPCs for the behavior of Internet users [2,5,11,12]. To this end, many scholars have developed survey scales for individuals’ self-assessment of IPCs [9,13,14]. Although this research has provided important empirical insights into the ways in which IPCs shape the online behavior of Internet users, it has also raised fundamental methodological questions about how to conceptualize and measure IPCs validly [11,15,16,17].

In fact, scholars have argued that current empirical assessments of IPCs suffer from (a) the lack of a broader theory that could frame different online contexts [12], (b) the unclear categorization of different concepts within the field of information privacy [16], and (c) methodologically questionable or inadequate adaptation of existing IPC scales to new online domains [17]. Potential methodological problems can also stem from differing definitions of IPCs [11] and the difficulty of defining the key dimensions of the IPC construct [18]. This review is therefore motivated by the need to document systematically the various IPC survey scales developed for online contexts and to assess the methodological quality of their development.

Accordingly, the aim of this study was to systematically scrutinize the conceptual background, contextuality, dimensionality, and quality of methodological procedures used in the development of existing survey scales for assessing individuals’ self-reported IPCs in online environments (hereafter referred to as IPC scales). Whereas many reviews have focused on the concept of information privacy and IPCs in various online contexts [2,5,11,12,16,19], only a handful have addressed IPC scales from the perspective of measurement quality. For instance, Li [11] provided a descriptive overview of IPC scales but did not address their content or measurement characteristics. Likewise, Hong and Thong [9] focused only indirectly on the dimensions of IPCs, reviewing 20 studies while developing their own IPC scale. By contrast, Preibusch [17] conducted a comprehensive review of IPC scales. Although he elaborated upon the content, length, and dimensionality of each inventory, his review does not provide insight into the quality of the scale development procedures. In addition, Internet users’ intensive engagement with social network sites (SNSs) has recently led scholars to investigate personal data and privacy issues related to SNSs and to develop several survey scales tailored to the specific aspects of IPCs in this setting [20]. However, none of these scales were included in the prior reviews.

To address these gaps, we conducted a systematic review of studies in which IPC scales were developed. The review was based on the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) methodology [21]. Specifically, we drew on the COSMIN Risk of Bias checklist [22], which was designed to evaluate the methodological quality of studies in which survey scales are developed or validated. Thus, this study provides a broad overview of various IPC scales and evaluates the methodological quality of their development process. In this study, we identify key issues and provide suggestions for remedying them. The study serves as a basis for improving the methodological quality of the development process of IPC scales and IPC survey measurement in online contexts.

The rest of the paper is structured as follows. In Section 2, we briefly introduce the notion of IPCs and provide an overview of past studies that reviewed IPC scales. Section 3 presents the methods and procedures used in this review, and Section 4 presents the empirical results. Section 5 discusses the key empirical findings, provides recommendations for future work, and presents the limitations of this study. The last section concludes the paper.

2. Background

The conceptualizations of IPCs used in the development of IPC scales are defined and described in various ways, in some cases despite being based on the same theoretical backgrounds [6,17]. This diversity stems from the variety of definitions of information privacy, which are not univocal and vary from one discipline to another depending on their relevance and application. In fact, Smith et al. [2] suggested that even the understanding of privacy ranges from value-based definitions, which treat general privacy as a right or information privacy as a commodity, to cognate-based definitions, which treat general privacy as a state or as control. The lack of a clear distinction between privacy attitudes and privacy concerns further complicates matters [16]. In addition, Rohunen [6] (p. 66) stated, “Privacy concerns have often been defined based on previous theoretical and empirical literature, and they vary in the views on privacy (i.e., the data subject’s view and the data collector’s view), levels of subjectivity and application specificity.” Accordingly, she classified definitions of IPCs into seven categories [6]: (a) Loss of privacy, (b) loss of control over privacy, (c) uncertainty about the handling of personal information, (d) lack of awareness of the use of the information, (e) opportunistic behavior related to the submitted data, (f) use of the information, and (g) fairness of the processing and use of the information.

When examining the constructs and measures of IPCs in online contexts, Li [11] and Preibusch [17] came to a similar conclusion: Research on IPCs in online contexts does not adhere closely to any common definition. Instead, it references a multitude of definitions and theoretical constructs. The wide variety of IPC constructs applied in online contexts is also consistent with the observation that the related research builds on a number of theoretical backgrounds [19]. Whereas some theories serve as guides for identifying the dimensionalities of IPC constructs (e.g., social contract theory and agent theory), others serve to conceptualize the effects of IPCs on behavior (e.g., theory of reasoned action) or to explain the privacy paradox (e.g., utility maximization theory). From a theoretical perspective, the openness of the field to a plurality of theoretical perspectives can contribute to a deeper understanding of the dimensionality of IPCs in specific online contexts. At the same time, however, from a measurement perspective, the lack of a uniform theory and the competing conceptualizations of IPCs can cause confusion in the field and impede its advance [12].

Given this disparity, evaluating and comparing empirical findings among studies that measure IPCs based on different conceptual definitions and in different online contexts becomes challenging [23]. Furthermore, hardly any empirical work systematically compares IPC scales [5]. In this respect, an overview of IPC scales for use in different online contexts seems to be a particularly valuable undertaking, considering the importance of context in defining IPCs [24,25]. As Nissenbaum [25] argued, individuals’ privacy expectations depend not only on the type of information but also on the entity to which the information is disclosed, the manner of disclosure, what that entity will or can do with the information, and to whom the information pertains. Tellingly, Yun et al. [12] demonstrated that in each of the five phases of research on IPCs, namely the pre-stage (before 1991), introduction (1991–2000), awareness (2001–2007), development (2008–2013), and extension (2014–present), studies have followed the technological advancements of the time. For example, in the introduction phase, which coincided with the beginnings of commercial Internet use, researchers primarily focused on IPCs in the ecommerce context. In the next phase, awareness, SNSs appeared, and research focused on understanding IPCs in this context. In the last two phases, development and extension, researchers focused on Internet of Things (IoT) applications, autonomous vehicles, drones, and similar technologies. However, Yun et al. [12] did not shed light on how this diachronic development of the contexts in which IPCs were studied has been reflected in the conceptualization of IPC scales.

In fact, relatively few studies have attempted to review the conceptual dimensions of IPC scales in online contexts. For instance, Castañeda et al. [26] identified four key dimensions (knowledge collection, knowledge use, control collection, and control use), whereas Hong and Thong [9] found six (collection, secondary usage, errors, improper access, control, and awareness). Moreover, Li [11] (p. 454) explored five IPC scales, arguing that “the conceptualizations and measurements of the privacy concern construct differ significantly across studies.” Similarly, Preibusch [17] examined seven IPC scales and concluded, considering the definitions of construct dimensions and other scale characteristics, that “direct comparisons are […] difficult” [17] (p. 1135). Accordingly, he did not delve further into the differences among the conceptualizations of the scales and did not gauge whether potential distinctions in the dimensionalities of the scales’ measurement models are related to differences in theoretical frameworks or online contexts. Furthermore, whereas some investigators considered the dimensionality of the IPC construct liable to change over time [14], others suggested that it will remain invariable [26] (p. 426). Thus, consensus on the dimensionality of IPC scales is still lacking.

Relatedly, scholars have emphasized that more research is needed to resolve the question of the measurement quality of IPC scales [9,13,26]. In general, when developing IPC scales, researchers assess the quality of their measurement models and operational definitions by reporting various psychometric properties of the internal structure (e.g., structural validity and internal consistency) and other common measurement properties (e.g., reliability). However, a synthesis of the quality of the development process of IPC scales has yet to emerge. Such a synthesis should first consider the conceptual complexity of IPCs, which has resulted in many alternative ways of defining the dimensions of IPC measurement models. Hence, it is important to understand how scholars developing IPC scales tap into the multifaceted nature of this notion while ensuring that the scales demonstrate adequate content validity. Content validity is established in the scale construction process through theoretically informed item generation and selection based on expert reviews, followed by a pretest of the scale with the target population [27]. A better understanding of how content validity is accounted for in the scale development process could help in evaluating the quality of IPC scales when they are applied to different online contexts. In fact, the constant adaptation of existing IPC scales to fit particular research contexts [11], and the resulting ad hoc development and scarce validity testing, can seriously affect the quality of the results [17]. Although the reuse of existing scales is recommended, it should not happen without adequate scale development procedures and tests [17]. Therefore, a systematic evaluation of scale development procedures such as the one in this study could help identify potential problems in the scale development process and thus steer future studies toward higher quality in the new IPC scales prompted by the continual evolution of online domains and Internet services.

Based on the above considerations, we posed four research questions (RQs): (RQ1) What are the conceptual definitions of IPCs used in IPC scales? (RQ2) For which online contexts were IPC scales developed? (RQ3) Which dimensions are defined in the measurement models of IPC scales? (RQ4) What is the quality of the methods used in the development of IPC scales?

3. Method

The empirical analysis of this study was based on the COSMIN methodology [21], which was developed for assessing the methodological quality and psychometric properties of patient-reported outcome measures (PROMs) in health care. Although this method has mostly been used to assess the appropriateness of survey instruments measuring perceptions of treatment outcomes or interventions (i.e., PROMs), its analytical framework is also suitable for evaluating the adequacy of survey scales in other disciplines [21] (p. 1155).

Given our research aims, we followed the relevant steps from the COSMIN methodology for performing a literature search and then used the COSMIN Risk of Bias checklist to screen for potential methodological biases in the scale development studies [22]. We focused on the following measurement properties: Content validity, structural validity, internal consistency, cross-cultural validity, reliability, and criterion validity. In this study, the understanding of measurement properties is aligned with the definitions used in the COSMIN methodology [28] (pp. 11–12). The remaining steps of the COSMIN methodology were omitted because they were not applicable to the aims and scope of this study.

3.1. Procedure

3.1.1. Systematic Literature Search

A systematic literature search was first conducted in 3 electronic databases, with the aim of including all the articles dealing with the development of IPC scales for online contexts. The review was prepared following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses Protocol (PRISMA-P) guidelines [29]. The procedure comprises 4 sequential components (eligibility criteria, data sources, study selection, and data extraction), followed by data coding and analysis.

3.1.2. Eligibility Criteria

Guided by the research aims of this study, we focused on articles that dealt with the development of survey scales for assessing individuals’ self-reported IPCs in online environments. Following the propositions from the COSMIN methodology [28], we defined the eligibility criteria based on the construct of interest, population of interest, and measurement properties we chose to review. Additionally, we included only articles in English, due to language constraints, and excluded non-peer-reviewed works as they are unlikely to include relevant studies [28].

Based on these considerations, we used the following eligibility criteria: (a) The development of a survey scale must be the main topic and purpose of the article, (b) the analysis in the article must focus on the individual (i.e., respondent) and not on other units of observation (e.g., organizations and groups), (c) the survey scale must focus on information privacy and must not be related to other concepts (e.g., physical privacy), (d) the survey scale must be based on the self-assessment of the individual, (e) the article must attempt quantitative validation of 1 or more survey scales, (f) the survey scale must be developed solely for the purpose of exploring an individual’s IPCs in relation to the Internet environment, (g) the article must be written in English, and (h) articles (i.e., papers, books, book chapters, and conference contributions) must be peer-reviewed and published in a scientific publication (e.g., a journal or a book).
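Because criteria (a) to (h) must all hold, they act as a conjunctive filter. The following Python sketch illustrates this logic, assuming that each criterion has already been judged by a reviewer and stored as a boolean; the `Candidate` record and its field names are hypothetical and not part of the review's materials.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical screening record: one reviewer judgment per criterion (a)-(h)."""
    scale_development_is_main_topic: bool  # (a)
    unit_is_individual: bool               # (b)
    about_information_privacy: bool        # (c)
    self_assessment: bool                  # (d)
    quantitative_validation: bool          # (e)
    internet_ipcs_only: bool               # (f)
    language: str                          # (g)
    peer_reviewed: bool                    # (h)


def failed_criteria(c: Candidate) -> list[str]:
    """Return the labels of the criteria the candidate fails, in order (a)-(h)."""
    checks = {
        "a": c.scale_development_is_main_topic,
        "b": c.unit_is_individual,
        "c": c.about_information_privacy,
        "d": c.self_assessment,
        "e": c.quantitative_validation,
        "f": c.internet_ipcs_only,
        "g": c.language.strip().lower() == "english",
        "h": c.peer_reviewed,
    }
    return [label for label, passed in checks.items() if not passed]


def is_eligible(c: Candidate) -> bool:
    """An article is eligible only if it fails none of the criteria."""
    return not failed_criteria(c)


# Made-up candidate that fails criteria (a), (f), and (g).
example = Candidate(False, True, True, True, True, False, "German", True)
print(failed_criteria(example))  # ['a', 'f', 'g']
```

Returning the list of failed criteria, rather than a single boolean, also records the reason for exclusion, which is what a PRISMA flow diagram reports.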

3.1.3. Information Sources and Search Strategies

Searches took place from 19 September to 22 September 2019. They were conducted in Web of Science, Scopus, and the Digital Library of the University of Ljubljana (DiKUL), a bibliographic harvester that covers more than 100 information sources, including EBSCO, ProQuest, JSTOR, SAGE, and ScienceDirect. The search included articles published from the beginning of 1996 to 18 September 2019. The year 1996 was chosen as the lower limit because that was the year in which the first rigorously developed IPC scale, the Concern for Information Privacy (CFIP) scale, was published by Smith et al. [14]. However, this scale was not included in the analysis because it does not refer to the Internet context.

The search queries used, adjusted slightly in line with the technical differences of the search engines, are detailed in Appendix A, Table A1. To ensure the comprehensiveness of our results, we also scanned the reference lists of articles included in the full-text review and manually checked relevant literature (i.e., prior literature reviews and articles found while performing scoping reviews).

3.1.4. Study Selection

The retrieved articles were evaluated for eligibility via a 3-stage screening procedure. In the first stage, following the electronic search, all duplicates were removed from the search results. In the second stage, 1 author (J.B.) reviewed the titles and abstracts of the remaining articles. Where eligibility for inclusion could not be established, the article was retained for evaluation at the next stage. In the third stage, a full-text review of the articles retained from the first 2 stages was performed. One author (J.B.) first reviewed the full texts to select articles eligible for inclusion. Thereafter, another author (A.P.) screened only those full texts that had been classified as noneligible. Finally, to identify additional relevant articles, 2 authors (J.B. and A.P.) reviewed the reference lists of the included articles. Any discrepancies and conflicts were resolved through discussion.
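The text does not state how duplicates were detected. The sketch below assumes matching on a DOI or on a normalized title (both hypothetical choices) and shows the rule that articles of uncertain eligibility advance to full-text review together with those judged eligible.

```python
import re
from enum import Enum


class Decision(Enum):
    INCLUDE = "include"
    EXCLUDE = "exclude"
    UNCERTAIN = "uncertain"


def normalize_title(title: str) -> str:
    """Lowercase and collapse runs of non-alphanumerics so trivial variants match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records: list[dict]) -> list[dict]:
    """Stage 1 (assumed rule): drop a record whose DOI or normalized title was seen before."""
    seen_dois: set[str] = set()
    seen_titles: set[str] = set()
    unique = []
    for rec in records:
        doi = (rec.get("doi") or "").strip().lower()
        title = normalize_title(rec["title"])
        if (doi and doi in seen_dois) or title in seen_titles:
            continue  # duplicate of a record kept earlier
        if doi:
            seen_dois.add(doi)
        seen_titles.add(title)
        unique.append(rec)
    return unique


def to_full_text(decisions: dict[str, Decision]) -> list[str]:
    """Stage 2 -> 3: both INCLUDE and UNCERTAIN articles go on to full-text review."""
    return [article for article, d in decisions.items() if d is not Decision.EXCLUDE]
```

Matching on either key catches records exported by one database with a DOI and by another without it.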

3.1.5. Data Extraction

The data extraction was conducted by 1 author (J.B.) and a study assistant (T.G.), who independently extracted data from the eligible articles using a predefined data extraction tool. Variables identified (and, where necessary, quantified) from this process included (a) the investigators, (b) year of publication, (c) number of included items, (d) type of measurement scale, (e) response options, (f) country or setting, (g) language(s) in which the survey scale was developed/tested, and (h) whether a formal procedure was used for the translation. Discrepancies were resolved through discussion with the other authors.
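A predefined extraction tool amounts to a fixed record type. As a hypothetical sketch (the field names are ours, not the authors' tool), variables (a) to (h) can be held in a frozen dataclass, which also makes it easy to list the variables on which the two independent extractors disagree before discussion.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass(frozen=True)
class ExtractionRecord:
    """Hypothetical extraction row mirroring variables (a)-(h) in the text."""
    investigators: str                  # (a)
    year: int                           # (b) year of publication
    n_items: int                        # (c) number of included items
    scale_type: str                     # (d) type of measurement scale
    response_options: str               # (e) e.g. "1-5"
    setting: str                        # (f) country or setting
    languages: tuple[str, ...]          # (g) development/testing language(s)
    formal_translation: Optional[bool]  # (h) None when the article does not say


def discrepancies(a: ExtractionRecord, b: ExtractionRecord) -> list[str]:
    """Names of the variables on which two independent extractors disagree."""
    return [f.name for f in fields(ExtractionRecord)
            if getattr(a, f.name) != getattr(b, f.name)]


# Made-up example: the two extractors counted a different number of items.
first = ExtractionRecord("Author A", 2010, 12, "Likert-type", "1-7",
                         "Country X", ("English",), None)
second = ExtractionRecord("Author A", 2010, 13, "Likert-type", "1-7",
                          "Country X", ("English",), None)
print(discrepancies(first, second))  # ['n_items']
```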

3.1.6. Coding Instrument

To address the 4 research questions, a coding instrument was developed (a copy is available upon request). It contained 82 information items divided into 2 sections. The first section collected information about (a) the definitions of the IPC concept for each survey scale and the theoretical framework on which the definitions were based, (b) the context for which the survey scale was developed, and (c) the dimensions of the survey scale. The second section consisted of information items adapted from the COSMIN Risk of Bias checklist, which is designed to assess the methodological quality of studies. It assessed 6 measurement properties arranged into 3 blocks. The first block of items assessed the quality of procedures used to ensure the content validity of the developed IPC scales. The second block assessed the quality of procedures used to determine the structural validity, internal consistency, and cross-cultural validity (measurement invariance) of the reviewed IPC scales. The third block focused on the quality of procedures used to assess the reliability and criterion validity of the IPC scales.

Because the first section of the coding instrument relates to the survey scales and the second to the studies included in the systematic review, we first identified all the survey scales and studies presented in each reviewed article. Then, we applied the coding instrument to collect information (definition, context of use, and structure of the measurement model) for each survey scale. Next, we assessed the methodological quality of each study on a measurement property (as defined in the COSMIN Risk of Bias checklist) to screen for risk of bias in the included studies [22].

The COSMIN Risk of Bias checklist is composed of boxes, each of which is used to evaluate the methodological quality of one measurement property (content validity is evaluated with several boxes). The same box can be completed multiple times per article, once for each study. Each box contains multiple standards against which the methodological quality of a study on a measurement property is rated. For example, the box for assessing the quality of the concept elicitation study contains 8 standards referring to the use of appropriate data collection and analysis methods. Each standard is rated as very good, adequate, doubtful, or inadequate. A standard is rated very good if there is explicit information that the quality is appropriate, adequate if the information is not explicit but appropriate quality can be assumed, doubtful if no explicit information is provided and appropriate quality cannot be assumed, and inadequate if it is clear that the standard has been violated. For details, see the COSMIN Risk of Bias checklist available at www.cosmin.nl (accessed on 6 November 2020).
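The four-level rule for a single standard can be written as a small decision function. This is a simplification: the three boolean inputs are hypothetical summaries of a coder's judgment, whereas the actual COSMIN standards each have their own wording.

```python
from enum import IntEnum


class Rating(IntEnum):
    """COSMIN categories; the integer order (worst = 1) is an illustrative assumption."""
    INADEQUATE = 1
    DOUBTFUL = 2
    ADEQUATE = 3
    VERY_GOOD = 4


def rate_standard(violated: bool, explicit_info: bool, quality_assumable: bool) -> Rating:
    """Apply the rating rule paraphrased in the text to one standard."""
    if violated:
        return Rating.INADEQUATE  # clear that the standard has been violated
    if explicit_info:
        return Rating.VERY_GOOD   # explicit information that quality is appropriate
    if quality_assumable:
        return Rating.ADEQUATE    # not explicit, but appropriate quality can be assumed
    return Rating.DOUBTFUL        # not explicit, and quality cannot be assumed


print(rate_standard(violated=False, explicit_info=False, quality_assumable=True).name)  # ADEQUATE
```

Checking for a violation first means a clearly violated standard is rated inadequate regardless of what else is reported.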

3.1.7. The Coding Process and Analysis

One author (J.B.) and a study assistant (T.G.) conducted a pilot test of the coding instrument. They independently coded 2 randomly selected articles from the list of all eligible articles. The results were compared for each information item, and differences in the interpretations of the information items were reconciled with the help of another author (A.P.). Accordingly, the coding instrument was modified whenever the original information items appeared to be inappropriate or additional clarification was deemed necessary. In the main study, 2 members of the research team (J.B. and T.G.), who conducted the coding independently, utilized the modified coding instrument for all the eligible articles. Once all the articles were coded, 1 of the researchers (J.B.) reviewed the results for potential inter-rater disagreements. In line with the COSMIN methodology [21], the final codes were assigned through the consensus of both members (J.B. and T.G.). Where the 2 members could not come to an agreement, the issues were resolved with the help of the other authors.
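The consensus step can be sketched as a merge of two independent codings in which agreed codes are accepted and every disagreement is passed to a resolver. In this hypothetical sketch, the `resolve` callback stands in for the discussion between the coders or the involvement of the other authors; it is not an automated rule used in the review.

```python
from collections.abc import Callable, Hashable

Codes = dict[str, Hashable]  # information item id -> coded value


def reconcile(
    coder1: Codes,
    coder2: Codes,
    resolve: Callable[[str, Hashable, Hashable], Hashable],
) -> tuple[Codes, list[str]]:
    """Merge two independent codings of the same information items.

    Identical codes are accepted as-is; for each disagreement, `resolve`
    (a stand-in for discussion) supplies the final code. Returns the final
    codes and the ids of the disputed items, in coding order.
    """
    if coder1.keys() != coder2.keys():
        raise ValueError("both coders must code the same information items")
    final: Codes = {}
    disputed: list[str] = []
    for item, v1 in coder1.items():
        v2 = coder2[item]
        if v1 == v2:
            final[item] = v1
        else:
            disputed.append(item)
            final[item] = resolve(item, v1, v2)
    return final, disputed
```

Keeping the list of disputed items alongside the final codes preserves a record of where consensus was needed.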

After the coding was completed, the information items in the first section of the coding instrument were analyzed according to their content, and the results are presented descriptively. Next, the COSMIN Risk of Bias checklist protocol was used to assess the methodological quality of each study on a measurement property (or for each box in the case of content validity). The protocol assigns the lowest rating of any standard in the box (i.e., the “worst score counts” method) because “poor methodological aspects of a study cannot be compensated by good aspects” [30] (p. 1164). After this step, the COSMIN methodology proposes assessing the concrete results of the studies on measurement properties (e.g., values of Cronbach’s alpha) and pooling the results on methodological quality and measurement properties. However, as our goal was only to document the methodological quality of the scales’ development processes, we assigned the final score for methodological quality per measurement property by taking the result of the best development study for each IPC scale.
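The two aggregation rules described above, the minimum within a box and then the best study per scale, can be sketched as follows. The integer ordering of the ratings is an assumption made for illustration; COSMIN defines the categories, not numeric values.

```python
from enum import IntEnum


class Rating(IntEnum):
    """COSMIN categories; the integer order (worst = 1) is an illustrative assumption."""
    INADEQUATE = 1
    DOUBTFUL = 2
    ADEQUATE = 3
    VERY_GOOD = 4


def box_score(standard_ratings: list[Rating]) -> Rating:
    """'Worst score counts': a study's score on a box is its lowest standard rating."""
    if not standard_ratings:
        raise ValueError("a box needs at least one rated standard")
    return min(standard_ratings)


def scale_score(studies: list[list[Rating]]) -> Rating:
    """This review's final score per measurement property: the best box score
    among all development studies of the scale."""
    if not studies:
        raise ValueError("a scale needs at least one development study")
    return max(box_score(ratings) for ratings in studies)


# Made-up ratings for two studies of one scale on one measurement property.
study_1 = [Rating.VERY_GOOD, Rating.ADEQUATE, Rating.DOUBTFUL]
study_2 = [Rating.ADEQUATE, Rating.VERY_GOOD]
print(box_score(study_1).name, box_score(study_2).name)  # DOUBTFUL ADEQUATE
print(scale_score([study_1, study_2]).name)              # ADEQUATE
```

Note the asymmetry: within a study, one weak standard lowers the score, whereas across studies, the strongest study determines the scale's score.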

4. Results

4.1. Study Selection

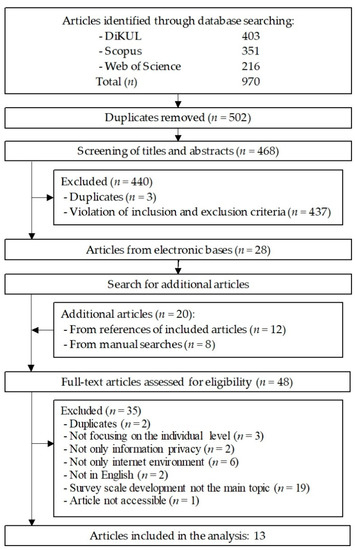

A flow diagram of the study selection procedure and its results (using the PRISMA tool [31]) is presented in Figure 1. Our electronic search of the 3 databases identified 970 potentially relevant articles, of which 502 were duplicates. A further 440 articles were excluded after the titles and abstracts were reviewed because they did not meet the eligibility criteria (437) or were identified as duplicates (3). An additional 20 articles were included for full-text evaluation following the review of the reference lists of the included articles (12) and manual searching (8). Of the 48 articles read in full, 35 were excluded. Of these, 30 did not meet the eligibility criteria because the development of the survey scale was not the main topic of the study (19), they did not focus only on the Internet context (6), or the survey scale was not related to information privacy (2) or did not address individuals’ IPCs (3). In addition, two articles were identified as duplicates, two were not available in English, and the complete full text of one article could not be retrieved. Consequently, 13 articles met the criteria for final inclusion.

Figure 1.

Article selection process.
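The counts reported above can be checked stage by stage. The following sketch uses only the numbers given in the text and recomputes each stage of the flow; the function name `prisma_flow` is hypothetical.

```python
def prisma_flow(identified: int, duplicates: int, excluded_screening: dict[str, int],
                additional: dict[str, int], excluded_full_text: dict[str, int]) -> dict[str, int]:
    """Recompute the number of articles remaining after each stage of the flow."""
    after_dedup = identified - duplicates
    screened_in = after_dedup - sum(excluded_screening.values())
    full_text = screened_in + sum(additional.values())
    included = full_text - sum(excluded_full_text.values())
    return {
        "after duplicate removal": after_dedup,
        "after title/abstract screening": screened_in,
        "read in full": full_text,
        "included": included,
    }


flow = prisma_flow(
    identified=970,
    duplicates=502,
    excluded_screening={"did not meet criteria": 437, "duplicate": 3},
    additional={"reference lists": 12, "manual search": 8},
    excluded_full_text={
        "scale development not the main topic": 19,
        "not only the Internet context": 6,
        "not related to information privacy": 2,
        "not about individuals' IPCs": 3,
        "duplicate": 2,
        "not in English": 2,
        "full text not retrievable": 1,
    },
)
for stage, n in flow.items():
    print(f"{stage}: {n}")
```

The recomputed values (468, 28, 48, and 13) agree with the figures reported in the text.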

4.2. Descriptive Results

Table 1 provides the details of the 16 IPC scales identified in the 13 eligible articles, which contained 41 studies (4 qualitative studies, 10 pilot studies, and 27 studies evaluating measurement properties). The first IPC scale dates back to 2004, whereas the most recent ones were presented in 2017. Five scales (IPC-1, IUIPC, OPC, CPCI, and ECWPC; in the text we use only the abbreviations of the scales, and full names are reported in Table 1) were presented before 2008. The survey scales contained, on average, 14.1 items (SD = 5.4), ranging from 4 in ECWPC to 28 in UPCSNS. Most survey scales used Likert-type response options ranging from 1 to 5 (seven survey scales) or from 1 to 7 (seven survey scales). The scales were developed and tested in various countries and languages. However, the process of translating a scale into another language was described in only four cases (APCP, APCP-18, Amharic SNS-IPC, and Turkish OPCS).

Table 1.

Descriptive characteristics of survey scales for measuring individuals’ IPCs in the online context.

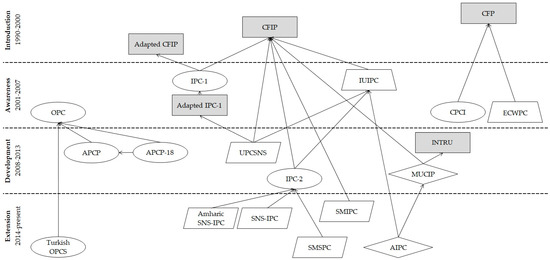

To better understand the relationships between individual survey scales, we mapped the genealogy of the reviewed scales (Figure 2). The figure shows that the first five IPC scales were conceived in the awareness period, which was marked by the development and growth of SNSs (see Section 2). However, these scales did not focus on SNSs; they concerned either general Internet use (IPC-1, OPC, and CPCI) or ecommerce (IUIPC and ECWPC). Moreover, they were developed independently of each other, either without direct reference to existing IPC scales (OPC) or with reference to existing IPC scales developed for non-Internet contexts (IPC-1, IUIPC, CPCI, and ECWPC). Survey scales developed after 2008 drew heavily on existing IPC scales (developed for either online or offline contexts). With one exception, the IPC scales related to general Internet use (APCP, APCP-18, and Turkish OPCS) referred to OPC, which was designed for general Internet use; the exception was IPC-2, which integrated CFIP and IUIPC into a new scale. By contrast, the scales for use in the SNS context (SMIPC, SNS-IPC, Amharic SNS-IPC, and SMSPC) were developed from a general Internet use scale (IPC-2) or from CFIP, which is not related to any online context. Notwithstanding this contextual inconsistency in the scales designed for the SNS context, the investigators made only minor changes to the existing scales (e.g., adjusting the wording of items, adapting the response options, or adding or removing items) and/or conceptualized IPCs through a different measurement model. Moreover, none of these scales covered contexts such as autonomous vehicles and IoT, which characterize the development and extension phases of research on IPCs, according to Yun et al. [12].

Figure 2.

Development timelines of the IPC scales and their relatedness according to the four periods in research on IPCs. Survey scales in gray were not included in the review. The arrows indicate the source from which the new survey scale was developed (e.g., IUIPC was developed based on CFIP). We did not designate the connections between scales that were excluded from the review. Abbreviations in gray rectangles: CFP—Concern for Privacy [39], CFIP—Concern for Information Privacy [14], Adapted CFIP—Adapted Concern for Information Privacy [40], Adapted IPC-1—Adapted Internet Privacy Concerns [41], INTRU—Perception of Intrusion [42]. Legend of shapes representing online contexts: trapezoid—ecommerce, ellipse—general Internet usage, parallelogram—SNS, rhombus—mobile Internet. A detailed description of the contexts is presented in Section 4.3.2.
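The genealogy in Figure 2 is a directed graph. The minimal sketch below contains only the derivations stated explicitly in the text (edges for the SNS scales are omitted because the text does not specify which of them derive from IPC-2 and which from CFIP) and shows how the ancestors and descendants of a scale can be traced with a breadth-first search.

```python
from collections import deque

# Derivations stated explicitly in the text: scale -> scales it was developed
# from or referred to. This is a partial, illustrative subset of Figure 2.
DERIVED_FROM: dict[str, list[str]] = {
    "IUIPC": ["CFIP"],
    "APCP": ["OPC"],
    "APCP-18": ["OPC"],
    "Turkish OPCS": ["OPC"],
    "IPC-2": ["CFIP", "IUIPC"],
}


def reachable(start: str, graph: dict[str, list[str]]) -> set[str]:
    """Breadth-first search: every node reachable from `start` along the edges."""
    seen: set[str] = set()
    queue = deque(graph.get(start, []))
    while queue:
        node = queue.popleft()
        if node not in seen:
            seen.add(node)
            queue.extend(graph.get(node, []))
    return seen


def ancestors(scale: str) -> set[str]:
    """Scales that `scale` was (directly or indirectly) developed from."""
    return reachable(scale, DERIVED_FROM)


def descendants(scale: str) -> set[str]:
    """Scales (directly or indirectly) developed from `scale`."""
    children: dict[str, list[str]] = {}
    for child, parents in DERIVED_FROM.items():
        for parent in parents:
            children.setdefault(parent, []).append(child)
    return reachable(scale, children)


print(sorted(ancestors("IPC-2")))  # ['CFIP', 'IUIPC']
print(sorted(descendants("OPC")))  # ['APCP', 'APCP-18', 'Turkish OPCS']
```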

4.3. Research Questions

4.3.1. Conceptual Definitions in IPC Scales

To answer RQ1 (i.e., “What are the conceptual definitions of IPCs used in IPC scales?”), we searched the articles for definitions of IPCs and their theoretical underpinnings. Explicit definitions were found in 10 articles for 12 survey scales (Table 2). A definition of IPCs was absent from the articles on IPC-1, APCP, APCP-18, and Turkish OPCS. Moreover, a conceptualization of IPCs was presented for only five survey scales (IUIPC, MUCIP, IPC-2, SMIPC, and AIPC), which means that only these scales were derived from a clearly delineated theoretical framework. In the articles, the investigators most often referred to communication privacy management theory [43], followed by social contract theory [44,45] and multidimensional developmental theory [46].

Table 2.

Definitions of IPCs and theories used in the scale development process.

Based on the conceptual and operational definitions in the articles, we clustered the definitions of IPCs into two groups. The first group frames IPCs as a desire for, or feeling of, control (OPC, CPCI, ECWPC, and UPCSNS). Such definitions refer to “the desire to keep personal information out of the hands of others” [8] (p. 158), “the Internet customer’s concern for controlling the acquisition and subsequent use of information” [26] (p. 424), or the “user’s ability or right to control” [34] (p. 1539). The second group (IUIPC, MUCIP, IPC-2, SMIPC, SNS-IPC, Amharic SNS-IPC, AIPC, and SMSPC) comprises definitions built on perceptions of information privacy, expressed through subjective views of fairness [13], the concerns of (mobile) users [35], or “feeling, perception or concerns” [36] (p. 3). In both groups, then, the IPC scales measure individuals’ subjective evaluations or feelings about their own information privacy (e.g., feelings of control, fear of losing information privacy, and opinions about one’s own information privacy), although each uses a slightly different definition, presumably to fit the intended context.

4.3.2. Online Contexts and IPC Scales

To answer RQ2 (i.e., “For which online contexts were IPC scales developed?”), we drew on Yun et al.’s [12] typology of information privacy contexts and coded each scale’s context along four dimensions: Type of individuals, type of personal information, type of entities handling personal information, and type of technology/service context. To determine the context of a survey scale, we relied both on the theoretical conceptualization of IPCs in the article (when available) and on the operationalization of IPCs, that is, the wording of the question and the scale items.

The results suggest that the survey scales cover only three types of individuals: Online consumers, Internet users, and bloggers/SNS users (Appendix A, Table A2). Regarding the type of personal information, only four scales (IPC-1, OPC, APCP, and MUCIP) specified it at all, and even among those, only MUCIP referred to an unequivocally specified type of information (i.e., location information). The type of entity handling the information was similarly underspecified. Most survey scales measured IPCs in relation to second, third, and fourth parties or to a company/website, and scales developed after 2010 also referred to “other users” (e.g., of SNSs). Only IPC-2 also includes the government as an entity handling personal information. The scales likewise cover a limited number of technology/service contexts: General Internet use, ecommerce, and online/mobile services. Again, the only exception is IPC-2, with items related to government surveillance. Surprisingly, none of the reviewed survey scales were designed to assess IPCs in the workplace, in healthcare, or with regard to the IoT or similar technologies. Based on these results, we derived four overarching contextual groups of IPC scales: ecommerce (IUIPC and ECWPC), general Internet usage (IPC-1, OPC, CPCI, APCP, APCP-18, IPC-2, and Turkish OPCS), mobile Internet (MUCIP and AIPC), and SNSs (UPCSNS, SMIPC, SNS-IPC, Amharic SNS-IPC, and SMSPC). As expected, all the survey scales in the last two groups were developed in the last decade.

4.3.3. Dimensionality of IPC Scales

RQ3 asked, “Which dimensions are defined in the measurement models of IPC scales?” We found that only two scales were unidimensional (OPC and APCP-18), while the remainder were multidimensional (with two to six dimensions). The median number of dimensions was three. Notably, all the survey scales modeled IPCs as a reflective construct. Across all 16 scales, eight were operationalized as first-order, five as second-order, and three as third-order constructs (Appendix A, Table A3).

In all, the investigators operationalized 25 differently named dimensions in the IPC scales. A content inspection revealed that many dimensions with different names shared matching definitions and conceptualizations. To map potential convergences among the reviewed survey scales, we systematically inspected the description of each dimension and the wording of its items for substantial resemblance. By content-matching the dimensions, we identified nine distinct dimensions (Appendix A, Table A3). The three most frequent were (ab)use, collection, and access, appearing in 11, 10, and 8 survey scales, respectively. The dimension privacy concern also appeared in eight survey scales. Because it referred to context- or service-specific concerns regarding user behavior (e.g., privacy concerns while using email) and lacked any conceptual grounding (i.e., none of the IPC scales that include it are based on a theory), we considered it a specific aspect of IPCs rather than a distinct conceptual dimension. Each of the remaining five dimensions appeared in six or fewer scales. The three most notable were control, errors, and awareness, which appeared in six, five, and four IPC scales, respectively. However, these three dimensions are sometimes conceptualized as distinct constructs or may apply only to specific contexts, as elaborated in the discussion.

4.3.4. The Methodological Quality of IPC Scale Development

Results pertaining to RQ4 (i.e., “What is the quality of the methods used in the development of IPC scales?”) are presented according to COSMIN Risk of Bias checklist boxes nested in three domains (see Section 3.1.6).

Content Validity

The general design requirements box covers a clear description of the construct, the theoretical justification of the construct, a description of the target population, a description of the context of use, and whether the concept-elicitation study was conducted in a sample representing the target population. As shown in Table 3 (column 2), only one study was rated as adequate (IUIPC) and one as very good (MUCIP). The context of use was described clearly for all scales, the target population for 15 scales, the construct for 12 scales, and the origin of the construct for 10 scales. In contrast, the concept-elicitation study was either not performed or not performed on a representative sample in 10 cases (IPC-1, CPCI, ECWPC, IPC-2, SMIPC, SNS-IPC, Amharic SNS-IPC, Turkish OPCS, AIPC, and SMSPC). The major shortcoming of the IPC scale development studies is thus the absence of a concept-elicitation study on a representative sample.

Table 3.

Methodological quality of the reviewed IPC scales based on the COSMIN Risk of Bias checklist.

The concept-elicitation study box (Table 3, column 3) evaluates the adequacy of the research activities used to map the concept (qualitative methods such as interviews and focus groups are preferred) and to identify relevant items for the survey scale. Such activities were reported in studies for six IPC scales: One study used quantitative methods, and five used qualitative methods. Among the latter, four studies used a widely recognized qualitative data collection method, three clearly described an appropriate data analysis method, and two presented transcripts. Only one study clearly indicated that the interviewers were skilled and clearly described independent data coding, while none reported using a topic or interview guide or clearly stated whether data saturation was reached.

The cognitive interview box (Table 3, column 4) evaluates whether a cognitive interview (or pilot test) assessing comprehensibility and comprehensiveness (see next paragraph) was conducted, and whether it was conducted with members of the target population. A rating of inadequate in this box indicates that no cognitive interview was conducted, or that it was not conducted with a representative sample. The development of seven scales included cognitive interviews with the target population. For SNS-IPC and Amharic SNS-IPC, a pilot study was conducted, but with experts rather than with members of the target population; both studies were therefore rated as inadequate.

For the studies that included a cognitive interview or another type of pilot study, we assessed whether the investigators checked that respondents understood the scale items as intended (i.e., comprehensibility) and that the survey scale covers all facets of the measured construct (i.e., comprehensiveness). As indicated in Table 3 (columns 5 and 6), the procedures used to examine the comprehensibility and comprehensiveness of the IPC scales were rated doubtful, either because they did not follow COSMIN standards or because the descriptions of the procedures were incomplete. For IPC-1 and IPC-2, the investigators performed pilot studies but did not evaluate the comprehensibility of the survey scale.

Structural Validity, Internal Consistency, and Cross-Cultural Validity

The procedures used to evaluate structural validity (i.e., to verify that the survey scale adequately reflects the dimensionality of the measured construct) varied considerably in quality (Table 3, column 7). The quality was inadequate only for UPCSNS (owing to the small sample size) and doubtful for SNS-IPC, Amharic SNS-IPC, and AIPC. For SNS-IPC and Amharic SNS-IPC, the methods used to identify the scale dimensions were not sufficiently explained, while for AIPC, the investigators retained an item that did not load on any of the identified factors. The studies on IPC-1, OPC, ECWPC, and SMSPC were of adequate quality, and the procedures used to determine the scale dimensions of IUIPC, CPCI, APCP, APCP-18, MUCIP, IPC-2, SMIPC, and Turkish OPCS met all COSMIN standards.

In all cases except ECWPC, internal consistency was estimated using Cronbach’s alpha or composite reliability, both of which COSMIN considers appropriate; these studies were therefore rated as very good (Table 3, column 8). However, none of the studies considered cross-cultural validity (Table 3, column 9). Because many of the IPC scales were developed in various countries and validated on samples with different demographic and cultural characteristics, the lack of testing for cross-cultural validity may indicate a disregard for the cultural and contextual specificity of IPCs.
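Both coefficients named above can be computed from respondent-level data. The sketch below is a minimal illustration, not the procedure of any reviewed study: it assumes complete data (no missing responses), computes Cronbach’s alpha from raw item scores, and computes composite reliability from standardized loadings of a single factor, assuming each item’s error variance equals 1 − λ² and errors are uncorrelated. The function names and example values are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for k items.

    items: list of k lists, each holding one item's scores for the same n
    respondents (complete data assumed).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    # Sum score of each respondent across all k items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    # Population variances are used throughout; the n/(n-1) factor cancels.
    item_var_sum = sum(pvariance(item) for item in items)
    return (k / (k - 1)) * (1 - item_var_sum / pvariance(totals))


def composite_reliability(loadings):
    """Composite reliability from standardized loadings of one factor.

    Assumes uncorrelated errors with variance 1 - loading**2 per item.
    """
    total_loading = sum(loadings)
    error_var = sum(1 - lam ** 2 for lam in loadings)
    return total_loading ** 2 / (total_loading ** 2 + error_var)


# Hypothetical data: two items answered by four respondents.
print(round(cronbach_alpha([[2, 4, 3, 5], [3, 5, 3, 4]]), 3))  # 0.784
# Hypothetical standardized loadings of a three-item factor.
print(round(composite_reliability([0.7, 0.7, 0.7]), 3))  # 0.742
```

Alpha treats all items as contributing equally to the total score, whereas composite reliability weights items by their estimated loadings, which is one reason a development study might report either coefficient.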

Reliability and Criterion Validity

The quality of reliability studies was determined by the presence of a test–retest design, which compares a scale’s scores obtained from the same sample at two points in time to check whether they agree (assuming that the true value of the measured variable does not change in between). None of the reviewed studies used such a procedure (Table 3, column 10). However, the absence of a reliability study does not necessarily indicate that the scale development process was of poor quality. Finally, criterion validity was assessed for five IPC scales (Table 3, column 11). The procedures were of high quality in all five cases, and the authors used criterion variables based on existing IPC scales. Specifically, Malhotra et al. [13] compared the newly developed IUIPC scale to a likewise new scale for general IPCs based on the items used by Smith et al. [14]. Similarly, Castañeda et al. [26] compared CPCI with a scale measuring general IPCs but provided no information about this measure. Buchanan et al. [8] validated OPC against IUIPC. Taddicken [33] estimated the criterion validity of APCP-18 against its longer version (APCP), whereas Alakurt [37] compared the Turkish translation (Turkish OPCS) with the original English version (OPC).
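For continuous scores, the COSMIN reliability box asks whether an intraclass correlation coefficient (ICC) was calculated. The sketch below is a minimal illustration under stated assumptions, not a prescribed procedure: it computes the two-way random-effects, absolute-agreement, single-measure ICC (often labeled ICC(2,1)) from hypothetical scale scores of the same respondents at two time points, assuming complete data and no true change between occasions. The function name and data are hypothetical.

```python
def icc_2_1(scores):
    """Two-way random-effects, absolute-agreement, single-measure ICC.

    scores: list of n rows (respondents), each a list of k scores
    (occasions, e.g. test and retest). Complete data assumed.
    """
    n = len(scores)
    k = len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Sums of squares for respondents (rows), occasions (columns), and total.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical scale scores of five respondents at test and retest.
test = [1, 2, 3, 4, 5]
retest = [2, 3, 4, 5, 6]  # every score shifted up by one point
print(round(icc_2_1([list(pair) for pair in zip(test, retest)]), 3))  # 0.833
```

Because the absolute-agreement form penalizes the systematic one-point shift, the result is about 0.83 rather than 1, even though the two sets of scores are perfectly correlated; a Pearson correlation would not detect such a shift.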

5. Discussion

5.1. Substantial Findings and Implications

The present systematic review provides important new insights into the conceptual and methodological aspects of studies in which survey scales for assessing individuals’ self-reported IPCs in online contexts were developed. In the following, we discuss the findings and their implications and give recommendations for future research.

The results related to RQ1 indicate that the IPC construct is defined in different ways. Li [11] suggested that such a range of conceptual definitions stems from researchers’ attempts to capture the most important aspects of IPCs in a changing technological and sociocultural environment. However, our results indicate that different conceptualizations were proposed in the same technological period and for the same context (see Figure 2). As the genealogy of the IPC scales shows, this may be because investigators often developed new survey scales rather than incrementally improving existing ones [17]. For example, Koohang [10] adapted the IPC-2 scale for use in the SNS context, although three scales had already been developed for SNSs (UPCSNS, SMIPC, and SNS-IPC). Moreover, we observed an evident lack of theory in scale development. Only a handful of studies based the definition of the IPC construct on a theory, and even those that did often drew on competing theories. The various conceptualizations seem to have arisen from attempts to provide new survey scales for specific online contexts (e.g., SNSs) or to capture only a subset of IPCs [11]. Although this multitude of approaches might deepen the understanding of IPCs in each individual context, it threatens the consolidation of measurement approaches, as investigators develop similar, albeit conceptually different, IPC scales. Because the same notion is used to refer to different concepts, this disparity is more likely to cause confusion than to yield richer insights [49]. We therefore propose that researchers developing IPC scales draw on an appropriate theory and clearly state their conceptualization of the construct. Further, researchers using existing IPC scales should adopt the conceptualization used in the original scale development study.

In contrast to this heterogeneity of conceptualizations, the reviewed IPC scales cover four well-distinguished online contexts (RQ2): General Internet use, ecommerce, SNSs, and mobile Internet. Given the fast pace of Internet service innovation, this finding raises at least two issues. First, the general definitions of the online contexts in the survey scales (except for ECWPC) might impair the performance of IPC scales, because broadly defined measures are less sensitive to the specifics of a context [27] (pp. 73–75). This is especially important because IPCs are highly context-dependent [25]. Indeed, differences in individuals’ levels of IPCs across online contexts have been demonstrated empirically, indicating that privacy expectations depend not only on the type of information submitted but also, for example, on perceived anonymity [24,50]. Further, relying on a general definition of IPCs might limit their explanatory power when predicting privacy-related attitudes and behavior in specific online contexts [17]. Li [11] hypothesized that when conceptualized and measured broadly, IPCs are appropriate as a measure of a psychological state, whereas narrower conceptualizations are required to predict behavior and trust. We therefore advise researchers to account for this specificity issue and to adopt a measure of IPCs that matches the level of specificity at which the dependent variable is measured. Second, the reviewed IPC scales were developed only for online contexts that pertain to the second (introduction) and third (awareness) periods in Yun et al.’s [12] typology of IPC research. IPC scales that extend the scope of online contexts to emerging domains of the Internet in everyday life, such as the IoT, cloud computing, or autonomous vehicles, are therefore warranted.

Regarding RQ3, the number of dimensions included in IPC scales varies considerably. Only a few scales draw on a particular theory in defining dimensions (IUIPC, MUCIP, IPC-2, SMIPC, and AIPC), while the others are based on a review of existing dimensions of IPCs (IPC-1, CPCI, ECWPC, and UPCSNS), adopt the dimensionalities of previous scales (SNS-IPC, Amharic SNS-IPC, and SMSPC), or derive the dimensions through exploratory or confirmatory factor analysis (OPC, APCP, and Turkish OPCS). As a result, the contents of the dimensions often overlap, although their names and number may differ considerably. Nevertheless, through content matching, we were able to distill six key dimensions: (ab)use, collection, access, control, awareness, and errors (the dimension privacy concerns was excluded; see Section 4.3.3). Although all six dimensions were empirically validated, the last three raise some issues. For example, Laufer and Wolfe [46] noted that control is not a prerequisite of privacy and that a situation can be perceived as private even when the individual lacks control over it. Tellingly, privacy control has been shown to be related to, but essentially different from, IPCs [42]. Likewise, awareness can be conceptualized as a distinct construct and as an antecedent of IPCs [42,51]. Finally, errors might be a relevant dimension, but only for specific online contexts (e.g., medical, banking), as studies have reported that users deliberately provide false information in ecommerce and SNS scenarios to protect their privacy [52,53]. In this sense, the inclusion of the errors dimension in IPC scales developed for SNS contexts is questionable.
Looking back at the genealogy of the IPC scales (Figure 2), one might conclude that researchers adopted previously validated dimensions without considering the context-specific elements of the environment for which the new scale was developed. Combined with scarce theoretical justification and insufficient evidence of content validity, this casts considerable doubt on the appropriateness of the dimensions included in some IPC scales. We therefore propose that more effort be devoted to identifying the relevant dimensions of IPCs for each specific context and to incorporating only these in the corresponding IPC scale.

RQ4 addressed the quality of the procedures used in IPC scale development. Whereas the procedures used for assessing internal structure (structural validity and internal consistency) were mostly of adequate or higher quality, our analysis identified a high risk of bias in ensuring content validity. A clear description of the measured construct is an essential first step in assessing content validity, because only a clearly specified construct allows the scale’s content to be judged as appropriate or otherwise [27,54,55]. Our review suggests that some studies fell short at this very first step of scale development: Four scales did not rely on a clear account of the IPC concept, and the origin of the construct was not delineated for six scales. Content validation was further undermined by the lack of input from the target population during item generation and by scarce testing of comprehensibility and comprehensiveness. This might lead to “conceptualizations that are faulty and items that do not address important facets of the construct” [56] (p. 233). Several investigators attempted to compensate for weak content validation by relying more heavily on existing questionnaires. Given the cultural and contextual specificities of privacy [18], however, such a strategy needs to be applied with care. Developing a new IPC scale, or adapting an existing one from one Internet context or culture to another, should therefore always be accompanied by content validation, for example, using expert reviews, cognitive interviews, or behavioral coding. Finally, criterion validity poses a problem. According to the COSMIN methodology [28], criterion validity is assessed by comparing the newly developed measure to a “gold standard” (i.e., an existing and rigorously validated measure of the same concept); a high correlation between the two indicates that both measure the same concept.
Although the methods used for assessing criterion validity were appropriate, criterion validity was tested only against existing IPC scales of uncertain methodological quality, which makes the validity of such comparisons questionable. Moreover, IPC scales often differ in how they conceptualize the measured construct (see Table 2), which calls the conceptual similarity of the compared scales into question. We therefore suggest that future research testing the criterion validity of IPC scales use only existing scales with confirmed validity and consider the conceptual underpinnings of the scales being compared.
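In its simplest form, the gold-standard comparison described above reduces to a correlation between the total scores of the new scale and those of the criterion measure. The sketch below computes a Pearson correlation for that purpose; the function name and the scores are hypothetical, and whether a Pearson coefficient (rather than, for example, a rank correlation) is appropriate depends on the measurement level and distribution of the scores.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired samples of length >= 2")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Co-deviation and deviations of each respondent from the sample means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical total scores: a new IPC scale vs. a validated criterion scale.
new_scale = [12, 18, 25, 30, 22, 15]
criterion = [14, 20, 27, 29, 21, 13]
print(round(pearson_r(new_scale, criterion), 2))
```

However high the resulting coefficient, it supports criterion validity only if the criterion is itself a rigorously validated measure of the same concept, which is precisely the condition the reviewed studies could not establish.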

Taken together, the findings across the research questions suggest that future IPC scale development should focus first on the conceptualization of the construct and on the content validity of newly developed scales. Of course, many difficulties in conceptualizing IPCs (e.g., nonuniformity, nonspecificity, lack of contextuality) may stem from the challenges of defining information privacy, and privacy in general [2]. Nonetheless, developing a robust, and ideally unified, conceptual framework that allows a theoretically informed definition of the IPC construct should be a goal of future research. This would not only allow scholars to advance and compare measurement models of IPCs across online contexts and cultural settings but also give practitioners a theoretically and methodologically sound basis for empirically evaluating the practical implications of IPCs for human behavior.

5.2. Limitations and Future Research

As with any systematic review, the findings of this study should be interpreted with the limitations of both the research literature and our methods in mind. First, regarding the scope of the systematic search, we focused exclusively on articles that developed and tested IPC scales. Had our selection criteria been more inclusive, we might have identified studies that tested specific measurement properties of IPC scales. For instance, none of the selected scales were tested for cross-cultural validity in their development studies, although it is likely that such evaluations were conducted in other empirical studies on IPCs in online contexts. Our results might therefore underestimate the extent to which certain measurement properties of IPC scales have been evaluated. Further, because our review is limited to online contexts, the findings may not generalize to IPC scales developed for other domains.

Second, although the literature search was comprehensive, publication bias cannot be completely ruled out. While we manually searched relevant journals and reviewed the reference lists of the screened articles, limited resources did not allow us to include unpublished studies, gray literature, or studies not published in English. However, it is unlikely that relevant studies would be found in these sources [28].

Third, the studies in this review were evaluated only for the methodological quality of their scale development procedures; we did not assess or compare the psychometric characteristics of the IPC scales. Such an overview would benefit the research community by supporting an informed choice of IPC scales for future empirical studies. However, the heterogeneity of the identified scales (in terms of contexts, dimensions, definitions, etc.) and the questionable quality of the procedures for ensuring content validity identified herein make the usefulness of such a comparison doubtful. Indeed, Mokkink et al. [28] suggested that evaluating the interpretability and feasibility of survey scales based on their psychometric characteristics is worthwhile only when high-quality evidence of content validity is available.

The last potential limitation concerns the choice of evaluation method. The COSMIN methodology is a validated framework for systematic reviews of studies on measurement properties. Nevertheless, it has been used mostly in the medical and health sciences to select the most suitable patient-reported outcome measures (PROMs). Although its checklists can potentially be used in other fields, certain measurement properties may need to be adjusted before the checklists can be applied soundly in the social sciences. For instance, using the test–retest method to ascertain the reliability of a survey scale is highly uncommon in information and Internet research. The absence of reliability studies for IPC scales might therefore reflect general practices in the field more than a distinctive characteristic of IPC scale development.

6. Conclusions

In summary, there is some reason for concern about how IPCs are measured in online contexts. First, the numerous definitions underlying the reviewed IPC scales, which vary across contexts, are rarely derived from theoretical frameworks. Moreover, the breadth of constructs subsumed by the diverse definitions and operationalizations of IPCs comes at a cost to the methodological quality of IPC scale development. Although the methods for assessing the internal structure of the reviewed scales are mostly of very good quality, they are seldom preceded by procedures that would secure an adequate level of content validity. Most importantly, the established methods for achieving efficient and exhaustive concept elicitation and for testing comprehensibility and comprehensiveness were used only selectively, and even when they were implemented, their quality was questionable. Thus, those who develop IPC scales should focus more on the conceptualization and content validation stages, while practitioners using these scales should account for their context-specific properties and perform the necessary content validity checks when applying them to a new context. The present paper can help researchers identify and address the most critical issues in the development and use of IPC scales for online contexts and is therefore a step toward greater methodological rigor in this fragmented area of study.

Author Contributions

Conceptualization, J.B. and A.P.; methodology, J.B., A.P. and V.V.; formal analysis, J.B.; investigation, J.B. and A.P.; data curation, J.B.; writing—original draft preparation, J.B. and A.P.; writing—review and editing, J.B., A.P. and V.V.; visualization, J.B.; project administration, J.B.; funding acquisition, A.P. and V.V. All authors have read and agreed to the published version of the manuscript.

Funding

The research was conducted as part of the first author’s Young Researcher fellowship, financed from the national budget by a contract between the Slovenian Research Agency and the Faculty of Social Sciences, University of Ljubljana. This research was also co-funded by the Slovenian Research Agency (Grant Nos. P5-0399, L5-9337).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

The authors would like to acknowledge Teja Gerjovič for her assistance with the data collection process.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A

Table A1.

Search terms and phrases in the three data sources.

| Data Source | Search Terms and Phrases |
|---|---|
| Web of Science (www.webofknowledge.com) (accessed on 22 September 2019) | TITLE: (privacy) AND TITLE: (concern*) AND TOPIC: (survey* OR measure* OR factor* OR development* OR instrument* OR question* OR questionnaire* OR dimension* OR scale*) AND TOPIC: (information* OR internet*) NOT TITLE: (secur*) AND LANGUAGE: (English) AND DOCUMENT TYPES: (Article OR Book OR Book Chapter OR Proceedings Paper) Timespan: 1996–2019. Indexes: SCI-EXPANDED, SSCI, A&HCI, CPCI-S, CPCI-SSH, BKCI-S, BKCI-SSH, ESCI, CCR-EXPANDED, and IC. |
| SCOPUS (www.scopus.com) (accessed on 22 September 2019) | (TITLE (privacy) AND TITLE (concern*) AND TITLE-ABS-KEY (survey* OR measure* OR factor* OR development* OR instrument* OR question* OR questionnaire* OR dimension* OR scale*) AND TITLE-ABS-KEY (information* OR internet*) AND NOT TITLE (secur*) AND LANGUAGE (english*) AND PUBYEAR > 1995 AND PUBYEAR < 2020 AND (LIMIT-TO (DOCTYPE, “ar”) OR LIMIT-TO (DOCTYPE, “cp”) OR LIMIT-TO (DOCTYPE, “ch”)) |
| DiKUL (https://plus-ul.si.cobiss.net/opac7/bib/search) (accessed on 22 September 2019) | TI privacy AND TI concern* AND (survey* OR measure* OR factor* OR development* OR instrument* OR question* OR questionnaire* OR dimension* OR scale*) AND (information* OR internet*) NOT TI secur* Limitations: Peer Reviewed, Date Published: 19960101–20191231, Language: English |

Table A2.

Contexts for which the IPC scales were developed.

| Label | Type of Individuals | Type of Personal Information ¹ | Type of Entity Handling Personal Information ² | Type of Technology/Service Contexts | Contextual Group |
|---|---|---|---|---|---|
| IPC-1 | Online consumers, Internet users | General/unspecified | General/unspecified | General Internet usage, ecommerce | General Internet usage |
| IUIPC | Online consumers, Internet users | General/unspecified | Second and third parties; company/website | General Internet usage, ecommerce | Ecommerce |
| OPC | Online consumers, Internet users | General/unspecified | Second, third, and fourth parties; company/website; other users | General Internet usage, ecommerce | General Internet usage |
| CPCI | Online consumers, Internet users | General/unspecified | Second, third, and fourth parties; company/website | General Internet usage, ecommerce | General Internet usage |
| ECWPC | Online consumers, Internet users | General/unspecified | Second, third, and fourth parties; company/website | General Internet usage, ecommerce | Ecommerce |
| APCP | Online consumers, Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | General Internet usage, ecommerce, online/mobile services | General Internet usage |
| APCP-18 | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | General Internet usage, online/mobile services | General Internet usage |
| UPCSNS | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | Online/mobile services | SNSs |
| MUCIP | Internet users, mobile services users | Location information, general/unspecified | Second, third, and fourth parties; company/website | General Internet usage, ecommerce, online/mobile services | Mobile Internet |
| IPC-2 | Internet users | General/unspecified | Second, third, and fourth parties; company/website; government | General Internet usage | General Internet usage |
| SMIPC | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | Online/mobile services | SNSs |
| SNS-IPC | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | Online/mobile services | SNSs |
| Amharic SNS-IPC | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | Online/mobile services | SNSs |
| Turkish OPCS | Online consumers, Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website | General Internet usage, ecommerce, online/mobile services | General Internet usage |
| AIPC | Internet users, mobile services users | General/unspecified | Second and third parties; company/website | General Internet usage, online/mobile services | Mobile Internet |
| SMSPC | Internet users, bloggers/SNS users | General/unspecified | Second, third, and fourth parties; company/website; other users | Online/mobile services | SNSs |

¹ General/unspecified: the authors did not define any specific type of personal information.

² First party: the data subject; second party: an entity that collects data directly from the first party; third party: a legal entity that collects data about the first party, either directly or indirectly (from the second party); fourth party: an illegal entity that collects data about the first party.

Table A3.

Dimensions of IPC constructs in the reviewed survey scales.

| Label | Number of Dimensions | (Ab)use | Collection | Access | Privacy Concerns | Control | Errors | Awareness | Personal Attitude | Requirements | Structure of the Measurement Model |

|---|---|---|---|---|---|---|---|---|---|---|---|

| IPC-1 | 2 | 1 | 1 | First-order | |||||||

| IUIPC | 3 | 1 | 1 | 1 | Second-order | ||||||

| CPCI | 2 | 1 | 1 | Second-order | |||||||

| ECWPC | 2 | 1 | 1 | Second-order | |||||||

| APCP | 4 | 4 | First-order | ||||||||

| UPCSNS | 6 | 1 | 1 | 1 | 1 | 1 | 1 | First-order | |||

| MUCIP | 3 | 1 | 1 | 1 | Second-order | ||||||

| IPC-2 | 6 | 1 | 1 | 1 | 1 | 1 | 1 | Third-order | |||

| SMIPC 1 | 3 | 1 | 1 | 1 | 1 | Second-order | |||||

| SNS-IPC | 5 | 1 | 1 | 1 | 1 | 1 | Third-order | ||||

| Amharic SNS-IPC | 5 | 1 | 1 | 1 | 1 | 1 | Third-order | ||||

| Turkish OPCS | 3 | 3 | First-order | ||||||||

| AIPC | 3 | 1 | 1 | 1 | First-order | ||||||

| SMSPC | 6 | 1 | 1 | 1 | 1 | 1 | 1 | First-order | |||

| Total | 53 | 11 | 10 | 8 | 8 | 6 | 5 | 4 | 1 | 1 |

1 The SMIPC survey scale contains a dimension called “Access and use,” which was counted under both “(Ab)use” and “Access.”
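The Total row of Table A3 can be checked against the per-scale values. The worked sum below is a reader-side check that uses only the numbers listed in the table; it is not a calculation reported by the authors. It shows that the nine category totals exceed the total number of dimensions by exactly one, which is consistent with the SMIPC “Access and use” dimension being counted in two categories.

```latex
\begin{align*}
\text{Sum of dimensions (14 scales)} &= 2+3+2+2+4+6+3+6+3+5+5+3+3+6 = 53\\
\text{Sum of category totals} &= 11+10+8+8+6+5+4+1+1 = 54 = 53 + 1
\end{align*}
```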

References

1. Westin, A.F. Social and Political Dimensions of Privacy. J. Soc. Issues 2003, 59, 431–453.

2. Smith, J.H.; Dinev, T.; Xu, H. Information Privacy Research: An Interdisciplinary Review. MIS Q. 2011, 35, 989–1015.

3. Van Dijck, J. The Culture of Connectivity: A Critical History of Social Media; Oxford University Press: New York, NY, USA, 2013; ISBN 9780199970773.

4. Regulation (EU) 2016/679. The Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC (General Data Protection Regulation). European Parliament, Council of the European Union. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32016R0679 (accessed on 6 November 2020).

5. Bélanger, F.; Crossler, R.E. Privacy in the Digital Age: A Review of Information Privacy Research in Information Systems. MIS Q. 2011, 35, 1017–1041.

6. Rohunen, A. Advancing Information Privacy Concerns Evaluation in Personal Data Intensive Services. Ph.D. Thesis, University of Oulu, Oulu, Finland, 14 December 2019.

7. Castaldo, S.; Grosso, M. An Empirical Investigation to Improve Information Sharing in Online Settings: A Multi-Target Comparison. In Handbook of Research on Retailing Techniques for Optimal Consumer Engagement and Experiences; Musso, F., Druica, E., Eds.; IGI Global: Hershey, PA, USA, 2020; pp. 355–379. ISBN 9781799814139.

8. Buchanan, T.; Paine, C.; Joinson, A.N.; Reips, U.-D. Development of Measures of Online Privacy Concern and Protection for Use on the Internet. J. Am. Soc. Inf. Sci. Technol. 2007, 58, 157–165.

9. Hong, W.; Thong, J.Y.L. Internet Privacy Concerns: An Integrated Conceptualization and Four Empirical Studies. MIS Q. 2013, 37, 275–298.

10. Koohang, A. Social Media Sites Privacy Concerns: Empirical Validation of an Instrument. Online J. Appl. Knowl. Manag. 2017, 5, 14–26.

11. Li, Y. Empirical Studies on Online Information Privacy Concerns: Literature Review and an Integrative Framework. Commun. Assoc. Inf. Syst. 2011, 28, 453–496.

12. Yun, H.; Lee, G.; Kim, D.J. A Chronological Review of Empirical Research on Personal Information Privacy Concerns: An Analysis of Contexts and Research Constructs. Inf. Manag. 2019, 56, 570–601.

13. Malhotra, N.K.; Kim, S.S.; Agarwal, J. Internet Users’ Information Privacy Concerns (IUIPC): The Construct, the Scale, and a Causal Model. Inf. Syst. Res. 2004, 15, 336–355.

14. Smith, J.H.; Milberg, S.J.; Burke, S.J. Information Privacy: Measuring Individuals’ Concerns about Organizational Practices. MIS Q. 1996, 20, 167–196.

15. Dienlin, T.; Trepte, S. Is the Privacy Paradox a Relic of the Past? An In-Depth Analysis of Privacy Attitudes and Privacy Behaviors. Eur. J. Soc. Psychol. 2015, 45, 285–297.

16. Gerber, N.; Gerber, P.; Volkamer, M. Explaining the Privacy Paradox: A Systematic Review of Literature Investigating Privacy Attitude and Behavior. Comput. Secur. 2018, 77, 226–261.

17. Preibusch, S. Guide to Measuring Privacy Concern: Review of Survey and Observational Instruments. Int. J. Hum. Comput. Stud. 2013, 71, 1133–1143.

18. Dourish, P.; Anderson, K. Collective Information Practice: Exploring Privacy and Security as Social and Cultural Phenomena. Hum. Comput. Interact. 2006, 21, 319–342.

19. Li, Y. Theories in Online Information Privacy Research: A Critical Review and an Integrated Framework. Decis. Support Syst. 2012, 54, 471–481.

20. Osatuyi, B. An Instrument for Measuring Social Media Users’ Information Privacy Concerns. J. Curr. Issues Media Telecommun. 2014, 6, 359–375.

21. Prinsen, C.A.C.; Mokkink, L.B.; Bouter, L.M.; Alonso, J.; Patrick, D.L.; de Vet, H.C.W.; Terwee, C.B. COSMIN Guideline for Systematic Reviews of Patient-Reported Outcome Measures. Qual. Life Res. 2018, 27, 1147–1157.

22. Mokkink, L.B.; de Vet, H.C.W.; Prinsen, C.A.C.; Patrick, D.L.; Alonso, J.; Bouter, L.M.; Terwee, C.B. COSMIN Risk of Bias Checklist for Systematic Reviews of Patient-Reported Outcome Measures. Qual. Life Res. 2018, 27, 1171–1179.

23. Rohunen, A.; Markkula, J.; Heikkilä, M.; Oivo, M. Explaining Diversity and Conflicts in Privacy Behavior Models. J. Comput. Inf. Syst. 2020, 60, 378–393.

24. Acquisti, A. Privacy in Electronic Commerce and the Economics of Immediate Gratification. In Proceedings of the 5th ACM Conference on Electronic Commerce (EC 2004), New York, NY, USA, 17–20 May 2004; pp. 21–29.

25. Nissenbaum, H.F. Privacy in Context: Technology, Policy, and the Integrity of Social Life; Stanford University Press: Stanford, CA, USA, 2010; ISBN 9780804772891.

26. Castañeda, A.J.; Montoro, F.J.; Luque, T. The Dimensionality of Customer Privacy Concern on the Internet. Online Inf. Rev. 2007, 31, 420–439.

27. DeVellis, R.F. Scale Development: Theory and Applications, 3rd ed.; Sage: Thousand Oaks, CA, USA, 2012; ISBN 9781412980449.

28. Mokkink, L.B.; Prinsen, C.A.C.; Patrick, D.L.; Alonso, J.; Bouter, L.M.; de Vet, H.C.W.; Terwee, C.B. COSMIN Methodology for Systematic Reviews of Patient-Reported Outcome Measures (PROMs): User Manual. Available online: https://www.cosmin.nl/wp-content/uploads/COSMIN-syst-review-for-PROMs-manual_version-1_feb-2018-1.pdf (accessed on 6 November 2020).

29. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. BMJ 2009, 339, 332–336.

30. Terwee, C.B.; Prinsen, C.A.C.; Chiarotto, A.; Westerman, M.J.; Patrick, D.L.; Alonso, J.; Bouter, L.M.; de Vet, H.C.W.; Mokkink, L.B. COSMIN Methodology for Evaluating the Content Validity of Patient-Reported Outcome Measures: A Delphi Study. Qual. Life Res. 2018, 27, 1159–1170.

31. PRISMA. Available online: http://prisma-statement.org/ (accessed on 5 November 2020).

32. Dinev, T.; Hart, P. Internet Privacy Concerns and Their Antecedents – Measurement Validity and a Regression Model. Behav. Inf. Technol. 2004, 23, 413–422.

33. Taddicken, M.M.M. Measuring Online Privacy Concern and Protection in the (Social) Web: Development of the APCP and APCP-18 Scale. In Proceedings of the 60th Annual Conference of the International Communication Association (ICA 2010), Singapore, 22–26 June 2010.

34. Zheng, S.; Shi, K.; Zeng, Z.; Lu, Q. The Exploration of Instrument of Users’ Privacy Concerns of Social Network Service. In Proceedings of the 2010 IEEE International Conference on Industrial Engineering and Engineering Management (IEEE 2010), Macao, China, 7–10 December 2010; pp. 1538–1542.

35. Xu, H.; Gupta, S.; Rosson, M.B.; Carroll, J.M. Measuring Mobile Users’ Concerns for Information Privacy. In Proceedings of the International Conference on Information Systems (ICIS 2012), Orlando, FL, USA, 16–19 December 2012.

36. Borena, B.; Bélanger, F.; Ejigu, D.; Anteneh, S. Conceptualizing Information Privacy Concern in Low-Income Countries: An Ethiopian Language Instrument for Social Networks Sites. In Proceedings of the Twenty-First Americas Conference on Information Systems (AMCIS 2015), Fajardo, Puerto Rico, 13–15 August 2015.

37. Alakurt, T. Adaptation of Online Privacy Concern Scale into Turkish Culture. Pegem Eğitim ve Öğretim Derg. 2017, 7, 611–636.

38. Buck, C.; Burster, S. App Information Privacy Concerns. In Proceedings of the Twenty-Third Americas Conference on Information Systems (AMCIS 2017), Boston, MA, USA, 10–12 August 2017.

39. Culnan, M.J. ‘How Did They Get My Name’: An Exploratory Investigation of Consumer Attitudes toward Secondary Information Use. MIS Q. 1993, 17, 341–363.

40. Culnan, M.J.; Armstrong, P.K. Information Privacy Concerns, Procedural Fairness, and Impersonal Trust: An Empirical Investigation. Organ. Sci. 1999, 10, 104–115.

41. Dinev, T.; Hart, P. An Extended Privacy Calculus Model for E-Commerce Transactions. Inf. Syst. Res. 2006, 17, 61–80.

42. Xu, H.; Dinev, T.; Smith, J.H.; Hart, P. Examining the Formation of Individual’s Privacy Concerns: Toward an Integrative View. In Proceedings of the Twenty-Ninth International Conference on Information Systems (ICIS 2008), Paris, France, 14–17 December 2008.

43. Petronio, S. Boundaries to Privacy: Dialectics of Disclosure; State University of New York Press: Albany, NY, USA, 2002; ISBN 9780791455166.

44. Donaldson, T. The Ethics of International Business; Oxford University Press: New York, NY, USA, 1989; ISBN 9780195058741.

45. Friend, C. Social Contract Theory. Available online: https://www.iep.utm.edu/soc-cont/ (accessed on 6 November 2020).

46. Laufer, R.S.; Wolfe, M. Privacy as a Concept and a Social Issue: A Multidimensional Developmental Theory. J. Soc. Issues 1977, 33, 22–42.

47. Campbell, A.J. Relationship Marketing in Consumer Markets: A Comparison of Managerial and Consumer Attitudes about Information Privacy. J. Direct Mark. 1997, 11, 44–57.

48. Westin, A.F. Privacy and Freedom; Atheneum: New York, NY, USA, 1967; ISBN 9780689102899.

49. Bergman, M.N. A Theoretical Note on the Differences between Attitudes, Opinions, and Values. Swiss Polit. Sci. Rev. 1998, 4, 81–93.

50. Dinev, T.; Hart, P. Privacy Concerns and Levels of Information Exchange: An Empirical Investigation of Intended e-Services Use. e-Serv. J. 2006, 4, 25–59.

51. Dinev, T.; Hart, P. Internet Privacy Concerns and Social Awareness as Determinants of Intention to Transact. Int. J. Electron. Commer. 2005, 10, 7–29.

52. Youn, S. Teenagers’ Perceptions of Online Privacy and Coping Behaviors: A Risk–Benefit Appraisal Approach. J. Broadcast. Electron. Media 2005, 49, 86–110.

53. Lwin, M.O.; Williams, J.D. A Model Integrating the Multidimensional Developmental Theory of Privacy and Theory of Planned Behavior to Examine Fabrication of Information Online. Mark. Lett. 2003, 14, 257–272.

54. Bollen, K.A. Structural Equations with Latent Variables; John Wiley & Sons: New York, NY, USA, 1989; ISBN 9780471011712.

55. MacKenzie, S.B.; Podsakoff, P.M.; Podsakoff, N.P. Construct Measurement and Validation Procedures in MIS and Behavioral Research: Integrating New and Existing Techniques. MIS Q. 2011, 35, 293–334.

56. Vogt, D.S.; King, D.W.; King, L.A. Focus Groups in Psychological Assessment: Enhancing Content Validity by Consulting Members of the Target Population. Psychol. Assess. 2004, 16, 231–243.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).