Feasibility Study on the Role of Personality, Emotion, and Engagement in Socially Assistive Robotics: A Cognitive Assessment Scenario

Abstract

1. Introduction

- Do the users’ attitude toward the technology and their perception of it change after interacting with the robot? (RQ1).

- Does the user’s cognitive state influence the usability and the user’s perception of the robot in this scenario? (RQ2).

- Do the user’s personality traits influence the usability and the user’s perception of the robot in this scenario? If so, which ones? (RQ3).

- Does the user’s current emotional state influence the usability and the user’s perception of the robot in this scenario? If so, which emotions? (RQ4).

- Which factors may influence the interaction in the assistive scenario? (RQ5).

2. Related Works

| Reference | Year | Robot | Aim | Robot Role | Human Role | Outcome |
|---|---|---|---|---|---|---|
| [13] | 2009 | Bandit | Cognitive Therapy | Guiding the patient in performing the game | Supervision | Robot encouragement improves response time. |
| [14] | 2017 | NAO | Cognitive Therapy | Guiding the patient in performing the game | Supervision | Robot acceptability increases after the interaction. |
| [15] | 2018 | Pepper | Cognitive Assessment | Overall administration of the test | Supervision | Robot improved socialization. |
| [16] | 2019 | Pepper | Cognitive Assessment | Overall administration of the test (1st time) | Overall administration of the test (2nd time) | Validation of the robotic assessment. |
| [17] | 2019 | NAO | Cognitive Therapy | Overall administration of the test (1st time) | Overall administration of the test (2nd time) | Analysis of nonverbal behavior revealed more engagement with the robot than with the clinician. |
| [24] | 2019 | Giraff | Cognitive Stimulation | Overall administration of the test | Supervision | Older people accepted the guidance of a robot, feeling comfortable with it explaining and supervising the tests instead of a clinician. |
| [25] | 2020 | NAO | Cognitive Stimulation | Overall administration of the test (1st time) | Overall administration of the test (2nd time) | Performing stimulation exercises with the robot enhanced the therapeutic effect of the exercise itself, reducing depression-related symptoms in some cases. |
| [26] | 2021 | Pepper | Cognitive Therapy | Guiding the patient in performing the game | Supervision | Robot improved socialization. |
| This work | 2021 | ASTRO | Cognitive Assessment | Overall administration of the test | Supervision | Robot reduced anxiety and incentivized the interaction. |

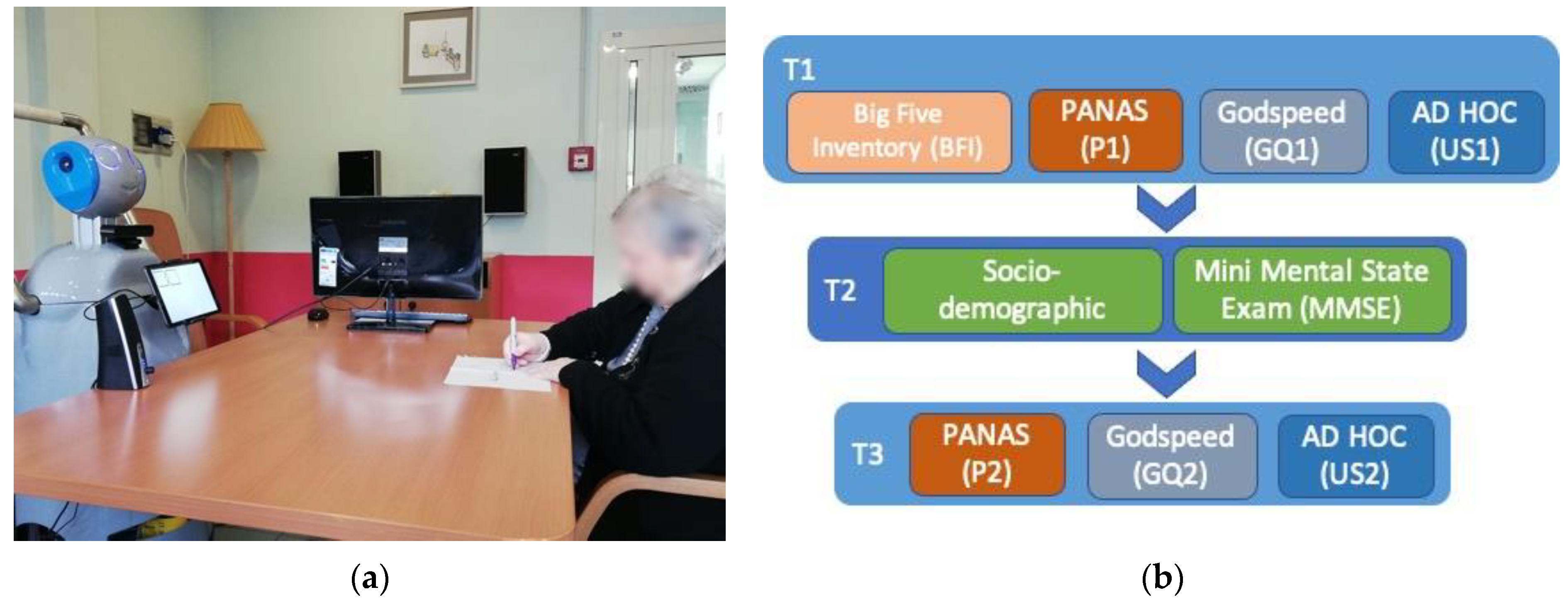

3. Materials and Methods

3.1. Cognitive Assessment

3.2. The Robot

3.3. Experimental Procedure

3.4. Questionnaires

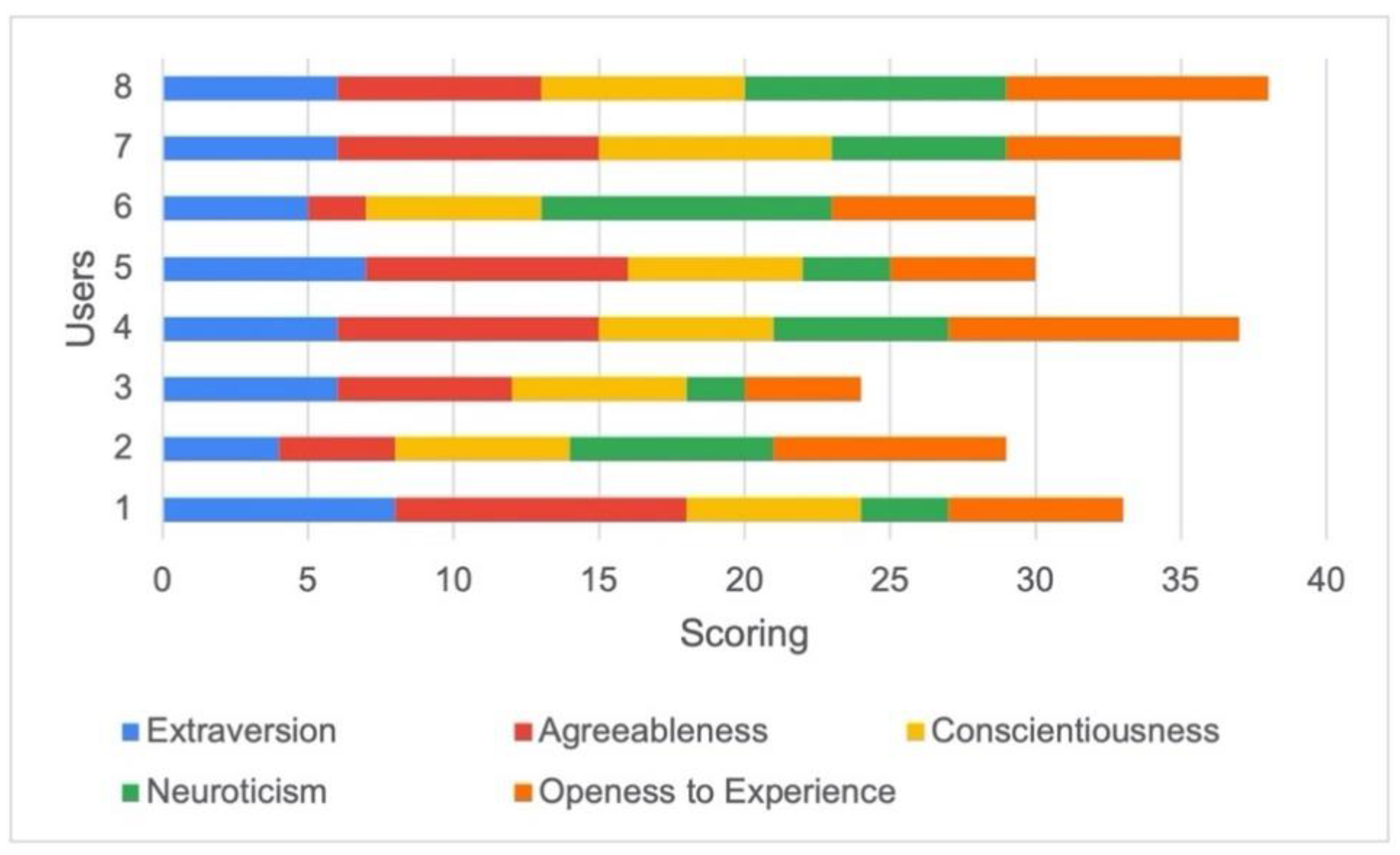

3.5. Participants

3.6. Data Analysis

- Verbal interaction quality metrics: Robot’s questions, robot’s quote, robot’s repetition, robot’s interaction time, interaction with the therapist, therapist interaction time, other time, total interaction time, coherent interaction, incoherent interaction;

- Emotion: Joy, neutral;

- Gaze: Direct, none;

- Facial expressions: Smile, laugh, frown, raised eyebrows, inexpressive; and

- Body gestures: Lifting shoulders, nodding head, shaking the head, quiet.
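As an illustration of how the time-based metrics in the list above (emotion, gaze, facial expressions, body gestures) could be turned into seconds and percentages of a session, here is a minimal Python sketch. The annotation format (label, start, end, in seconds), the function name `summarize_annotations`, and the example session are all assumptions made for illustration; they are not the annotation tool, procedure, or data used in the study.

```python
from collections import defaultdict


def summarize_annotations(intervals, total_time):
    """Return {label: (seconds, percent_of_total)} for one annotated session.

    intervals: iterable of (label, start_s, end_s) tuples (hypothetical format).
    total_time: total interaction time of the session, in seconds.
    """
    if total_time <= 0:
        raise ValueError("total_time must be positive")
    seconds = defaultdict(float)
    for label, start, end in intervals:
        if end < start:
            raise ValueError(f"interval for {label!r} ends before it starts")
        # Accumulate the duration of every interval carrying this label.
        seconds[label] += end - start
    return {label: (t, 100.0 * t / total_time) for label, t in seconds.items()}


# Hypothetical gaze annotation for a 600 s session (not study data).
session = [("direct", 0, 150), ("none", 150, 500), ("direct", 500, 600)]
summary = summarize_annotations(session, total_time=600)
for label, (secs, pct) in summary.items():
    print(f"{label}: {secs:.1f} s ({pct:.1f}%)")
```

Group-level averages and standard deviations would then be computed across the per-participant summaries, so a mean percentage need not equal the ratio of the mean times.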

4. Results

4.1. Video Recording Analysis

4.2. Descriptive Statistics

4.3. Correlation with Personality

4.4. Correlation with Emotional State

4.5. Correlation with Cognitive State

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Cross, E.S.; Hortensius, R.; Wykowska, A. From social brains to social robots: Applying neurocognitive insights to human–robot interaction. Philos. Trans. R. Soc. B Biol. Sci. 2019, 374, 20180024.

2. Rossi, S.; Ferland, F.; Tapus, A. User profiling and behavioral adaptation for HRI: A survey. Pattern Recognit. Lett. 2017, 99, 3–12.

3. Clabaugh, C.; Matarić, M. Robots for the people, by the people: Personalizing human-machine interaction. Sci. Robot. 2018, 3, eaat7451.

4. Rudovic, O.; Lee, J.; Dai, M.; Schuller, B.; Picard, R.W. Personalized machine learning for robot perception of affect and engagement in autism therapy. Sci. Robot. 2018, 3, eaao6760.

5. Nocentini, O.; Fiorini, L.; Acerbi, G.; Sorrentino, A.; Mancioppi, G.; Cavallo, F. A Survey of Behavioral Models for Social Robots. Robotics 2019, 8, 54.

6. Robert, L.P.; Alahmad, R.; Esterwood, C.; Kim, S.; You, S.; Zhang, Q. A review of personality in human-robot interactions. Found. Trends Inf. Syst. 2020, 4, 107–212.

7. Braun, M.; Alt, F. Affective assistants: A matter of states and traits. In Proceedings of the Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019.

8. Ivaldi, S.; Lefort, S.; Peters, J.; Chetouani, M.; Provasi, J.; Zibetti, E. Towards Engagement Models that Consider Individual Factors in HRI: On the Relation of Extroversion and Negative Attitude Towards Robots to Gaze and Speech During a Human–Robot Assembly Task: Experiments with the iCub humanoid. Int. J. Soc. Robot. 2017, 9, 63–86.

9. Anzalone, S.M.; Boucenna, S.; Ivaldi, S.; Chetouani, M. Evaluating the Engagement with Social Robots. Int. J. Soc. Robot. 2015, 7, 465–478.

10. Khamassi, M.; Velentzas, G.; Tsitsimis, T.; Tzafestas, C. Robot Fast Adaptation to Changes in Human Engagement During Simulated Dynamic Social Interaction With Active Exploration in Parameterized Reinforcement Learning. IEEE Trans. Cogn. Dev. Syst. 2018, 10, 881–893.

11. Jain, S.; Thiagarajan, B.; Shi, Z.; Clabaugh, C.; Matarić, M.J. Modeling engagement in long-term, in-home socially assistive robot interventions for children with autism spectrum disorders. Sci. Robot. 2020, 5, eaaz3791.

12. Hamada, T.; Okubo, H.; Inoue, K.; Maruyama, J.; Onari, H.; Kagawa, Y.; Hashimoto, T. Robot therapy as for recreation for elderly people with dementia - Game recreation using a pet-type robot. In Proceedings of the 17th IEEE International Symposium on Robot and Human Interactive Communication, RO-MAN, Munich, Germany, 1–3 August 2008; pp. 174–179.

13. Tapus, A.; Tapus, C.; Mataric, M.J. The use of socially assistive robots in the design of intelligent cognitive therapies for people with dementia. In Proceedings of the 2009 IEEE International Conference on Rehabilitation Robotics, Kyoto, Japan, 23–26 June 2009; pp. 924–929.

14. Fan, J.; Bian, D.; Zheng, Z.; Beuscher, L.; Newhouse, P.A.; Mion, L.C.; Sarkar, N. A Robotic Coach Architecture for Elder Care (ROCARE) Based on Multi-User Engagement Models. IEEE Trans. Neural Syst. Rehabilitation Eng. 2017, 25, 1153–1163.

15. Rossi, S.; Santangelo, G.; Staffa, M.; Varrasi, S.; Conti, D.; Di Nuovo, A. Psychometric Evaluation Supported by a Social Robot: Personality Factors and Technology Acceptance. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Tai’an, China, 27–31 August 2018; pp. 802–807.

16. Di Nuovo, A.; Varrasi, S.; Lucas, A.; Conti, D.; McNamara, J.; Soranzo, A. Assessment of Cognitive skills via Human-robot Interaction and Cloud Computing. J. Bionic Eng. 2019, 16, 526–539.

17. Desideri, L.; Ottaviani, C.; Malavasi, M.; Di Marzio, R.; Bonifacci, P. Emotional processes in human-robot interaction during brief cognitive testing. Comput. Hum. Behav. 2019, 90, 331–342.

18. Palestra, G.; Pino, O. Detecting emotions during a memory training assisted by a social robot for individuals with Mild Cognitive Impairment (MCI). Multimedia Tools Appl. 2020, 1–16.

19. König, A.; Satt, A.; Sorin, A.; Hoory, R.; Toledo-Ronen, O.; Derreumaux, A.; Manera, V.; Verhey, F.R.J.; Aalten, P.; Robert, P.H.; et al. Automatic speech analysis for the assessment of patients with predementia and Alzheimer’s disease. Alzheimer’s Dementia: Diagn. Assess. Dis. Monit. 2015, 1, 112–124.

20. Rossi, S.; Conti, D.; Garramone, F.; Santangelo, G.; Staffa, M.; Varrasi, S.; Di Nuovo, A. The Role of Personality Factors and Empathy in the Acceptance and Performance of a Social Robot for Psychometric Evaluations. Robotics 2020, 9, 39.

21. Feil-Seifer, D.; Matarić, M.J. Defining socially assistive robotics. In Proceedings of the 2005 IEEE 9th International Conference on Rehabilitation Robotics, Chicago, IL, USA, 28 June–1 July 2005.

22. Rabbitt, S.M.; Kazdin, A.E.; Scassellati, B. Integrating socially assistive robotics into mental healthcare interventions: Applications and recommendations for expanded use. Clin. Psychol. Rev. 2015, 35, 35–46.

23. Mancioppi, G.; Fiorini, L.; Sportiello, M.T.; Cavallo, F. Novel Technological Solutions for Assessment, Treatment, and Assistance in Mild Cognitive Impairment. Front. Aging Neurosci. 2019, 13, 58.

24. Luperto, M.; Romeo, M.; Lunardini, F.; Basilico, N.; Abbate, C.; Jones, R.; Cangelosi, A.; Ferrante, S.; Borghese, N.A. Evaluating the Acceptability of Assistive Robots for Early Detection of Mild Cognitive Impairment. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 4–8 November 2019; pp. 1257–1264.

25. Pino, O.; Palestra, G.; Trevino, R.; De Carolis, B. The Humanoid Robot NAO as Trainer in a Memory Program for Elderly People with Mild Cognitive Impairment. Int. J. Soc. Robot. 2020, 12, 21–33.

26. Manca, M.; Paternò, F.; Santoro, C.; Zedda, E.; Braschi, C.; Franco, R.; Sale, A. The impact of serious games with humanoid robots on mild cognitive impairment older adults. Int. J. Hum. Comput. Stud. 2021, 145, 102509.

27. Bontchev, B. Adaptation in Affective Video Games: A Literature Review. Cybern. Inf. Technol. 2016, 16, 3–34.

28. McCrae, R.R.; Costa, P.T. The Five Factor Theory of personality. In Handbook of Personality: Theory and Research; The Guilford Press: New York, NY, USA, 2008; ISBN 9781593858360.

29. Folstein, M.F.; Robins, L.N.; Helzer, J.E. The Mini-Mental State Examination. Arch. Gen. Psychiatry 1983, 40, 812.

30. Nasreddine, Z.S.; Phillips, N.A.; Bedirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The Montreal Cognitive Assessment, MoCA: A Brief Screening Tool For Mild Cognitive Impairment. J. Am. Geriatr. Soc. 2005, 53, 695–699.

31. Fiorini, L.; Tabeau, K.; D’Onofrio, G.; Coviello, L.; De Mul, M.; Sancarlo, D.; Fabbricotti, I.; Cavallo, F. Co-creation of an assistive robot for independent living: Lessons learned on robot design. Int. J. Interact. Des. Manuf. (IJIDeM) 2019, 14, 491–502.

32. John, O.P.; Donahue, E.M.; Kentle, R.L. Big Five Inventory. J. Personal. Soc. Psychol. 1991.

33. Rammstedt, B.; John, O.P. Measuring personality in one minute or less: A 10-item short version of the Big Five Inventory in English and German. J. Res. Pers. 2007, 41, 203–212.

34. Watson, D.; Clark, L.A.; Tellegen, A. Development and validation of brief measures of positive and negative affect: The PANAS scales. J. Pers. Soc. Psychol. 1988, 54, 1063.

35. Terracciano, A.; McCrae, R.R.; Costa, P.T., Jr. Factorial and Construct Validity of the Italian Positive and Negative Affect Schedule (PANAS). Eur. J. Psychol. Assess. 2003, 19, 131–141.

36. Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement Instruments for the Anthropomorphism, Animacy, Likeability, Perceived Intelligence, and Perceived Safety of Robots. Int. J. Soc. Robot. 2009, 1, 71–81.

37. Cavallo, F.; Esposito, R.; Limosani, R.; Manzi, A.; Bevilacqua, R.; Felici, E.; Di Nuovo, A.; Cangelosi, A.; Lattanzio, F.; Dario, P. Robotic Services Acceptance in Smart Environments With Older Adults: User Satisfaction and Acceptability Study. J. Med. Internet Res. 2018, 20, e264.

38. Esposito, R.; Fracasso, F.; Limosani, R.; D’Onofrio, G.; Sancarlo, D.; Cesta, A.; Dario, P.; Cavallo, F. Engagement during Interaction with Assistive Robots. Neuropsychiatry 2018, 8, 739–744.

39. Mukaka, M.M. A guide to appropriate use of Correlation coefficient in medical research. Malawi Med. J. 2012, 24, 69–71.

40. Fiorini, L.; Mancioppi, G.; Becchimanzi, C.; Sorrentino, A.; Pistolesi, M.; Tosi, F.; Cavallo, F. Multidimensional evaluation of telepresence robot: Results from a field trial*. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 1211–1216.

41. Conti, D.; Commodari, E.; Buono, S. Personality factors and acceptability of socially assistive robotics in teachers with and without specialized training for children with disability. Life Span Disabil. 2017, 20, 251–272.

42. Bernotat, J.; Eyssel, F. A robot at home—How affect, technology commitment, and personality traits influence user experience in an intelligent robotics apartment. In Proceedings of the RO-MAN 2017-26th IEEE International Symposium on Robot and Human Interactive Communication, Lisbon, Portugal, 28 August–1 September 2017; pp. 641–646.

43. Takayama, L.; Pantofaru, C. Influences on proxemic behaviors in human-robot interaction. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS 2009, St. Louis, MO, USA, 10–15 October 2009; pp. 5495–5502.

44. Damholdt, M.F.; Nørskov, M.; Yamazaki, R.; Hakli, R.; Hansen, C.V.; Vestergaard, C.; Seibt, J. Attitudinal change in elderly citizens toward social robots: The role of personality traits and beliefs about robot functionality. Front. Psychol. 2015, 6, 1701.

45. Petersen, S.; Houston, S.; Qin, H.; Tague, C.; Studley, J. The Utilization of Robotic Pets in Dementia Care. J. Alzheimer’s Dis. 2016, 55, 569–574.

46. Mordoch, E.; Osterreicher, A.; Guse, L.; Roger, K.; Thompson, G. Use of social commitment robots in the care of elderly people with dementia: A literature review. Maturitas 2013, 74, 14–20.

47. Wada, K.; Shibata, T. Living With Seal Robots—Its Sociopsychological and Physiological Influences on the Elderly at a Care House. IEEE Trans. Robot. 2007, 23, 972–980.

48. Fasola, J.; Mataric, M.J. Using Socially Assistive Human–Robot Interaction to Motivate Physical Exercise for Older Adults. Proc. IEEE 2012, 100, 2512–2526.

49. O’Dwyer, J.; Murray, N.; Flynn, R. Affective computing using speech and eye gaze: A review and bimodal system proposal for continuous affect prediction. arXiv 2018, arXiv:1805.06652.

50. Mehta, Y.; Majumder, N.; Gelbukh, A.; Cambria, E. Recent trends in deep learning based personality detection. Artif. Intell. Rev. 2020, 53, 2313–2339.

51. Aranha, R.V.; Correa, C.G.; Nunes, F.L.S. Adapting software with Affective Computing: A systematic review. IEEE Trans. Affect. Comput. 2019, 1.

| Reference | Year | Investigated Factors | Tools | Feedback |
|---|---|---|---|---|
| [13] | 2009 | - | - | A short questionnaire on the experience |
| [14] | 2017 | Engagement | Physiological signals (EEG, GSP) | Robot User Acceptance Scale (RUAS) |
| [15] | 2018 | Personality | NEO Personality Inventory-3 | Unified Theory of Acceptance and Use of Technology (UTAUT) |
| [17] | 2019 | Engagement and Emotion | PANAS, video analysis, and physiological signals | NASA Task Load Index |
| [24] | 2019 | - | - | Usability questionnaire |
| [25] | 2020 | Engagement and Emotion | Video analysis | State-Trait Anxiety Inventory, Psychosocial Impact of Assistive Devices Scales, and System Usability Scale |
| [26] | 2021 | Engagement | User Engagement Scale questionnaire, video analysis | - |
| This work | 2021 | Engagement, Emotions, Personality, Cognitive Mental State | PANAS, video analysis, BFI, and MMSE | Godspeed questionnaire, ad hoc usability questionnaire |

| Domain | Metric | Measure | Average | Standard Deviation |
|---|---|---|---|---|
| Verbal interaction quality metrics | Robot’s Question | Count | 18.5 | 2.3 |
| | Robot’s Quote | Count | 15.5 | 1.7 |
| | Robot’s Repetition | Count | 5.7 | 3.5 |
| | Coherent Interaction | Count | 23.9 | 7.3 |
| | Incoherent Interaction | Count | 2.3 | 3.6 |
| | Robot Interaction Time | Time (sec) | 560 § | 146.5 |
| | | Percentage | 82.9 | 9.9 |
| | Interaction with the Therapist | Count | 7.5 | 4.2 |
| | Therapist Interaction Time | Time (sec) | 109.1 | 64.6 |
| | | Percentage | 16.6 | 9.5 |
| | Other Time | Time (sec) | 3.1 | 7 |
| | | Percentage | 0.5 | 1.1 |
| | Total Interaction Time | Time (sec) | 672.3 * | 121.2 |
| Emotions | Joy | Time (sec) | 12.6 | 14.4 |
| | | Percentage | 1.7 | 1.8 |
| | Neutral | Time (sec) | 659.6 | 110.8 |
| | | Percentage | 98.3 | 1.8 |
| Gaze | Direct | Time (sec) | 278 | 142.6 |
| | | Percentage | 42.7 | 23 |
| | None | Time (sec) | 394.3 | 205 |
| | | Percentage | 57.3 | 23 |
| Facial Expression | Smile | Time (sec) | 8.1 | 10.5 |
| | | Percentage | 1.1 | 1.2 |
| | Laugh | Time (sec) | 3.5 | 4.3 |
| | | Percentage | 0.5 | 0.7 |
| | Frown | Time (sec) | 89.3 * | 87.3 |
| | | Percentage | 12.5 | 12.2 |
| | Raised Eyebrows | Time (sec) | 14.1 | 16.7 |
| | | Percentage | 2.3 | 2.7 |
| | Inexpressive | Time (sec) | 557.3 | 105.3 |
| | | Percentage | 83.6 | 12.4 |
| Body Gestures | Lifting Shoulders | Time (sec) | 1.3 | 3.5 |
| | | Percentage | 0.2 | 0.6 |
| | Nodding Head | Time (sec) | 8.8 | 16.6 |
| | | Percentage | 1.4 ^ | 2.5 |
| | Shaking Head | Time (sec) | 5.4 ° | 5.8 |
| | | Percentage | 0.8 | 0.9 |
| | Quiet | Time (sec) | 656.9 | 127.5 |
| | | Percentage | 97.6 | 3.2 |

| Domain | Acronym | Item | GQ1 | GQ2 |
|---|---|---|---|---|
| Anthropomorphism | ANT | Natural | 1.86 (1.46) | 2.71 (1.7) |
| | | Human-like | 1 (0) | 1 (0) |
| | | Consciousness | 2.14 (1.67) ° | 2.57 (1.51) °,§ |
| | | Lifelike | 1.28 (0.49) | 1.14 (0.37) |
| | | Moving Elegantly | 2 (1) | 2.57 (0.78) |
| Animacy | ANI | Alive | 1.71 (0.75) | 2 (1.53) |
| | | Lively | 1.86 (1.07) | 1.42 (0.53) |
| | | Organic | 1 (0) | 1 (0) |
| | | Interactive | 1.57 (0.79) | 2.14 (1.34) |
| | | Responsive | 1.86 (1.46) | 2.71 (1.89) |
| Likeability | LIK | Like | 4 (1) | 3.85 (1.21) |
| | | Friendly | 3.57 (1.4) * | 4.42 (0.78) |
| | | Kind | 4.14 (0.9) | 4.85 (0.37) |
| | | Pleasant | 3.71 (1.11) | 4.14 (0.69) |
| | | Nice | 3 (1) | 3.57 (1.39) |
| Perceived Intelligence | PEI | Competent | 3.43 (1.27) | 3.71 (1.7) |
| | | Knowledge | 3.85 (0.69) | 4.28 (1.11) |
| | | Responsible | 3 (1.63) | 3.71 (0.75) * |
| | | Intelligent | 3.42 (1.51) | 4 (1) |
| | | Sensible | 2.14 (0.89) | 3.42 (1.71) ° |
| Perceived Safety | PES | Relaxed | 3.71 (1.25) | 4.57 (0.78) |
| | | Calm | 3.71 (1.38) | 4 (1.73) |
| | | Surprise | 3.57 (1.51) * | 3.85 (1.67) * |

| Domain | Acronym | Item | US1 | US2 |
|---|---|---|---|---|
| Disposition about the services | ITU | | 10 (2) | 10.8 (4.9) |
| | | “I would use the robot in case of need (i.e., if I was sick)” (Q1) | 4 (0.7) | 4 (1.22) |
| | | “I would be willing to use the cognitive service if it could help the family/caregiver’s work” (Q2) | 2.8 (1.3) | 3.4 (1.81) * |
| | | “I think my independence would be improved by the use of the robot” (Q3) | 3.2 (1.48) * | 3.4 (2.19) ° |
| Anxiety | ANX | | 5 (1.22) | 2.2 (0.4) |
| | | “I am too embarrassed in using the robot, around the community or the family” (Q4) | 3 (1.58) | 1.2 (0.44) |
| | | “I am/was nervous doing the cognitive assessment with the robot” (Q5) | 2 (1) | 1 (0) |
| Enjoyment | ENJ | “I will enjoy/enjoyed using the robot for doing the cognitive assessment” (Q6) | 3.2 (1.48) | 3.8 (1.6) |
| Trust | TRUST | | 6.8 (1.64) | 7.8 (2.2) |
| | | “I would trust in robot’s ability to perform the cognitive assessment” (Q7) | 3.6 (1.14) | 4 (1.41) ° |
| | | “I think the robot would be too intrusive for my privacy” (Q8) | 3.2 (1.09) | 3.8 (1.64) |
| Perceived Ease of Use | PEU | “I found the robot easy to use to perform the cognitive assessment” (Q9) | 3.2 (0.45) | 4.4 (0.9) |
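The domain rows of the usability table above (ITU, ANX, TRUST) appear to equal the sum of their item means in both the US1 and US2 columns. The short Python sketch below checks that arithmetic using the values copied from the table. It is only a consistency check under the assumption that a domain score is the sum of its item scores; the table itself does not state how the domain scores were computed, and the names `items`, `reported`, and `domain_totals` are illustrative.

```python
# Item means as (US1, US2), copied from the usability table.
items = {
    "ITU": {"Q1": (4.0, 4.0), "Q2": (2.8, 3.4), "Q3": (3.2, 3.4)},
    "ANX": {"Q4": (3.0, 1.2), "Q5": (2.0, 1.0)},
    "TRUST": {"Q7": (3.6, 4.0), "Q8": (3.2, 3.8)},
}

# Domain-level means as (US1, US2), copied from the same table.
reported = {"ITU": (10.0, 10.8), "ANX": (5.0, 2.2), "TRUST": (6.8, 7.8)}


def domain_totals(domain_items):
    """Sum the item means of one domain, separately for US1 and US2."""
    us1 = round(sum(v[0] for v in domain_items.values()), 1)
    us2 = round(sum(v[1] for v in domain_items.values()), 1)
    return us1, us2


computed = {domain: domain_totals(qs) for domain, qs in items.items()}
for domain, totals in computed.items():
    status = "matches" if totals == reported[domain] else "differs from"
    print(f"{domain}: computed {totals} {status} reported {reported[domain]}")
```

Because the mean of a sum equals the sum of the means, this agreement holds for the averages only; the standard deviations in parentheses cannot be recovered from the item-level values.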

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sorrentino, A.; Mancioppi, G.; Coviello, L.; Cavallo, F.; Fiorini, L. Feasibility Study on the Role of Personality, Emotion, and Engagement in Socially Assistive Robotics: A Cognitive Assessment Scenario. Informatics 2021, 8, 23. https://doi.org/10.3390/informatics8020023