Frame-Based Elicitation of Mid-Air Gestures for a Smart Home Device Ecosystem

Abstract

1. Introduction

2. Related Work

2.1. On the Elicitation Study Method

2.2. Elicitation Studies for Control of Smart Home Devices

3. Study Design

3.1. Aim and Scope

3.2. Methodology and Procedure

3.3. Participants

3.4. Apparatus

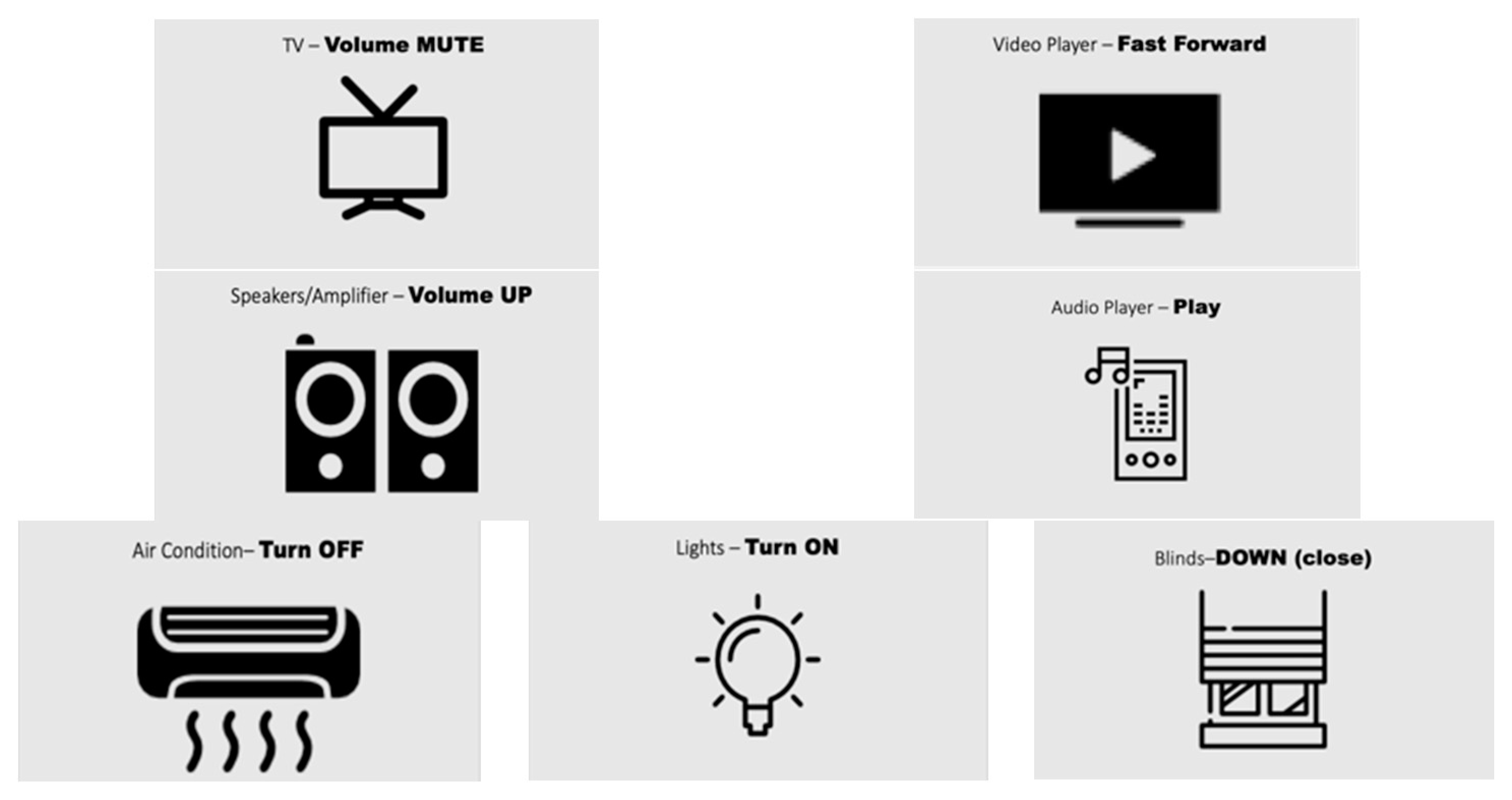

3.5. Referents

3.6. Data Analysis

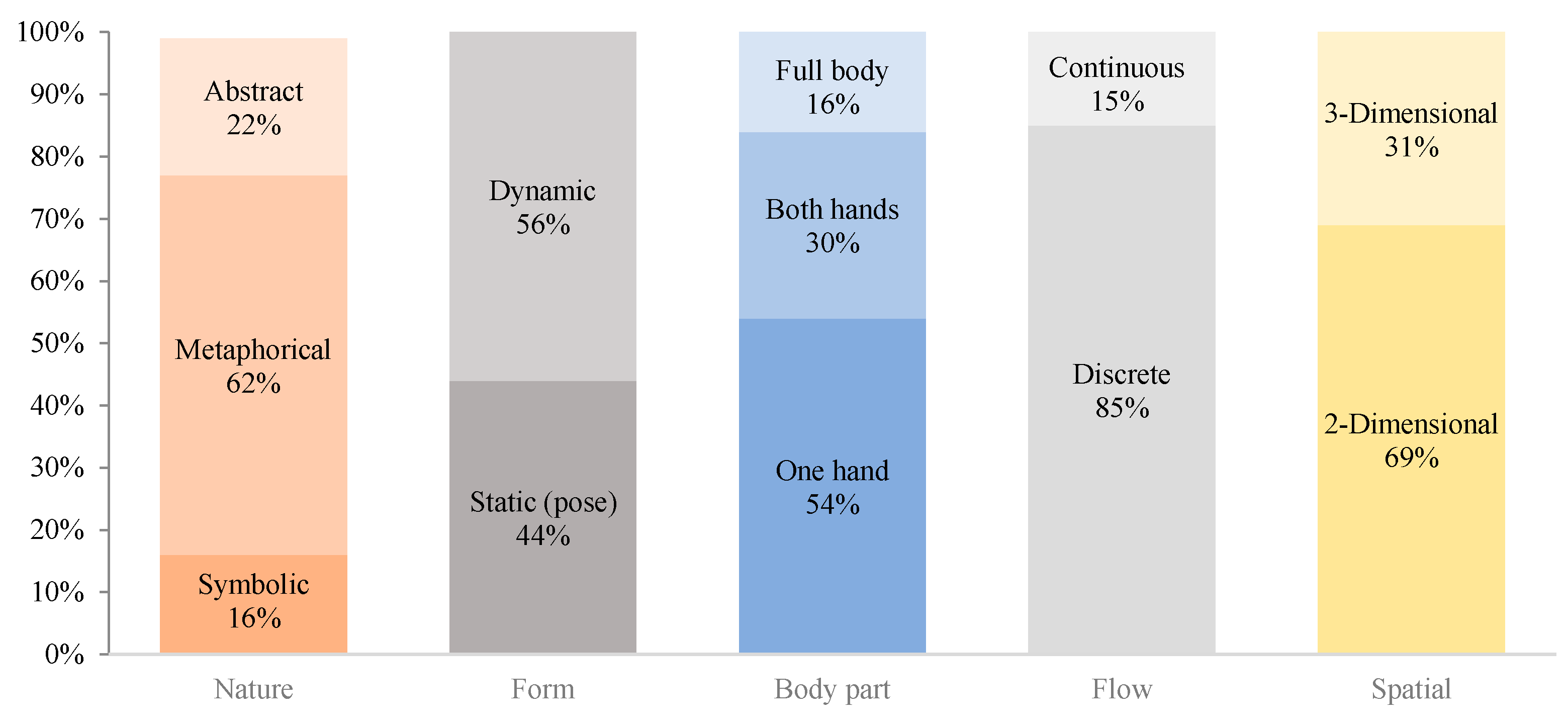

3.6.1. Gesture Classification—Taxonomy

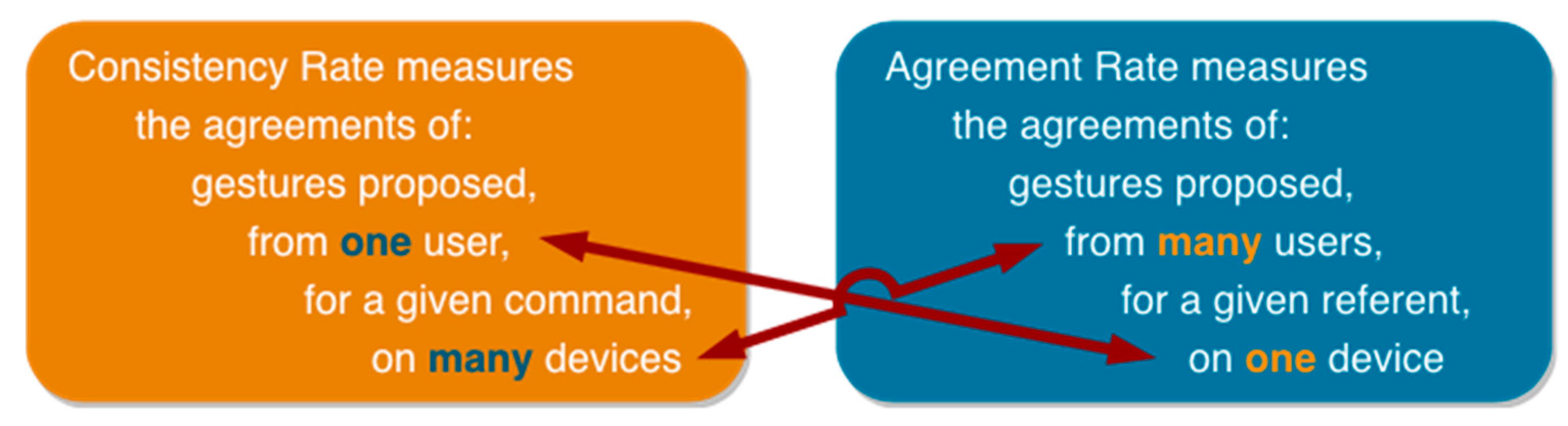

3.6.2. Gesture Consensus Level among Users (Agreement Rate)
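A common way to quantify consensus among users for a single referent is the agreement rate in the formulation of Vatavu and Wobbrock (2015): AR(r) = |P|/(|P|-1) · Σ_i (|P_i|/|P|)² - 1/(|P|-1), where P is the set of gesture proposals for referent r and the P_i are the groups of identical proposals. The Python sketch below assumes that this formulation is the one meant in this section; the function name and the sample proposals are hypothetical and are not taken from the study data.

```python
from collections import Counter


def agreement_rate(proposals):
    """Agreement rate AR(r) for one referent.

    proposals: list of gesture labels, one per participant
    (identical labels count as the same proposal).
    """
    n = len(proposals)
    if n < 2:
        raise ValueError("at least two proposals are needed")
    # Sum of squared proportions over the groups of identical proposals.
    squared = sum((size / n) ** 2 for size in Counter(proposals).values())
    # AR(r) = n/(n-1) * sum_i (|P_i|/n)^2 - 1/(n-1)
    return n / (n - 1) * squared - 1 / (n - 1)


# Hypothetical example: three of four participants propose the same gesture.
example = ["swipe up", "swipe up", "swipe up", "point"]
example_ar = agreement_rate(example)
```

In this formulation the rate equals the share of participant pairs that agree, so it is 1 when all proposals are identical and 0 when all differ.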

3.6.3. Gesture Consensus Level among Devices (Consistency Rate)

4. Results

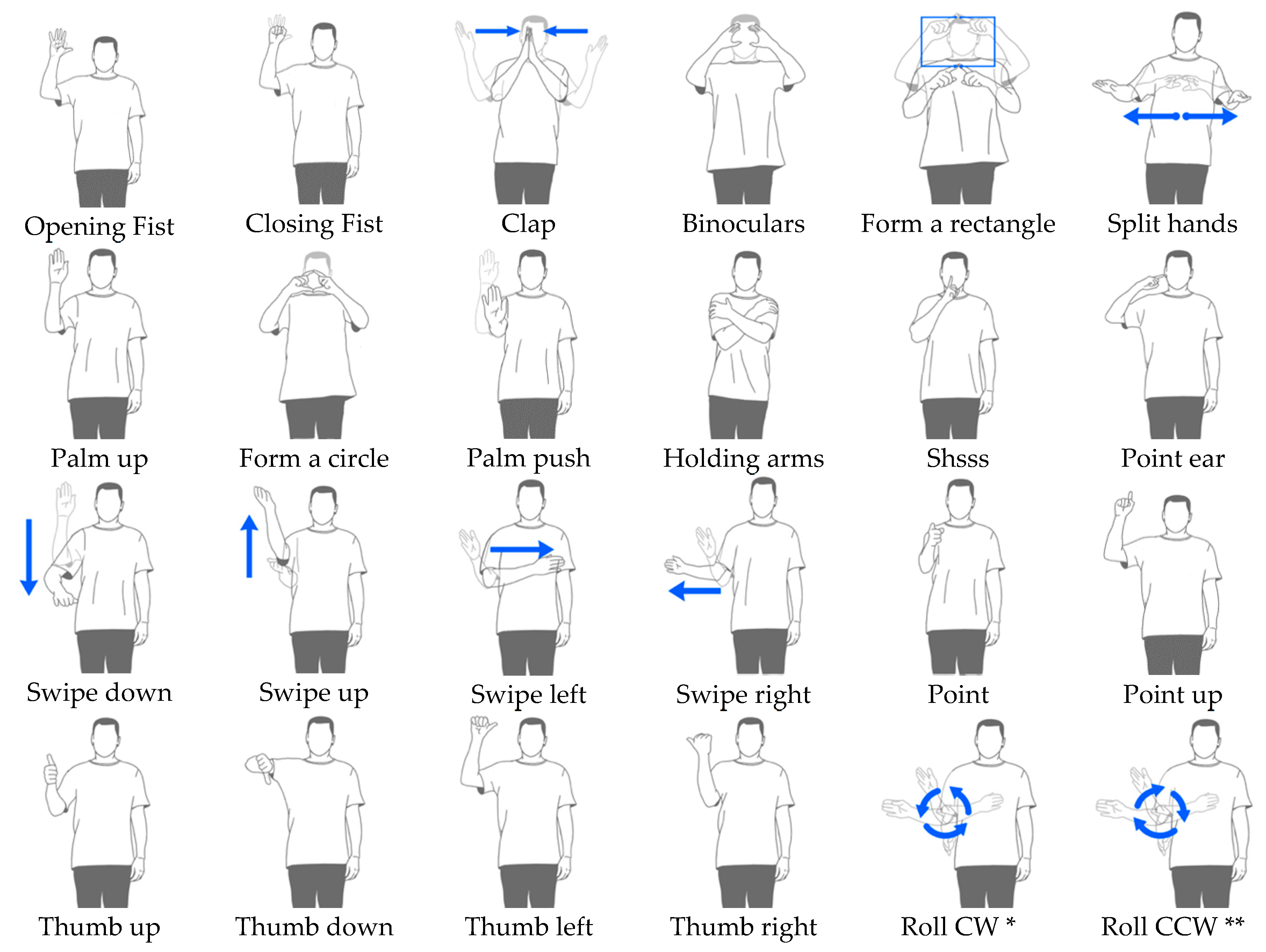

4.1. User-Defined Gesture Set

4.2. Gesture Taxonomy

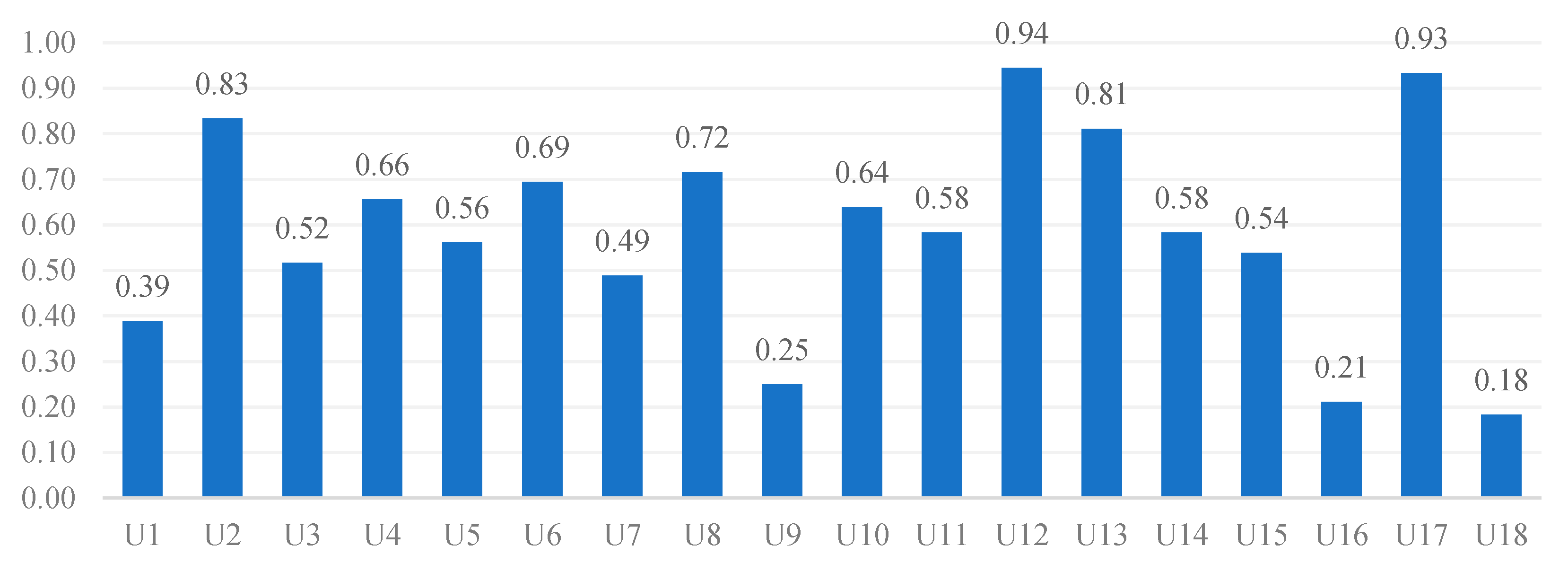

4.3. Users' Gesture Consistency among Devices

5. Discussion

5.1. Comparison to Other Gesture Sets

5.2. Increased Consistency Rate Raises the Requirement for Personalized Gesture Construction

5.3. Attending to the Application Context in User Elicitation

5.4. Attending to Referents’ Affordances

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Command | TV | Speakers | Video Player | Audio Player | Air-Conditioner | Lights | Blinds |
|---|---|---|---|---|---|---|---|
| Registration On | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Registration Off | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Turn On | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Turn Off | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Up | ✓ | ✓ | | | ✓ | ✓ | ✓ |
| Down | ✓ | ✓ | | | ✓ | ✓ | ✓ |
| Next | ✓ | | ✓ | ✓ | | | |
| Previous | ✓ | | ✓ | ✓ | | | |
| Mute | ✓ | ✓ | | | | | |
| Fast Forward | | | ✓ | ✓ | | | |
| Fast Rewind | | | ✓ | ✓ | | | |
| Play | | | ✓ | ✓ | | | |
| Pause | | | ✓ | ✓ | | | |
| Stop | | | ✓ | ✓ | | | ✓ |
| Referents | 9 | 7 | 11 | 11 | 6 | 6 | 5 |
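The referent totals in the last row of the table can be cross-checked by counting, for each device, the commands that apply to it. The following Python sketch does this under the assumption that the command-to-device assignment is the one implied by the per-device totals (for example, that Up and Down apply to TV, Speakers, Air-Conditioner, Lights, and Blinds). All variable and function names are hypothetical.

```python
DEVICES = ["TV", "Speakers", "Video Player", "Audio Player",
           "Air-Conditioner", "Lights", "Blinds"]

# Device groups (assumed assignment, consistent with the totals row).
POWERED = [d for d in DEVICES if d != "Blinds"]
LEVEL = ["TV", "Speakers", "Air-Conditioner", "Lights", "Blinds"]
BROWSE = ["TV", "Video Player", "Audio Player"]
PLAYERS = ["Video Player", "Audio Player"]

COMMAND_DEVICES = {
    "Registration On": DEVICES,
    "Registration Off": DEVICES,
    "Turn On": POWERED,
    "Turn Off": POWERED,
    "Up": LEVEL,
    "Down": LEVEL,
    "Next": BROWSE,
    "Previous": BROWSE,
    "Mute": ["TV", "Speakers"],
    "Fast Forward": PLAYERS,
    "Fast Rewind": PLAYERS,
    "Play": PLAYERS,
    "Pause": PLAYERS,
    "Stop": PLAYERS + ["Blinds"],
}


def referents_per_device(command_devices):
    """Count how many commands (referents) apply to each device."""
    counts = {device: 0 for device in DEVICES}
    for devices in command_devices.values():
        for device in devices:
            counts[device] += 1
    return counts


counts = referents_per_device(COMMAND_DEVICES)
total_referents = sum(counts.values())
```

Under this assignment the per-device counts match the Referents row, and their sum gives the total number of command-device pairs covered by the table.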

| Dimension | Category | Description |
|---|---|---|
| Nature | Symbolic | Gesture depicts a symbol. |
| | Metaphorical | Gesture indicates a metaphor. |
| | Abstract | Gesture-command mapping is arbitrary. |
| Form | Static | Gesture is a pose. |
| | Dynamic | Gesture involves body movement. |
| Body Part | One hand | Gesture is performed with one hand. |
| | Both Hands | Gesture is performed with both hands. |
| | Full body | Gesture involves movement or pose of upper body. |
| Flow | Discrete | Command is performed on gesture completion. |
| | Continuous | Command is performed during the gesture. |
| Spatial | 2-Dimensional | Gesture is performed on single axis. |
| | 3-Dimensional | Gesture is performed in 3D space. |

| Command | TV | Speakers | Video Player | Audio Player | Air-Conditioner | Lights | Blinds |
|---|---|---|---|---|---|---|---|
| Registration On | Form a rectangle * (0.11) | Form a circle * (0.03) | Binoculars * (0.09) | Point on ear (0.04) | Hands holding arms * (0.07) | Clap * (0.05) | Point (0.04) |
| Registration Off | Form a rectangle * (0.05) | Form a circle * (0.02) | Binoculars * (0.06) | Point on ear (0.02) | Hands holding arms * (0.06) | Clap * (0.03) | Point (0.01) |
| Turn On | Point (0.04) | Opening fist (0.05) | Opening fist (0.01) | Palm push (0.05) | Opening fist (0.07) | Point up (0.05) | |
| Turn Off | Closing fist (0.05) | Closing fist (0.08) | Closing fist (0.04) | Closing fist (0.03) | Closing fist (0.07) | Closing fist (0.03) | |
| Up | Swipe up (0.52) | Swipe up (0.46) | | | Swipe up (0.28) | Swipe up (0.28) | Swipe up (0.33) |
| Down | Swipe down (0.52) | Swipe down (0.46) | | | Swipe down (0.33) | Swipe down (0.32) | Swipe down (0.33) |
| Next | Swipe left/right (0.34) | | Point right (0.14) | Swipe left/right (0.16) | | | |
| Previous | Swipe left/right (0.34) | | Point left (0.14) | Swipe left/right (0.16) | | | |
| Mute | Ssshhh (0.12) | Ssshhh (0.10) | | | | | |
| Fast Forward | | | Swipe left/right (0.17) | Move hand clockwise (0.09) | | | |
| Fast Rewind | | | Swipe left/right (0.17) | Move hand counterclockwise (0.09) | | | |
| Play | | | Palm up (0.03) | Point (0.08) | | | |
| Pause | | | Palm stop (0.09) | Palm stop (0.15) | | | |
| Stop | | | Palm stop * (0.07) | Hands split * (0.14) | | | Palm stop (0.52) |
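To see at a glance how often the same gesture recurs across devices for a given command, the gestures listed in the table can be tallied per command. The Python sketch below is only an illustrative tally over a subset of rows; it is not the consistency rate of Section 3.6.3, and the names `TOP_GESTURES` and `dominant_gesture` are hypothetical. Rows containing gestures marked with an asterisk are left out, since the meaning of the marker is not stated here.

```python
from collections import Counter

# Gestures listed per command in the table, in column order (subset of rows).
TOP_GESTURES = {
    "Turn On": ["Point", "Opening fist", "Opening fist",
                "Palm push", "Opening fist", "Point up"],
    "Turn Off": ["Closing fist"] * 6,
    "Up": ["Swipe up"] * 5,
    "Down": ["Swipe down"] * 5,
    "Mute": ["Ssshhh"] * 2,
    "Pause": ["Palm stop"] * 2,
}


def dominant_gesture(gestures):
    """Return the most frequent gesture and the share of devices using it."""
    gesture, count = Counter(gestures).most_common(1)[0]
    return gesture, count / len(gestures)


summary = {command: dominant_gesture(gestures)
           for command, gestures in TOP_GESTURES.items()}
```

For the rows included, Turn Off, Up, Down, Mute, and Pause each map to a single gesture on every applicable device, whereas Turn On is split among several gestures.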

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Vogiatzidakis, P.; Koutsabasis, P. Frame-Based Elicitation of Mid-Air Gestures for a Smart Home Device Ecosystem. Informatics 2019, 6, 23. https://doi.org/10.3390/informatics6020023
