Decoding Trust in Artificial Intelligence: A Systematic Review of Quantitative Measures and Related Variables

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Selection Criteria

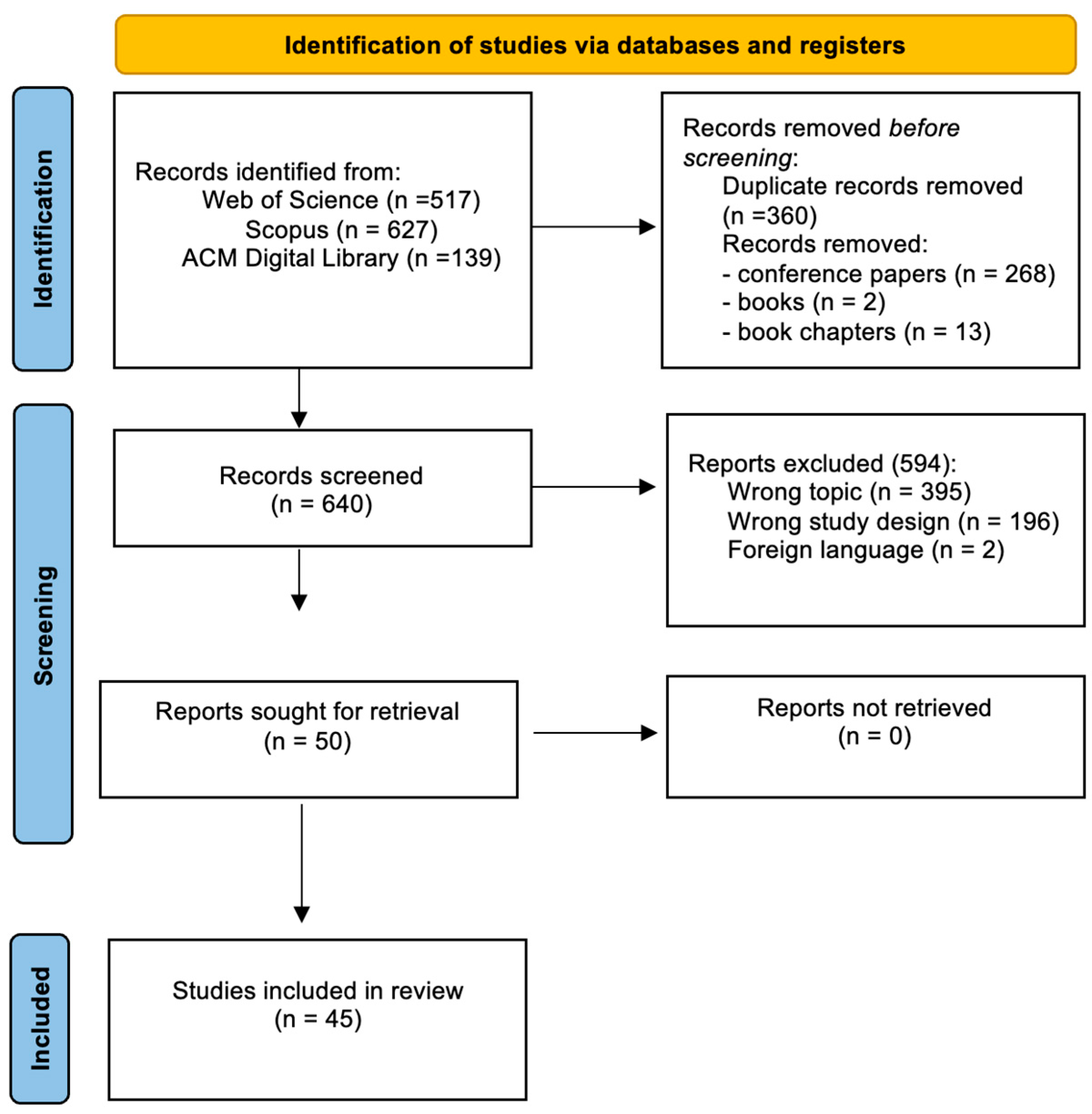

2.2. Data Sources and Search Strategy

3. Results

3.1. Defining and Measuring Trust

3.1.1. Definitions of Trust

Trust Questionnaires: From Factors to Item Description

From Definition to Measurement

3.2. Types of AI Systems

3.3. Experimental Stimuli

3.4. Main Elements Related to Trust in AI

3.4.1. Cognitive Trust: Characteristics of the Decisional Process of AI

3.4.2. Affective Trust: Characteristics of AI’s Behaviour

4. Discussion

4.1. Definitions of Trust

4.2. Measuring Trust: Affective and Cognitive Components

4.3. Types of AI Systems

4.4. Experimental Stimuli

4.5. Cognitive and Affective Trust Factors

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Choung, H.; David, P.; Ross, A. Trust in AI and Its Role in the Acceptance of AI Technologies. Int. J. Hum.-Comput. Interact. 2022, 39, 1727–1739. [Google Scholar] [CrossRef]

- Gefen, D.; Karahanna, E.; Straub, D.W. Trust and TAM in Online Shopping: An Integrated Model. MIS Q. 2003, 27, 51. [Google Scholar] [CrossRef]

- Nikou, S.A.; Economides, A.A. Mobile-based assessment: Investigating the factors that influence behavioral intention to use. Comput. Educ. 2017, 109, 56–73. [Google Scholar] [CrossRef]

- Shin, D. The effects of explainability and causability on perception, trust, and acceptance: Implications for explainable AI. Int. J. Hum.-Comput. Stud. 2021, 146, 102551. [Google Scholar] [CrossRef]

- Shin, J.; Bulut, O.; Gierl, M.J. Development Practices of Trusted AI Systems among Canadian Data Scientists. Int. Rev. Inf. Ethics 2020, 28, 1–10. [Google Scholar] [CrossRef]

- Bach, T.A.; Khan, A.; Hallock, H.; Beltrão, G.; Sousa, S. A Systematic Literature Review of User Trust in AI-Enabled Systems: An HCI Perspective. Int. J. Hum.-Comput. Interact. 2024, 40, 1251–1266. [Google Scholar] [CrossRef]

- Lewis, J.D.; Weigert, A. Trust as a Social Reality. Soc. Forces 1985, 63, 967. [Google Scholar] [CrossRef]

- Colwell, S.R.; Hogarth-Scott, S. The effect of cognitive trust on hostage relationships. J. Serv. Mark. 2004, 18, 384–394. [Google Scholar] [CrossRef]

- McAllister, D.J. Affect-and cognition-based trust as foundations for interpersonal cooperation in organizations. Acad. Manag. J. 1995, 38, 24–59. [Google Scholar] [CrossRef]

- Rempel, J.K.; Holmes, J.G.; Zanna, M.P. Trust in close relationships. J. Personal. Soc. Psychol. 1985, 49, 95–112. [Google Scholar] [CrossRef]

- Mcknight, D.H.; Carter, M.; Thatcher, J.B.; Clay, P.F. Trust in a specific technology: An investigation of its components and measures. ACM Trans. Manage. Inf. Syst. 2011, 2, 1–25. [Google Scholar] [CrossRef]

- Aurier, P.; de Lanauze, G.S. Impacts of perceived brand relationship orientation on attitudinal loyalty: An application to strong brands in the packaged goods sector. Eur. J. Mark. 2012, 46, 1602–1627. [Google Scholar] [CrossRef]

- Johnson, D.; Grayson, K. Cognitive and affective trust in service relationships. J. Bus. Res. 2005, 58, 500–507. [Google Scholar] [CrossRef]

- Komiak, S.Y.; Benbasat, I. The effects of personalization and familiarity on trust and adoption of recommendation agents. MIS Q. 2006, 30, 941–949. [Google Scholar] [CrossRef]

- Moorman, C.; Zaltman, G.; Deshpande, R. Relationship between Providers and Users of Market Research: They Dynamics of Trust within & Between Organizations. J. Mark. Res. 1992, 29, 314–328. [Google Scholar] [CrossRef]

- Luhmann, N. Trust and Power; John, A., Ed.; Wiley and Sons: Chichester, UK, 1979. [Google Scholar]

- Morrow, J.L., Jr.; Hansen, M.H.; Pearson, A.W. The cognitive and affective antecedents of general trust within cooperative organizations. J. Manag. Issues 2004, 16, 48–64. [Google Scholar]

- Chen, C.C.; Chen, X.-P.; Meindl, J.R. How Can Cooperation Be Fostered? The Cultural Effects of Individualism-Collectivism. Acad. Manag. Rev. 1998, 23, 285. [Google Scholar] [CrossRef]

- Kim, D. Cognition-Based Versus Affect-Based Trust Determinants in E-Commerce: Cross-Cultural Comparison Study. In Proceedings of the International Conference on Information Systems, ICIS 2005, Las Vegas, NV, USA, 11–14 December 2005. [Google Scholar]

- Dabholkar, P.A.; van Dolen, W.M.; de Ruyter, K. A dual-sequence framework for B2C relationship formation: Moderating effects of employee communication style in online group chat. Psychol. Mark. 2009, 26, 145–174. [Google Scholar] [CrossRef]

- Ha, B.; Park, Y.; Cho, S. Suppliers’ affective trust and trust in competency in buyers: Its effect on collaboration and logistics efficiency. Int. J. Oper. Prod. Manag. 2011, 31, 56–77. [Google Scholar] [CrossRef]

- Gursoy, D.; Chi, O.H.; Lu, L.; Nunkoo, R. Consumers acceptance of artificially intelligent (AI) device use in service delivery. Int. J. Inf. Manag. 2019, 49, 157–169. [Google Scholar] [CrossRef]

- Madsen, M.; Gregor, S. Measuring Human-Computer Trust. In Proceedings of the 11th Australasian Conference on Information Systems, Brisbane, Australia, 6–8 December 2000; Volume 53, p. 6. [Google Scholar]

- Pennings, J.; Woiceshyn, J. A Typology of Organizational Control and Its Metaphors: Research in the Sociology of Organizations; JAI Press: Stamford, CT, USA, 1987. [Google Scholar]

- Chattaraman, V.; Kwon, W.-S.; Gilbert, J.E.; Ross, K. Should AI-Based, conversational digital assistants employ social- or task-oriented interaction style? A task-competency and reciprocity perspective for older adults. Comput. Hum. Behav. 2019, 90, 315–330. [Google Scholar] [CrossRef]

- Louie, W.-Y.G.; McColl, D.; Nejat, G. Acceptance and Attitudes Toward a Human-like Socially Assistive Robot by Older Adults. Assist. Technol. 2014, 26, 140–150. [Google Scholar] [CrossRef] [PubMed]

- Marchetti, A.; Manzi, F.; Itakura, S.; Massaro, D. Theory of Mind and Humanoid Robots From a Lifespan Perspective. Z. Für Psychol. 2018, 226, 98–109. [Google Scholar] [CrossRef]

- Nass, C.I.; Brave, S. Wired for speech: How voice activates and advances the human-computer relationship. In Computer-Human Interaction; MIT Press: Cambridge, MA, USA, 2005. [Google Scholar]

- Golossenko, A.; Pillai, K.G.; Aroean, L. Seeing brands as humans: Development and validation of a brand anthropomorphism scale. Int. J. Res. Mark. 2020, 37, 737–755. [Google Scholar] [CrossRef]

- Manzi, F.; Peretti, G.; Di Dio, C.; Cangelosi, A.; Itakura, S.; Kanda, T.; Ishiguro, H.; Massaro, D.; Marchetti, A. A Robot Is Not Worth Another: Exploring Children’s Mental State Attribution to Different Humanoid Robots. Front. Psychol. 2020, 11, 2011. [Google Scholar] [CrossRef]

- Gomez-Uribe, C.A.; Hunt, N. The Netflix Recommender System: Algorithms, Business Value, and Innovation. ACM Trans. Manag. Inf. Syst. 2016, 6, 1–19. [Google Scholar] [CrossRef]

- Hengstler, M.; Enkel, E.; Duelli, S. Applied artificial intelligence and trust—The case of autonomous vehicles and medical assistance devices. Technol. Forecast. Soc. Change 2016, 105, 105–120. [Google Scholar] [CrossRef]

- Tussyadiah, I.P.; Zach, F.J.; Wang, J. Attitudes Toward Autonomous on Demand Mobility System: The Case of Self-Driving Taxi. In Information and Communication Technologies in Tourism; Schegg, R., Stangl, B., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 755–766. [Google Scholar] [CrossRef]

- Glikson, E.; Woolley, A.W. Human Trust in Artificial Intelligence: Review of Empirical Research. ANNALS 2020, 14, 627–660. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, 71. [Google Scholar] [CrossRef]

- Asan, O.; Bayrak, A.E.; Choudhury, A. Artificial Intelligence and Human Trust in Healthcare: Focus on Clinicians. J. Med. Internet Res. 2020, 22, e15154. [Google Scholar] [CrossRef]

- Keysermann, M.U.; Cramer, H.; Aylett, R.; Zoll, C.; Enz, S.; Vargas, P.A. Can I Trust You? Sharing Information with Artificial Companions. In Proceedings of the 11th International Conference on Autonomous Agents and Multiagent Systems—AAMAS’12, Valencia, Spain, 4–8 June 2012; International Foundation for Autonomous Agents and Multiagent Systems: Richland, SC, USA, 2012; Volume 3, pp. 1197–1198. [Google Scholar]

- Churchill, G.A. A Paradigm for Developing Better Measures of Marketing Constructs. J. Mark. Res. 1979, 16, 64. [Google Scholar] [CrossRef]

- Peter, J.P. Reliability: A Review of Psychometric Basics and Recent Marketing Practices. J. Mark. Res. 1979, 16, 6. [Google Scholar] [CrossRef]

- Churchill, G.A.; Peter, J.P. Research Design Effects on the Reliability of Rating Scales: A Meta-Analysis. J. Mark. Res. 1984, 21, 360–375. [Google Scholar] [CrossRef]

- Kwon, H.; Trail, G. The Feasibility of Single-Item Measures in Sport Loyalty Research. Sport Manag. Rev. 2005, 8, 69–89. [Google Scholar] [CrossRef]

- Allen, J.F.; Byron, D.K.; Dzikovska, M.; Ferguson, G.; Galescu, L.; Stent, A. Toward conversational human-computer interaction. AI Mag. 2001, 22, 27. [Google Scholar]

- Raees, M.; Meijerink, I.; Lykourentzou, I.; Khan, V.-J.; Papangelis, K. From Explainable to Interactive AI: A Literature Review on Current Trends in Human-AI Interaction. arXiv 2024, arXiv:2405.15051. [Google Scholar] [CrossRef]

- Chu, Z.; Wang, Z.; Zhang, W. Fairness in Large Language Models: A Taxonomic Survey. SIGKDD Explor. Newsl. 2024, 26, 34–48. [Google Scholar] [CrossRef]

- Zhang, W. AI fairness in practice: Paradigm, challenges, and prospects. AI Mag. 2024, 45, 386–395. [Google Scholar] [CrossRef]

- Xiang, H.; Zhou, J.; Xie, B. AI tools for debunking online spam reviews? Trust of younger and older adults in AI detection criteria. Behav. Inf. Technol. 2022, 42, 478–497. [Google Scholar] [CrossRef]

- Kim, T.; Song, H. Communicating the Limitations of AI: The Effect of Message Framing and Ownership on Trust in Artificial Intelligence. Int. J. Hum.-Comput. Interact. 2022, 39, 790–800. [Google Scholar] [CrossRef]

- Zarifis, A.; Kawalek, P.; Azadegan, A. Evaluating If Trust and Personal Information Privacy Concerns Are Barriers to Using Health Insurance That Explicitly Utilizes AI. J. Internet Commer. 2021, 20, 66–83. [Google Scholar] [CrossRef]

- Cheng, X.; Zhang, X.; Cohen, J.; Mou, J. Human vs. AI: Understanding the impact of anthropomorphism on consumer response to chatbots from the perspective of trust and relationship norms. Inf. Process. Manag. 2022, 59, 102940. [Google Scholar] [CrossRef]

- Ingrams, A.; Kaufmann, W.; Jacobs, D. In AI we trust? Citizen perceptions of AI in government decision making. Policy Internet 2022, 14, 390–409. [Google Scholar] [CrossRef]

- Liu, B. In AI We Trust? Effects of Agency Locus and Transparency on Uncertainty Reduction in Human–AI Interaction. J. Comput.-Mediat. Commun. 2021, 26, 384–402. [Google Scholar] [CrossRef]

- Lee, O.-K.D.; Ayyagari, R.; Nasirian, F.; Ahmadian, M. Role of interaction quality and trust in use of AI-based voice-assistant systems. J. Syst. Inf. Technol. 2021, 23, 154–170. [Google Scholar] [CrossRef]

- Kandoth, S.; Shekhar, S.K. Social influence and intention to use AI: The role of personal innovativeness and perceived trust using the parallel mediation model. Forum Sci. Oeconomia 2022, 10, 131–150. [Google Scholar] [CrossRef]

- Schelble, B.G.; Lopez, J.; Textor, C.; Zhang, R.; McNeese, N.J.; Pak, R.; Freeman, G. Towards Ethical AI: Empirically Investigating Dimensions of AI Ethics, Trust Repair, and Performance in Human-AI Teaming. Hum. Factors 2022, 66, 1037–1055. [Google Scholar] [CrossRef]

- Choung, H.; David, P.; Ross, A. Trust and ethics in AI. AI Soc. 2022, 38, 733–745. [Google Scholar] [CrossRef]

- Molina, M.D.; Sundar, S.S. When AI moderates online content: Effects of human collaboration and interactive transparency on user trust. J. Comput.-Mediat. Commun. 2022, 27, zmac010. [Google Scholar] [CrossRef]

- Chi, N.T.K.; Vu, N.H. Investigating the customer trust in artificial intelligence: The role of anthropomorphism, empathy response, and interaction. CAAI Trans. Intell. Technol. 2022, 8, 260–273. [Google Scholar] [CrossRef]

- Pitardi, V.; Marriott, H.R. Alexa, she’s not human but … Unveiling the drivers of consumers’ trust in voice-based artificial intelligence. Psychol. Mark. 2021, 38, 626–642. [Google Scholar] [CrossRef]

- Sullivan, Y.; de Bourmont, M.; Dunaway, M. Appraisals of harms and injustice trigger an eerie feeling that decreases trust in artificial intelligence systems. Ann. Oper. Res. 2022, 308, 525–548. [Google Scholar] [CrossRef]

- Yu, L.; Li, Y. Artificial Intelligence Decision-Making Transparency and Employees’ Trust: The Parallel Multiple Mediating Effect of Effectiveness and Discomfort. Behav. Sci. 2022, 12, 127. [Google Scholar] [CrossRef]

- Yokoi, R.; Eguchi, Y.; Fujita, T.; Nakayachi, K. Artificial Intelligence Is Trusted Less than a Doctor in Medical Treatment Decisions: Influence of Perceived Care and Value Similarity. Int. J. Hum.-Comput. Interact. 2021, 37, 981–990. [Google Scholar] [CrossRef]

- Hasan, R.; Shams, R.; Rahman, M. Consumer trust and perceived risk for voice-controlled artificial intelligence: The case of Siri. J. Bus. Res. 2021, 131, 591–597. [Google Scholar] [CrossRef]

- Łapińska, J.; Escher, I.; Górka, J.; Sudolska, A.; Brzustewicz, P. Employees’ trust in artificial intelligence in companies: The case of energy and chemical industries in Poland. Energies 2021, 14, 1942. [Google Scholar] [CrossRef]

- Huo, W.; Zheng, G.; Yan, J.; Sun, L.; Han, L. Interacting with medical artificial intelligence: Integrating self-responsibility attribution, human–computer trust, and personality. Comput. Hum. Behav. 2022, 132, 107253. [Google Scholar] [CrossRef]

- Cheng, M.; Li, X.; Xu, J. Promoting Healthcare Workers’ Adoption Intention of Artificial-Intelligence-Assisted Diagnosis and Treatment: The Chain Mediation of Social Influence and Human–Computer Trust. IJERPH 2022, 19, 13311. [Google Scholar] [CrossRef]

- Gutzwiller, R.S.; Reeder, J. Dancing With Algorithms: Interaction Creates Greater Preference and Trust in Machine-Learned Behavior. Hum. Factors 2021, 63, 854–867. [Google Scholar] [CrossRef]

- Goel, K.; Sindhgatta, R.; Kalra, S.; Goel, R.; Mutreja, P. The effect of machine learning explanations on user trust for automated diagnosis of COVID-19. Comput. Biol. Med. 2022, 146, 105587. [Google Scholar] [CrossRef] [PubMed]

- Nakashima, H.H.; Mantovani, D.; Junior, C.M. Users’ trust in black-box machine learning algorithms. Rev. Gest. 2022, 31, 237–250. [Google Scholar] [CrossRef]

- Choi, S.; Jang, Y.; Kim, H. Influence of Pedagogical Beliefs and Perceived Trust on Teachers’ Acceptance of Educational Artificial Intelligence Tools. Int. J. Hum.-Comput. Interact. 2022, 39, 910–922. [Google Scholar] [CrossRef]

- Zhang, S.; Meng, Z.; Chen, B.; Yang, X.; Zhao, X. Motivation, Social Emotion, and the Acceptance of Artificial Intelligence Virtual Assistants-Trust-Based Mediating Effects. Front. Psychol. 2021, 12, 728495. [Google Scholar] [CrossRef]

- De Brito Duarte, R.; Correia, F.; Arriaga, P.; Paiva, A. AI Trust: Can Explainable AI Enhance Warranted Trust? Hum. Behav. Emerg. Technol. 2023, 2023, 4637678. [Google Scholar] [CrossRef]

- Jang, C. Coping with vulnerability: The effect of trust in AI and privacy-protective behaviour on the use of AI-based services. Behav. Inf. Technol. 2024, 43, 2388–2400. [Google Scholar] [CrossRef]

- Jiang, C.; Guan, X.; Zhu, J.; Wang, Z.; Xie, F.; Wang, W. The future of artificial intelligence and digital development: A study of trust in social robot capabilities. J. Exp. Theor. Artif. Intell. 2023, 37, 783–795. [Google Scholar] [CrossRef]

- Malhotra, G.; Ramalingam, M. Perceived anthropomorphism and purchase intention using artificial intelligence technology: Examining the moderated effect of trust. JEIM 2023, 38, 401–423. [Google Scholar] [CrossRef]

- Baek, T.H.; Kim, M. Is ChatGPT scary good? How user motivations affect creepiness and trust in generative artificial intelligence. Telemat. Inform. 2023, 83, 102030. [Google Scholar] [CrossRef]

- Langer, M.; König, C.J.; Back, C.; Hemsing, V. Trust in Artificial Intelligence: Comparing Trust Processes Between Human and Automated Trustees in Light of Unfair Bias. J. Bus. Psychol. 2023, 38, 493–508. [Google Scholar] [CrossRef]

- Shamim, S.; Yang, Y.; Zia, N.U.; Khan, Z.; Shariq, S.M. Mechanisms of cognitive trust development in artificial intelligence among front line employees: An empirical examination from a developing economy. J. Bus. Res. 2023, 167, 114168. [Google Scholar] [CrossRef]

- Neyazi, T.A.; Ee, T.K.; Nadaf, A.; Schroeder, R. The effect of information seeking behaviour on trust in AI in Asia: The moderating role of misinformation concern. New Media Soc. 2023, 27, 2414–2433. [Google Scholar] [CrossRef]

- Alam, S.S.; Masukujjaman, M.; Makhbul, Z.K.M.; Ali, M.H.; Ahmad, I.; Al Mamun, A. Experience, Trust, eWOM Engagement and Usage Intention of AI Enabled Services in Hospitality and Tourism Industry: Moderating Mediating Analysis. J. Qual. Assur. Hosp. Tour. 2023, 25, 1635–1663. [Google Scholar] [CrossRef]

- Schreibelmayr, S.; Moradbakhti, L.; Mara, M. First impressions of a financial AI assistant: Differences between high trust and low trust users. Front. Artif. Intell. 2023, 6, 1241290. [Google Scholar] [CrossRef]

- Hou, K.; Hou, T.; Cai, L. Exploring Trust in Human–AI Collaboration in the Context of Multiplayer Online Games. Systems 2023, 11, 217. [Google Scholar] [CrossRef]

- Li, J.; Wu, L.; Qi, J.; Zhang, Y.; Wu, Z.; Hu, S. Determinants Affecting Consumer Trust in Communication With AI Chatbots: The Moderating Effect of Privacy Concerns. J. Organ. End. User Comput. 2023, 35, 1–24. [Google Scholar] [CrossRef]

- Selten, F.; Robeer, M.; Grimmelikhuijsen, S. ‘Just like I thought’: Street-level bureaucrats trust AI recommendations if they confirm their professional judgment. Public Adm. Rev. 2023, 83, 263–278. [Google Scholar] [CrossRef]

- Xiong, Y.; Shi, Y.; Pu, Q.; Liu, N. More trust or more risk? User acceptance of artificial intelligence virtual assistant. Hum. FTRS Erg. MFG SVC 2024, 34, 190–205. [Google Scholar] [CrossRef]

- Song, J.; Lin, H. Exploring the effect of artificial intelligence intellect on consumer decision delegation: The role of trust, task objectivity, and anthropomorphism. J. Consum. Behav. 2024, 23, 727–747. [Google Scholar] [CrossRef]

- Nguyen, V.T.; Phong, L.T.; Chi, N.T.K. The impact of AI chatbots on customer trust: An empirical investigation in the hotel industry. CBTH 2023, 18, 293–305. [Google Scholar] [CrossRef]

- Shin, J.; Chan-Olmsted, S. User Perceptions and Trust of Explainable Machine Learning Fake News Detectors. Int. J. Commun. 2023, 17, 518–540. [Google Scholar]

- Göbel, K.; Niessen, C.; Seufert, S.; Schmid, U. Explanatory machine learning for justified trust in human-AI collaboration: Experiments on file deletion recommendations. Front. Artif. Intell. 2022, 5, 919534. [Google Scholar] [CrossRef]

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An Integrative Model of Organizational Trust. Acad. Manag. Rev. 1995, 20, 709. [Google Scholar] [CrossRef]

- McKnight, D.H.; Choudhury, V.; Kacmar, C. Developing and Validating Trust Measures for e-Commerce: An Integrative Typology. Inf. Syst. Res. 2002, 13, 334–359. [Google Scholar] [CrossRef]

- Wang, W.; Benbasat, I. Attributions of Trust in Decision Support Technologies: A Study of Recommendation Agents for E-Commerce. J. Manag. Inf. Syst. 2008, 24, 249–273. [Google Scholar] [CrossRef]

- Oliveira, T.; Alhinho, M.; Rita, P.; Dhillon, G. Modelling and testing consumer trust dimensions in e-commerce. Comput. Hum. Behav. 2017, 71, 153–164. [Google Scholar] [CrossRef]

- Ozdemir, S.; Zhang, S.; Gupta, S.; Bebek, G. The effects of trust and peer influence on corporate brand—Consumer relationships and consumer loyalty. J. Bus. Res. 2020, 117, 791–805. [Google Scholar] [CrossRef]

- Grazioli, S.; Jarvenpaa, S.L. Perils of Internet fraud: An empirical investigation of deception and trust with experienced Internet consumers. IEEE Trans. Syst. Man. Cybern. A 2000, 30, 395–410. [Google Scholar] [CrossRef]

- Lewicki, R.J.; Tomlinson, E.C.; Gillespie, N. Models of Interpersonal Trust Development: Theoretical Approaches, Empirical Evidence, and Future Directions. J. Manag. 2006, 32, 991–1022. [Google Scholar] [CrossRef]

- Madhavan, P.; Wiegmann, D.A. Effects of Information Source, Pedigree, and Reliability on Operator Interaction With Decision Support Systems. Hum. Factors 2007, 49, 773–785. [Google Scholar] [CrossRef]

- Moorman, C.; Deshpandé, R.; Zaltman, G. Factors Affecting Trust in Market Research Relationships. J. Mark. 1993, 57, 81–101. [Google Scholar] [CrossRef]

- Lee, M.K. Understanding perception of algorithmic decisions: Fairness, trust, and emotion in response to algorithmic management. Big Data Soc. 2018, 5, 205395171875668. [Google Scholar] [CrossRef]

- Höddinghaus, M.; Sondern, D.; Hertel, G. The automation of leadership functions: Would people trust decision algorithms? Comput. Hum. Behav. 2021, 116, 106635. [Google Scholar] [CrossRef]

- Shin, D.; Park, Y.J. Role of fairness, accountability, and transparency in algorithmic affordance. Comput. Hum. Behav. 2019, 98, 277–284. [Google Scholar] [CrossRef]

- Wirtz, J.; Patterson, P.G.; Kunz, W.H.; Gruber, T.; Lu, V.N.; Paluch, S.; Martins, A. Brave new world: Service robots in the frontline. JOSM 2018, 29, 907–931. [Google Scholar] [CrossRef]

- Komiak, S.X.; Benbasat, I. Understanding Customer Trust in Agent-Mediated Electronic Commerce, Web-Mediated Electronic Commerce, and Traditional Commerce. Inf. Technol. Manag. 2004, 5, 181–207. [Google Scholar] [CrossRef]

- Meeßen, S.M.; Thielsch, M.T.; Hertel, G. Trust in Management Information Systems (MIS): A Theoretical Model. Z. Arb.-Organ. AO 2020, 64, 6–16. [Google Scholar] [CrossRef]

- Lee, J.D.; See, K.A. Trust in Automation: Designing for Appropriate Reliance. Hum. Factors J. Hum. Factors Ergon. Soc. 2004, 46, 50–80. [Google Scholar] [CrossRef] [PubMed]

- Rousseau, D.M.; Sitkin, S.B.; Burt, R.S.; Camerer, C. Not So Different After All: A Cross-Discipline View Of Trust. AMR 1998, 23, 393–404. [Google Scholar] [CrossRef]

- Rotter, J.B. Interpersonal trust, trustworthiness, and gullibility. Am. Psychol. 1980, 35, 1–7. [Google Scholar] [CrossRef]

- Nass, C.; Moon, Y. Machines and Mindlessness: Social Responses to Computers. J. Soc. Isssues 2000, 56, 81–103. [Google Scholar] [CrossRef]

- Han, S.; Yang, H. Understanding adoption of intelligent personal assistants: A parasocial relationship perspective. IMDS 2018, 118, 618–636. [Google Scholar] [CrossRef]

- Santos, J.; Rodrigues, J.J.P.C.; Silva, B.M.C.; Casal, J.; Saleem, K.; Denisov, V. An IoT-based mobile gateway for intelligent personal assistants on mobile health environments. J. Netw. Comput. Appl. 2016, 71, 194–204. [Google Scholar] [CrossRef]

- Hoy, M.B. Alexa, Siri, Cortana, and More: An Introduction to Voice Assistants. Med. Ref. Serv. Q. 2018, 37, 81–88. [Google Scholar] [CrossRef] [PubMed]

- Pu, P.; Chen, L. Trust building with explanation interfaces. In Proceedings of the 11th International Conference on Intelligent User Interfaces, Sydney, Australia, 29 January–1 February 2006; pp. 93–100. [Google Scholar] [CrossRef]

- Bunt, A.; McGrenere, J.; Conati, C. Understanding the Utility of Rationale in a Mixed-Initiative System for GUI Customization. In User Modeling; Lecture Notes in Computer Science; Conati, C., McCoy, K., Paliouras, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4511, pp. 147–156. [Google Scholar] [CrossRef]

- Kizilcec, R.F. How Much Information?: Effects of Transparency on Trust in an Algorithmic Interface. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 2390–2395. [Google Scholar] [CrossRef]

- .Lafferty, J.C.; Eady, P.M.; Pond, A.W. The Desert Survival Problem: A Group Decision Making Experience for Examining and Increasing Individual and Team Effectiveness: Manual; Experimental Learning Methods: Plymouth, MI, USA, 1974. [Google Scholar]

- Zhang, J.; Curley, S.P. Exploring Explanation Effects on Consumers’ Trust in Online Recommender Agents. Int. J. Hum.–Comput. Interact. 2018, 34, 421–432. [Google Scholar] [CrossRef]

- Papenmeier, A.; Kern, D.; Englebienne, G.; Seifert, C. It’s Complicated: The Relationship between User Trust, Model Accuracy and Explanations in AI. ACM Trans. Comput.-Hum. Interact. 2022, 29, 1–33. [Google Scholar] [CrossRef]

- Lim, B.Y.; Dey, A.K. Investigating intelligibility for uncertain context-aware applications. In Proceedings of the 13th International Conference on Ubiquitous Computing, Beijing, China, 17–21 September 2011; pp. 415–424. [Google Scholar] [CrossRef]

- Cai, C.J.; Jongejan, J.; Holbrook, J. The effects of example-based explanations in a machine learning interface. In Proceedings of the 24th International Conference on Intelligent User Interfaces, Marina del Ray, CA, USA, 17–20 March 2019; pp. 258–262. [Google Scholar] [CrossRef]

- Ananny, M.; Crawford, K. Seeing without knowing: Limitations of the transparency ideal and its application to algorithmic accountability. New Media Soc. 2018, 20, 973–989. [Google Scholar] [CrossRef]

- Plous, S. The psychology of judgment and decision making. J. Mark. 1994, 58, 119. [Google Scholar]

- Levin, I.P.; Schneider, S.L.; Gaeth, G.J. All Frames Are Not Created Equal: A Typology and Critical Analysis of Framing Effects. Organ. Behav. Hum. Decis. Process. 1998, 76, 149–188. [Google Scholar] [CrossRef] [PubMed]

- Levin, P.; Schnittjer, S.K.; Thee, S.L. Information framing effects in social and personal decisions. J. Exp. Soc. Psychol. 1988, 24, 520–529. [Google Scholar] [CrossRef]

- Davis, M.A.; Bobko, P. Contextual effects on escalation processes in public sector decision making. Organ. Behav. Hum. Decis. Process. 1986, 37, 121–138. [Google Scholar] [CrossRef]

- Dunegan, K.J. Framing, cognitive modes, and image theory: Toward an understanding of a glass half full. J. Appl. Psychol. 1993, 78, 491–503. [Google Scholar] [CrossRef]

- Wong, R.S. An Alternative Explanation for Attribute Framing and Spillover Effects in Multidimensional Supplier Evaluation and Supplier Termination: Focusing on Asymmetries in Attention. Decis. Sci. 2021, 52, 262–282. [Google Scholar] [CrossRef]

- Berger, C.R. Communicating under uncertainty. In Interpersonal Processes: New Directions in Communication Research; Sage Publications, Inc.: Thousand, OK, USA, 1987; pp. 39–62. [Google Scholar]

- Jian, J.-Y.; Bisantz, A.M.; Drury, C.G. Foundations for an Empirically Determined Scale of Trust in Automated Systems. Int. J. Cogn. Ergon. 2000, 4, 53–71. [Google Scholar] [CrossRef]

- Berger, C.R.; Calabrese, R.J. Some explorations in initial interaction and beyond: Toward a developmental theory of interpersonal communication. Hum. Comm. Res. 1975, 1, 99–112. [Google Scholar] [CrossRef]

- Schlosser, M.E. Dual-system theory and the role of consciousness in intentional action. In Free Will, Causality, and Neuroscience; Missal, M., Sims, A., Eds.; Brill: Leiden Bernard Feltz, The Netherlands, 2019. [Google Scholar]

- Dennett, D.C. Précis of The Intentional Stance. Behav. Brain Sci. 1988, 11, 495. [Google Scholar] [CrossRef]

- Takayama, L. Telepresence and Apparent Agency in Human–Robot Interaction. In The Handbook of the Psychology of Communication Technology, 1st ed.; Sundar, S.S., Ed.; Wiley: Hoboken, NJ, USA, 2015; pp. 160–175. [Google Scholar] [CrossRef]

- Mittelstadt, B.D.; Allo, P.; Taddeo, M.; Wachter, S.; Floridi, L. The ethics of algorithms: Mapping the debate. Big Data Soc. 2016, 3, 205395171667967. [Google Scholar] [CrossRef]

- Epley, N.; Waytz, A.; Cacioppo, J.T. On seeing human: A three-factor theory of anthropomorphism. Psychol. Rev. 2007, 114, 864–886. [Google Scholar] [CrossRef]

- Fox, J.; Ahn, S.J.; Janssen, J.H.; Yeykelis, L.; Segovia, K.Y.; Bailenson, J.N. Avatars Versus Agents: A Meta-Analysis Quantifying the Effect of Agency on Social Influence. Hum.–Comput. Interact. 2015, 30, 401–432. [Google Scholar] [CrossRef]

- Oh, C.S.; Bailenson, J.N.; Welch, G.F. A Systematic Review of Social Presence: Definition, Antecedents, and Implications. Front. Robot. AI 2018, 5, 114. [Google Scholar] [CrossRef]

- Kiesler, S.; Goetz, J. Mental models of robotic assistants. In Proceedings of the CHI’02 Extended Abstracts on Human Factors in Computing Systems, Minneapolis, MN, USA, 20–25 April 2002; pp. 576–577. [Google Scholar] [CrossRef]

- Ososky, S.; Philips, E.; Schuster, D.; Jentsch, F. A Picture is Worth a Thousand Mental Models: Evaluating Human Understanding of Robot Teammates. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2013, 57, 1298–1302. [Google Scholar] [CrossRef]

- Short, J.; Williams, E.; Christie, B. The Social Psychology of Telecommunications; Wiley: New York, NY, USA, 1976. [Google Scholar]

- Van Doorn, J.; Mende, M.; Noble, S.M.; Hulland, J.; Ostrom, A.L.; Grewal, D.; Petersen, J.A. Emergence of Automated Social Presence in Organizational Frontlines and Customers’ Service Experiences. J. Serv. Res. 2017, 20, 43–58. [Google Scholar] [CrossRef]

- Fiske, S.T.; Macrae, C.N. (Eds.) The SAGE Handbook of Social Cognition; SAGE: London, UK, 2012. [Google Scholar]

- Hassanein, K.; Head, M. Manipulating perceived social presence through the web interface and its impact on attitude towards online shopping. Int. J. Hum.-Comput. Stud. 2007, 65, 689–708. [Google Scholar] [CrossRef]

- Cyr, D.; Hassanein, K.; Head, M.; Ivanov, A. The role of social presence in establishing loyalty in e-Service environments. Interact. Comput. 2007, 19, 43–56. [Google Scholar] [CrossRef]

- Chung, N.; Han, H.; Koo, C. Adoption of travel information in user-generated content on social media: The moderating effect of social presence. Behav. Inf. Technol. 2015, 34, 902–919. [Google Scholar] [CrossRef]

- Ogara, S.O.; Koh, C.E.; Prybutok, V.R. Investigating factors affecting social presence and user satisfaction with Mobile Instant Messaging. Comput. Hum. Behav. 2014, 36, 453–459. [Google Scholar] [CrossRef]

- Gefen, D.; Straub, D.W. Consumer trust in B2C e-Commerce and the importance of social presence: Experiments in e-Products and e-Services. Omega 2004, 32, 407–424. [Google Scholar] [CrossRef]

- Lu, B.; Fan, W.; Zhou, M. Social presence, trust, and social commerce purchase intention: An empirical research. Comput. Hum. Behav. 2016, 56, 225–237. [Google Scholar] [CrossRef]

- Ogonowski, A.; Montandon, A.; Botha, E.; Reyneke, M. Should new online stores invest in social presence elements? The effect of social presence on initial trust formation. J. Retail. Consum. Serv. 2014, 21, 482–491. [Google Scholar] [CrossRef]

- McLean, G.; Osei-Frimpong, K.; Wilson, A.; Pitardi, V. How live chat assistants drive travel consumers’ attitudes, trust and purchase intentions: The role of human touch. IJCHM 2020, 32, 1795–1812. [Google Scholar] [CrossRef]

- Hassanein, K.; Head, M.; Ju, C. A cross-cultural comparison of the impact of Social Presence on website trust, usefulness and enjoyment. IJEB 2009, 7, 625. [Google Scholar] [CrossRef]

- Mackey, K.R.M.; Freyberg, D.L. The Effect of Social Presence on Affective and Cognitive Learning in an International Engineering Course Taught via Distance Learning. J. Eng. Edu 2010, 99, 23–34. [Google Scholar] [CrossRef]

- Ye, Y.; Zeng, W.; Shen, Q.; Zhang, X.; Lu, Y. The visual quality of streets: A human-centred continuous measurement based on machine learning algorithms and street view images. Environ. Plan. B-Urban. Anal. City Sci. 2019, 46, 1439–1457. [Google Scholar] [CrossRef]

- Horton, D.; Wohl, R.R. Mass Communication and Para-Social Interaction: Observations on Intimacy at a Distance. Psychiatry 1956, 19, 215–229.

- Sproull, L.; Subramani, M.; Kiesler, S.; Walker, J.; Waters, K. When the Interface Is a Face. Hum.-Comput. Interact. 1996, 11, 97–124.

- White, R.W. Motivation reconsidered: The concept of competence. Psychol. Rev. 1959, 66, 297–333.

- Di Dio, C.; Manzi, F.; Peretti, G.; Cangelosi, A.; Harris, P.L.; Massaro, D.; Marchetti, A. Shall I Trust You? From Child–Robot Interaction to Trusting Relationships. Front. Psychol. 2020, 11, 469.

- Kim, S.; McGill, A.L. Gaming with Mr. Slot or Gaming the Slot Machine? Power, Anthropomorphism, and Risk Perception. J. Consum. Res. 2011, 38, 94–107.

- Morrison, M.; Lăzăroiu, G. Cognitive Internet of Medical Things, Big Healthcare Data Analytics, and Artificial intelligence-based Diagnostic Algorithms during the COVID-19 Pandemic. Am. J. Med. Res. 2021, 8, 23.

- Schanke, S.; Burtch, G.; Ray, G. Estimating the Impact of ‘Humanizing’ Customer Service Chatbots. Inf. Syst. Res. 2021, 32, 736–751.

- Aggarwal, P. The Effects of Brand Relationship Norms on Consumer Attitudes and Behavior. J. Consum. Res. 2004, 31, 87–101.

- Gao, Y.; Zhang, L.; Wei, W. The effect of perceived error stability, brand perception, and relationship norms on consumer reaction to data breaches. Int. J. Hosp. Manag. 2021, 94, 102802.

- Li, X.; Chan, K.W.; Kim, S. Service with Emoticons: How Customers Interpret Employee Use of Emoticons in Online Service Encounters. J. Consum. Res. 2019, 45, 973–987.

- Shuqair, S.; Pinto, D.C.; So, K.K.F.; Rita, P.; Mattila, A.S. A pathway to consumer forgiveness in the sharing economy: The role of relationship norms. Int. J. Hosp. Manag. 2021, 98, 103041.

- Belanche, D.; Casaló, L.V.; Schepers, J.; Flavián, C. Examining the effects of robots’ physical appearance, warmth, and competence in frontline services: The Humanness-Value-Loyalty model. Psychol. Mark. 2021, 38, 2357–2376.

| Trust OR Trustworthiness AND | Web of Science | Scopus | ACM Digital Library |
|---|---|---|---|
| Artificial Intelligence | 162 | 194 | 28 |
| AI | 222 | 298 | 52 |
| Machine Learning | 133 | 135 | 59 |
| Subtotal | 517 | 627 | 139 |
| Total | 1283 | | |
| Duplicates removed | 360 | | |
| Wrong type of publication | 281 | | |
| Identified studies for abstract and title screening | 642 | | |
| Included | 45 | | |
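
As a quick consistency check on the search counts reported in the table above, the following minimal Python sketch recomputes the per-database subtotals, the overall total, and the number of records left for title and abstract screening. The dictionary layout and variable names are illustrative assumptions and not part of the review protocol; the figures themselves are copied from the table.

```python
# Hit counts per search term and database, copied from the table above.
# The data layout and all names are illustrative assumptions.
hits = {
    "Artificial Intelligence": {"Web of Science": 162, "Scopus": 194, "ACM Digital Library": 28},
    "AI": {"Web of Science": 222, "Scopus": 298, "ACM Digital Library": 52},
    "Machine Learning": {"Web of Science": 133, "Scopus": 135, "ACM Digital Library": 59},
}
databases = ["Web of Science", "Scopus", "ACM Digital Library"]

# Sum each database column across the three search terms.
subtotals = {db: sum(row[db] for row in hits.values()) for db in databases}
total = sum(subtotals.values())

# Exclusions applied before title and abstract screening (from the table).
duplicates_removed = 360
wrong_publication_type = 281
to_screen = total - duplicates_removed - wrong_publication_type

print("Subtotals:", subtotals)
print("Total records:", total)
print("Records for title/abstract screening:", to_screen)
```

Under these assumptions the recomputed values agree with the subtotal, total, and screening rows of the table.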

| N | Authors | Research Questions (RQ)/Hypotheses (H) Regarding Trust | Results |
|---|---|---|---|
| 1 | [46] | RQ1: What is the difference in the level of trust in AI tools for spam review detection between younger and older adults? RQ2: How does the difference in credibility judgments of reviews between humans and AI tools affect the users’ trust in AI tools? | Older adults’ evaluations of the competence, benevolence, and integrity of AI tools were found to be far higher than younger adults’. |
| 2 | [47] | RQ1: Does providing information about the performance of AI enhance or harm users’ levels of (a) cognitive and (b) behavioural trust in AI? | Regardless of information framing, higher levels of trust were perceived by those who received no information about the AI’s performance; participants were more likely to perceive high levels of trust when they did not feel ownership of the message. |
| 3 | [48] | RQ1: Does AI visibility result in lower trust during the process of purchasing health insurance online? RQ2: Does perceived ease of use have the same influence on trust in AI if AI is visible during the purchase of health insurance online? | Trust was higher without visible AI involvement; perceived ease of use had the same influence on trust in AI, regardless of AI visibility. |
| 4 | [49] | RQ1: What are the anthropomorphic attributes of chatbots influencing consumers’ perceived trust in and responses to chatbots? RQ2: How do the anthropomorphic attributes of chatbots influence consumers’ perceived trust in and subsequent responses to chatbots? RQ3: To what extent do the impacts of the anthropomorphic attributes of chatbots on consumers’ perceived trust in chatbots depend on the type of relationship norm that is salient in consumers’ minds during the service encounter? | Chatbot anthropomorphism has a significant effect on consumers’ trust in chatbots (when consumers infer chatbots as having higher warmth or higher competence based on interaction with them, they tend to develop higher levels of trust in them). Among the three anthropomorphic attributes, perceived competence showed the largest effect size. The effect of the anthropomorphic attributes of chatbots on consumers’ trust in them was found to be contingent on the type of relationship norm that is salient in their minds during the service encounter. |
| 5 | [50] | How do citizens evaluate the impact of AI decision-making in government in terms of impact on citizen values (trust)? | Complexity was not shown to have a relevant effect on trust. |
| 6 | [51] | RQ1: Compared with no transparency, what are the effects of placebic transparency on (a) uncertainty, (b) trust in judgments, (c) trust in system, and (d) use intention? | Machine-agency locus induced less social presence, which in turn was associated with lower uncertainty and higher trust. Transparency reduced uncertainty and enhanced trust. |
| 7 | [52] | RQ1: What is the relationship between a user’s trust in a VAS and intention to use the VAS? RQ2: What is the relationship between a VAS’s interaction quality and a user’s trust in the VAS? | Interaction quality significantly led to user trust and intention to use. |
| 8 | [53] | RQ1: What is the impact of social influence on perceived trust? RQ2: What is the impact of perceived trust on the intention to use AI? RQ3: What is the mediation impact of personal innovativeness and perceived trust between social influence and the intention to use AI? | Social influence had a significant direct positive effect on perceived trust, and perceived trust had a significant direct positive effect on the intention to use. Personal innovativeness and perceived trust partially mediated the relationship between social influence and the intention to use. |
| 9 | [4] | RQ1: How do explainability and causability affect trust and the user experience with a personalized recommender system? | Causability played an antecedent role to explainability and an underlying part in trust. Users acquired a sense of trust in algorithms when they were assured of their expected level of FATE. Trust significantly mediated the effects of the algorithms’ FATE on users’ satisfaction. Satisfaction stimulated trust and in turn led to positive user perception of FATE. Higher satisfaction led to greater trust and suggested that users were more likely to continue to use an algorithm. |
| 10 | [54] | RQ1: What is the effect of AT ethicality on trust within human–AI teams? RQ2: If unethical actions damage trust, how effective are common trust repair strategies after an AI teammate makes an unethical decision? | AT’s unethical actions decreased trust in the AT and the overall team. Decrease in trust in an AT was not associated with decreased trust in the human teammate. |
| 11 | [55] | RQ1: What influence, if any, do the ethical requirements of AI have on trust in AI? | Younger and more educated individuals indicated greater trust in AI, as did familiarity with smart consumer technologies. Propensity for trust in other people as well as trust in institutions were strongly correlated with both dimensions of trust (human-like and functionality trust). |
| 12 | [1] | RQ1: Is there a difference in the influence between the human-like dimension and the functionality dimension of trust in AI within the TAM framework? | Trust had a significant effect on the intention to use AI. Both dimensions of trust (human-like and functionality) shared a similar pattern of effects within the model, with functionality-related trust exhibiting a greater total impact on usage intention than human-like trust. |
| 13 | [56] | RQ1: When humans and AI together serve as moderators of content (vs. AI only or human only), what is the relationship between interactive transparency (vs. transparency vs. no transparency) and (a) trust, (b) agreement, (c) understanding of the system and (d) perceived user agency, human agency, and AI agency? | Users trusted AI for the moderation of content just as much as humans, but it depended on the heuristic that was triggered when they were told AI was the source of moderation. Allowing users to provide feedback to the algorithm enhanced trust by increasing user agency. |
| 14 | [57] | RQ1: How does anthropomorphism affect customer trust in AI? RQ2: What effect does empathy response have on customer trust in AI? RQ3: What effect does interaction response have on customer trust in AI? RQ4: What is the relationship between communication quality and customer trust in AI? | Anthropomorphism and interaction did not play critical roles in generating customer trust in AI unless they created communication quality with customers. |
| 15 | [58] | RQ1: What is the influence of perceived usefulness of voice-activated assistants on users’ attitude to use and trust towards the technology? RQ2: What is the influence of perceived ease of use of voice-activated assistants on users’ attitude to use and trust towards the technology? RQ3: What is the influence of perceived enjoyment of voice-activated assistants on users’ attitude to use and trust towards the technology? RQ4: What is the influence of perceived social presence of voice-activated assistants on users’ attitude to use and trust towards the technology? RQ5: What is the influence of user-inferred social cognition of voice-activated assistants on users’ attitude to use and trust towards the technology? RQ6: What is the influence of perceived privacy concerns of voice-activated assistants on users’ attitude to use and trust towards the technology? | The social attributes (social presence and social cognition) were the unique antecedents for developing trust. Additionally, a peculiar dynamic between privacy and trust was shown, highlighting how users distinguish two different sources of trustworthiness in their interactions with VAs, identifying the brand producers as the data collector. |
| 16 | [59] | RQ1: What is the effect of uncanniness on trust in artificial agents? | Uncanniness had a negative impact on trust in artificial agents. Perceived harm and perceived injustice were the major predictors of uncanniness. |
| 17 | [60] | RQ1: Does employees’ perceived transparency mediate the impact of AI decision-making transparency on employees’ trust in AI? RQ2: What impact does employees’ perceived effectiveness of AI have on employees’ trust in AI? RQ3: Do employees’ perceived transparency and perceived effectiveness of AI have a chain mediating role between AI decision-making transparency and employees’ trust in AI? RQ4: Does employees’ discomfort with AI have a negative impact on employees’ trust in AI? RQ5: Do employees’ perceived transparency of and discomfort toward AI have a chain mediating role between AI decision-making transparency and employees’ trust in AI? | AI decision-making transparency (vs. non-transparency) led to higher perceived transparency, which in turn increased both effectiveness (which promoted trust) and discomfort (which inhibited trust). |
| 18 | [61] | RQ1: How is AI trusted compared to human doctors? RQ2: When an AI system learns and then provides patients’ desired treatment, will they perceive the AI system to care for them and, therefore, will they trust the AI system more than when the AI system provides patients’ desired treatment without learning it? RQ3: Will an AI system proposing the treatment preferred by the patient be trusted more than that not proposing the patient’s preferred treatment, regardless of whether or not it learns the patient’s preference? | Participants trusted the AI system less than a doctor, even when the AI system learned and suggested their desired treatment and even if it performed at the level of a human doctor. |
| 19 | [62] | RQ1: How does trust in Siri influence brand loyalty? RQ2: How do interactions with Siri influence brand loyalty? RQ3: Will a higher level of perceived risk be associated with a lower level of brand loyalty? RQ4: How does the novelty value of Siri influence brand loyalty? | Perceived risk seemed to have a significantly negative influence on brand loyalty. The influence of the novelty value of using Siri was found to be moderated by brand involvement and consumer innovativeness, such that the influence is greater for consumers who are less involved with the brand and who are more innovative. |
| 20 | [63] | RQ1: Does employees’ general trust in technology impact on their trust in AI in the company? RQ2: Does intra-organizational trust impact on employees’ trust in AI in the company? RQ3: Does employees’ individual competence trust impact their trust in AI in the company? | A positive relationship between general trust in technology and employees’ trust in AI in the company was found, as well as between intra-organizational trust and employees’ trust in AI in the company. |
| 21 | [64] | RQ1: Does human–computer trust play a mediating role in the relationship between patients’ self-responsibility attribution and acceptance of medical AI for independent diagnosis and treatment? RQ2: Does human–computer trust play a mediating role in the relationship between patients’ self-responsibility attribution and acceptance of medical AI for assistive diagnosis and treatment? RQ3: Do Big Five personality traits moderate the relationship between human–computer trust and acceptance of medical AI for independent diagnosis and treatment? | Patients’ self-responsibility attribution was positively related to human–computer trust (HCT). Conscientiousness and openness strengthened the association between HCT and acceptance of AI for independent diagnosis and treatment; agreeableness and conscientiousness weakened the association between HCT and acceptance of AI for assistive diagnosis and treatment. |
| 22 | [65] | RQ1: Does human–computer trust mediate the relationship between performance expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment? RQ2: Does human–computer trust mediate the relationship between effort expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment? RQ3: Can the relationship between performance expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment be mediated sequentially by social influence and human–computer trust? RQ4: Can the relationship between effort expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment be mediated sequentially by social influence and human–computer trust? | Social influence and human–computer trust, respectively, mediated the relationship between expectancy (performance expectancy and effort expectancy) and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment. Furthermore, social influence and human–computer trust played a chain mediation role between expectancy and healthcare workers’ adoption intention of AI-assisted diagnosis and treatment. |
| 23 | [66] | RQ1: Will an interactive process (Interactive Machine Learning) develop behaviours that are more trustworthy and adhere more closely to user goals and expectations about search performance during implementation? | Compared to noninteractive techniques, Interactive Machine Learning (IML) behaviours were more trusted and preferred, as well as recognizable, separate from non-IML behaviours. |
| 24 | [67] | RQ1: What is the influence of trust on clinicians based on the ML explanations? | While the clinicians’ trust in automated diagnosis increased with the explanations, their reliance on the diagnosis reduced as clinicians were less likely to rely on algorithms that were not close to human judgement. |
| 25 | [68] | RQ1: Do explainability artifacts increase user confidence in a black-box AI system? | Users’ trust in black-box systems was high and explainability artifacts did not influence this behaviour. |
| 26 | [69] | RQ1: How do teachers’ perceived trust affect their acceptance of EAITs? RQ2: What is the most dominant factor that affects teachers’ intention to use EAITs? | Teachers with constructivist beliefs were more likely to integrate EAITs than teachers with transmissive orientations. Perceived usefulness, perceived ease of use, and perceived trust in EAITs were determinants to be considered when explaining teachers’ acceptance of EAITs. The most influential determinant of predicting their acceptance was found to be how easily the EAIT is constructed. |
| 27 | [70] | RQ1: What is the correlation between users’ behaviour of trusting AI virtual assistants and the acceptance of AI virtual assistants? RQ2: What is the correlation between perceived usefulness and users’ behaviour of trusting AI virtual assistants? RQ3: What is the correlation between perceived ease of use and users’ behaviour of trusting AI virtual assistants? RQ4: What is the relationship between perceived humanity and user trust in artificial intelligence virtual assistants? RQ5: What is the correlation between perceived social interactivity and user trust in artificial intelligence virtual assistants? RQ6: What is the correlation between perceived social presence and user trust in AI virtual assistants? User trust behaviour has a mediating role between functionality and acceptance. H5: User trust behaviour has a mediating role between social emotion and acceptance. | Functionality and social emotions had a significant effect on trust, where perceived humanity showed an inverted U-shaped relationship with trust, and trust mediated the relationship between both functionality and social emotions and acceptance. |
| 28 | [71] | RQ1. How do different explanations affect user’s trust in AI systems? RQ2. How do the levels of risk of the user’s decision-making play a role in the user’s trust in AI systems? RQ3. How does the performance of the AI system play a role in the user’s AI trust even when an explanation is present? | The study has shown that the presence of explanations increases AI trust, but only in certain conditions. AI trust was higher when explanations with feature importance were provided than with counterfactual explanations. Moreover, when the system performance is not guaranteed, the use of explanations seems to lead to an overreliance on the system. Lastly, system performance had a stronger impact on trust, compared to the effects of other factors (explanation and risk). |
| 29 | [72] | H1. Trust in AI has a positive effect on the degree of use of various AI-based services. | First, trust in AI and privacy-protective behaviour positively impact AI-based service usage. Second, online skills do not impact AI-based service usage significantly. |
| 30 | [73] | H1: The different anthropomorphism of social robots affects students’ initial capability trust in social robots. H3: The stronger the student’s attraction perception of the social robot, the stronger the trust in the initial capabilities of the social robot. H5: There are differences in the initial capability of students of different ages to trust the initial capabilities of social robots with different anthropomorphic levels. | When the degree of anthropomorphism of social robots is at different levels, there are significant differences in students’ initial capability trust evaluation. It can be seen that the degree of anthropomorphism of social robots has an impact on students’ initial capability trust. |
| 31 | [74] | RQ3. Does trust in AI play a role informing consumers’ intention to purchase using AI? | The results show that consumers tend to demand anthropomorphized products to gain a better shopping experience and, therefore, demand features that attract and motivate them to purchase through artificial intelligence via mediating variables, such as perceived animacy and perceived intelligence. Moreover, trust in artificial intelligence moderates the relationship between perceived anthropomorphism and perceived animacy. |
| 32 | [75] | H2-1: Information seeking positively affects the perceived trust of generative AI. H2-2: Task efficiency positively affects the perceived trust of generative AI. H2-3: Personalization positively affects the perceived trust of generative AI. H2-4: Social interaction positively affects the perceived trust of generative AI. H2-5: Playfulness positively affects the perceived trust of generative AI. H4: Perceived trust of generative AI positively affects continuance intention. | The findings reveal a negative relationship between personalization and creepiness, while task efficiency and social interaction are positively associated with creepiness. Increased levels of creepiness, in turn, result in decreased continuance intention. Furthermore, task efficiency and personalization have a positive impact on trust, leading to increased continuance intention. |
| 33 | [76] | RQ1: Is there an initial difference for trustworthiness assessments, trust, and trust behaviour between the automated system and the human trustee? RQ2: Is there an initial difference and are there different effects for trust violations and trust repair interventions for the facets of trustworthiness for human and automated systems as trustees? RQ3: Will there be interaction effects between the trust repair intervention and the information regarding imperfection for the automated system for trustworthiness, trust, and trust behaviour? | The results of the study showed that participants have initially less trust in automated systems. Furthermore, the trust violation and the trust repair intervention had weaker effects for the automated system. Those effects were partly stronger when highlighting system imperfection. |
| 34 | [77] | H1: The higher the perceived reliability of AI is, the more cognitive trust employees display in AI. H2: The higher the AI transparency is, the more cognitive trust employees display in AI. H3: The higher AI flexibility is, the more cognitive trust employees have in AI. H4: Effectiveness of data governance stimulates trust in data governance, which leads to cognitive trust in AI. H5: AI-driven disruption in work routines lowers the effect of AI reliability, transparency, and flexibility on cognitive trust in AI. | The findings suggest that AI features positively influence the cognitive trust of employees, while work routine disruptions have a negative impact on cognitive trust in AI. The effectiveness of data governance was also found to facilitate employees’ trust in data governance and, subsequently, employees’ cognitive trust in AI. |
| 35 | [78] | H1a. Seeking information about AI on traditional media is positively associated with trust in AI after controlling for faith in and engagement with AI. H1b. Seeking information about AI on social media is negatively associated with trust in AI after controlling for faith in and engagement with AI. H2a. Concern about misinformation online will weaken the positive relationship between information-seeking behaviour about AI on traditional media and trust in AI. H2b. Concern about misinformation online will strengthen the negative relationship between information-seeking behaviour about AI on social media and trust in AI. | Results indicate a positive relationship exists between seeking AI information on social media and trust across all countries. However, for traditional media, this association was only present in Singapore. When considering misinformation, a positive moderation effect was found for social media in Singapore and India, whereas a negative effect was observed for traditional media in Singapore. |
| 36 | [79] | H1a: Accuracy experience of AI technology is positively related to the trust in AI-enabled services. H1b: Insight experience of AI technology is positively related to the trust in AI-enabled services. H1c: Interactive experience of AI technology is positively related to the trust in AI-enabled services. H3a: User trust is positively related to eWOM engagement. H3b: User trust is positively related to usage intention to use AI-enabled services. H5a: Self-efficacy positively moderates the association between accuracy experience and trust in AI. H5b: Self-efficacy positively moderates the association between insight experience and trust in AI. H5c: Self-efficacy positively moderates the association between interactive experience and trust in AI. H6: eWOM engagement mediates the association between trust in AI and AI-enabled service usage intention. | The results indicate that accuracy experience, insight experience, and interactive experience between trust in AI and eWOM engagements are significant, except for the relationship between interactive experience and eWOM. Likewise, the outcome indicates that trust in AI has a significant positive relationship between usage intention and eWOM, while eWOM significantly and positively influences usage intention. In addition, this study found that word of mouth mediates the association between accuracy experience and trust in AI. The results show that self-efficacy moderates the association between accuracy experience and trust in AI. |
| 37 | [80] | RQ: How do initial perceptions of a financial AI assistant differ between people who report they (rather) trust and people who report they (rather) do not trust the system? | Comparisons between high-trust and low-trust user groups revealed significant differences in both open-ended and closed-ended answers. While high-trust users characterized the AI assistant as more useful, competent, understandable, and human-like, low-trust users highlighted the system’s uncanniness and potential dangers. Manipulating the AI assistant’s agency had no influence on trust or intention to use. |
| 38 | [81] | H1. Perceived anthropomorphism has a positive effect on trust in AI teammates. H2. Perceived rapport has a positive effect on trust in AI teammates. H3. Perceived enjoyment has a positive effect on trust in AI teammates. H4. Peer influence has a positive effect on trust in AI teammates. H5. Facilitating conditions has a positive effect on trust in AI teammates. H6. Self-efficacy has a positive effect on trust in AI teammates. H8. Trust in AI teammates has a positive effect on intention to cooperate with AI teammates. | The results show that perceived rapport, perceived enjoyment, peer influence, facilitating conditions, and self-efficacy positively affect trust in AI teammates. Moreover, self-efficacy and trust positively relate to the intention to cooperate with AI teammates. |
| 39 | [82] | H1: The expertise of AI chatbots has a positive impact on consumers’ trust in chatbots. H2: The responsiveness of AI chatbots has a positive impact on consumers’ trust in chatbots. H3: The ease of use of AI chatbots has a positive impact on consumers’ trust in chatbots. H4: The anthropomorphism of AI chatbots has a positive impact on consumers’ trust in chatbots. H5: Consumers’ brand trust in AI chatbot providers positively affects consumers’ trust in chatbots. H6: Human support has a negative impact on consumers’ trust in AI chatbots. H7: Perceived risk has a negative impact on consumers’ trust in AI chatbots. H8: Privacy concerns moderate the relationship between chatbot-related factors and consumers’ trust in AI chatbots. H9: Privacy concerns moderate the relationship between company-related factors and consumers’ trust in AI chatbots. | The results found that the chatbot-related factors (expertise, responsiveness, and anthropomorphism) positively affect consumers’ trust in chatbots. The company-related factor (brand trust) positively affects consumers’ trust in chatbots, and perceived risk negatively affects consumers’ trust in chatbots. Privacy concerns have a moderating effect on company-related factors. |
| 40 | [83] | H1. Street-level bureaucrats perceive AI recommendations that are congruent with their professional judgement as more trustworthy than AI recommendations that are incongruent with their professional judgement. H2. Street-level bureaucrats perceive explained AI recommendations as more trustworthy than unexplained AI recommendations. H3. Higher perceived trustworthiness of AI recommendations by street-level bureaucrats is related to an increased likelihood of use. | We found that police officers trust and follow AI recommendations that are congruent with their intuitive professional judgement. We found no effect of explanations on trust in AI recommendations. We conclude that police officers do not blindly trust AI technologies, but follow AI recommendations that confirm what they already thought. |
| 41 | [84] | H7. Trust positively affects performance expectancy of AI virtual assistants. H8. Trust positively affects behavioural intention to use AI virtual assistants. H9. Trust positively affects attitude toward using AI virtual assistants. H10. Trust negatively affects perceived risk of AI virtual assistants. | Results show that gender is significantly related to behavioural intention to use, education is positively related to trust and behavioural intention to use, and usage experience is positively related to attitude toward using. UTAUT variables, including performance expectancy, effort expectancy, social influence, and facilitating conditions, are positively related to behavioural intention to use AI virtual assistants. Trust and perceived risk respectively have positive and negative effects on attitude toward using and behavioural intention to use AI virtual assistants. Trust and perceived risk play equally important roles in explaining user acceptance of AI virtual assistants. |
| 42 | [85] | H2a. Consumers have greater trust in a high-intelligence-level AI compared to a low-intelligence-level AI. H2b. Trust mediates the relationship between the intelligence level of AI and consumers’ willingness to delegate tasks to the AI. H3. Task objectivity has a moderating effect on the relationship between intelligence level of AI and trust. Specifically, consumers have greater trust in low-intelligence-level AI for objective tasks compared to subjective tasks, but such differences in trust are not manifested when the AI has a high intelligence level. H4. Anthropomorphism has a moderating effect on the relationship between intelligence level of AI and trust. Specifically, consumers’ trust in AI may be enhanced by increased anthropomorphism when the AI’s intelligence level is low, but this moderated effect is not manifested when its intelligence level is high. | The findings reveal that increasing AI intelligence enhances consumer decision delegation for both subjective and objective consumption tasks, with trust serving as a mediating factor. However, objective tasks are more likely to be delegated to AI. Moreover, anthropomorphic appearance positively influences trust but does not moderate the relationship between intelligence and trust. |
| 43 | [86] | H1b. Empathy response has positively impacted customer trust toward AI chatbots. H2a. Anonymity has positively impacted interaction. H2b. Anonymity has positively impacted customer trust toward AI chatbots. H5. Interaction has positively impacted customer trust toward AI chatbots. | The paper reports that empathy response, anonymity and customization significantly impact interaction. Empathy response is found to have the strongest influence on interaction. Meanwhile, empathy response and anonymity were revealed to indirectly affect customer trust. |

| 44 | [87] | RQ1: How are various user characteristics, such as (a) demographics, (b) trust propensity, (c) fake news self-efficacy, (d) fact-checking service usage, (e) AI expertise, (f) and overall AI trust, associated with their trust in specific explainable ML fake news detectors? RQ2: How are the perceived machine characteristics such as (a) performance, (b) collaborative capacity, (c) agency, and (d) complexity associated with users’ trust in explainable ML fake news detectors? RQ3: How does trust in the explainable ML fake news detector predict adoption intention of this AI application? | Users’ trust levels in the software were influenced by both individuals’ inherent characteristics and their perceptions of the AI application. Users’ adoption intention was ultimately influenced by trust in the detector, which explained a significant amount of the variance. We also found that trust levels were higher when users perceived the application to be highly competent at detecting fake news, be highly collaborative, and have more power in working autonomously. Our findings indicate that trust is a focal element in determining users’ behavioural intentions. |

| 45 | [88] | H1a: Explanations make it more likely that individuals will delete the proposed files. H1b: Providing explanations increases both affective and cognitive trust ratings. H1c: By providing information on why the system’s suggestions are valid, users can better understand the reliability of the underlying processes. This should lead to increased credibility ratings. H1d: Explanations directly reduce information uncertainty. H2a: Information uncertainty in the system’s proposals mediates the effect of explanations of the system’s proposals on its acceptance. H2b: Information uncertainty in the system’s proposals mediates the effect of explanations of the system’s proposals on trust. H2c: Information uncertainty in the system’s proposals mediates the effect of explanations of the system’s proposals on credibility. H3a: Individuals high in need for cognition have a stronger preference for thinking about the explanations, which helps them to delete irrelevant files. H3b: … build trust. H3c: build credibility. H3d: … to reduce information uncertainty. H4a: Conscientiousness moderates the impact of explanations on deletion of irrelevant files. H4b: … building trust. H4c: … credibility. H4d: … on reducing information uncertainty. H5: Deletion is not only an action that causes digital objects to be forgotten in external memory, but may also support intentional forgetting of associated memory content. | Results show the importance of presenting explanations for the acceptance of deleting suggestions in all three experiments, but also point to the need for their verifiability to generate trust in the system. However, we did not find clear evidence that deleting computer files contributes to human forgetting of the related memories. |

| N | Authors | AI System | Trust-Related Constructs Studied | Trust Construct | Trust Type | Attribute |

|---|---|---|---|---|---|---|

| 1 | [46] | Tool for spam review detection | Age Difference in credibility judgments Trust propensity Educational background Online shopping experience Variety of online shopping platforms usually used View on online spam reviews | Trust beliefs | Cognitive | Competence |

| | | | | | Affective | Benevolence Integrity |

| 2 | [47] | Decision-making assistant | Information framing Message ownership | Perceived trustworthiness | Cognitive | Computer credibility |

| 3 | [48] | Interface for online insurance purchase | Perceived Usefulness Perceived Ease of Use Visibility of AI | Trust in AI | Cognitive | Competence Reliability Functionality Helpfulness |

| | | | | | Affective | Benevolence Integrity |

| 4 | [49] | Text-based chatbots | Anthropomorphic attributes (perceived warmth, perceived competence, communication delay) Relationship norms (communal/exchange relationship) | Trust in chatbots | Cognitive | Capability |

| | | | | | Affective | Honesty Truthfulness |

| 5 | [50] | Decision-making assistant used in public administration | Complexity of the decision process | | Cognitive | Competence |

| | | | | | Affective | Benevolence Honesty |

| 6 | [51] | Fake news detection tool | Agency locus Transparency Social presence Anthropomorphism Perceived AI’s threat Social media experience | Trust in judgments/system | Cognitive | Correctness Accuracy Intelligence Competence |

| 7 | [52] | AI-based voice assistant systems | Information quality System quality Interaction quality | Trust | Cognitive | Trust |

| 8 | [53] | AI-based job application process | Social influence Personal innovativeness | Perceived trust | Cognitive | Perceived trust |

| 9 | [4] | Algorithm service | Transparency Accountability Fairness, explainability (FATE) Causability | Trust | Cognitive | Trust |

| 10 | [54] | Autonomous teammate (AT) in military game | Team score Trust in human teammate Trust in the team AI ethicality | Trust in the autonomous teammate | Cognitive | Trust |

| | | | | | Affective | Trust |

| 11 | [55] | Smart technologies | Propensity to trust Age Education Familiarity with smart consumer technologies | Trust in AI; functionality trust in AI | Cognitive | Competence |

| | | | | | Affective | Benevolence Integrity |

| 12 | [1] | Voice assistants | Perceived ease of use Perceived usefulness | Trust in the voice assistant | Cognitive | Safety Competence |

| | | | | | Affective | Integrity Benevolence |

| 13 | [56] | Online content classification systems | Machine heuristic Transparency | Attitudinal trust | Cognitive | Integrity Benevolence |

| | | | | | Affective | Accurateness Dependability Value Usefulness |

| 14 | [57] | AI applications in general | Interaction, empathy Anthropomorphism Communication quality COVID-19 pandemic risk | Trust in AI | Cognitive | Efficiency Safety Competence |

| 15 | [58] | AI-based voice assistant systems | Perceived usefulness Perceived ease of use Perceived enjoyment Social presence Social cognition Privacy concern | Trust | Affective | Honesty Truthfulness |

| 16 | [59] | Artificial agents in general | Perceived harm Injustice Reported wrongdoing Uncanniness | Trust in artificial agent | Cognitive | Reliability Dependability Safety |

| 17 | [60] | Decision-making assistant | Perceived effectiveness of AI Discomfort Transparency | Trust | Cognitive | Reliability |

| 18 | [61] | Medical AI | Perceived value similarity Perceived care Perceived ability Perceived uniqueness neglect | Trust in decision maker | Cognitive | Reliability |

| 19 | [62] | AI-based voice assistant systems (Siri) | Interaction, novelty value Consumer innovativeness Brand involvement Brand loyalty Perceived risk | Trust | Cognitive | Competence |

| | | | | | Affective | Honesty |

| 20 | [63] | AI in companies | General trust in technology Intraorganizational trust Individual competence trust | Employees’ trust in AI in the company | Cognitive | Reliability Safety Competence |

| | | | | | Affective | Honesty |

| 21 | [64] | Medical AI | Self-responsibility attribution Big Five personality traits Acceptance of medical AI for independent diagnosis and treatment | Human–computer trust | Cognitive | Effectiveness Competence Reliability Helpfulness |

| 22 | [65] | Medical AI | Performance expectancy Effort expectancy Social influence | Human–computer trust | Cognitive | Effectiveness Competence Reliability Helpfulness |

| 23 | [66] | Machine learning models for automated vehicles | Interactivity of machine learning | Trust in automated systems | Cognitive | Security Dependability Reliability |

| | | | | | Affective | Integrity Familiarity |

| 24 | [67] | Medical AI | Explanation Reliability | Trust in the system | Cognitive | Reliability Understandability |

| 25 | [68] | Artificial neural network algorithms | Explainability | Trust in AI systems | Cognitive | Competence Predictability |

| 26 | [69] | Educational Artificial Intelligence Tools (EAIT) | Perceived ease of use Constructivist pedagogical beliefs Transmissive pedagogical beliefs Perceived usefulness | Perceived trust | Cognitive | Reliability Dependability |

| | | | | | Affective | Fairness |

| 27 | [70] | AI virtual assistants | Functionality (perceived usefulness, perceived ease of use) Social emotion (perceived humanity, perceived social interactivity, perceived social presence) | Trust | Cognitive | Favourability |

| | | | | | Affective | Honesty Care |

| 28 | [71] | Recommendation system | Explanations, risk Performance | Trust | Cognitive | Reliability Predictability Consistency Skill Capability Competency Preciseness Transparency |

| 29 | [72] | AI-based services | Digital literacy Online skills Privacy-protective behaviour | Trust in AI | Cognitive | Reliability Efficiency Helpfulness |

| 30 | [73] | Social robots | Age Anthropomorphism | Capability Trust | Cognitive | Competence |

| | | | | | Affective | Honesty |

| 31 | [74] | AI shopping assistant | Perceived animacy Perceived intelligence Perceived anthropomorphism | Trust in Artificial Intelligence | Affective | Honesty Interest respect |

| 32 | [75] | ChatGPT | Information seeking Personalization Task efficiency Playfulness Social interaction | Trust | Cognitive | Believability Credibility |

| 33 | [76] | AI system for candidate selection | Information about the system Trust violations and repairs | Trustworthiness | Cognitive | Ability |

| | | | | | Affective | Integrity Benevolence |

| 34 | [77] | AI | Perceived controllability Transparency | Cognitive trust | Cognitive | Cognitive trust |

| 35 | [78] | AI | Information seeking Concern about misinformation online | Trust in AI | Cognitive | Trustworthiness Rightfulness Dependability Reliability |

| 36 | [79] | AI technology | Self-efficacy Accuracy Interactivity | Trust in AI | Cognitive | Competence |

| | | | | | Affective | Honesty |

| 37 | [80] | Financial AI assistant | Autonomy | Trust in automation | Cognitive | Reliability |

| 38 | [81] | AI teammates | Self-efficacy Perceived anthropomorphism Perceived enjoyment Perceived rapport | Trust in AI teammates | Cognitive | Trustworthiness |

| | | | | | Affective | Honesty |

| 39 | [82] | AI chatbots | Privacy concerns Expertise Anthropomorphism Responsiveness Ease of use | Trust in AI chatbots | Cognitive | Trustworthiness |

| | | | | | Affective | Well-intendedness |

| 40 | [83] | AI recommendation system | Explanations Congruency with own judgments | Perceived trustworthiness | Cognitive | Competence |

| | | | | | Affective | Honesty Anthropomorphism |

| 41 | [84] | AI virtual assistant | Perceived risk Performance expectancy | Trust | Cognitive | Competence Reliability |

| 42 | [85] | AI recommendation system | System intelligence Anthropomorphism | Trust | Cognitive | Competence |

| 43 | [86] | AI chatbots | Empathy response Customization Interaction | Trust in AI | Cognitive | Competence Safety |

| 44 | [87] | ML fake news detectors | Performance Collaborative capacity Agency Complexity | Overall AI trust, Trust in detector | Cognitive | Dependability Competence Responsiveness Safety Reliability |

| | | | | | Affective | Integrity Well-intendedness Honesty |

| 45 | [88] | Explanatory AI system | Explanations Credibility Information uncertainty | Cognitive/Affective trust | Cognitive | Cognitive trust |

| | | | | | Affective | Affective trust |

| Article N | Trust Definition: Affective Component | Trust Measurement (Affective/Cognitive/Both) | System Anthropomorphism Level | Experimental Stimulus |

|---|---|---|---|---|

| 1 | Yes | Both | Low | Direct experience: task |

| 2 | Yes | Cognitive | Medium | Direct experience: task |

| 3 | No | Both | Medium | Direct experience: scenario |

| 4 | Yes | Both | High | Representation of past experience |

| 5 | No | Both | Medium | Direct experience: scenario |

| 6 | No | Cognitive | Low | Direct experience: task |

| 7 | No | Cognitive | High | Representation of past experience |

| 8 | No | Cognitive | Low | General representation |

| 9 | No | Cognitive | Low | Direct experience: task |

| 10 | Yes | Both | High | Direct experience: task |

| 11 | Yes | Both | Undefined | General representation |

| 12 | Yes | Both | High | General representation |

| 13 | No | Both | Undefined | Direct experience: task |

| 14 | No | Cognitive | Undefined | General representation |

| 15 | Yes | Affective | High | Representation of past experience |

| 16 | No | Cognitive | Undefined | Representation of past experience; direct experience: scenario |

| 17 | No | Cognitive | Medium | Direct experience: scenario |

| 18 | No | Cognitive | Medium | Direct experience: scenario |

| 19 | No | Both | High | Representation of past experience |

| 20 | Yes | Both | Low | Representation of past experience |

| 21 | No | Cognitive | Medium | Representation of past experience |

| 22 | No | Cognitive | Medium | Representation of past experience |

| 23 | No | Both | Low | Direct experience: task |

| 24 | No | Cognitive | Medium | Direct experience: task |

| 25 | No | Cognitive | Low | Direct experience: task |

| 26 | No | Both | Low | Representation of past experience |

| 27 | No | Both | High | Representation of past experience |

| 28 | Yes | Cognitive | Medium | Direct experience: task |

| 29 | No | Cognitive | Undefined | Representation of past experience |

| 30 | No | Both | High | Direct experience: scenario |

| 31 | No | Affective | High | Representation of past experience |

| 32 | Yes | Cognitive | High | Representation of past experience |

| 33 | Yes | Both | Medium | Direct experience: task |

| 34 | No | Cognitive | Undefined | General representation |

| 35 | No | Cognitive | Undefined | General representation |

| 36 | Yes | Both | Undefined | General representation |

| 37 | No | Cognitive | Medium | Direct experience: scenario |

| 38 | Yes | Both | High | General representation |

| 39 | No | Both | High | Representation of past experience |

| 40 | No | Both | Medium | Direct experience: scenario |

| 41 | No | Cognitive | High | General representation |