Blockchain-Enabled, Nature-Inspired Federated Learning for Cattle Health Monitoring

Abstract

1. Introduction

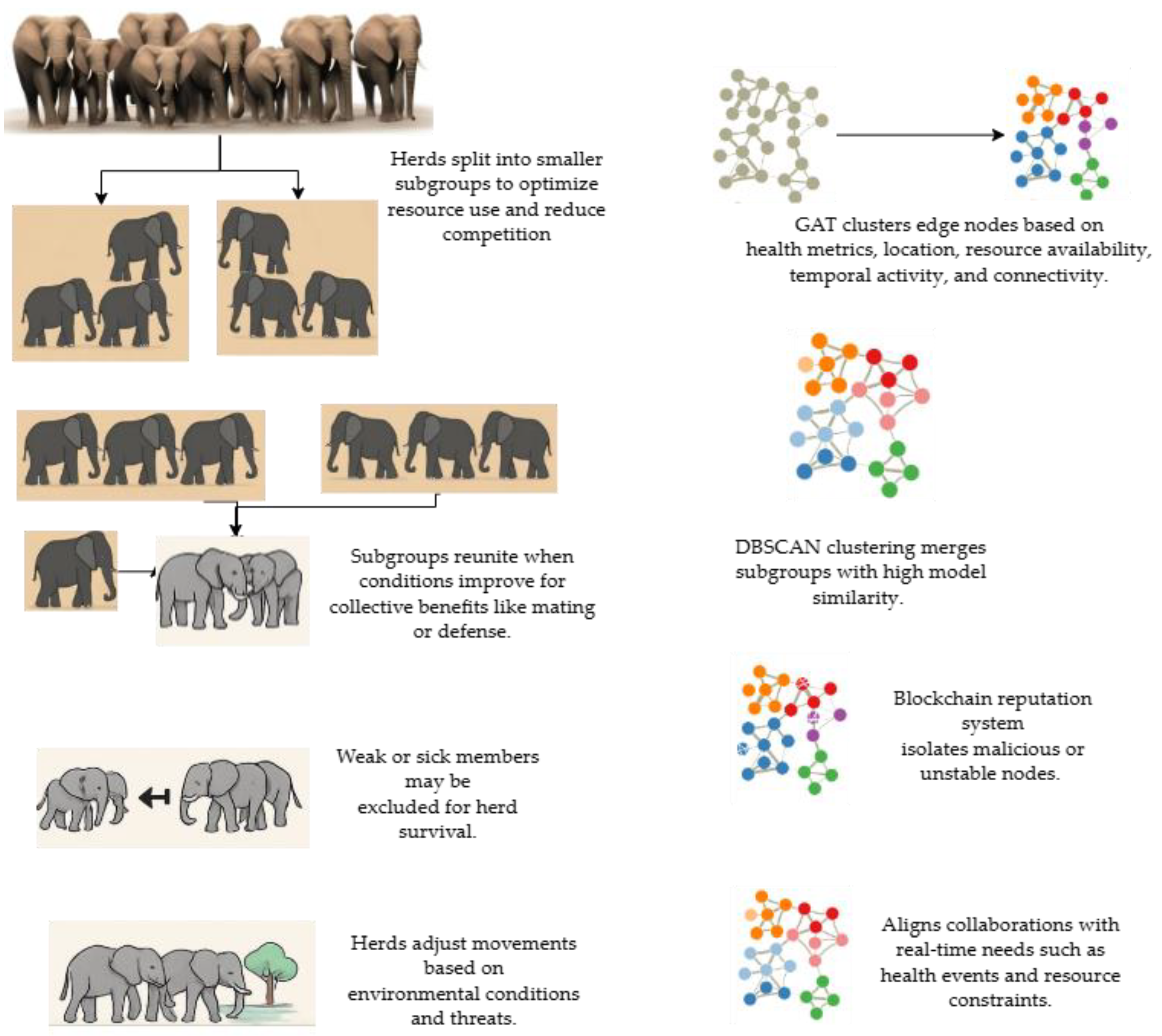

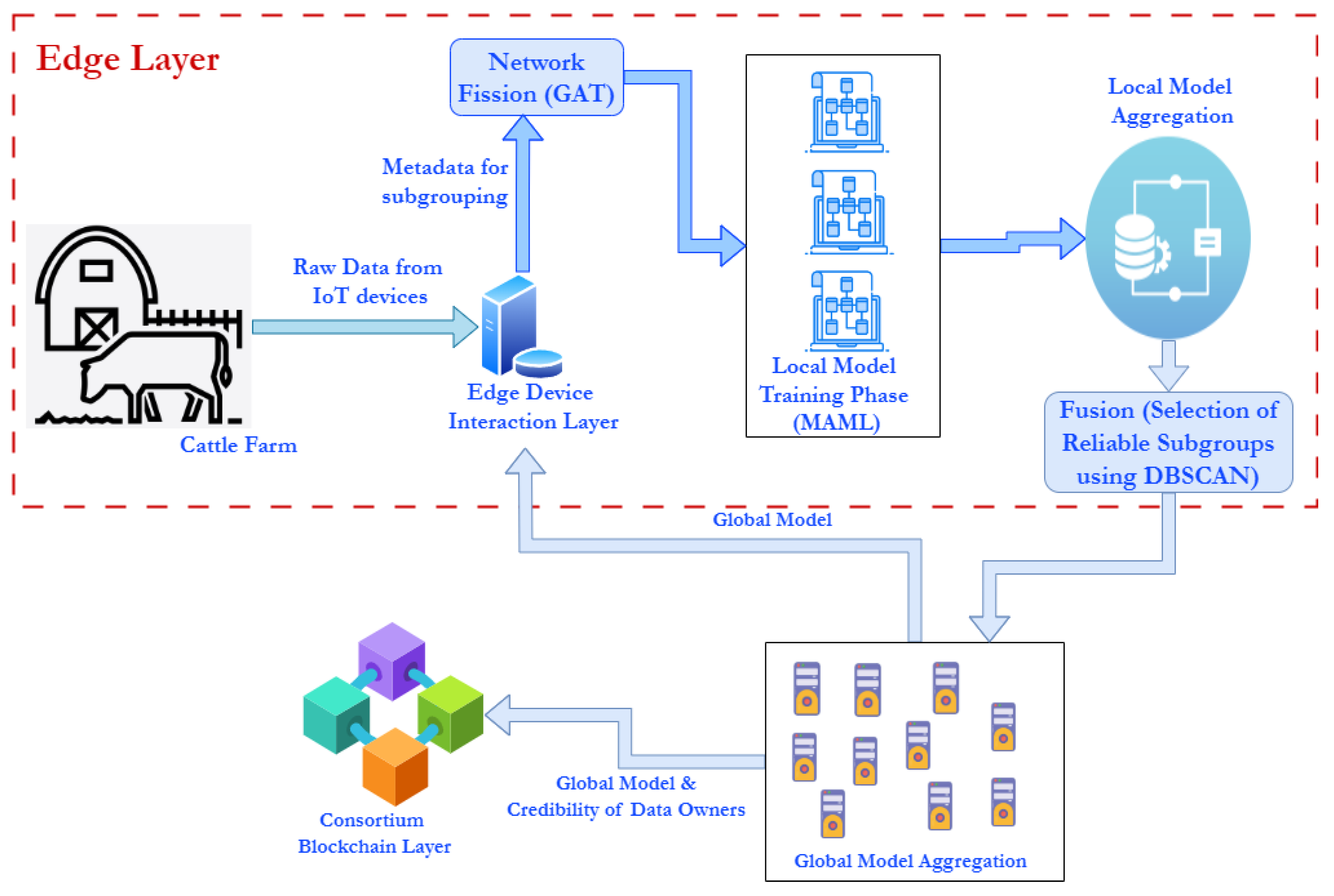

- Nature-Inspired GAT-Based Fission–Fusion: The framework introduces a dynamic GAT-based fission mechanism that clusters edge devices based on six real-time parameters—health metrics, activity levels, geographical proximity, resource availability, temporal activity, and network connectivity—mirroring the adaptive clustering observed in elephant herds.

- Adaptive Fusion with DBSCAN: A novel DBSCAN-based fusion process refines clusters by merging models with high similarity and excluding outliers, ensuring robust collaboration while maintaining cluster integrity during federated learning cycles.

- Blockchain-Enabled Model Validation and Node Reputation: Smart contract-based model validation ensures accuracy and resilience, while dynamic reputation scoring prioritizes high-performing nodes for global model aggregation, promoting continuous improvement and accountability.

- Privacy-Preserving and Decentralized Federated Learning: The system facilitates secure, decentralized cattle health monitoring by keeping sensitive data at the edge while ensuring reliable, adaptive model training across distributed cattle farms.

2. Literature Survey

2.1. Blockchain Implementation in Federated Learning

2.2. Model Aggregation Using Weighted Average

2.3. Addressing Heterogeneity in Federated Learning

3. Methodology

3.1. Nature-Inspired Federated Learning Framework

3.2. Edge-Based Cattle Monitoring

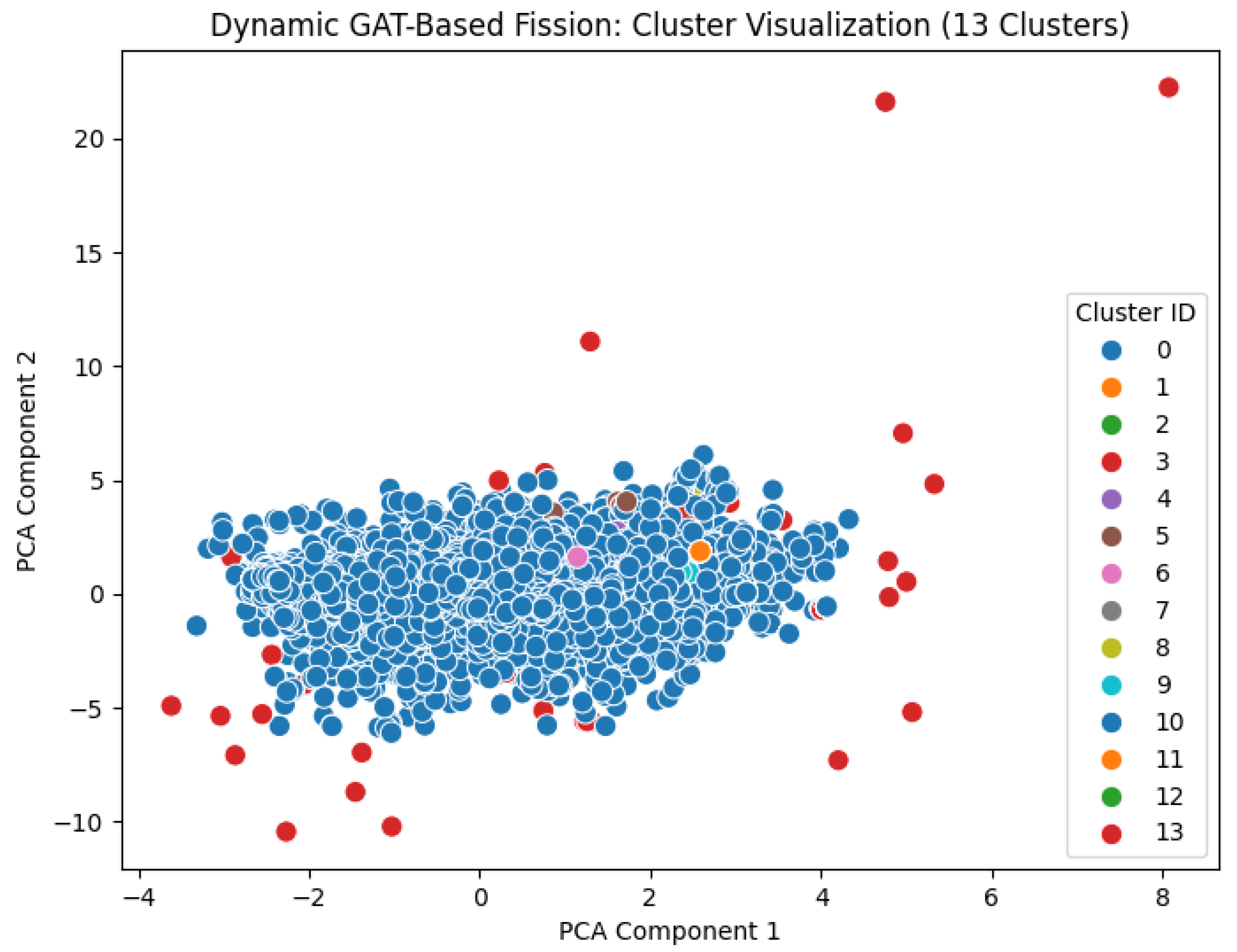

3.3. Adaptive Cluster Formation Using GAT

- $\alpha_{ij}$: the attention coefficient between node $i$ and node $j$.

- $h_i$, $h_j$: feature vectors of nodes $i$ and $j$.

- $W$: weight matrix applied to the node features.

- $a$: attention vector used to compute importance.

- $\mathcal{N}_i$: set of neighbors for node $i$.

- $\mathrm{LeakyReLU}(\cdot)$: non-linear activation function applied to the concatenated features.
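The symbol definitions above match the standard graph attention formulation of Veličković et al., in which a score is computed from the concatenated, linearly transformed features of two nodes and then softmax-normalized over the neighborhood. The following is a minimal pure-Python sketch under that assumption; the function names, the toy feature vectors, and the LeakyReLU slope of 0.2 are illustrative choices, not values taken from this article.

```python
import math

def matvec(W, h):
    """Multiply matrix W (list of rows) by vector h."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]

def leaky_relu(x, slope=0.2):
    """LeakyReLU with an assumed negative slope of 0.2."""
    return x if x > 0 else slope * x

def attention_coefficients(i, neighbors, features, W, a):
    """Return {j: alpha_ij} for each neighbor j of node i.

    Score: LeakyReLU(a . [W h_i || W h_j]), then softmax over the neighbors.
    """
    wh_i = matvec(W, features[i])
    scores = {}
    for j in neighbors:
        concat = wh_i + matvec(W, features[j])  # list concatenation
        scores[j] = leaky_relu(sum(ak * ck for ak, ck in zip(a, concat)))
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores.values())
    exps = {j: math.exp(s - m) for j, s in scores.items()}
    total = sum(exps.values())
    return {j: e / total for j, e in exps.items()}

# Hypothetical two-dimensional node features for three edge devices.
features = {0: [1.0, 0.0], 1: [0.9, 0.1], 2: [0.0, 1.0]}
W = [[1.0, 0.0], [0.0, 1.0]]   # identity transform, for illustration only
a = [0.5, -0.5, 0.5, -0.5]     # hypothetical attention vector
alpha = attention_coefficients(0, [1, 2], features, W, a)
```

With these toy values, node 1 (whose features resemble node 0) receives a larger coefficient than node 2, which is the behavior the clustering step relies on.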

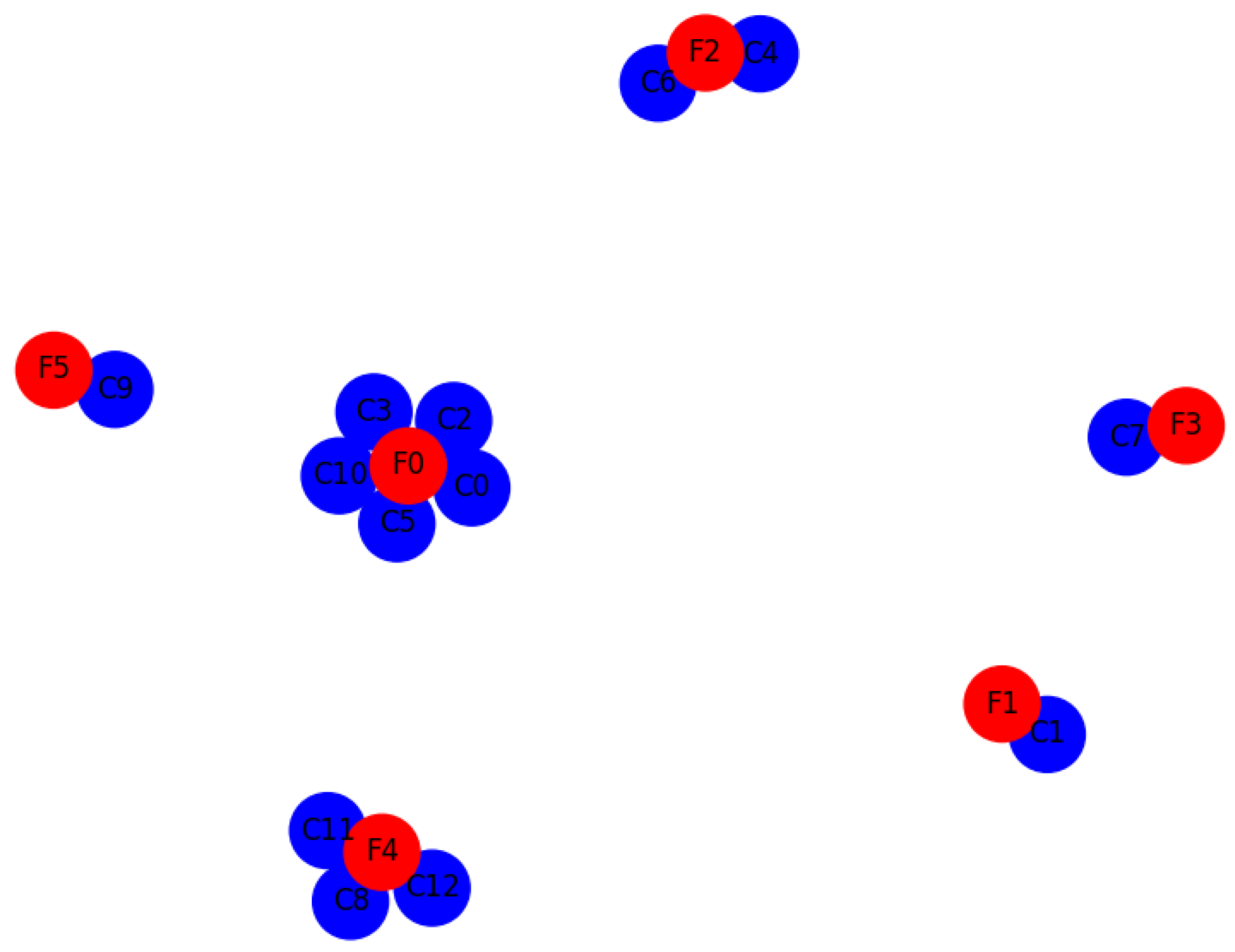

3.4. Local Model Training and DBSCAN-Based Fusion

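- $L_i(\theta)$: loss function for node $i$.

- $D_i$: local dataset for node $i$.

- $(x, y)$: input features and corresponding labels.

- $\ell$: mean squared error (MSE) loss function.

- $f_\theta$: model with parameters $\theta$.

- $\mathrm{sim}(\theta_i, \theta_j)$: cosine similarity between model weights $\theta_i$ and $\theta_j$.

- $\tau_s$: threshold for model similarity.

- $J(\mathcal{E}_i, \mathcal{E}_j)$: Jaccard index measuring overlap between health events $\mathcal{E}_i$ and $\mathcal{E}_j$.

- $\tau_e$: threshold for event overlap.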
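The two fusion criteria defined above, cosine similarity between model weights and Jaccard overlap between health events, can be sketched as a pairwise check. This example does not run DBSCAN itself; it only illustrates the two similarity measures and a thresholded merge decision. The function names, the overlap threshold of 0.5, and the event labels other than mastitis are hypothetical; the similarity threshold of 0.84 borrows the adaptive fusion threshold reported in the results table, on the assumption that it applies to model similarity.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two flattened weight vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_u * norm_v)

def jaccard_index(events_a, events_b):
    """Size of the intersection divided by size of the union."""
    a, b = set(events_a), set(events_b)
    if not a and not b:
        return 0.0  # no events on either side: no evidence of overlap
    return len(a & b) / len(a | b)

def should_fuse(w_i, w_j, e_i, e_j, sim_threshold=0.84, overlap_threshold=0.5):
    """Merge two cluster models only if both criteria meet their thresholds."""
    return (cosine_similarity(w_i, w_j) >= sim_threshold
            and jaccard_index(e_i, e_j) >= overlap_threshold)

# Two clusters with near-identical weights and mostly shared events.
similar = should_fuse([1.0, 2.0, 3.0], [1.1, 2.0, 2.9],
                      {"mastitis", "fever"}, {"mastitis", "fever", "lameness"})
# Orthogonal weights fail the similarity test regardless of event overlap.
dissimilar = should_fuse([1.0, 0.0], [0.0, 1.0], {"mastitis"}, {"mastitis"})
```

In a full pipeline these pairwise scores would feed a density-based clustering step, with models that fall below both thresholds treated as outliers.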

3.5. Blockchain-Enabled Model Validation

- $A_i$: validation accuracy for node $i$.

- $D_{\text{test}}$: test dataset used for validation.

- $(x_j, y_j)$: input–output pairs in the test dataset.

- $\mathbb{1}(\cdot)$: indicator function, returning 1 if the prediction matches the ground truth and 0 otherwise.
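The definitions above describe validation accuracy as the mean of an indicator function over a test set. A minimal off-chain sketch of that computation follows; it is plain Python rather than smart-contract code, and the function names, the toy classifier, and the acceptance threshold of 0.9 are assumptions made for illustration.

```python
def validation_accuracy(predict, test_set):
    """Fraction of (x, y) pairs for which predict(x) equals y."""
    if not test_set:
        raise ValueError("test set must not be empty")
    # The generator yields 1 per correct prediction (the indicator function).
    correct = sum(1 for x, y in test_set if predict(x) == y)
    return correct / len(test_set)

def passes_validation(accuracy, threshold=0.9):
    """Accept a model update only if its accuracy meets the threshold."""
    return accuracy >= threshold

# Hypothetical binary classifier and four labeled test samples.
predict = lambda x: int(x > 0.5)
test_set = [(0.9, 1), (0.2, 0), (0.7, 0), (0.1, 0)]
acc = validation_accuracy(predict, test_set)
accepted = passes_validation(acc)
```

Here three of the four predictions match, so the update scores 0.75 and would be rejected under the assumed threshold.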

3.6. Node Reputation and Prioritized Aggregation

- $R_i$: reputation score for node $i$.

- $A_i$: accuracy of the model submitted by node $i$.

- $E_i$: efficiency in resource usage during training.

- $T_i$: response time for model submission.

- $C_i$: contribution in terms of data and updates.

- $w_1, w_2, w_3, w_4$: weights assigned to each factor.

- $\theta_G$: global model parameters.

- $\theta_i$: local model parameters from node $i$.

- $R_i$: reputation score of node $i$.

- $N$: total number of participating nodes.
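The factors listed above suggest a reputation score built from accuracy, efficiency, response time, and contribution, followed by an aggregation in which each local model is weighted by its node's reputation. The sketch below assumes a linear weighted sum for the score and a reputation-normalized weighted average for the global model; it also assumes every factor is already scaled to [0, 1] with higher meaning better, so response time enters as a timeliness score. The function names and default weights are hypothetical.

```python
def reputation(accuracy, efficiency, timeliness, contribution,
               weights=(0.4, 0.2, 0.2, 0.2)):
    """Linear combination of four normalized factors (assumed form)."""
    w1, w2, w3, w4 = weights
    return (w1 * accuracy + w2 * efficiency
            + w3 * timeliness + w4 * contribution)

def aggregate(local_params, reputations):
    """Reputation-weighted average of local parameter vectors."""
    if len(local_params) != len(reputations):
        raise ValueError("one reputation score is required per node")
    total = sum(reputations)
    if total <= 0:
        raise ValueError("reputation scores must sum to a positive value")
    dim = len(local_params[0])
    return [
        sum(r * p[k] for r, p in zip(reputations, local_params)) / total
        for k in range(dim)
    ]

# Two hypothetical nodes; the second has three times the reputation.
global_params = aggregate([[1.0, 2.0], [3.0, 4.0]], [1.0, 3.0])
perfect = reputation(1.0, 1.0, 1.0, 1.0)
```

Because the second node carries more reputation, the aggregated parameters land closer to its local model than a plain average would.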

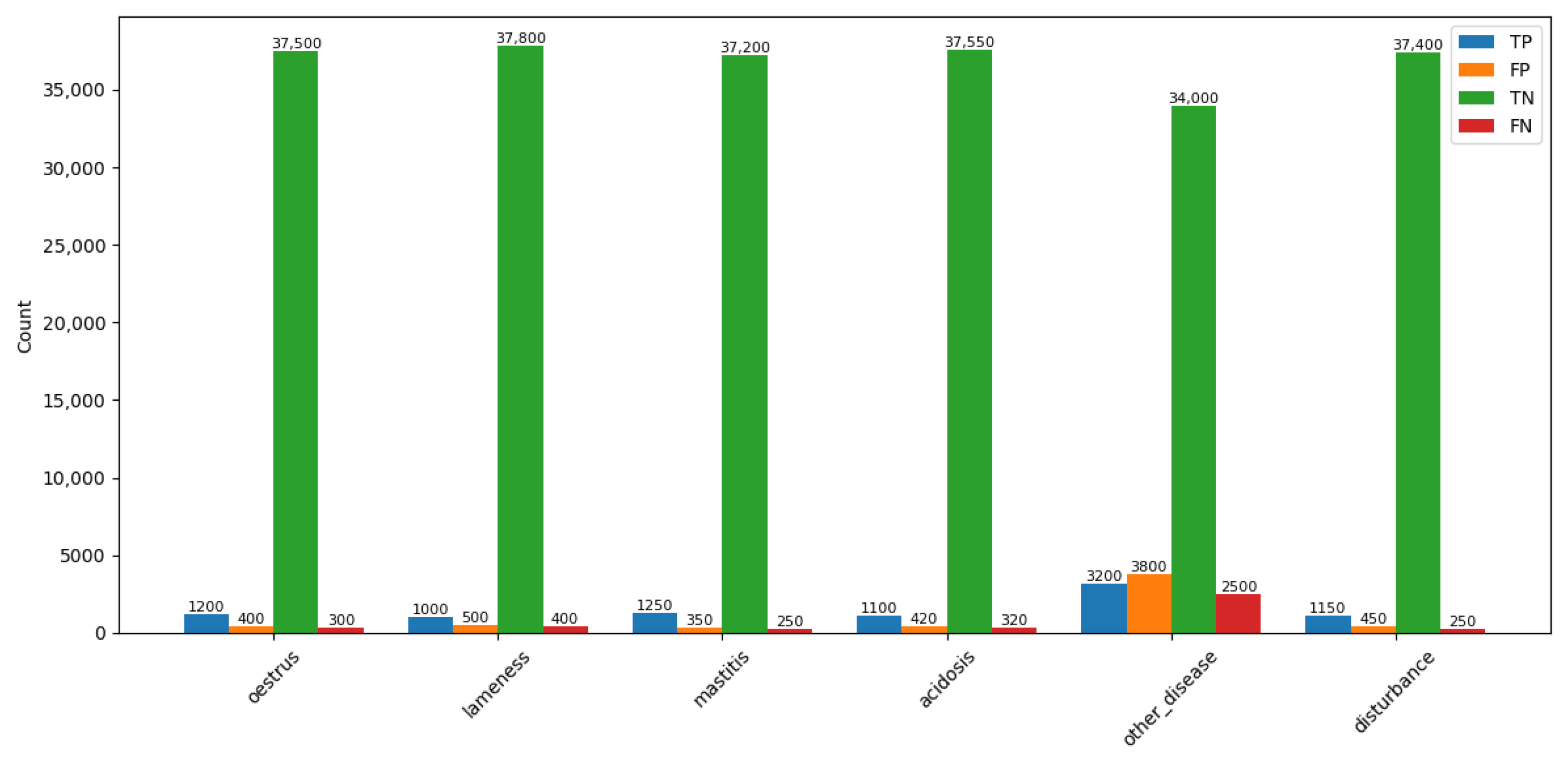

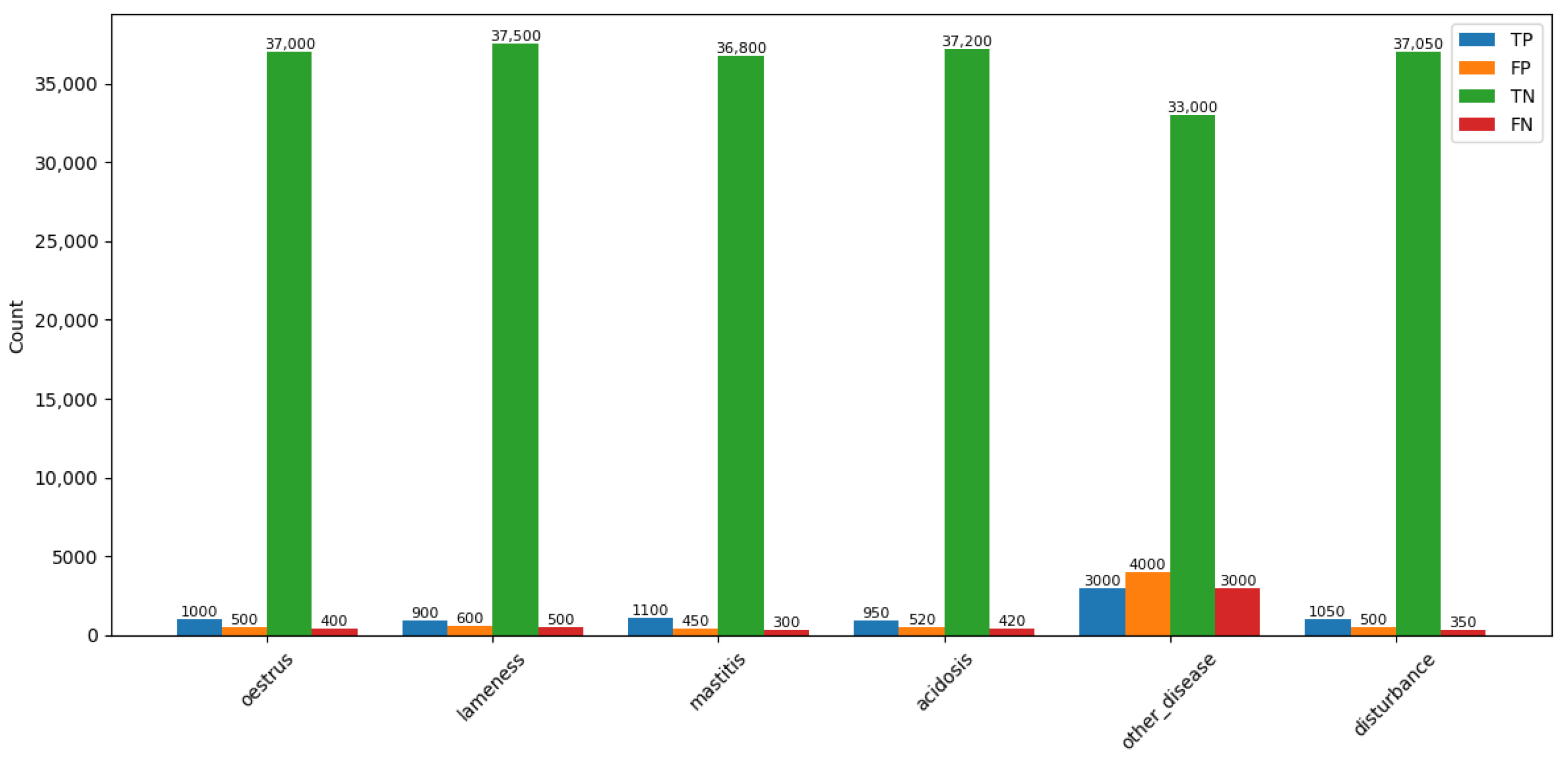

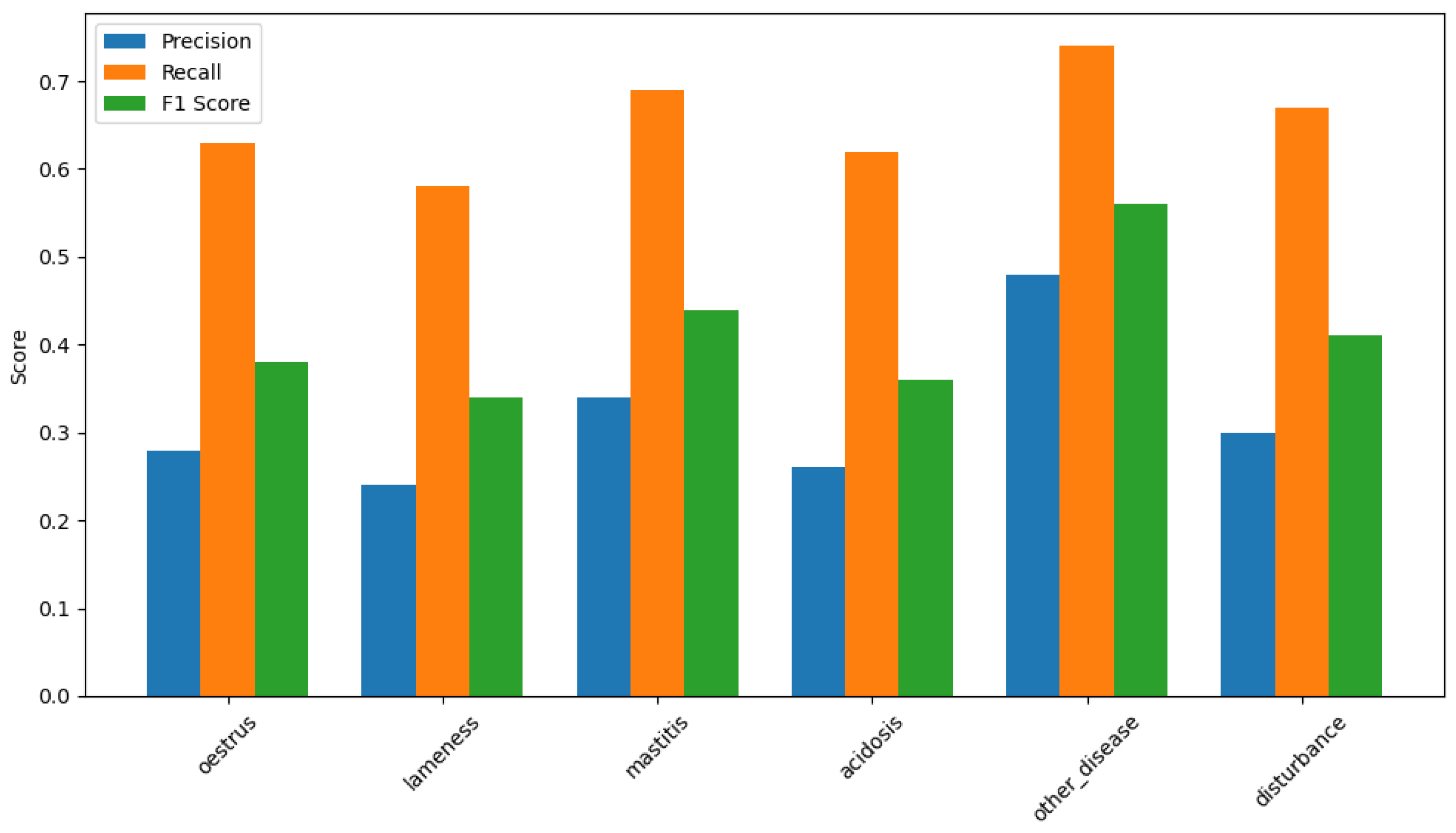

4. Results and Discussion

Blockchain Validation Overhead Analysis

5. Conclusions

6. Limitation and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GAT | graph attention network |
| FL | federated learning |
| DBSCAN | density-based spatial clustering of applications with noise |
| Non-IID | non-independent and identically distributed |
| MAML | model-agnostic meta-learning |
| TPS | transactions per second |
| TTF | time to finality |

References

1. Feng, L.; Zhao, Y.; Guo, S.; Qiu, X.; Li, W.; Yu, P. BAFL: A blockchain-based asynchronous federated learning framework. IEEE Trans. Comput. 2021, 71, 1092–1103.

2. Kalapaaking, A.P.; Khalil, I.; Rahman, M.S.; Atiquzzaman, M.; Yi, X.; Almashor, M. Blockchain-based federated learning with secure aggregation in trusted execution environment for internet-of-things. IEEE Trans. Ind. Inform. 2022, 19, 1703–1714.

3. Baucas, M.J.; Spachos, P.; Plataniotis, K.N. Federated learning and blockchain-enabled fog-IoT platform for wearables in predictive healthcare. IEEE Trans. Comput. Soc. Syst. 2023, 10, 1732–1741.

4. Qi, J.; Lin, F.; Chen, Z.; Tang, C.; Jia, R.; Li, M. High-Quality Model Aggregation for Blockchain-Based Federated Learning via Reputation-Motivated Task Participation. IEEE Internet Things J. 2022, 9, 18378–18391.

5. Wang, R.; Tsai, W.-T. Asynchronous federated learning system based on permissioned blockchains. Sensors 2022, 22, 1672.

6. Ali, M.; Karimipour, H.; Tariq, M. Integration of blockchain and federated learning for Internet of Things: Recent advances and future challenges. Comput. Secur. 2021, 108, 102355.

7. Myrzashova, R.; Alsamhi, S.H.; Shvetsov, A.V.; Hawbani, A.; Wei, X. Blockchain meets federated learning in healthcare: A systematic review with challenges and opportunities. IEEE Internet Things J. 2023, 10, 14418–14437.

8. Qammar, A.; Karim, A.; Ning, H.; Ding, J. Securing federated learning with blockchain: A systematic literature review. Artif. Intell. Rev. 2023, 56, 3951–3985.

9. Vahidian, S.; Morafah, M.; Chen, C.; Shah, M.; Lin, B. Rethinking data heterogeneity in federated learning: Introducing a new notion and standard benchmarks. IEEE Trans. Artif. Intell. 2023, 5, 1386–1397.

10. Wang, L.; Polato, M.; Brighente, A.; Conti, M.; Zhang, L.; Xu, L. PriVeriFL: Privacy-preserving and aggregation-verifiable federated learning. IEEE Trans. Serv. Comput. 2024, 18, 998–1011.

11. Zhang, X.; Li, Y.; Li, W.; Guo, K.; Shao, Y. Personalized federated learning via variational Bayesian inference. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022.

12. Xu, C.; Qu, Y.; Xiang, Y.; Gao, L. Asynchronous federated learning on heterogeneous devices: A survey. Comput. Sci. Rev. 2023, 50, 100595.

13. Ahmed, A.; Choi, B.J. FRIMFL: A fair and reliable incentive mechanism in federated learning. Electronics 2023, 12, 3259.

14. Kang, J.; Xiong, Z.; Niyato, D.; Xie, S.; Zhang, J. Incentive mechanism for reliable federated learning: A joint optimization approach to combining reputation and contract theory. IEEE Internet Things J. 2019, 6, 10700–10714.

15. Kang, J.; Xiong, Z.; Niyato, D.; Zou, Y.; Zhang, Y.; Guizani, M. Reliable federated learning for mobile networks. IEEE Wirel. Commun. 2020, 27, 72–80.

16. Kim, H.; Park, J.; Bennis, M.; Kim, S.-L. Blockchained on-device federated learning. IEEE Commun. Lett. 2019, 24, 1279–1283.

17. Zhang, Y.; Li, X.; Sun, Y.; Xue, A.; Zhang, Y.; Jiang, H.; Shen, W. Real-Time Monitoring Method for Cow Rumination Behavior Based on Edge Computing and Improved MobileNet v3. Smart Agric. 2024, 6, 29.

18. Sánchez, P.M.S.; Celdrán, A.H.; Xie, N.; Bovet, G.; Pérez, G.M.; Stiller, B. FederatedTrust: A solution for trustworthy federated learning. Future Gener. Comput. Syst. 2024, 152, 83–98.

19. Thejaswini, S.; Ranjitha, K.R. Blockchain in agriculture by using decentralized peer to peer networks. In Proceedings of the 2020 Fourth International Conference on Inventive Systems and Control (ICISC), Coimbatore, India, 8–10 January 2020; IEEE: New York, NY, USA, 2020.

20. Mishra, P.; Dash, R.K. Energy Efficient Routing Protocol and Cluster Head Selection Using Modified Spider Monkey Optimization. Comput. Netw. Commun. 2024, 2, 262–278.

21. Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

22. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

23. Chen, J.; Ma, T.; Xiao, C. FastGCN: Fast learning with graph convolutional networks via importance sampling. arXiv 2018, arXiv:1801.10247.

24. Pareja, A.; Domeniconi, G.; Chen, J.; Ma, T.; Suzumura, T.; Kanezashi, H.; Kaler, T.; Schardl, T.B.; Leiserson, C.E. EvolveGCN: Evolving graph convolutional networks for dynamic graphs. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2020; Volume 34.

25. Wang, X.; Ji, H.; Shi, C.; Wang, B.; Cui, P.; Yu, P.; Ye, Y. Heterogeneous graph attention network. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019.

26. McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Agüera y Arcas, B. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, Ft. Lauderdale, FL, USA, 20–22 April 2017.

27. Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated optimization in heterogeneous networks. In Proceedings of the Machine Learning and Systems 2, Austin, TX, USA, 2–4 March 2020; pp. 429–450.

28. Smith, V.; Chiang, C.-K.; Sanjabi, M.; Talwalkar, A. Federated multi-task learning. In Proceedings of the Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017.

29. Ghosh, A.; Chung, J.; Yin, D.; Ramchandran, K. An efficient framework for clustered federated learning. IEEE Trans. Inf. Theory 2022, 68, 8076–8091.

30. Wang, H.; Yurochkin, M.; Sun, Y.; Papailiopoulos, D.; Khazaeni, Y. Federated learning with matched averaging. arXiv 2020, arXiv:2002.06440.

31. Weng, J.; Weng, J.; Zhang, J.; Li, M.; Zhang, Y.; Luo, W. DeepChain: Auditable and privacy-preserving deep learning with blockchain-based incentive. IEEE Trans. Dependable Secur. Comput. 2019, 18, 2438–2455.

32. Zhang, W.; Lu, Q.; Yu, Q.; Li, Z.; Liu, Y.; Lo, S.K.; Chen, S.; Xu, X.; Zhu, L. Blockchain-based federated learning for device failure detection in industrial IoT. IEEE Internet Things J. 2020, 8, 5926–5937.

33. Dataset 1. Available online: https://entrepot.recherche.data.gouv.fr/dataset.xhtml?persistentId=doi:10.15454/52J8YS (accessed on 23 October 2024).

34. Dataset 2. Available online: https://www.kaggle.com/datasets/khushupadhyay/cow-health-prediction?resource=download (accessed on 4 April 2025).

| Aspect | Elephant Herd Behavior | Proposed Framework |
|---|---|---|
| Fission (Dynamic Cluster Formation) | Herds split into smaller subgroups to optimize resource use and reduce competition. | GAT clusters edge nodes based on health metrics, location, resource availability, temporal activity, and connectivity. |
| Fusion (Reintegration after Local Training) | Subgroups reunite when conditions improve for collective benefits like mating or defense. | DBSCAN clustering merges clusters with high model similarity. |
| Adaptive Resilience | Herds adapt to threats (e.g., predators) or opportunities (e.g., water sources) by restructuring. | Clusters auto-adjust based on real-time conditions (e.g., dropout due to poor connectivity) using event-aware attention. |
| Efficiency | Elephants minimize energy expenditure by forming optimal groups. | Reduces communication overhead by ensuring only relevant nodes collaborate. |
| Fault Tolerance | Weak or sick members may be excluded for herd survival. | Blockchain reputation system isolates malicious or unstable nodes. |
| Context Awareness | Herds adjust movements based on environmental conditions and threats. | Aligns collaborations with real-time needs such as health events and resource constraints. |

| Method | Training Framework | Fusion Criteria | Domain Adaptation | Data Heterogeneity | Computational Load | Privacy Preservation |
|---|---|---|---|---|---|---|
| Proposed Framework | Lightweight MAML for local adaptation to health conditions | DBSCAN with cosine model similarity and Jaccard-based event overlap | Supports health events like mastitis, respiratory anomalies, environmental shifts | Fully decentralized; supports regional health and behavioral variance | Optimized for edge devices with minimal model complexity | Raw data retained on local nodes; event similarity computed over anonymized metadata |
| FedAvg [26] | SGD on static local data | Weighted average | No domain semantics | Prone to divergence under non-IID | Lightweight but less adaptive | Preserves privacy by design |
| FedMA [30] | Local SGD with layer-wise neuron matching | Permutation-matched layer aggregation | Only structural layer matching | Handles moderate heterogeneity | Medium (requires Hungarian matching) | Preserves privacy; some structural metadata needed |
| IFCA [29] | Gradient descent with alternating clustering | Loss-based group reassignment | Task-shared, no event-specific adaptation | Requires good cluster initialization | Medium (multiple restarts and alternation) | Preserves privacy |
| MOCHA [28] | Multi-task learning with local solvers per node | Task-specific model aggregation via proximal updates | Learns related models, not explicitly event-aligned | Designed for statistical and systems heterogeneity | Medium; handles stragglers and variability | Preserves privacy; only model updates shared |

| Challenge | Description | Solution |
|---|---|---|
| Data Heterogeneity | Edge devices collect non-uniform data due to varying cattle health conditions, locations, and farm environments. | Dynamic fission–fusion clustering groups edge devices based on health metrics, geographical proximity, and activity levels, ensuring that local training occurs within more homogeneous clusters. |
| Non-IID Data | Federated learning nodes often have datasets that are not independent and identically distributed, leading to biased global models. | MAML-based local training personalizes the global model for each node, allowing rapid adaptation to specific conditions while maintaining alignment with overall training objectives. |
| Unreliable Model Aggregation | Traditional frameworks aggregate models without considering node performance, leading to the inclusion of poorly trained models. | Reputation-based node prioritization assigns scores based on model accuracy, resource availability, and timely updates, ensuring that only high-quality models contribute to global aggregation. |
| Model Integrity and Security | Malicious or poorly trained models can compromise the accuracy of the global model. | Blockchain-based model validation employs smart contracts to verify each model update based on accuracy thresholds, consistency metrics, and divergence checks before aggregation. |
| Device Heterogeneity | Edge devices differ in computational capacity and resource availability, affecting participation in federated learning. | The framework prioritizes high-reputation nodes for aggregation, ensuring that resource-constrained devices are not overburdened while maintaining model performance. |
| Privacy and Data Security | Sharing raw data across the network increases the risk of privacy breaches. | Federated learning ensures that only model updates, not raw data, are shared, while blockchain validation guarantees tamper-proof model integrity. |
| Bias in Global Model | Traditional aggregation methods can lead to models that underperform for specific clusters or regions. | Cross-cluster fusion ensures that high-quality models from diverse clusters are merged, producing a balanced global model that is representative of varying farm conditions. |

| Attribute | Dataset 1 [33] | Dataset 2 [34] |
|---|---|---|
| Total Rows | 40,247 | 178 |
| Total Columns | 19 | 14 |
| Dataset Size | 8.10 MB | 0.2 MB |
| Unique Cow IDs | 28 | 178 |
| Data Types | 13 int64, 5 float64, 1 object | 6 float64, 5 int64, 3 object |

| Metric | Proposed Work with Dataset 1 [33] | FedAvg | FedProx | Scaffold | MOON | Hierarchical FL |
|---|---|---|---|---|---|---|
| Model Accuracy (%) | 94.3 | 88.7 | 89.5 | 91 | 92.1 | 90.4 |
| Timeliness (s/round) | 8.7 | 12.6 | 11.9 | 10.5 | 9.4 | 11.3 |
| Resource Contribution (%) | 90.8 | 78.9 | 81.6 | 85.1 | 87 | 83.4 |
| Model Convergence (Rounds) | 36 | 52 | 48 | 44 | 40 | 46 |
| Health Insights (%) | 93.4 | 85.7 | 87.1 | 88.9 | 90 | 88.6 |
| Original Clusters | 13 | 10 | 11 | 12 | 12 | 11 |
| Fused Clusters | 6 | N/A | N/A | N/A | N/A | N/A |
| Adaptive Fusion Threshold | 0.84 | N/A | N/A | N/A | N/A | N/A |

| Metric | Standard Ethereum Blockchain |
|---|---|
| Validation Delay | 50 ms |
| Throughput | 18.2 TPS |
| Gas Used per Validation | 32,499 Gwei |
| Time-to-Finality (TTF) | 1201 ms |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ganesan, L.P.; Krishnan, S. Blockchain-Enabled, Nature-Inspired Federated Learning for Cattle Health Monitoring. Informatics 2025, 12, 57. https://doi.org/10.3390/informatics12030057
