Abstract

The advent of generative artificial intelligence (GenAI) has significantly transformed the educational landscape. While GenAI offers benefits such as convenient access to learning resources, it also introduces potential risks. This study explores the phenomenon of learning burnout among university students resulting from the misuse of GenAI in this context. A questionnaire was designed to assess five key dimensions: information overload and cognitive load, overdependence on technology, limitations of personalized learning, shifts in the role of educators, and declining motivation. Data were collected from 143 students across various majors at Shandong Institute of Petroleum and Chemical Technology in China. In response to the issues identified in the survey, the study proposes several teaching strategies, including cheating detection, peer learning and evaluation, and anonymous feedback mechanisms, which were tested through experimental teaching interventions. The results showed positive outcomes, with students who participated in these strategies demonstrating improved academic performance. Additionally, two rounds of surveys indicated that students’ acceptance of additional learning tasks increased over time. This research enhances our understanding of the complex relationship between GenAI and learning burnout, offering valuable insights for educators, policymakers, and researchers on how to effectively integrate GenAI into education while mitigating its negative impacts and fostering healthier learning environments. The dataset, including detailed survey questions and results, is available for download on GitHub.

1. Introduction

In recent years, the rapid advancement of artificial intelligence (AI) technologies, particularly generative artificial intelligence (GenAI) [1,2], exemplified by models like ChatGPT (version: GPT-4, developed by OpenAI, San Francisco, United States) [3], has significantly transformed the landscape of the education sector. These large models, by aggregating, analyzing, and disseminating vast amounts of human knowledge, have greatly enhanced the accessibility and availability of educational resources [4]. They not only enable personalized learning experiences but also foster greater interactivity through instant feedback and simulated dialogues. Educational platforms powered by these models can process massive amounts of data, assist teachers in refining instructional strategies, and overcome language and geographical barriers, thus promoting educational equity [5]. Junaid Qadir [6] proposed that GenAI technologies, such as the ChatGPT conversational agent, had the potential to enhance engineering education by offering personalized learning experiences, providing customized feedback and explanations, and creating realistic virtual simulations for hands-on learning, thus addressing the evolving needs of the engineering industry. Dilling et al. [7] proposed that large language models (LLMs) like ChatGPT hold significant potential for enhancing mathematics education, specifically in supporting university students’ mathematical proof processes, but highlighted challenges such as biases, data privacy, and over-reliance on AI tools, stressing the need for a balanced approach in utilizing LLMs for educational purposes.

However, despite the numerous innovations and conveniences that large models have introduced to education, their potential negative impacts are increasingly becoming apparent [8]. Under traditional educational models, students acquire knowledge primarily through a combination of teacher-led instruction and autonomous exploration [9]. The widespread application of large models has altered this paradigm, resulting in significant changes to students’ learning methodologies. Large models can swiftly provide answers, substantially reducing opportunities for students to engage in independent thinking, analysis, and problem-solving. Many students, especially those in lower grades who have not yet established a solid academic foundation, tend to rely on large models to complete assignments and projects, leading to a gradual decline in their abilities to think independently and solve problems. Excessive dependence on these technological tools not only constrains students’ critical thinking but also adversely affects their interest in learning and academic performance, and may ultimately lead to learning burnout [10].

Learning burnout refers to the phenomenon where students experience emotional exhaustion, loss of motivation, and diminished interest during the learning process [11,12]. It has become a significant psychological barrier affecting students’ academic development. Particularly in the context of large models, students’ dependency and information overload concurrently limit the autonomy and creativity of learning. Prolonged reliance on AI tools may not only weaken students’ critical thinking skills but also impede the development of their innovative capabilities and complex problem-solving abilities. As information retrieval becomes overly facile, students often cease to delve deeply into the underlying principles and structures of knowledge, resulting in superficial learning [13]. This shallow approach to learning restricts students’ comprehensive mastery of knowledge, causing them—especially those in the early stages of higher education—to overlook the importance of active learning, critical thinking, and practical experience, thereby exerting profound negative impacts on their future career development [14]. Therefore, effectively addressing students’ learning burnout in the era of large models has become an urgent educational challenge that needs to be resolved.

Milano et al. [15] analyzed the challenges that GenAI, especially ChatGPT, poses to higher education, examined the existing responses of universities and their associated issues, and proposed that academics could collaborate on publicly funded GenAI for educational use. Kasneci et al. [4] discussed the potential benefits and challenges of using GenAI in education, emphasizing the need for developing digital competencies, critical thinking, and fact-checking strategies among both teachers and students, while also addressing issues like bias, misuse, and the need for human oversight. Huang et al. [16] investigated the relationship between online peer feedback and learning burnout using deep learning models like BERT and Bi-LSTM, finding that suggestive feedback can reduce emotional exhaustion, while excessive positive or negative feedback may worsen burnout and self-learning behavior. Jeon et al. [17] explored the relationship between ChatGPT and teachers, identifying complementary roles for both in education, and highlighted the importance of teachers’ pedagogical expertise in effectively integrating AI tools like ChatGPT into instruction. Abd-alrazaq et al. [18] examined the potential and challenges of integrating GenAI into medical education, highlighting their transformative impact on curriculum, teaching, and assessments while addressing concerns such as bias, plagiarism, and privacy issues. Scholars collectively emphasized the increasing integration of GenAI in diverse educational contexts, highlighting their transformative potential while acknowledging differing opinions on their impact [19,20]. However, there is a shared sense of caution and concern regarding challenges such as ethical risks, pedagogical adaptation, and human–AI collaboration [21]. The findings advocate for balanced strategies that prioritize educational equity, interdisciplinary cooperation, and the preservation of human-centric learning principles amid technological advancements [22].

This study aims to investigate the causes, manifestations, and impacts of learning burnout among university students in the era of large-scale AI models. Based on these findings, this study seeks to propose effective instructional strategies to assist higher education instructors in fostering a positive learning attitude among students. Furthermore, it aspires to provide policy recommendations for educational administrators to optimize resource allocation and instructional model design, thereby enhancing the overall quality and effectiveness of education.

Section 2 conducts a survey with a designed questionnaire among students from specific majors, analyzes the results to explore students’ cognitive and emotional responses when using GenAI in learning, and reveals the advantages and risks of these models in the learning process. Section 3 identifies the five main causes of learning burnout and elaborates on their negative impacts on students’ academic development. Section 4 proposes teaching response strategies including cheating detection, peer learning and evaluation, and anonymous feedback mechanisms, and analyzes students’ acceptance and performance changes. Finally, Section 5 provides a summary and discussion.

2. Survey and Results Analysis

2.1. Survey Questionnaire

We designed a survey questionnaire, as shown in Table 1. Each question in the survey was rated using a five-point Likert scale (1 = strongly agree, 5 = strongly disagree) [23], with the aim of exploring the various cognitive and emotional responses that students may encounter when using GenAI for learning.

Table 1.

Survey questionnaire scale.

Question 1 aims to investigate the frequency and level of reliance on GenAI by students, assessing whether these models are commonly used in daily academic tasks. This question provides preliminary data on the popularity of GenAI in student learning. Questions 2 to 5 aim to explore whether students experience information overload when using GenAI and how this overload affects their deep understanding and learning outcomes [24,25]. Questions 6 to 9 investigate whether the rapid feedback from GenAI inhibits students’ deep thinking and problem-solving abilities [26,27]. Questions 10 to 13 focus on investigating the impact of the personalized learning features of GenAI on students’ learning behaviors and social interactions [28]. Questions 14 to 17 aim to analyze the impact of GenAI on the role of teachers in instruction [29,30]. Questions 18 to 20 focus on exploring the impact of GenAI on students’ learning motivation, sense of challenge, and academic interest [31,32].

2.2. Reliability Analysis

In this study, reliability analysis was performed after data collection from the completed questionnaires. A trial sample of 143 participants, selected from students across various majors, was used for this analysis. The questionnaire was administered anonymously, and the sample size was deemed adequate to ensure the robustness of the reliability results. The Cronbach’s Alpha values [33] for all dimensions meet the reliability requirements, indicating that the questionnaire exhibits a high level of internal consistency and is suitable for further analysis. Specifically, the overall Cronbach’s Alpha value for the questionnaire is 0.936, which is well above the commonly accepted reliability threshold of 0.7, demonstrating a high degree of internal consistency for the entire instrument. The Cronbach’s Alpha values for each dimension are as follows: 0.877, 0.859, 0.785, 0.779, and 0.759, as shown in Table 2. These values are all above 0.7, meeting the standard for reliability and indicating that each dimension shows adequate internal consistency in measuring the respective constructs [34]. Therefore, both the overall questionnaire and its individual dimensions provide a strong foundation for reliability, making it suitable for assessing students’ cognitive and emotional responses when using GenAI for learning.

Table 2.

Reliability analysis of the questionnaire and its dimensions.
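For readers who wish to reproduce the reliability figures from the published dataset, the following is a minimal sketch of the standard Cronbach's Alpha computation, alpha = k/(k − 1) · (1 − Σ item variances / variance of total scores). The function name `cronbach_alpha` and the input layout (one list of item scores per respondent) are assumptions made for illustration; the statistical software actually used in the study is not stated.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's Alpha for a block of Likert items.

    responses: list of respondents, each a list of k item scores
    (hypothetical layout; adapt to the actual dataset format).
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's Alpha needs at least two items")
    # Sample variance of each item across respondents
    item_vars = [variance([r[i] for r in responses]) for i in range(k)]
    # Sample variance of each respondent's total score
    total_var = variance([sum(r) for r in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Toy data only, not taken from the survey
toy = [[1, 1], [2, 3], [3, 2]]
print(round(cronbach_alpha(toy), 3))
```

Applying the same function to the columns of a single dimension (for example, Questions 2 to 5) would give the per-dimension value, and applying it to all items would give the overall value.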

2.3. Survey Results

The survey was conducted among 143 students from the Computer Science and Technology, Internet of Things Engineering, and Digital Economy majors at Shandong Institute of Petroleum and Chemical Technology, China. The survey results are shown in Table 3, and the average scores were calculated according to the agreed-upon five-point Likert scale scoring system. We have organized the survey and its results into a dataset, which can be accessed through https://github.com/dongxr2/LLM_Burnout_Dataset/ (accessed on 19 April 2025).

2.4. Survey Result Analysis

As presented in Table 3, an overwhelming majority of respondents, 77.62% of the 143 students surveyed, reported using GenAI frequently to assist with their academic tasks (Q1: M = 1.92, SD = 0.82). In this context, “M” refers to the mean score, indicating the average level of agreement among respondents, while “SD” denotes standard deviation, which measures the dispersion or variability of the responses around the mean. Specifically, 37.06% strongly agreed, and 40.56% agreed with this statement. These findings indicate that GenAI has become a mainstream tool in the academic practices of students. The high frequency of use may be attributed to its efficiency in generating answers, which raises concerns regarding the potential risks associated with technological dependence.

Table 3.

Survey results.
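The per-question statistics reported in this section (M, SD, and the combined share of "strongly agree" and "agree") can be derived from raw responses along the following lines. This is a sketch under assumptions: the helper name `summarize_item` is hypothetical, and the sample standard deviation is used here because the text does not say whether the sample or population form was applied.

```python
from statistics import mean, stdev


def summarize_item(scores):
    """Summarize one Likert item (1 = strongly agree ... 5 = strongly disagree)."""
    n = len(scores)
    # Scores of 1 or 2 count as "Agree + Strongly Agree"
    agree = sum(1 for s in scores if s <= 2)
    return {
        "M": round(mean(scores), 2),
        "SD": round(stdev(scores), 2),  # sample SD (assumption)
        "agree_pct": round(100 * agree / n, 2),
    }


# Toy responses only, not taken from the survey
print(summarize_item([1, 2, 2, 3, 5]))
```

Because 1 denotes "strongly agree", a lower mean indicates stronger agreement with the statement.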

In examining the aspect of information processing (Q2–5), it was found that 43.36% of students acknowledged experiencing “information overload” due to GenAI (Q2: M = 2.71). Additionally, 53.85% of students felt that they did not achieve a deep understanding of the knowledge after utilizing GenAI (Q3: M = 2.52), and 38.46% reported feelings of mental overload (Q5: M = 2.94). This implies that excessive information may directly exacerbate cognitive load. When external information input exceeds working memory capacity, the effectiveness of deep learning is compromised.

Turning to the aspects of independent thinking and problem-solving skills (Q6–9), while the majority of students did not believe that GenAI significantly undermined their ability to think independently (with average scores for Q6–9 ranging from 2.98 to 3.27, leaning towards “neutral” to “disagree”), it is concerning that 26.57% reported relying on GenAI for answers to questions (Q6: Agree + Strongly Agree), and 39.16% felt that opportunities for independent thinking had decreased (Q8). This contradiction may reflect a lack of awareness among students regarding the subtle impacts of dependence on tools on their metacognitive abilities [35]. An overreliance on immediate feedback may diminish goal-setting and strategy-monitoring capabilities, thus leading to a cycle of “shallow learning”.

Focusing on the personalized features of GenAI (Q10–13), the results indicated that these features did not significantly disrupt students’ capacity to set long-term learning goals (Q10: M = 2.80), though 34.27% acknowledged a lack of regulatory ability over their learning strategies (Q11: Agree + Strongly Agree). In terms of social interaction, only 27.97% of respondents felt that GenAI caused a sense of isolation (average scores for Q12 and Q13 were between 3.30 and 3.51), suggesting that the social substitution effect of GenAI is limited. This may be attributed to the prevailing dominance of face-to-face collaboration in current educational settings. However, it also highlights the risk of “filter bubbles” stemming from excessive personalization.

Examining the role of teachers and the teaching authority (Q14–17), a significant majority, ranging from 72.03% to 74.82%, disagreed with the notion that the role of teachers has been diminished by GenAI (Q14, Q16: M = 4.01). Furthermore, 46.16% believed that a lack of teacher guidance would lead to mechanical learning (Q17: M = 2.80). These results support the view that the cognitive guidance and emotional support provided by teachers are irreplaceable. However, Q15 indicated that 26.57% of students prefer AI feedback over that of teachers, suggesting a need for educators to reposition their roles from “knowledge deliverers” to “cognitive coaches”.

Regarding learning motivation and academic interest (Q18–20), despite a majority of students denying that GenAI diminishes the challenge of learning (Q18: M = 3.50) and their academic interest (Q19: M = 3.42, Q20: M = 3.10), between 21.68% and 32.87% expressed a positive alignment with these sentiments. According to self-determination theory [36], a reliance on tools may undermine intrinsic motivation, such as curiosity, especially when the difficulty of tasks is skewed by AI assistance. Educators need to design tasks that present “optimal challenges” to maintain motivation, ensuring that students continue to engage meaningfully with their learning processes.

3. Analysis of the Causes and Impacts

Based on the research and analysis presented in Section 2, the primary causes of learning burnout among university students in the era of large models can be summarized into the following five aspects.

3.1. Information Overload and Cognitive Load

Large models provide students with an abundance of learning resources and information, which can be accessed anytime through AI tools. However, this excess of information has become a significant source of learning burnout. Information overload refers to the cognitive pressure and decision-making difficulties that individuals face when the volume of information exceeds their processing capacity. In the era of large models, students are often overwhelmed by the sheer volume of information and knowledge points, making it difficult to effectively filter and process, thereby increasing the cognitive load. Although AI provides immediate answers, students rarely have the opportunity to deeply reflect, analyze, or critically synthesize the information. This superficial mode of learning leaves students feeling confused and fatigued, contributing to feelings of burnout. Moreover, while AI-generated answers are quick and accurate, they often lack contextual depth and theoretical support, which can trap students in a state of shallow learning. The knowledge obtained through AI tools is often not deeply processed, leading to a lack of comprehensive understanding and long-term memory of core concepts, resulting in a sense of knowledge fragmentation. This, in turn, increases the difficulty and exhaustion of learning.

3.2. Overdependence on Technology and the Loss of Autonomous Learning Abilities

The application of large models has led to an increasing reliance on technological tools in the learning process, and this dependency is another critical factor contributing to learning burnout. While AI tools can offer immediate assistance, they do not necessarily cultivate students’ abilities for autonomous learning or complex problem-solving. Students, by quickly obtaining answers without engaging in deep thinking or reflection, may satisfy short-term learning needs but overlook the importance of independent thought and critical analysis. In this context, students may become disillusioned with learning, especially when facing tasks without AI assistance. They often lack sufficient intrinsic motivation for self-directed learning and problem-solving capabilities. This overreliance on AI weakens students’ internal learning motivation, as they tend to depend more on technology to complete tasks rather than actively engage in thinking and problem-solving, leading to a lack of intrinsic drive to learn, and ultimately, to burnout.

3.3. Limitations of Personalized Learning and the Lack of Self-Management Skills

The personalized learning offered by large models is highly adaptable, adjusting content and difficulty according to students’ interests and progress. However, the limitation of such personalized learning lies in its inability to effectively cultivate students’ self-management skills. While personalized learning focuses on providing tailored learning paths based on immediate feedback and customization, it often neglects the development of students’ long-term learning goals, time management, and self-motivation. Additionally, within a personalized learning environment, students’ learning processes may become overly isolated. With the widespread use of AI tools, students’ learning activities become increasingly self-directed, reducing opportunities for social interaction and collaborative learning [28]. This independent learning model can lead to feelings of loneliness and isolation, which in turn heightens the emotional burden of learning burnout. The absence of peer support and collaborative opportunities makes it difficult for students to receive emotional and motivational encouragement when facing academic challenges, ultimately decreasing their interest and engagement in learning.

3.4. Changes in the Role of Teachers and Challenges in Teaching Methods

The introduction of large models has significantly altered the role of teachers and teaching methods. In traditional teaching models, teachers act as knowledge transmitters and classroom guides, playing an essential role in stimulating students’ interest and cultivating their thinking skills. However, in the era of large models, many students begin to rely on AI tools for acquiring knowledge and completing assignments, leading to a gradual reduction in the role of teachers in the classroom. This shift in role not only affects the quality of teaching but also impacts students’ learning experiences. Without effective guidance and personalized support from teachers, students may feel lost and frustrated, leading to burnout. Moreover, teachers, faced with the challenges of teaching in the era of large models, must navigate the balance between technology and humanistic education. If teachers fail to leverage their roles in classroom management, emotional support, and fostering critical thinking, students’ learning experiences may become more mechanized and disengaged, further exacerbating learning burnout.

3.5. Decline in Learning Motivation and the Loss of Innovation Ability

Large models, by providing immediate feedback and simplified learning pathways, make learning quicker and easier for students. However, excessive reliance on these convenient learning methods often leads to a decline in students’ motivation for learning itself. In the era of large models, students obtain instant answers through AI tools, which, while reducing the difficulty of learning, also diminish the challenges and sense of achievement that are central to intrinsic motivation. This results in a reduction in motivation and stifles students’ ability to innovate, a situation particularly pronounced among students with weaker foundational knowledge. Since the solutions provided by AI tools are typically based on existing data and patterns, students are more likely to adopt standardized answers rather than engage in exploratory thinking and creative problem-solving. This “standardized” mode of learning limits students’ innovative abilities, and over time, their interest in new knowledge and curiosity for learning diminishes, leading to burnout.

4. Teaching Strategies

4.1. Specific Measures

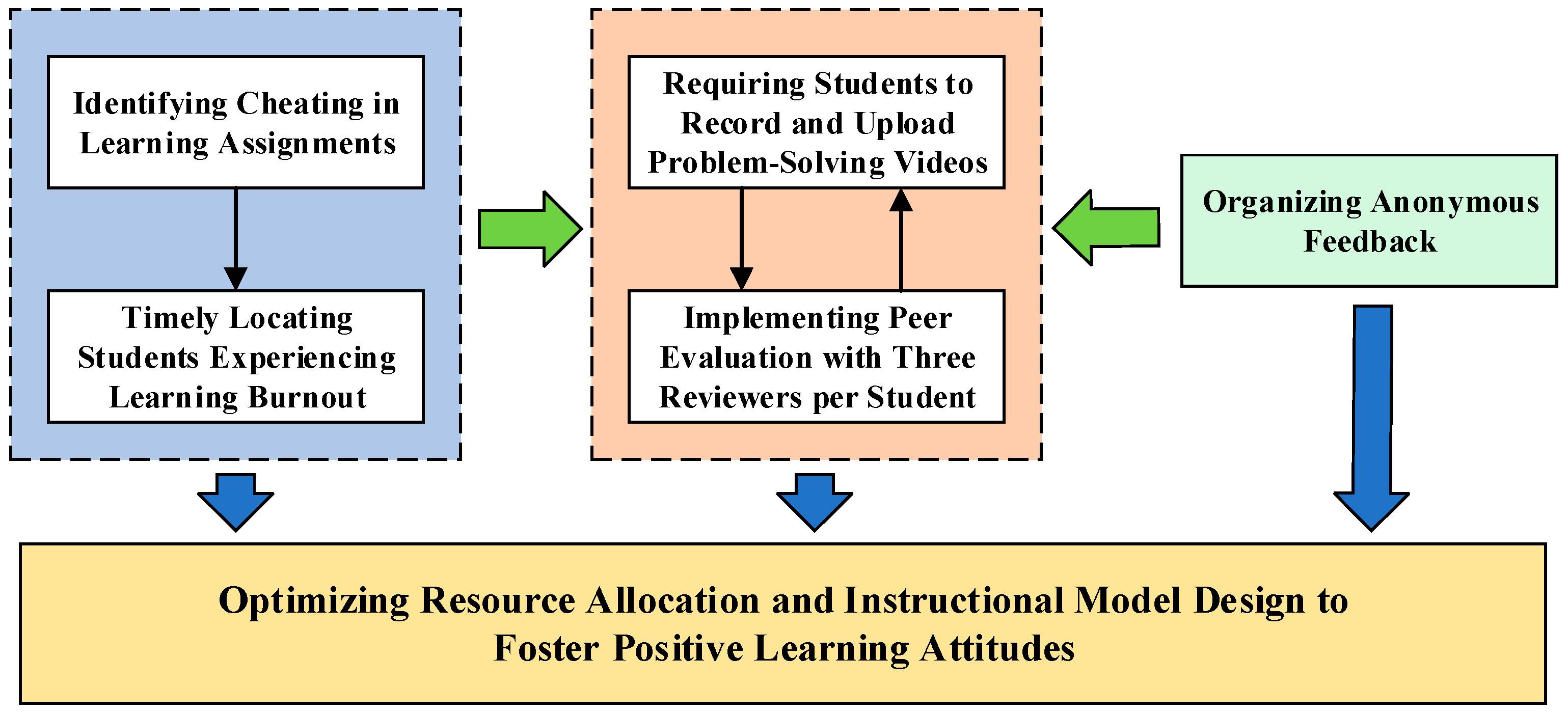

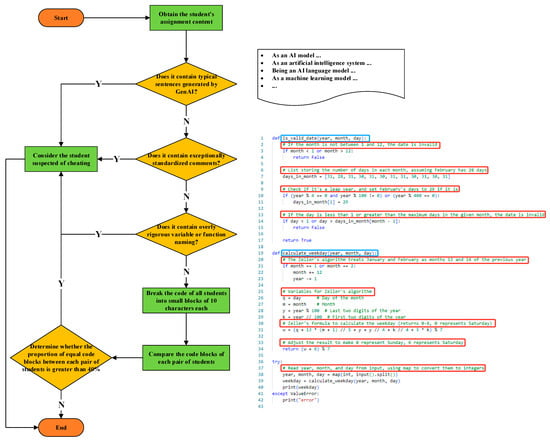

Through continuous practice and research in teaching, this study, using programming language instruction as a case study, gradually explored and formulated response strategies from the following three aspects, establishing a closed-loop process as illustrated in Figure 1.

Figure 1.

Closed-loop process of teaching response strategies.

4.1.1. Identifying Cheating in Learning Assignments and Quickly Locating Students Experiencing Learning Burnout

To effectively identify students experiencing learning burnout or even refusing to learn due to excessive reliance on GenAI, this study designed and developed corresponding software tools. Currently, this tool is primarily integrated into the OnlineJudge system [37] for detecting code assignments in programming courses. However, the rules can also be applied to the detection of electronic assignments submitted via platforms such as Xuexitong [38], email, or cloud storage. The main principle is to compare the repetition rate of submitted assignments and analyze submission timestamps to detect behaviors such as using AI tools to complete assignments, plagiarizing other students’ work, or showing a lack of genuine effort. The specific detection rules are shown in Table 4. With this method, teachers can promptly identify students facing learning burnout and take appropriate intervention measures to alleviate cognitive load, thereby helping them regain motivation for learning.

Table 4.

The specific detection rules for identifying cheating in learning assignments.
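The repetition-rate comparison described above can be sketched as a pairwise similarity check over submitted code. The sketch below is illustrative only: the function names, the use of Python's `difflib.SequenceMatcher` as the similarity measure, and the 0.9 threshold are all assumptions, not the rules or implementation of the tool integrated into the OnlineJudge system.

```python
from difflib import SequenceMatcher
from itertools import combinations


def repetition_rate(a, b):
    """Similarity of two submissions as a ratio in [0, 1]."""
    return SequenceMatcher(None, a, b).ratio()


def flag_similar_pairs(submissions, threshold=0.9):
    """Return (student_a, student_b, rate) for pairs at or above the threshold.

    submissions: dict mapping a student id to submitted source text.
    threshold: hypothetical cut-off; a real deployment would tune it.
    """
    flagged = []
    for (s1, c1), (s2, c2) in combinations(sorted(submissions.items()), 2):
        rate = repetition_rate(c1, c2)
        if rate >= threshold:
            flagged.append((s1, s2, round(rate, 2)))
    return flagged


# Toy submissions only
subs = {
    "a": "int main(){return 0;}",
    "b": "int main(){return 0;}",
    "c": "print('completely different text here')",
}
print(flag_similar_pairs(subs))
```

A flagged pair is only a prompt for the teacher to look more closely; short assignments with a single natural solution can legitimately produce near-identical code.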

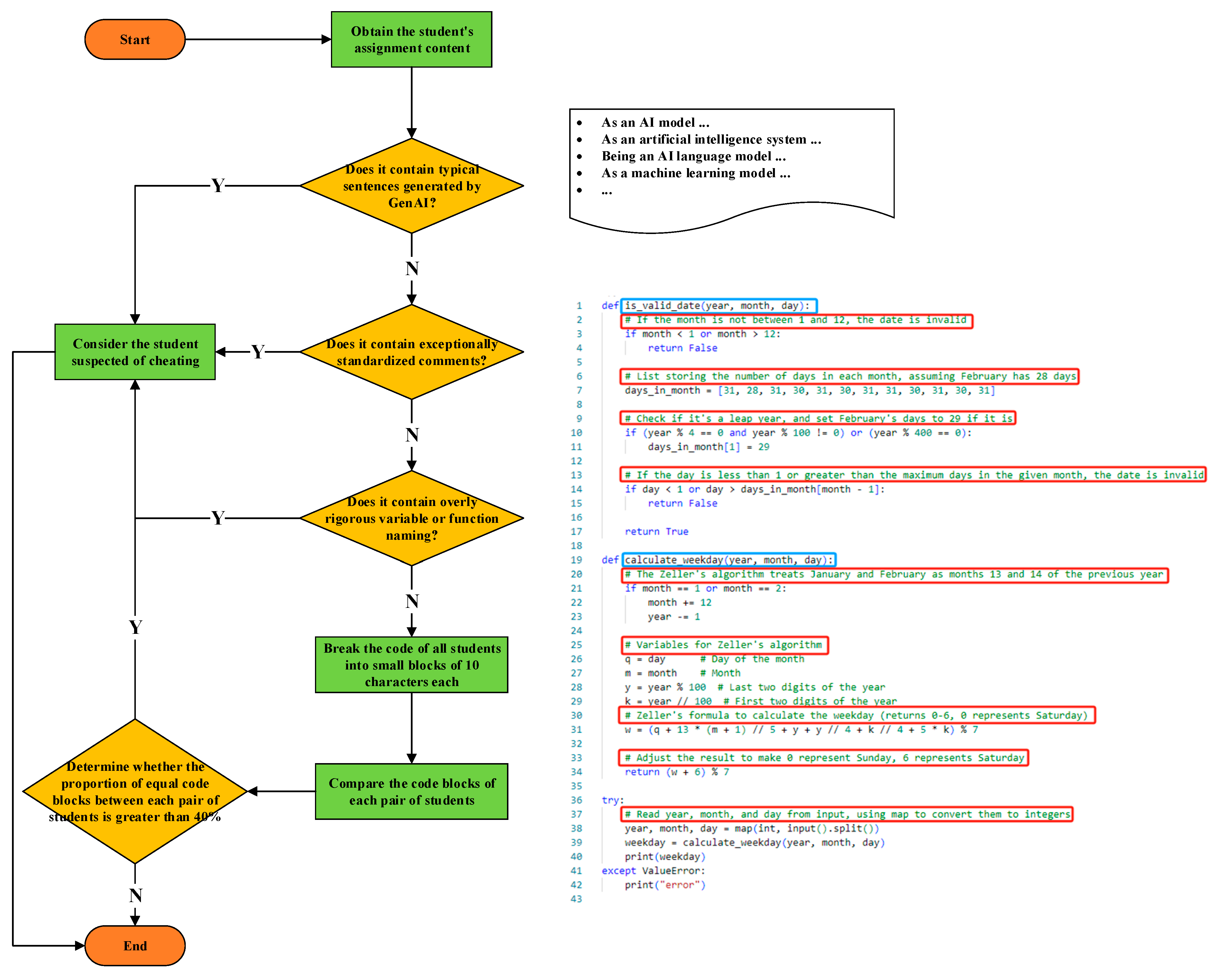

The detection process and rule examples for the Submitted Content dimension are shown in Figure 2. In the code example shown in Figure 2, red highlights represent highly standardized comments, while blue highlights indicate exceptionally well-named functions or variables. In most cases, when submitting short coding assignments or test solutions, students tend to overlook these aspects in order to save time.

Figure 2.

The detection process and rule examples for the submitted content dimension.

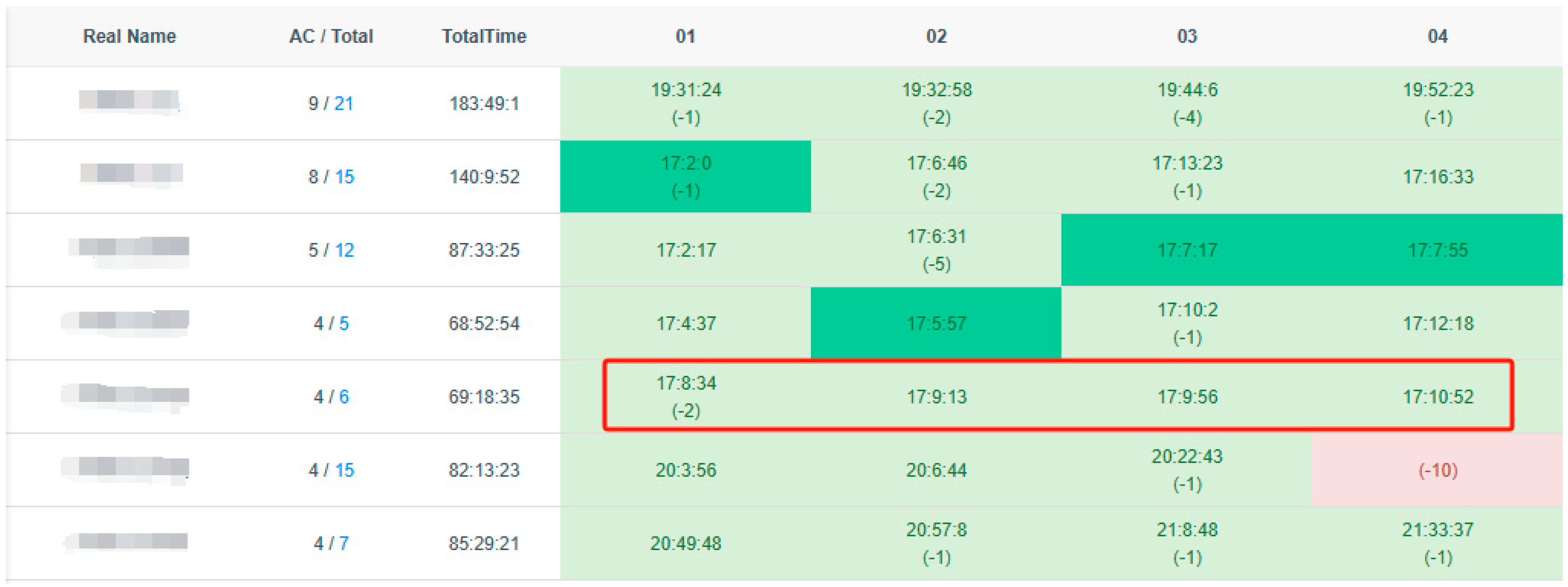

The detection of the behavioral patterns and behavioral characteristics dimension is primarily based on timestamps, with examples of detected behaviors illustrated in Figure 3. In Figure 3, the red boxes highlight suspected cheating behaviors, as it is normally not possible to complete assignments within such a short period of time.

Figure 3.

An example of suspected cheating behaviors in the behavioral patterns and behavioral characteristics dimension.
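A timestamp-based rule of this kind can be sketched as follows. The record layout, the function name `flag_rapid_submissions`, and the two-minute minimum gap are assumptions chosen for illustration; the actual thresholds used in the study are not reproduced here.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def flag_rapid_submissions(records, min_gap=timedelta(minutes=2)):
    """Flag consecutive submissions to different problems that are implausibly close.

    records: iterable of (student_id, problem_id, submitted_at) tuples.
    min_gap: hypothetical minimum plausible time between two problems.
    """
    by_student = defaultdict(list)
    for student, problem, submitted_at in records:
        by_student[student].append((submitted_at, problem))

    flagged = []
    for student, subs in by_student.items():
        subs.sort()  # chronological order per student
        for (t_prev, p_prev), (t_cur, p_cur) in zip(subs, subs[1:]):
            if p_cur != p_prev and t_cur - t_prev < min_gap:
                flagged.append((student, p_prev, p_cur))
    return flagged


# Toy records only
records = [
    ("s1", "P1", datetime(2025, 3, 1, 10, 0, 0)),
    ("s1", "P2", datetime(2025, 3, 1, 10, 0, 40)),
    ("s1", "P3", datetime(2025, 3, 1, 10, 30, 0)),
    ("s2", "P1", datetime(2025, 3, 1, 10, 0, 0)),
    ("s2", "P2", datetime(2025, 3, 1, 10, 20, 0)),
]
print(flag_rapid_submissions(records))
```

In this toy data only the 40-second gap between P1 and P2 for student s1 is flagged.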

4.1.2. Establishing Peer Learning and Evaluation Mechanisms to Ensure True Mastery of Knowledge

Traditional assignment evaluation models typically rely on one-way feedback from teachers, which is often ineffective in stimulating students’ interest in learning or promoting deep understanding. Therefore, this study proposes a peer learning and evaluation mechanism, requiring students to record problem-solving videos and upload them to the learning management platform along with their assignments. Other students are tasked with scoring and providing feedback on these videos. This approach not only encourages students to explain the knowledge in-depth, reducing their reliance on AI tools for direct answers, but also fosters an awareness of their peers’ problem-solving approaches through the evaluation process. This further strengthens their understanding and mastery of the material. Through peer evaluation, teachers can also gather multi-dimensional feedback on students’ learning progress without excessively increasing their workload. In preliminary experiments, a “three-person evaluation per student” peer review model was adopted, not significantly increasing students’ workload while ensuring the objectivity and fairness of the evaluation.
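One simple way to realize a "three-person evaluation per student" scheme is a shuffled circular assignment, in which each student reviews the next three students in a random ordering. The sketch below is an assumption about how such an allocation could be made, not a description of the platform's actual mechanism; the function name `assign_reviewers` and its parameters are hypothetical.

```python
import random


def assign_reviewers(students, reviews_per_student=3, seed=None):
    """Map each reviewer to the peers whose videos they evaluate.

    Every video receives exactly `reviews_per_student` reviews, every
    student writes the same number, and nobody reviews their own work.
    """
    if len(students) <= reviews_per_student:
        raise ValueError("need more students than reviews per student")
    order = list(students)
    random.Random(seed).shuffle(order)  # randomize who reviews whom
    n = len(order)
    return {
        order[i]: [order[(i + k) % n] for k in range(1, reviews_per_student + 1)]
        for i in range(n)
    }


# Toy class list only
assignment = assign_reviewers(["a", "b", "c", "d", "e", "f"], seed=0)
for reviewer, authors in assignment.items():
    print(reviewer, "reviews", authors)
```

The circular design keeps the workload equal across students, which is consistent with the aim of not significantly increasing any individual's burden.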

4.1.3. Establishing an Anonymous Feedback Mechanism to Adjust Assignment Difficulty and Intensity Timely and Reasonably

To gain real-time insights into students’ learning status and make appropriate course adjustments, this study introduced an anonymous feedback mechanism. Teachers track students’ learning progress and performance through assignments, videos, cheating detection results, and peer feedback. These data provide valuable support for adjusting assignment difficulty and the pace of teaching. In addition, regular surveys help teachers collect feedback on assignment difficulty and workload, avoiding situations where excessive or insufficient assignment demands lead to student stress or negative emotional reactions, which may trigger a different form of learning burnout. With these timely and effective feedback mechanisms, teachers can more flexibly adjust their teaching strategies, ensuring that students are appropriately challenged without becoming overly fatigued or losing interest in learning.

4.2. Changes in Students’ Willingness to Accept

The implementation of the proposed teaching response strategies has undoubtedly increased students’ workload. To analyze their acceptance of these additional tasks, primarily the recording of problem-solving videos, two rounds of questionnaire surveys were conducted during the implementation process. The objective was to explore students’ psychological and behavioral changes during the adaptation process and to provide empirical evidence for further optimization of the teaching model. The details and results of the surveys are presented in Table 5.

Table 5.

The details and results of the surveys regarding changes in students’ willingness to accept.

Comparing the results of the two surveys, students underwent a psychological transition from initial discomfort to gradual adaptation and eventual acceptance of the problem-solving video recording format. This process reflects not only the natural progression of adaptive learning [39], but also the reduction in cognitive load [40] and the enhancement of emotional regulation abilities [41]. At the initial stage, students experienced significant anxiety and discomfort, with task difficulty and technological challenges being the primary obstacles. However, over time, through repeated exposure and practice, students’ unfamiliarity with the technical aspects diminished, and they began to recognize the value of problem-solving video recording in their learning process, gradually shifting towards a more positive stance. This change can also be explained through emotional regulation theory. Initially, students’ negative emotions (e.g., stress and anxiety) were gradually replaced by positive emotions (e.g., a sense of achievement and confidence), especially as the recording time decreased and the task became more manageable. Ultimately, students’ emotional responses toward the task became increasingly positive, leading to greater willingness to actively engage in problem-solving video recording. Based on the survey results in Table 5, the following aspects were analyzed.

4.2.1. Changes in Perceived Difficulty of Problem-Solving Video Recording

There was a significant shift in students’ perception of problem-solving video recording difficulty between the first and second surveys. In the first survey, approximately 94.91% of students found the problem-solving video recording “difficult” or “very difficult”, indicating that most students experienced substantial pressure when completing this task. Several factors may have contributed to this challenge. First, the problem-solving video recording requires not only a solid understanding of the problem but also strong verbal communication skills, technical proficiency, and familiarity with recording tools. For students lacking technical expertise, the difficulty of operating the software and ensuring video quality might have imposed an additional cognitive burden. However, the second survey revealed a marked improvement. A majority (75.38%) of the students rated the recording difficulty as “moderate”, suggesting that their ability to adapt to this task improved over time. From a psychological perspective, this change aligns with the adaptive learning theory, which posits that students, through continuous practice and experience accumulation, gradually reduce anxiety and discomfort associated with new tasks. Although 4.62% of students still perceived problem-solving video recording as “very difficult”, the overall trend indicates a substantial positive shift in students’ evaluations of task difficulty.

4.2.2. Changes in Time Required for Problem-Solving Video Recording

The time required to complete the problem-solving video recording task also changed significantly between the two surveys. In the first survey, 22.03% of students reported spending more than two hours on the task, whereas in the second survey, this proportion had almost disappeared. The second survey showed that 36.92% of students could complete the task within 15 min, and 40% within 30 min. This shift indicates a notable increase in students’ efficiency in completing the recording process. This reduction in time required suggests an improvement in students’ proficiency in executing the task. Through repeated practice, students became more efficient in problem-solving video recording, thereby reducing both cognitive load and time pressure. Notably, the decreased time investment likely influenced students’ perceptions of the task, contributing to a greater willingness to engage with this format.

4.2.3. Changes in Willingness to Accept Problem-Solving Video Recording

Students’ willingness to accept the problem-solving video recording showed a substantial shift between the two surveys. In the first survey, only 25.42% of students reported “actively accepting” this format, while the largest group (approximately 39%) indicated “passive acceptance”. A further 22.03% expressed “strongly passive acceptance”, and 13.56% explicitly stated that they did not accept this format. These results suggest that, at the initial stage, the introduction of the problem-solving video recording encountered significant resistance. Students’ negative attitudes may have stemmed from their unfamiliarity with the technical aspects of the task, concerns about excessive time consumption, or a general reluctance toward adopting new learning formats. In the second survey, however, 46.15% of students reported “actively accepting” problem-solving video recording, a substantial increase from the initial 25.42%. This improvement suggests that, as students continued to engage with the task, they gradually recognized its educational benefits, particularly in enhancing their problem-solving skills. Meanwhile, 41.54% of students remained in the “passive acceptance” category, indicating that although overall acceptance had improved, a portion of students had not yet become enthusiastic participants. Notably, only 6.15% of students expressed “strongly passive acceptance”, a sharp decline from the 22.03% recorded in the first survey, implying a reduction in students’ resistance toward this format. Additionally, the percentage of students who did not accept the problem-solving video recording dropped from 13.56% to 6.15%, further supporting the notion that students’ adaptation to this learning approach had improved. According to Cognitive Load Theory [42], as students became more familiar with the recording process, the cognitive load associated with the task decreased, leading to a corresponding increase in acceptance.
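The change in the share of students “actively accepting” the format can be checked with a standard two-proportion z-test. The sketch below is illustrative only and is not an analysis performed in this study. The counts (15 of 59 and 30 of 65) are an assumption, back-calculated from the reported percentages, because only percentages are given in this section; the function name is hypothetical. The test also treats the two surveys as independent samples, which is an approximation if the same students answered both rounds.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for H0: both groups share one proportion."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled proportion under the null hypothesis of no change.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# ASSUMPTION: counts are inferred from the reported percentages
# (15/59 = 25.42%, 30/65 = 46.15%); they are not stated in the text.
z, p = two_proportion_z_test(15, 59, 30, 65)
print(f"z = {z:.2f}, two-sided p = {p:.4f}")
```

The same function could be applied to the other response categories, for example the drop in non-acceptance from 13.56% to 6.15%, provided the underlying counts are taken from the published dataset rather than inferred.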

4.3. Changes in Students’ Performance

This section analyzes the performance of students from the Digital Economy major (Class of 2022 and Class of 2023) in the Python Programming course, focusing on their final exam results, as presented in Table 6. While students from the Class of 2022 exhibited better performance in their daily tasks, their final exam scores were less than satisfactory. Through post-exam discussions with the students, it became evident that their over-reliance on large-scale models, coupled with a lack of practical exercises, significantly contributed to their underperformance. This observation serves as one of the primary motivations for conducting this research. In contrast, students from the Class of 2023 followed the instructional approach outlined in Section 4.1 for their daily learning activities.

Table 6. Details and final exam results of students from the Digital Economy major (Class of 2022 and Class of 2023) in the Python Programming course.

The average scores of the two cohorts show a notable difference, with the Class of 2023 outperforming the Class of 2022 (78.61 vs. 75.74). Across the score brackets, the proportion of students in the high-score range (100–90) rose from 10.34% in the Class of 2022 to 15.25% in the Class of 2023. Similarly, in the 89–80 range, the percentage rose from 25.86% in the Class of 2022 to 35.59% in the Class of 2023, an improvement of 9.73 percentage points. This shift suggests that the instructional strategies proposed in this study were effective in the teaching practice of the Class of 2023. Meanwhile, the number of students scoring below 69 remained largely unchanged, suggesting that the performance of these students is less closely tied to their use of large-scale AI models. Their consistently low performance may be primarily attributed to poor study habits and a lack of motivation, leading to minimal engagement in academic activities.
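Because the comparison above involves differences between percentages, it helps to separate the change in percentage points from the relative change. The following minimal sketch uses the 89–80 bracket figures reported above; the function names are hypothetical and chosen only for illustration.

```python
def percentage_point_change(before_pct, after_pct):
    """Absolute difference between two percentages, in percentage points."""
    return after_pct - before_pct


def relative_change_pct(before_pct, after_pct):
    """Change expressed as a percentage of the starting value."""
    return (after_pct - before_pct) / before_pct * 100


# Share of students in the 89-80 bracket, as reported in the text.
before, after = 25.86, 35.59  # Class of 2022, Class of 2023

pp = percentage_point_change(before, after)
rel = relative_change_pct(before, after)
print(f"{pp:.2f} percentage points, {rel:.1f}% relative increase")
```

The two measures answer different questions: the first describes how much of the cohort moved into the bracket, while the second describes how large that move is compared with the starting share.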

5. Discussion

The findings of this study underscore the double-edged nature of GenAI in education: it can both enhance and challenge student learning, which is consistent with the concerns and analyses presented in the literature [4,5,6,18,19,20,21,22]. In exploring learning burnout among university students in the context of large-scale AI models, we focus on the causes of burnout, the effectiveness of the proposed response strategies, and the broader educational implications. The initiative proposed in this study focuses on enhancing student engagement, aligning with approaches such as flipped classrooms [43]. Because existing research in this area has often lacked practical implementation strategies [15,16,17], the findings of this study complement the existing literature with a practical exploration.

Our survey identified key contributors to learning burnout, primarily information overload and overdependence on technology. The data reveal a widespread sense of being overwhelmed, with 43.36% of students reporting information overload while using GenAI tools, an experience that undermines effective learning and critical thinking. The resulting superficial engagement with material can lead to fragmented knowledge, creating a disconnect between learned information and its practical application in real-world contexts. Furthermore, our results highlight a troubling trend of overreliance on GenAI, where such dependence diminishes students’ autonomous learning capabilities and fosters a sense of learned helplessness. As students frequently turn to AI for answers, their intrinsic motivation for learning declines correspondingly. Reliance on simplified responses also inhibits genuine engagement with learning tasks, ultimately resulting in stagnation in cognitive development and innovation.

In addressing these challenges, the study’s proposed instructional strategies, which center on collaborative learning, peer evaluation, and real-time feedback mechanisms, have shown promise in mitigating the negative impacts of learning burnout. The implementation of peer learning and evaluation allowed students to engage deeply with the material while fostering a collaborative learning environment, promoting not only cognitive understanding but also social interaction. The rise in students’ acceptance of this format, as shown by the changes in survey responses, further supports the potential of these strategies to enhance engagement and motivation. The integration of cheating detection tools serves as an immediate feedback mechanism, empowering educators to identify at-risk students and intervene proactively. This aspect of the intervention is crucial because timely support can help restore a sense of agency in students and counteract the feelings of burnout associated with technological dependence. Students’ acceptance of this tool reflects their willingness to recognize the importance of maintaining academic integrity while also benefiting from AI assistance.

From an educational standpoint, the findings advocate for a re-evaluation of how GenAI tools are integrated into instructional practices. The role of educators must evolve from traditional knowledge transmitters to facilitators of learning, where teaching strategies are designed to incorporate technology while simultaneously fostering critical thinking and problem-solving. The effectiveness of AI tools hinges on the pedagogical framework employed by instructors. Moreover, the findings suggest a need for educational systems to implement training programs focused on digital literacy, critical thinking, and the ethical use of AI tools. As students increasingly interact with GenAI models, equipping them with the skills to critically assess and utilize the technology responsibly becomes imperative.

This study provides valuable insights into the phenomenon of learning burnout associated with GenAI in higher education. However, it is important to acknowledge several limitations. First, the current sample size is relatively small, with participants being restricted to students from a limited set of majors within a single institution in China. Second, the focus on programming courses limits the broader applicability of the findings to other disciplines. Third, the current cheating detection rules primarily rely on subjective experience gained through the teaching process. When the machine-generated results were presented to the students, they acknowledged their cheating behaviors, which suggests a certain level of reliability in the detection system. However, the rules cannot be disclosed publicly, because students could then circumvent them. As a result, it has been challenging to accurately collect and build a comprehensive dataset on cheating behaviors. In future research, we aim to expand the scope of participants and course types, explore the long-term impact of AI tools in other fields of study and diverse student populations, and collect and construct a more accurate and comprehensive cheating behavior dataset without disrupting regular teaching activities. We will also focus on developing more precise performance evaluation algorithms.

In conclusion, this study emphasizes the critical issue of mitigating the learning burnout caused by the misuse of GenAI in higher education, with a focus on programming language teaching as a case study. While acknowledging the transformative potential of GenAI in enhancing educational experiences, we also highlight the vulnerabilities it introduces, particularly in fostering learning burnout. By identifying the root causes of this phenomenon and proposing targeted teaching strategies, this research offers valuable insights into how educators can effectively integrate AI tools while minimizing their negative impact on student well-being.

Author Contributions

Conceptualization, X.D. and Z.W.; methodology, X.D., Z.W. and S.H.; software, X.D.; validation, X.D., Z.W. and S.H.; formal analysis, X.D.; investigation, X.D.; resources, X.D.; data curation, X.D.; writing—original draft preparation, X.D.; writing—review and editing, X.D.; visualization, X.D.; supervision, X.D.; project administration, X.D.; funding acquisition, X.D., Z.W. and S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Shandong Provincial Undergraduate University Teaching Reform Research Key Project, grant number Z2022316; the Undergraduate Teaching Reform Project of Nanning Normal University, grant number 2025JGX049; the University-Level Teaching Research Project of Shandong Institute of Petroleum and Chemical Technology, grant number JGYB202458; and the Horizontal Project of Shandong Institute of Petroleum and Chemical Technology, grant number 2025HKJ0006.

Institutional Review Board Statement

This study was approved by the Ethics Committee of the School of Big Data and Basic Sciences, Shandong Institute of Petroleum and Chemical Technology, with code SK-2024-SH-058, on 30 August 2024.

Informed Consent Statement

Informed consent was obtained from the participants.

Data Availability Statement

The dataset supporting the results reported in this study is publicly available in the GitHub repository at https://github.com/dongxr2/LLM_Burnout_Dataset/ (accessed on 19 April 2025). The dataset contains detailed survey questions, results, and scores on a five-point Likert scale.

Acknowledgments

We would like to thank the students from the Computer Science and Technology 2021 cohort, Internet of Things Engineering 2021 cohort, and Digital Economy 2023 cohort at Shandong Institute of Petroleum and Chemical Technology, China, for their valuable contributions to this research. Their participation in the survey was essential to the success of this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Shanahan, M. Talking about Large Language Models. Commun. ACM 2024, 67, 68–79.

2. Xu, F.F.; Alon, U.; Neubig, G.; Hellendoorn, V.J. A Systematic Evaluation of Large Language Models of Code. In Proceedings of the 6th ACM SIGPLAN International Symposium on Machine Programming, San Diego, CA, USA, 13 June 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 1–10.

3. Welsby, P.; Cheung, B.M.Y. ChatGPT. Postgrad. Med. J. 2023, 99, 1047–1048.

4. Kasneci, E.; Sessler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for Good? On Opportunities and Challenges of Large Language Models for Education. Learn. Individ. Differ. 2023, 103, 102274.

5. Grassini, S. Shaping the Future of Education: Exploring the Potential and Consequences of AI and ChatGPT in Educational Settings. Educ. Sci. 2023, 13, 692.

6. Qadir, J. Engineering Education in the Era of ChatGPT: Promise and Pitfalls of Generative AI for Education. In Proceedings of the 2023 IEEE Global Engineering Education Conference (EDUCON), Kuwait, Kuwait, 1–4 May 2023; pp. 1–9.

7. Dilling, F.; Herrmann, M. Using Large Language Models to Support Pre-Service Teachers Mathematical Reasoning—An Exploratory Study on ChatGPT as an Instrument for Creating Mathematical Proofs in Geometry. Front. Artif. Intell. 2024, 7, 1460337.

8. Nguyen, A.; Ngo, H.N.; Hong, Y.; Dang, B.; Nguyen, B.-P.T. Ethical Principles for Artificial Intelligence in Education. Educ. Inf. Technol. 2023, 28, 4221–4241.

9. Byers, T.; Imms, W.; Hartnell-Young, E. Comparative Analysis of the Impact of Traditional versus Innovative Learning Environment on Student Attitudes and Learning Outcomes. Stud. Educ. Eval. 2018, 58, 167–177.

10. Zhang, J.-Y.; Shu, T.; Xiang, M.; Feng, Z.-C. Learning Burnout: Evaluating the Role of Social Support in Medical Students. Front. Psychol. 2021, 12, 625506.

11. Cazan, A.-M. Learning Motivation, Engagement and Burnout among University Students. Procedia—Soc. Behav. Sci. 2015, 187, 413–417.

12. Stoliker, B.E.; Lafreniere, K.D. The Influence of Perceived Stress, Loneliness, and Learning Burnout on University Students’ Educational Experience. Coll. Stud. J. 2015, 49, 146–160.

13. Astuti, D.P.; Kardiyem, K.; Setiyani, R.; Jaenudin, A. The Influence of Digital Literacy, Parental and Peer Support on Critical Thinking Skills in the AI Era. Int. J. Econ. Educ. Entrep. 2024, 4, 539–547.

14. Akhyar, Y.; Fitri, A.; Zalisman, Z.; Syarif, M.I.; Niswah, N.; Simbolon, P.; S, A.P.; Tryana, N.; Abidin, Z. Contribution of Digital Literacy to Students’ Science Learning Outcomes in Online Learning. Int. J. Elem. Educ. 2021, 5, 284–290.

15. Milano, S.; McGrane, J.A.; Leonelli, S. Large Language Models Challenge the Future of Higher Education. Nat. Mach. Intell. 2023, 5, 333–334.

16. Huang, C.; Tu, Y.; Han, Z.; Jiang, F.; Wu, F.; Jiang, Y. Examining the Relationship between Peer Feedback Classified by Deep Learning and Online Learning Burnout. Comput. Educ. 2023, 207, 104910.

17. Jeon, J.; Lee, S. Large Language Models in Education: A Focus on the Complementary Relationship between Human Teachers and ChatGPT. Educ. Inf. Technol. 2023, 28, 15873–15892.

18. Abd-alrazaq, A.; AlSaad, R.; Alhuwail, D.; Ahmed, A.; Healy, P.M.; Latifi, S.; Aziz, S.; Damseh, R.; Alrazak, S.A.; Sheikh, J. Large Language Models in Medical Education: Opportunities, Challenges, and Future Directions. JMIR Med. Educ. 2023, 9, e48291.

19. Ortega-Ochoa, E.; Sabaté, J.-M.; Arguedas, M.; Conesa, J.; Daradoumis, T.; Caballé, S. Exploring the Utilization and Deficiencies of Generative Artificial Intelligence in Students’ Cognitive and Emotional Needs: A Systematic Mini-Review. Front. Artif. Intell. 2024, 7, 1493566.

20. Duong, C.D.; Vu, T.N.; Ngo, T.V.N.; Do, N.D.; Tran, N.M. Reduced Student Life Satisfaction and Academic Performance: Unraveling the Dark Side of ChatGPT in the Higher Education Context. Int. J. Hum.-Comput. Interact. 2025, 41, 4948–4963.

21. Aad, S.; Hardey, M. Generative AI: Hopes, Controversies and the Future of Faculty Roles in Education. Qual. Assur. Educ. 2024, 33, 267–282.

22. Bin-Nashwan, S.A.; Sadallah, M.; Benlahcene, A.; Ma’aji, M.M. ChatGPT and Academic Integrity: The Role of Personal Best Goals, Academic Competence, and Workplace Stress. Foresight, 2025; ahead-of-print.

23. Taherdoost, H. What Is the Best Response Scale for Survey and Questionnaire Design; Review of Different Lengths of Rating Scale / Attitude Scale / Likert Scale. Int. J. Acad. Res. Manag. 2019, 8, 1–12.

24. Dai, S.; Xu, C.; Xu, S.; Pang, L.; Dong, Z.; Xu, J. Bias and Unfairness in Information Retrieval Systems: New Challenges in the LLM Era. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 6437–6447.

25. Arnold, M.; Goldschmitt, M.; Rigotti, T. Dealing with Information Overload: A Comprehensive Review. Front. Psychol. 2023, 14, 1122200.

26. Orrù, G.; Piarulli, A.; Conversano, C.; Gemignani, A. Human-like Problem-Solving Abilities in Large Language Models Using ChatGPT. Front. Artif. Intell. 2023, 6, 1199350.

27. Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.; Cao, Y.; Narasimhan, K. Tree of Thoughts: Deliberate Problem Solving with Large Language Models. Adv. Neural Inf. Process. Syst. 2023, 36, 11809–11822.

28. Soller, A. Supporting Social Interaction in an Intelligent Collaborative Learning System. Int. J. Artif. Intell. Educ. 2001, 12, 40.

29. Chan, C.K.Y.; Tsi, L.H.Y. Will Generative AI Replace Teachers in Higher Education? A Study of Teacher and Student Perceptions. Stud. Educ. Eval. 2024, 83, 101395.

30. Louis, M.; ElAzab, M. Will AI Replace Teacher? Int. J. Internet Educ. 2023, 22, 9–21.

31. Yıldız, T.A. The Impact of ChatGPT on Language Learners’ Motivation. J. Teach. Educ. Lifelong Learn. 2023, 5, 582–597.

32. Qu, K.; Wu, X. ChatGPT as a CALL Tool in Language Education: A Study of Hedonic Motivation Adoption Models in English Learning Environments. Educ. Inf. Technol. 2024, 29, 19471–19503.

33. Bujang, M.A.; Omar, E.D.; Baharum, N.A. A Review on Sample Size Determination for Cronbach’s Alpha Test: A Simple Guide for Researchers. Malays. J. Med. Sci. 2018, 25, 85–99.

34. Izah, S.C.; Sylva, L.; Hait, M. Cronbach’s Alpha: A Cornerstone in Ensuring Reliability and Validity in Environmental Health Assessment. ES Energy Environ. 2023, 23, 1057.

35. Schraw, G.; Moshman, D. Metacognitive Theories. Educ. Psychol. Rev. 1995, 7, 351–371.

36. Ryan, R.M.; Deci, E.L. Self-Determination Theory. In Encyclopedia of Quality of Life and Well-Being Research; Maggino, F., Ed.; Springer International Publishing: Cham, Switzerland, 2023; pp. 6229–6235. ISBN 978-3-031-17299-1.

37. Dong, Y.; Hou, J.; Lu, X. An Intelligent Online Judge System for Programming Training. In Database Systems for Advanced Applications; Nah, Y., Cui, B., Lee, S.-W., Yu, J.X., Moon, Y.-S., Whang, S.E., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 785–789.

38. Zhang, F.; She, M. The Effectiveness of “Xuexitong” in College English Teaching. In Proceedings of the 2020 3rd International Seminar on Education Research and Social Science (ISERSS 2020), Kuala Lumpur, Malaysia, 28 December 2020; Atlantis Press: Dordrecht, The Netherlands, 2021; pp. 487–490.

39. Liu, M.; McKelroy, E.; Corliss, S.B.; Carrigan, J. Investigating the Effect of an Adaptive Learning Intervention on Students’ Learning. Educ. Tech. Res. Dev. 2017, 65, 1605–1625.

40. Sweller, J. CHAPTER TWO—Cognitive Load Theory. In Psychology of Learning and Motivation; Mestre, J.P., Ross, B.H., Eds.; Academic Press: Cambridge, MA, USA, 2011; Volume 55, pp. 37–76.

41. Lopes, P.N.; Salovey, P.; Côté, S.; Beers, M. Emotion Regulation Abilities and the Quality of Social Interaction. Emotion 2005, 5, 113–118.

42. Schnotz, W.; Kürschner, C. A Reconsideration of Cognitive Load Theory. Educ. Psychol. Rev. 2007, 19, 469–508.

43. Lawan, A.A.; Muhammad, B.R.; Tahir, A.M.; Yarima, K.I.; Zakari, A.; Ii, A.H.A.; Hussaini, A.; Kademi, H.I.; Danlami, A.A.; Sani, M.A.; et al. Modified Flipped Learning as an Approach to Mitigate the Adverse Effects of Generative Artificial Intelligence on Education. Educ. J. 2023, 12, 136–143.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).