1. Introduction

Open-source software (OSS) is a distribution model in which the source code is freely available: users can install the software without purchasing it and can access its source code if they wish to contribute to its development. This model allows qualified users to test and fix bugs themselves instead of waiting for someone else to do it [1]. However, OSS communities still lack standard processes to ensure that the products they develop meet quality attributes [2]. Due to the significant increase in the number of non-developer users of OSS applications, there is growing interest in developing usable OSS [3,4]. Usability is an essential characteristic and is considered a quality attribute that evaluates how easy a graphical user interface is to use [5,6,7,8]. The human–computer interaction (HCI) field offers a wide variety of usability techniques, as evidenced by the catalog compiled by Ferré et al. [9], which contains 55 HCI techniques for integration into the software engineering development process. Several authors have reported low usability in OSS projects [3,4,10,11]. Incorporating usability into the OSS development process is complex due to the unusual characteristics of these communities. The main usability challenges faced by these projects include (i) a culture focused on developing functionality, (ii) the geographic dispersion of their members, (iii) scarcity of resources, and (iv) a culture that may be somewhat alien to interaction design. These characteristics prevent many HCI usability techniques from being adopted directly [12].

Our research determines how to incorporate three usability techniques (cognitive walkthrough, formal usability testing, and thinking aloud) into the development process of three OSS projects (Code::Blocks, Freeplane, and Scribus). To do so, we first analyzed and identified the impediments that must be resolved to apply these three techniques in the three OSS projects, considering their specific characteristics. Although a framework for integrating usability techniques into OSS development exists [12], it is general and does not detail the steps and artifacts that should be used to adapt the techniques. The solutions to these impediments correspond to the adaptations that must be made to the steps of each technique to allow its incorporation into the OSS development process. In other words, our research aims to improve usability in OSS projects by helping their developers recognize the value and benefits of applying evaluation techniques. To this end, such OSS projects must be evaluated with HCI methods that identify usability problems so that they can subsequently be solved.

Nielsen [13] states that usability is not a single, one-dimensional property of a user interface but comprises multiple components, such as learnability, efficiency, productivity, errors, and satisfaction. Based on this definition, usability can be seen as a multi-component evaluation criterion for ensuring that software systems meet a quality standard. Meeting this standard requires understanding what low usability means in a project [14]; it can be indicated by little or no user interaction with the system. In addition, usability in non-commercial software systems should receive more recognition [14]. Therefore, OSS projects need to be evaluated by applying different techniques, notably cognitive walkthrough, formal usability testing, and thinking aloud. Integrating usability evaluation techniques into OSS projects is a complicated process that has yet to be investigated in depth [15,16,17,18,19]. Existing studies suggest that the techniques should be reconceptualized; however, they do not detail how to adjust the methods. To the best of our knowledge, only the studies by Nichols and Twidale [20] and Ternauciuc and Vasiu [21] propose some general ideas for improving the usability of OSS projects. Although there are studies on usability in OSS, there is no standardized procedure for incorporating it into OSS development. Only the study by Castro [12] analyzes the usability problems and techniques used in OSS projects in an integrated way, proposing a general framework for integrating these techniques that considers the characteristics and philosophy of the OSS community. In the literature, some works have reported the use of cognitive walkthrough [22,23,24,25,26], formal usability testing [27,28,29], and thinking aloud [2,16,23,30,31,32]. However, these works only report the results of applying usability evaluation techniques and do not specify the adaptations used in OSS projects.

The current literature highlights critical challenges in usability evaluation in OSS projects, including the lack of systematic comparative studies and specific adaptations of techniques such as cognitive walkthrough, formal usability testing, and thinking aloud for these environments. Our research addresses these gaps by providing a detailed and practical framework that integrates these techniques, adapted to the unique characteristics of OSS projects, such as their distributed development and lack of usability experts. In addition, we evaluate usability from the perspective of end users and developers, providing a complete view. Finally, we use qualitative and quantitative methods for a more detailed evaluation.

Cognitive walkthrough (CW) [33] is used to identify usability problems in interactive systems. It aims to determine how easily new users can perform tasks with the software system. This method is one of the most valued because it produces results quickly and at low cost. Formal usability testing (FUT) [34] is the most common type of test in the HCI field. In these tests, users are asked to perform a series of tasks with the system prototype so that evaluators can observe what problems they experience, what mistakes they make, and how they recover from them. Thinking aloud (TA) is very effective for uncovering flaws during software development [16,30]. In this technique, the evaluator must carefully observe what users say and do and how they behave when using a software system. By attending to their emotional reactions, evaluators can find usability improvements that traditional methods (e.g., surveys) would not reveal. In addition, TA collects data from users as they perform a set of activities on a system while verbalizing their thoughts, which allows an observer to determine what users are doing with the interface and why they are doing it [16]. CW is fast and inexpensive for identifying usability problems; FUT evaluates effectiveness, efficiency, and user satisfaction; and TA provides a deep understanding of user interactions and thoughts while using the system.

To choose the projects for this research, we used SourceForge, one of the most popular OSS repositories [35]. For the selection of OSS projects, we considered the following criteria: (i) availability for the Windows operating system; (ii) tool related to education and engineering; (iii) in a beta or stable state; (iv) constant updates; (v) active user community; (vi) developed in a programming language known to the researchers; (vii) projects in early stages of development, where essential functionalities are still being added; (viii) presence of a critical mass of users to determine the actual user segments of an application; and (ix) frequency of activity and popularity score of the application as rated by its users. Based on these criteria, we selected the Code::Blocks, Freeplane, and Scribus projects. These projects constitute a suitable setting for our study because several authors claim that OSS communities generally do not know about usability techniques [21,36,37], do not have resources for usability testing, and do not involve usability experts [20,21,36,38,39,40]. Some OSS projects lack knowledge of the techniques available to improve usability [15,40,41,42,43].

Code::Blocks is a cross-platform IDE for programming in the C++ and C languages. It is designed to be extensible and fully configurable. In the Code::Blocks forum, users report difficulties and criticize the user interface (e.g., the lack of a default path for saving a project). Freeplane allows users to create mind maps. In the Freeplane forum, users also report difficulties and criticize the user interface (e.g., the location of some menu options could be more evident). Scribus supports page layout, typesetting, and the preparation of professional-quality image files, and it can be adapted to different languages and users. Scribus users have also reported difficulties with and criticisms of the user interface, such as (i) an unintuitive interface; (ii) the font size cannot be changed immediately; (iii) an image cannot be inserted directly from the web into the project; (iv) the opacity cannot be changed; (v) selecting the edit image option displays an error indicating that the function does not exist; and (vi) the icons in the properties and header window are not very intuitive.

To resolve the difficulties encountered by users, we propose adapting CW, FUT, and TA to the context of OSS projects. To adapt these techniques, we start from the integration framework proposed by Castro [12]. In addition, for the application of CW, FUT, and TA in OSS projects, we designed several artifacts: (i) a document describing the tasks to be performed by the users, (ii) a document in which the evaluator records user information, (iii) a document to record the time each user spends performing the tasks, (iv) a document in which users record their experience and observations, (v) a letter requesting authorization from the developer, (vi) a consent form for image capture and video recording, and (vii) a formal invitation to users, sent by e-mail, to participate in the usability evaluation. These artifacts serve as tools for applying CW, FUT, and TA.

As mentioned above, the three OSS projects were not designed with usability in mind; instead, they were built mainly around the functionalities proposed by their developers. Therefore, to adapt these three techniques, we consider the typical scenario of OSS projects, i.e., (i) volunteers work remotely, and (ii) projects usually do not have a usability expert to design the evaluation experience or perform the final analysis of the improvement proposals. Our proposal is therefore novel in that it presents several methods adapted to a complex application scenario, that of OSS communities. Furthermore, since HCI techniques generally lack formalized procedures for their application in OSS development, these practices need to be standardized in the OSS context to ensure usability improvements that are replicable and sustainable in the long term [12].

The present work also makes a significant contribution to the field of Software Engineering, particularly to OSS development, because, as mentioned above, to our knowledge, no works report how to incorporate CW, FUT, and TA into OSS projects, specifying the adaptations to be made and formalizing the application process of each of these techniques. In particular, our research makes the following contributions: (i) We identify the adverse conditions that hinder the application of CW, FUT, and TA in OSS development. (ii) We propose adaptations for each of the steps of CW, FUT, and TA, based on the identified adverse conditions, that allow their incorporation into the OSS development process. (iii) We validate the proposed adaptations by applying CW, FUT, and TA to real OSS projects. (iv) We suggest recommendations to improve the user interfaces of Code::Blocks, Freeplane, and Scribus based on the usability issues identified by applying CW, FUT, and TA. (v) Based on our experience participating as volunteers in different OSS projects, we propose a framework to integrate usability evaluation techniques into this type of OSS project.

This article is organized as follows. In

Section 2, we describe related work. In

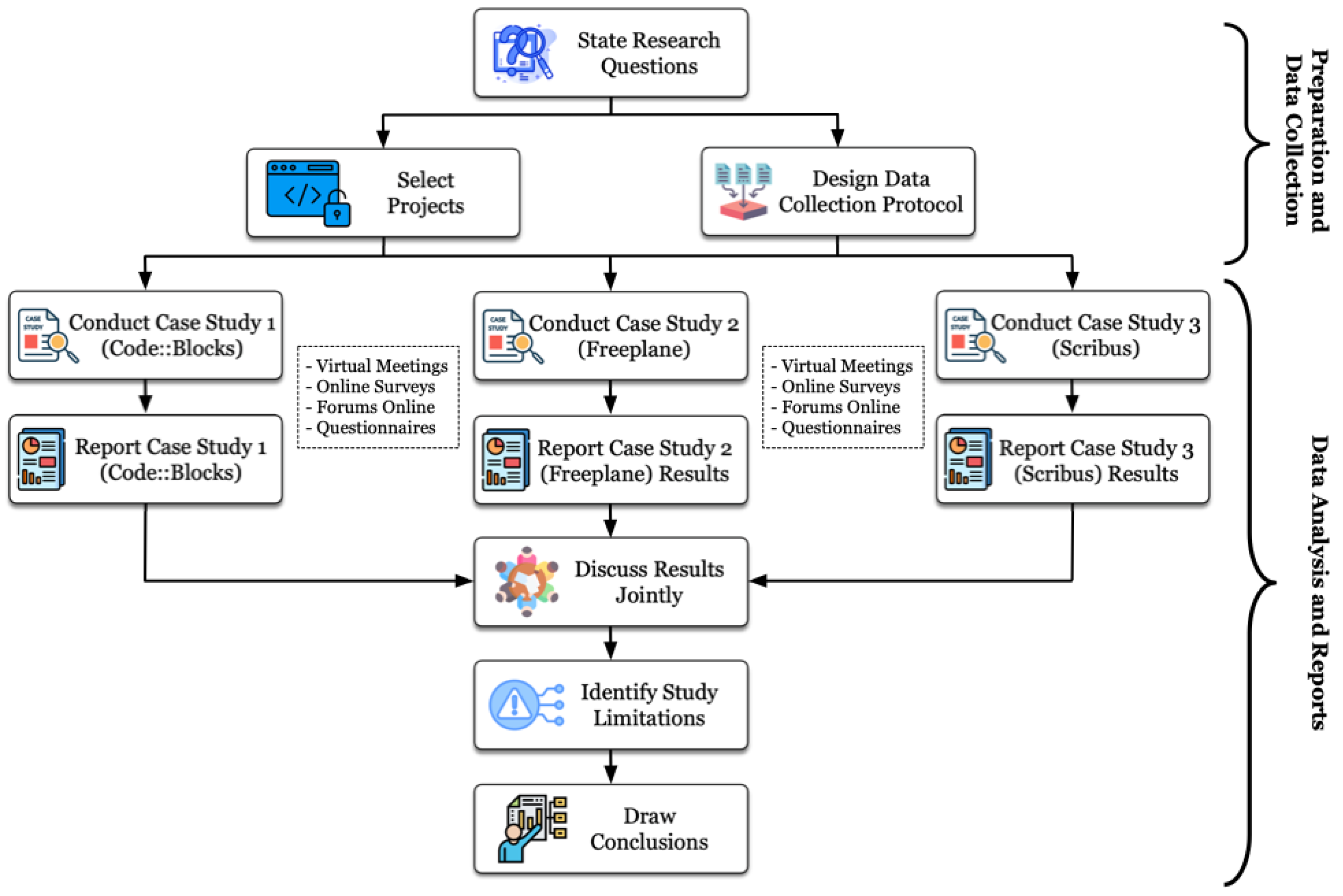

Section 3, we report the research method.

Section 4 describes the identified adverse conditions and usability technique adaptations. In

Section 5, we present the design of the multiple case study. In

Section 6, we report the results of the multiple case study.

Section 7 presents a framework for usability evaluation in the OSS projects. In

Section 8, we discuss the results. In

Section 9, we describe this study’s limitations. Finally, in

Section 10, we present conclusions and future research.

2. Related Work

This section reviews work on applying CW, FUT, and TA in OSS projects. These techniques are widely recognized in the HCI area for their ability to evaluate and improve the user experience of software systems. This review serves as the basis for the adaptations of the usability techniques to the context of OSS development proposed in later sections of this paper.

Few recent studies have reported using CW in OSS development [22,23,24,25,26]. Assal et al. [22] used CW to evaluate a system called Caesar, combining a cognitive dimensions framework with CW in the usability evaluation; the participants (developers) performed different previously defined tasks on the application interface. Ledesma et al. [23] report applying CW to evaluate the medical software library hFigures, with the participation of three experts. CW was performed with several end users to assess the usability of BiDaML [24]; these users highlighted that BiDaML was easy to understand and learn, and the authors obtained favorable results. Weninger et al. [25] conducted a study to evaluate the AntTracks Analyzer tool using CW, with the main objective of revealing and correcting possible usability flaws. Tegos et al. [26] evaluated the usability of the PeerTalk system with four MOOC instructors and two experts, using CW to assess the usability of the system functions as users performed the given tasks.

To the best of our knowledge, very few research papers report applying FUT in OSS projects [2,27,28,29]. Andreasen et al. [2] mention testing in the context of usability evaluation methods, highlighting that these methods are not widely used in OSS projects due to the lack of resources and of evaluation methods that fit the OSS paradigm. Cemellini et al. [27] report the implementation of a 3D cadastre prototype. The authors performed a test to obtain feedback from different groups of users; during the application of FUT, the test users were subdivided into groups according to their professional domains and expertise. The usability test results were crucial for pinpointing the limitations of this early prototype. Hall’s study [28] examines OSS usability, focusing on why this quality attribute is often overlooked, and applies FUT to the GNOME project, a popular OSS desktop environment. Applying this technique made it possible to collect data suggesting usability features or issues. Pietrobon et al. [29] describe the development of a web-based OSS application called Duke Surgery Research Central (DSRC) and used FUT to evaluate its usability. To apply this technique, the authors used an external evaluator and recruited ten users, who performed the tests proposed by this evaluator. The results of applying FUT were positive.

Some works in the literature report using TA in OSS projects [2,16,23,30,31,32]. The empirical study by Andreasen et al. [2] reports that 21% of the OSS developers surveyed had used TA. The authors mention that developers often participate in usability evaluations, which are not always performed by usability professionals and instead rely on the developers’ common sense [2]. The authors identified several problems related to usability evaluation in OSS communities, including the low priority given to systematically assessing this quality attribute and a limited understanding of usability among developers. In addition, evaluation is often performed remotely and with conventional techniques such as TA. However, this approach makes it difficult to accurately identify usability issues because facial expressions are not visible and because of the technical setup required to perform the evaluation remotely. TA has been adopted in the development of a web browser, a desktop utility, and an operating system [16], and in the TrueCrypt project [30]. The study by Terry et al. [16] highlights some usability problems in OSS, such as the difficulty of implementing a holistic design due to distributed and voluntary development, the scarcity of usability infrastructure, and a developer-centric culture that limits the integration of user experience practices. In the TrueCrypt project, users were asked to “think out loud” while performing specific tasks, especially to indicate when they started, finished, or became stuck [30]. In addition, TA has been used in the CARTON [31], hFigures [23], and ViSH Editor [32] projects. In the CARTON project [31], the authors conducted the evaluation with students, who were encouraged to provide feedback while building an augmented reality device by following the guides provided in the application. Ledesma et al. [23] report using TA as part of a test conducted in a usability lab, in which participants were asked to perform specific tasks while commenting on what they were doing and thinking. Finally, TA was applied to assess ViSH Editor [32]. In this case, the authors used the technique with the help of a session moderator, who gave the participants the tasks and asked them to think aloud while performing them.

Usability is a critical aspect of OSS development, as it directly influences the adoption and satisfaction of its users. Some studies have evaluated usability in various OSS projects [4,11], highlighting challenges and opportunities to improve user experience. However, despite these efforts, the current literature has several essential gaps. These include the lack of systematic comparative studies that analyze different usability evaluation techniques across multiple case studies. Although some studies evaluate the usability of individual OSS projects, they do not report the adaptations necessary to adequately apply CW, FUT, and TA in these developments, considering their characteristics. Moreover, these studies do not formalize or systematize the steps for applying the methods. Our study addresses all these gaps.

In summary, our review of the literature on CW, FUT, and TA reveals a lack of studies that specifically address the adaptations needed to apply these techniques in OSS projects, considering the characteristics of this type of development. For CW, we found no papers reporting its adaptation for OSS development, which highlights the need for more research in this area, such as the study presented here. Concerning FUT, although some studies report its application in OSS, the lack of detailed documentation on the steps and adaptations required points to the importance of continuing this line of research. Finally, although TA has been used in some OSS projects, the absence of studies describing its specific adaptations for this environment emphasizes the need for further research. In conclusion, our work seeks to fill these gaps by providing a detailed and practical framework for incorporating these three evaluation techniques into OSS projects, thus opening new opportunities for improving usability in this domain.

6. Results of the Multiple Case Study

This section presents the results of the case studies for the three OSS projects, showing the application of the adapted usability techniques. The implementation materials (forms, invitations, etc.) are available at 10.6084/m9.figshare.28020965.

6.1. Results of the Code::Blocks Case by Applying CW

Regarding the Code::Blocks case study, we first e-mailed the responsible developer a letter requesting authorization to participate in the OSS project and asking for a list of potential users who could participate in this study. Because the OSS community did not collaborate in applying CW, in either the pilot or the final test, we conducted these tests with students from the Universidad Técnica Estatal de Quevedo (UTEQ). To start the pilot test, we invited two users by e-mail, attaching a link to the “User’s Sociodemographic Profile” questionnaire, in which users had to register their data and answer questions about the Code::Blocks project.

Once the users were identified, we formally invited each of them by e-mail to a virtual meeting to apply the CW technique. From the results of the pilot test, we identified several difficulties, such as (i) users’ lack of knowledge of the software and (ii) insufficient test time to perform the tasks. In the following, we describe the results of the pilot test. Two users participated in the pilot test and completed the “User’s Sociodemographic Profile” questionnaire. The sociodemographic profile is crucial in usability evaluations to contextualize the results and understand how different characteristics, such as age or technological experience, affect interaction with the software. It also helps ensure that the sample of users is representative of the target population, which is vital for generalizing the results. The first user had problems creating a project in the C++ programming language because the application did not request a destination path where the project would be saved. The second user, by contrast, had experience with tools similar to Code::Blocks, such as Visual Studio and NetBeans.

As a final result of the pilot test, we recorded the users’ problems during the virtual meeting in the corresponding document. This document has the following information: the name of the expert, the date of the evaluation, the name of the user, the type of user, problems in the OSS, and possible solutions to the identified issues of the OSS project. It is essential to mention that only two problems were identified because the pilot test was conducted with two participants, which limited the number of possible observations during the process.
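The problem-recording document described above has a simple, fixed structure. As a minimal sketch, assuming the evaluators wanted to keep it in machine-readable form, each entry could be modeled as a Python dataclass. The class, field, and function names (`ProblemRecord`, `count_problems`) are hypothetical; the fields mirror the ones listed in the text, and the names and date in the example are placeholders.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ProblemRecord:
    """One entry of the problem-recording document (hypothetical field names)."""
    expert: str                 # name of the expert conducting the evaluation
    evaluation_date: date       # date of the evaluation
    user: str                   # name of the user
    user_type: str              # type of user, e.g. "pilot" (assumed label)
    problems: list[str] = field(default_factory=list)
    possible_solutions: list[str] = field(default_factory=list)


def count_problems(records: list[ProblemRecord]) -> int:
    """Total number of problems recorded across all users."""
    return sum(len(r.problems) for r in records)


# Illustrative entry: placeholder names and date; the problem is the one the
# first pilot user reported in the text.
pilot_records = [
    ProblemRecord(
        expert="Evaluator A",
        evaluation_date=date(2024, 1, 1),
        user="User 1",
        user_type="pilot",
        problems=["No destination path is requested when creating a C++ project"],
    ),
]
print(count_problems(pilot_records))  # prints 1
```

Keeping one entry per user makes it straightforward to aggregate problems across the pilot and final tests, and across the three projects, without retyping the paper forms.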

In the final test, the step of identifying user profiles was omitted because we already had enough information about each participant, such as their experience (sixth-semester Software Engineering students). Seven users participated in this final test. We sent the invitation by e-mail, indicating the objective of the users’ participation and the tasks they had to perform within a maximum time of 20 min. In the same e-mail, we attached (i) the consent forms for image capture and video recording and (ii) the document with the tasks to be performed by the users during the test. We began the usability evaluation with a general training session, in which the expert briefly explained the cognitive walkthrough. In this session, we welcomed the participants, thanked them for accepting the invitation to the virtual meeting, presented an introductory summary of the CW technique, and indicated what each participant had to do in Code::Blocks. Because of the participants’ limited time, we selected two tasks for the final evaluation: (i) create a project in the C++ language and (ii) execute the script created in the project.

According to Wharton et al. [33], CW is carried out with expert users or users with a medium level of experience, and the final test users indeed met this profile. During the virtual meeting, we found that users interacted smoothly with Code::Blocks and that the time to perform the tasks ranged from 6 to 12 min. To conclude the usability evaluation, we asked the participants for their criticisms regarding the problems encountered and for possible improvement proposals for future updates of Code::Blocks.
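The time-recording document and the 20 min limit lend themselves to a simple check. The sketch below is a hypothetical helper, not part of the study’s materials: it assumes each user’s total task time is recorded in minutes and reports the shortest and longest times, the mean, and any user who exceeded the limit.

```python
from statistics import mean

TIME_LIMIT_MIN = 20  # maximum task time stated in the Code::Blocks invitation


def summarize_times(minutes_by_user: dict[str, float], limit: float = TIME_LIMIT_MIN):
    """Summarize per-user task times (in minutes) from the time-recording document.

    Returns (shortest, longest, mean, users_over_limit).
    """
    if not minutes_by_user:
        raise ValueError("no task times recorded")
    times = list(minutes_by_user.values())
    # Users whose recorded time exceeds the limit, sorted for stable reporting.
    over_limit = sorted(user for user, t in minutes_by_user.items() if t > limit)
    return min(times), max(times), mean(times), over_limit
```

Applied to the final Code::Blocks test, the shortest and longest values would correspond to the reported 6 to 12 min range, and the over-limit list would be empty.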

As a final evaluation result, we identified certain inconveniences that users expressed during the virtual meeting, which were recorded in the document “Format Instrument with tasks for users”, which contains the tasks to be performed. The following are some of the inconveniences encountered by the participants during the final usability test: (i) difficulty identifying the execution button because there are two very similar buttons; (ii) problems creating a new project caused by the lack of a default path where created projects are saved; (iii) too many options for doing the same thing or something straightforward, such as creating a project; and (iv) an interface that is not very intuitive or eye-catching, which may not adequately capture users’ attention when they download the OSS project.

The following are some improvements proposed by users that the Code::Blocks project developer could consider:

Update the icons to improve their visual appeal, since Code::Blocks uses very old icons;

Reduce the number of options when creating a project, as creating a project in tools of this category usually involves only a few steps;

The console should run at the bottom of the project window; currently, the console screen takes up a lot of space and makes the user’s work more difficult;

Reduce the number of icons in the project interface, leaving only the icons of the most used functionalities, such as commenting and uncommenting code;

Consider a digital repository to store all the projects created to avoid the problems of assigning default paths.

Finally, we completed the document recording the problems identified during the virtual meeting with the users. In this document, we recorded the name of the expert, date of evaluation, names of the users, type of user, problems in the OSS project, and possible improvements for the OSS project.

Applying the CW technique in the Code::Blocks project revealed several significant usability issues that negatively affect the user experience. Among the main findings, we identified the absence of a default path when creating a project, which generates confusion and slows down the initial configuration process. In addition, the user interface is only available in English, which limits its accessibility for non-English-speaking users. Another critical problem detected is the inability to run a specific project when multiple projects are open simultaneously, forcing the user to close all other projects before continuing. These findings highlight the need for software interface and configuration improvements to facilitate a more intuitive and efficient user experience.

6.2. Results of the Freeplane Case by Applying FUT

We sent a formal e-mail request to one of the Freeplane developers to perform the tests. After 22 days, we had not received a response. We then posted this request on the official forum of the OSS project but received no response. Two members of the OSS community expressed interest in our research but did not offer to participate in applying FUT. Initially, we tried to perform the usability test with real users (i.e., users registered in the Freeplane forum); for this purpose, we published an announcement in the Freeplane project forum inviting users to be part of this study. After 16 days, we had not received any response from users in the project forum. Given the lack of collaboration from actual users of the Freeplane OSS community, we recruited users by other means: six students from the Software Engineering program at UTEQ. These users were considered suitable because they have experience using OSS projects and believe that Freeplane could support them in carrying out tasks and preparing presentations in their daily work.

To execute FUT, we e-mailed the formal invitations, the pre-test and post-test questionnaires, and the consent form for image capture, and we set a date and time for the test. First, we conducted the pilot test with two student users from UTEQ, who had to make a mind map covering all the aspects that should be considered to organize an ideal trip (i.e., a journey in which nothing fails). In this pilot test, we detected problems in the task formulation and in the focus of several questions in the pre-test and post-test forms; these problems were corrected before the final usability evaluation of Freeplane. The pilot test also confirmed a serious problem anticipated by the research team: the technique requires constant feedback between the user and the expert. In a joint session, several participants may talk at once and interrupt the interaction between one of them and the evaluator. Therefore, as a countermeasure, we decided that only one user would participate in each testing session.

Before the evaluation, users completed a pre-test survey to identify their profiles and learn about their perception of the OSS project. The main results of the pre-test are summarized below:

The age range of the users was between 22 and 30 years old;

All participants worked in the engineering field;

In total, 66.7% of the participants had a medium level of computer knowledge, and 33.3% had a high level. Thus, they were experienced in handling software in general;

When asked whether they had made a mind map in the last month, 83.3% had needed to do so, and only 16.7% had not;

Half of the users had never used mind-mapping software;

When asked how often they use OSS projects, 66.7% of the users reported using them frequently, 16.7% very frequently, and another 16.7% hardly at all.
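With six participants, every pre-test answer share is a multiple of 1/6 (about 16.7 percentage points), so the reported percentages can be derived from raw counts. The sketch below assumes that all six recruited students answered every question and infers the counts from the reported shares; the counts, the question labels, and the one-decimal rounding are assumptions, not data taken from the study materials.

```python
N_PARTICIPANTS = 6  # assumed: all six recruited students answered each question

# Counts inferred from the reported shares (each share is a multiple of 1/6).
PRETEST_COUNTS = {
    "computer knowledge": {"medium": 4, "high": 2},
    "made a mind map in the last month": {"yes": 5, "no": 1},
    "used mind-mapping software before": {"yes": 3, "no": 3},
    "OSS use": {"frequently": 4, "very frequently": 1, "hardly at all": 1},
}


def to_percentages(counts: dict[str, int], n: int = N_PARTICIPANTS,
                   digits: int = 1) -> dict[str, float]:
    """Convert answer counts into percentages of n, rounded to `digits` decimals."""
    if sum(counts.values()) != n:
        raise ValueError("answer counts must add up to the number of participants")
    return {answer: round(100 * c / n, digits) for answer, c in counts.items()}


for question, counts in PRETEST_COUNTS.items():
    print(question, to_percentages(counts))
```

Because each share is rounded independently, a three-way split such as 4/1/1 adds up to 100.1% rather than 100%; reporting the raw counts alongside the percentages avoids this ambiguity.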

During the final evaluation, participants sometimes forgot to share their opinions about the tasks they were performing while taking the formal usability test; in these cases, the expert intervened by asking for their views on the activities performed. In addition, when users did not know how to perform an activity, the expert provided clues. At the end of the formal usability test, we asked the participants about their experience taking the test and for recommendations to solve the problems encountered. Below, we report some errors found by the participants during the formal usability test: (i) the icons do not relate to the functions they perform; (ii) the circle next to the node moves arbitrarily from one side to the other; and (iii) when the connect-to-node button is selected, no dialog box for selecting the target node is displayed.

The FUT technique was crucial in identifying usability issues in the selected OSS project. This technique allowed us to directly observe users as they interacted with the software, revealing specific issues such as navigation difficulties, frequent errors, and confusing aspects of the interface. The application of FUT facilitated the collection of detailed user experience data, highlighting critical areas that required improvement. In addition, it provided valuable information on how real users perceive and use the software, which is essential for making accurate and effective adjustments to the interface and functionality of the OSS project.

6.3. Results of the Scribus Case by Applying TA

In the case of the Scribus project, we first sent the developers a formal request (via email) for authorization to perform usability tests. Because the OSS community did not collaborate in applying the TA, it was impossible to recruit real Scribus users for the pilot and final tests. For this reason, we conducted the tests with external users: third-year Software Engineering students at UTEQ. This choice does not detract from the objectivity of the usability tests, nor does it make the tests ambiguous or confusing, because the researchers did not influence the users while they performed the proposed tasks.

Before the final test, we conducted a pilot test with three users who had been briefed on the activity, in order to identify possible problems that might arise in the final test. For each user, we recorded the time taken to complete the test and noted their comments on the activity. All users agreed on the same points and stalled at the same parts of the activities. One of the main reasons the pilot users were slow to finish the test was the excessive number of interfaces that must be navigated in the OSS project to access an option. Interface design and icon implementation were the main points raised in this test.

For the final test, in which we applied the adapted TA technique to the Scribus project, we invited eight users via email and WhatsApp. These users were Software Engineering students with experience in web design. In addition, we sent them instructions for preparing for the virtual meeting (e.g., installing Scribus) and explained the document describing the tasks to be performed. Of the eight invited users, seven confirmed their attendance. During the virtual meeting, we explained the purpose of the usability evaluation through TA to the participants. We also explained in detail the documents sent to the participants’ email, emphasizing that these documents served as a guide for performing the tasks. Each user performed three tasks: (i) inserting a header in a document, (ii) inserting a table, and (iii) inserting an image. The execution of each task was recorded and timed with a stopwatch. Users took approximately 3 to 4 min to complete these tasks, which are representative of Scribus’ functionality. Users reported the following difficulties or problems they encountered with Scribus while performing these tasks:

The interface is not intuitive enough for performing the tasks specified in the document sent to the users;

The font size cannot be changed directly; users must navigate through several options;

In the properties window, scrolling with the mouse wheel may unintentionally change some values because of a “phantom focus”: even when the cursor is not over an active field, the system keeps the focus on a previously selected field;

Inserting an image directly from the web into the project is impossible;

Changing an image’s opacity has no effect on the first attempt; the process must be repeated before the change can be observed;

The option for applying opacity to a table is unclear, because its description does not state precisely what opacity will be applied and because the same properties window contains two similar options;

Selecting the Edit Image option displays an error indicating that the function does not exist;

The icons in the properties window and the top toolbar are not intuitive;

It is not clear how to fit an image completely within its frame so that it fills all available space without leaving empty margins;

When creating a table, users can enter a maximum of 9 columns or rows.

In this test, we observed that users took longer than necessary to perform the tasks because the interface was not intuitive and they had to go through many shortcuts to perform the activities. In addition, we identified problems with the interface properties (e.g., changing the color, font, and document formatting). Overall, the participants commented that the Scribus interface is not intuitive and that they felt uncomfortable because they lost time performing the tasks. Finally, the participants proposed several improvements to overcome the drawbacks of Scribus, such as the following: (i) Use buttons familiar to the user, similar to those in Microsoft Word’s graphical interface. (ii) Allow changing the text size in real time. (iii) Correct the errors that occur when changing the opacity of an image and inserting tables. (iv) Remove functionalities with errors, such as the Edit Image option. (v) Use icons that reflect the purpose of each action. (vi) Include the option to add tables through the right-click menu. (vii) Hide, rather than disable, properties that do not apply to a frame, to avoid wasting space.

The primary outcomes of applying the TA technique in the Scribus case study included the identification of non-intuitive interfaces that caused user confusion and delays in task completion, usability issues that required additional steps or caused errors during task execution, and user frustrations and suggestions. The frustrations were related to complex navigation and unclear instructions, whereas the suggestions included more familiar buttons and improved real-time feedback mechanisms. These results highlight the effectiveness of the TA technique in uncovering usability issues and gathering valuable feedback directly from users’ experience.

The adaptations we made to the CW, FUT, and TA techniques had a significant impact on the results obtained. They allowed these techniques to be integrated more effectively into the specific contexts of the selected OSS projects (i.e., Code::Blocks, Freeplane, and Scribus). The CW adaptation, which included remote user participation and the replacement of usability experts by students supervised by mentors, facilitated the identification of usability problems and the proposal of viable solutions. In FUT, the modifications allowed a more realistic and accessible evaluation by using online meeting tools and adapting the procedures for geographically distributed users. Finally, the TA adaptations, such as conducting online sessions and involving students as experts under the supervision of mentors, allowed detailed real-time data to be collected on users’ interactions and thoughts, providing deeper insight into usability issues. These adaptations improved the efficiency and effectiveness of the usability evaluations and demonstrated the feasibility of applying these techniques in OSS projects, overcoming typical barriers such as geographical dispersion and the lack of specialized usability resources.

Finally, our multiple case study identified several usability problems common to the Code::Blocks, Freeplane, and Scribus projects. These include non-intuitive interfaces, icons and buttons that do not reflect their functions, and a confusing menu structure. In addition, users had difficulty performing basic tasks because of the lack of clear directions and the absence of default paths for saving projects. Specific problems were also reported, such as the lack of dialog boxes when connecting nodes and the need for multiple steps to complete simple tasks. In general, the low usability of these projects can be attributed to overloaded interfaces and insufficient consideration of user experience in the design of their functionalities.

8. Discussion of Results

In the HCI area, usability techniques have been successfully applied to create usable software. However, they are used within the framework of user-centered design, which does not consider the characteristics and development philosophy of OSS communities. It is therefore necessary to adapt the techniques to allow their application in this type of development. These adaptations are based on the adverse conditions that each usability technique presents when applied in OSS projects.

We identified two adverse conditions shared by the CW, FUT, and TA. First, they require an expert for their application. Second, they require the physical participation of the users. The CW has a third adverse condition: it requires prior preparation (e.g., designing the tasks that the users will perform during the assessment), whereas work in the OSS community is entirely voluntary and carried out in its members’ free time. We overcame some of these adverse conditions by using web artifacts familiar to the OSS community (e.g., an online survey, a forum, and a blog).

Concerning the CW technique, we propose three adaptations. First, the usability expert is replaced by an HCI student under the supervision of a mentor. This proved a viable alternative to the presence of an expert, allowing the CW to be applied in practice. Second, user participation is conducted remotely through online platforms, which broadened the scope of this study and facilitated collaboration by overcoming the geographic and logistical limitations of physical presence. Third, we leverage the tasks that users typically perform with the OSS project rather than pre-defining such tasks. While applying the CW, we faced a significant challenge in communicating with the Code::Blocks community: the lead developer’s lack of response over an extended period (16 days) evidenced the barriers to obtaining the necessary authorization to apply the CW. However, the positive response from users recruited by email demonstrated significant interest in the CW and gave us a representative sample for evaluation. Once the CW was applied, we identified several problems related to the interface and user experience in the Code::Blocks project. The main issues included (i) difficulties in identifying key interface elements, such as the execute button; (ii) the lack of a default path for saving created projects; and (iii) an overloaded interface that made it difficult to perform basic tasks. These findings underscore the importance of addressing the usability aspects of the Code::Blocks project.

Regarding the FUT technique, we identified two adaptations necessary for its adoption in OSS projects: (i) replacing the usability expert with an HCI student supervised by a mentor, and (ii) conducting user participation remotely through, for example, virtual meetings. When applying the FUT, we encountered two drawbacks. First, when we contacted the OSS project managers, we did not obtain a response to our request for authorization to perform the tests in the selected OSS projects. Second, Freeplane community users (i.e., users registered in the forums) did not collaborate by participating in the evaluation. Despite these problems, the adapted FUT allowed us to incorporate the technique into the Freeplane project, since it requires only a small number of representative participants (a minimum of three users) to obtain a reliable result [34]. In our case, six UTEQ students participated. While applying the FUT, we collected several significant user comments that provided detailed insight into the challenges encountered in the interface and the overall user experience. These comments covered aspects ranging from navigation to understanding the functions and visual appearance of the interface. User feedback highlighted three areas for improvement in the Freeplane project. First, users had difficulties navigating and understanding the functions; for example, they had problems moving the root node or coloring nodes. Second, users reported problems visualizing colored nodes, understanding the function of specific icons, and understanding the logic behind some actions, such as cloning nodes or connecting elements. Third, users expressed the need for a clear and accessible properties section and the ability to drag and drop elements from a toolbar.

Regarding the TA technique, as with the FUT, we proposed two adaptations to allow its incorporation in OSS developments: first, replacing the expert with an HCI student supervised by a mentor, and second, conducting user participation remotely through, for example, virtual meetings. While applying the TA, we identified two main challenges. The first concerned user recruitment: the lack of response from the lead developer of the Scribus project made it difficult to recruit users from the OSS community. In addition, we observed a lack of interest or availability on the part of the community to participate in this type of study, possibly due to time constraints or lack of motivation. Second, many potential users were not comfortable activating their cameras during the virtual sessions, which further limited the participation and availability of the user sample. Once the TA technique was applied, we identified several usability problems, such as (i) some icons do not represent the function they perform, (ii) some options are not located where users would intuitively look for them, and (iii) the interface looks unpleasant, among others.

The findings of our study provide a broader understanding of usability in OSS projects by identifying and addressing the specific adverse conditions that hinder the implementation of usability techniques in these environments. By adapting CW, FUT, and TA techniques for application in OSS projects, we have demonstrated that it is possible to overcome the inherent limitations of OSS communities, such as geographically dispersed membership and lack of dedicated usability resources. These adaptations not only facilitate usability evaluation in OSS but also highlight the importance of considering the unique characteristics of these projects when designing and implementing usability evaluations. Ultimately, our proposals contribute to better integration of usability into OSS development, promoting more intuitive and efficient interfaces that benefit a wide variety of users.

Our study demonstrates the feasibility of adapting usability evaluation techniques to OSS projects to improve the end-user experience. These adaptations allow OSS communities, which are often limited in resources and expertise, to implement usability practices without needing dedicated usability experts. Because the adaptations foster a user-centered design culture and provide valuable feedback, developers can improve the software’s functionality and usability, increasing user adoption and satisfaction. In addition, documenting and standardizing these processes provides a replicable framework for future evaluations, facilitating the integration of new contributions and continuous improvements to the software.

To address the challenges OSS projects face in integrating usability practices, we propose several adaptations: (i) We facilitate remote user participation through virtual meetings and online surveys to accommodate the geographic dispersion of collaborators. (ii) We compensate for the lack of usability experts by involving advanced HCI students supervised by mentors, which also gives the students hands-on experience. (iii) We use the regular tasks that users perform in the OSS project, minimizing additional burden and obtaining more relevant results. (iv) We formalize each step of the adapted evaluation process and develop detailed documentation to guide its implementation in future OSS projects. These adaptations improve usability and foster greater awareness and appreciation of usability within OSS communities.

During our research, we encountered several specific limitations: (i) Difficulty in recruiting active participants from OSS communities, which was mitigated by involving UTEQ students. (ii) Lack of resources and usability experts in these communities, which was addressed by replacing experts with HCI students under mentoring supervision. (iii) Geographic dispersion of members, which was solved by using virtual tools such as Google Meet. (iv) Challenges in implementing traditional usability techniques, which were overcome by adapting CW, FUT, and TA techniques to the specific characteristics of OSS projects.

One of the main challenges in obtaining collaboration from the OSS community was the lack of response from the core developers and community users. During the evaluation of the Code::Blocks, Freeplane, and Scribus projects, we encountered a lack of interest or willingness to participate in usability testing. To overcome these challenges, we implemented several alternative methods for data collection. We used online surveys, forums, blogs, and virtual meetings to facilitate remote user participation, thus overcoming geographic and logistical constraints. In addition, we recruited external users, such as university students, who could participate under a mentor’s supervision. These strategies not only broadened the scope of the study but also improved the validity and reliability of our results by including a more diverse and representative sample of users. The lack of collaboration in usability evaluation highlights the importance of implementing mechanisms that actively motivate members of OSS communities to participate in this type of activity. Strategies such as (i) gamification, (ii) offering recognition, (iii) providing users with tools that reduce the effort required to report suggestions and opinions on usability issues, (iv) offering financial incentives to users who provide feedback or participate in usability testing, and (v) incorporating usability practices into development processes could be effective in overcoming this barrier. In addition, formalizing usability procedures tailored to the characteristics of OSS projects could facilitate their acceptance and application by volunteer developers. Future research should consider these strategies to improve participation and, thus, the technical robustness of usability studies in the OSS context.

While our study applied different techniques to the three selected projects, we recognize that applying the same technique to all three projects could provide a more unified perspective on the applicability of the proposed framework. However, our decision to apply a different technique to each OSS project was due to the heterogeneous characteristics of the selected OSS projects, which led us to prioritize the exploration of the adaptability of the framework to different contexts and needs.

10. Conclusions

The key takeaways of our study for improving usability in OSS projects are the following: Adapting usability techniques such as CW, FUT, and TA requires specific adjustments to be effective in OSS contexts, including remote user participation and replacing usability experts with supervised students. An active community and constant updates are essential for successfully implementing usability techniques, while developer education and training, along with comprehensive documentation and support resources, facilitate the adoption of these techniques. These measures ensure that usability improvements are effective and sustainable over the long term.

The objective of our research has been to evaluate the feasibility of applying CW, FUT, and TA in the OSS projects Code::Blocks, Freeplane, and Scribus. Getting users to participate voluntarily in the application of the techniques was difficult. As mentioned above, the users are geographically distributed worldwide and often lack the time or resources to participate; without some incentive, it is not easy to get them involved. We therefore relied on the participation of external users (friends and colleagues from UTEQ) because we did not obtain any response from the projects’ user communities or their developers. For this reason, we chose to recruit external users with intermediate to advanced knowledge, whom we reached by disseminating the data-collection material through email, WhatsApp, and forums.

To apply the three techniques, we created several templates that served as tools for collecting specific information from the OSS case study projects. The participants in the usability evaluations showed great interest and were very candid in their opinions and feedback. Our adaptations of the CW, FUT, and TA usability techniques proved effective in evaluating the Code::Blocks, Freeplane, and Scribus projects, respectively, despite the challenges we faced in communicating with users and securing their participation. These findings highlight the importance of adapting evaluation methodologies to specific contexts and the need for close collaboration between researchers and the software development community to continuously improve the quality and user experience of OSS projects.

The adverse conditions of the CW, FUT, and TA that we identified as hindering their application in OSS developments are twofold. First, a usability expert is needed to apply the technique. Second, users are not available to participate face to face. To solve these difficulties, we propose, on the one hand, that the expert be replaced, for example, by a student or group of HCI students under the supervision of a mentor, and, on the other hand, that OSS users participate virtually through remote meetings. Applying CW, FUT, and TA offers a significant advantage over other techniques (such as heuristic evaluation and automated usability testing) because they involve direct interaction with users as they face problems, whether in person or virtually.

For the CW, we suggest involving external users with an intermediate-to-advanced level of expertise and, preferably, more than five participants [33], since one or more users may not show up during the application of the technique, either for personal reasons or because of technical problems. For the FUT, we recommend involving at least five users to ensure a representative usability evaluation, ideally with varied profiles reflecting the diversity of end users. For the TA, it is advisable to hold detailed individual sessions to capture users’ intuitive reactions and thought processes during their interaction with the OSS project.

Finally, we consider that the three OSS projects, Code::Blocks, Freeplane, and Scribus, have a long way to go to improve their usability. The developers of the OSS projects considered in our study rely only on common sense to achieve usability in their software, which coincides with what was stated by Andreasen et al. [2]. This results in software developed with low quality criteria. As mentioned above, OSS projects need the support of a team that is experienced in usability and willing to help them improve the quality of their software products.

For OSS communities to implement usability techniques effectively, the following practical recommendations are suggested: (i) provide training in usability techniques through workshops and online courses, (ii) involve usability experts through collaborations and mentoring, (iii) provide accessible tools and resources for usability evaluation, (iv) integrate these techniques into the software development process, (v) maintain clear documentation on usability processes and results, and (vi) foster a culture that values usability, promoting its benefits and recognizing efforts in this area. These measures ensure that usability becomes an integral and valued part of OSS project development, thereby improving the user experience and the success of the software.

The future of usability practices in OSS development is promising and full of potential for significant advances. As OSS continues to grow and become more integral in various industries, the emphasis on usability is expected to increase. This will be driven by increased collaboration between developers and user experience experts, the adoption of standardized usability frameworks, the development of tools to support usability testing, and community-driven improvements. In addition, integrating artificial intelligence into usability testing may provide insights into and predictions of user behavior, while inclusive design can help ensure that OSS projects are accessible to a wide range of users. By embracing these trends and developments, the OSS community can significantly improve the usability of its projects, making them more user-friendly and accessible to a broader audience, which will contribute to the success and adoption of OSS in various sectors.

Based on the findings of this study, we identify four directions for future research on usability improvement in OSS projects. First, we recommend expanding the number of case studies to include a wider variety of OSS projects covering different domains and software types, which would allow better validation and generalization of the results obtained. Second, it is essential to investigate usability evaluation techniques beyond the three studied here (CW, FUT, and TA) to explore the effectiveness of other methods in the OSS context. This would broaden the spectrum of available tools and provide a more complete understanding of the user experience in these environments. Third, it is vital to study further the factors that affect the OSS community’s participation in usability studies; developing effective strategies to foster collaboration and user feedback will be fundamental to improving the quality of OSS projects and their adaptation to the needs of end users. Finally, another area of interest is the development of specific tools, or adaptations of existing tools, to facilitate usability evaluation in OSS environments (for example, a browser extension for collecting end-user feedback directly from the OSS project interface). These tools should be designed around the particularities and limitations of OSS communities and projects to optimize the evaluation process and improve the user experience.