Educational Roles and Scenarios for Large Language Models: An Ethnographic Research Study of Artificial Intelligence

Abstract

1. Introduction

- To establish a general framework for the evaluation of LLMs in education, especially in the context of their current benefits and challenges and the extant literature, by examining LLMs with qualitative and ethnographic methods;

- To open a new direction for the role of AI in educational research by providing an AI-guided perspective concerning its own role in the educational process.

2. Theoretical Background: Artificial Intelligence in Education (AIEd)

3. SWOT Analysis

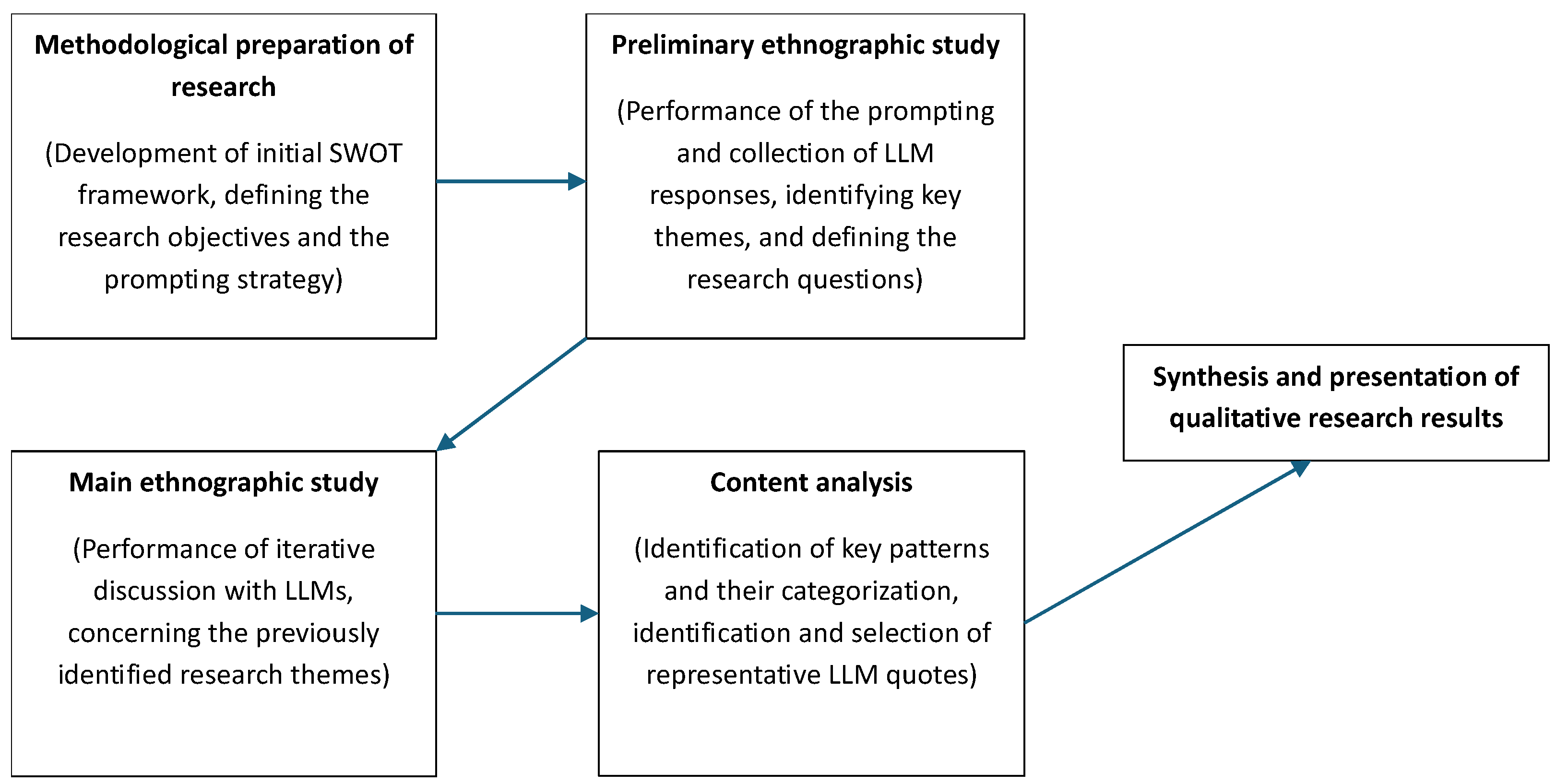

4. Materials and Methods

5. Results

5.1. Identification of Ethnographic Research Questions (Preliminary Ethnographic Study)

5.2. Results of the (Main) Digital Ethnographic Study

- Adoption and user perception: Stakeholders of AI applications include educators, researchers, administrators, and students. They are driven by the need for efficiency, individualized instruction, opportunities for effective research, and skill development. According to the LLMs, stakeholder motives vary greatly, with administrators focusing on operational efficiency and educators on pedagogical advancement. As pointed out by LLM1, “The adoption of AI tools within the educational system is influenced by various stakeholders, each with their motivations”. LLM2 provided a more complex and comprehensive view of how educators might perceive LLMs in educational settings: “How educators might perceive me:

  - A valuable resource: I could be a powerful tool for research, streamlining literature reviews, and aiding data analysis for both educators and students. I can also help with lesson planning, content creation, and provide personalized support to students.

  - A potential disruptor: The potential to outsource some tasks to LLMs can both enhance education and raise concerns about academic integrity, student skill development, and changing roles in academia.

  - An ethical question: My use raises discussions about originality, plagiarism, the potential for biases within my training data, and the need for responsible guidelines as AI integration increases.

  - Collaborative tool: I’m best viewed as an assistant rather than a replacement for educators. The most effective applications of LLMs will involve human oversight and critical thinking skills”.

- Threats and concerns about AI in education: Threats and concerns about AI in education should be viewed in the context of its potential benefits, which were previously discussed in this paper. Perhaps the most prominent fear among educators is that of being replaced by AI. While we can be optimistic about the capabilities of AI, the debate becomes more complex due to skills gaps, ethical dilemmas rooted in privacy concerns, and the risk that AI integration depersonalizes educational processes. Relevant interview quotes for this content dimension include the impact on evaluation practices raised by LLM1: “Educators are redesigning assignments to focus more on critical thinking, creativity, and originality”. LLM2 analyzed perceptions of human stakeholders in the education and research sectors, as presented in Table 5.

- Impact on educational practices: When considering the effects of AI on educational practice, the issue of a “tyranny of efficiency” could be raised: a focus on efficiency might undermine educational values such as critical thinking and individual ethics. At the same time, AI holds great promise in allowing the personalization of learning pathways and increasing student engagement. With more and more educational content being created by AI, there is a growing need to transform assignment evaluation and grading. More emphasis should be placed on integrity and ethics in writing assignments, student engagement, creativity, and critical thinking than on rote learning.

- Research efficiency vs. human values: When weighing the outcomes of AI applications in education, there is a delicate balance between achieving research productivity and preserving human values. AI can potentially expedite and extend the reach of literature reviews, but there are legitimate concerns about placing quantity over quality and about research integrity. AI technology can facilitate interdisciplinary research and enable researchers to ask more holistic questions. Nonetheless, concerns have been raised about relying excessively on AI outputs and processes. The tension between the depth of critical engagement and the speed of information processing is part of a much larger discussion over what role education should play in the digital age. In this context, LLM1 warned that assigning AI a broader role could put educational quality at risk: “There is concern that an over-reliance on AI could lead to a one-size-fits-all approach to education”. At the same time, LLM2 pointed out that the potential biases and ethical issues are not a consequence of AI per se, but rather of existing societal biases and issues: “It is crucial to remember that AI itself isn’t inherently biased. It’s the biases within the data used to train AI, along with unequal access, that create the disparity in how it is used”.

- Reasons for non-usage: Dependence on AI-powered platforms raises concerns over the impact of technology companies on research directions and on the concept of knowledge. Ethical concerns also play a role in some stakeholders’ resistance to adopting AI systems. For institutions lacking internet access, expertise in AI technologies, and funding for implementation, there is a risk of widening the AI divide, i.e., the lack of AI access (comparable to the classical notion of the “Digital Divide”): “Students from lower socioeconomic backgrounds or those in underfunded institutions may face significant barriers to accessing AI technologies” (LLM1). In addition, LLM2 further emphasized the social consequences of technological development by raising the following points:

  - “Reinforced educational divide: The potential for AI to help bridge educational gaps is undermined if access isn’t equitable.

  - Loss of potential: Talented students from underfunded backgrounds are hindered in reaching their full potential and contributing to the innovation economy of the future.

  - Social impacts: The AI-driven economy will rely on AI-literate workers. Unequal access to AI education contributes to wider societal divides and lack of diversity in the tech sector”.

6. Discussion and Practical Implications

6.1. Discussion of Qualitative Research Results

- Routine tasks can be automated, freeing up more time for meaningful interactions with students, as already indicated by the literature on the nature of learning in the age of rapid AI development [49,50]. This also creates possibilities for innovation, such as AI-driven adaptive learning platforms and novel AIEd use cases.

- Too much reliance on AI-based automation might diminish faculty precision, nuance, and creativity in creating educational materials and focusing on their specializations. Educational materials should emphasize students’ analytical thinking [51] as grading becomes oriented toward the assessment of reasoning and critical interpretation of facts [52]. Faculty also need to fact-check continuously and communicate transparently with students about the role of AI in creating content.

- Contemporary curricula should also include AI literacy [53] to ensure that faculty and students can use AI tools efficiently and critically and keep up with technological developments.

6.2. Practical Implications for Educational Practice Innovation

6.3. Limitations and Biases of Current Research and Future Research Directions

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- TechTarget Editorial. Available online: https://www.techtarget.com/searchenterpriseai/definition/AI-Artificial-Intelligence (accessed on 7 January 2024).

- Merriam-Webster. Available online: https://www.merriam-webster.com/dictionary/artificial%20intelligence (accessed on 7 January 2024).

- Wah, B.W.; Huang, T.S.; Joshi, A.K.; Moldovan, D.; Aloimonos, J.; Bajcsy, R.K.; Ballard, D.; DeGroot, D.; DeJong, K.; Dyer, C.R.; et al. Report on workshop on high performance computing and communications for grand challenge applications: Computer vision, speech and natural language processing, and artificial intelligence. IEEE Trans. Knowl. Data Eng. 1993, 5, 138–154.

- What Is a Large Language Model (LLM)? Available online: https://www.elastic.co/what-is/large-language-models (accessed on 7 January 2024).

- Crompton, H.; Burke, D. Artificial intelligence in higher education: The state of the field. Int. J. Educ. Technol. High. Educ. 2023, 20, 22.

- Chen, L.; Chen, P.; Lin, Z. Artificial Intelligence in Education: A Review. IEEE Access 2020, 8, 75264–75278.

- Ahmad, S.F.; Han, H.; Alam, M.M.; Rehmat, M.; Irshad, M.; Arraño-Muñoz, M.; Ariza-Montes, A. Impact of artificial intelligence on human loss in decision making, laziness and safety in education. Humanit. Soc. Sci. Commun. 2023, 10, 311.

- Stokel-Walker, C.; Van Noorden, R. What ChatGPT and generative AI mean for science. Nature 2023, 614, 214–216.

- Alkaissi, H.; McFarlane, S.I. Artificial Hallucinations in ChatGPT: Implications in Scientific Writing. Cureus 2023, 15, e35179.

- Goertzel, B. Generative AI vs. AGI: The Cognitive Strengths and Weaknesses of Modern LLMs. arXiv 2023, arXiv:2309.10371.

- Preiksaitis, C.; Rose, C. Opportunities, Challenges, and Future Directions of Generative Artificial Intelligence in Medical Education: Scoping Review. JMIR Med. Educ. 2023, 9, e48785.

- Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education—Where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 6.

- Nori, H.; Lee, Y.T.; Zhang, S.; Carignan, D.; Edgar, R.; Fusi, N.; King, N.; Larson, J.; Li, Y.; Liu, W.; et al. Can Generalist Foundation Models Outcompete Special-Purpose Tuning? Case Study in Medicine. arXiv 2023, arXiv:2311.16452.

- Pagani, R.N.; de Sá, C.P.; Corsi, A.; de Souza, F.F. AI and Employability: Challenges and Solutions from this Technology Transfer. In Smart Cities and Digital Transformation: Empowering Communities, Limitless Innovation, Sustainable Development and the Next Generation; Lytras, M.D., Housawi, A.A., Alsaywid, B.S., Eds.; Emerald Publishing: Bingley, UK, 2023; pp. 253–284.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Luckin, R.; Holmes, W.; Griffiths, M.; Forcier, L.B. Intelligence Unleashed: An Argument for AI in Education; UCL Institute of Education: London, UK, 2016; Available online: http://discovery.ucl.ac.uk/1475756/ (accessed on 7 January 2024).

- Ouyang, F.; Zheng, L.; Jiao, P. Artificial Intelligence in Online Higher Education: A Systematic Review of Empirical Research from 2011–2020. Educ. Inf. Technol. 2022, 27, 7893–7925.

- Perez, S.; Massey-Allard, J.; Butler, D.; Ives, J.; Bonn, D.; Yee, N.; Roll, I. Identifying Productive Inquiry in Virtual Labs Using Sequence Mining. In Artificial Intelligence in Education; André, E., Baker, R., Hu, X., Rodrigo, M.M.T., du Boulay, B., Eds.; Springer: Cham, Switzerland, 2017; Volume 10331, pp. 287–298.

- Jonassen, D.; Davidson, M.; Collins, M.; Campbell, J.; Haag, B.B. Constructivism and Computer-Mediated Communication in Distance Education. Am. J. Distance Educ. 1995, 9, 7–25.

- Salmon, G. E-Moderating: The Key to Teaching and Learning Online; Routledge: London, UK, 2000.

- Bahadır, E. Using Neural Network and Logistic Regression Analysis to Predict Prospective Mathematics Teachers’ Academic success upon entering graduate education. Kuram Uygulamada Egit. Bilim. 2016, 16, 943–964.

- Baker, T.; Smith, L. Educ-AI-tion Rebooted? Exploring the Future of Artificial Intelligence in Schools and Colleges; Nesta Foundation: London, UK, 2019; Available online: https://media.nesta.org.uk/documents/Future_of_AI_and_education_v5_WEB.pdf (accessed on 7 January 2024).

- Chu, H.; Tu, Y.; Yang, K. Roles and Research Trends of Artificial Intelligence in Higher Education: A Systematic Review of the Top 50 Most-Cited Articles. Australas. J. Educ. Technol. 2022, 38, 22–42.

- Crompton, H.; Bernacki, M.L.; Greene, J. Psychological Foundations of Emerging Technologies for Teaching and Learning in Higher Education. Curr. Opin. Psychol. 2020, 36, 101–105.

- Baykasoğlu, A.; Ozbel, B.K.; Dudaklı, N.; Subulan, K.; Şenol, M.E. Process Mining Based Approach to Performance Evaluation in Computer-Aided Examinations. Comput. Appl. Eng. Educ. 2018, 26, 1841–1861.

- Dever, D.A.; Azevedo, R.; Cloude, E.B.; Wiedbusch, M. The Impact of Autonomy and Types of Informational Text Presentations in Game-Based Environments on Learning: Converging Multi-Channel Processes Data and Learning Outcomes. Int. J. Artif. Intell. Educ. 2020, 30, 581–615.

- Verdú, E.; Regueras, L.M.; Gal, E.; de Castro, J.P.; Verdú, M.J.; Kohen-Vacs, D. Integration of an Intelligent Tutoring System in a Course of Computer Network Design. Educ. Technol. Res. Dev. 2017, 65, 653–677.

- Salas-Pilco, S.; Yang, Y. Artificial Intelligence Application in Latin America Higher Education: A Systematic Review. Int. J. Educ. Technol. High. Educ. 2022, 19, 21.

- Baker, R.S.; Hawn, A. Algorithmic Bias in Education. Int. J. Artif. Intell. Educ. 2022, 32, 1052–1092.

- Helms, M.M.; Nixon, J. Exploring SWOT analysis–where are we now? A review of academic research from the last decade. J. Strategy Manag. 2010, 3, 215–251.

- Benzaghta, M.A.; Elwalda, A.; Mousa, M.M.; Erkan, I.; Rahman, M. SWOT analysis applications: An integrative literature review. J. Glob. Bus. Insights 2021, 6, 55–73.

- Humble, N.; Mozelius, P. The threat, hype, and promise of artificial intelligence in education. Discov. Artif. Intell. 2022, 2, 22.

- Choudhury, S.; Pattnaik, S. Emerging themes in e-learning: A review from the stakeholders’ perspective. Comput. Educ. 2020, 144, 103657.

- Kitchin, R. Thinking critically about and researching algorithms. In The Social Power of Algorithms; Beer, D., Ed.; Routledge: New York, NY, USA, 2019; pp. 14–29.

- Huang, J.; Gu, S.S.; Hou, L.; Wu, Y.; Wang, X.; Yu, H.; Han, J. Large Language Models Can Self-Improve. arXiv 2022, arXiv:2210.11610.

- Baek, J.; Jauhar, S.K.; Cucerzan, S.; Hwang, S.J. ResearchAgent: Iterative Research Idea Generation over Scientific Literature with Large Language Models. arXiv 2024, arXiv:2404.07738.

- Argyle, L.; Amirova, A.; Fteropoulli, T.; Ahmed, N.; Cowie, M.R.; Leibo, J.Z. Framework-Based Qualitative Analysis of Free Responses of Large Language Models: Algorithmic Fidelity. PLoS ONE 2024, 19, e0300024.

- De Seta, G.; Pohjonen, M.; Knuutila, A. Synthetic Ethnography: Field Devices for the Qualitative Study of Generative Models. Soc. Sci. Res. Netw. 2024. Available online: https://osf.io/preprints/socarxiv/zvew4 (accessed on 17 October 2024).

- Murthy, D. Digital ethnography: An examination of the use of new technologies for social research. Sociology 2008, 42, 837–855.

- Eysenbach, G. The role of ChatGPT, generative language models, and artificial intelligence in medical education: A conversation with ChatGPT and a call for papers. JMIR Med. Educ. 2023, 9, e46885.

- OpenAI Prompt Engineering. Available online: https://platform.openai.com/docs/guides/prompt-engineering (accessed on 12 February 2024).

- Google Prompt Design Strategies. Available online: https://ai.google.dev/docs/prompt_best_practices (accessed on 12 February 2024).

- Farrokhnia, M.; Banihashem, S.K.; Noroozi, O.; Wals, A. A SWOT analysis of ChatGPT: Implications for educational practice and research. Innov. Educ. Teach. Int. 2024, 61, 460–474.

- Moore, M. Vygotsky’s Cognitive Development Theory. In Encyclopedia of Child Behavior and Development; Goldstein, S., Naglieri, J.A., Eds.; Springer: Boston, MA, USA, 2011.

- Tzuriel, D. The Socio-Cultural Theory of Vygotsky. In Mediated Learning and Cognitive Modifiability. Social Interaction in Learning and Development; Springer: Cham, Switzerland, 2021.

- Polyportis, A.; Pahos, N. Navigating the Perils of Artificial Intelligence: A Focused Review on ChatGPT and Responsible Research and Innovation. Humanit. Soc. Sci. Commun. 2024, 11, 1–10.

- Alqahtani, T.; Badreldin, H.A.; Alrashed, M.; Alshaya, A.I.; Alghamdi, S.S.; bin Saleh, K.; Alowais, S.A.; Alshaya, O.A.; Rahman, I.; Al Yami, M.S.; et al. The emergent role of artificial intelligence, natural learning processing, and large language models in higher education and research. Res. Soc. Adm. Pharm. 2023, 19, 1236–1242.

- Yan, L.; Sha, L.; Zhao, L.; Li, Y.; Martinez-Maldonado, R.; Chen, G.; Li, X.; Jin, Y.; Gašević, D. Practical and ethical challenges of large language models in education: A systematic scoping review. Br. J. Educ. Technol. 2024, 55, 90–112.

- Kshirsagar, P.R.; Jagannadham, D.B.V.; Alqahtani, H.; Noorulhasan Naveed, Q.; Islam, S.; Thangamani, M.; Dejene, M. Human Intelligence Analysis through Perception of AI in Teaching and Learning. Comput. Intell. Neurosci. 2022, 9160727.

- Senior, J.; Gyarmathy, É. AI and Developing Human Intelligence: Future Learning and Educational Innovation; Routledge: London, UK, 2021.

- Morales-Chan, M.; Amado-Salvatierra, H.R.; Hernandez-Rizzardini, R. AI-Driven Content Creation: Revolutionizing Educational Materials. In Proceedings of the Eleventh ACM Conference on Learning@ Scale, Atlanta, GA, USA, 18–20 July 2024; pp. 556–558.

- Owan, V.J.; Abang, K.B.; Idika, D.O.; Etta, E.O.; Bassey, B.A. Exploring the Potential of Artificial Intelligence Tools in Educational Measurement and Assessment. Eurasia J. Math. Sci. Technol. Educ. 2023, 19, em2307.

- Allen, L.K.; Kendeou, P. ED-AI Lit: An Interdisciplinary Framework for AI Literacy in Education. Policy Insights Behav. Brain Sci. 2024, 11, 3–10.

- Kim, J. Leading Teachers’ Perspective on Teacher-AI Collaboration in Education. Educ. Inf. Technol. 2024, 29, 8693–8724.

- Ji, H.; Han, I.; Ko, Y. A Systematic Review of Conversational AI in Language Education: Focusing on the Collaboration with Human Teachers. J. Res. Technol. Educ. 2023, 55, 48–63.

- Elliott, D. This AI Tutor Could Make Humans “10 Times Smarter”, Its Creator Says. World Economic Forum. 2024. Available online: https://www.weforum.org/agenda/2024/07/ai-tutor-china-teaching-gaps/ (accessed on 4 August 2024).

- Willige, A. From Virtual Tutors to Accessible Textbooks: 5 Ways AI Is Transforming Education. World Economic Forum. 2024. Available online: https://www.weforum.org/agenda/2024/05/ways-ai-can-benefit-education/ (accessed on 4 August 2024).

- Fan, Q.; Qiang, C.Z. Tipping the Scales: AI’s Dual Impact on Developing Nations. World Bank. 2024. Available online: https://blogs.worldbank.org/en/digital-development/tipping-the-scales--ai-s-dual-impact-on-developing-nations (accessed on 4 August 2024).

- OECD. Governing with Artificial Intelligence; OECD Publications: 2024. Available online: https://www.oecd.org/content/dam/oecd/en/publications/reports/2024/06/governing-with-artificial-intelligence_f0e316f5/26324bc2-en.pdf (accessed on 4 August 2024).

- Doshi-Velez, F.; Kim, B. Towards a Rigorous Science of Interpretable Machine Learning. arXiv 2017, arXiv:1702.08608.

| Strengths | Weaknesses |
| --- | --- |
| Opportunities | Threats |

| Cultural and Social Dynamics: | Human–AI Interaction: | Equity and Access: |
| --- | --- | --- |
| Implications for Pedagogy and Knowledge Production: | | |

| Cultural impact: | User experience: | Human dimensions: |
| --- | --- | --- |

| Adoption and User Perception: | Impact on Practices: |
| --- | --- |
| Values and Power Dynamics: | Focus on Non-Users: |

| Common anxieties: | Positive co-creation outlook: |
| --- | --- |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alfirević, N.; Rendulić, D.; Fošner, M.; Fošner, A. Educational Roles and Scenarios for Large Language Models: An Ethnographic Research Study of Artificial Intelligence. Informatics 2024, 11, 78. https://doi.org/10.3390/informatics11040078
