An Approach to Assess the Impact of Tutorials in Video Games

Abstract

1. Introduction

- The core of the approach is a classification framework, which enables us to categorize the tutorials of a selection of video games belonging to a specific genre according to a set of gaming features (e.g., the player’s degree of freedom, the context sensitivity of the tutorial, etc.).

- The second step consists of developing a single-level video game equipped with a tutorial realized in different variants, such that each variant reproduces the mechanisms underlying one of the most common combinations of gaming features identified in the previous step.

- The third step concerns the enactment of a user experiment involving different groups of players, who are instructed to play the main level of the (novel) video game either without any tutorial or after completing exactly one of the tutorial variants.

- The fourth step consists of administering dedicated questionnaires to the players involved in the user experiment. The goal is to understand which tutorial variant was most effective in helping players comprehend the game mechanics, combining players’ evaluations with empirical measures collected during the user experiment.

2. Background and Related Work

2.1. Previous Works on Assessing Tutorials

2.2. Novelties

3. Approach

3.1. Classification Framework

1. Tutorial presence: true if the game under analysis provides an in-game tutorial.

2. Context sensitivity: true if the instructions for a certain action or game mechanic are shown to the players only when they actually need them during gameplay.

3. Freedom: true if the players are given some freedom during the tutorial, e.g., they can make choices in the game.

4. Availability of help: true if, during the tutorial, the game recognizes when the player needs help and reacts through textual, visual, or graphical cues.

5. Printed: true if the game provides printed documentation with the game instructions.

6. On-screen text: true if the game delivers instructions to the players through text.

7. Voice: true if the game delivers instructions through voice.

8. Video: true if the game explains mechanics or commands through dedicated videos, including cut scenes.

9. Helping avatar: true if an in-game assistant supports the player. In-game assistants range from non-playable characters (NPCs) to fictitious characters speaking to the player through a phone call. Note that a helping avatar is considered part of the game world; i.e., it is not simply a voice or a text providing specific instructions.

10. Controller diagram: true if the game shows the players (on request) an image describing how commands are mapped to the input device.

11. Command scheme customization: true if the game lets the player change the command scheme mapping.

12. Skippable: true if the tutorial can be skipped entirely.

13. Story integration: true if the tutorial is integrated into the game’s story.

14. Practice tool presence: true if the players are given a safe place to practice and get used to the commands and game mechanics.
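Since every feature above is a yes/no attribute, a game's tutorial can be treated as a 14-element boolean record, and two games can be compared by listing the features on which they disagree. The sketch below is a hypothetical encoding, not code from the study: the class, the function, and the field names are our own, and the fields follow the column order of the classification table so that a table row can be parsed directly from its Y/N cells.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class TutorialFeatures:
    """Hypothetical encoding of the 14 binary gaming features (table column order)."""
    tutorial_presence: bool
    context_sensitivity: bool
    freedom: bool
    availability_of_help: bool
    on_screen_text: bool
    helping_avatar: bool
    video: bool
    voice: bool
    skippable: bool
    story_integration: bool
    practice_tool: bool
    printed: bool
    command_scheme_customization: bool
    controller_diagram: bool

    @classmethod
    def from_row(cls, cells: str) -> "TutorialFeatures":
        """Parse a whitespace-separated row of Y/N cells, e.g. 'Y Y N ...'."""
        values = cells.split()
        if len(values) != len(fields(cls)) or any(v not in ("Y", "N") for v in values):
            raise ValueError(f"expected {len(fields(cls))} Y/N cells, got {cells!r}")
        return cls(*(v == "Y" for v in values))


def differing_features(a: TutorialFeatures, b: TutorialFeatures) -> list:
    """Names of the features on which two games disagree (a Hamming-style comparison)."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


# Rows copied from the classification table.
destiny = TutorialFeatures.from_row("Y Y N Y Y Y N Y N Y N N Y Y")
overwatch = TutorialFeatures.from_row("Y Y N Y Y Y N Y Y N Y N Y Y")
print(differing_features(destiny, overwatch))
# ['skippable', 'story_integration', 'practice_tool']
```

A cell that is neither Y nor N (such as the "M" in the Team Fortress 2 row) raises an error instead of being silently interpreted.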

3.2. Questionnaires Development and Evaluation Techniques

- Demographic information (DI): users’ experience with video games in general and with the particular genre on which the analysis focuses, along with their age and gender.

- Simple debriefing (SD): whether the user usually skips tutorials in video games when possible and whether, just after having played the game, s/he felt that the tutorial was helpful.

- Learnability of the game (LG): whether the game is easier to learn when users are presented with a particular tutorial.

- How much users felt loaded (UL): questions to estimate the mental workload the game required of the users.

- Performance self-evaluation (PE): how successful users felt while playing the game.

- User experience (UX): users’ impressions of their experience while playing the game.

- Pre-Questionnaire: It was built to obtain demographic information on the participants and to understand their expertise with video games. The pre-questionnaire provides the following items (cf. DI):

  - (a) Which is an email address to which we can send the game? (open answer)
  - (b) What is your age? (open answer)
  - (c) Which is your gender? (female/male/prefer not to say/other)
  - (d) How much are you experienced with video games? (Likert scale from 1 = I never play games to 5 = I always play games)
  - (e) How much are you experienced with the genre of video games under analysis? (Likert scale from 1 = I never play this genre of games to 5 = I always play this genre of games)
  - (f) Please indicate your favorite video game’s genre (open answer, optional).
  - (g) Consent data collection (yes/no).

- Post-Questionnaire: It was built to understand how each tutorial variant can influence the player experience in the context of a certain game genre. The post-questionnaire provides the following items:

  - (a) Questions about learnability (cf. LG):
    - I have perfectly understood how to perform actions (with examples) for playing the game. (Likert scale from 0 = Never to 3 = Always)
    - The information provided throughout the game (with examples) are clear. (Likert scale from 0 = Never to 3 = Always)
  - (b) Debriefing questions (cf. SD):
    - Before playing the game, would you have skipped the tutorial if it was possible? (yes/no)
    - Please explain in a few lines the reason of your choice (open answer, optional).
    - After you have finished playing the game, do you think that the tutorial was useful? (yes/no).
    - Please explain in a few lines the reason for your choice (open answer, optional).
  - (c) NASA Task Load Index [30], in which every item is rated on a Likert scale from 1 (Low) to 10 (High) (cf. UL):
    - How much mental and perceptual activity was required (e.g., thinking, deciding, calculating, remembering, looking, searching, etc.) to play the game?
    - How much pressure did you feel, due to the pace at which the game is set, or due to the setting of the game?
    - How successful do you think you were in playing the game?
    - How hard did you have to mentally work to accomplish your level of performance?
    - How frustrated (e.g., insecure, discouraged, irritated, stressed and annoyed) did you feel while playing the game?
  - (d) User Experience Questionnaire [29]: it consists of pairs of contrasting attributes, each pair anchoring the two opposite ends of a seven-point scale from (1) to (7). Users express their opinion of the game by choosing the value that most closely reflects their impression (cf. UX).
    - (1) Annoying–(7) Enjoyable
    - (1) Not understandable–(7) Understandable
    - (1) Creative–(7) Dull
    - (1) Valuable–(7) Inferior
    - (1) Boring–(7) Exciting
    - (1) Not interesting–(7) Interesting
    - (1) Easy to learn–(7) Difficult to learn
    - (1) Unpredictable–(7) Predictable
    - (1) Fast–(7) Slow
    - (1) Inventive–(7) Conventional
    - (1) Obstructive–(7) Supportive
    - (1) Good–(7) Bad
    - (1) Complicated–(7) Easy
    - (1) Unlikable–(7) Pleasing
    - (1) Usual–(7) Leading edge
    - (1) Unpleasant–(7) Pleasant
    - (1) Secure–(7) Not secure
    - (1) Motivating–(7) Demotivating
    - (1) Meets expectations–(7) Does not meet expectations
    - (1) Inefficient–(7) Efficient
    - (1) Clear–(7) Confusing
    - (1) Impractical–(7) Practical
    - (1) Organized–(7) Cluttered
    - (1) Attractive–(7) Unattractive
    - (1) Friendly–(7) Unfriendly
    - (1) Conservative–(7) Innovative

- File Logging: to obtain empirical measures of users’ performance directly from the game. The logged parameters may vary greatly depending on the genre of the video games on which the study focuses, but they should include every parameter related to users’ in-game actions that can be captured. In particular, we chose to log the following parameters:

  - Time spent in the tutorial.
  - Time spent in each level.
  - Number of times the user tried each level.
  - Number of deaths in each level (relevant only for some game genres).
  - Number of deaths in the tutorial (relevant only for some game genres).
  - Time stamps for important events.
  - Number of enemies killed (relevant only for some game genres).
  - Final result (defeat/victory/drop-out).
  - Empirical measure of performance (dependent on the game prototype).
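A per-participant log covering these parameters can be built from a single stream of timestamped events, from which counts and durations are derived afterwards. The sketch below is a minimal illustration under our own assumptions: the `GameLog` class, the event names (`level_start`, `level_end`, `death`, `enemy_killed`, `victory`, `defeat`), and the convention of logging the tutorial as a level named `"tutorial"` are hypothetical and do not come from the study's prototype. The game-specific performance measure is left out because it depends on the prototype.

```python
import json
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class GameLog:
    """Hypothetical per-participant log; event names are illustrative only."""
    participant_id: str
    # Each event is (timestamp in seconds, event name, level name); the list
    # itself doubles as the "time stamps for important events".
    events: list = field(default_factory=list)

    def record(self, t: float, event: str, level: str) -> None:
        self.events.append((t, event, level))

    def summary(self) -> dict:
        open_attempts = {}                 # level -> start time of the current attempt
        time_in_level = defaultdict(float)
        attempts, deaths, kills = Counter(), Counter(), Counter()
        result = "drop-out"                # kept unless a victory/defeat event is logged
        for t, event, level in self.events:
            if event == "level_start":
                open_attempts[level] = t
                attempts[level] += 1
            elif event == "level_end":     # assumed to be logged on death, quit and completion alike
                time_in_level[level] += t - open_attempts.pop(level)
            elif event == "death":
                deaths[level] += 1
            elif event == "enemy_killed":
                kills[level] += 1
            elif event in ("victory", "defeat"):
                result = event
        return {
            "participant": self.participant_id,
            "time_in_level_s": dict(time_in_level),
            "attempts": dict(attempts),
            "deaths": dict(deaths),
            "enemies_killed": dict(kills),
            "result": result,
        }

    def to_json(self) -> str:
        """Serialize the summary together with the raw events, e.g. for one file per participant."""
        return json.dumps({"summary": self.summary(), "events": self.events})


log = GameLog("P01")
for t, event, level in [
    (0, "level_start", "tutorial"), (120, "level_end", "tutorial"),
    (125, "level_start", "main"), (150, "death", "main"), (150, "level_end", "main"),
    (155, "level_start", "main"), (160, "enemy_killed", "main"),
    (170, "enemy_killed", "main"), (300, "victory", "main"), (300, "level_end", "main"),
]:
    log.record(t, event, level)
print(log.summary()["time_in_level_s"])  # {'tutorial': 120.0, 'main': 170.0}
```

Keeping the raw events alongside the summary means that measures not anticipated at logging time can still be recomputed later.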

4. Use Case

4.1. Applying the Classification Framework

- The most popular combination, with 10 entries in the table, consisted of: presence of tutorial, context sensitivity, NOT freedom, help availability, text usage, voice usage, NOT video usage, helping avatar, NOT skippable, story integration, NOT practice tool, NOT printed, command scheme customization and controller diagram. This combination of gaming features is commonly found in narrative-driven FPSs, such as “Destiny”.

- Once the games belonging to the previous selection are excluded, the table shows great diversity, although some video games differ in only one or two parameters. Thus, we selected “Overwatch” (and its combination of gaming features) as representative of the games not belonging to the first selection. The second combination therefore consisted of: presence of tutorial, context sensitivity, NOT freedom, help availability, text usage, voice usage, NOT video usage, helping avatar, skippable, NOT story integration, practice tool, NOT printed, command scheme customization and controller diagram. Games of this second group can be found by searching the table for rows where “Practice Tool” is true.
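Finding the most popular combination amounts to grouping the table rows by their full Y/N vector and counting identical vectors, while the second group can be located by filtering on a single column. The sketch below runs both steps on six rows copied from the classification table, so its counts only illustrate the procedure and are not meant to reproduce the figures reported above; the function names are hypothetical.

```python
from collections import Counter

# Six rows copied from the classification table (cells in table column order).
ROWS = {
    "Destiny":   "Y Y N Y Y Y N Y N Y N N Y Y",
    "Destiny 2": "Y Y N Y Y Y N Y N Y N N Y Y",
    "Portal":    "Y Y N Y Y Y N Y N Y N N Y Y",
    "Overwatch": "Y Y N Y Y Y N Y Y N Y N Y Y",
    "Paladins":  "Y Y N Y Y Y N Y Y N Y N Y Y",
    "Valorant":  "Y Y N Y Y N N Y Y N Y N Y Y",
}
PRACTICE_TOOL = 10  # 0-based index of the "Practice Tool" column


def most_common_combination(rows: dict) -> tuple:
    """Return (combination, number of games, sorted game names) for the commonest Y/N vector."""
    vectors = {name: tuple(cells.split()) for name, cells in rows.items()}
    # Counter.most_common orders ties by first occurrence, so ties favour earlier rows.
    combo, count = Counter(vectors.values()).most_common(1)[0]
    members = sorted(name for name, vec in vectors.items() if vec == combo)
    return combo, count, members


def games_with_practice_tool(rows: dict) -> list:
    """Games whose 'Practice Tool' cell is Y."""
    return sorted(name for name, cells in rows.items() if cells.split()[PRACTICE_TOOL] == "Y")


_, count, members = most_common_combination(ROWS)
print(count, members)                   # 3 ['Destiny', 'Destiny 2', 'Portal']
print(games_with_practice_tool(ROWS))   # ['Overwatch', 'Paladins', 'Valorant']
```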

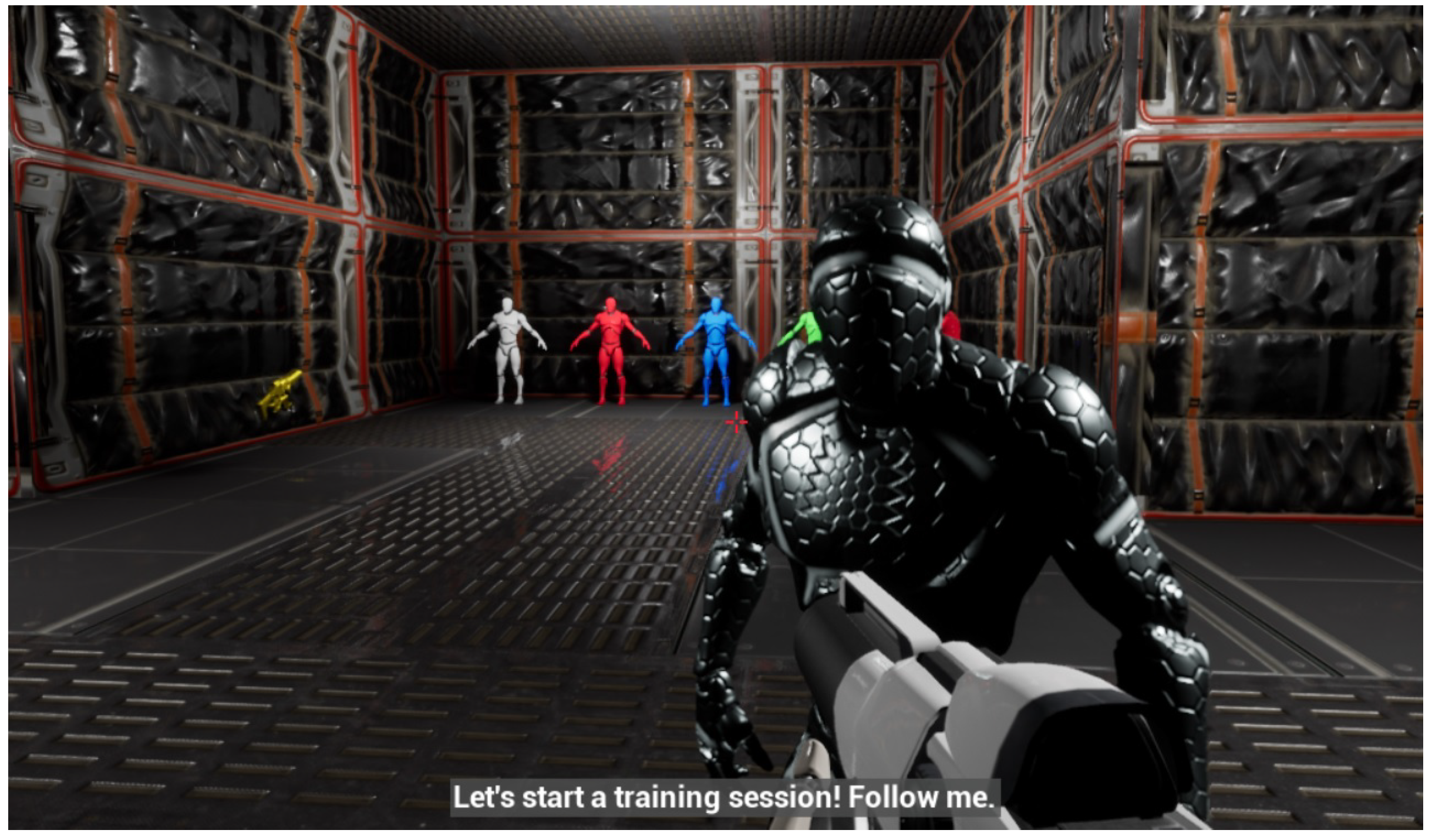

4.2. Game Prototype Development

- Unlimited ammunition with no reload time;

- A single bullet of the matching color is enough to kill an enemy;

- Players can pick up golden guns, which let them shoot golden bullets that kill any enemy for 10 s;

- Players die if an enemy stays within a specific radius of them for some time;

- The last room of the level contains the final boss, which requires 10 shots of a special gun to be killed;

- Doors can be opened by shooting colored buttons;

- In the main level, enemies spawn in infinite waves;

- Adaptive difficulty gently encourages users to combine exploration and shooting.
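Several of these rules reduce to simple state checks. The sketch below is our own illustration, not the prototype's code: all class and function names are hypothetical, the 10 s golden-gun window and the 10 boss shots come from the list above, and the danger radius and exposure time are invented placeholders because the text does not specify them. We also assume the 10 s window starts when the golden gun is picked up.

```python
import math
from dataclasses import dataclass

GOLDEN_GUN_DURATION_S = 10.0  # from the text: golden bullets work for 10 s
BOSS_SHOTS_REQUIRED = 10      # from the text: the boss needs 10 special-gun shots
DANGER_RADIUS = 2.0           # hypothetical: radius not given in the text
DANGER_TIME_S = 1.5           # hypothetical: exposure time not given in the text


@dataclass
class Player:
    golden_until: float = float("-inf")  # time at which the golden-gun effect ends

    def pick_up_golden_gun(self, now: float) -> None:
        self.golden_until = now + GOLDEN_GUN_DURATION_S

    def has_golden_bullets(self, now: float) -> bool:
        return now < self.golden_until


@dataclass
class Enemy:
    color: str
    alive: bool = True


def shoot(player: Player, enemy: Enemy, bullet_color: str, now: float) -> bool:
    """One matching-color bullet, or any golden bullet, kills the enemy. Returns True on a kill."""
    if enemy.alive and (player.has_golden_bullets(now) or bullet_color == enemy.color):
        enemy.alive = False
        return True
    return False


@dataclass
class Boss:
    shots_taken: int = 0

    def hit_with_special_gun(self) -> bool:
        """Count a special-gun hit; returns True once the boss is defeated."""
        self.shots_taken += 1
        return self.shots_taken >= BOSS_SHOTS_REQUIRED


class ProximityTimer:
    """Tracks how long one enemy has stayed within DANGER_RADIUS of the player."""

    def __init__(self) -> None:
        self.inside_since = None

    def update(self, player_pos, enemy_pos, now: float) -> bool:
        """Return True when the player should die."""
        if math.dist(player_pos, enemy_pos) <= DANGER_RADIUS:
            if self.inside_since is None:
                self.inside_since = now
            return now - self.inside_since >= DANGER_TIME_S
        self.inside_since = None  # leaving the radius resets the timer
        return False
```

One `ProximityTimer` per enemy keeps the "stays within a radius for some time" rule independent of how many enemies are nearby.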

4.3. User Testing

4.3.1. Recruitment Phase

4.3.2. Pre-Questionnaire

4.3.3. Testing Phase

- G1: “Narrative Tutorial Group” (15 participants): played a version of the game equipped with the first tutorial variant followed by the main game level.

- G2: “Simple Tutorial Group” (16 participants): played a version of the game equipped with the second tutorial variant followed by the main game level.

- G3: “No Tutorial Group” (15 participants): played a version of the game equipped only with the main game level (no tutorial available).

4.3.4. Post-Questionnaire and File Logging

4.4. Results

- Users in G3 (no tutorial) felt more successful during the gaming experience but perceived the game pace as slower and, in general, found the game mechanics more difficult to learn than the other groups did;

- Users in G1 (first tutorial variant) found the game friendlier than users in G3 did, and more understandable than users in G2 and G3 did.
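Because the UEQ pairs alternate their polarity (in "Creative–Dull" the favourable pole is (1), in "Annoying–Enjoyable" it is (7)), raw scores have to be read item by item: "G1 < G3" on Easy/Difficult to learn means that G1 found the game easier to learn, whereas "G1 > G3" on Not-Understandable/Understandable means that G1 found it more understandable. A common way to make items comparable, following usual UEQ practice, is to shift answers to the range -3 to +3 and flip the items whose positive pole is on the left, so that +3 is always favourable. The sketch below does this; the item keys are our own ASCII labels and the set of left-positive items was read off the UEQ list in Section 3.2.

```python
from statistics import fmean

# UEQ pairs from Section 3.2 whose favourable pole is the LEFT one, scored (1).
LEFT_POSITIVE = {
    "Creative-Dull", "Valuable-Inferior", "Easy to learn-Difficult to learn",
    "Fast-Slow", "Inventive-Conventional", "Good-Bad", "Secure-Not secure",
    "Motivating-Demotivating", "Meets expectations-Does not meet expectations",
    "Clear-Confusing", "Organized-Cluttered", "Attractive-Unattractive",
    "Friendly-Unfriendly",
}


def normalize(item: str, raw: int) -> int:
    """Map a raw 1..7 answer to -3..+3 so that +3 is always the favourable pole."""
    if not 1 <= raw <= 7:
        raise ValueError(f"UEQ answers range from 1 to 7, got {raw}")
    centered = raw - 4
    return -centered if item in LEFT_POSITIVE else centered


def mean_normalized(item: str, answers: list) -> float:
    """Mean normalized score of one item over a group of participants."""
    return fmean(normalize(item, a) for a in answers)


print(mean_normalized("Easy to learn-Difficult to learn", [2, 3, 1]))  # 2.0
print(normalize("Annoying-Enjoyable", 6))                              # 2
```

Only the left-positive items need to be listed; every other pair already has its favourable pole at (7).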

5. Discussion, Future Work and Concluding Remarks

- AAA game modalities are currently being pushed as a standard for the future [35]. We believe that an approach for realizing effective tutorials for AAA games can also benefit tutorial design for other kinds of games in which carefully crafting a tutorial requires significant effort.

- AAA game developers are less likely to invest substantial resources in an aspect of the game (i.e., the tutorial design) that is time-consuming, provides less entertainment to the end user, or can potentially be skipped [8]. In this respect, our approach aims to lighten the burden of designing effective tutorials by supporting game designers in selecting the most suitable subset of components for tutorial creation.

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Bergonse, R. Fifty Years on, What exactly is a video game? An essentialistic definitional approach. Comput. Games J. 2017, 6, 239–255.
2. Veneruso, S.V.; Ferro, L.S.; Marrella, A.; Mecella, M.; Catarci, T. CyberVR: An Interactive Learning Experience in Virtual Reality for Cybersecurity Related Issues. In Proceedings of the International Conference on Advanced Visual Interfaces, Salerno, Italy, 28 September–2 October 2020.
3. Ferro, L.S.; Sapio, F. Another Week at the Office (AWATO)—An Interactive Serious Game for Threat Modeling Human Factors. In Proceedings of the International Conference on Human-Computer Interaction, Copenhagen, Denmark, 5–8 October 2020; Springer: Berlin/Heidelberg, Germany, 2020.
4. van der Stappen, A.; Liu, Y.; Xu, J.; Yu, X.; Li, J.; Van Der Spek, E.D. MathBuilder: A collaborative AR math game for elementary school students. In Proceedings of the Annual Symposium on Computer-Human Interaction in Play Companion—Extended Abstracts, Barcelona, Spain, 22–25 October 2019.
5. Squire, K.D. Video game–based learning: An emerging paradigm for instruction. Perform. Improv. Q. 2008, 21, 7–36.
6. Tannahill, N.; Tissington, P.; Senior, C. Video games and higher education: What can “Call of Duty” teach our students? Front. Psychol. 2012, 3, 210.
7. Andersen, E.; O’Rourke, E.; Liu, Y.E.; Snider, R.; Lowdermilk, J.; Truong, D.; Cooper, S.; Popovic, Z. The impact of tutorials on games of varying complexity. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012.
8. White, M.M. Learn to Play: Designing Tutorials for Video Games; CRC Press: Boca Raton, FL, USA, 2014.
9. De Freitas, S. Are games effective learning tools? A review of educational games. J. Educ. Technol. Soc. 2018, 21, 74–84.
10. Gamito, S.; Martinho, C. Highlight the Path Not Taken to Add Replay Value to Digital Storytelling Games. In Proceedings of the International Conference on Interactive Digital Storytelling, Tallinn, Estonia, 7–10 December 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 61–70.
11. Roth, C.; Vermeulen, I.; Vorderer, P.; Klimmt, C. Exploring replay value: Shifts and continuities in user experiences between first and second exposure to an interactive story. Cyberpsychology Behav. Soc. Netw. 2012, 15, 378–381.
12. Phoenix, D.A. How to Add Replay Value to Your Educational Game. J. Appl. Learn. Technol. 2014, 4, 20–23.
13. Ballew, T.V.; Jones, K.S. Designing Enjoyable Video games: Do Heuristics Differentiate Bad from Good? In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; SAGE Publications Sage CA: Los Angeles, CA, USA, 2006.
14. Johnson, D.; Gardner, M.J.; Perry, R. Validation of two game experience scales: The player experience of need satisfaction (PENS) and game experience questionnaire (GEQ). Int. J. Hum. Comput. Stud. 2018, 118, 38–46.
15. Klimmt, C.; Blake, C.; Hefner, D.; Vorderer, P.; Roth, C. Player performance, satisfaction, and video game enjoyment. In Proceedings of the International Conference on Entertainment Computing; Springer: Berlin/Heidelberg, Germany, 2009.
16. Green, M.C.; Khalifa, A.; Barros, G.A.; Togelius, J. “Press Space to Fire”: Automatic Video Game Tutorial Generation. arXiv 2018, arXiv:1805.11768.
17. Sweetser, P.; Wyeth, P. GameFlow: A model for evaluating player enjoyment in games. Comput. Entertain. 2005, 3, 3.
18. Federoff, M.A. Heuristics and Usability Guidelines for the Creation and Evaluation of Fun in Video Games. Ph.D. Thesis, The Australian National University, Acton, Australia, December 2004.
19. Ryan, R.M.; Rigby, C.S.; Przybylski, A. The motivational pull of video games: A self-determination theory approach. Motiv. Emot. 2006, 30, 344–360.
20. Poels, K.; de Kort, Y.A.; IJsselsteijn, W.A. D3.3: Game Experience Questionnaire: Development of a Self-Report Measure to Assess the Psychological Impact of Digital Games; Technische Universiteit Eindhoven: Eindhoven, The Netherlands, 2007.
21. Aytemiz, B.; Karth, I.; Harder, J.; Smith, A.M.; Whitehead, J. Talin: A Framework for Dynamic Tutorials Based on the Skill Atoms Theory. In Proceedings of the AIIDE, Edmonton, AB, Canada, 13–17 November 2018.
22. Paras, B. Learning to Play: The Design of In-Game Training to Enhance Video game Experience. Ph.D. Thesis, School of Interactive Arts and Technology, Simon Fraser University, Burnaby, BC, Canada, 2006.
23. Frommel, J.; Fahlbusch, K.; Brich, J.; Weber, M. The effects of context-sensitive tutorials in virtual reality games. In Proceedings of the Annual Symposium on Computer-Human Interaction in Play, Amsterdam, The Netherlands, 15–18 October 2017.
24. Zaidi, S.F.M.; Moore, C.; Khanna, H. Towards integration of user-centered designed tutorials for better virtual reality immersion. In Proceedings of the 2nd International Conference on Image and Graphics Processing, Beijing, China, 23–25 August 2019.
25. Humayoun, S.R.; Catarci, T.; de Leoni, M.; Marrella, A.; Mecella, M.; Bortenschlager, M.; Steinmann, R. Designing mobile systems in highly dynamic scenarios: The WORKPAD methodology. Knowl. Technol. Policy 2009, 22, 25–43.
26. Humayoun, S.R.; Catarci, T.; de Leoni, M.; Marrella, A.; Mecella, M.; Bortenschlager, M.; Steinmann, R. The WORKPAD user interface and methodology: Developing smart and effective mobile applications for emergency operators. In Proceedings of the International Conference on Universal Access in Human-Computer Interaction, Las Vegas, NV, USA, 15–20 July 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 343–352.
27. Marrella, A.; Mecella, M.; Russo, A. Collaboration on-the-field: Suggestions and beyond. In Proceedings of the 8th International Conference on Information Systems for Crisis Response and Management (ISCRAM 2011), Lisbon, Portugal, 8–11 May 2011.
28. Dix, A. Statistics for HCI: Making Sense of Quantitative Data. Synth. Lect. Hum. Centered Inform. 2020, 13, 1–181.
29. Hinderks, A.; Schrepp, M.; Mayo, F.J.D.; Escalona, M.J.; Thomaschewski, J. Developing a UX KPI based on the user experience questionnaire. Comput. Stand. Interfaces 2019, 65, 38–44.
30. Hart, S.G. NASA-task load index (NASA-TLX); 20 years later. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; Sage Publications: Thousand Oaks, CA, USA, 2006; Volume 50, pp. 904–908.
31. Sauro, J.; Lewis, J.R. Quantifying the User Experience: Practical Statistics for User Research; Morgan Kaufmann: Burlington, MA, USA, 2016.
32. Rogers, S. Level Up! The Guide to Great Video Game Design; John Wiley & Sons: Hoboken, NJ, USA, 2014.
33. Fullerton, T. Game Design Workshop: A Playcentric Approach to Creating Innovative Games; CRC Press: Boca Raton, FL, USA, 2014.
34. Chakravarti, I.M.; Laha, R.G.; Roy, J. Handbook of methods of applied statistics. In Wiley Series in Probability and Mathematical Statistics (USA); Wiley: Hoboken, NJ, USA, 1967.
35. Bernevega, A.; Gekker, A. The Industry of Landlords: Exploring the Assetization of the Triple-A Game. Games Cult. 2022, 17, 47–69.

| Game | Tutorial Presence | Context Sensitivity | Freedom | Availability of Help | Text | Helping Avatar | Video | Voice | Skippable | Story Integration | Practice Tool | Printed | Command Scheme Customization | Controller Diagram |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Overwatch | Y | Y | N | Y | Y | Y | N | Y | Y | N | Y | N | Y | Y |
| Apex Legends | Y | Y | Y | Y | Y | Y | N | Y | N | N | Y | N | Y | Y |
| Destiny | Y | Y | N | Y | Y | Y | N | Y | N | Y | N | N | Y | Y |
| Destiny 2 | Y | Y | N | Y | Y | Y | N | Y | N | Y | N | N | Y | Y |
| COD MW2 | Y | Y | N | N | Y | Y | N | Y | N | N | N | N | Y | Y |
| COD Ghosts | Y | Y | N | N | Y | Y | N | N | N | Y | N | N | Y | Y |
| COD BO2 | Y | Y | N | N | Y | N | N | N | N | Y | N | N | Y | Y |
| Doom 2016 (Normal) | Y | Y | N | N | Y | N | N | N | N | Y | N | N | Y | Y |
| Doom 2016 (Hard) | N | Y | N | N | N | N | N | N | N | N | N | N | Y | Y |
| Mirror’s Edge | Y | Y | N | Y | Y | Y | Y | Y | N | N | Y | N | Y | Y |
| Mirror’s Edge Catalyst | Y | Y | N | Y | Y | Y | Y | Y | N | Y | Y | N | Y | Y |
| Bioshock | Y | Y | Y | Y | Y | Y | Y | Y | N | Y | N | N | Y | Y |
| Bioshock 2 | Y | Y | Y | Y | Y | Y | Y | N | N | Y | N | N | Y | Y |
| Bioshock Infinite | Y | Y | Y | Y | Y | N | Y | N | N | Y | N | N | Y | Y |
| Portal | Y | Y | N | Y | Y | Y | N | Y | N | Y | N | N | Y | Y |
| Portal 2 | Y | Y | N | Y | Y | Y | N | Y | N | Y | N | N | Y | Y |
| Spec Ops: the Line | Y | Y | N | N | Y | Y | N | Y | N | Y | N | N | Y | Y |
| Half-life | N | N | N | N | N | N | N | N | N | N | N | Y | Y | Y |
| Half-life 2 | Y | Y | N | Y | Y | Y | N | Y | N | Y | N | N | Y | Y |
| Singularity | Y | Y | N | Y | Y | Y | N | Y | N | Y | N | N | Y | Y |
| Fallout 3 | Y | Y | Y | Y | Y | Y | Y | N | N | Y | N | N | Y | Y |
| Fallout 4 | Y | Y | Y | N | Y | N | Y | N | N | Y | N | N | Y | Y |
| TES3: Morrowind | Y | Y | Y | N | Y | N | N | N | N | Y | N | N | Y | Y |
| TES4: Oblivion | Y | Y | Y | Y | Y | Y | N | N | N | Y | N | N | Y | Y |
| TES5: Skyrim | Y | Y | Y | Y | Y | Y | N | Y | N | Y | N | N | Y | Y |
| Metro Exodus | Y | Y | N | Y | Y | N | N | N | N | Y | N | N | Y | Y |
| Battlefield 1 | Y | Y | N | Y | Y | Y | N | Y | N | N | N | N | Y | Y |
| SW Battlefront 2 | Y | Y | N | Y | Y | Y | Y | Y | N | Y | N | N | Y | Y |
| STALKER: Shadow of Chernobyl | Y | Y | N | Y | Y | Y | Y | Y | N | Y | N | N | Y | Y |
| Valorant | Y | Y | N | Y | Y | N | N | Y | Y | N | Y | N | Y | Y |
| Team Fortress 2 | Y | Y | M | Y | Y | N | Y | N | Y | N | Y | N | Y | Y |
| Paladins | Y | Y | N | Y | Y | Y | N | Y | Y | N | Y | N | Y | Y |

| Element | First Tutorial Variant | Second Tutorial Variant |
|---|---|---|
| Tutorial Presence | yes | yes |
| Context Sensitivity | yes | yes |
| Freedom | no | no |
| Availability of Help | yes | yes |
| Text | yes | yes |
| Helping Avatar | yes | yes |
| Voice | yes | yes |
| Video | no | no |
| Skippable | no | yes |
| Story Integration | yes | no |
| Practice Tool | no | yes |
| Printed | no | no |
| Command Scheme Customization | yes | yes |
| Controller Diagram | yes | yes |

| Measure (scale) | ANOVA | Pairwise t-tests |
|---|---|---|
| Perceived level of success (1–10) | F = 4.87, p = 0.012 | G3 > G1: p = 0.002; G3 > G2: p = 0.018 |
| Not-Understandable/Understandable (1–7) | F = 5.01, p = 0.011 | G1 > G3: p = 0.003; G1 > G2: p = 0.003 |
| Easy/Difficult to learn (1–7) | F = 3.54, p = 0.038 | G1 < G3: p = 0.012; G2 < G3: p = 0.026 |
| Fast/Slow pace (1–7) | F = 4.53, p = 0.016 | G1 < G3: p = 0.004; G2 < G3: p = 0.015 |
| Friendly/Unfriendly (1–7) | F = 3.66, p = 0.034 | G1 < G3: p = 0.004 |
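For reference, the F-value in the ANOVA column is the ratio of the between-group mean square to the within-group mean square. Assuming all participants of the three groups (15, 16 and 15 players, i.e. N = 46) enter a given analysis, the test has k - 1 = 2 and N - k = 43 degrees of freedom. The sketch below computes F from raw scores with the standard library only; it is an illustration, not the authors' analysis script, and turning F into a p-value additionally needs the F distribution (for example through `scipy.stats.f_oneway`, which returns both the statistic and the p-value).

```python
from statistics import fmean


def one_way_anova_f(groups: list) -> tuple:
    """Return (F, df_between, df_within) for a one-way ANOVA over lists of scores."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = fmean(x for g in groups for x in g)
    # Between-group sum of squares: spread of the group means around the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of each score around its own group mean.
    ss_within = 0.0
    for g in groups:
        m = fmean(g)
        ss_within += sum((x - m) ** 2 for x in g)
    df_between, df_within = k - 1, n - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


print(one_way_anova_f([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # (27.0, 2, 6)
```

The toy groups have means 2, 5 and 8, so the between-group mean square is 54 / 2 = 27 and the within-group mean square is 6 / 6 = 1.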

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Benvenuti, D.; Ferro, L.S.; Marrella, A.; Catarci, T. An Approach to Assess the Impact of Tutorials in Video Games. Informatics 2023, 10, 6. https://doi.org/10.3390/informatics10010006
