Abstract

We analyse the ruin probabilities for a renewal insurance risk process with inter-arrival times depending on the claims that arrive within a fixed (past) time window. This dependence can be explained through a regenerative structure. The main inspiration for the model comes from the bonus-malus (BM) feature of car insurance pricing. We first discuss asymptotic results for ruin probabilities under different regimes of claim distributions. For numerical results, we recognise an embedded Markov additive process, and via an appropriate change of measure, ruin probabilities can be computed from closed-form formulae. Additionally, we employ importance sampling simulations to estimate ruin probabilities, which further permit an in-depth analysis of a few concrete cases.

1. Introduction

With the ever-growing popularity of bonus-malus systems in the car insurance industry, one interesting question is whether they really reduce the associated risk and by how much. A common measure of the risks that an insurer is exposed to is the so-called ruin probability. Motivated by such questions, we derive ruin probabilities in models with a dependence structure that captures the key feature of a simple bonus system, namely that there is a premium discount when no claims are observed in the previous year. Inspired by this feature, we identify a regenerative structure within inter-claim times that can describe the properties of an analogous bonus system, also referred to as a no claims discount (NCD) system.

In the classical collective risk insurance model, the independence among inter-claim times is one of the crucial assumptions. However, in reality, one can easily find violations of this assumption. For instance, in car insurance, if there has been a long waiting time before a claim, the next inter-arrival time can be long as well, because the policyholders are potentially “good drivers”. It could also be the other way around: some policyholders only start to use their cars a long time after purchasing them, so that claims suddenly arrive more frequently after a long silence and a shorter interval before the next claim can be expected. Therefore, a natural way to relax this assumption is to introduce a dependence structure between inter-arrival times, and that is the main purpose of this paper.

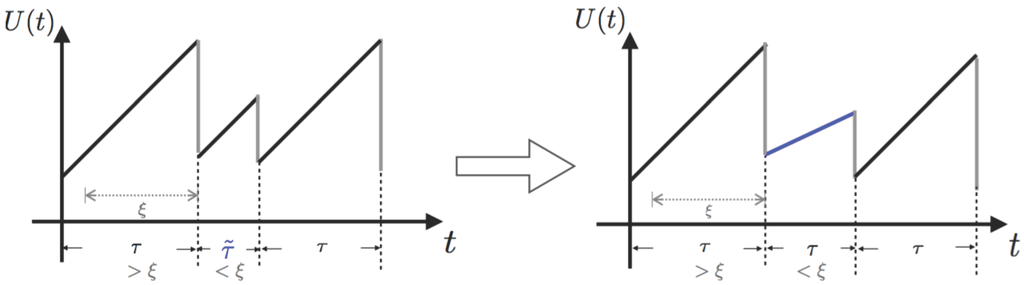

For the simplest case, comparing the current time since the last claim with a fixed threshold ξ (either exceeded or not exceeded) determines the distribution for the time until the next claim. A risk-seeking insurer can set a larger mean for the following claim interval, whereas a risk-averse one will possibly choose a lower mean. We will use τ̃ to denote the subsequent random inter-arrival time when the current one is larger than ξ. The graph on the left in Figure 1 shows a sample path of this underlying risk surplus process.

Figure 1.

Model transform.

Figure 1 illustrates how a change of inter-arrival time distribution could equivalently be represented by a modification in the premium rate. Let us denote an arbitrary inter-claim time by τ. The left graph shows a risk surplus process with inter-arrival times switching between two different random variables according to the dependence structure addressed earlier, whereas the graph on the right presents a two-level premium varying risk surplus process where the premium rate decreases after a relatively long wait, which exceeds a fixed number ξ, i.e., a bonus for no claims. After that, since the second waiting interval is less than ξ, the premium rate returns to its original value, and so on and so forth.

Clearly, switching the inter-arrival times between two different random variables can be reflected by adjustments to the premium rate, as long as the increment of the surplus process over this time interval is kept the same, where the reserve level as a function of time is defined by Equation (1). That is to say, whenever a large inter-arrival time that is above ξ is witnessed, the next inter-claim time will switch to a random variable with a different distribution. Notice that the two models presented in Figure 1 are equivalent only when working with ruin probabilities under an infinite-time horizon, but not for a finite-time case.

These graphs suggest that our proposed model could also, to some extent, mimic the nature of a no claim discount (NCD) system. This term refers to a simplified bonus-malus (BM) system, namely a bonus system with only premium discounts when no claims are witnessed in a fixed time window. We can thus naturally draw the analogy between a bonus-only system and the risk model with these two-level varying premiums. Although we cannot equate them because NCD systems are for individual risks, the feature of varying premiums can be generalised to the collective level. For instance, in the case where no claims are reported to the insurer in a fixed time period, the premium rate could see a slight decrease because of all individuals’ premium discounts. Looking back at Figure 1, τ̃ could be assumed to have a smaller mean than τ, which is the interpretation resulting from the effect of a drop in the premium rate. The opposite case implies a malus-only system, which is not often seen in practice. However, as mentioned before, a conservative insurer might choose this option. Additionally, for computational reasons, we assume that the switch between the two inter-arrival time distributions only happens after a jump rather than precisely at the end of the fixed window.

In risk theory, one of the extensions of the classical collective risk model involves the relaxation of the assumption of independence. Hence, dependence models have been introduced under a risk theory framework in various ways. For a dependence within claims, Albrecher et al. [1] calculated ruin probabilities by using Archimedean survival copulas. Through a copula method, Valdez and Mo [2] also worked with risk models with dependence among claim occurrences, focusing more on the simulation side. The dependence between claim sizes and inter-arrival times has also been analysed. Albrecher and Boxma [3] first considered the case when the inter-claim time depends on the previous claim size with a random threshold. Due to the complexity of inverting the Laplace transform, they could only obtain the results in terms of Laplace transforms. Kwan and Yang [4] then studied such a dependence structure with a deterministic threshold, and they were able to express the ruin probability explicitly by solving a system of ordinary delay differential equations. Furthermore, Constantinescu et al. [5] also considered risk processes with various forms of dependence between inter-arrival times and claim sizes. That work, though, aims at adjusting premiums dynamically in order to meet the solvency requirement on the ruin probability for a fixed initial reserve using the idea developed by Dubey [6], rather than calculating the probability of ruin.

On the other hand, we include some references here to remind the reader of the concept of bonus-malus systems, as well as research that has attempted to study the risks associated with such systems. Most of the research on bonus-malus systems relies on the construction of a discrete-time Markov chain in order to compute different levels of prices; see, e.g., Lemaire [7]. Due to the possibility of slow convergence to stationarity, Asmussen [8] added an age-correction and implemented numerical analyses for various bonus-malus systems in different countries. Instead of considering premium levels depending only on claims in the previous period, it has alternatively been suggested to take into account the entire history, where a Bayesian view can be adopted as in Asmussen [8]. A recent work by Ni et al. [9] also applied the Bayesian approach to reflect this idea and obtained premiums in a closed form when claim severities are assumed to be Weibull distributed.

There have been a few papers investigating ruin probabilities for a bonus-malus system recently. Working with real data, Afonso et al. [10] calculated ruin probabilities under a realistic bonus-malus framework and found an increase in ruin probability for a closed portfolio due to reduced income. The idea in their article, which was earlier developed in Afonso et al. [11], is that they first analyse ruin probabilities for a single year conditioning on the reserve levels at the beginning and the end of the year. Since the premium rate is kept constant within a year, a classical technique can be borrowed. Then, they use approximations and estimate ruin probabilities numerically. On the other hand, the incorporation of the feature of a bonus-malus system into a risk model is similar to a variation in the premium rates after a claim arrival. For such a dependence, by employing a Bayesian estimator in a risk model and using a comparison method with the classical case, Dubey [6] and Li et al. [12] could interpret ruin probabilities in terms of a classic one. In this paper, we follow a similar idea. However, our work here models the dependence between premium rates and the claim arrivals within a fixed time window. In our opinion, this better mimics the key feature of a bonus system. The consequence of this approach is that it implies modelling the dependence between two consecutive inter-claim times based on a fixed threshold. This serves as the main goal of this work. Furthermore, allowing such dependence obviously violates the renewal property of the classical model. Nevertheless, a regenerative structure can be identified so that analyses are possible. For the literature on regenerative processes, we refer to Palmowski and Zwart [13,14].

Aiming at studying the ruin probability for a risk model with dependent inter-claim times, we investigate this model under a regenerative framework using various methods. We start by looking at some asymptotic results, adopting theories developed for general regenerative processes in Palmowski and Zwart [13,14]. For the Cramér case, the probability of ruin still shows exponential tails. All asymptotic results are shown for a general situation where the distribution of each random variable is not specified. Then, by constructing an appropriate Markov additive process and using the importance sampling method, we can run simulations to get numerical results for the case where inter-claim times and claims are exponentially distributed. In the end, we employ crude Monte Carlo simulations to compare the underlying ruin probability with a classic one as a case analysis. In addition, we look at the influence of claim distributions on the ruin probabilities, where we find that there are no significant differences in ruin probabilities when switching between exponential and Pareto claims. In general, the results suggest that the use of bonus systems may not act in favour of a reduction of ruin probabilities. That is probably because premium discounts generally decrease the risk reserves.

2. The Model

Let us start by describing the model that we will work with in this paper. We denote by the amount of surplus of an insurance portfolio at time t:

In the above classical model Equation (1), c represents the constant rate of premium inflow, is an arrival process that counts the number of claims incurred during the time interval and is a sequence of independent and identically distributed (i.i.d.) claim sizes with distribution function and density (also independent of the claim arrival process ). We assume that almost surely as . One of the crucial quantities to investigate in this context is the probability that the surplus in the portfolio will not be sufficient to cover the claims for the first time, which is called the probability of ruin:

Here, is the initial reserve in the portfolio and

is the time of ruin for an initial surplus x. We specify the counting process , which in the classical models is a Poisson process. Let be the sequence of inter-claim times. In this paper, we analyse the model when the distribution of depends on the number of claims that appeared within a fixed past time window ξ as follows,

It is true that when such a dependence structure is introduced, a direct use of renewal theory is no longer applicable. However, even though it is not renewal at each jump epoch, the process in fact renews after several jumps, and we call this a "regeneration". Thus, we define the regenerative epochs for our model in the following way.

Definition 1.

Let

Then the regeneration epochs are defined as:

for , with and .

Roughly speaking, at these epochs (the arrival times with a zero number of arrivals within the last time window lagged by ξ) the risk process loses its ‘memory’, and starting at these epochs, the stochastic evolution remains the same. A formal definition of a regenerative process can be found in Appendix A1 in Asmussen and Albrecher [15]. It is easy to observe that the risk process is indeed regenerative with regeneration epochs , . In this case, , for and . Notice that we define the regenerative epochs in such a way that the concern only lies in whether there are claims or not in the past fixed window ξ rather than how many of them there are.

Furthermore, let us consider the claim surplus process denoted by:

and let:

and:

Then, due to the regenerative structure of the claim surplus process , the discrete-time process () is a random walk. The crucial observation used in this paper is:

The simplest case that we focus on has inter-claim times following two random variables τ and τ̃. We choose τ when there is at least one claim in a past time window of length ξ; otherwise, we choose τ̃ as the inter-arrival time. Hence, for ,

This is a natural choice since, in the insurance industry, a long “silence” usually translates into a different behaviour of the arrival process right after it. To rephrase, our current model incorporates a dependence structure between each pair of consecutive inter-arrival times. Whenever an inter-arrival time exceeds ξ, the next one has the same distribution as τ̃. Otherwise, it has the same distribution as τ.
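The switching rule just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions: both inter-arrival laws are taken to be exponential, the names `lam`, `lam_tilde` and `start_after_long_wait` are hypothetical, and the choice of the law for the very first waiting time is an assumption of the sketch rather than something fixed by the text.

```python
import math
import random


def simulate_inter_arrivals(n, lam, lam_tilde, xi, seed=0, start_after_long_wait=True):
    """Draw n inter-arrival times under the threshold rule.

    lam       : rate of tau (used after a wait of at most xi)
    lam_tilde : rate of tau-tilde (used after a wait exceeding xi)
    Exponential laws and the initial state are assumptions of this sketch.
    """
    rng = random.Random(seed)
    previous_was_long = start_after_long_wait
    times = []
    for _ in range(n):
        rate = lam_tilde if previous_was_long else lam
        t = rng.expovariate(rate)
        times.append(t)
        previous_was_long = t > xi  # decides the law of the next wait
    return times


if __name__ == "__main__":
    sample = simulate_inter_arrivals(10, lam=1.0, lam_tilde=0.5, xi=1.0)
    print([round(t, 3) for t in sample])
```

When `lam == lam_tilde` the rule has no effect and the sequence is i.i.d., which is the classical renewal setting.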

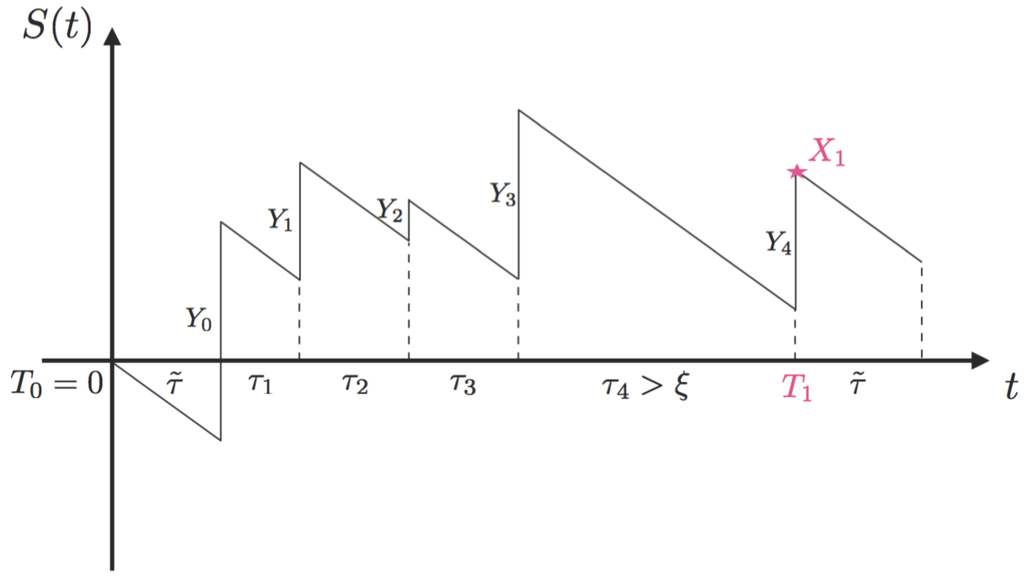

More interestingly, as mentioned earlier, one motivation of such a model set-up is the analogy, although not the same, with a basic bonus system, i.e., a system where policyholders enjoy discounts when they do not file claims for a certain period (but with no penalties). Such an analogy is possible given that the premium discounts for individuals in an NCD system could be equivalently reflected by a drop in the premium rates at the collective level. Without loss of generality, Figure 1 plots an example of such risk processes and demonstrates how our model reflects the feature of a bonus system. A sample path of the claim surplus process we will be working with is given in Figure 2 (where we assume starting from ).

Figure 2.

A sample path of the regenerative process.

Recall from Equation (2) that is the end value at the first regenerative epoch. Then, it is not difficult to observe that it has the same law as:

where N is a geometric random variable with parameter . Here, , and , . For simplicity, we assume here and throughout the paper.

The paper is organized as follows. Section 3 presents asymptotic results about ruin probabilities under three different regimes for claim distributions, using asymptotics derived for general regenerative processes as in [13,14]. Section 4 demonstrates some numerical results via simulations and discusses a case analysis, including a comparison with ruin in a classical risk model. The simulations used in this section are based on the embedded Markov additive process within our model and rely on the importance sampling method via a change of measure. A few conclusions will be drawn in Section 5.

3. Asymptotic Results

In this section, we look at three different situations for claim distributions and analyse the asymptotic ruin probability associated with each of them. Inter-arrival times considered in this section are general random variables if not mentioned otherwise.

3.1. The Heavy-Tailed Case

Let us first discuss the heavy-tailed case. We start with the assumption that the distribution of the generic belongs to the class of subexponential distribution functions, where a distribution function on if and only if , for all x, and:

(where is the convolution of G with itself). Here, denotes the tail distribution given by . More generally, a distribution function G on is subexponential if and only if is subexponential, where and is the indicator function of a set A. We further assume throughout that are strong subexponential distributions. According to Definition 3.22 in [16], a distribution function G on belongs to the class , i.e., G is strong subexponential, if and only if , for all x, and:

where:

is the mean of G. It is again known that the property depends only on the tail of G. Further, if then and also where:

is the integrated, or second-tail, distribution function determined by G. See [16] for details.
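For convenience, the standard forms of the three definitions used in this subsection, as found in Foss et al. [16], can be written as follows. The symbols $\mathcal{S}$, $\mathcal{S}^*$, $m_G$ and $G^I$ are notation assumed here for illustration and may differ from the notation used elsewhere in this paper.

```latex
% Subexponential class S: G on [0, \infty) with \overline{G}(x) > 0 for all x and
\[
  \lim_{x \to \infty} \frac{\overline{G^{*2}}(x)}{\overline{G}(x)} = 2 .
\]
% Strong subexponential class S^*: \overline{G}(x) > 0 for all x and
\[
  \int_0^x \overline{G}(x-y)\,\overline{G}(y)\,dy \;\sim\; 2\, m_G\, \overline{G}(x),
  \qquad x \to \infty,
  \qquad m_G = \int_0^\infty \overline{G}(y)\,dy .
\]
% Integrated (second-tail) distribution determined by G:
\[
  \overline{G^{I}}(x) = \min\!\Big( 1, \int_x^\infty \overline{G}(t)\,dt \Big).
\]
```

With this notation, $G \in \mathcal{S}^*$ implies both $G \in \mathcal{S}$ and $G^I \in \mathcal{S}$.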

Theorem 2.

If and , then:

as , with .

Proof.

The proof is similar to that of Theorem 1 in [13]. ☐

Note that:

Assume now that τ and τ̃ are light-tailed, that is, there exists , such that and and that:

Then, from Foss et al. [16], we have that:

and by Equation (4) and Corollary 3.40 in [16], we have that:

where Y is a generic claim size. Moreover,

with:

Remark 1.

Reducing to the Cramér–Lundberg model

By removing the dependence assumption, our model reduces to the classical model. The independent case refers to the situation when . Substituting this into Equation (10) yields,

In addition, it simplifies μ to:

According to Equation (7),

If we assume , and the safety loading to be ρ i.e., , and knowing that , the above identity could be reduced to:

which coincides with the approximated ruin probability for the classic risk model with subexponential claims, as shown by Theorem 1.36 in [17]. ☐
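For comparison, the classical benchmark referred to in Remark 1 can be stated in the following standard form. Here $\lambda$ (Poisson intensity), $F$ (claim size distribution), $u$ (initial reserve) and the normalisation of the integrated tail are notation assumed for this illustration; the result holds when the integrated tail of $F$ is subexponential.

```latex
\[
  c = (1+\rho)\,\lambda\,\mathbb{E}[Y],
  \qquad
  \psi(u) \;\sim\; \frac{1}{\rho\,\mathbb{E}[Y]} \int_u^\infty \overline{F}(y)\,dy,
  \qquad u \to \infty .
\]
```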

3.2. The Intermediate Case

We now consider the case where satisfies:

for every fixed y, as , and:

This is equivalent to the condition that . The case is treated in Section 3.1, so we assume . Another assumption we make is that:

which implies that Cramér’s condition is not satisfied. Finally, we specify the tail behaviour of . The case where has a heavier tail than is already covered in the previous subsection. Motivated by this, we assume that:

(we allow the limit to equal zero). Furthermore, we assume that there exists a bounded function g, such that:

for all real values of a.

Theorem 3.

Suppose that Equations (12)–(16) are satisfied. Then:

Proof.

The proof can be found as Theorem 3 in [13]. ☐

We now discuss when Equations (12)–(16) are satisfied. Assume that:

that is satisfies assumption Equations (12)–(14). Then, by representation Equation (4), we can easily check that also satisfies:

and:

3.3. The Cramér Case

In this subsection, we review the extension of the classical Cramér case from random walks to perturbed random walks and regenerative processes.

Theorem 4.

Assume that there exists a solution to the equation:

Assume furthermore that is non-lattice and that . Then:

with for independent M of and .

Proof.

See [18], Theorem 5.2. ☐

It is easy to see that K is bounded from above by:

In fact, it is even bounded above by:

Note that by Equation (4), the Cramér adjustment coefficient solves:

for:

where . The above calculations also show that the moment generating function (m.g.f.) ϕ of has the following representation:

We can now identify the constant :

under the assumption that:

Remark 2.

Example 1.

A special example of exponentially-distributed , and would lead to:

and:

This implies that:

Moreover, since:

the NPC condition is equivalent to:

Furthermore, as a connection with Section 4, it is worth mentioning here that the above identity should coincide with:

where:

and:

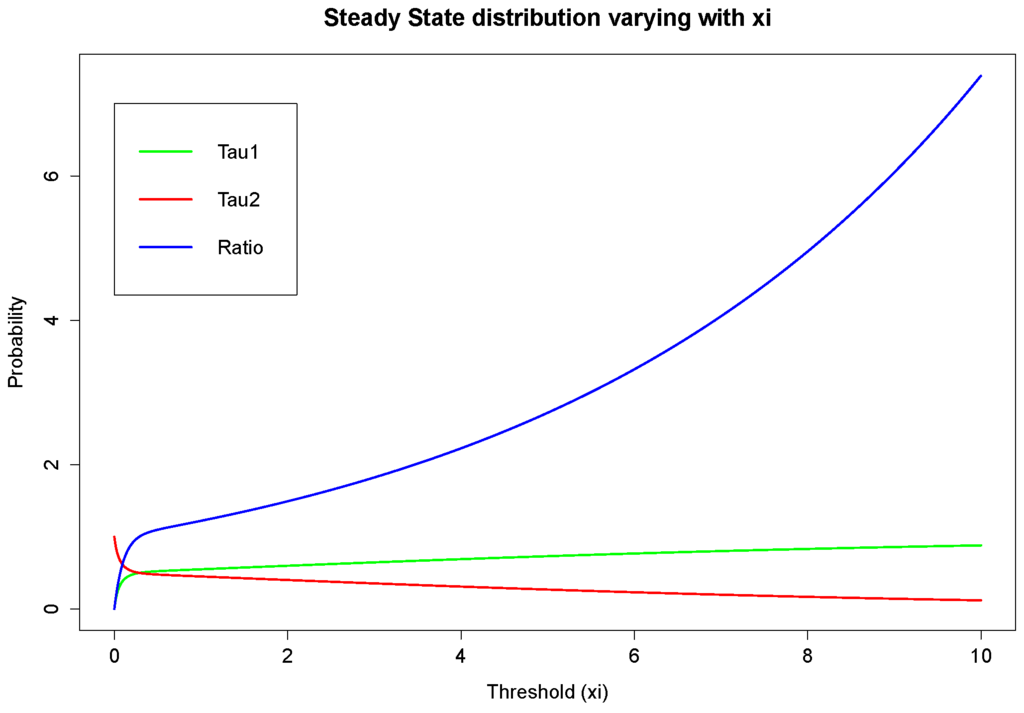

denote the steady state distribution in the Markovian environment of τ and τ̃. That is to say, when the process becomes stationary, the probability of having an inter-arrival time less than or equal to ξ (State 1) is , while the probability of it being larger than ξ (State 2) is . The graph depicted in Figure 3 shows an example of this distribution. It can be seen that the probability of being in State 1 in our case is monotonically increasing in ξ. The blue line represents the ratio of the probabilities of State 1 and State 2, and thus has the same monotonicity as the green line. This will be analysed further via simulation.

Figure 3.

Steady state distribution when .
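The steady state distribution of the two-state environment can be computed directly once the transition probabilities are known. The sketch below assumes exponential inter-arrival times with hypothetical rates `lam` (for τ) and `lam_tilde` (for τ̃); under that assumption the chain moves from State 1 to State 2 with probability exp(-lam·ξ) and from State 2 to State 1 with probability 1 - exp(-lam_tilde·ξ), and the stationary law follows from the balance equation of a two-state chain.

```python
import math


def stationary_distribution(lam, lam_tilde, xi):
    """Stationary law (pi_1, pi_2) of the two-state chain of 'short'/'long' waits.

    State 1: last wait <= xi, so the next wait is Exp(lam).
    State 2: last wait  > xi, so the next wait is Exp(lam_tilde).
    Exponential waits are an assumption of this sketch.
    """
    p12 = math.exp(-lam * xi)              # short -> long
    p21 = 1.0 - math.exp(-lam_tilde * xi)  # long -> short
    pi1 = p21 / (p12 + p21)                # balance: pi1 * p12 = pi2 * p21
    return pi1, 1.0 - pi1


if __name__ == "__main__":
    for xi in (0.25, 0.5, 1.0, 2.0, 4.0):
        pi1, pi2 = stationary_distribution(lam=1.0, lam_tilde=0.5, xi=xi)
        print(f"xi={xi:4.2f}  pi1={pi1:.4f}  pi2={pi2:.4f}")
```

In this parametrisation the probability of State 1 increases with ξ, in line with the behaviour described for Figure 3.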

4. Numerical Results

In this section, we explain several methods to simulate the ruin probabilities for our model. Initially, we tried crude Monte Carlo simulation, but as in the classical case, several issues arise, including determining a time range large enough so that it approximates an infinite-time ruin probability. Then, we employed the importance sampling technique, which allows us to simulate ruin probabilities under a new measure where ruin certainly happens. However, this does not give us the ruin probability defined in our original problem. Hence, after a deeper analysis, the construction of a Markov additive process assists in developing a more sophisticated importance sampling technique for our model when the inter-claim times, as well as the claims, are exponentially distributed. Using this Markov additive method, sample paths have been simulated according to the process given by Equation (42), from which ruin probabilities can be derived. At the end of this section, we present a case study using crude Monte Carlo simulation where ruin probabilities for our model are compared to the ones under a classical setting, aiming at answering the question that motivated this work, namely whether the introduction of the dependence structure reduces risks. We also investigate the influence of the two different claim distributions on simulated ruin probabilities.

4.1. Importance Sampling and Change of Measure

One drawback of crude Monte Carlo simulation is that it is inefficient when the initial reserve u is large, because the ruin probability tends to zero very quickly and ruin becomes a rare event. This can be explained by the Cramér–Lundberg asymptotic formula: asymptotically, the ruin probability decays exponentially with respect to u. The other reason for not simply adopting crude Monte Carlo simulation is that we would be trying to approximate an infinite-time ruin probability by simulating over a finite time horizon. In order to overcome these problems, the importance sampling technique is brought in. The key idea behind it is to find an equivalent probability measure under which the process has a probability of ruin equal to one.

Let us start from something trivial. For the moment, we only consider the “ruin probability” when the time between regenerative epochs is ignored. In other words, we now look at our process from a macro perspective. Since it is a renewal process at each regenerative time epoch, we may omit the situations where ruin happens within these intervals. We refer to it as the “macro” process and its corresponding ruin probability as the “macro” ruin probability in the sequel. We can then define the macro ruin time as:

Consequently, the macro ruin probability denoted by should be smaller than or equal to the ruin probability associated with our actual risk process . However, for illustration purposes, it is worth covering the nature of change of measure under the framework of this macro process first before we dig into more complex details.

Theorem 5.

Assume that there exists a κ, such that . Consider a new measure , such that:

with and defined in a similar way. Then, we could establish the same relation as in the classical case for the moment generating function ϕ of , if it exists,

Proof.

Rewriting Equation (25), we derive:

First, we note that:

Note that have the same form. Then, Equation (35) can be modified into:

Now let,

Then,

☐

To analyse Equation (34) further, can be seen as shifting to the left by κ. We know that the NPC for the macro process requires , i.e., . Additionally, Equation (23) should have a positive root κ if the tail of the claims distribution is exponentially bounded. That is to say, would result in a positive drift of the macro claim surplus process and then cause a macro ruin to happen with certainty. The new m.g.f. verifies the statement. Hence, we can write a macro ruin probability as:

with . For a strict and detailed proof, please refer to Asmussen and Albrecher [15] (Chapter IV, Theorem 4.3). Moreover, from Equation (34), it follows that:

where is absolutely continuous with respect to (up to time n) with a likelihood ratio,

Define a new stopping time . Note that the event is equivalent to . From the optional stopping theorem, it follows that for any set , we have:

See ([15] Chapter III, Theorem 1.3) for more details.

This means that we could simulate macro ruin probabilities under the new measure where the ruin happens with probability one. We do it using the new law of given in Equation (40). To each ruin event, we add weight where is the observed macro ruin time. Summing up all events with weights, we derive the ruin probability . In this way, we can avoid infinite time simulations. For the case where everything is exponentially distributed, as was considered in Example 1, we have that under , the simulation should be made according to new parameters:

In this case, has the law of:

where and .
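The weighting scheme of this subsection can be illustrated on a simpler setting. The sketch below is not the macro process of this paper: it applies the same change-of-measure idea to the classical compound Poisson model with exponential claims, for which the exponentially tilted parameters are known in closed form. The names `lam`, `beta`, `c` and `n_paths` are hypothetical, and the net profit condition `lam < c * beta` is assumed.

```python
import math
import random


def ruin_probability_is(u, lam, beta, c, n_paths=20000, seed=0):
    """Importance-sampling estimate of the infinite-time ruin probability
    in the classical model with Poisson(lam) arrivals and Exp(beta) claims.

    Assumes lam < c * beta (net profit condition).
    """
    gamma = beta - lam / c                 # adjustment coefficient
    lam_new, beta_new = beta * c, lam / c  # parameters under the tilted measure
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        s = 0.0                            # claim surplus just after a claim
        while s <= u:                      # ruin is certain under the new measure
            s += rng.expovariate(beta_new) - c * rng.expovariate(lam_new)
        total += math.exp(-gamma * s)      # likelihood-ratio weight at ruin
    return total / n_paths


if __name__ == "__main__":
    print(ruin_probability_is(u=5.0, lam=1.0, beta=1.0, c=1.5))
```

Every simulated path ends in ruin after finitely many claims, so no time horizon has to be fixed, and the estimate can be checked against the known classical formula for exponential claims.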

4.2. Embedded Markov Additive Process

Since the macro ruin probability gives only a lower estimate, obtaining more precise simulation results requires a better understanding of the structure of our process. To this end, we will use the theory of discrete-time Markov additive processes. For simplicity, we assume everything to be exponentially distributed with , and , respectively.

Recall our process as described by Equation (2) and note that ruin happens only at claim arrivals for and . From time to , the distribution of the increment only depends on the relation between and ξ. Hence, we could discretize given in Equation (2) to and, thus, transfer the model into a new one () by adding a Markov state process defined on . The index represents the occupying state of at time . For instance, State 1 describes a status where the current inter-arrival time is less than or equal to ξ, while State 2 refers to the opposite situation. For convenience, we construct based on the choice of : implies , and otherwise. Note that the two-state Markov chain has a transition probability matrix with the -th element being , as follows:

where and . We also define a new process whose increment is governed by . More specifically, two scenarios could be analysed to explain this process. Given , Scenario 1 is when , i.e., and . Then, comparing τ with ξ, there is a chance q of obtaining given and p having given , with the corresponding increment being and , respectively. On the contrary, Scenario 2 represents the situation where the current state is , i.e., and . Thus, all of the variables above are presented in the same way only with a tilde sign added on τ, p and q.

is a discrete time bivariate Markov process also referred to as a discrete-time Markov additive process (MAP). The moment of ruin is the first passage time of over level , defined by:

Without loss of generality, assume that . Then, the event is equivalent to . This implies that:

To perform the simulation, we will derive now the special representation of the underlying ruin probability using a new change of measure. We start from identifying a kernel matrix with the -th entry given by . Here, and denote the probability measure conditional on the event and its corresponding expectation, respectively. Then, for , an m.g.f of the measure is with:

Additionally, based on the additive structure of the process for we have,

We will now present a few facts that will be used in the main construction.

Lemma 6.

We have,

where is the eigenvalue of and is the corresponding right eigenvector.

Proof.

Note that:

where is a standard basis vector. This completes the proof. ☐

Lemma 7.

The following sequence:

is a discrete-time martingale.

Proof.

Let Then,

which gives the assertion of the lemma. ☐

Define now a new conditional probability measure using the Radon–Nikodym derivative as follows:

Lemma 8.

Under the new measure , process is again a MAP specified by the Laplace transform of its kernel in the following way:

where is a diagonal matrix with on the diagonal.

Proof.

Note that the kernel of can be written as:

This shows that the new measure is exponentially proportional to the old one, which ensures that is absolutely continuous with respect to . Further transferring it into the matrix m.g.f. form yields the desired result. ☐

Corollary 9.

Under the new measure , the MAP consists of a Markov state process , which has a transition probability matrix:

where:

and an additive component with random variables with laws given in Theorem 5 where θ should be chosen everywhere instead of κ.

In fact, when , coincides with defined by Theorem 5. Recall from Equation (31) and . Since , then implies . Now, the main representation used in simulations follows straightforwardly from the above lemmas and the optional stopping theorem, as was already done in the previous section, and it is given in the next theorem.

Theorem 10.

The ruin probability for the underlying process Equation (2) equals:

where denotes the overshoot at the time of ruin .

Proof.

The result follows straight from Lemma 8 and Corollary 9 using the same reasoning as introduced in Section 4.1. ☐

Now, we will simulate ruin events using new parameters of the model identified in Corollary 9. We start from State 2 of . We will run our risk process until a ruin event. With each ruin event, we will associate its weight by:

Summing and averaging all weights gives the estimate of the ruin probability .

Remark 3.

In addition, it has been discovered that has an eigenvalue equal to one and:

is the corresponding right eigenvector. ☐

Proof.

Indeed, let λ denote the eigenvalue of . Thus, we can write,

Recall Equation (22); clearly, is a solution to the above equation. That directly leads to , and one can obtain:

Plugging in the parameters completes the proof. ☐
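The eigenvalue condition in Remark 3 suggests a simple numerical recipe for the exponent of the exponential decay. The sketch below is an illustration under stated assumptions, not the computation used for the figures: claims are Exp(`beta`), the inter-arrival times are Exp(`lam`) in State 1 and Exp(`lam_tilde`) in State 2, the next state is 1 when the new waiting time is at most ξ, and the kernel entries are written out by hand from these assumptions. All function and parameter names are hypothetical.

```python
import math


def perron_eigenvalue(theta, lam, lam_tilde, beta, c, xi):
    """Largest eigenvalue of the 2x2 kernel matrix with entries
    E[exp(theta * (Y - c*T)); next state = j | current state = i]."""
    claim_mgf = beta / (beta - theta)  # E[exp(theta * Y)], needs theta < beta
    rows = []
    for rate in (lam, lam_tilde):      # state 1 uses lam, state 2 uses lam_tilde
        a = rate / (rate + c * theta)  # E[exp(-theta * c * T)]
        e = math.exp(-(rate + c * theta) * xi)  # share of that mass on {T > xi}
        rows.append((claim_mgf * a * (1.0 - e), claim_mgf * a * e))
    (f11, f12), (f21, f22) = rows
    trace, det = f11 + f22, f11 * f22 - f12 * f21
    return 0.5 * (trace + math.sqrt(max(trace * trace - 4.0 * det, 0.0)))


def adjustment_coefficient(lam, lam_tilde, beta, c, xi, iterations=200):
    """Positive root theta of perron_eigenvalue(theta) = 1, found by bisection."""
    def f(th):
        return perron_eigenvalue(th, lam, lam_tilde, beta, c, xi) - 1.0

    hi = beta * (1.0 - 1e-9)   # eigenvalue blows up as theta -> beta
    lo = 0.5 * beta
    while f(lo) >= 0.0:        # shrink until we are left of the positive root
        lo *= 0.5
        if lo < 1e-12:
            raise ValueError("no positive root found; net profit condition may fail")
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if f(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    print(adjustment_coefficient(lam=1.0, lam_tilde=0.5, beta=1.0, c=1.5, xi=1.0))
```

When `lam == lam_tilde` the kernel has rank one and the root reduces to the classical value `beta - lam / c`, which gives a quick sanity check of the sketch.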

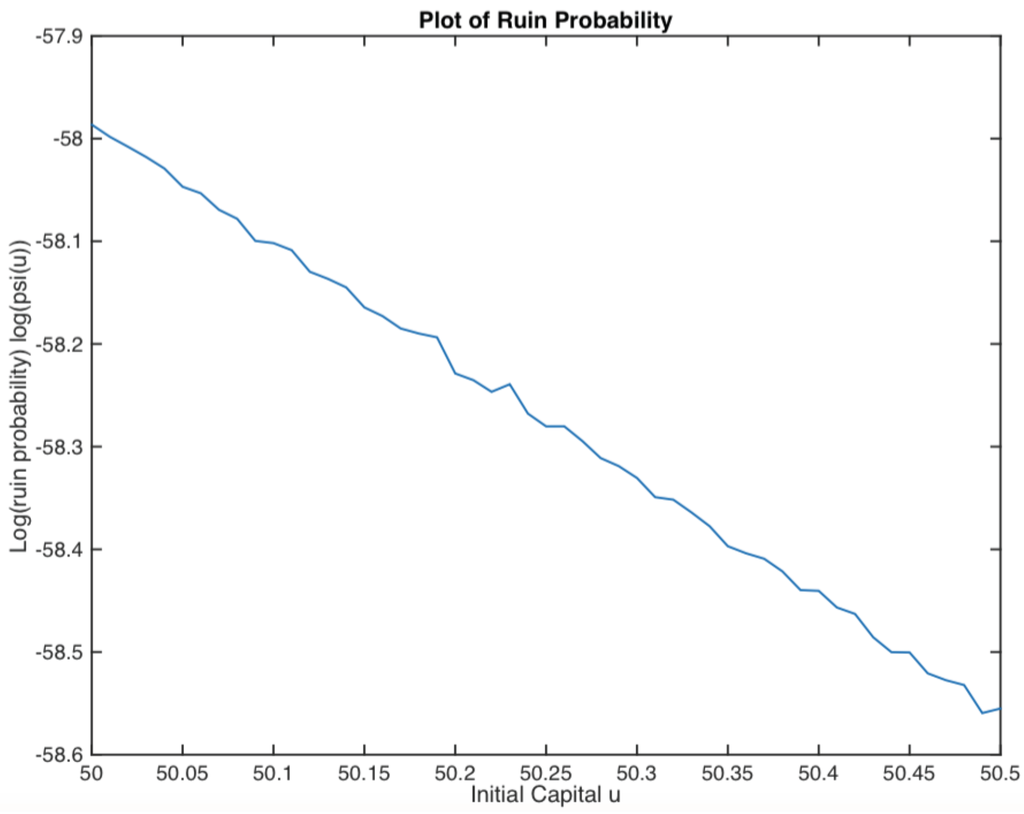

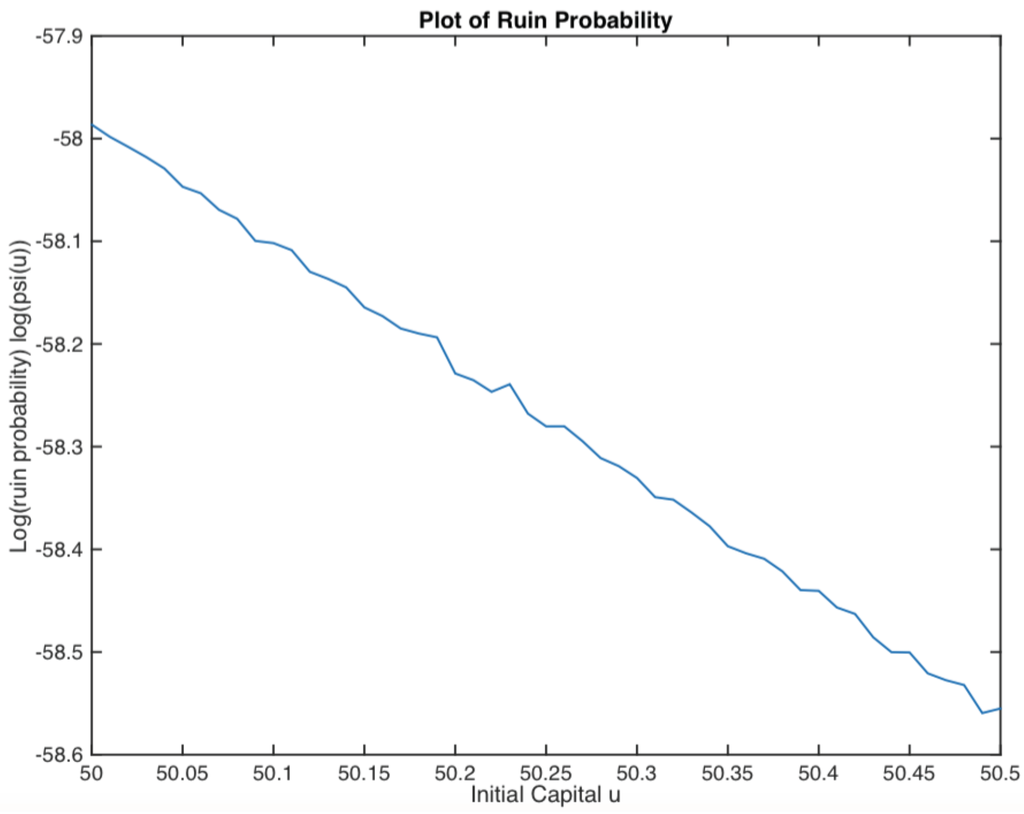

Example 2.

Assume that Y, τ and τ̃ have exponential distributions with parameters , and , respectively. The smallest positive real root of Equation (25) is calculated for , and its corresponding right eigenvector is . Then, the ruin probability is plotted in Figure 4. It displays an exponential decay, as expected.

Figure 4.

Logarithm of ruin probability.

4.3. Case Study

In this subsection, we derive a few results via a crude Monte Carlo simulation method. The key idea is to simulate the process according to the model setting and simply count the number of paths that lead to ruin. Due to the nature of this approach, a ‘maximum’ time has to be set beforehand, which means we are in fact simulating a finite-time ruin probability. However, this drawback may be ignored as long as the simulated estimates are not mostly zero.
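The counting procedure just described can be sketched as follows. This is a minimal illustration under stated assumptions: inter-arrival times and claims are exponential, the first waiting time is drawn as if the previous wait had exceeded ξ, and all parameter names (`lam`, `lam_tilde`, `beta`, `horizon`, `n_paths`) are hypothetical.

```python
import random


def crude_mc_ruin(u, c, lam, lam_tilde, beta, xi, horizon, n_paths=4000, seed=0):
    """Fraction of simulated paths that are ruined before time `horizon`."""
    rng = random.Random(seed)
    ruined = 0
    for _ in range(n_paths):
        t, reserve, previous_was_long = 0.0, u, True  # initial state: an assumption
        while True:
            wait = rng.expovariate(lam_tilde if previous_was_long else lam)
            t += wait
            if t > horizon:
                break                      # survived the finite horizon
            reserve += c * wait - rng.expovariate(beta)  # premiums in, claim out
            if reserve < 0.0:
                ruined += 1
                break
            previous_was_long = wait > xi  # selects the law of the next wait
    return ruined / n_paths


if __name__ == "__main__":
    print(crude_mc_ruin(u=5.0, c=1.5, lam=1.0, lam_tilde=0.5, beta=1.0,
                        xi=1.0, horizon=100.0))
```

Ruin is only checked at claim epochs, since the reserve increases between claims. The estimate is a finite-time ruin probability and therefore cannot exceed the infinite-time one, up to sampling error.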

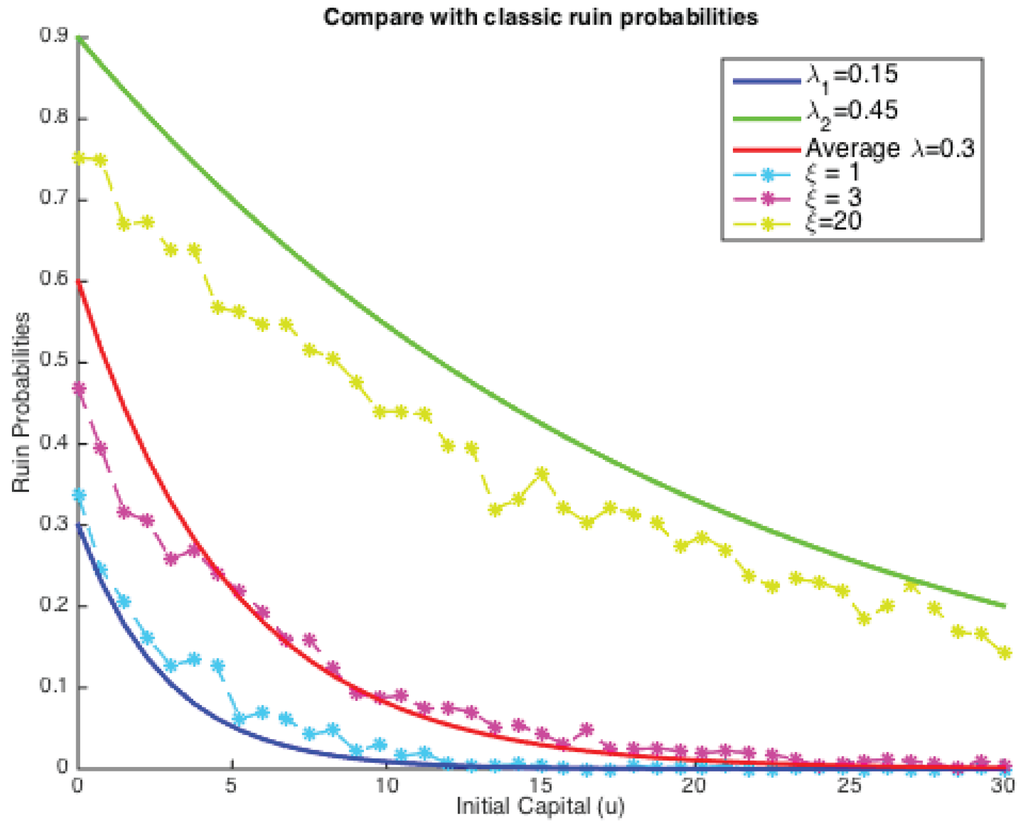

Our task is to compare the simulated results with a classical analytical ruin function when exponential claims are considered.

Hence, for the simplest case of exponentially-distributed claim costs, we plotted both the classic ruin probabilities and our simulated ones on the same graph, as shown below (see Figure 5).

Figure 5.

Comparison with classic ruin probabilities with exponential claims.

It can be concluded that, for the two given Poisson intensities, the simulated finite-time ruin probabilities in our model lie between the two classical extremes, with many possibilities in between. The comparison depends strongly on the value of ξ. These results are also consistent with Theorem 4 in that the tail of the ruin function in our case still decays exponentially, and ξ is strongly related to the solution for κ. In other words, when the dependence is introduced, it is not clear that ruin probabilities would see an improvement.

More precisely, the solid lines show classical (infinite-time) ruin probabilities as a function of the initial reserve u. Each of them corresponds to an individual choice of Poisson parameters (, ), with the middle one being the average of the other two (). Clearly, the larger the Poisson parameter, the higher the ruin probability. The dotted lines, on the other hand, are the simulated results from our risk model with dependence for the same given pair of Poisson parameters and . The four layers correspond to four different choices of ξ, i.e., . If , the simulated (in fact finite-time) ruin probability tends to the classical case with the lower claim arrival intensity ( here), which explains why the blue dotted line lies close to the dark blue solid line. On the contrary, when , the simulated ruin probabilities approach the other extreme. This prompted us to search for a ξ such that the simulated ruin probability coincides with a classical one. As an example, consider:

based on the parameters we chose in Figure 5. That implies the choice of our fixed window is the average length of these two kinds of inter-arrival times. However, as can be seen from Figure 5, the dotted line with lies closer than the one with to the red solid line. This suggests that the choice of ξ will influence the simulated ruin probabilities and, thus, the comparison with a classical one. It is also very likely that there exists a ξ, such that our simulated ruin probabilities concur with the classic ones.

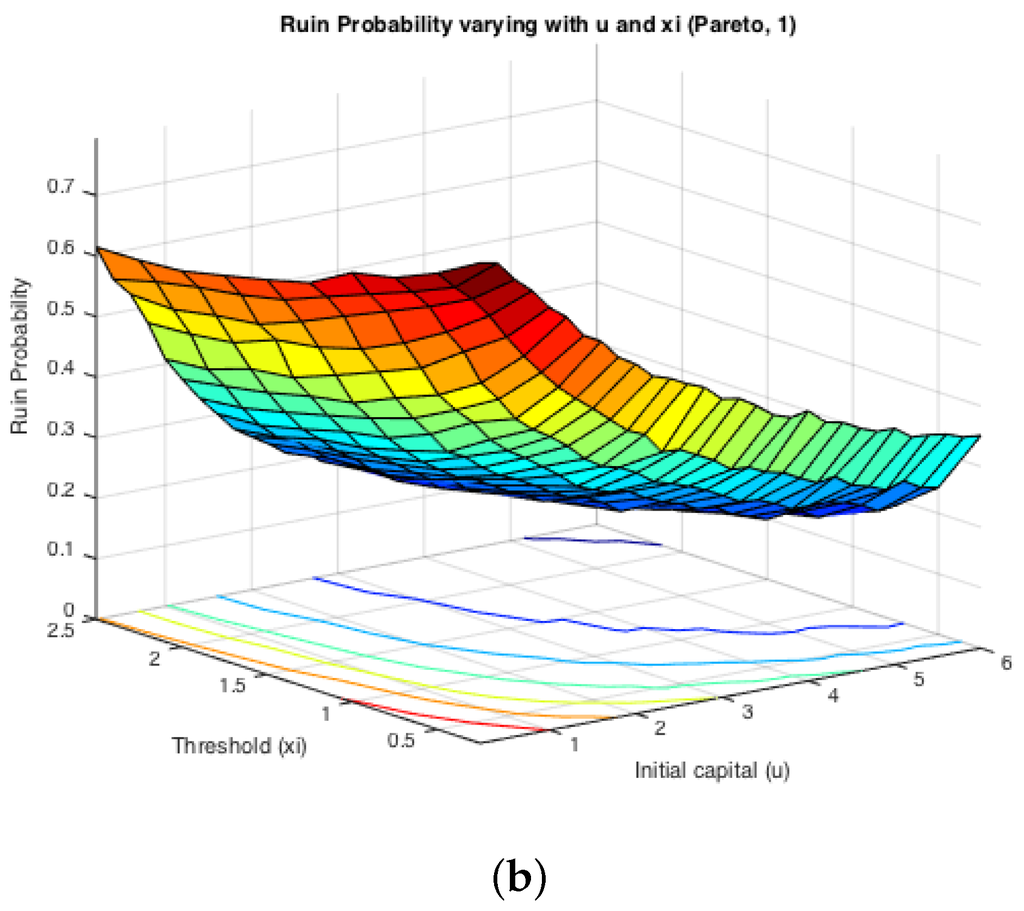

While the first half of the Monte Carlo simulation looked at the influence of ξ on the simulated ruin probabilities, the second half examines the effect of claim sizes. Exponential and Pareto distributions, the typical representatives of light-tailed and heavy-tailed distributions, were assumed for claim severities. The inter-arrival times switched between two different exponentially distributed random variables with parameters and . Two cases were simulated: either or . The effect of the claim severity distribution on infinite-time ruin probabilities is expected to be small, as it normally affects the deficit at ruin more severely. Since we actually simulate finite-time ruin probabilities here, we are curious whether the same conclusion can be drawn.
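To make the light-tailed versus heavy-tailed comparison concrete, one could draw claim sizes as in the following sketch. It assumes, purely for illustration, that both distributions are calibrated to the same mean and that the Pareto law is of type I with a hypothetical shape parameter `shape` > 1; the text does not specify the calibration actually used.

```python
import random


def draw_exponential_claim(rng, mean):
    """Light-tailed claim: exponential with the given mean."""
    return rng.expovariate(1.0 / mean)


def draw_pareto_claim(rng, mean, shape):
    """Heavy-tailed claim: Pareto type I with tail index `shape`,
    scaled so that its expectation equals `mean` (requires shape > 1)."""
    if shape <= 1.0:
        raise ValueError("shape must exceed 1 for a finite mean")
    # paretovariate(shape) has minimum 1 and mean shape / (shape - 1).
    scale = mean * (shape - 1.0) / shape
    return scale * rng.paretovariate(shape)
```

Matching the means isolates the effect of the tail shape from the effect of the average claim cost, which is one reasonable design choice among several.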

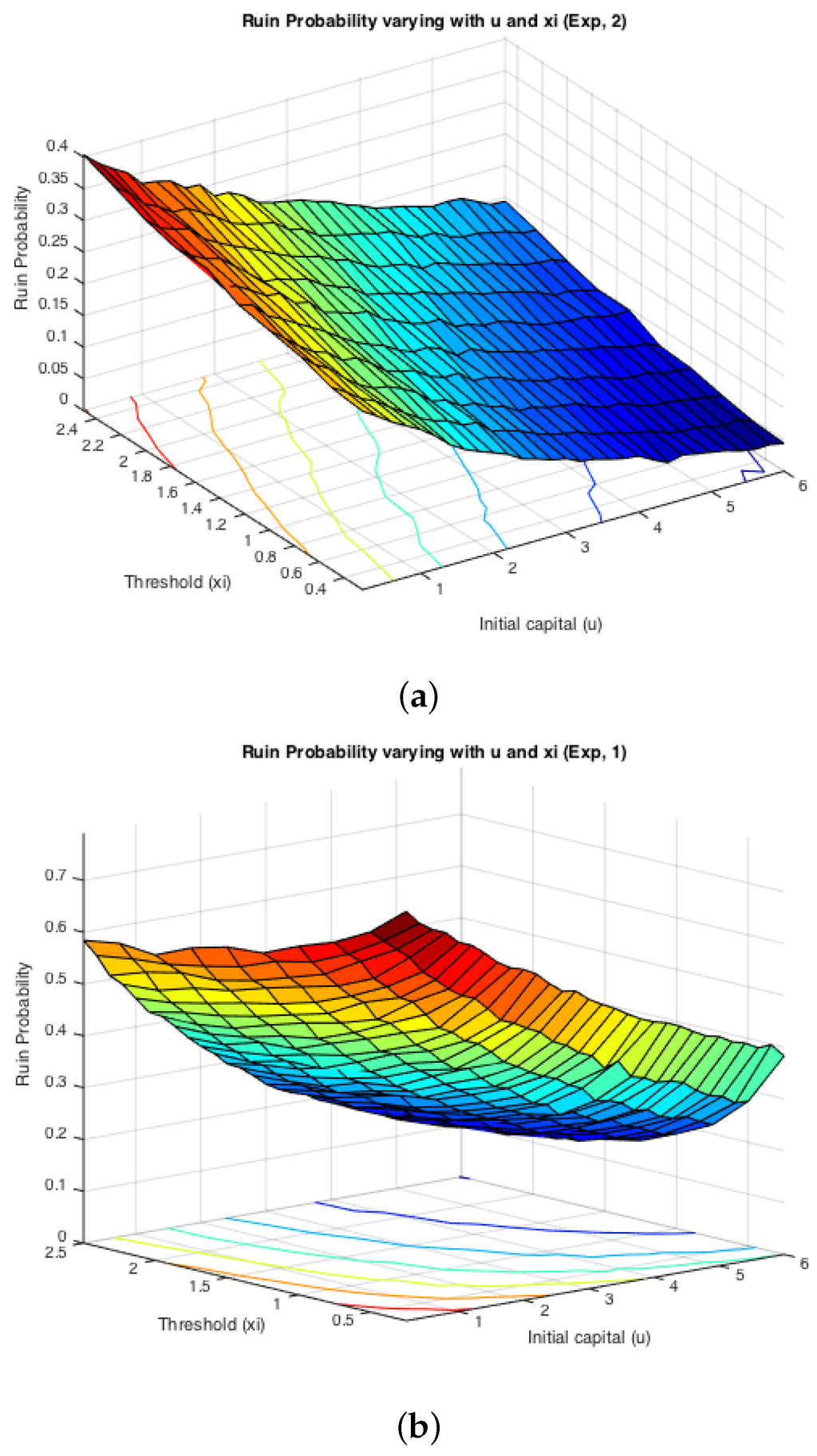

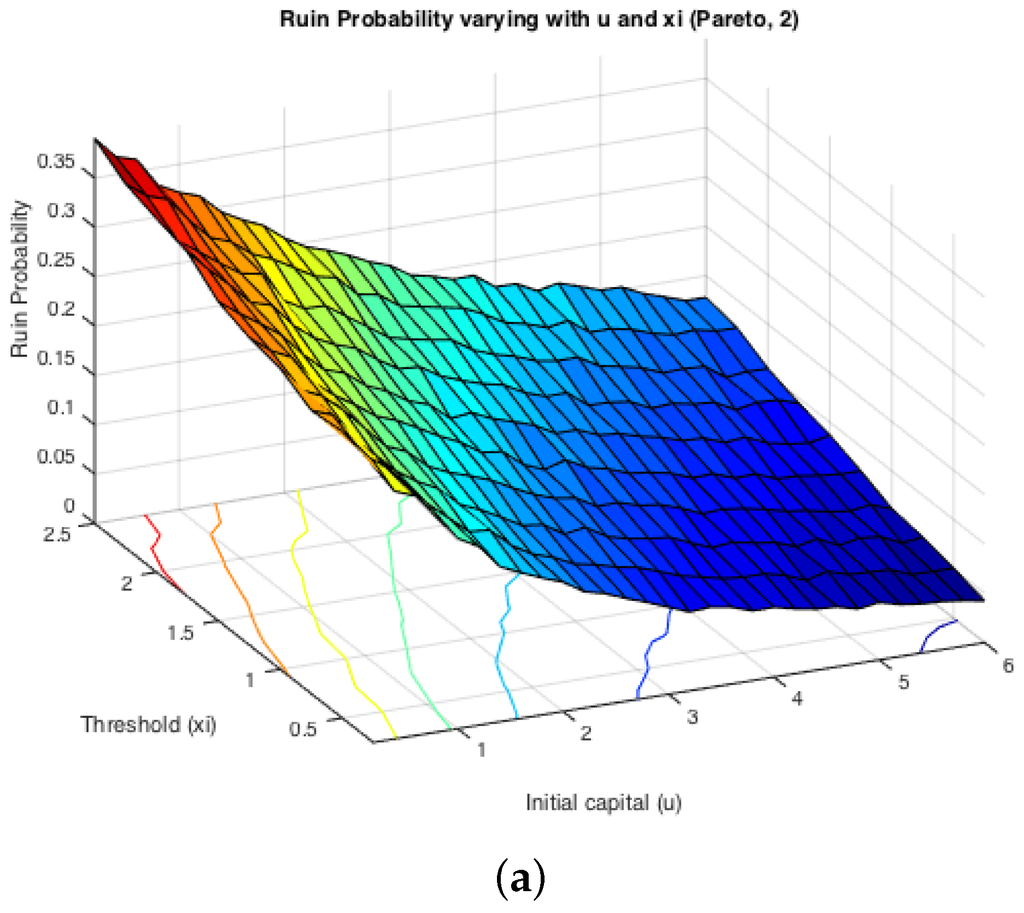

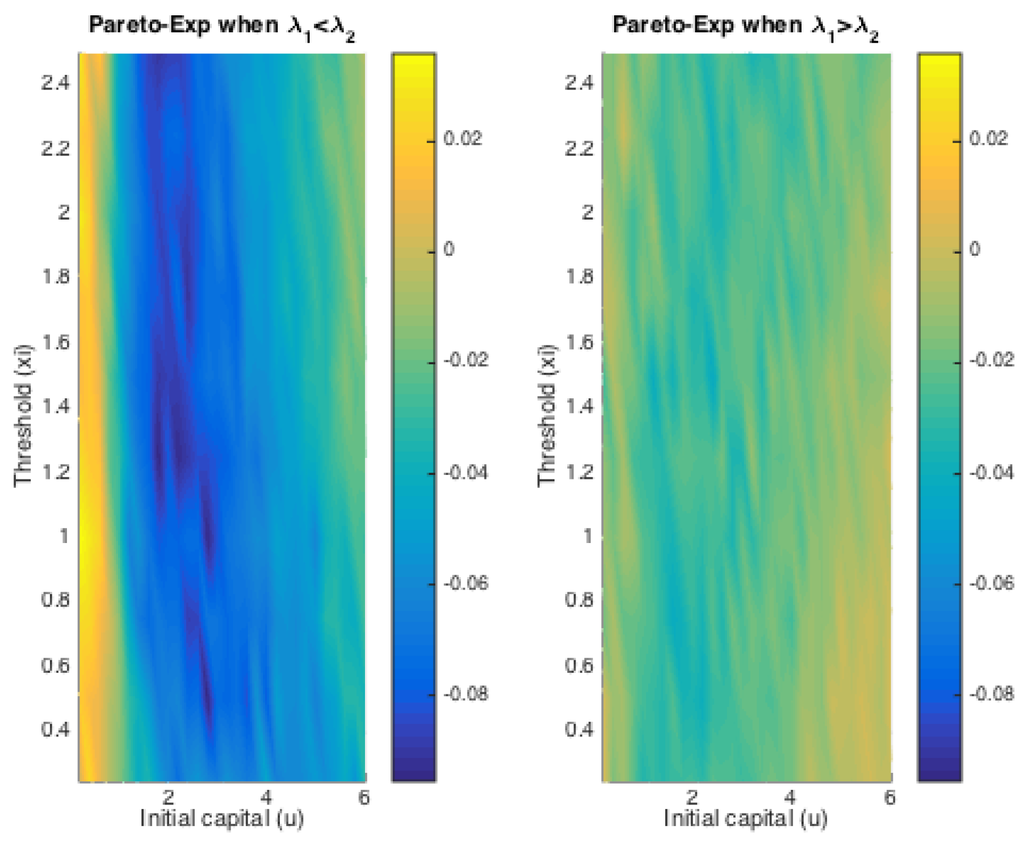

Figure 6 displays the two cases for exponential claims, while Figure 7 does the same for Pareto claims. All four graphs show the simulated finite-time ruin probabilities decreasing in the initial surplus, as expected. In general, the differences between ruin probabilities for exponentially distributed claim costs and those for Pareto ones are small. To be more precise, the exact values of these differences are plotted in Figure 8. The color bar shows the scale of the graph, and yellow represents values around zero. Furthermore, it can be seen that the differences behave differently when and when . In the former case, ruin probabilities for Pareto claims tend to be smaller than those for exponential claims when the initial reserve is not small, whereas there seems to be no distinction between the two claim distributions in the latter case. One way to explain this is that claim distributions would have more impact on the deficit at ruin, because the claim frequency is not affected, as in an infinite-time ruin case. However, this is just a sample simulated result from which we cannot draw a general conclusion.

Figure 6.

Examples: ruin probabilities for exponential claims. (a) Ruin probabilities when , , ; (b) ruin probabilities when .

Figure 7.

Examples: ruin probabilities for Pareto claims. (a) Ruin probabilities when , , ; (b) ruin probabilities when .

Figure 8.

Differences in ruin probabilities using two claim distributions.

On the other hand, it can be seen from the projections on the plane that the relative magnitude of and determines the monotonicity of ruin probabilities with respect to the fixed window ξ. If , the probability of ruin increases monotonically in ξ. If , it appears to decrease monotonically in ξ. This monotonicity holds for models with heavy-tailed claims as well as for those with light-tailed ones. Such behaviour can also be verified theoretically by looking at the stationary distribution of the Markov chain created by the exchange of inter-claim times given by Equations (29) and (30). An increase of ξ raises the steady-state probability of getting an inter-claim time smaller than ξ, i.e.,

Then, that directly leads to an increasing number of τ. Furthermore, the ruin probability is associated with:

for any fixed time T, where and denote the number of times τ and appearing in the process. Notice that stays the same, even though the value of ξ alters. Therefore, now, the magnitude of depends only on and the distribution of i.i.d . The change of ξ alters only the former value. Intuitively, a rise in indicates an increase in and a decrease in , whose amount is denoted by and , respectively. Since the sum of τs and s is kept constant, we have:

If , then , which implies . That is to say, the increase of is more than the drop in , so that sees a rise in the end. Thus, it leads to a higher ruin probability. On the contrary, when , i.e., , as ξ goes up, ruin probabilities would experience a monotone decay. This reasoning is visually reflected in Figure 6 and Figure 7 shown above, and it could also be noticed that the distribution of claims does not affect such monotonicity.
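The stationary argument above can be checked numerically with a small sketch. It assumes exponential inter-claim times with hypothetical rates `rate_tau` and `rate_tau_tilde`, and a two-state chain in which the next inter-claim time is of type τ exactly when the current one is at most ξ. Under these assumptions the stationary probability of type τ is b/(1 − a + b), where a and b are the probabilities that an inter-claim time of type τ, respectively of the other type, is at most ξ. The long-run claim frequency is taken as the reciprocal of the stationary mean inter-claim time.

```python
import math


def stationary_prob_tau(xi, rate_tau, rate_tau_tilde):
    """Stationary probability that an inter-claim time is of type tau."""
    a = -math.expm1(-rate_tau * xi)        # P(type-tau time <= xi)
    b = -math.expm1(-rate_tau_tilde * xi)  # P(other-type time <= xi)
    # Solves pi = pi * a + (1 - pi) * b.
    return b / (1.0 - a + b)


def long_run_claim_rate(xi, rate_tau, rate_tau_tilde):
    """Claims per unit time in the long run (ergodic average)."""
    pi_tau = stationary_prob_tau(xi, rate_tau, rate_tau_tilde)
    mean_gap = pi_tau / rate_tau + (1.0 - pi_tau) / rate_tau_tilde
    return 1.0 / mean_gap
```

In this sketch the stationary share of type τ grows with ξ, so the claim frequency rises with ξ when type τ has the shorter mean and falls when it has the longer mean, which mirrors the two monotonicity regimes discussed in the text.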

Therefore, these observations suggest that when , the larger the fixed window ξ, the smaller the ruin probability, and vice versa. On the contrary, when , the larger the fixed window ξ, the larger the ruin probability, and vice versa. In fact, was mentioned in the Introduction (Figure 1) as an assumption for a premium discount process, which resembles a bonus system. This suggests that if the insurer opts to investigate claims histories less frequently, i.e., chooses a larger ξ, the ruin probability tends to be smaller. This potentially implies a smaller ruin probability if no premium discount is offered to policyholders. It seems that minimising an insurer’s probability of ruin relies more on premium income, and the use of bonus systems may not help in decreasing such probabilities. The case of suggests the opposite conclusion for conservative insurers, which again underlines the significance of premium income to an insurer. In this situation, the ruin probability could be reduced if the insurer reviews the policyholders’ behaviour more frequently, indicating more premium income.

5. Conclusions

In this paper, we introduce a dependence structure within inter-arrival times into a classical insurance risk model. Precisely, the inter-arrival times switch between two random variables depending on a comparison with a fixed window ξ. Such an interchange can be equivalently converted into a change of premium rates based on recent claims, as shown in Figure 1. If the premium rate drops after a long wait, a resemblance can be drawn to a basic NCD system with only two classes: a base level and a discounted level. Notice that the drop in premium rate is not the same as a discount for an individual in an actual NCD system, but their relation can be estimated based on a link from the individual to the collective level. However, the main goal of this paper is to study the ruin probability for the risk model described in Section 2.

Several approaches have been taken to study the ruin probability under the framework of a regenerative process. It is not surprising that, under the Cramér assumption (Equation (19)), the ruin function still has an exponential tail. Since asymptotic results are not exact, we conducted numerical analyses based on different approaches. As a main contribution, we explained how to construct a discrete Markov additive process from the model under consideration when everything is exponentially distributed. By a change of measure via exponential families, ruin probabilities can be simulated through the closed-form formula in Equation (47). Furthermore, we presented a case study using Monte Carlo simulations. It showed that the ruin probability has the opposite monotonicity with respect to the fixed time window ξ when the two random variables for the inter-claim times swap parameters. Additionally, it suggests that the use of bonus systems may not be helpful for reducing ruin probabilities, as premium income seems to be more important. However, bonus systems could still be used as a means of attracting market share, which is beneficial to the business in many ways.

Acknowledgments

This work was supported by the Risk Analysis, Ruin and Extremes (RARE)-318984 project, a Marie Curie IRSES Fellowship within the 7th European Community Framework Programme. Zbigniew Palmowski kindly acknowledges the support from the National Science Centre under the grant 2015/17/B/ST1/01102. The authors would like to thank the anonymous reviewers for their useful suggestions that improved the quality of the paper.

Author Contributions

ZP and SD conceived of and designed the model. WN connected the work with the bonus-malus model. ZP, WN and CC conducted analyses in detail. SD introduced simulation methods. CC and WN interpreted the idea and wrote the paper. All authors substantially contributed to the work.

Conflicts of Interest

The authors declare no conflict of interest. The funding sponsors had no role in the design of the study; in the collection, analyses or interpretation of data; in the writing of the manuscript; nor in the decision to publish the results.


© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).