Algorithmic Bias Under the EU AI Act: Compliance Risk, Capital Strain, and Pricing Distortions in Life and Health Insurance Underwriting

Abstract

1. Introduction—Why Fairness Has Become a Prudential Question

1.1. Academic Background

1.2. Academic Rationale and Gap

1.3. Contributions

- Regulatory–capital linkage. We derive a closed-form mapping from three legal fairness metrics—statistical parity difference, disparate impact ratio, equalized odds gap—to changes in the Solvency II Standard-Formula SCR and to expected fines under Article 71.

- Causal identification at scale. Using a 12.4-million quote–bind–claim panel (2019 Q1–2024 Q4) from four pan-European carriers, we combine instrumental-variable quantile regression with SHAP explainability to isolate pricing distortions that persist after manual underwriter overrides.

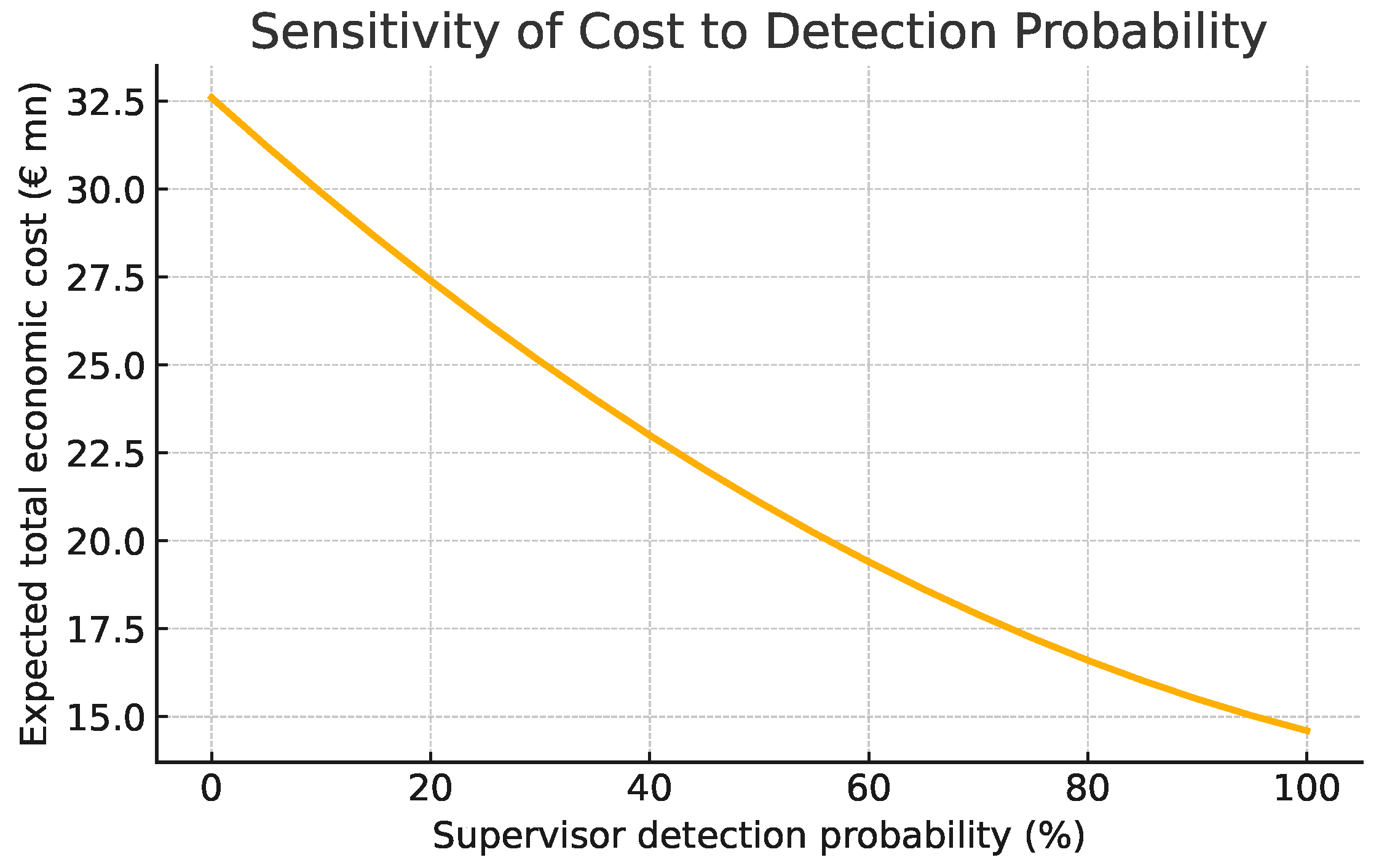

- Capital-efficient mitigation frontier. We show that an adversarial debiasing retrain closes up to 82% of observed premium gaps while adding only 14 basis points to the underwriting SCR—economically outperforming simple re-weighing once the supervisor’s detection probability exceeds 9%.
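The three fairness metrics named in the first contribution have standard textbook definitions, which can be sketched for a binary favorable-outcome decision. This is a minimal illustration under stated assumptions: the function name `fairness_metrics`, the 0/1 coding of `group` (1 = protected, 0 = reference), and the use of the larger of the TPR and FPR gaps as the equalized odds gap are conventions chosen here, not the paper's closed-form mapping to the SCR.

```python
from typing import Dict, List, Sequence


def _share(flags: List[int]) -> float:
    """Share of 1s in a 0/1 list; NaN when the list is empty."""
    return sum(flags) / len(flags) if flags else float("nan")


def fairness_metrics(
    y_true: Sequence[int], y_pred: Sequence[int], group: Sequence[int]
) -> Dict[str, float]:
    """Compute SPD, DIR and the equalized odds gap.

    Assumed coding (illustrative): y_pred == 1 is the favorable decision,
    group == 1 is the protected group, group == 0 the reference group.
    """
    prot = [i for i, g in enumerate(group) if g == 1]
    ref = [i for i, g in enumerate(group) if g == 0]

    def selection_rate(idx: List[int]) -> float:
        return _share([y_pred[i] for i in idx])

    def rate_given_label(idx: List[int], label: int) -> float:
        # label == 1 gives the true-positive rate, label == 0 the false-positive rate
        return _share([y_pred[i] for i in idx if y_true[i] == label])

    p_prot, p_ref = selection_rate(prot), selection_rate(ref)
    tpr_gap = abs(rate_given_label(prot, 1) - rate_given_label(ref, 1))
    fpr_gap = abs(rate_given_label(prot, 0) - rate_given_label(ref, 0))
    return {
        "spd": p_prot - p_ref,                                  # statistical parity difference
        "dir": p_prot / p_ref if p_ref != 0 else float("nan"),  # disparate impact ratio
        "eog": max(tpr_gap, fpr_gap),                           # equalized odds gap
    }


# Toy data (hypothetical): four protected and four reference applicants.
group = [1, 1, 1, 1, 0, 0, 0, 0]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
metrics = fairness_metrics(y_true, y_pred, group)
print(metrics)
```

In this toy example the protected group is selected at a rate of 0.25 against 0.75 for the reference group, so the statistical parity difference is -0.5 and the disparate impact ratio is one third.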

2. Literature and Regulatory Landscape

2.1. Recent Evidence on AI Adoption

2.2. Foundational Theories

2.3. Fairness Metrics in Insurance

2.4. Mitigation Algorithms

2.5. Regulatory Landscape

2.6. Capital Links and Open Gap

2.7. Empirical Solvency II Evidence

| Key Solvency II Terms |


3. Data Construction—A Five-Layer Panel

4. Methodology

| Key Machine-Learning Terms |


- Research-Model Foundations

4.1. Pricing Kernels

4.1.1. Actuarial GLM

- **Lasso penalty** (*L1 term*): drives small coefficients exactly to zero, **promoting sparsity** and interpretability.

- **Ridge penalty** (*L2 term*): shrinks groups of correlated coefficients smoothly toward the origin, **mitigating multicollinearity**.
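The contrast between the two penalty terms can be seen in a one-coefficient sketch. The code below is a minimal illustration, assuming the penalty convention `lam1 * |b| + lam2 * b**2` with a squared-error loss `0.5 * (z - b)**2`; the function names and the values of `lam1` and `lam2` are hypothetical and are not the paper's fitted hyperparameters.

```python
from typing import Sequence


def elastic_net_penalty(beta: Sequence[float], lam1: float, lam2: float) -> float:
    """Elastic-net penalty lam1 * sum|b| + lam2 * sum b^2 (assumed convention)."""
    l1 = sum(abs(b) for b in beta)
    l2 = sum(b * b for b in beta)
    return lam1 * l1 + lam2 * l2


def soft_threshold(z: float, lam1: float) -> float:
    """L1 proximal step: values with |z| <= lam1 are set exactly to zero."""
    if z > lam1:
        return z - lam1
    if z < -lam1:
        return z + lam1
    return 0.0


def elastic_net_update(z: float, lam1: float, lam2: float) -> float:
    """Minimizer of 0.5*(z - b)^2 + lam1*|b| + lam2*b^2 for one coefficient.

    The L1 term thresholds z, and the L2 term then rescales the result
    smoothly by 1 / (1 + 2*lam2).
    """
    return soft_threshold(z, lam1) / (1.0 + 2.0 * lam2)


# Hypothetical unpenalized estimates and penalty weights.
raw = [3.0, 0.4, -2.0]
shrunk = [elastic_net_update(z, lam1=0.5, lam2=0.25) for z in raw]
print(shrunk)  # the small middle coefficient becomes exactly 0.0
```

The single-coefficient case shows sparsity and smooth shrinkage only; the grouping behavior for correlated predictors arises in the full multivariate fit and is not reproduced here.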

4.1.2. XGBoost

4.1.3. Algorithmic Flow

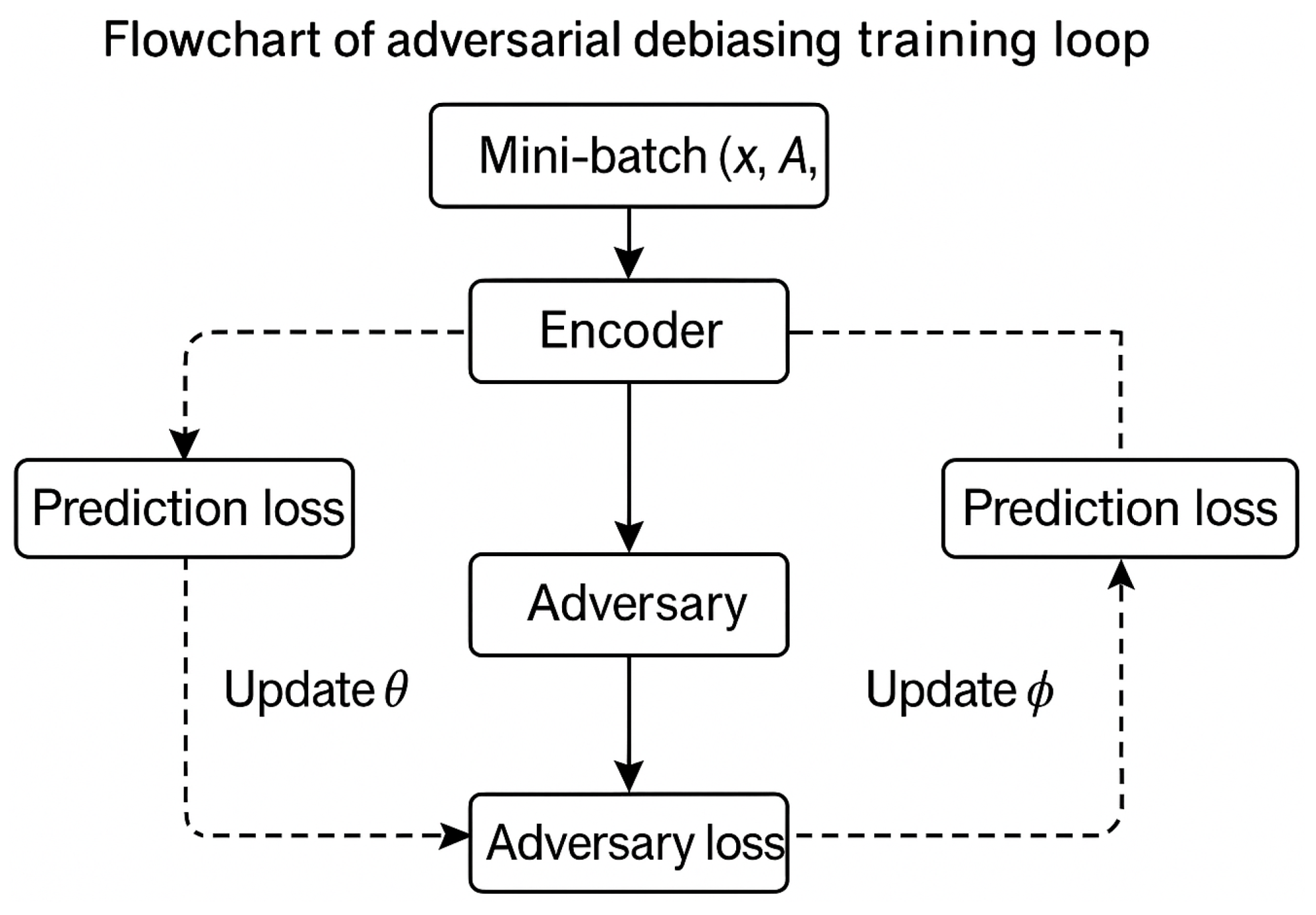

| Algorithm 1 Adversarial debiasing with NSGA-II |


4.1.4. Convergence Diagnostics

4.1.5. Sensitivity to λ

4.2. Explainability → Bias Metrics

4.3. Instrumental-Variable Quantile Regression

4.4. Bias-Mitigation Engines

4.5. Capital and Fine Simulation

4.5.1. Penalty Function Specification

4.5.2. Endogenous Enforcement Learning

5. Out-of-Sample Validation and Robustness Tests

6. Results

6.1. Model Accuracy Versus Fairness

6.2. Which Features Drive Unfair Pricing?

6.3. Detection Probability Sensitivity

| **Side Study: Counterfactual Fairness.** Using the method of Kusner et al. (2017) on a 50,000-policy draw, we test whether predicted premiums change when protected attributes are switched in a causal graph estimated via twin networks. The average counterfactual premium gap is EUR 4.2 for females (vs. EUR 9.1 under statistical parity), suggesting that approximately half of the observed bias is proxy-driven and half causal. Mitigation shrinks the causal gap to EUR 1.3. |
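The "approximately half" reading in the side study follows from simple arithmetic on the reported gaps. The sketch below reproduces it, assuming (as a simplification made here, not a claim of the paper) that the EUR 9.1 statistical-parity gap is the total observed gap and that the EUR 4.2 counterfactual gap is its causal component, with the remainder treated as proxy-driven; the function name `gap_decomposition` is hypothetical.

```python
from typing import Dict


def gap_decomposition(
    observed_gap: float, causal_gap: float, mitigated_causal_gap: float
) -> Dict[str, float]:
    """Split an observed premium gap into causal and proxy shares.

    Assumes an additive decomposition: proxy part = observed - causal.
    """
    causal_share = causal_gap / observed_gap
    return {
        "causal_share": causal_share,
        "proxy_share": 1.0 - causal_share,
        "causal_gap_reduction": 1.0 - mitigated_causal_gap / causal_gap,
    }


# Figures reported in the side study (EUR).
result = gap_decomposition(observed_gap=9.1, causal_gap=4.2, mitigated_causal_gap=1.3)
for name, value in result.items():
    print(f"{name}: {value:.1%}")
# causal_share: 46.2%
# proxy_share: 53.8%
# causal_gap_reduction: 69.0%
```

Under this additive reading, the causal component is slightly under half of the observed gap, and mitigation removes roughly two thirds of it.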

7. Discussion

7.1. From Bias Metrics to Balance-Sheet Materiality

7.2. Economic Pay-Off of Mitigation

7.3. Strategic Implications for Stakeholders

7.4. Robustness and Generalizability

7.5. Take-Away

8. Limitations and Future Work

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Additional Robustness Material

Appendix B. Mapping Bias into Solvency II SCR

References

- Arrow, Kenneth J. 1963. Uncertainty and the Welfare Economics of Medical Care. American Economic Review 53: 941–73.

- Avraham, Ronen, Kyle D. Logue, and Daniel Schwarcz. 2017. Understanding Insurance Anti-Discrimination Laws. University of Michigan Public Law Research Paper No. 522. Ann Arbor: University of Michigan.

- Barocas, Solon, and Andrew D. Selbst. 2016. Big Data’s Disparate Impact. California Law Review 104: 671–732.

- Booth, Harry, and Phillip Lister. 2023. Internal-Model versus Standard-Formula Capital: An Empirical Comparison Post-COVID. Annals of Actuarial Science 17: 205–38.

- Chen, Tianqi, and Carlos Guestrin. 2016. XGBoost: A Scalable Tree Boosting System. Paper presented at 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, August 13–17; pp. 785–94.

- Chernozhukov, Victor, and Christian Hansen. 2005. An IV Model of Quantile Treatment Effects. Econometrica 73: 245–61.

- Colorado General Assembly. 2021. SB 21-169—Restrict Insurers’ Use of External Consumer Data. Available online: https://leg.colorado.gov/bills/sb21-169 (accessed on 20 February 2025).

- Deb, Kalyanmoy, Amrit Pratap, Sameer Agarwal, and T. (Hendrick) Meyarivan. 2002. A Fast and Elitist Multiobjective Genetic Algorithm: NSGA-II. IEEE Transactions on Evolutionary Computation 6: 182–97.

- Du Preez, Valerie, Shaun Bennet, Matthew Byrne, Aurélien Couloumy, Arijit Das, Jean Dessain, Richard Galbraith, Paul King, Victor Mutanga, Frank Schiller, et al. 2024. From Bias to Black Boxes: Understanding and Managing the Risks of AI—An Actuarial Perspective. British Actuarial Journal 29: e6.

- Dwork, Cynthia, Moritz Hardt, Toniann Pitassi, Omer Reingold, and Richard Zemel. 2012. Fairness Through Awareness. Paper presented at ITCS 2012, Cambridge, MA, USA, January 8–10; New York: ACM, pp. 214–26.

- European Insurance and Occupational Pensions Authority (EIOPA). 2021. Artificial Intelligence Governance Principles: Towards Ethical and Trustworthy Artificial Intelligence in the European Insurance Sector. Luxembourg: Publications Office of the European Union. ISBN 978-92-9473-303-0. Available online: https://www.eiopa.europa.eu/system/files/2021-06/eiopa-ai-governance-principles-june-2021.pdf (accessed on 19 August 2025).

- European Insurance and Occupational Pensions Authority (EIOPA). 2024. Report on the Digitalisation of the European Insurance Sector. EIOPA-BoS-24/139. April 30. Available online: https://www.eiopa.europa.eu/publications/eiopas-report-digitalisation-european-insurance-sector_en (accessed on 20 February 2025).

- European Insurance and Occupational Pensions Authority (EIOPA). 2025. Consultation on the Opinion on Artificial Intelligence Governance and Risk Management. News Article. February 12. Available online: https://www.eiopa.europa.eu/document/download/8953a482-e587-429c-b416-1e24765ab250_en?filename=EIOPA-BoS-25-007-AI%20Opinion.pdf (accessed on 20 February 2025).

- European Union. 2024. Regulation (EU) 2024/1689 of the European Parliament and of the Council on Artificial Intelligence. Official Journal of the EU. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=OJ:L_202401689 (accessed on 11 April 2025).

- Fitch Ratings. 2024. AI Governance and Fair-Pricing Controls: Emerging Credit Factors for European Insurers. Sector Commentary. March 5. London and New York: Fitch Ratings.

- Ford, Adrian. 2021. The Market Reaction to GDPR Fines: An Event Study. Journal of Data Protection & Privacy 4: 150–64.

- Hardt, Moritz, Eric Price, and Nathan Srebro. 2016. Equality of Opportunity in Supervised Learning. Paper presented at Advances in Neural Information Processing Systems 29, Barcelona, Spain, December 5–10; pp. 3315–23.

- Hickman, Aaron, Marcus Lorenz, John Teetzmann, and Eric Jha. 2024. Long Awaited EU AI Act Becomes Law after Publication in the EU’s Official Journal. White & Case Insight Alert. July 16. Available online: https://www.whitecase.com/insight-alert/long-awaited-eu-ai-act-becomes-law-after-publication-eus-official-journal (accessed on 20 February 2025).

- Kader, Hayden, and Oswald Reitgruber. 2022. Underwriting Volatility and the Solvency II SCR: Evidence from German Life Insurers. Journal of Risk Finance 23: 210–28.

- Kamiran, Faisal, and Toon Calders. 2012. Data Pre-Processing Techniques for Classification without Discrimination. Knowledge and Information Systems 33: 1–33.

- Kleinberg, Jon, Sendhil Mullainathan, and Manish Raghavan. 2016. Inherent Trade-Offs in the Fair Determination of Risk Scores. Paper presented at ITCS 2017, Irvine, CA, USA, June 18–22.

- Kusner, Matt J., Joshua Loftus, Chris Russell, and Ricardo Silva. 2017. Counterfactual Fairness. Paper presented at Advances in Neural Information Processing Systems, Long Beach, CA, USA, December 4–9, vol. 30.

- Lapersonne, Giovanni. 2024. Artificial Intelligence in Insurance Pricing: A Systematic Literature Review. In Advances in Insurance Technology. Leeds: Emerald Publishing, pp. 321–46.

- Lundberg, Scott M., and Su-In Lee. 2017. A Unified Approach to Interpreting Model Predictions. Paper presented at Advances in Neural Information Processing Systems, Long Beach, CA, USA, December 4–9, vol. 30.

- McKinsey & Company. 2024. Insights from 12.4 Million Data Points: A Cross-Carrier Analysis of Insurance Trends. This Data Is Confidential and Cannot Be Publicly Shared Without Approval from Proper Bodies. Please Contact for Approval. McKinsey Internal Datasets, February 22.

- Mehrabi, Ninareh, Fred Morstatter, Nripsuta Saxena, Kristina Lerman, and Aram Galstyan. 2021. A Survey on Bias and Fairness in Machine Learning. ACM Computing Surveys 54: 115.

- Miller, Michael J. 2009. Disparate Impact and Unfairly Discriminatory Insurance Rates. Casualty Actuarial Society E-Forum Winter: 276–88.

- Moody’s Ratings. 2024. European Insurance Market Snapshot. Based on a confidential dataset reflecting a significant share of life, mutual, and health insurance activity across the EU. 2024. This Data Is Confidential and Cannot Be Publicly Shared Without Approval from Proper Bodies. Please Contact for Approval. Moody’s Internal Datasets, January 1.

- National Association of Insurance Commissioners (NAIC). 2023. Model Bulletin on the Use of Artificial Intelligence Systems by Insurers. December. Available online: https://content.naic.org/sites/default/files/cmte-h-big-data-artificial-intelligence-wg-ai-model-bulletin.pdf.pdf (accessed on 20 February 2025).

- Rawls, John. 1971. A Theory of Justice. Cambridge: Harvard University Press.

- Sandström, Arne. 2018. Premium Risk under Solvency II: Correcting the Standard Formula for Skewness. Scandinavian Actuarial Journal 4: 276–95.

- Shrestha, Vir, John von Meding, and Eric Casey. 2021. Artificial Intelligence as a Risk-Management Tool. Physica A 570: 125830.

- Skadden, A. 2024. The Standard Formula: A Guide to Solvency II. Insight Series, May. Available online: https://www.skadden.com/insights/publications/the-standard-formula-a-guide-to-solvency-ii (accessed on 5 January 2025).

- Stock, James H., and Motohiro Yogo. 2005. Testing for Weak Instruments in Linear IV Regression. In Identification and Inference for Econometric Models. Edited by D. Andrews and James H. Stock. Cambridge: Cambridge University Press, pp. 80–108.

- Van Bekkum, Casey, Zara Zuiderveen Borgesius, and Antonio Heskes. 2025. AI, Insurance, Discrimination and Unfair Differentiation: An Overview and Research Agenda. Law, Innovation and Technology 17: 177–204.

- Zhang, Brian Hu, Blake Lemoine, and Margaret Mitchell. 2018. Mitigating Unwanted Bias with Adversarial Learning. Paper presented at AIES 2018, New Orleans, LA, USA, February 2–3; pp. 335–40.

- Zou, Hui, and Trevor Hastie. 2005. Regularization and Variable Selection via the Elastic Net. The Journal of the Royal Statistical Society, Series B 67: 301–20.

| Layer | Unit/N | Key Fields | Source/Period |
|---|---|---|---|
| Quote–Bind–Claim | Policy-month (12,409,832) | Premium (EUR), Bind flag, Claim (EUR), Lapse flag | Four EU carriers, 2019 Q1–2024 Q4 |
| Medical and Lifestyle | Applicant | BMI, Systolic BP, Nicotine marker | E-Health questionnaires (2019–2024) |
| Socio-economic | Postcode | IDACI quintile, Night-light luminosity | Eurostat SILC; Copernicus (2018–2024) |
| Protected attributes (audit only) | Applicant | Sex, Age, Migrant-name proxy | Underwriting logs (2019–2024) |
| Regulatory and Capital | Firm-year | SCR by sub-module (life underwriting, UL; health underwriting, HL), Own Funds | QRT S.25/S.17 filings (2019–2023) |

| Model | Search Space | Optimum | Notes |
|---|---|---|---|
| GLM | | 1.3 | Elastic-net L1 |
| GLM | | 0.8 | Elastic-net L2 |
| XGB depth | | 6 | GPU hist |
| XGB | | 0.032 | log-uniform prior |
| Adv. debias | | 0.40 | NSGA-II |

| λ | AUC | Max-EOG | ΔSCR (bps) | H |
|---|---|---|---|---|
| 0.00 | 0.921 | 0.208 | 0 | 0.68 |
| 0.20 | 0.919 | 0.139 | 9 | 0.74 |
| 0.40 | 0.918 | 0.091 | 14 | 0.79 |
| 0.60 | 0.911 | 0.063 | 18 | 0.74 |
| 0.80 | 0.902 | 0.057 | 28 | 0.70 |

| Metric | Fold 1 | Fold 3 | Fold 5 |
|---|---|---|---|
| GLM AUC | 0.870 | 0.872 | 0.869 |
| XGB AUC | 0.911 | 0.913 | 0.909 |
| GLM Gini | 0.29 | 0.30 | 0.29 |
| XGB Gini | 0.41 | 0.42 | 0.41 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mahajan, S.; Agarwal, R.; Gupta, M. Algorithmic Bias Under the EU AI Act: Compliance Risk, Capital Strain, and Pricing Distortions in Life and Health Insurance Underwriting. Risks 2025, 13, 160. https://doi.org/10.3390/risks13090160
