1. Introduction

Creating an accurate loss model for insurance data is a vital topic in actuarial science. Insurance industry data have distinct characteristics, including a high frequency of small losses and a scarcity of significant losses. Traditional heavy-tailed distributions often struggle to capture the skewness and fat-tailed properties inherent in insurance data. As a result, many researchers have explored alternative distributions to better fit the loss data. One promising approach is the use of composite distributions, which combine a standard distribution with positive support, such as the Exponential, Inverse Gaussian, Inverse Gamma, Weibull, and Log-Normal distributions for minor losses, with the Pareto distribution, which accounts for extreme losses that occur infrequently.

Klugman et al. (2012) provided a comprehensive discussion on modeling data sets in actuarial science. Teodorescu and Vernic (2006) examined the Exponential–Pareto composite model and derived the maximum likelihood estimator for the threshold parameter. Preda and Ciumara (2006) used the Weibull–Pareto and Log-Normal-Pareto composite models to analyze insurance losses. The models described in their article have two key parameters, a support parameter and a threshold parameter, and the authors developed algorithms to find and compare the maximum likelihood estimates (MLEs) of these two unknown parameters. Cooray and Cheng (2013) estimated the parameters of the Log-Normal-Pareto composite distribution using Bayesian methods, employing both Jeffreys and conjugate priors; rather than deriving closed-form expressions, they used Markov Chain Monte Carlo (MCMC) methods. Additionally, Scollnik and Sun (2012) developed several composite Weibull–Pareto models.

Aminzadeh and Deng (2018) revisited the composite Exponential-Pareto distribution and provided a Bayesian estimate of the threshold parameter using an inverse-gamma prior distribution. Bakar et al. (2015) introduced several new composite models based on the Weibull distribution, specifically designed for analyzing heavy-tailed insurance loss data; these models were applied to two real insurance loss data sets, and their goodness-of-fit was evaluated. Furthermore, Deng and Aminzadeh (2023) recently examined a mixture of prior distributions for the Exponential-Pareto and Inverse Gamma–Pareto composite models.

Teodorescu and Vernic (2013) developed the composite Gamma-Type II Pareto model and derived, as a special case, a composite Gamma–Pareto model with three free parameters. In contrast, the composite Gamma–Pareto model proposed in this article has only two free parameters, so fewer parameters need to be estimated from a data set. This makes it easier to work with, particularly in regression modeling.

Cooray and Ananda (2005) introduced a two-parameter composite Log-Normal-Pareto model and used it to analyze fire insurance data. Additionally, Scollnik (2007) discussed limitations of the composite Log-Normal-Pareto model in Cooray and Ananda (2005) and presented two different composite models based on the Log-Normal and Pareto distributions to address these concerns; in that article, the performance of the three composite models is discussed and compared using the fire insurance data set. More recently, Grün and Miljkovic (2019) provided a comprehensive analysis of composite loss models for the Danish fire losses data set, built from 16 parametric distributions commonly used in actuarial science; however, the Gamma–Pareto composite model proposed in the current article is not among the 20 best-fitting composite models for the fire data listed in Grün and Miljkovic (2019).

There has been limited research on regression models for composite distributions. Konşuk Ünlü (2022) considered a composite Log-Normal-Pareto Type II regression model to analyze household budget data; however, the regression parameters were estimated with the Particle Swarm Optimization method, and Bayesian inference was not addressed in that study. In contrast, the method proposed in the current article provides accurate estimates of the regression parameters by directly optimizing the likelihood function using Mathematica code written specifically for this research. Additionally, the proposed method can be applied to composite distributions other than the Gamma–Pareto, which serves as the example in this article.

This article presents an innovative computational tool for efficiently finding the MLEs of regression parameters, together with approximate Bayes estimates obtained via the Approximate Bayesian Computation (ABC) algorithm, particularly when the response variable Y is linked to multiple covariates.

In Section 2, we introduce a new composite Gamma–Pareto distribution that has only two parameters, and we show that the smoothness conditions on its density function reduce the original four parameters to two. Section 3 outlines a numerical maximization approach for accurately computing the MLEs. Section 4 formulates the likelihood function in a regression context, linking the response variable to the covariates, and applies a numerical optimization method and the Fisher information matrix in Mathematica to compute the MLEs of the regression parameters. Section 5 discusses the ABC algorithm for estimating the regression parameters. This algorithm uses the Fisher information matrix, assumes a multivariate normal prior, and generates a large number of samples; the samples that are “accepted” represent the posterior distribution and are used to compute the approximate Bayes estimates. This section also outlines the steps needed to calculate both the MLEs and the ABC-based estimates of the regression parameters. A summary of the simulation results is provided in Section 6. Section 7 presents a Chi-Square test for evaluating the goodness-of-fit of a data set to the composite Gamma–Pareto model. Section 8 analyzes a real data set, “Total Auto Claims in Thousands of Swedish Kronor”, and applies the Chi-Square test to assess how well the claims fit the Gamma–Pareto model. Mathematica codes B–D are included in the Supplementary Materials, and Mathematica code A for the regression simulations is available upon request.

2. Derivation of Gamma–Pareto Composite Distribution

The single-parameter exponential dispersion family (EDF) PDF for a random variable Y is given by

where

is the cumulant function,

is the canonical parameter,

is the dispersion parameter, and

is a normalizing term that does not depend on

. Note that

. Let

with the PDF

and assume

is known. It can be shown that choosing

, we get

. As a result,

, our canonical link is

, and

is the canonical parameter. Therefore, the PDF of the gamma distribution with this canonical parameterization can be written as

which will be used in developing the Gamma–Pareto composite model proposed in the article. It is worth mentioning that with this parameterization,

takes negative values.
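As a quick numerical check of the canonical parameterization, the Python sketch below compares the usual gamma density (shape α, mean μ) with the same density written in EDF form. The mapping θ = -1/μ, φ = 1/α, b(θ) = -ln(-θ) is the standard GLM one and is assumed here to agree with the article's; the function names are illustrative.

```python
import math

def gamma_pdf_mean_shape(y, mu, alpha):
    """Gamma density with mean mu and shape alpha (rate alpha / mu)."""
    rate = alpha / mu
    return math.exp(alpha * math.log(rate) - math.lgamma(alpha)
                    + (alpha - 1.0) * math.log(y) - rate * y)

def gamma_pdf_edf(y, theta, phi):
    """Same density in EDF form exp{(y*theta - b(theta))/phi + c(y, phi)}.

    Assumed mapping: theta = -1/mu (< 0), phi = 1/alpha, b(theta) = -log(-theta).
    """
    alpha = 1.0 / phi
    b = -math.log(-theta)                                  # cumulant function
    c = alpha * math.log(alpha) + (alpha - 1.0) * math.log(y) - math.lgamma(alpha)
    return math.exp((y * theta - b) / phi + c)

def cumulant_mean(theta, h=1e-6):
    """Central-difference b'(theta); for the gamma family this should equal mu = -1/theta."""
    b = lambda t: -math.log(-t)
    return (b(theta + h) - b(theta - h)) / (2.0 * h)

if __name__ == "__main__":
    mu, alpha = 2.5, 3.0
    theta, phi = -1.0 / mu, 1.0 / alpha
    for y in (0.3, 1.0, 4.2):
        print(y, gamma_pdf_mean_shape(y, mu, alpha), gamma_pdf_edf(y, theta, phi))
    print(cumulant_mean(theta))  # approximately 2.5
```

With this mapping θ is always negative, and b'(θ) = -1/θ recovers the mean μ, which is what `cumulant_mean` checks numerically.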

In the following, we derive the PDF of the proposed Gamma–Pareto model. Let Y be a random variable with the probability density function

where

and

The derivation of the normalizing constant c, which can be found via

is shown below after reducing the number of parameters from four to two.

is the PDF of the Gamma distribution based on the canonical parametrization mentioned earlier with the parameters

and

.

is the PDF of the Pareto distribution with parameters

and

. The advantages of the canonical parametrization for the gamma distribution are twofold: 1. It enables us to include a dispersion parameter

which is related to

through

, where v is the exposure or given weight. Without loss of generality, it is common to let

. Note that the smaller the

value, the larger the

value. Therefore, the selected value

controls the variation in the data. 2. The likelihood function becomes unimodal with a unique global maximum.

To ensure the smoothness of the composite density function, it is assumed that the two probability density functions are continuous and differentiable at

. That is,

The equation

leads to

and as a result,

Using

, we have

Using Equation (1) in the above equation, we obtain

Equating (1) and (2) leads to

Letting

, the above equation can be rewritten as

For a selected value of

, the nonlinear Equation (4), which has two solutions, can be solved for w in Mathematica. For example, if

, the negative solution is

, and for

, the negative solution is

. Note that the acceptable solution to (4) must be negative, as

, and

. Using

, we have

. Since for any value of

, as mentioned, w is a function of

through (4), it is concluded that

is a negative number, which is only a function of

.
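The equations of this derivation are not reproduced in this version of the text. The Python sketch below assumes one consistent reading of them: the Gamma part is (-αθ)^α y^(α-1) e^(αθy)/Γ(α) on (0, x0], the Pareto part is β x0^β / y^(β+1) above the threshold x0, and w = θ·x0. Continuity and differentiability at x0 then give β = -α(1 + w) and (-αw)^α e^(αw)/Γ(α) = -α(1 + w), which we take as Equation (4); the labels x0 and β are ours. Requiring β > 0 forces w < -1, and on that range the difference of the two sides is strictly decreasing in -w, so plain bisection finds the admissible root.

```python
import math

def solve_w(alpha, tol=1e-12):
    """Admissible root w < -1 of (-alpha*w)**alpha * exp(alpha*w) / Gamma(alpha) = -alpha*(1 + w).

    Assumed form of Equation (4). With u = -w, h(u) below is strictly decreasing
    on (1, inf) and positive at u = 1, so the root there is unique.
    """
    def h(u):
        lhs = math.exp(alpha * math.log(alpha * u) - alpha * u - math.lgamma(alpha))
        return lhs - alpha * (u - 1.0)

    lo, hi = 1.0, 2.0
    while h(hi) > 0.0:          # expand the bracket until the sign changes
        hi *= 2.0
    while hi - lo > tol:        # bisection on u
        mid = 0.5 * (lo + hi)
        if h(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return -0.5 * (lo + hi)

def pareto_shape(alpha, w):
    """Pareto shape implied by the derivative condition, beta = -alpha * (1 + w)."""
    return -alpha * (1.0 + w)

if __name__ == "__main__":
    for alpha in (1.0, 2.0, 5.0, 10.0):
        w = solve_w(alpha)
        print(f"alpha={alpha:5.1f}  w={w:.6f}  beta={pareto_shape(alpha, w):.6f}")
```

The article solves (4) in Mathematica; bisection is used here only because it needs no external library.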

It can be shown that the normalizing constant c for the Gamma–Pareto distribution can be written as

where

is the gamma function and

is the upper incomplete gamma function. Both functions can be computed in Mathematica. Since w is a function of

, c is a constant that does not depend on

or

. Therefore, the composite Gamma–Pareto density function is given by

where

and

. It is worth mentioning that the above PDF has only two parameters,

and

.
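Under the same assumed reading of the smoothness conditions (Gamma part (-αθ)^α y^(α-1) e^(αθy)/Γ(α) below the threshold x0 = w/θ, Pareto part β x0^β / y^(β+1) above it, β = -α(1 + w)), the Gamma part carries mass P(α, -αw), the regularized lower incomplete gamma function, and the Pareto part carries mass 1. Hence c = 1/(1 + P(α, -αw)) = Γ(α)/(2Γ(α) - Γ(α, -αw)), which depends on α alone, consistent with the text. The Python sketch below evaluates the resulting two-parameter density; this closed form for c is our derivation under these assumptions, not a copy of the article's formula.

```python
import math

def solve_w(alpha, tol=1e-12):
    """Root w < -1 of the assumed Equation (4): (-a w)^a e^{a w} / Gamma(a) = -a (1 + w)."""
    h = lambda u: math.exp(alpha * math.log(alpha * u) - alpha * u - math.lgamma(alpha)) - alpha * (u - 1.0)
    lo, hi = 1.0, 2.0
    while h(hi) > 0.0:
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if h(mid) > 0.0 else (lo, mid)
    return -0.5 * (lo + hi)

def reg_lower_gamma(a, x, eps=1e-15, max_iter=10_000):
    """Regularized lower incomplete gamma P(a, x) from its power series (fine for moderate x)."""
    if x <= 0.0:
        return 0.0
    term = total = 1.0 / a
    for n in range(1, max_iter):
        term *= x / (a + n)
        total += term
        if term < eps * total:
            break
    return math.exp(a * math.log(x) - x - math.lgamma(a)) * total

def composite_pdf(y, theta, alpha):
    """Two-parameter Gamma-Pareto density under the assumed parameterization (theta < 0)."""
    w = solve_w(alpha)
    beta = -alpha * (1.0 + w)                               # Pareto shape
    x0 = w / theta                                          # threshold, positive since w, theta < 0
    c = 1.0 / (1.0 + reg_lower_gamma(alpha, -alpha * w))    # depends on alpha only
    if y <= 0.0:
        return 0.0
    if y <= x0:                                             # Gamma part
        return c * math.exp(alpha * math.log(-alpha * theta) - math.lgamma(alpha)
                            + (alpha - 1.0) * math.log(y) + alpha * theta * y)
    return c * beta * x0 ** beta / y ** (beta + 1.0)        # Pareto part
```

The power series for P(a, x) is adequate for the moderate arguments that arise here; for large x, a continued-fraction evaluation (or `scipy.special.gammainc`) is the usual choice.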

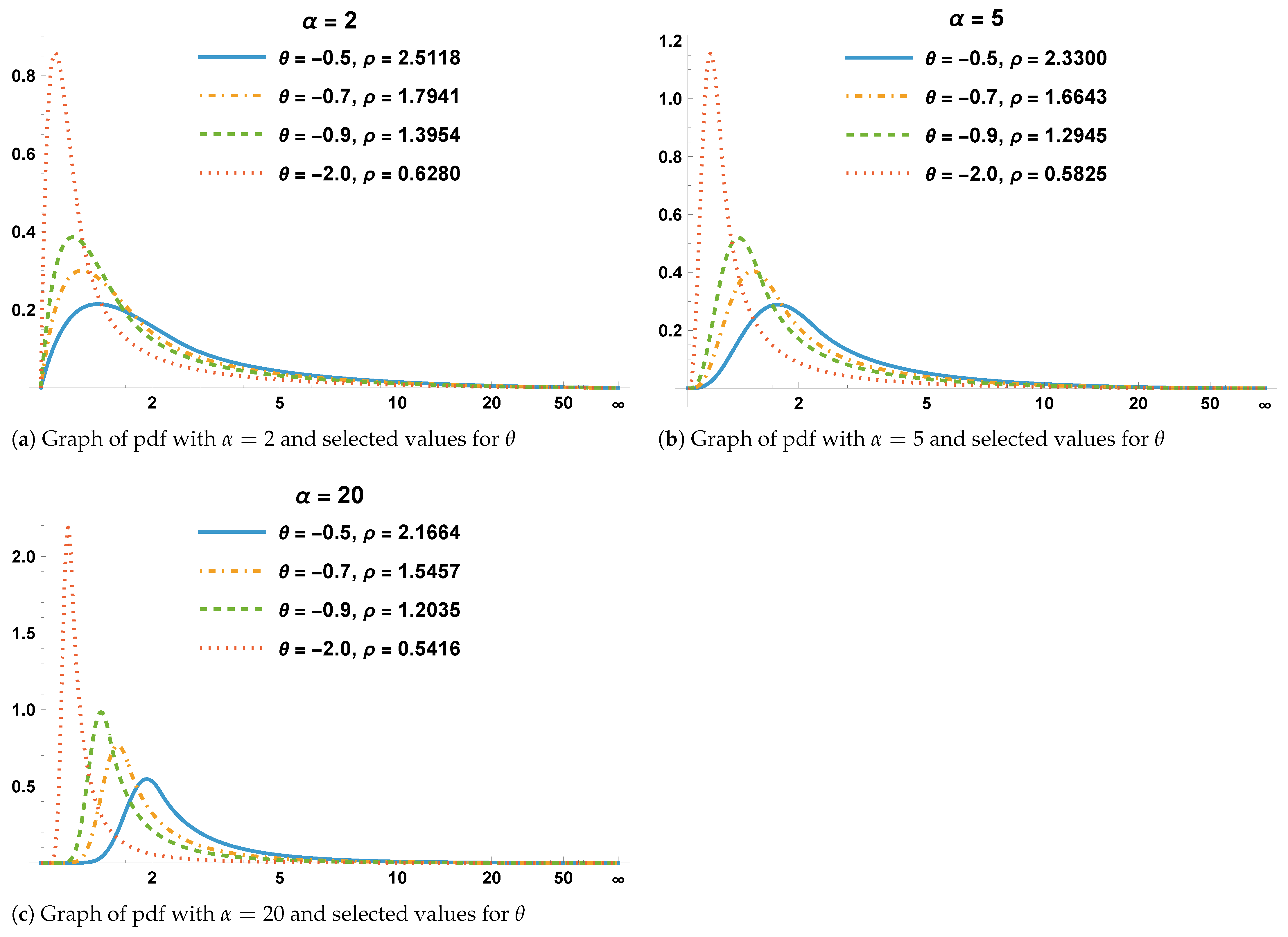

Figure 1a–c confirm that for a selected value of

, the graph of

is a smooth curve. Figure 1a–c also reveal that the dispersion decreases as

increases, and the PDF becomes more symmetric.

The first moment of the distribution is given in Equation (9) in the subsection titled “Maximum Likelihood Method for Regression Parameters.” The conditions for the existence of the first moment, verified by Mathematica, are

which imply

. That is,

It can also be shown that the conditions for the existence of the second moment are

Table 1 uses Equation (4) and reveals that for a variety of selected values of

, the conditions for the first and second moments are satisfied. We can see that for a positive value of

, the condition (

) for the first moment is satisfied. Also, for small values of

, Table 1 reveals that

. However, for large values of

, we have

.

3. MLE for and the Value of

This section proposes a numerical approach to provide the ML estimate for the parameter

for the PDF

, derived in Section 2. For

, without loss of generality, for an integer m, assume

, where

. Assume that

and

, respectively, are from the Gamma and Pareto components of the composite distribution. The log-likelihood function can be written as

Recall from Section 2 that for a given value of

, we can find w using Equation (4). We then define

as

, where

. Due to this reduction in the number of parameters, it is not possible to derive closed formulas for the maximum likelihood estimates (MLEs) directly through differentiation of

, since a specific value for

is required. Instead, we propose using a numerical optimization algorithm via Mathematica, specifically the NMaximize function, to search for an approximate value of

and the correct value of m. Code B (see the Supplementary Materials) computes the MLE of

, along with an approximate value for

and the correct value for m, using a sample from the Gamma–Pareto distribution.
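Code B is not reproduced here. As a rough, library-free stand-in for the NMaximize search, the Python sketch below evaluates the log-likelihood under an assumed parameterization (Gamma part (-αθ)^α y^(α-1) e^(αθy)/Γ(α) up to the threshold x0 = w/θ, Pareto part with shape β = -α(1 + w) above it, c = 1/(1 + P(α, -αw)), with w solving our reading of Equation (4)) and performs a grid search over a user-chosen set of α values and over log(-θ). Because the threshold moves with θ, m is not fixed in advance: it is the number of observations at or below the fitted threshold. The grid bounds and resolution are arbitrary illustrative choices.

```python
import math

def solve_w(alpha, tol=1e-12):
    """Root w < -1 of the assumed Equation (4): (-a w)^a e^{a w} / Gamma(a) = -a (1 + w)."""
    h = lambda u: math.exp(alpha * math.log(alpha * u) - alpha * u - math.lgamma(alpha)) - alpha * (u - 1.0)
    lo, hi = 1.0, 2.0
    while h(hi) > 0.0:
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if h(mid) > 0.0 else (lo, mid)
    return -0.5 * (lo + hi)

def reg_lower_gamma(a, x, eps=1e-15, max_iter=10_000):
    """Regularized lower incomplete gamma P(a, x) via its power series."""
    if x <= 0.0:
        return 0.0
    term = total = 1.0 / a
    for n in range(1, max_iter):
        term *= x / (a + n)
        total += term
        if term < eps * total:
            break
    return math.exp(a * math.log(x) - x - math.lgamma(a)) * total

def loglik(theta, alpha, w, ys):
    """Composite log-likelihood for fixed alpha; w must solve the assumed Equation (4)."""
    beta = -alpha * (1.0 + w)
    x0 = w / theta                                   # threshold moves with theta, so m does too
    total = -len(ys) * math.log1p(reg_lower_gamma(alpha, -alpha * w))   # n * log(c)
    for y in ys:
        if y <= x0:   # Gamma component
            total += (alpha * math.log(-alpha * theta) - math.lgamma(alpha)
                      + (alpha - 1.0) * math.log(y) + alpha * theta * y)
        else:         # Pareto component
            total += math.log(beta) + beta * math.log(x0) - (beta + 1.0) * math.log(y)
    return total

def fit_grid(ys, alphas, n_grid=400, width=4.0):
    """Grid search over alpha and log(-theta); returns (theta_hat, alpha_hat, m, max_loglik)."""
    center = -math.log(sum(ys) / len(ys))            # log(-theta) at theta = -1/mean(y)
    best = (-math.inf, None, None, None)
    for alpha in alphas:
        w = solve_w(alpha)
        for k in range(n_grid + 1):
            theta = -math.exp(center - width + 2.0 * width * k / n_grid)
            ll = loglik(theta, alpha, w, ys)
            if ll > best[0]:
                best = (ll, theta, alpha, w)
    ll, theta_hat, alpha_hat, w_hat = best
    m = sum(1 for y in ys if y <= w_hat / theta_hat)  # observations assigned to the Gamma part
    return theta_hat, alpha_hat, m, ll
```

A finer grid, or a local optimizer started from the best grid point, would sharpen the estimates; the grid only illustrates why m comes out of the search rather than going into it.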

In the simulation studies, Code C (see the Supplementary Materials) uses “true” values

and a sample size n to generate

samples from the composite distribution. The average maximum likelihood estimate (MLE)

and the average squared error (ASE) are calculated. For each simulated sample, the corresponding values for w and

are determined using

. A numerical optimization method, as outlined in Code B, is then employed to compute

and the correct value of m.
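The simulation loop of Code C is not shown in the article. The Python sketch below shows one way to draw samples from the composite distribution and to compute an ASE, assuming the same reading of the model as above (Gamma part with shape α and mean -1/θ below x0 = w/θ, Pareto tail with shape β = -α(1 + w) above it, Gamma mass c·P(α, -αw) with c = 1/(1 + P(α, -αw))). A draw comes from the Gamma part truncated to (0, x0] by rejection with that probability, and otherwise from the Pareto tail by inversion. Function names and the seed are illustrative.

```python
import math
import random

def solve_w(alpha, tol=1e-12):
    """Root w < -1 of the assumed Equation (4): (-a w)^a e^{a w} / Gamma(a) = -a (1 + w)."""
    h = lambda u: math.exp(alpha * math.log(alpha * u) - alpha * u - math.lgamma(alpha)) - alpha * (u - 1.0)
    lo, hi = 1.0, 2.0
    while h(hi) > 0.0:
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if h(mid) > 0.0 else (lo, mid)
    return -0.5 * (lo + hi)

def reg_lower_gamma(a, x, eps=1e-15, max_iter=10_000):
    """Regularized lower incomplete gamma P(a, x) via its power series."""
    if x <= 0.0:
        return 0.0
    term = total = 1.0 / a
    for n in range(1, max_iter):
        term *= x / (a + n)
        total += term
        if term < eps * total:
            break
    return math.exp(a * math.log(x) - x - math.lgamma(a)) * total

def sample_composite(n, theta, alpha, rng):
    """Draw n values from the assumed Gamma-Pareto composite (theta < 0)."""
    w = solve_w(alpha)
    beta = -alpha * (1.0 + w)
    x0 = w / theta
    p = reg_lower_gamma(alpha, -alpha * w)
    prob_gamma = p / (1.0 + p)            # c * P(alpha, -alpha w): mass below the threshold
    scale = -1.0 / (alpha * theta)        # gamma scale = mean / shape, with mean = -1/theta
    out = []
    for _ in range(n):
        if rng.random() < prob_gamma:
            y = rng.gammavariate(alpha, scale)
            while y > x0:                 # rejection step: keep draws in (0, x0]
                y = rng.gammavariate(alpha, scale)
        else:
            y = x0 * (1.0 - rng.random()) ** (-1.0 / beta)   # Pareto tail by inversion
        out.append(y)
    return out

def average_squared_error(estimates, true_value):
    """ASE = mean of (estimate - true value)^2 over the simulated samples."""
    return sum((e - true_value) ** 2 for e in estimates) / len(estimates)
```

A Code C style study would repeat: draw a sample, fit it (for instance with a grid or NMaximize search), collect the estimates of θ, and pass them to `average_squared_error` together with the true θ.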

Table 2 presents a summary of the simulation studies and shows that, as the sample size n increases, the average squared error (ASE), given in parentheses, decreases for all values of

and

listed in the table. Additionally, for specific combinations of

, a larger

results in a smaller

. This relation is more apparent when

is not very small.

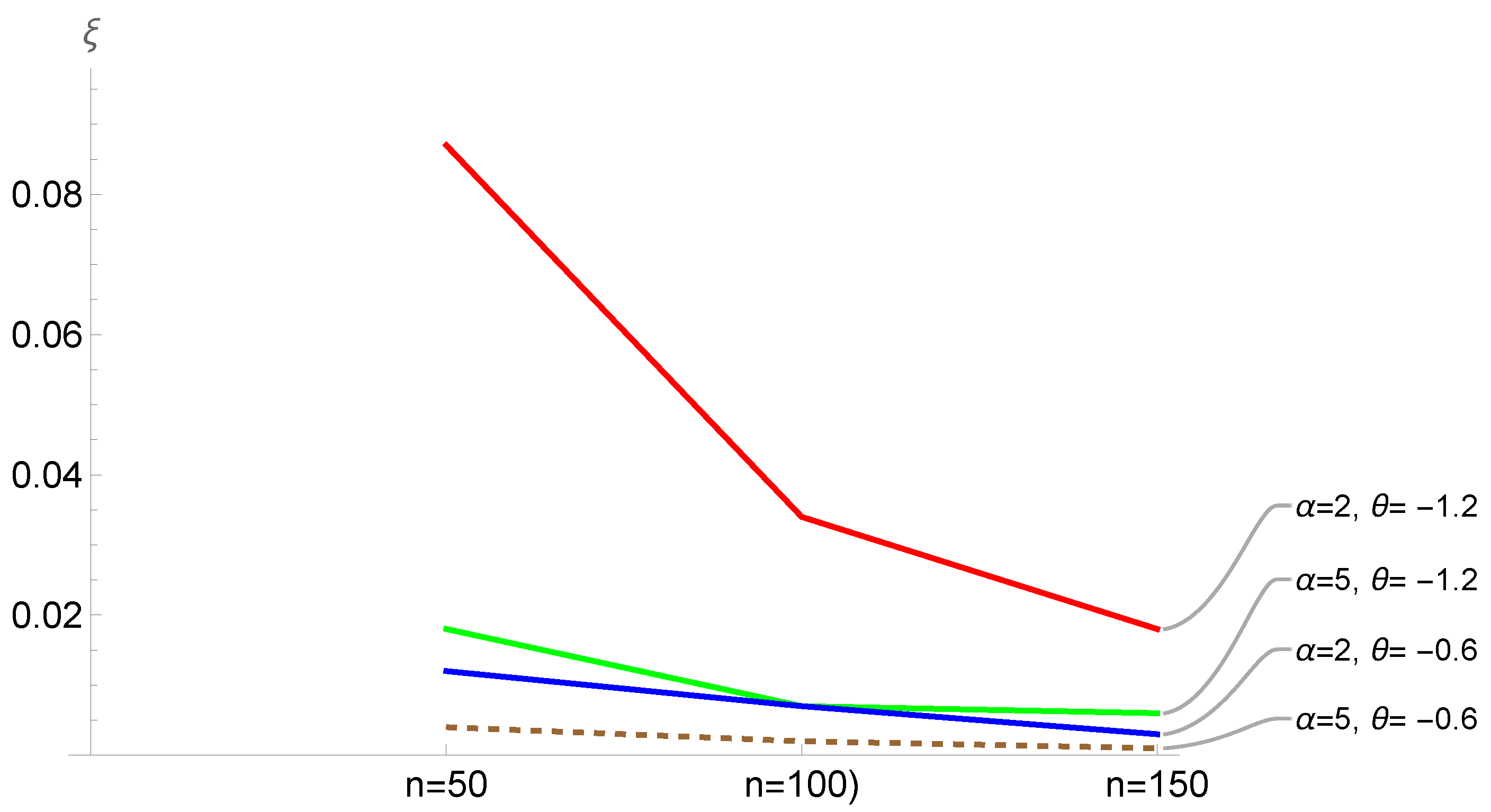

Figure 2 provides a visual summary of the numbers in Table 2 and supports the same conclusion. This outcome is expected, as a larger value of

leads to reduced variation within the sample, thereby providing a more accurate estimate for

.

4. Regression Model for Gamma–Pareto Composite Distribution

In this section, in the context of the regression analysis, it is assumed that can be approximated through a search method (see Code B) using a random sample . Therefore, is the only parameter that links the response variable Y to covariates. Hence, an approximated value is used in the regression analysis with the ultimate goal of estimating regression parameters through a link function for . For each row, , of the design matrix , let denote a realization of the response variable Y from the composite PDF (4), and without loss of generality, assume that is an ordered random sample of size k, where are assumed to be from the first part (Gamma) of the composite distribution and are observed values from the second part (Pareto) of the composite distribution.

It is assumed that there are

potential predictors related to the response variable Y. The first column of X contains 1’s, which account for the intercept parameter

. Therefore, the regression parameters are denoted by

. As discussed in Section 2, based on the parameterization we considered, the canonical link function is

. For

,

. As a result,

Recall that

is assumed to be known or can be approximated. Therefore, to define a link function in the context of regression modeling that relates

to the covariates, we need to choose a function of covariates that is positive. There are several options:

Option 3 provides a positive value for the mean, as required. However, algebraically, it may not be the best choice for optimizing the likelihood function to find MLEs of regression parameters and compute the Fisher information matrix. Option 2 is undesirable because, depending on the covariate data, it is possible that , which would make ; therefore, it is not acceptable. Consequently, we consider Option 1 with the exponential function that ensures , which means

Note that

and

. Substituting for

in the density function, the composite Gamma–Pareto density function is

It is noted that the observation

in the second part of the PDF is related to the covariates

through

Maximum Likelihood Method for Regression Parameters

This section provides MLEs of regression parameters and utilizes the Fisher information matrix to compute the variance–covariance matrix for the estimators. Recall that

and

. Therefore, the global maximum for (6) with respect to the regression parameters can be found by maximizing

NMaximize in Mathematica is used in our code to maximize l to find the MLE

. A few predictors might not be statistically significant. We propose computing the inverse of the estimated Fisher information matrix and using Wald’s test to identify statistically significant predictors. Let

be a

vector. We aim to compute the

matrix, denoted as IFM =

, which represents the inverse of the estimated Fisher information matrix. This matrix estimates the variance–covariance matrix for

. Because the likelihood is maximized numerically in Mathematica, the iterative Fisher scoring algorithm is not used; for reference, its update for the parameter estimates is

where v is the iteration index. The Fisher information matrix is nevertheless needed to identify statistically significant predictors in the regression model using Wald’s test statistic.
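The likelihood maximization described in this section can be sketched in Python under explicit assumptions. The exact form of the exponential link (Option 1) is not reproduced here, so the sketch assumes the log link μ_i = exp(x_iᵀβ), that is, θ_i = -exp(-x_iᵀβ); each observation then has its own threshold x0_i = w/θ_i. The Gamma and Pareto pieces use the assumed forms (-αθ)^α y^(α-1) e^(αθy)/Γ(α) and β_P x0^β_P / y^(β_P+1) with β_P = -α(1 + w) (written `b_par` to avoid a clash with the regression vector), α is treated as known, and the constant n·ln c is dropped because it does not depend on the regression parameters. A basic Nelder–Mead simplex search stands in for Mathematica's NMaximize; it is a swapped-in optimizer, not the authors' code.

```python
import math

def solve_w(alpha, tol=1e-12):
    """Root w < -1 of the assumed Equation (4): (-a w)^a e^{a w} / Gamma(a) = -a (1 + w)."""
    h = lambda u: math.exp(alpha * math.log(alpha * u) - alpha * u - math.lgamma(alpha)) - alpha * (u - 1.0)
    lo, hi = 1.0, 2.0
    while h(hi) > 0.0:
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if h(mid) > 0.0 else (lo, mid)
    return -0.5 * (lo + hi)

def regression_loglik(beta_vec, X, ys, alpha, w):
    """Composite log-likelihood up to the constant n*log(c), assuming mu_i = exp(x_i . beta)."""
    b_par = -alpha * (1.0 + w)                 # Pareto shape (fixed because alpha is fixed)
    total = 0.0
    for xi, y in zip(X, ys):
        eta = sum(a * b for a, b in zip(xi, beta_vec))
        if abs(eta) > 50.0:                     # guard against overflow far from the optimum
            return -math.inf
        theta = -math.exp(-eta)                 # canonical parameter theta_i = -1/mu_i < 0
        x0 = w / theta                          # observation-specific threshold = -w * exp(eta)
        if y <= x0:
            total += (alpha * math.log(-alpha * theta) - math.lgamma(alpha)
                      + (alpha - 1.0) * math.log(y) + alpha * theta * y)
        else:
            total += math.log(b_par) + b_par * math.log(x0) - (b_par + 1.0) * math.log(y)
    return total

def nelder_mead_max(f, start, step=0.5, iters=2000):
    """Maximize f with a basic Nelder-Mead simplex search (illustrative stand-in for NMaximize)."""
    n = len(start)
    simplex = [list(start)] + [[start[j] + (step if j == i else 0.0) for j in range(n)] for i in range(n)]
    vals = [f(p) for p in simplex]
    for _ in range(iters):
        order = sorted(range(n + 1), key=lambda i: vals[i], reverse=True)   # best vertex first
        simplex = [simplex[i] for i in order]
        vals = [vals[i] for i in order]
        cen = [sum(p[j] for p in simplex[:-1]) / n for j in range(n)]      # centroid without worst
        worst = simplex[-1]
        refl = [2.0 * cen[j] - worst[j] for j in range(n)]
        f_refl = f(refl)
        if f_refl > vals[0]:                        # try expanding further
            expd = [3.0 * cen[j] - 2.0 * worst[j] for j in range(n)]
            f_expd = f(expd)
            simplex[-1], vals[-1] = (expd, f_expd) if f_expd > f_refl else (refl, f_refl)
        elif f_refl > vals[-2]:                     # plain reflection
            simplex[-1], vals[-1] = refl, f_refl
        else:                                       # inside contraction, else shrink
            contr = [0.5 * (cen[j] + worst[j]) for j in range(n)]
            f_contr = f(contr)
            if f_contr > vals[-1]:
                simplex[-1], vals[-1] = contr, f_contr
            else:
                best = simplex[0]
                simplex = [best] + [[0.5 * (best[j] + p[j]) for j in range(n)] for p in simplex[1:]]
                vals = [vals[0]] + [f(p) for p in simplex[1:]]
    i_best = max(range(n + 1), key=lambda i: vals[i])
    return simplex[i_best], vals[i_best]
```

If SciPy is available, `scipy.optimize.minimize(lambda b: -f(b), x0, method="Nelder-Mead")` is a more robust drop-in for the hand-written search.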

involves Y; consequently, the computation of

requires the expected value of Y. From the composite PDF discussed in Section 2, it can be shown that

In the context of regression analysis, Equation (9) can be rewritten as

Note that

and

. Therefore,

is a positive number, as expected, and can be estimated using

. The Mathematica code for this article uses (10) to compute

. Once

is obtained, Wald’s test is applied as follows:

where

is the gth diagonal element of IFM, and it estimates the variance of

. For a large sample size k, by the large-sample properties of maximum likelihood, the estimators

are approximately normally distributed. Therefore, if

, it is concluded that, at the q level of significance, predictor

is not significant and that the matrix X should be updated accordingly. Using the updated matrix X, a new set of MLEs for the remaining regression parameters is found and tested for significance. This process continues until all remaining predictors in the model are significant.
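The screening rule above translates directly into code. In the Python sketch below, `ifm` is the inverse of the estimated Fisher information matrix, whose diagonal entries estimate the variances of the estimates, and the critical value z_{q/2} comes from `statistics.NormalDist`. The text does not say whether non-significant predictors are removed together or one at a time; the loop assumes the common convention of dropping the least significant one per round and refitting. The refitting function `fit` is hypothetical and would wrap the numerical maximization.

```python
import math
from statistics import NormalDist

def wald_screen(estimates, ifm, q=0.05):
    """Wald z statistics and the positions judged not significant at level q.

    ifm is the inverse of the estimated Fisher information matrix; its diagonal
    entries estimate the variances of the estimates.
    """
    z_crit = NormalDist().inv_cdf(1.0 - q / 2.0)          # two-sided critical value z_{q/2}
    z = [b / math.sqrt(ifm[g][g]) for g, b in enumerate(estimates)]
    return z, [g for g, zg in enumerate(z) if abs(zg) < z_crit]

def backward_eliminate(fit, columns, q=0.05):
    """Refit and drop the weakest non-significant column until all remaining are significant.

    fit(columns) -> (estimates, ifm) is a user-supplied (hypothetical) refitting routine.
    """
    cols = list(columns)
    while True:
        est, ifm = fit(cols)
        z, weak = wald_screen(est, ifm, q)
        if not weak:
            return cols, est, z
        cols.pop(min(weak, key=lambda g: abs(z[g])))     # remove one predictor per round
```

Because the text notes that the intercept column may also be removed, `columns` is treated uniformly here, with no special case for the first column of X.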

5. Bayesian Estimation of Regression Parameters

According to the classical Bayesian approach, given a prior distribution

for a parameter

(or a vector of parameters

for the distribution of a random variable Y, the corresponding posterior PDF

is formulated, where

are observed values of Y in a sample of size n.

is the likelihood function and C is a normalizing constant, defined as

provided that

is a continuous prior distribution. Under the squared-error loss function, the Bayes estimates of the parameters

are the corresponding posterior means, which may be derived analytically. This may require writing the posterior PDF as

if

are assumed to be dependent.

A conjugate prior, one for which the posterior belongs to the same family as the prior, is often difficult to identify for a given likelihood function. Depending on the form of the likelihood, it may also be impossible to express the posterior PDF in a recognizable form, which complicates deriving the Bayes estimator as the posterior expected value. A common way to address these challenges is a Markov Chain Monte Carlo (MCMC) algorithm: one selects a proposal distribution for the parameters of the posterior PDF, which need not belong to any well-known family, and runs the simulation until convergence is achieved. For example, Aminzadeh and Deng (2022) proposed a computational method that uses an MCMC algorithm to compute the Bayes estimate of the renewal function when the interarrival times follow a Pareto distribution.

To develop a Bayesian algorithm using the Gamma–Pareto composite distribution and a non-linear regression model, it is essential to establish a multivariate prior distribution for the regression parameters

. Since these parameters can take any real value, a multivariate normal distribution is a natural choice for the prior distribution.

where

and

is the corresponding

positive-definite variance–covariance matrix of

The posterior PDF via (5) and (11) is written as

It is important to note that in total, there are

hyperparameters involved in the prior distribution. Choosing appropriate values for these hyperparameters can be challenging. For the current model, we need to know values for

(where

) as well as the covariances

(for

and

).

Aminzadeh and Deng (2018, 2022) explored a data-driven approach to help select hyperparameter values when relevant information is unavailable. Their method matches the expected value of the prior distribution to the MLEs of the parameters of interest while choosing hyperparameters that minimize the prior variance, so that the information in the MLEs is incorporated into the prior. This approach yields a system of equations that can be solved simultaneously for the hyperparameters. Simulation studies in their articles suggest that this method provides accurate Bayesian estimates that outperform the MLEs.

5.1. Approximate Bayesian Computation

In this article, we take a data-driven approach. However, because the posterior in Equation (12) is challenging to work with, we turn to the Approximate Bayesian Computation (ABC) method, which relies on extensive simulations. The foundational concepts of ABC date back to the 1980s when Donald Rubin introduced them. Since then, various researchers have employed this method, mainly when deriving the posterior probability density function (PDF) is intractable.

Lintusaari et al. (2017) provide a comprehensive overview of recent developments in the ABC method.

5.2. Computation Steps for ML and ABC Estimates Using Simulated Data

For selected “true” values of and a chosen design matrix X, we generate samples for from the Gamma–Pareto composite distribution. The following steps in Mathematica Code A compute the MLEs and approximate Bayesian estimates of . It is important to note that represents the intercept parameter, and the first column of the matrix X consists of ones.

Step 1. In the jth iteration, the numerical maximization method via NMaximize in Mathematica is applied to the log-likelihood function (see Section 4) to compute the MLEs, and Wald’s test statistic is used to identify the significant predictors that should remain in the model. Recall that the design matrix X must be updated if necessary; this also applies to its first column. For ease of presentation, it is assumed that all predictors are retained in the model. The overall MLE is computed as

with the average squared error (ASE)

Note that, for the jth generated sample, the corresponding

matrix is computed, and the overall variance–covariance matrix

is then computed and used in Wald’s test statistic.

Step 2. Using IFM, generate random samples from a multivariate normal distribution (the prior); each generated sample, indexed by v, is a candidate vector of regression parameters.

Step 3. Based on the vth simulated parameter vector, generate a sample of size k from the composite distribution, using the ith row of the updated design matrix X and the link function of Section 4 for the ith observation.

Step 4. Following the ABC algorithm, the simulated samples are compared with the original sample using summary statistics. If the absolute differences between the selected summary statistics of a simulated sample and the original sample are all less than the chosen tolerances, then the vth parameter vector generated in Step 2 and used in Step 3 is accepted as a sample from the posterior distribution and moved to the set of “accepted” samples. In this article, we use the 30th and 90th percentiles as the summary statistics. Two percentiles are used because samples from the composite distribution are expected to contain both small and very large values, so comparing a lower and an upper percentile gives a more accurate comparison; each percentile has its own tolerance. Note that Step 1 produces several original samples and Step 3 produces many simulated samples. For comparison purposes, all simulated samples are compared with a single reference sample. In practice this is the one data set on the response variable that is available for analysis; in the simulation scenario, the reference is built from the samples of Step 1 in one of two ways: 1. all simulated samples are compared with the Average of the original samples; 2. all simulated samples are compared with a Random sample selected from the original samples. Option 2 mirrors the practical case, as only one sample is available in an actual application.

Step 5. Letting

denote the number of acceptable simulated samples

from the multivariate normal distribution in Step 2, we define an approximate Bayes estimate as

with the ASE,

The tolerance values in the code should be chosen based on the available and simulated data. Small tolerances lead to few accepted samples, whereas large tolerances force the number of accepted samples to be close to Nss. Therefore, before making all comparisons in Step 4, it is recommended to print a few values of the absolute differences between the 30th percentiles of the original and simulated samples, as well as between the 90th percentiles, to guide the choice of the two tolerances. It is worth emphasizing that, according to the ABC algorithm, the accepted parameter vectors are treated as random samples from the posterior distribution (12).
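Steps 2 to 5 amount to rejection ABC with two percentile summaries. The Python sketch below assumes the prior is a multivariate normal whose mean and covariance are supplied by the caller (in the article's data-driven setting, plausibly the MLE from Step 1 and IFM); draws are generated through a Cholesky factor. The `simulate` argument is where a composite Gamma–Pareto regression sampler would go; the exponential simulator in `make_toy_simulator` is a hypothetical stand-in chosen only to keep the sketch short. Percentiles come from `statistics.quantiles`, whose interpolation may differ slightly from Mathematica's `Quantile`.

```python
import math
import random
from statistics import quantiles

def cholesky(a):
    """Lower-triangular L with L @ L.T == a for a symmetric positive-definite matrix a."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L

def p30_p90(sample):
    """30th and 90th percentiles taken from the deciles returned by statistics.quantiles."""
    cuts = quantiles(sample, n=10)
    return cuts[2], cuts[8]

def abc_rejection(observed, simulate, prior_mean, prior_cov, n_sims, eps30, eps90, rng):
    """Rejection ABC: keep prior draws whose simulated percentiles are close to the observed ones."""
    L = cholesky(prior_cov)
    obs30, obs90 = p30_p90(observed)
    accepted = []
    for _ in range(n_sims):
        z = [rng.gauss(0.0, 1.0) for _ in prior_mean]
        beta = [m + sum(L[i][k] * z[k] for k in range(i + 1)) for i, m in enumerate(prior_mean)]
        s30, s90 = p30_p90(simulate(beta, rng))
        if abs(s30 - obs30) < eps30 and abs(s90 - obs90) < eps90:
            accepted.append(beta)
    if not accepted:
        return accepted, None
    # approximate Bayes estimate under squared-error loss: mean of the accepted draws
    est = [sum(b[j] for b in accepted) / len(accepted) for j in range(len(prior_mean))]
    return accepted, est

def make_toy_simulator(X):
    """Hypothetical stand-in for the composite sampler: y_i ~ Exponential(mean = exp(x_i . beta))."""
    def simulate(beta, rng):
        return [rng.expovariate(math.exp(-sum(a * b for a, b in zip(xi, beta)))) for xi in X]
    return simulate
```

For example, with `rng = random.Random(2024)`, a 200-row design `X`, `sim = make_toy_simulator(X)` and `observed = sim([0.5, 0.3], rng)`, the call `abc_rejection(observed, sim, [0.6, 0.2], [[0.04, 0.0], [0.0, 0.04]], 2000, 0.2, 1.5, rng)` returns the accepted draws and their mean. Printing a few percentile differences before fixing `eps30` and `eps90` remains the practical way to tune the tolerances.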

6. Simulation for Composite Gamma–Pareto Regression

We conduct simulation studies to compare the accuracy of ML and Bayesian methods. For selected values of k (sample size), (the parameter vector), , and p, we generate samples from the PDF (4) to obtain the MLEs. Additionally, we generate simulated samples from a multivariate normal distribution using the Approximate Bayesian Computation (ABC) algorithm to derive the Bayesian estimates of the regression parameters.

Case 1: For the sample size

and

, Table 3 provides a summary of the simulation results with the “true” regression parameters

,

, and

. The selected design matrix,

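, is given below; each row of the table lists one column of X across the 30 observations:

| Observation | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 | 27 | 28 | 29 | 30 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Intercept | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| Covariate 1 | 1.3 | 1.6 | 1.1 | 1.7 | 1.2 | 1.5 | 1.9 | 1.3 | 1.3 | 2.2 | 1.2 | 1.5 | 1.5 | 2.7 | 1.4 | 1.5 | 1.7 | 2.7 | 1.6 | 1.8 | 1.6 | 1.3 | 1.1 | 1.5 | 1.4 | 2.0 | 1.9 | 1.4 | 1.5 | 1.9 |
| Covariate 2 | 2.5 | 2.3 | 2.4 | 2.1 | 2.3 | 1.7 | 2.4 | 1.5 | 2.3 | 1.3 | 1.9 | 1.9 | 2.1 | 2.3 | 1.1 | 2.3 | 1.1 | 2.6 | 2.4 | 2.1 | 2.0 | 2.3 | 2.4 | 1.5 | 2.4 | 2.2 | 1.1 | 2.8 | 2.8 | 2.0 |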

Table 3 and Table 4 show that the ABC-based estimator generally has a lower average squared error (ASE) than the ML estimator. Additionally, the Random and Average options for comparing the original samples with those generated via the ABC method show nearly identical accuracy.

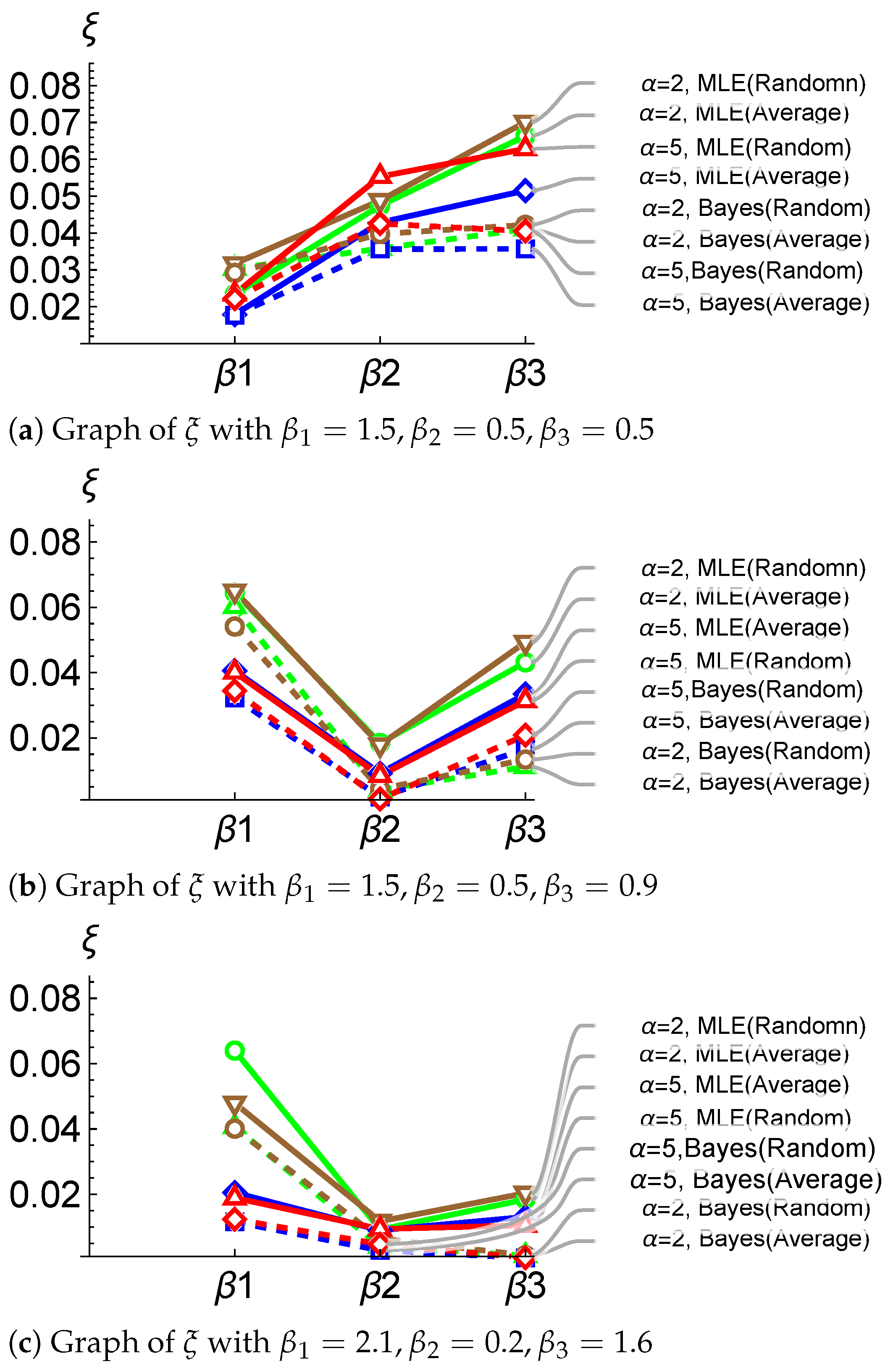

Figure 3 is derived from Table 3. Note that three different sets of “true” values for the regression parameters are considered in Table 3 and Table 4. Solid and dashed lines represent the

values obtained using the MLE method and the ABC method, respectively. Since the solid lines are usually above the corresponding dashed lines, the MLE method generally yields larger ASE values than the ABC method. Note that the ordering of the ASE values across the regression parameters depends on the selected “true” values of

, and

in the simulations, as well as on the covariate values in the design matrix. For example, in Figure 3b,c,

is the smallest, while in Figure 3a that is not the case. It is worth mentioning that the Random option is more realistic because only one data set is typically analyzed in real-world applications; that is, the samples produced by the ABC algorithm are compared exclusively with the original data set.

Case 2: For

,

and the same “true” regression parameters as in Case 1, a summary of the simulation results is given in Table 4. The design matrix is

, where

is

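| Observation | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Intercept | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| Covariate 1 | 1.2 | 1.7 | 1.3 | 1.6 | 1.4 | 1.8 | 1.1 | 1.5 | 1.2 | 1.3 | 1.5 | 1.7 | 1.3 | 1.5 | 1.6 | 1.3 | 2.1 | 1.6 | 1.4 | 1.5 |
| Covariate 2 | 2.4 | 2.4 | 2.6 | 2.0 | 2.5 | 2.2 | 2.7 | 2.9 | 2.7 | 2.5 | 2.7 | 2.6 | 1.7 | 2.3 | 2.5 | 2.3 | 2.6 | 2.9 | 2.8 | 3.1 |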

7. Goodness-of-Fit Test

This section describes a Chi-Square test of the goodness-of-fit of a data set to the Gamma–Pareto composite distribution. Using the composite PDF in Section 2, the CDF of the distribution can be derived as

The derivation of the CDF is based on the constraints (summarized below) on the parameters discussed in Section 2.

For a sorted data set

from the Gamma–Pareto distribution, the correct value of m, the MLE

, and an approximate value for

are computed via Code B and plugged into Code D (see the Supplementary Materials), which computes the Chi-Square goodness-of-fit test statistic.

The data in Table 5 were generated from the Gamma–Pareto composite distribution. For this data set, Code B provides

,

, and

. The Chi-Square goodness-of-fit test (see Code D) is then applied, using the CDF derived above and its inverse function to determine the intervals:

with the corresponding observed frequencies: {12, 20, 14, 17, 33, 14, 11, 16, 10}. The Chi-Square test statistic is 7.11064. Since it is smaller than the critical value

at the 0.01 level of significance, the goodness-of-fit of the data to the Gamma–Pareto model is not rejected.
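The statistic itself is straightforward to compute once the interval edges are fixed. The Python sketch below assumes the fitted CDF is available as a function and that the last interval is open to infinity; the exponential CDF and the counts in the example are hypothetical, not the article's data. A common convention for the degrees of freedom is K - 1 - (number of estimated parameters) for K intervals; the critical value can then be read from a Chi-Square table or, with SciPy, from `scipy.stats.chi2.ppf(0.99, df)`.

```python
import math

def interval_probabilities(cdf, edges):
    """P(a <= Y < b) for consecutive edges; use math.inf as the last edge for an open tail."""
    F = lambda t: 1.0 if t == math.inf else cdf(t)
    return [F(b) - F(a) for a, b in zip(edges[:-1], edges[1:])]

def chi_square_statistic(observed, probs):
    """Pearson statistic: sum of (O - E)^2 / E with E = n * p for each interval."""
    n = sum(observed)
    return sum((o - n * p) ** 2 / (n * p) for o, p in zip(observed, probs))

if __name__ == "__main__":
    # hypothetical fitted CDF and counts, for illustration only
    probs = interval_probabilities(lambda t: 1.0 - math.exp(-t), [0.0, 0.5, 1.0, 2.0, math.inf])
    observed = [40, 25, 22, 13]
    print(chi_square_statistic(observed, probs))
```

In the article's setting, `cdf` would be the fitted Gamma–Pareto CDF and the edges would come from its inverse, as Code D does.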

It is worth mentioning that the Gamma–Pareto model introduced in the current article does not provide an adequate fit to the fire data analyzed by Grün and Miljkovic (2019). Like other composite models in the literature, the proposed model is expected to fit better than common single-component loss distributions, such as the Gamma and Weibull distributions, when the data contain several extreme loss values.

8. Numerical Example

The following data represent the total payments for auto claims in thousands of Swedish Kronor.

y = {4.4, 6.6, 11.8, 12.6, 13.2, 14.6, 14.8, 15.7, 20.9, 21.3, 23.5, 27.9, 31.9, 32.1, 38.1, 39.6, 39.9, 40.3, 46.2, 48.7, 48.8, 50.9, 52.1, 55.6, 56.9, 57.2, 58.1, 59.6, 65.3, 69.2, 73.4, 76.1, 77.5, 77.5, 87.4, 89.9, 92.6, 93., 95.5, 98.1, 103.9, 113., 119.4, 133.3, 134.9, 137.9, 142.1, 152.8, 161.5, 162.8, 170.9, 181.3, 187.5, 194.5, 202.4, 209.8, 214., 217.6, 244.6, 248.1, 392.5, 422.2}

We applied Code B and obtained the approximate values of

,

, and

. The Chi-Square goodness-of-fit test (see Code D) is then applied, using the CDF from Section 7 and its inverse function to determine the following intervals:

with the corresponding observed frequencies: {2, 6, 10, 25, 19}. The Chi-Square test statistic is 7.67. Since it is smaller than the critical value

at the 0.01 level of significance, the goodness-of-fit of the data to the Gamma–Pareto model is not rejected. If covariates are available, the estimation methods proposed in this article can be applied via Code A to estimate the regression parameters.

9. Summary

This article introduces a new composite distribution, the Gamma–Pareto, based on the Gamma and Pareto distributions. Several properties of this composite distribution are derived, including the CDF, which is used for the Chi-Square goodness-of-fit test. The article aims to provide innovative computational tools for estimating regression parameters through a link function that connects both small and large values of the response variable to covariates. Specific Mathematica codes are provided that use numerical approximations to compute MLEs and Bayesian estimates via the ABC algorithm. The proposed ABC method uses a multivariate normal prior, selects its hyperparameters with a data-driven approach, and relies on the MLEs of the regression parameters and the Fisher information matrix to compute approximate Bayesian estimates. The 30th and 90th percentiles serve as summary statistics to identify “accepted” samples, which form a subset of the larger set of samples generated from the prior. The Chi-Square goodness-of-fit test assesses how well the response variable fits the composite distribution. Simulation results indicate that the ABC method generally outperforms the ML method in terms of accuracy.