Abstract

Building small-population mortality tables has great practical importance in actuarial applications. In recent years, several works in the literature have explored different methodologies to quantify and assess longevity and mortality risk, especially within the context of small populations, and many models dealing with this problem usually use a two-population approach, modeling a mortality spread between a larger reference population and the population of interest, via likelihood-based techniques. To broaden the tools at actuaries’ disposal to build small-population mortality tables, a general structure for a two-step two-population model is proposed, its main element of novelty residing in a machine-learning-based approach to mortality spread estimation. In order to obtain this, Contrast Trees and the related Estimation Contrast Boosting techniques have been applied. A quite general machine-learning-based model has then been adapted in order to generalize Italian actuarial practice in company tables estimation and implemented using data from the Human Mortality Database. Finally, results from the ML-based model have been compared to those obtained from the traditional model.

1. Introduction

Producing accurate mortality projections is often of great importance in an actuarial context, in order to predict death rates for pricing or reserving purposes, to construct policyholders’ death tables for an insurance company, or, more generally, to accurately assess the mortality of a population of interest such as the participants of a pension fund. While mortality projection has received a considerable amount of attention in the actuarial literature, the main focus usually involves national or similarly sized populations; small populations, such as those of regions within a country or groups of annuitants, are far less studied.

Quantifying and projecting mortality for such small populations is non-trivial, because their mortality rates tend to be characterized by high variability and irregular behavior, scarce availability of data in temporal terms, and missing records. Furthermore, due to the usually short-period availability of data, mortality projections are quite uncertain and sensitive to the choice of the fitting time period: plain extrapolation of historic trends could produce questionable results and biologically implausible death tables. In such cases, standard mortality models may not be relevant and the resulting mortality rates could be unreliable. Consequently, using established models, such as the Lee–Carter model (Lee and Carter 1992), to project mortality for small populations is not advisable (Booth et al. 2006; Jarner and Kryger 2011), as it could lead to unrealistic results. Furthermore, population size can have a noticeable effect on the variation of parameter estimates, as in the case of the Cairns–Blake–Dowd model (Cairns et al. 2006; Chen et al. 2017). Moreover, as observed by Jarner and Jallbjørn (2022), the aim of mortality projection models should be twofold: on one hand, the predictions produced by the models have to be accurate, as usual in actuarial literature; on the other hand, the models’ output should be stable with respect to annual updates of the data. This is to ensure that decision-makers, such as annuity providers or pension authorities, do not suffer consequences (respectively, significant shifts in liabilities and capital requirements or fluctuations in statutory retirement age) from result volatility or systematic errors. Stability requirements are particularly difficult to meet for smaller populations.
Nonetheless, small-population mortality models have great practical interest, for example, in evaluating undertaking-specific mortality tables relative to a portfolio of contracts or in tackling issues pertaining to longevity swaps in the context of pension scheme de-risking.

To overcome such difficulties in modeling mortality for small populations, the mortality parameter estimation is performed in reference to the mortality profile of some larger group, economically and socially similar to the population of interest; borrowing information in such a way could lead to more stable results, as noted by Ahcan et al. (2014). This approach to the problem is known as two-population modeling and can be used to define several classes of models, from extensions of the Lee–Carter model (see, e.g., Russolillo et al. 2011 or Butt and Haberman 2009) and the Cairns–Blake–Dowd model (as in Li et al. 2015) to Bayesian approaches (Cairns et al. 2011) and frailty-based methods (Jarner and Kryger 2011). More extensive reviews are available in Menzietti et al. (2019) and Villegas et al. (2017).

Borrowing terminology and model specification from Villegas et al. (2017), the larger population will be denoted as the reference population and the smaller population of interest as the book population. Two-population models used in the actuarial literature can be written using a general formulation in terms of central mortality rates $m^{R}_{x,t}$ and $m^{B}_{x,t}$, respectively, for the reference and book populations, as:

$$\log m^{R}_{x,t} = \alpha^{R}_{x} + \sum_{i=1}^{N} \beta^{(i,R)}_{x}\,\kappa^{(i,R)}_{t} + \gamma^{R}_{t-x} \tag{1}$$

$$\log m^{B}_{x,t} - \log m^{R}_{x,t} = \alpha^{B}_{x} + \sum_{j=1}^{M} \beta^{(j,B)}_{x}\,\kappa^{(j,B)}_{t} + \gamma^{B}_{t-x} \tag{2}$$

Equation (1) describes the mortality in the reference population in terms of $\alpha^{R}_{x}$, the reference level for a group aged x, and of a cohort effect $\gamma^{R}_{t-x}$. N bilinear terms specify the mortality trend via time indexes $\kappa^{(i,R)}_{t}$, whose effect on single age groups is modulated by the terms $\beta^{(i,R)}_{x}$. In Equation (2), book population modeling is expressed in terms of the difference between reference and book population mortalities, i.e., the mortality of the book population is not directly modeled; rather, the mortality spread between the reference and book populations is. Therefore, the quantities in Equation (2) refer to mortality trend differences such that the book population average mortality level is $\alpha^{R}_{x} + \alpha^{B}_{x}$ and cohort effect is $\gamma^{R}_{t-x} + \gamma^{B}_{t-x}$, while age-period terms $\beta^{(j,B)}_{x}\,\kappa^{(j,B)}_{t}$ dictate how the differences in mortality change with time in each age group. The number of bilinear terms, M, is usually at most equal to N. Depending on how the models are specified, additional constraints may be needed in order to ensure uniqueness of parameter estimation for Equations (1) and (2). Parameter estimation is performed using maximum likelihood in two steps: first, relative to the reference population, and then, conditional on the first-stage estimation, the parameters describing mortality spread between the two populations are estimated. Such an approach, widespread in the actuarial literature for small-population models (see, e.g., Jarner and Kryger 2011; Menzietti et al. 2019; Wan and Bertschi 2015; Li et al. 2015), is based on the assumption that mortality rate differences can be modeled in the same way as mortality rates are, using the same functional form as in Villegas et al. (2018).

Mortality projection is then performed by specifying the dynamics of period indexes, which are modeled using a multivariate random walk with drift. As for the book populations, it is assumed that in the long run, the two populations will experience similar mortality improvements, thus, the spread in time indexes and cohort effects are modeled as stationary processes (see Villegas et al. 2017 for more details). Two-step estimation for two-population models then relies on the distributional assumptions for the numbers of deaths in the reference and book populations and on likelihood-based estimation for modeling the mortality spread.

The purpose of this paper is to present a two-population model where mortality spread is estimated using machine learning techniques, namely Contrast Trees and Estimation Contrast Boosting proposed by Friedman (2020). The model is first described in general terms and then adapted to generalize Italian actuarial practices for company table estimation. Using data from the Human Mortality Database, this new model is evaluated for some book populations and then compared to the traditional methodology.

The remainder of this work is organized as follows: In Section 2, Contrast Tree terminology and algorithms are briefly recalled (Section 2.1), then the Italian actuarial practice for company table estimation is described and extended, making use of Contrast-Tree-related techniques (Section 2.2). Some preliminary considerations about the data are reported in Section 2.3. Section 3 presents and discusses the results from the extended model, first by addressing some issues about model calibration (Section 3.1) and then by assessing the performance of the extended model using the traditional approach as a benchmark (Section 3.2). Finally, in Section 4, some conclusions are drawn.

2. Materials and Methods

2.1. Contrast Trees and Estimation Boosting

Contrast Trees (CTs) are a general methodology that, leveraging tree-based machine learning techniques, allows for assessing differences between two output variables defined on the same input space, be they predictions resulting from different models or observed outcome data. Specifically, the goal of the Contrast Tree method is to partition the input space to uncover regions presenting higher values of some difference-related quantity between two arbitrary outcome variables (Friedman 2020). Moreover, a boosting procedure making use of CTs can be constructed, in order to reduce differences between the two target variables once differences have been uncovered.

Available data consist of N observations of the form $\{x_i, y_i, z_i\}_{i=1}^{N}$, where $x_i$ is a P-dimensional vector of observed numerical predictor variables and $y_i$ and $z_i$ are the corresponding values of two outcome variables. These can be estimates for a given quantity from different models, or the observed values of a certain quantity of interest. Given a certain sub-region $R_m$ of the input space, a discrepancy measure, describing the difference between the outcome variables, is defined as a function of the data points in the sub-region as follows:

$$d_m = D\left(\{y_i\}_{x_i \in R_m}, \{z_i\}_{x_i \in R_m}\right) \tag{3}$$

The particular choice of discrepancy function depends on the problem at hand. While discrepancies are conceptually similar to loss functions, it must be noted that in the context of Contrast Trees, they are not required to be convex, differentiable, or expressible as a sum of terms, each involving a single observation. In the following, we will make use of the mean absolute difference discrepancy:

$$d_m = \frac{1}{N_m} \sum_{x_i \in R_m} \left| y_i - z_i \right| \tag{4}$$

where $N_m$ is the number of data points (or, alternatively, the sum of weights) relative to region $R_m$. A brief review of possible choices for discrepancy functions, and their relation to different estimation problems, can be found in Friedman (2020).
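As a minimal sketch of the mean absolute difference discrepancy, the following Python function computes the (optionally weighted) average of the absolute differences between the two outcomes over the data points of a single region. The function name and the toy values are illustrative assumptions and are not taken from any existing package.

```python
def mean_abs_discrepancy(y, z, weights=None):
    """Weighted mean absolute difference between two outcomes in one region."""
    if weights is None:
        weights = [1.0] * len(y)  # unweighted case: every point counts once
    total_weight = sum(weights)
    weighted_abs = sum(w * abs(yi - zi) for w, yi, zi in zip(weights, y, z))
    return weighted_abs / total_weight


# Toy region with three data points (illustrative values only).
y_obs = [0.010, 0.020, 0.030]
z_fit = [0.012, 0.018, 0.036]
d_region = mean_abs_discrepancy(y_obs, z_fit)
d_weighted = mean_abs_discrepancy(y_obs, z_fit, weights=[1.0, 1.0, 2.0])
```

With weights, the denominator becomes the sum of weights in the region, which mirrors the alternative reading of the region size mentioned above.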

Contrast Trees produce a partition of the input space into M components, each one with an associated value for the discrepancy. Such a partition can be analyzed to assess discrepancy patterns, bearing some similarities to residual analysis in the Generalized Linear Model framework. The procedure requires, as hyperparameters, the minimum number of data points in a region, $N_{\min}$, and the maximum number of regions in a tree, $M_{\max}$. Of course, a trade-off exists between the explainability of the region patterns and the number of regions themselves. Low values of $M_{\max}$ and high values of $N_{\min}$ lead to a low number of larger regions, defined by simpler rules, which are, therefore, easier to interpret and analyze. Conversely, allowing less-populated regions may uncover smaller regions and more complicated patterns, whose interpretation could be less straightforward. A brief overview of the iterative splitting procedure of the input space is given in Algorithm 1.

| Algorithm 1 Iterative splitting procedure—Construction of a Contrast Tree | |

| Require: data $\{x_i, y_i, z_i\}_{i=1}^{N}$ | |

| ⋄ Choose $M_{\max}$ | ▹ Maximum number of regions |

| ⋄ Choose $N_{\min}$ | ▹ Minimum number of data points in a region |

| for do | ▹ Loop over successive trees |

| ⋄ The input space is partitioned into M disjoint regions $\{R_m\}_{m=1}^{M}$; | |

| for $m = 1, \dots, M$ do | ▹ Loop over regions in a tree |

| for $p = 1, \dots, P$ do | ▹ Loop over predictors |

| for all split values $s$ of predictor $x_p$ do | ▹ Loop over values of a predictor |

| ⋄ Define two provisional sub-regions $R_m^{(l)}$ and $R_m^{(r)}$, with corresponding | |

| discrepancies $d_m^{(l)}$ and $d_m^{(r)}$: $R_m^{(l)} = \{x \in R_m : x_p \le s\}$, $R_m^{(r)} = \{x \in R_m : x_p > s\}$. | |

| This rule is the same as for ordinary regression trees for numeric | |

| variables (see Breiman et al. 2017 for further details); | |

| ⋄ Calculate the quality of the split $Q_m(l, r) = f_m^{(l)} f_m^{(r)} \max\left(d_m^{(l)}, d_m^{(r)}\right)^{\beta}$, | |

| where $f_m^{(l)}$ and $f_m^{(r)}$ are the fractions of observations in region $R_m$ falling | |

| within each provisional sub-region and $\beta$ is a regulation parameter: | |

| as found by Friedman (2020), results are insensitive to its value; | |

| end for | |

| end for | |

| ⋄ The split point $s$ for variable $x_p$, whose value maximizes $Q_m(l, r)$, with | |

| associated discrepancies $d_m^{(l)}$ and $d_m^{(r)}$, is associated with the m-th region; | |

| ⋄ Calculate discrepancy improvement for the m-th region: | |

| if then | ▹ Stopping condition (1) |

| ⋄ STOP | |

| end if | |

| end for | |

| ⋄ The region , whose associated split maximizes , is replaced by its associated | |

| sub-regions, s.t. the input space is now partitioned into regions | |

| if then | ▹ Stopping condition (2) |

| ⋄ STOP | |

| end if | |

| end for | |
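The inner loops of the splitting procedure can be sketched for a single region and a single numeric predictor as follows. This is a simplified illustration, not the ConTree implementation: the split quality is assumed to be the product of the two sub-region fractions and the larger sub-region discrepancy raised to a power `beta`, following the description in Friedman (2020); the function names, the default `beta=2.0`, and the toy data are assumptions.

```python
def mean_abs_discrepancy(y, z):
    """Mean absolute difference between two outcomes in one region."""
    return sum(abs(yi - zi) for yi, zi in zip(y, z)) / len(y)


def split_quality(frac_left, frac_right, d_left, d_right, beta=2.0):
    """Assumed split quality: balance term times the larger discrepancy to the power beta."""
    return frac_left * frac_right * max(d_left, d_right) ** beta


def best_split(x, y, z, n_min=2, beta=2.0):
    """Scan the split points of one numeric predictor inside one region.

    Returns (quality, split_point, d_left, d_right) for the best admissible
    split, or None when no split leaves at least n_min points on both sides.
    """
    n = len(x)
    best = None
    for s in sorted(set(x))[:-1]:  # splitting at the largest value leaves the right side empty
        left = [i for i in range(n) if x[i] <= s]
        right = [i for i in range(n) if x[i] > s]
        if len(left) < n_min or len(right) < n_min:
            continue
        d_left = mean_abs_discrepancy([y[i] for i in left], [z[i] for i in left])
        d_right = mean_abs_discrepancy([y[i] for i in right], [z[i] for i in right])
        quality = split_quality(len(left) / n, len(right) / n, d_left, d_right, beta)
        if best is None or quality > best[0]:
            best = (quality, s, d_left, d_right)
    return best


# Toy region: the two outcomes agree up to age 50 and differ by 2 afterwards.
ages = [30, 40, 50, 60, 70, 80]
y_obs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
z_fit = [1.0, 2.0, 3.0, 6.0, 7.0, 8.0]
result = best_split(ages, y_obs, z_fit)
```

In this toy example the selected split separates the ages where the two outcomes agree from those where they differ, which is the kind of high-discrepancy region the procedure is meant to isolate.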

Results from the CT procedure can be summarized in a so-called lack-of-fit (LOF) curve, which associates to the m-th region the following coordinates:

$$\left( \frac{1}{N} \sum_{j:\, d_j \ge d_m} N_j,\;\; \frac{\sum_{j:\, d_j \ge d_m} d_j N_j}{\sum_{j:\, d_j \ge d_m} N_j} \right) \tag{5}$$

where $d_j$ and $N_j$ denote the discrepancy and the number of data points of the j-th region, the abscissa is the fraction of data points lying in regions with a discrepancy greater than or equal to that of the m-th region, and the ordinate is the weighted average discrepancy in those same regions. Contrast Trees can be applied to assess the goodness-of-fit of a certain model, by choosing as output variables (i.e., “contrasting”) predicted values from the model and observed ones. High-discrepancy regions resulting from the procedure can be easily detected and interpreted, without having to formulate distributional hypotheses to define a likelihood. Moreover, multiple models, of any nature, can be compared by estimating a Contrast Tree for each one, contrasting predicted values with out-of-sample observed outcomes. An application of CTs to model diagnostics in the context of mortality models can be found in Levantesi et al. (2024).
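The lack-of-fit coordinates described above can be computed directly from the region discrepancies and region sizes. The sketch below follows the verbal definition (fraction of data points in regions at least as discrepant as the m-th one, and their weighted average discrepancy); the function name and the toy numbers are illustrative assumptions.

```python
def lof_curve(discrepancies, counts):
    """Return one (abscissa, ordinate) pair per region of a Contrast Tree."""
    total = sum(counts)
    points = []
    for d_m in discrepancies:
        # Regions whose discrepancy is greater than or equal to that of region m.
        selected = [(d, n) for d, n in zip(discrepancies, counts) if d >= d_m]
        n_selected = sum(n for _, n in selected)
        abscissa = n_selected / total
        ordinate = sum(d * n for d, n in selected) / n_selected
        points.append((abscissa, ordinate))
    return points


# Three regions with illustrative discrepancies and numbers of data points.
points = lof_curve([0.5, 0.1, 0.3], [10, 60, 30])
```

The rightmost point of the curve (abscissa equal to 1) gives the average discrepancy over the whole input space, while the leftmost points describe the worst-fitting regions.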

Contrast Trees may also be employed to improve model accuracy, by means of an iterative procedure that reduces uncovered errors and calculates an additive correction to produce more accurate predictions. Estimation Contrast Boosting (ECB) gradually modifies a starting value of z using an additive term, reducing its discrepancy with y, producing, at each step k, a partition of the input space where every element m has an associated update $\delta^{(k)}_{m}$. The resulting prediction for z is then adjusted accordingly, so that an updated estimate $\hat{z}$ is produced. Similarly to Gradient Boosting Machines, performance accuracy is often improved by the adoption of a learning rate hyperparameter (see Friedman 2001 for more details about the role of the learning rate).

Note that in Estimation Contrast Boosting, the two response variables are no longer equivalent: response y is taken as the reference, while response z is adjusted. A quick presentation of the boosting procedure is given in Algorithm 2. Since any point $x$ in the input space lies within a single region $m_k(x)$ of each of the K trees resulting from the ECB procedure, each with associated updates $\delta^{(k)}_{m_k(x)}$, and given an initial value $z(x)$, the boosted estimate $\hat{z}(x)$ is computed as:

$$\hat{z}(x) = z(x) + \sum_{k=1}^{K} \delta^{(k)}_{m_k(x)} \tag{6}$$

More details about the iterative splitting procedure to produce Contrast Trees or the ECB algorithm can be found in Friedman (2020) and Levantesi et al. (2024).
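To make the boosting idea concrete, the following is a heavily simplified sketch, not the algorithm of Friedman (2020) nor the ConTree implementation. It assumes a single numeric predictor, a two-region tree at every step, and an update equal to the learning rate times the mean difference between y and the current z in each region; all function names, defaults, and data are hypothetical.

```python
def mean_abs_discrepancy(y, z):
    """Mean absolute difference between two outcomes."""
    return sum(abs(yi - zi) for yi, zi in zip(y, z)) / len(y)


def best_split_point(x, y, z, n_min=2, beta=2.0):
    """Split point of a single numeric predictor maximizing the assumed split quality."""
    n = len(x)
    best_quality, best_s = None, None
    for s in sorted(set(x))[:-1]:
        left = [i for i in range(n) if x[i] <= s]
        right = [i for i in range(n) if x[i] > s]
        if len(left) < n_min or len(right) < n_min:
            continue
        d_left = mean_abs_discrepancy([y[i] for i in left], [z[i] for i in left])
        d_right = mean_abs_discrepancy([y[i] for i in right], [z[i] for i in right])
        quality = (len(left) / n) * (len(right) / n) * max(d_left, d_right) ** beta
        if best_quality is None or quality > best_quality:
            best_quality, best_s = quality, s
    return best_s


def ecb_fit(x, y, z, n_steps=50, learning_rate=0.1, n_min=2):
    """Return a list of (split_point, update_left, update_right), one per boosting step."""
    z_hat = list(z)
    steps = []
    for _ in range(n_steps):
        s = best_split_point(x, y, z_hat, n_min)
        if s is None:
            break
        updates = {}
        for is_left in (True, False):
            idx = [i for i in range(len(x)) if (x[i] <= s) == is_left]
            # Assumed update rule: shrunken mean difference between y and the current z.
            updates[is_left] = learning_rate * sum(y[i] - z_hat[i] for i in idx) / len(idx)
            for i in idx:
                z_hat[i] += updates[is_left]
        steps.append((s, updates[True], updates[False]))
    return steps


def ecb_predict(steps, x_new, z_new):
    """Boosted estimate: the starting value plus the update of the region hit at every step."""
    return z_new + sum(u_left if x_new <= s else u_right for s, u_left, u_right in steps)


ages = [30, 40, 50, 60, 70, 80]
y_ref = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]   # response taken as the reference
z_start = [1.0, 2.0, 3.0, 6.0, 7.0, 8.0]  # response to be adjusted
steps = ecb_fit(ages, y_ref, z_start)
boosted = [ecb_predict(steps, a, z0) for a, z0 in zip(ages, z_start)]
```

Storing the per-step updates, rather than only the adjusted values, is what allows the same correction to be applied later to new starting values at the same predictor levels.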

| Algorithm 2 Estimation Contrast Boosting |

|

2.2. Small-Population Tables in Relation to Italian Actuarial Practice

In Italian actuarial practice, the assessment of mortality in life insurance companies is heavily influenced by ISVAP Regulation N.22 of 4 April 2008 and its successive modifications1. Article 23-bis, paragraph 9 of the regulation states that “The Insurance company conducting life business presents to IVASS the comparison between technical bases, different from interest rates, used for the calculation of technical provisions, and the results of direct experience”. This happens through the filling of Table 1/1 of Module 41 contained in the Additional Information to Financial Statements (“Informazioni aggiuntive al bilancio d’esercizio”).

The module is organized separately by risk type (longevity or mortality), product type, age range, and sex; these features define the risk classes to be considered. The underlying idea, common in actuarial mathematics, is to analyze the portfolio separately for groups defined by known risk factors, so that individuals composing these groups can be considered homogeneous from the point of view of probabilistic evaluation. The comparison between direct experience and technical bases consists of reporting the expected number of deaths in the company portfolio, the expected sum of benefits to be paid, the actual number of deaths, and the actual paid sum of benefits. Expected number of deaths and expected sum of benefits to be paid are defined as follows:

$$\mathbb{E}\left[D^{C}\right] = \sum_{x \in C} l^{C}_{x}\, q^{\mathrm{tb}}_{x}, \qquad \mathbb{E}\left[B^{C}\right] = \sum_{x \in C} S^{C}_{x}\, q^{\mathrm{tb}}_{x}$$

where $l^{C}_{x}$ is the number of insured persons of age x in risk cluster C at the beginning of the year, $S^{C}_{x}$ is the total insured sum for insured persons of age x in cluster C at the beginning of the year and $q^{\mathrm{tb}}_{x}$ is the probability of death between ages x and $x+1$, usually dependent on sex. The table $q^{\mathrm{tb}}_{x}$ constitutes part of the prudential technical basis used for pricing purposes. The time period to which all quantities refer is the calendar year of the Financial Statements in consideration.

While not required by the Solvency II directive, in Italian actuarial practice, a very similar approach is adopted in order to produce the mortality hypotheses to be used in the calculation of best-estimate liabilities and, more generally, to estimate company mortality tables, especially in the case of products subject to mortality risks. In this case, given a certain risk cluster C usually described by sex, risk type (mortality, longevity), and product type (e.g., endowment or term life insurance), an adaptation coefficient is calculated as:

$$c^{C}_{D} = \frac{\sum_{x \in C} d^{C}_{x}}{\sum_{x \in C} l^{C}_{x}\, q^{\mathrm{ref}}_{x}}$$

or, using the sum of benefits as a reference:

$$c^{C}_{B} = \frac{\sum_{x \in C} b^{C}_{x}}{\sum_{x \in C} S^{C}_{x}\, q^{\mathrm{ref}}_{x}}$$

where $d^{C}_{x}$ is the observed number of deaths for age x in cluster C, $b^{C}_{x}$ is the sum of benefits paid for insureds dead at age x in cluster C, and $q^{\mathrm{ref}}_{x}$ is the probability of death, distinct by sex, from some reference mortality table, usually a national table of some sort (e.g., the SIM2011 table or ISTAT table for people residing in Italy). The table $q^{\mathrm{ref}}_{x}$ is a demographic-realistic technical base that can be updated over time.

The adapted death rates $q^{C,D}_{x}$ or $q^{C,B}_{x}$ that will constitute the company death tables are then calculated as follows:

$$q^{C,D}_{x} = c^{C}_{D}\, q^{\mathrm{ref}}_{x}, \qquad q^{C,B}_{x} = c^{C}_{B}\, q^{\mathrm{ref}}_{x}$$

Essentially, this method scales one-year death probabilities in order to reproduce the total number of deaths or the total benefits paid. Data used for the calculation include observations from the last available calendar years. Usually, ten calendar years of data are used, as this ensures a sufficiently stable output without having to resort to data from much earlier calendar years, which are often not available and might not reflect the actual, more recent, trends in mortality dynamics. This methodology features ease of implementation but applies the same adaptation coefficient to all age classes, thus creating a mortality profile that has the same shape as the reference profile, while the company underwriting process may affect each age group differently. In the remainder of this work, we will refer to this technique as “table scaling”.
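A small numerical sketch of table scaling based on the number of deaths is given below. It assumes that the adaptation coefficient is the ratio between total observed deaths and total expected deaths under the reference table; the function names, the cap of the scaled probabilities at 1, and the portfolio figures are illustrative assumptions.

```python
def adaptation_coefficient(observed_deaths, insured, q_ref):
    """Ratio of observed deaths to deaths expected under the reference table."""
    expected_deaths = sum(l * q for l, q in zip(insured, q_ref))
    return sum(observed_deaths) / expected_deaths


def scale_table(q_ref, coefficient):
    """Apply one coefficient to every age; probabilities are capped at 1 (an added safeguard)."""
    return [min(1.0, coefficient * q) for q in q_ref]


# Illustrative portfolio with three ages.
insured = [1000, 800, 500]          # insured persons at the beginning of the year
q_ref = [0.01, 0.02, 0.04]          # reference one-year death probabilities
observed_deaths = [5, 8, 10]

coefficient = adaptation_coefficient(observed_deaths, insured, q_ref)
q_company = scale_table(q_ref, coefficient)
reproduced_deaths = sum(l * q for l, q in zip(insured, q_company))
```

Because a single coefficient is used, the ratio between the company and reference probabilities is the same at every age, which is the limitation discussed above.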

To overcome the limitations of table scaling, the ECB algorithm briefly described in Section 2.1 can be utilized to build mortality tables for small populations, using a machine learning approach for mortality spread calculation and generalizing the Italian actuarial practice. To the best of the author’s knowledge, this is the first attempt to apply a machine-learning approach to compute mortality spread in a two-population model.

Let $\eta^{R}(X, t)$ and $\eta^{B}(X, t)$ be some quantity describing mortality in terms of predictor matrix X and calendar year t, respectively, for the reference and book populations, available for observation years $t_1, \dots, t_n$. These can be one-year death probabilities, central mortality rates, mortality odds, or, more generally, any quantity describing mortality in a population. The Estimation Boosting algorithm can then be applied to variables $\eta^{R}(X, t)$ and $\eta^{B}(X, t)$, using the quantity relative to the reference population as the z input of Algorithm 2. The ECB procedure then estimates updates $\delta^{(k)}_{m}$, one for each region m of each of the K trees, which can be used to transform the quantity relative to the reference population into the corresponding quantity relative to the book population, as per Equation (6):

$$\hat{\eta}^{B}(X, t) = \eta^{R}(X, t) + \sum_{k=1}^{K} \delta^{(k)}_{m_k(X, t)}$$

where $m_k(X, t)$ indexes the region of the k-th tree containing the point $(X, t)$.

A shift in the target of the estimation boosting procedure must be emphasized; while in Friedman (2020), the goal was to reduce differences between outcomes y and z by producing an updated estimate and calculate the updates only as a consequence, now, the objective is to obtain the updates themselves, in order to transform the quantity of interest of the reference population into that of the book population. It should also be noted that, on the terminological side, “reference” has two meanings in the context, respectively, of two-population models and Estimation Contrast Boosting. In the first case, it indicates the larger population whose mortality is used as a baseline for mortality spread modeling, while, in the second case, it designates the y output variable of the ECB algorithm, in this case $\eta^{B}(X, t)$, which, in the present application, is relative to the book population.

Now, the quantity $\eta^{R}(X, t)$ can be projected to calendar years $t_{n+1}, \dots, t_{n+h}$ using some standard mortality projection model, which is feasible for the reference population, thus obtaining estimates $\hat{\eta}^{R}(X, t)$ for the quantity of interest in future calendar years. Some assumptions on the updates $\delta^{(k)}_{m}$ must then be made, to extend their range of application from calendar years $t_1, \dots, t_n$, on which they have been estimated, to projection calendar years $t_{n+1}, \dots, t_{n+h}$. The extended updates can then be applied to projected estimates in order to obtain boosted estimates for the book population relative to projection calendar years:

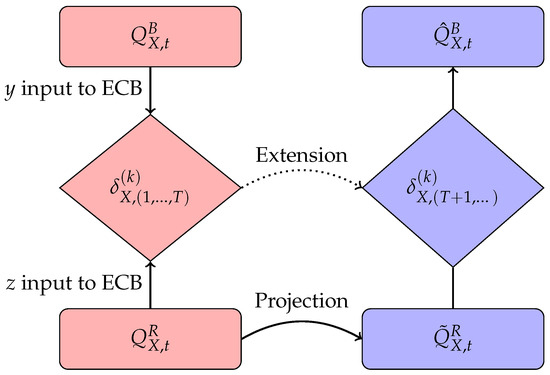

This methodology pertains to two-step models for a small population, with the main difference residing in the quantification of mortality spread, which now relies on a machine-learning technique. It must be emphasized that the book population is not required to be similar to the reference population in terms of geographical, historical, or socio-economical features. In such cases, however, a faster estimation boosting convergence and, more generally, a better model performance can be expected. The procedure just described is summarized in Figure 1, where cells in red refer to calendar years , while cells in blue refer to calendar years In the remainder of this work, the process outlined in Figure 1 will be referred to as “ECB adaptation”.

Figure 1.

The ECB adaptation process for small-population mortality tables.

Before model implementation, some critical issues must be pointed out. First, no mechanism for the extension of updates to projection calendar years is straightforwardly provided. So, not having at our disposal a tool for update projection, a reduced form of the input design matrix for Estimation Contrast Boosting could be used, adopting as predictors just the variables age, cohort, or a combination of the two. This issue will be further addressed in Section 3.1. It should also be noted that using data from the same calendar years for the reference population mortality projection and ECB calibration is not strictly necessary; a longer time series of data could be used for mortality projection, while a shorter time series could be used to calibrate the ECB updates. In fact, not using a mechanism to project the ECB updates means implicitly assuming that the updates themselves, i.e., the transformation function from reference population to book population mortality, do not change with time. This means assuming that relative mortality levels are stable during both ECB calibration and projection time periods, thus suggesting the use of data relative to the most recent years.

Aside from these points of attention, this model is quite flexible, leaving considerable freedom in the choice of the quantity of interest, the projection model, and the structure of the design matrix used as input in the ECB updates’ estimation.

2.3. Data

Data used for numerical evaluation have been provided by the Human Mortality Database (Human Mortality Database 2024). Due to a lack of company-specific data, the Italian male population is used as the reference population, while the male populations of Austria, Slovenia, and Lithuania are used as book populations. The time period taken into consideration is 1950–2019, for ages between 30 and 90. Calendar years from 2000 to 2019 will be used for mortality projection purposes. Data relative to Slovenia and Lithuania are available starting from 1983 and 1959, respectively. The Austrian and Slovenian populations can be considered close, at least in geographical terms, to the reference population. Conversely, the Lithuanian population has been selected in order to investigate the ECB adaptation procedure behavior when the book and reference populations differ substantially.

Using the approach proposed by Keyfitz and Caswell (2005), to have a first qualitative evaluation of similarity between the reference and book populations, Standardized Death Rates (SDR) relative to the period 1950–1999 are calculated separately for the age groups 30–50, 50–70, and 70–90, and then for the whole age range in consideration. For country I and a given age range, using reference population R, SDRs are computed as:

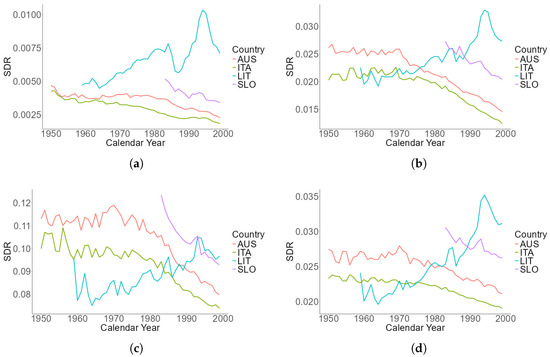

where m denotes the central death rate and E the central exposure-to-risk. The resulting weighted central mortality rates are reported in Figure 2.

Figure 2.

Standardized Death Rates (SDR) by age group. (a) ages 30–50; (b) ages 50–70; (c) ages 70–90; and (d) ages 30–90.
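As an illustration of the standardization idea, the sketch below computes a directly standardized death rate for one calendar year, weighting the central death rates of a country by the exposures of the reference population over the chosen age range. The exact weighting scheme of the formula above is not reproduced here, so the choice of weights, the function name, and the numbers are assumptions.

```python
def standardized_death_rate(central_rates, reference_exposures):
    """Exposure-weighted average of age-specific central death rates."""
    weighted_sum = sum(m * e for m, e in zip(central_rates, reference_exposures))
    return weighted_sum / sum(reference_exposures)


# Two age classes with illustrative rates and reference exposures.
rates_country = [0.002, 0.010]
exposures_reference = [3000.0, 1000.0]
sdr = standardized_death_rate(rates_country, exposures_reference)
```

Using the same reference exposures for every country removes the effect of different age structures, so that the resulting rates can be compared across populations.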

Italian and Austrian mortality present similar trends, with quite a regular behavior, both on separate and joint age groups. Slovenian mortality data, while available only since 1983, display a similar, albeit more irregular, pattern. This means that the average mortality rate varies in a similar way for the three populations. Since the Slovenian population is the least numerous of the three countries, this more erratic behavior of its associated SDR is expected. Conversely, Lithuanian mortality exhibits a completely different pattern. Using the age range 30–90, its SDR is smaller than the Italian SDR until 1975; it then steadily increases until 1995, before eventually decreasing again. For older age groups, represented in (c), the Lithuanian SDR is characterized by high volatility up until the 1970s, then increases until becoming the greatest among the four nations considered.

Following Menzietti et al. (2019), a Relative Mortality Measure (RMM) is also computed as:

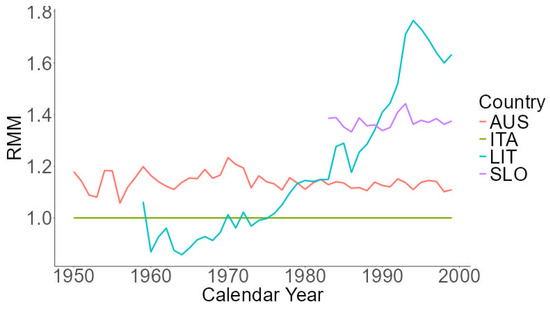

The nearer the RMM value is to 1, the closer the book and reference populations are on average in terms of mortality. As can be seen from Figure 3, the mortality of the reference population tends to be the lowest, in contrast to what would presumably happen using, say, a group of annuitants as the book population.

Figure 3.

Relative Mortality Measure (RMM) relative to ages 30–90.

Again, we can clearly see that Italian, Austrian, and Slovenian mortalities evolve similarly. Lithuanian relative mortality dynamics behave differently: while initially lower than those of the reference population, they become higher and higher from the mid-sixties to the mid-nineties, then decrease. This suggests caution must be exercised in the choice of the time frame for ECB calibration.

3. Results

3.1. Model Calibration

Since the focus of this paper is not the choice of the best mortality model for the reference population but rather the implementation of a machine-learning-based mortality spread estimation technique, the choice of the mortality model for reference population mortality projection has not been investigated in detail. The projection has been performed using a simple Lee–Carter mortality model, with the dynamics of the temporal index described by a random walk with drift, as suggested by Tuljapurkar et al. (2000). The Lee–Carter dynamic mortality model has been implemented using the R package “StMoMo” (Villegas et al. 2018). Following Pitacco (2009), the time period for calibrating mortality projection for the reference population is chosen to be from 1950 to 1999, using ages from 30 to 90 (see also Lee and Miller 2001). Central mortality rates, with corresponding death rates, have been projected to the period 2000–2019. Central mortality rates resulting from Lee–Carter projections can be transformed into one-year death probabilities using the constant force of mortality hypothesis (see, e.g., Pitacco 2009):

$$q_{x,t} = 1 - \exp\left(-m_{x,t}\right)$$
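Under the constant force of mortality hypothesis, the one-year death probability equals one minus the exponential of minus the central mortality rate. A minimal sketch of the conversion and of its inverse follows; the function names are illustrative.

```python
import math


def central_rate_to_death_prob(m):
    """One-year death probability from a central mortality rate (constant force of mortality)."""
    return 1.0 - math.exp(-m)


def death_prob_to_central_rate(q):
    """Inverse transformation, valid for probabilities strictly below 1."""
    return -math.log(1.0 - q)


q_example = central_rate_to_death_prob(0.01)
m_back = death_prob_to_central_rate(q_example)
```

For small rates the two quantities are very close, while the difference becomes material at the oldest ages, where central rates are large.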

Contrast Trees and Estimation Contrast Boosting have been evaluated using the R package “ConTree” developed by Friedman and Narasimhan (2023), using as a response variable one-year death probabilities.

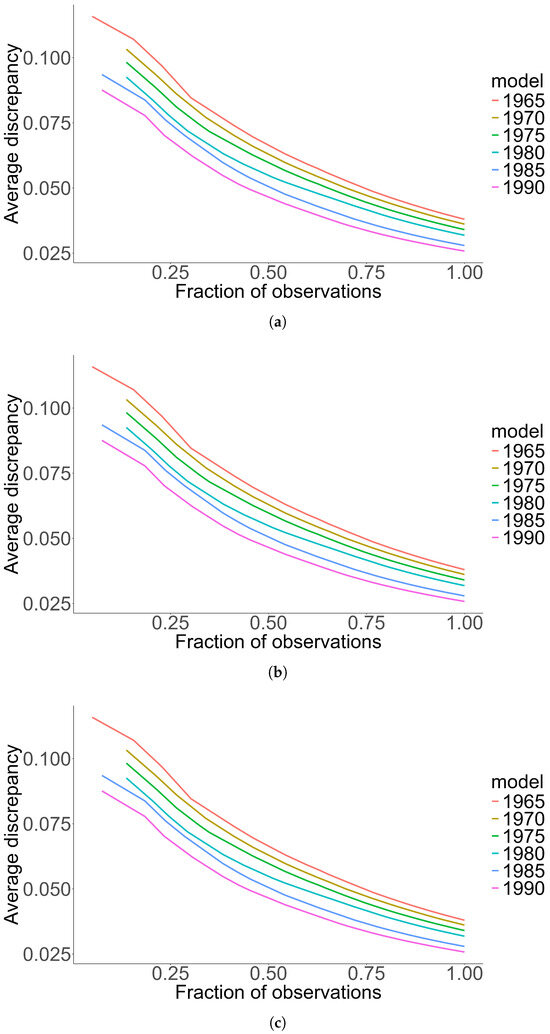

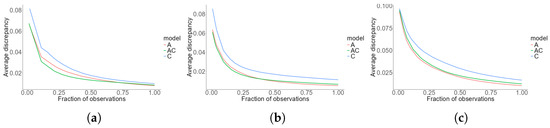

As stated before, the time frame used for ECB adaptation calibration does not have to coincide with that used for reference population mortality projection. To investigate which time period should be used to estimate ECB updates, different Estimation Contrast Boosting adaptations are performed for different starting years, from 1965 and every fifth year until 1999. For Slovenia, data allow for 1983 as the minimum starting date. Each ECB procedure was performed, for the sake of simplicity, using only Age as a predictor (more on that in the following) and adopting central death rates as the quantity of interest; the updates resulting from each procedure were then applied to Italian projected mortality rates, obtaining an estimate for book population mortality rates. A Contrast Tree has been estimated using discrepancy Equation (4) for each ECB output. Data relative to the 2000–2019 time frame are split in half into training and test sets for Contrast-Tree estimation and evaluation purposes, respectively, using the maximum dissimilarity approach (Willett 1999); the corresponding lack-of-fit curves are shown in Figure 4.

Figure 4.

Lack-of-fit curves for Estimation Contrast Boosting calibration period assessment. Plots refer, respectively, to Austrian (a), Slovenian (b) and Lithuanian (c) book populations.

Very different behaviors are apparent from the first and third plots: while for an Austrian book population using a longer data series leads to a more accurate estimation of future book population mortality rates, for a Lithuanian book population, the situation is reversed. Moreover, the relative variation in average discrepancy for a Lithuanian book population (as can be seen in the far right of the graph) is quite impacted by the choice of starting year. Such behavior can be explained in terms of the Relative Mortality Measures reported in Figure 3: Austrian mortality dynamics are quite aligned with the Italian dynamics, so using a longer data series allows for a more robust estimation of ECB updates, which do not presumably change too much in the period 1965–1999 (please note that the calendar years 1950–1964, where Austrian mortality exhibits greater volatility in relative mortality, were not used in the ECB estimation). Conversely, Lithuanian relative mortality evolves significantly in the 1959–1999 time period and the assumption of time-independent ECB updates should be called into question. The results for a Slovenian book population constitute a middle ground: ECB updates produced using data from just 1990 onwards yield worse results in comparison to using 1980 onwards, across all input space. This suggests that, due to the irregularity in the Slovenian mortality pattern, using only the more recent calendar years to estimate the updates could lead to unreliable results.

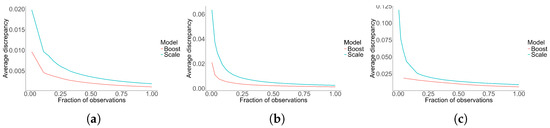

In order to assess the most sensible choice for ECB predictors, and given the absence of an obvious choice for a projection mechanism for the updates, it should be observed that using only the Age and Cohort variables in the ECB modeling does not require any form of time-series extrapolation, as stated by Alai and Sherris (2014). Therefore, for each book population, three ECB adaptations were performed, using as predictor variables Age, Cohort, or both. As before, the quantity of interest is the central mortality rate. The updates have been calibrated, for each book population, on the minimum discrepancy time interval identified previously. The resulting mortality rates for the book populations have been compared to the observed mortality rates using lack-of-fit curves, average discrepancy, Root Mean Square Error (RMSE), and Mean Absolute Percentage Error (MAPE). Data for Contrast Tree estimation have been split into training and test sets using the maximum dissimilarity approach, while RMSE and MAPE have been calculated on the whole projection period. The results are shown in Figure 5 and in Table 1.

Figure 5.

Lack-of-fit curves for Estimation Contrast Boosting predictor assessment, relative to the three different design matrices taken into consideration. Plots refer, respectively, to Austrian (a), Slovenian (b) and Lithuanian (c) book populations.

Table 1.

Performance statistics for different design matrix structures for ECB adaptation.

For all three book populations, similar conclusions can be drawn: Cohort-based ECB features the highest discrepancy across the whole of the input space. For the Slovenian and Austrian populations, Age–Cohort-based ECB produces the best performance in high-discrepancy regions, but when considering the entirety of the input space, Age-based ECB seems to perform slightly better. As for the Lithuanian book population, Age-based ECB seems to consistently produce more accurate outputs. Note the different scale on the ordinate axis for Lithuanian discrepancy. The performance statistics reported in Table 1 are consistent with the conclusions drawn from the LOF curves: with the exception of RMSE for Cohort-based ECB for the Slovenian and Lithuanian book populations, Age-based Estimation Contrast Boosting seems to consistently yield better performance.

3.2. Generalization of Italian Actuarial Practice Using ECB Adaptation

The procedure presented in Section 2.2 has been further specified and adapted in order to build a generalized machine-learning-based version of the table scaling technique. To mimic the procedure, one-year death probabilities have been adopted as the quantity of interest. Because of the additive nature of the updates and to replicate the multiplicative scaling used to adapt national mortality tables, Estimation Contrast Boosting has been applied to the natural logarithm of such probabilities. The updates have been estimated using data relative to the time period 1990–1999, notwithstanding the results in Section 3.1, to reproduce the length of the time series usually available to an insurance company. The ECB updates have been extended to the calendar years 2000–2019 using Age-based Estimation Contrast Boosting.
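The link between additive updates on the log scale and multiplicative table scaling can be illustrated with a minimal sketch. The age classes, update values, and reference probabilities below are hypothetical and are not taken from the fitted model; the function name `adapt_log_scale` is likewise illustrative, and the sketch collapses the boosting iterations into a single total update per age class.

```python
import math


def adapt_log_scale(q_ref, updates):
    """Apply additive updates to log death probabilities.

    q_ref   : dict mapping age -> reference one-year death probability
    updates : list of (age_from, age_to, delta) with delta on the log scale
    Ages not covered by any class are left unchanged (delta = 0).
    """
    adapted = {}
    for age, q in q_ref.items():
        delta = next((d for lo, hi, d in updates if lo <= age <= hi), 0.0)
        # log q_book = log q_ref + delta  <=>  q_book = q_ref * exp(delta)
        adapted[age] = min(1.0, math.exp(math.log(q) + delta))
    return adapted


# Hypothetical reference probabilities and age-class updates.
q_ref = {40: 0.0010, 60: 0.0080, 80: 0.0600}
updates = [
    (30, 49, math.log(0.70)),  # equivalent to a 0.70 multiplicative factor
    (50, 69, math.log(0.85)),
    (70, 90, math.log(0.95)),
]
q_book = adapt_log_scale(q_ref, updates)
for age in sorted(q_book):
    print(age, round(q_book[age], 6), round(q_book[age] / q_ref[age], 2))
```

Under these assumptions, traditional table scaling corresponds to the special case of a single age class covering all ages, so that one coefficient `exp(delta)` rescales the whole reference table.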

Performance has been assessed by comparing adapted death probabilities for the book population, observed death probabilities, and rescaled death probabilities by means of Contrast Trees with discrepancy Equation (4), RMSE, and MAPE. Data used to estimate and evaluate Contrast Trees are split in half using the maximum dissimilarity approach, while RMSE and MAPE have been calculated on the whole projection period.
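As a rough illustration of a maximum dissimilarity split, the sketch below implements a greedy MaxMin selection in the spirit of the dissimilarity-based algorithms reviewed by Willett (1999). It is an assumption that this variant resembles the one used here: the seed point, the Euclidean distance, the absence of feature scaling, and the function name are all illustrative choices.

```python
import math


def max_dissimilarity_split(points, n_select, seed_index=0):
    """Greedy MaxMin selection of n_select points.

    Starting from a seed, repeatedly pick the point whose minimum
    distance to the already selected set is largest. Returns the
    indices of the selected points and of the remaining points.
    """
    if not 1 <= n_select <= len(points):
        raise ValueError("n_select must be between 1 and len(points)")
    selected = [seed_index]
    chosen = {seed_index}
    # Minimum distance from every point to the current selected set.
    min_dist = [math.dist(p, points[seed_index]) for p in points]
    while len(selected) < n_select:
        candidates = [i for i in range(len(points)) if i not in chosen]
        best = max(candidates, key=lambda i: min_dist[i])
        selected.append(best)
        chosen.add(best)
        for i, p in enumerate(points):
            min_dist[i] = min(min_dist[i], math.dist(p, points[best]))
    remaining = [i for i in range(len(points)) if i not in chosen]
    return selected, remaining


# Hypothetical (age, calendar year) cells; half go to each set.
cells = [(30, 2000), (31, 2000), (90, 2019), (60, 2010)]
train_idx, test_idx = max_dissimilarity_split(cells, n_select=2)
print(train_idx, test_idx)
```

In practice the predictors would typically be rescaled before computing distances, since age and calendar year span different ranges.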

Lack-of-fit curves relative to the three book populations are shown in Figure 6. In all three cases, the discrepancy resulting from the ECB procedure is lower than that resulting from mortality scaling across the whole input space. However, the scale of discrepancy is quite different across the book populations. The LOF curve for the ECB procedure presents a flatter profile, whereas the LOF curve for mortality scaling presents very high values of discrepancy at low values of observations. This means that accuracy issues relative to mortality scaling occur especially in high-discrepancy regions. Therefore, the flexibility of the ECB procedure allows for separate updates for different age classes. While it is possible to extend mortality adaptation by estimating different coefficients on different age classes, in the case of ECB such classes are determined directly by the procedure and require neither further input nor expert judgement. It should also be recalled that the assumption of relative mortality stability between the reference and book populations is not verified for Lithuania, so results relative to the Lithuanian book population must be taken with caution.
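To make the reading of these curves concrete, the sketch below builds a lack-of-fit curve from a set of Contrast Tree regions, following the construction described by Friedman (2020) as understood here: regions are ordered by decreasing discrepancy, the abscissa is the cumulative fraction of observations, and the ordinate is the observation-weighted average discrepancy of the regions included so far. The region counts and discrepancy values are hypothetical, and the function is not part of the conTree package.

```python
def lack_of_fit_curve(regions):
    """Compute lack-of-fit curve points from terminal regions.

    regions : list of (n_observations, discrepancy) pairs
    Returns a list of (cumulative fraction of observations,
    weighted average discrepancy) pairs, starting from the
    highest-discrepancy region.
    """
    ordered = sorted(regions, key=lambda r: r[1], reverse=True)
    total = sum(n for n, _ in ordered)
    curve = []
    cum_n = 0
    cum_weighted = 0.0
    for n, disc in ordered:
        cum_n += n
        cum_weighted += n * disc
        curve.append((cum_n / total, cum_weighted / cum_n))
    return curve


# Hypothetical regions: one small high-discrepancy region, two larger ones.
regions = [(300, 0.10), (100, 0.50), (600, 0.02)]
for fraction, avg_disc in lack_of_fit_curve(regions):
    print(round(fraction, 2), round(avg_disc, 3))
```

Under this construction, the left end of the curve reflects the worst-fitting region alone, while the right end equals the average discrepancy over the whole input space, so a flat profile indicates that no region fits markedly worse than the others.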

Figure 6.

LOF curves comparing mortality scaling and ECB mortality boosting. Plots refer, respectively, to Austrian (a), Slovenian (b) and Lithuanian (c) book populations.

Numerical indicators in Table 2 are consistent with the LOF curves, although they provide less detail: ECB adaptation features lower average discrepancy and RMSE in all three cases, while MAPE is comparable for the Austrian and Slovenian book populations. It can be noted, in line with Figure 6, that the Austrian book population is characterized by the lowest values of the inaccuracy indexes, followed by Slovenia and finally, Lithuania.

Table 2.

Performance statistics for mortality scaling and ECB adaptation.

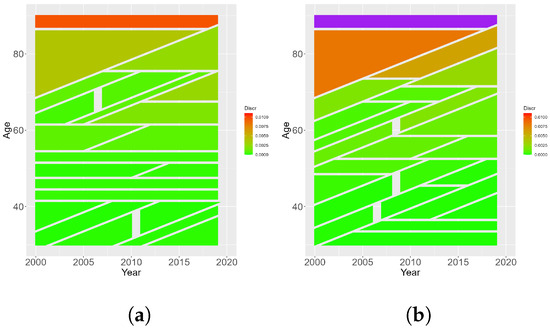

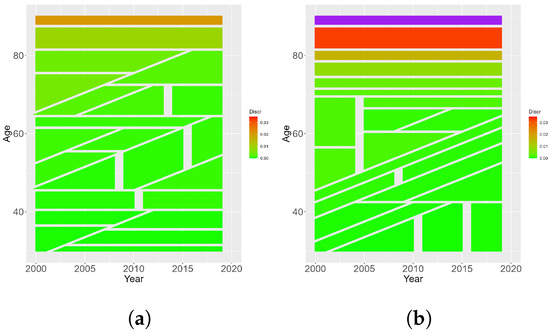

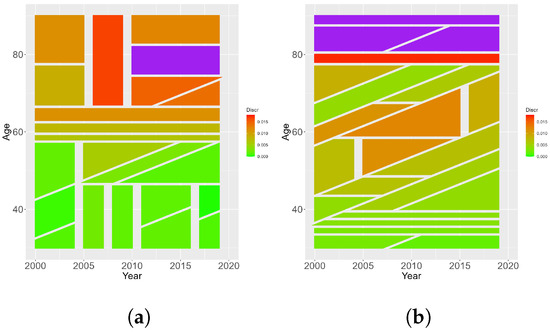

As already observed, model performance differs most in the high-discrepancy regions. Since the input space used in Contrast Tree estimation is quite simple, consisting only of age, calendar year, and cohort predictors, we can represent the pattern of discrepancy on the input space for ages 30–90 and calendar years 2000–2019, easily identifying such regions. The results are presented in Figure 7, Figure 8 and Figure 9 for the Austrian, Slovenian, and Lithuanian book populations, respectively. Green regions have lower discrepancy values, while red regions have higher discrepancies. For the sake of image readability, regions whose associated discrepancy value exceeds the maximum on the scale to the right are colored purple.

Figure 7.

Regions uncovered by the Contrast Tree on the input space relative to Austrian book population. (a) shows discrepancy for ECB mortality adaptation, while (b) shows discrepancy for mortality scaling.

Figure 8.

Regions uncovered by the Contrast Tree on the input space relative to Slovenian book population. (a) shows discrepancy for ECB mortality adaptation, while (b) shows discrepancy for mortality scaling.

Figure 9.

Regions uncovered by the Contrast Tree on the input space relative to Lithuanian book population. (a) shows discrepancy for ECB mortality adaptation, while (b) shows discrepancy for mortality scaling.

For Austrian males, both models are quite well-behaved (i.e., produce relatively low values for discrepancy) until age 65. For older ages, the accuracy of both models starts to deteriorate, but this effect is much more noticeable for table scaling and particularly sizeable at very old ages, regardless of calendar year. For the Lithuanian book population, this pattern is much less evident, and discrepancy is more evenly distributed in the input space. A better performance for ECB adaptation is apparent across the whole of the input space.

Similar considerations can be applied to the Slovenian male population. Please note that the almost constant value of discrepancy across the whole input space corresponds directly with the flat profiles of LOF curves reported in Figure 6b.

For the Lithuanian book population, the pattern described for Austria and Slovenia is less evident. Although high discrepancy tends to be more evenly distributed across the input space, older ages still tend to be associated with worse model performance.

4. Discussion

In this paper, using the tools provided by Contrast Trees, a machine-learning approach to mortality spread estimation in the context of two-population models has been constructed. The proposed model computes the mortality spread using Estimation Contrast Boosting (ECB) and produces book population mortality tables by applying ECB updates to the mortality-related quantities of a much larger reference population, whose mortality can be projected using well-attested models. This ECB-based model provides a good degree of flexibility, allowing for a variety of mortality-related quantities of interest, dynamic mortality models for reference population projection, and predictor structures for ECB update estimation. While some caution is advised when assessing the relative mortality structure of the two populations taken into account and when extending the ECB updates to the mortality projection time frame, the model can be quite easily implemented.

Finally, taking into account Italian actuarial practice, ECB mortality adaptation has been used to generalize the mortality scaling usually used within insurance companies for estimating a realistic demographic technical basis. The new procedure leads to more accurate estimation results compared to those obtained with the traditional technique and also overcomes its main limitation of applying the same adaptation coefficient to all ages. While these results seem promising, further investigation is needed, since the ECB methodology has not been tested on company data, the Relative Mortality Measure of which could behave differently with respect to the results in Section 2.3.

Nonetheless, the results seem promising. The main merits of the model reside in its ease of implementation, making use of data readily available to life insurers, and its preservation of the main advantage of table scaling, namely, its interpretation in terms of multiplicative corrections to national death tables. This has great importance in the regulatory and decision-making context, allowing analysis of mortality adjustments separately by age and cohort classes without having to resort to black-box machine-learning models. Therefore, decision-makers such as insurers and pension providers can make informed decisions and rely on models that potentially perform better than traditional models in terms of predictive power, without having to sacrifice explanatory power.

Funding

This research received no external funding.

Data Availability Statement

Data used in this study are available at www.mortality.org, accessed on 11 October 2023.

Acknowledgments

This work is based on part of the author’s Ph.D. thesis, defended at the Department of Statistical Sciences, Sapienza University of Rome, Italy. The author would like to thank Prof. Susanna Levantesi, under whose supervision the thesis was developed and written. The views expressed here, as well as any inaccuracies and mistakes, are, of course, the author’s sole responsibility.

Conflicts of Interest

The author declares no conflicts of interest.

Note

1. Provvedimento ISVAP of 29 January 2010 N. 2771, Provvedimento ISVAP of 17 November 2010 N. 28452, Provvedimento IVASS of 6 December 2016 N. 53, and Provvedimento IVASS of 14 February 2018 N. 68.

References

- Ahcan, Ales, Darko Medved, Annamaria Olivieri, and Ermanno Pitacco. 2014. Forecasting mortality for small populations by mixing mortality data. Insurance: Mathematics and Economics 54: 12–27.

- Alai, Daniel H., and Michael Sherris. 2014. Rethinking age-period-cohort mortality trend models. Scandinavian Actuarial Journal 2014: 208–27.

- Booth, Heather, Rob J. Hyndman, Leonie Tickle, and Piet de Jong. 2006. Lee-Carter mortality forecasting: A multi-country comparison of variants and extensions. Demographic Research 15: 289–310.

- Breiman, Leo, Jerome Friedman, Richard A. Olshen, and Charles J. Stone. 2017. Classification and Regression Trees. New York: Chapman and Hall/CRC.

- Butt, Zoltan, and Steven Haberman. 2009. ilc: A Collection of R Functions for Fitting a Class of Lee–Carter Mortality Models Using Iterative Fitting Algorithms. Available online: https://openaccess.city.ac.uk/id/eprint/2321/ (accessed on 21 August 2024).

- Cairns, Andrew J. G., David Blake, and Kevin Dowd. 2006. A Two-Factor Model for Stochastic Mortality with Parameter Uncertainty: Theory and Calibration. Journal of Risk and Insurance 73: 687–718.

- Cairns, Andrew J. G., David Blake, Kevin Dowd, Guy D. Coughlan, and Marwa Khalaf-Allah. 2011. Bayesian Stochastic Mortality Modelling for Two Populations. ASTIN Bulletin: The Journal of the IAA 41: 29–59.

- Chen, Liang, Andrew J. G. Cairns, and Torsten Kleinow. 2017. Small population bias and sampling effects in stochastic mortality modelling. European Actuarial Journal 7: 193–230.

- Friedman, Jerome H. 2001. Greedy function approximation: A gradient boosting machine. The Annals of Statistics 29: 1189–232.

- Friedman, Jerome H. 2020. Contrast trees and distribution boosting. Proceedings of the National Academy of Sciences 117: 21175–84.

- Friedman, Jerome H., and Balasubramanian Narasimhan. 2023. conTree: Contrast Trees and Boosting. R Package Version 0.3-1. Available online: https://CRAN.R-project.org/package=conTree (accessed on 21 August 2024).

- Human Mortality Database. 2024. University of California, Berkeley (USA); Max Planck Institute for Demographic Research (Germany); French Institute for Demographic Studies (France). Available online: https://www.mortality.org (accessed on 21 August 2024).

- Jarner, Søren F., and Snorre Jallbjørn. 2022. The SAINT model: A decade later. ASTIN Bulletin: The Journal of the IAA 52: 483–517.

- Jarner, Søren Fiig, and Esben Masotti Kryger. 2011. Modelling Adult Mortality in Small Populations: The SAINT Model. ASTIN Bulletin: The Journal of the IAA 41: 377–418.

- Keyfitz, Nathan, and Hal Caswell. 2005. Applied Mathematical Demography. Statistics for Biology and Health. New York: Springer.

- Lee, Ronald, and Timothy Miller. 2001. Evaluating the performance of the Lee-Carter method for forecasting mortality. Demography 38: 537–49.

- Lee, Ronald D., and Lawrence R. Carter. 1992. Modeling and Forecasting U.S. Mortality. Journal of the American Statistical Association 87: 659–71.

- Levantesi, Susanna, Matteo Lizzi, and Andrea Nigri. 2024. Enhancing diagnostic of stochastic mortality models leveraging contrast trees: An application on Italian data. Quality & Quantity 58: 1565–81.

- Li, Johnny Siu-Hang, Rui Zhou, and Mary Hardy. 2015. A step-by-step guide to building two-population stochastic mortality models. Insurance: Mathematics and Economics 63: 121–34.

- Menzietti, Massimiliano, Maria Francesca Morabito, and Manuela Stranges. 2019. Mortality Projections for Small Populations: An Application to the Maltese Elderly. Risks 7: 35.

- Pitacco, Ermanno. 2009. Modelling Longevity Dynamics for Pensions and Annuity Business. Oxford: OUP.

- Russolillo, Maria, Giuseppe Giordano, and Steven Haberman. 2011. Extending the Lee–Carter model: A three-way decomposition. Scandinavian Actuarial Journal 2011: 96–117.

- Tuljapurkar, Shripad, Nan Li, and Carl Boe. 2000. A universal pattern of mortality decline in the G7 countries. Nature 405: 789–92.

- Villegas, Andrés M., Steven Haberman, Vladimir K. Kaishev, and Pietro Millossovich. 2017. A comparative study of two population models for the assessment of basis risk in longevity hedges. ASTIN Bulletin: The Journal of the IAA 47: 631–79.

- Villegas, Andrés M., Vladimir K. Kaishev, and Pietro Millossovich. 2018. StMoMo: An R Package for Stochastic Mortality Modeling. Journal of Statistical Software 84: 1–38.

- Wan, Cheng, and Ljudmila Bertschi. 2015. Swiss coherent mortality model as a basis for developing longevity de-risking solutions for Swiss pension funds: A practical approach. Insurance: Mathematics and Economics 63: 66–75.

- Willett, Peter. 1999. Dissimilarity-Based Algorithms for Selecting Structurally Diverse Sets of Compounds. Journal of Computational Biology 6: 447–57.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).