Abstract

We propose an accurate data-driven numerical scheme to solve stochastic differential equations (SDEs) by taking large time steps. The SDE discretization is built up by means of the polynomial chaos expansion method, on the basis of accurately determined stochastic collocation (SC) points. By employing an artificial neural network to learn these SC points, we can perform Monte Carlo simulations with large time steps. Basic error analysis indicates that this data-driven scheme results in accurate SDE solutions in the sense of strong convergence, provided the learning methodology is robust and accurate. With a method variant called the compression–decompression collocation and interpolation technique, we can drastically reduce the number of neural network functions that have to be learned, so that computational speed is enhanced. As a proof of concept, 1D numerical experiments confirm a small strong convergence error when using large time steps, and the novel scheme outperforms some classical numerical SDE discretizations. Some applications, here in financial option valuation, are also presented.

1. Introduction

The highly successful deep learning paradigm (LeCun et al. 2015) has received a lot of attention in science and engineering. Within numerical mathematics, the machine learning methodology has, for example, successfully entered the field of numerically solving partial differential equations (PDEs) (Bar-Sinai et al. 2019; Beck et al. 2018; Han et al. 2018; Maziar et al. 2019; Sirignano and Spiliopoulos 2018; Xie et al. 2018). The aim of machine learning there is either to speed up the solution process or to solve high-dimensional problems that are not easily handled by traditional numerical methods.

In this paper, we develop a highly accurate numerical discretization scheme for scalar stochastic differential equations (SDEs), which is based on taking possibly large discrete time steps. We “learn” to take large time steps, with the help of the stochastic collocation Monte Carlo sampler (SCMC) proposed by Grzelak et al. (2019), and by using an artificial neural network (ANN), within the classical supervised learning context.

SDEs are widely used to describe uncertain phenomena in physics, finance and epidemics, amongst others, as a means to model and quantify uncertainty. The corresponding solutions are stochastic processes. Numerical approximation of the solution to an SDE is standard practice, as an analytic solution is typically not available. The most commonly used technique to solve SDEs is Monte Carlo (MC) simulation, for which the SDE first needs to be discretized. Quite a few applications could benefit from an accurate and efficient numerical method based on a large time step discretization (Li et al. 2021), for example, in finance, the valuation of path-dependent financial derivatives or financial risk management where counterparty credit risk plays a role.

Basically, there are two ways to measure the convergence rate of discrete solutions to SDEs: by the approximation of the sample path or by the approximation of the corresponding distribution. Accordingly, strong and weak convergence of numerical SDE solutions have been defined, respectively (see Platen 1999). Weak convergence, the convergence in distributional sense, is often addressed in the literature. Moment matching, for example, is a basic technique used to improve weak convergence. Strong, pathwise convergence is particularly challenging and requires accurate conditional distributions. There are natural approaches to improve strong convergence properties, e.g., adding higher-order terms or using finer time grids. However, these are nontrivial and costly, especially when considering multi-dimensional SDEs.

We aim to develop highly accurate numerical schemes by means of deep learning, for which the strong error of the discretization does not depend on the size of the simulation time step. For this, we employ the SCMC method as an efficient approach for approximating (conditional) distribution functions. The distribution function of interest is expanded as a polynomial in terms of a random variable that is cheap to sample from; the expansion is fixed at given collocation points, and interpolation takes place between these points. The resulting large time step discretization, in which the SCMC methodology is combined with deep learning, is called the Seven-League scheme¹ here, and we abbreviate it as the 7L scheme.

There are different reasons to learn stochastic collocation points instead of the sample paths directly. Stochastic collocation points have a specific physical meaning, which makes the data-driven scheme explainable. Monte Carlo sample paths are random, while collocation points are path independent and deterministic (i.e., representing key features of a probability distribution), which simplifies the learning process when using neural networks. Unlike the SCMC method, which provides accurate Monte Carlo samples given a constant time step and for one specific instance of the SDE parameters, the 7L methodology enables us to generate samples for a wide range of time steps and for many different instances of the model parameters (i.e., for a family of SDEs), by means of a neural network to learn the evolution of the collocation points over time for many different model parameters.

The 7L scheme is composed of two separate phases under the framework of supervised learning, i.e., an offline (training) phase and an online (prediction) phase. The training phase, which usually requires heavy computation and many datasets, is done only once and offline. The prediction phase, which is a computationally cheap and highly efficient process, can be performed in an online fashion.

This paper serves to show that the proposed methodology performs very well for scalar SDEs; this work can thus be seen as a proof of concept. Higher-dimensional extensions of the 7L scheme will be presented in forthcoming work. However, because this methodology is “grid-based”, we will not reach extremely high dimensions without additional enhancements. This is left for future work.

The remainder of this paper is organized as follows. In Section 2, SDEs, their discretization, stochastic collocation, and the connection between SDE discretizations and the SCMC method are introduced. In Section 3, the data-driven methodology to address large time step simulation of SDEs, i.e., the 7L scheme, is explained. ANNs will be used as function approximators to learn the stochastic (conditional) collocation points; details of the ANNs are given in Section 3.3. In Section 4, we introduce a compression–decompression technique to accelerate the computation. This efficient variant is named the 7L-CDC scheme (i.e., seven-league compression–decompression scheme). Section 5 presents numerical experiments to show the performance of the proposed approach, and the corresponding error is analyzed. Section 6 concludes.

2. Stochastic Differential Equations and Stochastic Collocation

We first describe the basic, well-known SDE setting, and explain our notation.

2.1. SDE Basics

We work with a real-valued random variable $Y(t)$, defined on the probability space $(\Omega, \Sigma, \mathbb{P})$ with filtration $\mathcal{F}_t$, sample space $\Omega$, $\sigma$-algebra $\Sigma$ and probability measure $\mathbb{P}$. For the time evolution of $Y(t)$, consider the generic scalar Itô SDE,

$$\mathrm{d}Y(t) = a(t, Y(t); \theta)\,\mathrm{d}t + b(t, Y(t); \theta)\,\mathrm{d}W(t), \qquad Y(t_0) = y_0, \tag{1}$$

with the drift term $a(t, Y(t); \theta)$, the diffusion term $b(t, Y(t); \theta)$, model parameters $\theta$, Wiener process $W(t)$, and given initial value $y_0$. When the drift and diffusion terms satisfy certain regularity conditions (e.g., global Lipschitz continuity (Karatzas and Shreve 1988, p. 289)), the existence and uniqueness of the solution of (1) are guaranteed. The cumulative distribution function of $Y(t)$, $F_{Y(t)}(y)$, is available, and the corresponding density function, evolving over time, is described by the Fokker–Planck equation (Risken 1984).

With a discretization of the time interval $[0, T]$, with equidistant time step $\Delta t = t_{i+1} - t_i$, the discrete random variable at time $t_i$ is denoted by $Y_i$. Traditional numerical schemes have been designed based on Itô’s lemma, in a similar fashion as the Taylor expansion is used to discretize deterministic ODEs and PDEs. The basic discretization, for each Monte Carlo path, is the Euler–Maruyama scheme (Platen 1999), which reads

$$y_{i+1} = y_i + a(t_i, y_i; \theta)\,\Delta t + b(t_i, y_i; \theta)\,\sqrt{\Delta t}\,x_i, \tag{2}$$

where $y_i$ is a realization (i.e., a number) of the random variable $Y_i$, which represents the numerical approximation to the exact solution $Y(t_i)$ at time point $t_i$, and the realization $x_i$ is drawn from the random variable $X$, which here follows the standard normal distribution $\mathcal{N}(0, 1)$. Moreover, $y(t_i)$ (a number) will be used as the notation for a realization of $Y(t_i)$.

In addition, the Milstein discretization (Milstein 1975) reads

$$y_{i+1} = y_i + a(t_i, y_i; \theta)\,\Delta t + b(t_i, y_i; \theta)\,\sqrt{\Delta t}\,x_i + \tfrac{1}{2}\, b(t_i, y_i; \theta)\, b'(t_i, y_i; \theta)\,\Delta t\,\big(x_i^2 - 1\big), \tag{3}$$

where $b'(t, y; \theta)$ represents the derivative of $b(t, y; \theta)$ with respect to $y$. When the drift and diffusion terms are independent of time $t$, the SDE is called time-invariant.

Two error convergence criteria are commonly used to measure the SDE discretization accuracy; that is, the convergence in the weak and strong sense. Strong convergence, which is of our interest here, is defined as follows.

Definition 1.

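Let $Y(t_i)$ be the exact solution of an SDE at time $t_i$. Its discrete approximation $Y_i$, with time step $\Delta t$, converges in the strong sense with order $\beta > 0$ if there exists a constant $K$, independent of $\Delta t$, such that

$$\mathbb{E}\big[\,|Y_i - Y(t_i)|\,\big] \le K\,\Delta t^{\beta} \tag{4}$$

for all sufficiently small $\Delta t$.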

It is well known that the Euler–Maruyama scheme (2) has strong convergence order $\beta = 0.5$, while the Milstein scheme (3) has $\beta = 1.0$. When deriving higher-order schemes for SDEs, the rules of Itô calculus must be respected (Platen 1999). As a result, there are eight terms in a Taylor SDE scheme with $\beta = 1.5$, and twelve with $\beta = 2.0$, so the computational complexity increases: higher-order schemes are involved and somewhat expensive. Convergence of the numerical solution for $\Delta t \to 0$ is guaranteed, but the computational costs increase significantly to achieve accurate solutions.
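The difference in strong order can be checked numerically. The sketch below is an illustration of our own, not one of the paper's experiments: it assumes geometric Brownian motion (GBM), i.e., $a = \mu y$ and $b = \sigma y$ with illustrative parameter values, and compares both schemes pathwise with the exact GBM solution driven by the same Brownian increments.

```python
import numpy as np

def strong_errors(n_steps, n_paths=20_000, y0=1.0, mu=0.05, sigma=0.4, T=1.0, seed=0):
    """Strong errors E|Y_N - Y(T)| of Euler-Maruyama (2) and Milstein (3) for GBM."""
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    dW = np.sqrt(dt) * rng.standard_normal((n_paths, n_steps))  # sqrt(dt) * x_i
    y_em = np.full(n_paths, y0)
    y_mil = np.full(n_paths, y0)
    for i in range(n_steps):
        # GBM: a(t, y) = mu * y, b(t, y) = sigma * y, hence b'(t, y) = sigma
        y_em = y_em + mu * y_em * dt + sigma * y_em * dW[:, i]
        y_mil = (y_mil + mu * y_mil * dt + sigma * y_mil * dW[:, i]
                 + 0.5 * sigma**2 * y_mil * (dW[:, i] ** 2 - dt))
    # exact solution driven by the same Brownian increments (pathwise comparison)
    y_exact = y0 * np.exp((mu - 0.5 * sigma**2) * T + sigma * dW.sum(axis=1))
    return np.mean(np.abs(y_em - y_exact)), np.mean(np.abs(y_mil - y_exact))

for n_steps in (8, 16, 32, 64):
    e_em, e_mil = strong_errors(n_steps)
    print(f"dt = 1/{n_steps:<3d} Euler-Maruyama: {e_em:.2e}  Milstein: {e_mil:.2e}")
```

Halving the step should roughly reduce the Euler–Maruyama error by $\sqrt{2}$ and the Milstein error by $2$.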

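The generic form of the above-mentioned numerical schemes to solve the Itô SDE is as follows,

$$y_{i+1} = \alpha_0 + \alpha_1 x_i + \alpha_2 x_i^2 + \dots + \alpha_{m-1} x_i^{m-1} = \sum_{k=0}^{m-1} \alpha_k\, x_i^k, \tag{5}$$

where $m$ represents the number of polynomial terms, and the coefficients $\alpha_k \equiv \alpha_k(t_i, y_i, \Delta t; \theta)$ are pre-defined and equation-dependent. For notational convenience, let $a_i \equiv a(t_i, y_i; \theta)$, $b_i \equiv b(t_i, y_i; \theta)$ and $b_i' \equiv b'(t_i, y_i; \theta)$. For example, for the Euler–Maruyama scheme (2), with $m = 2$, we have

$$\alpha_0 = y_i + a_i\,\Delta t, \qquad \alpha_1 = b_i\sqrt{\Delta t}, \tag{6}$$

while for the Milstein scheme, with $m = 3$, it follows that

$$\alpha_0 = y_i + a_i\,\Delta t - \tfrac{1}{2}\, b_i b_i'\,\Delta t, \qquad \alpha_1 = b_i\sqrt{\Delta t}, \qquad \alpha_2 = \tfrac{1}{2}\, b_i b_i'\,\Delta t. \tag{7}$$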

With these explicit coefficients we arrive at the probability distribution of the random variable,

These discrete SDE schemes are based on a series of transformations of the previous realization to approximate the conditional distribution,

A numerical scheme is thus essentially based on the conditional sampling of $Y_{i+1} \mid Y_i = y_i$. The Euler–Maruyama scheme draws from a normal distribution, with a specific mean and variance, to approximate the distribution at the next time point, while the Milstein scheme combines a normal and a chi-squared distribution. The stochastic collocation methods can be derived in a similar way.
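The polynomial form of the one-step schemes can be made concrete in a few lines. This sketch (function names are hypothetical) builds the coefficients $\alpha_k$ of the Euler–Maruyama and Milstein schemes for an assumed GBM example and evaluates $\sum_k \alpha_k x_i^k$; the quadratic Milstein term $\alpha_2 x_i^2$ is where the scaled chi-squared component enters.

```python
import numpy as np
from numpy.polynomial import polynomial as P

def scheme_coefficients(y_i, a_i, b_i, db_i, dt, scheme="euler"):
    """Coefficients alpha_k (increasing degree) of y_{i+1} = sum_k alpha_k x_i^k."""
    if scheme == "euler":                        # m = 2
        return np.array([y_i + a_i * dt, b_i * np.sqrt(dt)])
    if scheme == "milstein":                     # m = 3; alpha_2 * X^2 is a scaled chi^2(1)
        c = 0.5 * b_i * db_i * dt
        return np.array([y_i + a_i * dt - c, b_i * np.sqrt(dt), c])
    raise ValueError(f"unknown scheme: {scheme}")

# GBM example (assumption): a = mu * y, b = sigma * y, b' = sigma
mu, sigma, y_i, dt = 0.05, 0.4, 1.0, 0.25
x = np.random.default_rng(0).standard_normal(5)  # realizations x_i of X ~ N(0, 1)

alpha_mil = scheme_coefficients(y_i, mu * y_i, sigma * y_i, sigma, dt, "milstein")
y_next = P.polyval(x, alpha_mil)                 # alpha_0 + alpha_1 x + alpha_2 x^2
y_direct = (y_i + mu * y_i * dt + sigma * y_i * np.sqrt(dt) * x
            + 0.5 * sigma**2 * y_i * dt * (x**2 - 1))   # Milstein step written out
```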

2.2. Stochastic Collocation Method

Let us assume two random variables, Y and X, where the latter one is cheaper to sample from (e.g., X is a Gaussian random variable). These two scalar random variables are connected via

$$F_Y(Y) \overset{d}{=} U \overset{d}{=} F_X(X), \tag{10}$$

where $U \sim \mathcal{U}([0, 1])$ is a uniformly distributed random variable, and $F_Y(\cdot)$ and $F_X(\cdot)$ are cumulative distribution functions (CDFs). Note that $F_Y(Y)$ and $F_X(X)$ are random variables following the same uniform distribution. $F_Y$ and $F_X$ are assumed to be strictly increasing functions, so that the following inversion holds true,

$$y_n = F_Y^{-1}\big(F_X(x_n)\big) =: g(x_n), \tag{11}$$

where $y_n$ and $x_n$ are samples (numbers) from $Y$ and $X$, respectively. The mapping function $g(\cdot) = F_Y^{-1}(F_X(\cdot))$ connects the two random variables and guarantees that $F_X(x_n)$ equals $F_Y(y_n)$, in distributional sense and also element-wise. The mapping function should be approximated, i.e., $g(x) \approx g_m(x)$, by a function that is computationally cheap. When the function $g_m(\cdot)$ is available, we may generate “expensive” samples from $Y$ by using the cheaper random samples from $X$.

The stochastic collocation Monte Carlo method (SCMC) developed in Grzelak et al. (2019) aims to find an accurate mapping function $g_m(\cdot)$ in an efficient way. The basic idea is to employ Equation (11) only at specific collocation points and to approximate the function $g(\cdot)$ by a suitable monotonic interpolation between these points. This procedure, see Algorithm 1, reduces the number of expensive inversions $F_Y^{-1}(\cdot)$ needed to obtain many samples from $Y$.

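Algorithm 1: SCMC Method

Taking an interpolation function of degree $m - 1$ (with $m \ge 2$, as we need at least two collocation points) as an example, the following steps need to be performed:

1. Compute the CDF values $F_X(x_j)$ at the collocation points $x_j$ of $X$, $j = 1, \dots, m$.
2. Compute the exact inversions $y_j = F_Y^{-1}(F_X(x_j))$ at these $m$ points.
3. Determine the interpolation function $g_m(\cdot)$ through the pairs $(x_j, y_j)$.
4. Draw samples $x_n$ from $X$ and map them onto samples of $Y$ via $y_n = g_m(x_n)$.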

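The SCMC method parameterizes the distribution function by imposing probability constraints at the given collocation points. Taking Lagrange interpolation as an example, we can expand the function $g_m(\cdot)$ in the form of polynomial chaos,

$$y_n \approx g_m(x_n) = \sum_{j=1}^{m} y_j\, \ell_j(x_n), \qquad \ell_j(x) = \prod_{k=1,\, k \ne j}^{m} \frac{x - x_k}{x_j - x_k}, \tag{12}$$

where $\ell_j(\cdot)$ are the Lagrange basis polynomials. Collecting powers of $x_n$ yields a polynomial $\sum_{k=0}^{m-1} \alpha_k x_n^k$ of the same form as the discretization schemes above.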

Monotonicity of interpolation is an important requirement, particularly when dealing with peaked probability distributions.

The Cameron-Martin Theorem (Cameron and Martin 1947) states that any distribution can be approximated by a polynomial chaos approximation based on the normal distribution, but also other random variables may be used for X (see, for example, Grzelak et al. 2019).
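A minimal SCMC sketch following the steps of Algorithm 1, under assumptions of our own: X is standard normal, its collocation points are the Gauss–Hermite nodes from NumPy's `hermegauss`, the target Y is an arbitrary gamma distribution from `scipy.stats` (its `ppf` plays the role of the expensive inversion), and the interpolation is barycentric Lagrange via SciPy's `BarycentricInterpolator`.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss
from scipy import stats
from scipy.interpolate import BarycentricInterpolator

def scmc_sampler(target, m=5):
    """Build g_m for Y = target from m collocation points of X ~ N(0, 1)."""
    x_j = np.sort(hermegauss(m)[0])           # Gauss-Hermite collocation points of X
    y_j = target.ppf(stats.norm.cdf(x_j))     # m "expensive" inversions F_Y^{-1}(F_X(x_j))
    return BarycentricInterpolator(x_j, y_j)  # Lagrange interpolant through (x_j, y_j)

target = stats.gamma(a=4.0, scale=0.5)        # example target Y (mean 2, variance 1)
g_m = scmc_sampler(target, m=5)
x = np.random.default_rng(1).standard_normal(100_000)  # cheap samples of X
y = g_m(x)                                    # samples of Y via y_n = g_m(x_n)
print(f"sample mean {y.mean():.3f}, sample std {y.std():.3f}")
```

Only five calls to `ppf` are needed, independently of the number of samples. Lagrange interpolation is not guaranteed to be monotone, which is why monotone alternatives are discussed in Section 4.2.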

3. Methodology

For our purposes, given $Y_i = y_i$, the conditional variable $Y_{i+1} \mid Y_i = y_i$ can be written as

$$y_{i+1} \approx \sum_{k=0}^{m-1} \alpha_k(y_i)\, x_i^k, \tag{13}$$

where the coefficients $\alpha_k(y_i)$ are now functions of the realization $y_i$. Equation (13), for large values of $m$, holds for any $\Delta t$, in particular also for large $\Delta t$. As such, the scheme can be interpreted as an almost exact simulation scheme for the SDE under consideration, and by (13) we can take large time steps in a highly accurate discretization scheme. More specifically, a sample from the known distribution $X$ can be mapped onto a corresponding unique sample of the conditional distribution by the coefficient functions.

There are essentially two possibilities for using an ANN in the framework of the stochastic collocation method: the first is to directly learn the (time-dependent) polynomial coefficients $\alpha_k$ in (13), the second is to learn the collocation points $y_j$. The two methods are mathematically equivalent, but the latter, our method of choice, appears more stable and flexible. Here, we explain how to learn the collocation points $y_j$, from which the polynomial coefficients are then inferred: when the stochastic collocation points at time $t_{i+1}$ are known, the coefficients in (13) can easily be computed.

An SDE solution is represented by its cumulative distribution function at the collocation points, plus a suitable accurate interpolation $g_m(\cdot)$. In other words, the SCMC method forces the distribution functions (the target and the numerical approximation) to match exactly at the collocation points over time. The collocation points are dynamic and evolve with time.

3.1. Data-Driven Numerical Schemes

Calculating the conditional distribution function requires generating samples conditionally on previous realizations of the stochastic process. Based on a general polynomial expression, the conditional sample, in discrete form, is defined as follows,

$$y_{i+1} = \sum_{k=0}^{m-1} \alpha_k(t_i, y_i, \Delta t; \theta)\, x_i^k, \tag{14}$$

where $x_i$ is a realization of $X$, and the coefficients $\alpha_k$, at time $t_i$, are functions of the variables $(t_i, y_i, \Delta t; \theta)$; see Equations (6) and (7).

In the case of a Markov process, the future does not depend on past values, given the present. Given $Y_i = y_i$, the random variable $Y_{i+1}$ only depends on the increment $W(t_{i+1}) - W(t_i)$. Since the Wiener process has independent increments, the conditional distribution at time $t_{i+1}$, given the information up to time $t_i$, only depends on the information at $t_i$.

Similar to these coefficient functions, the $m$ conditional stochastic collocation points at time $t_{i+1}$, $\hat{y}_{i+1|j}$, with $j = 1, \dots, m$, can be written as a functional relation,

$$\hat{y}_{i+1|j} = H_j(t_i, y_i, \Delta t; \theta), \qquad j = 1, \dots, m. \tag{15}$$

A closed-form expression for the function $H$ is generally not available. Finding the conditional collocation points can, however, be formulated as a regression problem.

It is well known that neural networks can be utilized as universal function approximators (Cybenko 1989). We generate random data points in the domain of interest, and the ANN should “learn the mapping function $H$” in an offline training stage. The SCMC method is used here to compute the corresponding collocation points at each time point, which are then stored to train the ANN in a supervised learning fashion (see, for example, Goodfellow et al. 2016).

3.2. The Seven-League Scheme

Next, we detail the generation of the stochastic collocation points to create the training data. Consider a stochastic process , , where represents the maximum time horizon for the process that we wish to sample from. When the analytical solution of the SDE is not available (and we cannot use an exact simulation scheme with large time steps), a classical numerical scheme will be employed, based on tiny constant time increments , a discretization in the time-wise direction with grid points , to generate a sufficient number of highly accurate samples at each time point , to approximate the corresponding cumulative functions highly accurately. Note that the training samples are generated with a fixed time discretization, and in the case of a Markov process, one can easily obtain discrete values for the underlying stochastic process for many possible time increments, e.g., , . With the obtained samples, we approximate the corresponding marginal collocation points at time , as follows,

where, with integer , , , represent the approximate collocation points of at time , and are optimal collocation points of variable X. For simplicity, consider , so that the points are known analytically and do not depend on time point . In the case of a normal distribution, these points are known quadrature points, and tabulated, for example, in (Grzelak et al. 2019). After this first step, we have the set of collocation points, , for and . Subsequently, the from (16) are used as the ground-truth to train the ANN.
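In practice, the marginal SC points are quantiles of the simulated samples at the levels $F_X(x_j)$. The sketch below makes illustrative assumptions (GBM, fine-step Euler–Maruyama samples, $m = 5$ Gauss–Hermite points). `np.quantile` stands in for the numerical inversion of the empirical CDF, and the exact GBM quantiles serve only as a reference.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss
from scipy.stats import norm

def marginal_sc_points(samples, x_j):
    """Approximate SC points: empirical quantiles of the samples at levels F_X(x_j)."""
    return np.quantile(samples, norm.cdf(x_j))

# fine-step Euler-Maruyama samples of GBM at time t (illustrative data)
rng = np.random.default_rng(2)
y0, mu, sigma, t, n_fine, M = 1.0, 0.05, 0.4, 1.0, 200, 100_000
dt_fine = t / n_fine
y = np.full(M, y0)
for _ in range(n_fine):
    y += mu * y * dt_fine + sigma * y * np.sqrt(dt_fine) * rng.standard_normal(M)

x_j = np.sort(hermegauss(5)[0])      # optimal SC points of X ~ N(0, 1)
y_hat = marginal_sc_points(y, x_j)
# reference: exact GBM quantiles y0 * exp((mu - sigma^2 / 2) t + sigma sqrt(t) x_j)
y_ref = y0 * np.exp((mu - 0.5 * sigma**2) * t + sigma * np.sqrt(t) * x_j)
print(np.round(y_hat, 3), np.round(y_ref, 3))
```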

In the second step, we determine the conditional collocation points. For each time step and collocation point indexed by j, a nested Monte Carlo simulation is then performed to generate the conditional samples. Similar to the first step, we obtain the conditional collocation points from each of these sub-simulations using (16). With being an integer representing the number of conditional collocation points, the above process yields the following set of conditional collocation points,

where is a conditional collocation point, and , , . Note that, in the case of Markov processes, the above generic procedure can be simplified by just varying the initial value instead of running a nested Monte Carlo simulation. Specifically, we then set , and to generate the corresponding conditional collocation points.
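For a Markov process, the training set can be built by sampling the initial value instead of running nested simulations. The sketch below is hypothetical in its details: the inputs are $(\Delta t, y_i, \sigma)$ drawn uniformly from ranges we chose, and, purely to keep it short, the targets are the exact conditional SC points of GBM rather than SC points estimated from simulated samples.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

def gbm_conditional_sc_points(dt, y_prev, mu, sigma, x_j):
    """Exact conditional SC points of GBM given Y_i = y_prev; shape (n_samples, m)."""
    z = (mu - 0.5 * sigma**2) * dt
    return y_prev[:, None] * np.exp(z[:, None] + (sigma * np.sqrt(dt))[:, None] * x_j[None, :])

def make_training_set(n_samples, m=5, mu=0.05, seed=0):
    rng = np.random.default_rng(seed)
    x_j = np.sort(hermegauss(m)[0])
    dt = rng.uniform(0.01, 1.0, n_samples)      # time increments Delta t
    y_prev = rng.uniform(0.5, 2.0, n_samples)   # varied initial values (no nested MC)
    sigma = rng.uniform(0.1, 0.5, n_samples)    # varied model parameter theta = sigma
    inputs = np.column_stack([dt, y_prev, sigma])
    targets = gbm_conditional_sc_points(dt, y_prev, mu, sigma, x_j)
    return inputs, targets

inputs, targets = make_training_set(1000)
```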

The inverse function, , is often not known analytically, and needs to be derived numerically. An efficient procedure for this is presented in (Grzelak 2019). Of course, it is well-known that the computation of is equivalent to the computation of the quantile at level p.

We encounter essentially four types of stochastic collocation (SC) points: are called the original SC points, are original conditional collocation points, are the marginal SC points, and are the conditional SC points. For example, is conditional on a realization . In the context of Markov processes, the marginal SC points only depend on the initial value , thus they are a special type of conditional collocation points, i.e., . When a previous realization happens to be a collocation point, e.g., , we have , which will be used to develop a variation of the 7L scheme in Section 4.

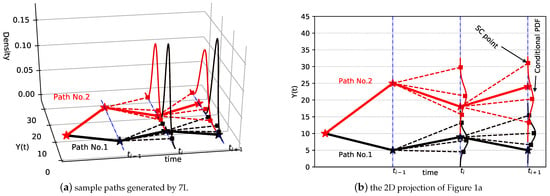

When data generation is complete, the ANNs are trained in a supervised-learning fashion on the generated SC points to approximate the function $H$ in (15), giving us a learned function $\hat{H}$. This is called the training phase. With the trained ANNs, we can approximate new collocation points and develop a numerical solver for SDEs, the Seven-League (7L) scheme; see Algorithm 2. Figure 1 gives a schematic illustration of Monte Carlo sample paths generated by the 7L scheme.

Algorithm 2: 7L Scheme

Figure 1.

Schematic diagram of the 7L scheme. Here conditional SC points, represented by ■, are conditional on a previous realization, denoted by ★. “Conditional PDF” is the conditional probability density function, defined by these conditional SC points. The density function, which is not required by 7L, is plotted only for illustration purposes.
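One online step of the 7L scheme can be sketched as follows. `H_hat` is a hypothetical stand-in for the trained ANN (it returns the exact GBM conditional SC points), and the interpolation is plain Lagrange on the Gauss–Hermite points. Note that the stand-in is evaluated once per path and per time step, which is the cost that the 7L-CDC variant of Section 4 reduces.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

X_J = np.sort(hermegauss(5)[0])   # original SC points of X ~ N(0, 1)

def H_hat(dt, y_prev, mu, sigma):
    """Hypothetical stand-in for the trained ANN: exact GBM conditional SC points, (M, m)."""
    return y_prev[:, None] * np.exp((mu - 0.5 * sigma**2) * dt + sigma * np.sqrt(dt) * X_J[None, :])

def lagrange_basis(x, nodes):
    """ell_j(x) for every sample x and node j; shape (len(x), len(nodes))."""
    L = np.ones((x.size, nodes.size))
    for j, xj in enumerate(nodes):
        for k, xk in enumerate(nodes):
            if k != j:
                L[:, j] *= (x - xk) / (xj - xk)
    return L

def seven_league_paths(y0, mu, sigma, T, n_steps, n_paths, seed=0):
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    y = np.full(n_paths, y0, dtype=float)
    for _ in range(n_steps):
        sc = H_hat(dt, y, mu, sigma)                       # conditional SC points per path
        x = rng.standard_normal(n_paths)                   # cheap samples of X
        y = np.sum(sc * lagrange_basis(x, X_J), axis=1)    # y_{i+1} = g_m(x) per path
    return y

y_T = seven_league_paths(1.0, 0.05, 0.4, T=1.0, n_steps=2, n_paths=50_000)
print(f"mean of Y(T): {y_T.mean():.4f} (exact: {np.exp(0.05):.4f})")
```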

Remark 1

(Lagrange interpolation issue). In the case of classical Lagrange interpolation, the Vandermonde matrix $A$ should not get too large, as it would then suffer from ill-conditioning. When employing orthogonal polynomials, however, this drawback is removed. More details can be found in Grzelak et al. (2019).
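The conditioning issue of Remark 1 is easy to observe. This sketch, with sizes chosen by us, compares a monomial Vandermonde matrix on 15 Gauss–Hermite points with the Chebyshev–Vandermonde matrix on 15 Chebyshev zeros. It changes both the basis and the nodes, so it illustrates the general effect rather than a specific setting from the paper.

```python
import numpy as np
from numpy.polynomial import chebyshev as C
from numpy.polynomial.hermite_e import hermegauss

m = 15
x_gh = np.sort(hermegauss(m)[0])                  # Gauss-Hermite SC points, roughly in [-4.5, 4.5]
cond_monomial = np.linalg.cond(np.vander(x_gh, increasing=True))

k = np.arange(1, m + 1)
x_cheb = np.cos((2 * k - 1) * np.pi / (2 * m))    # Chebyshev zeros in [-1, 1]
cond_chebyshev = np.linalg.cond(C.chebvander(x_cheb, m - 1))

print(f"monomial Vandermonde:  {cond_monomial:.1e}")
print(f"Chebyshev Vandermonde: {cond_chebyshev:.4f}")   # sqrt(2) by discrete orthogonality
```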

When the approximation errors from the ANN and SCMC techniques are sufficiently small, the strong convergence properties of the 7L scheme can be estimated, as follows,

where time step is used to define the ANN training data-set, and the actual time step is used for ANN prediction, with . Based on the trained 7L scheme, the strong error, , thus, does not grow with the actual time step . In particular, let us assume , for example , when employing the Euler–Maruyama scheme with time step during the ANN learning phase, we expect a strong convergence of , which then equals , while the use of the Milstein scheme during training would result in accuracy. When , the time step during the learning phase is 100 times smaller than , which has a corresponding effect on the overall scheme’s accuracy in terms of its strong, pathwise convergence. The maximum value of the time step in the 7L scheme can be set up to for a Markov process. With a time step , we solve the SDE in an iterative way until the actual terminal time T, which can be much larger than the training time horizon . An error analysis can be found in Section 5.2.
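The claim that the strong error need not grow with the step size can be illustrated under an idealized assumption: if the learned function reproduced the conditional SC points exactly (here replaced by the exact GBM values, a hypothetical stand-in), the only remaining pathwise error is the interpolation error. The sketch compares this with Euler–Maruyama on the same normal draws; all parameter values are illustrative.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss
from scipy.interpolate import BarycentricInterpolator

MU, SIGMA, Y0, T = 0.05, 0.4, 1.0, 2.0
X_J = np.sort(hermegauss(5)[0])
BASIS = BarycentricInterpolator(X_J, np.eye(X_J.size))   # BASIS(x)[p, j] = ell_j(x_p)

def strong_errors(n_steps, n_paths=20_000, seed=0):
    """Mean absolute pathwise error at T for (7L with stand-in H, Euler-Maruyama)."""
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    x = rng.standard_normal((n_paths, n_steps))
    y_7l = np.full(n_paths, Y0)
    y_em = np.full(n_paths, Y0)
    for i in range(n_steps):
        # hypothetical learned H: exact conditional SC points of GBM
        sc = y_7l[:, None] * np.exp((MU - 0.5 * SIGMA**2) * dt + SIGMA * np.sqrt(dt) * X_J[None, :])
        y_7l = np.sum(sc * BASIS(x[:, i]), axis=1)
        y_em = y_em + MU * y_em * dt + SIGMA * y_em * np.sqrt(dt) * x[:, i]
    # exact solution driven by the same normal draws: W(T) = sqrt(dt) * sum_i x_i
    y_exact = Y0 * np.exp((MU - 0.5 * SIGMA**2) * T + SIGMA * np.sqrt(dt) * x.sum(axis=1))
    return np.mean(np.abs(y_7l - y_exact)), np.mean(np.abs(y_em - y_exact))

for n_steps in (2, 4, 8):
    e7, e_em = strong_errors(n_steps)
    print(f"dt = {T / n_steps:.2f}  7L: {e7:.1e}  Euler-Maruyama: {e_em:.1e}")
```

In the real scheme, the ANN approximation error and the error of the training-data generator (the fine time step) come on top of this interpolation error.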

3.3. The Artificial Neural Network

The ANN to learn the conditional collocation points is detailed in this subsection. Neural networks are powerful approximators of nonlinear relationships; for our learning task, we employ a rather basic fully connected neural network configuration.

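A fully connected neural network, without skip connections, can be described as a composition function, i.e.,

$$\phi(\mathbf{x} \mid \Theta) = h^{(L)} \circ \cdots \circ h^{(2)} \circ h^{(1)}(\mathbf{x}), \tag{21}$$

where $\mathbf{x}$ represents the input variables, $\Theta$ the hidden parameters (i.e., weights and biases), and $L$ the number of hidden layers. We can expand the hidden parameters as

$$\Theta = \big(\mathbf{W}^{(1)}, \mathbf{b}^{(1)};\ \mathbf{W}^{(2)}, \mathbf{b}^{(2)};\ \dots;\ \mathbf{W}^{(L)}, \mathbf{b}^{(L)}\big), \tag{22}$$

where $\mathbf{W}^{(\ell)}$ and $\mathbf{b}^{(\ell)}$ represent the weight matrix and the bias vector, respectively, in the ℓ-th hidden layer.

Each hidden-layer function, $h^{(\ell)}(\cdot)$, takes input signals from the output of the previous layer, computes an inner product of weights and inputs, and adds a bias. It then passes the resulting value through an activation function to generate the output.

Let $z_j^{(\ell)}$ denote the output of the $j$-th neuron in the ℓ-th layer. Then,

$$z_j^{(\ell)} = \sigma_\ell\Big(\sum_i w_{ij}^{(\ell)}\, z_i^{(\ell-1)} + b_j^{(\ell)}\Big), \tag{23}$$

where $w_{ij}^{(\ell)} \in \mathbf{W}^{(\ell)}$, $b_j^{(\ell)} \in \mathbf{b}^{(\ell)}$, $\mathbf{z}^{(0)} = \mathbf{x}$, and $\sigma_\ell(\cdot)$ is a nonlinear transfer function (i.e., activation function). With a specific configuration, including the architecture, the hidden parameters, activation functions, and other specific operations (e.g., dropout), the ANN in (21) becomes a deterministic, complicated, composite function.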
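A minimal NumPy sketch of the composition and the neuron formula above. The layer sizes, the tanh activation, the linear output layer and the He-style initialization are our assumptions, not the configuration used in the paper; the hypothetical input is $(\Delta t, y_i, \sigma)$ and the output is $m = 5$ conditional SC points.

```python
import numpy as np

def init_params(layer_sizes, seed=0):
    """Weights W^(l) and biases b^(l) for each layer (He-style scaling, zero biases)."""
    rng = np.random.default_rng(seed)
    return [(rng.standard_normal((n_in, n_out)) * np.sqrt(2.0 / n_in), np.zeros(n_out))
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

def forward(params, x, activation=np.tanh):
    """phi(x | Theta): hidden layers z = sigma(z W + b), then a linear output layer."""
    z = x
    for W, b in params[:-1]:
        z = activation(z @ W + b)   # z_j = sigma(sum_i w_ij z_i + b_j), row-wise
    W, b = params[-1]
    return z @ W + b

params = init_params([3, 32, 32, 5])
out = forward(params, np.array([[0.5, 1.0, 0.3], [1.0, 1.2, 0.2]]))
```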

Supervised machine learning (Goodfellow et al. 2016) is used here to determine the weights and biases, where the ANN should learn the mapping from a given input to a given output, so that for a new input, the corresponding output will be accurately approximated. Such ANN methodology consists of basically two phases. During the (time-consuming, but offline) training phase the ANN learns the mapping, with many in- and output samples, while in the testing phase, the trained model is used to very rapidly approximate new output values for other parameter sets, in the online stage.

In a supervised learning context, the loss function measures the distance between the target function and the function implied by the ANN. During the training phase, there are many known data samples available, which are represented by input-output pairs . With a user-defined loss function , training neural networks is formulated as

where the hidden parameters are estimated to approximate the function of interest in a certain norm. More specifically, in our case, the input, , equals , and the output, , represents the collocation points , as in Equation (18). In the domain of interest , we have a collection of data points , , and their corresponding collocation points , which form a vector of input-output pairs . For example, using the $L_2$-norm, the discrete form of the loss function reads,

One of the popular approaches for training ANNs is to optimize the hidden parameters via backpropagation, for instance, using stochastic gradient descent (Goodfellow et al. 2016).
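Training with a discrete $L_2$ loss and mini-batch stochastic gradient descent can be sketched with hand-written backpropagation for a single hidden layer. Everything here is illustrative: the synthetic GBM training pairs, the network width, the learning rate and the number of epochs are hypothetical choices, and a practical implementation would rather use a deep learning framework and an optimizer such as Adam.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

def make_data(n, seed=0, mu=0.05):
    """Hypothetical training pairs: (dt, y_prev, sigma) -> exact GBM conditional SC points."""
    rng = np.random.default_rng(seed)
    x_j = np.sort(hermegauss(5)[0])
    dt, y_prev, sig = rng.uniform(0.01, 1.0, n), rng.uniform(0.5, 2.0, n), rng.uniform(0.1, 0.5, n)
    Y = y_prev[:, None] * np.exp(((mu - 0.5 * sig**2) * dt)[:, None]
                                 + (sig * np.sqrt(dt))[:, None] * x_j[None, :])
    return np.column_stack([dt, y_prev, sig]), Y

def loss_and_grads(params, X, Y):
    """L2 loss (mean over samples of squared errors) and its gradients."""
    W1, b1, W2, b2 = params
    H = np.tanh(X @ W1 + b1)              # hidden layer
    R = H @ W2 + b2 - Y                   # residuals of the predicted SC points
    loss = np.mean(np.sum(R**2, axis=1))
    dP = 2.0 * R / X.shape[0]             # d loss / d prediction
    dZ = (dP @ W2.T) * (1.0 - H**2)       # backpropagation through tanh
    return loss, [X.T @ dZ, dZ.sum(axis=0), H.T @ dP, dP.sum(axis=0)]

def train(X, Y, hidden=32, lr=0.01, epochs=100, batch=64, seed=1):
    rng = np.random.default_rng(seed)
    params = [0.5 * rng.standard_normal((X.shape[1], hidden)), np.zeros(hidden),
              0.1 * rng.standard_normal((hidden, Y.shape[1])), np.zeros(Y.shape[1])]
    initial_loss = loss_and_grads(params, X, Y)[0]
    for _ in range(epochs):
        perm = rng.permutation(X.shape[0])               # reshuffle every epoch
        for start in range(0, X.shape[0], batch):
            idx = perm[start:start + batch]
            _, grads = loss_and_grads(params, X[idx], Y[idx])
            params = [p - lr * g for p, g in zip(params, grads)]   # SGD update
    return params, initial_loss, loss_and_grads(params, X, Y)[0]

X, Y = make_data(2000)
params, loss0, loss1 = train(X, Y)
print(f"L2 loss: {loss0:.3f} -> {loss1:.4f}")
```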

4. An Efficient Large Time Step Scheme: Compression–Decompression Variant

The 7L scheme employs the ANNs to generate the conditional collocation points for all samples of a previous time point, see Figure 1b. The extensive use of ANNs in the methodology has an impact on the method’s computational complexity.

In order to speed up the data-driven 7L scheme, we introduce a compression–decompression (CDC) variant for the online (prediction) phase. Note that the offline learning phase is identical for both variants. The so-called 7L-CDC scheme, developed in this section, only uses the ANNs to determine the conditional collocation points for the optimal collocation points of a previous time point. All other samples are computed by means of accurate interpolation. The computational complexity is reduced when the chosen interpolation is computationally cheaper than evaluating the ANNs.

By the compression–decompression procedure, Monte Carlo sample paths based on SDEs can be recovered from a 3D matrix. We then employ the 7L scheme procedure only to compute the entries of the encoded matrices at time point (see Section 4.1), which leads to a reduction of the computational cost in many cases.

Next, we will explain the process of recovering the sample paths from a known matrix C using the decompression method.

4.1. CDC Variant

With a discretization , we define a 3D matrix , which consists of entries in total. Recall that represents the number of collocation points and the number of conditional collocation points, N the number of grid points over time. and may vary with time points (in case of an adaptive scheme, for example), but we use constant values for and . For each time point , we construct a 2D matrix ,

with , , the original SC points, the marginal collocation points (depending on the initial value ), and , , the k-th original conditional SC points, the conditional SC points (depending on the marginal collocation points ). We thus represent the conditional SC points, , by matrix elements . The two empty cells in (26) are not addressed in the computation. Moreover, at the last time point, , is not needed.

Remark 2

(Time-dependent elements). As the original collocation points, and , do not depend on time, we can remove the first row and the first column of matrix to obtain a time-dependent version, , with the following elements,

An entry in matrix can be computed by the trained ANNs, as follows,

using the marginal SC points,

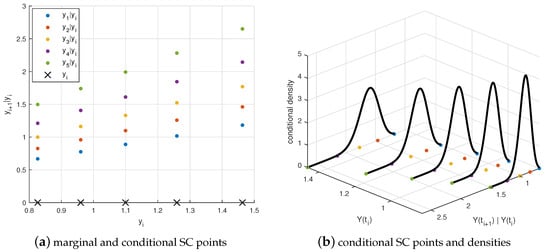

where , , represents the ANN function which approximates the j-th collocation point when , and the j-th conditional collocation point when . When , . Figure 2 shows an example of the distribution of the conditional SC points when and . When the matrices have been defined, all sample paths are compressed into a structured matrix. In other words, matrix contains all of the information needed to perform the Monte Carlo simulation of the SDEs, apart from the interpolation technique.

Figure 2.

Schematic illustration of matrix C, with five marginal SC points and five conditional SC points. The conditional SC points are dependent on the realization connected to the corresponding marginal SC point.

The resulting matrix C will be decompressed to generate Monte Carlo sample paths with the help of an interpolation. The process of decompression is straightforward given a matrix . In addition to the interpolation process in SCMC (see Equation (19)), an interpolation is needed to compute conditional collocation points for previous realizations, based on the matrix .

Suppose a vector of samples at time , and we wish to generate samples of . For a specific sample , we need to calculate conditional SC points. To obtain the k-th () conditional SC point, we take marginal collocation points and their k-th conditional collocation points, using functions (28) and (29), to form input-output pairs . This combination gives us the interpolation function , through which we can obtain the k-th conditional SC point of at time point ,

As a result, for each sample , we obtain interpolation nodes, which form a set of pairs, ,, up to , which are used to determine the interpolation function required by SCMC. Afterwards, to generate a new sample , the mapping function defines a conditional sample by taking in a random sample from X,

The choice of the appropriate number of (conditional) collocation points is a trade-off between the computational cost and the required accuracy. When the number of collocation points tends to infinity, the 7L-CDC scheme resembles the 7L scheme from Section 3.2. A schematic picture is presented in Figure 3.

Figure 3.

Schematic diagram of the 7L-CDC scheme at time . (a): marginal SC points, corresponding to Equation (29). (b): sample paths generated by 7L-CDC. The triple {2,1,3}, in the picture, represents the third conditional SC point, dependent on the first marginal SC point at time point . The above procedure is also applicable to other time points.
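The compression and decompression steps can be sketched together. The assumptions are all ours: GBM dynamics, $m = n = 5$ Gauss–Hermite points, a hypothetical stand-in `H_hat` in place of the trained ANNs, and barycentric Lagrange interpolation both across the marginal SC points and in $x$. The stand-in is called only on the $m$ marginal SC points; every path is then served by interpolation.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss
from scipy.interpolate import BarycentricInterpolator

MU, SIGMA = 0.05, 0.4
x_j = np.sort(hermegauss(5)[0])   # original SC points (m = 5)
x_k = np.sort(hermegauss(5)[0])   # original conditional SC points (n = 5)

def H_hat(dt, y_prev, nodes):
    """Hypothetical stand-in for the trained ANNs: exact GBM (conditional) SC points."""
    y_prev = np.atleast_1d(np.asarray(y_prev, dtype=float))[:, None]
    return y_prev * np.exp((MU - 0.5 * SIGMA**2) * dt + SIGMA * np.sqrt(dt) * nodes[None, :])

def compress(y0, t_i, dt):
    """Marginal SC points at t_i, shape (m,), and the matrix of conditional SC points, (m, n)."""
    y_marg = H_hat(t_i, y0, x_j)[0]   # depends only on y0 for a Markov process
    C_i = H_hat(dt, y_marg, x_k)      # m * n outputs, independent of the number of paths
    return y_marg, C_i

def decompress_step(y_marg, C_i, y_i, x):
    """Advance samples y_i to t_{i+1}, given standard normal draws x."""
    cond = BarycentricInterpolator(y_marg, C_i)(y_i)            # (M, n) conditional SC points
    basis = BarycentricInterpolator(x_k, np.eye(x_k.size))(x)   # (M, n) Lagrange basis ell_k(x)
    return np.sum(cond * basis, axis=1)                         # y_{i+1} = g(x) per path

y_marg, C_i = compress(y0=1.0, t_i=1.0, dt=0.5)
rng = np.random.default_rng(3)
y_i = rng.uniform(0.6, 1.5, 1000)                   # samples at t_i, inside the range of y_marg
x = np.clip(rng.standard_normal(1000), -3.0, 3.0)   # tails clipped only to keep the check below tight
y_next = decompress_step(y_marg, C_i, y_i, x)
```

For GBM, the conditional SC points are linear in the previous value, so the interpolation across the marginal SC points is exact here; for general SDEs, this step adds an interpolation error of its own.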

Remark 3

(Computation time). During the online phase of the method, the total computation time of the large time step schemes consists of essentially two parts: the calculation of the conditional SC points (the first part) and the generation of random samples by interpolation (the second part). The difference between the 7L and 7L-CDC schemes lies in the computation of the conditional SC points; the generation of the samples is identical for both schemes.

In this first part, for the 7L-CDC scheme, the work consists of setting up matrix C by the ANNs and computing the conditional SC points by the interpolation. In matrix C, there are elements that are computed by the ANNs, where N represents the number of time points, the number of collocation points and the number of conditional collocation points. Depending on the collocation points, the interpolation is based on conditional collocation points for each path. For the 7L scheme, elements, where M is the total number of paths, are computed by the ANNs. The time ratio between the 7L-CDC and 7L schemes is found to be,

with the computational time of the ANN (i.e., the function ), for the interpolation (i.e., the function in (30)), which is a polynomial function of . Given the fact that the number of sample paths is typically much larger than the number of SC points ,

Hence, when the employed interpolation is computationally cheaper than the ANNs, the 7L-CDC scheme needs fewer computations than the 7L scheme.
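To make the ratio concrete, here is a back-of-the-envelope version of the first part under simplifying assumptions of our own: one ANN output costs $\tau_{\text{ANN}}$, one interpolated conditional SC point costs $\tau_{\text{int}}$, the 7L-CDC scheme evaluates $N m n$ ANN outputs plus $N M n$ interpolations, and the 7L scheme evaluates $N M n$ ANN outputs. The example numbers are illustrative only.

```latex
\frac{T_{\text{7L-CDC}}}{T_{\text{7L}}}
  \approx \frac{N m n\,\tau_{\text{ANN}} + N M n\,\tau_{\text{int}}}{N M n\,\tau_{\text{ANN}}}
  = \frac{m}{M} + \frac{\tau_{\text{int}}}{\tau_{\text{ANN}}},
\qquad
m = 5,\; M = 10^{5},\; \frac{\tau_{\text{int}}}{\tau_{\text{ANN}}} = 0.1
\;\Longrightarrow\;
\frac{T_{\text{7L-CDC}}}{T_{\text{7L}}} \approx 5 \cdot 10^{-5} + 0.1 \approx 0.1 .
```

Since $M \gg m$, the first term is negligible and the speed-up is governed by the cost ratio of interpolation versus ANN evaluation.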

4.2. Interpolation Techniques

To define the function in (13) or in (30), we will compare three different interpolation techniques.

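A bijective mapping function is obtained by the monotonic piecewise cubic Hermite interpolating polynomial (PCHIP) (Fritsch and Carlson 1980). Assuming there are multiple data points, $(x_k, y_k)$, $k = 1, \dots, m$, and using

$$h_k = x_{k+1} - x_k, \qquad \delta_k = \frac{y_{k+1} - y_k}{h_k}, \tag{34}$$

the derivatives $d_k$ at the interior points are computed as a weighted harmonic mean,

$$\frac{w_1 + w_2}{d_k} = \frac{w_1}{\delta_{k-1}} + \frac{w_2}{\delta_k}, \tag{35}$$

where $w_1 = 2h_k + h_{k-1}$ and $w_2 = h_k + 2h_{k-1}$. If $\delta_{k-1}$ and $\delta_k$ have opposite signs, or either of them is zero, then $d_k = 0$. The first derivative is guaranteed to be continuous at each data point, and a cubic Hermite polynomial is used to interpolate between the data points. PCHIP requires more computations than Lagrange interpolation, but for monotone data it results in a monotonic function, which is generally advantageous.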
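The PCHIP slopes above can be checked against SciPy's `PchipInterpolator`, which uses the same weighted harmonic mean at interior points (its end-point slopes use a separate one-sided formula, omitted here). The second part of the sketch, on step-like data chosen by us, shows the monotonicity that Lagrange interpolation lacks.

```python
import numpy as np
from scipy.interpolate import BarycentricInterpolator, PchipInterpolator

def pchip_interior_slopes(x, y):
    """Interior PCHIP derivatives d_k (weighted harmonic mean of neighbouring secants)."""
    h = np.diff(x)
    delta = np.diff(y) / h
    d = np.zeros(len(x))
    for k in range(1, len(x) - 1):
        if delta[k - 1] * delta[k] <= 0.0:   # opposite signs or a flat segment: d_k = 0
            continue
        w1 = 2.0 * h[k] + h[k - 1]
        w2 = h[k] + 2.0 * h[k - 1]
        d[k] = (w1 + w2) / (w1 / delta[k - 1] + w2 / delta[k])
    return d

# 1) agreement with SciPy on strictly increasing, non-uniformly spaced data
x = np.array([0.0, 0.5, 1.5, 2.0, 3.5, 4.0])
y = x**3 + x
d_manual = pchip_interior_slopes(x, y)
d_scipy = PchipInterpolator(x, y)(x, 1)     # first derivative at the nodes

# 2) monotonicity on step-like data: PCHIP stays monotone, Lagrange overshoots
xs, ys = np.arange(6.0), np.array([0.0, 0.0, 0.0, 1.0, 1.0, 1.0])
fine = np.linspace(0.0, 5.0, 501)
pchip_vals = PchipInterpolator(xs, ys)(fine)
lagrange_vals = BarycentricInterpolator(xs, ys)(fine)
print("PCHIP monotone:   ", np.all(np.diff(pchip_vals) >= -1e-12))
print("Lagrange monotone:", np.all(np.diff(lagrange_vals) >= -1e-12))
```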

The convergence of the stochastic collocation method does not strictly depend on the monotonicity of the mapping function, so an interpolation based on Lagrange polynomials is possible in practice. The barycentric version of Lagrange interpolation (Berrut and Trefethen 2004), our second interpolation technique, provides a fast and stable interpolation scheme, and it is the one applied whenever Lagrange interpolation is used in our numerical experiments. The basic Lagrange interpolation expressions, on the other hand, are convenient for theoretical analysis.
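The barycentric form evaluates the Lagrange interpolant in $O(m)$ operations per point once the $O(m^2)$ weights are known. A minimal sketch of the second (true) barycentric formula of Berrut and Trefethen, with a test polynomial of our choosing, cross-checked against SciPy's `BarycentricInterpolator`:

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss
from scipy.interpolate import BarycentricInterpolator

def barycentric_weights(nodes):
    """w_j = 1 / prod_{k != j} (x_j - x_k)."""
    diff = nodes[:, None] - nodes[None, :]
    np.fill_diagonal(diff, 1.0)
    return 1.0 / diff.prod(axis=1)

def barycentric_eval(nodes, values, w, x):
    """sum_j(w_j y_j / (x - x_j)) / sum_j(w_j / (x - x_j)), exact at the nodes."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    values = np.asarray(values, dtype=float)
    d = x[:, None] - nodes[None, :]
    hit = d == 0.0
    d[hit] = 1.0                  # placeholder; these rows are overwritten below
    t = w / d
    p = (t @ values) / t.sum(axis=1)
    rows, cols = np.nonzero(hit)
    p[rows] = values[cols]        # x coincides with a node: return y_j exactly
    return p

nodes = np.sort(hermegauss(5)[0])
f = lambda x: 1.0 + 2.0 * x - x**3 + 0.5 * x**4   # degree 4: reproduced exactly by 5 nodes
w = barycentric_weights(nodes)
xs = np.linspace(-3.0, 3.0, 13)                    # includes x = 0, which is a node
p = barycentric_eval(nodes, f(nodes), w, xs)
```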

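The third technique is based on choosing the interpolation points carefully (e.g., as the Chebyshev zeros) to achieve a stable interpolation. The Chebyshev interpolation (Rivlin 1990) is of the form,

$$p_n(x) = \sum_{j=0}^{n} c_j\, T_j\!\left(\frac{2x - a - b}{b - a}\right),$$

where $T_j$ are the interpolation basis functions, here the Chebyshev orthogonal polynomials, up to degree $n$, and $c_j$ are the coefficients. The $n + 1$ Chebyshev nodes in the interval $[a, b]$ are computed as,

$$x_k = \frac{a + b}{2} + \frac{b - a}{2}\cos\left(\frac{(2k + 1)\pi}{2(n + 1)}\right), \qquad k = 0, \dots, n.$$
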
For sufficiently smooth (e.g., Lipschitz continuous) functions, the Chebyshev interpolant converges uniformly as the polynomial degree increases. In financial mathematics, Chebyshev interpolation has been used successfully, for example, to compute parametric option prices and implied volatilities (Gaß et al. 2018; Glau et al. 2019; Glau and Mahlstedt 2019). When the interpolation points are not Chebyshev nodes (e.g., Gauss quadrature points), the Chebyshev coefficients can be estimated by means of a least squares regression, which is also called a Chebyshev fit. In this case, the coefficients in (14) can be computed explicitly, in contrast to the barycentric Lagrange interpolation.
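To make the Chebyshev construction concrete, here is a minimal Python sketch, assuming interpolation at the $n + 1$ Chebyshev zeros on a general interval $[a, b]$. The coefficients follow from the discrete orthogonality of the Chebyshev polynomials at these nodes, and the interpolant is evaluated with the three-term recurrence $T_{j+1}(z) = 2zT_j(z) - T_{j-1}(z)$. The function names and the test function $e^x$ are illustrative choices, not part of the method described here.

```python
import math


def chebyshev_nodes(a, b, n):
    """The n + 1 zeros of T_{n+1}, mapped from [-1, 1] to [a, b]."""
    return [0.5 * (a + b) + 0.5 * (b - a) * math.cos((2 * k + 1) * math.pi / (2 * n + 2))
            for k in range(n + 1)]


def chebyshev_coefficients(f, a, b, n):
    """Coefficients c_j of p_n(x) = sum_j c_j T_j(z), z = (2x - a - b) / (b - a)."""
    size = n + 1
    theta = [(2 * k + 1) * math.pi / (2 * size) for k in range(size)]
    fx = [f(0.5 * (a + b) + 0.5 * (b - a) * math.cos(t)) for t in theta]
    c = [2.0 / size * sum(fk * math.cos(j * t) for fk, t in zip(fx, theta)) for j in range(size)]
    c[0] *= 0.5  # with this scaling, p_n(x) = sum_j c_j T_j(z) interpolates f at the nodes
    return c


def chebyshev_eval(c, a, b, x):
    """Evaluate p_n(x) with the recurrence T_{j+1}(z) = 2 z T_j(z) - T_{j-1}(z)."""
    z = (2.0 * x - a - b) / (b - a)
    t_prev, t_curr = 1.0, z  # T_0(z), T_1(z)
    total = c[0] + (c[1] * z if len(c) > 1 else 0.0)
    for j in range(2, len(c)):
        t_prev, t_curr = t_curr, 2.0 * z * t_curr - t_prev
        total += c[j] * t_curr
    return total


a, b, n = 0.0, 2.0, 10
c = chebyshev_coefficients(math.exp, a, b, n)
test_points = [a + (b - a) * i / 200 for i in range(201)]
max_err = max(abs(chebyshev_eval(c, a, b, x) - math.exp(x)) for x in test_points)
```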

The selection of a suitable interpolation technique depends on several factors, such as speed, monotonicity and the availability of explicit coefficients. These three interpolation methods are compared in the numerical section.

4.3. Pathwise Sensitivity

Often in computations with stochastic variables, we wish to determine the derivatives of the variables of interest, the so-called pathwise sensitivities. This is generally not a trivial exercise in a Monte Carlo setting; see, for example, the discussions in (Capriotti 2010; Giles and Glasserman 2006; Jain et al. 2019; Oosterlee and Grzelak 2019). With our new large time step schemes, the pathwise sensitivities of the computed stochastic variables are obtained in a natural way, based on the available information in the (conditional) SC points and the interpolation. In this section, we derive the pathwise sensitivity of the state variable with respect to the model parameters.

The first derivative, with respect to a model parameter, of the conditional distribution in Equation (13) reads,

where the first factor is the total derivative, with respect to the model parameters, of the function that yields the (ANN-approximated) collocation points, and the interpolation basis functions do not depend on the model parameters. For the derivative in (33) at a given time point, the expression of the ANN (21) is available for the chosen activation function, so that the ANN is analytically differentiable. As a result, this derivative can easily be computed by means of automatic differentiation within the machine learning framework. Thus, we arrive at the sensitivity of a sample path with respect to the model parameters, as follows,

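The chain rule behind the pathwise sensitivity can be illustrated without an ANN. In the minimal Python sketch below, a hypothetical function `sc_points` (the exact one-step GBM collocation points) stands in for the trained network, and its analytic $\sigma$-derivative plays the role of automatic differentiation. Since the Lagrange basis functions do not depend on the model parameters, the sensitivity of a sample is the basis-weighted sum of the collocation-point derivatives; the sketch checks this against a finite difference and against the closed-form GBM derivative $S(t)(W(t) - \sigma t)$. All parameter values are assumptions.

```python
import math


def lagrange_basis(x_nodes, j, xi):
    """l_j(xi) = prod_{k != j} (xi - x_k) / (x_j - x_k); independent of model parameters."""
    val = 1.0
    for k, xk in enumerate(x_nodes):
        if k != j:
            val *= (xi - xk) / (x_nodes[j] - xk)
    return val


r1, r2 = math.sqrt(5 - math.sqrt(10)), math.sqrt(5 + math.sqrt(10))
X_NODES = [-r2, -r1, 0.0, r1, r2]  # five probabilists' Gauss-Hermite nodes


def sc_points(s0, mu, sigma, dt):
    """Hypothetical stand-in for the trained ANN: exact one-step GBM collocation points."""
    return [s0 * math.exp((mu - 0.5 * sigma**2) * dt + sigma * math.sqrt(dt) * x) for x in X_NODES]


def d_sc_points_d_sigma(s0, mu, sigma, dt):
    """dy_j/dsigma, playing the role of automatic differentiation of the ANN."""
    ys = sc_points(s0, mu, sigma, dt)
    return [y * (math.sqrt(dt) * x - sigma * dt) for y, x in zip(ys, X_NODES)]


def sample(s0, mu, sigma, dt, xi):
    """Collocation sample g(xi) = sum_j y_j l_j(xi)."""
    ys = sc_points(s0, mu, sigma, dt)
    return sum(y * lagrange_basis(X_NODES, j, xi) for j, y in enumerate(ys))


def sample_sensitivity(s0, mu, sigma, dt, xi):
    """Chain rule: dg/dsigma = sum_j (dy_j/dsigma) l_j(xi)."""
    dys = d_sc_points_d_sigma(s0, mu, sigma, dt)
    return sum(dy * lagrange_basis(X_NODES, j, xi) for j, dy in enumerate(dys))


s0, mu, sigma, dt, xi = 1.0, 0.05, 0.3, 0.25, 0.7
chain = sample_sensitivity(s0, mu, sigma, dt, xi)
eps = 1e-5
fd = (sample(s0, mu, sigma + eps, dt, xi) - sample(s0, mu, sigma - eps, dt, xi)) / (2 * eps)
s_exact = s0 * math.exp((mu - 0.5 * sigma**2) * dt + sigma * math.sqrt(dt) * xi)
exact = s_exact * (math.sqrt(dt) * xi - sigma * dt)  # S(t) (W(t) - sigma t) with W(t) = sqrt(dt) xi
```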
5. Numerical Experiments

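In this section, we provide numerical evidence of the high quality of our SDE solver by analyzing its components in detail. For this purpose, we mainly focus on the Geometric Brownian Motion (GBM) SDE, which reads,

$$dS(t) = \mu S(t)\,dt + \sigma S(t)\,dW(t), \tag{35}$$

where the model parameters are the constant drift and volatility coefficients, i.e., $\theta = (\mu, \sigma)$, and the initial value is given by $S(t_0) = S_0$ at time $t_0 = 0$. For (35), a continuous-time analytic expression for the asset price at time $t$ is available, i.e.,

$$S(t) = S_0 \exp\left(\left(\mu - \tfrac{1}{2}\sigma^2\right) t + \sigma W(t)\right), \tag{36}$$

where $W(t) \sim \mathcal{N}(0, t)$, so that $S(t)$ is governed by the lognormal distribution. The derivative of the stock price with respect to the volatility is available in closed form, and reads,

$$\frac{\partial S(t)}{\partial \sigma} = S(t)\,\bigl(W(t) - \sigma t\bigr). \tag{37}$$
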
This expression will be used as the reference value of the sensitivity obtained from the 7L discretization.

Furthermore, the Ornstein–Uhlenbeck (OU) process is introduced and analyzed in Section 5.3.2. We employ the large time step discretization, in which the conditional collocation points are computed by the trained ANN, and compare the results of the novel scheme with those obtained by the Milstein SDE discretization.

5.1. ANN Training Details

GBM and the OU process are Markov processes, so the conditional distribution at time $t_{i+1}$, given the information up to time $t_i$, only depends on the information at time $t_i$. The ANN (15) will therefore be used for the conditional stochastic collocation points, both for GBM and for the OU process (the latter as discussed in Section 5.3.2).

Regarding the size of the compression–decompression matrix, the more conditional collocation points, the better the accuracy of the 7L-CDC method. Taking into account both the computing effort and the accuracy, a 5 × 5 matrix size (i.e., five marginal and five conditional SC points) is preferred. Grzelak et al. (2019) discussed and showed that highly accurate approximations can already be obtained with a small number of collocation points.

As the first method component, we evaluate the quality of the ANN that defines the collocation points for the GBM dynamics. For this purpose, random points (i.e., sets of input parameters) are generated by Latin hypercube sampling (LHS) in the domain of interest for the three parameters; see Table 1. As the second step, for each point, a Monte Carlo method is employed to simulate the discretized SDE with a tiny time step. We use an Euler–Maruyama time discretization for this purpose, with a fixed number of time points up to the time horizon. At each time step, the conditional distribution function is computed from the many generated MC paths. In this way, the collocation points for the “big time steps” (each elapsed time since the initial time serving as a big time step) are also obtained, which together form the required training dataset.
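The data generation step can be sketched as follows, under simplifying assumptions: a single parameter set instead of an LHS design, only the terminal time of the fine Euler–Maruyama grid (recording every fine time point would yield several big time steps per simulation), and collocation points taken as empirical quantiles, $y_j = F_Y^{-1}(\Phi(x_j))$, at the five probabilists' Gauss–Hermite nodes $x_j$. All names and parameter values are hypothetical. For GBM, the exact collocation points are known, which allows a sanity check.

```python
import math
import random
from statistics import NormalDist

r1, r2 = math.sqrt(5 - math.sqrt(10)), math.sqrt(5 + math.sqrt(10))
X_NODES = [-r2, -r1, 0.0, r1, r2]  # five probabilists' Gauss-Hermite nodes


def em_terminal_samples(s0, mu, sigma, t_end, n_steps, n_paths, seed=0):
    """Euler-Maruyama samples of S(t_end) for GBM with the tiny step t_end / n_steps."""
    rng = random.Random(seed)
    h = t_end / n_steps
    sq = math.sqrt(h)
    out = []
    for _ in range(n_paths):
        s = s0
        for _ in range(n_steps):
            s += mu * s * h + sigma * s * sq * rng.gauss(0.0, 1.0)
        out.append(s)
    return out


def empirical_sc_points(samples, x_nodes=X_NODES):
    """SC points y_j = F_Y^{-1}(Phi(x_j)), with F_Y^{-1} the empirical quantile function."""
    srt = sorted(samples)
    m = len(srt)
    phi = NormalDist().cdf
    return [srt[min(int(phi(x) * m), m - 1)] for x in x_nodes]


s0, mu, sigma, big_dt = 1.0, 0.05, 0.2, 1.0
samples = em_terminal_samples(s0, mu, sigma, big_dt, n_steps=50, n_paths=20000)
y_train = empirical_sc_points(samples)  # label vector for the input (s0, mu, sigma, big_dt)
y_exact = [s0 * math.exp((mu - 0.5 * sigma**2) * big_dt + sigma * math.sqrt(big_dt) * x)
           for x in X_NODES]
```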

Table 1.

Training data. Here is an example for training on five SC points.

The generated samples are divided into an ANN training set and an ANN testing set.

The ANN hyperparameters have an impact on the errors from the optimization related to training the ANN, as well as on the model performance. The approximation capacity depends not only on the number of hidden parameters, but also on the network structure (i.e., on the width and depth of the network). In principle, deep neural networks are more expressive than shallow neural networks. The fully connected neural network employed is composed of one input layer, one output layer and four hidden layers. Each hidden layer consists of 50 neurons, with the Softplus function, i.e., $\mathrm{softplus}(x) = \log(1 + e^{x})$, as the activation function (Nwankpa et al. 2018). Before training, the hidden parameters are initialized with the Glorot technique (Glorot and Bengio 2010). Training is performed in mini-batches: at each iteration, the Adam optimization algorithm (Kingma and Ba 2014), a variant of stochastic gradient descent (SGD) with adaptive learning rates, randomly selects a portion of the training samples according to the batch size, and uses it to calculate the gradient for updating the hidden parameters. One epoch corresponds to one pass of the optimizer through all training samples. The mean squared error (MSE), which measures the distance between the ground truth and the model values in supervised learning, is used as the loss function to update the hidden parameters during training. The mean absolute error (MAE) is also reported, because the pathwise error of the 7L scheme, studied in Section 5.3, is related to the maximum absolute difference in the approximated collocation points, as derived in the next section.
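For concreteness, the network described above can be written down in a few lines. The following pure-Python sketch (no deep learning framework, forward pass only) builds the layer sizes, a Glorot uniform initialization with zero biases, Softplus hidden activations and a linear output layer, together with the MSE and MAE measures. The input dimension of four, the output of five SC points, the uniform variant of the Glorot scheme and the helper names are assumptions for illustration; training with Adam is not shown.

```python
import math
import random


def softplus(x):
    """Numerically stable softplus, log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def glorot_uniform(fan_in, fan_out, rng):
    """Glorot (Xavier) uniform: U(-l, l) with l = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]


def init_mlp(sizes, seed=0):
    """(weights, zero biases) per layer of a fully connected network."""
    rng = random.Random(seed)
    return [(glorot_uniform(n_in, n_out, rng), [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(params, x):
    """Softplus on the hidden layers, identity on the output layer."""
    a = list(x)
    for layer, (w, b) in enumerate(params):
        z = [sum(wij * aj for wij, aj in zip(row, a)) + bi for row, bi in zip(w, b)]
        a = z if layer == len(params) - 1 else [softplus(v) for v in z]
    return a


def mse(y, y_hat):
    return sum((u - v) ** 2 for u, v in zip(y, y_hat)) / len(y)


def mae(y, y_hat):
    return sum(abs(u - v) for u, v in zip(y, y_hat)) / len(y)


# Hypothetical sizes: 4 inputs, four hidden layers of 50 neurons, 5 SC-point outputs.
SIZES = [4, 50, 50, 50, 50, 5]
params = init_mlp(SIZES)
y_hat = forward(params, [1.0, 0.5, 0.05, 0.3])
n_params = sum(len(w) * len(w[0]) + len(b) for w, b in params)
```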

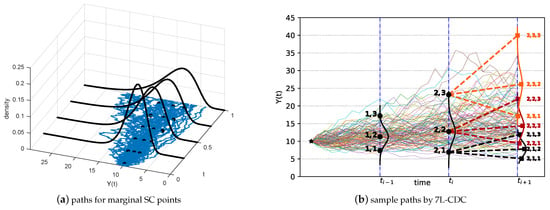

The training process starts with a relatively large learning rate to avoid getting stuck in local optima. After 1000 epochs, the learning rate is reduced, and training continues for 500 more epochs to achieve steady convergence. Afterwards, the trained ANN is evaluated on the testing dataset, with the results presented in Figure 4 (for two of the collocation points) and Table 2. The predicted values agree very well with the true values of the stochastic collocation points. This indicates that the trained ANNs generalize well and generate accurate and robust approximations for all five collocation points.

Figure 4.

Goodness of fit on the test dataset. The two scatter plots show the relation between the predicted values and the ground truth.

Table 2.

Approximation performance on the test dataset.

5.2. Numerical Error Analysis, the Lagrangian Case

There are essentially two approximation errors in the 7L scheme: a neural network approximation error when generating the collocation points, and an SCMC error when representing the conditional distribution function.

Considering $d$ inputs, the neural network may approximate any function $f$ from the Sobolev space $\mathcal{W}^{n,\infty}([0,1]^d)$, in which the derivatives up to order $n - 1$ are Lipschitz continuous (Yarotsky 2017). The input and output variables can be normalized to the unit interval $[0, 1]$. With a fixed network architecture during training, the approximation error can be assessed as follows.

Theorem 1.

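From Yarotsky (2017), given any $\epsilon \in (0, 1)$, there exists a neural network which is capable of approximating any function $f$ from the unit ball of $\mathcal{W}^{n,\infty}([0,1]^d)$ with error $\epsilon$, based on the following configuration:

- piecewise linear (ReLU) activation functions,

- at most $c\,(\ln(1/\epsilon) + 1)$ hidden layers and at most $c\,\epsilon^{-d/n}(\ln(1/\epsilon) + 1)$ weights and computation units, where the constant $c$ depends on the parameters $d$ and $n$.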

When the architecture is dynamic, the error bound can be further reduced, as shown in Montanelli and Du (2019) and Yarotsky (2017). One of the assumptions is that the ANNs are sufficiently trained, so that the optimization error is negligible.

The error from the SCMC methodology was derived in Grzelak et al. (2019). The optimal collocation points $x_j$, $j = 1, \dots, m$, correspond to the zeros of an orthogonal polynomial. In the case of Lagrange interpolation, when the collocation method can be connected to Gauss quadrature, we have

in terms of the difference between the target and the SC-approximated function, the weight function and the quadrature weights. When the Gauss–Hermite quadrature is used with $m$ collocation points, the approximation error of the CDF can be estimated as,

where and the distance function

In other words, the error in approximating the target CDF converges to zero exponentially as the number of collocation points increases.

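At each time point $t_i$, the process is approximated with the collocation method by a polynomial $g(x)$, i.e., in the case of classical Lagrange interpolation, using the Lagrange basis polynomials $\ell_j(x)$,

$$g(x) = \sum_{j=1}^{m} y_j\, \ell_j(x), \qquad \ell_j(x) = \prod_{k=1,\, k \neq j}^{m} \frac{x - x_k}{x_j - x_k},$$

where the collocation points are $y_j = F_Y^{-1}\bigl(F_X(x_j)\bigr)$, with $Y$ the state variable at time $t_i$ and $F_Y$, $F_X$ the CDFs of $Y$ and of the standard normal variable $X$. Because of the use of an ANN, the collocation points are not exact, but they are approximated by $\hat{y}_j$, the ANN-approximated values. The error associated with $\hat{y}_j$ can be estimated as in (Montanelli and Du 2019). The impact of this error on the obtained output distribution needs to be assessed. Let $\hat{g}(x)$ denote the approximate function based on the predicted ANN collocation points $\hat{y}_j$, and $X \sim \mathcal{N}(0, 1)$ a standard normal random variable. The approximation error, in the strong sense, is given by

$$\mathbb{E}\bigl|g(X) - \hat{g}(X)\bigr| = \mathbb{E}\Bigl|\sum_{j=1}^{m} \bigl(y_j - \hat{y}_j\bigr)\, \ell_j(X)\Bigr|.$$

Note that the interpolation functions are identical, as they depend solely on the $x_j$ values. We arrive at the following error related to the ANNs,

$$\mathbb{E}\bigl|g(X) - \hat{g}(X)\bigr| \le \max_{j} \bigl|y_j - \hat{y}_j\bigr|\; \mathbb{E}\Bigl[\sum_{j=1}^{m} \bigl|\ell_j(X)\bigr|\Bigr].$$

Considering the error introduced by SCMC in (39), the total pathwise error reads

$$\mathbb{E}\bigl|Y - \hat{g}(X)\bigr| \le \mathbb{E}\bigl|Y - g(X)\bigr| + \max_{j} \bigl|y_j - \hat{y}_j\bigr|\; \mathbb{E}\Bigl[\sum_{j=1}^{m} \bigl|\ell_j(X)\bigr|\Bigr].$$
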
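In other words, the expected pathwise error can be bounded by the SCMC (CDF) approximation error plus the largest difference in the ANN-approximated collocation points, multiplied by the expected sum of the absolute Lagrange basis functions, which depends only on the collocation nodes.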
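The ANN-related part of this bound can be checked numerically. In this Python sketch, the "exact" collocation points come from the hypothetical monotone map $e^{0.2x}$, and ANN predictions are simulated by small random perturbations. For every standard normal sample, the difference between the two collocation samples stays below $\max_j |y_j - \hat y_j| \sum_j |\ell_j(x)|$, by the triangle inequality. The map, the perturbation size and the sample count are illustrative assumptions.

```python
import math
import random


def lagrange_basis(x_nodes, j, xi):
    """l_j(xi) = prod_{k != j} (xi - x_k) / (x_j - x_k)."""
    val = 1.0
    for k, xk in enumerate(x_nodes):
        if k != j:
            val *= (xi - xk) / (x_nodes[j] - xk)
    return val


def collocation_sample(x_nodes, y, xi):
    """g(xi) = sum_j y_j l_j(xi)."""
    return sum(yj * lagrange_basis(x_nodes, j, xi) for j, yj in enumerate(y))


r1, r2 = math.sqrt(5 - math.sqrt(10)), math.sqrt(5 + math.sqrt(10))
x_nodes = [-r2, -r1, 0.0, r1, r2]
rng = random.Random(1)
y_exact = [math.exp(0.2 * x) for x in x_nodes]          # "true" SC points (illustrative)
y_ann = [y + rng.uniform(-1e-3, 1e-3) for y in y_exact]  # hypothetical ANN predictions
max_dev = max(abs(a - b) for a, b in zip(y_exact, y_ann))

errors, bounds = [], []
for _ in range(2000):
    xi = rng.gauss(0.0, 1.0)
    lebesgue = sum(abs(lagrange_basis(x_nodes, j, xi)) for j in range(len(x_nodes)))
    errors.append(abs(collocation_sample(x_nodes, y_exact, xi)
                      - collocation_sample(x_nodes, y_ann, xi)))
    bounds.append(max_dev * lebesgue)

mean_error = sum(errors) / len(errors)  # Monte Carlo estimate of E|g(X) - g_hat(X)|
mean_bound = sum(bounds) / len(bounds)  # max_j |y_j - y_hat_j| * E[sum_j |l_j(X)|]
```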

Kolmogorov–Smirnov Test

The Kolmogorov–Smirnov (KS) test statistic, i.e., the supremum of the distances between two empirical cumulative distribution functions, is used as a nonparametric measure of their distance. We perform the two-sample Kolmogorov–Smirnov test, as follows,

$$D = \sup_{x} \bigl|F_1(x) - F_2(x)\bigr|,$$

where $F_1$ and $F_2$ are two empirical cumulative distribution functions, one from the 7L-CDC solution and the other one from the reference distribution. We take the analytic solution of the GBM as the reference distribution.
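A minimal Python sketch of the two-sample KS statistic is given below; it sweeps through the merged sorted samples and records the largest gap between the two empirical CDFs. The p-value uses the asymptotic Kolmogorov distribution, which is only a large-sample approximation. In the usage example, a single large Euler step is compared with exact GBM samples; the parameter values and helper names are hypothetical.

```python
import math
import random


def ks_statistic(a, b):
    """Two-sample KS statistic D = sup_x |F_a(x) - F_b(x)| of the empirical CDFs."""
    a, b = sorted(a), sorted(b)
    na, nb = len(a), len(b)
    i = j = 0
    d = 0.0
    while i < na and j < nb:
        x = min(a[i], b[j])
        while i < na and a[i] <= x:
            i += 1
        while j < nb and b[j] <= x:
            j += 1
        d = max(d, abs(i / na - j / nb))
    return d


def ks_pvalue_asymptotic(d, na, nb, terms=100):
    """Asymptotic p-value 2 sum_k (-1)^(k-1) exp(-2 k^2 lam^2) (large-sample approximation)."""
    lam = math.sqrt(na * nb / (na + nb)) * d
    if lam < 0.2:
        return 1.0  # the series converges slowly here; the p-value equals 1 to many digits
    s = sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * lam * lam) for k in range(1, terms + 1))
    return min(max(2.0 * s, 0.0), 1.0)


rng = random.Random(0)
s0, mu, sigma, dt, n = 1.0, 0.05, 0.4, 1.0, 10000
z_ref = [rng.gauss(0.0, 1.0) for _ in range(n)]
z_num = [rng.gauss(0.0, 1.0) for _ in range(n)]
exact_a = [s0 * math.exp((mu - 0.5 * sigma**2) * dt + sigma * math.sqrt(dt) * z) for z in z_ref]
exact_b = [s0 * math.exp((mu - 0.5 * sigma**2) * dt + sigma * math.sqrt(dt) * z) for z in z_num]
euler = [s0 * (1.0 + mu * dt + sigma * math.sqrt(dt) * z) for z in z_num]
d_same = ks_statistic(exact_a, exact_b)  # same distribution: small D
d_euler = ks_statistic(exact_a, euler)   # one large Euler step: clearly different CDF
p_euler = ks_pvalue_asymptotic(d_euler, n, n)
```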

Remark 4

(Time horizon for 7L-CDC). The information in Table 1 is used to train the mapping function between a realization (including marginal SC points) and its conditional SC points, via Equation (28). For the marginal SC points in Equation (29), however, we need training data up to the terminal time T. We therefore generate a second dataset in which the time reaches T (the terminal time of interest) and in which the upper bound of the corresponding input range equals 5. These two datasets are merged into one set in order to train the ANNs for the 7L-CDC methodology.

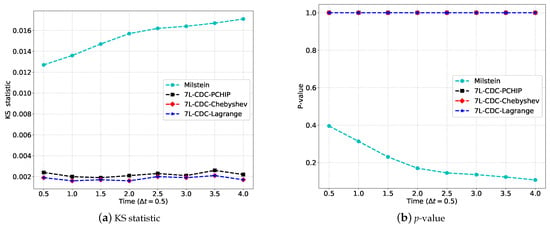

Figure 5 shows the Kolmogorov–Smirnov test at different time points, based on 10,000 samples. We focus on the CDC methodology here and compare the accuracy obtained with the different interpolation methods. The 7L-CDC schemes yield much smaller KS statistics, and correspondingly larger p-values, than the Milstein scheme. This indicates that the CDFs obtained with the 7L-CDC schemes resemble the target CDF much more closely. In addition, unlike for the Milstein scheme, the difference between the approximated and target CDFs of the 7L-CDC schemes remains almost constant with increasing time.

Figure 5.

The Kolmogorov–Smirnov test with 10,000 samples. When the KS statistic is small or the p-value is large, the hypothesis that the two sets of random samples come from the same distribution cannot be rejected.

We also analyze the costs of the different interpolation methods within 7L-CDC. The two steps that require interpolation are the computation of the conditional collocation points and the generation of conditional samples. The computational speed of the 7L-CDC scheme depends on the employed interpolation method; see Table 3. To generate a solution with the same level of strong error, the Milstein scheme requires more computation time, here about 27 s, while the 7L scheme needs 13 s and 7L-CDC (barycentric version) 5 s. The larger the time step, the more computation time is saved.

Table 3.

The CPU running time (s) to reach the same accuracy (CPU: E3-1240, 3.40 GHz), simulating 10,000 sample paths up to the terminal time, based on marginal and conditional SC points. Here, for the 7L scheme, PCHIP is used as the interpolant in Step 3 of Algorithm 1.

Note that, to achieve a similar accuracy in the strong sense, the Euler–Maruyama scheme requires a much finer time grid than the 7L scheme. When the time step is sufficiently large, the 7L-CDC scheme outperforms the Euler–Maruyama scheme in terms of both accuracy and speed (see Table 3). In other words, to achieve the same accuracy, the Euler–Maruyama discretization is computationally slower than the online phase of the 7L scheme. Additionally, the computational time of the 7L scheme can be further reduced by parallelization, for example, on GPUs.

5.3. Pathwise Error Convergence

In this section, we compare the pathwise errors of our proposed novel discretization with those of the classical discretization schemes.

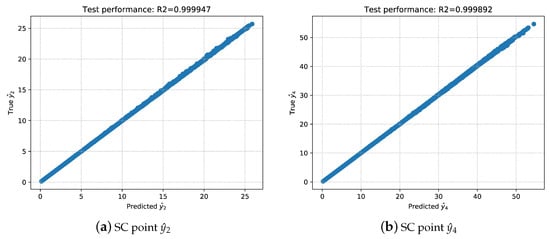

5.3.1. GBM Process

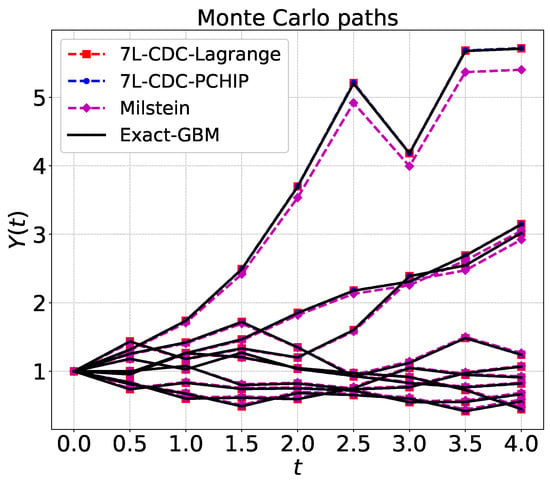

We analyze here the strong convergence properties of the new methodology for the GBM process. For GBM, the exact path is given by the expression (36). The random number, which is drawn from $\mathcal{N}(0, 1)$, is the same for the exact solution (36), the novel schemes (14) and the Milstein scheme (3). The pathwise differences between the numerical schemes and the exact simulation are plotted in Figure 6. With the large time step used there, the 7L-CDC scheme produces paths that are much closer to the exact path than those of the Milstein scheme.

Figure 6.

Paths generated by 7L-CDC for GBM. The paths obtained with Chebyshev interpolation (not plotted) are identical to those obtained with Lagrange interpolation in this case.

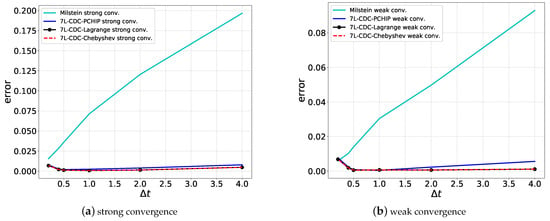

As shown in Figure 7, the 7L-CDC scheme gives rise to flat, almost constant, strong and weak error convergence curves for many different $\Delta t$-values, suggesting a small, constant error even with large time steps $\Delta t$. The Milstein scheme has strong order of convergence one, i.e., $\mathcal{O}(\Delta t)$, so that a larger time step gives rise to a larger error. When the time step becomes small, more time points are needed to reach time $T$, so that the accumulated error of the recursive 7L-CDC scheme increases.
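How the strong error of a classical scheme is measured can be sketched in Python as follows, for the Milstein baseline only (the 7L-CDC scheme itself requires the trained ANNs and is not reproduced here). Exact GBM and Milstein solutions are driven by the same Brownian increments, coarse increments being sums of fine ones, and the observed order is estimated from the errors on successively halved grids. The parameter values and the function name are assumptions for illustration.

```python
import math
import random


def strong_errors_milstein(s0, mu, sigma, t_end, coarse_steps, n_fine, n_paths, seed=0):
    """E|S(T) - S_hat(T)| for Milstein with n steps, on common Brownian paths."""
    rng = random.Random(seed)
    h_f = t_end / n_fine
    sums = {n: 0.0 for n in coarse_steps}
    for _ in range(n_paths):
        dw_fine = [rng.gauss(0.0, math.sqrt(h_f)) for _ in range(n_fine)]
        w_t = sum(dw_fine)
        exact = s0 * math.exp((mu - 0.5 * sigma**2) * t_end + sigma * w_t)  # Equation (36)
        for n in coarse_steps:
            r = n_fine // n  # fine increments per coarse step
            h = t_end / n
            s = s0
            for k in range(n):
                dw = sum(dw_fine[k * r:(k + 1) * r])
                s += mu * s * h + sigma * s * dw + 0.5 * sigma**2 * s * (dw * dw - h)
            sums[n] += abs(exact - s)
    return {n: sums[n] / n_paths for n in coarse_steps}


steps = [2, 4, 8, 16]
err = strong_errors_milstein(1.0, 0.05, 0.4, 1.0, steps, n_fine=16, n_paths=4000)
# Observed order between the coarsest and the finest grid (about 1 for Milstein).
order = math.log(err[2] / err[16]) / math.log(8)
```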

Figure 7.

The strong error is estimated as $\epsilon_s(\Delta t) = \mathbb{E}\,|S(T) - \hat{S}(T)|$, see Equation (4), and the weak error as $\epsilon_w(\Delta t) = |\mathbb{E}[S(T)] - \mathbb{E}[\hat{S}(T)]|$; see (Oosterlee and Grzelak 2019, p. 261) for details on the computation of the convergence rate.

The number of conditional collocation points, by which the conditional distribution at the next time point is mostly determined, contributes significantly to the convergence order of the 7L-CDC scheme. As mentioned, we found empirically that five conditional collocation points offer a good balance between computing effort and accuracy. The CDC matrix C is then of size 5 × 5; that is, at each time point, there are five collocation points, and each of these has five conditional collocation points.

5.3.2. Ornstein–Uhlenbeck Process

In the case of Markov processes, any SDE which can be solved by the Euler–Maruyama discretization can, in principle, also be solved by our ANN methodology, with improved strong convergence properties. In this section, we confirm the strong convergence properties for another stochastic process.

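The mean-reverting Ornstein–Uhlenbeck (OU) process (Uhlenbeck and Ornstein 1930) is defined as,

$$dS(t) = \lambda\bigl(\bar{S} - S(t)\bigr)\,dt + \sigma\, dW(t),$$

with $\bar{S}$ the long-term mean of $S(t)$, $\lambda$ the speed of mean reversion, and $\sigma$ the volatility. The initial value is $S(t_0) = S_0$, with $t_0 = 0$, and the model parameters are $\theta = (\lambda, \bar{S}, \sigma)$. Its analytical solution is given by,

$$S(t) = S_0\, e^{-\lambda t} + \bar{S}\bigl(1 - e^{-\lambda t}\bigr) + \sigma \int_0^t e^{-\lambda (t - s)}\, dW(s), \tag{45}$$

where the stochastic integral is normally distributed with mean zero and variance $\frac{\sigma^2}{2\lambda}\bigl(1 - e^{-2\lambda t}\bigr)$. Equation (45) is used to compute the reference value for the pathwise error and the strong convergence.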
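A minimal Python sketch of the exact OU transition over one (possibly large) time step, next to the Euler–Maruyama step, is given below. It uses the symbols $\bar S$, $\lambda$ and $\sigma$ for the long-term mean, the speed of mean reversion and the volatility; the parameter values and function names are hypothetical. The exact step is consistent under composition (two half steps equal one full step), whereas the Euler step overshoots the mean when $\lambda\Delta t$ is large.

```python
import math
import random


def ou_exact_step(s, s_bar, lam, sigma, dt, z):
    """Exact OU transition over dt, starting from the current value s (z ~ N(0, 1))."""
    e = math.exp(-lam * dt)
    return s * e + s_bar * (1.0 - e) + sigma * math.sqrt((1.0 - e * e) / (2.0 * lam)) * z


def ou_euler_step(s, s_bar, lam, sigma, dt, z):
    """Euler-Maruyama step (identical to Milstein, as the diffusion coefficient is constant)."""
    return s + lam * (s_bar - s) * dt + sigma * math.sqrt(dt) * z


s0, s_bar, lam, sigma, dt = 0.5, 1.0, 2.0, 0.3, 1.0
# Deterministic check (sigma = 0): two exact half steps equal one exact full step.
half = ou_exact_step(ou_exact_step(s0, s_bar, lam, 0.0, dt / 2, 0.0), s_bar, lam, 0.0, dt / 2, 0.0)
full = ou_exact_step(s0, s_bar, lam, 0.0, dt, 0.0)
euler_full = ou_euler_step(s0, s_bar, lam, 0.0, dt, 0.0)  # lam * dt = 2: overshoots s_bar

# Moment check of the exact large step against the theoretical mean and variance.
rng = random.Random(0)
xs = [ou_exact_step(s0, s_bar, lam, sigma, dt, rng.gauss(0.0, 1.0)) for _ in range(50000)]
mean = sum(xs) / len(xs)
var = sum((x - mean) ** 2 for x in xs) / (len(xs) - 1)
var_theory = sigma**2 * (1.0 - math.exp(-2.0 * lam * dt)) / (2.0 * lam)
```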

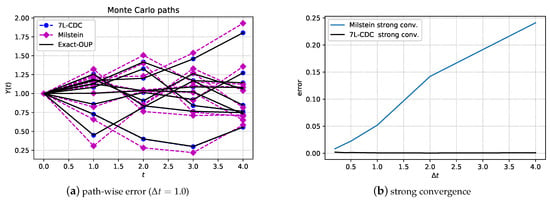

We employ the same data-driven procedure as for GBM to discretize and solve the OU process. In the training phase, the Euler–Maruyama scheme (2) is used to discretize the OU dynamics and generate the dataset. Note that the Milstein and Euler schemes are identical in the case of the OU process, as the diffusion coefficient is constant. As the OU process is a Markov process, we can again vary the inputs to learn the relation between the conditional SC points and the marginal SC points (i.e., as in Equation (28)). Similar to Table 1, the ANN learns five SC points; see Section 5.1 for the details of the training process.

After training, the obtained ANNs are applied to solve the OU process for specific parameter values of interest. We provide an example in Figure 8, which confirms that the sample paths generated by 7L-CDC are very close to the exact solution, and that the error, in the sense of strong convergence, stays close to zero even with a large time step.

Figure 8.

Paths and strong convergence for the OU process. The sample paths with barycentric, Chebyshev and PCHIP interpolation overlap for the 7L-CDC scheme. There are five marginal and five conditional SC points at each time point.

5.4. Applications in Finance

The possibility to take large time steps and still obtain accurate SDE solutions is interesting in computational finance, as there are several financial products that are updated on a daily basis (think of an overnight interest rate), whereas the monitoring of financial contracts and risk management is typically only done on a weekly, monthly or even yearly basis. In such situations, our novel scheme can be useful. Large time step simulation is an active research topic in computational finance; see the exact (and almost exact) Monte Carlo simulation papers, e.g., Broadie and Kaya (2006) and Leitao et al. (2017), for the Heston and SABR stochastic volatility asset dynamics, respectively.

5.4.1. The Asian Option

Moreover, the strong convergence property of an SDE discretization is important in many cases. When valuing so-called path-dependent options, for example, improved strong convergence enhances the convergence of a Monte Carlo simulation. Options are governed by their pay-off function (i.e., the option value at the final time $T$ of the contract). Here we consider a path-dependent exotic option, the so-called European-style Asian option, which has a payoff that is based on a time-averaged underlying stock price. For example, the pay-off of a fixed strike Asian call option is given by

$$V(T, S) = \max\bigl(A(T) - K, 0\bigr),$$

where $T$ is the option contract’s expiry time, and $K$ is the predetermined strike price. Here, $A(T)$ denotes the discrete arithmetic average of the stock prices over $m$ monitoring dates $t_1 < t_2 < \dots < t_m = T$,

$$A(T) = \frac{1}{m} \sum_{i=1}^{m} S(t_i),$$

where $S(t_i)$ is the observed stock price at time $t_i$, $i = 1, \dots, m$. Averaging thus takes place in the time-wise direction, and we consider pricing financial options based on the discrete arithmetic average of a number of stock prices.

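We assume here that the underlying stock price follows Geometric Brownian motion, as in Equation (35), under the risk-neutral measure $\mathbb{Q}$, meaning $\mu = r$, where $r$ is the risk-free interest rate. There is a cash account $M(t)$, governed by $dM(t) = rM(t)\,dt$. The value of the European-style Asian option is then given by

$$V(t_0, S_0) = M(t_0)\, \mathbb{E}^{\mathbb{Q}}\left[\frac{V(T, S)}{M(T)} \,\Big|\, \mathcal{F}(t_0)\right] = e^{-r(T - t_0)}\, \mathbb{E}^{\mathbb{Q}}\bigl[\max\bigl(A(T) - K, 0\bigr) \mid \mathcal{F}(t_0)\bigr].$$
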
Because the pay-off of such options is path-dependent, the improved strong convergence of the 7L-CDC variant is expected to result in superior convergence of the option price, as compared to classical numerical discretization schemes.
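The Monte Carlo valuation of the fixed-strike arithmetic Asian call can be sketched as follows. Here, exact GBM steps between the monitoring dates serve as a stand-in for a large time step scheme, and the monitoring dates are assumed to be equally spaced with $t_m = T$; the function names and parameter values are illustrative, not those of Table 4.

```python
import math
import random


def asian_call_mc(s0, k, r, sigma, t_end, m, n_paths, seed=0):
    """Discounted MC price of a fixed-strike arithmetic Asian call, using one exact
    GBM step between consecutive equally spaced monitoring dates."""
    rng = random.Random(seed)
    dt = t_end / m
    drift = (r - 0.5 * sigma**2) * dt
    vol = sigma * math.sqrt(dt)
    total = 0.0
    for _ in range(n_paths):
        s, running = s0, 0.0
        for _ in range(m):
            s *= math.exp(drift + vol * rng.gauss(0.0, 1.0))  # one large, exact step
            running += s
        total += max(running / m - k, 0.0)  # pay-off max(A(T) - K, 0)
    return math.exp(-r * t_end) * total / n_paths


def relative_error(v_num, v_ref):
    """Relative pricing error with respect to a reference value."""
    return abs(v_num - v_ref) / v_ref


price = asian_call_mc(100.0, 100.0, 0.05, 0.2, 1.0, 12, 20000)
```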

We report the relative error, which is defined as

$$\epsilon_{\mathrm{rel}} = \frac{\bigl|\hat{V} - V_{\mathrm{ref}}\bigr|}{V_{\mathrm{ref}}},$$

where $\hat{V}$ denotes the option price obtained with a numerical scheme and $V_{\mathrm{ref}}$ is based on the exact GBM Monte Carlo simulation. As shown in Table 4, the 7L-CDC scheme gives highly accurate Asian option prices, compared to the Milstein scheme. As the accuracy of Asian option prices depends directly on the accuracy of the realized paths, an increasing number of monitoring dates will give rise to higher accuracy by 7L-CDC.

Table 4.

Pricing a European-style Asian option with a fixed strike price.

Next, we focus on the Asian option’s sensitivity. The sensitivity of the option price with respect to volatility is called Vega, which can be computed in a pathwise fashion (see Chapter 7 in Glasserman 2004), as follows,

where N represents the number of grid points over time. The chain rule is employed to derive the sensitivity. First of all, we compute the gradient of the payoff function with respect to the underlying stock price, by

Then, the derivative of the stock price at time with respect to the model parameter, , can be found with the trained ANNs, as given by Equation (33). Vega can be estimated by,

When there are M sample paths, we have,

As shown in Table 1, there are four input arguments in the ANN function (note that the drift term equals the interest rate, i.e., $\mu = r$, in the risk-neutral world). Since the previous realization is itself a function of the model parameters (here $r$ and $\sigma$), the total derivative with respect to the volatility in Equation (34) becomes, at the next time point,

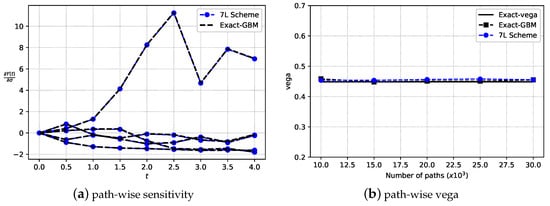

where the derivative of the previous realization with respect to the volatility is known from the previous time point. As for the simulation of the Monte Carlo paths, this derivative is computed iteratively. Figure 9a compares the pathwise sensitivities obtained via Equations (37) and (51): the pathwise derivative obtained with the 7L scheme agrees closely with the analytical solution. Figure 9b confirms that the ANN methodology computes a highly accurate Asian option Vega by means of the above pathwise sensitivity. In summary, the sensitivities with respect to the model parameters can be obtained highly accurately from the trained ANNs. As the 7L-CDC scheme is composed of marginal and conditional collocation points, the above procedure for computing the pathwise sensitivity also applies to the 7L-CDC variant, by using the chain rule.
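The iterative computation of the pathwise Vega can be sketched in Python. In this sketch, the exact GBM step is a hypothetical stand-in for the trained ANN map, so that the recursion $\frac{dS_{i+1}}{d\sigma} = \frac{S_{i+1}}{S_i}\frac{dS_i}{d\sigma} + S_{i+1}\bigl(\sqrt{\Delta t}\,Z_i - \sigma\Delta t\bigr)$ holds exactly, and the pay-off gradient is $\mathbb{1}\{A(T) > K\}$ times $1/m$ per monitoring date. A central finite difference with common random numbers serves as a check, as for the reference Vega in Figure 9. Function names and parameter values are assumptions.

```python
import math
import random


def asian_call_vega_pathwise(s0, k, r, sigma, t_end, m, n_paths, seed=0, bump=0.0):
    """Price and pathwise Vega of a fixed-strike arithmetic Asian call.

    dS/dsigma is propagated step by step with the recursive total derivative
    dS_{i+1}/dsigma = (S_{i+1}/S_i) dS_i/dsigma + S_{i+1} (sqrt(dt) Z_i - sigma dt).
    """
    sig = sigma + bump
    rng = random.Random(seed)  # same seed -> same normals for every bump (common random numbers)
    dt = t_end / m
    disc = math.exp(-r * t_end)
    price = vega = 0.0
    for _ in range(n_paths):
        s, ds = s0, 0.0  # S(t_0) = S_0 does not depend on sigma
        avg, davg = 0.0, 0.0
        for _ in range(m):
            z = rng.gauss(0.0, 1.0)
            s_new = s * math.exp((r - 0.5 * sig**2) * dt + sig * math.sqrt(dt) * z)
            ds = (s_new / s) * ds + s_new * (math.sqrt(dt) * z - sig * dt)
            s = s_new
            avg += s / m
            davg += ds / m
        price += max(avg - k, 0.0)
        vega += davg if avg > k else 0.0  # d max(A - K, 0) / dA = 1{A > K}
    return disc * price / n_paths, disc * vega / n_paths


args = (100.0, 100.0, 0.05, 0.2, 1.0, 12, 5000)
price, vega = asian_call_vega_pathwise(*args)
h = 1e-6
p_up, _ = asian_call_vega_pathwise(*args, bump=h)
p_dn, _ = asian_call_vega_pathwise(*args, bump=-h)
vega_fd = (p_up - p_dn) / (2 * h)
```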

Figure 9.

Pathwise estimator of Vega. The exact Vega is calculated by means of a central finite difference.

5.4.2. Bermudan Option Valuation

When dealing with so-called Bermudan options, the option holder has the right (but not the obligation) to exercise the option contract at a finite number of pre-specified dates up to the final time T. At an exercise date, when the holder decides to exercise the Bermudan option, she immediately obtains the current payoff value of the contract. Alternatively, she may wait until the next exercise opportunity. The Bermudan option can be exercised at the set of exercise dates $\{t_1, \dots, t_N = T\}$, with a constant time difference $\Delta t = t_{i+1} - t_i$ for any $i$.

In this experiment, we compare the performance of the new 7L-CDC discretization scheme with a classical scheme. Valuation of the Bermudan option takes place by means of the well-known Longstaff–Schwartz Monte Carlo (LSMC) method (Longstaff and Schwartz 2001), a least squares Monte Carlo method. For convenience, the Longstaff–Schwartz algorithm is presented in Appendix A.

The difference between a large time step simulation and a classical simulation, like the Milstein scheme, is that a classical scheme requires additional time steps to be taken between the early-exercise dates of the Bermudan option, while with the 7L-CDC scheme, we can perform a one-step Monte Carlo simulation without any intermediate grid points between adjacent early-exercise dates.
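The one-step simulation between exercise dates combined with LSMC can be sketched in Python as follows. Exact GBM steps stand in for the 7L-CDC scheme, and the regression basis $\{1, x, x^2\}$ with $x = S/K$ on in-the-money paths is a common but simplified choice (the original Longstaff–Schwartz paper uses weighted Laguerre polynomials). This is an illustration under those assumptions, not the algorithm of Appendix A; the function names and the classic test parameters are hypothetical choices.

```python
import math
import random
from statistics import NormalDist


def solve3(a, b):
    """Solve the 3x3 system a x = b by Gaussian elimination with partial pivoting."""
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda row: abs(m[row][col]))
        m[col], m[piv] = m[piv], m[col]
        for row in range(col + 1, 3):
            f = m[row][col] / m[col][col]
            for c in range(col, 4):
                m[row][c] -= f * m[col][c]
    x = [0.0] * 3
    for row in (2, 1, 0):
        x[row] = (m[row][3] - sum(m[row][c] * x[c] for c in range(row + 1, 3))) / m[row][row]
    return x


def bermudan_put_lsmc(s0, k, r, sigma, t_end, n_ex, n_paths, seed=0):
    rng = random.Random(seed)
    dt = t_end / n_ex
    disc = math.exp(-r * dt)
    # One exact GBM step between consecutive exercise dates (no intermediate grid points).
    paths = []
    for _ in range(n_paths):
        s, path = s0, []
        for _ in range(n_ex):
            s *= math.exp((r - 0.5 * sigma**2) * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0))
            path.append(s)  # path[i] is the stock price at exercise date t_{i+1}
        paths.append(path)
    cash = [max(k - p[-1], 0.0) for p in paths]  # exercise value at T
    for i in range(n_ex - 2, -1, -1):            # exercise dates t_{n_ex-1}, ..., t_1
        cash = [c * disc for c in cash]          # discount from t_{i+2} to t_{i+1}
        itm = [j for j, p in enumerate(paths) if p[i] < k]
        if len(itm) < 3:
            continue
        # Least squares regression of discounted cash flows on {1, x, x^2}, x = S / K.
        ata = [[0.0] * 3 for _ in range(3)]
        atb = [0.0] * 3
        for j in itm:
            x = paths[j][i] / k
            basis = (1.0, x, x * x)
            for a in range(3):
                atb[a] += basis[a] * cash[j]
                for b in range(3):
                    ata[a][b] += basis[a] * basis[b]
        beta = solve3(ata, atb)
        for j in itm:
            x = paths[j][i] / k
            continuation = beta[0] + beta[1] * x + beta[2] * x * x
            exercise = k - paths[j][i]
            if exercise > continuation:
                cash[j] = exercise
    return max(disc * sum(cash) / n_paths, max(k - s0, 0.0))


price = bermudan_put_lsmc(36.0, 40.0, 0.06, 0.2, 1.0, 10, 20000)
nd = NormalDist().cdf
d1 = (math.log(36.0 / 40.0) + (0.06 + 0.5 * 0.2**2) * 1.0) / 0.2
d2 = d1 - 0.2
euro_put = 40.0 * math.exp(-0.06) * nd(-d2) - 36.0 * nd(-d1)  # Black-Scholes European put
```

The Bermudan price should exceed the corresponding European put value, since early exercise can only add value.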

We also assume here that the underlying stock price follows Geometric Brownian motion, as in Equation (35), under the risk-neutral measure, with $\mu = r$. A Bermudan put option, with pay-off function $\max(K - S(t), 0)$ and strike price $K$, is priced by means of Monte Carlo paths, up to the terminal time $T$ with a constant time step $\Delta t$. The random seed is set to zero when drawing random numbers. We compare the relative errors with respect to a reference price that is computed with the help of a Monte Carlo method based on the exact simulation of GBM (36).

As shown in Table 5, the option prices based on the 7L-CDC Monte Carlo simulation are highly satisfactory, and the related error does not increase with larger time steps. In contrast, for the Milstein discretization, a larger time step gives rise to significant pricing errors.

Table 5.

Bermudan put option prices based on large time step Monte Carlo simulations.

Remark 5.

In principle, a sample value can be any positive real number. So, a path value may exceed the prescribed upper bound of the stock price in Table 1. Stock prices outside the training interval are called outliers. Outliers did not appear in the experiments of Table 5. As an alternative way to avoid outliers, one may scale the asset price to remove the dependence on the initial value. For example, GBM can be scaled by $Y(t) = e^{-\mu t} S(t)/S_0$. Using Itô’s lemma, we obtain the drift-less process $dY(t) = \sigma Y(t)\, dW(t)$, with initial value $Y(0) = 1$. The following formula returns the original variable: $S(t) = S_0 e^{\mu t} Y(t)$. In this case, scaling guarantees a fixed initial value, independent of $S_0$.

6. Conclusions and Outlook

We developed a data-driven numerical solver for stochastic differential equations, with which large time step simulations can be carried out accurately in the sense of strong convergence. By combining artificial neural networks with the stochastic collocation Monte Carlo method, the ANN learns a nonlinear function that yields a small number of stochastic collocation points, from which the unknown (conditional) distributions are recovered. Theoretical analysis indicates that the numerical error is controllable and does not increase when the simulation time step increases.

There are several advantages to the proposed approach. The powerful expressive ability of neural networks enables the ANNs to accurately approximate the stochastic collocation points. The compression–decompression method reduces the computational costs, so that the numerical method can be applied in practice. In finance, the proposed large time step methodology can be highly beneficial for the valuation of path-dependent financial option contracts or in risk management applications.

As an outlook, it will be relevant to extend the introduced methodology to higher-dimensional or more involved SDE dynamics. We will define multi-dimensional stochastic collocation points (i.e., by means of a tensor) for a multi-dimensional system of SDEs, and choose a Convolutional Neural Network (LeCun et al. 2015) to efficiently process these collocation points. Of course, this may not trivially generalize to truly high-dimensional systems, but the approximation of systems of moderate dimensionality should be possible. The computational speed can be further improved by parallel computation, for example, on GPUs. Non-Markovian processes may also be solved with a large time step by the proposed ANN method, where the conditional collocation points depend on past realizations. Fractional Brownian motion (Mandelbrot and Van Ness 1968) forms a relevant example, which is used for the simulation of rough volatility in finance (Gatheral et al. 2018). In such a context, architectures beyond fully connected neural networks, e.g., recurrent neural networks (RNN) or long short-term memory (LSTM) networks (see a review in Yu et al. 2019), are recommended for approximating the nonlinear transition probability function, for example, Equation (13).

Multilevel Monte Carlo (MLMC) methods, as developed by Giles (2008, 2015), form another interesting research direction for our accurate large time step discretization schemes, as it is well known that the strong convergence properties of SDE discretizations impact the efficiency of MLMC methods.

Author Contributions

Writing—original draft preparation, S.L.; SCMC method and research advice, L.A.G.; Supervision, C.W.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

S.L. would like to thank the China Scholarship Council (CSC) for the financial support.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Longstaff–Schwartz Algorithm

For convenience, we detail the Longstaff–Schwartz Monte Carlo (LSMC) algorithm here (see Algorithm A1).

Algorithm A1: 7L Scheme Longstaff–Schwartz Algorithm

Note

1. With seven-league boots, we are marching through the time-wise direction; see also https://en.wikipedia.org/wiki/Seven-league_boots, accessed on 1 September 2020.

References

- Bar-Sinai, Yohai, Stephan Hoyer, Jason Hickey, and Michael P. Brenner. 2019. Learning data-driven discretizations for partial differential equations. Proceedings of the National Academy of Sciences of the United States of America 116: 15344–49.

- Beck, Christian, Sebastian Becker, Philipp Grohs, Nor Jaafari, and Arnulf Jentzen. 2018. Solving stochastic differential equations and Kolmogorov equations by means of deep learning. arXiv:1806.00421.

- Berrut, Jean-Paul, and Lloyd N. Trefethen. 2004. Barycentric Lagrange Interpolation. SIAM Review 46: 501–17.

- Broadie, Mark, and Özgür Kaya. 2006. Exact simulation of stochastic volatility and other affine jump diffusion processes. Operations Research 54: 217–31.

- Cameron, Robert H., and William T. Martin. 1947. The orthogonal development of nonlinear functionals in series of Fourier-Hermite functionals. Annals of Mathematics 48: 385–92.

- Capriotti, Luca. 2010. Fast Greeks by Algorithmic Differentiation. Journal of Computational Finance 14: 3–35.

- Cybenko, George. 1989. Approximation by superpositions of a sigmoidal function. Mathematics of Control, Signals and Systems 2: 303–14.

- Fritsch, Frederick N., and Ralph E. Carlson. 1980. Monotone piecewise cubic interpolation. SIAM Journal on Numerical Analysis 17: 238–46.

- Gaß, Maximilian, Kathrin Glau, Mirco Mahlstedt, and Maximilian Mair. 2018. Chebyshev interpolation for parametric option pricing. Finance and Stochastics 22: 701–31.

- Gatheral, Jim, Thibault Jaisson, and Mathieu Rosenbaum. 2018. Volatility is rough. Quantitative Finance 18: 933–49.

- Giles, Michael B. 2008. Multilevel Monte Carlo Path Simulation. Operations Research 56: 607–17.

- Giles, Michael B. 2015. Multilevel Monte Carlo methods. Acta Numerica 24: 259–328.

- Giles, Michael B., and Paul Glasserman. 2006. Smoking adjoints: Fast Monte Carlo Greeks. Risk 19: 88–92.

- Glasserman, Paul. 2004. Monte Carlo Methods in Financial Engineering. New York: Springer.

- Glau, Kathrin, Paul Herold, Dilip B. Madan, and Christian Pötz. 2019. The Chebyshev method for the implied volatility. Journal of Computational Finance 23: 1–31.

- Glau, Kathrin, and Mirco Mahlstedt. 2019. Improved error bound for multivariate Chebyshev polynomial interpolation. International Journal of Computer Mathematics 96: 2302–14.

- Glorot, Xavier, and Yoshua Bengio. 2010. Understanding the difficulty of training deep feedforward neural networks. Paper presented at the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, May 13–15; pp. 249–56.

- Goodfellow, Ian, Yoshua Bengio, and Aaron Courville. 2016. Deep Learning. Cambridge: MIT Press.

- Grzelak, Lech A. 2019. The collocating local volatility framework—A fresh look at efficient pricing with smile. International Journal of Computer Mathematics 96: 2209–28.

- Grzelak, Lech A., Jeroen Witteveen, Maria Suarez-Taboada, and Cornelis W. Oosterlee. 2019. The stochastic collocation Monte Carlo sampler: Highly efficient sampling from expensive distributions. Quantitative Finance 19: 339–56.

- Han, Jiequn, Arnulf Jentzen, and Weinan E. 2018. Solving high-dimensional partial differential equations using deep learning. Proceedings of the National Academy of Sciences of the United States of America 115: 8505–10.

- Jain, Shashi, Álvaro Leitao, and Cornelis W. Oosterlee. 2019. Rolling Adjoints: Fast Greeks along Monte Carlo scenarios for early-exercise options. Journal of Computational Science 33: 95–112.

- Karatzas, Ioannis, and Steven E. Shreve. 1988. Brownian Motion and Stochastic Calculus. New York: Springer.

- Kingma, Diederik P., and Jimmy Ba. 2014. Adam: A Method for Stochastic Optimization. arXiv arXiv:1412.6980.

- LeCun, Yann, Yoshua Bengio, and Geoffrey Hinton. 2015. Deep learning. Nature 521: 436–44.

- Leitao, Álvaro, Lech A. Grzelak, and Cornelis W. Oosterlee. 2017. On a one time-step Monte Carlo simulation approach of the SABR model: Application to European options. Applied Mathematics and Computation 293: 461–79.

- Li, Xingjie, Fei Lu, and Felix X. F. Ye. 2021. ISALT: Inference-based schemes adaptive to large time-stepping for locally Lipschitz ergodic systems. arXiv arXiv:2102.12669.

- Longstaff, Francis A., and Eduardo S. Schwartz. 2015. Valuing American Options by Simulation: A Simple Least-Squares Approach. The Review of Financial Studies 14: 113–47.

- Mandelbrot, Benoit B., and John W. Van Ness. 1968. Fractional Brownian Motions, Fractional Noises and Applications. SIAM Review 10: 422–37.

- Maziar, Raissi, Paris Perdikaris, and George E. Karniadakis. 2019. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics 378: 686–707.

- Milstein, Grigori N. 1975. Approximate integration of stochastic differential equations. Theory of Probability and Its Applications 19: 557–62.

- Montanelli, Hadrien, and Qiang Du. 2019. New Error Bounds for Deep ReLU Networks Using Sparse Grids. SIAM Journal on Mathematics of Data Science 1: 78–92.

- Nwankpa, Chigozie, Winifred Ijomah, Anthony Gachagan, and Stephen Marshall. 2018. Activation Functions: Comparison of trends in Practice and Research for Deep Learning. arXiv arXiv:1811.03378.

- Oosterlee, Cornelis W., and Lech A. Grzelak. 2019. Mathematical Modeling and Computation in Finance. Singapore: World Scientific.

- Platen, Eckhard. 1999. An introduction to numerical methods for stochastic differential equations. Acta Numerica 8: 197–246.

- Risken, Hannes. 1984. The Fokker-Planck Equation: Methods of Solution and Applications. Springer Series in Synergetics; New York: Springer.

- Rivlin, Theodore J. 1990. Chebyshev Polynomials: From Approximation Theory to Algebra and Number Theory. Pure and Applied Mathematics: A Wiley Series of Texts, Monographs and Tracts; Hoboken: Wiley.

- Sirignano, Justin, and Konstantinos Spiliopoulos. 2018. DGM: A deep learning algorithm for solving partial differential equations. Journal of Computational Physics 375: 1339–64.

- Uhlenbeck, George E., and Leonard Ornstein. 1930. On the Theory of the Brownian Motion. Physical Review 36: 823–41.

- Xie, You, Erik Franz, Mengyu Chu, and Nils Thuerey. 2018. TempoGAN: A Temporally Coherent, Volumetric GAN for Super-Resolution Fluid Flow. ACM Transactions on Graphics 37: 1–15.

- Yarotsky, Dmitry. 2017. Error bounds for approximations with deep ReLU networks. Neural Networks 94: 103–14.

- Yu, Yong, Xiaosheng Si, Changhua Hu, and Jianxun Zhang. 2019. A Review of Recurrent Neural Networks: LSTM Cells and Network Architectures. Neural Computation 31: 1235–70.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).