The Expanding Frontier: The Role of Artificial Intelligence in Pediatric Neuroradiology

Abstract

1. Introduction

2. Current Applications of AI in Pediatric Neuroradiology

2.1. AI-Powered Workflow Management in Pediatric Neuroradiology

2.1.1. Triage in the Emergency Setting

2.1.2. Exam Scheduling

2.1.3. Imaging Protocol Optimization, Image Enhancement, and Synthetic Imaging

2.1.4. Exam Prioritization and Report Generation

2.1.5. Communication of Urgent and Unexpected Findings

2.1.6. Workflow Organization and Workload Distribution

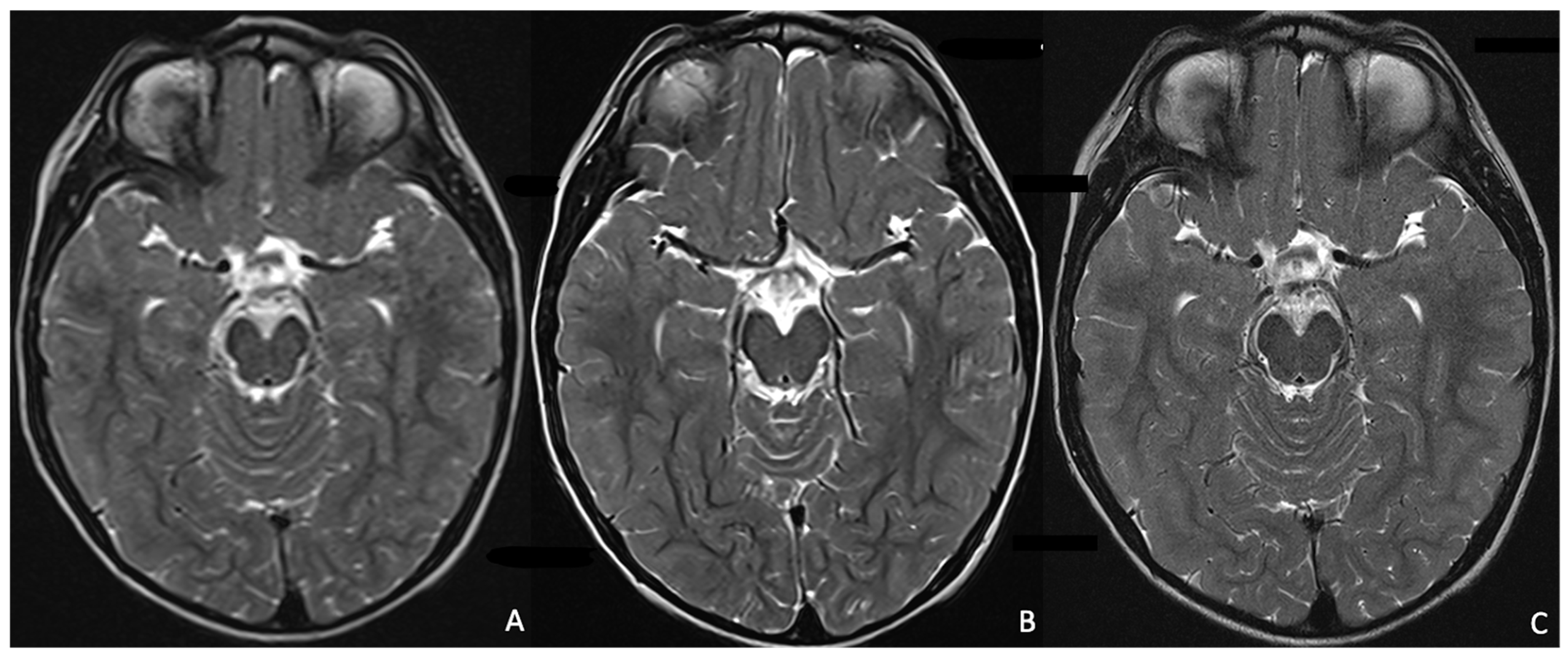

2.2. Current Clinical Applications in Pediatric Neuroradiology

2.2.1. AI Implementation in Pediatric MRI Image Acquisition, Reconstruction, and Artefact Correction

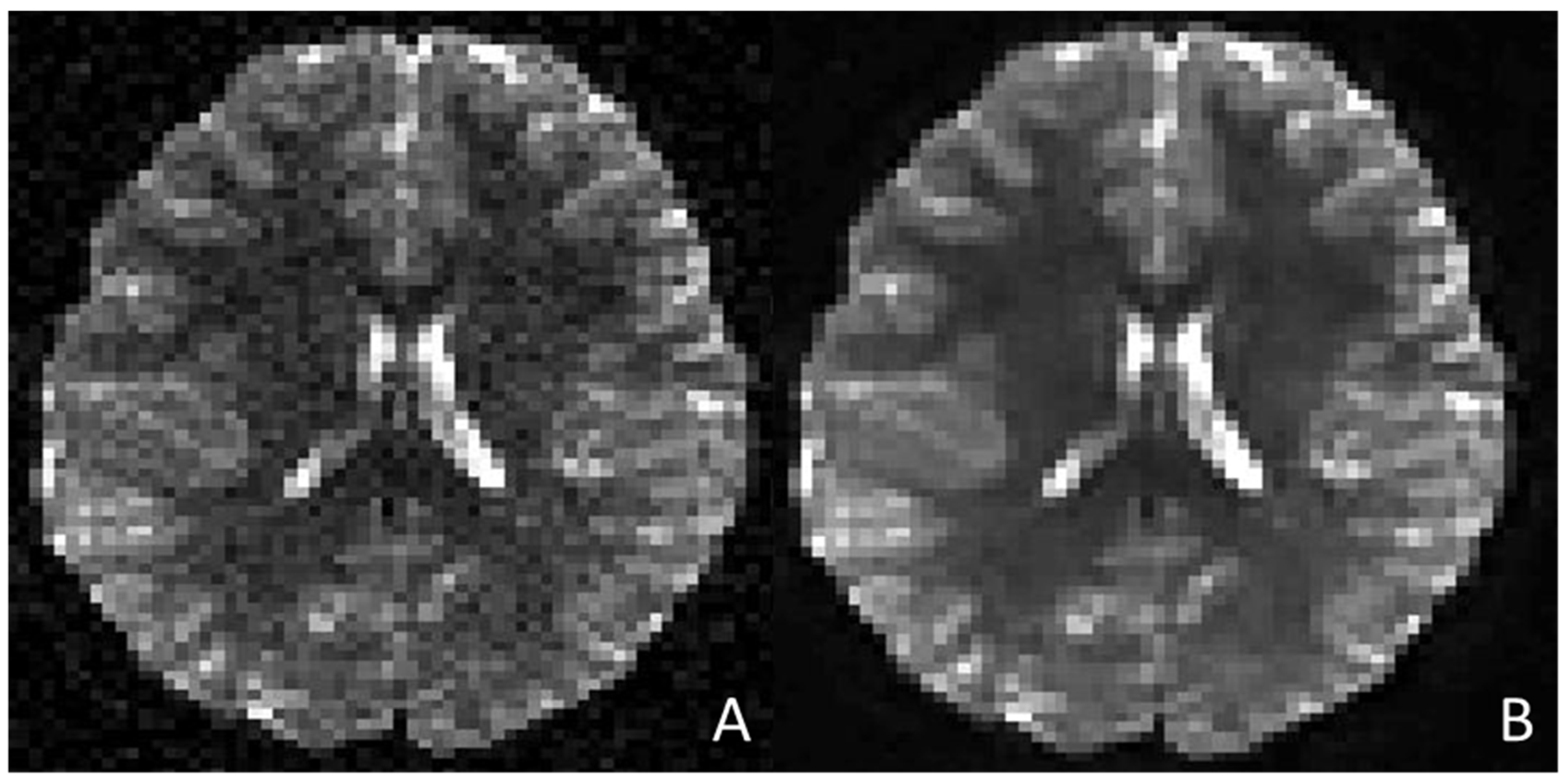

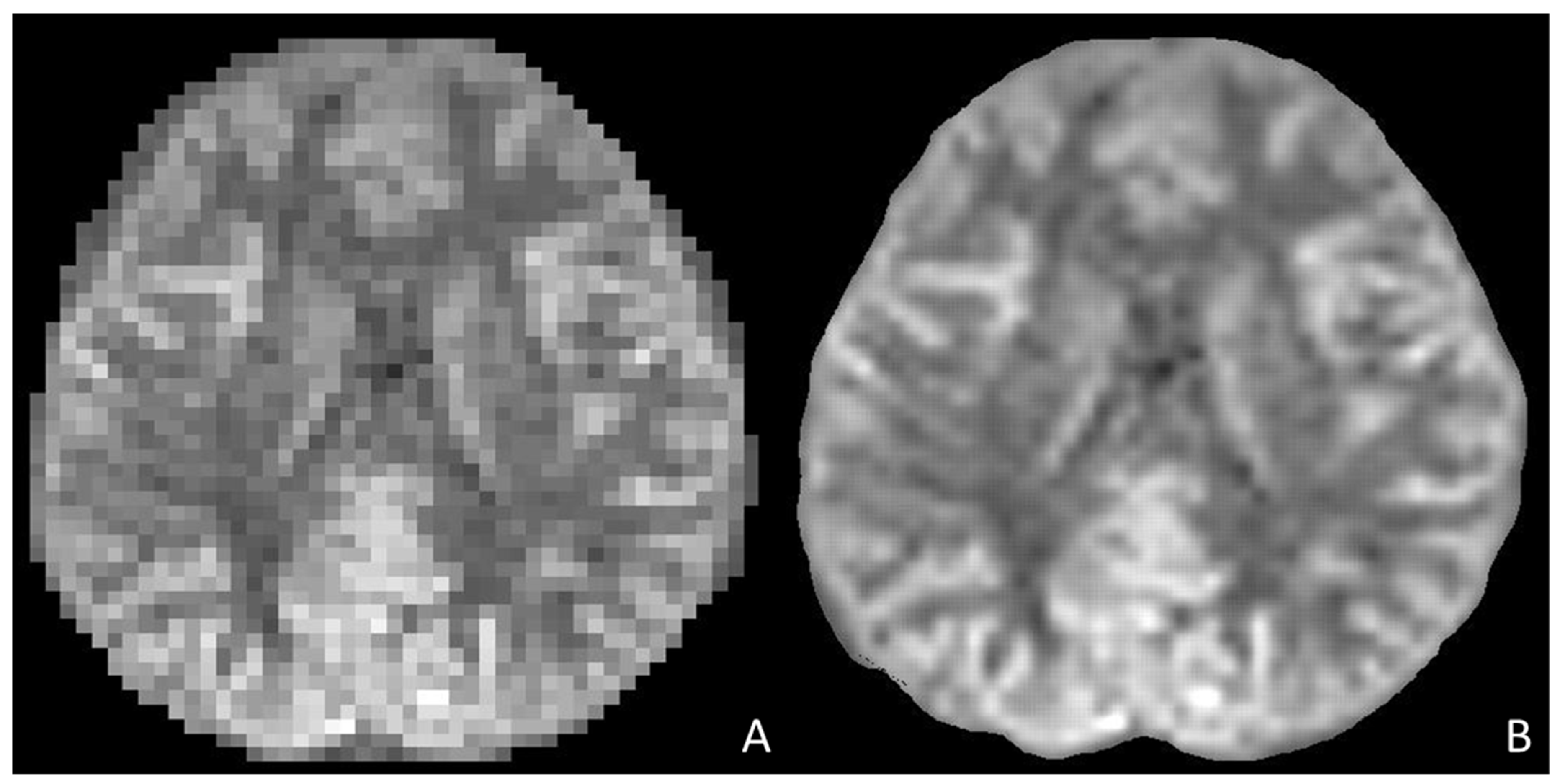

MRI Image Acquisition and Reconstruction

Motion Artefact Correction

2.2.2. Disease Classification, Prognostication, and Treatment Response Prediction

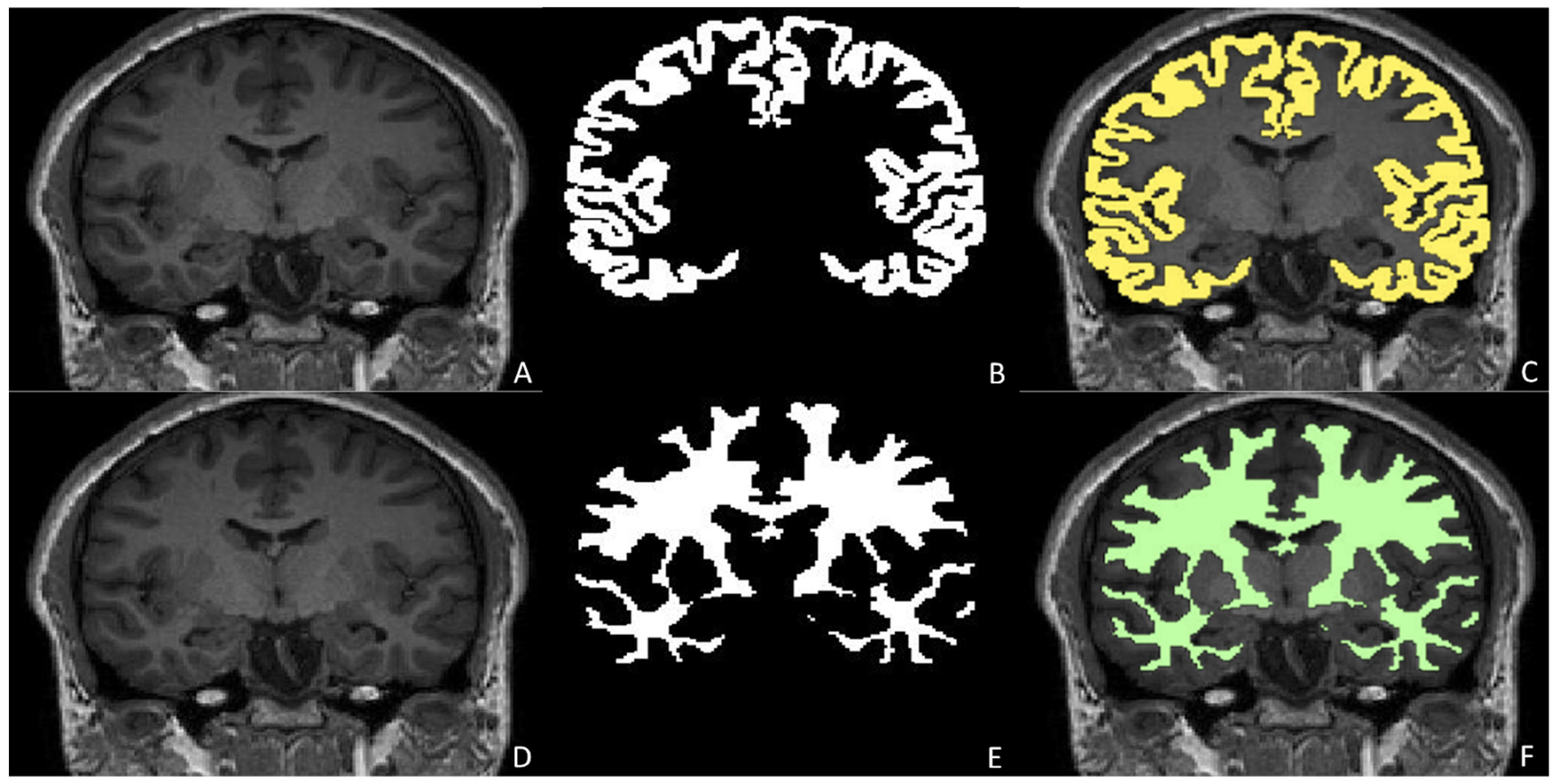

Anatomical Segmentation and Quantitative Assessment in Pediatric Neuroradiology

Tumour Detection, Characterization, and Evolution

Traumatic Brain Injury

Congenital Brain Malformations

Epilepsy Detection and Pre-Surgical Planning

White Matter Disorders

Neurodevelopmental Disorders

3. Current Challenges and Ethical Considerations

3.1. Data Privacy and Security

3.2. Informed Consent and Pediatric Autonomy

3.3. Algorithmic Transparency and Explainability

3.4. Bias, Representativeness, Robustness, and Generalizability of AI Algorithms

3.5. Regulatory and PACS Implementation Hurdles

3.6. Clinical Responsibility and Human Oversight

4. Future Perspectives

4.1. Federated Learning

4.2. Collaborative Research and AI Development

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

References

- Bhatia, A.; Khalvati, F.; Ertl-Wagner, B.B. Artificial Intelligence in the Future Landscape of Pediatric Neuroradiology: Opportunities and Challenges. AJNR Am. J. Neuroradiol. 2024, 45, 549–553. [Google Scholar] [CrossRef]

- Pringle, C.; Kilday, J.-P.; Kamaly-Asl, I.; Stivaros, S.M. The Role of Artificial Intelligence in Paediatric Neuroradiology. Pediatr. Radiol. 2022, 52, 2159–2172. [Google Scholar] [CrossRef]

- Hua, S.B.Z.; Heller, N.; He, P.; Towbin, A.J.; Chen, I.Y.; Lu, A.X.; Erdman, L. Lack of Children in Public Medical Imaging Data Points to Growing Age Bias in Biomedical AI. medRxiv 2025. [Google Scholar] [CrossRef] [PubMed]

- Martinelli, D.; Catesini, G.; Greco, B.; Guarnera, A.; Parrillo, C.; Maines, E.; Longo, D.; Napolitano, A.; De Nictolis, F.; Cairoli, S.; et al. Neurologic Outcome Following Liver Transplantation for Methylmalonic Aciduria. J. Inherit. Metab. Dis. 2023, 46, 450–465. [Google Scholar] [CrossRef] [PubMed]

- Siri, B.; Greco, B.; Martinelli, D.; Cairoli, S.; Guarnera, A.; Longo, D.; Napolitano, A.; Parrillo, C.; Ravà, L.; Simeoli, R.; et al. Positive Clinical, Neuropsychological, and Metabolic Impact of Liver Transplantation in Patients with Argininosuccinate Lyase Deficiency. J. Inherit. Metab. Dis. 2025, 48, e12843. [Google Scholar] [CrossRef] [PubMed]

- Straus Takahashi, M.; Donnelly, L.F.; Siala, S. Artificial Intelligence: A Primer for Pediatric Radiologists. Pediatr. Radiol. 2024, 54, 2127–2142. [Google Scholar] [CrossRef]

- Ranschaert, E.; Topff, L.; Pianykh, O. Optimization of Radiology Workflow with Artificial Intelligence. Radiol. Clin. N. Am. 2021, 59, 955–966. [Google Scholar] [CrossRef]

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J.W.L. Artificial Intelligence in Radiology. Nat. Rev. Cancer 2018, 18, 500–510. [Google Scholar] [CrossRef]

- Tejani, A.S.; Cook, T.S.; Hussain, M.; Sippel Schmidt, T.; O’Donnell, K.P. Integrating and Adopting AI in the Radiology Workflow: A Primer for Standards and Integrating the Healthcare Enterprise (IHE) Profiles. Radiology 2024, 311, e232653. [Google Scholar] [CrossRef]

- Bizzo, B.C.; Almeida, R.R.; Alkasab, T.K. Artificial Intelligence Enabling Radiology Reporting. Radiol. Clin. N. Am. 2021, 59, 1045–1052. [Google Scholar] [CrossRef]

- Davendralingam, N.; Sebire, N.J.; Arthurs, O.J.; Shelmerdine, S.C. Artificial Intelligence in Paediatric Radiology: Future Opportunities. Br. J. Radiol. 2021, 94, 20200975. [Google Scholar] [CrossRef]

- Sammer, M.B.K.; Akbari, Y.S.; Barth, R.A.; Blumer, S.L.; Dillman, J.R.; Farmakis, S.G.; Frush, D.P.; Gokli, A.; Halabi, S.S.; Iyer, R.; et al. Use of Artificial Intelligence in Radiology: Impact on Pediatric Patients, a White Paper From the ACR Pediatric AI Workgroup. J. Am. Coll. Radiol. 2023, 20, 730–737. [Google Scholar] [CrossRef]

- Moltoni, G.; Lucignani, G.; Sgrò, S.; Guarnera, A.; Rossi Espagnet, M.C.; Dellepiane, F.; Carducci, C.; Liberi, S.; Iacoella, E.; Evangelisti, G.; et al. MRI Scan with the “Feed and Wrap” Technique and with an Optimized Anesthesia Protocol: A Retrospective Analysis of a Single-Center Experience. Front. Pediatr. 2024, 12, 1415603. [Google Scholar] [CrossRef]

- Brendlin, A.S.; Schmid, U.; Plajer, D.; Chaika, M.; Mader, M.; Wrazidlo, R.; Männlin, S.; Spogis, J.; Estler, A.; Esser, M.; et al. AI Denoising Improves Image Quality and Radiological Workflows in Pediatric Ultra-Low-Dose Thorax Computed Tomography Scans. Tomography 2022, 8, 1678–1689. [Google Scholar] [CrossRef]

- Ng, C.K.C. Artificial Intelligence for Radiation Dose Optimization in Pediatric Radiology: A Systematic Review. Children 2022, 9, 1044. [Google Scholar] [CrossRef] [PubMed]

- Shin, D.-J.; Choi, Y.H.; Lee, S.B.; Cho, Y.J.; Lee, S.; Cheon, J.-E. Low-Iodine-Dose Computed Tomography Coupled with an Artificial Intelligence-Based Contrast-Boosting Technique in Children: A Retrospective Study on Comparison with Conventional-Iodine-Dose Computed Tomography. Pediatr. Radiol. 2024, 54, 1315–1324. [Google Scholar] [CrossRef]

- Gong, E.; Pauly, J.M.; Wintermark, M.; Zaharchuk, G. Deep Learning Enables Reduced Gadolinium Dose for Contrast-Enhanced Brain MRI. J. Magn. Reson. Imaging 2018, 48, 330–340. [Google Scholar] [CrossRef] [PubMed]

- Borisch, E.A.; Froemming, A.T.; Grimm, R.C.; Kawashima, A.; Trzasko, J.D.; Riederer, S.J. Model-Based Image Reconstruction with Wavelet Sparsity Regularization for through-Plane Resolution Restoration in T -Weighted Spin-Echo Prostate MRI. Magn. Reson. Med. 2023, 89, 454–468. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Yang, M.; Bian, T.; Wu, H. MRI Super-Resolution Analysis via MRISR: Deep Learning for Low-Field Imaging. Information 2024, 15, 655. [Google Scholar] [CrossRef]

- Hewlett, M.; Petrov, I.; Johnson, P.M.; Drangova, M. Deep-Learning-Based Motion Correction Using Multichannel MRI Data: A Study Using Simulated Artifacts in the fastMRI Dataset. NMR Biomed. 2024, 37, e5179. [Google Scholar] [CrossRef]

- Lucas, A.; Campbell Arnold, T.; Okar, S.V.; Vadali, C.; Kawatra, K.D.; Ren, Z.; Cao, Q.; Shinohara, R.T.; Schindler, M.K.; Davis, K.A.; et al. Multi-Contrast High-Field Quality Image Synthesis for Portable Low-Field MRI Using Generative Adversarial Networks and Paired Data. medRxiv 2023. [Google Scholar] [CrossRef]

- Bahloul, M.A.; Jabeen, S.; Benoumhani, S.; Alsaleh, H.A.; Belkhatir, Z.; Al-Wabil, A. Advancements in Synthetic CT Generation from MRI: A Review of Techniques, and Trends in Radiation Therapy Planning. J. Appl. Clin. Med. Phys. 2024, 25, e14499. [Google Scholar] [CrossRef] [PubMed]

- Takita, H.; Matsumoto, T.; Tatekawa, H.; Katayama, Y.; Nakajo, K.; Uda, T.; Mitsuyama, Y.; Walston, S.L.; Miki, Y.; Ueda, D. AI-Based Virtual Synthesis of Methionine PET from Contrast-Enhanced MRI: Development and External Validation Study. Radiology 2023, 308, e223016. [Google Scholar] [CrossRef] [PubMed]

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155. [Google Scholar] [CrossRef] [PubMed]

- Guarnera, A.; Moltoni, G.; Dellepiane, F.; Lucignani, G.; Rossi-Espagnet, M.C.; Campi, F.; Auriti, C.; Longo, D. Bacterial Meningoencephalitis in Newborns. Biomedicines 2024, 12, 2490. [Google Scholar] [CrossRef]

- Filippi, C.G.; Stein, J.M.; Wang, Z.; Bakas, S.; Liu, Y.; Chang, P.D.; Lui, Y.; Hess, C.; Barboriak, D.P.; Flanders, A.E.; et al. Ethical Considerations and Fairness in the Use of Artificial Intelligence for Neuroradiology. AJNR Am. J. Neuroradiol. 2023, 44, 1242–1248. [Google Scholar] [CrossRef]

- Martin, D.; Tong, E.; Kelly, B.; Yeom, K.; Yedavalli, V. Current Perspectives of Artificial Intelligence in Pediatric Neuroradiology: An Overview. Front. Radiol. 2021, 1, 713681. [Google Scholar] [CrossRef]

- Guarnera, A.; Valente, P.; Pasquini, L.; Moltoni, G.; Randisi, F.; Carducci, C.; Carboni, A.; Lucignani, G.; Napolitano, A.; Romanzo, A.; et al. Congenital Malformations of the Eye: A Pictorial Review and Clinico-Radiological Correlations. J. Ophthalmol. 2024, 2024, 5993083. [Google Scholar] [CrossRef]

- Bailey, C.R.; Bailey, A.M.; McKenney, A.S.; Weiss, C.R. Understanding and Appreciating Burnout in Radiologists. Radiographics 2022, 42, E137–E139. [Google Scholar] [CrossRef]

- Nair, A.; Ong, W.; Lee, A.; Leow, N.W.; Makmur, A.; Ting, Y.H.; Lee, Y.J.; Ong, S.J.; Tan, J.J.H.; Kumar, N.; et al. Enhancing Radiologist Productivity with Artificial Intelligence in Magnetic Resonance Imaging (MRI): A Narrative Review. Diagnostics 2025, 15, 1146. [Google Scholar] [CrossRef]

- Xiao, H.; Yang, Z.; Liu, T.; Liu, S.; Huang, X.; Dai, J. Deep Learning for Medical Imaging Super-Resolution: A Comprehensive Review. Neurocomputing 2025, 630, 129667. [Google Scholar] [CrossRef]

- Molina-Maza, J.M.; Galiana-Bordera, A.; Jimenez, M.; Malpica, N.; Torrado-Carvajal, A. Development of a Super-Resolution Scheme for Pediatric Magnetic Resonance Brain Imaging Through Convolutional Neural Networks. Front. Neurosci. 2022, 16, 830143. [Google Scholar] [CrossRef] [PubMed]

- Zhou, Z.; Ma, A.; Feng, Q.; Wang, R.; Cheng, L.; Chen, X.; Yang, X.; Liao, K.; Miao, Y.; Qiu, Y. Super-Resolution of Brain Tumor MRI Images Based on Deep Learning. J. Appl. Clin. Med. Phys. 2022, 23, e13758. [Google Scholar] [CrossRef] [PubMed]

- Bernstein, M.A.; Fain, S.B.; Riederer, S.J. Effect of Windowing and Zero-Filled Reconstruction of MRI Data on Spatial Resolution and Acquisition Strategy. J. Magn. Reson. Imaging 2001, 14, 270–280. [Google Scholar] [CrossRef]

- Ebel, A.; Dreher, W.; Leibfritz, D. Effects of Zero-Filling and Apodization on Spectral Integrals in Discrete Fourier-Transform Spectroscopy of Noisy Data. J. Magn. Reson. 2006, 182, 330–338. [Google Scholar] [CrossRef]

- Yoon, J.H.; Nickel, M.D.; Peeters, J.M.; Lee, J.M. Rapid Imaging: Recent Advances in Abdominal MRI for Reducing Acquisition Time and Its Clinical Applications. Korean J. Radiol. 2019, 20, 1597–1615. [Google Scholar] [CrossRef]

- Schlemper, J.; Caballero, J.; Hajnal, J.V.; Price, A.N.; Rueckert, D. A Deep Cascade of Convolutional Neural Networks for Dynamic MR Image Reconstruction. IEEE Trans. Med. Imaging 2018, 37, 491–503. [Google Scholar] [CrossRef]

- Ye, J.C.; Eldar, Y.C.; Unser, M. Deep Learning for Biomedical Image Reconstruction; Cambridge University Press: Cambridge, UK, 2023; ISBN 9781316517512. [Google Scholar]

- Lee, D.; Yoo, J.; Tak, S.; Ye, J.C. Deep Residual Learning for Accelerated MRI Using Magnitude and Phase Networks. IEEE Trans. Biomed. Eng. 2018, 65, 1985–1995. [Google Scholar] [CrossRef]

- Lustig, M.; Donoho, D.; Pauly, J.M. Sparse MRI: The Application of Compressed Sensing for Rapid MR Imaging. Magn. Reson. Med. 2007, 58, 1182–1195. [Google Scholar] [CrossRef]

- Aggarwal, H.K.; Mani, M.P.; Jacob, M. MoDL: Model-Based Deep Learning Architecture for Inverse Problems. IEEE Trans. Med. Imaging 2019, 38, 394–405. [Google Scholar] [CrossRef]

- Zhang, S.; Zhong, M.; Shenliu, H.; Wang, N.; Hu, S.; Lu, X.; Lin, L.; Zhang, H.; Zhao, Y.; Yang, C.; et al. Deep Learning-Based Super-Resolution Reconstruction on Undersampled Brain Diffusion-Weighted MRI for Infarction Stroke: A Comparison to Conventional Iterative Reconstruction. AJNR Am. J. Neuroradiol. 2025, 46, 41–48. [Google Scholar] [CrossRef]

- Matsuo, K.; Nakaura, T.; Morita, K.; Uetani, H.; Nagayama, Y.; Kidoh, M.; Hokamura, M.; Yamashita, Y.; Shinoda, K.; Ueda, M.; et al. Feasibility Study of Super-Resolution Deep Learning-Based Reconstruction Using K-Space Data in Brain Diffusion-Weighted Images. Neuroradiology 2023, 65, 1619–1629. [Google Scholar] [CrossRef]

- Cole, J.H.; Poudel, R.P.K.; Tsagkrasoulis, D.; Caan, M.W.A.; Steves, C.; Spector, T.D.; Montana, G. Predicting Brain Age with Deep Learning from Raw Imaging Data Results in a Reliable and Heritable Biomarker. Neuroimage 2017, 163, 115–124. [Google Scholar] [CrossRef]

- Behl, N. Deep Resolve—Mobilizing the Power of Networks. In MAGNETOM Flash; Siemens Healthineers: Erlangen, Germany, 2021; Volume 78. [Google Scholar]

- Cordero-Grande, L.; Christiaens, D.; Hutter, J.; Price, A.N.; Hajnal, J.V. Complex Diffusion-Weighted Image Estimation via Matrix Recovery under General Noise Models. Neuroimage 2019, 200, 391–404. [Google Scholar] [CrossRef] [PubMed]

- Singh, R.; Singh, N.; Kaur, L. Deep Learning Methods for 3D Magnetic Resonance Image Denoising, Bias Field and Motion Artifact Correction: A Comprehensive Review. Phys. Med. Biol. 2024, 69, 23TR01. [Google Scholar] [CrossRef] [PubMed]

- Zhang, M.; Xu, J.; Turk, E.A.; Grant, P.E.; Golland, P.; Adalsteinsson, E. Enhanced Detection of Fetal Pose in 3D MRI by Deep Reinforcement Learning with Physical Structure Priors on Anatomy. Med. Image Comput. Comput. Assist. Interv. 2020, 12266, 396–405. [Google Scholar]

- Kim, S.-H.; Choi, Y.H.; Lee, J.S.; Lee, S.B.; Cho, Y.J.; Lee, S.H.; Shin, S.-M.; Cheon, J.-E. Deep Learning Reconstruction in Pediatric Brain MRI: Comparison of Image Quality with Conventional T2-Weighted MRI. Neuroradiology 2023, 65, 207–214. [Google Scholar] [CrossRef] [PubMed]

- Usman, M.; Latif, S.; Asim, M.; Lee, B.-D.; Qadir, J. Retrospective Motion Correction in Multishot MRI Using Generative Adversarial Network. Sci. Rep. 2020, 10, 4786. [Google Scholar] [CrossRef]

- Chen, Z.; Pawar, K.; Ekanayake, M.; Pain, C.; Zhong, S.; Egan, G.F. Deep Learning for Image Enhancement and Correction in Magnetic Resonance Imaging-State-of-the-Art and Challenges. J. Digit. Imaging 2023, 36, 204–230. [Google Scholar] [CrossRef]

- Chang, Y.; Li, Z.; Saju, G.; Mao, H.; Liu, T. Deep Learning-Based Rigid Motion Correction for Magnetic Resonance Imaging: A Survey. Meta-Radiology 2023, 1, 100001. [Google Scholar] [CrossRef]

- Maclaren, J.; Herbst, M.; Speck, O.; Zaitsev, M. Prospective Motion Correction in Brain Imaging: A Review. Magn. Reson. Med. 2013, 69, 621–636. [Google Scholar] [CrossRef]

- Zaitsev, M.; Akin, B.; LeVan, P.; Knowles, B.R. Prospective Motion Correction in Functional MRI. Neuroimage 2017, 154, 33–42. [Google Scholar] [CrossRef]

- Power, J.D.; Barnes, K.A.; Snyder, A.Z.; Schlaggar, B.L.; Petersen, S.E. Spurious but Systematic Correlations in Functional Connectivity MRI Networks Arise from Subject Motion. Neuroimage 2012, 59, 2142–2154. [Google Scholar] [CrossRef] [PubMed]

- Satterthwaite, T.D.; Ciric, R.; Roalf, D.R.; Davatzikos, C.; Bassett, D.S.; Wolf, D.H. Motion Artifact in Studies of Functional Connectivity: Characteristics and Mitigation Strategies. Hum. Brain Mapp. 2019, 40, 2033–2051. [Google Scholar] [CrossRef] [PubMed]

- Alkhulaifat, D.; Rafful, P.; Khalkhali, V.; Welsh, M.; Sotardi, S.T. Implications of Pediatric Artificial Intelligence Challenges for Artificial Intelligence Education and Curriculum Development. J. Am. Coll. Radiol. 2023, 20, 724–729. [Google Scholar] [CrossRef]

- Pacchiano, F.; Tortora, M.; Doneda, C.; Izzo, G.; Arrigoni, F.; Ugga, L.; Cuocolo, R.; Parazzini, C.; Righini, A.; Brunetti, A. Radiomics and Artificial Intelligence Applications in Pediatric Brain Tumors. World J. Pediatr. 2024, 20, 747–763. [Google Scholar] [CrossRef]

- Forestieri, M.; Napolitano, A.; Tomà, P.; Bascetta, S.; Cirillo, M.; Tagliente, E.; Fracassi, D.; D’Angelo, P.; Casazza, I. Machine Learning Algorithm: Texture Analysis in CNO and Application in Distinguishing CNO and Bone Marrow Growth-Related Changes on Whole-Body MRI. Diagnostics 2023, 14, 61. [Google Scholar] [CrossRef]

- Wagner, M.W.; Hainc, N.; Khalvati, F.; Namdar, K.; Figueiredo, L.; Sheng, M.; Laughlin, S.; Shroff, M.M.; Bouffet, E.; Tabori, U.; et al. Radiomics of Pediatric Low-Grade Gliomas: Toward a Pretherapeutic Differentiation of Mutated and -Fused Tumors. AJNR Am. J. Neuroradiol. 2021, 42, 759–765. [Google Scholar] [CrossRef]

- Wagner, M.W.; Namdar, K.; Napoleone, M.; Hainc, N.; Amirabadi, A.; Fonseca, A.; Laughlin, S.; Shroff, M.M.; Bouffet, E.; Hawkins, C.; et al. Radiomic Features Based on MRI Predict Progression-Free Survival in Pediatric Diffuse Midline Glioma/Diffuse Intrinsic Pontine Glioma. Can. Assoc. Radiol. J. 2023, 74, 119–126. [Google Scholar] [CrossRef]

- Cardoso, M.J.; Modat, M.; Wolz, R.; Melbourne, A.; Cash, D.; Rueckert, D.; Ourselin, S. Geodesic Information Flows: Spatially-Variant Graphs and Their Application to Segmentation and Fusion. IEEE Trans. Med. Imaging 2015, 34, 1976–1988. [Google Scholar] [CrossRef]

- Reuter, M.; Schmansky, N.J.; Rosas, H.D.; Fischl, B. Within-Subject Template Estimation for Unbiased Longitudinal Image Analysis. Neuroimage 2012, 61, 1402–1418. [Google Scholar] [CrossRef] [PubMed]

- Shaikh, A.; Amin, S.; Zeb, M.A.; Sulaiman, A.; Al Reshan, M.S.; Alshahrani, H. Enhanced Brain Tumor Detection and Segmentation Using Densely Connected Convolutional Networks with Stacking Ensemble Learning. Comput. Biol. Med. 2025, 186, 109703. [Google Scholar] [CrossRef] [PubMed]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241. ISBN 9783319245737. [Google Scholar]

- Milletari, F.; Navab, N.; Ahmadi, S.-A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. arXiv 2016, arXiv:1606.04797. [Google Scholar]

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: Redesigning Skip Connections to Exploit Multiscale Features in Image Segmentation. IEEE Trans. Med. Imaging 2020, 39, 1856–1867. [Google Scholar] [CrossRef]

- Isensee, F.; Jaeger, P.F.; Kohl, S.A.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A Self-Configuring Method for Deep Learning-Based Biomedical Image Segmentation. Nat. Methods 2021, 18, 203–211. [Google Scholar] [CrossRef]

- Havaei, M.; Davy, A.; Warde-Farley, D.; Biard, A.; Courville, A.; Bengio, Y.; Pal, C.; Jodoin, P.-M.; Larochelle, H. Brain Tumor Segmentation with Deep Neural Networks. Med. Image Anal. 2017, 35, 18–31. [Google Scholar] [CrossRef]

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A Survey on Deep Learning in Medical Image Analysis. Med. Image Anal. 2017, 42, 60–88. [Google Scholar] [CrossRef]

- Girum, K.B.; Créhange, G.; Hussain, R.; Lalande, A. Fast Interactive Medical Image Segmentation with Weakly Supervised Deep Learning Method. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1437–1444. [Google Scholar] [CrossRef]

- Grimm, F.; Edl, F.; Kerscher, S.R.; Nieselt, K.; Gugel, I.; Schuhmann, M.U. Semantic Segmentation of Cerebrospinal Fluid and Brain Volume with a Convolutional Neural Network in Pediatric Hydrocephalus-Transfer Learning from Existing Algorithms. Acta Neurochir. 2020, 162, 2463–2474. [Google Scholar] [CrossRef]

- Billot, B.; Greve, D.N.; Puonti, O.; Thielscher, A.; Van Leemput, K.; Fischl, B.; Dalca, A.V.; Iglesias, J.E. SynthSeg: Segmentation of Brain MRI Scans of Any Contrast and Resolution without Retraining. arXiv 2021, arXiv:2107.09559. [Google Scholar] [CrossRef]

- WHO Classification of Tumours Editorial Board. Central Nervous System Tumours; International Agency for Research on Cancer: Lyon, France, 2022; ISBN 9789283245087. [Google Scholar]

- Guarnera, A.; Ius, T.; Romano, A.; Bagatto, D.; Denaro, L.; Aiudi, D.; Iacoangeli, M.; Palmieri, M.; Frati, A.; Santoro, A.; et al. Advanced MRI, Radiomics and Radiogenomics in Unravelling Incidental Glioma Grading and Genetic Status: Where Are We? Medicina 2025, 61, 1453. [Google Scholar] [CrossRef]

- Guarnera, A.; Romano, A.; Moltoni, G.; Ius, T.; Palizzi, S.; Romano, A.; Bagatto, D.; Minniti, G.; Bozzao, A. The Role of Advanced MRI Sequences in the Diagnosis and Follow-up of Adult Brainstem Gliomas: A Neuroradiological Review. Tomography 2023, 9, 1526–1537. [Google Scholar] [CrossRef] [PubMed]

- Tampu, I.E.; Bianchessi, T.; Blystad, I.; Lundberg, P.; Nyman, P.; Eklund, A.; Haj-Hosseini, N. Pediatric Brain Tumor Classification Using Deep Learning on MR Images with Age Fusion. Neurooncol. Adv. 2025, 7, vdae205. [Google Scholar] [CrossRef] [PubMed]

- Aamir, M.; Rahman, Z.; Dayo, Z.A.; Abro, W.A.; Uddin, M.I.; Khan, I.; Imran, A.S.; Ali, Z.; Ishfaq, M.; Guan, Y.; et al. A Deep Learning Approach for Brain Tumor Classification Using MRI Images. Comput. Electr. Eng. 2022, 101, 108105. [Google Scholar] [CrossRef]

- Chakrabarty, S.; Sotiras, A.; Milchenko, M.; LaMontagne, P.; Hileman, M.; Marcus, D. MRI-Based Identification and Classification of Major Intracranial Tumor Types by Using a 3D Convolutional Neural Network: A Retrospective Multi-Institutional Analysis. Radiol. Artif. Intell. 2021, 3, e200301. [Google Scholar] [CrossRef]

- Das, D.; Mahanta, L.B.; Ahmed, S.; Baishya, B.K. Classification of Childhood Medulloblastoma into WHO-Defined Multiple Subtypes Based on Textural Analysis. J. Microsc. 2020, 279, 26–38. [Google Scholar] [CrossRef]

- Li, Y.; Zhuo, Z.; Weng, J.; Haller, S.; Bai, H.X.; Li, B.; Liu, X.; Zhu, M.; Wang, Z.; Li, J.; et al. A Deep Learning Model for Differentiating Paediatric Intracranial Germ Cell Tumour Subtypes and Predicting Survival with MRI: A Multicentre Prospective Study. BMC Med. 2024, 22, 375. [Google Scholar] [CrossRef]

- Voicu, I.P.; Dotta, F.; Napolitano, A.; Caulo, M.; Piccirilli, E.; D’Orazio, C.; Carai, A.; Miele, E.; Vinci, M.; Rossi, S.; et al. Machine Learning Analysis in Diffusion Kurtosis Imaging for Discriminating Pediatric Posterior Fossa Tumors: A Repeatability and Accuracy Pilot Study. Cancers 2024, 16, 2578. [Google Scholar] [CrossRef]

- Di Giannatale, A.; Di Paolo, P.L.; Curione, D.; Lenkowicz, J.; Napolitano, A.; Secinaro, A.; Tomà, P.; Locatelli, F.; Castellano, A.; Boldrini, L. Radiogenomics Prediction for MYCN Amplification in Neuroblastoma: A Hypothesis Generating Study. Pediatr. Blood Cancer 2021, 68, e29110. [Google Scholar] [CrossRef]

- Pereira, S.; Pinto, A.; Alves, V.; Silva, C.A. Brain Tumor Segmentation Using Convolutional Neural Networks in MRI Images. IEEE Trans. Med. Imaging 2016, 35, 1240–1251. [Google Scholar] [CrossRef]

- Fathi Kazerooni, A.; Arif, S.; Madhogarhia, R.; Khalili, N.; Haldar, D.; Bagheri, S.; Familiar, A.M.; Anderson, H.; Haldar, S.; Tu, W.; et al. Automated Tumor Segmentation and Brain Tissue Extraction from Multiparametric MRI of Pediatric Brain Tumors: A Multi-Institutional Study. Neurooncol. Adv. 2023, 5, vdad027. [Google Scholar] [CrossRef] [PubMed]

- Familiar, A.M.; Fathi Kazerooni, A.; Vossough, A.; Ware, J.B.; Bagheri, S.; Khalili, N.; Anderson, H.; Haldar, D.; Storm, P.B.; Resnick, A.C.; et al. Towards Consistency in Pediatric Brain Tumor Measurements: Challenges, Solutions, and the Role of Artificial Intelligence-Based Segmentation. Neuro Oncol. 2024, 26, 1557–1571. [Google Scholar] [CrossRef] [PubMed]

- Capriotti, G.; Guarnera, A.; Romano, A.; Moltoni, G.; Granese, G.; Bozzao, A.; Signore, A. Neuroimaging of Mild Traumatic Injury. Semin. Nucl. Med. 2025, 55, 512–525. [Google Scholar] [CrossRef] [PubMed]

- Lampros, M.; Symeou, S.; Vlachos, N.; Gkampenis, A.; Zigouris, A.; Voulgaris, S.; Alexiou, G.A. Applications of Machine Learning in Pediatric Traumatic Brain Injury (pTBI): A Systematic Review of the Literature. Neurosurg. Rev. 2024, 47, 737. [Google Scholar] [CrossRef]

- Pierre, K.; Turetsky, J.; Raviprasad, A.; Sadat Razavi, S.M.; Mathelier, M.; Patel, A.; Lucke-Wold, B. Machine Learning in Neuroimaging of Traumatic Brain Injury: Current Landscape, Research Gaps, and Future Directions. Trauma Care 2024, 4, 31–43. [Google Scholar] [CrossRef]

- Tunthanathip, T.; Oearsakul, T. Application of Machine Learning to Predict the Outcome of Pediatric Traumatic Brain Injury. Chin. J. Traumatol. 2021, 24, 350–355. [Google Scholar] [CrossRef]

- Chong, S.-L.; Liu, N.; Barbier, S.; Ong, M.E.H. Predictive Modeling in Pediatric Traumatic Brain Injury Using Machine Learning. BMC Med. Res. Methodol. 2015, 15, 22. [Google Scholar] [CrossRef]

- Daley, M.; Cameron, S.; Ganesan, S.L.; Patel, M.A.; Stewart, T.C.; Miller, M.R.; Alharfi, I.; Fraser, D.D. Pediatric Severe Traumatic Brain Injury Mortality Prediction Determined with Machine Learning-Based Modeling. Injury 2022, 53, 992–998. [Google Scholar] [CrossRef]

- Huth, S.F.; Slater, A.; Waak, M.; Barlow, K.; Raman, S. Predicting Neurological Recovery after Traumatic Brain Injury in Children: A Systematic Review of Prognostic Models. J. Neurotrauma 2020, 37, 2141–2149. [Google Scholar] [CrossRef]

- Barkovich, A.J.; Guerrini, R.; Kuzniecky, R.I.; Jackson, G.D.; Dobyns, W.B. A Developmental and Genetic Classification for Malformations of Cortical Development: Update 2012. Brain 2012, 135, 1348–1369. [Google Scholar] [CrossRef]

- Guarnera, A.; Lucignani, G.; Parrillo, C.; Rossi-Espagnet, M.C.; Carducci, C.; Moltoni, G.; Savarese, I.; Campi, F.; Dotta, A.; Milo, F.; et al. Predictive Value of MRI in Hypoxic-Ischemic Encephalopathy Treated with Therapeutic Hypothermia. Children 2023, 10, 446. [Google Scholar] [CrossRef] [PubMed]

- Guarnera, A.; Bottino, F.; Napolitano, A.; Sforza, G.; Cappa, M.; Chioma, L.; Pasquini, L.; Rossi-Espagnet, M.C.; Lucignani, G.; Figà-Talamanca, L.; et al. Early Alterations of Cortical Thickness and Gyrification in Migraine without Aura: A Retrospective MRI Study in Pediatric Patients. J. Headache Pain 2021, 22, 79. [Google Scholar] [CrossRef] [PubMed]

- Xie, H.N.; Wang, N.; He, M.; Zhang, L.H.; Cai, H.M.; Xian, J.B.; Lin, M.F.; Zheng, J.; Yang, Y.Z. Using Deep-Learning Algorithms to Classify Fetal Brain Ultrasound Images as Normal or Abnormal. Ultrasound Obstet. Gynecol. 2020, 56, 579–587. [Google Scholar] [CrossRef]

- Vahedifard, F.; Adepoju, J.O.; Supanich, M.; Ai, H.A.; Liu, X.; Kocak, M.; Marathu, K.K.; Byrd, S.E. Review of Deep Learning and Artificial Intelligence Models in Fetal Brain Magnetic Resonance Imaging. World J. Clin. Cases 2023, 11, 3725–3735. [Google Scholar] [CrossRef]

- Attallah, O.; Sharkas, M.A.; Gadelkarim, H. Deep Learning Techniques for Automatic Detection of Embryonic Neurodevelopmental Disorders. Diagnostics 2020, 10, 27. [Google Scholar] [CrossRef]

- Lin, M.; Zhou, Q.; Lei, T.; Shang, N.; Zheng, Q.; He, X.; Wang, N.; Xie, H. Deep Learning System Improved Detection Efficacy of Fetal Intracranial Malformations in a Randomized Controlled Trial. NPJ Digit. Med. 2023, 6, 191. [Google Scholar] [CrossRef]

- Priya, M.; Nandhini, M. Detection of Fetal Brain Abnormalities Using Data Augmentation and Convolutional Neural Network in Internet of Things. Measur. Sens. 2023, 28, 100808. [Google Scholar] [CrossRef]

- Zhao, L.; Asis-Cruz, J.D.; Feng, X.; Wu, Y.; Kapse, K.; Largent, A.; Quistorff, J.; Lopez, C.; Wu, D.; Qing, K.; et al. Automated 3D Fetal Brain Segmentation Using an Optimized Deep Learning Approach. AJNR Am. J. Neuroradiol. 2022, 43, 448–454. [Google Scholar] [CrossRef]

- Nosarti, C.; Murray, R.M.; Hack, M. Neurodevelopmental Outcomes of Preterm Birth: From Childhood to Adult Life; Cambridge University Press: Cambridge, UK, 2010; ISBN 9781139487146. [Google Scholar]

- Urbańska, S.M.; Leśniewski, M.; Welian-Polus, I.; Witas, A.; Szukała, K.; Chrościńska-Krawczyk, M. Epilepsy Diagnosis and Treatment in Children—New Hopes and Challenges—Literature Review. J. Pre-Clin. Clin. Res. 2024, 1, 40–49. [Google Scholar] [CrossRef]

- Colombo, N.; Tassi, L.; Galli, C.; Citterio, A.; Lo Russo, G.; Scialfa, G.; Spreafico, R. Focal Cortical Dysplasias: MR Imaging, Histopathologic, and Clinical Correlations in Surgically Treated Patients with Epilepsy. AJNR Am. J. Neuroradiol. 2003, 24, 724–733. [Google Scholar]

- Gill, R.S.; Lee, H.-M.; Caldairou, B.; Hong, S.-J.; Barba, C.; Deleo, F.; D’Incerti, L.; Mendes Coelho, V.C.; Lenge, M.; Semmelroch, M.; et al. Multicenter Validation of a Deep Learning Detection Algorithm for Focal Cortical Dysplasia. Neurology 2021, 97, e1571–e1582. [Google Scholar] [CrossRef]

- Adler, S.; Wagstyl, K.; Gunny, R.; Ronan, L.; Carmichael, D.; Cross, J.H.; Fletcher, P.C.; Baldeweg, T. Novel Surface Features for Automated Detection of Focal Cortical Dysplasias in Paediatric Epilepsy. Neuroimage Clin. 2017, 14, 18–27. [Google Scholar] [CrossRef] [PubMed]

- Dell’Isola, G.B.; Fattorusso, A.; Villano, G.; Ferrara, P.; Verrotti, A. Innovating Pediatric Epilepsy: Transforming Diagnosis and Treatment with AI. World J. Pediatr. 2025, 21, 333–337. [Google Scholar] [CrossRef] [PubMed]

- Jin, B.; Krishnan, B.; Adler, S.; Wagstyl, K.; Hu, W.; Jones, S.; Najm, I.; Alexopoulos, A.; Zhang, K.; Zhang, J.; et al. Automated Detection of Focal Cortical Dysplasia Type II with Surface-Based Magnetic Resonance Imaging Postprocessing and Machine Learning. Epilepsia 2018, 59, 982–992. [Google Scholar] [CrossRef] [PubMed]

- Ganji, Z.; Hakak, M.A.; Zamanpour, S.A.; Zare, H. Automatic Detection of Focal Cortical Dysplasia Type II in MRI: Is the Application of Surface-Based Morphometry and Machine Learning Promising? Front. Hum. Neurosci. 2021, 15, 608285. [Google Scholar] [CrossRef]

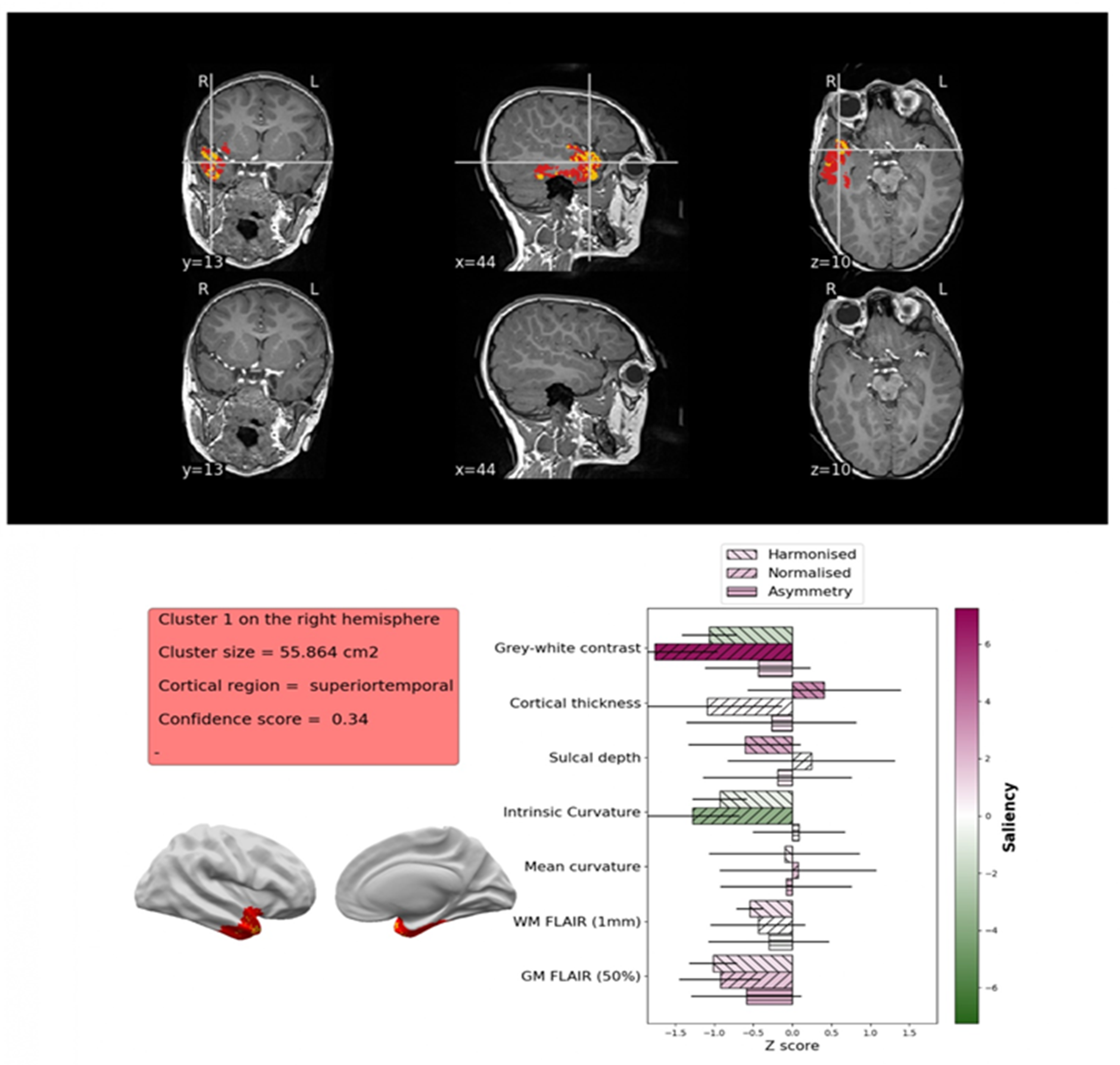

- Spitzer, H.; Ripart, M.; Whitaker, K.; Napolitano, A.; De Palma, L.; De Benedictis, A.; Foldes, S.; Humphreys, Z.; Zhang, K.; Hu, W.; et al. Interpretable Surface-Based Detection of Focal Cortical Dysplasias: A MELD Study. medRxiv 2021. [Google Scholar] [CrossRef]

- Cohen, N.T.; You, X.; Krishnamurthy, M.; Sepeta, L.N.; Zhang, A.; Oluigbo, C.; Whitehead, M.T.; Gholipour, T.; Baldeweg, T.; Wagstyl, K.; et al. Networks Underlie Temporal Onset of Dysplasia-Related Epilepsy: A MELD Study. Ann. Neurol. 2022, 92, 503–511. [Google Scholar] [CrossRef]

- Ripart, M.; Spitzer, H.; Williams, L.Z.J.; Walger, L.; Chen, A.; Napolitano, A.; Rossi-Espagnet, C.; Foldes, S.T.; Hu, W.; Mo, J.; et al. Detection of Epileptogenic Focal Cortical Dysplasia Using Graph Neural Networks: A MELD Study. JAMA Neurol. 2025, 82, 397–406. [Google Scholar] [CrossRef]

- Hirano, R.; Asai, M.; Nakasato, N.; Kanno, A.; Uda, T.; Tsuyuguchi, N.; Yoshimura, M.; Shigihara, Y.; Okada, T.; Hirata, M. Deep Learning Based Automatic Detection and Dipole Estimation of Epileptic Discharges in MEG: A Multi-Center Study. Sci. Rep. 2024, 14, 24574. [Google Scholar] [CrossRef]

- Gleichgerrcht, E.; Munsell, B.C.; Alhusaini, S.; Alvim, M.K.M.; Bargalló, N.; Bender, B.; Bernasconi, A.; Bernasconi, N.; Bernhardt, B.; Blackmon, K.; et al. Artificial Intelligence for Classification of Temporal Lobe Epilepsy with ROI-Level MRI Data: A Worldwide ENIGMA-Epilepsy Study. Neuroimage Clin. 2021, 31, 102765. [Google Scholar] [CrossRef]

- Thom, M. Review: Hippocampal Sclerosis in Epilepsy: A Neuropathology Review. Neuropathol. Appl. Neurobiol. 2014, 40, 520–543.

- Jiménez-Murillo, D.; Castro-Ospina, A.E.; Duque-Muñoz, L.; Martínez-Vargas, J.D.; Suárez-Revelo, J.X.; Vélez-Arango, J.M.; de la Iglesia-Vayá, M. Automatic Detection of Focal Cortical Dysplasia Using MRI: A Systematic Review. Sensors 2023, 23, 7072.

- Zhang, S.; Zhuang, Y.; Luo, Y.; Zhu, F.; Zhao, W.; Zeng, H. Deep Learning-Based Automated Lesion Segmentation on Pediatric Focal Cortical Dysplasia II Preoperative MRI: A Reliable Approach. Insights Imaging 2024, 15, 71.

- Wang, H.; Ahmed, S.N.; Mandal, M. Automated Detection of Focal Cortical Dysplasia Using a Deep Convolutional Neural Network. Comput. Med. Imaging Graph. 2020, 79, 101662.

- Fischl, B. FreeSurfer. Neuroimage 2012, 62, 774–781.

- Spitzer, H.; Ripart, M.; Whitaker, K.; D’Arco, F.; Mankad, K.; Chen, A.A.; Napolitano, A.; De Palma, L.; De Benedictis, A.; Foldes, S.; et al. Interpretable Surface-Based Detection of Focal Cortical Dysplasias: A Multi-Centre Epilepsy Lesion Detection Study. Brain 2022, 145, 3859–3871.

- Zhang, F.; Savadjiev, P.; Cai, W.; Song, Y.; Rathi, Y.; Tunç, B.; Parker, D.; Kapur, T.; Schultz, R.T.; Makris, N.; et al. Whole Brain White Matter Connectivity Analysis Using Machine Learning: An Application to Autism. Neuroimage 2018, 172, 826–837.

- Zhu, J.; Yao, S.; Yao, Z.; Yu, J.; Qian, Z.; Chen, P. White Matter Injury Detection Based on Preterm Infant Cranial Ultrasound Images. Front. Pediatr. 2023, 11, 1144952.

- Sun, X.; Niwa, T.; Okazaki, T.; Kameda, S.; Shibukawa, S.; Horie, T.; Kazama, T.; Uchiyama, A.; Hashimoto, J. Automatic Detection of Punctate White Matter Lesions in Infants Using Deep Learning of Composite Images from Two Cases. Sci. Rep. 2023, 13, 4426.

- Schlüter, A.; Rodríguez-Palmero, A.; Verdura, E.; Vélez-Santamaría, V.; Ruiz, M.; Fourcade, S.; Planas-Serra, L.; Martínez, J.J.; Guilera, C.; Girós, M.; et al. Diagnosis of Genetic White Matter Disorders by Singleton Whole-Exome and Genome Sequencing Using Interactome-Driven Prioritization. Neurology 2022, 98, e912–e923.

- Ecker, C.; Bookheimer, S.Y.; Murphy, D.G.M. Neuroimaging in Autism Spectrum Disorder: Brain Structure and Function across the Lifespan. Lancet Neurol. 2015, 14, 1121–1134.

- Mous, S.E.; Muetzel, R.L.; El Marroun, H.; Polderman, T.J.C.; van der Lugt, A.; Jaddoe, V.W.; Hofman, A.; Verhulst, F.C.; Tiemeier, H.; Posthuma, D.; et al. Cortical Thickness and Inattention/Hyperactivity Symptoms in Young Children: A Population-Based Study. Psychol. Med. 2014, 44, 3203–3213.

- Castellanos, F.X.; Tannock, R. Neuroscience of Attention-Deficit/Hyperactivity Disorder: The Search for Endophenotypes. Nat. Rev. Neurosci. 2002, 3, 617–628.

- Heinsfeld, A.S.; Franco, A.R.; Craddock, R.C.; Buchweitz, A.; Meneguzzi, F. Identification of Autism Spectrum Disorder Using Deep Learning and the ABIDE Dataset. Neuroimage Clin. 2018, 17, 16–23.

- Eslami, T.; Almuqhim, F.; Raiker, J.S.; Saeed, F. Machine Learning Methods for Diagnosing Autism Spectrum Disorder and Attention-Deficit/Hyperactivity Disorder Using Functional and Structural MRI: A Survey. Front. Neuroinform. 2020, 14, 575999.

- Lohani, D.C.; Rana, B. ADHD Diagnosis Using Structural Brain MRI and Personal Characteristic Data with Machine Learning Framework. Psychiatry Res. Neuroimaging 2023, 334, 111689.

- Sen, B.; Borle, N.C.; Greiner, R.; Brown, M.R.G. A General Prediction Model for the Detection of ADHD and Autism Using Structural and Functional MRI. PLoS ONE 2018, 13, e0194856.

- Bahathiq, R.A.; Banjar, H.; Bamaga, A.K.; Jarraya, S.K. Machine Learning for Autism Spectrum Disorder Diagnosis Using Structural Magnetic Resonance Imaging: Promising but Challenging. Front. Neuroinform. 2022, 16, 949926.

- Moridian, P.; Ghassemi, N.; Jafari, M.; Salloum-Asfar, S.; Sadeghi, D.; Khodatars, M.; Shoeibi, A.; Khosravi, A.; Ling, S.H.; Subasi, A.; et al. Automatic Autism Spectrum Disorder Detection Using Artificial Intelligence Methods with MRI Neuroimaging: A Review. Front. Mol. Neurosci. 2022, 15, 999605.

- Geis, J.R.; Brady, A.P.; Wu, C.C.; Spencer, J.; Ranschaert, E.; Jaremko, J.L.; Langer, S.G.; Kitts, A.B.; Birch, J.; Shields, W.F.; et al. Ethics of Artificial Intelligence in Radiology: Summary of the Joint European and North American Multisociety Statement. Can. Assoc. Radiol. J. 2019, 70, 329–334.

- Cohen, I.G.; Amarasingham, R.; Shah, A.; Xie, B.; Lo, B. The Legal and Ethical Concerns That Arise from Using Complex Predictive Analytics in Health Care. Health Aff. 2014, 33, 1139–1147.

- Grote, T.; Berens, P. On the Ethics of Algorithmic Decision-Making in Healthcare. J. Med. Ethics 2020, 46, 205–211.

- Kelly, C.J.; Karthikesalingam, A.; Suleyman, M.; Corrado, G.; King, D. Key Challenges for Delivering Clinical Impact with Artificial Intelligence. BMC Med. 2019, 17, 195.

- Sun, Q.; Akman, A.; Schuller, B.W. Explainable Artificial Intelligence for Medical Applications: A Review. ACM Trans. Comput. Healthc. 2025, 6, 1–31.

- Dalboni da Rocha, J.L.; Lai, J.; Pandey, P.; Myat, P.S.M.; Loschinskey, Z.; Bag, A.K.; Sitaram, R. Artificial Intelligence for Neuroimaging in Pediatric Cancer. Cancers 2025, 17, 622.

- Larrazabal, A.J.; Nieto, N.; Peterson, V.; Milone, D.H.; Ferrante, E. Gender Imbalance in Medical Imaging Datasets Produces Biased Classifiers for Computer-Aided Diagnosis. Proc. Natl. Acad. Sci. USA 2020, 117, 12592–12594.

- Vafaeikia, P.; Wagner, M.W.; Hawkins, C.; Tabori, U.; Ertl-Wagner, B.B.; Khalvati, F. MRI-Based End-To-End Pediatric Low-Grade Glioma Segmentation and Classification. Can. Assoc. Radiol. J. 2024, 75, 153–160.

- Motamed, S.; Rogalla, P.; Khalvati, F. Data Augmentation Using Generative Adversarial Networks (GANs) for GAN-Based Detection of Pneumonia and COVID-19 in Chest X-Ray Images. Inf. Med. Unlocked 2021, 27, 100779.

- Hedderich, D.M.; Weisstanner, C.; Van Cauter, S.; Federau, C.; Edjlali, M.; Radbruch, A.; Gerke, S.; Haller, S. Artificial Intelligence Tools in Clinical Neuroradiology: Essential Medico-Legal Aspects. Neuroradiology 2023, 65, 1091–1099.

- Recht, M.P.; Dewey, M.; Dreyer, K.; Langlotz, C.; Niessen, W.; Prainsack, B.; Smith, J.J. Integrating Artificial Intelligence into the Clinical Practice of Radiology: Challenges and Recommendations. Eur. Radiol. 2020, 30, 3576–3584.

- Topol, E.J. High-Performance Medicine: The Convergence of Human and Artificial Intelligence. Nat. Med. 2019, 25, 44–56.

- Raggio, C.B.; Zabaleta, M.K.; Skupien, N.; Blanck, O.; Cicone, F.; Cascini, G.L.; Zaffino, P.; Migliorelli, L.; Spadea, M.F. FedSynthCT-Brain: A Federated Learning Framework for Multi-Institutional Brain MRI-to-CT Synthesis. arXiv 2024, arXiv:2412.06690.

- Bauer, A.; Bosl, W.; Aalami, O.; Schmiedmayer, P. Toward Scalable Access to Neurodevelopmental Screening: Insights, Implementation, and Challenges. arXiv 2025, arXiv:2503.13472.

- Orchard, C.; King, G.; Tryphonopoulos, P.; Gorman, E.; Ugirase, S.; Lising, D.; Fung, K. Interprofessional Team Conflict Resolution: A Critical Literature Review. J. Contin. Educ. Health Prof. 2024, 44, 203–210.

- Guan, H.; Yap, P.-T.; Bozoki, A.; Liu, M. Federated Learning for Medical Image Analysis: A Survey. arXiv 2023, arXiv:2306.05980.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guarnera, A.; Napolitano, A.; Liporace, F.; Marconi, F.; Rossi-Espagnet, M.C.; Gandolfo, C.; Romano, A.; Bozzao, A.; Longo, D. The Expanding Frontier: The Role of Artificial Intelligence in Pediatric Neuroradiology. Children 2025, 12, 1127. https://doi.org/10.3390/children12091127