“Making Data the Drug”: A Pragmatic Pilot Feasibility Randomized Crossover Trial of Data Visualization as an Intervention for Pediatric Chronic Pain

Abstract

1. Introduction

2. Materials and Methods

2.1. Setting and Participants

2.1.1. Setting

2.1.2. Eligibility Criteria

2.1.3. Recruitment and Consent

2.1.4. Power Calculation

2.2. Ecological Momentary Assessment, Visualizations, and Smartphone Deployment

2.3. Procedures

2.3.1. Randomization

2.3.2. Part A: EMA

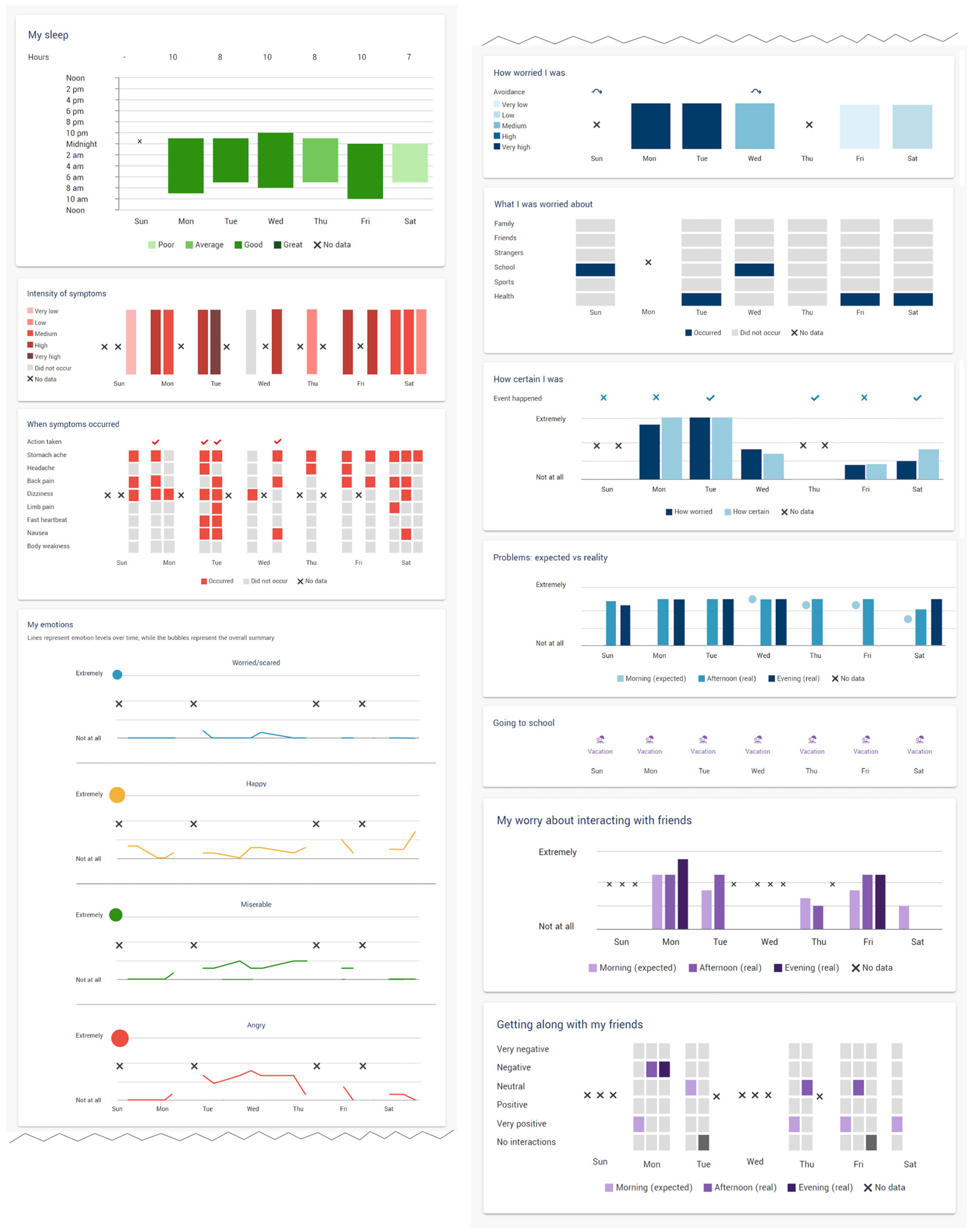

2.3.3. Part B: EMA + Data Visualization

2.3.4. Technical Issues during Deployment

2.3.5. Deviations from Pre-Registered Plan

2.4. Measures

2.4.1. Baseline Assessment

2.4.2. Feasibility Outcomes

- Recruitment rate: Number who agreed to participate of the total number eligible, including reasons for declining participation.

- Retention rate: Number of participants who completed the entire trial of the total who consented.

- Data completion rate and timeliness/duration of completion: Number of EMA data points completed by participants during the trial, whether EMA ratings were completed during the timeframe (versus back-filling), and length of time spent completing the EMA.

- Participant ratings of acceptability and feasibility: Investigator-created questionnaire administered at the end of each week.

- Participant reports of barriers and adverse events: Log of issues reported to the study team and distress reports from the post-week questionnaire.

- Engagement with visualization dashboard: Number of participants who reported viewing the visualizations of the total who participated.

- Participant ratings of data visualization: Questionnaire completed by the subset of participants who took part in post-trial interviews; results reported in [28].
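The rate-based outcomes listed above reduce to simple proportions. As a minimal sketch of that arithmetic, the snippet below computes them from a set of tallies. The class name, field names, and all counts are hypothetical and chosen only for illustration; they are not the trial's data or the study team's analysis code.

```python
from dataclasses import dataclass


@dataclass
class FeasibilityCounts:
    """Hypothetical tallies; field names are illustrative, not from the trial."""
    eligible: int          # youth confirmed eligible
    consented: int         # youth who agreed to participate
    completed_trial: int   # participants who completed the entire trial
    ema_expected: int      # EMA data points scheduled across participants
    ema_completed: int     # EMA data points actually completed
    ema_on_time: int       # completed within the timeframe (not back-filled)


def percent(numerator: int, denominator: int) -> float:
    """Return numerator/denominator as a percentage rounded to one decimal."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return round(100 * numerator / denominator, 1)


def summarize(counts: FeasibilityCounts) -> dict[str, float]:
    """Compute the proportion-type feasibility outcomes from raw tallies."""
    return {
        "recruitment_rate": percent(counts.consented, counts.eligible),
        "retention_rate": percent(counts.completed_trial, counts.consented),
        "data_completion_rate": percent(counts.ema_completed, counts.ema_expected),
        "on_time_rate": percent(counts.ema_on_time, counts.ema_completed),
    }


# Invented counts for illustration only; these are not the trial's results.
example = FeasibilityCounts(
    eligible=50,
    consented=40,
    completed_trial=30,
    ema_expected=840,
    ema_completed=630,
    ema_on_time=504,
)
summary = summarize(example)
print(summary)
```

Keeping each numerator and denominator explicit makes it easier to report the outcomes exactly as defined (for example, retention out of those who consented rather than out of those eligible).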

2.5. Data Analysis

2.5.1. Masking to Condition

2.5.2. Interim Analysis and Stopping Guidelines

2.5.3. Feasibility Outcomes

3. Results

3.1. Demographics and Baseline Characteristics

3.2. Data Cleaning

3.3. Primary Feasibility Outcomes

3.3.1. Recruitment Rate

3.3.2. Retention Rate

3.3.3. Data Completion Rate

3.3.4. Participant Ratings of Acceptability and Feasibility

3.3.5. Participant Reports of Barriers and Adverse Events

3.3.6. Engagement with Data Dashboard

3.3.7. Participant Ratings of Data Visualization Use

4. Discussion

4.1. Limitations

4.2. Lessons Learned and Recommendations for Future Trials

- Trial timing may have affected the findings, as most recruitment and data collection occurred during the final exam period and summer holidays for adolescents in Canada. While this may have given participants more flexible schedules, it also made recruitment and sustained engagement more challenging when participants were in high-stress periods, had more unstructured time, or were travelling for the holidays.

- A thorough orientation to the platform is likely to be necessary, given that many youths reported being unable to access the visualizations. Additionally, improved clarity around visualization access and/or built-in prompts to access visualizations may support youth navigation within the platform.

- Participant feedback suggested that there are other important variables that participants would wish to track. While many of the EMA domains mapped onto established core outcome sets for pediatric chronic pain clinical trials [40], there is an opportunity to tailor them further to the variables that matter to youth with chronic pain. Youth reported diet, other symptoms, menstrual cycle, and other pain triggers as variables they would like to track.

- Even with an established platform, more thorough testing of the data pipeline before implementation, including checking of the export and collection of data processes, may have mitigated some of the matching and data loss issues encountered later.
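One way to approach the pipeline testing recommended in the last point above is to submit a known set of test responses before launch and compare it against what the export returns. The sketch below assumes each record can be identified by a (participant ID, prompt ID) pair. The function name, identifiers, and record format are hypothetical and do not describe the platform or export actually used in this trial.

```python
from collections import Counter


def check_export(submitted, exported):
    """Compare test submissions with exported records.

    Both arguments are lists of (participant_id, prompt_id) tuples.
    Returns records that were lost, exported without a matching
    submission, or exported more than once.
    """
    submitted_keys = set(submitted)
    exported_counts = Counter(exported)
    exported_keys = set(exported_counts)
    return {
        "lost": sorted(submitted_keys - exported_keys),
        "unmatched": sorted(exported_keys - submitted_keys),
        "duplicated": sorted(k for k, n in exported_counts.items() if n > 1),
    }


# Hypothetical test records entered by the study team before deployment.
submitted = [("T01", "d1-am"), ("T01", "d1-noon"), ("T02", "d1-am")]
# Hypothetical export containing one duplicate and one unmatchable ID.
exported = [("T01", "d1-am"), ("T01", "d1-am"), ("T03", "d1-am")]

report = check_export(submitted, exported)
print(report)
```

An empty result in all three categories on a test run would suggest that collection and export agree, at least for the cases tested.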

4.3. Clinical Implications

4.4. Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. King, S.; Chambers, C.T.; Huguet, A.; MacNevin, R.C.; McGrath, P.J.; Parker, L.; MacDonald, A.J. The Epidemiology of Chronic Pain in Children and Adolescents Revisited: A Systematic Review. Pain 2011, 152, 2729–2738.

2. Groenewald, C.B.; Essner, B.S.; Wright, D.; Fesinmeyer, M.D.; Palermo, T.M. The Economic Costs of Chronic Pain among a Cohort of Treatment-Seeking Adolescents in the United States. J. Pain 2014, 15, 925–933.

3. Walker, L.S.; Dengler-Crish, C.M.; Rippel, S.; Bruehl, S. Functional Abdominal Pain in Childhood and Adolescence Increases Risk for Chronic Pain in Adulthood. Pain 2010, 150, 568–572.

4. Walker, L.S.; Sherman, A.L.; Bruehl, S.; Garber, J.; Smith, C.A. Functional Abdominal Pain Patient Subtypes in Childhood Predict Functional Gastrointestinal Disorders with Chronic Pain and Psychiatric Comorbidities in Adolescence and Adulthood. Pain 2012, 153, 1798–1806.

5. Vinall, J.; Pavlova, M.; Asmundson, G.; Rasic, N.; Noel, M. Mental Health Comorbidities in Pediatric Chronic Pain: A Narrative Review of Epidemiology, Models, Neurobiological Mechanisms and Treatment. Children 2016, 3, 40.

6. Murray, C.B.; Groenewald, C.B.; de la Vega, R.; Palermo, T.M. Long-Term Impact of Adolescent Chronic Pain on Young Adult Educational, Vocational, and Social Outcomes. Pain 2019, 161, 439–445.

7. Pagé, M.G.; Gauvin, L.; Sylvestre, M.P.; Nitulescu, R.; Dyachenko, A.; Choinière, M. An Ecological Momentary Assessment Study of Pain Intensity Variability: Ascertaining Extent, Predictors, and Associations with Quality of Life, Interference and Health Care Utilization among Individuals Living with Chronic Low Back Pain. J. Pain 2022, 23, 1151–1166.

8. Birnie, K.A.; Killackey, T.; Backlin, G.; Gavin, F.; Harris, C.; Jordan, I.; Kim, L.; Marianayagam, J.; Swidrovich, J.; Lalonde, C.; et al. Ensuring Equity and Inclusion in Virtual Care Best Practices for Diverse Populations of Youth with Chronic Pain. Healthc. Q. 2022, 24, 25–30.

9. Ma, K.P.K.; Stephens, K.A.; Geyer, R.E.; Prado, M.G.; Mollis, B.L.; Zbikowski, S.M.; Waters, D.; Masterson, J.; Zhang, Y. Developing Digital Therapeutics for Chronic Pain in Primary Care: A Qualitative Human-Centered Design Study of Providers’ Motivations and Challenges. JMIR Form. Res. 2023, 7, e41788.

10. Birnie, K.A.; Pavlova, M.; Neville, A.; Noel, M.; Jordan, I.; Jordan, E.; Marianayagam, J.; Stinson, J.; Lorenzetti, D.L.; Faulkner, V.; et al. Rapid Evidence and Gap Map of Virtual Care Solutions across a Stepped Care Continuum for Youth with Chronic Pain and Their Families in Response to the COVID-19 Pandemic. Pain 2021, 162, 2658–2668.

11. Psihogios, A.M.; Lane-Fall, M.B.; Graham, A.K. Adolescents Are Still Waiting on a Digital Health Revolution: Accelerating Research-to-Practice Translation through Design for Implementation. JAMA Pediatr. 2022, 176, 545–546.

12. Palermo, T.M.; de la Vega, R.; Murray, C.; Law, E.; Zhou, C. A Digital Health Psychological Intervention (WebMAP Mobile) for Children and Adolescents with Chronic Pain: Results of a Hybrid Effectiveness-Implementation Stepped-Wedge Cluster Randomized Trial. Pain 2020, 161, 2763–2774.

13. Heathcote, L.C.; Cunningham, S.J.; Webster, S.N.; Tanna, V.; Mattke, E.; Loecher, N.; Spunt, S.L.; Simon, P.; Dahl, G.; Walentynowicz, M.; et al. Smartphone-Based Ecological Momentary Assessment to Study “Scanxiety” among Adolescent and Young Adult Survivors of Childhood Cancer: A Feasibility Study. Psychooncology 2022, 31, 1322–1330.

14. Shiffman, S.; Stone, A.A.; Hufford, M.R. Ecological Momentary Assessment. Annu. Rev. Clin. Psychol. 2008, 4, 1–32.

15. Boerner, K.E.; Pearl-Dowler, L.; Holsti, L.; Siden, H.; Wharton, M.-N.; Oberlander, T.F. Family Perspectives on In-Home Multimodal Longitudinal Data Collection for Children Who Function across the Developmental Spectrum. J. Dev. Behav. Pediatr. 2023, 44, e284–e291.

16. Overton, M.; Ward, S.; Swain, N.; Falling, C.; Gwynne-Jones, D.; Fillingim, R.; Mani, R. Are Ecological Momentary Assessments of Pain Valid and Reliable? A Systematic Review and Meta-Analysis. Clin. J. Pain 2023, 39, 29–40.

17. Cushing, C.C.; Kichline, T.; Blossom, J.B.; Friesen, C.A.; Schurman, J.V. Tailoring Individualized Evaluation of Pediatric Abdominal Pain Using Ecological Momentary Assessment (EMA): A Pilot Study Testing Feasibility and Acceptability. Clin. J. Pain 2019, 35, 859–868.

18. Darabos, K.; Li, Y.; O’Hagan, B.; Palermo, T.; King-Dowling, S.; Jubelirer, T.; Huang, J.; Barakat, L.P.; Schwartz, L.A. Trajectories of Pain Severity and Interference Among Adolescent and Young Adults With Cancer: A Microlongitudinal Study. Clin. J. Pain 2022, 38, 443–450.

19. Kichline, T.; Cushing, C.C.; Connelly, M.; Black, W.R.; Simons, L.E.; Friesen, C.; Schurman, J.V. Microtemporal Relationships in the Fear Avoidance Model: An Ecological Momentary Assessment Study. Clin. J. Pain 2022, 38, 562–567.

20. May, M.; Junghaenel, D.U.; Ono, M.; Stone, A.A.; Schneider, S. Ecological Momentary Assessment Methodology in Chronic Pain Research: A Systematic Review. J. Pain 2018, 19, 699.

21. Nim, C.; Vach, W.; Downie, A.; Kongsted, A. Do Visual Pain Trajectories Reflect the Actual Course of Low Back Pain? A Longitudinal Cohort Study. J. Pain 2023, in press.

22. Fisher, E.; Law, E.; Dudeney, J.; Palermo, T.M.; Stewart, G.; Eccleston, C. Psychological Therapies for the Management of Chronic and Recurrent Pain in Children and Adolescents. Cochrane Database Syst. Rev. 2018, 9, CD003968.

23. Boerner, K.E.; Desai, U.; MacLean, K.; Munzner, T.; Foladare, H.; Gill, J.; Oberlander, T.F. Data Visualization as an Intervention for Pediatric Chronic Pain: A Pilot Feasibility Study Protocol for a Randomized Controlled Crossover Trial. Pilot Feasibility Stud. 2022, 8, 223.

24. Heron, K.E.; Everhart, R.S.; McHale, S.M.; Smyth, J.M. Using Mobile-Technology-Based Ecological Momentary Assessment (EMA) Methods with Youth: A Systematic Review and Recommendations. J. Pediatr. Psychol. 2017, 42, 1087–1107.

25. Wen, C.K.F.; Schneider, S.; Stone, A.A.; Spruijt-Metz, D. Compliance with Mobile Ecological Momentary Assessment Protocols in Children and Adolescents: A Systematic Review and Meta-Analysis. J. Med. Internet Res. 2017, 19, e132.

26. Bromberg, M.H.; Connelly, M.; Anthony, K.K.; Gil, K.M.; Schanberg, L.E. Prospective Mediation Models of Sleep, Pain, and Daily Function in Children with Arthritis Using Ecological Momentary Assessment. Clin. J. Pain 2016, 32, 471–477.

27. Czajkowski, S.M.; Powell, L.H.; Adler, N.; Naar-King, S.; Reynolds, K.D.; Hunter, C.M.; Laraia, B.; Olster, D.H.; Perna, F.M.; Peterson, J.C.; et al. From Ideas to Efficacy: The ORBIT Model for Developing Behavioral Treatments for Chronic Diseases. Health Psychol. 2015, 34, 971–982.

28. Desai, U.; Foladare, H.; Boerner, K.E.; Oberlander, T.F.; Munzner, T.M.; MacLean, K.E. MyWeekInSight: Designing and Evaluating the Use of Visualization in Self-Management of Chronic Pain by Youth. 2023; in preparation.

29. Harris, P.A.; Taylor, R.; Thielke, R.; Payne, J.; Gonzalez, N.; Conde, J.G. Research Electronic Data Capture (REDCap)-A Metadata-Driven Methodology and Workflow Process for Providing Translational Research Informatics Support. J. Biomed. Inform. 2009, 42, 377–381.

30. Smith, A.R.; Kircanski, K.; Brotman, M.A.; Do, Q.B.; Subar, A.R.; Silk, J.S.; Engel, S.; Crosby, R.D.; Harrewijn, A.; White, L.K.; et al. Advancing Clinical Neuroscience through Enhanced Tools: Pediatric Social Anxiety as an Example. Depress. Anxiety 2019, 36, 701–711.

31. Careteam. Available online: Getcareteam.com (accessed on 28 September 2021).

32. Mara, C.A.; Kashikar-Zuck, S.; Cunningham, N.; Goldschneider, K.R.; Huang, B.; Dampier, C.; Sherry, D.D.; Crosby, L.; Farrell Miller, J.; Barnett, K.; et al. Development and Psychometric Evaluation of the PROMIS Pediatric Pain Intensity Measure in Children and Adolescents with Chronic Pain. J. Pain 2020, 22, 48–56.

33. Varni, J.W.; Stucky, B.D.; Thissen, D.; Dewitt, E.M.; Irwin, D.E.; Lai, J.S.; Yeatts, K.; Dewalt, D.A. PROMIS Pediatric Pain Interference Scale: An Item Response Theory Analysis of the Pediatric Pain Item Bank. J. Pain 2010, 11, 1109–1119.

34. Irwin, D.E.; Stucky, B.; Langer, M.M.; Thissen, D.; DeWitt, E.M.; Lai, J.-S.; Varni, J.W.; Yeatts, K.; DeWalt, D.A. An Item Response Analysis of the Pediatric PROMIS Anxiety and Depressive Symptoms Scales. Qual. Life Res. 2010, 19, 595–607.

35. Stone, A.L.; Walker, L.S.; Heathcote, L.C.; Hernandez, J.M.; Basch, M.C.; Wilson, A.C.; Simons, L.E. Somatic Symptoms in Pediatric Patients with Chronic Pain: Proposed Clinical Reference Points for the Children’s Somatic Symptoms Inventory (Formerly Children’s Somatization Inventory). J. Pain 2019, 20, 932.

36. Hsieh, H.-F.; Shannon, S.E. Three Approaches to Qualitative Content Analysis. Qual. Health Res. 2005, 15, 1277–1288.

37. Statistics Canada Appendix 5.1 Ethnic Origins Disseminated from 2016, 2011 and 2006. Available online: https://www12.statcan.gc.ca/census-recensement/2016/ref/dict/app-ann/a5_1-eng.cfm (accessed on 14 June 2023).

38. Palermo, T.M.; Slack, K.; Loren, D.; Eccleston, C.; Jamison, R.N. Measuring and Reporting Adverse Events in Clinical Trials of Psychological Treatments for Chronic Pain. Pain 2020, 161, 713–717.

39. Stevens, W.J.M.; van der Sande, R.; Beijer, L.J.; Gerritsen, M.G.M.; Assendelft, W.J.J. EHealth Apps Replacing or Complementing Health Care Contacts: Scoping Review on Adverse Effects. J. Med. Internet Res. 2019, 21, e10736.

40. Palermo, T.M.; Walco, G.A.; Paladhi, U.R.; Birnie, K.A.; Crombez, G.; de la Vega, R.; Eccleston, C.; Kashikar-Zuck, S.; Stone, A.L. Core Outcome Set for Pediatric Chronic Pain Clinical Trials: Results from a Delphi Poll and Consensus Meeting. Pain 2021, 162, 2539–2547.

41. Garrido, S.; Millington, C.; Cheers, D.; Boydell, K.; Schubert, E.; Meade, T.; Nguyen, Q.V. What Works and What Doesn’t Work? A Systematic Review of Digital Mental Health Interventions for Depression and Anxiety in Young People. Front. Psychiatry 2019, 10, 759.

42. DALL·E 2. Available online: https://openai.com/dall-e-2 (accessed on 14 June 2023).

43. Grunberg, V.A.; Greenberg, J.; Mace, R.A.; Bakhshaie, J.; Choi, K.W.; Vranceanu, A.M. Fitbit Activity, Quota-Based Pacing, and Physical and Emotional Functioning among Adults with Chronic Pain. J. Pain 2022, 23, 1933–1944.

44. Randall, E.T.; Shapiro, J.B.; Smith, K.R.; Jervis, K.N.; Logan, D.E. Under Pressure to Perform. Clin. J. Pain 2019, 35, 967–974.

45. Nelson, S.; Cunningham, N. The Impact of Posttraumatic Stress Disorder on Clinical Presentation and Psychosocial Treatment Response in Youth with Functional Abdominal Pain Disorders: An Exploratory Study. Children 2020, 7, 56.

46. Langford, D.J.; Lou, R.; Sheen, S.; Amtmann, D.; Colloca, L.; Edwards, R.R.; Farrar, J.T.; Katz, N.P.; McDermott, M.P.; Reeve, B.B.; et al. Expectations for Improvement: A Neglected but Potentially Important Covariate or Moderator for Chronic Pain Clinical Trials. J. Pain 2023, 24, 575–581.

47. de la Vega, R.; Palermo, T.M. Mediating Role of Treatment Perceptions in the Relationship between Individual Characteristics and Engagement with a Digital Psychological Intervention for Pediatric Chronic Pain: Secondary Data Analysis. JMIR Pediatr. Parent. 2023, 6, e42399.

48. Barnes, K.; Faasse, K.; Geers, A.L.; Helfer, S.G.; Sharpe, L.; Colloca, L.; Colagiuri, B. Can Positive Framing Reduce Nocebo Side Effects? Current Evidence and Recommendation for Future Research. Front. Pharmacol. 2019, 10, 439084.

49. Crum, A.; Zuckerman, B. Changing Mindsets to Enhance Treatment Effectiveness. JAMA 2017, 317, 2063.

50. Webb, C.A.; Forgeard, M.; Israel, E.S.; Lovell-Smith, N.; Beard, C.; Björgvinsson, T. Personalized Prescriptions of Therapeutic Skills from Patient Characteristics: An Ecological Momentary Assessment Approach. J. Consult. Clin. Psychol. 2022, 90, 51–60.

51. Schneider, S.; Junghaenel, D.U.; Smyth, J.M.; Wen, C.K.F.; Stone, A.A. Just-in-Time Adaptive Ecological Momentary Assessment (JITA-EMA). Behav. Res. Methods 2023, 1, 1–19.

52. Fundoiano-Hershcovitz, Y.; Pollak, K.; Goldstein, P. Personalizing Digital Pain Management with Adapted Machine Learning Approach. Pain Rep. 2023, 8, e1065.

53. Higgins, K.S.; Tutelman, P.R.; Chambers, C.T.; Witteman, H.O.; Barwick, M.; Corkum, P.; Grant, D.; Stinson, J.N.; Lalloo, C.; Robins, S.; et al. Availability of Researcher-Led EHealth Tools for Pain Assessment and Management: Barriers, Facilitators, Costs, and Design. Pain Rep. 2018, 3, e686.

54. Cohen, K.A.; Schleider, J.L. Adolescent Dropout from Brief Digital Mental Health Interventions within and beyond Randomized Trials. Internet Interv. 2022, 27, 100496.

55. Curran, G.M.; Bauer, M.; Mittman, B.; Pyne, J.M.; Stetler, C. Effectiveness-Implementation Hybrid Design: Combining Elements of Clinical Effectiveness and Implementation Research to Enhance Public Health. Med. Care 2012, 50, 217–226.

56. Pope, N.; de Candido, L.K.; Crellin, D.; Palmer, G.; South, M.; Harrison, D. Call to Focus on Digital Health Technologies in Hospitalized Children’s Pain Care: Clinician Experts’ Qualitative Insights on Optimizing Electronic Medical Records to Improve Care. Pain 2023, 164, 1608–1615.

57. Birnie, K.A.; Dib, K.; Ouellette, C.; Dib, M.A.; Nelson, K.; Pahtayken, D.; Baerg, K.; Chorney, J.; Forgeron, P.; Lamontagne, C.; et al. Partnering For Pain: A Priority Setting Partnership to Identify Patient-Oriented Research Priorities for Pediatric Chronic Pain in Canada. CMAJ Open 2019, 7, E654.

58. Eldridge, S.M.; Chan, C.L.; Campbell, M.J.; Bond, C.M.; Hopewell, S.; Thabane, L.; Lancaster, G.A. CONSORT 2010 statement: Extension to randomised pilot and feasibility trials. BMJ 2016, 355, i5239.

| | N (%) or M (SD) |
|---|---|
| Age (years) | 15.63 (1.73) Range = 12–18 years |
| Sex assigned at birth | |
| Female | 34 (73.9%) |
| Male | 12 (26.1%) |
| Gender identity a,b | |
| Female | 30 (65.2%) |
| Male | 14 (30.4%) |
| Indigenous or other cultural gender minority identity | 1 (2.2%) |
| Non-binary | 1 (2.2%) |
| Born in Canada | 43 (93.5%) |
| Languages spoken at home a,b | |
| English | 45 (97.8%) |
| Other c | 8 (17.4%) |
| Ethnicity a,b,d | |
| African origins | 1 (2.2%) |
| Asian origins | 8 (17.4%) |
| European origins | 29 (63.0%) |
| North American Aboriginal origins | 3 (6.5%) |
| Other North American origins | 7 (15.2%) |
| Family socioeconomic status b | |
| Very well off | 6 (13%) |
| Quite well off | 10 (21.7%) |
| Average | 22 (47.8%) |
| Not so well off | 4 (8.7%) |
| Not at all well off | 1 (2.2%) |
| Current treatments a | |
| Over-the-counter medication | 26 (56.5%) |
| Prescription medication | 18 (39.1%) |
| Physiotherapy | 23 (50%) |
| Psychology | 8 (17.4%) |
| Occupational therapy | 3 (6.5%) |
| Naturopathic therapy | 6 (13%) |
| Chiropractic therapy | 6 (13%) |
| Massage therapy | 21 (45.7%) |
| Acupuncture | 7 (15.2%) |
| Hot-cold treatments | 5 (10.9%) |
| Herbal remedies | 4 (8.7%) |
| Counselling or other mental health treatments | 15 (32.6%) |
| Other (myoactivation and supplements) | 2 (4.3%) |

| | N (%) or M (SD) |
|---|---|
| Pain | |
| Location b | |
| Musculoskeletal | 27 (61%) |
| Back | 11 (25%) |
| Multi-site | 8 (18%) |
| Abdominal | 6 (13%) |
| Headache, migraine | 3 (7%) |
| Other | 6 (13%) |
| Number of pain sites c | 2.2 (2.4) Range = 1–12 |
| Duration (years) | 4.8 (3.9) Range = 0.5–18 |
| Intensity (past 7 days) | 5.5 (2.1) Range = 1–9 |
| Pain interference (PROMIS), T-score | 61.60 (7.42) Range = 9–38 |
| Somatic symptoms (CSSI-8) | 14.59 (6.68) Range = 3–29 |
| Depressive symptoms (PROMIS), T-score d | 57.53 (9.72) Range = 35.2–73.6 |
| Anxiety symptoms (PROMIS), T-score | 55.04 (8.74) Range = 33.5–69.4 |

| | Part A (EMA Only) n = 37 | Part B (EMA + Visualization) n = 34 |
|---|---|---|
| Quantitative questions | | |
| How comfortable did you feel answering the questions on the EMA? | | |
| Very uncomfortable | 8 (21.6%) | 4 (11.8%) |
| Uncomfortable | - | - |
| Neutral | 7 (18.9%) | 6 (17.6%) |
| Comfortable | 15 (40.5%) | 12 (35.3%) |
| Very comfortable | 7 (18.9%) | 12 (35.3%) |
| How was it filling out questions 3 times a day? | | |
| 3 times a day was too much | 13 (35.1%) | 12 (35.3%) |
| 3 times a day was just right | 22 (59.5%) | 21 (61.8%) |
| 3 times a day was too few times | 2 (5.4%) | 1 (2.9%) |
| How did you find the number of questions asked each time? | | |
| Too many questions | 3 (8.1%) | 6 (17.6%) |
| Just right | 30 (81.1%) | 25 (73.5%) |
| Too few questions | 4 (10.8%) | 3 (8.8%) |
| What did you do when you received the reminder if you were in the middle of doing something else? | | |
| Stopped what I was doing and answered the questions | 8 (22.2%) | 10 (29.4%) |
| Finished the tasks I was working on and did the questions after | 26 (72.2%) | 20 (58.8%) |
| Did the questions later | 5 (13.9%) | 5 (14.7%) |
| Other | 6 (16.7%) | 8 (23.5%) |
| Is the amount that you got paid to participate in this study enough for what we are asking you to do? | | |
| Yes | 31 (86.1%) | 32 (94.1%) |
| Qualitative (free-text) questions | | |
| What did you like about participating in the EMA study this week? a | | |
| Feasibility, including facilitating routine | 11 (29.7%) “I liked how I didn’t have to worry about them because they were fast and easy and i didn’t have much in my day so it followed my schedule” | 6 (17.6%) “That it was from home.” |
| Opportunity for reflection | 9 (24.3%) “I am able to think more about how I feel each day. Normally I don’t think of checking up on myself.” | 6 (17.6%) “Participating in the survey this week gave me insight to the what triggers my pain and how it affects how I am feeling. The survey also made me reflect throughout the day which helped me feel present during the week.” |
| Helping others | 4 (10.8%) “I liked participating in this because it helps the study and can help others in the future” | 5 (14.7%) “I’m glad that my experiences might help other children and youth in my position.” |
| Seeing patterns | 3 (8.1%) “Participating in the EMA study this week allowed me to reflect on my physical and emotional feelings throughout the day. It helped me notice patterns in my behaviour that show me how I function on a day to day basis.” | 5 (14.7%) “I liked how I could document my emotions and have them displayed on a chart. I also liked how I got the option to rate my pain from almost no pain to the worst I experienced. Overall it was eye opening to be able to look back on the data and see my behavior.” |
| Expressing self | 2 (5.4%) “I felt like I had somewhere to vent my feelings and how I felt during the week” | 2 (5.9%) “I liked being able to express my feelings” |
| Tracking | 3 (8.1%) “Being able to keep track of my emotions and feelings” | 2 (5.9%) “It helped me keep track of which day of the week it was. It was useful to write down my pain. It made me realize how many symptoms I have and experience all the time.” |
| Others less commonly reported included the visualizations (n = 3) and feeling cared for/checked in on (n = 2). | | |
| What did you dislike about participating in the EMA study this week? a | | |
| Burden | 15 (40.5%) “I didn’t like that I had do to i in the car a couple times.” | 12 (35.3%) “Although I liked the routine the times fell awkwardly with my work schedule. I felt rushed to complete the noon submission because I was doing it at work” |
| Technical issues | 6 (16.7%) “Sometimes I would do the survey and it wouldn’t save” | 11 (32.4%) “However, the system did not register many of the surveys that I actually did fill out and complete on my phone. This also made the graphs difficult to interpret and read because of all the missed data.” |
| Repetitive nature of questions/procedures | 7 (18.9%) “The questions weren’t diverse enough to get different answers out of me some days.” | 4 (11.7%) “it was very tedious after a couple days” |
| Content of EMA d | 6 (16.7%) “I disliked that all three surveys were mainly about the thing I was most worried about that day. Often, if the thing I was worried about was a specific event, it had ended before I finished all three surveys. This made it difficult to answer the questions for an event that was already over.” | 6 (17.6%) “I sometimes did not feel like the questions applied to me or my life, and I wish there were more about the pain I am experiencing and how it affects me.” |
| Issues related to WiFi/data access | 1 (2.7%) “that you needed wifi to fill out the questions” | 2 (5.9%) “The fact you needed to have wifi and if wasn’t like an app this was difficult for people who don’t have data.” |
| Did you have any problems in doing the EMA study? If so, please describe them here (e.g., technical difficulties, not having access to your smartphone when the text messages arrived, questions too hard to understand, forgot to answer): | | |
| Technical issues | 18 (48.6%) | 20 (58.8%) |
| Too busy/prompts at difficult times to respond | 7 (18.9%) | 4 (11.7%) |
| Forgot | 5 (13.5%) | 4 (11.7%) |
| Issues related to WiFi/data access, internet outage, etc. | 2 (5.4%) | 3 (8.8%) |
| Wording of questions | 2 (5.4%) | 3 (8.8%) |
| What did you think of the timing of the text message reminders? a | | |
| Good c | 23 (62.2%) | 19 (55.9%) |
| Did not receive reminders | 4 (10.8%) | 3 (8.8%) |
| Morning prompt (8:00 A.M.) was too early | 3 (8.1%) | 4 (11.7%) |
| Afternoon prompt (noon) was too busy | 2 (5.4%) | 1 (2.9%) |
| Evening prompt (6:00 P.M.) was too early/late | 1 (2.7%) | 2 (5.9%) |
| Did not have access to phone | 1 (2.7%) | 2 (5.9%) |
| Is there anything else we could track with the EMA questions that would help us better understand your pain/your day? a,b | | |
| Diet | 12 (32.4%) | 10 (29.4%) |
| Symptoms (e.g., more details about pain, other symptoms) | 8 (21.6%) | 11 (32.3%) |
| Menstrual cycle | 7 (18.9%) | 11 (32.3%) |
| Weather | 8 (21.6%) | 2 (5.9%) |
| Physical activity | 5 (13.5%) | 4 (11.7%) |
| Details about the day (e.g., major events, context like scheduling) | 5 (13.5%) | 3 (8.8%) |
| Other less frequent responses included pain triggers, medications, healthcare interactions, other health conditions, stressful events, pain relievers, pain-related cognitions, and opportunities to elaborate on existing topics. | | |
| What kinds of questions should we be answering with this kind of research? a | | |
| Relationship between mental health and pain | 7 (18.9%) | 7 (20.6%) |
| How to improve care (self-management or provider-led) | 7 (18.9%) | 6 (17.6%) |
| Impact of pain on daily life | 5 (13.5%) | 4 (11.7%) |
| Characteristics of pain (e.g., frequency, variability, tolerance) | 1 (2.7%) | 5 (14.7%) |
| Pain origins and associated triggers | 2 (5.4%) | 4 (11.7%) |
| Relationship between sleep/fatigue and pain | 2 (5.4%) | 2 (5.9%) |
| Other less frequent responses included understanding the impact of pain on functioning, impact of tracking pain, accuracy in estimating events, and comparing individual responses with population-level | | |
| Do you have any other questions or feedback you would like to give us about participating in this study? a | | |
| Enjoyed the experience/found it beneficial | 11 (29.7%) | 5 (14.7%) |
| Technical suggestions/issues (e.g., preference for an app rather than a website, did not receive reminders, no data/WiFi access) | 3 (8.1%) | 4 (11.7%) |
| Suggestions or confusion regarding EMA question wording | 4 (10.8%) | 1 (2.9%) |
| Did not know how to access mental health resources mentioned by the team | 2 (5.4%) | 2 (5.9%) |
| Suggestions regarding timing of prompts | 1 (2.7%) | 2 (5.9%) |

| Participants Who Looked at the Dashboard (n = 16) | |
|---|---|
| Things they liked, found helpful, or useful | |
| Looking at trends (56%) | “Being able to visually see how I was doing through the week” |
| Memory aid (13%) | “Allowed me to see everything at once instead of trying to remember how I felt on a certain day” |
| Specific variables (13%) | “the expectations vs realty was a good reality check if you were overthinking” |
| Things they disliked, found unhelpful or confusing | |
| Data display (31%) | “There should be an option to change what type of graph so it is easier to read for some.” |
| Found it generally confusing (25%) | “It was difficult and confusing to interpret/read with missing data so it looked “blotchy” instead of being a consistent chart.” |
| Missing data (19%) | “Some of the graph styles were not fitting of the data they were representing, as well as the gaps in the data due to technical error made it really hard to properly read in the case of such a short study” |
| Not useful (6%) | “I looked at it, but did not really find much use in it. It did not really help me much” |
| Participants that did not look at the dashboard (n = 17) | |
| Why not? | |
| Could not find it/did not know it existed (53%) | “I assume I would love to look at a visual graph of my day, but I couldn’t find my dashboard anywhere so I am still looking.” |
| Too busy or forgot (29%) | “the amount of time i put in each day felt like enough and it didn’t bother me to not check it regularly” |
| Did not want to look (6%) | “Because I didn’t want to” |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Boerner, K.E.; Desai, U.; Luu, J.; MacLean, K.E.; Munzner, T.; Foladare, H.; Shen, J.; Gill, J.; Oberlander, T.F. “Making Data the Drug”: A Pragmatic Pilot Feasibility Randomized Crossover Trial of Data Visualization as an Intervention for Pediatric Chronic Pain. Children 2023, 10, 1355. https://doi.org/10.3390/children10081355

Boerner KE, Desai U, Luu J, MacLean KE, Munzner T, Foladare H, Shen J, Gill J, Oberlander TF. “Making Data the Drug”: A Pragmatic Pilot Feasibility Randomized Crossover Trial of Data Visualization as an Intervention for Pediatric Chronic Pain. Children. 2023; 10(8):1355. https://doi.org/10.3390/children10081355

Chicago/Turabian Style: Boerner, Katelynn E., Unma Desai, Jessica Luu, Karon E. MacLean, Tamara Munzner, Haley Foladare, Jane Shen, Javed Gill, and Tim F. Oberlander. 2023. "“Making Data the Drug”: A Pragmatic Pilot Feasibility Randomized Crossover Trial of Data Visualization as an Intervention for Pediatric Chronic Pain" Children 10, no. 8: 1355. https://doi.org/10.3390/children10081355

APA Style: Boerner, K. E., Desai, U., Luu, J., MacLean, K. E., Munzner, T., Foladare, H., Shen, J., Gill, J., & Oberlander, T. F. (2023). “Making Data the Drug”: A Pragmatic Pilot Feasibility Randomized Crossover Trial of Data Visualization as an Intervention for Pediatric Chronic Pain. Children, 10(8), 1355. https://doi.org/10.3390/children10081355