This section presents a detailed quantitative and qualitative evaluation of the proposed model on four CNS tumor datasets.

3.1. Experimental Setup and Datasets

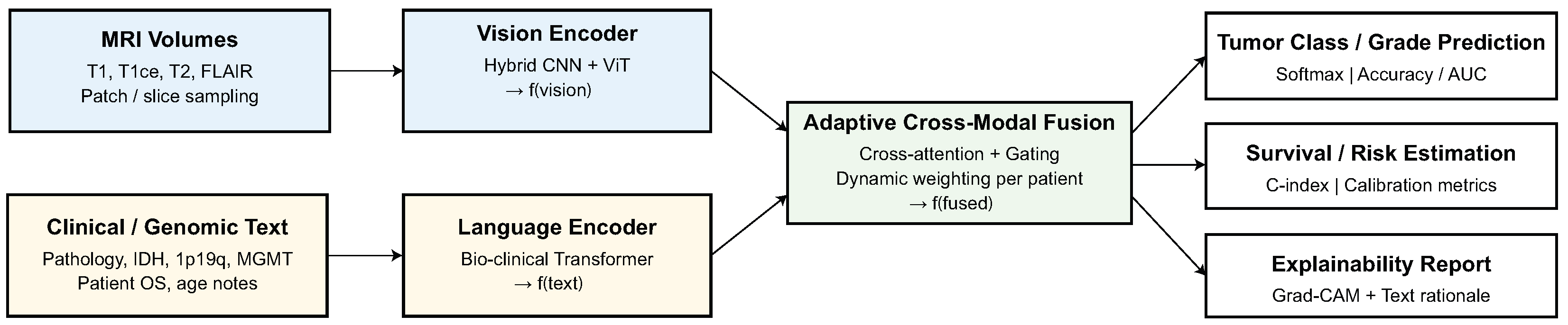

The proposed methodology was evaluated on four public CNS tumor datasets: BraTS (overall survival prediction subset), TCGA-GBM/LGG, REMBRANDT, and GLASS. These datasets span a wide range of imaging protocols, acquisition scanners, and patient demographics, which makes it possible to test both multimodal diagnostic performance and generalization across domains. Each dataset includes T1, contrast-enhanced T1 (T1c), T2, and FLAIR MRI sequences, as well as clinical data in text or structured form, such as age, tumor grade, IDH mutation, MGMT methylation, 1p/19q codeletion status, and therapy response. With more than 1700 patient cases in total, these datasets support benchmarking within and across domains under different imaging and reporting settings.

The AVLT model was trained with the AdamW optimizer, a batch size of 8, and 120 training epochs, using a cosine-annealing learning-rate schedule with a linear warm-up over the first 10% of iterations. Weight decay and a dropout rate of 0.1 were applied to the vision encoder, language encoder, and fusion layers. To stabilize training, gradient clipping with a value of 1.0 was applied. All experiments used the same hyperparameter settings to ensure a fair comparison across datasets. All models were implemented in Python 3.10 with PyTorch 2.0.1, HuggingFace Transformers 4.31.0, MONAI 1.2.0, and CUDA 11.8; training and inference were performed on an NVIDIA RTX 5070 GPU, manufactured by NVIDIA Corporation (Santa Clara, CA, USA). All additional experiments used the same software configuration to support reproducibility.
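The schedule described above (linear warm-up over the first 10% of iterations, followed by cosine annealing) can be sketched as a plain function of the iteration index. This is a minimal illustration rather than the training code used in the paper: the function name `lr_at_step`, the peak learning rate `1e-4`, the iteration count, and the decay floor of zero are assumptions chosen only for the example.

```python
import math


def lr_at_step(step: int, total_steps: int, base_lr: float,
               warmup_frac: float = 0.10, min_lr: float = 0.0) -> float:
    """Learning rate at a given optimizer step (0-indexed)."""
    warmup_steps = max(1, int(round(warmup_frac * total_steps)))
    if step < warmup_steps:
        # Linear warm-up: ramp from base_lr / warmup_steps up to base_lr.
        return base_lr * (step + 1) / warmup_steps
    # Cosine annealing over the remaining steps, from base_lr down to min_lr.
    decay_steps = max(1, total_steps - warmup_steps)
    progress = min(1.0, (step - warmup_steps) / decay_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    total = 1000   # hypothetical number of iterations
    peak = 1e-4    # hypothetical peak learning rate (not taken from the paper)
    for s in (0, 49, 99, 100, 550, 999):
        print(s, f"{lr_at_step(s, total, peak):.2e}")
```

In a PyTorch training loop, the same shape would typically be obtained from the built-in schedulers instead of a hand-written function; the sketch only makes the warm-up and decay phases explicit.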

The BraTS overall survival (OS) subset comprises 285 subjects with pre-operative multi-sequence MRI (T1, T1c, T2, FLAIR), all co-registered to a common anatomical space, skull-stripped, and provided at isotropic 1 mm voxel spacing. Clinical metadata consist of patient age at diagnosis and survival category (short-term: <300 days, mid-term: 300–450 days, long-term: >450 days). No molecular markers (e.g., IDH or MGMT) are provided in this subset, so it is suited to multimodal survival modeling rather than molecular prediction. The TCGA-GBM/LGG cohort contains approximately 600 subjects from The Cancer Imaging Archive (TCIA) with paired MRI and rich clinical metadata. The MRI protocol consists of T1, T1c, T2, and FLAIR sequences, with acquisition parameters that vary between institutions and scanners. The metadata include patient demographics, tumor grade (GBM, LGG), IDH mutation, MGMT promoter methylation, 1p/19q codeletion, and patient survival. These diagnostic and clinical characteristics make TCGA the primary radiogenomic domain for modeling multimodal fusion of imaging and molecular descriptors.
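The three survival categories stated above can be expressed as a small binning function. This is an illustrative sketch; the function name `survival_class` and the string labels are assumptions, while the thresholds (300 and 450 days) follow the text, with both boundary values falling in the mid-term class.

```python
def survival_class(days: float) -> str:
    """Map overall survival in days to the three-way category used for BraTS OS."""
    if days < 0:
        raise ValueError("survival time must be non-negative")
    if days < 300:
        return "short"   # short-term: < 300 days
    if days <= 450:
        return "mid"     # mid-term: 300-450 days, inclusive
    return "long"        # long-term: > 450 days


if __name__ == "__main__":
    for d in (120, 300, 450, 451):
        print(d, survival_class(d))
```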

The REMBRANDT dataset comprises nearly 500 glioma cases collected from a range of institutions, with considerable heterogeneity in MRI acquisition protocols, resolutions, and scanner types. For the majority of cases, standardized T1, T1c, T2, and FLAIR sequences are available. The clinical metadata include age, tumor histology, grade, and overall survival, together with selected genomic markers where available. Because of its strong inter-institutional variability, REMBRANDT is an ideal testbed for robustness to distributional shift. The GLASS cohort contains approximately 300 longitudinal glioma cases with paired primary and recurrent MRI scans, generally consisting of T1, T1c, T2, and FLAIR. This dataset also provides follow-up data with detailed recurrence information, treatment response, and transcriptomic markers for a subset of individuals. Because GLASS is an unseen domain in the LODO evaluation and carries longitudinal and recurrence metadata, it is used solely to assess generalizability and recurrence prediction.

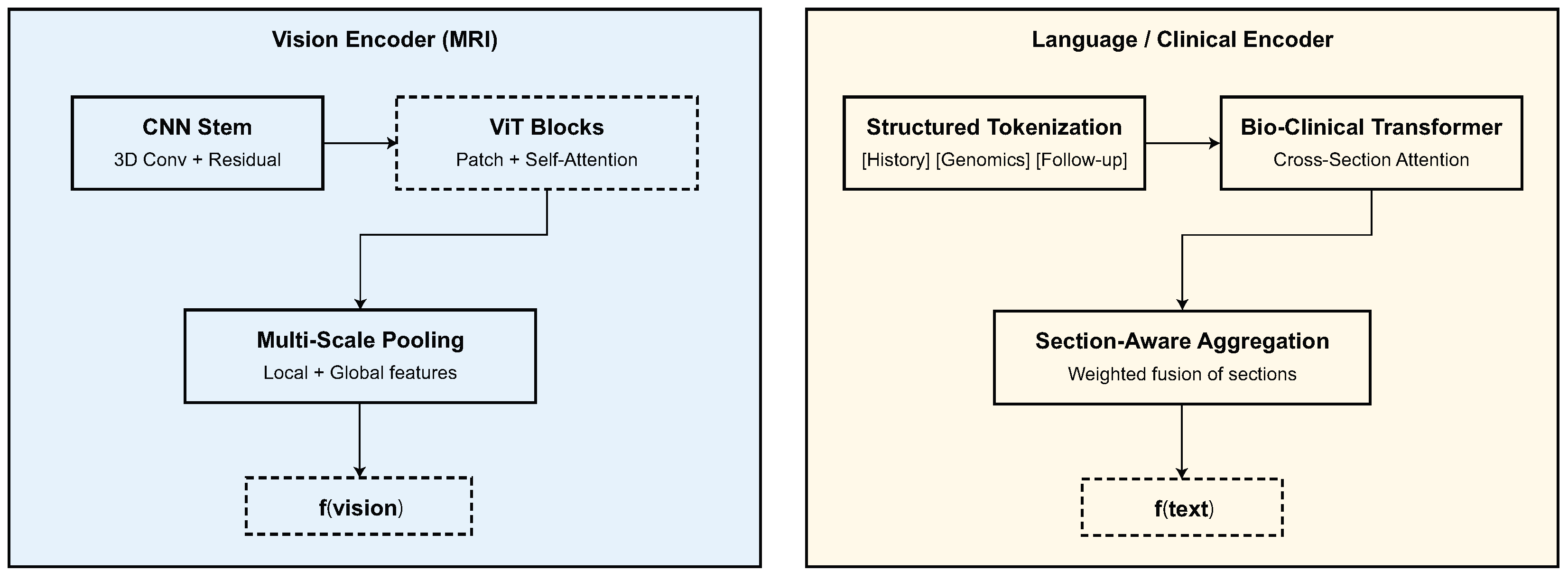

All MRI volumes were co-registered to a standard anatomical space, skull-stripped, corrected for intensity inhomogeneity with N4 bias field correction, and resampled to isotropic voxel spacing. Each volume was intensity-normalized to zero mean and unit variance, and slices were resized to a fixed resolution for transformer-based encoding. Clinical text was tokenized using the BioBERT vocabulary, and structured numerical attributes (e.g., age and tumor grade) were normalized to [0, 1] using min–max scaling. For multimodal consistency, patients missing either the imaging or the textual component were excluded from training and validation.
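The two normalization steps described above, zero-mean/unit-variance scaling of intensities and min–max scaling of structured attributes, can be sketched with the standard library. This is a simplified illustration on flat lists; a real pipeline would operate on 3D arrays, and whether statistics are computed over the whole volume or only inside the brain mask is not stated here. The function names `zscore` and `min_max` and the small `eps` constant are assumptions.

```python
from statistics import fmean, pstdev


def zscore(values: list[float], eps: float = 1e-8) -> list[float]:
    """Scale values to zero mean and (approximately) unit variance."""
    mu = fmean(values)
    sigma = pstdev(values, mu)
    # eps guards against division by zero for constant inputs.
    return [(v - mu) / (sigma + eps) for v in values]


def min_max(values: list[float]) -> list[float]:
    """Scale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant attribute: map everything to 0
    return [(v - lo) / (hi - lo) for v in values]


if __name__ == "__main__":
    intensities = [10.0, 20.0, 30.0, 40.0]   # toy voxel intensities
    ages = [20.0, 40.0, 60.0]                # toy patient ages
    print(zscore(intensities))
    print(min_max(ages))
```

In a cross-domain setting, the minimum and maximum would presumably be estimated on the training (source) data only and then reused for the held-out dataset, so that no target statistics leak into preprocessing.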

A LODO strategy was adopted for cross-domain evaluation, where three datasets were used for training and the remaining one served as the unseen target domain. Formally, if $\mathcal{D} = \{D_1, D_2, D_3, D_4\}$ denotes all available datasets, then for each iteration $k$, the source domain and target domain are defined as $\mathcal{D}_{\mathrm{src}} = \mathcal{D} \setminus \{D_k\}$ and $\mathcal{D}_{\mathrm{tgt}} = \{D_k\}$. In each iteration, the model was trained for 150 epochs with the AdamW optimizer (as implemented in PyTorch 2.0.1), a batch size of 8, and cosine learning-rate scheduling. Performance was tracked using classification measures, namely accuracy (ACC), precision (PRE), recall (REC), F1-score (F1), and area under the ROC curve (AUC), as well as the C-index and MAE for survival prediction tasks. The mean performance across all four LODO folds summarizes how well the proposed model generalizes to unseen domains.
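The LODO protocol above amounts to a loop in which each dataset is held out once as the target domain. The sketch below only enumerates the folds and averages a per-fold score; the dataset labels, the helper names `lodo_folds` and `mean_over_folds`, and the placeholder scores are assumptions for illustration and are not results from the paper.

```python
from collections.abc import Iterator

DATASETS = ["BraTS-OS", "TCGA-GBM/LGG", "REMBRANDT", "GLASS"]


def lodo_folds(datasets: list[str]) -> Iterator[tuple[list[str], str]]:
    """Yield (source_datasets, target_dataset) pairs, one per held-out dataset."""
    for held_out in datasets:
        sources = [d for d in datasets if d != held_out]
        yield sources, held_out


def mean_over_folds(scores: dict[str, float]) -> float:
    """Average a metric over the held-out datasets."""
    return sum(scores.values()) / len(scores)


if __name__ == "__main__":
    for sources, target in lodo_folds(DATASETS):
        print(f"train on {sources} -> test on {target}")
    # Placeholder values only, to show how the fold average is formed.
    dummy_scores = {name: 0.5 for name in DATASETS}
    print(mean_over_folds(dummy_scores))
```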

3.2. Performance on Individual Datasets

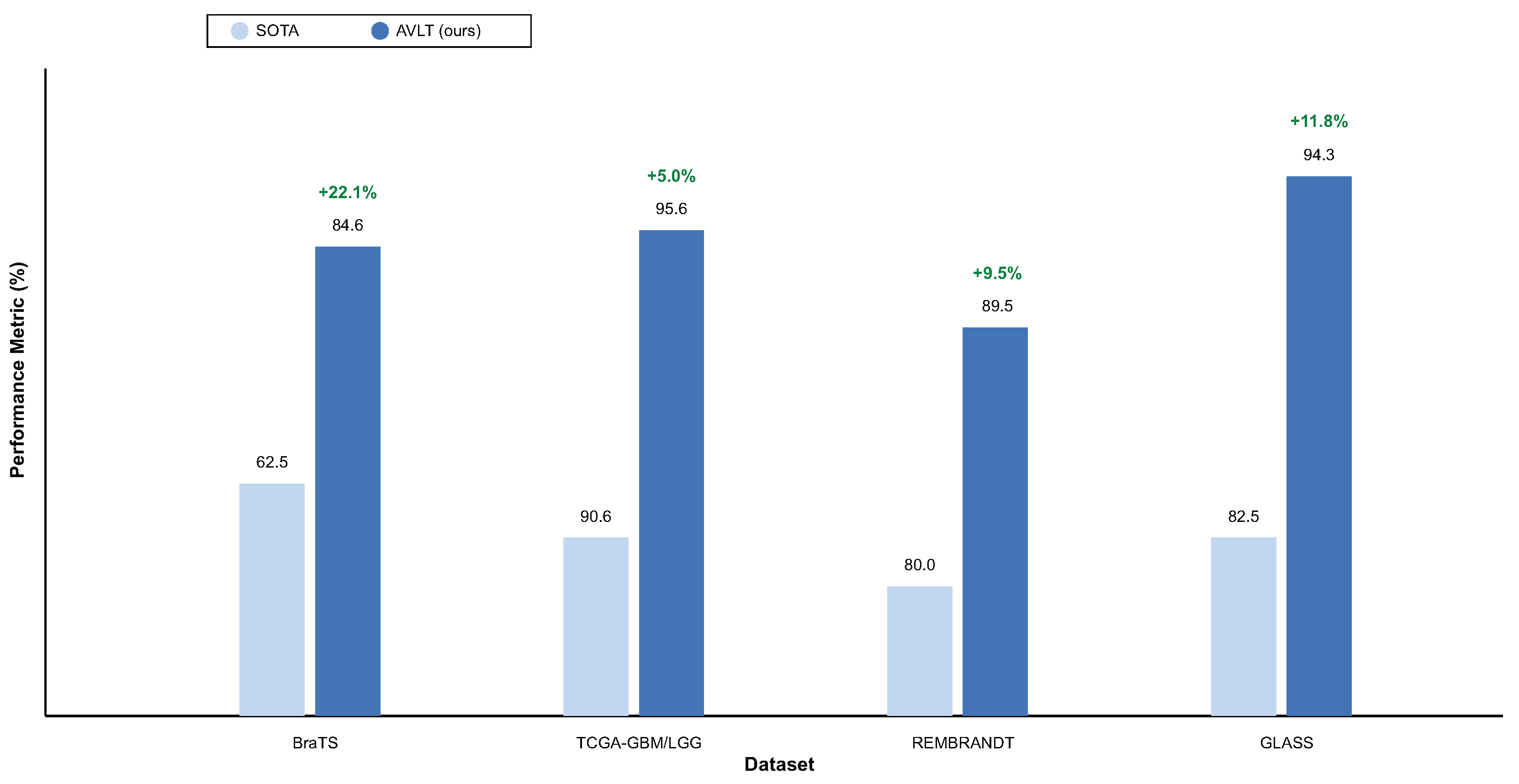

We begin by evaluating the proposed model’s within-dataset performance on BraTS (OS prediction subset), TCGA-GBM/LGG, REMBRANDT, and GLASS to determine its discriminative ability. Because each dataset comes from a distinct imaging and clinical setting, this evaluation tests whether the model adapts to diverse tumor shapes, scanner types, and levels of annotation detail. For fairness, all baseline architectures were trained using the same preprocessing, data augmentation, and optimization procedures.

The selected baselines for each dataset reflect current, competitive state-of-the-art approaches in the literature, including radiogenomics foundation models, longitudinal survival architectures, and cross-attention multimodal fusion networks that jointly exploit MRI and clinical information. This ensures that the reported improvements of AVLT over ResNet- and ViT-style backbones, radiogenomics pipelines, and medical CLIP-adapted models are measured against strong modern benchmarks rather than trivial or outdated reference methods. Although all benchmark datasets in our experiments contain the complete set of multimodal information, AVLT is designed to operate with a missing modality at inference, using adaptive gating and domain-agnostic normalization when either the clinical text or some MRI sequences are absent.

Table 2 shows that the AVLT framework performs best on the BraTS overall survival (OS) subset, with higher accuracy and AUC than any of the baseline designs. By fusing MRI features with clinical descriptions, the network captures both morphological and contextual prognostic cues, and its C-index indicates good agreement with observed patient survival. AVLT with GradAlign is stable, which indicates that the adaptive optimization is robust. Unimodal baselines such as ResNet-50 and ViT discriminate poorly, underscoring the need for cross-modal representation learning. The combination of classical radiomics with an SVM performs worst, which illustrates the value of end-to-end deep learning integration. Overall, AVLT achieves the best accuracy, precision, recall, and survival concordance on the BraTS dataset, showing that it is well suited to multimodal prognostic modeling.

Table 3 shows that AVLT is the best method for predicting IDH mutation status on the TCGA-GBM/LGG dataset, outperforming both radiogenomic and transformer-based baselines in accuracy and AUC. Removing the language branch lowers accuracy by 3.5%, which shows how important the clinical text is for molecular prediction. FoundBioNet and Cross-Attention Fusion Net are competitive but reach lower accuracy and AUC, suggesting that the adaptive cross-modal alignment in AVLT yields more discriminative representations. Single-modality architectures such as 3D ResNet-18 and ClinicalBERT predict poorly. These results demonstrate that adaptive attention improves generalization and robustness on the TCGA-GBM/LGG dataset by combining imaging and textual descriptors.

Table 4 shows that AVLT performs well on the REMBRANDT dataset despite substantial variation between institutions and acquisition protocols. Its accuracy and AUC show that it remains effective under different imaging conditions. Removing the ANM reduces accuracy, underscoring the importance of adaptive feature recalibration in mitigating scanner-induced intensity variations. Removing the text modality lowers performance even further, which shows how much complementary clinical descriptions contribute to interpreting the imaging. AVLT exceeds the FoundBioNet transfer baseline by more than 11% in accuracy and 9% in AUC, indicating better adaptation to new domains. CNN-BERT and Cross-Attention CNN are consistent but weaker, while ViT and 3D CNN are not stable across imaging sources. Overall, AVLT shows strong diagnostic consistency across cohorts in the REMBRANDT dataset.

Table 5 shows that AVLT is the best model for predicting tumor recurrence on the GLASS dataset, with the highest accuracy and AUC of all compared methods. Its C-index indicates strong agreement between predicted and observed recurrence outcomes. AVLT is around 12% better than the best LUNAR model, which shows that it has more discriminative power. Other multimodal baselines, such as Multi-Modal FusionNet and GraphNet-MRI, perform well but fall short of the proposed method by 1.5–2% on key metrics. 3D ResNet-18, Swin-Transformer, and CNN-BERT, which are conventional CNN- and transformer-based architectures, give middling results with higher variability, suggesting that their feature alignment across modalities is less dependable. AVLT’s adaptive cross-modal attention and robust feature normalization make it easier to combine MRI features with clinical indicators derived from text, which accounts for much of the difference.
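For readers unfamiliar with the C-index used for the survival and recurrence tasks, the sketch below computes Harrell's concordance index: the fraction of comparable patient pairs in which the patient with the earlier event was assigned the higher predicted risk. It is a plain quadratic-time illustration under the assumption of right-censored data, not the evaluation code used in the paper; the function name and argument layout are hypothetical.

```python
def concordance_index(times: list[float], risks: list[float],
                      events: list[bool]) -> float:
    """Harrell's C-index for right-censored data.

    times  : observed follow-up time per patient
    risks  : predicted risk score (higher means earlier expected event)
    events : True if the event was observed, False if censored
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # a pair is comparable only if the earlier time is an event
        for j in range(n):
            if times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0   # higher risk for the earlier event
                elif risks[i] == risks[j]:
                    concordant += 0.5   # tied predictions count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


if __name__ == "__main__":
    print(concordance_index([5, 10, 15], [0.9, 0.5, 0.1], [True, True, True]))
```

A value of 0.5 corresponds to random ordering and 1.0 to a perfect ranking of patients by risk.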

To provide a more complete evaluation of diagnostic performance and model stability, we report in Table 6 the full set of standard classification metrics (accuracy, precision, recall (sensitivity), specificity, F1-score, and AUC), together with standard deviations computed over five independent runs for each dataset. This consolidated view complements the dataset-specific tables presented earlier and illustrates the robustness of AVLT across multiple domains.
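The threshold-based metrics listed above follow directly from the confusion-matrix counts, and the reported spread is a standard deviation over repeated runs. The sketch below shows both steps for the binary case. The function names, the toy counts, and the toy per-run accuracies are illustrative assumptions, and the use of the sample (rather than population) standard deviation is also an assumption, since the text does not say which was used.

```python
from statistics import mean, stdev


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Accuracy, precision, recall, specificity, and F1 from confusion counts."""
    def safe_div(a: float, b: float) -> float:
        return a / b if b else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)          # sensitivity
    specificity = safe_div(tn, tn + fp)
    accuracy = safe_div(tp + tn, tp + fp + tn + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1}


def summarize_runs(values: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of a metric over independent runs."""
    return mean(values), stdev(values)


if __name__ == "__main__":
    print(binary_metrics(tp=40, fp=10, tn=45, fn=5))           # toy counts
    print(summarize_runs([0.90, 0.92, 0.91, 0.93, 0.89]))      # toy accuracies
```

AUC is omitted from the sketch because it depends on the ranking of predicted scores rather than on a single confusion matrix.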

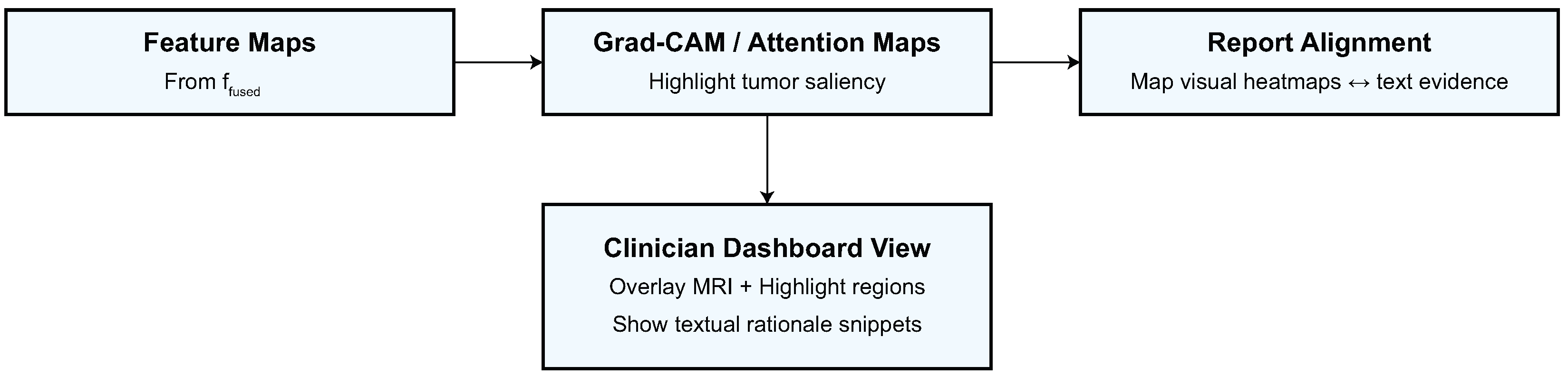

Figure 6 illustrates the class-wise behavior of the proposed AVLT model and shows that its predictions are robust and balanced across all analyzed datasets. On the BraTS overall survival (OS) dataset, the model separates the short-, mid-, and long-term survival groups, showing its sensitivity to subtle prognostic differences. On the TCGA-GBM/LGG dataset, AVLT also distinguishes GBM from LGG, which shows that it captures multimodal patterns relevant to tumor grading. The REMBRANDT and GLASS datasets are more challenging because of their heterogeneous imaging and smaller cohorts; nevertheless, the confusion matrices show strong diagonal dominance and only a few misclassifications between the two classes. These findings demonstrate AVLT’s class-specific detection capability and its dependability in identifying tumor categories and predicting outcomes across clinical datasets.

3.4. Ablation Studies

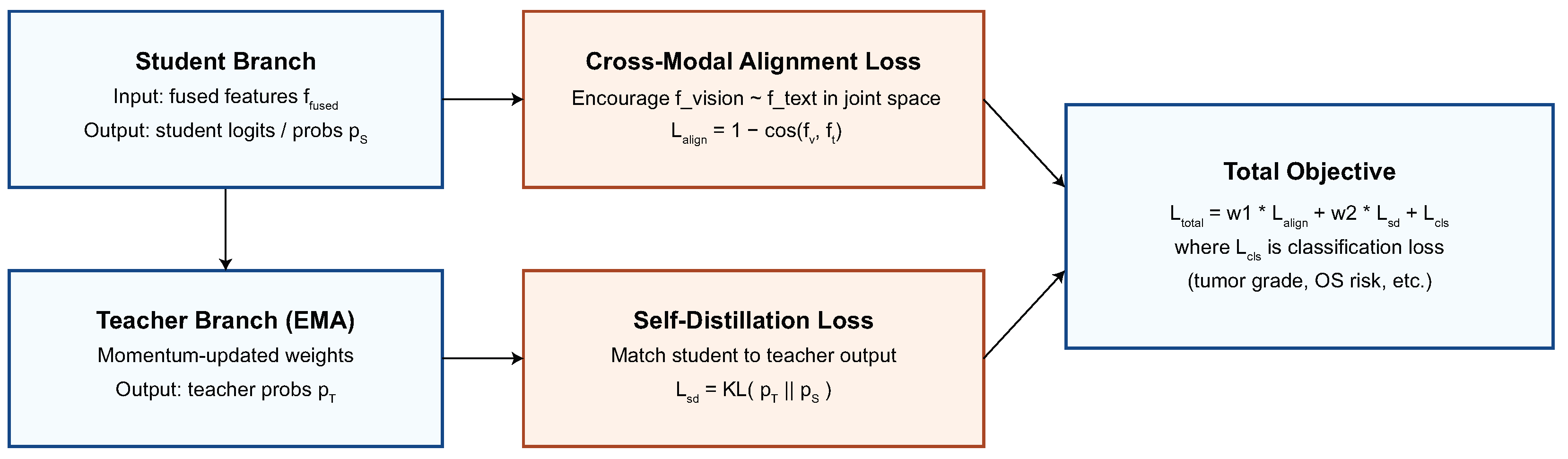

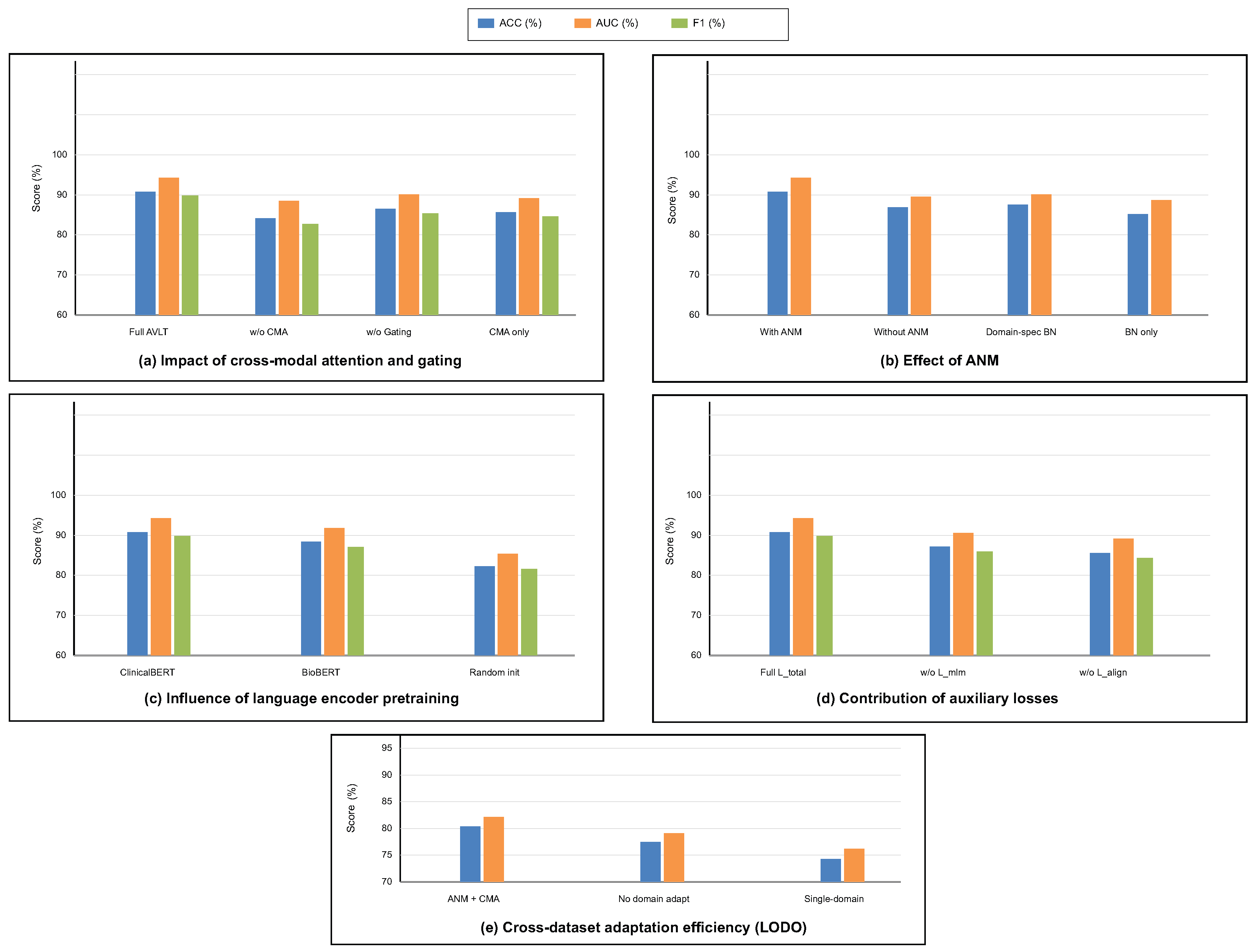

To investigate the contribution of each architectural component and training objective, a series of ablation experiments was conducted on the integrated multimodal framework. These experiments examine how removing or modifying individual modules affects model performance and generalization. To ensure statistical reliability, each ablation was conducted under uniform training settings with five independent runs. We examine the cross-modal attention and gating mechanism, the ANM, language encoder pretraining, the auxiliary loss functions, and the strategy for adapting to different datasets. These studies clarify the relative importance of each design element and validate the synergistic effect of the proposed components in robust multimodal learning. The variants “AVLT no Language Branch” and “AVLT no Text Modality” serve as reasonable proxies for settings in which clinical narratives are unavailable, providing an empirical estimate of robustness when text is missing.

To offer more context for the ablation studies, we further clarify what each component contributes. The CMA module encourages fine-grained interaction between MRI patches and text tokens, and removing it weakens the semantic alignment between modalities. The gating parameter dynamically adapts the importance of each modality per patient; fixing it would restrict the model to a constant fusion policy that cannot adapt to heterogeneous tumor presentations. The ANM module is critical for reducing variation in the data across scanners and institutions, which explains the significant performance loss associated with its removal. Finally, the auxiliary objectives (masked language modeling and alignment regularization) contribute to semantically coherent representations and also help to stabilize optimization. The ablation studies reported in Table 7 and Table 8 show that each component contributes to multimodal diagnostic performance in a different way.

Table 8 analyzes the influence of the cross-modal attention and gating mechanisms on the model’s predictive performance. The full AVLT achieves the best accuracy and AUC, which shows that dynamic feature interaction between the visual and textual modalities is beneficial. Removing the CMA block lowers performance, which shows how important cross-modal correlation learning is for combining MRI and clinical text data. Fixing the gating parameter to a constant value causes a small drop in accuracy, which shows that adaptive weighting of the modality inputs makes the representation more flexible. CMA alone, without the ANM, also performs poorly, which shows that strong multimodal fusion needs both inter-modality attention and domain-adaptive normalization. These results demonstrate that the CMA and gating mechanisms must work together for the proposed design to be effective.

Table 9 shows how the ANM affects generalization across datasets and performance stability. The full model with ANM outperforms every other normalization method in accuracy and AUC. Removing the ANM lowers both the accuracy and the C-index, which indicates that fixed normalization layers cannot handle shifts in the feature distribution between datasets. Relative to the vanilla setting, domain-specific batch normalization improves results slightly, but it remains 4.6% below the adaptive formulation. The baseline that uses conventional BatchNorm performs poorly, which shows that dynamic normalization statistics are needed for domain adaptation. ANM thus makes multimodal feature calibration more robust to scanner variability and acquisition heterogeneity across datasets.

Table 10 illustrates the influence of the language encoder initialization on model performance. Initializing from ClinicalBERT gives the best accuracy, F1-score, and AUC. Pretraining on domain-specific clinical narratives improves multimodal reasoning by supplying relevant context. When the encoder is instead initialized from BioBERT, which is trained on the biomedical literature rather than clinical language, accuracy and AUC both drop, indicating that the encoder is less aligned with the semantics of diagnostic language and reports. The randomly initialized model performs worst in both classification and discrimination metrics, which shows that pretraining is necessary for acquiring domain-specific linguistic structure. These results demonstrate that clinically pretrained embeddings enhance semantic coherence, vision–language fusion accuracy, and interpretability.

Table 11 shows how each auxiliary loss influences model optimization and multimodal alignment. The full training setting with the complete combined loss gives the best accuracy and AUC, which shows that the combined formulation balances visual and textual contributions well. Removing the masked language modeling loss lowers both accuracy and AUC, showing that it helps preserve textual semantics and prevents language drift during joint training. Removing the contrastive alignment loss also lowers accuracy and AUC, which shows how important cross-modal consistency supervision is for coherent feature fusion. These results demonstrate that the auxiliary losses improve both intra-modality representation quality and inter-modality correspondence, thereby improving classification and calibration performance.

Table 12 examines how domain adaptation methods affect cross-dataset generalization in the LODO setting. The proposed setup with ANM and cross-modal attention generalizes best, with the highest accuracy and AUC. This demonstrates that modality-specific statistics and attention-based feature recalibration help the model transfer knowledge across domains. When domain adaptation is turned off, accuracy and AUC drop sharply, which shows that multimodal networks are sensitive to distribution shift. Training on a single domain, using only the BraTS dataset, yields the weakest results, indicating inadequate generalization to the other datasets. These results show that cross-dataset adaptation through ANM and CMA integration is necessary for stable, domain-invariant multimodal representation learning.

The ablation analysis in Figure 7 shows how much each module in the proposed AVLT framework contributes to the overall result. It shows that both cross-modal attention and adaptive gating greatly improve accuracy, AUC, and F1-score.

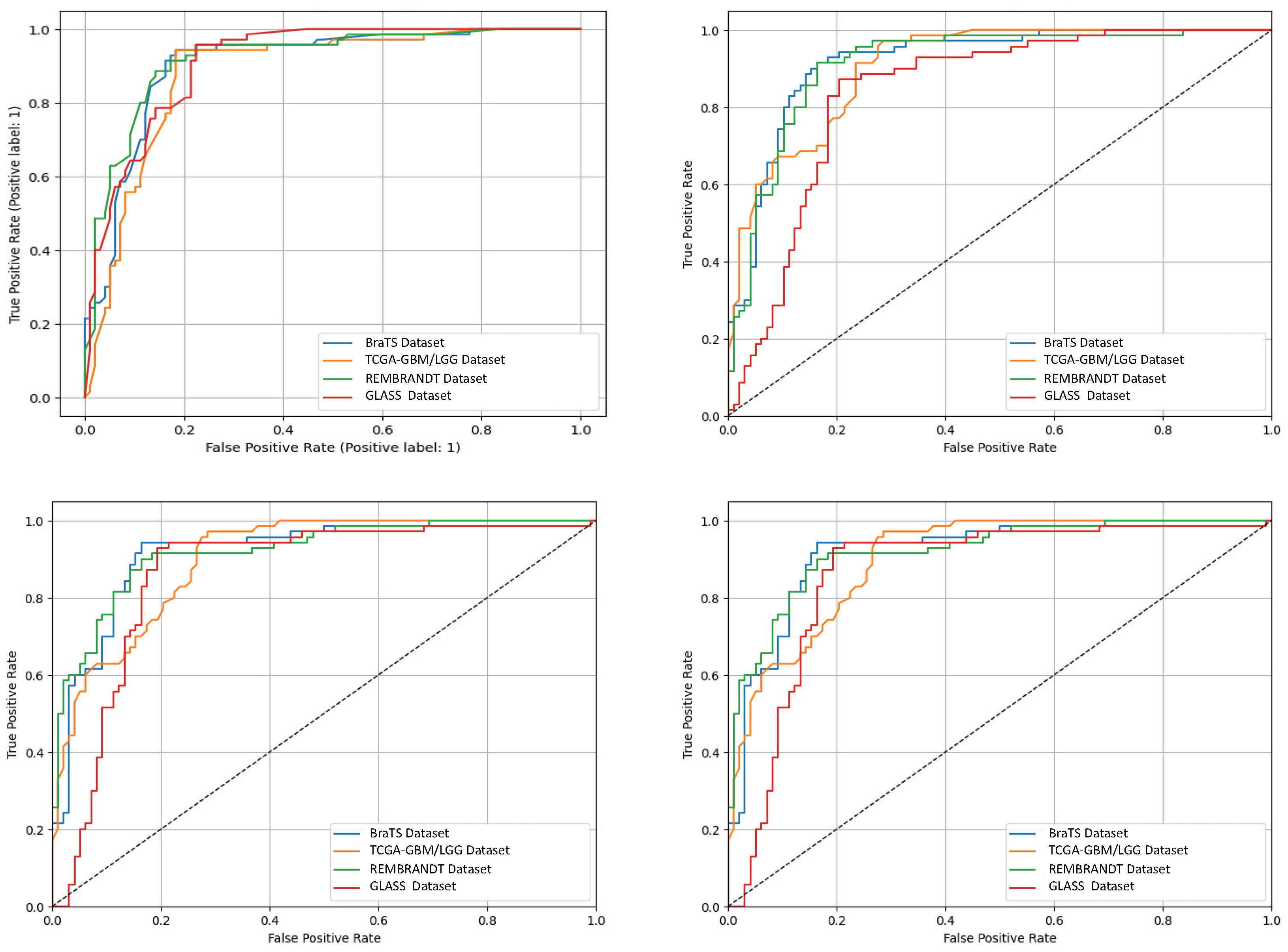

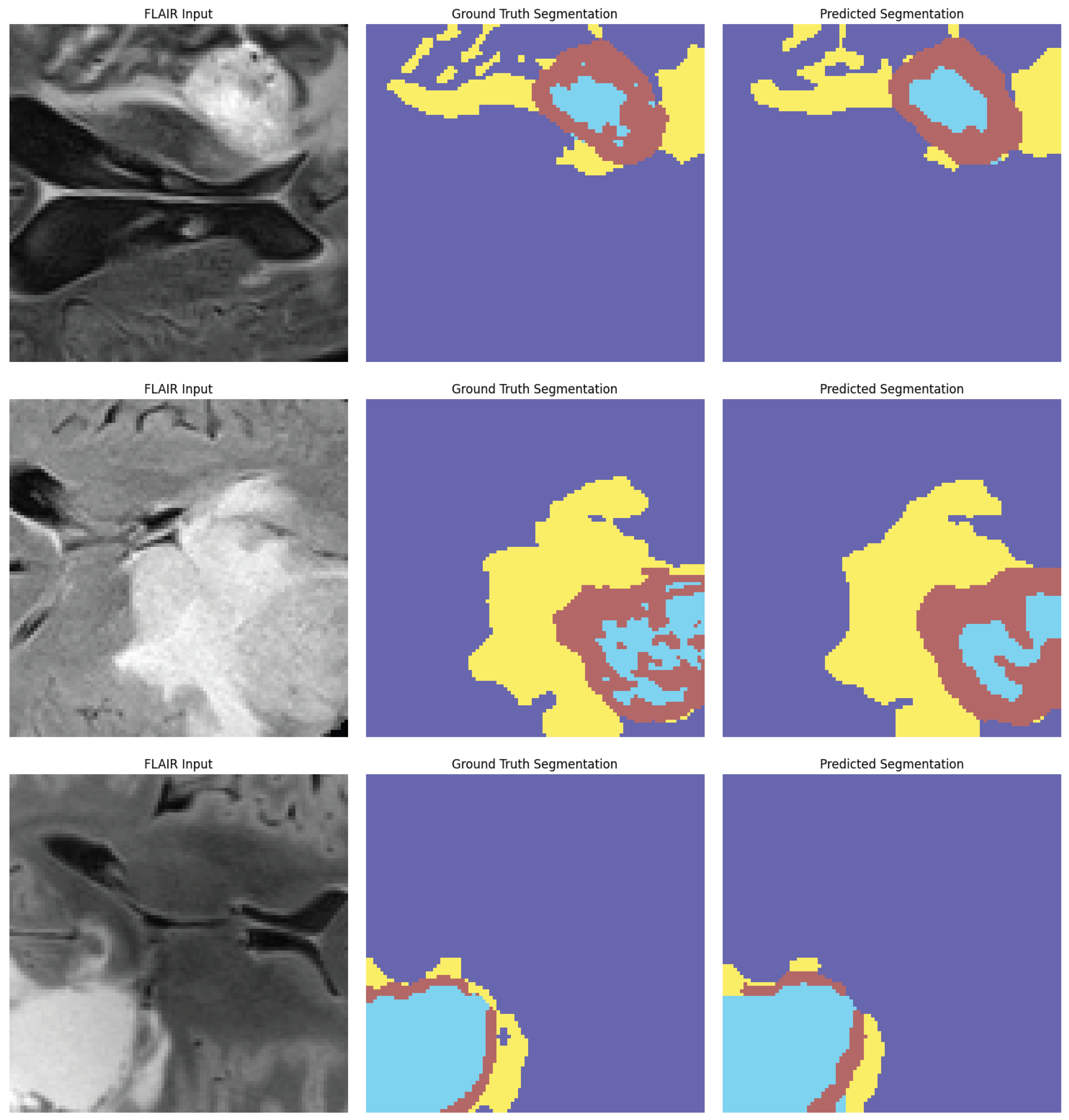

Figure 8 shows receiver-operating characteristic (ROC) curves from four independent experimental runs on different CNS tumor datasets. These curves show that the model’s performance is stable and that it can consistently and accurately tell the difference between diagnostic classes. Qualitative visualizations in

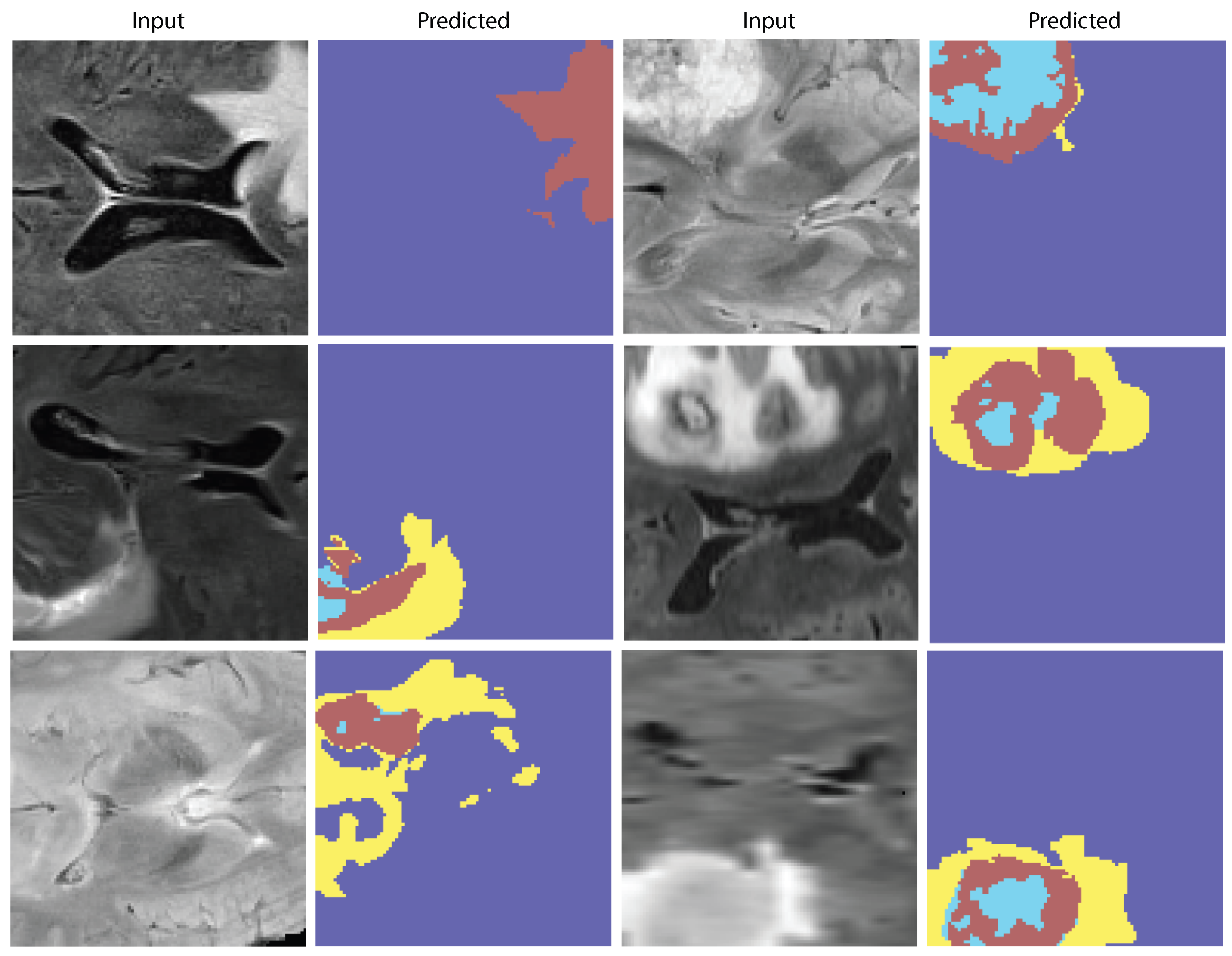

Figure 9 demonstrate the model’s capacity to distinguish tumor locations and identify clinically significant spatial patterns, hence enhancing its interpretability. Furthermore, the cross-dataset transfer results presented in

Figure 10 validate the robust generalization capability of AVLT in the face of domain alterations, underscoring its resilience when utilized with novel data distributions and multi-institutional cohorts.