Enteroscopy versus Video Capsule Endoscopy for Automatic Diagnosis of Small Bowel Disorders—A Comparative Analysis of Artificial Intelligence Applications

Abstract

1. Introduction

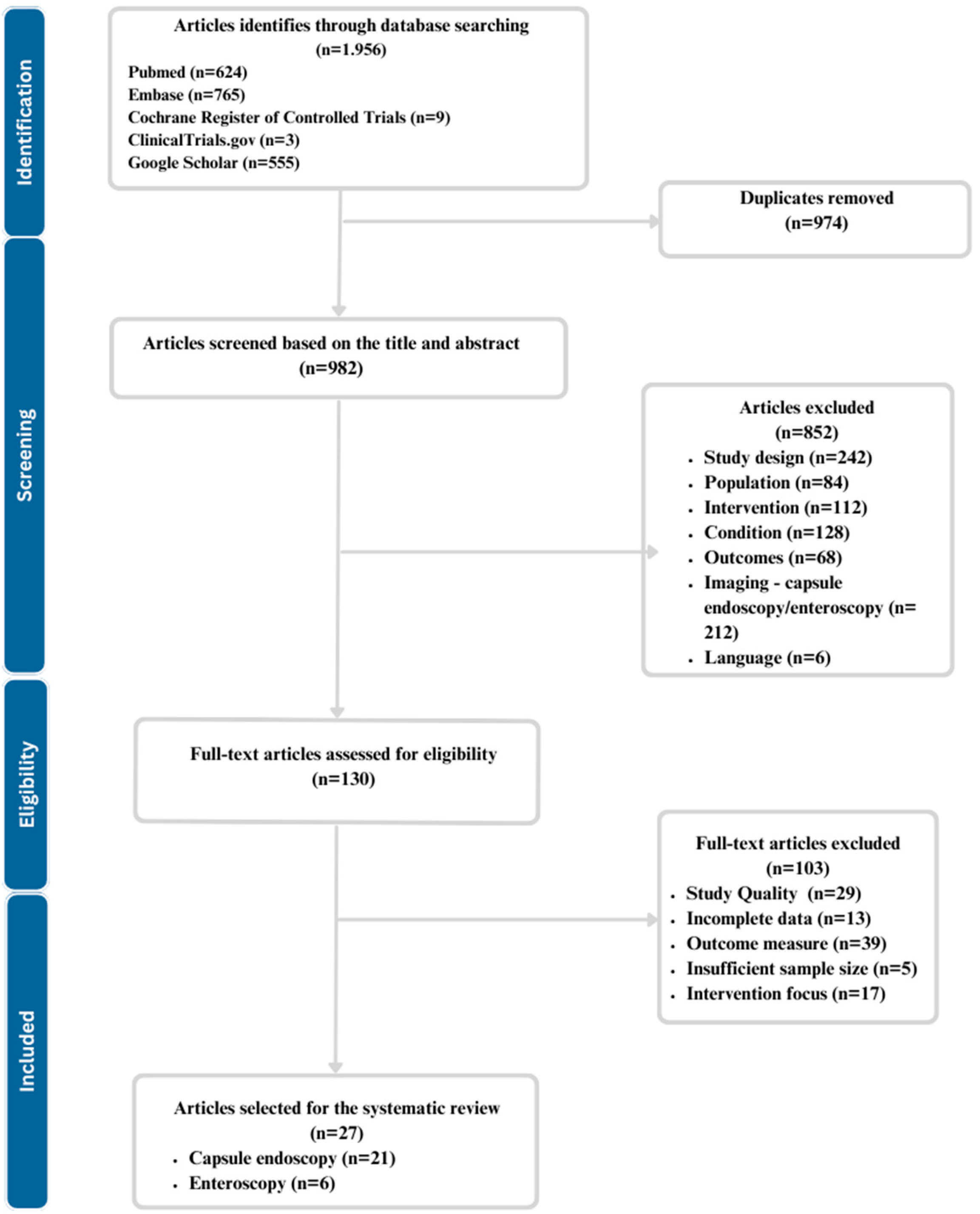

2. Materials and Methods

- Eligibility criteria

- Population: Studies involving human subjects of all ages with diagnosed or suspected small bowel disorders were included, whereas animal and in vitro studies were excluded.

- Intervention: Studies that used artificial intelligence (AI), machine learning, deep learning, convolutional neural networks, or computer-aided diagnosis to process capsule endoscopy and/or enteroscopy images for diagnosis were included. Studies that used capsule endoscopy or enteroscopy without any form of AI for diagnosis, or that addressed other gastrointestinal diseases without focusing on small bowel disorders, were excluded.

- Comparator: The presence of a control group was not mandatory for the screening and selection process. If a control group was present, it had to be diagnosed through standard diagnostic methods without the use of AI.

- Outcome Measures: Studies had to report diagnostic metrics such as sensitivity, specificity, predictive values, or other performance metrics of AI-based techniques, rather than patient satisfaction or cost-effectiveness, which offer no objective measure of diagnostic performance.

- Initial Screening: Titles and abstracts were screened for relevance by two independent reviewers. The databases were screened one at a time. All relevant articles were added to a Mendeley folder dedicated to title and abstract screening. Duplicates were then removed using Mendeley's built-in function.

- Full-Text Review for Eligibility: For studies that passed the initial screening, full-text articles were retrieved and assessed against the predefined inclusion and exclusion criteria. Studies were included if they applied AI to either enteroscopy or capsule endoscopy for diagnosing small bowel disorders and provided data on diagnostic accuracy. Case reports, conference abstracts, non-English articles, studies lacking relevant data, and studies meeting any other exclusion criterion listed under the eligibility criteria were excluded.

- Data Extraction: Data were extracted by two independent reviewers using a standardized form. The extracted information included study design, sample size, type of AI algorithm, small bowel disorder investigated, diagnostic modality (enteroscopy or capsule endoscopy), diagnostic performance metrics (such as sensitivity, specificity, and accuracy), and any usability measures (e.g., time efficiency). Discrepancies in data extraction were resolved through discussion and consensus.
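The outcome measures named above (sensitivity, specificity, predictive values, accuracy) all derive from the same four per-image counts against the reference standard. As a minimal sketch, assuming a simple binary lesion/normal classification, they could be computed as follows. The `ConfusionCounts` container, the `diagnostic_metrics` function, and the example counts are hypothetical and are not taken from any included study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Per-image counts against the reference standard (hypothetical container)."""
    tp: int  # lesion images the AI flagged as lesions
    fp: int  # normal images the AI flagged as lesions
    tn: int  # normal images the AI classified as normal
    fn: int  # lesion images the AI classified as normal


def diagnostic_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Return standard diagnostic performance metrics as fractions (0 to 1).

    Raises ZeroDivisionError if a denominator is zero, for example when
    the data set contains no lesion images.
    """
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "sensitivity": c.tp / (c.tp + c.fn),  # share of lesion images detected
        "specificity": c.tn / (c.tn + c.fp),  # share of normal images cleared
        "ppv": c.tp / (c.tp + c.fp),          # positive predictive value
        "npv": c.tn / (c.tn + c.fn),          # negative predictive value
        "accuracy": (c.tp + c.tn) / total,    # share of all images correct
    }


# Illustrative counts only; they do not come from any study in this review.
example = diagnostic_metrics(ConfusionCounts(tp=90, fp=5, tn=95, fn=10))
```

Unlike sensitivity and specificity, the predictive values depend on the proportion of lesion images in the data set, which is worth keeping in mind when comparing studies with very different lesion-to-normal ratios.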

3. Results

3.1. Videocapsule Endoscopy

3.2. Enteroscopy

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lebwohl, B.; Rubio-Tapia, A. Epidemiology, Presentation, and Diagnosis of Celiac Disease. Gastroenterology 2021, 160, 63–75.

- Colombel, J.F.; Shin, A.; Gibson, P.R. AGA Clinical Practice Update on Functional Gastrointestinal Symptoms in Patients with Inflammatory Bowel Disease: Expert Review. Clin. Gastroenterol. Hepatol. 2019, 17, 380–390.e1.

- Camilleri, M. Diagnosis and Treatment of Irritable Bowel Syndrome: A Review. JAMA 2021, 325, 865–877, Erratum in: JAMA 2021, 325, 1568.

- Riff, B.P.; DiMaio, C.J. Exploring the Small Bowel: Update on Deep Enteroscopy. Curr. Gastroenterol. Rep. 2016, 18, 28.

- Sakurai, T.; Fujimori, S.; Hayashida, M.; Hanada, R.; Akiyama, J.; Sakamoto, C. Repeatability of small bowel transit time in capsule endoscopy in healthy subjects. Biomed. Mater. Eng. 2018, 29, 839–848.

- Assumpção, P.; Khayat, A.; Araújo, T.; Barra, W.; Ishak, G.; Cruz, A.; Santos, S.; Santos, Â.; Demachki, S.; Assumpção, P.; et al. The Small Bowel Cancer Incidence Enigma. Pathol. Oncol. Res. 2020, 26, 635–639.

- Benson, A.B.; Venook, A.P.; Al-Hawary, M.M.; Arain, M.A.; Chen, Y.J.; Ciombor, K.K.; Cohen, S.A.; Cooper, H.S.; Deming, D.A.; Garrido-Laguna, I.; et al. Small Bowel Adenocarcinoma, Version 1.2020, NCCN Clinical Practice Guidelines in Oncology. J. Natl. Compr. Cancer Netw. 2019, 17, 1109–1133.

- Kim, J.S.; Park, S.H.; Hansel, S.; Fletcher, J.G. Imaging and Screening of Cancer of the Small Bowel. Radiol. Clin. N. Am. 2017, 55, 1273–1291.

- Iddan, G.; Meron, G.; Glukhovsky, A.; Swain, P. Wireless capsule endoscopy. Nature 2000, 405, 417–418.

- Marlicz, W.; Koulaouzidis, G.; Koulaouzidis, A. Artificial Intelligence in Gastroenterology-Walking into the Room of Little Miracles. J. Clin. Med. 2020, 9, 3675.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097.

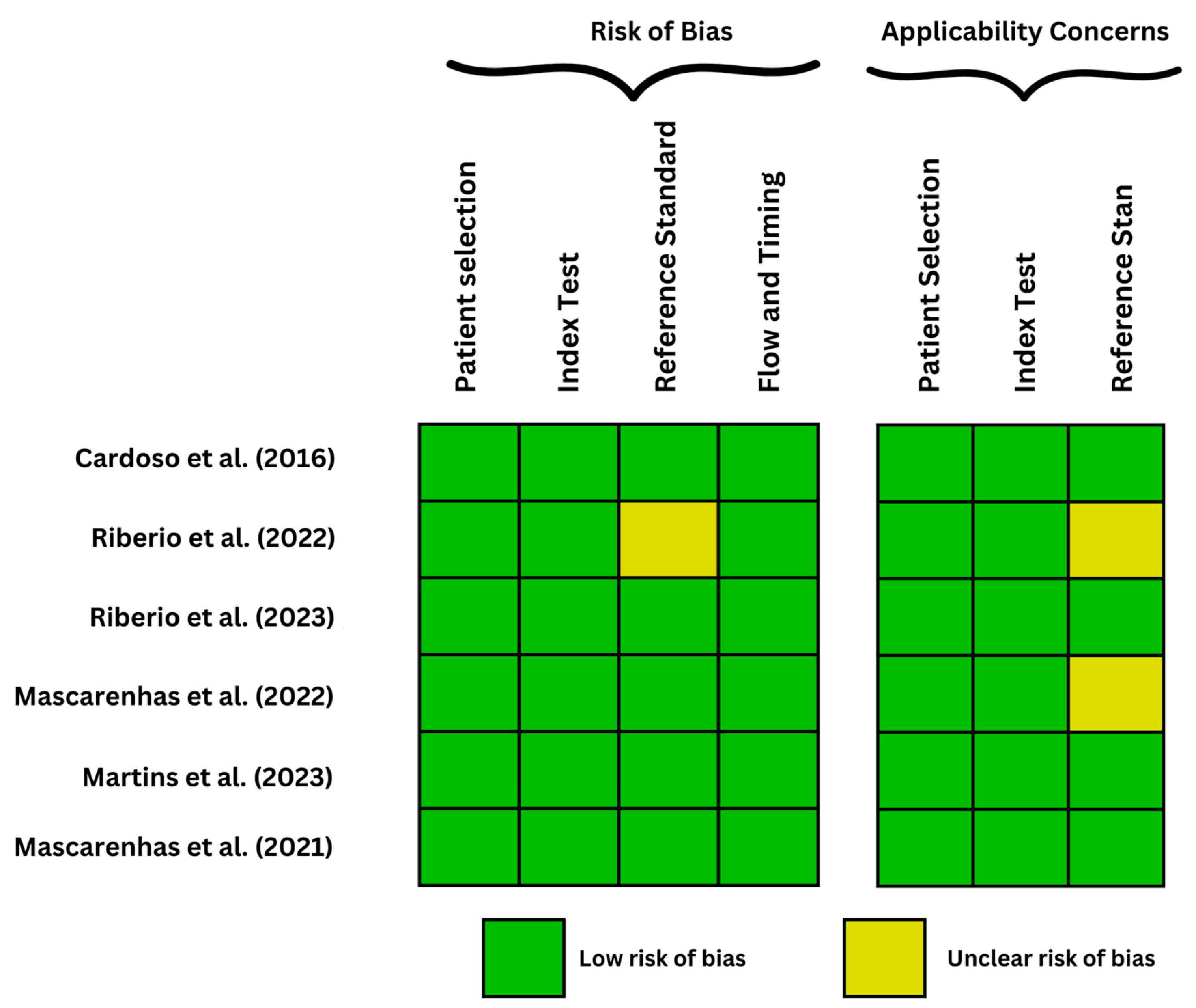

- Martins, M.; Mascarenhas, M.; Afonso, J.; Ribeiro, T.; Cardoso, P.; Mendes, F.; Cardoso, H.; Andrade, P.; Ferreira, J.; Macedo, G. Deep-Learning and Device-Assisted Enteroscopy: Automatic Panendoscopic Detection of Ulcers and Erosions. Medicina 2023, 59, 172.

- Cardoso, H.; Rodrigues, J.; Marques, M.; Ribeiro, A.; Vilas-Boas, F.; Santos-Antunes, J.; Rodrigues-Pinto, E.; Silva, M.; Maia, J.C.; Macedo, G. Malignant Small Bowel Tumors: Diagnosis, Management and Prognosis. Acta Med. Port. 2015, 28, 448–456.

- Ribeiro, T.; Saraiva, M.M.; Afonso, J.; Cardoso, P.; Ferreira, J.; Andrade, P.; Cardoso, H.; Macedo, G. Performance of an artificial intelligence algorithm for the detection of gastrointestinal angioectasia in device-assisted enteroscopy: A pilot study. Endoscopy 2022, 54, S183–S184.

- Ribeiro, T.; Mascarenhas, M.; João, A.; Pedro, C.; Hélder, C.; João, F.; Patrícia, A.; Guilherme, M. Development of a combined deep learning model for automatic detection of multiple gastrointestinal lesions in device-assisted enteroscopy using convolutional neural networks. Endoscopy 2023, 55, S148.

- Mascarenhas, M.; Afonso, J.; Ribeiro, T.; Cardoso, P.; Cardoso, H.; Ferreira, J.; Andrade, A.; Macedo, G. S599 Deep Learning and Device-Assisted Enteroscopy: Automatic Detection of Pleomorphic Gastrointestinal Lesions in Device-Assisted Enteroscopy. Am. J. Gastroenterol. 2022, 117, e421.

- Mascarenhas Saraiva, M.; Ribeiro, T.; Afonso, J.; Andrade, P.; Cardoso, P.; Ferreira, J.; Cardoso, H.; Macedo, G. Deep Learning and Device-Assisted Enteroscopy: Automatic Detection of Gastrointestinal Angioectasia. Medicina 2021, 57, 1378.

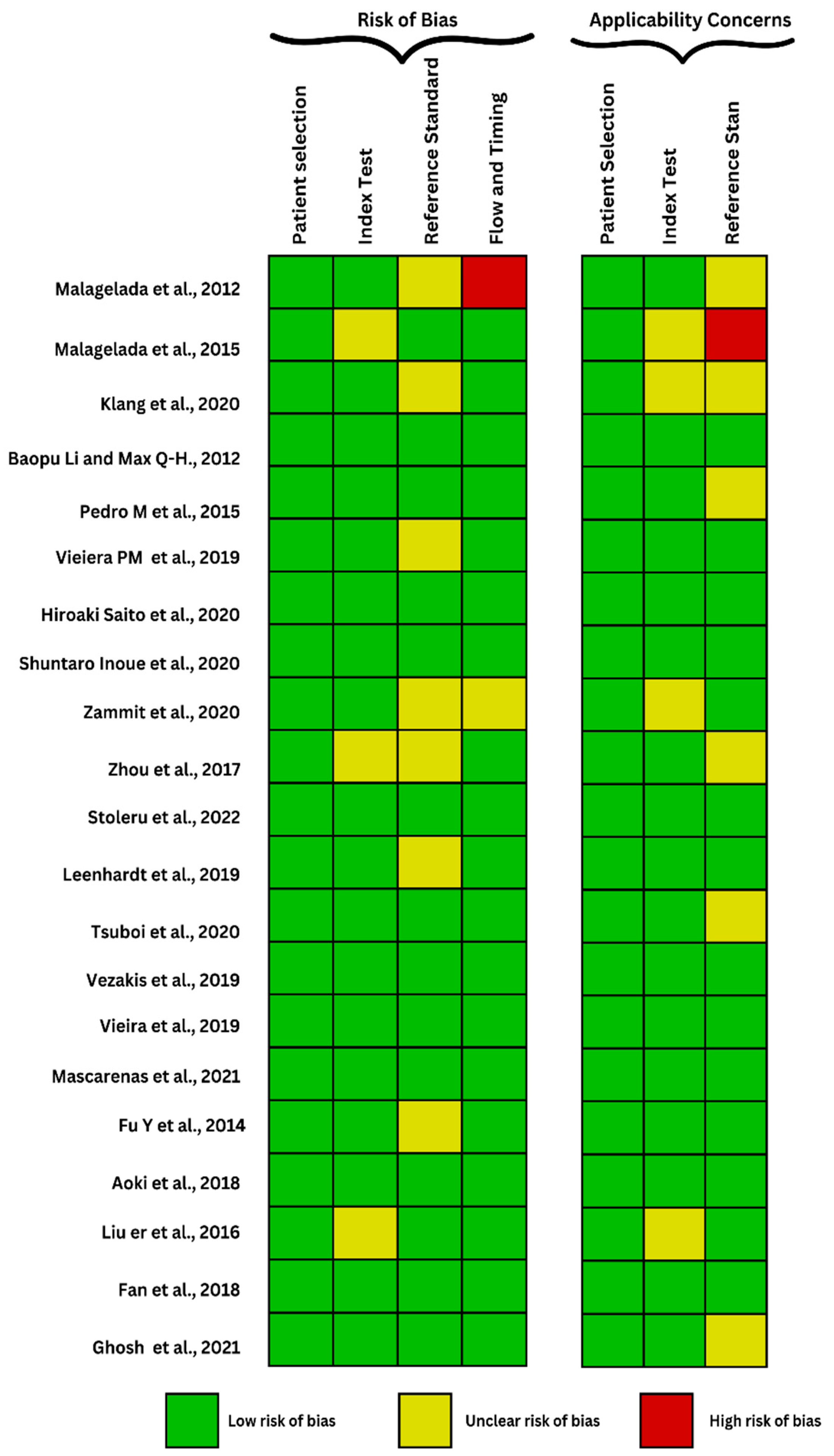

- Malagelada, C.; Drozdzal, M.; Seguí, S.; Mendez, S.; Vitrià, J.; Radeva, P.; Santos, J.; Accarino, A.; Malagelada, J.-R.; Azpiroz, F. Classification of functional bowel disorders by objective physiological criteria based on endoluminal image analysis. Am. J. Physiol. Gastrointest. Liver Physiol. 2015, 309, 413–419.

- Malagelada, C.; De Lorio, F.; Seguí, S.; Mendez, S.; Drozdzal, M.; Vitria, J.; Radeva, P.; Santos, J.; Accarino, A.; Malagelada, J.R.; et al. Functional gut disorders or disordered gut function? Small bowel dysmotility evidenced by an original technique. Neurogastroenterol. Motil. 2012, 24, 223-e105.

- Klang, E.; Barash, Y.; Margalit, R.Y.; Soffer, S.; Shimon, O.; Albshesh, A.; Ben-Horin, S.; Amitai, M.M.; Eliakim, R.; Kopylov, U. Deep learning algorithms for automated detection of Crohn’s disease ulcers by video capsule endoscopy. Gastrointest. Endosc. 2020, 91, 606–613.e2.

- Li, B.; Meng, M.Q.H. Tumor recognition in wireless capsule endoscopy images using textural features and SVM-based feature selection. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 323–329.

- Vieira, P.M.; Ramos, J.; Lima, C.S. Automatic detection of small bowel tumors in endoscopic capsule images by ROI selection based on discarded lightness information. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2015, 2015, 3025–3028.

- Vieira, P.M.; Freitas, N.R.; Valente, J.; Vaz, I.F.; Rolanda, C.; Lima, C.S. Automatic detection of small bowel tumors in wireless capsule endoscopy images using ensemble learning. Med. Phys. 2020, 47, 52–63.

- Saito, H.; Aoki, T.; Aoyama, K.; Kato, Y.; Tsuboi, A.; Yamada, A.; Fujishiro, M.; Oka, S.; Ishihara, S.; Matsuda, T.; et al. Automatic detection and classification of protruding lesions in wireless capsule endoscopy images based on a deep convolutional neural network. Gastrointest. Endosc. 2020, 92, 144–151.e1.

- Inoue, S.; Shichijo, S.; Aoyama, K.; Kono, M.; Fukuda, H.; Shimamoto, Y.; Nakagawa, K.; Ohmori, M.; Iwagami, H.; Matsuno, K.; et al. Application of Convolutional Neural Networks for Detection of Superficial Nonampullary Duodenal Epithelial Tumors in Esophagogastroduodenoscopic Images. Clin. Transl. Gastroenterol. 2020, 11, e00154.

- Chetcuti Zammit, S.; Bull, L.A.; Sanders, D.S.; Galvin, J.; Dervilis, N.; Sidhu, R.; Worden, K. Towards the Probabilistic Analysis of Small Bowel Capsule Endoscopy Features to Predict Severity of Duodenal Histology in Patients with Villous Atrophy. J. Med. Syst. 2020, 44, 195.

- Zhou, T.; Han, G.; Li, B.N.; Lin, Z.; Ciaccio, E.J.; Green, P.H.; Qin, J. Quantitative analysis of patients with celiac disease by video capsule endoscopy: A deep learning method. Comput. Biol. Med. 2017, 85, 1–6.

- Stoleru, C.A.; Dulf, E.H.; Ciobanu, L. Automated detection of celiac disease using Machine Learning Algorithms. Sci. Rep. 2022, 12, 4071.

- Leenhardt, R.; Vasseur, P.; Li, C.; Saurin, J.C.; Rahmi, G.; Cholet, F.; Becq, A.; Marteau, P.; Histace, A.; Dray, X.; et al. A neural network algorithm for detection of GI angiectasia during small-bowel capsule endoscopy. Gastrointest. Endosc. 2019, 89, 189–194.

- Tsuboi, A.; Oka, S.; Aoyama, K.; Saito, H.; Aoki, T.; Yamada, A.; Matsuda, T.; Fujishiro, M.; Ishihara, S.; Nakahori, M.; et al. Artificial intelligence using a convolutional neural network for automatic detection of small-bowel angioectasia in capsule endoscopy images. Dig. Endosc. 2020, 32, 382–390.

- Vezakis, I.A.; Toumpaniaris, P.; Polydorou, A.A.; Koutsouris, D. A Novel Real-time Automatic Angioectasia Detection Method in Wireless Capsule Endoscopy Video Feed. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2019, 2019, 4072–4075.

- Vieira, P.M.; Silva, C.P.; Costa, D.; Vaz, I.F.; Rolanda, C.; Lima, C.S. Automatic Segmentation and Detection of Small Bowel Angioectasias in WCE Images. Ann. Biomed. Eng. 2019, 47, 1446–1462.

- Saraiva, M.J.M.; Afonso, J.; Ribeiro, T.; Ferreira, J.; Cardoso, H.; Andrade, A.P.; Parente, M.; Natal, R.; Mascarenhas Saraiva, M.; Macedo, G. Deep learning and capsule endoscopy: Automatic identification and differentiation of small bowel lesions with distinct haemorrhagic potential using a convolutional neural network. BMJ Open Gastroenterol. 2021, 8, e000753.

- Fu, Y.; Zhang, W.; Mandal, M.; Meng, M.Q.H. Computer-aided bleeding detection in WCE video. IEEE J. Biomed. Health Inform. 2014, 18, 636–642.

- Aoki, T.; Yamada, A.; Aoyama, K.; Saito, H.; Tsuboi, A.; Nakada, A.; Niikura, R.; Fujishiro, M.; Oka, S.; Ishihara, S.; et al. Automatic detection of erosions and ulcerations in wireless capsule endoscopy images based on a deep convolutional neural network. Gastrointest. Endosc. 2019, 89, 357–363.e2.

- Liu, D.Y.; Gan, T.; Rao, N.N.; Xing, Y.W.; Zheng, J.; Li, S.; Luo, C.S.; Zhou, Z.J.; Wan, Y.L. Identification of lesion images from gastrointestinal endoscope based on feature extraction of combinational methods with and without learning process. Med. Image Anal. 2016, 32, 281–294.

- Fan, S.; Xu, L.; Fan, Y.; Wei, K.; Li, L. Computer-aided detection of small intestinal ulcer and erosion in wireless capsule endoscopy images. Phys. Med. Biol. 2018, 63, 165001.

- Ghosh, T.; Chakareski, J. Deep Transfer Learning for Automated Intestinal Bleeding Detection in Capsule Endoscopy Imaging. J. Digit. Imaging 2021, 34, 404–417.

- Rome IV Criteria—Rome Foundation. Available online: https://theromefoundation.org/rome-iv/rome-iv-criteria/ (accessed on 23 July 2023).

- Black, C.J.; Drossman, D.A.; Talley, N.J.; Ruddy, J.; Ford, A.C. Functional gastrointestinal disorders: Advances in understanding and management. Lancet 2020, 396, 1664–1674.

- Bassotti, G.; Bologna, S.; Ottaviani, L.; Russo, M.; Dore, M.P. Intestinal manometry: Who needs it? Gastroenterol. Hepatol. Bed Bench 2015, 8, 246–252.

- Ohkubo, H.; Kessoku, T.; Fuyuki, A.; Iida, H.; Inamori, M.; Fujii, T.; Kawamura, H.; Hata, Y.; Manabe, N.; Chiba, T.; et al. Assessment of small bowel motility in patients with chronic intestinal pseudo-obstruction using cine-MRI. Am. J. Gastroenterol. 2013, 108, 1130–1139.

- McAlindon, M.E.; Ching, H.L.; Yung, D.; Sidhu, R.; Koulaouzidis, A. Capsule endoscopy of the small bowel. Ann. Transl. Med. 2016, 4, 369.

- Jemal, A.; Siegel, R.; Ward, E.; Hao, Y.; Xu, J.; Murray, T.; Thun, M.J. Cancer statistics, 2008. CA Cancer J. Clin. 2008, 58, 71–96.

- Becq, A.; Rahmi, G.; Perrod, G.; Cellier, C. Hemorrhagic angiodysplasia of the digestive tract: Pathogenesis, diagnosis, and management. Gastrointest. Endosc. 2017, 86, 792–806.

- Warkentin, T.E.; Moore, J.C.; Anand, S.S.; Lonn, E.M.; Morgan, D.G. Gastrointestinal Bleeding, Angiodysplasia, Cardiovascular Disease, and Acquired von Willebrand Syndrome. Transfus. Med. Rev. 2003, 17, 272–286.

- Raju, G.S.; Gerson, L.; Das, A.; Lewis, B. American Gastroenterological Association (AGA) Institute technical review on obscure gastrointestinal bleeding. Gastroenterology 2007, 133, 1697–1717.

- Lin, S.; Rockey, D.C. Obscure gastrointestinal bleeding. Gastroenterol. Clin. N. Am. 2005, 34, 679–698.

- Kim, B.S.M.; Li, B.T.; Engel, A.; Samra, J.S.; Clarke, S.; Norton, I.D.; Li, A.E. Diagnosis of gastrointestinal bleeding: A practical guide for clinicians. World J. Gastrointest. Pathophysiol. 2014, 5, 467.

- Pennazio, M.; Rondonotti, E.; Despott, E.J.; Dray, X.; Keuchel, M.; Moreels, T.; Sanders, D.S.; Spada, C.; Carretero, C.; Cortegoso Valdivia, P.; et al. Small-bowel capsule endoscopy and device-assisted enteroscopy for diagnosis and treatment of small-bowel disorders: European Society of Gastrointestinal Endoscopy (ESGE) Guideline—Update 2022. Endoscopy 2023, 55, 58–95.

- Cardoso, P.; Saraiva, M.M.; Afonso, J.; Ribeiro, T.; Andrade, P.; Ferreira, J.; Cardoso, H.; Macedo, G. Artificial Intelligence and Device-Assisted Enteroscopy: Automatic Detection of Enteric Protruding Lesions Using a Convolutional Neural Network. Clin. Transl. Gastroenterol. 2022, 13, e00514.

- Shahidi, N.C.; Ou, G.; Svarta, S.; Law, J.K.; Kwok, R.; Tong, J.; Lam, E.C.; Enns, R. Factors associated with positive findings from capsule endoscopy in patients with obscure gastrointestinal bleeding. Clin. Gastroenterol. Hepatol. 2012, 10, 1381–1385.

- Gunjan, D.; Sharma, V.; Rana, S.S.; Bhasin, D.K. Small bowel bleeding: A comprehensive review. Gastroenterol. Rep. 2014, 2, 262.

- Iakovidis, D.K.; Koulaouzidis, A. Software for enhanced video capsule endoscopy: Challenges for essential progress. Nat. Rev. Gastroenterol. Hepatol. 2015, 12, 172–186.

- Penrice, D.D.; Rattan, P.; Simonetto, D.A. Artificial Intelligence and the Future of Gastroenterology and Hepatology. Gastro Hep Adv. 2022, 1, 581–595.

- Yoon, J. Hyperspectral Imaging for Clinical Applications. BioChip J. 2022, 16, 1–12.

- Ma, F.; Yuan, M.; Kozak, I. Multispectral imaging: Review of current applications. Surv. Ophthalmol. 2023, 68, 889–904.

- Nalepa, J. Recent Advances in Multi- and Hyperspectral Image Analysis. Sensors 2021, 21, 6002.

- Baumer, S.; Streicher, K.; Alqahtani, S.A.; Brookman-Amissah, D.; Brunner, M.; Federle, C.; Muehlenberg, K.; Pfeifer, L.; Salzberger, A.; Schorr, W.; et al. Accuracy of polyp characterization by artificial intelligence and endoscopists: A prospective, non-randomized study in a tertiary endoscopy center. Endosc. Int. Open 2023, 11, E818–E828.

| Author (Year) | Disease and Investigation | Algorithm Type | Number of Patients/Images | Main Findings |
|---|---|---|---|---|
| Malagelada et al. (2012) [19] | Functional small bowel disorders; video capsule | Iterative/automatic classifier (one-class SVM) | 80 patients with functional bowel disorders and 70 healthy subjects | 26% of patients and 1% of healthy subjects were in the abnormal zone (above the 66% cut-off); 65% of patients and 93% of healthy subjects were very likely normal (below the 33% cut-off); a relatively low proportion (9% of patients and 6% of healthy subjects) remained in the gray zone (between the 33% and 66% cut-offs). |
| Malagelada et al. (2015) [18] | Functional small bowel disorders; video capsule | Automatic classifier (one-class SVM) | 196 patients with functional bowel disorders and 48 healthy subjects | As in the training set, a significantly greater proportion of patients in the test set, 32 of 129 (25%), fell outside the normal range (p = 0.000 by chi-square). |
| Klang et al. (2020) [20] | Crohn’s disease; video capsule | Xception CNN | 7391 images with ulcers and 10,249 images with normal mucosa (6672 from patients with CD and 3577 from normal CEs), from 49 patients | Two experimental designs were used. Classifier accuracy ranged from 95.4% to 96.7% in the first design, higher than in the second, where it ranged from 73.7% to 98.2%. A complete film was analyzed in less than 3.5 min. |
| Li and Meng (2012) [21] | Malignant abnormalities; video capsule | SVM-SFFS and SVM-RFE | 600 tumor ROIs and 600 normal ROIs from 10 patients | Two SVM-based feature selection methods were used to maximize classification accuracy, one of which reached a diagnostic accuracy of 92.4%; however, sensitivity for lesion detection was low (88.6% for SVM-RFE and 83.1% for SVM-SFFS). |
| Vieira et al. (2015) [22] | Malignant abnormalities; video capsule | SVM and multilayer perceptron (MLP) | 14 patients; 700 frames labeled as tumoral and 2500 normal frames | Averaged over the 4 feature sets analyzed, the MLP, which performed better than the SVM, reached a sensitivity, specificity, and accuracy of 96.75%, 97.47%, and 97.2%, respectively, with features from the entire image. |
| Vieira et al. (2019) [23] | Malignant abnormalities; video capsule | A Gaussian mixture model was used to separate abnormal from normal tissue, together with a proposed modification of the Anderson method for convergence acceleration of the expectation–maximization algorithm | 936 frames from 29 patients with adenocarcinomas, lymphomas, carcinoid tumors, and sarcomas, and 3000 normal images | The best results were achieved with the third training scheme, where sensitivity, specificity, AUC, and accuracy were 96.1%, 98.3%, 96.5%, and 97.6%, respectively. |
| Saito et al. (2020) [24] | Malignant abnormalities; video capsule | Single Shot MultiBox Detector (deep neural network architecture), without alteration of the underlying algorithm | 30,584 images from 292 patients were used for training and validating the CNN, of which 10,000 were normal images and 7507 showed protruding lesions | In the analysis of whole capsule endoscopy films, which simulates real-life use, sensitivity was 98.6%. Lesion classification had a sensitivity of 86.5%, 92.0%, 95.8%, 77.0%, and 94.4% for polyps, nodules, epithelial tumors, SMTs, and venous structures, respectively. |
| Inoue et al. (2020) [25] | Malignant abnormalities; video capsule | Single Shot MultiBox Detector | 1546 images from 96 tumors for the training data set and 399 images from 34 SNADETs | NBI (narrow-band imaging) had a significantly higher sensitivity than WLI (white-light imaging) (98.5% vs. 92.9%) and a lower specificity (77.8% vs. 89.2%). |
| Zammit et al. (2020) [26] | Celiac disease; video capsule | Leave-one-out cross-validation model | 81 SBCE results from 72 patients | SBCE imaging results were used to differentiate celiac disease from serology-negative villous atrophy and to assess the severity of celiac disease. Using a maximum likelihood approach as the predictive method, validated with leave-one-out cross-validation (LOOCV), the team achieved an accuracy of 69.1% in differentiating between the two diseases and assessing the severity of celiac disease. Accuracy increased to 75.3% after including the estimate of the distribution for the two diseases. |
| Zhou et al. (2017) [27] | Celiac disease; video capsule | DCNN | 11 patients with confirmed celiac disease and 10 control patients | SBCE results were used to train a DCNN, which diagnosed celiac disease with 100% sensitivity and specificity. Zhou et al. [27] also introduced a new term based on their results, evaluation confidence, which can raise suspicion of celiac disease when its value is above 50% and can be used to predict disease severity, with high evaluation confidence values corresponding to disease severity. |
| Stoleru et al. (2022) [28] | Celiac disease; video capsule | SVM | 109 SBCE results from 65 celiac disease patients and 45 controls | Part of the SBCE examination results was used to train a machine learning algorithm, which was then applied to the rest of the available imaging data. The researchers compared the diagnostic results of 3 different ML algorithms, of which the linear support vector machine (SVM) performed best, with 96% sensitivity and 94% precision. |
| Leenhardt et al. (2019) [29] | Angioectasia; video capsule | CNN | 208 patients (126 men, 82 women); 6360 still frames extracted from 1341 SB-CE videos | On the examined dataset, sensitivity was 100% and specificity 96%; the authors concluded that the algorithm had outstanding diagnostic accuracy for GIA detection. |
| Tsuboi et al. (2020) [30] | Angioectasia; video capsule | CNN with Single Shot MultiBox Detector | 169 patients with confirmed small bowel angioectasia and 20 healthy patients | The trained CNN required 323 s to evaluate the images, at an average speed of 32.5 images per second. The AUC of the CNN for detecting angioectasia was 0.998. The correct distinction rate was 83.3% (15/18) for Type 1a and 97.9% (465/475) for Type 1b, showing that the algorithm not only detected angioectasia but also classified each lesion by type. |
| Vezakis et al. (2019) [31] | Angioectasia; video capsule | CNN | 725 images from ImageNet, of which 350 depicted normal mucosa, 196 bubbles, 75 blood vessels, and 104 angioectasia. The validation dataset consisted of 3 full-length WCE videos from patients diagnosed with multiple small bowel angioectasias by a medical professional. | Possible ROIs are suggested in the initial stage. In the second stage, a trained CNN automatically evaluates the ROIs. The sensitivity and specificity of this approach were 92.7% and 99.5%, respectively. During manual inspection of the videos, 55 angioectasias were detected in 436 frames. Of these 55 lesions, 51 were detected by the algorithm. |
| Vieira et al. (2019) [32] | Angioectasia; video capsule | Multiple color spaces; Maximum a Posteriori; multilayer perceptron neural network and SVM | 798 images (248 images with angioectasias and 550 normal images) | The program used a pre-processing method to identify ROIs that were then investigated further. Using MRFs to model pixel neighborhoods improved lesion segmentation, particularly with the addition of the proposed weighted-boundary function. An MLP classifier produced the most accurate results (96.60% sensitivity and 94.08% specificity, for an overall accuracy of 95.58%). |
| Mascarenhas et al. (2021) [33] | Bleeding lesions, vascular lesions, ulcers, erosions; video capsule | CNN (Xception model) | 4319 patients; 5739 exams, from which 53,555 CE images were obtained | The method achieved very high specificity in correctly identifying the mucosal lesions present in the images. The algorithm detected lymphangiectasias with a sensitivity of 88% and a specificity of over 99%, and xanthomas with a sensitivity of 85% and a specificity of >99%. Mucosal erosions were detected with a sensitivity of 73% and a specificity of 99%. Mucosal ulcers were identified with a sensitivity of 81% for P1 lesions and 94% for P2 lesions. Vascular lesions with high bleeding potential were identified with a sensitivity and specificity of 91% and 99%. Mucosal red spots were detected with a sensitivity of 79% and a specificity of 99%. |
| Fu et al. (2014) [34] | Bleeding lesions of the small bowel mucosa; video capsule | SVM | 20 videos consisting of 1000 bleeding frames and 4000 non-bleeding frames | The method detected bleeding pixels better than previously used methods. The algorithm groups similar pixels together, based on color and location, to reduce computational time. |
| Aoki et al. (2018) [35] | Erosions and ulcerations of the small bowel mucosa; video capsule | CNN Single Shot Detector | Validation set: 65 patients; 10,440 independent images (440 with erosions and ulcerations and 10,000 showing normal small bowel mucosa). Test set: 115 patients, from which 5360 images were obtained (all images contained erosions and ulcerations) | The method focused on detecting both erosions and ulcerations in each set of CE images. The CNN performed well in detecting both types of lesions, with an AUC of 0.958 (95% CI, 0.947–0.968). This is the first machine learning-based method aimed specifically at detecting erosions and ulcerations in video capsule images of the small bowel. |
| Liu et al. (2016) [36] | Small intestinal bleeding; video capsule | Joint diagonalisation principal component analysis | 530 images from 30 patients | The feature extraction models achieved AUCs of 0.9776, 0.8844, and 0.9247 in detecting small intestinal bleeding. |
| Fan et al. (2018) [37] | Erosions, ulcers; video capsule | CNN | 144 patients (32 cases of erosions, 47 cases of ulcers, and 65 normal cases). Ulcer detection dataset: 8250 images (3250 with ulcers). Erosion detection dataset: 12,910 images (4910 with erosions) | The algorithm performed well in detecting erosions and ulcers of the small bowel, with an accuracy of 95.16% for ulcers and 95.34% for erosions. However, it still failed to correctly identify about 5% of the images with lesions. Ulcers were identified with a higher sensitivity because of the anatomical characteristics of the lesion: ulcers are usually block-shaped, while erosions are small and punctiform. |
| Ghosh et al. (2021) [38] | Bleeding lesions; video capsule | CNN-based deep learning framework with two CNNs: CNN-1 for classification of bleeding and non-bleeding images (AlexNet architecture) and CNN-2 for detection of bleeding segments | 2350 images (of which 450 were bleeding frames) from two clinical datasets available online | This method achieved an AUC of 0.998. In cases of active bleeding, the algorithm detects the bleeding regions with some false positives. Inactive bleeding regions are detected with higher accuracy. The aim of the study is to assist physicians in reviewing CE images, and the method enables a five-fold reduction in the time needed to assess a dataset of 50–70 thousand frames. |
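The three-zone reading reported for Malagelada et al. (2012) [19] in the table above (abnormal above the 66% cut-off, very likely normal below the 33% cut-off, gray zone in between) can be sketched as a simple thresholding rule. This is a minimal illustration only: it assumes the classifier output is a score between 0 and 1, the function name `classify_zone` is hypothetical, and the example scores are invented rather than taken from the study.

```python
from collections import Counter


def classify_zone(score: float, low: float = 0.33, high: float = 0.66) -> str:
    """Map a classifier score in [0, 1] to one of three reporting zones.

    Assumption: scores exactly on a cut-off are placed in the gray zone;
    the table does not say how boundary values were handled.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie between 0 and 1")
    if score > high:
        return "abnormal"
    if score < low:
        return "likely normal"
    return "gray zone"


# Invented scores for illustration; not data from any included study.
scores = [0.10, 0.20, 0.50, 0.70, 0.90]
zone_counts = Counter(classify_zone(s) for s in scores)
```

Reporting a gray zone separately, rather than forcing every subject into a binary label, is what allows the small share of uncertain cases to be stated explicitly.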

| Author (Year) | Disease and Investigation | Algorithm Type | Number of Patients/Images | Main Findings |
|---|---|---|---|---|
| Cardoso et al. (2022) [13] | Protruding lesions (epithelial and subepithelial tumors); DAE | CNN (Xception model) | 7925 images from 72 patients, of which 2535 showed protruding lesions and 5390 were normal | The algorithm was trained on 80% of the images and validated on the remaining 20%, namely 507 images with protruding lesions and 1078 images with normal findings. It yielded a sensitivity of 97%, a specificity of 97.4%, and an AUC of 1.00. |
| Ribeiro et al. (2022) [14] | Angioectasia; DAE | CNN | 6740 images extracted from 72 DAE procedures, of which 1395 showed angioectasia | After the validation stage, the model had an accuracy of 95.3%, a sensitivity of 88.5%, a specificity of 97.1%, and an AUC of 0.98. |
| Ribeiro et al. (2023) [15] | Angioectasia, hematic residues, protruding lesions, ulcers, erosions; DAE | CNN | 250 DAE procedures with a total of 12,870 images, 433 images of unclassified abnormalities, and 6139 images of normal mucosa | The algorithm yielded better results than the pilot study, with an overall AUC of 0.99 and a sensitivity and specificity of 96.2% and 95%, respectively. |
| Mascarenhas et al. (2022) [16] | Erosions, ulcers, protruding lesions, vascular lesions, hematic residues; DAE | CNN | 18,380 images of erosions, ulcers, protruding and vascular lesions, and hematic residues from 260 DAE procedures | In the validation phase, it yielded an AUC of 1.00 with a sensitivity of 96.2% and a specificity of 95%. |
| Martins et al. (2023) [12] | Erosions and ulcers; DAE | CNN (Xception model) | 6772 images (633 ulcers or erosions) | Erosions and ulcers were detected through panendoscopic analysis with an AUC of 1.00, a sensitivity of 88.5%, and a specificity of 99.7%. The model was trained on 250 DAE exams, with frames extracted and classified as normal or ulcerative (n = 678) mucosa by 3 experienced gastroenterologists, for a total of 6772 images. |
| Mascarenhas et al. (2021) [17] | Angioectasia; DAE | CNN (Xception model) | Full-length videos of 72 patients undergoing DAE, extracted as 6740 still frames | The validation set consisted of 1348 images. The CNN analyzed each image and predicted a classification (normal mucosa vs. angioectasia), which was then compared with the specialists’ classification. Overall, the automated approach showed a sensitivity of 88.5%, a specificity of 97.1%, a positive predictive value of 88.8%, and a negative predictive value of 97.0%. The network’s overall accuracy was 95.3%. The CNN finished reading the validation image set in 9 s, corresponding to an estimated reading rate of 6.4 ms/frame. |
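The data set sizes in the table above can be cross-checked with simple arithmetic. The sketch below recomputes the 20% validation subsets reported for Cardoso et al. (2022) [13] and Mascarenhas et al. (2021) [17], and derives a per-frame reading time from the stated 9 s for 1348 images. The helper names `validation_size` and `ms_per_frame` are hypothetical, and the 80/20 split for [17] is an assumption inferred from the image counts rather than stated in the table.

```python
def validation_size(total_images: int, validation_percent: int = 20) -> int:
    """Number of images held out for validation, using integer arithmetic."""
    return total_images * validation_percent // 100


def ms_per_frame(reading_seconds: float, frames: int) -> float:
    """Average reading time per frame in milliseconds."""
    return 1000.0 * reading_seconds / frames


# Cardoso et al. (2022) [13]: 7925 images (2535 lesion, 5390 normal).
cardoso_validation = (validation_size(2535), validation_size(5390))

# Mascarenhas et al. (2021) [17]: 6740 frames; an 80/20 split is assumed here.
mascarenhas_validation = validation_size(6740)

# Reading rate from the rounded figure of 9 s for the validation set.
rate = ms_per_frame(9, 1348)
```

With the rounded 9 s figure, the computed rate is slightly above the reported 6.4 ms/frame; the reported value would correspond to an unrounded reading time of about 8.6 s, which is an inference and not a figure given in the source.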

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Popa, S.L.; Stancu, B.; Ismaiel, A.; Turtoi, D.C.; Brata, V.D.; Duse, T.A.; Bolchis, R.; Padureanu, A.M.; Dita, M.O.; Bashimov, A.; et al. Enteroscopy versus Video Capsule Endoscopy for Automatic Diagnosis of Small Bowel Disorders—A Comparative Analysis of Artificial Intelligence Applications. Biomedicines 2023, 11, 2991. https://doi.org/10.3390/biomedicines11112991


Popa, S. L., Stancu, B., Ismaiel, A., Turtoi, D. C., Brata, V. D., Duse, T. A., Bolchis, R., Padureanu, A. M., Dita, M. O., Bashimov, A., Incze, V., Pinna, E., Grad, S., Pop, A.-V., Dumitrascu, D. I., Munteanu, M. A., Surdea-Blaga, T., & Mihaileanu, F. V. (2023). Enteroscopy versus Video Capsule Endoscopy for Automatic Diagnosis of Small Bowel Disorders—A Comparative Analysis of Artificial Intelligence Applications. Biomedicines, 11(11), 2991. https://doi.org/10.3390/biomedicines11112991