Abstract

Multiple prediction models for risk of in-hospital mortality from COVID-19 have been developed but have not been applied to patient cohorts different from those from which they were derived. The MEDLINE, EMBASE, Scopus, and Web of Science (WOS) databases were searched. Risk of bias and applicability were assessed with PROBAST. Nomograms whose variables were available in a well-defined cohort of 444 patients from our site were externally validated. Overall, 71 studies that derived a clinical prediction rule for mortality outcome from COVID-19 were identified. Predictive variables consisted of combinations of patients' age, chronic conditions, dyspnea/tachypnea, radiographic chest alteration, and analytical values (LDH, CRP, lymphocytes, D-dimer), together with markers of respiratory, renal, liver, and myocardial damage, which were major predictors in several nomograms. Twenty-five models could be externally validated. Areas under the receiver operator characteristic curve (AUROC) in predicting mortality ranged from 0.71 to 1 in derivation cohorts; C-index values ranged from 0.823 to 0.970. Overall, 37/71 models provided very-good-to-outstanding test performance. Externally validated nomograms provided lower predictive performances for mortality than in their respective derivation cohorts, with the AUROC being 0.654 to 0.806 (poor to acceptable performance). We can conclude that available nomograms were limited in predicting mortality when applied to populations different from those from which they were derived.

1. Introduction

The first cases of pneumonia caused by a new coronavirus [1] were reported just over three years ago in Wuhan, China [2]. The disease caused by coronavirus 2019 (COVID-19) has since spread globally to constitute a public health emergency of international concern [3]. Although most patients had mild or moderate symptoms, a proportion of severely ill patients progressed rapidly to acute respiratory failure, with mortality of 49% [4]. Early identification and supportive care could effectively reduce the incidence of critical illness and in-hospital mortality. Hence, from the early stages of the pandemic, many risk-prediction models, or nomograms, were developed [5] by integrating demographic, clinical, and exploratory findings during early contact with health care systems. However, the merits of most available tools remain unclear since many were developed to predict a diverse mix of complications (including aggravated disease, need for invasive ventilation, or admission to ICU) in addition to in-hospital mortality; furthermore, most had not been applied to patient cohorts different from those from which they were derived. External validation is essential before implementing nomograms in clinical practice [6]; however, almost no prognostic model for in-hospital mortality for COVID-19 has as yet been validated.

In this research, we aimed to systematically review and critically appraise all currently available prediction models for in-hospital mortality caused by COVID-19. We also aimed to compare prediction performances by retrospectively applying nomograms to a well-defined series of patients with severe disease admitted to our hospital.

2. Materials and Methods

2.1. Eligibility Criteria and Searches

This review was conducted and is reported in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines; a study protocol was registered with PROSPERO [CRD42020226076]. The MEDLINE, EMBASE, Scopus, and Web of Science (WOS) databases were searched for literature published up to 25 August 2021 (Table S1). No restrictions were applied to language or methodological design. No restrictions were placed on prediction horizon (how far ahead the model predicts) within the admission period, or on countries or study settings. Additional relevant papers were identified by screening reference lists of included documents. Literature searches were repeated on 20 April 2022 to retrieve the most recent papers and provide updated results.

Studies were included if they (a) described the development/derivation and/or validation of a multivariable tool designed to predict risk of in-hospital mortality in patients with a confirmed diagnosis of severe COVID-19 infection, (b) provided the sensitivity and specificity of the tool or gave sufficient data to allow these metrics to be calculated, and (c) defined the variables or combination of variables used to predict the risk of mortality from COVID-19.

2.2. Study Selection

Two reviewers (MM-M and AJL) independently screened titles and abstracts against eligibility criteria. Potentially eligible papers were obtained, and the full texts were independently examined by the same reviewers. Any disagreements were resolved through discussion.

2.3. Data Extraction and Synthesis

Data extraction of included articles was undertaken by two independent reviewers (MM-M and AJL) and checked against full-text papers by a third reviewer (AAA) to ensure accuracy. Using a predeveloped template based on the CHARMS (critical appraisal and data extraction for systematic reviews of prediction modeling studies) checklist [7], information was extracted on study characteristics, source of data, participant eligibility and recruitment method, sample size, method for measurement of outcome, number, type and definition of predictors, number of participants with missing data for each predictor and handling of missing data, modeling method, model performance, and whether a priori cut points were used. In addition, we assessed the method used for testing model performance, and the final and other multivariable models.

For all in-hospital mortality prediction models, area under the receiver operator characteristic curve (AUROC) or concordance (C)-index was used to compare discrimination (the ability of a tool to distinguish those patients who died from COVID-19 from those who did not). Due to the marked heterogeneity of included studies in terms of the study designs, populations, variable definitions, selection of predictors, use of different tool thresholds, and variable modeling performance, we were unable to perform any meta-analyses. Instead, performance characteristics were summarized in tabular form and a narrative synthesis approach was used.
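To make the discrimination measure concrete, the following minimal sketch computes AUROC (equivalent to the C-index for a binary outcome) as the proportion of died/survived patient pairs in which the patient who died received the higher predicted risk, with ties counted as one half. The function name and the toy data are illustrative assumptions and are not taken from any included study.

```python
from itertools import product


def auroc(risks, died):
    """AUROC as the fraction of (died, survived) pairs ranked correctly.

    risks: predicted risks or scores (higher = more likely to die).
    died:  matching outcome flags (True/1 = died, False/0 = survived).
    Ties in predicted risk count as half a concordant pair.
    """
    pos = [r for r, d in zip(risks, died) if d]
    neg = [r for r, d in zip(risks, died) if not d]
    if not pos or not neg:
        raise ValueError("both outcome classes are required")
    concordant = 0.0
    for p, n in product(pos, neg):
        if p > n:
            concordant += 1.0
        elif p == n:
            concordant += 0.5
    return concordant / (len(pos) * len(neg))


# Hypothetical toy data: six patients, three of whom died.
toy_risks = [0.9, 0.8, 0.3, 0.4, 0.2, 0.1]
toy_died = [1, 1, 1, 0, 0, 0]
toy_auc = auroc(toy_risks, toy_died)  # 8 of 9 pairs are concordant
```

A value of 0.5 corresponds to ranking no better than chance and 1 to perfect separation of the two outcome groups.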

2.4. Risk of Bias Assessment

PROBAST (prediction model risk of bias assessment tool) was used to assess the risk of bias and applicability of the included studies [8]. PROBAST assesses both the risk of bias and concerns regarding applicability of a study that develops or validates a multivariable diagnostic or prognostic prediction model. It includes 20 signaling questions across 4 domains (participants, predictors, outcome, and analysis). Each domain was rated as having “high”, “low”, or “unclear” (where insufficient information was provided) risk of bias. Two reviewers (A.A. and A.J.L.) independently assessed each study. Ratings were compared and disagreements were resolved by consensus.
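The text above does not state how the four domain ratings were combined into an overall judgement. As a minimal sketch, the code below assumes the rule commonly described in PROBAST guidance: any domain rated high gives an overall high rating, otherwise any unclear domain gives unclear, and only four low ratings give low. The function name and data layout are hypothetical.

```python
def overall_rob(domain_ratings):
    """Combine per-domain ratings into an overall judgement.

    domain_ratings: dict mapping each of the four PROBAST domains to
    "low", "high", or "unclear".
    Assumed rule: high if any domain is high; else unclear if any
    domain is unclear; else low.
    """
    expected = {"participants", "predictors", "outcome", "analysis"}
    if set(domain_ratings) != expected:
        raise ValueError("ratings are needed for exactly the four domains")
    values = set(domain_ratings.values())
    if not values <= {"low", "high", "unclear"}:
        raise ValueError("unknown rating")
    if "high" in values:
        return "high"
    if "unclear" in values:
        return "unclear"
    return "low"


# Hypothetical study: unclear outcome domain, overfitting risk in analysis.
example = overall_rob({
    "participants": "low",
    "predictors": "low",
    "outcome": "unclear",
    "analysis": "high",
})
```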

2.5. External Validation of Included Clinical Prediction Rules

External validation was carried out as long as the variables integrated in a model were available among those registered in an external validation cohort of patients. No other restrictions were placed on the type of variable that could be included in a tool.

Data on 444 adults with confirmed SARS-CoV-2 infections, who were admitted to our hospital due to severe COVID-19 between 26 February and 31 May 2020 and with a 90-day follow-up period available, were used for the external validation of selected clinical prediction rules. Detailed methods and clinical and demographic characteristics of this patient series have been previously described [9,10]. All patients were independent from the data used in the derivation of any of the included clinical prediction rules. Data from the validation cohort were recoded to reproduce predictors and primary outcomes of each included clinical prediction rule, and modifications were made to match available data. The same point assignment and cut-off values provided by original derivation cohorts were used for external validation analyses.
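The procedure described above (recoding cohort data to a rule's predictors, then applying the original points and cut-off without refitting) can be sketched as follows. The rule shown is entirely hypothetical: its predictors, thresholds, points, and cut-off are invented for illustration and correspond to no included study. Patients lacking any predictor are dropped, which is one plausible reading of how rule-specific validation subcohorts arise.

```python
import math

# Hypothetical point-based rule; the predictors, thresholds, points, and
# cut-off below are invented for illustration and come from no included study.
RULE = {
    "age_years": (65, 2),      # 2 points if age >= 65
    "ldh_u_per_l": (300, 1),   # 1 point if LDH >= 300
    "crp_mg_per_l": (100, 1),  # 1 point if CRP >= 100
}
HIGH_RISK_CUTOFF = 3  # score >= 3 is classed as high risk


def score_patient(patient):
    """Return the total points, or None if any predictor is missing."""
    total = 0
    for name, (threshold, points) in RULE.items():
        value = patient.get(name)
        if value is None or (isinstance(value, float) and math.isnan(value)):
            return None  # incomplete case: excluded from this rule's subcohort
        if value >= threshold:
            total += points
    return total


def validate(cohort):
    """Apply the fixed rule; return (n_scored, [(predicted_high, died), ...])."""
    predictions = []
    for patient in cohort:
        score = score_patient(patient)
        if score is not None:
            predictions.append((score >= HIGH_RISK_CUTOFF, patient["died"]))
    return len(predictions), predictions


# Hypothetical three-patient cohort; the third lacks an LDH measurement.
cohort = [
    {"age_years": 80, "ldh_u_per_l": 400, "crp_mg_per_l": 50, "died": True},
    {"age_years": 50, "ldh_u_per_l": 200, "crp_mg_per_l": 150, "died": False},
    {"age_years": 70, "ldh_u_per_l": None, "crp_mg_per_l": 120, "died": False},
]
n_scored, predictions = validate(cohort)
```

Keeping the rule's constants fixed is what distinguishes external validation from re-derivation: nothing is tuned to the new cohort.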

3. Results

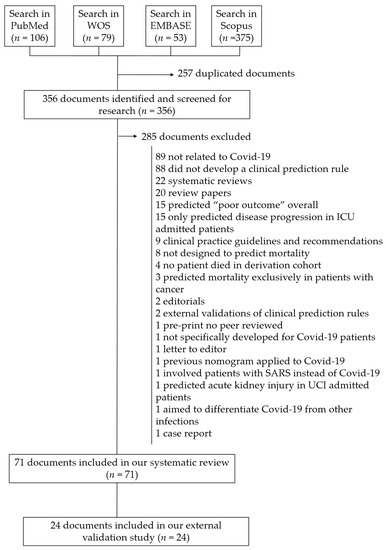

The systematic review flow-chart is shown in Figure 1. Overall, 71 studies with a derivation of a clinical prediction rule for mortality outcome from COVID-19 were identified [2,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80]. Forty studies used data from patients from China [2,11,12,13,14,20,21,22,24,26,27,29,30,31,32,33,40,41,42,44,45,48,51,53,54,55,59,60,61,63,65,66,67,69,70,74,78,79,80], eight from the United States [37,38,43,46,62,64,75,77], five from Spain [28,35,36,50,76], three from the United Kingdom [15,18,23], six from Italy [17,47,52,57,58,73], two from Mexico [16,49], four from Korea [19,34,68,71], two from Turkey [39,56], and one from Pakistan [25].

Figure 1.

Flow diagram of included documents.

The search strategy in the different databases is detailed in Table S1. The characteristics of the included clinical prediction rules are shown in Table S2.

Study data were collected between 1 January and 20 May 2020, during the first wave of the pandemic; the earliest data were provided by Chinese hospitals, all of them prior to 31 March 2020. The latest admissions were recorded in hospitals in the US and Mexico, all of them between the beginning of March and the end of May 2020.

Fifty-five studies aimed to exclusively predict in-hospital mortality [2,11,14,15,16,18,19,20,21,22,23,24,29,30,31,32,34,35,36,39,40,41,42,43,44,45,47,50,51,52,54,56,57,58,59,60,61,62,64,65,66,67,68,69,70,71,72,73,74,75,76,77,79], while thirteen reported on combined outcomes (progression of COVID-19 to severe, including need for invasive artificial respiration, ICU admission, or death) [12,17,25,26,27,28,33,35,37,38,46,55,80]. We ensured deceased patients were effectively included among the dataset and numbers reported. Among studies aimed at reporting on disease progression, a predictive nomogram for mortality was specifically provided in nine of the studies [17,25,27,28,37,38,46,49,80] but not in the other four [12,26,33,55].

Study setting included general population from database sources [19,23,49,58,68,71], clinical records from series of patients admitted to hospital [2,11,12,14,16,17,18,20,21,22,24,25,27,28,29,30,31,32,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,50,51,52,53,54,55,56,57,59,60,61,62,63,64,65,66,67,69,70,72,73,75,77,79,80], and healthcare databases [15,74,76]. One clinical prediction rule was developed exclusively from patients admitted to ICU [33].

All studies used a retrospective design for derivation and 21 were performed in multiple centers [12,13,15,16,19,21,23,24,26,28,29,31,34,43,45,47,48,49,52,54]. Among nomograms developed from hospital records, the derivation sample size was small (less than 300 patients) in 24 studies [2,12,14,17,25,27,28,30,31,32,33,34,35,37,40,42,47,48,55,67,73,78,79] and relatively small (300 to 500 patients) in 14 studies [11,13,16,18,20,22,39,41,44,51,60,74,75,80]. Only 20 hospital-based studies derived mortality prediction rules from series over 1000 patients [21,24,26,36,38,43,50,52,54,59,62,63,65,66,68,69,70,71,72,77]. Large administrative databases of COVID-19-infected patients and hospitalizations of over 10,000 patients were used in four studies [15,19,23,49] (Table S2).

Thirty-four nomograms were derived and validated (in different patients from the same institution) in the same study; random training test splits [14,17,18,19,23,24,30,32,33,41,45,50,51,55,59,60,61,62,63,65,66,67,71,75,76,77] and temporal splits [15,43,44,46,53,70] were performed in fourteen and five studies, respectively. One study performed validation by leave-one-hospital-out cross validation [72].

Only 12 studies externally validated their clinical prediction tool in a cohort of patients from an institution different from the one in which it was derived [11,13,26,38,48,52,55,65,66,70,74,75].

Overall, data from 317,840 SARS-CoV-2-infected patients were included in the derivation cohorts of the 71 predictive models, 36,882 of whom died. The percentage of deaths varied widely between studies, from 2.2% to 48.9%.

Patients with missing data for any predictor were excluded from analyses in 17 studies [16,18,19,24,29,30,32,40,42,48,51,53,56,59,64,71,78], missing values were provided by imputation methods in a further 21 [11,14,23,26,33,38,43,44,45,50,52,58,60,63,69,72,74,75], or were not reported in the remaining [2,12,13,15,17,20,21,22,25,28,31,34,35,36,37,39,41,46,47,49,54,55,57,61,66,67,73,76,77,79,80]. The absence of predictors was most likely a random event and was not associated with the outcome.

3.1. Models to Predict Risks of COVID-19-Related Mortality in Hospitalized Patients

Overall, 67 models identified predictors for risk of COVID-19-related mortality in patients admitted to hospitals, and a further 3 also included information from the general population and/or out-patients [19,23,49,71,77]. The vast majority of these studies used multivariable logistic regression models to identify predictors [2,12,13,14,16,17,18,20,21,22,24,25,26,27,30,31,32,33,34,35,37,38,39,41,43,45,46,47,48,49,51,52,53,54,56,57,58,59,62,63,64,65,66,68,69,70,71,72,73,74,75,76,77,78,79,80]. Least absolute shrinkage and selection operator (LASSO) was used in 14 studies [11,19,20,23,26,38,42,51,55,59,61,63,67,79], machine learning techniques were applied in an additional 12 [15,19,24,33,38,43,44,48,50,74,75,76], random decision forest or gradient-boosted decision trees (GBDT) in 10 studies [24,29,43,48,50,60,63,72,75,76], and artificial neural networks in 4 studies [18,36,50,76]. In nineteen studies, several of these methods of derivation were applied together [18,19,20,24,26,33,38,43,48,50,51,59,60,63,72,74,75,76,79].

The complete data on predictors were reported in all the 71 studies, and formulas to calculate mortality risks were provided or could be extracted in 32 studies (Table S2). The authors of three additional studies for which we were unable to find the formula [2,31,32] were twice contacted by email but did not respond. In the remaining studies, the formula could not be provided, as these predictive models were derived from complex techniques (decision trees or machine learning). Eight predictive models provided an online-available tool to automatically predict outcomes [15,16,17,26,48,50,52].
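For models derived by logistic regression, a risk formula of the kind mentioned above generally has the standard logistic form, in which a weighted sum of predictors is passed through the inverse logit. The sketch below assumes that form; the function name, the intercept, and the coefficients are hypothetical and do not reproduce any formula in Table S2.

```python
import math


def predicted_mortality_risk(intercept, coefficients, predictors):
    """Convert a logistic-regression linear predictor into a probability.

    coefficients and predictors are dicts keyed by predictor name;
    every coefficient must have a matching predictor value.
    """
    missing = set(coefficients) - set(predictors)
    if missing:
        raise KeyError(f"missing predictor values: {sorted(missing)}")
    linear = intercept + sum(
        beta * predictors[name] for name, beta in coefficients.items()
    )
    return 1.0 / (1.0 + math.exp(-linear))


# Hypothetical coefficients (log-odds per unit), for illustration only.
coefs = {"age_years": 0.05, "ldh_u_per_l": 0.004}
risk = predicted_mortality_risk(
    intercept=-6.0,
    coefficients=coefs,
    predictors={"age_years": 80, "ldh_u_per_l": 500},
)
# Linear predictor: -6.0 + 0.05*80 + 0.004*500 = 0, so the risk is 0.5.
```

Models built with decision trees or neural networks have no comparable closed-form expression, which is consistent with the formula being unavailable for those studies.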

The most frequently used prognostic variables for mortality (included at least five times among the different nomograms) were age (in 53 models), diabetes mellitus (in 11 models), chronic lung disease (COPD or asthma) (8 models), heart disease or cardiac failure (in 13), chronic kidney insufficiency (in 10), hypertension (in 7), and chronic liver disease (in 5 models). Comorbidity, defined either as the number of conditions selected from a predefined list or as the Charlson index, was recognized as a determinant for mortality in nine additional models.

Clinical predictors at admission included in final models consisted of dyspnea/tachypnea (14 models) and radiographic chest alteration (7 models). Analytical variables at admission identified as predictors included serum lactate dehydrogenase (LDH) in 24 prediction rules, C-reactive protein in 28 models, lymphocytes (either absolute number per µL or neutrophil-to-lymphocyte ratio) in 23 models, renal function (defined in terms of urea, BUN, serum creatinine, or glomerular filtration rates) in 20 models, and respiratory function parameters (peripheral O2 saturation, supplemental O2 at admission, PaO2/FiO2, or alveolar-arterial oxygen gradient) in 23 prediction models. D-dimer was included in 16 models and platelet count in 8 additional ones. Markers for liver injury (elevated bilirubin or aminotransferase levels) and myocardial damage (including either troponin I, myoglobin, or creatine phosphokinase) were included as predictors for mortality in 17 and 7 nomograms, respectively. Details on all predictors included in final models are provided in Table S2.

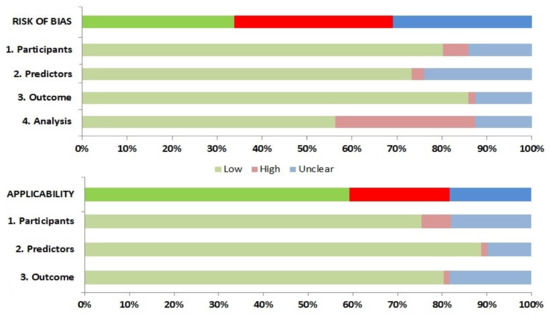

3.2. Risk of Bias

Twenty-five studies were at high risk of bias (ROB) according to assessment with PROBAST, and a further twenty-three showed an unclear ROB (Figure 2 and Table S3). This suggests that their performance in predicting in-hospital mortality caused by COVID-19 is probably lower than that reported. Fifty-seven studies (80.3%) were evaluated as being of low ROB for the participants' domain, thus indicating that the participants enrolled in the studies were representative of the models' targeted populations. All but 19 studies had a low ROB for the predictor domain (with the remaining being unclear), which indicates that predictors were available at the models' intended time of use and clearly defined, or independent from mortality. There were concerns about bias induced by the outcome measurement in ten studies, especially due to lack of information on time intervals between predictor assessment and outcome determination as a result of registering data from infected outpatients, or because losses to follow-up were counted as deceased patients. Twenty-two studies were evaluated as high ROB for the analysis domain, mainly because calibration was not assessed, or due to risk of model overfitting when complex modeling strategies were used.

Figure 2.

Risk of bias assessment according to the Prediction model Risk of Bias Assessment Tool (PROBAST).

The applicability of the different CPRs was also assessed. High ROB in population domain was mainly due to inclusion of patients without severe COVID-19 in original derivation cohorts. Studies that derived CPR for disease progression outcomes were evaluated as being of unclear ROB. Predictors derived from patient cohorts with small numbers of deceased patients determined their unclear applicability. Predictors obtained from patients in ICU were considered to be of high ROB regarding their applicability to our systematic review. Combined outcomes (e.g., disease progression) were considered of high or unclear ROB when only a minority of deceased patients was included in derivation cohorts.

3.3. Evaluation of Tool Performance in Predicting COVID-19-Related Mortality

Studies that predicted mortality in derivation cohorts reported AUROC between 0.701 and 1. C-index values ranged between 0.823 and 0.970. These values were over 0.9 (very good test) and over 0.97 (outstanding test) in 31 and 6 of the 71 predictive models, respectively. When provided, sensitivity values for cut-off points provided by the different authors in derivation cohorts ranged between 32% and 98.4%, with specificity ranging from 38.6% to 100%.

Overall, 33 predictive models were applied to new patients (internal validation). No major differences among derivation and internal validation cohorts were found in 5 of the studies [11,23,43,51,66]; in 7 studies, a significantly lower mortality rate was documented in internal validation cohorts [17,38,44,65,70,81,82], and 14 studies provided no details on differences in demographic and clinical features between derivation and internal validation patient subsets [14,18,19,24,26,30,32,33,45,46,48,50,53,55]. Overall, prediction models provided AUROC ranging from 0.73 to 0.991 and were quite similar to those provided in derivation cohorts, with only two nomograms proving lower predictive performance [17,30].

For all the 11 studies that externally validated their clinical prediction rules in patients from different institutions, the observed prediction performances were quite similar in each validation subcohort compared to respective derivation cohorts [11,13,26,38,48,52,55,65,70,74,75]. Thus, AUROC in Weng et al. were 0.921 and 0.975 in derivation and external validation cohorts [11], respectively; the study by Xiao et al. provided 0.943 and 0.826 for derivation and validation, respectively [81]; Liang et al. provided AUROC of 0.88 for both derivation and validation cohorts [26]; the XGBoost model used by Vaid et al. provided AUC-ROC values of 0.853 and 0.85, respectively, for derivation and validation cohorts [38]; Magro et al. provided AUROC values of 0.822 and 0.820 for the derivation and external validation cohorts [52]; and they were 0.943 and 0.878 in the study by Zhang et al. [55]. In addition, Li developed a CPR from a hospital in Wuhan, China, which provided a C-index of 0.97 in the derivation cohort. It was 0.96 in the internal validation cohort recruited in a second hospital in Wuhan and 0.92 when externally validated in a third neighboring hospital [70]. Similar results were also found by He et al. in their study involving patients from three hospitals in the Hubei province, China [65].

Notably, external validation was mostly performed for patient cohorts from the same cities or countries that the prediction rules were derived in. However, Rahman et al. derived a prognostic model from 375 COVID-19 patients admitted to Tongji Hospital, China, with an AUROC value of 0.961; when it was validated with an external cohort of 103 patients of Dhaka Medical College, Bangladesh, the AUC value was 0.963 [75].

Sample sizes for data that were used in external validation of each clinical prediction rule were smaller than the respective derivation cohort.

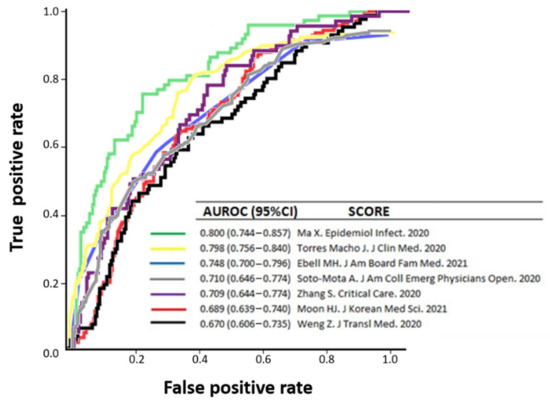

3.4. External Validation in the Same New Cohort of Patients

Predicting variables included in 25 out of the 71 prognostic models identified in our systematic review were present in our local cohort of patients [11,12,15,16,22,25,26,28,30,34,35,37,39,40,45,47,48,49,50,56,60,62,71,81], thus allowing external validation of their performance in a separate clinical setting [9] to assess how each rule could be used in real life. Prediction rules were validated on subcohorts that included 208 to 444 patients (depending on the number of cases that had all the variables incorporated in each model). For all 25 prognostic models, prediction performances for mortality were notably lower than in the respective derivation cohorts or internal validation cohorts, with AUROC values ranging from 0.654 to 0.806 (poor to acceptable performance). No nomogram was considered good or outstanding to predict in-hospital mortality among our own cohort of patients. A wide variability of sensitivity (ranging from 15.5% to 100%) and specificity (1.3% to 98.5%) was found when the best cut-off values (as provided by original authors) were selected (Table 1). Figure 3 shows a comparison of receiver operator characteristic curves for low ROB predictive models for in-hospital mortality, applicable to our external validation population.
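The cut-off-based measures reported for each validated rule (sensitivity, specificity, PPV, and NPV with 95% confidence intervals) all derive from a 2×2 table of predicted risk class against observed death. The sketch below shows one way to compute them. The source does not say which interval method was used, so the Wilson score interval here is an assumption, and the counts in the usage lines are hypothetical.

```python
import math


def wilson_ci(successes, total, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 ~ 95%)."""
    if total == 0:
        return (float("nan"), float("nan"))
    p = successes / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return (max(0.0, centre - half), min(1.0, centre + half))


def diagnostic_metrics(tp, fp, fn, tn):
    """Return each metric as (estimate, (lower, upper)).

    tp: predicted high risk and died      fp: predicted high risk, survived
    fn: predicted low risk but died       tn: predicted low risk, survived
    """
    def ratio(num, den):
        est = num / den if den else float("nan")
        return est, wilson_ci(num, den)

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


# Hypothetical counts for one rule in a 200-patient subcohort.
metrics = diagnostic_metrics(tp=40, fp=30, fn=10, tn=120)
sens, (sens_lo, sens_hi) = metrics["sensitivity"]
```

Because PPV and NPV depend on the mortality rate of the cohort, they are not expected to transfer between settings even when sensitivity and specificity do.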

Table 1.

Prognostic models for mortality from COVID-19, externally validated in a cohort of patients admitted to Tomelloso General Hospital, Spain. Risk categories and cut-off points were defined according to original models. Performance measures for each model (area under receiver operator curve (AUROC), sensitivity (%), specificity (%), and positive predictive value (PPV)/negative predictive value (NPV) (%) with 95% confidence intervals), if reported, were calculated over the model validation subcohort of N participants.

Figure 3.

Comparison of receiver operator characteristic curves for low-risk-of-bias-predictive models for in-hospital mortality [11,16,30,45,50,62,71], applicable to our external validation population.

4. Discussion

In this systematic review, we identified, retrieved, and critically appraised 71 individual studies that develop prediction models to support the prognostication of death among patients with COVID-19. To our knowledge, this is the first systematic assessment and comparison of prognostic performances of existing clinical prediction rules on risk for in-hospital mortality caused by severe COVID-19. All models were developed during the first wave of the pandemic and reported very-good-to-outstanding predictive performances in derivation and internal validation cohorts.

Predictive tools comprised simple analytical values-based nomograms, nomograms which included symptoms, analytical values and imaging tests, and more complex diagnostic prediction models that incorporated symptoms, test results, and comorbid conditions. Predicting factors included in the different nomograms varied widely among studies, but many have been repeatedly associated with poor prognosis in COVID-19. Thus, advanced age, COPD, heart disease, hypertension, chronic kidney failure, and diabetes were positively associated with risk of death in at least five nomograms and have been related to progression to severe disease as well [83]. In contrast, male sex, smoking history, and obesity were only exceptionally included in nomograms, despite being identified as risk factors for progression to severe disease and death in COVID-19 patients in some studies [84,85,86]. Organ failure, including pneumonia, respiratory insufficiency, and ischemic cardiac or liver injury, was repeatedly included in nomograms and has been related to poor prognosis in independent research [87,88,89]. Inflammatory markers such as C-reactive protein and D-dimer, as well as lymphopenia, thrombocytopenia, and elevated LDH, were recognized by the earlier literature on COVID-19 [90] and the most recent research [9]. Models developed using data from different countries agreed on including common predictive analytical values, despite most nomograms being developed in China.

The methods to develop the different prognostic models available varied greatly in terms of modeling technique, methodology, and rigor of construction. Only 15 were assessed as being of low ROB for development and applicability together. The prognostic performances of most tools were evaluated solely within the study datasets, with internal validation carried out on a subset of the original cohorts, thus reproducing the AUROC values provided in derivation subsets. Internally validating on the same cohort used for derivation usually overestimates the performance of scores [91]. For relatively small data sets, such as those used to derive most of the nomograms, internal validation by bootstrap techniques might not be sufficient or indicative for the model's performance in future patients [6], despite demonstrating the stability and quality of the predictors selected within the same cohort. Only 11 tools were externally validated in other participants in the same article. However, these were almost always patients from the same or neighboring cities as those included in the derivation cohort and therefore likely to have similar characteristics, thus showing overlapping results.

Until now, one single nomogram had been externally evaluated for its predictive capacity for COVID-19-related in-hospital mortality in a different series [49]. The predictive performance of this nomogram, developed in Mexico, reduced markedly when applied to a different patient dataset from the same country [16], with the AUROC decreasing from 0.823 to 0.690. A still unpublished prediction rule derived from patients in Wuhan, China, provided a C-index for death of 0.91, which decreased to 0.74 when it was externally validated in patients admitted to hospital in London (UK) [92]. This research is, to our knowledge, the first to externally validate prediction rules for in-hospital mortality caused by COVID-19 together in a single cohort and provides further evidence of their limited performance when applied to different clinical settings. The study by Rahman et al. [75] represents a second attempt at external validation; better results were produced, but these were affected by high risks of bias. It can be suggested therefore that each nomogram developed to predict mortality from COVID-19 should be applied to the same clinical settings from where it was derived.

The main strengths of this study include its systematic search on the multiple literature databases that index the main results of research on COVID-19; the fact that the research is up to date; and the critical appraisal of the methods and ROB of the studies retrieved. The different nomograms have been analyzed in detail and formulas to allow for the estimating of mortality risk for any population by using each of them were provided whenever possible. Finally, the predictive performance of nomograms based on demographic, clinical, and analytical parameters available in usual clinical practice were evaluated in a single external series of COVID-19 patients admitted to hospital.

Some weaknesses should be acknowledged for our study. These are mainly as a result of the heterogeneity of source documents, which derive from different populations with variable disease severity, and cared for in not necessarily comparable healthcare settings. While certain clinical and laboratory variables were identified to contribute objectively to mortality in most studies, many others varied widely among different prediction models. As several nomograms included variables that are not routinely used in clinical practice, we could not provide external validation. Additionally, we did not retrieve studies only available as preprints, which might improve after peer review. Finally, no prediction model was derived from or validated in patients infected by COVID-19 during the second and successive waves of the pandemic, and their current usefulness has not been evaluated.

5. Conclusions

To conclude, our research demonstrates the limitations of prognostic rules for risk of mortality from COVID-19 when applied to populations different from those from which they were derived. Demographic, clinical, and analytical determinants for risk of mortality are influenced and modulated by many factors inherent to each clinical setting, which are not easily controllable or reproducible. Once main determinants for COVID-19-related mortality at hospital admission have been identified, the best predictive models could be those developed in each particular clinical setting.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/biomedicines10102414/s1. Table S1: Search strategies carried out in four bibliographic databases of documents that report on clinical prediction models for hospital mortality caused by COVID-19. Table S2: Overview of prediction models for mortality risk from COVID-19 identified in a systematic review of the literature, and performance of each model in derivation cohorts. Formulas to calculate mortality risk to be applied to any population were provided or extracted from original documents. Table S3: Risk of bias assessment (using PROBAST) based on four domains across the studies that developed and/or validated prediction models for in-hospital mortality due to coronavirus disease 2019.

Author Contributions

M.M.M.-M. conceptualized the idea. Á.A. and A.J.L. initialized, conceived and supervised the project, and performed database searches. M.M.M.-M., Á.A. and A.J.L. extracted data from original sources. Á.A. carried out external validation analysis. M.M.M.-M., Á.A. and A.J.L. critically appraised all documents. A.J.L. drafted the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by Association of Biomedical Research La Mancha Centro.

Institutional Review Board Statement

No authorization from an IRB was required to conduct the systematic review of the literature. The recruitment of the external validation cohort of patients with COVID-19 admitted to Tomelloso General Hospital was approved by the Ethics Committee of La Mancha-Centro General Hospital at Alcázar de San Juan (protocol code 142-C) on 24 May 2020.

Informed Consent Statement

The Ethics Committee waived the need for obtaining informed consent from patients admitted to our hospital due to COVID-19 during the first wave of the pandemic and who were used to validate the different clinical prediction rules.

Data Availability Statement

The data supporting the external validation of clinical prediction rules in this study are available from the corresponding author upon reasonable request.

Acknowledgments

We would like to dedicate this paper to those who have devoted their lives to the battle against coronavirus.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lu, R.; Zhao, X.; Li, J.; Niu, P.; Yang, B.; Wu, H.; Wang, W.; Song, H.; Huang, B.; Zhu, N.; et al. Genomic Characterisation and Epidemiology of 2019 Novel Coronavirus: Implications for Virus Origins and Receptor Binding. Lancet 2020, 395, 565–574.

- Zhou, J.; Huang, L.; Chen, J.; Yuan, X.; Shen, Q.; Dong, S.; Cheng, B.; Guo, T.-M. Clinical Features Predicting Mortality Risk in Older Patients with COVID-19. Curr. Med. Res. Opin. 2020, 36, 1753–1759.

- Phelan, A.L.; Katz, R.; Gostin, L.O. The Novel Coronavirus Originating in Wuhan, China: Challenges for Global Health Governance. JAMA 2020, 323, 709–710.

- Wu, Z.; McGoogan, J.M. Characteristics of and Important Lessons from the Coronavirus Disease 2019 (COVID-19) Outbreak in China: Summary of a Report of 72,314 Cases from the Chinese Center for Disease Control and Prevention. JAMA 2020, 323, 1239–1242.

- Wynants, L.; Van Calster, B.; Collins, G.S.; Riley, R.D.; Heinze, G.; Schuit, E.; Bonten, M.M.J.; Dahly, D.L.; Damen, J.A.A.; Debray, T.P.A.; et al. Prediction Models for Diagnosis and Prognosis of COVID-19: Systematic Review and Critical Appraisal. BMJ 2020, 369, m1328.

- Bleeker, S.E.; Moll, H.A.; Steyerberg, E.W.; Donders, A.R.T.; Derksen-Lubsen, G.; Grobbee, D.E.; Moons, K.G.M. External Validation Is Necessary in Prediction Research: A Clinical Example. J. Clin. Epidemiol. 2003, 56, 826–832.

- Moons, K.G.M.; de Groot, J.A.H.; Bouwmeester, W.; Vergouwe, Y.; Mallett, S.; Altman, D.G.; Reitsma, J.B.; Collins, G.S. Critical Appraisal and Data Extraction for Systematic Reviews of Prediction Modelling Studies: The CHARMS Checklist. PLoS Med. 2014, 11, e1001744.

- Moons, K.G.M.; Wolff, R.F.; Riley, R.D.; Whiting, P.F.; Westwood, M.; Collins, G.S.; Reitsma, J.B.; Kleijnen, J.; Mallett, S. PROBAST: A Tool to Assess Risk of Bias and Applicability of Prediction Model Studies: Explanation and Elaboration. Ann. Intern. Med. 2019, 170, W1.

- Maestre-Muñiz, M.M.; Arias, Á.; Arias-González, L.; Angulo-Lara, B.; Lucendo, A.J. Prognostic Factors at Admission for In-Hospital Mortality from COVID-19 Infection in an Older Rural Population in Central Spain. J. Clin. Med. 2021, 10, 318.

- Maestre-Muñiz, M.M.; Arias, Á.; Mata-Vázquez, E.; Martín-Toledano, M.; López-Larramona, G.; Ruiz-Chicote, A.M.; Nieto-Sandoval, B.; Lucendo, A.J. Long-Term Outcomes of Patients with Coronavirus Disease 2019 at One Year after Hospital Discharge. J. Clin. Med. 2021, 10, 2945.

- Weng, Z.; Chen, Q.; Li, S.; Li, H.; Zhang, Q.; Lu, S.; Wu, L.; Xiong, L.; Mi, B.; Liu, D.; et al. ANDC: An Early Warning Score to Predict Mortality Risk for Patients with Coronavirus Disease 2019. J. Transl. Med. 2020, 18, 328.

- Ji, D.; Zhang, D.; Xu, J.; Chen, Z.; Yang, T.; Zhao, P.; Chen, G.; Cheng, G.; Wang, Y.; Bi, J.; et al. Prediction for Progression Risk in Patients with COVID-19 Pneumonia: The CALL Score. Clin. Infect. Dis. 2020, 71, 1393–1399.

- Xiao, L.; Zhang, W.-F.; Gong, M.; Zhang, Y.; Chen, L.; Zhu, H.; Hu, C.; Kang, P.; Liu, L.; Zhu, H. Development and Validation of the HNC-LL Score for Predicting the Severity of Coronavirus Disease 2019. eBioMedicine 2020, 57, 102880.

- Wang, J.; Zhang, H.; Qiao, R.; Ge, Q.; Zhang, S.; Zhao, Z.; Tian, C.; Ma, Q.; Shen, N. Thrombo-Inflammatory Features Predicting Mortality in Patients with COVID-19: The FAD-85 Score. J. Int. Med. Res. 2020, 48, 030006052095503.

- Knight, S.R.; Ho, A.; Pius, R.; Buchan, I.; Carson, G.; Drake, T.M.; Dunning, J.; Fairfield, C.J.; Gamble, C.; Green, C.A.; et al. Risk Stratification of Patients Admitted to Hospital with COVID-19 Using the ISARIC WHO Clinical Characterisation Protocol: Development and Validation of the 4C Mortality Score. BMJ 2020, 370, m3339.

- Soto-Mota, A.; Marfil-Garza, B.A.; Martínez Rodríguez, E.; Barreto Rodríguez, J.O.; López Romo, A.E.; Alberti Minutti, P.; Alejandre Loya, J.V.; Pérez Talavera, F.E.; Ávila Cervera, F.J.; Velazquez Burciaga, A.; et al. The Low-harm Score for Predicting Mortality in Patients Diagnosed with COVID-19: A Multicentric Validation Study. J. Am. Coll. Emerg. Physicians Open 2020, 1, 1436–1443.

- Foieni, F.; Sala, G.; Mognarelli, J.G.; Suigo, G.; Zampini, D.; Pistoia, M.; Ciola, M.; Ciampani, T.; Ultori, C.; Ghiringhelli, P. Derivation and Validation of the Clinical Prediction Model for COVID-19. Intern. Emerg. Med. 2020, 15, 1409–1414.

- Abdulaal, A.; Patel, A.; Charani, E.; Denny, S.; Alqahtani, S.A.; Davies, G.W.; Mughal, N.; Moore, L.S.P. Comparison of Deep Learning with Regression Analysis in Creating Predictive Models for SARS-CoV-2 Outcomes. BMC Med. Inform. Decis. Mak. 2020, 20, 299.

- An, C.; Lim, H.; Kim, D.-W.; Chang, J.H.; Choi, Y.J.; Kim, S.W. Machine Learning Prediction for Mortality of Patients Diagnosed with COVID-19: A Nationwide Korean Cohort Study. Sci. Rep. 2020, 10, 18716.

- Chen, H.; Chen, R.; Yang, H.; Wang, J.; Hou, Y.; Hu, W.; Yu, J.; Li, H. Development and Validation of a Nomogram Using on Admission Routine Laboratory Parameters to Predict In-hospital Survival of Patients with COVID-19. J. Med. Virol. 2021, 93, 2332–2339.

- Chen, R.; Liang, W.; Jiang, M.; Guan, W.; Zhan, C.; Wang, T.; Tang, C.; Sang, L.; Liu, J.; Ni, Z.; et al. Risk Factors of Fatal Outcome in Hospitalized Subjects with Coronavirus Disease 2019 from a Nationwide Analysis in China. Chest 2020, 158, 97–105.

- Cheng, A.; Hu, L.; Wang, Y.; Huang, L.; Zhao, L.; Zhang, C.; Liu, X.; Xu, R.; Liu, F.; Li, J.; et al. Diagnostic Performance of Initial Blood Urea Nitrogen Combined with D-Dimer Levels for Predicting in-Hospital Mortality in COVID-19 Patients. Int. J. Antimicrob. Agents 2020, 56, 106110.

- Clift, A.K.; Coupland, C.A.C.; Keogh, R.H.; Diaz-Ordaz, K.; Williamson, E.; Harrison, E.M.; Hayward, A.; Hemingway, H.; Horby, P.; Mehta, N.; et al. Living Risk Prediction Algorithm (QCOVID) for Risk of Hospital Admission and Mortality from Coronavirus 19 in Adults: National Derivation and Validation Cohort Study. BMJ 2020, 371, m3731.

- Gao, Y.; Cai, G.-Y.; Fang, W.; Li, H.-Y.; Wang, S.-Y.; Chen, L.; Yu, Y.; Liu, D.; Xu, S.; Cui, P.-F.; et al. Machine Learning Based Early Warning System Enables Accurate Mortality Risk Prediction for COVID-19. Nat. Commun. 2020, 11, 5033.

- Kamran, S.M.; Mirza, Z.-H.; Moeed, H.A.; Naseem, A.; Hussain, M.; Fazal, I.; Saeed, F.; Alamgir, W.; Saleem, S.; Riaz, S. CALL Score and RAS Score as Predictive Models for Coronavirus Disease 2019. Cureus 2020, 12, e11368.

- Liang, W.; Liang, H.; Ou, L.; Chen, B.; Chen, A.; Li, C.; Li, Y.; Guan, W.; Sang, L.; Lu, J.; et al. Development and Validation of a Clinical Risk Score to Predict the Occurrence of Critical Illness in Hospitalized Patients with COVID-19. JAMA Intern. Med. 2020, 180, 1081–1089.

- Liu, J.; Liu, Z.; Jiang, W.; Wang, J.; Zhu, M.; Song, J.; Wang, X.; Su, Y.; Xiang, G.; Ye, M.; et al. Clinical Predictors of COVID-19 Disease Progression and Death: Analysis of 214 Hospitalised Patients from Wuhan, China. Clin. Respir. J. 2021, 15, 293–309.

- Lorente, L.; Martín, M.M.; Argueso, M.; Solé-Violán, J.; Perez, A.; Marcos y Ramos, J.A.; Ramos-Gómez, L.; López, S.; Franco, A.; González-Rivero, A.F.; et al. Association between Red Blood Cell Distribution Width and Mortality of COVID-19 Patients. Anaesth. Crit. Care Pain Med. 2020, 40, 100777.

- Ma, X.; Li, A.; Jiao, M.; Shi, Q.; An, X.; Feng, Y.; Xing, L.; Liang, H.; Chen, J.; Li, H.; et al. Characteristic of 523 COVID-19 in Henan Province and a Death Prediction Model. Front. Public Health 2020, 8, 475.

- Ma, X.; Ng, M.; Xu, S.; Xu, Z.; Qiu, H.; Liu, Y.; Lyu, J.; You, J.; Zhao, P.; Wang, S.; et al. Development and Validation of Prognosis Model of Mortality Risk in Patients with COVID-19. Epidemiol. Infect. 2020, 148, e168.

- Ma, X.; Wang, H.; Huang, J.; Geng, Y.; Jiang, S.; Zhou, Q.; Chen, X.; Hu, H.; Li, W.; Zhou, C.; et al. A Nomogramic Model Based on Clinical and Laboratory Parameters at Admission for Predicting the Survival of COVID-19 Patients. BMC Infect. Dis. 2020, 20, 899.

- Pan, D.; Cheng, D.; Cao, Y.; Hu, C.; Zou, F.; Yu, W.; Xu, T. A Predicting Nomogram for Mortality in Patients with COVID-19. Front. Public Health 2020, 8, 461.

- Pan, P.; Li, Y.; Xiao, Y.; Han, B.; Su, L.; Su, M.; Li, Y.; Zhang, S.; Jiang, D.; Chen, X.; et al. Prognostic Assessment of COVID-19 in the Intensive Care Unit by Machine Learning Methods: Model Development and Validation. J. Med. Internet Res. 2020, 22, e23128.

- Park, J.G.; Kang, M.K.; Lee, Y.R.; Song, J.E.; Kim, N.Y.; Kweon, Y.O.; Tak, W.Y.; Jang, S.Y.; Lee, C.; Kim, B.S.; et al. Fibrosis-4 Index as a Predictor for Mortality in Hospitalised Patients with COVID-19: A Retrospective Multicentre Cohort Study. BMJ Open 2020, 10, e041989.

- Salto-Alejandre, S.; Roca-Oporto, C.; Martín-Gutiérrez, G.; Avilés, M.D.; Gómez-González, C.; Navarro-Amuedo, M.D.; Praena-Segovia, J.; Molina, J.; Paniagua-García, M.; García-Delgado, H.; et al. A Quick Prediction Tool for Unfavourable Outcome in COVID-19 Inpatients: Development and Internal Validation. J. Infect. 2021, 82, e11–e15.

- Santos-Lozano, A.; Calvo-Boyero, F.; López-Jiménez, A.; Cueto-Felgueroso, C.; Castillo-García, A.; Valenzuela, P.L.; Arenas, J.; Lucia, A.; Martín, M.A.; COVID-19 Hospital ’12 Octubre’ Clinical Biochemistry Study Group. Can Routine Laboratory Variables Predict Survival in COVID-19? An Artificial Neural Network-Based Approach. Clin. Chem. Lab. Med. 2020, 58, e299–e302.

- Turcotte, J.J.; Meisenberg, B.R.; MacDonald, J.H.; Menon, N.; Fowler, M.B.; West, M.; Rhule, J.; Qureshi, S.S.; MacDonald, E.B. Risk Factors for Severe Illness in Hospitalized COVID-19 Patients at a Regional Hospital. PLoS ONE 2020, 15, e0237558.

- Vaid, A.; Somani, S.; Russak, A.J.; De Freitas, J.K.; Chaudhry, F.F.; Paranjpe, I.; Johnson, K.W.; Lee, S.J.; Miotto, R.; Richter, F.; et al. Machine Learning to Predict Mortality and Critical Events in a Cohort of Patients with COVID-19 in New York City: Model Development and Validation. J. Med. Internet Res. 2020, 22, e24018.

- Varol, Y.; Hakoglu, B.; Kadri Cirak, A.; Polat, G.; Komurcuoglu, B.; Akkol, B.; Atasoy, C.; Bayramic, E.; Balci, G.; Ataman, S.; et al. The Impact of Charlson Comorbidity Index on Mortality from SARS-CoV-2 Virus Infection and A Novel COVID-19 Mortality Index: CoLACD. Int. J. Clin. Pract. 2021, 75, e13858.

- Wang, B.; Zhong, F.; Zhang, H.; An, W.; Liao, M.; Cao, Y. Risk Factor Analysis and Nomogram Construction for Non-Survivors among Critical Patients with COVID-19. Jpn. J. Infect. Dis. 2020, 73, 452–458.

- Wang, R.; He, M.; Yin, W.; Liao, X.; Wang, B.; Jin, X.; Ma, Y.; Yue, J.; Bai, L.; Liu, D.; et al. The Prognostic Nutritional Index Is Associated with Mortality of COVID-19 Patients in Wuhan, China. J. Clin. Lab. Anal. 2020, 34, e23566.

- Wu, G.; Zhou, S.; Wang, Y.; Lv, W.; Wang, S.; Wang, T.; Li, X. A Prediction Model of Outcome of SARS-CoV-2 Pneumonia Based on Laboratory Findings. Sci. Rep. 2020, 10, 14042.

- Yadaw, A.S.; Li, Y.; Bose, S.; Iyengar, R.; Bunyavanich, S.; Pandey, G. Clinical Features of COVID-19 Mortality: Development and Validation of a Clinical Prediction Model. Lancet Digit. Health 2020, 2, e516–e525.

- Yan, L.; Zhang, H.-T.; Goncalves, J.; Xiao, Y.; Wang, M.; Guo, Y.; Sun, C.; Tang, X.; Jing, L.; Zhang, M.; et al. An Interpretable Mortality Prediction Model for COVID-19 Patients. Nat. Mach. Intell. 2020, 2, 283–288.

- Zhang, S.; Guo, M.; Duan, L.; Wu, F.; Hu, G.; Wang, Z.; Huang, Q.; Liao, T.; Xu, J.; Ma, Y.; et al. Development and Validation of a Risk Factor-Based System to Predict Short-Term Survival in Adult Hospitalized Patients with COVID-19: A Multicenter, Retrospective, Cohort Study. Crit. Care 2020, 24, 438.

- Zhao, Z.; Chen, A.; Hou, W.; Graham, J.M.; Li, H.; Richman, P.S.; Thode, H.C.; Singer, A.J.; Duong, T.Q. Prediction Model and Risk Scores of ICU Admission and Mortality in COVID-19. PLoS ONE 2020, 15, e0236618.

- Zinellu, A.; Arru, F.; De Vito, A.; Sassu, A.; Valdes, G.; Scano, V.; Zinellu, E.; Perra, R.; Madeddu, G.; Carru, C.; et al. The De Ritis Ratio as Prognostic Biomarker of In-hospital Mortality in COVID-19 Patients. Eur. J. Clin. Investig. 2021, 51, e13427.

- Hu, C.; Liu, Z.; Jiang, Y.; Shi, O.; Zhang, X.; Xu, K.; Suo, C.; Wang, Q.; Song, Y.; Yu, K.; et al. Early Prediction of Mortality Risk among Patients with Severe COVID-19, Using Machine Learning. Int. J. Epidemiol. 2020, 49, 1918–1929.

- Bello-Chavolla, O.Y.; Bahena-López, J.P.; Antonio-Villa, N.E.; Vargas-Vázquez, A.; González-Díaz, A.; Márquez-Salinas, A.; Fermín-Martínez, C.A.; Naveja, J.J.; Aguilar-Salinas, C.A. Predicting Mortality Due to SARS-CoV-2: A Mechanistic Score Relating Obesity and Diabetes to COVID-19 Outcomes in Mexico. J. Clin. Endocrinol. Metab. 2020, 105, 2752–2761.

- Torres-Macho, J.; Ryan, P.; Valencia, J.; Pérez-Butragueño, M.; Jiménez, E.; Fontán-Vela, M.; Izquierdo-García, E.; Fernandez-Jimenez, I.; Álvaro-Alonso, E.; Lazaro, A.; et al. The PANDEMYC Score. An Easily Applicable and Interpretable Model for Predicting Mortality Associated with COVID-19. J. Clin. Med. 2020, 9, 3066.

- Liu, Q.; Wang, Y.; Zhao, X.; Wang, L.; Liu, F.; Wang, T.; Ye, D.; Lv, Y. Diagnostic Performance of a Blood Urea Nitrogen to Creatinine Ratio-Based Nomogram for Predicting In-Hospital Mortality in COVID-19 Patients. Risk Manag. Healthc. Policy 2021, 14, 117–128.

- Magro, B.; Zuccaro, V.; Novelli, L.; Zileri, L.; Celsa, C.; Raimondi, F.; Gori, M.; Cammà, G.; Battaglia, S.; Genova, V.G.; et al. Predicting In-Hospital Mortality from Coronavirus Disease 2019: A Simple Validated App for Clinical Use. PLoS ONE 2021, 16, e0245281.

- Niu, Y.; Zhan, Z.; Li, J.; Shui, W.; Wang, C.; Xing, Y.; Zhang, C. Development of a Predictive Model for Mortality in Hospitalized Patients with COVID-19. Disaster Med. Public Health Prep. 2022, 16, 1398–1406.

- Ding, Z.; Li, G.; Chen, L.; Shu, C.; Song, J.; Wang, W.; Wang, Y.; Chen, Q.; Jin, G.; Liu, T.; et al. Association of Liver Abnormalities with In-Hospital Mortality in Patients with COVID-19. J. Hepatol. 2020, 74, 1295–1302.

- Zhang, B.; Liu, Q.; Zhang, X.; Liu, S.; Chen, W.; You, J.; Chen, Q.; Li, M.; Chen, Z.; Chen, L.; et al. Clinical Utility of a Nomogram for Predicting 30-Days Poor Outcome in Hospitalized Patients with COVID-19: Multicenter External Validation and Decision Curve Analysis. Front. Med. 2020, 7, 590460.

- Acar, H.C.; Can, G.; Karaali, R.; Börekçi, Ş.; Balkan, İ.İ.; Gemicioğlu, B.; Konukoğlu, D.; Erginöz, E.; Erdoğan, M.S.; Tabak, F. An Easy-to-Use Nomogram for Predicting in-Hospital Mortality Risk in COVID-19: A Retrospective Cohort Study in a University Hospital. BMC Infect. Dis. 2021, 21, 148.

- Bellan, M.; Azzolina, D.; Hayden, E.; Gaidano, G.; Pirisi, M.; Acquaviva, A.; Aimaretti, G.; Aluffi Valletti, P.; Angilletta, R.; Arioli, R.; et al. Simple Parameters from Complete Blood Count Predict In-Hospital Mortality in COVID-19. Dis. Markers 2021, 2021, 8863053.

- Besutti, G.; Ottone, M.; Fasano, T.; Pattacini, P.; Iotti, V.; Spaggiari, L.; Bonacini, R.; Nitrosi, A.; Bonelli, E.; Canovi, S.; et al. The Value of Computed Tomography in Assessing the Risk of Death in COVID-19 Patients Presenting to the Emergency Room. Eur. Radiol. 2021, 31, 9164–9175.

- Chen, B.; Gu, H.-Q.; Liu, Y.; Zhang, G.; Yang, H.; Hu, H.; Lu, C.; Li, Y.; Wang, L.; Liu, Y.; et al. A Model to Predict the Risk of Mortality in Severely Ill COVID-19 Patients. Comput. Struct. Biotechnol. J. 2021, 19, 1694–1700.

- Chowdhury, M.E.H.; Rahman, T.; Khandakar, A.; Al-Madeed, S.; Zughaier, S.M.; Doi, S.A.R.; Hassen, H.; Islam, M.T. An Early Warning Tool for Predicting Mortality Risk of COVID-19 Patients Using Machine Learning. Cogn. Comput. 2021, 1–16, online ahead of print.

- Dong, Y.-M.; Sun, J.; Li, Y.-X.; Chen, Q.; Liu, Q.-Q.; Sun, Z.; Pang, R.; Chen, F.; Xu, B.-Y.; Manyande, A.; et al. Development and Validation of a Nomogram for Assessing Survival in Patients with COVID-19 Pneumonia. Clin. Infect. Dis. 2021, 72, 652–660.

- Ebell, M.H.; Cai, X.; Lennon, R.; Tarn, D.M.; Mainous, A.G.; Zgierska, A.E.; Barrett, B.; Tuan, W.-J.; Maloy, K.; Goyal, M.; et al. Development and Validation of the COVID-NoLab and COVID-SimpleLab Risk Scores for Prognosis in 6 US Health Systems. J. Am. Board Fam. Med. 2021, 34, S127–S135.

- Guan, X.; Zhang, B.; Fu, M.; Li, M.; Yuan, X.; Zhu, Y.; Peng, J.; Guo, H.; Lu, Y. Clinical and Inflammatory Features Based Machine Learning Model for Fatal Risk Prediction of Hospitalized COVID-19 Patients: Results from a Retrospective Cohort Study. Ann. Med. 2021, 53, 257–266.

- Harmouch, F.; Shah, K.; Hippen, J.T.; Kumar, A.; Goel, H. Is It All in the Heart? Myocardial Injury as Major Predictor of Mortality among Hospitalized COVID-19 Patients. J. Med. Virol. 2021, 93, 973–982.

- He, J.; Song, C.; Liu, E.; Liu, X.; Wu, H.; Lin, H.; Liu, Y.; Li, Q.; Xu, Z.; Ren, X.; et al. Establishment of Routine Clinical Indicators-Based Nomograms for Predicting the Mortality in Patients with COVID-19. Front. Med. 2021, 8, 706380.

- Jiang, M.; Li, C.; Zheng, L.; Lv, W.; He, Z.; Cui, X.; Dietrich, C.F. A Biomarker-Based Age, Biomarkers, Clinical History, Sex (ABCS)-Mortality Risk Score for Patients with Coronavirus Disease 2019. Ann. Transl. Med. 2021, 9, 230.

- Ke, Z.; Li, L.; Wang, L.; Liu, H.; Lu, X.; Zeng, F.; Zha, Y. Radiomics Analysis Enables Fatal Outcome Prediction for Hospitalized Patients with Coronavirus Disease 2019 (COVID-19). Acta Radiol. 2022, 63, 319–327.

- Kim, D.H.; Park, H.C.; Cho, A.; Kim, J.; Yun, K.; Kim, J.; Lee, Y.-K. Age-Adjusted Charlson Comorbidity Index Score Is the Best Predictor for Severe Clinical Outcome in the Hospitalized Patients with COVID-19 Infection. Medicine 2021, 100, e25900.

- Li, J.; Yang, L.; Zeng, Q.; Li, Q.; Yang, Z.; Han, L.; Huang, X.; Chen, E. Determinants of Mortality of Patients with COVID-19 in Wuhan, China: A Case-Control Study. Ann. Palliat. Med. 2021, 10, 3937–3950.

- Li, L.; Fang, X.; Cheng, L.; Wang, P.; Li, S.; Yu, H.; Zhang, Y.; Jiang, N.; Zeng, T.; Hou, C.; et al. Development and Validation of a Prognostic Nomogram for Predicting In-Hospital Mortality of COVID-19: A Multicenter Retrospective Cohort Study of 4086 Cases in China. Aging 2021, 13, 3176–3189.

- Moon, H.J.; Kim, K.; Kang, E.K.; Yang, H.-J.; Lee, E. Prediction of COVID-19-Related Mortality and 30-Day and 60-Day Survival Probabilities Using a Nomogram. J. Korean Med. Sci. 2021, 36, e248.

- Ottenhoff, M.C.; Ramos, L.A.; Potters, W.; Janssen, M.L.F.; Hubers, D.; Hu, S.; Fridgeirsson, E.A.; Piña-Fuentes, D.; Thomas, R.; van der Horst, I.C.C.; et al. Predicting Mortality of Individual Patients with COVID-19: A Multicentre Dutch Cohort. BMJ Open 2021, 11, e047347.

- Pfeifer, N.; Zaboli, A.; Ciccariello, L.; Bernhart, O.; Troi, C.; Fanni Canelles, M.; Ammari, C.; Fioretti, A.; Turcato, G. Nomogramm zur Risikostratifizierung von COVID-19-Patienten mit interstitieller Pneumonie in der Notaufnahme: Eine retrospektive multizentrische Studie. Med. Klin. Intensivmed. Notfallmed. 2022, 117, 120–128.

- Rahman, T.; Al-Ishaq, F.A.; Al-Mohannadi, F.S.; Mubarak, R.S.; Al-Hitmi, M.H.; Islam, K.R.; Khandakar, A.; Hssain, A.A.; Al-Madeed, S.; Zughaier, S.M.; et al. Mortality Prediction Utilizing Blood Biomarkers to Predict the Severity of COVID-19 Using Machine Learning Technique. Diagnostics 2021, 11, 1582.

- Rahman, T.; Khandakar, A.; Hoque, M.E.; Ibtehaz, N.; Kashem, S.B.; Masud, R.; Shampa, L.; Hasan, M.M.; Islam, M.T.; Al-Maadeed, S.; et al. Development and Validation of an Early Scoring System for Prediction of Disease Severity in COVID-19 Using Complete Blood Count Parameters. IEEE Access 2021, 9, 120422–120441.

- Sánchez-Montañés, M.; Rodríguez-Belenguer, P.; Serrano-López, A.J.; Soria-Olivas, E.; Alakhdar-Mohmara, Y. Machine Learning for Mortality Analysis in Patients with COVID-19. Int. J. Environ. Res. Public Health 2020, 17, 8386.

- Wang, H.; Ai, H.; Fu, Y.; Li, Q.; Cui, R.; Ma, X.; Ma, Y.; Wang, Z.; Liu, T.; Long, Y.; et al. Development of an Early Warning Model for Predicting the Death Risk of Coronavirus Disease 2019 Based on Data Immediately Available on Admission. Front. Med. 2021, 8, 699243.

- Wang, K.; Zuo, P.; Liu, Y.; Zhang, M.; Zhao, X.; Xie, S.; Zhang, H.; Chen, X.; Liu, C. Clinical and Laboratory Predictors of In-Hospital Mortality in Patients with Coronavirus Disease-2019: A Cohort Study in Wuhan, China. Clin. Infect. Dis. 2020, 71, 2079–2088.

- Xiao, F.; Sun, R.; Sun, W.; Xu, D.; Lan, L.; Li, H.; Liu, H.; Xu, H. Radiomics Analysis of Chest CT to Predict the Overall Survival for the Severe Patients of COVID-19 Pneumonia. Phys. Med. Biol. 2021, 66, 105008.

- Yu, J.; Nie, L.; Wu, D.; Chen, J.; Yang, Z.; Zhang, L.; Li, D.; Zhou, X. Prognostic Value of a Clinical Biochemistry-Based Nomogram for Coronavirus Disease 2019. Front. Med. 2021, 7, 597791.

- Xiao, M.; Hou, M.; Liu, X.; Li, Z.; Zhao, Q. Clinical Characteristics of 71 Patients with Coronavirus Disease 2019. J. Cent. South Univ. Med. Sci. 2020, 45, 790–796.

- Magro, F.; Gionchetti, P.; Eliakim, R.; Ardizzone, S.; Armuzzi, A.; Barreiro-de Acosta, M.; Burisch, J.; Gecse, K.B.; Hart, A.L.; Hindryckx, P.; et al. Third European Evidence-Based Consensus on Diagnosis and Management of Ulcerative Colitis. Part 1: Definitions, Diagnosis, Extra-Intestinal Manifestations, Pregnancy, Cancer Surveillance, Surgery, and Ileo-Anal Pouch Disorders. J. Crohns Colitis 2017, 11, 649–670.

- Dorjee, K.; Kim, H.; Bonomo, E.; Dolma, R. Prevalence and Predictors of Death and Severe Disease in Patients Hospitalized Due to COVID-19: A Comprehensive Systematic Review and Meta-Analysis of 77 Studies and 38,000 Patients. PLoS ONE 2020, 15, e0243191.

- Zhao, Q.; Meng, M.; Kumar, R.; Wu, Y.; Huang, J.; Lian, N.; Deng, Y.; Lin, S. The Impact of COPD and Smoking History on the Severity of COVID-19: A Systemic Review and Meta-Analysis. J. Med. Virol. 2020, 92, 1915–1921.

- Bhattacharyya, A.; Seth, A.; Srivast, N.; Imeokparia, M.; Rai, S. Coronavirus (COVID-19): A Systematic Review and Meta-Analysis to Evaluate the Significance of Demographics and Comorbidities. Res. Sq. 2021.

- Ho, J.S.Y.; Fernando, D.I.; Chan, M.Y.; Sia, C.H. Obesity in COVID-19: A Systematic Review and Meta-Analysis. Ann. Acad. Med. Singap. 2020, 49, 996–1008.

- Zhao, B.-C.; Liu, W.-F.; Lei, S.-H.; Zhou, B.-W.; Yang, X.; Huang, T.-Y.; Deng, Q.-W.; Xu, M.; Li, C.; Liu, K.-X. Prevalence and Prognostic Value of Elevated Troponins in Patients Hospitalised for Coronavirus Disease 2019: A Systematic Review and Meta-Analysis. J. Intensive Care 2020, 8, 88.

- Del Zompo, F.; De Siena, M.; Ianiro, G.; Gasbarrini, A.; Pompili, M.; Ponziani, F.R. Prevalence of Liver Injury and Correlation with Clinical Outcomes in Patients with COVID-19: Systematic Review with Meta-Analysis. Eur. Rev. Med. Pharmacol. Sci. 2020, 24, 13072–13088.

- Silver, S.A.; Beaubien-Souligny, W.; Shah, P.S.; Harel, S.; Blum, D.; Kishibe, T.; Meraz-Munoz, A.; Wald, R.; Harel, Z. The Prevalence of Acute Kidney Injury in Patients Hospitalized with COVID-19 Infection: A Systematic Review and Meta-Analysis. Kidney Med. 2020, 3, 83–93.e1.

- Ruan, Q.; Yang, K.; Wang, W.; Jiang, L.; Song, J. Clinical Predictors of Mortality Due to COVID-19 Based on an Analysis of Data of 150 Patients from Wuhan, China. Intensive Care Med. 2020, 46, 846–848.

- Steyerberg, E. Clinical Prediction Models: A Practical Approach to Development, Validation, and Updating; Springer: London, UK, 2009; pp. 95–112.

- Zhang, H.; Shi, T.; Wu, X.; Zhang, X.; Wang, K.; Bean, D.; Dobson, R.; Teo, J.T.; Sun, J.; Zhao, P.; et al. Risk Prediction for Poor Outcome and Death in Hospital In-Patients with COVID-19: Derivation in Wuhan, China and External Validation in London, UK. medRxiv 2020.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).