Eye-Tracking for Clinical Ophthalmology with Virtual Reality (VR): A Case Study of the HTC Vive Pro Eye’s Usability

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Group

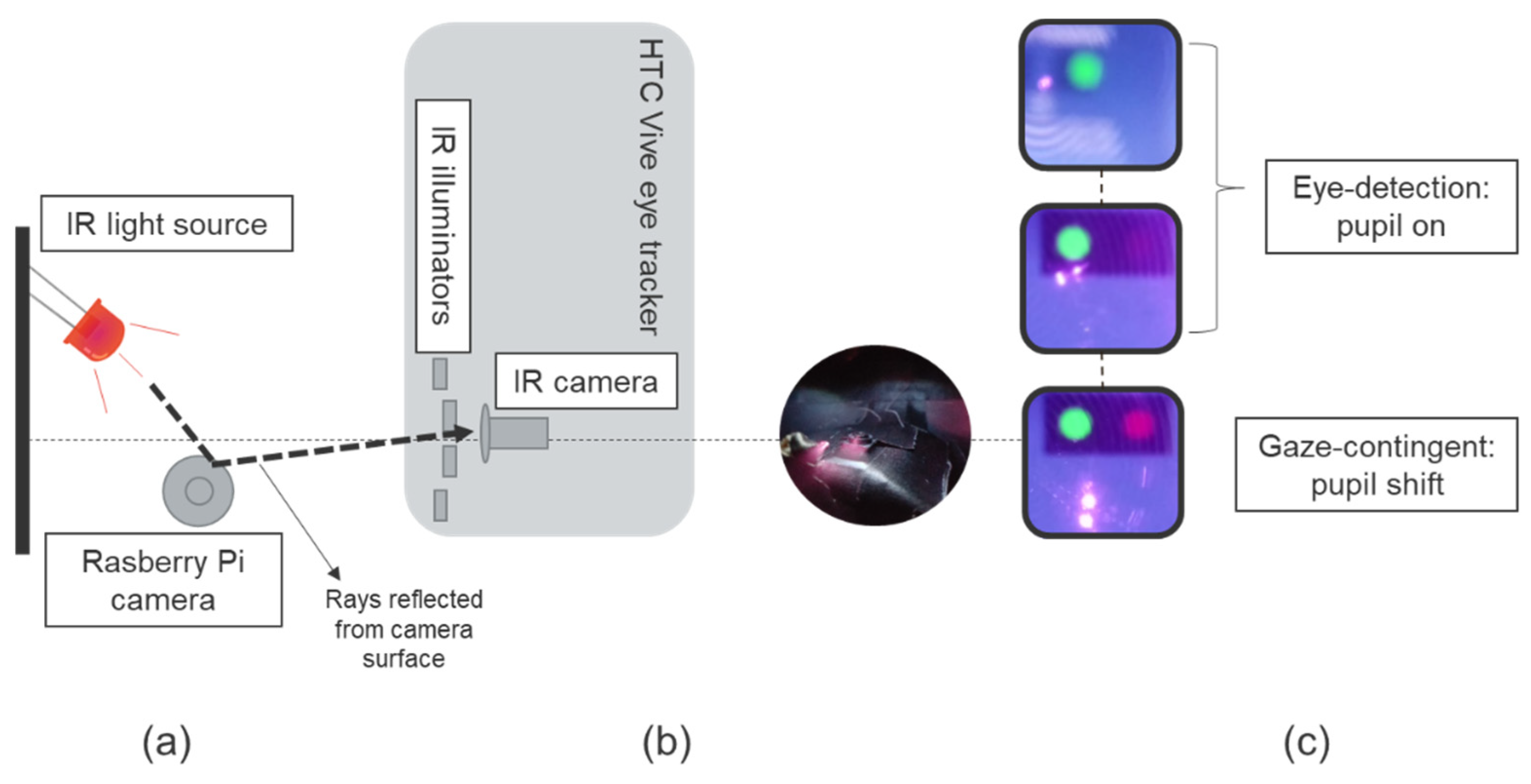

2.2. Set-Up

2.3. Study Parameters

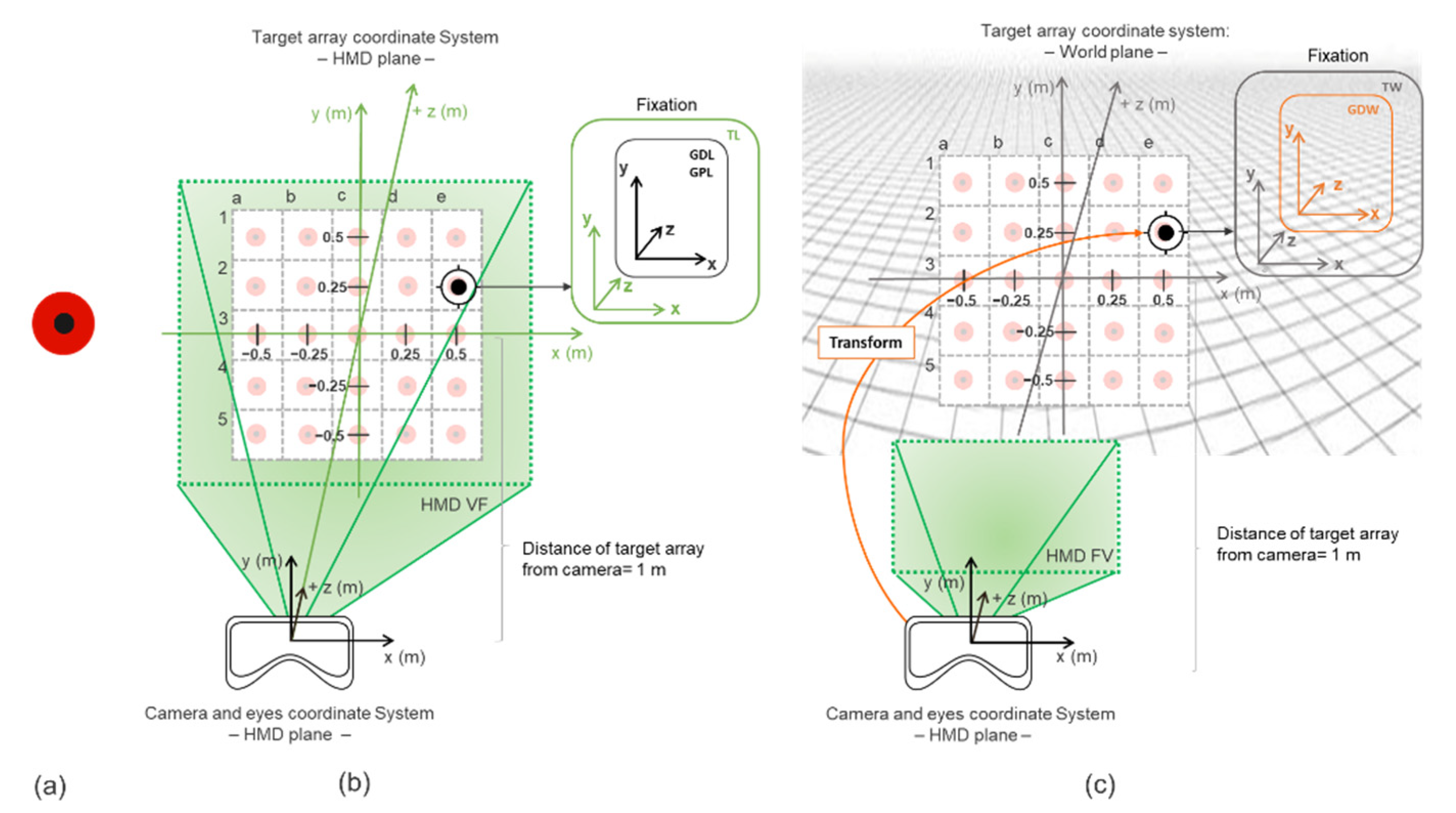

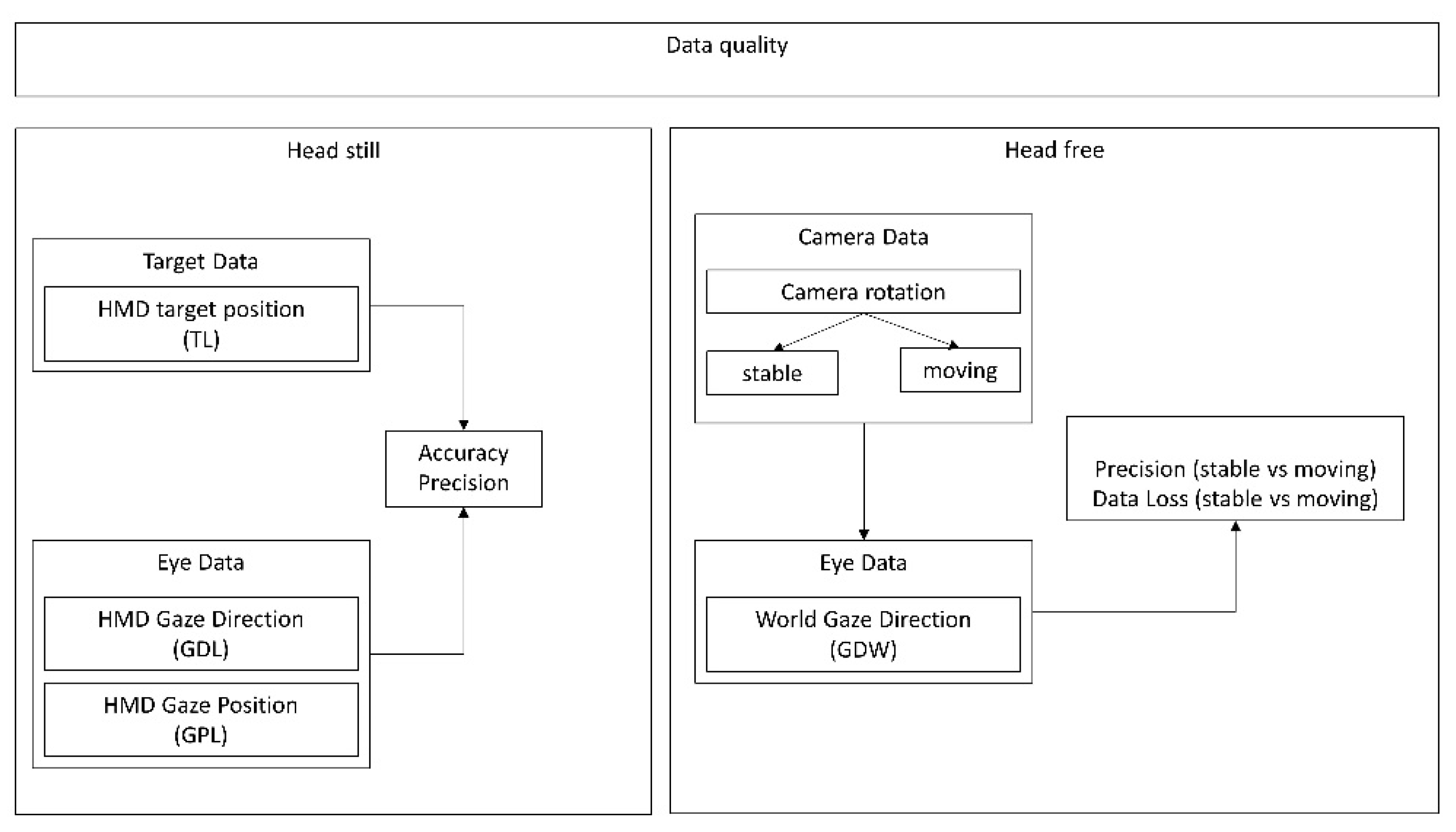

2.3.1. Eye-Tracking Accuracy and Precision Measurements: Head-Still and Head-Free Tests

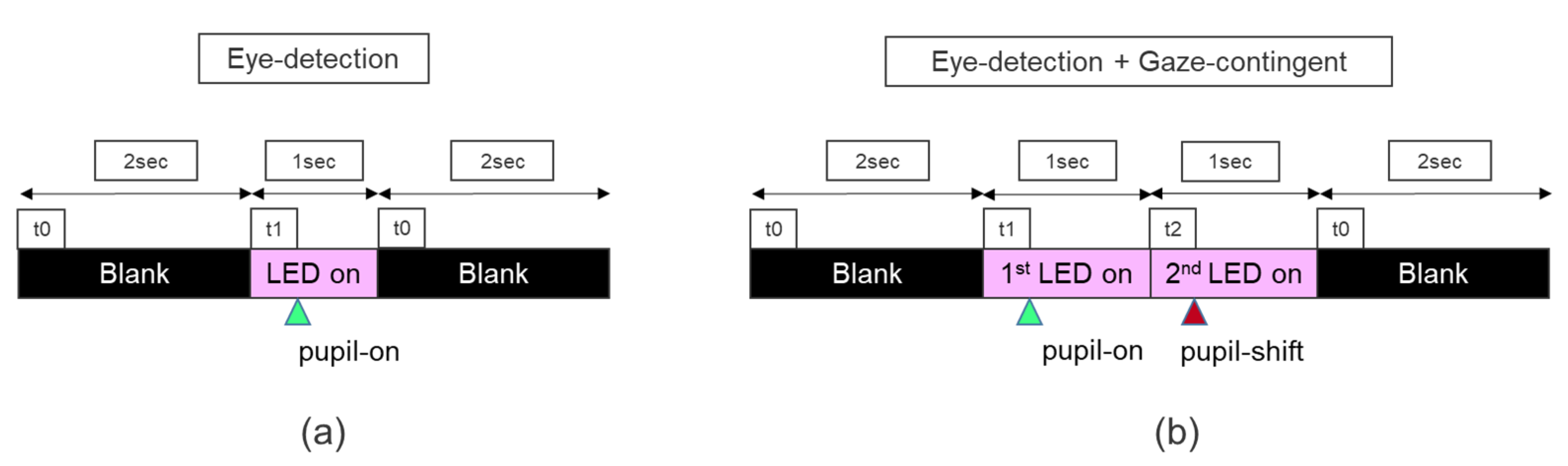

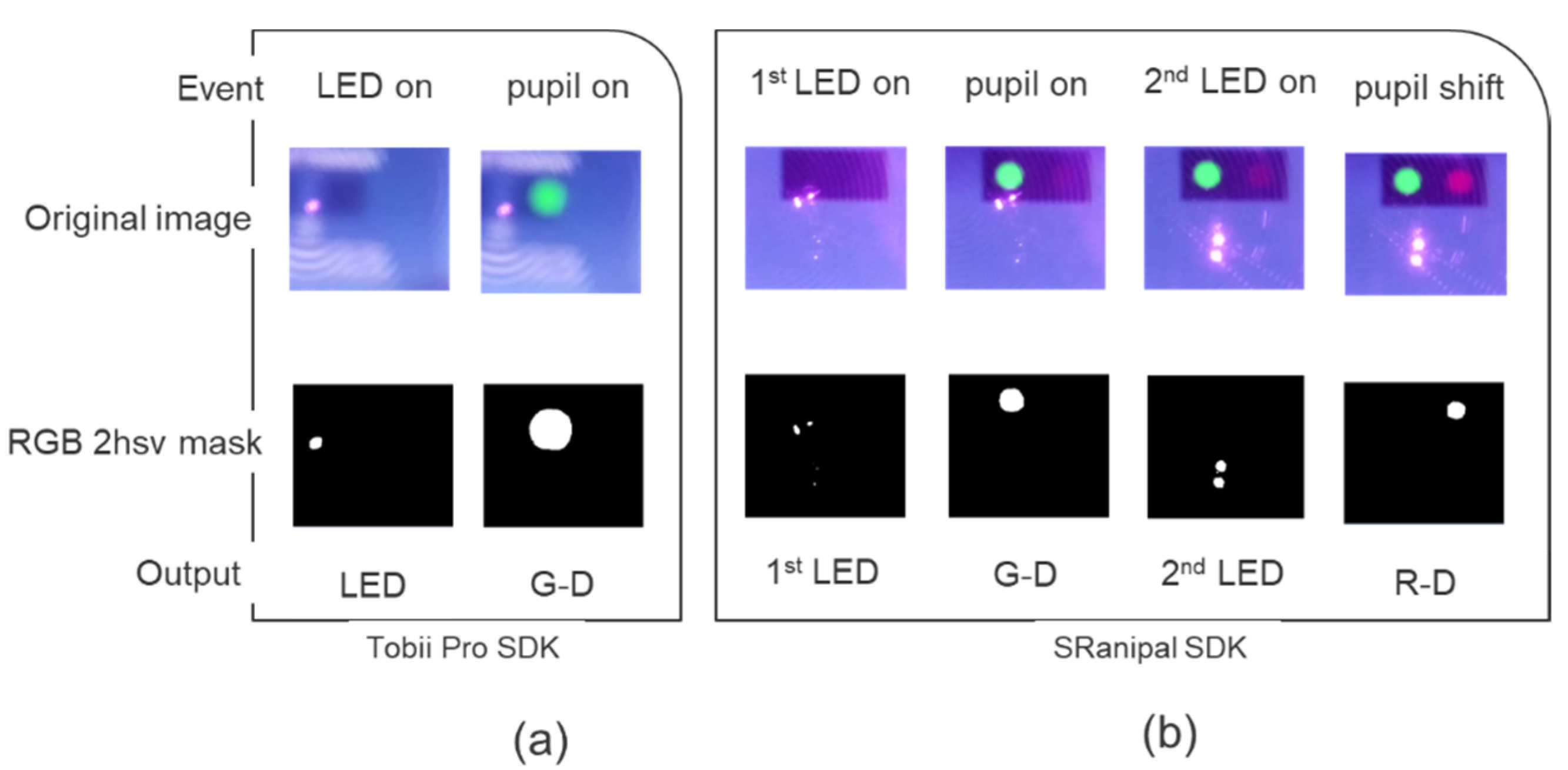

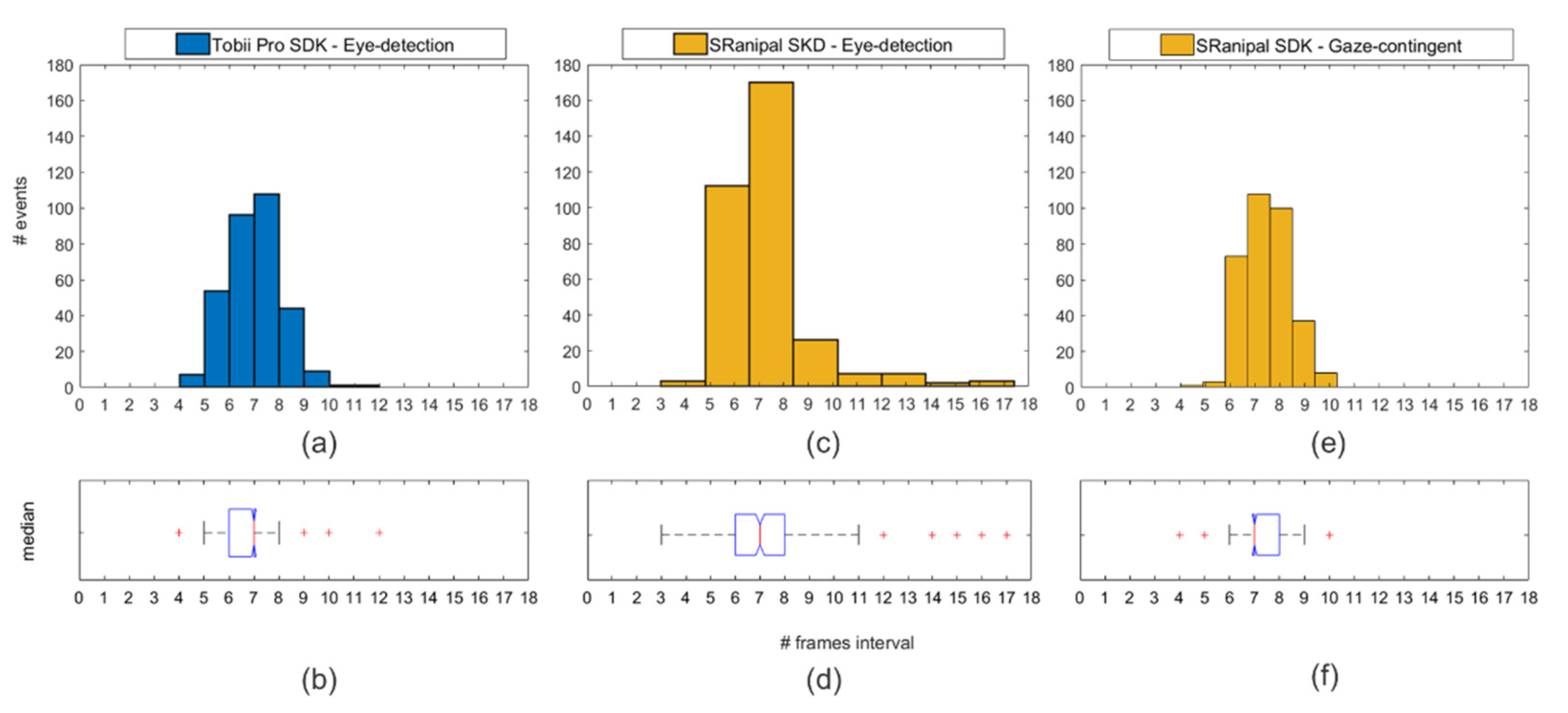

2.3.2. Eye-Tracking Temporal Precision Measurements: Eye-Detection and Gaze-Contingent Tests

2.4. Statistical Analysis

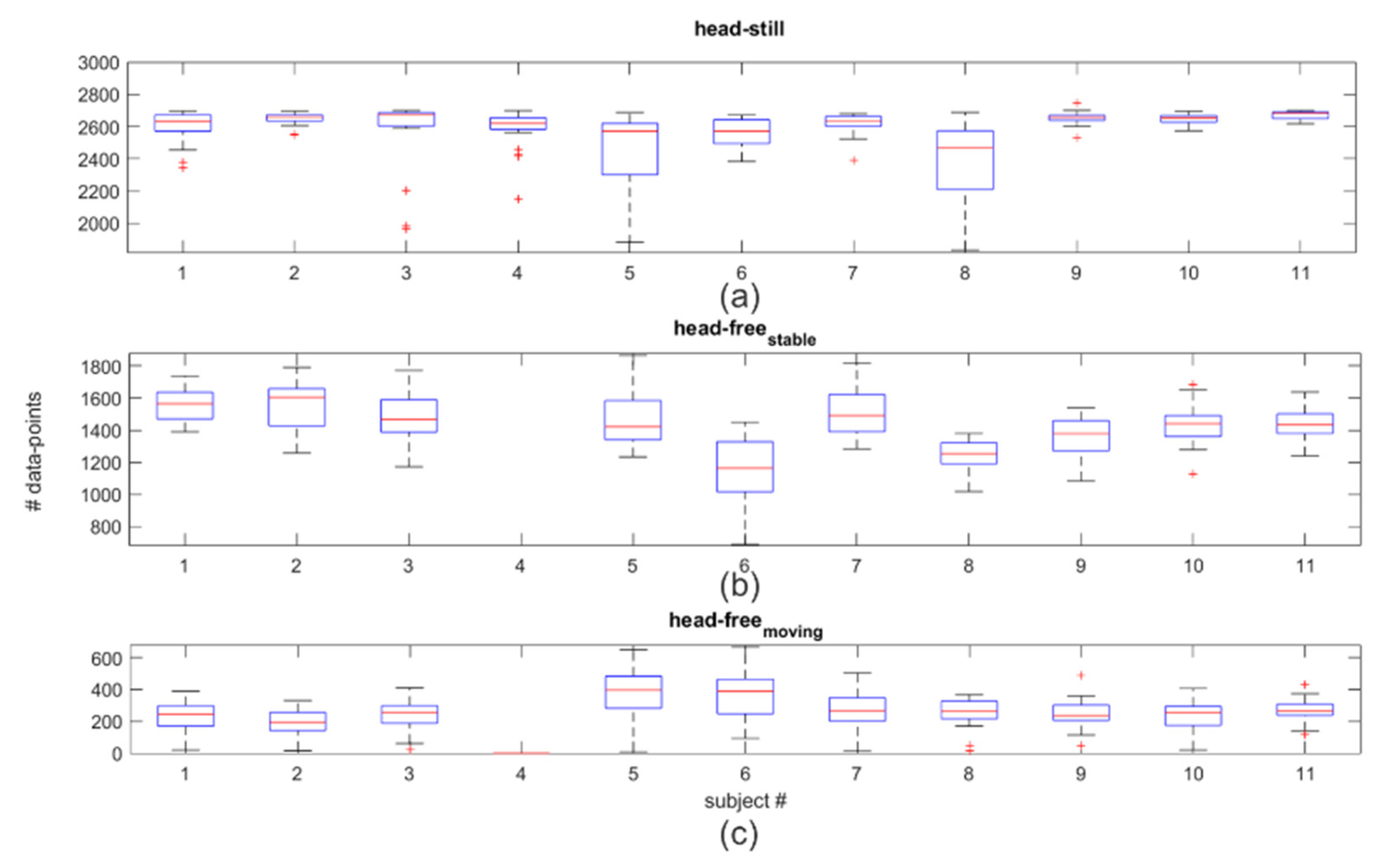

2.4.1. Pre-Processing: Eye-Tracking Accuracy and Precision

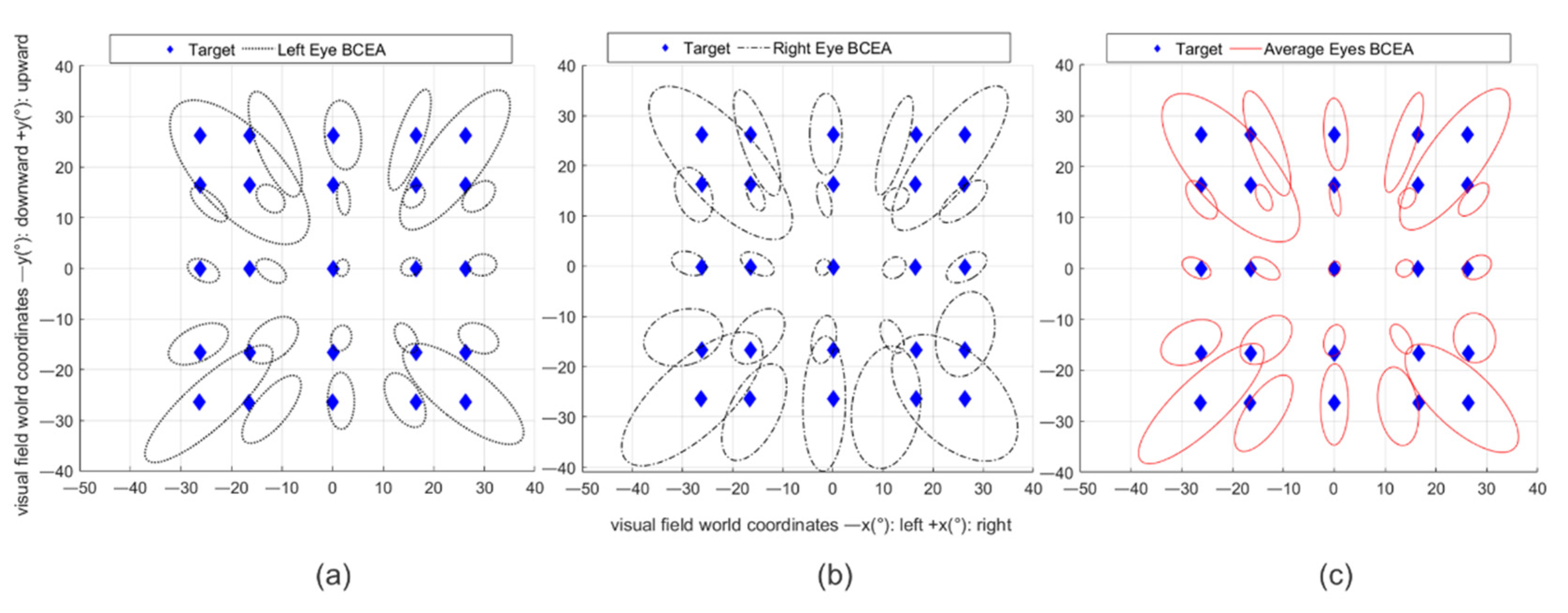

2.4.2. Eye-Tracking Accuracy and Precision

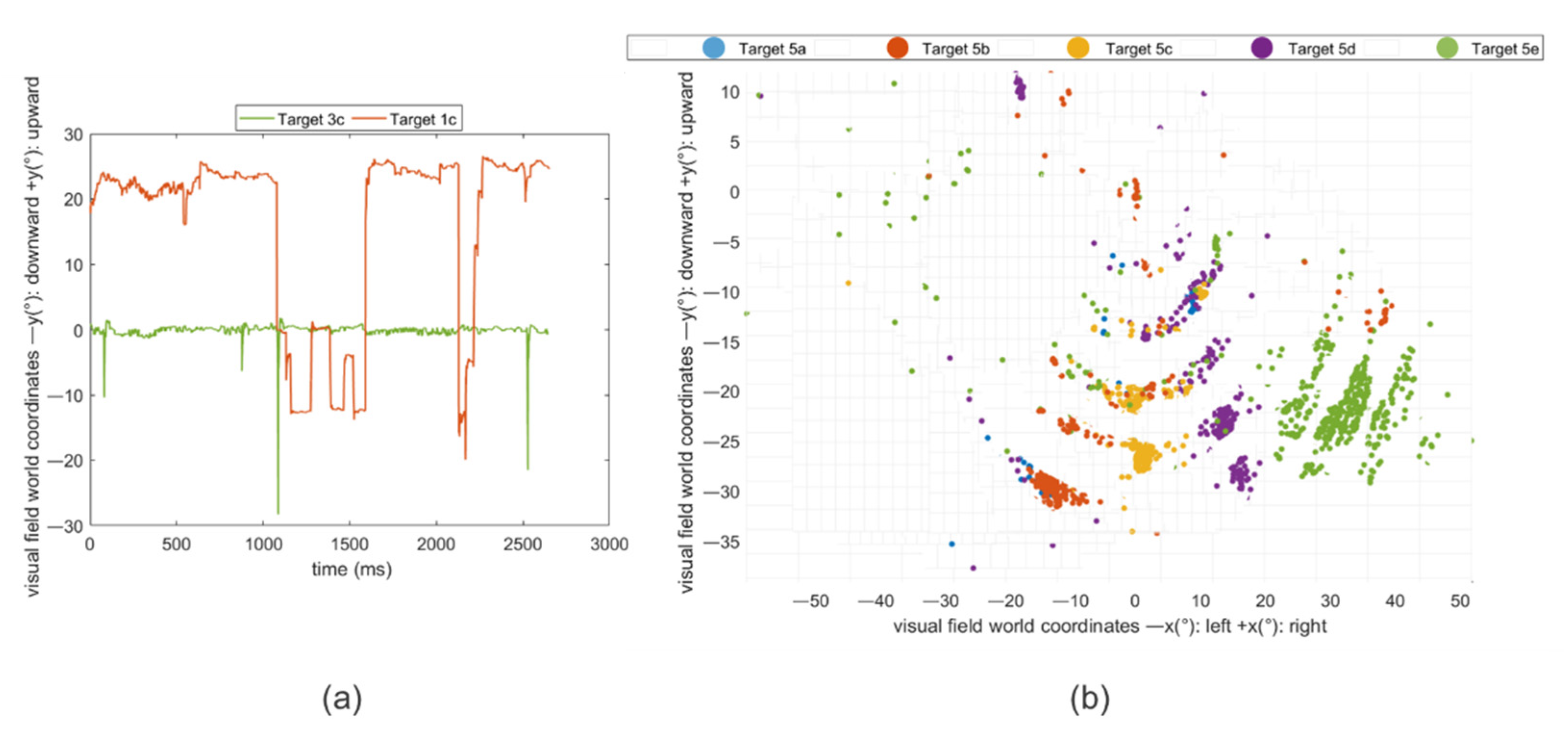
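Eye-tracking data-quality work commonly defines accuracy as the mean angular offset between the recorded gaze and the target, and precision as the root mean square of the angular distances between successive samples (RMS-S2S); the standard deviation of samples around their mean is a frequently used alternative. The following is a minimal sketch of these textbook definitions, assuming gaze and target are available as 3D direction vectors in a common head-fixed frame. The function names are hypothetical, and this is not presented as the exact pipeline used in this study.

```python
import numpy as np


def angular_distance_deg(a, b):
    """Angle in degrees between corresponding rows of two (N, 3) direction arrays."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    # Clip guards against dot products drifting just outside [-1, 1] through rounding.
    cos = np.clip(np.sum(a * b, axis=-1), -1.0, 1.0)
    return np.degrees(np.arccos(cos))


def accuracy_deg(gaze, target):
    """Mean angular offset between gaze samples (N, 3) and one target direction (3,)."""
    gaze = np.asarray(gaze, dtype=float)
    target = np.broadcast_to(np.asarray(target, dtype=float), gaze.shape)
    return float(np.mean(angular_distance_deg(gaze, target)))


def precision_rms_s2s_deg(gaze):
    """Root mean square of angular distances between successive gaze samples (N >= 2)."""
    gaze = np.asarray(gaze, dtype=float)
    step = angular_distance_deg(gaze[:-1], gaze[1:])
    return float(np.sqrt(np.mean(step ** 2)))


def direction(azimuth_deg):
    """Hypothetical helper: unit vector rotated horizontally from straight ahead (+z)."""
    az = np.radians(azimuth_deg)
    return np.array([np.sin(az), 0.0, np.cos(az)])


# Illustrative usage: target straight ahead, samples alternating 0 and 2 deg to the right.
target = direction(0.0)
gaze = np.stack([direction(x) for x in (0.0, 2.0, 0.0, 2.0)])
print(accuracy_deg(gaze, target))    # roughly 1.0 (mean of 0, 2, 0, 2 deg)
print(precision_rms_s2s_deg(gaze))   # roughly 2.0 (every step is 2 deg)
```

RMS-S2S reflects sample-to-sample noise and is insensitive to slow drift, whereas the standard deviation around the mean also absorbs drift; which one is reported changes how "precision" values compare across devices.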

2.4.3. Eye-Tracking Temporal Precision
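Temporal precision is often characterized by the latency between a physical eye event and the moment the tracker (or a gaze-contingent display) reports it, together with the trial-to-trial variability of that latency. A minimal sketch follows, assuming the event times come from an independent reference (for example, an external camera) on a clock already synchronized with the tracker's timestamps. The function names and the matching rule (first detection at or after each event) are illustrative assumptions, not the documented procedure of this study.

```python
import numpy as np


def matched_latencies_ms(event_times_s, detection_times_s):
    """Latency (ms) from each reference event to the first detection at or after it.

    Events with no later detection are dropped. Matching is not one-to-one:
    two events close together may be paired with the same detection.
    """
    events = np.asarray(event_times_s, dtype=float)
    detections = np.sort(np.asarray(detection_times_s, dtype=float))
    # searchsorted(side="left") gives the index of the first detection >= each event.
    idx = np.searchsorted(detections, events, side="left")
    valid = idx < detections.size
    return (detections[idx[valid]] - events[valid]) * 1000.0


def latency_summary(latencies_ms):
    """Mean latency and its sample standard deviation (trial-to-trial jitter)."""
    lat = np.asarray(latencies_ms, dtype=float)
    return float(lat.mean()), float(lat.std(ddof=1))


# Illustrative usage with made-up timestamps in seconds.
lat = matched_latencies_ms([0.0, 1.0, 2.0], [0.05, 1.06, 2.04])
mean_ms, sd_ms = latency_summary(lat)
print(lat, mean_ms, sd_ms)
```

Reporting the standard deviation alongside the mean matters for gaze-contingent paradigms: a constant delay can be compensated, while jitter cannot.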

3. Results

3.1. Pre-Processing: Eye-Tracking Accuracy and Precision

3.2. Eye-Tracking Accuracy and Precision

3.3. Eye-Tracking Temporal Precision

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| VF (°) | −27° L | −27° R | −27° B | −13° L | −13° R | −13° B | 0° L | 0° R | 0° B | 13° L | 13° R | 13° B | 27° L | 27° R | 27° B |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 27° | 10.77 | 10.09 | 9.95 | 5.84 | 5.16 | 5.28 | 3.05 | 3.63 | 3.1 | 5.13 | 4.53 | 4.58 | 8.01 | 8.6 | 7.93 |
| | 2.64 | 2.67 | 2.29 | 2.27 | 1.91 | 1.86 | 2.84 | 4.29 | 2.79 | 1.9 | 1.98 | 1.72 | 3.18 | 2.18 | 2.25 |
| 13° | 4.21 | 3.66 | 3.61 | 3.8 | 3.47 | 3.57 | 2.87 | 3.19 | 2.96 | 3.28 | 3.67 | 3.36 | 3.08 | 4.82 | 3.55 |
| | 2.16 | 2.4 | 1.94 | 2.31 | 2.11 | 1.87 | 1.87 | 2.1 | 1.67 | 2.12 | 2.06 | 1.75 | 2.22 | 2.03 | 1.88 |
| 0° | 2.4 | 2.66 | 2.2 | 3.09 | 3.3 | 3.11 | 0.94 | 1.02 | 0.74 | 3.12 | 2.98 | 2.87 | 2.34 | 3.21 | 2.37 |
| | 1.95 | 2.08 | 1.79 | 1.73 | 1.81 | 1.55 | 1.64 | 1.8 | 1.45 | 1.98 | 1.95 | 1.68 | 1.94 | 1.95 | 1.76 |
| −13° | 4.05 | 5.32 | 4.3 | 4.14 | 4.16 | 4.1 | 3.27 | 2.34 | 2.73 | 4.78 | 3.98 | 4.33 | 3.49 | 4.98 | 3.75 |
| | 2.02 | 3.4 | 2.35 | 1.63 | 3.16 | 2.03 | 1.73 | 3.91 | 2.31 | 1.87 | 2.08 | 1.67 | 2.09 | 2.35 | 1.92 |
| −27° | 6.95 | 9.44 | 7.41 | 4.57 | 6.68 | 5.39 | 2.44 | 7.13 | 4.06 | 4.63 | 8.12 | 6.01 | 5.62 | 8.7 | 6.44 |
| | 2.02 | 4.81 | 2.89 | 2.51 | 4.32 | 2.81 | 3.12 | 7.13 | 4.06 | 2.87 | 5.99 | 3.5 | 4.13 | 3.4 | 2.95 |

| Percentile (Head-Still) | Accuracy (°) | Precision (°) |
|---|---|---|
| 25% | 3.21 | 1.63 |
| 50% | 3.98 | 1.95 |
| 75% | 4.88 | 2.51 |
| 90% | 6.06 | 3.55 |
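A percentile summary such as the one in this table can be produced from pooled error values with NumPy's `np.percentile`. The sketch below assumes the inputs are angular errors in degrees, discards non-finite entries such as invalid samples, and uses NumPy's default linear interpolation. The function name is hypothetical, the inputs are illustrative rather than the study data, and the exact pooling behind the table (per sample or per target) is not specified here.

```python
import numpy as np


def percentile_summary(values_deg, qs=(25, 50, 75, 90)):
    """Return {percentile: value} over the finite entries of values_deg."""
    v = np.asarray(values_deg, dtype=float)
    v = v[np.isfinite(v)]  # drop NaN/inf, e.g. samples flagged invalid upstream
    return {q: float(np.percentile(v, q)) for q in qs}


# Illustrative values only; one NaN stands in for an invalid sample.
summary = percentile_summary([1.0, 2.0, np.nan, 3.0, 4.0, 5.0])
for q, value in summary.items():
    print(f"{q}%: {value:.2f} deg")
# prints 25%: 2.00 deg, 50%: 3.00 deg, 75%: 4.00 deg, 90%: 4.60 deg (one per line)
```

Percentiles are less sensitive than means to the large errors seen at peripheral targets, which is one reason they suit skewed error distributions like these.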

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sipatchin, A.; Wahl, S.; Rifai, K. Eye-Tracking for Clinical Ophthalmology with Virtual Reality (VR): A Case Study of the HTC Vive Pro Eye’s Usability. Healthcare 2021, 9, 180. https://doi.org/10.3390/healthcare9020180
