Systematic Review of Prehospital Prediction Models for Identifying Intracerebral Haemorrhage in Suspected Stroke Patients

Abstract

1. Introduction

2. Methods

2.1. Protocol and Registration

2.2. Eligibility Criteria

2.3. Search Strategy

2.4. Study Selection

2.5. Data Extraction

- First author, year, country;

- Study design;

- Study population demographics and baseline characteristics;

- Data collection setting;

- Details of the stroke prediction model and its variables;

- The reference standard used to determine the final diagnosis;

- Reported or calculated diagnostic accuracy metrics, including sensitivity, specificity, positive and negative predictive values, and area under the receiver operating characteristic curve (AUC) with their 95% confidence intervals (CI).
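Where a study reports only a 2×2 table, the accuracy metrics listed above can be derived from the four cell counts. The following is a minimal sketch, not the review's own procedure: the counts are hypothetical, the function names are illustrative, and the Wilson score interval is an assumed choice of 95% CI method (the calculator cited in the reference list may use a different interval, so its bounds can differ slightly).

```python
from math import sqrt


def wilson_ci(successes, total, z=1.96):
    """Wilson score interval for a binomial proportion (about 95% for z=1.96).

    Assumption: the Wilson method is used here for illustration only.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def diagnostic_metrics(tp, fp, fn, tn):
    """Return {metric: (estimate, ci_low, ci_high)} from 2x2 cell counts."""
    pairs = {
        "sensitivity": (tp, tp + fn),  # ICH cases correctly flagged
        "specificity": (tn, tn + fp),  # non-ICH cases correctly cleared
        "ppv": (tp, tp + fp),          # flagged patients who truly have ICH
        "npv": (tn, tn + fn),          # cleared patients who truly lack ICH
    }
    return {name: (k / n, *wilson_ci(k, n)) for name, (k, n) in pairs.items()}


# Hypothetical counts, chosen only to illustrate the arithmetic.
metrics = diagnostic_metrics(tp=16, fp=77, fn=16, tn=307)
for name, (est, low, high) in metrics.items():
    print(f"{name}: {est:.1%} ({low:.1%} to {high:.1%})")
```

PPV and NPV computed this way depend on the prevalence of ICH in the sample, so they do not transfer directly to a population with a different case mix.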

2.6. Risk of Bias and Applicability Assessment

2.7. Data Synthesis

2.8. Patient and Public Involvement

3. Results

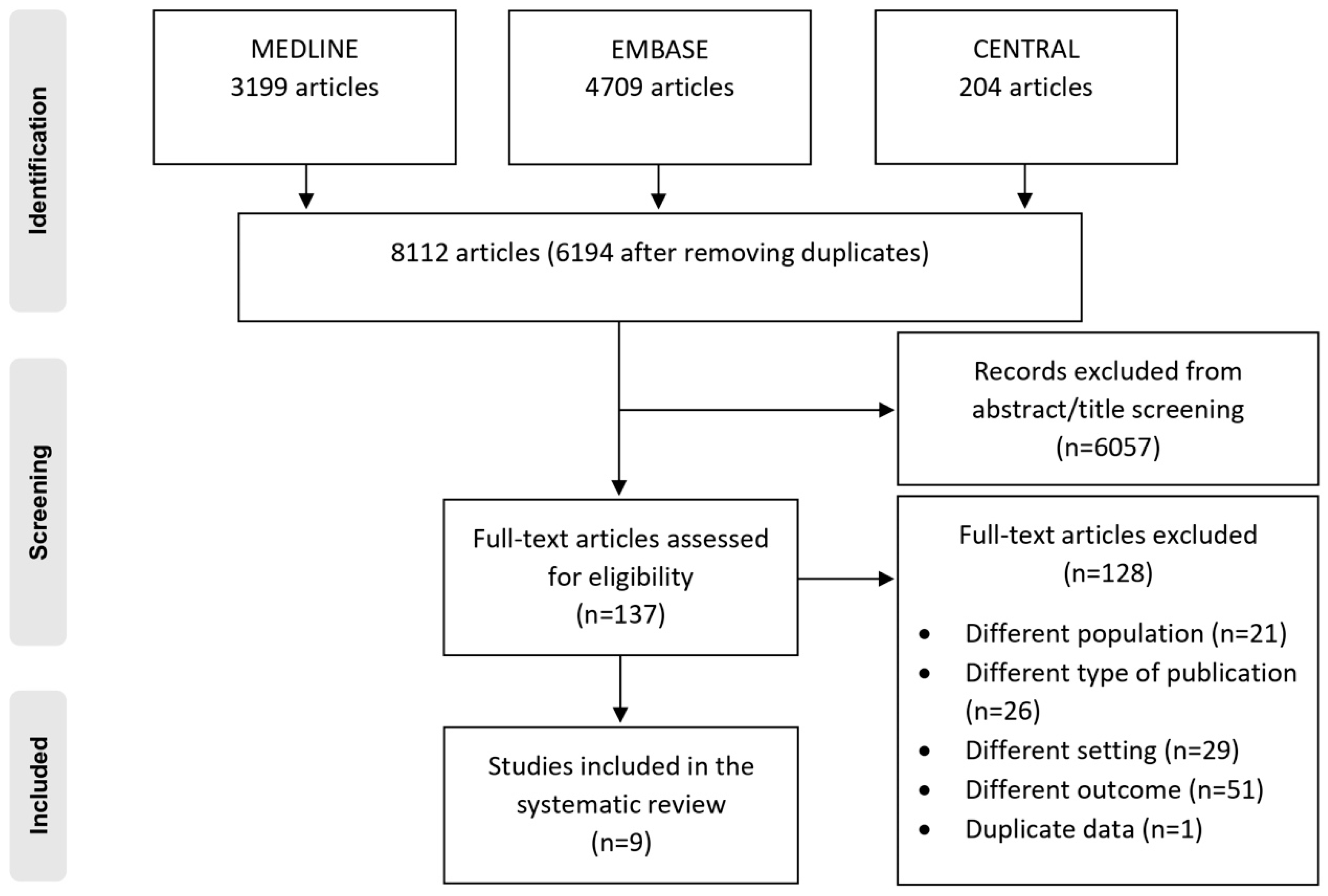

3.1. Study Selection

3.2. Characteristics of the Included Studies

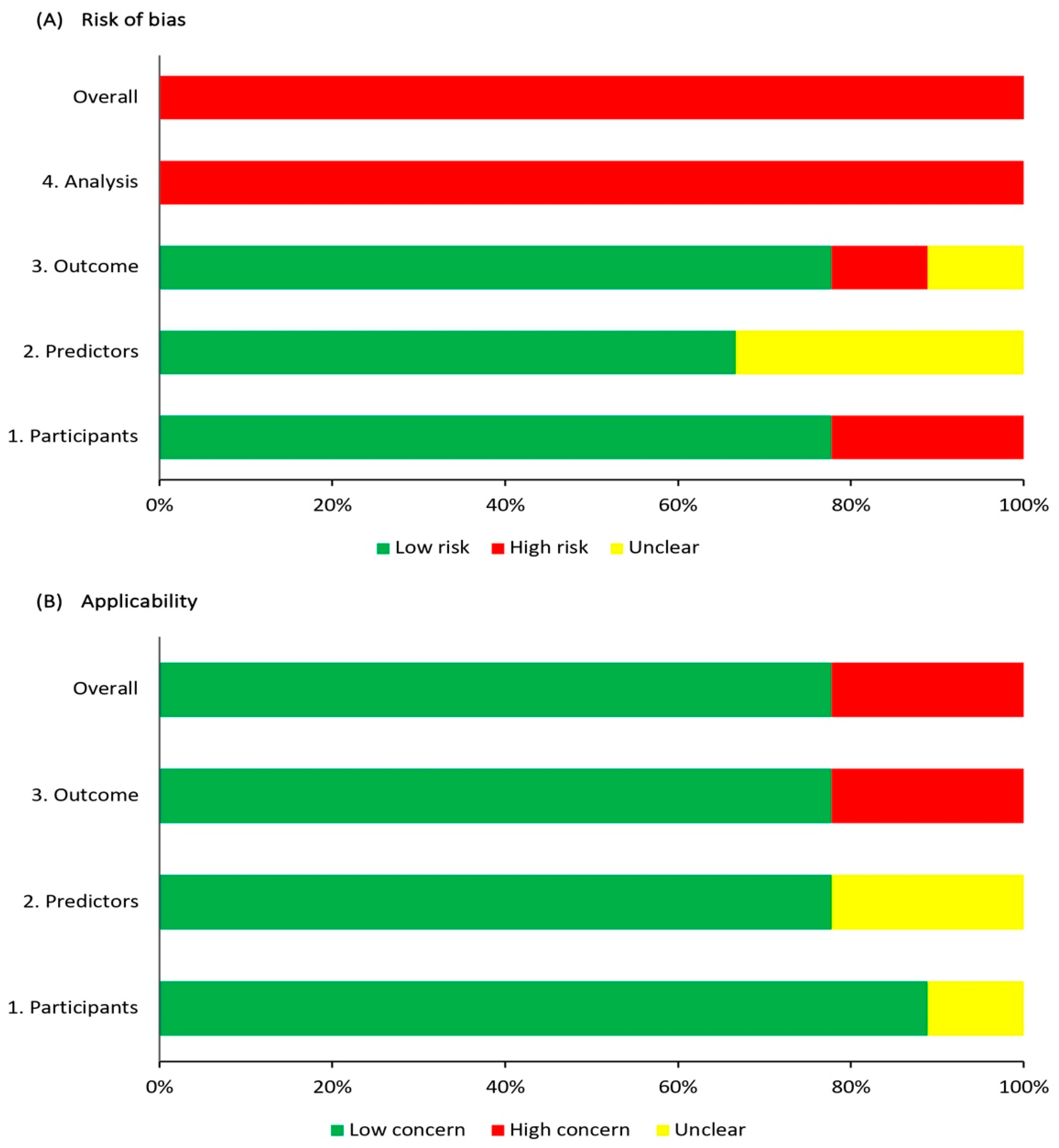

3.3. Risk of Bias and Applicability Assessment

3.4. Model Development and Final Predictor Variables

3.5. Model Performance and Validation

4. Discussion and Recommendations

Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Paroutoglou, K.; Parry-Jones, A.R. Hyperacute management of intracerebral haemorrhage. Clin. Med. 2018, 18 (Suppl. 2), s9–s12.

2. van Asch, C.J.; Luitse, M.J.; Rinkel, G.J.; van der Tweel, I.; Algra, A.; Klijn, C.J. Incidence, case fatality, and functional outcome of intracerebral haemorrhage over time, according to age, sex, and ethnic origin: A systematic review and meta-analysis. Lancet Neurol. 2010, 9, 167–176.

3. Bowry, R.; Parker, S.A.; Bratina, P.; Singh, N.; Yamal, J.M.; Rajan, S.S.; Jacob, A.P.; Phan, K.; Czap, A.; Grotta, J.C. Hemorrhage Enlargement Is More Frequent in the First 2 Hours: A Prehospital Mobile Stroke Unit Study. Stroke 2022, 53, 2352–2360.

4. Greenberg, S.M.; Ziai, W.C.; Cordonnier, C.; Dowlatshahi, D.; Francis, B.; Goldstein, J.N.; Hemphill, J.C., 3rd; Johnson, R.; Keigher, K.M.; Mack, W.J.; et al. 2022 Guideline for the Management of Patients with Spontaneous Intracerebral Hemorrhage: A Guideline From the American Heart Association/American Stroke Association. Stroke 2022, 53, e282–e361.

5. Kleindorfer, D.O.; Miller, R.; Moomaw, C.J.; Alwell, K.; Broderick, J.P.; Khoury, J.; Woo, D.; Flaherty, M.L.; Zakaria, T.; Kissela, B.M. Designing a message for public education regarding stroke: Does FAST capture enough stroke? Stroke 2007, 38, 2864–2868.

6. Oostema, J.A.; Chassee, T.; Baer, W.; Edberg, A.; Reeves, M.J. Accuracy and Implications of Hemorrhagic Stroke Recognition by Emergency Medical Services. Prehosp. Emerg. Care 2021, 25, 796–801.

7. Li, G.; Lin, Y.; Yang, J.; Anderson, C.S.; Chen, C.; Liu, F.; Billot, L.; Li, Q.; Chen, X.; Liu, X.; et al. Intensive Ambulance-Delivered Blood-Pressure Reduction in Hyperacute Stroke. N. Engl. J. Med. 2024, 390, 1862–1872.

8. Richards, C.T.; Oostema, J.A.; Chapman, S.N.; Mamer, L.E.; Brandler, E.S.; Alexandrov, A.W.; Czap, A.L.; Martinez-Gutierrez, J.C.; Martin-Gill, C.; Panchal, A.R.; et al. Prehospital Stroke Care Part 2: On-Scene Evaluation and Management by Emergency Medical Services Practitioners. Stroke 2023, 54, 1416–1425.

9. Ramos-Pachón, A.; Rodríguez-Luna, D.; Martí-Fàbregas, J.; Millán, M.; Bustamante, A.; Martínez-Sánchez, M.; Serena, J.; Terceño, M.; Vera-Cáceres, C.; Camps-Renom, P.; et al. Effect of Bypassing the Closest Stroke Center in Patients with Intracerebral Hemorrhage: A Secondary Analysis of the RACECAT Randomized Clinical Trial. JAMA Neurol. 2023, 80, 1028–1036.

10. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

11. Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan-a web and mobile app for systematic reviews. Syst. Rev. 2016, 5, 210.

12. Moons, K.G.M.; Wolff, R.F.; Riley, R.D.; Whiting, P.F.; Westwood, M.; Collins, G.S.; Reitsma, J.B.; Kleijnen, J.; Mallett, S. PROBAST: A Tool to Assess Risk of Bias and Applicability of Prediction Model Studies: Explanation and Elaboration. Ann. Intern. Med. 2019, 170, W1–W33.

13. MedCalc Software Ltd. Diagnostic Test Evaluation Calculator. Version 22.013. Available online: https://www.medcalc.org/calc/diagnostic_test.php (accessed on 5 October 2023).

14. Woisetschläger, C.; Kittler, H.; Oschatz, E.; Bur, A.; Lang, W.; Waldenhofer, U.; Laggner, A.N.; Hirschl, M.M. Out-of-hospital diagnosis of cerebral infarction versus intracranial hemorrhage. Intensive Care Med. 2000, 26, 1561–1565.

15. Yamashita, S.; Kimura, K.; Iguchi, Y.; Shibazaki, K.; Watanabe, M.; Iwanaga, T. Kurashiki Prehospital Stroke Subtyping Score (KP3S) as a means of distinguishing ischemic from hemorrhagic stroke in emergency medical services. Eur. Neurol. 2011, 65, 233–238.

16. Jin, H.Q.; Wang, J.C.; Sun, Y.A.; Lyu, P.; Cui, W.; Liu, Y.Y.; Zhen, Z.G.; Huang, Y.N. Prehospital Identification of Stroke Subtypes in Chinese Rural Areas. Chin. Med. J. 2016, 129, 1041–1046.

17. Uchida, K.; Yoshimura, S.; Hiyama, N.; Oki, Y.; Matsumoto, T.; Tokuda, R.; Yamaura, I.; Saito, S.; Takeuchi, M.; Shigeta, K.; et al. Clinical Prediction Rules to Classify Types of Stroke at Prehospital Stage. Stroke 2018, 49, 1820–1827.

18. Chiquete, E.; Jiménez-Ruiz, A.; García-Grimshaw, M.; Domínguez-Moreno, R.; Rodríguez-Perea, E.; Trejo-Romero, P.; Ruiz-Ruiz, E.; Sandoval-Rodríguez, V.; Gómez-Piña, J.J.; Ramírez-García, G.; et al. Prediction of acute neurovascular syndromes with prehospital clinical features witnessed by bystanders. Neurol. Sci. 2021, 42, 3217–3224.

19. Uchida, K.; Yoshimura, S.; Sakakibara, F.; Kinjo, N.; Araki, H.; Saito, S.; Morimoto, T. Simplified Prehospital Prediction Rule to Estimate the Likelihood of 4 Types of Stroke: The 7-Item Japan Urgent Stroke Triage (JUST-7) Score. Prehosp. Emerg. Care 2021, 25, 465–474.

20. Geisler, F.; Wesirow, M.; Ebinger, M.; Kunz, A.; Rozanski, M.; Waldschmidt, C.; Weber, J.E.; Wendt, M.; Winter, B.; Audebert, H.J. Probability assessment of intracerebral hemorrhage in prehospital emergency patients. Neurol. Res. Pract. 2021, 3, 1.

21. Hayashi, Y.; Shimada, T.; Hattori, N.; Shimazui, T.; Yoshida, Y.; Miura, R.E.; Yamao, Y.; Abe, R.; Kobayashi, E.; Iwadate, Y.; et al. A prehospital diagnostic algorithm for strokes using machine learning: A prospective observational study. Sci. Rep. 2021, 11, 20519.

22. Uchida, K.; Kouno, J.; Yoshimura, S.; Kinjo, N.; Sakakibara, F.; Araki, H.; Morimoto, T. Development of Machine Learning Models to Predict Probabilities and Types of Stroke at Prehospital Stage: The Japan Urgent Stroke Triage Score Using Machine Learning (JUST-ML). Transl. Stroke Res. 2022, 13, 370–381.

23. Runchey, S.; McGee, S. Does this patient have a hemorrhagic stroke? Clinical findings distinguishing hemorrhagic stroke from ischemic stroke. JAMA 2010, 303, 2280–2286.

24. Mwita, C.C.; Kajia, D.; Gwer, S.; Etyang, A.; Newton, C.R. Accuracy of clinical stroke scores for distinguishing stroke subtypes in resource poor settings: A systematic review of diagnostic test accuracy. J. Neurosci. Rural Pract. 2014, 5, 330–339.

25. Lee, S.E.; Choi, M.H.; Kang, H.J.; Lee, S.J.; Lee, J.S.; Lee, Y.; Hong, J.M. Stepwise stroke recognition through clinical information, vital signs, and initial labs (CIVIL): Electronic health record-based observational cohort study. PLoS ONE 2020, 15, e0231113.

26. Ye, S.; Pan, H.; Li, W.; Wang, J.; Zhang, H. Development and validation of a clinical nomogram for differentiating hemorrhagic and ischemic stroke prehospital. BMC Neurol. 2023, 23, 95.

27. Kim, H.C.; Nam, C.M.; Jee, S.H.; Suh, I. Comparison of blood pressure-associated risk of intracerebral hemorrhage and subarachnoid hemorrhage: Korea Medical Insurance Corporation study. Hypertension 2005, 46, 393–397.

28. Riley, R.D.; Ensor, J.; Snell, K.I.E.; Harrell, F.E., Jr.; Martin, G.P.; Reitsma, J.B.; Moons, K.G.M.; Collins, G.; van Smeden, M. Calculating the sample size required for developing a clinical prediction model. BMJ 2020, 368, m441.

29. Collins, G.S.; Moons, K.G.M.; Dhiman, P.; Riley, R.D.; Beam, A.L.; Van Calster, B.; Ghassemi, M.; Liu, X.; Reitsma, J.B.; van Smeden, M.; et al. TRIPOD+AI statement: Updated guidance for reporting clinical prediction models that use regression or machine learning methods. BMJ 2024, 385, e078378.

30. Almubayyidh, M.; Alghamdi, I.; Parry-Jones, A.R.; Jenkins, D. Prehospital identification of intracerebral haemorrhage: A scoping review of early clinical features and portable devices. BMJ Open 2024, 14, e079316.

31. Wolcott, Z.C.; English, S.W. Artificial intelligence to enhance prehospital stroke diagnosis and triage: A perspective. Front. Neurol. 2024, 15, 1389056.

32. Kumar, A.; Misra, S.; Yadav, A.K.; Sagar, R.; Verma, B.; Grover, A.; Prasad, K. Role of glial fibrillary acidic protein as a biomarker in differentiating intracerebral haemorrhage from ischaemic stroke and stroke mimics: A meta-analysis. Biomarkers 2020, 25, 1–8.

33. Kalra, L.-P.; Zylyftari, S.; Blums, K.; Barthelmes, S.; Humm, S.; Baum, H.; Meckel, S.; Luger, S.; Foerch, C. Abstract 57: Rapid Prehospital Diagnosis of Intracerebral Hemorrhage by Measuring GFAP on a Point-of-Care Platform. Stroke 2024, 55 (Suppl. 1), A57.

| Inclusion Criteria |

|

| Exclusion Criteria |

|

| Reference, Year; Country | Study Design | Statistical Model Used | Validation Type | Sample Size (ICH Cases) | Name of the Prediction Model | Target Condition | Data Collection Setting | Gold Standard |
|---|---|---|---|---|---|---|---|---|
| Woisetschläger [14], 2000; Austria | Retrospective-prospective study | Multivariable logistic regression | NR | 224 (118) | Out-of-hospital model | ICH and IS | Prehospital and in-hospital | CT scan |
| Yamashita [15], 2011; Japan | Retrospective study | Multivariable logistic regression | NR | 227 (100) | KP3S | IS | Prehospital and in-hospital | CT or MRI scan |
| Jin [16], 2016; China | Prospective study | Multivariable logistic regression | Training and test split | DC: 1101 (547) VC: 189 (99) | NR | ICH and IS | Prehospital | CT or MRI scan |
| Uchida [17], 2018; Japan | Prospective study | Multivariable logistic regression | External cohort validation | DC: 1229 (169) VC: 1007 (183) | JUST score | Stroke subtypes | Prehospital | CT or MRI scan |
| Chiquete [18], 2021; Mexico | Prospective study | Multiple variable analysis | NR | 369 (107) | NR | Stroke subtypes | Prehospital | CT or MRI scan |
| Uchida [19], 2021; Japan | Retrospective-prospective study | Multivariable logistic regression | External cohort validation | DC: 2236 (352) VC: 964 (138) | JUST-7 score | Stroke subtypes | Prehospital | CT or MRI scan |
| Geisler [20], 2021; Germany | Retrospective study | Multiple variable analysis | External cohort validation | DC: 416 (32) VC: 285 (33) | ph-ICH score | ICH | Prehospital and in-hospital | CT or MRI scan |
| Hayashi [21], 2021; Japan | Prospective study | ML algorithms: logistic regression, random forest, SVM, and XGBoost | Training and test split | DC: 1156 (271) VC: 290 (68) | NR | Stroke subtypes and non-stroke diagnoses | Prehospital | CT or MRI scan |
| Uchida [22], 2022; Japan | Retrospective-prospective study | ML algorithms: logistic regression, random forest, and XGBoost | External cohort validation | DC: 3178 (487) VC: 3127 (372) | JUST-ML | Stroke subtypes and non-stroke diagnoses | Prehospital | CT or MRI scan |

| Reference, Year | Risk of Bias: Participants | Risk of Bias: Predictors | Risk of Bias: Outcome | Risk of Bias: Analysis | Applicability: Participants | Applicability: Predictors | Applicability: Outcome | Overall: Risk of Bias | Overall: Applicability |
|---|---|---|---|---|---|---|---|---|---|
| Woisetschläger [14], 2000 | − | ? | − | − | ? | ? | − | − | − |
| Yamashita [15], 2011 | − | ? | ? | − | + | ? | − | − | − |
| Jin [16], 2016 | + | + | + | − | + | + | + | − | + |
| Uchida [17], 2018 | + | + | + | − | + | + | + | − | + |
| Chiquete [18], 2021 | + | + | + | − | + | + | + | − | + |
| Uchida [19], 2021 | + | + | + | − | + | + | + | − | + |
| Geisler [20], 2021 | + | + | + | − | + | + | + | − | + |
| Hayashi [21], 2021 | + | ? | + | − | + | + | + | − | + |
| Uchida [22], 2022 | + | + | + | − | + | + | + | − | + |
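The overall columns in the table above follow from the domain-level ratings. The sketch below shows one way to express that aggregation, assuming the usual PROBAST convention: `+` means low risk or low concern, `−` means high, `?` means unclear, and any high domain makes the overall rating high. The symbol legend is not shown in the table itself, so the mapping is an assumption, and the function name is illustrative.

```python
LOW = "+"
HIGH = "\u2212"  # the minus sign used in the table (U+2212), not an ASCII hyphen
UNCLEAR = "?"


def overall_judgement(domain_ratings):
    """Combine domain ratings into one overall rating.

    Assumed rule: high if any domain is high; otherwise unclear if any
    domain is unclear; otherwise low.
    """
    ratings = list(domain_ratings)
    if not ratings:
        raise ValueError("at least one domain rating is required")
    if HIGH in ratings:
        return HIGH
    if UNCLEAR in ratings:
        return UNCLEAR
    return LOW


# Domain ratings copied from the Hayashi [21] row of the table.
risk_of_bias = [LOW, UNCLEAR, LOW, HIGH]  # participants, predictors, outcome, analysis
applicability = [LOW, LOW, LOW]           # participants, predictors, outcome

print(overall_judgement(risk_of_bias))    # high, driven by the analysis domain
print(overall_judgement(applicability))   # low
```

Under this rule, every study in the table receives a high overall risk of bias because each is rated high in the analysis domain.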

| Reference, Year | Comparison | Final Predictive Variables (Score) | Classification System | Sensitivity (95% CI) | Specificity (95% CI) | PPV (95% CI) | NPV (95% CI) | AUC (95% CI) |
|---|---|---|---|---|---|---|---|---|
| Woisetschläger [14], 2000 | ICH vs. IS | | −3 | 0% | 93% (86.9–97.3) | 0% | 46% (44.4–46.9) | 0.90 (0.86–0.94) |
| | | | −2 | 3% (0.9–8.5) | 62% (52.3–71.5) | 9% (3.6–21.3) | 37% (33.2–40.3) | |
| | | | −1 | 10% (5.4–17.1) | 70% (60.1–78.4) | 27% (16.9–40.8) | 41% (37.8–44.5) | |
| | | | 0 | 18% (11.4–25.9) | 81% (72.4–88.1) | 51% (37.7–64.6) | 47% (43.9–50.1) | |
| | | | +1 | 11% (6.0–18.1) | 97% (92.0–99.4) | 81% (55.9–93.7) | 50% (47.7–51.3) | |
| | | | +2 | 25% (17.9–34.3) | 96% (90.6–99.0) | 88% (73.2–95.4) | 54% (50.9–56.5) | |
| | | | +3 | 32% (23.9–41.4) | 100% (96.6–100.0) | 100% (90.8–100.0) | 57% (53.9–60.0) | |
| Yamashita [15], 2011 | ICH vs. IS | | 0 | 41% (31.3–51.3) | 91% (85.0–95.6) | 79% (66.9–87.3) | 66% (62.3–70.0) | NR |
| | | | 1 | 44% (34.1–54.3) | 72% (63.8–80.0) | 56% (46.8–64.3) | 62% (57.3–66.8) | |
| | | | 2 | 14% (7.9–22.4) | 72% (63.0–79.3) | 28% (18.2–40.5) | 51% (48.0–54.8) | |
| | | | 3/4 | 1% (0.0–5.5) | 65% (55.6–72.9) | 2% (0.3–13.7) | 45% (42.1–48.6) | |
| Jin [16], 2016 | ICH vs. IS | | Non-comatose | DC: 58% (51.9–64.8) VC: 66% (50.1–79.5) | DC: 79% (74.8–83.2) VC: 87% (76.2–94.3) | DC: 63% (58.0–68.3) VC: 78% (64.7–87.8) | DC: 76% (72.6–78.5) VC: 78% (70.3–84.6) | NR |
| | | | Comatose | DC: 94% (90.2–96.0) VC: 91% (80.1–97.0) | DC: 42% (34.5–49.8) VC: 39% (21.5–59.4) | DC: 75% (72.3–77.2) VC: 75% (68.3–80.0) | DC: 78% (69.2–84.9) VC: 69% (45.9–85.1) | NR |
| Uchida [17], 2018 | ICH vs. any stroke | | −6 to −2 | VC: 2% (0.3–4.7) | VC: 88% (86.0–90.5) | VC: 3% (1.0–8.9) | VC: 80% (79.7–80.7) | DC: 0.84 VC: 0.77 |
| | | | −1 to 2 | VC: 21% (15.1–27.4) | VC: 46% (42.7–49.6) | VC: 8% (6.0–10.3) | VC: 72% (70.2–74.4) | |
| | | | 3 to 4 | VC: 33% (26.0–40.1) | VC: 77% (73.5–79.4) | VC: 24% (19.6–28.4) | VC: 84% (82.2–85.1) | |
| | | | 5 to 6 | VC: 31% (24.0–37.8) | VC: 91% (88.5–92.6) | VC: 42% (34.9–49.7) | VC: 85% (84.2–86.7) | |
| | | | 7 to 9 | VC: 14% (9.5–20.1) | VC: 98% (97.2–99.1) | VC: 65% (49.7–77.7) | VC: 84% (82.9–84.6) | |
| Chiquete [18], 2021 | ICH vs. IS and SAH | | N/A | 66% (57.0–74.6) | 52% (45.5–57.5) | 36% (29.5–42.7) | 79% (72.2–84.4) | NR |
| Uchida [19], 2021 | ICH vs. any stroke | | N/A | NR | NR | NR | NR | DC: 0.79 VC: 0.73 |
| Geisler [20], 2021 | DC: ICH vs. IS/TIA/SM VC: ICH vs. IS | | ≥1.5 | DC: 50% (31.9–68.1) VC: 52% (33.5–69.2) | DC: 80% (75.9–84.1) VC: 87% (82.1–90.8) | DC: 17% (12.4–23.9) VC: 34% (24.6–44.9) | DC: 95% (93.1–96.5) VC: 93% (90.6–95.1) | DC: 0.75 VC: 0.81 |
| | | | ≥2.0 | DC: 38% (21.1–56.3) VC: 39% (22.9–57.9) | DC: 88% (84.4–91.1) VC: 94% (90.4–96.6) | DC: 21% (13.4–30.6) VC: 46% (31.2–62.4) | DC: 94% (92.8–95.7) VC: 92% (90.0–94.0) | |
| | | | ≥2.5 | DC: 28% (13.8–46.8) VC: 24% (11.1–42.3) | DC: 96% (93.6–97.8) VC: 98% (95.4–99.4) | DC: 38% (22.2–55.8) VC: 62% (35.7–82.2) | DC: 94% (92.8–95.2) VC: 91% (89.1–92.3) | |
| | | | ≥3.0 | DC: 25% (11.5–43.4) VC: 12% (3.4–28.2) | DC: 97% (95.3–98.7) VC: 100% (98.6–100.0) | DC: 44% (25.4–65.3) VC: 100% (39.8–100.0) | DC: 94% (92.7–95.0) VC: 90% (88.5–90.8) | |
| | | | ≥3.5 | DC: 13% (3.5–29.0) VC: 3% (0.1–15.8) | DC: 100% (98.6–100.0) VC: 100% (98.6–100.0) | DC: 80% (31.5–97.2) VC: 100% (2.5–100.0) | DC: 93% (92.3–94.0) VC: 89% (88.1–89.3) | |
| Hayashi [21], 2021 | ICH vs. other strokes and non-stroke diagnoses | | XGBoost | DC: 68% VC: 62% | DC: 91% VC: 90% | NR | NR | DC: 0.91 (0.89–0.93) VC: 0.87 (0.82–0.91) |
| Uchida [22], 2022 | ICH vs. other strokes and non-stroke diagnoses | | Logistic regression | DC: 43% VC: 43% | DC: 45% VC: 92% | DC: 46% VC: 42% | DC: 90% VC: 92% | DC: 0.79 VC: 0.82 |
| | | | Random forest | DC: 42% VC: 41% | DC: 45% VC: 94% | DC: 50% VC: 46% | DC: 90% VC: 92% | DC: 0.79 VC: 0.82 |
| | | | XGBoost | DC: 43% VC: 40% | DC: 45% VC: 92% | DC: 48% VC: 41% | DC: 90% VC: 92% | DC: 0.78 VC: 0.81 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Almubayyidh, M.; Alghamdi, I.; Jenkins, D.; Parry-Jones, A. Systematic Review of Prehospital Prediction Models for Identifying Intracerebral Haemorrhage in Suspected Stroke Patients. Healthcare 2025, 13, 876. https://doi.org/10.3390/healthcare13080876
