Transforming Speech-Language Pathology with AI: Opportunities, Challenges, and Ethical Guidelines

Abstract

1. Introduction

2. Applications of AI in Speech-Language Disorders

2.1. Automated Assessment and Screening

2.2. Speech Recognition and Transcription

2.3. Voice and Acoustic Analysis

2.4. Communication Aids and Augmentative Technologies

3. Opportunities Created by AI in Speech-Language Disorders

3.1. Early and Equitable Access to Services

3.2. Support for Remote and Hybrid Models of Care

3.3. Data-Driven Personalization

3.4. Research Acceleration

3.5. Reducing Administrative Burden

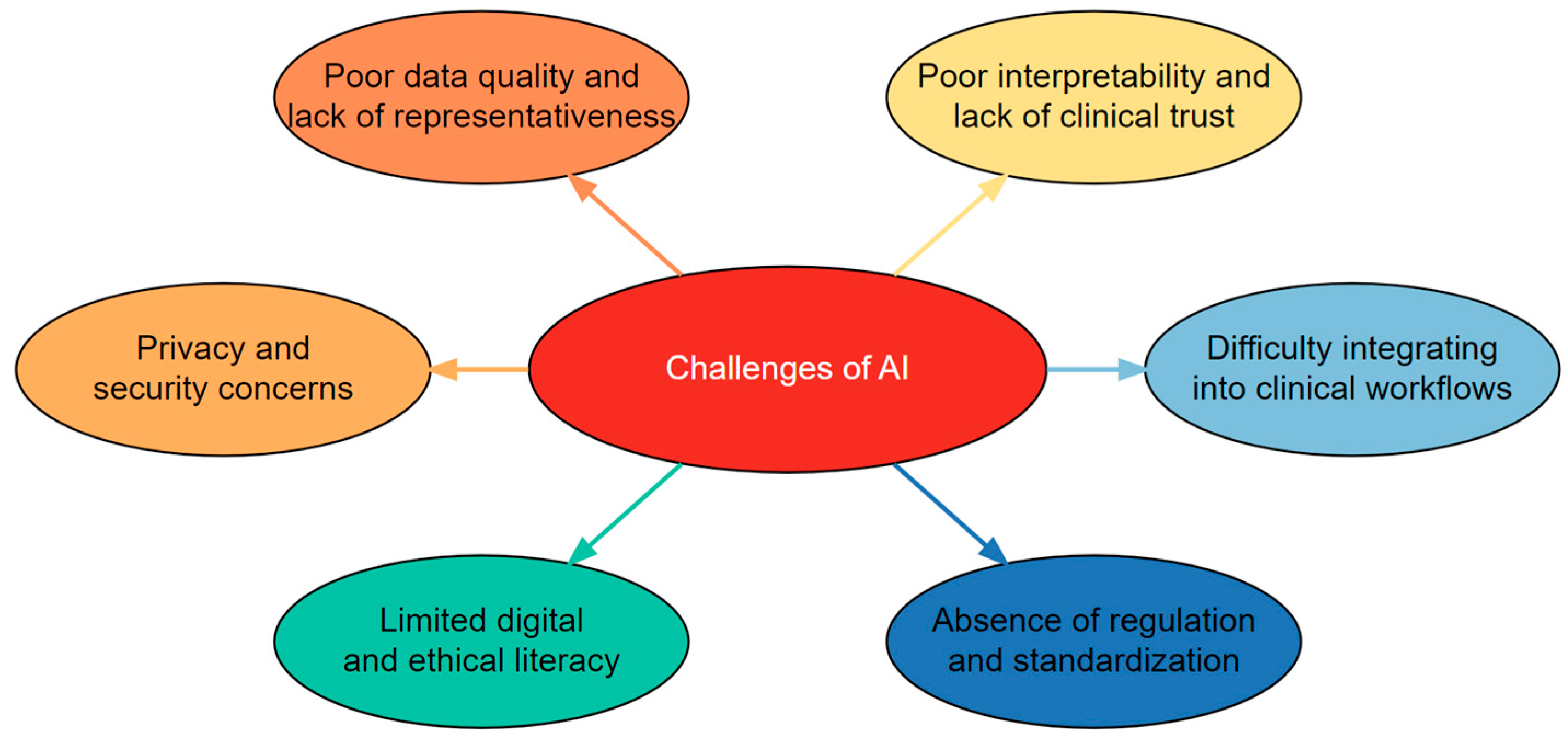

4. Challenges to AI Adoption in Speech-Language Disorders

4.1. Poor Data Quality and Lack of Representativeness

4.2. Poor Interpretability and Lack of Clinical Trust

4.3. Difficulty Integrating into Clinical Workflows

4.4. Absence of Domain-Specific Regulation and Standardization

4.5. Limited Digital and Ethical Literacy

4.6. Privacy and Security Concerns

5. Ethical Guidelines for AI in Speech-Language Disorders

5.1. Beneficence and Non-Maleficence

5.2. Transparency and Explainability

5.3. Fairness and Equity

5.4. Accountability and Governance

5.5. Patient Autonomy and Informed Consent

5.6. Sustainability and Long-Term Impact

6. Future Avenues

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Application | Explanation |
|---|---|
| Automated assessment and screening | Software that quickly checks speech-language abilities and uses algorithms to flag possible difficulties or risk, so clinicians know who needs a fuller evaluation. |
| Speech recognition and transcription | Technology that turns spoken words into text in real time or from recordings; useful for documentation, captioning, and analyzing what was said. |
| Voice and acoustic analysis | Tools that measure properties of the voice and speech signal to detect or monitor disorders, fatigue, emotion, or treatment progress. |
| Communication aids and augmentative technologies | Devices, apps, and boards that help people with speech-language disorders produce messages and participate in conversation. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Georgiou, G.P. Transforming Speech-Language Pathology with AI: Opportunities, Challenges, and Ethical Guidelines. Healthcare 2025, 13, 2460. https://doi.org/10.3390/healthcare13192460
