Predicting the Length of Stay of Cardiac Patients Based on Pre-Operative Variables—Bayesian Models vs. Machine Learning Models

Abstract

1. Introduction

2. Related Work

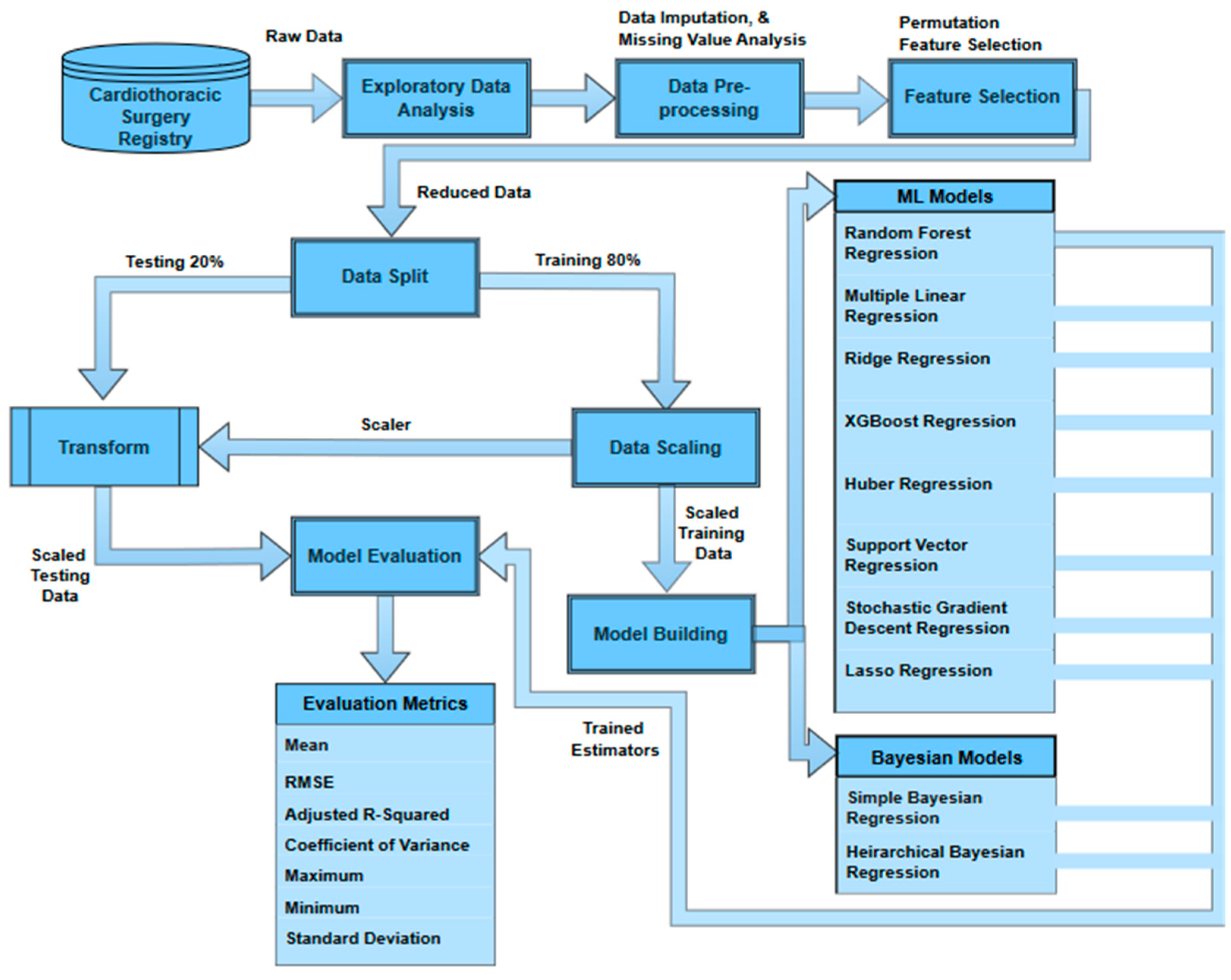

3. Methods

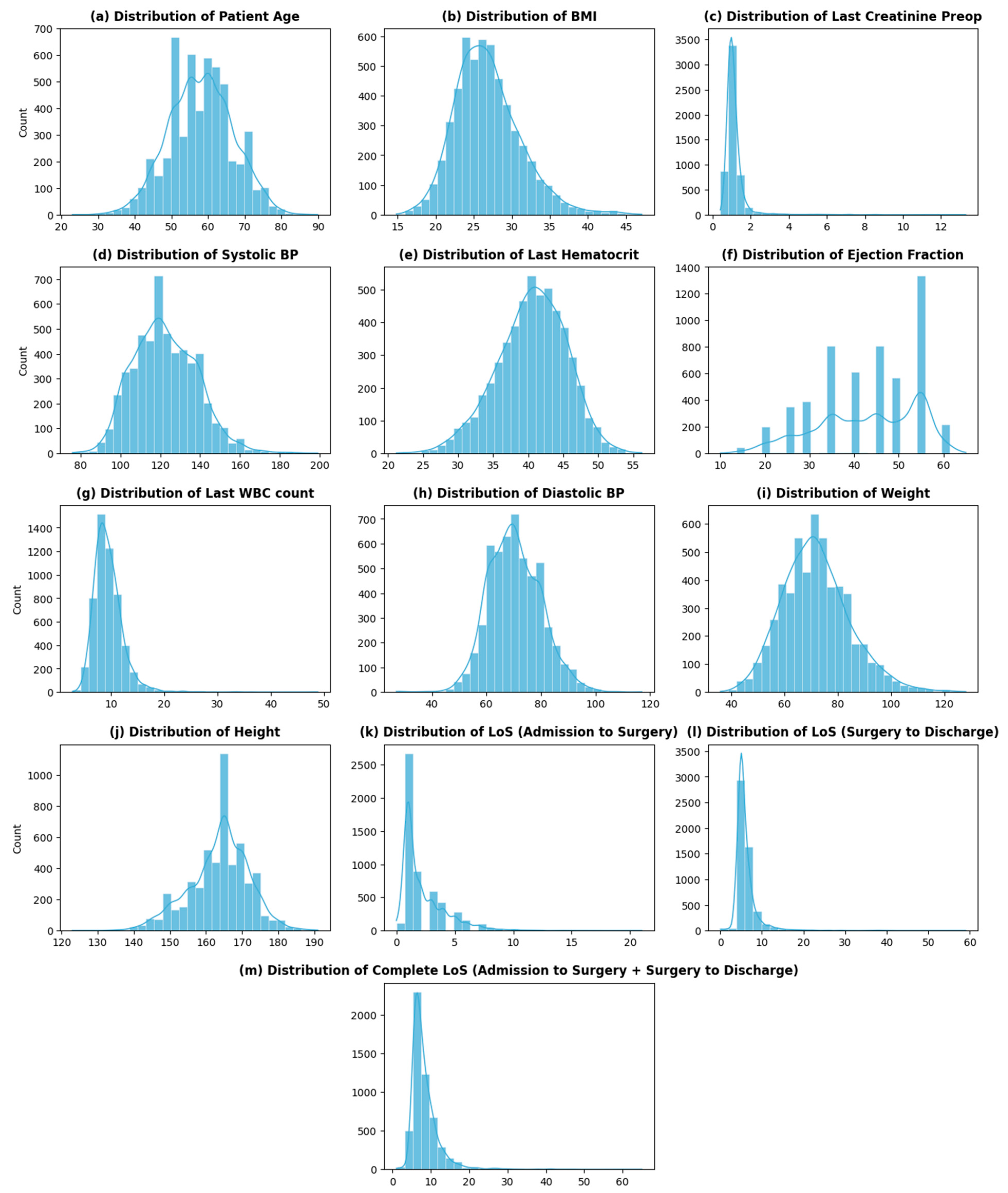

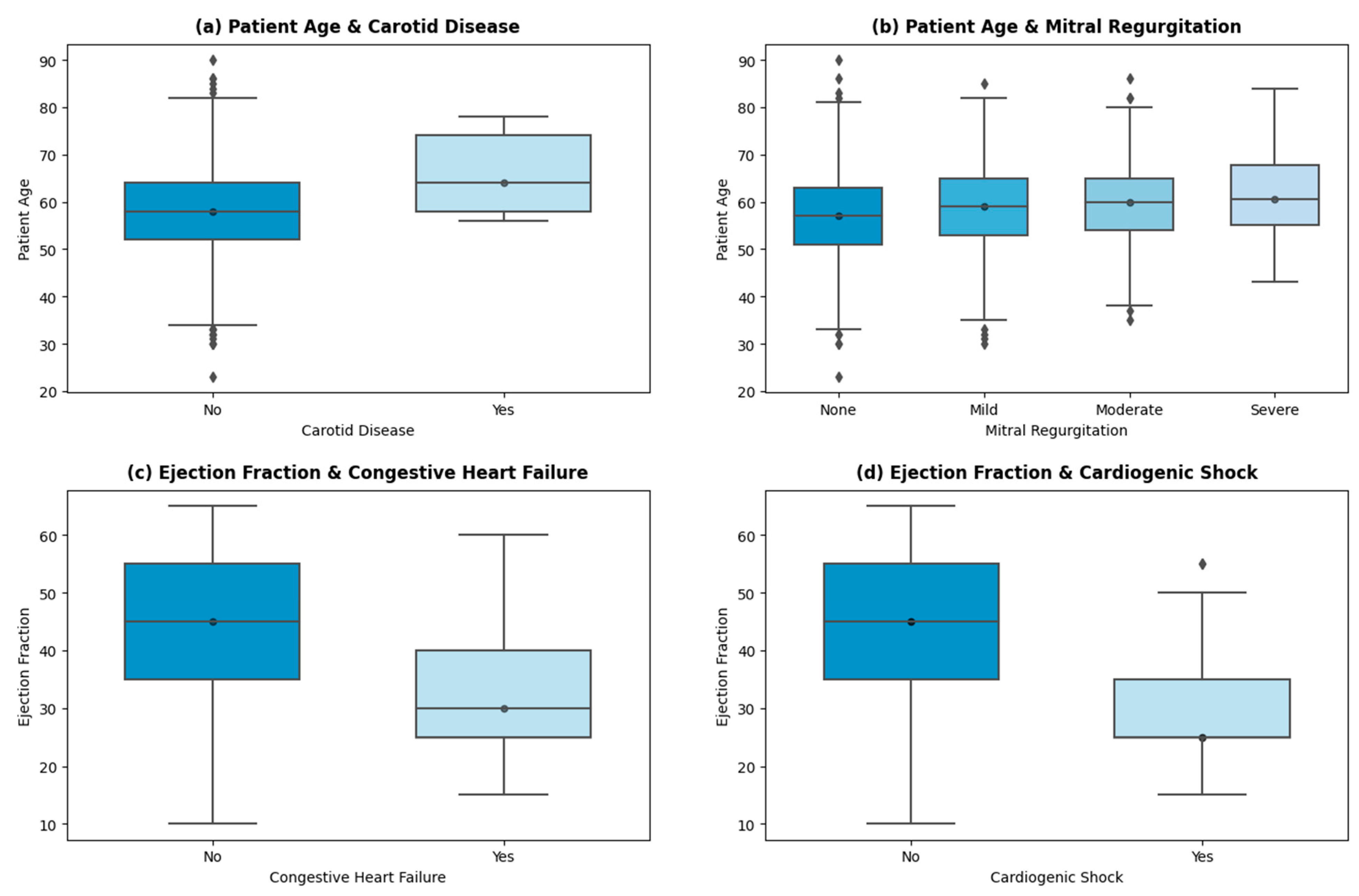

3.1. Data Characteristics

3.1.1. Data Cleaning and Imputation

3.1.2. Data Preparation

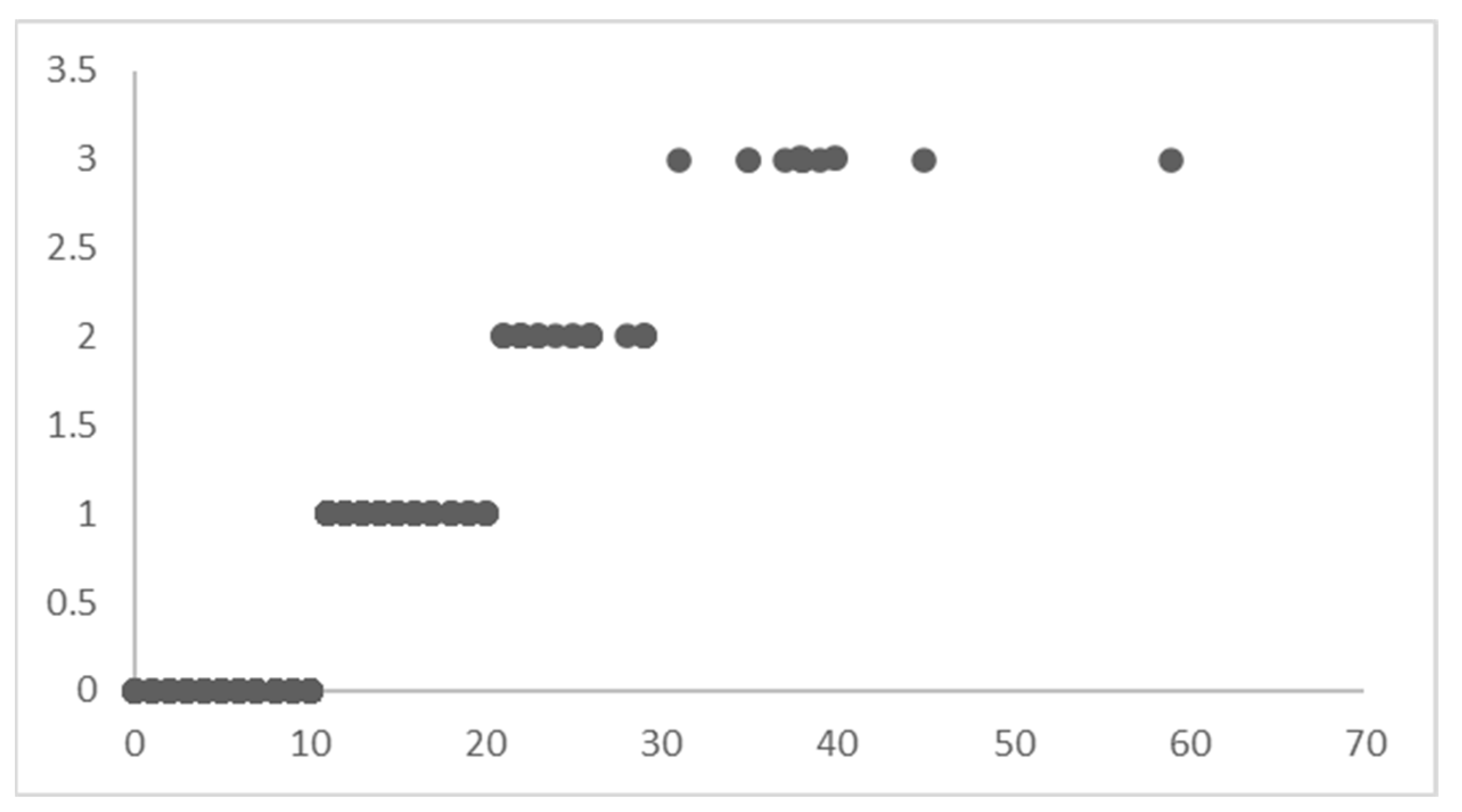

3.2. Permutation Feature Importance (PFI)
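
As a rough illustration of what permutation feature importance measures, the sketch below shuffles one feature column at a time and records how much a model's mean squared error rises. It is not the authors' implementation: the helper names `mse` and `permutation_importance`, the toy data, and the stand-in `model` function are all hypothetical, and a real analysis would use the fitted LOS models and a held-out set.

```python
import random
from statistics import mean, stdev


def mse(model, X, y):
    """Mean squared error of `model` (a callable on one row) over the data."""
    return mean((model(row) - target) ** 2 for row, target in zip(X, y))


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Return (mean, sd) of the MSE increase when each column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    results = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and y
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(model, X_perm, y) - baseline)
        results.append((mean(increases), stdev(increases)))
    return results


# Toy data (assumption): the target depends on feature 0 only.
data_rng = random.Random(42)
X = [[data_rng.gauss(0, 1), data_rng.gauss(0, 1)] for _ in range(200)]
y = [3.0 * row[0] for row in X]


def model(row):
    """Hypothetical stand-in for a fitted regressor."""
    return 3.0 * row[0]


importances = permutation_importance(model, X, y)
for name, (m, s) in zip(["feature_0", "feature_1"], importances):
    print(f"{name}: {m:.3f} ± {s:.3f}")
```

Repeating the shuffle several times gives a spread as well as a mean, which is why importance scores of this kind are often reported as a value with a standard deviation.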

3.3. Models

3.3.1. Simple Bayesian Regression Model (SBM)

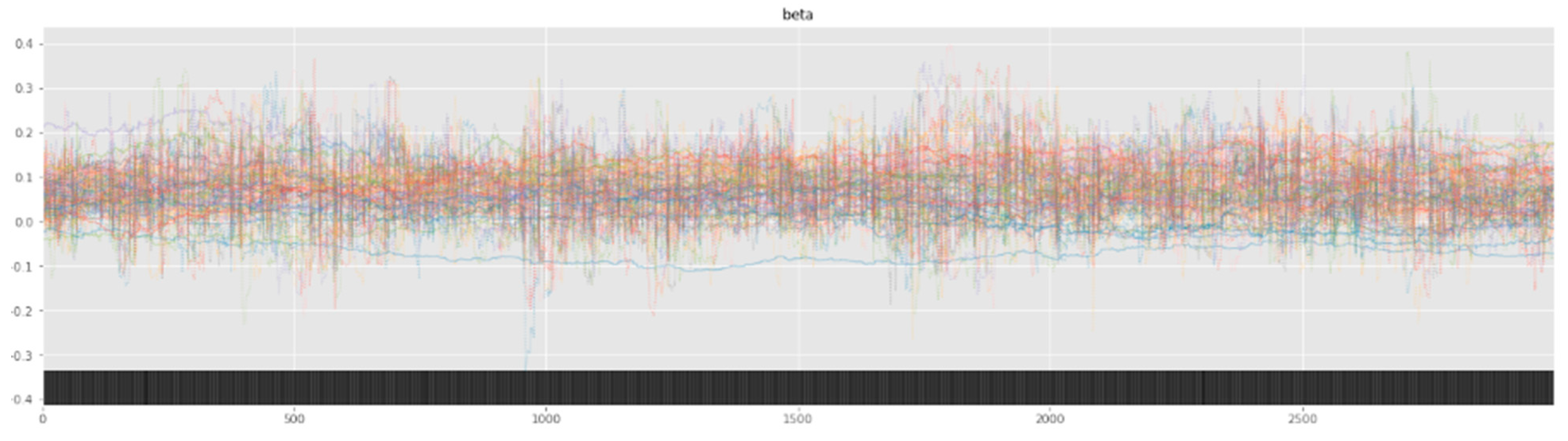

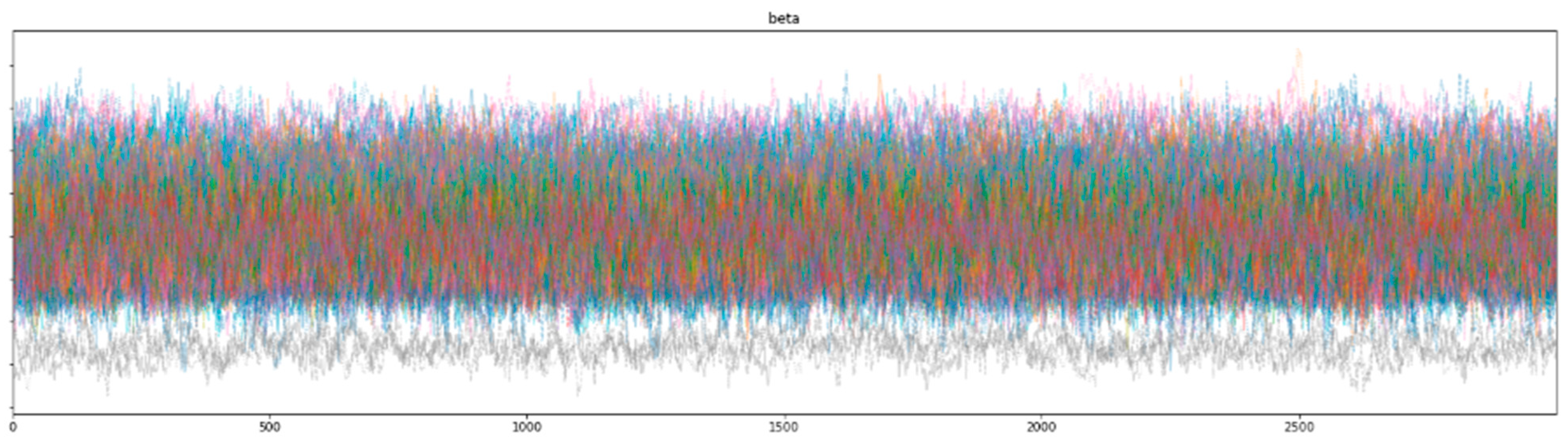

3.3.2. Hierarchical Bayesian Regression Model

3.3.3. ML Models

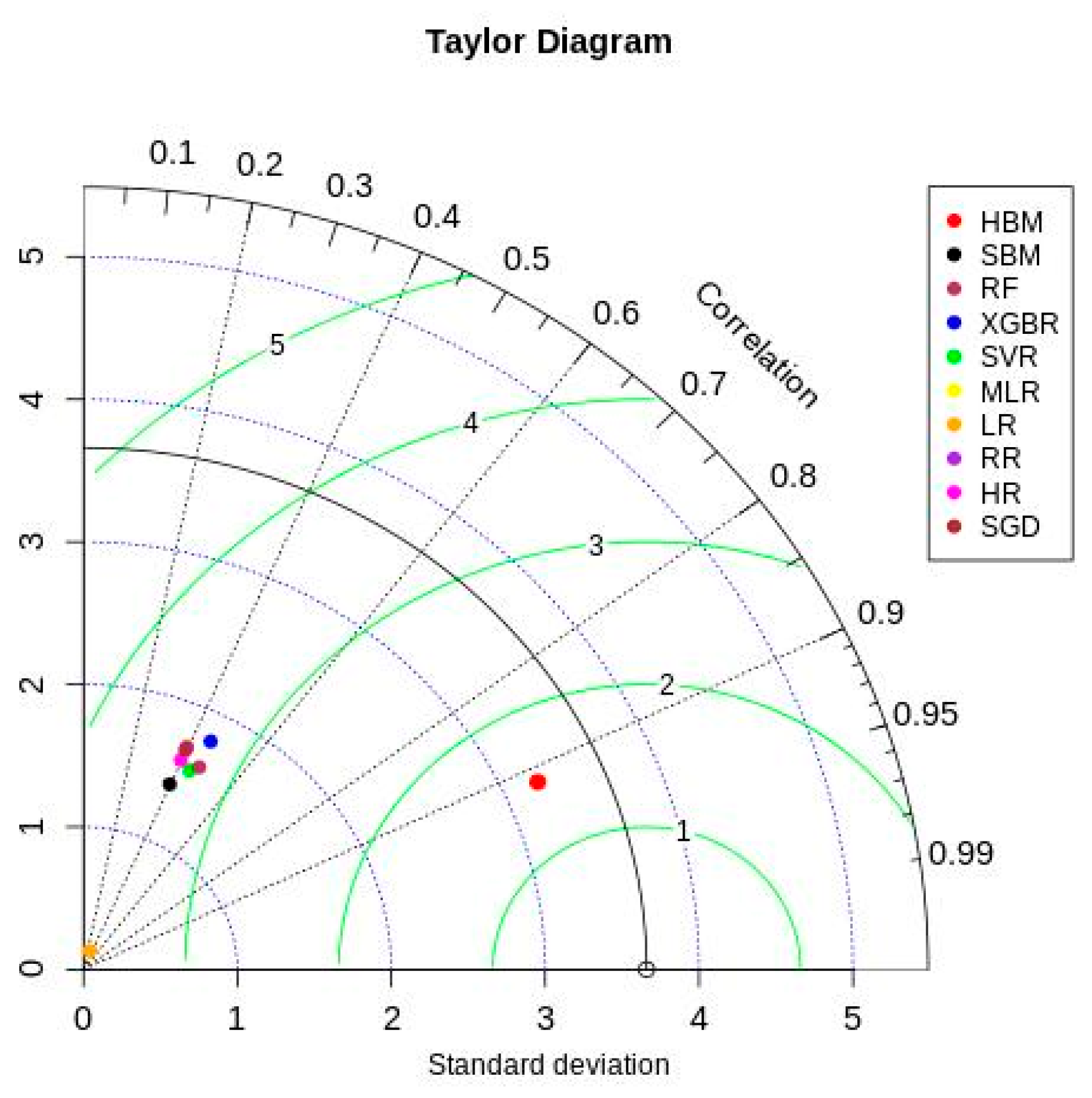

4. Results

5. Discussion

6. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Dataset Overview

| Feature | Description | Type | Min | Max | Average | Std | IQR | % of Missing Values |

|---|---|---|---|---|---|---|---|---|

| TempSNO | Serial number | Incremental | | | | | | |

| date_of_admission | Admission date | datetime | 25 November 2015 | 30 December 2020 | | | | 0 |

| date_of_surgery | Surgery date | datetime | 28 November 2015 | 31 December 2020 | | | | 0 |

| date_of_discharge | Discharge date | datetime | 5 December 2015 | 7 January 2021 | | | | 0 |

| patient_age | Age of patient (Years) | Continuous | 23 | 90 | 58.07 | 8.66 | 12 | 0 |

| Admission_to_surgery | Admission to surgery (Days) | Continuous | 0 | 21 | 2.26 | 1.94 | 2 | 0 |

| LOS_Surgery_to_discharge | Surgery to discharge (Days) | Continuous | 0 | 59 | 6.04 | 2.97 | 1 | 0 |

| last_wbc_count | Last WBC count (×10⁹/L) | Continuous | 2.8 | 48.8 | 9.48 | 2.77 | 3.1 | 0.41 |

| BMI | BMI (kg/m²) | Continuous | 14.82 | 47.08 | 26.82 | 4.35 | 5.47 | 0.41 |

| last_hematocrit | Last hematocrit value (%) | Continuous | 21.2 | 56.2 | 40.51 | 4.92 | 6.6 | 0 |

| last_cretenine_preop | Last creatinine value (mg/dL) | Continuous | 0.41 | 13.3 | 1.14 | 0.63 | 0.32 | 0 |

| BPsystolic | Pre-operative systolic BP (mmHg) | Continuous | 76 | 199 | 122.49 | 15.94 | 23 | 0.48 |

| diastolic | Pre-operative diastolic BP (mmHg) | Continuous | 27 | 117 | 70.36 | 9.63 | 14 | 0.41 |

| ejection_fraction | Pre-operative LV ejection fraction (%) | Continuous | 10 | 65 | 42.89 | 11.18 | 20 | 0 |

| Weight | Weight of the patient (kg) | Continuous | 36 | 128 | 71.87 | 12.74 | 17 | 0 |

| Height | Height of the patient (cm) | Continuous | 123 | 191 | 163.6 | 8.33 | 10 | 0 |
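
The two day-count features in the table above (`Admission_to_surgery` and `LOS_Surgery_to_discharge`) can be related to the three date columns with a short sketch. It assumes that both features are plain whole-day differences between the dates, which the table descriptions suggest but do not state; the function name `stay_intervals` and the example patient are hypothetical.

```python
from datetime import date


def stay_intervals(admission: date, surgery: date, discharge: date) -> dict:
    """Derive the two day-count features from the three date columns."""
    if not (admission <= surgery <= discharge):
        raise ValueError("dates must satisfy admission <= surgery <= discharge")
    return {
        # Assumption: whole-day differences, as the "(Days)" unit suggests.
        "Admission_to_surgery": (surgery - admission).days,
        "LOS_Surgery_to_discharge": (discharge - surgery).days,
    }


# Hypothetical patient, not a record from the dataset.
example = stay_intervals(date(2019, 3, 4), date(2019, 3, 6), date(2019, 3, 12))
print(example)
```

The ordering check is a cheap guard against data-entry errors, such as a discharge date recorded before the surgery date.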

| Feature | Description | Type | Labels | n (%) | % of Missing Values |

|---|---|---|---|---|---|

| gender_id | Gender of patient | Categorical | Male, Female | 4360 (82), 954 (17.9) | |

| pulmonary_artery_done | Test for pulmonary artery mean pressure conducted? | Categorical | Yes, No | 2205 (41.4), 3109 (58.5) | 0.41 |

| Active_tobacco_use | Tobacco use within last 6 months | Categorical | Yes, No | 1308 (25.8), 3975 (74.1) | |

| f_history_cad | Family history of coronary artery disease | Categorical | Yes, No | 2664 (49.6), 2699 (50.3) | |

| diabetes | Diabetes/insulin use | Categorical | | 2067 (38.5), 2211 (41.2), 1085 (20.3) | |

| myocardial_infarction | Any prior myocardial infarction (MI) | Categorical | Yes, No | 3816 (71.1), 1547 (28.8) | |

| MI_timing | Time between MI and CABG | Categorical | | 1584 (29.5), 16 (0.29), 26 (0.48), 1473 (27.4), 938 (17.4), 1326 (24.7) | |

| congestive_heart_failure_A | Congestive heart failure | Categorical | Yes, No | 471 (8.7), 4892 (91.2) | |

| NYHA_class | NYHA (New York Heart Association) shortness-of-breath class during last 2 weeks | Categorical | | 2482 (46.2), 62 (1.1), 1059 (19.7), 1513 (28.2), 247 (4.6) | |

| Cardiac_Presentation_on_Admission | Cardiac symptoms on arrival | Categorical | | 301 (5.6), 370 (6.8), 575 (10.7), 1664 (31.0), 1778 (33.1), 675 (12.5) | |

| Angina_class | Angina Canadian Cardiac Society (CCS) classification within last 2 weeks | Categorical | | 1069 (19.7), 26 (0.48), 700 (13.0), 2443 (45.5), 1135 (21.1) | |

| cardiogenic_shock | Cardiogenic shock at the time of CABG | Categorical | Yes, No | 65 (1.21), 5298 (98.7) | |

| resuscitation | Any cardiac resuscitation within one hour of CABG | Categorical | Yes, No | 15 (0.27), 5348 (99.7) | |

| arrhythmia | Any prior arrhythmia | Categorical | Yes, No | 122 (2.27), 5241 (97.7) | |

| SustVTVF | Sustained VT/VF within 2 weeks | Categorical | Yes, No | 60 (1.11), 5303 (98.8) | |

| AFibFlutter | Afib/Flutter within 2 weeks | Categorical | None, Afib/Flutter, Heart block | 5298 (98.7), 51 (0.95), 14 (0.26) | |

| ventilator_used | Patient already on ventilator | Categorical | Yes, No | 76 (1.41), 5287 (98.5) | |

| beta_blockers_A | Beta blockers within 24 h | Categorical | Yes, No | 4593 (85.6), 770 (14.3) | |

| ace_inhibitors_A | ACE inhibitors within 24 h | Categorical | Yes, No | 1116 (20.8), 4247 (79.1) | |

| nitratesIV | Nitrates I.V at time of CABG | Categorical | Yes, No | 553 (10.3), 4810 (89.6) | |

| anti_coagulants | Anticoagulants within 48 h prior to surgery | Categorical | No, UFH, LMWH, Thrombin inhibitors | 4061 (75.7), 924 (17.2), 375 (6.9), 3 (0.05) | |

| warfarin_A | Warfarin within 24 h of CABG | Categorical | Yes, No | 5254 (97.9), 109 (2.1) | |

| inotropes | Inotropes within 48 h | Categorical | Yes, No | 76 (1.4), 5287 (98.6) | |

| steroids | Steroids within 24 h | Categorical | Yes, No | 5 (0.1), 5358 (99.9) | |

| aspirin_A | Aspirin within 5 days of CABG | Categorical | Yes, No | 4758 (88.7), 605 (11.3) | |

| lipid_lowering_A | Any lipid-lowering medications within 24 h of CABG | Categorical | Yes, No | 4696 (87.6), 667 (12.4) | |

| Dyslipidemia | Dyslipidemia with statins | Categorical | No, Yes (On Statin), Yes (Not on Statin) | 11 (0.2), 3254 (60.7), 2091 (39.0), 7 (0.1) | |

| dialysis | Hemodialysis-dependent pre-operatively | Categorical | Yes, No | 42 (0.8), 5321 (99.2) | |

| hypertension | Hypertension | Categorical | Yes, No | 3859 (72.0), 1504 (28.0) | |

| Cerebovascular_disease | Any cerebrovascular disease | Categorical | Yes, No | 283 (5.3), 5080 (94.7) | |

| pulmonary_insuff | Pulmonic insufficiency | Categorical | None, Trivial, Mild, Moderate, Severe | 4705 (87.7), 438 (8.2), 188 (3.5), 6 (0.1), 4 (0.1) | |

| Carotid_disease | Carotid disease | Categorical | Yes, No | 7 (0.1), 5356 (99.9) | |

| chronic_lung_disease | Chronic lung disease | Categorical | Yes, No | 119 (2.2), 5244 (97.8) | |

| FirstCVSurgery | Incidence of CV surgery | Categorical | First CV Surgery, 2nd CV surgery, 3rd CV surgery, 4th CV surgery | 5341 (99.6), 19 (0.4), 2 (0.0), 1 (0.0) | |

| previous_cv_interventions | Previous CV interventions (prior PCI, CABG, or others) | Categorical | Yes, No | 720 (13.4), 4643 (86.6) | |

| previous_coronary_bypass | Previous coronary bypass (CABG) | Categorical | Yes, No | 21 (0.4), 5342 (99.6) | |

| previous_valve | Previous valve surgery | Categorical | Yes, No | 1 (0.0), 5362 (100.0) | |

| intracardiac_device | Previous intracardiac device pacemaker or defibrillator (PPM or ICD) usage | Categorical | Yes, No | 11 (0.2), 5352 (99.8) | |

| Prior_PCI | Any previous angioplasty (PCI) | Categorical | Yes, No | 696 (13.0), 4667 (87.0) | |

| PCI_timing | PCI interval: within or more than 6 h | Categorical | >6 h, <6 h, No PCI | 690 (12.9), 6 (0.1), 4667 (87.0) | |

| Statin_A | Statin within 24 h | Categorical | Yes, No | 4695 (87.5), 668 (12.5) | |

| adp_inhibitors_within_5days | ADP inhibitors within five days | Categorical | Yes, No | 392 (7.3), 4971 (92.7) | |

| bronchodilators | Routine use of bronchodilators | Categorical | Yes, No | 86 (1.6), 5277 (98.4) | |

| Coronaries_diseased | Number of diseased coronary arteries | Categorical | Yes, No | 4842 (90.3), 521 (9.7) | |

| left_main_disease | Left main coronary disease | Categorical | Yes, No | 1007 (18.8), 4356 (81.2) | |

| pulmonary_artery_hypertension | Severe pulmonary artery hypertension (PASP 65 mmHg or more) | Categorical | Yes, No | 31 (0.6), 5332 (99.4) | |

| Aortic_regurgitation | Aortic insufficiency | Categorical | None, Mild, Moderate | 4958 (92.4), 375 (7.0), 30 (0.6) | |

| Mitral_regurgitation | Mitral insufficiency | Categorical | None, Mild, Moderate, Severe | 2571 (47.9), 1960 (36.5), 790 (14.7), 42 (0.8) | |

| Tricuspid_regurgitation | Tricuspid insufficiency | Categorical | None, Mild, Moderate, Severe | 3802 (70.9), 1560 (29.1), 1 (0.0) | |

| CABG_status | CABG surgery status | Categorical | Elective, Urgent, Emergent, Emergent Salvage | 3049 (56.9), 2082 (38.8), 217 (4.0), 15 (0.3) | |

| City | Patient city | Categorical | Karachi, Hyderabad, Quetta, Larkana, Mirpurkhas, Dadu, Others | 3964 (73.9), 416 (7.7), 122 (2.2), 59 (1.1), 59 (1.1), 53 (0.9), 690 (12.8) | |

Appendix B. Gelman-Rubin Scores

| 1.15 | 1.16 | 1.08 | |||

| 1.15 | 1.1 | 1.4 | |||

| 1.54 | 1.2 | 1.08 | |||

| 1.35 | 1.09 | 1.1 | |||

| 2.06 | 1.31 | 1.14 | |||

| 2.07 | 1.17 | 1.08 | |||

| 1.06 | 1.13 | 1.31 | |||

| 1.2 | 1.12 | 1.14 | |||

| 1.05 | 1.23 | 1.18 | |||

| 1.24 | 1.06 | 1.05 | |||

| 1.22 | 1.12 | 1.18 | |||

| 1.25 | 1.62 | 1.14 | |||

| 1.21 | 1.07 | 1.25 | |||

| 1.12 | 1.18 | 1.15 | |||

| 1.11 | 1.46 | 1.26 |

| 1.01 | 1.0 | 1.0 | |||

| 1.0 | 1.0 | 1.0 | |||

| 1.0 | 1.0 | 1.0 | |||

| 1.01 | 1.0 | 1.01 | |||

| 1.1 | 1.01 | 1.0 | |||

| 1.0 | 1.0 | 1.0 | |||

| 1.0 | 1.0 | 1.01 | |||

| 1.01 | 1.01 | 1.0 | |||

| 1.0 | 1.01 | 1.01 | |||

| 1.01 | 1.0 | 1.0 | |||

| 1.01 | 1.0 | 1.01 | |||

| 1.01 | 1.0 | 1.0 | |||

| 1.0 | 1.01 | 1.01 | |||

| 1.0 | 1.01 | 1.0 | |||

| 1.0 | 1.0 | 1.01 | |||

| 1.0 | 1.0 | 1.01 | |||

| 1.01 |

| 1.0 | 1.0 | 1.0 | |||

| 1.0 | 1.0 | 1.01 | |||

| 1.01 | 1.01 | 1.0 | |||

| 1.04 | 1.01 | 1.01 | |||

| 1.01 | 1.0 | 1.0 | |||

| 1.01 | 1.0 | 1.01 | |||

| 1.01 | 1.0 | 1.0 | |||

| 1.04 | 1.01 | 1.01 | |||

| 1.01 | 1.01 | 1.03 | |||

| 1.04 | 1.04 | 1.01 | |||

| 1.01 | 1.0 | 1.01 | |||

| 1.0 | 1.01 | ||||

| 1.0 | 1.0 | ||||

| 1.0 | 1.0 | ||||

| 1.0 | 1.0 | ||||

| 1.01 | 1.01 | ||||

| 1.0 |

| 1.0 | 1.0 | 1.0 | |||

| 1.0 | 1.04 | 1.01 | |||

| 1.01 | 1.01 | 1.0 | |||

| 1.04 | 1.01 | 1.01 | |||

| 1.01 | 1.0 | 1.0 | |||

| 1.01 | 1.0 | 1.01 | |||

| 1.01 | 1.02 | 1.0 | |||

| 1.04 | 1.01 | 1.01 | |||

| 1.01 | 1.01 | 1.03 | |||

| 1.04 | 1.04 | 1.01 | |||

| 1.01 | 1.0 | 1.01 | |||

| 1.0 | 1.01 | ||||

| 1.0 | 1.0 | ||||

| 1.0 | 1.0 | ||||

| 1.0 | 1.0 | ||||

| 1.01 | 1.01 | ||||

| 1.0 |

| 1.0 | 1.0 | 1.0 | |||

| 1.0 | 1.0 | 1.01 | |||

| 1.01 | 1.01 | 1.0 | |||

| 1.04 | 1.01 | 1.01 | |||

| 1.01 | 1.04 | 1.0 | |||

| 1.01 | 1.01 | 1.01 | |||

| 1.01 | 1.0 | 1.0 | |||

| 1.0 | 1.01 | 1.01 | |||

| 1.0 | 1.01 | 1.03 | |||

| 1.04 | 1.04 | 1.01 | |||

| 1.01 | 1.0 | 1.01 | |||

| 1.0 | 1.01 | ||||

| 1.0 | 1.0 | ||||

| 1.0 | 1.0 | ||||

| 1.0 | 1.0 | ||||

| 1.01 | 1.01 | ||||

| 1.0 |
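
To help read the scores above, the following sketch computes the classic Gelman-Rubin potential scale reduction factor for a single parameter from several MCMC chains. It is illustrative only: the function name `gelman_rubin` and the simulated chains are assumptions, and the software behind the reported scores may use a different variant (for example a split-chain or rank-normalized version).

```python
import random
from statistics import mean, variance


def gelman_rubin(chains):
    """Classic potential scale reduction factor (R-hat) for one parameter.

    `chains` is a list of equal-length lists of posterior draws.
    """
    m = len(chains)
    n = len(chains[0])
    if m < 2 or n < 2 or any(len(c) != n for c in chains):
        raise ValueError("need at least two chains of equal length >= 2")
    chain_means = [mean(c) for c in chains]
    W = mean(variance(c) for c in chains)   # within-chain variance
    B = n * variance(chain_means)           # between-chain variance
    var_hat = (n - 1) / n * W + B / n       # pooled variance estimate
    return (var_hat / W) ** 0.5


rng = random.Random(0)

# Simulated chains (assumption): four chains sampling the same distribution.
mixed = [[rng.gauss(0, 1) for _ in range(500)] for _ in range(4)]

# Simulated chains (assumption): two chains stuck around different values.
stuck = [[rng.gauss(0, 1) for _ in range(500)],
         [rng.gauss(3, 1) for _ in range(500)]]

print(f"well-mixed chains: {gelman_rubin(mixed):.2f}")
print(f"disagreeing chains: {gelman_rubin(stuck):.2f}")
```

Scores close to 1 indicate that the chains agree with one another; values well above 1 (a cut-off of about 1.1 is a common rule of thumb) suggest the sampler has not yet converged for that parameter.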

Appendix C. Estimated Coefficient Values & Feature Importance

| Variable | Value | ±sd | Variable | Value | ±sd | Variable | Value | ±sd |

|---|---|---|---|---|---|---|---|---|

| Patient age | 0.086 | ±0.036 | Carotid disease | 0.099 | ±0.041 | BMI | 0.04 | ±0.054 |

| Aortic Regurgitation | 0.125 | ±0.04 | last wbc count | 0.01 | ±0.028 | intracardiac device | 0.061 | ±0.051 |

| last creatinine preop | 0.146 | ±0.048 | adp inhibitors within 5 days | 0.044 | ±0.046 | previous cv interventions | 0.078 | ±0.053 |

| Tricuspid regurgitation | 0.084 | ±0.036 | Arrhythmia | 0.065 | ±0.055 | Statin A | 0.062 | ±0.061 |

| pulmonary insufficiency | 0.118 | ±0.057 | Bronchodilators | 0.094 | ±0.05 | last hematocrit | 0.075 | ±0.055 |

| Angina class | 0.074 | ±0.054 | ejection fraction | 0.096 | ±0.057 | f history cad | 0.08 | ±0.059 |

| AFibFlutter | 0.119 | ±0.043 | Diabetes | 0.09 | ±0.069 | Dyslipidemia | 0.055 | ±0.055 |

| BPsystolic | 0.034 | ±0.025 | FirstCVSurgery | 0.084 | ±0.07 | steroids | 0.057 | ±0.064 |

| dialysis | 0.083 | ±0.048 | Resuscitation | −0.03 | ±0.051 | diastolic | 0.052 | ±0.058 |

| left main disease | 0.107 | ±0.057 | MI timing | 0.087 | ±0.024 | pulmonary artery hyper | 0.054 | ±0.062 |

| PCI timing | 0.069 | ±0.031 | anti coagulants | 0.073 | ±0.052 | NYHA class | 0.094 | ±0.063 |

| CABG status | 0.032 | ±0.039 | previous valve | 0.056 | ±0.066 | chronic lung disease | 0.103 | ±0.065 |

| previous coronary bypass | −0.014 | ±0.035 | congestive heart failure A | 0.079 | ±0.044 | nitratesIV | 0.059 | ±0.066 |

| SustVTVF | 0.112 | ±0.061 | warfarin A | 0.12 | ±0.033 | cardiogenic shock | 0.085 | ±0.068 |

| myocardial infarction | 0.079 | ±0.055 | Coronaries diseased | 0.034 | ±0.048 |

| Variable | Value | Variable | Value | Variable | Value |

|---|---|---|---|---|---|

| last_cretenine_preop | 0.293081 | arrhythmia | 1.646449 | SustVTVF | −1.24274 |

| last_wbc_count | 0.171635 | lipid_lowering_A | 0.143871 | myocardial_infarction | −0.22586 |

| patient_age | 0.223315 | Prior_PCI | −2.74268 | resuscitation | 0.454801 |

| BPsystolic | 0.135273 | Cerebovascular_disease | 0.275619 | dialysis | −0.46064 |

| last_hematocrit | −0.12409 | Statin_A | 0.065754 | steroids | 2.651557 |

| diastolic | −0.11174 | MI_timing | 0.114422 | previous_coronary_bypass | −2.2 × 10⁻¹⁶ |

| pulmonary_insuff | 0.10158 | inotropes | −1.11074 | previous_valve | 2.22 × 10⁻¹⁶ |

| Mitral_regurgitation | 0.087977 | Angina_class | 0.026034 | FirstCVSurgery | 8.88 × 10⁻¹⁶ |

| Cardiac_Presentation_on_Admission | 0.020097 | PCI_timing | −1.36871 | warfarin_A | −2.2 × 10⁻¹⁶ |

| gender_id | 0.32052 | congestive_heart_failure_A | 0.191495 | ace_inhibitors_A | −0.23953 |

| CABG_status | 0.347848 | adp_inhibitors_within_5days | 0.361978 | Carotid_disease | −0.33631 |

| nitratesIV | 0.31499 | cardiogenic_shock | 0.400921 | aspirin_A | −0.2182 |

| f_history_cad | 0.12583 | intracardiac_device | 0.172755 | left_main_disease | −0.09115 |

| beta_blockers_A | −0.32623 | pulmonary_artery_hypertension | 2.171717 | bronchodilators | −0.4348 |

| NYHA_class | 0.123221 | Coronaries_diseased | 0.155315 |

| Variable | Value | Variable | Value | Variable | Value |

|---|---|---|---|---|---|

| last_cretenine_preop | 0.300778 | arrhythmia | 0.515685 | SustVTVF | 0.172685 |

| last_wbc_count | 0.046161 | lipid_lowering_A | 0.0337 | myocardial_infarction | −0.0819 |

| patient_age | 0.085908 | Prior_PCI | −0.30512 | resuscitation | 2 |

| BPsystolic | 0.040163 | Cerebovascular_disease | 0.109956 | dialysis | 0.160044 |

| last_hematocrit | −0.00757 | Statin_A | 0.05522 | steroids | 0 |

| diastolic | −0.0208 | MI_timing | 0.019536 | previous_coronary_bypass | 0 |

| pulmonary_insuff | −8.6 × 10⁻⁵ | inotropes | −0.67392 | previous_valve | 0 |

| Mitral_regurgitation | 0.020046 | Angina_class | 0.022927 | FirstCVSurgery | 0 |

| Cardiac_Presentation_on_Admission | 8.61 × 10⁻⁵ | PCI_timing | −0.14735 | warfarin_A | 0 |

| gender_id | 0.31685 | congestive_heart_failure_A | 0.440434 | ace_inhibitors_A | −0.1476 |

| CABG_status | 0.119829 | adp_inhibitors_within_5days | 0.076298 | Carotid_disease | 0.09922 |

| nitratesIV | 0.161362 | cardiogenic_shock | 0.425116 | aspirin_A | −0.05569 |

| f_history_cad | 0.017713 | intracardiac_device | −0.14727 | left_main_disease | −0.03404 |

| beta_blockers_A | −0.12152 | pulmonary_artery_hypertension | 1.672917 | bronchodilators | −0.14199 |

| NYHA_class | 0.038393 | Coronaries_diseased | 0.077312 |

| Variable | Value | Variable | Value | Variable | Value |

|---|---|---|---|---|---|

| last_cretenine_preop | 0.24022 | arrhythmia | 0.565138 | SustVTVF | 0 |

| last_wbc_count | 0.167671 | lipid_lowering_A | 0 | myocardial_infarction | 0 |

| patient_age | 0.231557 | Prior_PCI | 0 | resuscitation | 0 |

| BPsystolic | 0.107887 | Cerebovascular_disease | 0.07128 | dialysis | 0 |

| last_hematocrit | −0.12907 | Statin_A | 0 | steroids | 0 |

| diastolic | −0.08049 | MI_timing | 0.069182 | previous_coronary_bypass | 0 |

| pulmonary_insuff | 0.082534 | inotropes | 0 | previous_valve | 0 |

| Mitral_regurgitation | 0.098205 | Angina_class | 0.030879 | FirstCVSurgery | 0 |

| Cardiac_Presentation_on_Admission | 0.000971 | PCI_timing | 0 | warfarin_A | 0 |

| gender_id | 0.265267 | congestive_heart_failure_A | 0.07735 | ace_inhibitors_A | −0.17409 |

| CABG_status | 0.275316 | adp_inhibitors_within_5days | 0.222577 | Carotid_disease | 0 |

| nitratesIV | 0.228018 | cardiogenic_shock | 0 | aspirin_A | −0.06411 |

| f_history_cad | 0.075935 | intracardiac_device | 0 | left_main_disease | −0.02798 |

| beta_blockers_A | −0.19498 | pulmonary_artery_hypertension | 0.391082 | bronchodilators | 0 |

| NYHA_class | 0.133256 | Coronaries_diseased | 0.05406 |

| Variable | Value | Variable | Value | Variable | Value |

|---|---|---|---|---|---|

| last_cretenine_preop | 0.29287 | arrhythmia | 1.63379 | SustVTVF | −1.22867 |

| last_wbc_count | 0.17179 | lipid_lowering_A | 0.14247 | myocardial_infarction | −0.22742 |

| patient_age | 0.22354 | Prior_PCI | −2.16652 | resuscitation | 0.44811 |

| BPsystolic | 0.13518 | Cerebovascular_disease | 0.27530 | dialysis | −0.45755 |

| last_hematocrit | −0.12417 | Statin_A | 0.06717 | steroids | 2.39899 |

| diastolic | −0.11162 | MI_timing | 0.11477 | previous_coronary_bypass | 0.00000 |

| pulmonary_insuff | 0.10174 | inotropes | −1.09653 | previous_valve | 0.00000 |

| Mitral_regurgitation | 0.08837 | Angina_class | 0.02591 | FirstCVSurgery | 0.00000 |

| Cardiac_Presentation_on_Admission | 0.02033 | PCI_timing | −1.08030 | warfarin_A | 0.00000 |

| gender_id | 0.32028 | congestive_heart_failure_A | 0.19182 | ace_inhibitors_A | −0.23946 |

| CABG_status | 0.34619 | adp_inhibitors_within_5days | 0.36213 | Carotid_disease | −0.32121 |

| nitratesIV | 0.31625 | cardiogenic_shock | 0.38599 | aspirin_A | −0.21897 |

| f_history_cad | 0.12597 | intracardiac_device | 0.16868 | left_main_disease | −0.09149 |

| beta_blockers_A | −0.32527 | pulmonary_artery_hypertension | 2.15283 | bronchodilators | −0.43283 |

| NYHA_class | 0.12320 | Coronaries_diseased | 0.15501 |

| Variable | Value | Variable | Value | Variable | Value |

|---|---|---|---|---|---|

| last_cretenine_preop | 0.27735 | arrhythmia | 0.58070 | SustVTVF | −0.01454 |

| last_wbc_count | 0.05527 | lipid_lowering_A | 0.08576 | myocardial_infarction | −0.07164 |

| patient_age | 0.13353 | Prior_PCI | −1.22463 | resuscitation | 3.02020 |

| BPsystolic | 0.03054 | Cerebovascular_disease | 0.14033 | dialysis | 0.21827 |

| last_hematocrit | −0.01369 | Statin_A | 0.04562 | steroids | 0.08374 |

| diastolic | −0.00455 | MI_timing | 0.01957 | previous_coronary_bypass | 0.00000 |

| pulmonary_insuff | 0.00650 | inotropes | −1.03363 | previous_valve | 0.00000 |

| Mitral_regurgitation | 0.03187 | Angina_class | 0.03338 | FirstCVSurgery | 0.00000 |

| Cardiac_Presentation_on_Admission | 0.00038 | PCI_timing | −0.61239 | warfarin_A | 0.00000 |

| gender_id | 0.25862 | congestive_heart_failure_A | 0.24248 | ace_inhibitors_A | −0.18392 |

| CABG_status | 0.14018 | adp_inhibitors_within_5days | 0.10193 | Carotid_disease | 0.19897 |

| nitratesIV | 0.09323 | cardiogenic_shock | 0.62368 | aspirin_A | −0.06419 |

| f_history_cad | 0.02320 | intracardiac_device | −0.20054 | left_main_disease | −0.03968 |

| beta_blockers_A | −0.15233 | pulmonary_artery_hypertension | 1.65099 | bronchodilators | −0.18209 |

| NYHA_class | 0.04937 | Coronaries_diseased | 0.12048 |

| Variable | Value | Variable | Value | Variable | Value |

|---|---|---|---|---|---|

| last_cretenine_preop | 0.30488 | arrhythmia | 0.79300 | SustVTVF | −0.05269 |

| last_wbc_count | 0.14100 | lipid_lowering_A | 0.11070 | myocardial_infarction | −0.17027 |

| patient_age | 0.27325 | Prior_PCI | 1.99228 | resuscitation | −0.00445 |

| BPsystolic | 0.18533 | Cerebovascular_disease | 0.24745 | dialysis | −0.30446 |

| last_hematocrit | −0.12108 | Statin_A | 0.10900 | steroids | 0.07558 |

| diastolic | −0.05952 | MI_timing | 0.14651 | previous_coronary_bypass | 0.00000 |

| pulmonary_insuff | 0.12881 | inotropes | −0.34767 | previous_valve | 0.00000 |

| Mitral_regurgitation | 0.08367 | Angina_class | 0.03855 | FirstCVSurgery | 0.00000 |

| Cardiac_Presentation_on_Admission | −0.00783 | PCI_timing | 1.04908 | warfarin_A | 0.00000 |

| gender_id | 0.35086 | congestive_heart_failure_A | 0.19975 | ace_inhibitors_A | −0.22757 |

| CABG_status | 0.33625 | adp_inhibitors_within_5days | 0.35751 | Carotid_disease | 0.00765 |

| nitratesIV | 0.31133 | cardiogenic_shock | −0.13044 | aspirin_A | −0.16028 |

| f_history_cad | 0.14661 | intracardiac_device | 0.01240 | left_main_disease | −0.08449 |

| beta_blockers_A | −0.26874 | pulmonary_artery_hypertension | 0.61975 | bronchodilators | −0.21460 |

| NYHA_class | 0.14968 | Coronaries_diseased | 0.28836 |

References

- Abd-Elrazek, M.A.; Eltahawi, A.A.; Elaziz, M.H.A.; Abd-Elwhab, M.N. Predicting length of stay in hospitals intensive care unit using general admission features. Ain Shams Eng. J. 2021, 12, 3691–3702. [Google Scholar] [CrossRef]

- Rahman, M.; Kundu, D.; Alam Suha, S.; Siddiqi, U.R.; Dey, S.K. Hospital patients’ length of stay prediction: A federated learning approach. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 7874–7884. [Google Scholar] [CrossRef]

- Samad, Z.; Hanif, B. Cardiovascular Diseases in Pakistan: Imagining a Postpandemic, Postconflict Future. Circulation 2023, 147, 1261–1263. [Google Scholar] [CrossRef] [PubMed]

- Mehta, N.; Pandit, A.; Shukla, S. Transforming healthcare with big data analytics and artificial intelligence: A systematic mapping study. J. Biomed. Inform. 2019, 100, 103311. [Google Scholar] [CrossRef]

- Fernandes, M.P.B.; de la Hoz, M.A.; Rangasamy, V.; Subramaniam, B. Machine Learning Models with Preoperative Risk Factors and Intraoperative Hypotension Parameters Predict Mortality After Cardiac Surgery. J. Cardiothorac. Vasc. Anesth. 2021, 35, 857–865. [Google Scholar] [CrossRef]

- Nilsson, J.; Ohlsson, M.; Thulin, L.; Höglund, P.; Nashef, S.A.; Brandt, J. Risk factor identification and mortality prediction in cardiac surgery using artificial neural networks. J. Thorac. Cardiovasc. Surg. 2006, 132, 12–19.e1. [Google Scholar] [CrossRef]

- Tsai, P.F.; Chen, P.C.; Chen, Y.Y.; Song, H.Y.; Lin, H.M.; Lin, F.M.; Huang, Q.P. Length of Hospital Stay Prediction at the Admission Stage for Cardiology Patients Using Artificial Neural Network. J. Health Eng. 2016, 2016, 7035463. [Google Scholar] [CrossRef]

- Alshakhs, F.; Alharthi, H.; Aslam, N.; Khan, I.U.; Elasheri, M. Predicting Postoperative Length of Stay for Isolated Coronary Artery Bypass Graft Patients Using Machine Learning. Int. J. Gen. Med. 2020, 13, 751–762. [Google Scholar] [CrossRef]

- Austin, P.C.; Rothwell, D.M.; Tu, J.V. A Comparison of Statistical Modeling Strategies for Analyzing Length of Stay after CABG Surgery. Health Serv. Outcomes Res. Methodol. 2002, 3, 107–133. [Google Scholar] [CrossRef]

- Shickel, B.; Tighe, P.J.; Bihorac, A.; Rashidi, P. Deep EHR: A Survey of Recent Advances in Deep Learning Techniques for Electronic Health Record (EHR) Analysis. IEEE J. Biomed. Health Inform. 2018, 22, 1589–1604. [Google Scholar] [CrossRef]

- Jahandideh, S.; Ozavci, G.; Sahle, B.W.; Kouzani, A.Z.; Magrabi, F.; Bucknall, T. Evaluation of machine learning-based models for prediction of clinical deterioration: A systematic literature review. Int. J. Med. Inform. 2023, 175, 105084. [Google Scholar] [CrossRef] [PubMed]

- Colella, Y.; Scala, A.; De Lauri, C.; Bruno, F.; Cesarelli, G.; Ferrucci, G.; Borrelli, A. Studying variables affecting the length of stay in patients with lower limb fractures by means of Machine Learning. In Proceedings of the 2021 5th International Conference on Medical and Health Informatics, Kyoto, Japan, 14–16 May 2021; ACM: New York, NY, USA, 2021. [Google Scholar] [CrossRef]

- Colella, Y.; De Lauri, C.; Ponsiglione, A.M.; Giglio, C.; Lombardi, A.; Borrelli, A.; Amato, F.; Romano, M. A comparison of different Machine Learning algorithms for predicting the length of hospital stay for pediatric patients. In Proceedings of the 2021 International Symposium on Biomedical Engineering and Computational Biology, Nanchang, China, 13–15 August 2021; ACM: New York, NY, USA, 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Trunfio, T.A.; Scala, A.; Della Vecchia, A.; Marra, A.; Borrelli, A. Multiple Regression Model to Predict Length of Hospital Stay for Patients Undergoing Femur Fracture Surgery at ‘San Giovanni di Dio e Ruggi d’Aragona’ University Hospital. In Proceedings of the 8th European Medical and Biological Engineering Conference, Portorož, Slovenia, 29 November–3 December 2020; Springer: Cham, Switzerland, 2021; pp. 840–847. [Google Scholar] [CrossRef]

- Abbas, A.; Mosseri, J.; Lex, J.R.; Toor, J.; Ravi, B.; Khalil, E.B.; Whyne, C. Machine learning using preoperative patient factors can predict duration of surgery and length of stay for total knee arthroplasty. Int. J. Med. Inform. 2021, 158, 104670. [Google Scholar] [CrossRef]

- Barsasella, D.; Gupta, S.; Malwade, S.; Aminin; Susanti, Y.; Tirmadi, B.; Mutamakin, A.; Jonnagaddala, J.; Syed-Abdul, S. Predicting length of stay and mortality among hospitalized patients with type 2 diabetes mellitus and hypertension. Int. J. Med. Inform. 2021, 154, 104569. [Google Scholar] [CrossRef] [PubMed]

- Zhong, H.; Wang, B.; Wang, D.; Liu, Z.; Xing, C.; Wu, Y.; Gao, Q.; Zhu, S.; Qu, H.; Jia, Z.; et al. The application of machine learning algorithms in predicting the length of stay following femoral neck fracture. Int. J. Med. Inform. 2021, 155, 104572. [Google Scholar] [CrossRef] [PubMed]

- Hachesu, P.R.; Ahmadi, M.; Alizadeh, S.; Sadoughi, F. Use of Data Mining Techniques to Determine and Predict Length of Stay of Cardiac Patients. Health Inform. Res. 2013, 19, 121. [Google Scholar] [CrossRef]

- Wright, S.; Verouhis, D.; Gamble, G.; Swedberg, K.; Sharpe, N.; Doughty, R.N. Factors influencing the length of hospital stay of patients with heart failure. Eur. J. Heart Fail. 2003, 5, 201–209. [Google Scholar] [CrossRef] [PubMed]

- Rowan, M.; Ryan, T.; Hegarty, F.; O’hare, N. The use of artificial neural networks to stratify the length of stay of cardiac patients based on preoperative and initial postoperative factors. Artif. Intell. Med. 2007, 40, 211–221. [Google Scholar] [CrossRef] [PubMed]

- Tu, J.V.; Guerriere, M.R. Use of a Neural Network as a Predictive Instrument for Length of Stay in the Intensive Care Unit Following Cardiac Surgery. Comput. Biomed. Res. 1993, 26, 220–229. [Google Scholar] [CrossRef]

- Morton, A.; Marzban, E.; Giannoulis, G.; Patel, A.; Aparasu, R.; Kakadiaris, I.A. A Comparison of Supervised Machine Learning Techniques for Predicting Short-Term In-Hospital Length of Stay among Diabetic Patients. In Proceedings of the 2014 13th International Conference on Machine Learning and Applications (ICMLA), Washington, DC, USA, 3–6 December 2014; pp. 428–431. [Google Scholar] [CrossRef]

- Chuang, M.-T.; Hu, Y.-H.; Tsai, C.-F.; Lo, C.-L.; Lin, W.-C. The Identification of Prolonged Length of Stay for Surgery Patients. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Hong Kong, China, 9–12 October 2015; pp. 3000–3003. [Google Scholar]

- Omachonu, V.K.; Suthummanon, S.; Akcin, M.; Asfour, S. Predicting length of stay for Medicare patients at a teaching hospital. Health Serv. Manag. Res. 2004, 17, 1–12. [Google Scholar] [CrossRef]

- Khosravizadeh, O.; Vatankhah, S.; Bastani, P.; Kalhor, R.; Alirezaei, S.; Doosty, F. Factors affecting length of stay in teaching hospitals of a middle-income country. Electron. Phys. 2016, 8, 3042–3047. [Google Scholar] [CrossRef]

- Mekhaldi, R.N.; Caulier, P.; Chaabane, S.; Chraibi, A.; Piechowiak, S. Using Machine Learning Models to Predict the Length of Stay in a Hospital Setting. In Trends and Innovations in Information Systems and Technologies, Proceedings of the WorldCIST 2020, Budva, Montenegro, 7–10 April 2020; Springer: Cham, Switzerland, 2020; pp. 202–211. [Google Scholar] [CrossRef]

- Cai, X.; Perez-Concha, O.; Coiera, E.; Martin-Sanchez, F.; Day, R.; Roffe, D.; Gallego, B. Real-time prediction of mortality, readmission, and length of stay using electronic health record data. J. Am. Med. Inform. Assoc. 2016, 23, 553–561. [Google Scholar] [CrossRef]

- Li, J.-S.; Tian, Y.; Liu, Y.-F.; Shu, T.; Liang, M.-H. Applying a BP Neural Network Model to Predict the Length of Hospital Stay. In Health Information Science, Proceedings of the HIS 2013, London, UK, 25–27 March 2013; Springer: Cham, Switzerland, 2013; pp. 18–29. [Google Scholar] [CrossRef]

- Sáez-Castillo, A.J.; Olmo-Jiménez, M.J.; Sánchez, J.M.P.; Hernández, M.Á.N.; Arcos-Navarro, Á.; Díaz-Oller, J. Bayesian Analysis of Nosocomial Infection Risk and Length of Stay in a Department of General and Digestive Surgery. Value Health 2010, 13, 431–439.

- Ng, S.K.; Yau, K.K.W.; Lee, A.H. Modelling inpatient length of stay by a hierarchical mixture regression via the EM algorithm. Math. Comput. Model. 2003, 37, 365–375.

- Tang, X.; Luo, Z.; Gardiner, J.C. Modeling hospital length of stay by Coxian phase-type regression with heterogeneity. Stat. Med. 2012, 31, 1502–1516.

- Steenman, M.; Lande, G. Cardiac aging and heart disease in humans. Biophys. Rev. 2017, 9, 131–137.

- Rodgers, J.L.; Jones, J.; Bolleddu, S.I.; Vanthenapalli, S.; Rodgers, L.E.; Shah, K.; Karia, K.; Panguluri, S.K. Cardiovascular Risks Associated with Gender and Aging. J. Cardiovasc. Dev. Dis. 2019, 6, 19.

- Natarajan, A.; Samadian, S.; Clark, S. Coronary artery bypass surgery in elderly people. Postgrad. Med. J. 2007, 83, 154–158.

- Ahmad, N.; Raid, M.; Alzyadat, J.; Alhawal, H. Impact of urbanization and income inequality on life expectancy of male and female in South Asian countries: A moderating role of health expenditures. Humanit. Soc. Sci. Commun. 2023, 10, 552.

- Ghazizadeh, H.; Mirinezhad, S.M.R.; Asadi, Z.; Parizadeh, S.M.; Zare-Feyzabadi, R.; Shabani, N.; Eidi, M.; Farkhany, E.M.; Esmaily, H.; Mahmoudi, A.A.; et al. Association between obesity categories with cardiovascular disease and its related risk factors in the MASHAD cohort study population. J. Clin. Lab. Anal. 2020, 34, e23160.

- Powell-Wiley, T.M.; Poirier, P.; Burke, L.E.; Després, J.-P.; Gordon-Larsen, P.; Lavie, C.J.; Lear, S.A.; Ndumele, C.E.; Neeland, I.J.; Sanders, P.; et al. Obesity and Cardiovascular Disease: A Scientific Statement from the American Heart Association. Circulation 2021, 143, e984–e1010.

- van Oostrom, O.; Velema, E.; Schoneveld, A.H.; de Vries, J.P.P.; de Bruin, P.; Seldenrijk, C.A.; de Kleijn, D.P.; Busser, E.; Moll, F.L.; Verheijen, J.H.; et al. Age-related changes in plaque composition. Cardiovasc. Pathol. 2005, 14, 126–134.

- Sertedaki, E.; Veroutis, D.; Zagouri, F.; Galyfos, G.; Filis, K.; Papalambros, A.; Aggeli, K.; Tsioli, P.; Charalambous, G.; Zografos, G.; et al. Carotid Disease and Ageing: A Literature Review on the Pathogenesis of Vascular Senescence in Older Subjects. Curr. Gerontol. Geriatr. Res. 2020, 2020, 8601762.

- Grufman, H.; Schiopu, A.; Edsfeldt, A.; Björkbacka, H.; Nitulescu, M.; Nilsson, M.; Persson, A.; Nilsson, J.; Gonçalves, I. Evidence for altered inflammatory and repair responses in symptomatic carotid plaques from elderly patients. Atherosclerosis 2014, 237, 177–182.

- Moon-Grady, A.J.; Donofrio, M.T.; Gelehrter, S.; Hornberger, L.; Kreeger, J.; Lee, W.; Michelfelder, E.; Morris, S.A.; Peyvandi, S.; Pinto, N.M.; et al. Guidelines and Recommendations for Performance of the Fetal Echocardiogram: An Update from the American Society of Echocardiography. J. Am. Soc. Echocardiogr. 2023, 36, 679–723.

- Klein, A.L.; Burstow, D.J.; Tajik, A.J.; Zachariah, P.K.; Taliercio, C.P.; Taylor, C.L.; Bailey, K.R.; Seward, J.B. Age-related Prevalence of Valvular Regurgitation in Normal Subjects: A Comprehensive Color Flow Examination of 118 Volunteers. J. Am. Soc. Echocardiogr. 1990, 3, 54–63.

- Ponikowski, P.; Voors, A.A.; Anker, S.D.; Bueno, H.; Cleland, J.G.F.; Coats, A.J.S.; Falk, V.; González-Juanatey, J.R.; Harjola, V.-P.; Jankowska, E.A.; et al. 2016 ESC Guidelines for the diagnosis and treatment of acute and chronic heart failure. Eur. Heart J. 2016, 37, 2129–2200.

- Yancy, C.W.; Jessup, M.; Bozkurt, B.; Butler, J.; Casey, D.E.; Drazner, M.H.; Fonarow, G.C.; Geraci, S.A.; Horwich, T.; Januzzi, J.L.; et al. 2013 ACCF/AHA Guideline for the Management of Heart Failure. Circulation 2013, 128, e240–e327.

- DesJardin, J.T.; Teerlink, J.R. Inotropic therapies in heart failure and cardiogenic shock: An educational review. Eur. Heart J. Acute Cardiovasc. Care 2021, 10, 676–686.

- Jakobsen, J.C.; Gluud, C.; Wetterslev, J.; Winkel, P. When and how should multiple imputation be used for handling missing data in randomised clinical trials—A practical guide with flowcharts. BMC Med. Res. Methodol. 2017, 17, 162.

- Altmann, A.; Toloşi, L.; Sander, O.; Lengauer, T. Permutation importance: A corrected feature importance measure. Bioinformatics 2010, 26, 1340–1347.

- Molnar, C.; König, G.; Herbinger, J.; Freiesleben, T.; Dandl, S.; Scholbeck, C.A.; Casalicchio, G.; Grosse-Wentrup, M.; Bischl, B. General Pitfalls of Model-Agnostic Interpretation Methods for Machine Learning Models. In International Workshop on Extending Explainable AI Beyond Deep Models and Classifiers, Proceedings of the xxAI—Beyond Explainable AI, Vienna, Austria, 18 July 2020; Springer: Cham, Switzerland, 2020.

- Strobl, C.; Boulesteix, A.-L.; Zeileis, A.; Hothorn, T. Bias in random forest variable importance measures: Illustrations, sources and a solution. BMC Bioinform. 2007, 8, 25.

- Minnier, J.; Tian, L.; Cai, T. A Perturbation Method for Inference on Regularized Regression Estimates. J. Am. Stat. Assoc. 2011, 106, 1371–1382.

- Watson, S.I.; Lilford, R.J.; Sun, J.; Bion, J. Estimating the effect of health service delivery interventions on patient length of stay: A Bayesian survival analysis approach. J. R. Stat. Soc. Ser. C Appl. Stat. 2021, 70, 1164–1186.

- Gilks, W.R. Markov Chain Monte Carlo. In Encyclopedia of Biostatistics; Wiley: Hoboken, NJ, USA, 2005.

- Metropolis, N.; Rosenbluth, A.W.; Rosenbluth, M.N.; Teller, A.H.; Teller, E. Equation of State Calculations by Fast Computing Machines. J. Chem. Phys. 1953, 21, 1087–1092.

- Geman, S.; Geman, D. Stochastic Relaxation, Gibbs Distributions, and the Bayesian Restoration of Images. IEEE Trans. Pattern Anal. Mach. Intell. 1984, PAMI-6, 721–741.

- Hoffman, M.D.; Gelman, A. The No-U-Turn Sampler: Adaptively Setting Path Lengths in Hamiltonian Monte Carlo. J. Mach. Learn. Res. 2014, 15, 1593–1623.

- Abril-Pla, O.; Andreani, V.; Carroll, C.; Dong, L.; Fonnesbeck, C.J.; Kochurov, M.; Kumar, R.; Lao, J.; Luhmann, C.C.; Martin, O.A.; et al. PyMC: A modern, and comprehensive probabilistic programming framework in Python. PeerJ Comput. Sci. 2023, 9, e1516.

- Owen, A.B. A robust hybrid of lasso and ridge regression. In Prediction and Discovery; American Mathematical Society: Providence, RI, USA, 2007; pp. 59–71.

- Chen, T.; Guestrin, C. XGBoost. In Proceedings of the KDD’16: 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; ACM: New York, NY, USA, 2016; pp. 785–794.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Tibshirani, R. Regression Shrinkage and Selection Via the Lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

- Hoerl, A.E.; Kennard, R.W. Ridge Regression: Biased Estimation for Nonorthogonal Problems. Technometrics 1970, 12, 55.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Code for the Creation & Testing of the Model. Available online: https://github.com/IBA-THI/Predicting-LoS-Bayesian-Models-VS-Machine-Learning-Models (accessed on 18 October 2023).

- Brown, C.E. Coefficient of Variation. In Applied Multivariate Statistics in Geohydrology and Related Sciences; Springer: Berlin/Heidelberg, Germany, 1998; pp. 155–157.

- Taylor, K.E. Summarizing multiple aspects of model performance in a single diagram. J. Geophys. Res. Atmos. 2001, 106, 7183–7192.

- Roy, V. Convergence Diagnostics for Markov Chain Monte Carlo. Annu. Rev. Stat. Its Appl. 2020, 7, 387–412.

- Gelman, A.; Rubin, D. Inference from Iterative Simulation Using Multiple Sequences. Stat. Sci. 1992, 7, 457–511.

- Lazar, H.L.; Fitzgerald, C.; Gross, S.; Heeren, T.; Aldea, G.S.; Shemin, R.J. Determinants of Length of Stay After Coronary Artery Bypass Graft Surgery. Circulation 1995, 92, 20–24.

- Chiu, W.-T.; Chan, L.; Masud, J.H.B.; Hong, C.-T.; Chien, Y.-S.; Hsu, C.-H.; Wu, C.-H.; Wang, C.-H.; Tan, S.; Chung, C.-C. Identifying Risk Factors for Prolonged Length of Stay in Hospital and Developing Prediction Models for Patients with Cardiac Arrest Receiving Targeted Temperature Management. Rev. Cardiovasc. Med. 2023, 24, 55.

- Siddiqa, A.; Naqvi, S.A.Z.; Ahsan, M.; Ditta, A.; Alquhayz, H.; Khan, M.A. Robust Length of Stay Prediction Model for Indoor Patients. Comput. Mater. Contin. 2022, 70, 5519–5536.

- Bajwa, M.S.; Sohail, M.; Ali, H.; Nazir, U.; Bashir, M.M. Predicting Thermal Injury Patient Outcomes in a Tertiary-Care Burn Center, Pakistan. J. Surg. Res. 2022, 279, 575–585.

(a) Dataset (Continuous Variables)

| Feature | Description | Type | Min | Max | Average | Std |
|---|---|---|---|---|---|---|
| admission_to_surgery | Admission to surgery (Days) | Continuous | 0 | 21 | 2.26 | 1.94 |
| LOS_Surgery_to_discharge | Surgery to discharge (Days) | Continuous | 0 | 59 | 6.04 | 2.97 |
| last_wbc_count | Last WBC count (×10⁹/L) | Continuous | 2.8 | 48.8 | 9.48 | 2.77 |
| BMI | BMI (kg/m²) | Continuous | 14.82 | 47.08 | 26.82 | 4.35 |
| Patient_age | Age of patient (Years) | Continuous | 23 | 90 | 58.07 | 8.66 |
| last_hematocrit | Last hematocrit value (%) | Continuous | 21.2 | 56.2 | 40.51 | 4.92 |
| last_cretenine_preop | Last creatinine value (mg/dL) | Continuous | 0.41 | 13.3 | 1.14 | 0.63 |
| BPsystolic | Pre-operative systolic BP (mmHg) | Continuous | 76 | 199 | 122.49 | 15.94 |
| diastolic | Pre-operative diastolic BP (mmHg) | Continuous | 27 | 117 | 70.36 | 9.63 |
| ejection_fraction | Pre-operative LV ejection fraction (%) | Continuous | 10 | 65 | 42.89 | 11.18 |
| weight | Weight of the patient (kg) | Continuous | 36 | 128 | 71.87 | 12.74 |
| height | Height of the patient (cm) | Continuous | 123 | 191 | 163.6 | 8.33 |

(b) Dataset (Categorical Variables)

| Feature | Description | Type | Labels | n (%) |
|---|---|---|---|---|
| gender_id | Gender of patient | Categorical | Male, Female | 4360 (82), 954 (17.9) |
| pulmonary_artery_done | Test for pulmonary artery mean pressure conducted? | Categorical | Yes, No | 2205 (41.4), 3109 (58.5) |
| Active_tobacco_use | Tobacco use within the last 6 months | Categorical | Yes, No | 1308 (25.8), 3975 (74.1) |
| f_history_cad | Family history of coronary artery disease | Categorical | Yes, No | 2664 (49.6), 2699 (50.3) |
| diabetes | Diabetes/insulin use | Categorical | No, Yes (Non-Insulin Dependent), Yes (Insulin Dependent) | 2067 (38.5), 2211 (41.2), 1085 (20.3) |
| myocardial_infarction | Any prior myocardial infarction (MI) | Categorical | Yes, No | 3816 (71.1), 1547 (28.8) |
| MI_timing | Time between MI and CABG | Categorical | No MI, <6 h, >6–24 h, 1–7 days, 8–21 days, >21 days | 1584 (29.5), 16 (0.29), 26 (0.48), 1473 (27.4), 938 (17.4), 1326 (24.7) |
| congestive_heart_failure_A | Congestive heart failure | Categorical | Yes, No | 471 (8.7), 4892 (91.2) |
| NYHA_class | NYHA (New York Heart Association) shortness of breath class during the last 2 weeks | Categorical | Not applicable, NYHA I, NYHA II, NYHA III, NYHA IV | 2482 (46.2), 62 (1.1), 1059 (19.7), 1513 (28.2), 247 (4.6) |
| Cardiac_Presentation_on_Admission | Cardiac symptoms on arrival | Categorical | No Symptoms of Angina, Symptoms but unlikely to be ischemic, Stable Angina, Unstable Angina, Non-ST Elevation MI, ST Elevation MI | 301 (5.6), 370 (6.8), 575 (10.7), 1664 (31.0), 1778 (33.1), 675 (12.5) |

| | | |
|---|---|---|
| patient_age | arrhythmia | Arrhythmia Type Sust VT/VF |
| last_wbc_count | lipid_lowering | myocardial_infarction |
| last_cretenine_preop | Prior_PCI | resuscitation |
| BPsystolic | Cerebovascular_disease | dialysis |
| last_hematocrit | Pulmonary_insuff | steroids |
| Cardiac_Presentation_on_Admission | MI_timing | previous_coronary_bypass |
| Statin | inotropes | previous_valve |
| Mitral_regurgitation | Angina_class | FirstCVSurgery |
| diastolic | PCI_timing | warfarin |
| gender_id | congestive_heart_failure | ace_inhibitors |
| CABG_status | adp_inhibitors_within_5days | Carotid_disease |
| nitratesIV | cardiogenic_shock | aspirin |
| family_history_of Cardiac_disease | intracardiac_device | left_main_disease |
| beta_blockers | pulmonary_artery_hypertension | bronchodilators |
| NYHA_class | Coronaries_diseased | |

| Model | Hyperparameters |
|---|---|
| Stochastic Gradient Descent Regression | learning rate: adaptive, inverse scaling factor: 0.899, regularization parameter: 0.890 |
| Huber Regression [57] | k: 4 |
| XGBoost Regression [58] | subsample: 0.8, number of estimators: 1800, minimum sample split: 5, minimum samples leaf: 4, minimum child weight: 6, maximum features: auto, maximum depth: 68, learning rate: 0.01, column sample by tree: 0.2, booster: gbtree, alpha: 0.8, lambda: 0.8 |
| Random Forest Regression [59] | number of estimators: 1200, minimum sample split: 10, minimum sample leaf: 4, maximum features: sqrt, maximum depth: 20, bootstrap: False |
| Lasso Regression [60] | λ: 0.01 |
| Ridge Regression [61] | λ: 1.08 |
| Support Vector Regression [62] | kernel: polynomial, degree: 2, regularization: 0.3 |
| Multiple Linear Regression | |

| | Mean | Standard Deviation | Min | Max | CV | Adjusted R-Squared | RMSE | MAE |
|---|---|---|---|---|---|---|---|---|
| Actual | 8.37 | 3.65 | 1 | 47 | 0.43 | - | - | - |
| HBM | 8.32 | 3.23 | 4 | 40 | 0.38 | 82.3 | 1.49 | 1.16 |
| SBM | 8.31 | 1.41 | 5 | 16 | 0.17 | 11.9 | 3.36 | 2.05 |
| XGB | 8.36 | 1.80 | 6 | 16 | 0.21 | 17.4 | 3.25 | 1.88 |
| RF | 8.34 | 1.60 | 6 | 15 | 0.19 | 18.4 | 3.23 | 1.87 |
| SVR | 7.66 | 1.55 | 5 | 15 | 0.20 | 11.9 | 3.36 | 1.85 |
| Lasso | 8.28 | 0.13 | 8 | 9 | 0.01 | −2.15 | 3.61 | 2.28 |
| Ridge | 8.36 | 1.69 | 5 | 16 | 0.20 | 11.4 | 3.37 | 2.00 |
| SGD | 8.35 | 1.67 | 5 | 15 | 0.20 | 11.4 | 3.36 | 2.00 |
| HR | 8.26 | 1.59 | 5 | 15 | 0.19 | 11.6 | 3.36 | 1.98 |
| MLR | 8.36 | 1.70 | 5 | 16 | 0.20 | 11.3 | 3.37 | 2.00 |
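The summary columns in the comparison table (mean, standard deviation, min, max, CV, adjusted R-squared, RMSE, MAE) can be reproduced from a vector of observed and predicted lengths of stay. The following is a minimal sketch using the textbook definitions, not the authors' evaluation code: the function name `summarize_predictions`, the use of the population standard deviation, and the toy values are all illustrative assumptions. CV is taken as standard deviation divided by mean, and adjusted R-squared as 1 − (1 − R²)(n − 1)/(n − p − 1) for n samples and p predictors.

```python
import math


def summarize_predictions(y_true, y_pred, n_features):
    """Summary statistics for one model's predictions (hypothetical helper)."""
    n = len(y_true)
    if n != len(y_pred) or n - n_features - 1 <= 0:
        raise ValueError("need equal lengths and n > n_features + 1")

    # Distribution of the predictions themselves.
    mean_pred = sum(y_pred) / n
    # Population standard deviation (an assumption; the paper does not say
    # whether the sample or population form was used).
    sd_pred = math.sqrt(sum((p - mean_pred) ** 2 for p in y_pred) / n)

    # Error metrics against the observed values.
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(e) for e in errors) / n

    # R-squared, then the adjustment for the number of predictors.
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - n_features - 1)

    return {
        "mean": mean_pred,
        "sd": sd_pred,
        "min": min(y_pred),
        "max": max(y_pred),
        "cv": sd_pred / mean_pred,  # coefficient of variation
        "adj_r2": adj_r2,
        "rmse": rmse,
        "mae": mae,
    }


# Toy data (days), purely illustrative and not taken from the study.
observed = [5, 7, 8, 10, 12, 6]
predicted = [6, 7, 9, 9, 11, 6]
result = summarize_predictions(observed, predicted, n_features=2)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

A low CV for a model's predictions relative to the CV of the actual values indicates that the predictions cluster near the mean, which is the pattern the table shows for every model except the HBM.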

(a) Estimated Parameter Coefficient Value (Level 0)

| Parameter | θ ± sd | Parameter | θ ± sd | Parameter | θ ± sd |
|---|---|---|---|---|---|
| | −0.348 ± 0.20 | | 0.074 ± 0.19 | | −0.035 ± 0.18 |
| | 0.242 ± 0.08 | | 0.073 ± 0.19 | | 0.03 ± 0.18 |
| | 0.212 ± 0.16 | | 0.073 ± 0.18 | | −0.03 ± 0.06 |
| | 0.196 ± 0.1 | | 0.067 ± 0.09 | | −0.029 ± 0.18 |
| | 0.178 ± 0.06 | | 0.064 ± 0.02 | | −0.017 ± 0.07 |
| | 0.16 ± 0.07 | | 0.06 ± 0.19 | | −0.016 ± 0.03 |
| | 0.144 ± 0.14 | | 0.059 ± 0.17 | | −0.014 ± 0.03 |
| | 0.139 ± 0.12 | | 0.058 ± 0.02 | | −0.002 ± 0.09 |
| | −0.136 ± 0.09 | | −0.055 ± 0.09 | | 0.001 ± 0.18 |
| | 0.129 ± 0.10 | | 0.052 ± 0.17 | | −0.001 ± 0.03 |
| | −0.126 ± 0.19 | | 0.044 ± 0.03 | | |
| | −0.113 ± 0.16 | | −0.043 ± 0.03 | | |
| | 0.109 ± 0.13 | | 0.042 ± 0.13 | | |
| | −0.108 ± 0.06 | | 0.041 ± 0.04 | | |
| | 0.089 ± 0.06 | | 0.04 ± 0.14 | | |

(b) Estimated Parameter Coefficient Value (Level 1)

| Parameter | θ ± sd | Parameter | θ ± sd | Parameter | θ ± sd |
|---|---|---|---|---|---|
| | 0.388 ± 0.18 | | −0.12 ± 0.16 | | 0.06 ± 0.18 |
| | 0.326 ± 0.18 | | −0.11 ± 0.13 | | 0.06 ± 0.18 |
| | 0.317 ± 0.17 | | 0.11 ± 0.17 | | 0.06 ± 0.18 |
| | 0.305 ± 0.16 | | 0.10 ± 0.18 | | 0.06 ± 0.19 |
| | 0.277 ± 0.19 | | 0.09 ± 0.17 | | −0.05 ± 0.18 |
| | 0.277 ± 0.09 | | 0.08 ± 0.13 | | −0.05 ± 0.17 |
| | 0.241 ± 0.2 | | −0.08 ± 0.18 | | 0.03 ± 0.12 |
| | 0.235 ± 0.1 | | −0.08 ± 0.14 | | 0.01 ± 0.18 |
| | 0.21 ± 0.19 | | 0.08 ± 0.18 | | 0.01 ± 0.16 |
| | −0.19 ± 0.12 | | 0.07 ± 0.19 | | −0.01 ± 0.17 |
| | 0.175 ± 0.17 | | 0.07 ± 0.19 | | |
| | 0.147 ± 0.17 | | 0.07 ± 0.19 | | |
| | 0.145 ± 0.18 | | 0.07 ± 0.19 | | |
| | 0.137 ± 0.18 | | −0.07 ± 0.18 | | |
| | 0.134 ± 0.09 | | 0.07 ± 0.19 | | |
| | 0.133 ± 0.17 | | 0.072 ± 0.19 | | |
| | 0.072 ± 0.19 | | −0.006 ± 0.12 | | |

(c) Estimated Parameter Coefficient Value (Level 2)

| Parameter | θ ± sd | Parameter | θ ± sd | Parameter | θ ± sd |
|---|---|---|---|---|---|
| | 0.199 ± 0.17 | | 0.077 ± 0.19 | | 0.060 ± 0.19 |
| | 0.158 ± 0.18 | | 0.077 ± 0.18 | | 0.062 ± 0.18 |
| | 0.109 ± 0.19 | | 0.075 ± 0.18 | | 0.061 ± 0.19 |
| | 0.105 ± 0.18 | | 0.075 ± 0.19 | | 0.059 ± 0.19 |
| | 0.104 ± 0.19 | | 0.075 ± 0.19 | | 0.058 ± 0.19 |
| | 0.1 ± 0.18 | | 0.074 ± 0.19 | | 0.053 ± 0.19 |
| | 0.097 ± 0.19 | | 0.074 ± 0.19 | | 0.049 ± 0.18 |
| | 0.092 ± 0.19 | | 0.072 ± 0.19 | | 0.048 ± 0.19 |
| | 0.092 ± 0.18 | | 0.072 ± 0.18 | | 0.048 ± 0.19 |
| | 0.09 ± 0.19 | | 0.071 ± 0.19 | | 0.021 ± 0.19 |
| | 0.089 ± 0.18 | | 0.071 ± 0.19 | | |
| | 0.086 ± 0.18 | | 0.071 ± 0.19 | | |
| | 0.084 ± 0.18 | | 0.069 ± 0.18 | | |
| | 0.082 ± 0.19 | | 0.069 ± 0.18 | | |
| | 0.082 ± 0.19 | | 0.069 ± 0.19 | | |

(d) Estimated Parameter Coefficient Value (Level 3)

| Parameter | θ ± sd | Parameter | θ ± sd | Parameter | θ ± sd |
|---|---|---|---|---|---|
| | 0.103 ± 0.19 | | 0.075 ± 0.19 | | 0.068 ± 0.19 |
| | 0.099 ± 0.19 | | 0.074 ± 0.19 | | 0.067 ± 0.19 |
| | 0.097 ± 0.19 | | 0.074 ± 0.19 | | 0.067 ± 0.19 |
| | 0.087 ± 0.18 | | 0.074 ± 0.18 | | 0.066 ± 0.18 |
| | 0.087 ± 0.19 | | 0.074 ± 0.19 | | 0.064 ± 0.19 |
| | 0.084 ± 0.19 | | 0.073 ± 0.18 | | 0.063 ± 0.19 |
| | 0.083 ± 0.19 | | 0.073 ± 0.19 | | 0.063 ± 0.19 |
| | 0.083 ± 0.19 | | 0.073 ± 0.18 | | 0.061 ± 0.19 |
| | 0.083 ± 0.18 | | 0.072 ± 0.18 | | 0.059 ± 0.19 |
| | 0.081 ± 0.18 | | 0.072 ± 0.19 | | 0.057 ± 0.19 |
| | 0.081 ± 0.19 | | 0.072 ± 0.18 | | |
| | 0.08 ± 0.19 | | 0.071 ± 0.19 | | |
| | 0.078 ± 0.19 | | 0.07 ± 0.18 | | |
| | 0.077 ± 0.2 | | 0.07 ± 0.19 | | |
| | 0.077 ± 0.18 | | 0.069 ± 0.19 | | |

| Study | Models | Target Type | Variables | Metric | Results |
|---|---|---|---|---|---|
| [1] | ANN, Classification Trees, Tree Bagger, RF, Fuzzy Logic, SVM, KNN, Regression Trees, Naïve Bayes | Classification | Pre-op | Accuracy | 63.21%, 62.90%, 59.89%, 60.21%, 57.56%, 61.89%, 56.95%, 65.86% |
| [12] | RF, ANN | Classification | Pre-op | Accuracy | 92%, 95% |
| [15] | MR, LR, SGD, Elastic Net, Linear SVM, KNN, DT, RF, AdaBoost, XGB, Scikit MLP, PyTorch MLP | Regression | Pre-op | MSE | 0.9, 0.78, 0.78, 0.78, 0.77, 0.96, 0.88, 0.82, 0.84, 0.82, 0.78, 0.68 |
| [16] | LR, Gradient Boosting Regression, RF, SG | Regression | Pre-op | RMSE | 2.43, 1.97, 1.96, 2.46 |
| [17] | ANN, SVM, PCR | Regression | Intra-op | MAE | 3.0, 2.5, 2.14 |
| [18] | DT, ANN, SVM, Ensemble | Classification | Pre-op | Accuracy | 83.5%, 53.9%, 96.4%, 95.9% |
| [20] | ANN | Classification | Pre-op | AUC | 0.9 |
| [21] | ANN | Classification | Intra-op | ROC | 0.69 |
| [22] | RF, SVM, SVM (Learning Using Privileged Information), MTL (Multi-Task Learning), MLR | Classification | Intra-op | AUC | 0.70, 0.74, 0.76, 0.56, 0.45 |
| [23] | DT, SVM, RF | Classification | Intra-op | Accuracy | 0.75, 0.81, 0.87 |
| [24] | LR | Regression | Intra-op | MAPE | 17.65, 20.12, 22.45, 22.01, 21.84 |
| [27] | Bayesian Network | Classification | Intra-op | AUC | 0.83 |
| [28] | Bayesian Network | Classification | Intra-op | Accuracy | 80% |
| [71] | MLR, Lasso, Ridge, DTR, XGB, RF | Regression | Intra-op | MSE | 38.49, 42.19, 38.49, 5.93, 5.62, 5 |
| [72] | Logistic Regression | Classification | Intra-op | AUC | 0.82 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abdurrab, I.; Mahmood, T.; Sheikh, S.; Aijaz, S.; Kashif, M.; Memon, A.; Ali, I.; Peerwani, G.; Pathan, A.; Alkhodre, A.B.; et al. Predicting the Length of Stay of Cardiac Patients Based on Pre-Operative Variables—Bayesian Models vs. Machine Learning Models. Healthcare 2024, 12, 249. https://doi.org/10.3390/healthcare12020249

APA Style: Abdurrab, I., Mahmood, T., Sheikh, S., Aijaz, S., Kashif, M., Memon, A., Ali, I., Peerwani, G., Pathan, A., Alkhodre, A. B., & Siddiqui, M. S. (2024). Predicting the Length of Stay of Cardiac Patients Based on Pre-Operative Variables—Bayesian Models vs. Machine Learning Models. Healthcare, 12(2), 249. https://doi.org/10.3390/healthcare12020249