Clinical Validation of Digital Healthcare Solutions: State of the Art, Challenges and Opportunities

Abstract

1. Introduction

2. Materials and Methods

2.1. Search Strategy

2.2. Bibliometric and Visual Analysis

2.3. Literature Review and Data Collection

3. Results

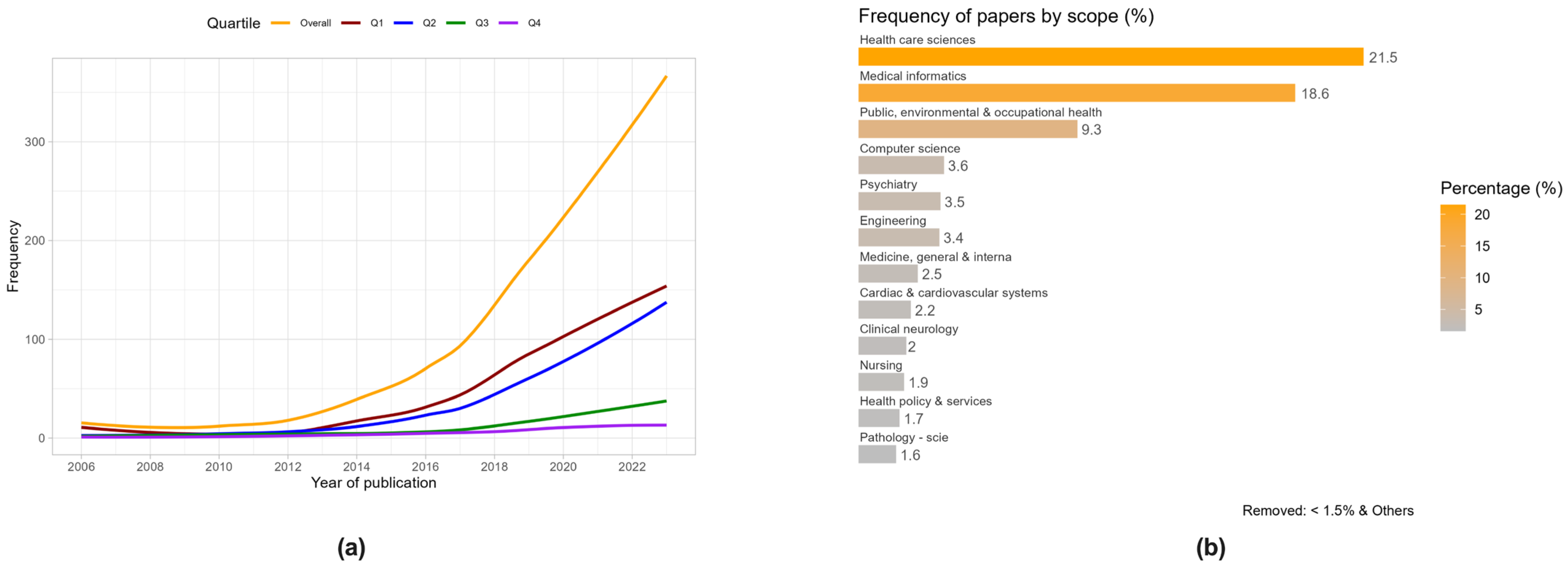

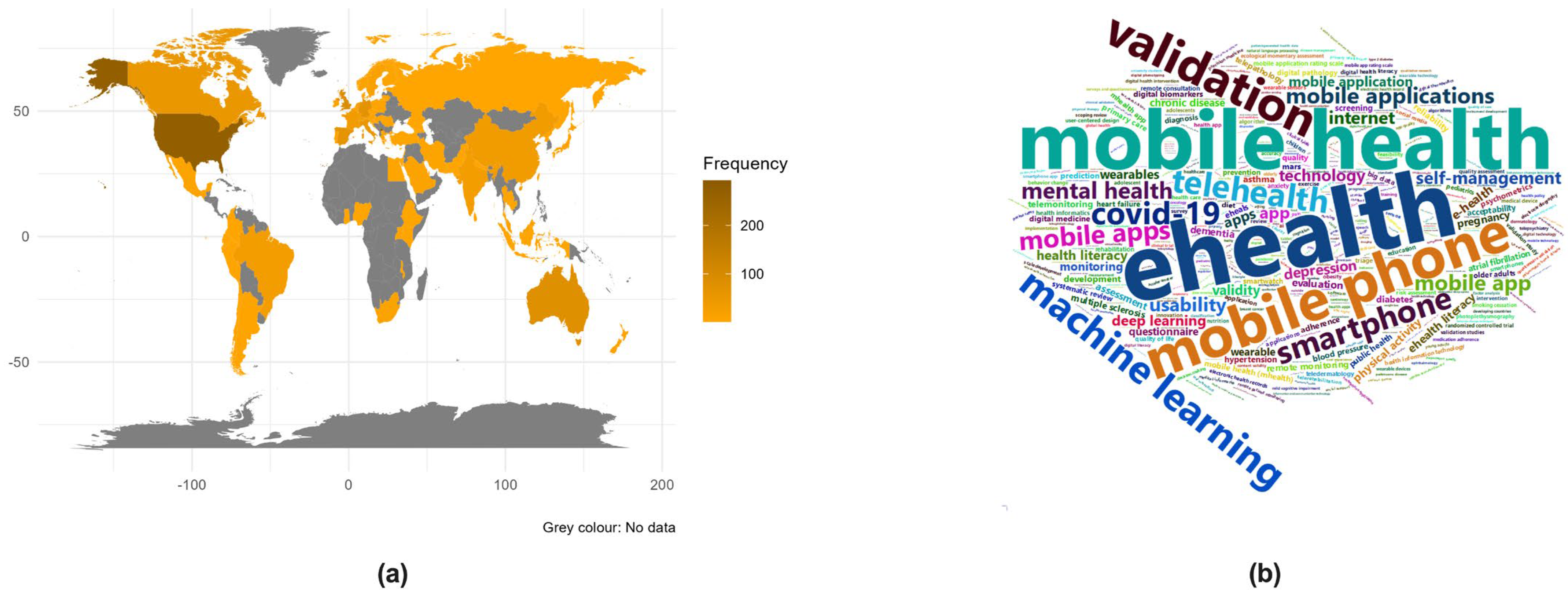

3.1. Bibliometric and Visual Analysis

3.2. Literature Review

3.2.1. Challenges Faced When Evaluating Digital Health Technologies

Challenges from a General and Conceptual Perspective

Challenges in Adoption and Implementation

Challenges of Accreditation Processes

Challenges in Ethical, Legal, and Social Implications

3.2.2. Reviews of DHTs Evaluation Frameworks

3.2.3. Articles Proposing a Multidimensional Evaluation Framework or Model

3.2.4. Articles Proposing or Reviewing Specific Evaluation Dimensions

Safety

Economic Evaluation

Evidence of Clinical Effectiveness

4. Discussion

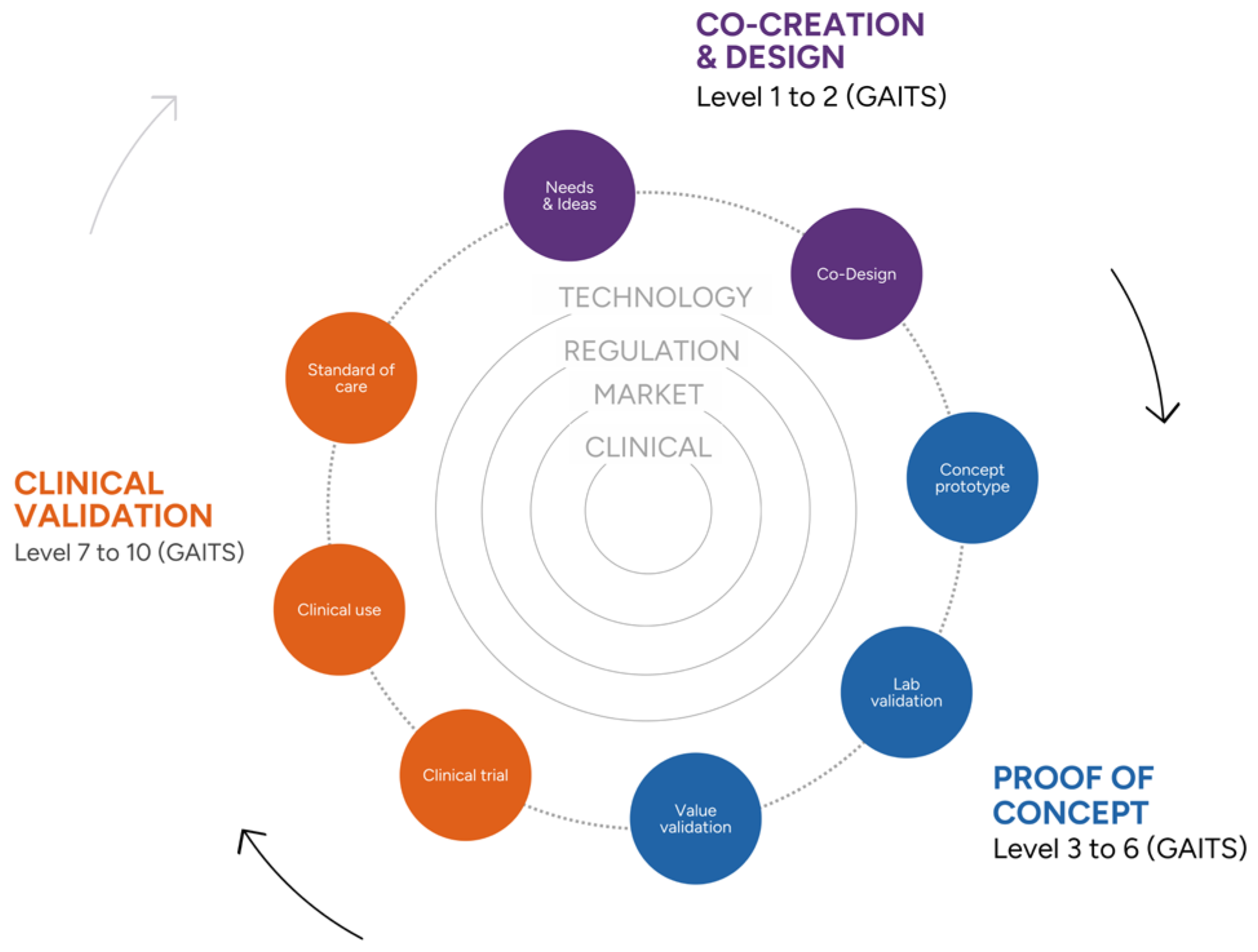

4.1. New Opportunities

The Digital Health Validation Center

4.2. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gomis-Pastor, M.; Berdún, J.; Borrás-Santos, A.; De Dios López, A.; Fernández-Montells Rama, B.; García-Esquirol, Ó.; Gratacòs, M.; Ontiveros Rodríguez, G.D.; Pelegrín Cruz, R.; Real, J.; Bachs i Ferrer, J.; Comella, A. Clinical Validation of Digital Healthcare Solutions: State of the Art, Challenges and Opportunities. Healthcare 2024, 12, 1057. https://doi.org/10.3390/healthcare12111057