Fine-Grained Motion Recognition in At-Home Fitness Monitoring with Smartwatch: A Comparative Analysis of Explainable Deep Neural Networks

Abstract

1. Introduction

- We formulated a machine learning problem for recognizing fine-grained differences in a specific at-home fitness activity (squat) in a supervised learning fashion.

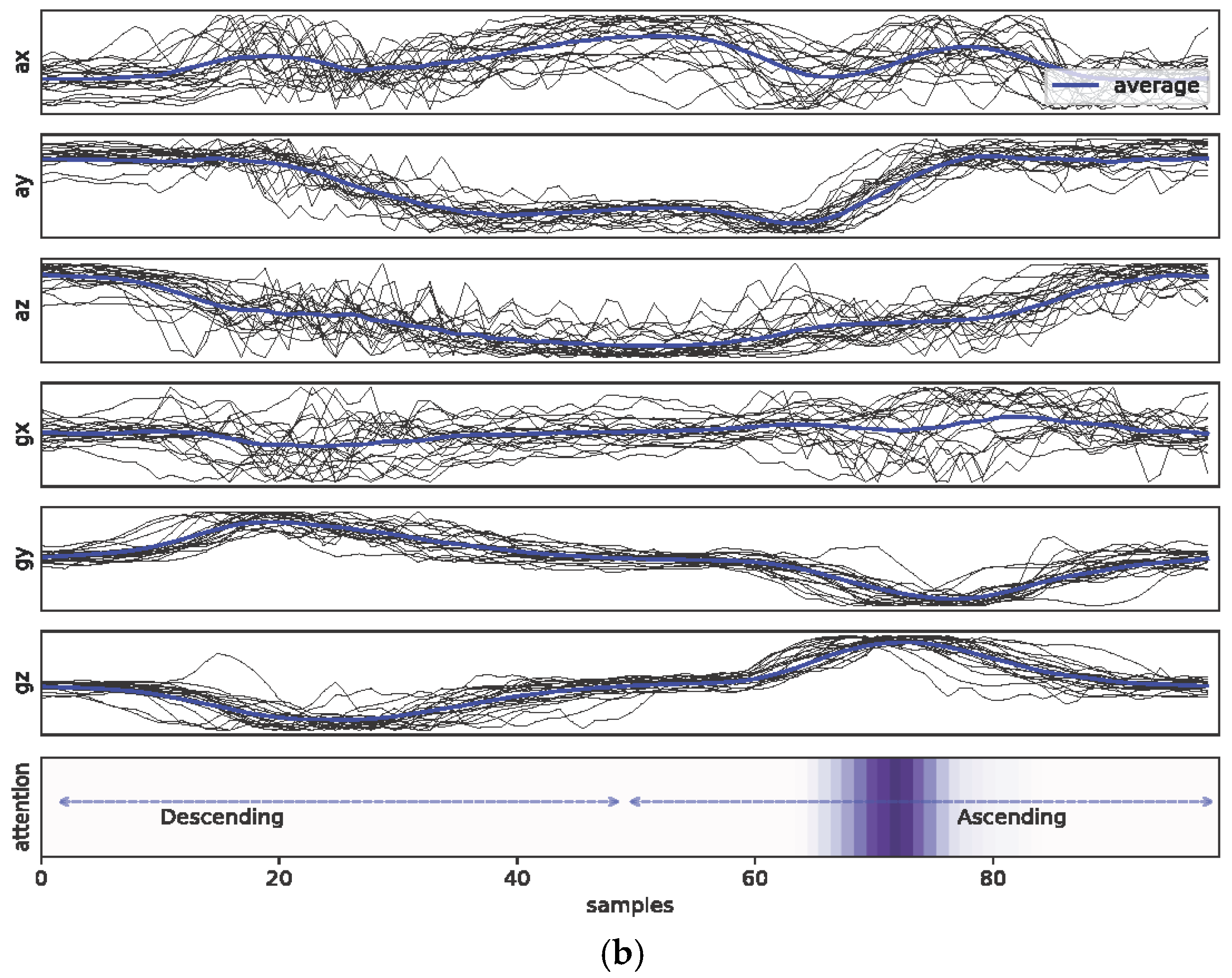

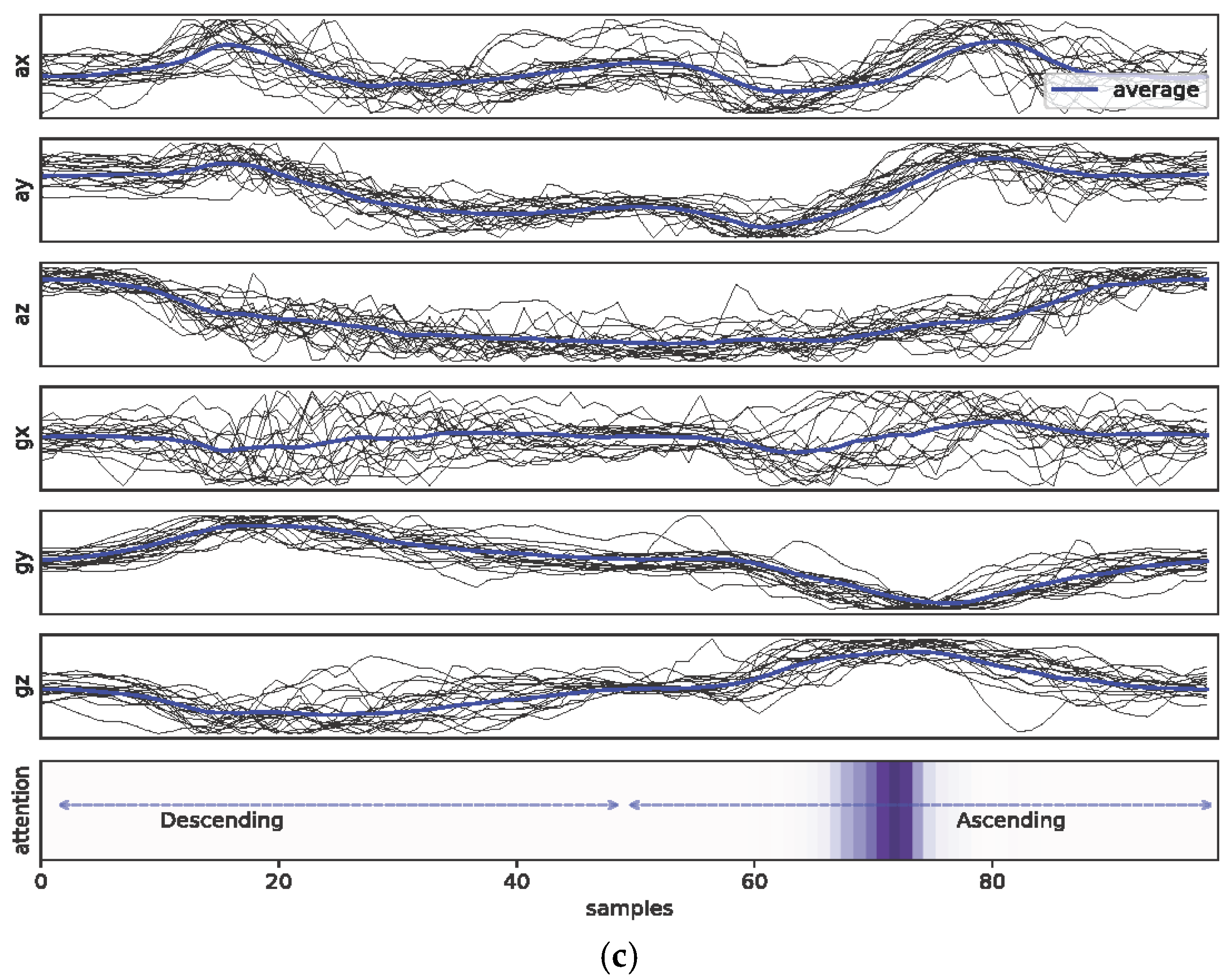

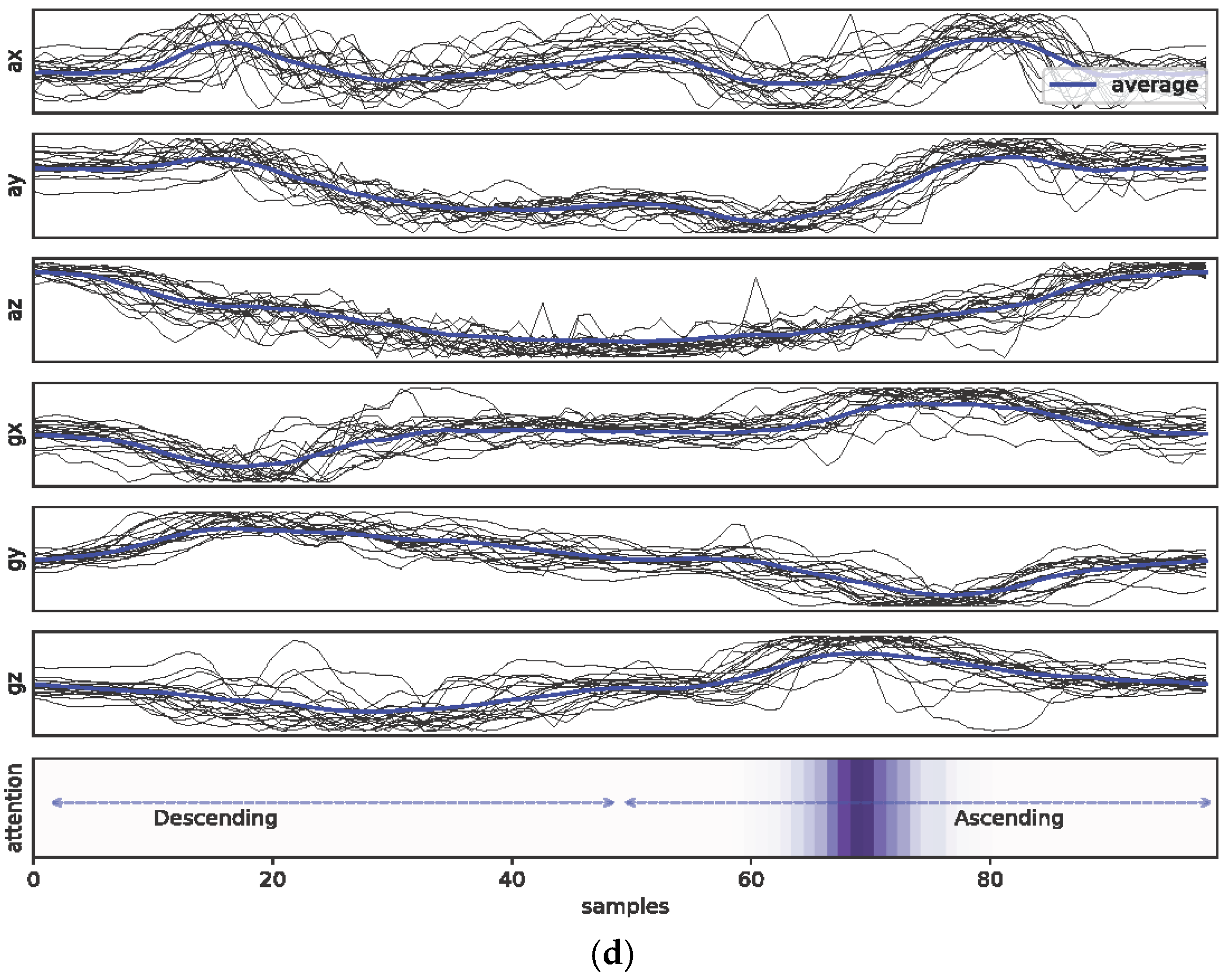

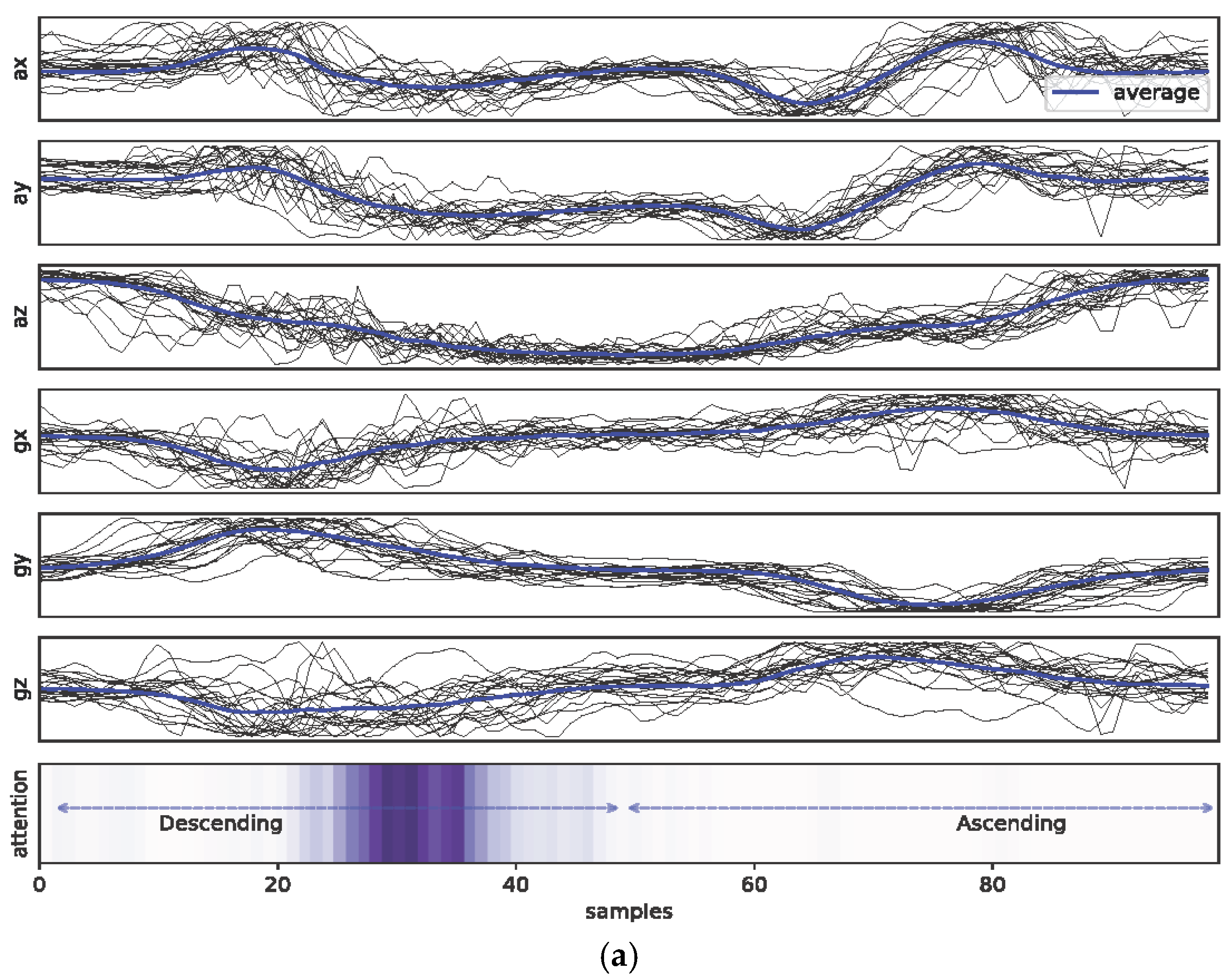

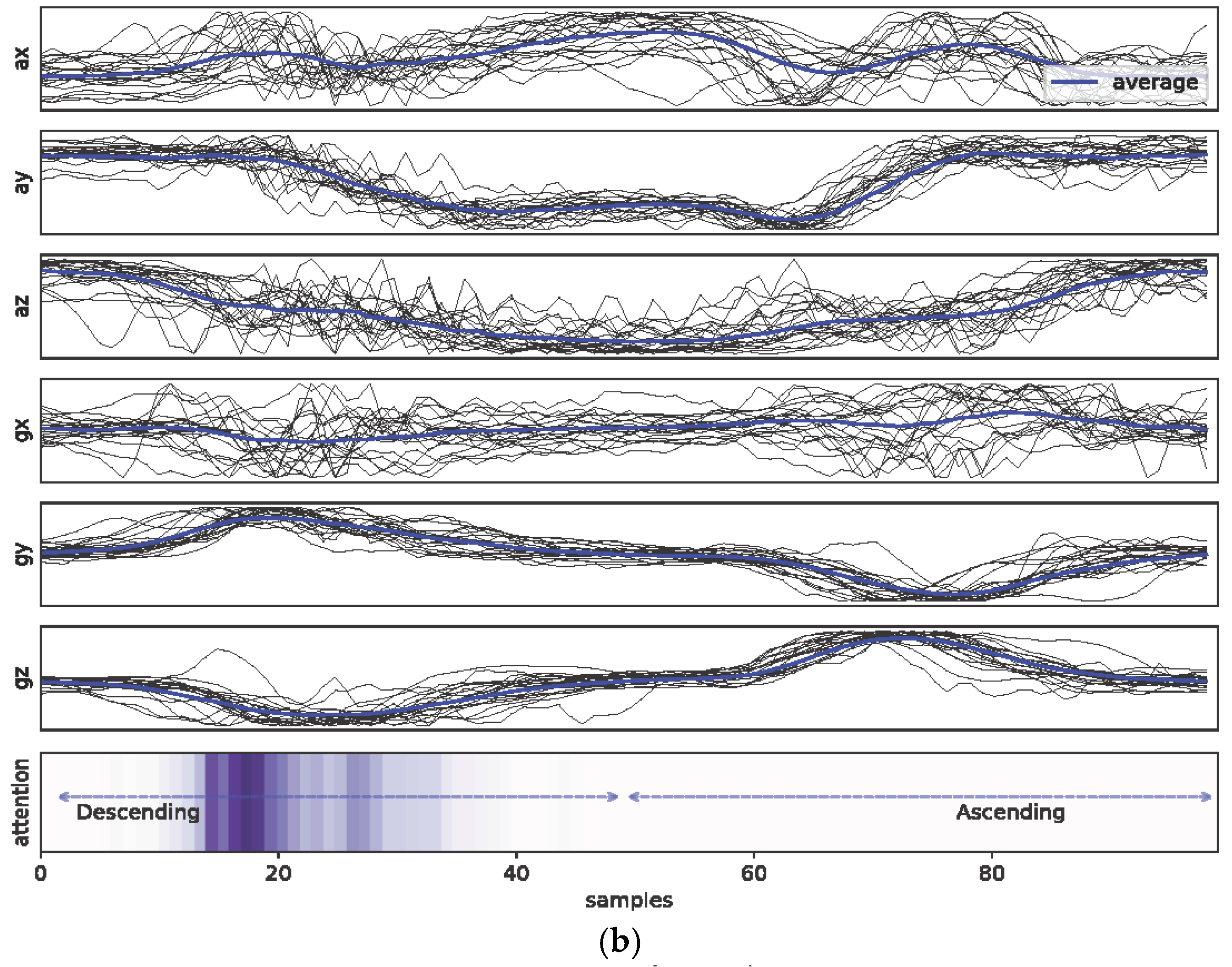

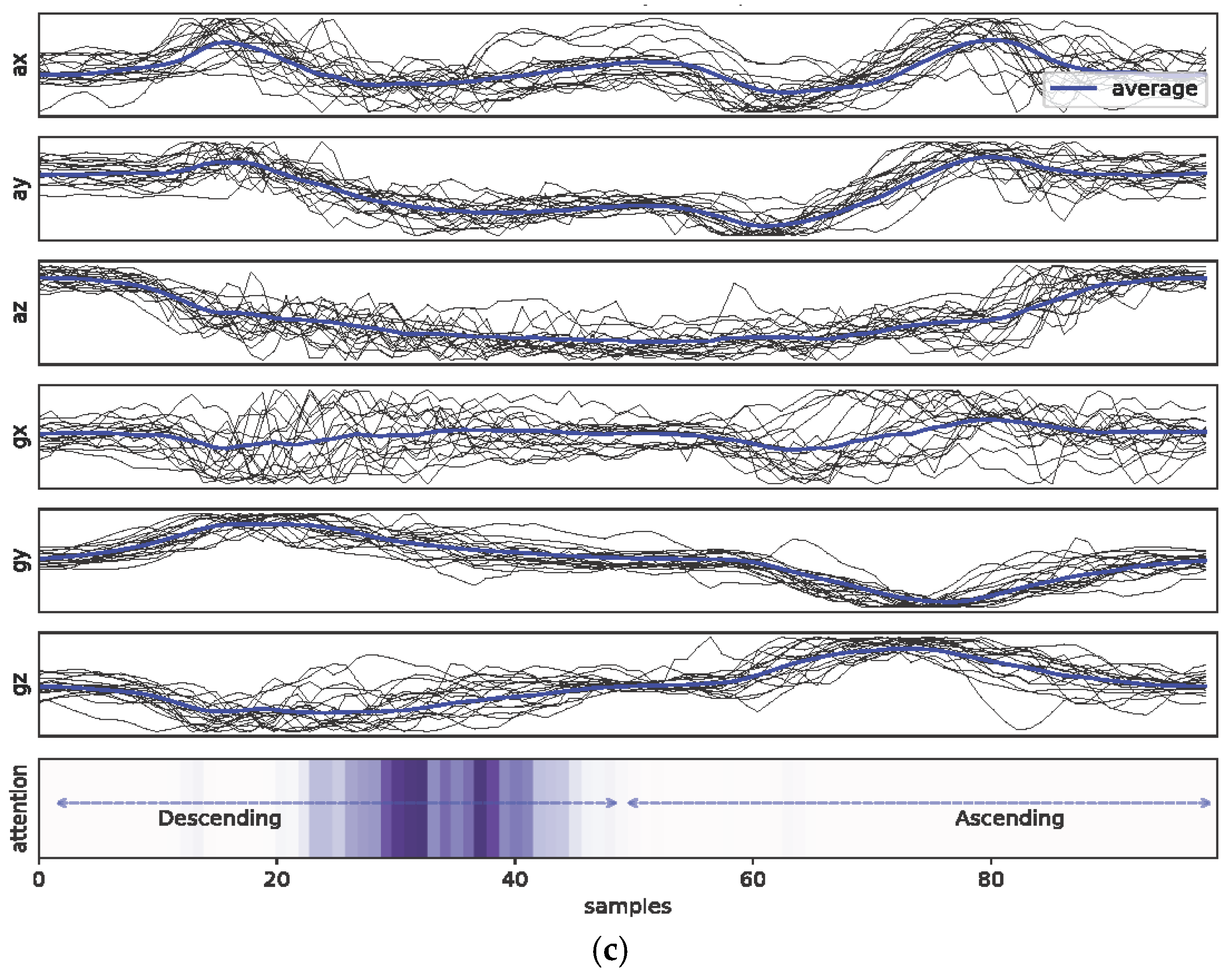

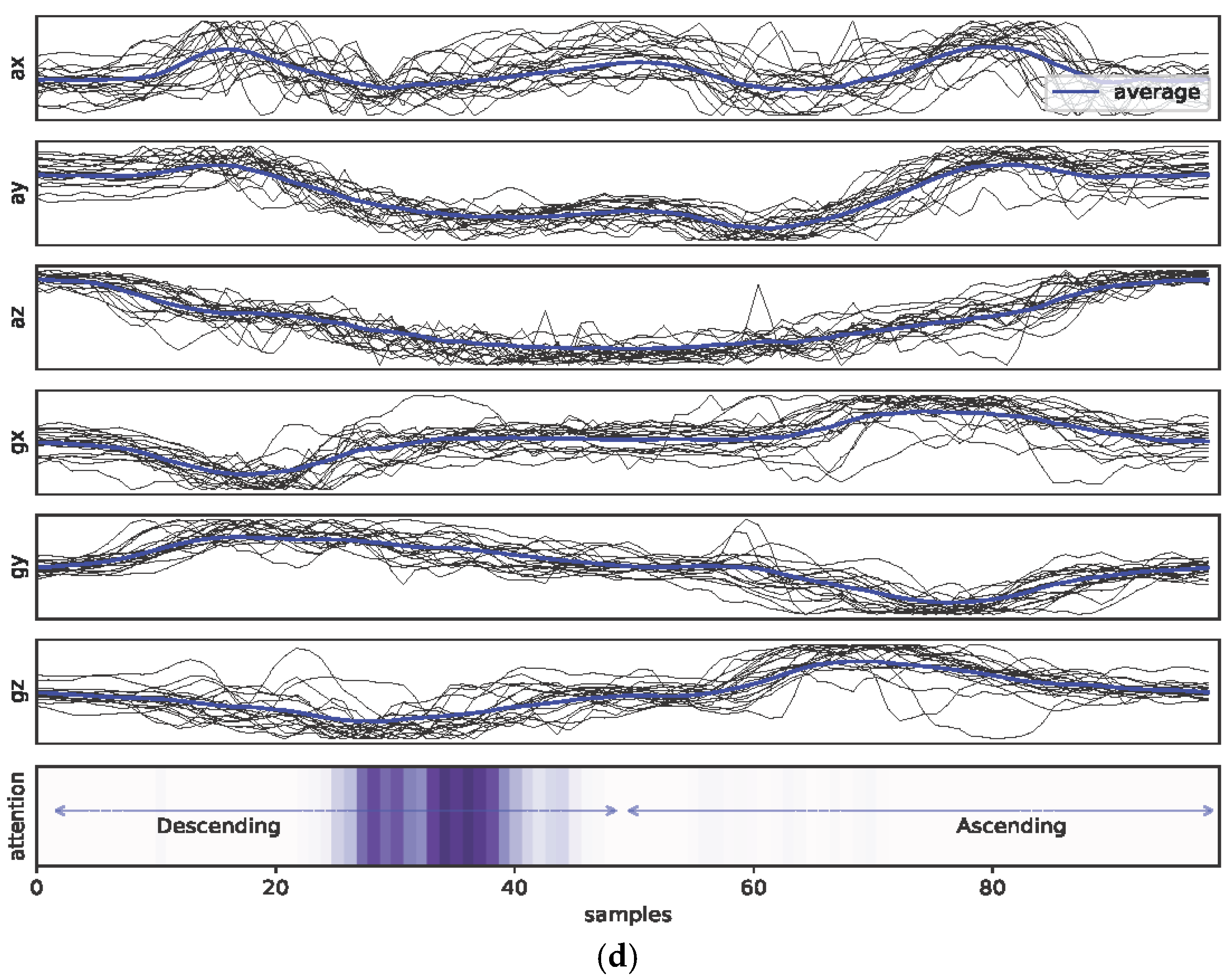

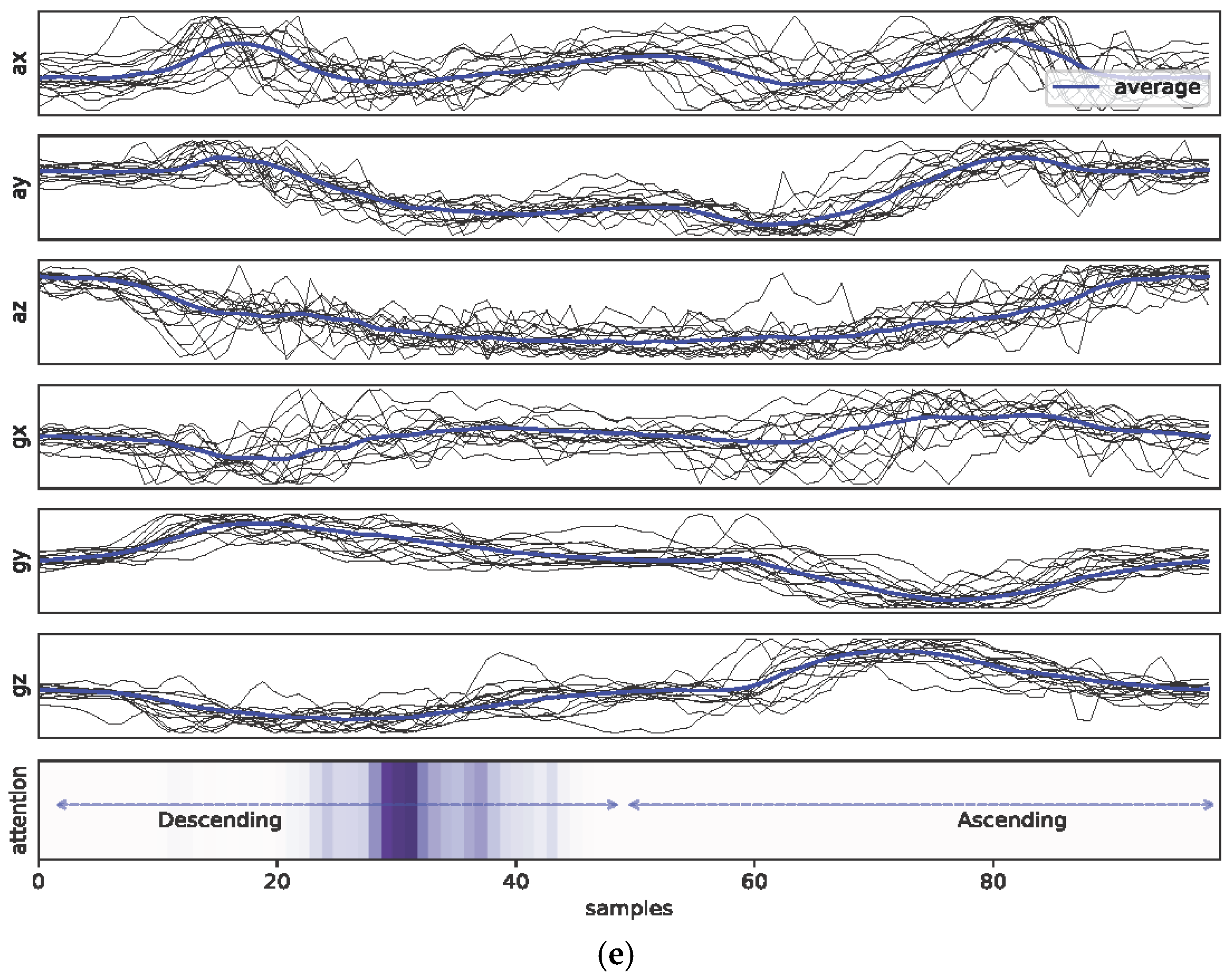

- We incorporated an attention mechanism for identifying the relative contributions of the motion sequence data during the decision-making process by the machine-learning system.

- We visualized and analyzed the machine-generated attention vectors during the inference phase.

2. Materials and Methods

2.1. Measurement Setting and Data Collection

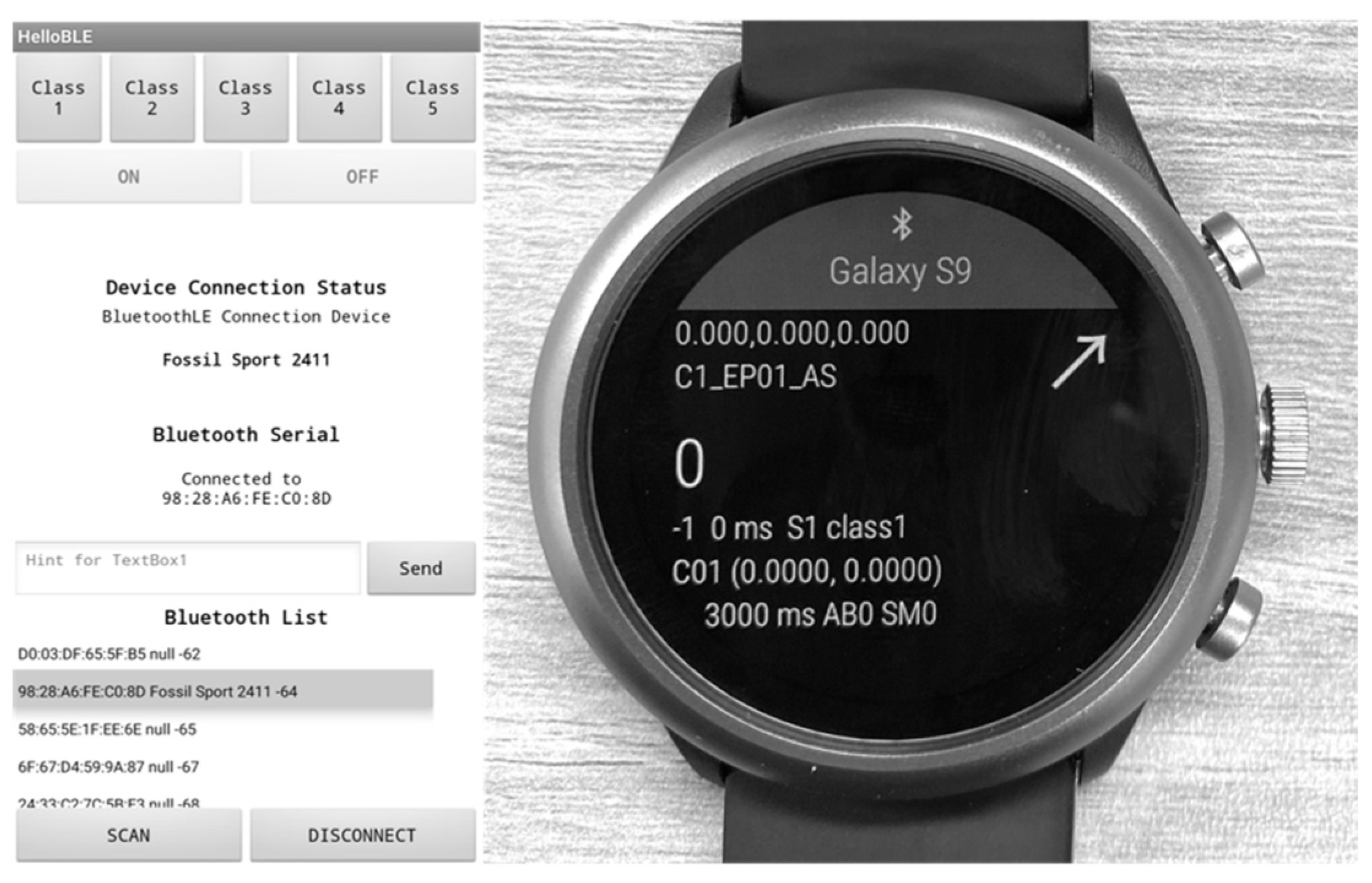

2.1.1. System for Data Collection

2.1.2. Definition of Squat Class

2.1.3. Participants

2.1.4. Procedure

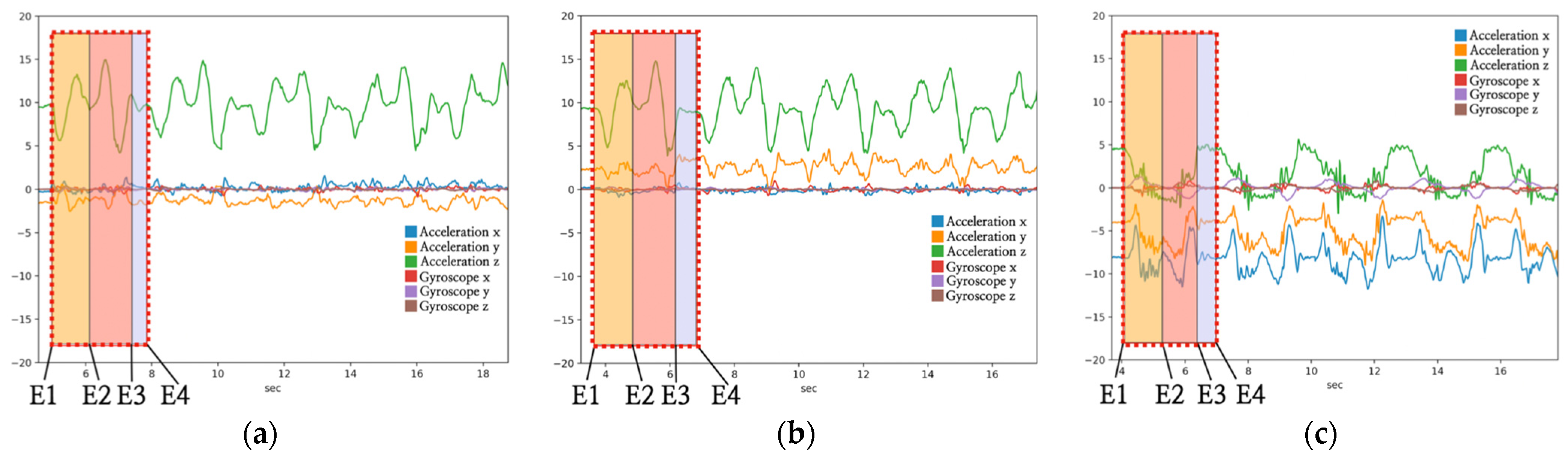

2.2. Data Preprocessing

2.3. Data Segmentation

2.4. Classification Algorithm

2.4.1. Feature-Based Machine Learning: Random Forest

2.4.2. Deep Learning-Based Models

- One-Dimensional (1D) CNN

- Long short-term memory (LSTM) and gated recurrent unit (GRU)

- Attention Mechanism
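The attention mechanism listed above can be illustrated with a minimal NumPy sketch of additive (feed-forward) attention pooling over the hidden states of a recurrent layer: each time step receives a score, a softmax turns the scores into an attention vector, and the hidden states are averaged with those weights. This is an assumed, generic formulation; the parameter names (`w`, `b`, `v`), the sizes, and the random inputs are hypothetical and do not reproduce the exact architecture or trained weights used in this study.

```python
import numpy as np


def attention_pool(h, w, b, v):
    """Additive attention pooling over a sequence of hidden states.

    h: (T, d) hidden states, e.g. BiLSTM/BiGRU outputs over T time steps.
    w: (d, k) projection, b: (k,) bias, v: (k,) scoring vector
    (hypothetical learnable parameters).
    Returns the context vector (d,) and the attention weights (T,).
    """
    scores = np.tanh(h @ w + b) @ v                 # one unnormalized score per time step
    scores = scores - scores.max()                  # shift by the max for numerical stability
    alpha = np.exp(scores) / np.exp(scores).sum()   # softmax over time, sums to 1
    context = alpha @ h                             # attention-weighted sum of hidden states
    return context, alpha


rng = np.random.default_rng(0)
T, d, k = 50, 16, 8                                 # hypothetical sequence length and layer sizes
h = rng.standard_normal((T, d))
w = rng.standard_normal((d, k)) * 0.1
b = np.zeros(k)
v = rng.standard_normal(k)

context, alpha = attention_pool(h, w, b, v)
print(context.shape, alpha.shape, round(float(alpha.sum()), 6))
```

The returned `alpha` holds one non-negative weight per time step and sums to 1, which is what makes such vectors straightforward to plot over a squat repetition. In a trained model, `w`, `b`, and `v` would be learned jointly with the recurrent layer rather than drawn at random.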

3. Results

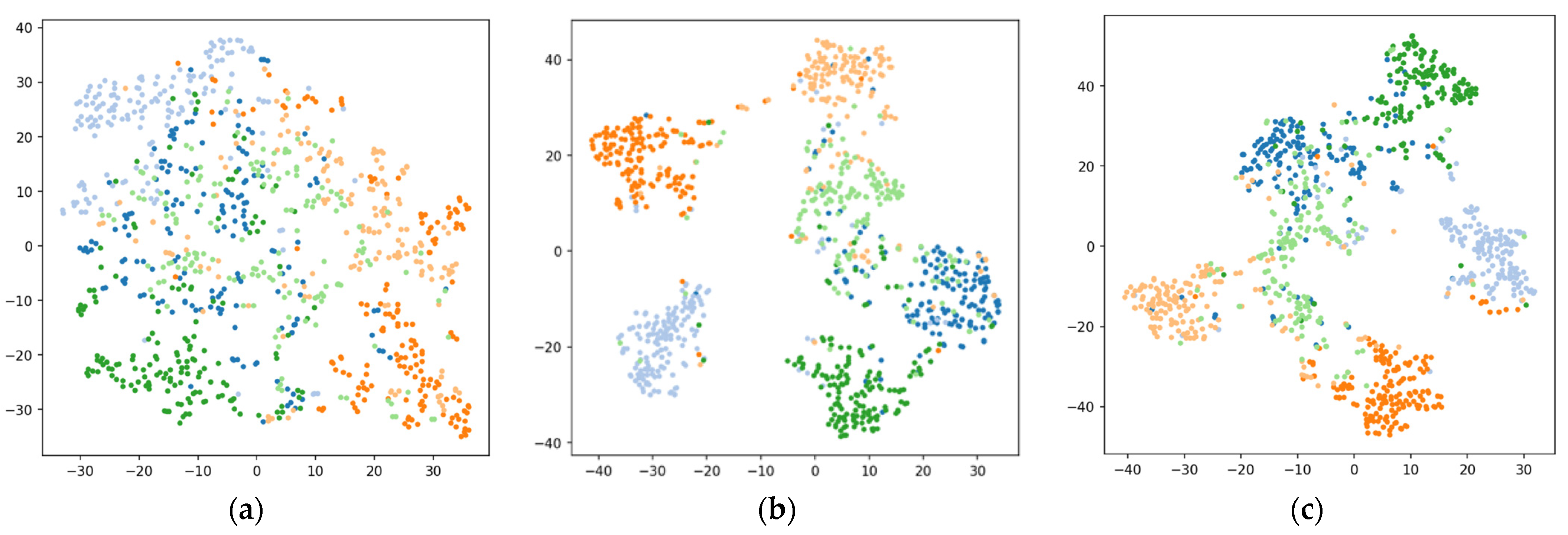

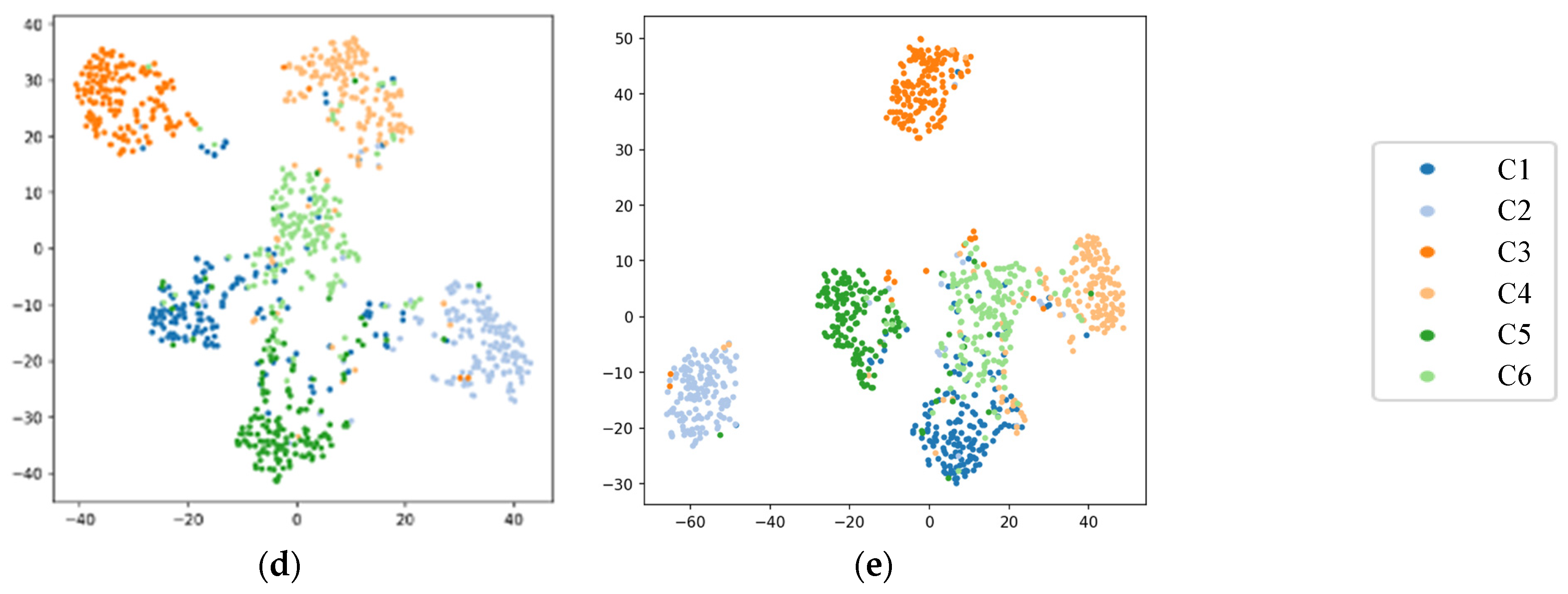

3.1. Classification Results

3.1.1. Baseline Results from a Random Forest

3.1.2. Results Using Deep Neural Network Models

4. Discussion

4.1. State of the Art in Squat Exercise Recognition with Smartwatch

4.2. Explainable and Trustworthy AI Coaching System Based on Attentional Neural Mechanisms

4.3. Limitations and Further Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References


| Class | Description |
|---|---|
| C1 | Normal |
| C2 | Insufficient depth |
| C3 | Insufficient depth with posterior tilting and knee valgus |
| C4 | Left-knee valgus |
| C5 | Right-knee valgus |
| C6 | Both-knee valgus |

| Arm Posture | Class | Duration, min (h) | Data Points, Total (Train) |
|---|---|---|---|
| Straight arm (SA) | C1 | 25.8 (0.43) | 103,200 (72,000) |
| | C2 | 25.9 (0.43) | 103,500 (72,000) |
| | C3 | 25.95 (0.43) | 103,800 (72,000) |
| | C4 | 25.9 (0.43) | 103,600 (72,000) |
| | C5 | 25.3 (0.42) | 101,200 (68,000) |
| | C6 | 25.95 (0.43) | 103,800 (72,000) |
| Crossed arm (CA) | C1 | 25.95 (0.43) | 103,800 (72,000) |
| | C2 | 25.9 (0.43) | 103,600 (72,000) |
| | C3 | 25.1 (0.42) | 100,400 (68,000) |
| | C4 | 25.6 (0.43) | 102,400 (68,000) |
| | C5 | 25.85 (0.43) | 103,400 (72,000) |
| | C6 | 25.7 (0.43) | 102,800 (68,000) |
| Hands on waist (HW) | C1 | 25.9 (0.43) | 103,600 (72,000) |
| | C2 | 25.95 (0.43) | 103,800 (72,000) |
| | C3 | 25.95 (0.43) | 103,800 (72,000) |
| | C4 | 25.95 (0.43) | 103,800 (72,000) |
| | C5 | 25.8 (0.43) | 103,200 (72,000) |
| | C6 | 25.8 (0.43) | 103,200 (72,000) |
| Total | | 464.25 (7.72) | 1,856,900 (1,280,000) |
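The Total row can be re-derived from the per-class rows. The short check below re-adds only the figures transcribed from the table (durations as exact decimals to avoid floating-point drift) and reproduces the totals of 464.25 min, 1,856,900 data points, and 1,280,000 training data points; the dictionary layout and the `totals` helper are illustrative.

```python
from decimal import Decimal

# Per-class rows transcribed from the table:
# (duration in minutes, total data points, training data points)
table = {
    "SA": [("25.8", 103_200, 72_000), ("25.9", 103_500, 72_000), ("25.95", 103_800, 72_000),
           ("25.9", 103_600, 72_000), ("25.3", 101_200, 68_000), ("25.95", 103_800, 72_000)],
    "CA": [("25.95", 103_800, 72_000), ("25.9", 103_600, 72_000), ("25.1", 100_400, 68_000),
           ("25.6", 102_400, 68_000), ("25.85", 103_400, 72_000), ("25.7", 102_800, 68_000)],
    "HW": [("25.9", 103_600, 72_000), ("25.95", 103_800, 72_000), ("25.95", 103_800, 72_000),
           ("25.95", 103_800, 72_000), ("25.8", 103_200, 72_000), ("25.8", 103_200, 72_000)],
}


def totals(rows):
    """Sum minutes (exactly, via Decimal), total data points, and training data points."""
    minutes = sum(Decimal(m) for m, _, _ in rows)
    points = sum(p for _, p, _ in rows)
    train = sum(t for _, _, t in rows)
    return minutes, points, train


all_rows = [row for rows in table.values() for row in rows]
minutes, points, train = totals(all_rows)
print(minutes, points, train)
for posture, rows in table.items():
    print(posture, *totals(rows))
```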

| Feature Name | Description |
|---|---|
| fft_coefficient | Fourier coefficients of the one-dimensional discrete Fourier transform for real input, computed with the fast Fourier transform algorithm |
| change_quantiles | Average absolute value of consecutive changes of the time series inside a corridor defined by two quantiles of its distribution |
| abs_energy | Absolute energy of the time series, i.e., the sum of the squared values |
| variance | Variance of the time series |
| standard_deviation | Standard deviation of the time series |
| absolute_sum_of_changes | Sum of the absolute values of consecutive changes |
| root_mean_square | Root mean square (RMS) of the time series |
| mean_abs_change | Mean of the absolute first differences |
| ratio_value_number_to_time_series_length | Number of unique values divided by the length of the time series; equals 1 if every value occurs only once and is below 1 otherwise |
| linear_trend | Linear least-squares regression of the time series values against the sequence from 0 to the length of the time series minus one |
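The features listed above follow the naming of the tsfresh Python package. To make the definitions concrete, the sketch below re-implements them directly in NumPy for a single one-dimensional window (for example, one accelerometer or gyroscope axis); it does not call tsfresh itself. The corridor quantiles `ql`/`qh`, the FFT coefficient index `fft_k`, the window length, and the synthetic signal are hypothetical choices, and `change_quantiles` here is a simplified version of tsfresh's quantile-binning logic.

```python
import numpy as np


def window_features(x, ql=0.2, qh=0.8, fft_k=1):
    """tsfresh-style features of one 1D window, re-implemented in NumPy.

    ql, qh: hypothetical quantile corridor for change_quantiles.
    fft_k: hypothetical index of the FFT coefficient whose magnitude is returned.
    """
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)                        # consecutive changes x[i+1] - x[i]
    t = np.arange(len(x))                  # sequence 0 .. len(x) - 1 for linear_trend

    # change_quantiles (simplified): mean |change| over pairs whose both ends
    # lie inside the corridor [quantile(ql), quantile(qh)]
    lo, hi = np.quantile(x, [ql, qh])
    inside = (x >= lo) & (x <= hi)
    pair_inside = inside[:-1] & inside[1:]
    change_q = float(np.mean(np.abs(dx[pair_inside]))) if pair_inside.any() else 0.0

    slope, _intercept = np.polyfit(t, x, 1)  # least-squares line through (t, x)
    return {
        "fft_coefficient_abs": float(np.abs(np.fft.rfft(x)[fft_k])),
        "change_quantiles": change_q,
        "abs_energy": float(np.sum(x ** 2)),
        "variance": float(np.var(x)),
        "standard_deviation": float(np.std(x)),
        "absolute_sum_of_changes": float(np.sum(np.abs(dx))),
        "root_mean_square": float(np.sqrt(np.mean(x ** 2))),
        "mean_abs_change": float(np.mean(np.abs(dx))),
        "ratio_value_number_to_time_series_length": len(np.unique(x)) / len(x),
        "linear_trend_slope": float(slope),
    }


rng = np.random.default_rng(42)
window = np.sin(np.linspace(0, 4 * np.pi, 200)) + 0.05 * rng.standard_normal(200)
feats = window_features(window)
for name, value in feats.items():
    print(f"{name}: {value:.4f}")
```

In a feature-based pipeline, a dictionary like this would typically be computed for every sensor channel of every segment and concatenated into a single feature vector for the random forest.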

| Arm Posture | Accuracy (Test/Train) | F1-score (Test/Train) |
|---|---|---|
| Straight Arm (SA) | 0.609/0.73 | 0.591/0.718 |
| Crossed Arm (CA) | 0.619/0.725 | 0.62/0.726 |
| Hands on Waist (HW) | 0.533/0.703 | 0.512/0.696 |

| Arm Posture | Metric | 1D-CNN | Bidirectional LSTM | Bidirectional GRU | BiLSTM with Attention | BiGRU with Attention |
|---|---|---|---|---|---|---|
| Straight Arm (SA) | Test/Train accuracy | 0.513/0.628 | 0.61/1.0 | 0.571/1.0 | 0.663/1.0 | 0.635/1.0 |
| | Test/Train F1-score | 0.507/0.624 | 0.61/1.0 | 0.569/1.0 | 0.663/1.0 | 0.633/1.0 |
| Crossed Arm (CA) | Test/Train accuracy | 0.597/0.707 | 0.641/1.0 | 0.653/1.0 | 0.663/1.0 | 0.568/1.0 |
| | Test/Train F1-score | 0.586/0.702 | 0.64/1.0 | 0.651/1.0 | 0.663/1.0 | 0.565/1.0 |
| Hands on Waist (HW) | Test/Train accuracy | 0.711/0.781 | 0.828/1.0 | 0.829/1.0 | 0.871/1.0 | 0.854/1.0 |
| | Test/Train F1-score | 0.71/0.78 | 0.83/1.0 | 0.829/1.0 | 0.871/1.0 | 0.856/1.0 |
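The accuracy and F1-scores in the result tables are standard multi-class classification metrics. The averaging scheme of the F1-score is not stated here; the sketch below assumes macro averaging over all classes (for this study, C1 to C6 would map to labels 0 to 5), which is a common choice for balanced multi-class problems. The function name and the toy labels are hypothetical.

```python
import numpy as np


def accuracy_and_macro_f1(y_true, y_pred, n_classes=6):
    """Accuracy and macro-averaged F1 for integer labels 0..n_classes-1."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    cm = np.zeros((n_classes, n_classes), dtype=int)  # rows: true class, columns: predicted
    np.add.at(cm, (y_true, y_pred), 1)                # count each (true, predicted) pair

    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp                          # predicted as class c, but not c
    fn = cm.sum(axis=1) - tp                          # truly class c, but predicted otherwise
    denom = 2 * tp + fp + fn
    # per-class F1 = 2TP / (2TP + FP + FN); 0 for a class absent from both y_true and y_pred
    f1 = np.divide(2 * tp, denom, out=np.zeros_like(tp), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return float(accuracy), float(f1.mean())


# Toy example with three classes (hypothetical labels, not data from this study)
acc, macro_f1 = accuracy_and_macro_f1([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
print(f"accuracy={acc:.3f}, macro F1={macro_f1:.3f}")
```

Applying the same function to predictions on the training and held-out test sets yields Test/Train pairs of the kind reported above; a training score of 1.0 with a much lower test score, as for the recurrent models, indicates that the network fits the training data perfectly but generalizes less well.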

| Model | SA | CA | HW |
|---|---|---|---|
| Random forest | 0.339 | 0.314 | 0.243 |
| 1D-CNN | 0.301 | 0.355 | 0.533 |
| Bidirectional LSTM | 0.363 | 0.391 | 0.703 |
| Bidirectional GRU | 0.323 | 0.400 | 0.663 |
| BiLSTM with attention | 0.471 | 0.503 | 0.739 |
| BiGRU with attention | 0.396 | 0.416 | 0.732 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yun, S.-H.; Kim, H.-J.; Ryu, J.-K.; Kim, S.-C. Fine-Grained Motion Recognition in At-Home Fitness Monitoring with Smartwatch: A Comparative Analysis of Explainable Deep Neural Networks. Healthcare 2023, 11, 940. https://doi.org/10.3390/healthcare11070940

Yun S-H, Kim H-J, Ryu J-K, Kim S-C. Fine-Grained Motion Recognition in At-Home Fitness Monitoring with Smartwatch: A Comparative Analysis of Explainable Deep Neural Networks. Healthcare. 2023; 11(7):940. https://doi.org/10.3390/healthcare11070940

Chicago/Turabian Style: Yun, Seok-Ho, Hyeon-Joo Kim, Jeh-Kwang Ryu, and Seung-Chan Kim. 2023. "Fine-Grained Motion Recognition in At-Home Fitness Monitoring with Smartwatch: A Comparative Analysis of Explainable Deep Neural Networks" Healthcare 11, no. 7: 940. https://doi.org/10.3390/healthcare11070940

APA Style: Yun, S.-H., Kim, H.-J., Ryu, J.-K., & Kim, S.-C. (2023). Fine-Grained Motion Recognition in At-Home Fitness Monitoring with Smartwatch: A Comparative Analysis of Explainable Deep Neural Networks. Healthcare, 11(7), 940. https://doi.org/10.3390/healthcare11070940