Developing an Instrument to Evaluate the Quality of Dementia Websites

Abstract

1. Introduction

2. Materials and Methods

2.1. Step 1: Existing Instrument Identification

2.2. Step 2: Criteria Determination

2.3. Step 3: Measurement Statement Selection and Revision

2.4. Step 4: Instrument Validation

3. Results

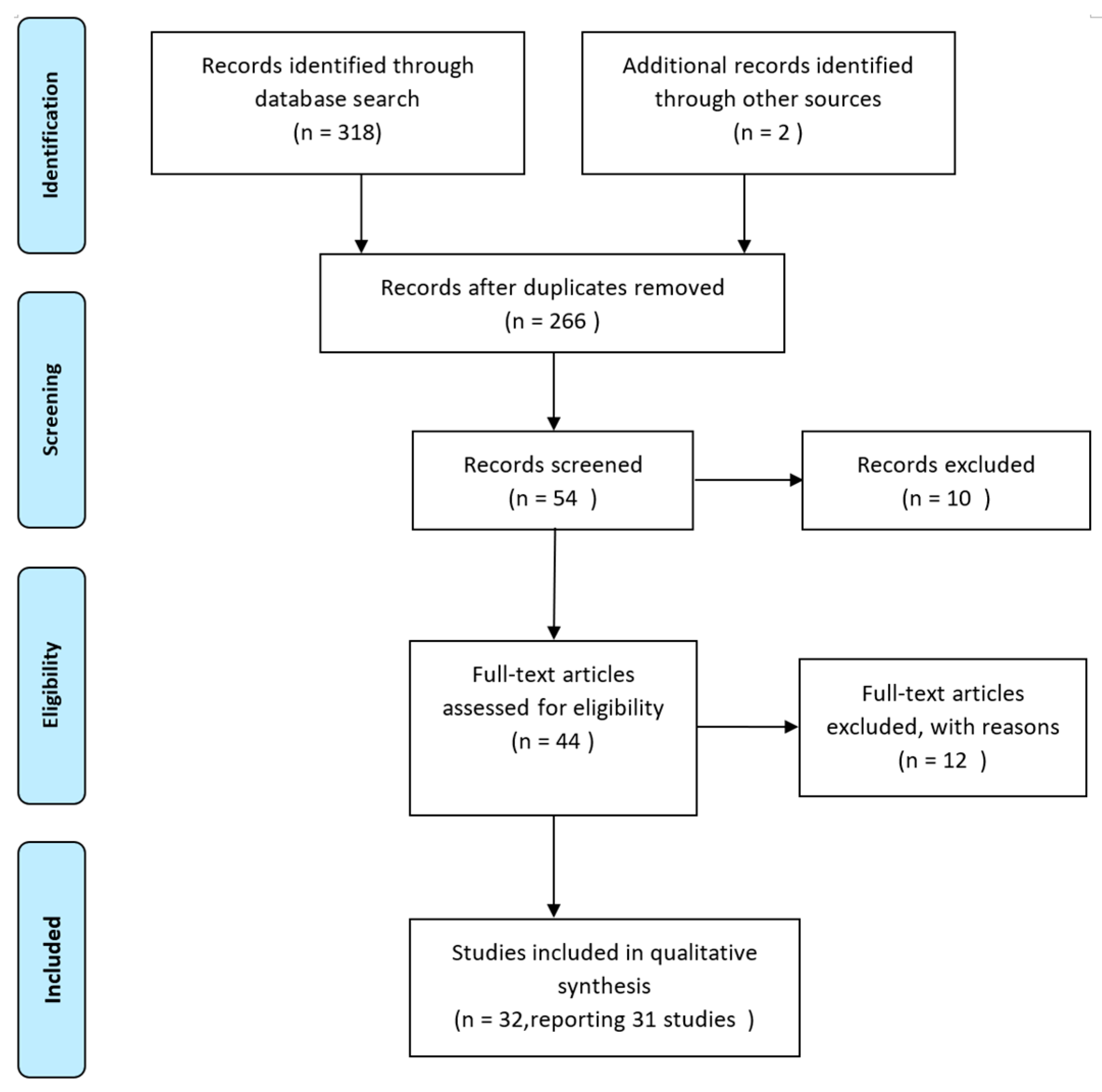

3.1. Step 1: Existing Instrument Identification

3.2. Step 2: Criteria Determination

3.3. Step 3: Measurement Statement Selection and Revision

3.4. Step 4: Instrument Validation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Search strategies | |
|---|---|
| Databases | PubMed, Scopus, CINAHL Plus with Full Text, and Web of Science |
| Extra resources | Google Scholar; forward-tracked papers (the most recent systematic review of the quality of online dementia information for consumers) |

| Combination | Search terms | Searching field |
|---|---|---|
| Keywords | “health” | Title, abstract |
| AND | “web” or “website” or “site” or “internet” or “online” | Title, abstract |
| AND | “quality” or “design” or “evaluat*” or “assessment” or “credibility” or “criteri*” | Title/Abstract/All Text |
| AND | “information” | All Fields |

Limits: date range (inclusive); open access; full text; English.

| Database | Results |
|---|---|
| PubMed | 66 |
| PubMed Central | 34 |
| MEDLINE with Full Text | 40 |
| Scopus | 73 |
| CINAHL Plus with Full Text | 40 |
| Web of Science | 65 |
| Other resources | 2 |
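The search combination in Appendix A follows the usual Boolean pattern: synonyms within a row are joined with OR, and the rows are joined with AND. The Python sketch below reproduces that logic as a generic query string and tallies the per-database results listed above. It is illustrative only: the names `TERM_GROUPS`, `RESULTS`, `format_term`, and `build_query` are hypothetical, the output does not follow any particular database's field-tag syntax, and leaving truncated stems such as `evaluat*` unquoted is an assumption, since wildcard handling inside quoted phrases differs between databases.

```python
# Term groups from the Appendix A search strategy: terms inside a group are
# ORed, and the groups are ANDed. Generic syntax only (an assumption); real
# databases add their own field tags.
TERM_GROUPS = [
    ["health"],
    ["web", "website", "site", "internet", "online"],
    ["quality", "design", "evaluat*", "assessment", "credibility", "criteri*"],
    ["information"],
]

# Per-database record counts as listed in Appendix A.
RESULTS = {
    "PubMed": 66,
    "PubMed Central": 34,
    "MEDLINE with Full Text": 40,
    "Scopus": 73,
    "CINAHL Plus with Full Text": 40,
    "Web of Science": 65,
    "Other resources": 2,
}


def format_term(term: str) -> str:
    """Quote a plain term; leave a truncated stem (ending in '*') unquoted."""
    return term if term.endswith("*") else f'"{term}"'


def build_query(groups: list[list[str]]) -> str:
    """OR the terms inside each group, then AND the groups together."""
    clauses = []
    for group in groups:
        joined = " OR ".join(format_term(t) for t in group)
        clauses.append(f"({joined})")
    return " AND ".join(clauses)


if __name__ == "__main__":
    print(build_query(TERM_GROUPS))
    # Raw total across sources, before any de-duplication.
    print("Records retrieved:", sum(RESULTS.values()))
```

The summed count (320) is simple arithmetic over the table and counts overlapping records once per database in which they were found.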

Appendix B

| Author(s) (Year) | Country of Origin | Health Domain | Evaluation Instrument | Scoring System | Target User Group |
|---|---|---|---|---|---|
| Ahmed, O.H., et al. (2012) [38] | New Zealand | Concussion | 1. HONcode 2. CONcheck 3. FRES 4. FKGL | 1. YES/NO response format 2. 3-point Likert Scales 3. 0–100 score(s) 4. 0–12 grade(s) | Health consumers |
| Alamoudi, U., Hong, P. (2012) [44] | Canada | Microtia and aural atresia | 1. DISCERN 2. HONcode 3. FRES 4. FKGL | 1. 5-point Likert Scales 2. YES/NO response format 3. 0–100 score(s) 4. 0–12 grade(s) | Health consumers |
| Alsoghier, A., et al. (2018) [45] | UK | Oral epithelial dysplasia | 1. DISCERN 2. JAMA 3. HONcode 4. FRES 5. FKGL | 1. 5-point Likert Scales 2. 4-point Likert Scales 3. YES/NO response format 4. 0–100 score(s) 5. 0–12 grade(s) | Patients |
| Anderson, K.A., et al. (2009) [46] | USA | Dementia | DCET | 3-point Likert Scales | Caregivers |
| Arif, N., Ghezzi, P. (2018) [43] | UK | Breast cancer | 1. JAMA 2. HONcode 3. FKGL 4. SMOG | 1. 4-point Likert Scales 2. YES/NO response format 3. 0–12 grade(s) 4. 5–18 grade(s) | Patients |
| Arts, H., et al. (2019) [47] | UK | Eating disorder | 1. DISCERN 2. FRES | 1. 5-point Likert Scales 2. 0–100 score(s) | Health consumers |
| Borgmann, H., et al. (2017) [48] | Germany | Prostate cancer | 1. DISCERN 2. JAMA 3. HONcode 4. LIDA tool 5. FKGL 6. FRES | 1. 5-point Likert Scales 2. 4-point Likert Scales 3. YES/NO response format 4. 4-point Likert Scales 5. 0–12 grade(s) 6. 0–100 score(s) | Patients |
| Daraz, L., et al. (2011) [35] | Canada | Fibromyalgia | 1. DISCERN 2. Quality checklist 3. FRES 4. FKGL | 1. 5-point Likert Scales 2. YES/NO response format 3. 0–100 score(s) 4. 0–12 grade(s) | Health consumers |
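Several studies in Appendix B pair content-quality tools with readability formulas: FRES (Flesch Reading Ease, conventionally read on a 0–100 scale, with higher scores meaning easier text), FKGL (Flesch–Kincaid Grade Level, expressed as a US school grade), and SMOG. A minimal Python sketch of the standard published formulas follows. It takes word, sentence, syllable, and polysyllable counts as inputs because syllable-counting rules vary between tools; the function names are hypothetical, and the results are not clipped to the ranges shown in the table.

```python
import math


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease Score (FRES); higher scores indicate easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level (FKGL), expressed as a US school grade."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def smog_grade(polysyllables: int, sentences: int) -> float:
    """SMOG grade; polysyllables are words with three or more syllables."""
    return 1.0430 * math.sqrt(polysyllables * (30 / sentences)) + 3.1291


if __name__ == "__main__":
    # Illustrative counts only, not taken from any reviewed website.
    print(round(flesch_reading_ease(100, 5, 150), 3))
    print(round(flesch_kincaid_grade(100, 5, 150), 2))
    print(round(smog_grade(9, 30), 4))
```

For a 100-word passage with 5 sentences and 150 syllables, the sketch gives an FRES of about 59.6 and an FKGL of about 9.9, illustrating that the two scores move in opposite directions as text becomes harder.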

References

1. Wang, X.; Shi, J.; Kong, H. Online health information seeking: A review and meta-analysis. Health Commun. 2021, 36, 1163–1175.

2. Dhana, K.; Beck, T.; Desai, P.; Wilson, R.S.; Evans, D.A.; Rajan, K.B. Prevalence of Alzheimer’s disease dementia in the 50 US states and 3142 counties: A population estimate using the 2020 bridged-race postcensal from the National Center for Health Statistics. Alzheimer’s Dement. 2023, 19, 4388–4395.

3. Dementia Information. Available online: https://www.dementiasplatform.com.au/dementia-information (accessed on 1 December 2023).

4. Daraz, L.; Morrow, A.S.; Ponce, O.J.; Beuschel, B.; Farah, M.H.; Katabi, A.; Alsawas, M.; Majzoub, A.M.; Benkhadra, R.; Seisa, M.O.; et al. Can patients trust online health information? A meta-narrative systematic review addressing the quality of health information on the internet. J. Gen. Intern. Med. 2019, 34, 1884–1891.

5. Quinn, S.; Bond, R.; Nugent, C. Quantifying health literacy and eHealth literacy using existing instruments and browser-based software for tracking online health information seeking behavior. Comput. Hum. Behav. 2017, 69, 256–267.

6. Soong, A.; Au, S.T.; Kyaw, B.M.; Theng, Y.L.; Tudor Car, L. Information needs and information seeking behaviour of people with dementia and their non-professional caregivers: A scoping review. BMC Geriatr. 2020, 20, 61.

7. Zhi, S.; Ma, D.; Song, D.; Gao, S.; Sun, J.; He, M.; Zhu, X.; Dong, Y.; Gao, Q.; Sun, J. The influence of web-based decision aids on informal caregivers of people with dementia: A systematic mixed-methods review. Int. J. Ment. Health Nurs. 2023, 32, 947–965.

8. Alibudbud, R. The Worldwide Utilization of Online Information about Dementia from 2004 to 2022: An Infodemiological Study of Google and Wikipedia. Ment. Health Nurs. 2023, 44, 209–217.

9. Monnet, F.; Pivodic, L.; Dupont, C.; Dröes, R.M.; van den Block, L. Information on advance care planning on websites of dementia associations in Europe: A content analysis. Aging Ment. Health 2023, 27, 1821–1831.

10. Steiner, V.; Pierce, L.L.; Salvador, D. Information needs of family caregivers of people with dementia. Rehabil. Nurs. 2016, 41, 162–169.

11. Efthymiou, A.; Papastavrou, E.; Middleton, N.; Markatou, A.; Sakka, P. How caregivers of people with dementia search for dementia-specific information on the internet: Survey study. JMIR Aging 2020, 3, e15480.

12. Allison, R.; Hayes, C.; McNulty, C.A.; Young, V. A comprehensive framework to evaluate websites: Literature review and development of GoodWeb. JMIR Form. Res. 2019, 3, e14372.

13. Sauer, J.; Sonderegger, A.; Schmutz, S. Usability, user experience and accessibility: Towards an integrative model. Ergonomics 2020, 63, 1207–1220.

14. Henry, L.S. User Experiences and Benefits to Organizations. Available online: https://www.w3.org/WAI/media/av/users-orgs/ (accessed on 1 December 2023).

15. Dror, A.A.; Layous, E.; Mizrachi, M.; Daoud, A.; Eisenbach, N.; Morozov, N.; Srouji, S.; Avraham, K.B.; Sela, E. Revealing global government health website accessibility errors during COVID-19 and the necessity of digital equity. SSRN Electron. J. 2020.

16. Bandyopadhyay, M.; Stanzel, K.; Hammarberg, K.; Hickey, M.; Fisher, J. Accessibility of web-based health information for women in midlife from culturally and linguistically diverse backgrounds or with low health literacy. Aust. N. Z. J. Public Health 2022, 46, 269–274.

17. Boyer, C.; Selby, M.; Scherrer, J.R.; Appel, R.D. The health on the net code of conduct for medical and health websites. Comput. Biol. Med. 1998, 28, 603–610.

18. Web Médica Acreditada. Available online: https://wma.comb.es/es/home.php (accessed on 1 December 2023).

19. Närhi, U.; Pohjanoksa-Mäntylä, M.; Karjalainen, A.; Saari, J.K.; Wahlroos, H.; Airaksinen, M.S.; Bell, S.J. The DARTS tool for assessing online medicines information. Pharm. World Sci. 2008, 30, 898–906.

20. Provost, M.; Koompalum, D.; Dong, D.; Martin, B.C. The initial development of the WebMedQual scale: Domain assessment of the construct of quality of health web sites. Int. J. Med. Inform. 2006, 75, 42–57.

21. Dillon, W.A.; Prorok, J.C.; Seitz, D.P. Content and quality of information provided on Canadian dementia websites. Can. Geriatr. J. 2013, 16, 6.

22. Bath, P.A.; Bouchier, H. Development and application of a tool designed to evaluate web sites providing information on Alzheimer’s disease. J. Inf. Sci. 2003, 29, 279–297.

23. Zelt, S.; Recker, J.; Schmiedel, T.; vom Brocke, J. Development and validation of an instrument to measure and manage organizational process variety. PLoS ONE 2018, 13, e0206198.

24. Ayre, C.; Scally, A.J. Critical values for Lawshe’s content validity ratio: Revisiting the original methods of calculation. Meas. Eval. Couns. Dev. 2014, 47, 79–86.

25. Welch, V.; Brand, K.; Kristjansson, E.; Smylie, J.; Wells, G.; Tugwell, P. Systematic reviews need to consider applicability to disadvantaged populations: Inter-rater agreement for a health equity plausibility algorithm. BMC Med. Res. Methodol. 2012, 12, 187.

26. Charnock, D. Quality criteria for consumer health information on treatment choices. In The DISCERN Handbook; University of Oxford and The British Library: Radcliffe, UK, 1998; Volume 21, pp. 53–55.

27. Field, A. Discovering Statistics Using IBM SPSS Statistics; Sage: Newcastle upon Tyne, UK, 2013.

28. Leite, P.; Gonçalves, J.; Teixeira, P.; Rocha, Á. A model for the evaluation of data quality in health unit websites. Health Inform. J. 2016, 22, 479–495.

29. Minervation. Available online: https://www.minervation.com/wp-content/uploads/2011/04/Minervation-LIDA-instrument-v1-2.pdf (accessed on 1 December 2023).

30. Martins, E.N.; Morse, L.S. Evaluation of internet websites about retinopathy of prematurity patient education. Br. J. Ophthalmol. 2005, 89, 565–568.

31. Prusti, M.; Lehtineva, S.; Pohjanoksa-Mäntylä, M.; Bell, J.S. The quality of online antidepressant drug information: An evaluation of English and Finnish language Web sites. Res. Social Adm. Pharm. 2012, 8, 263–268.

32. Kashihara, H.; Nakayama, T.; Hatta, T.; Takahashi, N.; Fujita, M. Evaluating the quality of website information of private-practice clinics offering cell therapies in Japan. Interact. J. Med. Res. 2016, 5, e5479.

33. Keselman, A.; Arnott Smith, C.; Murcko, A.C.; Kaufman, D.R. Evaluating the quality of health information in a changing digital ecosystem. J. Med. Internet Res. 2019, 21, e11129.

34. Silberg, W.M.; Lundberg, G.D.; Musacchio, R.A. Assessing, controlling, and assuring the quality of medical information on the Internet: Caveant lector et viewor—Let the reader and viewer beware. JAMA 1997, 277, 1244–1245.

35. Daraz, L.; MacDermid, J.C.; Wilkins, S.; Gibson, J.; Shaw, L. The quality of websites addressing fibromyalgia: An assessment of quality and readability using standardised tools. BMJ Open 2011, 1, e000152.

36. Pealer, L.N.; Dorman, S.M. Evaluating health-related Web sites. J. Sch. Health 1997, 67, 232–235.

37. Duffy, D.N.; Pierson, C.; Best, P. A formative evaluation of online information to support abortion access in England, Northern Ireland and the Republic of Ireland. BMJ Sex. Reprod. Health 2019, 45, 32–37.

38. Ahmed, O.H.; Sullivan, S.J.; Schneiders, A.G.; McCrory, P.R. Concussion information online: Evaluation of information quality, content and readability of concussion-related websites. Br. J. Sports Med. 2012, 46, 675–683.

39. Schmitt, P.J.; Prestigiacomo, C.J. Readability of neurosurgery-related patient education materials provided by the American Association of Neurological Surgeons and the National Library of Medicine and National Institutes of Health. World Neurosurg. 2013, 80, e33–e39.

40. Rolstad, S.; Adler, J.; Rydén, A. Response burden and questionnaire length: Is shorter better? A review and meta-analysis. Value Health 2011, 14, 1101–1108.

41. Herzog, A.R.; Bachman, J.G. Effects of questionnaire length on response quality. Public Opin. Q. 1981, 45, 549–559.

42. Galesic, M.; Bosnjak, M. Effects of questionnaire length on participation and indicators of response quality in a web survey. Public Opin. Q. 2009, 73, 349–360.

43. Arif, N.; Ghezzi, P. Quality of online information on breast cancer treatment options. Breast 2018, 37, 6–12.

44. Alamoudi, U.; Hong, P. Readability and quality assessment of websites related to microtia and aural atresia. Int. J. Pediatr. Otorhinolaryngol. 2012, 79, 151–156.

45. Alsoghier, A.; Riordain, R.N.; Fedele, S.; Porter, S. Web-based information on oral dysplasia and precancer of the mouth–quality and readability. Oral Oncol. 2018, 82, 69–74.

46. Anderson, K.A.; Nikzad-Terhune, K.A.; Gaugler, J.E. A systematic evaluation of online resources for dementia caregivers. J. Consum. Health Internet 2009, 13, 1–13.

47. Arts, H.; Lemetyinen, H.; Edge, D. Readability and quality of online eating disorder information—Are they sufficient? A systematic review evaluating websites on anorexia nervosa using DISCERN and Flesch Readability. Int. J. Eat. Disord. 2020, 53, 128–132.

48. Borgmann, H.; Wölm, J.H.; Vallo, S.; Mager, R.; Huber, J.; Breyer, J.; Salem, J.; Loeb, S.; Haferkamp, A.; Tsaur, I. Prostate cancer on the web—expedient tool for patients’ decision-making? J. Cancer Educ. 2017, 32, 135–140.

Inclusion criteria

Exclusion criteria

Inclusion criteria

Exclusion criteria

| Criteria | Definition | References |
|---|---|---|
| Relevance | Relevance refers to the degree to which the information and hyperlinks are pertinent to the user’s needs. | [20,21,22] |
| Credibility | Credibility means that the information is truthful and unbiased; it is also referred to as trustworthiness. It is measured by three indicators: “Authorship”, “Attribution”, and “Disclosure”. | [17,20,29,32,34] |
| Currency | Currency refers to whether the content is up to date. The main indicators are the publication date and the date of the last update. | [19,20,32,40] |
| Accessibility | Accessibility refers to whether a website can be easily accessed and navigated. The indicators include availability in multiple languages; the provision of additional support; the availability of search mechanisms; links to social media and to relevant websites and organisations; and access for consumers with disabilities. | [20,29,32] |
| Interactivity | Interactivity covers features that enable users to interact with the content, give feedback, communicate with other users or the website’s author, and take part in the activities offered on the website. In essence, it is the capacity of a site to engage users in two-way communication or participation rather than treating them as passive recipients of information. The indicators are whether the website provides opportunities to give feedback, whether users can exchange information with one another, and whether the author can be contacted. | [20,29] |
| Attractiveness | Attractiveness refers to the look and feel of a site. The major indicators are the site layout, the use of images, and the use of headings. | [20,29,32] |
| Privacy | Privacy refers to whether a website respects the privacy of personal data submitted by consumers. A privacy policy describes what information is collected, how it is used, and who can access it. | [20,22] |

| Reason for Exclusion | Excluded Measurement Statements |
|---|---|
| The measurement statements guide web designers in evaluating website accessibility, but the features they address may not be observable to consumers. | “Does the site state expected response times for feedback?” “Does the site have any unnecessary links, layers, or clicks between documents or pages?” “Does the site provide instructions about how to disable cookies?” “Does the site present a policy statement or criteria for selecting links?” “Does the site clearly state that links have been reviewed?” |
| The measurement statements concern health topics other than dementia. | “Are links provided to regulated online and offline abortion services?” |
| The measurement statements require subjective evaluation. | “Are you in agreement with the entire website’s content?” “Is it easy to find the information you need?” “Is the content comprehensive within the given area?” |
| The measurement statements are vague or too general. | “Is the website organised logically?” “Is the website eye-pleasing?” “Is the page layout logical?” |

| Criteria | Measurement Statement | Response Options |
|---|---|---|
| Relevance | The website explores diverse aspects of dementia. | ☐ Yes ☐ No |
| | The website provides valuable insights for individuals living with dementia. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| Credibility | The website cites references for the information presented. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| | Any potential conflicts of interest arising from the website’s support are fully disclosed. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| | The qualifications of the website owner(s) are clearly displayed. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| | The author is recognized in the profession of health education or a related field. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| | The website clearly states who is responsible for its content. | ☐ Yes ☐ No |
| Currency | The website displays the date of content creation. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| | The website indicates the date when the content was last updated. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| | The website provides information about recent events or advancements related to dementia. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| Accessibility | The website features a user-friendly search mechanism, enabling visitors to find information efficiently. | ☐ Yes ☐ No |
| | The website offers content in the language preferred by consumers. | ☐ Yes ☐ No |
| | The website is user-friendly for individuals with disabilities. | ☐ Yes ☐ No |
| | The website provides link(s) to social media. | ☐ Yes ☐ No |
| | The website provides link(s) to relevant external websites for further support and resources. | ☐ Yes ☐ No |
| | The website provides information about the related organization (health service or support organization). | ☐ Yes ☐ No |
| | The website connects users to other types of media (pamphlets, books, etc.) for additional information. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| Interactivity | The website provides an opportunity for users to give feedback. | ☐ Yes ☐ No |
| | The website offers interactive features, such as discussion rooms or message boards, for user engagement. | ☐ Yes ☐ No |
| | Author(s) can be contacted (by email, telephone or post). | ☐ Yes ☐ No |
| Attractiveness | The website layout and design are intuitive, enhancing my overall experience. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| | I can easily navigate the website and find the information I am looking for. | ☐ Yes ☐ No |
| | I find the color scheme and visual elements engaging and pleasant. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| | The website’s graphics enhance my understanding and engagement. | ☐ Strongly agree ☐ Agree ☐ Neither agree nor disagree ☐ Disagree ☐ Strongly disagree |
| Privacy | The website states a privacy policy. | ☐ Yes ☐ No |
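The instrument mixes two response formats: binary Yes/No items and five-point agreement (Likert) items. No scoring rule is given alongside the table, so the Python sketch below assumes one purely for illustration: Yes = 1 and No = 0, Likert responses mapped linearly from 0 (strongly disagree) to 1 (strongly agree), and each criterion scored as the mean of its items. The per-criterion format lists mirror the table in order, with the four layout, navigation, colour, and graphics items grouped under Attractiveness (the look-and-feel criterion defined earlier). `INSTRUMENT`, `item_score`, and `criterion_scores` are hypothetical names, not part of the published instrument.

```python
from statistics import mean

# Five-point agreement scale, ordered from lowest to highest agreement.
LIKERT = [
    "Strongly disagree",
    "Disagree",
    "Neither agree nor disagree",
    "Agree",
    "Strongly agree",
]

# Response format of each item, per criterion, in table order
# ("yn" = Yes/No, "likert" = five-point agreement scale).
INSTRUMENT = {
    "Relevance": ["yn", "likert"],
    "Credibility": ["likert", "likert", "likert", "likert", "yn"],
    "Currency": ["likert", "likert", "likert"],
    "Accessibility": ["yn", "yn", "yn", "yn", "yn", "yn", "likert"],
    "Interactivity": ["yn", "yn", "yn"],
    "Attractiveness": ["likert", "yn", "likert", "likert"],
    "Privacy": ["yn"],
}


def item_score(fmt: str, answer: str) -> float:
    """Map one response onto 0..1 under the assumed (hypothetical) scoring rule."""
    if fmt == "yn":
        if answer not in ("Yes", "No"):
            raise ValueError(f"expected Yes/No, got {answer!r}")
        return 1.0 if answer == "Yes" else 0.0
    if fmt == "likert":
        # list.index raises ValueError for an unrecognised option.
        return LIKERT.index(answer) / (len(LIKERT) - 1)
    raise ValueError(f"unknown response format {fmt!r}")


def criterion_scores(responses: dict[str, list[str]]) -> dict[str, float]:
    """Average the item scores within each criterion (assumed aggregation)."""
    scores = {}
    for criterion, formats in INSTRUMENT.items():
        answers = responses[criterion]
        if len(answers) != len(formats):
            raise ValueError(f"{criterion}: expected {len(formats)} answers")
        scores[criterion] = mean(item_score(f, a) for f, a in zip(formats, answers))
    return scores
```

Normalising every item to 0..1 keeps Yes/No and Likert items on a common scale, so a criterion with more Likert items is not automatically weighted more heavily; a different weighting would be an equally valid design choice.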

| Instrument | Kappa, mean (95% CI) | ICC, mean (95% CI) |
|---|---|---|
| TEST | 0.61 (0.34–0.91) | 0.97 (0.95–0.98) |
| DISCERN | 0.34 (0.33–0.39) | 0.80 (0.68–0.89) |
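The agreement statistics in the table can be illustrated with a short Python sketch. It computes Cohen's kappa for two raters, κ = (p_o − p_e)/(1 − p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance from each rater's marginal frequencies, and the two-way random-effects, absolute-agreement, single-rater ICC (Shrout and Fleiss's ICC(2,1)). Which kappa and ICC variants the study used, and how the reported means and confidence intervals were obtained, are not given with the table, so the choice of ICC(2,1) is an assumption; `cohens_kappa` and `icc_2_1` are hypothetical names.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters who each assign one category per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must rate the same, non-empty set of items")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n  # observed agreement
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    categories = count_a.keys() | count_b.keys()
    p_e = sum(count_a[c] * count_b[c] for c in categories) / (n * n)
    if p_e == 1.0:
        return float("nan")  # undefined: both raters used one identical category
    return (p_o - p_e) / (1 - p_e)


def icc_2_1(ratings: list[list[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` has one row per rated target (e.g. a website) and one column per rater.
    """
    n = len(ratings)
    k = len(ratings[0]) if ratings else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a rectangular table with >= 2 targets and >= 2 raters")
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between targets
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols                  # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
```

With ratings `[[1, 2], [2, 3], [3, 4]]`, where the second rater is always one point higher, `icc_2_1` returns 2/3 rather than 1, because the absolute-agreement form penalises systematic offsets between raters; a consistency-type ICC would treat the same data as perfectly reliable.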

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, Y.; Song, T.; Zhang, Z.; Yu, P. Developing an Instrument to Evaluate the Quality of Dementia Websites. Healthcare 2023, 11, 3163. https://doi.org/10.3390/healthcare11243163
